Abstract

Emotion-based brain computer systems help impaired people communicate with their surroundings. In this paper, an electroencephalogram (EEG) database of four emotions (happy, fear, sad, and relax) is recorded, and the flexible analytic wavelet transform (FAWT) is proposed for emotion classification. FAWT decomposes the EEG signal into sub-bands, and statistical measures are computed from the sub-bands to extract emotion-specific information. The emotion classification performance of the features extracted from each sub-band is examined over the variants of the k-nearest-neighbor (KNN) classifier. The weighted KNN provides the best emotion classification accuracy, 86.1%, among the KNN variants. The proposed method achieves a higher accuracy than the existing four-emotion classification methods considered for comparison.

Keywords: Emotion classification, Electroencephalogram, Flexible analytic wavelet transform, k-nearest-neighbor

Introduction

A brain computer interface (BCI) establishes a connection between the brain and external peripherals. A BCI system can provide support for physically disabled and impaired people. A BCI system can be developed by analyzing, transforming, monitoring, and evaluating the electrical activity of the brain, known as electroencephalogram (EEG) signals, which are recorded by placing electrodes on the scalp. An emotion recognition based BCI system can be developed by classifying EEG signals.

Most of the features extracted from EEG signals for classification of emotions are in the time domain, frequency domain, and time-frequency domain. The Kolmogorov entropy and the principal Lyapunov exponent [1], fractal dimension [2], event related potential and event related oscillation based features [3], higher order crossing based features [4], time domain and frequency domain based features [5], time-frequency based features [6], short time Fourier transform based features [7], spectrogram, Zhao–Atlas–Marks, and Hilbert–Huang spectrum based features [8], a combination of surface Laplacian (SL) filtering and wavelet transforms (WT) [9], and multiwavelet based features [10, 11] have been explored as classifier inputs for classification of emotion EEG signals.

The wavelet transform with a surface Laplacian filter has been used with a k-nearest neighbor (KNN) classifier [12]. Higher order crossing (HOC) features have been used with different classifiers for emotion classification [13]. Fractal dimension has been used as a feature for emotion classification [14]. Features extracted from the discrete wavelet transform have been fed into an artificial neural network (ANN) classifier for emotion classification [15]. The entropy of EEG signal bands has been used as a feature for a least squares support vector machine (LS-SVM) with a radial basis function (RBF) kernel for classification of emotions [16]. Power spectral density (PSD) based features have been used to classify emotions with an SVM classifier [17]. Features based on magnitude squared coherence (MSCE) have been fed as input to a KNN classifier for emotion classification [18]. Wavelet based features have been proposed to classify emotions [19]. Late positive potential (LPP) based selected frequency domain features have been tested on KNN and SVM classifiers for emotion classification [20].

Recently, recurrence quantification analysis (RQA) with a learning method has been explored for emotion recognition [21]. The power spectral density (PSD) of EEG signals and statistical features of ECG signals have been used as input to an SVM classifier for emotion recognition [22]. Correlation-based feature selection (CFS) with a KNN classifier has been explored for three-class emotion classification [23]. Wavelet packet decomposition based features have been explored for emotion recognition [24]. Evolutionary computation (EC) algorithms have been used for optimal feature selection in emotion recognition [25]. A correlation-based subset selection technique with higher order statistics has been used for emotion classification [26].

In this paper, an audio–video stimuli based EEG dataset is recorded, and the flexible analytic wavelet transform (FAWT) is proposed to classify emotions using a single EEG channel. FAWT decomposes the complex EEG signal into sub-bands. Statistical measures extract emotion-specific information from the sub-bands obtained by FAWT. The emotion classification ability of the statistical measures is evaluated over the variants of the KNN classifier.

Methodology

The proposed methodology is described under the following subsections: EEG signals (data-set), feature extraction, and classification.

Data-set

In this paper, an audio–video stimuli based experiment is conducted to record an EEG dataset of happy, fear, sad, and relax emotions. The subjects who participated in the experiment are in the age group of 20–25 years. All subjects are undergraduate students of the Indian Institute of Information Technology Design and Manufacturing, Jabalpur. Before the experiment begins, the subjects are introduced to the experimental procedure and the rating scale. Twelve video clips, three for each emotion, are selected based on a questionnaire session with 30 volunteers who do not participate in the experiment. A neutral (relax) emotion clip is shown to the subjects before any other clip to generate the baseline signal and to avoid day-to-day dependencies in the recorded signals [27, 28]. The emotional states evoked in the subjects are assessed by the Self-Assessment Manikin (SAM) standard [29].

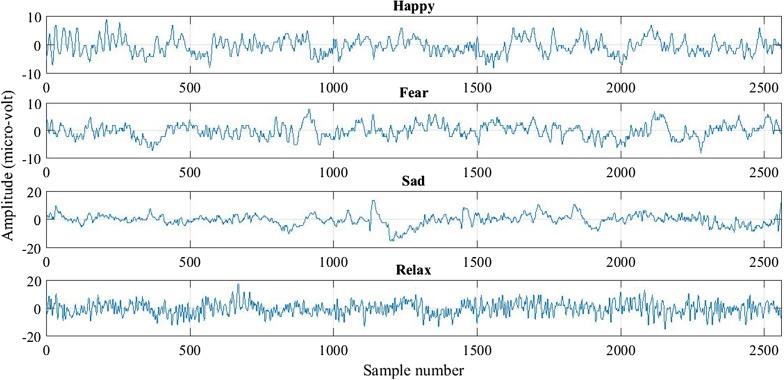

The EEG signals are acquired using a 24-channel EEG traveler system. A bipolar montage with the international 10–20 electrode placement system is used for acquiring the emotion EEG signals. The EEG signals are recorded at a sampling frequency of 256 Hz using different bipolar channels. Audio–visual stimulation is used for eliciting the emotion EEG signals because it is more effective for evoking emotions than an audio or visual stimulus alone [30]. The frontal region of the brain responds more actively to a stimulus than other regions, so EEG signals recorded at electrode positions FP1, FP2, F3, F4, F7, and F8 are used for emotion classification [31]. An example of happy, fear, sad, and relax emotion EEG signals is shown in Fig. 1.

Fig. 1.

EEG signals of happy, fear, sad, and relax emotions

Flexible analytic wavelet transform

In this work, the recently proposed flexible analytic wavelet transform (FAWT) is used for emotion classification. FAWT has several advantages over the traditional dyadic wavelet transform. The low-pass and high-pass channels of FAWT allow arbitrary sampling rates, which provides a flexible time-frequency (TF) covering [32]. FAWT uses a complex pair of atoms in the high-pass channel, which gives flexibility in the selection of transform parameters. Because of these merits, FAWT has been applied to the analysis of complex oscillatory signals such as vibration and ECG signals [33, 34].

FAWT uses an iterative filter bank structure for decomposition of EEG signals. Each filter bank stage of FAWT consists of one low-pass (LP) channel and two high-pass (HP) channels. The HP channels consist of a Hilbert transform pair of atoms for analyzing the positive and negative frequencies. Such a structure allows flexible selection of the FAWT parameters. The parameters of FAWT are: the LP-channel up-sampling factor k and down-sampling factor l; the HP-channel up-sampling factor m and down-sampling factor n; and the parameter controlling the quality factor (QF). These parameters control the frequency response of the FAWT filter banks. The HP filter and LP filter used in the FAWT filter bank can be defined as, respectively [32]:

| 1 |

| 2 |

where

| 3 |

the terms can be defined as [31],

| 4 |

To satisfy the perfect reconstruction condition of the FAWT filter bank, the QF controlling parameter is defined as [31],

| 5 |

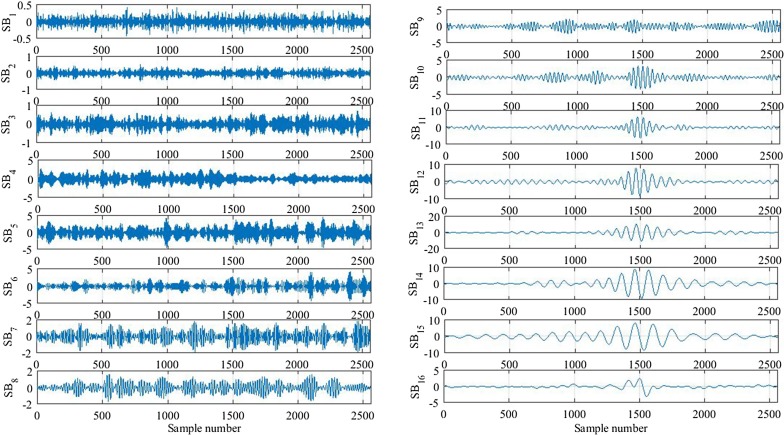

With these constraints, the FAWT is tuned with the following parameter values: FAWT stages , , , , and for decomposition of EEG signals [31]. An example of an EEG signal decomposed by FAWT into sub-bands (SBs) is shown in Fig. 2.

Fig. 2.

Sub-bands of an emotion EEG signal obtained by FAWT

Feature extraction

In this work, statistical measures of the sub-bands obtained by FAWT are used as features for emotion classification. The statistical measures are the minimum value (MIN), maximum value (MAX), interquartile range (IQR), mean absolute deviation (MAD), activity, and entropy (H(S)). The minimum and maximum values are part of the five-point summary of a data set and indicate the range of the data points. The IQR and MAD assess the variability of the sub-bands. The activity is a measure of the power of the sub-band, and the entropy assesses the complexity of the sub-band. The equations used for computing these features are defined as [35–37],

| 6 |

| 7 |

| 8 |

| 9 |

| 10 |

| 11 |

where S represents the sub-band (SB) and N the number of samples in the SB; the remaining terms are the mean of the SB, the first quartile of the SB, the third quartile of the SB, and the probability of SB samples lying near a particular value. The computed features are normalized by the unit length normalization method to avoid biased classification [38].
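The feature definitions above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the activity is assumed to be the variance of the sub-band, the entropy is assumed to be a histogram-based Shannon entropy with a hypothetical bin count `n_bins`, and the function names `subband_features` and `unit_length` are introduced here for illustration only.

```python
import math
import statistics


def subband_features(sb, n_bins=10):
    """Return [MIN, MAX, IQR, MAD, activity, entropy] for one sub-band."""
    n = len(sb)
    mean = sum(sb) / n
    # Quartiles from the standard library (default "exclusive" method).
    q1, _, q3 = statistics.quantiles(sb, n=4)
    # Mean absolute deviation about the mean.
    mad = sum(abs(x - mean) for x in sb) / n
    # Activity taken as the variance of the sub-band (assumption).
    activity = sum((x - mean) ** 2 for x in sb) / n
    # Histogram-based Shannon entropy; the bin count is an assumption.
    lo, hi = min(sb), max(sb)
    width = (hi - lo) / n_bins or 1.0
    counts = [0] * n_bins
    for x in sb:
        counts[min(int((x - lo) / width), n_bins - 1)] += 1
    entropy = -sum((c / n) * math.log2(c / n) for c in counts if c)
    return [lo, hi, q3 - q1, mad, activity, entropy]


def unit_length(vec):
    """Scale a feature vector to unit Euclidean length."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else list(vec)
```

For example, `unit_length(subband_features(sb))` would give one normalized six-element feature vector for a sub-band `sb`.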

Classification

KNN is one of the simplest non-parametric classification algorithms; it has good predictive power and requires little computation time. KNN classifies a testing instance according to its k nearest training instances by computing the probability of each possible category. The category with the largest probability defines the class of the testing instance. The probability density function for a feature vector F of a given class can be defined as [39],

| 12 |

where the terms represent the number of nearest neighbors, one of the neighbors in the training set, and the similarity function between F and that neighbor. In this paper, six variants of KNN (fine, medium, coarse, cosine, cubic, and weighted) are tested for emotion classification [40].
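As a rough illustration of the decision rule described above, the sketch below estimates each class probability as the fraction of the k nearest training instances that belong to that class and assigns the class with the largest estimate. This is the simplest, equally weighted case with Euclidean distance; the similarity function of the cited formulation is not reproduced, and the names `knn_class_probabilities` and `knn_predict` are hypothetical.

```python
import math
from collections import Counter


def knn_class_probabilities(train_x, train_y, query, k):
    """Estimate class probabilities from the k nearest training instances."""
    # Euclidean distance from the query to every training instance.
    dists = [(math.dist(x, query), y) for x, y in zip(train_x, train_y)]
    nearest = sorted(dists, key=lambda d: d[0])[:k]
    votes = Counter(label for _, label in nearest)
    # Fraction of the retained neighbors that fall in each class.
    return {label: count / len(nearest) for label, count in votes.items()}


def knn_predict(train_x, train_y, query, k):
    """Assign the class with the largest estimated probability."""
    probs = knn_class_probabilities(train_x, train_y, query, k)
    return max(probs, key=probs.get)
```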

Results and discussion

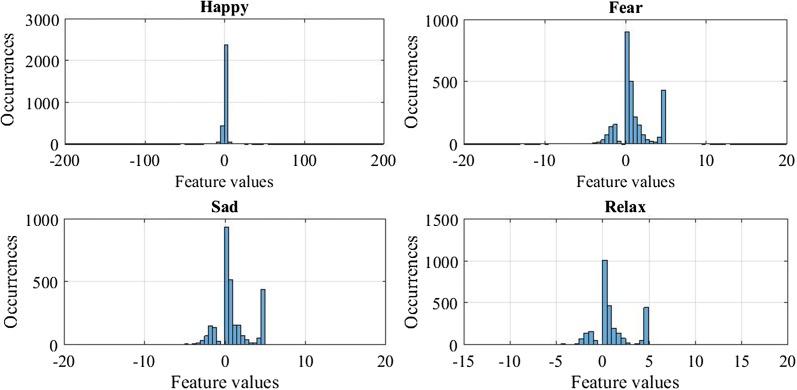

FAWT decomposes the EEG signals into sub-bands, and statistical measures are computed from each sub-band for classification of emotions. The sub-band-wise feature set for each emotion forms a matrix whose rows represent the features and whose columns represent the instances. The emotion discrimination capability of the proposed feature set is assessed by its histogram distribution. Figure 3 shows an example histogram of the extracted feature set for the happy, fear, sad, and relax emotions. The figure shows that the feature values occur with different frequencies for different emotions. These distinct histogram distributions indicate that the proposed feature set is able to discriminate between the emotions.

Fig. 3.

Histograms of the features of a sub-band for different emotions

In this paper, EEG signals recorded from the frontal bipolar channels (FP1-F3, FP1-F7, FP2-F4, and FP2-F8) are compared for emotion classification. The classification performance of the features of each bipolar channel is evaluated with the variants of the KNN classifier. The fine, medium, and coarse KNNs are defined by their number of neighbors, which are 1, 10, and 100, respectively, with the Euclidean distance metric. The cosine and cubic KNNs are defined by their distance metrics, cosine and Minkowski, respectively, and both use 10 nearest neighbors. The weighted KNN uses 10 nearest neighbors and the Euclidean distance metric, with squared inverse distance weighting. The classification performance of each KNN variant is evaluated with the 10-fold cross-validation method. Tables 1, 2, 3 and 4 present the SB-wise classification performance of the KNN variants for all frontal channels. Among the KNN variants, the fine and weighted KNNs provide better classification accuracy than the other variants for most sub-bands. The highest emotion classification accuracy, 86.1%, is obtained by the weighted KNN for SB7 of the FP2-F4 bipolar channel (Table 3). The confusion matrix for SB7 of the FP2-F4 channel is shown in Table 5, where the diagonal elements show the correctly classified instances and the off-diagonal elements show the misclassified instances of the different classes. The emotion-wise classification accuracy, computed from the diagonal elements, is also given in this table. The classification accuracies of the happy, fear, sad, and relax emotions are 96.12%, 78.37%, 72.24%, and 97.76%, respectively.
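The six KNN configurations described above can be summarized in a short Python sketch. The neighbor counts, metrics, and squared inverse distance weighting follow the text; the Minkowski exponent of 3 for the cubic variant is an assumption suggested by its name, and the small constant added to the squared distance is an illustrative guard against division by zero. All function and variable names are hypothetical.

```python
import math
from collections import defaultdict


def euclidean(a, b):
    return math.dist(a, b)


def minkowski3(a, b):
    # Minkowski distance with exponent 3 (assumed for the "cubic" variant).
    return sum(abs(x - y) ** 3 for x, y in zip(a, b)) ** (1 / 3)


def cosine(a, b):
    # Cosine distance: one minus the cosine of the angle between the vectors.
    # Assumes neither vector is all zeros.
    dot = sum(x * y for x, y in zip(a, b))
    return 1.0 - dot / (math.hypot(*a) * math.hypot(*b))


# variant name -> (number of neighbors, distance metric, squared-inverse weighting?)
VARIANTS = {
    "fine": (1, euclidean, False),
    "medium": (10, euclidean, False),
    "coarse": (100, euclidean, False),
    "cosine": (10, cosine, False),
    "cubic": (10, minkowski3, False),
    "weighted": (10, euclidean, True),
}


def classify(train_x, train_y, query, variant):
    """Predict the label of `query` with the chosen KNN variant."""
    k, metric, weighted = VARIANTS[variant]
    pairs = [(metric(x, query), y) for x, y in zip(train_x, train_y)]
    nearest = sorted(pairs, key=lambda p: p[0])[:k]
    scores = defaultdict(float)
    for dist, label in nearest:
        # Weighted KNN: each vote counts 1 / distance^2; otherwise one vote each.
        scores[label] += 1.0 / (dist ** 2 + 1e-12) if weighted else 1.0
    return max(scores, key=scores.get)
```

With equal votes, a few very close neighbors can be outvoted by many distant ones; the squared inverse distance weighting lets the close neighbors dominate instead.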

Table 1.

Sub-band-wise classification accuracy (%) of the FP1-F3 channel for different KNN classifiers

| KNN variants | SB1 | SB2 | SB3 | SB4 | SB5 | SB6 | SB7 | SB8 |
|---|---|---|---|---|---|---|---|---|
| Fine KNN | 81.9 | 73.9 | 84.4 | 74.3 | 40.9 | 60 | 51.4 | 47.8 |
| Medium KNN | 71.2 | 67.7 | 79.3 | 72.5 | 40.9 | 59 | 47.1 | 45.7 |
| Coarse KNN | 61.9 | 58.4 | 63.2 | 59.4 | 38.1 | 47.9 | 42.8 | 42 |
| Cosine KNN | 74.5 | 71.4 | 70.3 | 68.7 | 42.9 | 57.2 | 45.8 | 45.6 |
| Cubic KNN | 65.7 | 63.9 | 77.4 | 72.3 | 41.7 | 57.9 | 47.5 | 44.5 |
| Weighted KNN | 80.4 | 73.9 | 84.3 | 75.9 | 44.1 | 62.4 | 50.9 | 48.5 |

Table 2.

Sub-band-wise classification accuracy (%) of the FP1-F7 channel for different KNN classifiers

| KNN variants | SB1 | SB2 | SB3 | SB4 | SB5 | SB6 | SB7 | SB8 |
|---|---|---|---|---|---|---|---|---|
| Fine KNN | 71.2 | 80.9 | 78.6 | 71.4 | 45.2 | 62.2 | 53.1 | 51.4 |
| Medium KNN | 64 | 75.3 | 73.2 | 67.7 | 42.1 | 62.6 | 50.9 | 49.4 |
| Coarse KNN | 55.1 | 59.7 | 52.2 | 54.7 | 36.7 | 51.5 | 44.9 | 44.6 |
| Cosine KNN | 63.3 | 73.7 | 65.6 | 58.8 | 43.5 | 57.3 | 52.5 | 47.4 |
| Cubic KNN | 60 | 71.8 | 71.4 | 67 | 42.2 | 61.5 | 52.8 | 50.3 |
| Weighted KNN | 69.4 | 79.6 | 78.9 | 70.9 | 44.9 | 64.1 | 54.1 | 52.4 |

Table 3.

Sub-band-wise classification accuracy (%) of the FP2-F4 channel for different KNN classifiers

| KNN variants | SB1 | SB2 | SB3 | SB4 | SB5 | SB6 | SB7 | SB8 |
|---|---|---|---|---|---|---|---|---|
| Fine KNN | 72.4 | 70.9 | 70.3 | 73.7 | 73.4 | 78.6 | 85.2 | 79.7 |
| Medium KNN | 65.3 | 67.6 | 66.6 | 70.6 | 70.3 | 79.4 | 84 | 78.3 |
| Coarse KNN | 55.7 | 59.1 | 56.3 | 58.3 | 57.9 | 68.8 | 78 | 69.4 |
| Cosine KNN | 68.7 | 60.7 | 61.4 | 62.1 | 61.3 | 78.2 | 84.8 | 81.4 |
| Cubic KNN | 60.6 | 65 | 64.9 | 70.2 | 69.6 | 78.6 | 83.2 | 76.6 |
| Weighted KNN | 71.3 | 72 | 71.8 | 74.1 | 73.5 | 79.9 | 86.1 | 80.7 |

Table 4.

Sub-band-wise classification accuracy (%) of the FP2-F8 channel for different KNN classifiers

| KNN variants | SB1 | SB2 | SB3 | SB4 | SB5 | SB6 | SB7 | SB8 |
|---|---|---|---|---|---|---|---|---|
| Fine KNN | 70.5 | 78.1 | 66.4 | 65.8 | 68.3 | 67.7 | 57.4 | 55.7 |
| Medium KNN | 64.2 | 73 | 63.5 | 65.8 | 67.5 | 67.1 | 57 | 56.5 |
| Coarse KNN | 56.9 | 60.1 | 50.5 | 54.3 | 57.4 | 58.9 | 55.7 | 57.6 |
| Cosine KNN | 64.1 | 66.6 | 60.5 | 59.7 | 65.3 | 65.5 | 56.5 | 54.6 |
| Cubic KNN | 58.7 | 69.5 | 61.9 | 64.9 | 66.3 | 64.8 | 57.1 | 56.9 |
| Weighted KNN | 70.6 | 78.4 | 68.3 | 68.1 | 69.7 | 69.7 | 58.1 | 58.1 |

Table 5.

Confusion matrix of the weighted KNN classifier for SB7 of the FP2-F4 channel

| Emotions | Happy | Fear | Sad | Relax |
|---|---|---|---|---|
| Happy | 471 | 3 | 13 | 3 |
| Fear | 1 | 384 | 103 | 2 |
| Sad | 1 | 126 | 354 | 9 |
| Relax | 0 | 9 | 2 | 479 |
| ACC (%) | 96.12 | 78.37 | 72.24 | 97.76 |
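The emotion-wise accuracies in Table 5 follow directly from the confusion matrix. The short Python check below reproduces them, assuming that each row of the matrix holds the instances of one true emotion (an assumption consistent with the reported values, since each row sums to 490 instances).

```python
# Confusion matrix from Table 5; rows are taken as the true classes (assumption).
emotions = ["Happy", "Fear", "Sad", "Relax"]
confusion = [
    [471, 3, 13, 3],
    [1, 384, 103, 2],
    [1, 126, 354, 9],
    [0, 9, 2, 479],
]

# Per-emotion accuracy: correctly classified instances over all instances of that emotion.
per_class = {
    name: round(100 * row[i] / sum(row), 2)
    for i, (name, row) in enumerate(zip(emotions, confusion))
}

# Overall accuracy: sum of the diagonal over the total number of instances.
correct = sum(confusion[i][i] for i in range(len(emotions)))
total = sum(sum(row) for row in confusion)
overall = round(100 * correct / total, 2)

print(per_class)  # {'Happy': 96.12, 'Fear': 78.37, 'Sad': 72.24, 'Relax': 97.76}
print(overall)    # 86.12
```

The overall accuracy from this matrix, 86.12%, agrees with the reported 86.1%.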

Table 6 compares the emotion classification performance of the proposed method with that of other existing methods. All methods in this table classify four emotions. The methods are compared in terms of the stimuli used for evoking the emotions, the feature extraction approach, and the classifier. Among the existing methods, the audio–video stimuli based method proposed by Bajaj [10] provides the highest classification accuracy, 84.79%. The proposed method also uses audio–video stimuli and obtains a higher classification accuracy, 86.1%. This performance indicates the utility of the proposed method for emotion classification, and it can be used in emotion recognition based BCI systems.

Table 6.

Emotion classification performance comparison report

| Authors | Stimulus, classes | Feature extraction method | Classifier | Accuracy (%) |
|---|---|---|---|---|
| Mikhail [17] | Perform movements, 4 | PSD | SVM Linear | 55.75 |
| Majid Mehmood [20] | IAPS, 4 | Power-spectrum, statistical and LPP | SVM | 58 |
| Wang et al. [5] | Video, 4 | Minimum redundancy, maximum relevance | SVM | 66.5 |
| Bhatti [19] | Music, 4 | Statistical, PSD, FFT, and WT | MLP | 78.11 |
| Lin [7] | Music, 4 | FFT | SVM RBF | 80.86 |
| Khosrowabadi et al. [18] | IAPS with music, 4 | MSCE | KNN | 84.5 |
| Bajaj [10] | Audio–video, 4 | Multi wavelet | MC-LS-SVM | 84.79 |
| Proposed method | Audio–video, 4 | FAWT | KNN | 86.1 |

FFT: fast Fourier transform; MLP: multi-layer perceptron

Conclusion

In this work, an audio–video stimuli based EEG dataset of four basic emotions is recorded, and FAWT-based features are proposed for classification of emotions. FAWT decomposes the recorded EEG signal into sub-bands, and statistical features are extracted from the sub-bands for each frontal channel. The sub-band-wise features of each channel are tested on the variants of the KNN classifier. The features of the different frontal channels are compared for emotion classification, and the utility of a single channel is explored. SB7 of channel FP2-F4 with the weighted KNN provides the highest emotion classification accuracy, 86.1%, among the sub-bands of all channels. The emotion classification performance of the proposed method is better than that of the existing methods compared. The proposed single EEG channel based emotion classification method can be used in BCI systems for impaired people to assess their emotions.

Acknowledgement

Support obtained from the PDPM Indian Institute of Information Technology Design and Manufacturing Jabalpur under the project titled "Brain computer interface for classification of human Emotion", Project No. PDPM IIITDMJ/Dir.Office/officeorder/2016/10-2902, is gratefully acknowledged.


Contributor Information

Varun Bajaj, Email: bajajvarun056@yahoo.co.in.

Sachin Taran, Email: taransachin2@gmail.com.

Abdulkadir Sengur, Email: ksengur@gmail.com.

References

- 1. Aftanas LI, Lotova NV, Koshkarov VI, Pokrovskaja VL, Popov SA, Makhnev VP. Non-linear analysis of emotion EEG: calculation of Kolmogorov entropy and the principal Lyapunov exponent. Neurosci Lett. 1997;226(1):13–16. doi: 10.1016/S0304-3940(97)00232-2.

- 2. Boostani R, Moradi MH. A new approach in the BCI research based on fractal dimension as feature and Adaboost as classifier. J Neural Eng. 2004;1(4):212. doi: 10.1088/1741-2560/1/4/004.

- 3. Frantzidis CA, Bratsas C, Papadelis CL, Konstantinidis E, Pappas C, Bamidis PD. Toward emotion aware computing: an integrated approach using multichannel neurophysiological recordings and affective visual stimuli. IEEE Trans Inform Technol Biomed. 2010;14(3):589–597. doi: 10.1109/TITB.2010.2041553.

- 4. Petrantonakis PC, Hadjileontiadis LJ. Emotion recognition from EEG using higher order crossings. IEEE Trans Inform Technol Biomed. 2010;14(2):186–197. doi: 10.1109/TITB.2009.2034649.

- 5. Wang Xiao-Wei, Nie Dan, Lu Bao-Liang. Neural Information Processing. Berlin, Heidelberg: Springer Berlin Heidelberg; 2011. EEG-Based Emotion Recognition Using Frequency Domain Features and Support Vector Machines; pp. 734–743.

- 6. Chanel G, Kierkels JJ, Soleymani M, Pun T. Short-term emotion assessment in a recall paradigm. Int J Hum-Comput Stud. 2009;67(8):607–627. doi: 10.1016/j.ijhcs.2009.03.005.

- 7. Lin YP, Wang CH, Jung TP, Wu TL, Jeng SK, Duann JR, Chen JH. EEG-based emotion recognition in music listening. IEEE Trans Biomed Eng. 2010;57(7):1798–1806. doi: 10.1109/TBME.2010.2048568.

- 8. Hadjidimitriou SK, Hadjileontiadis LJ. Toward an EEG-based recognition of music liking using time-frequency analysis. IEEE Trans Biomed Eng. 2012;59(12):3498–3510. doi: 10.1109/TBME.2012.2217495.

- 9. Murugappan M, Nagarajan R, Yaacob S. Combining spatial filtering and wavelet transform for classifying human emotions using EEG signals. J Med Biol Eng. 2011;31(1):45–51. doi: 10.5405/jmbe.710.

- 10. Bajaj Varun, Pachori Ram Bilas. Brain-Computer Interfaces. Cham: Springer International Publishing; 2014. Detection of Human Emotions Using Features Based on the Multiwavelet Transform of EEG Signals; pp. 215–240.

- 11. Bajaj V, Pachori RB. Human emotion classification from EEG signals using multiwavelet transform. In: Medical Biometrics, 2014 International Conference on, IEEE; 2014. pp. 125–30.

- 12. Murugappan M. Human emotion classification using wavelet transform and KNN. In: Pattern Analysis and Intelligent Robotics (ICPAIR), 2011 International Conference on, 1, IEEE; 2011. pp. 148–53.

- 13. Petrantonakis PC, Hadjileontiadis LJ. Emotion recognition from brain signals using hybrid adaptive filtering and higher order crossings analysis. IEEE Trans Affect Comput. 2010;1(2):81–97. doi: 10.1109/T-AFFC.2010.7.

- 14. Liu Y, Sourina O, Nguyen MK. Real-time EEG-based human emotion recognition and visualization. In: Cyberworlds (CW), 2010 International Conference on, IEEE; 2010. pp. 262–9.

- 15. Ang AQX, Yeong YQ, Ser W. Emotion classification from EEG signals using time-frequency-DWT features and ANN. J Comput Commun. 2017;5(03):75. doi: 10.4236/jcc.2017.53009.

- 16. Singh M, Singh M, Goyal M. Emotion classification using EEG entropy. Int J Inform Technol Knowl Manag. 2015;8(2):150–158.

- 17. Mikhail M, El-Ayat K, Coan JA, Allen JJ. Using minimal number of electrodes for emotion detection using brain signals produced from a new elicitation technique. Int J Auton Adapt Commun Syst. 2013;6(1):80–97. doi: 10.1504/IJAACS.2013.050696.

- 18. Khosrowabadi R, Quek HC, Wahab A, Ang KK. EEG-based emotion recognition using self-organizing map for boundary detection. In: Pattern Recognition (ICPR), 2010 20th International Conference on, IEEE; 2010. pp. 4242–45.

- 19. Bhatti AM, Majid M, Anwar SM, Khan B. Human emotion recognition and analysis in response to audio music using brain signals. Comput Hum Behav. 2016;65:267–275. doi: 10.1016/j.chb.2016.08.029.

- 20. Mehmood RM, Lee HJ. A novel feature extraction method based on late positive potential for emotion recognition in human brain signal patterns. Comput Electr Eng. 2016;53:444–457. doi: 10.1016/j.compeleceng.2016.04.009.

- 21. Fan M, Chou CA. Recognizing affective state patterns using regularized learning with nonlinear dynamical features of EEG. In: Biomedical and Health Informatics (BHI), 2018 IEEE EMBS International Conference on, IEEE; 2018. pp. 137–40.

- 22. Katsigiannis S, Ramzan N. Dreamer: a database for emotion recognition through eeg and ecg signals from wireless low-cost off-the-shelf devices. IEEE J Biomed Health Inform. 2018;22(1):98–107. doi: 10.1109/JBHI.2017.2688239.

- 23. Hu B, Li X, Sun S, Ratcliffe M. Attention recognition in EEG-based affective learning research using CFS+KNN algorithm. IEEE/ACM Transactions on Computational Biology and Bioinformatics; 2016.

- 24. Balasubramanian G, Kanagasabai A, Mohan J, Seshadri NG. Music induced emotion using wavelet packet decomposition: an EEG study. Biomed Signal Process Control. 2018;42:115–128. doi: 10.1016/j.bspc.2018.01.015.

- 25. Nakisa B, Rastgoo MN, Tjondronegoro D, Chandran V. Evolutionary computation algorithms for feature selection of EEG-based emotion recognition using mobile sensors. Expert Syst Appl. 2018;93:143–155. doi: 10.1016/j.eswa.2017.09.062.

- 26. Chakladar DD, Chakraborty S. EEG based emotion classification using correlation based subset selection. Biol Inspir Cognit Archit. 2018;24:98–106.

- 27. Gabert-Quillen CA, Bartolini EE, Abravanel BT, Sanislow CA. Ratings for emotion film clips. Behav Res Methods. 2015;47(3):773–787. doi: 10.3758/s13428-014-0500-0.

- 28. Koelstra S, Muhl C, Soleymani M, Lee JS, Yazdani A, Ebrahimi T, Patras I. Deap: a database for emotion analysis; using physiological signals. IEEE Trans Affect Comput. 2012;3(1):18–31. doi: 10.1109/T-AFFC.2011.15.

- 29. Bradley MM, Lang PJ. Measuring emotion: the self-assessment manikin and the semantic differential. J Behav Therapy Exp Psychiatr. 1994;25(1):49–59. doi: 10.1016/0005-7916(94)90063-9.

- 30. Murugappan M, Juhari MRBM, Nagarajan R, Yaacob S. An investigation on visual and audiovisual stimulus based emotion recognition using EEG. Int J Med Eng Inform. 2009;1(3):342–356. doi: 10.1504/IJMEI.2009.022645.

- 31. Rosen HJ, Pace-Savitsky K, Perry RJ, Kramer JH, Miller BL, Levenson RW. Recognition of emotion in the frontal and temporal variants of frontotemporal dementia. Dement Geriatr Cognit Disord. 2004;17(4):277–281. doi: 10.1159/000077154.

- 32. Bayram I. An analytic wavelet transform with a flexible time-frequency covering. IEEE Trans Signal Process. 2013;61(5):1131–1142. doi: 10.1109/TSP.2012.2232655.

- 33. Zhang C, Li B, Chen B, Cao H, Zi Y, He Z. Weak fault signature extraction of rotating machinery using flexible analytic wavelet transform. Mech Syst Signal Process. 2015;64:162–187. doi: 10.1016/j.ymssp.2015.03.030.

- 34. Acharya UR, Sudarshan VK, Koh JE, Martis RJ, Tan JH, Oh SL, Chua CK. Application of higher-order spectra for the characterization of coronary artery disease using electrocardiogram signals. Biomed Signal Process Control. 2017;31:31–43. doi: 10.1016/j.bspc.2016.07.003.

- 35. Taran S, Bajaj V, Siuly S. An optimum allocation sampling based feature extraction scheme for distinguishing seizure and seizure-free EEG signals. Health Inform Sci Syst. 2017;5(1):1–7. doi: 10.1007/s13755-017-0020-2.

- 36. Taran S, Bajaj V. Rhythm based identification of alcohol EEG signals. IET Sci Meas Technol. 2018;12(3):343–349. doi: 10.1049/iet-smt.2017.0232.

- 37. Taran S, Bajaj V. Motor imagery tasks-based EEG signals classification using tunable-Q wavelet transform. Neural Comput Appl. 2018. doi: 10.1007/s00521-018-3531-0.

- 38. Taran S, Bajaj V, Sharma D, Siuly S, Sengur A. Features based on analytic IMF for classifying motor imagery EEG signals in BCI applications. Measurement. 2018;116:68–76. doi: 10.1016/j.measurement.2017.10.067.

- 39. Kim KS, Choi HH, Moon CS, Mun CW. Comparison of k-nearest neighbor, quadratic discriminant and linear discriminant analysis in classification of electromyogram signals based on the wrist-motion directions. Curr Appl Phys. 2011;11(3):740–745. doi: 10.1016/j.cap.2010.11.051.

- 40. Johnson Jenifer Mariam, Yadav Anamika. Information and Communication Technology for Sustainable Development. Singapore: Springer Singapore; 2017. Fault Detection and Classification Technique for HVDC Transmission Lines Using KNN; pp. 245–253.