Abstract

Cadaver-based anatomical education is supplemented by a wide range of pedagogical tools, from artistic diagrams to photographs, videos, and 3-dimensional (3D) models. However, many of these supplements either simplify the true anatomy or are limited in their use and distribution. Photogrammetry, which combines overlapping 2-dimensional (2D) photographs into digital 3D models, addresses such shortcomings by creating interactive, authentic digital models of cadaveric specimens. In this exploratory pilot study, we used a photogrammetric setup and rendering software developed by an outside group to produce digital 3D models of 8 dissected specimens of regional anatomy. The resulting models faithfully and precisely represented their original specimens, and faculty at the Stanford University Division of Clinical Anatomy judged them accurate and useful for teaching. These results suggest that photogrammetry is another possible method for rendering cadaveric materials into interactive 3D models for anatomical education. These models are more detailed than many computer-generated versions and provide more visuospatial information than 2D images. Future researchers and educators could use such technology to create institutional libraries of digital 3D anatomy for medical education.

Keywords: Medical education, photogrammetry, anatomy education, anatomical models, digital anatomy, 3D models, prosections

Introduction

Human anatomy has been the foundation of medical education for centuries. Cadaver-based instruction, alongside didactic lectures, has long been the standard method for such education.1,2 However, cadaver-based anatomy courses are not available at all medical institutions, because maintaining cadaveric materials involves financial, ethical, and safety burdens.3 In addition, with medicine’s ever-growing body of knowledge, many medical schools are moving away from traditional models of anatomy education; new sciences, technologies, and resources are increasingly favored in modern curricula.3–5 Most institutions are therefore devoting fewer hours to preclinical anatomy education: between 1955 and 2009, total anatomy course hours in US medical schools dropped by approximately 55%.4 Despite these pedagogical changes, anatomical and visuospatial mastery remains necessary for high-quality medical practice. Several medical schools are thus augmenting their traditional approaches to anatomy education with new technologies and resources.4,6

One such resource is digital 2-dimensional (2D) photography of cadaveric specimens. Photographs are easily accessible and clearly depict human anatomy. However, 2D images convey limited information: they are authentic, but they sacrifice depth and 3-dimensional (3D) spatial relationships. A second resource, videography, addresses this deficiency by showing cadavers or professionally dissected cadaveric specimens (prosections) from various angles, conveying their 3D structure. Although videos are both accessible and spatially informative, they are prerecorded and thus offer no interaction.

Medical educators have recently turned toward high-tech innovations to circumvent the pedagogical shortcomings of photos and videos.4,7 Prominent examples include 3D-printed anatomical models, virtual reality applications, and 3D computer models.5,8,9 This study is primarily concerned with this last category. Although there is no consensus, many professionals agree that 3D computer models have potential as cost-effective, ethical supplements to traditional anatomy curricula.5,8–12 There are multiple reasons for this perspective. First, digital 3D anatomical models are notably accessible. Whereas physical cadavers and prosections are restricted to certain locations, students can access digital learning tools at any place and time. As one study noted, digital libraries of anatomical specimens would provide regular access to anatomical variety that might be difficult to find in the laboratory.13

Furthermore, guided 3D manipulation of anatomical specimens has been shown to increase learner performance in certain tasks of spatial ability and structural recognition.13–15 Beyond gross anatomy, 3D models have also performed better than 2D diagrams in aiding student comprehension of peritoneal embryogenesis.16 Both digital and physical 3D models are also believed to assist students in visualizing certain aspects of neuroanatomy.17,18

However, it is important to consider that many of the digital models currently employed in anatomy education are acquired by 1 of 2 methods: either by “slicing” physical specimens, as in the Visible Human Project, or through grayscale imaging, as in computed tomographic scans.19 The former method destroys the original cadaveric specimens, is technically complicated, and is extremely expensive. The latter method sacrifices authenticity by rendering a specimen’s surface anatomy from grayscale, cross-sectional 2D data.

Photogrammetry, the applied science of using photographs to represent an object in 3D, combines the advantages of photographs, videos, and computerized models while avoiding most of their drawbacks. In photogrammetry, 2D photographs of an object are taken at varying angles and then combined using computer software to generate a 3D reconstruction. The software identifies common points between images taken at differing angles and overlays the images by matching these points. As consecutive images are overlaid and their corresponding points matched, the 3D locations of those points are determined, producing a “point cloud.” The point cloud becomes denser as more images are added, giving greater structural detail. A “wireframe model” is then generated by connecting the points within the cloud, and surfaces are added to produce a surface mesh. Finally, the original photographs are overlaid onto this mesh to give color and texture to the final 3D model. This model thus presents both geometric and textural data from the original object. The theory of photogrammetry is described in detail elsewhere.20
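The step in which matched points acquire a 3D location can be illustrated with the simplest two-view case. The sketch below uses midpoint triangulation, a generic textbook technique assumed here for illustration, not the proprietary software used in this study: each camera centre plus its viewing direction toward a matched point defines a ray, and the point is estimated as the midpoint of the shortest segment joining the two rays. The camera positions are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Estimate a 3D point seen from two cameras.

    c1, c2: camera centres; d1, d2: viewing directions toward the matched
    point. Returns the midpoint of the shortest segment between the rays
    c1 + s*d1 and c2 + t*d2 (real rays rarely intersect exactly).
    """
    w0 = sub(c1, c2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the point cannot be triangulated")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = add(c1, scale(d1, s))
    p2 = add(c2, scale(d2, t))
    return scale(add(p1, p2), 0.5)


# Hypothetical cameras 5 units apart, both sighting the same surface point.
point = triangulate_midpoint((0.0, 0.0, 0.0), (2.0, 3.0, 10.0),
                             (5.0, 0.0, 0.0), (-3.0, 3.0, 10.0))
print(point)  # (2.0, 3.0, 10.0)
```

Repeating this for every matched point yields the point cloud described above. With more than two views, the same idea generalizes to a least-squares estimate over all rays, and full pipelines typically also refine the camera poses themselves (bundle adjustment).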

Previously, photogrammetry’s anatomical application was restricted largely to the measurement of certain organs or structures.21–23 For example, one recent study used photogrammetry to capture serial dissections of the human brain to measure the structural connectivity of cerebellar white matter.23 By capturing and overlaying images of dissected brain specimens, researchers were able to calculate the distance between cortical gyri and their respective white matter stems. Other work has supported the use of photogrammetry in neuroanatomical morphometric studies.22 On the clinical side, this method has also been used to assess tracheostoma anatomy in patients who have undergone laryngectomies.21 Here, the 3D capture and measurement of stoma parameters have allowed researchers to ensure proper attachment of heat exchangers and stoma valves based on a patient’s distinct tracheostoma.

Beyond these research and clinical applications, photogrammetry also has considerable potential for producing accurate, interactive, and accessible digital 3D prosection models. To the authors’ knowledge, this study presents the first use of photogrammetry to create such resources for anatomy education.

Photogrammetry has several advantages for creating digital 3D prosection models. First, the process is relatively inexpensive, requiring equipment such as digital cameras, lighting tools, and appropriate rendering software. Second, photogrammetry creates authentic anatomical models by generating 3D renderings directly from digital photographs; this authenticity surpasses that of most computerized models, which often simplify subtle anatomical features. Third, its digital 3D models are interactive, offering visuospatial engagement that pictures and videos lack. Given the appropriate software, users can actively manipulate and annotate such models; this interactivity is discussed later in this study. Fourth, photogrammetry neither damages physical specimens nor relies on grayscale or cross-sectional data to build 3D models. Finally, photogrammetric prosection models are digital; they can therefore be distributed without limit and will not degrade over time.

Materials and Methods

Prosection specimens

Eight human prosection specimens were used to evaluate photogrammetry’s creation of digital 3D prosection models: (1) a spleen; (2) a cancerous liver; (3) a uterus with fibroids and ovarian cyst; (4) a heart, with major vessels and lungs; (5) a heart, with major vessels, abdominal aorta, and kidneys; (6) a head (sagittal cross section, viscera removed); (7) a stepwise-dissected anterior forearm; and (8) a stepwise-dissected anterior torso.

These specimens were chosen for their wide range of sizes, textures, shapes, and contours. The spleen, liver, and uterus were grouped as Single-Organ Specimens. These were primarily used to evaluate photogrammetry’s capture of small anatomical structures. In addition, the Single-Organ Specimens were widely varied in surface texture, allowing us to examine what kinds of anatomical surfaces are best captured through this method.

The “heart, with major vessels and lungs” and “heart, with major vessels, abdominal aorta, and kidneys” specimens were grouped into the Multiple-Organ Specimens category. The Multiple-Organ Specimens represented mid-size prosections in this study. They were also used to evaluate photogrammetric capture of more complex and multifaceted specimens.

One specimen, a sagittal cross-sectioned head (viscera removed), was chosen to evaluate photogrammetric rendering of internal cavities. This specimen occupied its own Internal Cavities grouping.

Finally, 2 specimens were selected for the Stepwise Dissections portion of the study: an anterior forearm and an anterior torso. The goal of this section was to document sequential stages in cadaveric dissection and examine whether photogrammetry could be used to create 3D dissection guides. The torso was this study’s largest specimen and thus provided insight into possible size limitations of anatomical photogrammetry.

Photogrammetry setup, image capture, and data processing

To optimize accuracy and resolution, an advanced photogrammetric apparatus was developed by Anatomage, Inc. The proprietary setup featured multiple digital cameras (Nikon D5300) spaced equidistantly on a rotatable arch. Because the Anatomage technology has not yet been patented, this study was not authorized to present visual examples of the apparatus.

Prosections were placed on a height-adjustable table. Four lights were placed at respective corners of the table to eliminate shadows and darkened areas. Cameras were manually rotated in a 210° arc, with each camera taking 1 photograph every 15°. Prosections were then flipped 180° (revealing surfaces previously on the table), and the cameras were once more rotated in a 210° arc, capturing images every 15°. This process gave 196 different images per prosection, requiring approximately 15 minutes per specimen.
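The reported total of 196 images can be checked against the capture protocol. Assuming every camera fires at each stop and both sides (before and after the 180° flip) are captured identically, the total is cameras × stops per sweep × 2. The study does not state the number of cameras or whether a sweep includes both end positions, so the sketch below treats both as unknowns. Under these assumptions, 196 corresponds to 7 cameras with 14 stops per 210° sweep (0° to 195° in 15° steps).

```python
def stops_per_sweep(arc_deg: int, step_deg: int, include_end: bool) -> int:
    """Camera stops in one sweep of the arch, e.g. 0, 15, ..., 195 degrees."""
    intervals = arc_deg // step_deg
    return intervals + 1 if include_end else intervals


def images_per_specimen(cameras: int, arc_deg: int, step_deg: int,
                        sides: int, include_end: bool) -> int:
    """Total photographs: every camera fires at every stop, on every side."""
    return cameras * stops_per_sweep(arc_deg, step_deg, include_end) * sides


# Which whole-number camera counts reproduce the reported 196 images?
# (Camera count and stop convention are assumptions; the study reports neither.)
for include_end in (False, True):
    fits = [n for n in range(1, 21)
            if images_per_specimen(n, 210, 15, sides=2, include_end=include_end) == 196]
    print(include_end, fits)
# False [7]
# True []
```

Counting both end positions (15 stops) gives 30 positions per specimen, which does not divide 196, so the 14-stop reading is the one consistent with a whole number of cameras.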

Following image capture, the photographs were transferred to proprietary 3D-rendering software developed by Anatomage, Inc. Using this software, the 2D images were manually rendered into 3D models by matching corresponding surface points of each prosection across individual photographs. After rendering, all 3D models were viewed using the Anatomage InVivo software.
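In this study, surface points were matched manually in proprietary software. For readers unfamiliar with how correspondence search is commonly automated, the sketch below shows one widely used approach, swapped in here for illustration: nearest-neighbour matching of feature descriptors with a ratio test, which keeps a match only when the best candidate is clearly better than the runner-up. The descriptors are short hypothetical vectors; real pipelines compute much longer descriptors from image patches.

```python
import math
from typing import List, Sequence, Tuple


def distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two feature descriptors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_descriptors(desc_a: Sequence[Sequence[float]],
                      desc_b: Sequence[Sequence[float]],
                      ratio: float = 0.8) -> List[Tuple[int, int]]:
    """Pair each descriptor in photo A with its nearest neighbour in photo B.

    A pair is kept only if the best distance is clearly smaller than the
    second-best (ratio test), which discards ambiguous matches such as those
    on smooth or repetitive surfaces.
    """
    matches: List[Tuple[int, int]] = []
    if len(desc_b) < 2:
        return matches  # the ratio test needs at least two candidates
    for i, da in enumerate(desc_a):
        ranked = sorted(range(len(desc_b)), key=lambda j: distance(da, desc_b[j]))
        best, second = ranked[0], ranked[1]
        if distance(da, desc_b[best]) < ratio * distance(da, desc_b[second]):
            matches.append((i, best))
    return matches


# Hypothetical 3-element descriptors from two photographs of one specimen.
photo_1 = [[0.9, 0.1, 0.0], [0.0, 1.0, 0.0], [0.5, 0.5, 0.5]]
photo_2 = [[0.0, 0.95, 0.05], [0.88, 0.12, 0.0], [0.5, 0.5, 0.45], [0.52, 0.48, 0.53]]
print(match_descriptors(photo_1, photo_2))  # [(0, 1), (1, 0)]
```

The third descriptor in `photo_1` has two nearly equal candidates in `photo_2`, so it is rejected rather than risk a false correspondence.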

Faculty feedback interviews

Faculty at the Stanford University Division of Clinical Anatomy were interviewed to provide feedback on the photogrammetrically generated 3D models. None of the interviewed faculty were involved in the present study. All were current instructors in ongoing anatomy courses and used the physical prosections regularly in teaching. Feedback was collected in 20-minute one-on-one interviews built around a series of open-ended questions. All interviews were recorded and transcribed. The questions covered 4 general themes: authenticity of the 3D models, their usefulness for lecturers, their usefulness for students, and comparisons with other readily available pedagogical tools. The questions were meant to elicit qualitative, narrative data about the accuracy and utility of the models and thereby to provide insight into their possible benefits and drawbacks. Patterns and themes were identified across the faculty feedback and used for qualitative evaluation. As such, this method was neither a strict thematic analysis nor a strict survey; rather, it was intended to provide a broad, anecdotal picture of early-stage feedback. Much like the face validity interviews used to support certain measurement tools, these interviews were valuable for their early-stage insight into these 3D models.24 Future researchers might take these perspectives into account when designing focused, rigorous evaluations of the models’ pedagogical impact.

Results

Single-organ prosections

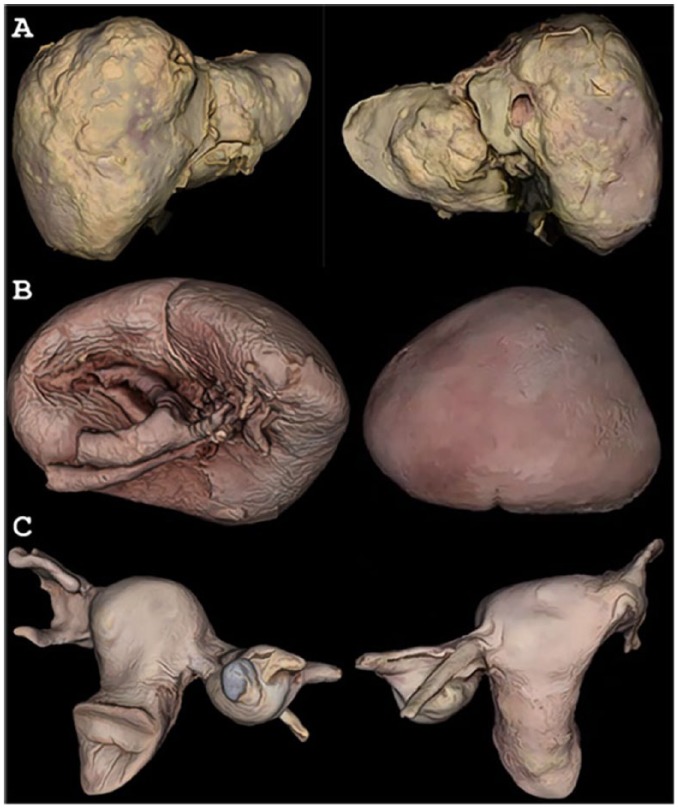

The original texture and color of the cancerous liver specimen were preserved in its 3D model, such that tumors were clearly distinguishable from normal liver tissue (Figure 1A). The spleen model also retained its original color and texture (Figure 1B); its digitally reproduced details showed subtle features, such as the entry of the splenic artery and the exit of the splenic vein. The uterus and liver models both highlighted photogrammetry’s potential for documenting unique pathologies in 3D (Figures 1A and 1C).

Figure 1.

Anterior and posterior views of Single-Organ Specimen 3D Models. (A) Liver with metastatic tumors, (B) spleen, and (C) uterus with fibroids and ovarian cyst.

Models shown on the Anatomage, Inc. InVivo software.

Multiple-organ prosections

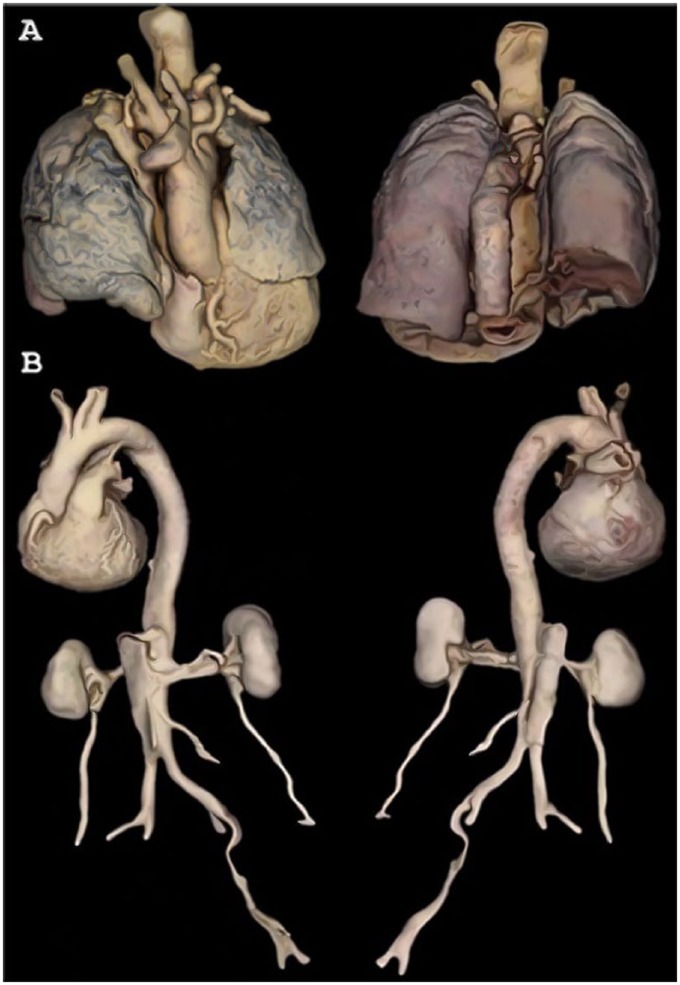

The Multiple-Organ Specimens were rendered in 3D with remarkable detail and integrity (Figure 2). For example, contours in the heart-and-lungs model revealed the fissures of the lungs, allowing clear distinction between lobes (Figure 2A). In the heart-and-kidneys specimen (anterior view), the coronary arteries were visible even when the model was zoomed out to show the whole specimen (Figure 2B).

Figure 2.

Anterior and posterior views of Multiple-Organ Specimen 3D Models. (A) Heart with great vessels and lungs and (B) heart with great vessels, abdominal aorta, renal arteries, kidneys, and ureters.

Models shown on the Anatomage, Inc. InVivo software.

Color reproduction was especially nuanced in the heart-and-lungs model (Figure 2A). Black tar deposits were visible within the lung tissue, and the posterior view showed red coloration of the posterior lungs, resulting from blood pooling while the cadaver was positioned supine for dissection.

Internal cavities

The sagittal cross section of a head (viscera removed) showed that photogrammetry can render internal cavities in 3D (Figure 3). Head and neck cavities were clearly distinguishable in this model, and shading conveyed relative depth: shallower spaces (such as the spinal canal) appeared lighter and less shadowed, whereas deeper negative spaces (such as the cranial cavity) appeared darker.

Figure 3.

Sagittal skull cross section.

Model shown on the Anatomage, Inc. InVivo software.

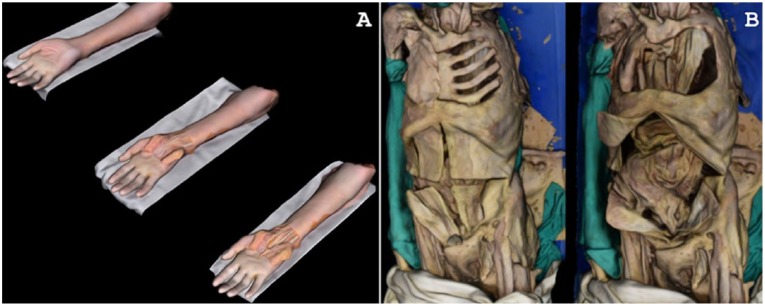

Stepwise dissections

Stepwise dissections were documented for the anterior forearm and the torso (Figure 4). The anterior forearm featured 3 stages of dissection (Figure 4A). The textural precision of the stepwise-dissected models fell short of that of the other models in this study (compare Figure 4 with Figures 1 to 3). This decreased resolution is likely attributable to reflective moisture from the “wetting fluid” applied to the forearm during dissection to maintain color and texture. Reflective surfaces are known to decrease the resolution and quality of photogrammetric 3D models.

Figure 4.

Stepwise dissection models. (A) Anterior forearm and wrist and (B) anterior torso.

Models shown on the Anatomage, Inc. InVivo software.

Two dissection stages were shown for the torso (Figure 4B). The torso 3D models were finely detailed; for example, ribs 7 to 10 were visible under the skin on the left side of the specimen. However, 2 issues were encountered when documenting this prosection. First, the specimen was (like the forearm) moistened between dissections, lowering the resolution of the final model. Second, the torso was longer than the diameter of the camera arch, so the standard procedure (210° of rotation, 15° between images) could not be applied. Instead, image capture was divided into 2 portions: the superior and inferior torso were each captured over 120° of rotation (10° between images), and the 2 portions were combined into one model during 3D rendering.

User experience

This section provides a brief anecdotal description of the digital 3D prosection models and their user experience. These are presented to describe how the models are used and how students and others might interact with them.

Both anatomy students and their instructors are the intended users of these 3D models. At Stanford University, both groups interacted with the models through an iPad application developed at Stanford. The application ran on any device with a version of iOS later than iOS 6, although access to this specific application was restricted to Stanford students and instructors. A similar resource may become available to other institutions at a later time. Using the touch screen, students could zoom, rotate, and annotate structures as they wished. For example, a student viewing the heart-and-lungs dissection might start by examining the anterior surface (Figure 2A). The student could then rotate the model 180° about the vertical axis to view the path of the descending aorta on the posterior side, and then tilt it 90° to reveal the inferior surfaces of the heart and lungs. The student could also zoom in on the left anterior descending artery. These interactions are smooth and simple within the user interface, so any student or instructor can investigate a specimen with freedom and control. In short, the models provide a controlled, interactive user experience in which students can actively examine any position or facet of a given specimen.

Faculty feedback

Instructors at the Stanford University Division of Clinical Anatomy responded positively to the 3D prosection models. Prior to this study, these faculty taught almost exclusively from a combination of 2D images, physical prosections, and cadaveric dissection. The institution itself trains approximately 100 medical students each year in gross anatomy courses based on whole-body dissection. In addition, 50 to 60 undergraduate students participate in a separate, analogous course. Other courses teach solely from prosections, for example, an undergraduate anatomy course for bioengineering students. Finally, there is one course, “Virtual to Real,” which teaches basic anatomy to undergraduates using both digital 3D models and physical prosections. Other smaller elective courses are also offered throughout the academic year.

The instructors themselves come from a diverse range of backgrounds. Four were originally trained as surgeons or anatomists, and other instructors include 1 pathologist, 1 physical therapist, and 2 retired dentists. In the medical school course, didactic lectures are conducted by 1 of 2 professors, whereas most faculty attend the dissection laboratory sessions. Medical students have access to an application of digital 3D anatomical models to supplement their learning. Prior to this study, these models were computerized representations not derived from physical specimens and were (and still are) used outside of class at the total discretion of students.

When interacting with the digital 3D prosection models developed in this study, faculty found the models anatomically representative and authentic to the original specimens. One lecturer noted that, as opposed to many computerized versions, the models “represent authentic anatomy because they are based on . . . real cadaver specimens. [The models] are extremely accurate, and represent very well what the actual prosections look like.” Other instructors noted that the detail of these models matches or exceeds that of many high-definition textbook images.

Stanford faculty also noted a diverse range of possible uses for the models. One instructor suggested using the models to create instructional videos: their authenticity would allow instructors to create videos with digital models nearly identical to the cadaveric specimens. A senior faculty member noted that such tools “could complement [current learning approaches] very well,” whereas another called the models “great for teaching.” Most instructors highlighted digital accessibility as a key strength compared with physical materials. The faculty did, however, identify one main weakness of the models: a lack of cross-sectional information. This limitation is shared by all physical models as well, including actual cadaveric specimens. Nonetheless, it reinforces the proposal that the models be used as adjuncts to traditional learning methods, such as cross-sectional anatomy instruction.

Instructors also noted the combined accuracy and interactivity of these models. This combination suggests the models could serve as effective study tools, outside of class: “The fact that [the model] is rotatable and controllable by the student, makes [these models] robust.” Highlighting the ability to manipulate and annotate these digital resources, one lecturer said that “the quality of the [model] is so good . . . that you could use them for quizzes—almost like remote [practical examinations].”

Lecturers were impressed that the models combined representative surface anatomy, interactivity, kinesthetic learning, and potential for annotation and self-examination. Referring to currently available 3D anatomical models, one faculty member said that the precision and authenticity of the versions “far exceed any animated, interactive model that I’ve seen.” Instructors were particularly excited about the potential for digital 3D prosection libraries, which could be accessed by both faculty and students.

Again, these faculty perspectives were early-stage, subjective attitudes toward the newly developed models shown in this study. Further evaluation is therefore required to assess the models’ accuracy and efficacy objectively.

Discussion

This study has presented an innovative use of photogrammetry: creation of photo-real, interactive 3D prosection models. The 3D models were authentic to their original specimens. In addition, irregular shapes and structures were captured with nuanced accuracy, highlighting photogrammetry’s ability to capture unique pathologies.

These results show that subtle anatomical features, such as the protrusion of coronary arteries or the pooling of blood in the posterior lungs, are distinctly visible in photogrammetrically generated 3D models. Similar digital models have been useful supplements in anatomy education, suggesting the same may be true for photogrammetric models.5,25 For example, recent studies indicate that “priming” medical students with realistic anatomical materials can relieve anxiety during initial cadaveric interactions.26,27 In addition, digital tools are more accessible than physical models because they can be delivered through smartphone, tablet, or computer applications.28 Such applications can allow for annotation and manipulation, providing students with interactive and realistic study tools.

The efficacy of computer-based anatomy education compared with traditional methods remains unproven.8,10–12,14 Indeed, multiple studies suggest that these tools contribute minimally to anatomy learning.11,29,30 In addition, much of the current debate surrounding digital 3D anatomical models rests on disparate studies, often performed at individual medical institutions, that report contradictory results. Medical educators have therefore not reached a consensus on the utility or place of digital 3D models in modern anatomy education. Learner characteristics further complicate the picture: studies have suggested that individuals with high visualization ability (VA) often perform better than their low-VA peers on tasks of anatomical spatial ability, irrespective of learning strategy.31,32 If true, this finding complicates the simple dichotomy of educational tools either having or not having educational benefit. Indeed, one review considered “factors related to learner characteristics” critical in the assessment of any 3D anatomical model.8

A different dichotomy therefore arises when considering digital 3D anatomical models. On one hand, new and effective tools must continue to be developed, bolstering and improving on the current set of available resources. On the other hand, evaluation of these tools must move beyond individual institutions to multi-institutional studies grounded in educational theory. Little current theory comprehensively addresses whether digital 3D models truly contribute to anatomy learning and skill development and, if so, how. As one reviewer noted, modern anatomy education requires studies that will “examine theories behind learning by using 3D tools and impact of learning by 3D models on the enhancement of knowledge.”8

This need becomes even more pressing when considering photogrammetry itself. This study is, to the authors’ knowledge, the first documented use of photogrammetry for the creation of interactive digital 3D prosection models. As such, there have been no prior studies on the efficacy of photogrammetric prosection models. Now that this method is available, such models may be used in large, comparative studies of 3D anatomical learning tools. These future studies will be necessary to characterize the pedagogical impacts of digital 3D anatomical models and to understand how photogrammetry compares with other methods of generating those models. In any case, photogrammetric 3D models would likely serve only as adjuncts in cadaver-based courses, especially as multimodal education continues to gain mainstream support in medical education.4,7

At present, then, it may be useful to consider other currently available methods of generating digital 3D anatomical models. A recent study by Graham et al33 compared 4 such methods, examining them for geometric accuracy, textural detail, and capacity for digital dissemination and use. These methods were (1) structured light scanning, (2) triangulation laser scanning, (3) photometric stereo, and (4) photogrammetry.

In structured light scanning, a fixed or handheld scanner projects light patterns onto an object and records how the surface deforms those patterns, collecting data on many surface points simultaneously. When a handheld scanner is used, this may involve rotating the object through 360° while the scanner is held in a fixed position. Although it requires considerable postprocessing, structured light scanning is highly efficient. Furthermore, structured light scanning appears to retain the greatest geometric fidelity of the 4 methods.33 As such, it is especially useful in developing digital models for 3D printing.
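Structured light scanners differ in the patterns they project, and the scheme below is not necessarily the one used by Graham et al. One common scheme is binary-reflected Gray code: the projector displays a sequence of stripe patterns, each camera pixel records whether it was lit or dark in each one, and that sequence identifies which projector column illuminated the pixel; triangulating between the pixel and that column then yields depth. The sketch covers only the encode/decode step. Gray code is used because adjacent columns differ in a single pattern, so a pixel on the boundary between two columns decodes to one or the other rather than to a distant column.

```python
def gray_encode(n: int) -> int:
    """Binary-reflected Gray code of n: neighbouring values differ in one bit."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Invert gray_encode by XOR-ing together all right shifts of g."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def stripe_states(column: int, num_patterns: int) -> list:
    """Lit (1) or dark (0) state of one projector column in each pattern,
    most significant pattern first."""
    g = gray_encode(column)
    return [(g >> (num_patterns - 1 - k)) & 1 for k in range(num_patterns)]


def decode_column(observed: list) -> int:
    """Recover the projector column from the lit/dark sequence a pixel saw."""
    g = 0
    for bit in observed:
        g = (g << 1) | bit
    return gray_decode(g)


# 10 patterns distinguish 2**10 = 1024 projector columns.
seen = stripe_states(300, 10)
print(seen)                 # [0, 1, 1, 0, 1, 1, 1, 0, 1, 0]
print(decode_column(seen))  # 300
```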

Triangulation laser scanning takes a slightly different approach. In this method, a laser is shone onto the object, and a camera locates the resulting laser dot.34 The laser, laser dot, and camera thus form a triangle. Using the laser-camera distance, the angle of the laser, and the angle of the camera, the location of the laser dot can then be determined. By collecting position data on many laser points, triangulation laser scanning tends to produce 3D models with high optical resolution. Using this method, Graham et al obtained an optical resolution of 0.025 mm on a Babylonian tablet measuring 22.6 mm × 17.0 mm × 3.7 mm, markedly better than the 0.1 mm optical resolution obtained for the same tablet with structured light scanning33 (no resolutions were listed for photometric stereo or photogrammetry). However, triangulation laser scanning captures no textural data. Furthermore, because the process relies on single laser points rather than the light patterns of structured light scanning, it can be time-intensive: Graham et al, for example, required 1.5 hours of imaging to capture the entire Babylonian tablet.
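The triangle described above can be solved with the law of sines. If b is the laser-camera baseline and α and β are the interior angles that the laser beam and the camera’s line of sight make with that baseline, the angle at the laser dot is 180° − α − β. The camera-to-dot distance is therefore b·sin α / sin(α + β), and the dot’s perpendicular distance from the baseline is b·sin α·sin β / sin(α + β). The sketch below places the laser at the origin and the camera on the x-axis; the coordinate convention and the numbers are hypothetical.

```python
import math


def laser_dot_position(baseline: float, alpha_deg: float, beta_deg: float):
    """Locate the laser dot in the plane of the laser-camera triangle.

    The laser sits at (0, 0) and the camera at (baseline, 0). alpha and beta
    are the interior angles at the laser and camera, measured from the
    baseline. Returns (x, z): position along the baseline and depth from it.
    """
    a, b = math.radians(alpha_deg), math.radians(beta_deg)
    if a <= 0 or b <= 0 or a + b >= math.pi:
        raise ValueError("angles do not form a triangle")
    # Law of sines: the angle at the dot is pi - a - b, and sin(pi - a - b) = sin(a + b).
    camera_to_dot = baseline * math.sin(a) / math.sin(a + b)
    z = camera_to_dot * math.sin(b)
    x = baseline - camera_to_dot * math.cos(b)
    return x, z


# Hypothetical geometry: 100 mm baseline, both rays at 45 degrees.
x, z = laser_dot_position(100.0, 45.0, 45.0)
print(round(x, 6), round(z, 6))  # 50.0 50.0
```

Sweeping the laser and repeating this calculation for each detected dot builds up the surface one point at a time, which is why the method can be slow on larger objects.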

Third, there is the method known as photometric stereo. In this method, objects are placed in dark rooms with one or multiple light sources and a camera in a fixed position.35 The light sources then illuminate the object from different angles. In postprocessing, the differences in shading under each lighting direction are used to estimate the orientation of the object’s surface, from which a 3D model is reconstructed. In the work by Graham et al, this method did not generate enough reliable data to create a full reconstruction of the Babylonian tablet; instead, 2 models were reconstructed from respective sides of the tablet. Nonetheless, these models presented very precise surface details with comparatively little postprocessing. Despite its overall geometric imprecision, therefore, this method may be useful in characterizing fine structural detail.
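Under the common simplifying assumption of a Lambertian (matte) surface, the brightness recorded under each light is I = ρ(L · n), where L is the unit light direction, n the unit surface normal, and ρ the albedo. With 3 non-coplanar lights, the 3 intensities give a 3 × 3 linear system for g = ρn; the length of g is the albedo and its direction is the normal. The sketch below solves that system for a single pixel by Cramer’s rule. The light directions and surface values are hypothetical, and the Lambertian model is an assumption that shiny or wet surfaces violate.

```python
import math


def det3(m):
    """Determinant of a 3 x 3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, rhs):
    """Solve m x = rhs by Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("light directions are coplanar; normal is not determined")
    solution = []
    for col in range(3):
        replaced = [row[:] for row in m]
        for r in range(3):
            replaced[r][col] = rhs[r]
        solution.append(det3(replaced) / d)
    return solution


def normal_and_albedo(lights, intensities):
    """Lambertian photometric stereo for one pixel: I_k = albedo * (L_k . n)."""
    g = solve3(lights, intensities)  # g = albedo * n
    albedo = math.sqrt(sum(v * v for v in g))
    return [v / albedo for v in g], albedo


# Hypothetical unit light directions and a surface patch tilted toward +y.
lights = [[0.0, 0.0, 1.0], [0.8, 0.0, 0.6], [0.0, 0.8, 0.6]]
true_normal, true_albedo = [0.0, 0.6, 0.8], 0.5
# Simulated pixel brightness under each light (all lights assumed to reach the patch).
intensities = [true_albedo * sum(l * n for l, n in zip(light, true_normal))
               for light in lights]
normal, albedo = normal_and_albedo(lights, intensities)
print([round(v, 3) for v in normal], round(albedo, 3))
```

Integrating the per-pixel normals into a height map is a separate step, which helps explain why photometric stereo can capture fine surface detail while remaining geometrically imprecise overall.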

Finally, for close-range photogrammetry (photogrammetry at distances of less than 300 m), Graham et al reported mixed results. On one hand, the authors found it to be a cost-effective and time-efficient method of generating digital 3D models. On the other hand, the method was quite susceptible to problems with lighting and background. This finding was reinforced by the present study, which found that smooth or reflective surfaces can decrease the resolution of photogrammetric 3D models. Indeed, such susceptibilities can affect the reliability and reproducibility of any imaging method. Nonetheless, the present authors suggest that these limitations do not undermine the inherent utility of photogrammetry in anatomy education; rather, they accentuate the need for high-quality specimens and well-controlled environments for data collection.

The present authors therefore concur that photogrammetry is an accessible and sound method for creating digital 3D models. This method could also conceivably be used to create digital libraries of 3D anatomical specimens.33 Such libraries would be invaluable in regions and institutions that lack cadavers or prosections and could otherwise serve as useful educational supplements. Without 3D capture, physical prosections can be distributed only as 2D photographs or as videos that move around the specimen to show it in 3D. Photogrammetric models thus combine the 3D advantage of videos, the high resolution of digital photographs, and the accessibility of both. Alternative methods of 3D model construction, such as those described above, might also be employed for this purpose; their comparative efficacy is beyond the scope of this study but would be useful to examine in later research.

For medical schools that do have cadaver-based anatomy courses, the stepwise dissection portion of this study highlights a novel instructive tool: 3D models that guide medical students through cadaver dissections. However, creating such a series through photogrammetry can be time-intensive, depending on the number of dissection stages. In-progress dissections also require regular moistening to maintain their quality, and such moisture reduces the textural precision of photogrammetric reconstructions. Further testing and optimization are therefore necessary to substantiate this use of photogrammetry.

Further research must also validate digital 3D prosection models as useful educational resources. Recent experimental and review studies have suggested that similar technologies do benefit anatomical comprehension.5,8 Given photogrammetry’s utility in creating large volumes of digital 3D models, the method is a convenient source for testable materials in examining this question of efficacy.

Limitations

This study was relatively small and exploratory. Accordingly, it did not apply photogrammetry to a comprehensive range of specimens. For example, prosections featuring muscles or bones, which require high detail to differentiate between structures, were not documented. Furthermore, as noted above, environmental factors such as difficult angles, poor lighting, and occlusions may decrease the quality of photogrammetric 3D models. These issues are nontrivial and are addressed here in turn. With respect to angles, the size of the documented prosections was limited by the radius (r) of the rotatable arch on which the cameras were fixed (see “Materials and Methods”). If specimen length was equal to or greater than 2r, the arch could not rotate sufficiently to capture the full range of angles required to reconstruct a precise 3D model. This explains, for instance, why the specimen in Figure 4B was captured only from the waist up.
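The size constraint described above reduces to a single inequality: a specimen of length L can be fully captured only if L < 2r. The short Python sketch below encodes that rule as a pre-capture check. It is illustrative only: the function names are hypothetical, and the arch radius used in the usage note is an assumed value, not the radius of the apparatus used in this study.

```python
def max_specimen_length(arch_radius_cm: float) -> float:
    """Exclusive upper bound on specimen length for full arch rotation.

    Encodes the rule stated in the text: if specimen length >= 2r,
    the arch cannot rotate through the full range of capture angles.
    """
    return 2.0 * arch_radius_cm


def can_capture_full_specimen(specimen_length_cm: float, arch_radius_cm: float) -> bool:
    """Return True if the specimen is short enough for the arch to sweep all angles."""
    if specimen_length_cm <= 0 or arch_radius_cm <= 0:
        raise ValueError("lengths must be positive")
    # Strict inequality: a specimen exactly 2r long is already too long.
    return specimen_length_cm < max_specimen_length(arch_radius_cm)
```

For a hypothetical arch radius of 60 cm, a 100 cm prosection passes the check, whereas a 170 cm specimen fails it and would need to be captured in part, as with the specimen in Figure 4B.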

Poor lighting can also present problems in photogrammetry, as evidenced by the results of Graham et al. However, lighting was not an issue in this study (except for the fluid-induced reflectivity of the specimens in Figure 4). As described in the methods, consistent lighting was achieved by placing 4 light sources, one at each corner of the table on which specimens were placed. This lighting environment eliminated shadows and darkened areas, benefiting the quality of the reconstructed models.

Occlusions had little impact on this study, although they certainly pose a challenge to photogrammetry when present. In essence, the quality of any given photogrammetric 3D prosection model is highly dependent on the physical prosection. If the physical specimen is free of occlusions, then the 3D model may be as well. If occlusions are present, then they may appear on the final 3D model or hinder its resolution.

This study, as a descriptive communication of a technical advance, was also limited by a lack of comparative or quantitative evaluation. As noted elsewhere, the study was intended to put forth a novel and potentially beneficial method of generating interactive, photo-real digital 3D models for anatomy education. Faculty feedback was presented as early-stage insight into instructor perceptions of this new resource. Future studies of photogrammetric 3D prosection models are therefore needed to (1) quantitatively assess their optical resolution and geometric fidelity, as in Graham et al, and (2) evaluate their effect on learner comprehension compared with other digital and physical learning tools.

In similar fashion to Graham et al, quantitative assessment could be performed by measuring the optical resolution and geometric fidelity of photogrammetric 3D prosection models. Pedagogical evaluation could begin with single-institution studies comparing student performance with and without the models. If the models were shown to benefit student performance, they could then be included in larger, multi-institution studies comparing various digital 3D learning tools. These assessments are beyond the scope of the present exploratory study, but both forms of evaluation, technical and pedagogical, should be performed to justify further use of photogrammetric 3D prosection models.
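As one possible way to quantify geometric fidelity, the same landmark-to-landmark distances could be measured on the physical prosection (for example, with calipers) and on the digital model, and the paired values compared. The Python sketch below computes mean absolute error, root-mean-square error, and mean absolute percentage error for such pairs. This is an assumed protocol for illustration, not necessarily the method used by Graham et al; it also assumes the model has already been scaled to millimetres (for example, with a calibration target), since an uncalibrated photogrammetric reconstruction has arbitrary scale. The function name and output keys are hypothetical.

```python
import math
from typing import Sequence


def fidelity_metrics(physical_mm: Sequence[float], model_mm: Sequence[float]) -> dict:
    """Compare paired linear measurements (physical specimen vs. 3D model).

    Index i holds the same landmark-to-landmark distance measured on the
    physical prosection and on the (scaled) photogrammetric model.
    Returns mean absolute error (mm), root-mean-square error (mm), and
    mean absolute percentage error (%).
    """
    if len(physical_mm) != len(model_mm) or len(physical_mm) == 0:
        raise ValueError("need equal-length, non-empty measurement lists")
    if any(p <= 0 for p in physical_mm):
        raise ValueError("physical measurements must be positive")

    # Signed error of each model measurement relative to the physical one.
    diffs = [m - p for p, m in zip(physical_mm, model_mm)]
    n = len(diffs)

    mae = sum(abs(d) for d in diffs) / n
    rmse = math.sqrt(sum(d * d for d in diffs) / n)
    mape = 100.0 * sum(abs(d) / p for d, p in zip(diffs, physical_mm)) / n
    return {"mae_mm": mae, "rmse_mm": rmse, "mape_pct": mape}
```

For example, physical distances of 50 mm and 100 mm measured as 51 mm and 98 mm on the model yield a mean absolute error of 1.5 mm and a mean absolute percentage error of about 2%. RMSE is reported alongside MAE because it weights larger local distortions more heavily.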

Finally, photogrammetry has its own inherent limitations in the creation of digital 3D prosection models. First, a photogrammetric model can capture anatomical detail only to the level present in the original specimen. Prosection quality is thus a limiting factor for 3D models; anatomical specimens must be well dissected and maintained if they are to be used for this purpose. Second, smooth and reflective surfaces often produce suboptimal 3D models, so prosections should not be moistened prior to image capture. Third, while photogrammetric image capture is relatively simple, data processing can be time-intensive. Aligning hundreds of 2D images, applying a surface mesh, and refining the final 3D model involves substantial user interaction and thus requires well-trained personnel.

Despite these limitations, this study demonstrates photogrammetry’s potential for creating digital, interactive resources for anatomy education. The photo-real 3D models produced could substantially enrich the anatomy learning experience. Students and instructors alike may benefit from their authenticity, interactivity, ease of use, and digital nature. The small scope of this study could be addressed through future, larger-scale application of these methods; for example, the method may be scaled to develop a digital library of 3D prosection models. Such a resource could augment traditional anatomical education and enable further evaluation of high-tech educational tools.

Acknowledgments

The authors of this study would like to thank those who have donated their bodies to medical education, through the Stanford Willed Body Program. The authors would also like to acknowledge Mr Christopher Dolph, who maintains the dissection laboratory at the Stanford University Division of Clinical Anatomy, and Sung Joo Park of Anatomage, Inc., for his technical contributions. An earlier version of the abstract for this study was presented at the 2017 conference of the American Association of Clinical Anatomists in Minneapolis, MN.

Footnotes

Funding: The author(s) received no financial support for the research, authorship, and/or publication of this article.

Declaration of conflicting interests: The author(s) declared the following potential conflicts of interest with respect to the research, authorship, and/or publication of this article: The authors of this study are all affiliates of the Stanford University Division of Clinical Anatomy, which collaborates with Anatomage, Inc. for medical education purposes only. Anatomage, Inc. may patent and profit from the described method in the future. None of the authors have any financial ties to Anatomage, Inc.

Author Contributions: AHP and ASP contributed equally to this study.

ORCID iD: Aldis H Petriceks https://orcid.org/0000-0001-5136-1023

References

- 1. Parker LM. Anatomical dissection: why are we cutting it out? dissection in undergraduate teaching. ANZ J Surg. 2002;72:910–912. doi: 10.1046/j.1445-2197.2002.02596.x.

- 2. McLachlan JC, Patten D. Anatomy teaching: ghosts of the past, present and future. Med Educ. 2006;40:243–253. doi: 10.1111/j.1365-2929.2006.02401.x.

- 3. Raja DS, Sultana B. Potential health hazards for students exposed to formaldehyde in the gross anatomy laboratory. J Environ Health. 2012;74:36–40. http://www.ncbi.nlm.nih.gov/pubmed/22329207.

- 4. Johnson EO, Charchanti AV, Troupis TG. Modernization of an anatomy class: from conceptualization to implementation. A case for integrated multimodal-multidisciplinary teaching. Anat Sci Educ. 2012;5:354–366. doi: 10.1002/ase.1296.

- 5. Lim KHA, Loo ZY, Goldie SJ, Adams JW, McMenamin PG. Use of 3D printed models in medical education: a randomized control trial comparing 3D prints versus cadaveric materials for learning external cardiac anatomy. Anat Sci Educ. 2016;9:213–221. doi: 10.1002/ase.1573.

- 6. Drake RL, McBride JM, Lachman N, Pawlina W. Medical education in the anatomical sciences: the winds of change continue to blow. Anat Sci Educ. 2009;2:253–259. doi: 10.1002/ase.117.

- 7. Rizzolo LJ, Rando WC, O’Brien MK, Haims AH, Abrahams JJ, Stewart WB. Design, implementation, and evaluation of an innovative anatomy course. Anat Sci Educ. 2010;3:109–120. doi: 10.1002/ase.152.

- 8. Azer SA, Azer S. 3D anatomy models and impact on learning: a review of the quality of the literature. Heal Prof Educ. 2016;2:80–98. doi: 10.1016/j.hpe.2016.05.002.

- 9. McMenamin PG, Quayle MR, McHenry CR, Adams JW. The production of anatomical teaching resources using three-dimensional (3D) printing technology. Anat Sci Educ. 2014;7:479–486. doi: 10.1002/ase.1475.

- 10. Keedy AW, Durack JC, Sandhu P, Chen EM, O’Sullivan PS, Breiman RS. Comparison of traditional methods with 3D computer models in the instruction of hepatobiliary anatomy. Anat Sci Educ. 2011;4:84–91. doi: 10.1002/ase.212.

- 11. Khot Z, Quinlan K, Norman GR, Wainman B. The relative effectiveness of computer-based and traditional resources for education in anatomy. Anat Sci Educ. 2013;6:211–215. doi: 10.1002/ase.1355.

- 12. Preece D, Williams SB, Lam R, Weller R. “Let’s get physical”: advantages of a physical model over 3D computer models and textbooks in learning imaging anatomy. Anat Sci Educ. 2013;6:216–224. doi: 10.1002/ase.1345.

- 13. Pujol S, Baldwin M, Nassiri J, Kikinis R, Shaffer K. Using 3D modeling techniques to enhance teaching of difficult anatomical concepts. Acad Radiol. 2016;23:507–516. doi: 10.1016/j.acra.2015.12.012.

- 14. Nicholson DT, Chalk C, Funnell WRJ, Daniel SJ. Can virtual reality improve anatomy education? a randomised controlled study of a computer-generated three-dimensional anatomical ear model. Med Educ. 2006;40:1081–1087. doi: 10.1111/j.1365-2929.2006.02611.x.

- 15. Müller-Stich BP, Löb N, Wald D, et al. Regular three-dimensional presentations improve in the identification of surgical liver anatomy—a randomized study. BMC Med Educ. 2013;13:131. doi: 10.1186/1472-6920-13-131.

- 16. Abid B, Hentati N, Chevallier J-M, Ghorbel A, Delmas V, Douard R. Traditional versus three-dimensional teaching of peritoneal embryogenesis: a comparative prospective study. Surg Radiol Anat. 2010;32:647–652. doi: 10.1007/s00276-010-0653-1.

- 17. Ruisoto P, Juanes JA, Contador I, Mayoral P, Prats-Galino A. Experimental evidence for improved neuroimaging interpretation using three-dimensional graphic models. Anat Sci Educ. 2012;5:132–137. doi: 10.1002/ase.1275.

- 18. Estevez ME, Lindgren KA, Bergethon PR. A novel three-dimensional tool for teaching human neuroanatomy. Anat Sci Educ. 2010;3:309–317. doi: 10.1002/ase.186.

- 19. Park JS, Chung MS, Hwang SB, Shin BS, Park HS. Visible Korean human: its techniques and applications. Clin Anat. 2006;19:216–224. doi: 10.1002/ca.20275.

- 20. Schenk T. Introduction to photogrammetry. Department of Civil and Environmental Engineering and Geodetic Science, The Ohio State University; 2005:79–95. http://www.mat.uc.pt/~gil/downloads/IntroPhoto.pdf.

- 21. Dirven R, Hilgers FJM, Plooij JM, et al. 3D stereophotogrammetry for the assessment of tracheostoma anatomy. Acta Otolaryngol. 2008;128:1248–1254. doi: 10.1080/00016480801901717.

- 22. Tunali S. Digital photogrammetry in neuroanatomy. Neuroanatomy. 2008;7:47–48.

- 23. Nocerino E, Menna F, Remondino F, et al. Application of photogrammetry to brain anatomy. Paper presented at: 2nd International ISPRS Workshop on PSBB: The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences; May 15-17, 2017; Moscow, Vol XLII-2/W4:213–219. doi: 10.5194/isprs-archives-XLII-2-W4-213-2017.

- 24. Paul F, Connor L, McCabe M, Ziniel S. The development and content validity testing of the Quick-EBP-VIK: a survey instrument measuring nurses’ values, knowledge and implementation of evidence-based practice. J Nurs Educ Pract. 2016;6:118–126. doi: 10.5430/jnep.v6n5p118.

- 25. Chen S, Pan Z, Wu Y, et al. The role of three-dimensional printed models of skull in anatomy education: a randomized controlled trail. Sci Rep. 2017;7:575. doi: 10.1038/s41598-017-00647-1.

- 26. Arráez-Aybar L-A, Casado-Morales MI, Castaño-Collado G. Anxiety and dissection of the human cadaver: an unsolvable relationship? Anat Rec B New Anat. 2004;279B:16–23. doi: 10.1002/ar.b.20022.

- 27. Boeckers A, Brinkmann A, Jerg-Bretzke L, Lamp C, Traue HC, Boeckers TM. How can we deal with mental distress in the dissection room? an evaluation of the need for psychological support. Ann Anat. 2010;192:366–372. doi: 10.1016/j.aanat.2010.08.002.

- 28. Lewis TL, Burnett B, Tunstall RG, Abrahams PH. Complementing anatomy education using three-dimensional anatomy mobile software applications on tablet computers. Clin Anat. 2014;27:313–320. doi: 10.1002/ca.22256.

- 29. Garg AX, Norman GR, Eva KW, Spero L, Sharan S. Is there any real virtue of virtual reality?: the minor role of multiple orientations in learning anatomy from computers. Acad Med. 2002;77:S97–S99. http://www.ncbi.nlm.nih.gov/pubmed/12377717.

- 30. Donnelly L, Patten D, White P, Finn G. Virtual human dissector as a learning tool for studying cross-sectional anatomy. Med Teach. 2009;31:553–555. doi: 10.1080/01421590802512953.

- 31. Nguyen N, Mulla A, Nelson AJ, Wilson TD. Visuospatial anatomy comprehension: the role of spatial visualization ability and problem-solving strategies. Anat Sci Educ. 2014;7:280–288. doi: 10.1002/ase.1415.

- 32. Nguyen N, Nelson AJ, Wilson TD. Computer visualizations: factors that influence spatial anatomy comprehension. Anat Sci Educ. 2012;5:98–108. doi: 10.1002/ase.1258.

- 33. Graham CA, Akoglu KG, Lassen AW, Simon S. Epic dimensions: a comparative analysis of 3D acquisition methods. Int Arch Photogramm Remote Sens Spat Inf Sci. 2017;42:287–293. doi: 10.5194/isprs-archives-XLII-2-W5-287-2017.

- 34. Malhotra A, Gupta K, Kant K. Laser triangulation for 3D profiling of target. Int J Comput Appl. 2011;35:47–50.

- 35. Woodham RJ. Photometric method for determining surface orientation from multiple images. Opt Eng. 1980;19:191139. doi: 10.1117/12.7972479.