Abstract

Background

Autism spectrum disorder (ASD) is characterized by atypical behaviors in social environments and in reaction to changing events. While this dyad of symptoms is at the core of the pathology along with atypical sensory behaviors, most studies have investigated only one dimension. A focus on the sameness dimension has shown that intolerance to change is related to an atypical pre-attentional detection of irregularity. In the present study, we addressed the same process in response to emotional change in order to evaluate the interplay between alterations of change detection and socio-emotional processing in children and adults with autism.

Methods

Brain responses to neutral and emotional prosodic deviancies (mismatch negativity (MMN) and P3a, reflecting change detection and orientation of attention toward change, respectively) were recorded in children and adults with autism and in controls. Comparison of neutral and emotional conditions allowed distinguishing between general deviancy and emotional deviancy effects. Moreover, brain responses to the same neutral and emotional stimuli were recorded when they were not deviants to evaluate the sensory processing of these vocal stimuli.

Results

In controls, change detection was modulated by prosody: in children, this was characterized by a lateralization of emotional MMN to the right hemisphere, and in adults, by an earlier MMN for emotional deviancy than for neutral deviancy. In ASD, change detection was atypical overall, with an earlier MMN and a larger P3a than in controls, suggesting an unusual pre-attentional orientation toward any change in the auditory environment. Moreover, in children with autism, deviancy detection was characterized by a reduced MMN amplitude. In addition, in children with autism, unlike in adults with autism, the MMN was not modulated by prosody, and sensory processing of both neutral and emotional vocal stimuli appeared atypical.

Conclusions

Overall, change detection remains altered in people with autism. However, differences between children and adults with ASD evidence a trend toward normalization of vocal processing and of the automatic detection of emotion deviancy with age.

Keywords: Mismatch negativity (MMN), Autism spectrum disorder, Change detection, Emotion, Prosody, Children, Adults, EEG

Background

Autism spectrum disorder (ASD) is marked by persistent deficits in social communication and social interaction [1]. A key factor of this dimension is the failure to orient naturally to social stimuli [2] and in particular to display a preference for voice [3, 4] and to detect its emotional prosodic modulations [5]. Experimental investigations using event-related potentials (ERP) or functional magnetic resonance imaging (fMRI) have argued for the absence of a voice-preferential brain response in ASD. In children, no voice-preferential response was evidenced [6] in relation to a larger brain response to non-vocal stimuli in subjects with ASD than in controls [7]. In adults, an absence of voice-preferential response was also originally described [8] and attributed to a decreased brain activation to vocal stimuli, though this result has recently been refuted in a larger sample [9]. Overall, contradictory results emerged from the analysis of the auditory ERPs (i.e., P1, N1, P2, N2), which index sensory vocal processing. For the P1, for example, several studies evidenced no group differences between controls and ASD in both children [6, 10, 11] and adults [12] while other investigations pointed to a smaller P1 amplitude [13, 14] and a longer latency [14, 15] in ASD compared to controls. Alteration of voice processing per se has thus not been fully confirmed.

The “need for sameness” described in the second dimension of the pathology has been associated with atypical brain responses to change in patients with ASD. Oddball paradigms are frequently used to elicit the mismatch negativity (MMN) and, in some cases, P3a responses, which index automatic detection of change and automatic orientation of attention toward the change, respectively. In subjects with ASD, the severity of the symptomatology is positively correlated with an atypical MMN response (i.e., shorter MMN latency) [16]. Alterations of automatic detection processing were evidenced for changing stimuli without any social information [13, 16, 17]. Such a pre-attentional detection process can be triggered by change, but also by emotion. Hence, alterations of this mechanism could be found for both non-social and social stimuli and could constitute a general impairment in patients with ASD, which could be related to the two dimensions of the pathology.

Most MMN studies using vocal stimuli focused on the discrimination of sound features (e.g., intensity) or speech changes. Investigations of speech change detection in ASD have shown some particularities. In preschool-age children with ASD, an absence of MMN was observed in response to speech syllable change [4]. In both school-age children and adults, a change of vowel or consonant elicited an MMN of similar amplitude in ASD and control groups, but the MMN was delayed in ASD compared to controls [12, 13, 18, 19]. Smaller P3a was also observed in response to speech change in children and adults with autism compared to controls [12, 18, 20]. Finally, when physical attributes (e.g., fundamental frequency, intensity) of speech stimuli varied, several alterations of MMN and P3a amplitude and latency were evidenced. MMN amplitude was found to be either larger, smaller, or similar in ASD relative to controls. Similarly, MMN laterality or topography were also reported as different or similar between groups [11, 12, 18, 20–22]. For P3a, amplitude was described as smaller or typical in people with ASD compared to controls while the latency appeared shorter, longer or similar [12, 18, 20, 22]. Overall, these few studies question the existence of an atypical detection of change in speech stimuli in autism, regardless of age.

Subsequent research showed interest in the evaluation of vocal prosodic change detection in people with ASD. Emotional voices are prosodic stimuli that contain great amounts of social information. Their interpretation has even been associated with social competence during childhood and adolescence [23]. Atypical prosodic production is a hallmark of autism and was linked to social awkwardness [24] and to poor communication and socialization skills of children with ASD [25]. These production issues might be related to an atypical perception of the prosody of vocal stimuli [26]. Behavioral studies conducted in children and adults with ASD predominantly reported lower performances on tasks of emotional prosody perception compared to controls [5, 26–34]. However, some other investigations showed similar performances between groups [35–39] but patients included in these studies had a good cognitive level and thus could have developed compensatory strategies to succeed at these active tasks. Thus, performances of patients at behavioral tasks could remain uninformative about potential alterations of brain processes involved in the processing of vocal emotional stimuli especially considering that a large part of the ASD population was not represented in these studies.

The few studies that have attempted to analyze sensory ERPs to emotional voices showed a reduction of amplitude in people with ASD compared to controls [40, 41]. Only four studies have addressed the automatic detection of emotional vocal change in ASD so far. In children with Asperger syndrome (AS), using a paradigm with a word uttered with either tender or commanding prosodies as standard and deviant, respectively, the emotional change detection elicited a double-peaked MMN response [40]. The early MMN component was lateralized to the right hemisphere in control (CTRL) children but not in the AS group. Moreover, the late MMN latency was shorter in the AS group compared to the CTRL group. In another study in children with ASD with low verbal skills [42], a neutral standard and three emotional deviants (scornful, sad, and commanding prosodies) were presented. Scornful MMN and P3a amplitudes were reduced in children with ASD compared to CTRL while no group differences were reported for the other emotions. In adults with AS, using the same paradigm, smaller MMN amplitude was evidenced in the right hemisphere for the scornful deviant compared to controls [43]. For the commanding deviant, MMN displayed a different topography in the AS group and a delayed latency. Here again, the sad MMN remained intact in ASD. Another study showed smaller P3a amplitude for angry condition in adults with ASD compared to controls [41]. Overall, these studies described either impaired or intact automatic detection of emotional changes depending on the population (age and diagnosis), the paradigm, and the emotion. Though comparing different emotional vocal stimuli, most of these studies did not control for acoustic features. 
Emotional prosody is based on first-order acoustical variations (e.g., fundamental frequency, intensity) known to modulate MMN [44]; subjects with ASD are particularly sensitive to the influence of these factors [16, 17], yet they have not been controlled, making it difficult to assess the origin of group differences. The use of acoustically matched non-vocal sounds in the Fan and Cheng study [41] showed that most of the between-group amplitude differences evidenced in response to emotional sounds were related to pure acoustic variations and can be found with stimuli without any emotional content. Moreover, none of these studies compared the detection of emotional deviancy to neutral deviancy; thus, the impact of the emotional component per se could not be determined. Finally, potential differences regarding these brain processes between children and adults with ASD are still unknown. In CTRL, prosodic deviancy triggered a right-lateralized MMN [45] that appeared to be either earlier or larger [45–51] in response to emotional than to neutral deviancy in adults while no emotion-related differences were found in children [45]. Altogether, age-related modifications of emotional change detection are still poorly investigated in both CTRL and ASD. Several essential questions regarding specific vocal emotional change detection in ASD thus remain unanswered.

Do people with autism present an atypical detection of change regardless of prosody (neutral, emotional) or do they have a specific alteration of emotional change detection? In view of the previous findings, we hypothesized that people with autism present an alteration of the detection of change regardless of prosody (neutral, emotional), together with an impairment for emotion-specific change detection. Based on the scarce literature on emotional prosody change detection, we predicted that MMN and P3a amplitudes would be reduced in ASD groups compared to CTRL. However, given the inconsistency of previous findings regarding MMN latency, no specific hypotheses could be drawn about this parameter. In addition, we also predicted an atypical response to the emotional change, characterized by a lack of lateralization of the emotional MMN in children with ASD compared to CTRL children, in whom emotional MMN appears right-lateralized. To address these hypotheses, a paradigm composed of stimuli with tightly controlled acoustic parameters was used to elicit brain responses to neutral and emotional deviancy. These responses were compared between groups (CTRL, ASD) and across age (children, adults) to evaluate potential age-related differences.

Methods

Participants

Fifteen children with ASD (7–11 years) and 16 adults with ASD (18–37 years; Table 1) were recruited through the Child Psychiatry Department and the Autism Resource Centre of Tours. An experienced team of clinicians diagnosed the participants according to DSM-5 criteria [1] and by using ADI-R and ADOS [52, 53]. Fifteen healthy children and 16 healthy adults also participated in the study as control participants (CTRL; Table 1). None of the CTRL reported any developmental difficulties in language or sensorimotor acquisition. For all participants, no disease of the central nervous system, infectious or metabolic disease, epilepsy, or abnormal hearing was reported. Although most patients were not medicated, we report neuroleptic treatment for three adults and one child, anxiolytic for one adult, and methylphenidate for two children. Intelligence quotients (verbal, performance) were obtained for 30 patients with psychometric tests adapted to their cognitive level [54, 55]. An estimation of verbal and performance IQ was performed in CTRL using four subtests (vocabulary, similarities, block design, and matrix) of the age-adapted Wechsler intelligence scales. Two-tailed t tests were used to determine if verbal and performance IQ differed between CTRL and ASD and also between children and adults with ASD. Both verbal and performance IQ scores were significantly lower in the ASD population than in the CTRL population. Moreover, the verbal IQ was significantly lower in children with ASD than in adults with ASD (Table 1).

Table 1.

Group characteristics

| | Children: CTRL | Children: ASD | Adults: CTRL | Adults: ASD |
|---|---|---|---|---|
| Age a | 9.8 ± 1.4 | 10.0 ± 1.4 | 26.2 ± 6.4 | 26.2 ± 6.8 |
| Sex ratio ♂/♀ | 12/3 | 13/2 | 14/2 | 14/2 |
| Verbal IQ b,c,* | 118 ± 19 | 75 ± 29 | 116 ± 16 | 95 ± 18 |
| Performance IQ b,* | 116 ± 16 | 81 ± 16 | 113 ± 12 | 90 ± 21 |
| ADOS d | – | 13 ± 6 | – | 13 ± 4 |

a Mean age (years) ± SD

b Verbal and performance IQ measured with Wechsler intelligence scale (average on 15 adults with ASD)

c Significant difference between children and adults with ASD (p = .03)

d ADOS sum of social interaction and communication scores (average on 14 children with ASD)

* Significant differences between CTRL and ASD (p < .05) for both children and adults

Informed written consent was obtained from all adult participants or from their legal guardian and from children’s parents. The entire experiment was performed with the assent of all participants (children or adults). The protocol was approved by the Ethics Committee of the University Hospital of Tours and complied with the principles of the Declaration of Helsinki.

Experimental design

The vowel /a/ uttered by different female speakers with either neutral or emotional prosody (anger, fear, happiness, surprise, disgust, sadness) was recorded with Adobe Audio 2.0. Stimuli were edited in order to have the same duration (400 ms) and loudness (70 dB SPL) and were validated on neurotypical samples of adults (n = 16; valence and emotion recognition) and children (n = 18; valence recognition) [45]. Selected stimuli displayed close mean fundamental frequencies (220–231 Hz).

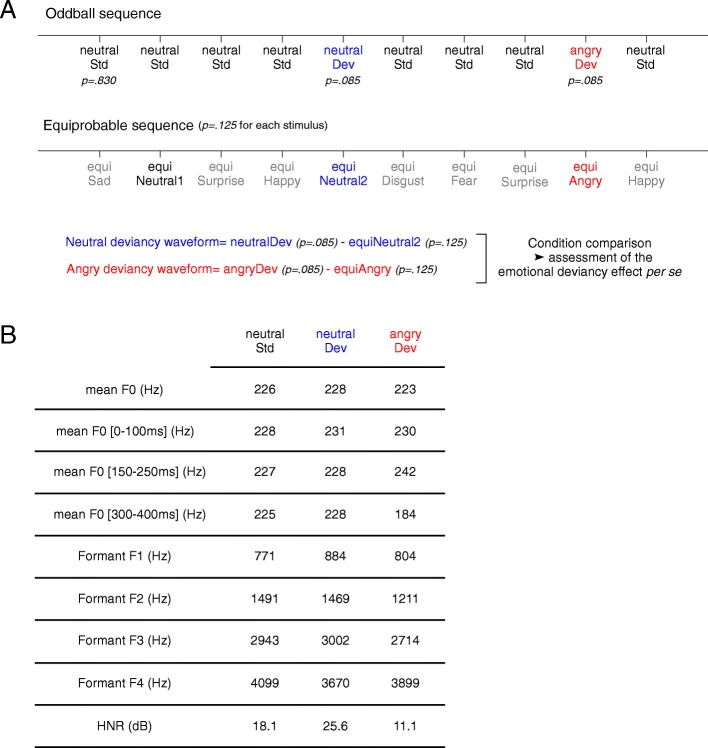

During EEG recording, participants were asked to watch a silent movie without subtitles while the sounds were delivered through speakers. Automatic detection processes were studied using passive oddball and equiprobable sequences to control for both sensory processing and neuronal adaptation effects [56]. The oddball sequence comprised 1172 neutral standards (neutralStd; identity 1; probability of occurrence, p = .83), 120 neutral deviants (neutralDev; identity 2), and 120 angry deviants (angryDev; identity 3 and emotional deviant) (p = .085 each), with the constraint that two deviants were separated by a minimum of three standards. The second sequence was composed of eight different stimuli presented with an equal probability of occurrence (p = .125; 120 stimuli): two neutral stimuli (equiNeutral1, equiNeutral2: the neutralStd and the neutralDev from the oddball sequence) and six emotional stimuli representing the six basic emotions (equiHappy, equiSad, equiSurprise, equiDisgust, equiFear, and equiAngry, i.e., angryDev of the oddball sequence). None of the stimuli were repeated more than two times in a row in order to avoid creation of a regularity pattern. The stimulus onset asynchrony was 700 ms (total recording time: 28 min). In order to obtain the brain response to deviancy detection (Fig. 1), the neutral difference wave was obtained by subtracting the ERP elicited by equiNeutral2 from that elicited by neutralDev (neutral deviancy waveform = neutralDev − equiNeutral2; same sound in equiprobable and oddball sequences). The same subtraction (angry deviancy waveform = angryDev − equiAngry) was applied to obtain the emotional difference wave. Since stimuli in the equiprobable sequence have identical acoustic characteristics and similar probability of occurrence as the oddball deviants, the resulting difference wave more likely reflects a genuine MMN than in the oddball paradigm [57]. 
Moreover, applying this subtraction to the emotional condition made it possible to control for the influence of emotional processing, which operates in both sequences, and thus to isolate the effect related to emotional deviancy. Finally, the direct comparison between neutral and emotional difference waves contrasted “identity deviancy” (neutral deviancy waveform) and “identity/emotion deviancy” (angry deviancy waveform), leaving only the emotional deviancy as a differential factor between conditions, which allowed the assessment of a specific emotional deviancy effect (Fig. 1). This direct condition comparison was performed with ANOVAs, which are detailed in the “Statistical analysis” section.
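The sequence constraints described above can be illustrated with a short sketch. The construction method used here (reserving three standards between consecutive deviants, then scattering the remaining standards at random) is an assumption made for illustration only: the text specifies the stimulus counts and the minimum spacing, not how the sequence was generated. The function name and the seed are hypothetical.

```python
import random


def make_oddball_sequence(n_std=1172, n_dev_each=120, min_gap=3, seed=0):
    """Build one oddball sequence with >= min_gap standards between deviants.

    Illustrative construction only (not taken from the paper).
    """
    rng = random.Random(seed)
    deviants = ["neutralDev"] * n_dev_each + ["angryDev"] * n_dev_each
    rng.shuffle(deviants)
    n_dev = len(deviants)

    # One slot before the first deviant, one between each pair of
    # consecutive deviants, and one after the last deviant.
    gaps = [0] + [min_gap] * (n_dev - 1) + [0]
    spare = n_std - sum(gaps)
    if spare < 0:
        raise ValueError("not enough standards to satisfy the spacing rule")

    # Scatter the remaining standards at random over all slots.
    for _ in range(spare):
        gaps[rng.randrange(len(gaps))] += 1

    sequence = []
    for gap, deviant in zip(gaps, deviants):
        sequence.extend(["neutralStd"] * gap)
        sequence.append(deviant)
    sequence.extend(["neutralStd"] * gaps[-1])
    return sequence


sequence = make_oddball_sequence()

# Probabilities of occurrence implied by the stimulus counts.
p_standard = sequence.count("neutralStd") / len(sequence)
p_deviant = sequence.count("angryDev") / len(sequence)

# Total duration: oddball sequence plus 8 x 120 equiprobable stimuli,
# one stimulus every 700 ms.
total_minutes = (len(sequence) + 8 * 120) * 0.7 / 60
```

Under these counts, the standard and each deviant have probabilities of about .83 and .085, and the two sequences together last just under 28 min, in line with the values reported above.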

Fig. 1.

a Illustration of oddball and equiprobable sequences composed of neutral standard (neutralStd) and neutral and angry deviants (neutralDev and angryDev) in the oddball sequence and of equiNeutral1, equiNeutral2, equiAngry, equiSurprise, equiHappy, equiDisgust, equiFear, and equiSad in the equiprobable sequence. Black, blue, and red ink colors highlight that the three stimuli of the oddball sequence were also presented in the equiprobable sequence. b Acoustic properties of all stimuli of interest

EEG recording and ERP measurements

The EEG was recorded from 64 active electrodes (ActiveTwo Systems Biosemi, The Netherlands) with a sampling rate of 512 Hz while eye movements were monitored using electrodes placed on left and right outer canthi and below the left eye. An electrode was placed on the nose of the subject, and data were re-referenced offline to its potential. The ELAN software package was used for the analysis of EEG-ERP [58]. The EEG signal was amplified and filtered (0.3 Hz high-pass filter). Artifacts resulting from eye movements were removed using independent component analysis (EEGLAB), and movement artifacts were discarded manually. A 30 Hz low-pass filter was applied and ERPs were averaged on an 800-ms time window including a 100-ms prestimulus baseline.

Artifact-free trials were averaged for each stimulus of interest (neutralDev, angryDev, equiNeutral2, equiAngry) in CTRL adults (107 ± 9, 107 ± 13, 106 ± 12, 107 ± 9), in adults with ASD (103 ± 8, 104 ± 11, 104 ± 10, 102 ± 10), in CTRL children (88 ± 14, 89 ± 14, 88 ± 15, 86 ± 14), and in children with ASD (98 ± 7, 99 ± 8, 91 ± 14, 94 ± 13). A main ANOVA performed on these data with condition (neutral, emotional) as within-subject factor and age (children, adults) and group (CTRL, ASD) as between-subject factors revealed no condition effect. An age effect (F(1,58) = 29.16, p < .001, ηp2 = .33) was found, due to a higher number of artifact-free trials in adults than in children, which is common in developmental studies. Moreover, a group by age interaction (F(1,58) = 4.77, p = .033, ηp2 = .08) was also observed because of a higher number of artifact-free trials in children with ASD than in CTRL children.

Throughout the manuscript, the terms “sensory/vocal processing” refer to obligatory auditory evoked potentials (P1, N1, P2, N2) to vocal stimuli while “change/deviance detection” refers to results related to MMN/P3a.

One cannot exclude that poor sensory encoding of the acoustic characteristics of each sound could influence the detection of changes between different sounds. Therefore, an analysis of sensory responses is an essential control to estimate the potential involvement of atypical sensory processing in MMN/P3a results. Accordingly, an analysis of auditory ERPs was performed on the P1 component, which is commonly observed in both children and adults in response to vocal stimuli. Peak amplitude and latency of P1 elicited by the equiNeutral2 and equiAngry stimuli were measured in a 50–150-ms time window in children and adults. Peak amplitudes and latencies of the MMN and P3a were measured in each subject on neutral and angry deviancy waveforms (Fig. 1). MMN was identified as the negative deflection occurring in a 120–220-ms time window for children and in a 110–210-ms time window for adults. P3a was identified as the positive deflection occurring between 240 and 340 ms for children and between 230 and 330 ms for adults.
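The measurement procedure can be sketched as follows: the difference wave is the deviant ERP minus the ERP to the same sound in the equiprobable sequence, and each component is taken as the most negative (MMN) or most positive (P3a) sample within its age-specific window. The waveforms below are synthetic Gaussian bumps invented for the example, not study data, and all function names are hypothetical; the actual measurements were made with the ELAN software.

```python
import math

FS_HZ = 512.0        # sampling rate reported in the text
EPOCH_START = -100.0  # ms, start of the 100-ms prestimulus baseline
N_SAMPLES = 410       # about 800 ms at 512 Hz

# Age-specific measurement windows (ms) taken from the text.
WINDOWS = {
    "children": {"MMN": (120, 220), "P3a": (240, 340)},
    "adults": {"MMN": (110, 210), "P3a": (230, 330)},
}


def gaussian(t, center, width):
    return math.exp(-((t - center) ** 2) / (2 * width ** 2))


def difference_wave(deviant_erp, equi_erp):
    """Deviant ERP minus ERP to the same sound in the equiprobable sequence."""
    return [d - e for d, e in zip(deviant_erp, equi_erp)]


def peak_in_window(wave, times, t_min, t_max, polarity):
    """Return (amplitude, latency) of the extreme sample inside the window."""
    indices = [i for i, t in enumerate(times) if t_min <= t <= t_max]
    pick = min if polarity == "negative" else max
    i_peak = pick(indices, key=lambda i: wave[i])
    return wave[i_peak], times[i_peak]


times = [EPOCH_START + i * 1000.0 / FS_HZ for i in range(N_SAMPLES)]

# Synthetic ERPs: a shared P1-like bump, plus an MMN-like negativity and a
# P3a-like positivity that are present only in the deviant response.
equi_angry = [1.0 * gaussian(t, 100, 15) for t in times]
angry_dev = [
    1.0 * gaussian(t, 100, 15)
    - 2.0 * gaussian(t, 160, 20)
    + 3.0 * gaussian(t, 280, 25)
    for t in times
]

angry_deviancy = difference_wave(angry_dev, equi_angry)
mmn_amp, mmn_lat = peak_in_window(
    angry_deviancy, times, *WINDOWS["adults"]["MMN"], polarity="negative")
p3a_amp, p3a_lat = peak_in_window(
    angry_deviancy, times, *WINDOWS["adults"]["P3a"], polarity="positive")
```

Because the P1-like activity is identical in both synthetic ERPs, it cancels in the subtraction, which is the rationale for using the equiprobable sequence as the control.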

Statistical analysis

P1 amplitude and latency to equiNeutral2 and equiAngry were analyzed with a mixed-design ANOVA performed on the electrode where the response culminates (Fz) with condition (neutral, emotional) as a within-subject factor and age (children, adults) and group (CTRL, ASD) as between-subject factors.

In order to assess differences between groups after P1, which is the only peak clearly identified in all conditions and groups, randomizations were performed for each stimulus (equiNeutral2 and equiAngry) on a 50–500-ms time window on all electrodes with a Guthrie-Buchwald correction over 25 ms [59]. This analysis identified periods of statistically significant between-group differences and is a suitable alternative for data that do not display measurable components.
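The temporal correction can be illustrated with a simplified run-length criterion: a point-by-point difference is retained only if it belongs to a run of consecutive significant samples lasting at least 25 ms. This is a sketch under stated assumptions, not the published procedure: the Guthrie-Buchwald method derives the minimum run length from the autocorrelation of the data, whereas here the 25-ms value is simply taken from the text and converted to samples at 512 Hz. Function names are hypothetical.

```python
import math


def min_run_samples(min_duration_ms=25.0, fs_hz=512.0):
    """Smallest number of consecutive samples spanning the minimum duration."""
    return math.ceil(min_duration_ms * fs_hz / 1000.0)


def keep_long_runs(significant, min_len):
    """Keep only runs of True values that are at least min_len samples long."""
    kept = [False] * len(significant)
    run_start = None
    # A trailing False closes any run that reaches the end of the window.
    for i, flag in enumerate(list(significant) + [False]):
        if flag and run_start is None:
            run_start = i
        elif not flag and run_start is not None:
            if i - run_start >= min_len:
                for j in range(run_start, i):
                    kept[j] = True
            run_start = None
    return kept


# Toy mask of point-by-point significance at one electrode:
# a 12-sample run (too short) followed by a 13-sample run (kept).
mask = [False] * 5 + [True] * 12 + [False] * 3 + [True] * 13 + [False] * 2
corrected = keep_long_runs(mask, min_run_samples())
```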

Two-tailed t tests were used to determine whether the amplitudes of evoked potentials cited below (MMN and P3a) significantly differed from zero.

A main mixed-design ANOVA analysis was performed for MMN amplitude on the electrodes where the response culminates (F3, Fz, F4, FC3, FCz, FC4, C3, Cz, C4, CP3, CPz, CP4, P3, Pz, P4) with condition, anterior-posterior (frontal, fronto-central, central, centro-parietal, parietal) and laterality (left, medial, right) as within-subject factors and age and group as between-subject factors. This selection of electrodes is consistent with previous MMN studies of emotional change detection in ASD [40–43]. MMN latency was evaluated with a mixed-design ANOVA on Cz with condition as within-subject factor and age and group as between-subject factors.

A mixed-design ANOVA was performed over Fz, FCz, and Cz for P3a amplitude with condition and anterior-posterior (frontal, fronto-central, central) as within-subject factors and age and group as between-subject factors, while a mixed-design ANOVA was performed on FCz for P3a latency.

Greenhouse-Geisser correction was applied when necessary. For significant results, the effect sizes are shown as ηp2. Post-hoc analysis (Newman-Keuls) was performed when needed to determine the origins of interactions.

For each significant result involving the factor “Group,” correlations between electrophysiological data (i.e., MMN and P3a amplitude and latency) and verbal/performance IQ scores were calculated to estimate the potential influence of cognitive skills on the measures of evoked potentials. To assess the significance of the correlation in each group, permutations were used to generate 15,000 theoretical correlations based on random pairs of IQ score and electrophysiological measure. This operation gave a distribution of correlation slopes under the null hypothesis of an absence of correlation. Observed correlations were considered significant if they fell outside the 95% CI (confidence interval) of the theoretical distribution. P values were calculated by counting the number of times the random samples yielded slopes greater than the empirical one. Confidence intervals around the slope were estimated by bootstrapping with replacement of electrophysiological/IQ pairs within each group.
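A minimal sketch of this within-group procedure is given below. Several details are assumptions, since the text does not specify them: the slope is computed by ordinary least squares, the p value is two-sided (based on absolute slopes), and the bootstrap interval is a simple percentile interval. The IQ and amplitude values are invented for the example and the function names are hypothetical.

```python
import random


def slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values are all identical")
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx


def permutation_p_value(xs, ys, n_perm=15000, seed=0):
    """Shuffle ys against xs to build the null distribution of slopes."""
    rng = random.Random(seed)
    observed = slope(xs, ys)
    shuffled = list(ys)
    n_extreme = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        # Two-sided comparison (assumption): count slopes at least as
        # extreme as the observed one in absolute value.
        if abs(slope(xs, shuffled)) >= abs(observed):
            n_extreme += 1
    return observed, n_extreme / n_perm


def bootstrap_ci(xs, ys, n_boot=15000, seed=1):
    """Percentile 95% CI of the slope, resampling (x, y) pairs with replacement."""
    rng = random.Random(seed)
    pairs = list(zip(xs, ys))
    slopes = []
    while len(slopes) < n_boot:
        sample = rng.choices(pairs, k=len(pairs))
        bx, by = zip(*sample)
        if len(set(bx)) < 2:  # slope undefined, draw again
            continue
        slopes.append(slope(bx, by))
    slopes.sort()
    return slopes[int(0.025 * n_boot)], slopes[int(0.975 * n_boot) - 1]


# Invented data for 15 participants: IQ scores and a measure that
# increases by 0.1 unit per IQ point, plus a small alternating offset.
iq = [70.0 + 3 * i for i in range(15)]
measure = [0.1 * q + 0.5 * (-1) ** i for i, q in enumerate(iq)]

observed_slope, p_value = permutation_p_value(iq, measure)
ci_low, ci_high = bootstrap_ci(iq, measure)
```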

To assess differences between ASD and CTRL groups, individual participant data were permuted across groups, that is, individuals were randomly assigned to either the ASD or the CTRL group; correlations were computed between IQ scores and electrophysiological data for permuted groups. Theoretical differences between slopes of permuted groups were computed. This operation was repeated 15,000 times to generate a theoretical distribution of group differences under the null hypothesis (no ASD/CTRL difference) with a 95% CI. Empirical group differences between slopes were deemed significant if they fell outside the 95% CI. The significance threshold for these tests was adjusted for multiple comparisons with a Bonferroni correction (p = .0063 for eight comparisons in adults and p = .0056 for nine comparisons in children).
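The between-group comparison and the corrected thresholds can be sketched in the same spirit. As before, this is an illustration under assumptions rather than the analysis code: slopes are ordinary least-squares slopes, the p value is two-sided, and the data pairs are invented. Function names are hypothetical.

```python
import random


def slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values are all identical")
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx


def group_slope(pairs):
    xs, ys = zip(*pairs)
    return slope(xs, ys)


def slope_difference_test(ctrl_pairs, asd_pairs, n_perm=15000, seed=0):
    """Permute participants across groups and compare slope differences."""
    rng = random.Random(seed)
    observed = group_slope(ctrl_pairs) - group_slope(asd_pairs)
    pooled = list(ctrl_pairs) + list(asd_pairs)
    n_ctrl = len(ctrl_pairs)
    n_extreme = 0
    for _ in range(n_perm):
        # Random reassignment of individuals to CTRL-sized and ASD-sized groups.
        rng.shuffle(pooled)
        diff = group_slope(pooled[:n_ctrl]) - group_slope(pooled[n_ctrl:])
        if abs(diff) >= abs(observed):  # two-sided (assumption)
            n_extreme += 1
    return observed, n_extreme / n_perm


# Invented (IQ-like score, measure) pairs: flat relation in one group,
# slope of 3 in the other.
ctrl = [(float(i), 0.2 * (-1) ** i) for i in range(15)]
asd = [(i + 0.5, 3.0 * (i + 0.5)) for i in range(15)]
observed_diff, p_value = slope_difference_test(ctrl, asd)

# Bonferroni-corrected thresholds reported in the text.
ALPHA = 0.05
threshold_adults = ALPHA / 8    # about .0063
threshold_children = ALPHA / 9  # about .0056
is_significant = p_value < threshold_children
```

The last line shows how a permutation p value would be compared with the corrected threshold for the nine comparisons performed in children.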

Results

Obligatory auditory ERPs to voice

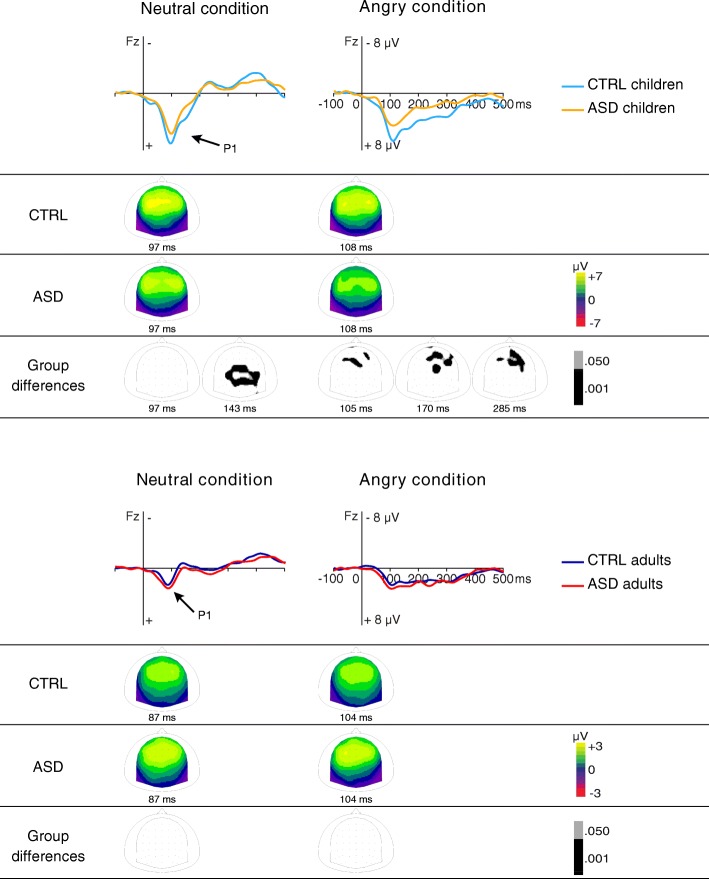

Larger P1 amplitude was observed in CTRL children than in children with ASD (p = .003) while no difference was observed between groups in adults (group by age interaction, F(1,58) = 8.61, p = .005, ηp2 = .13; Fig. 2). In both groups, P1 amplitude was generally larger in children than in adults (age effect, F(1,58) = 80.17, p < .001, ηp2 = .58; Fig. 2). Condition did not affect P1 amplitude.

Fig. 2.

Grand average auditory brain responses to neutral (equiNeutral2) and angry (equiAngry) stimuli. Scalp distribution of the P1 response is displayed for each group along with group difference scalp distributions obtained with randomizations performed on the 50–500-ms time window (Guthrie-Buchwald time correction). Amplitude differences were observed between children groups for equiNeutral2 (123–181 ms) and for equiAngry (92–127 ms, 152–193 ms, 255–322 ms) while no differences were seen between adult groups

Earlier P1 was evidenced in participants with ASD compared to CTRL participants (group effect, F(1,58) = 6.84, p = .011, ηp2 = .11). A P1 latency shortening was also observed in adults compared to children in the neutral condition for both groups (p = .001; condition by age interaction, F(1,58) = 5.89, p = .018, ηp2 = .09).

Randomizations performed over all electrodes in the 50–500-ms time window (Fig. 2) revealed amplitude differences between children groups (more positive response in CTRL than in ASD) for equiNeutral2 (123–181 ms) and for equiAngry (92–127 ms, 152–193 ms, 255–322 ms) while no amplitude differences were observed between adult groups.

Discrimination of neutral and emotional prosodic changes

In children and in adults, MMN and P3a were elicited by both neutral and emotional changes (Figs. 3 and 4). An early negativity was also observed in children regardless of group and condition.

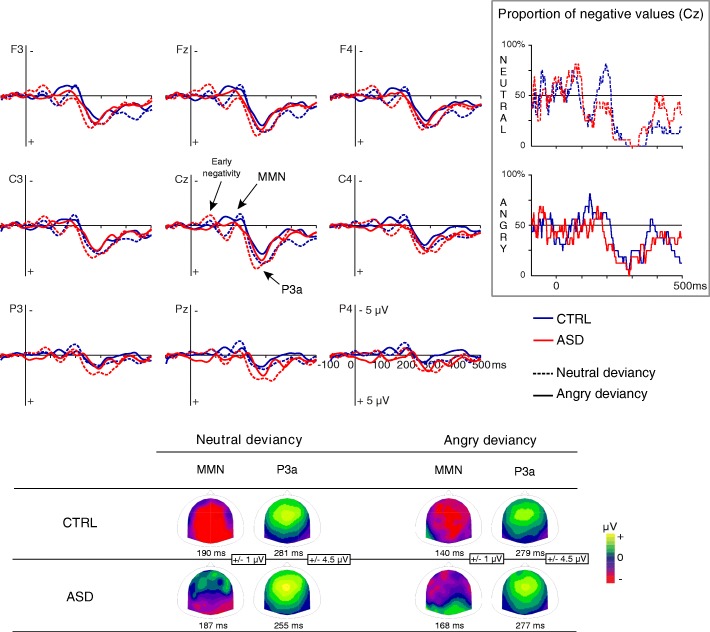

Fig. 3.

Brain responses to neutral and angry prosodic deviancies in CTRL adults and adults with ASD. ERPs of CTRL and ASD are displayed as blue and red lines, respectively. Scalp distributions of MMN and P3a are displayed at the bottom of the figure. In the upper right corner, the proportion (%) of participants displaying a negative response at Cz is represented at each time point for neutral and angry deviancies in CTRL and ASD

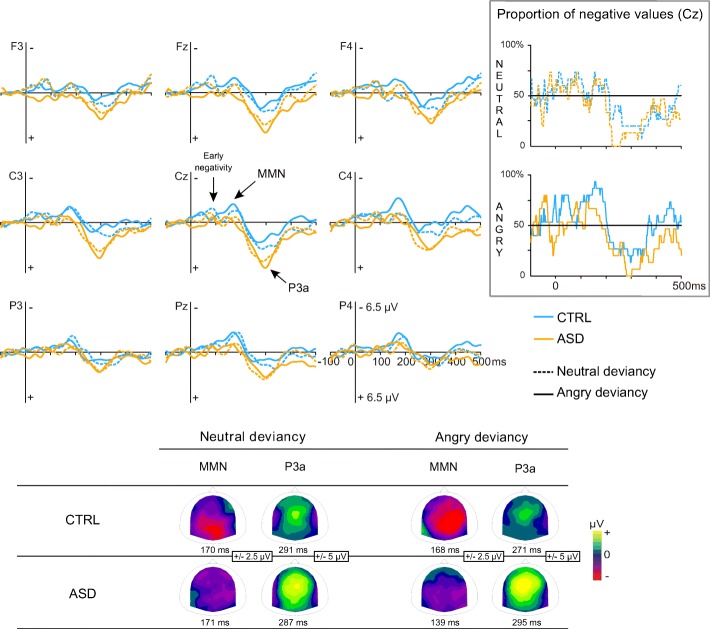

Fig. 4.

Brain responses to neutral and angry prosodic deviancies in CTRL children and children with ASD. ERPs of CTRL and ASD are displayed as light blue and orange lines, respectively. Scalp distributions of MMN and P3a are displayed at the bottom. In the upper right corner, the proportion (%) of participants displaying a negative response at Cz is represented at each time point for neutral and angry deviancies in CTRL and ASD

All the deflections studied in this section significantly differed from 0 (two-tailed t tests; p < .05).

MMN amplitude

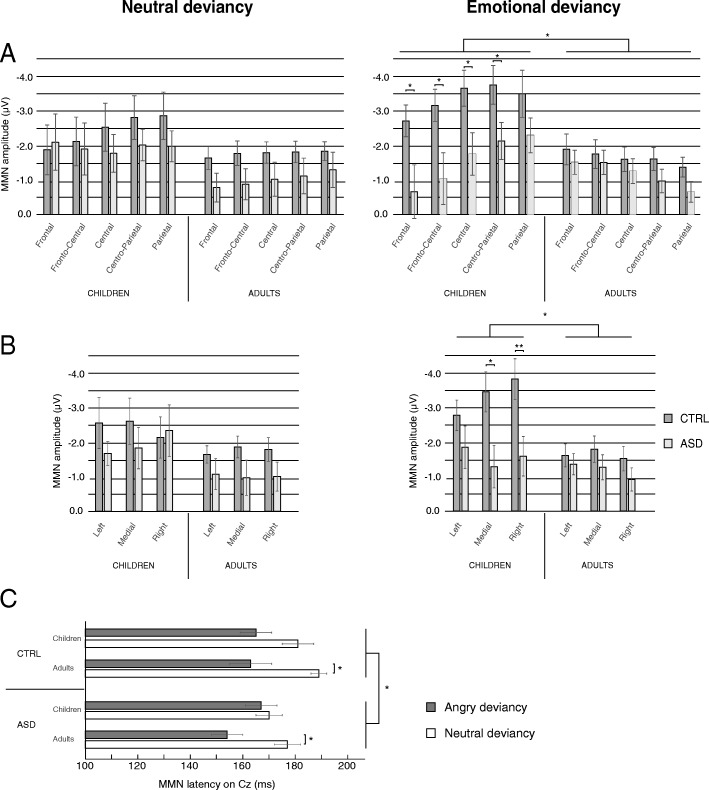

The main analysis performed on MMN amplitude evidenced a group effect (F(1,58) = 5.04, p = .029, ηp2 = .08) with a larger amplitude in CTRL than in ASD and an age effect (F(1,58) = 5.71, p = .020, ηp2 = .09) with a larger amplitude in children than in adults. This main analysis also revealed significant interactions on MMN amplitude between condition, anterior-posterior, age, and group (F(4,232) = 3.87, GG-corrected p = .034, ηp2 = .06; Fig. 5a) and between condition, laterality, age, and group (F(2,116) = 3.39, p = .037, ηp2 = .06; GG-corrected p = .054; Fig. 5b). As these interactions revealed that scalp distributions were different in children and in adults, MMN data were further analyzed separately for adults and children.

Fig. 5.

MMN amplitude (a, b) and MMN latency (c) for neutral and emotional deviancies. Main effects of group (CTRL/ASD) and age are reported for MMN amplitude while main effects of group and condition are shown for MMN latency. *p ≤ .05, **p ≤ .001. Error bars represent the standard error of the mean

Amplitude differences were analyzed with a mixed-design ANOVA performed on Fz, FCz, Cz, CPz, and Pz in adults and on Cz, CPz, and Pz in children, according to the MMN locations in each age group. Indeed, in both groups of children, MMN displayed a posterior distribution with reduced amplitude over fronto-central sites. Previous studies [60, 61] also reported a posterior negativity in school-aged children in the MMN latency range in response to vowel or syllable deviancy. Thus, depending on the characteristics of the stimuli used to elicit the mismatch process, the MMN elicited by vocal speech stimuli in children may or may not display the classical fronto-central distribution, which guided our electrode selection for the analysis.

In adults, regardless of the group, angry MMN displayed a fronto-central topography (p < .005) whereas neutral MMN displayed a broader topography (condition by anterior-posterior interaction, F(4,120) = 8.56, GG-corrected p < .001, ηp2 = .22; Fig. 3). No group or condition effects and no other interactions were observed on MMN amplitude. Despite this lack of significant group difference on amplitude, the observed proportion of adults with autism displaying a negative response at MMN latency appeared to differ from CTRL (Fig. 3). Hence, inter-individual variability in the ASD group might hide potential group differences as visual observation of the difference wave highlights that MMN tended to be smaller in ASD than in CTRL.

In children, MMN amplitude was significantly smaller in ASD compared to CTRL children, regardless of condition (group effect, F(1,28) = 4.40, p = .045, ηp2 = .14; Fig. 4). As proportions of children displaying a negative response appeared similar between groups (Fig. 4), this result appeared genuine. No condition effect and no interaction involving the group were shown.

As no interaction between group and electrode was shown, correlations between IQ measures and MMN amplitude were performed on the mean peak amplitude over the centro-parietal electrode pool (Cz, CPz, and Pz) in children. Correlations for the neutral and for the emotional conditions (Table 2) revealed no significant correlations between MMN amplitude and verbal or performance IQ scores in either group. Moreover, between-group comparison of slopes did not reveal any significant difference (Table 2). Overall, these findings suggest that MMN results are not likely to be explained by intellectual discrepancies between groups (Table 1).

Table 2.

Correlation slopes between electrophysiological measures and verbal or performance IQ scores in the CTRL and ASD children groups and between-group comparisons

| | Verbal IQ: CTRL | Verbal IQ: ASD | Verbal IQ: group difference | Performance IQ: CTRL | Performance IQ: ASD | Performance IQ: group difference |
|---|---|---|---|---|---|---|
| MMN amplitude (Cz, CPz, Pz) | | | | | | |
| Neutral | .02 [−.03; .11] p = .57 | −.01 [−.05; .03] p = .55 | .03 p = .25 | −.06 [−.15; .01] p = .21 | .02 [−.04; .08] p = .61 | −.08 p = .09 |
| Emotional | −.01 [−.08; .11] p = .84 | .00 [−.03; .05] p = .76 | −.01 p = .59 | −.02 [−.14; .07] p = .71 | .06 [−.01; .12] p = .10 | −.08 p = .03 |
| MMN emotional laterality effect (C4, CP4, P4) | .01 [−.06; .10] p = .87 | .04 [−.01; .09] p = .10 | −.03 p = .26 | −.01 [−.12; .08] p = .88 | .04 [−.03; .13] p = .35 | −.05 p = .23 |
| MMN latency (Cz) | | | | | | |
| Neutral | −.16 [−1.17; .56] p = .61 | −.07 [−.41; .33] p = .70 | −.09 p = .75 | −.29 [−.83; .49] p = .46 | −.06 [−.73; .63] p = .84 | −.23 p = .49 |
| Emotional | −.08 [−.82; .89] p = .80 | −.03 [−.32; .34] p = .87 | −.05 p = .88 | −.14 [−.74; .50] p = .73 | .32 [−.24; 1.29] p = .40 | −.46 p = .11 |
| P3a amplitude (Fz, FCz, Cz) | | | | | | |
| Neutral | .00 [−.09; .10] p = .95 | −.04 [−.09; .03] p = .20 | .04 p = .27 | −.03 [−.14; .10] p = .63 | −.03 [−.16; .10] p = .68 | −.00 p = .96 |
| Emotional | −.03 [−.10; .04] p = .48 | −.07 [−.14; −.00] p = .11 | .04 p = .47 | .05 [−.03; .16] p = .34 | −.05 [−.26; .06] p = .53 | .10 p = .49 |
| P3a latency (FCz) | | | | | | |
| Neutral | .05 [−1.24; .88] p = .92 | −.22 [−1.09; .27] p = .42 | .27 p = .50 | .38 [−.84; 1.17] p = .48 | −.61 [−1.41; .15] p = .17 | .99 p = .04 |
| Emotional | .45 [−.29; .89] p = .20 | −.12 [−1.07; .35] p = .68 | .57 p = .08 | −.35 [−1.15; .62] p = .42 | −.32 [−1.56; .55] p = .52 | −.03 p = .95 |

All results displayed in black are non-significant (Bonferroni-corrected p value threshold: p = .0056; nine correlations in each group)
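The Bonferroni-corrected threshold quoted in the table note is the nominal alpha divided by the number of correlations tested in each group. The short Python sketch below reproduces that arithmetic. It assumes a nominal alpha of .05, which is not stated in this section but is consistent with the reported thresholds; the function name is illustrative only.

```python
def bonferroni_threshold(alpha: float, n_tests: int) -> float:
    """Per-test significance threshold under Bonferroni correction."""
    if n_tests < 1:
        raise ValueError("n_tests must be a positive integer")
    return alpha / n_tests


# Assumption: nominal alpha of .05; nine correlations per group in Table 2.
children_threshold = bonferroni_threshold(0.05, 9)
print(f"{children_threshold:.4f}")  # prints 0.0056
```

With eight correlations per group, the same division gives .00625, which corresponds to the .0063 threshold reported for the adult table.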

The laterality effect was characterized with an ANOVA performed on C3, CP3, P3, C4, CP4, and P4 in children only, in accordance with the results of the main ANOVA. The condition by laterality by group interaction approached significance (F(1,28) = 3.87, p = .059, ηp2 = .12). This result, together with the right-laterality effect of emotional MMN reported in the literature [40], led us to perform a post hoc analysis. A lateralization of the angry MMN to the right hemisphere was evidenced in CTRL children (p = .042) while a symmetrical response was observed in children with ASD (p = .684, ns). No lateralization was shown for the neutral condition.

Correlation analyses between IQ measures and emotional MMN amplitude performed over the right centro-parietal electrode pool (average of C4, CP4, and P4) did not reveal any significant findings for either within-group correlations or between-group comparisons (Table 2).
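The slope comparisons reported in Table 2 can be illustrated with a short Python sketch. It fits a least-squares slope of an electrophysiological measure on IQ within each group and takes the CTRL minus ASD difference, which matches the sign convention of the group difference columns in the table. The estimation method and the procedure behind the bracketed intervals are not described in this section, so ordinary least squares, the function names, and the toy numbers are all assumptions made for illustration, not the study's method or data.

```python
from statistics import mean


def ols_slope(x: list[float], y: list[float]) -> float:
    """Least-squares slope of y regressed on x."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x has zero variance")
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx


def slope_difference(ctrl_x, ctrl_y, asd_x, asd_y) -> float:
    """Between-group difference in slopes, computed as CTRL minus ASD."""
    return ols_slope(ctrl_x, ctrl_y) - ols_slope(asd_x, asd_y)


# Hypothetical toy values (not study data): IQ scores and MMN amplitudes.
ctrl_iq = [95.0, 100.0, 105.0, 110.0]
ctrl_mmn = [-2.0, -1.9, -1.8, -1.7]
asd_iq = [90.0, 100.0, 110.0, 120.0]
asd_mmn = [-1.0, -1.1, -1.2, -1.3]

print(round(slope_difference(ctrl_iq, ctrl_mmn, asd_iq, asd_mmn), 3))
```

A significance test on the difference (for example by permutation or bootstrap) would be layered on top of this; the sketch only shows how the reported difference relates to the two within-group slopes.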

MMN latency

MMN latency was shorter in participants with ASD compared to CTRL participants (group effect, F(1,58) = 4.39, p = .041, ηp2 = .07) and also for the angry condition than for the neutral condition (condition effect, F(1,58) = 16.19, p < .001, ηp2 = .22). MMN latency did not differ between age groups. There were no significant interactions.
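The partial eta squared values reported alongside each F statistic can be recovered from the F value and its degrees of freedom, using the identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The Python sketch below applies it to the two latency effects reported above; the function name is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    if f_value < 0 or df_effect < 1 or df_error < 1:
        raise ValueError("F must be non-negative and both df must be positive")
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Group effect and condition effect on MMN latency, as reported in the text.
group_effect = partial_eta_squared(4.39, 1, 58)
condition_effect = partial_eta_squared(16.19, 1, 58)
print(f"{group_effect:.2f} {condition_effect:.2f}")  # prints 0.07 0.22
```

This is a convenient consistency check when reading ANOVA results: the reported effect sizes should agree with the F values and degrees of freedom to within rounding.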

No correlation between verbal IQ and MMN latency appeared significant in children or in adults and no between-group comparison p value reached the Bonferroni-corrected threshold (Tables 2 and 3). In contrast, the correlation between performance IQ and neutral MMN latency was significant in CTRL adults at the Bonferroni-corrected threshold (Table 3): MMN latency in the CTRL group decreased with increasing performance IQ. This correlation is therefore unlikely to account for the group difference in terms of intellectual discrepancies.

Table 3.

Correlation slopes between electrophysiological measures and verbal or performance IQ scores in the CTRL and ASD adult groups and between-group comparisons

| Measure | Verbal IQ, CTRL | Verbal IQ, ASD | Verbal IQ, group difference | Performance IQ, CTRL | Performance IQ, ASD | Performance IQ, group difference |
|---|---|---|---|---|---|---|
| MMN amplitude (Cz, CPz, Pz) | | | | | | |
| Neutral | −.03 [−.06; .01] p = .20 | .02 [−.05; .08] p = .60 | −.05 p = .17 | .02 [−.01; .07] p = .39 | .04 [.00; .08] p = .14 | −.02 p = .63 |
| Emotional | −.01 [−.06; .04] p = .76 | −.01 [−.08; .02] p = .62 | −.00 p = .91 | .04 [−.00; .10] p = .19 | .00 [−.02; .04] p = .80 | .04 p = .16 |
| MMN latency (Cz) | | | | | | |
| Neutral | −.20 [−.67; .30] p = .43 | .50 [.06; 1.11] p = .09 | −.70 p = .04 | −.73 [−1.19; −.19] p < .01 | −.18 [−.66; .22] p = .49 | −.55 p = .11 |
| Emotional | −.45 [−1.45; .47] p = .39 | −.05 [−.49; .09] p = .88 | −.40 p = .40 | −1.51 [−2.24; −.82] p = .01 | −.40 [−.89; .04] p = .16 | −1.11 p = .01 |
| P3a amplitude (Fz, FCz, Cz) | | | | | | |
| Neutral | −.02 [−.06; .03] p = .47 | .03 [−.04; .08] p = .34 | −.05 p = .22 | .01 [−.05; .07] p = .75 | .03 [−.02; .08] p = .18 | −.02 p = .65 |
| Emotional | −.02 [−.08; .05] p = .67 | .00 [−.06; .05] p = .94 | −.02 p = .58 | .06 [−.01; .15] p = .17 | .02 [−.03; .07] p = .39 | .04 p = .28 |
| P3a latency (FCz) | | | | | | |
| Neutral | .34 [−.42; 1.18] p = .40 | .18 [−.87; .75] p = .62 | .16 p = .74 | −.97 [−2.01; −.04] p = .03 | −.08 [−.62; .60] p = .78 | −.89 p = .08 |
| Emotional | −.39 [−.98; .14] p = .19 | .11 [−.66; .58] p = .68 | −.50 p = .14 | .40 [−1.19; .42] p = .27 | .03 [−.36; .43] p = .89 | −.43 p = .30 |

All results displayed in black are non-significant (Bonferroni-corrected p value threshold p = .0063; eight correlations in each group)

Although no interaction between group and condition was observed for MMN latency, inspection of the data (Fig. 5c) and the absence of a latency difference between neutral and emotional MMN in children reported in the literature [45] suggested that the condition effect on MMN latency might be present only in adults. To further investigate this condition effect in our groups, planned comparisons were performed. These analyses revealed that the shorter latency for the emotional condition compared to the neutral condition was significant in both adult groups (CTRL adults p = .002; adults with ASD p = .007) but not in either group of children.

Orientation of attention to neutral and emotional prosodic changes

P3a amplitude

The main ANOVA performed on P3a amplitude revealed a typical fronto-central distribution and a larger P3a for participants with ASD than for CTRL participants (group effect, F(1,58) = 5.44, p = .023, ηp2 = 0.09). Similar amplitudes were observed between conditions in children while neutral P3a amplitude was larger than angry P3a amplitude in adults (condition by age interaction, F(1,58) = 4.34, p = .042, ηp2 = 0.07). No other significant result was observed on P3a amplitude.

Finally, P3a amplitude did not correlate with verbal or performance IQ in children or in adults and no between-group differences were observed (Tables 2 and 3).

P3a latency

P3a latency was shorter in adults than in children in the ASD group (p = .001) but not in the CTRL group regardless of the condition (age by group interaction, F(1,58) = 5.01, p = .029, ηp2 = 0.08). No other significant result was observed on P3a latency.

For both verbal and performance IQ, no correlations with P3a latency were found in adults or in children and no between-group differences were observed (Tables 2 and 3).

Discussion

The present study evaluated the early processing of change with vocal stimuli in children and adults with ASD. The goals of this study were to characterize automatic detection of vocal deviancy and to assess whether it is modulated by emotion in people with autism.

An atypical detection of deviancy in vocal stimuli has been evidenced in both adults and children with autism while an absence of specific emotional deviancy response was observed only during childhood.

Obligatory auditory brain responses in ASD

Before focusing on deviancy processing, auditory brain responses to vocal prosodic stimuli were investigated in an equiprobable context in order to assess voice processing in ASD across age groups. Atypical auditory processing of vocal stimuli was evidenced in children with autism especially for the emotional stimulus. Previous studies investigating voice processing in children with autism [6, 7] did not report any significant difference between groups for vocal stimuli processing. The context of stimulus presentation (speech stimuli only or speech/ non-speech/ non-vocal stimuli) could be responsible for the discrepancy between previous studies and ours as children with autism are sensitive to the stimulus sequence composition [10]. In studies using oddball paradigms composed mostly or even exclusively of speech sounds as in the present study, reductions of ERP amplitude to standard sounds were repeatedly evidenced in children with autism compared to controls [10, 13, 18, 20], including when the stimuli displayed an emotional prosody [40]. Overall, these studies highlight an atypical processing of human voice in children with autism, which could hamper more complex brain processes such as change detection. In adults with autism, no impairment of vocal processing was evidenced in the present work in accordance with a recent fMRI study [9]. Although replication is still necessary to assess the similarity of brain responses to prosody between CTRL and ASD adults, the present findings suggest a normalization of auditory brain responses to vocal sounds with age.

Atypical deviancy processing for both neutral and emotional conditions in ASD

MMN amplitude tends to normalize with age, but earlier MMN and larger P3a were found in both children and adults with ASD compared to CTRL, highlighting a persistent atypical detection and orientation of attention toward change. The earlier MMN observed in the present study is in contradiction with the few studies that used emotional vocal stimuli in ASD, which reported either no latency difference [40, 41, 62] or a delayed MMN in adults with Asperger syndrome compared to controls [43]. Differences between our findings and those from previous studies might be explained by differences in the paradigm itself or the stimuli. For example, previous studies frequently used a traditional oddball paradigm (in which the differential wave is obtained by subtracting the response to the standard sounds in the oddball sequence from that of the deviants), whereas we used an equiprobable sequence allowing a better control of neural adaptation. Moreover, while previous studies used stimuli like words with complex emotions such as scorn, we presented simple stimuli (vowel) with basic emotions like anger, which might trigger responses closer to those evoked by simple non-vocal stimuli. Accordingly, our results are consistent with previous investigations using speech stimuli [22] or tones with frequency changes, which have already evidenced a faster processing of deviancy in ASD [16, 17]. This earlier response was also reported for non-social stimuli in the visual modality [63]. This would indicate that a general atypical deviancy processing operates in ASD independently of the type (social/non-social) of stimuli and of the sensory modality; this could possibly be related to the need for sameness.
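The contrast between the two ways of building the difference wave can be sketched in a few lines of Python. The traditional version subtracts the response to the standard from the response to the deviant; the controlled version is assumed here to subtract the response to the same stimulus presented in the equiprobable sequence, which is the usual logic of such paradigms (the exact computation belongs to the Methods, outside this section). The sample values, the 100 to 250 ms search window, and the function names are hypothetical and chosen only to make the example run.

```python
def difference_wave(deviant: list[float], control: list[float]) -> list[float]:
    """Sample-by-sample difference: deviant response minus control response."""
    if len(deviant) != len(control):
        raise ValueError("both responses must have the same number of samples")
    return [d - c for d, c in zip(deviant, control)]


def negative_peak(wave: list[float], times_ms: list[int],
                  window_ms: tuple[int, int]) -> tuple[int, float]:
    """Return (latency_ms, amplitude) of the most negative sample in the window."""
    start, end = window_ms
    candidates = [(amp, t) for amp, t in zip(wave, times_ms) if start <= t <= end]
    if not candidates:
        raise ValueError("no samples fall inside the window")
    amp, t = min(candidates)  # most negative amplitude; earliest sample on ties
    return t, amp


# Hypothetical toy ERPs (not study data), one value per time point.
times = [0, 50, 100, 150, 200, 250, 300]
angry_dev = [0.0, 0.5, -1.0, -3.0, -1.5, 0.5, 1.0]     # deviant in oddball sequence
neutral_std = [0.0, 0.6, 0.2, -0.5, -0.3, 0.4, 0.8]    # standard in oddball sequence
equi_angry = [0.0, 0.4, -0.5, -1.0, -0.5, 0.3, 0.9]    # same sound, equiprobable sequence

traditional = difference_wave(angry_dev, neutral_std)
controlled = difference_wave(angry_dev, equi_angry)

print(negative_peak(traditional, times, (100, 250)))
print(negative_peak(controlled, times, (100, 250)))
```

In this toy case the controlled wave is smaller than the traditional one because part of the deviant-minus-standard difference reflects acoustic and adaptation differences between the two sounds rather than deviancy itself.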

An increased P3a amplitude was found in ASD compared to CTRL, contrary to our hypotheses. Though there is limited literature on the P3a response in autism, our result is in contradiction with previous reports [12, 20] including two emotional oddball studies [41, 42], which described smaller P3a in patients with autism. This discrepancy might be explained by the paradigm used (minimizing acoustic differences and neural adaptation effects) or by an instability of involuntary attention across studies varying from low to high awareness. Nonetheless, previous works using tones rather than phonemes have also reported larger P3a responses in participants with ASD in oddball paradigms [16, 17]. Our results add to this by showing a larger P3a response in ASD compared to CTRL for simple vocal stimuli. A larger P3a response indicates a greater involuntary attention to deviancy in ASD, which could contribute to the sameness dimension but also suggests that ASD participants noticed emotional changes. The existence of this finding for social vocal stimuli might indicate that this atypical attention orientation to change is a hallmark of the pathology and could be responsible for patients’ difficulties in adapting to their environment [16].

In addition, in children with autism, deviancy processing was also characterized by an MMN amplitude reduction in both conditions compared to CTRL. Amplitude reduction was already evidenced in vocal change detection studies in response to variation in acoustics [11, 18] and emotion [42]. The present finding confirms that this group difference constitutes a general impairment of change detection of vocal stimuli in children with autism.

Contrary to our hypotheses, MMN amplitude reduction was not found for adults with ASD in the present study even though the amplitude tended to be smaller compared to CTRL. In previous emotional MMN studies on which our hypotheses were based, an amplitude reduction was reported [41, 43] but this result appeared even for non-vocal counterparts of vocal stimuli [41], confirming the major role of acoustic attributes. As first-order acoustical parameters were controlled in the present study, the absence of significant amplitude reduction appears consistent. However, the lack of group difference might also be due to heterogeneity in the adult ASD group. To sum up, in both children and adults with ASD, the response pattern is characterized by an earlier MMN and a larger P3a. Both indicate a heightened pre-attentional processing of change. In children with ASD, despite this greater pre-attentional deviancy processing, change detection was also characterized by a smaller MMN, suggesting a reduced fine-grained analysis of the characteristics of the change [64], possibly in relation to the altered sensory processing.

Atypical processing of emotion-specific deviancy detection in ASD

The comparison of brain responses to neutral and emotional deviancy showed a specificity of the emotional change detection in children which was represented by a right-hemispheric lateralization in the control group that was missing in ASD. This absence of lateralization for emotional deviancy was already evidenced in children with Asperger syndrome [40]. Brain regions involved in emotional processing vary according to stimulus type (e.g., stimuli with/without speech content) and attentional level (e.g., implicit/explicit) [65]. Some fMRI studies have evidenced a right-hemispheric specialization for the processing of emotional prosody in control adults [66–68]. In our study, this lateralization of the emotional MMN did not persist in adults possibly because this right-hemispheric lateralization might be present on larger time windows in adults, which would explain its recording in fMRI studies but not in the time scale of the MMN response.

In CTRL and ASD adults, the emotional MMN displayed an earlier latency compared to the neutral condition. This latency difference may originate from a faster processing of emotional stimuli possibly through a subcortical short route involving the amygdala [69]. It may also reflect a delayed processing of neutral stimuli, due to the presence of emotional stimuli in the sequence [70]. Although this finding was already known in CTRL adults [49, 50], it is the first time that it is also reported for adults with ASD, suggesting that adults with autism are not only able to discriminate between neutral and emotional prosody at a pre-attentive level but also display the appropriate response by prioritizing the emotional deviancy processing. Hence, as we recorded pre-attentional responses, people with ASD do not exclusively base their emotional perception on learned compensatory strategies as previously suggested [36]. Moreover, even if the use of ecological stimuli did not allow all voice parameters (such as sound envelope) to be controlled, the equiprobable paradigm used in the present work makes it less likely that an over-processing of first-order acoustic attributes in ASD explains this prioritized processing of emotional deviancy.

Altogether, the present study highlighted that children with autism did not fully show the specific brain response to emotion revealing an atypical processing of emotional deviancy whereas adults with autism display appropriate emotional deviancy brain responses. The evolution between children and adults with ASD also evidenced a trend toward normalization of vocal processing and automatic detection of emotion with age in ASD. Despite this improvement of brain processes involved in the perception of emotional vocal stimuli, some behavioral studies still show deficits in emotion recognition [71] and prosodic production of adults with autism [72, 73]. Abnormal prosodic perception and production represent a significant obstacle to the social integration of persons with ASD. Therapies helping persons with autism to apprehend emotions [74] therefore seem essential to aid people with autism on the social dimension. Indeed, even if adults with autism are able to pre-attentively detect socially relevant changes, they still display social anhedonia [75], probably because the coping strategies they use in order to fit in and increase connections with others are energy consuming [76]. That is why these interventions need to be administered early to children with ASD to possibly trigger changes of perceptual processes as soon as possible in order to ease the “reading” of the environment and improve the clinical evolution of patients.

Limitations

Although this study brought innovative results about the perception of vocal stimuli in autism, these processes should be investigated on larger samples to assess the relative influence of IQ and diagnosis even if no influence of cognitive skills was evidenced in our study. Additional experiments with different sequences would also be beneficial to determine the influence of the context on the studied brain processes (e.g., an emotional context compared to the neutral context created by the repeated presentation of the neutral standard in the present study). Behavioral results about emotional recognition tested in laboratory and in real social interactions would also have been helpful to assess the potential link between automatic low-level pre-attentional differences and high-level socio-emotional performances. Finally, longitudinal studies will be of great use to properly evaluate the developmental changes in patients with ASD and to confirm the findings of the present study.

Conclusions

Detection and orientation of attention toward vocal deviancies remained atypical in adults with autism even if greater group differences were reported in children compared to adults. This long-lasting particularity may be a key element of the ASD symptomology, as an atypical perception of social and non-social changes in the environment prevents people with autism from correctly adapting their reactions and may lead to the sensory overload often reported by individuals with ASD.

In addition to this atypical processing of change, children with ASD exhibited an abnormal sensory encoding of neutral and emotional stimuli along with an atypical pre-attentive discrimination of neutral and emotional deviancies. In adults with ASD, auditory sensory encoding was similar to CTRL adults and both groups discriminated neutral and emotional deviancies. These differences between children and adults with ASD indicate that poor sensory encoding during childhood might have hindered the development of normal automatic change detection. However, normal change detection is not mandatory to discriminate between different emotions as long as sensory encoding is typical, as indicated by results obtained in adults with ASD. Overall, the present study evidenced a trend toward normalization of vocal processing and automatic detection of emotion with age in ASD.

Acknowledgements

We thank all the volunteers for their time and effort while participating in this study. We are grateful to Sylvie Roux for her help during EEG and statistical analyses, Luce Corneau and Céline Courtin for the assistance during EEG recordings, Remy Magné for the technical support, and Mathieu Lemaire for including participants.

Funding

This work was supported by a French National Research Agency grant (ANR-12-JSH2-0001-01-AUTATTEN). Judith Charpentier was supported by an INSERM–Région Centre grant. Marianne Latinus was in part supported by the John Bost Foundation. Funding sources had no involvement in the design of the study, in the collection, analysis, and interpretation of the data, or in the writing of the manuscript.

Availability of data and materials

Please contact the corresponding author for data requests.

Abbreviations

- ADI-R: Autism Diagnostic Interview-Revised

- ADOS: Autism Diagnostic Observation Schedule

- angryDev: Angry deviant stimulus

- AS: Asperger syndrome

- ASD: Autism spectrum disorder

- CTRL: Controls

- DSM-5: Diagnostic and Statistical Manual of Mental Disorders, 5th edition

- EEG: Electroencephalography

- equiAngry: Equiprobable angry stimulus

- equiDisgust: Equiprobable disgust stimulus

- equiFear: Equiprobable fear stimulus

- equiHappy: Equiprobable happy stimulus

- equiSad: Equiprobable sad stimulus

- equiSurprise: Equiprobable surprise stimulus

- ERP: Event-related potential

- fMRI: Functional magnetic resonance imaging

- IQ: Intellectual quotient

- MMN: Mismatch negativity

- neutralDev: Neutral deviant stimulus

- neutralStd: Neutral standard stimulus

Authors’ contributions

The study presented here results from teamwork. JC and MG designed the study. JC and KK performed the data acquisition. JM, AS, EHD, and FBB carried out the clinical evaluation of the patients. JC, ML, and MG were responsible for data and statistical analyses. JC, KK, EHD, ML, and MG were involved in the manuscript preparation. All authors read and approved the final manuscript.

Ethics approval and consent to participate

The protocol was approved by the Ethics Committee of the University Hospital of Tours (Comité de Protection des Personnes Tours Ouest 1; CPP 2013-R50). Informed written consent was obtained from all adult participants or from their legal guardian when needed and from children’s parents.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. American Psychiatric Association. Diagnostic and statistical manual of mental disorders (DSM-5®). American Psychiatric Pub; 2013.

- 2. Dawson G, Meltzoff AN, Osterling J, Rinaldi J, Brown E. Children with autism fail to orient to naturally occurring social stimuli. J Autism Dev Disord. 1998;28:479–485. doi: 10.1023/A:1026043926488.

- 3. Klin A. Young autistic children’s listening preferences in regard to speech: a possible characterization of the symptom of social withdrawal. J Autism Dev Disord. 1991;21:29–42. doi: 10.1007/BF02206995.

- 4. Kuhl PK, Coffey-Corina S, Padden D, Dawson G. Links between social and linguistic processing of speech in preschool children with autism: behavioral and electrophysiological measures. Dev Sci. 2005;8:F1–12. doi: 10.1111/j.1467-7687.2004.00384.x.

- 5. Boucher J, Lewis V, Collis GM. Voice processing abilities in children with autism, children with specific language impairments, and young typically developing children. J Child Psychol Psychiatry. 2000;41:847–857. doi: 10.1111/1469-7610.00672.

- 6. Ceponiene R, Lepistö T, Shestakova A, Vanhala R, Alku P, Näätänen R, et al. Speech-sound-selective auditory impairment in children with autism: they can perceive but do not attend. Proc Natl Acad Sci U S A. 2003;100:5567–5572. doi: 10.1073/pnas.0835631100.

- 7. Bidet-Caulet A, Latinus M, Roux S, Malvy J, Bonnet-Brilhault F, Bruneau N. Atypical sound discrimination in children with ASD as indicated by cortical ERPs. J Neurodev Disord. 2017;9:13. doi: 10.1186/s11689-017-9194-9.

- 8. Gervais H, Belin P, Boddaert N, Leboyer M, Coez A, Sfaello I, et al. Abnormal cortical voice processing in autism. Nat Neurosci. 2004;7:801–802. doi: 10.1038/nn1291.

- 9. Schelinski S, Borowiak K, von Kriegstein K. Temporal voice areas exist in autism spectrum disorder but are dysfunctional for voice identity recognition. Soc Cogn Affect Neurosci. 2016.

- 10. Whitehouse AJO, Bishop DVM. Do children with autism “switch off” to speech sounds? An investigation using event-related potentials. Dev Sci. 2008;11:516–524. doi: 10.1111/j.1467-7687.2008.00697.x.

- 11. Kujala T, Kuuluvainen S, Saalasti S, Jansson-Verkasalo E, von Wendt L, Lepistö T. Speech-feature discrimination in children with Asperger syndrome as determined with the multi-feature mismatch negativity paradigm. Clin Neurophysiol Off J Int Fed Clin Neurophysiol. 2010;121:1410–1419. doi: 10.1016/j.clinph.2010.03.017.

- 12. Lepistö T, Nieminen-von Wendt T, von Wendt L, Näätänen R, Kujala T. Auditory cortical change detection in adults with Asperger syndrome. Neurosci Lett. 2007;414:136–140. doi: 10.1016/j.neulet.2006.12.009.

- 13. Jansson-Verkasalo E, Ceponiene R, Kielinen M, Suominen K, Jäntti V, Linna SL, et al. Deficient auditory processing in children with Asperger syndrome, as indexed by event-related potentials. Neurosci Lett. 2003;338:197–200. doi: 10.1016/S0304-3940(02)01405-2.

- 14. Russo N, Zecker S, Trommer B, Chen J, Kraus N. Effects of background noise on cortical encoding of speech in autism spectrum disorders. J Autism Dev Disord. 2009;39:1185–1196. doi: 10.1007/s10803-009-0737-0.

- 15. Jansson-Verkasalo E, Kujala T, Jussila K, Mattila ML, Moilanen I, Näätänen R, et al. Similarities in the phenotype of the auditory neural substrate in children with Asperger syndrome and their parents. Eur J Neurosci. 2005;22:986–990. doi: 10.1111/j.1460-9568.2005.04216.x.

- 16. Gomot M, Blanc R, Clery H, Roux S, Barthelemy C, Bruneau N. Candidate electrophysiological endophenotypes of hyper-reactivity to change in autism. J Autism Dev Disord. 2011;41:705–714. doi: 10.1007/s10803-010-1091-y.

- 17. Gomot M, Giard M-H, Adrien J-L, Barthelemy C, Bruneau N. Hypersensitivity to acoustic change in children with autism: electrophysiological evidence of left frontal cortex dysfunctioning. Psychophysiology. 2002;39:577–584. doi: 10.1111/1469-8986.3950577.

- 18. Lepistö T, Silokallio S, Nieminen-von Wendt T, Alku P, Näätänen R, Kujala T. Auditory perception and attention as reflected by the brain event-related potentials in children with Asperger syndrome. Clin Neurophysiol Off J Int Fed Clin Neurophysiol. 2006;117:2161–2171. doi: 10.1016/j.clinph.2006.06.709.

- 19. Kasai K, Hashimoto O, Kawakubo Y, Yumoto M, Kamio S, Itoh K, et al. Delayed automatic detection of change in speech sounds in adults with autism: a magnetoencephalographic study. Clin Neurophysiol Off J Int Fed Clin Neurophysiol. 2005;116:1655–1664. doi: 10.1016/j.clinph.2005.03.007.

- 20. Lepistö T, Kujala T, Vanhala R, Alku P, Huotilainen M, Näätänen R. The discrimination of and orienting to speech and non-speech sounds in children with autism. Brain Res. 2005;1066:147–157. doi: 10.1016/j.brainres.2005.10.052.

- 21. Lepistö T, Kajander M, Vanhala R, Alku P, Huotilainen M, Näätänen R, et al. The perception of invariant speech features in children with autism. Biol Psychol. 2008;77:25–31. doi: 10.1016/j.biopsycho.2007.08.010.

- 22. Kujala T, Aho E, Lepistö T, Jansson-Verkasalo E, Nieminen-von Wendt T, von Wendt L, et al. Atypical pattern of discriminating sound features in adults with Asperger syndrome as reflected by the mismatch negativity. Biol Psychol. 2007;75:109–114. doi: 10.1016/j.biopsycho.2006.12.007.

- 23. Goodfellow S, Nowicki S. Social adjustment, academic adjustment, and the ability to identify emotion in facial expressions of 7-year-old children. J Genet Psychol. 2009;170:234–243. doi: 10.1080/00221320903218281.

- 24. Grossman RB. Judgments of social awkwardness from brief exposure to children with and without high-functioning autism. Autism Int J Res Pract. 2015;19:580–587. doi: 10.1177/1362361314536937.

- 25. Paul R, Shriberg LD, McSweeny J, Cicchetti D, Klin A, Volkmar F. Brief report: relations between prosodic performance and communication and socialization ratings in high functioning speakers with autism spectrum disorders. J Autism Dev Disord. 2005;35:861–869. doi: 10.1007/s10803-005-0031-8.

- 26. Peppé S, McCann J, Gibbon F, O’Hare A, Rutherford M. Receptive and expressive prosodic ability in children with high-functioning autism. J Speech Lang Hear Res JSLHR. 2007;50:1015–1028. doi: 10.1044/1092-4388(2007/071).

- 27. Taylor LJ, Maybery MT, Grayndler L, Whitehouse AJO. Evidence for shared deficits in identifying emotions from faces and from voices in autism spectrum disorders and specific language impairment. Int J Lang Commun Disord. 2015;50:452–466. doi: 10.1111/1460-6984.12146.

- 28. Stewart ME, McAdam C, Ota M, Peppé S, Cleland J. Emotional recognition in autism spectrum conditions from voices and faces. Autism Int J Res Pract. 2013;17:6–14. doi: 10.1177/1362361311424572.

- 29. Van Lancker D, Cornelius C, Kreiman J. Recognition of emotional-prosodic meanings in speech by autistic, schizophrenic, and normal children. Dev Neuropsychol. 1989;5:207–226. doi: 10.1080/87565648909540433.

- 30. Hesling I, Dilharreguy B, Peppé S, Amirault M, Bouvard M, Allard M. The integration of prosodic speech in high functioning autism: a preliminary FMRI study. PLoS One. 2010;5:e11571. doi: 10.1371/journal.pone.0011571.

- 31. Lindner JL, Rosén LA. Decoding of emotion through facial expression, prosody and verbal content in children and adolescents with Asperger’s syndrome. J Autism Dev Disord. 2006;36:769–777. doi: 10.1007/s10803-006-0105-2.

- 32. Philip RCM, Whalley HC, Stanfield AC, Sprengelmeyer R, Santos IM, Young AW, et al. Deficits in facial, body movement and vocal emotional processing in autism spectrum disorders. Psychol Med. 2010;40:1919–1929. doi: 10.1017/S0033291709992364.

- 33. Rutherford MD, Baron-Cohen S, Wheelwright S. Reading the mind in the voice: a study with normal adults and adults with Asperger syndrome and high functioning autism. J Autism Dev Disord. 2002;32:189–194. doi: 10.1023/A:1015497629971.

- 34. Wang AT, Lee SS, Sigman M, Dapretto M. Neural basis of irony comprehension in children with autism: the role of prosody and context. Brain J Neurol. 2006;129:932–943. doi: 10.1093/brain/awl032.

- 35. Baker KF, Montgomery AA, Abramson R. Brief report: perception and lateralization of spoken emotion by youths with high-functioning forms of autism. J Autism Dev Disord. 2010;40:123–129. doi: 10.1007/s10803-009-0841-1.

- 36. Jones CRG, Pickles A, Falcaro M, Marsden AJS, Happé F, Scott SK, et al. A multimodal approach to emotion recognition ability in autism spectrum disorders. J Child Psychol Psychiatry. 2011;52:275–285. doi: 10.1111/j.1469-7610.2010.02328.x.

- 37. Grossman RB, Bemis RH, Plesa Skwerer D, Tager-Flusberg H. Lexical and affective prosody in children with high-functioning autism. J Speech Lang Hear Res JSLHR. 2010;53:778–793. doi: 10.1044/1092-4388(2009/08-0127).

- 38. Heikkinen J, Jansson-Verkasalo E, Toivanen J, Suominen K, Väyrynen E, Moilanen I, et al. Perception of basic emotions from speech prosody in adolescents with Asperger’s syndrome. Logoped Phoniatr Vocol. 2010;35:113–120. doi: 10.3109/14015430903311184.

- 39. O’Connor K. Brief report: impaired identification of discrepancies between expressive faces and voices in adults with Asperger’s syndrome. J Autism Dev Disord. 2007;37:2008–2013. doi: 10.1007/s10803-006-0345-1.

- 40. Korpilahti P, Jansson-Verkasalo E, Mattila M-L, Kuusikko S, Suominen K, Rytky S, et al. Processing of affective speech prosody is impaired in Asperger syndrome. J Autism Dev Disord. 2007;37:1539–1549. doi: 10.1007/s10803-006-0271-2.

- 41. Fan Y-T, Cheng Y. Atypical mismatch negativity in response to emotional voices in people with autism spectrum conditions. PLoS One. 2014;9:e102471. doi: 10.1371/journal.pone.0102471.

- 42. Lindström R, Lepistö-Paisley T, Vanhala R, Alén R, Kujala T. Impaired neural discrimination of emotional speech prosody in children with autism spectrum disorder and language impairment. Neurosci Lett. 2016;628:47–51. doi: 10.1016/j.neulet.2016.06.016.

- 43. Kujala T, Lepistö T, Nieminen-von Wendt T, Näätänen P, Näätänen R. Neurophysiological evidence for cortical discrimination impairment of prosody in Asperger syndrome. Neurosci Lett. 2005;383:260–265. doi: 10.1016/j.neulet.2005.04.048.

- 44. Sams M, Paavilainen P, Alho K, Näätänen R. Auditory frequency discrimination and event-related potentials. Electroencephalogr Clin Neurophysiol. 1985;62:437–448. doi: 10.1016/0168-5597(85)90054-1.

- 45. Charpentier J, Kovarski K, Roux S, Houy-Durand E, Saby A, Bonnet-Brilhault F, et al. Brain mechanisms involved in angry prosody change detection in school-age children and adults, revealed by electrophysiology. Cogn Affect Behav Neurosci. 2018.

- 46.Jiang A, Yang J, Yang Y. MMN responses during implicit processing of changes in emotional prosody: an ERP study using Chinese pseudo-syllables. Cogn Neurodyn. 2014;8:499–508. doi: 10.1007/s11571-014-9303-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Pakarinen S, Sokka L, Leinikka M, Henelius A, Korpela J, Huotilainen M. Fast determination of MMN and P3a responses to linguistically and emotionally relevant changes in pseudoword stimuli. Neurosci Lett. 2014;577C:28–33. doi: 10.1016/j.neulet.2014.06.004. [DOI] [PubMed] [Google Scholar]

- 48.Pinheiro AP, Barros C, Vasconcelos M, Obermeier C, Kotz SA. Is laughter a better vocal change detector than a growl? Cortex J Devoted Study Nerv Syst Behav. 2017;92:233–248. doi: 10.1016/j.cortex.2017.03.018. [DOI] [PubMed] [Google Scholar]

- 49.Schirmer A, Striano T, Friederici AD. Sex differences in the preattentive processing of vocal emotional expressions. Neuroreport. 2005;16:635–639. doi: 10.1097/00001756-200504250-00024. [DOI] [PubMed] [Google Scholar]

- 50.Schirmer A, Escoffier N, Cheng X, Feng Y, Penney TB. Detecting temporal change in dynamic sounds: on the role of stimulus duration, speed, and emotion. Front Psychol. 2016;6:2055. doi: 10.3389/fpsyg.2015.02055. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Thönnessen H, Boers F, Dammers J, Chen Y-H, Norra C, Mathiak K. Early sensory encoding of affective prosody: neuromagnetic tomography of emotional category changes. NeuroImage. 2010;50:250–259. doi: 10.1016/j.neuroimage.2009.11.082. [DOI] [PubMed] [Google Scholar]

- 52.Lord C, Rutter M, Le Couteur A. Autism diagnostic interview-revised: a revised version of a diagnostic interview for caregivers of individuals with possible pervasive developmental disorders. J Autism Dev Disord. 1994;24:659–685. doi: 10.1007/BF02172145. [DOI] [PubMed] [Google Scholar]

- 53.Lord C, Risi S, Lambrecht L, Cook EH, Leventhal BL, DiLavore PC, et al. The autism diagnostic observation schedule-generic: a standard measure of social and communication deficits associated with the spectrum of autism. J Autism Dev Disord. 2000;30:205–223. doi: 10.1023/A:1005592401947. [DOI] [PubMed] [Google Scholar]

- 54.Wechsler D. WISC-IV - Echelle d’intelligence de Wechsler pour enfants et adolescents - Quatrième édition - ECPA [Internet] 2005. [Google Scholar]

- 55.Wechsler D. WAIS-IV - Echelle d’intelligence de Wechsler pour adultes - Quatrième Edition - ECPA [Internet] 2011. [Google Scholar]

- 56.Jacobsen T, Schröger E. Is there pre-attentive memory-based comparison of pitch? Psychophysiology. 2001;38:723–727. doi: 10.1111/1469-8986.3840723. [DOI] [PubMed] [Google Scholar]

- 57.Kimura M, ‘ichi KJ, Ohira H, Schröger E. Visual mismatch negativity: new evidence from the equiprobable paradigm. Psychophysiology. 2009;46:402–409. doi: 10.1111/j.1469-8986.2008.00767.x. [DOI] [PubMed] [Google Scholar]

- 58.Aguera P-E, Jerbi K, Caclin A, Bertrand O. ELAN: a software package for analysis and visualization of MEG, EEG, and LFP signals. Comput Intell Neurosci. 2011;2011:158970. doi: 10.1155/2011/158970. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Guthrie D, Buchwald JS. Significance testing of difference potentials. Psychophysiology. 1991;28:240–244. doi: 10.1111/j.1469-8986.1991.tb00417.x. [DOI] [PubMed] [Google Scholar]

- 60.Maurer U, Bucher K, Brem S, Brandeis D. Development of the automatic mismatch response: from frontal positivity in kindergarten children to the mismatch negativity. Clin Neurophysiol. 2003;114:808–817. doi: 10.1016/S1388-2457(03)00032-4. [DOI] [PubMed] [Google Scholar]

- 61.Paquette N, Vannasing P, Lefrançois M, Lefebvre F, Roy M-S, McKerral M, et al. Neurophysiological correlates of auditory and language development: a mismatch negativity study. Dev Neuropsychol. 2013;38:386–401. doi: 10.1080/87565641.2013.805218. [DOI] [PubMed] [Google Scholar]

- 62.Lindström R, Lepistö T, Makkonen T, Kujala T. Processing of prosodic changes in natural speech stimuli in school-age children. Int J Psychophysiol Off J Int Organ Psychophysiol. 2012;86:229–237. doi: 10.1016/j.ijpsycho.2012.09.010. [DOI] [PubMed] [Google Scholar]

- 63.Cléry H, Bonnet-Brilhault F, Lenoir P, Barthelemy C, Bruneau N, Gomot M. Atypical visual change processing in children with autism: an electrophysiological study. Psychophysiology. 2013;50:240–252. doi: 10.1111/psyp.12006. [DOI] [PubMed] [Google Scholar]

- 64.Näätänen R, Paavilainen P, Rinne T, Alho K. The mismatch negativity (MMN) in basic research of central auditory processing: a review. Clin Neurophysiol Off J Int Fed Clin Neurophysiol. 2007;118:2544–2590. doi: 10.1016/j.clinph.2007.04.026. [DOI] [PubMed] [Google Scholar]

- 65.Frühholz S, Grandjean D. Multiple subregions in superior temporal cortex are differentially sensitive to vocal expressions: a quantitative meta-analysis. Neurosci Biobehav Rev. 2013;37:24–35. doi: 10.1016/j.neubiorev.2012.11.002. [DOI] [PubMed] [Google Scholar]

- 66.Beaucousin V, Lacheret A, Turbelin M-R, Morel M, Mazoyer B, Tzourio-Mazoyer N. FMRI study of emotional speech comprehension. Cereb Cortex N Y N 1991. 2007;17:339–352. doi: 10.1093/cercor/bhj151. [DOI] [PubMed] [Google Scholar]

- 67.Ethofer T, Kreifelts B, Wiethoff S, Wolf J, Grodd W, Vuilleumier P, et al. Differential influences of emotion, task, and novelty on brain regions underlying the processing of speech melody. J Cogn Neurosci. 2009;21:1255–1268. doi: 10.1162/jocn.2009.21099. [DOI] [PubMed] [Google Scholar]

- 68.Frühholz S, Ceravolo L, Grandjean D. Specific brain networks during explicit and implicit decoding of emotional prosody. Cereb Cortex N Y N 1991. 2012;22:1107–1117. doi: 10.1093/cercor/bhr184. [DOI] [PubMed] [Google Scholar]

- 69.Liebenthal E, Silbersweig DA, Stern E. The language, tone and prosody of emotions: neural substrates and dynamics of spoken-word emotion perception. Front Neurosci. 2016;10:506. doi: 10.3389/fnins.2016.00506. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Campanella S, Gaspard C, Debatisse D, Bruyer R, Crommelinck M, Guerit J-M. Discrimination of emotional facial expressions in a visual oddball task: an ERP study. Biol Psychol. 2002;59:171–186. doi: 10.1016/S0301-0511(02)00005-4. [DOI] [PubMed] [Google Scholar]

- 71.Globerson E, Amir N, Kishon-Rabin L, Golan O. Prosody recognition in adults with high-functioning autism spectrum disorders: from psychoacoustics to cognition. Autism Res. 2015;8:153–163. doi: 10.1002/aur.1432. [DOI] [PubMed] [Google Scholar]

- 72.Shriberg LD, Paul R, McSweeny JL, Klin AM, Cohen DJ, Volkmar FR. Speech and prosody characteristics of adolescents and adults with high-functioning autism and Asperger syndrome. J Speech Lang Hear Res JSLHR. 2001;44:1097–1115. doi: 10.1044/1092-4388(2001/087). [DOI] [PubMed] [Google Scholar]

- 73.Peppé S, Martínez-Castilla P, Lickley R, Mennen I, McCann J, O’Hare A, et al. Functionality and perceived atypicality of expressive prosody in children with autism spectrum disorders. Proc Speech Prosody [Internet]. 2006; [cited 2014 Nov 13]. Available from: http://20.210-193-52.unknown.qala.com.sg/archive/sp2006/papers/sp06_060.pdf.

- 74.Fridenson-Hayo S, Berggren S, Lassalle A, Tal S, Pigat D, Meir-Goren N, et al. “Emotiplay”: a serious game for learning about emotions in children with autism: results of a cross-cultural evaluation. Eur Child Adolesc Psychiatry. 2017. [DOI] [PubMed]

- 75.Carré A, Chevallier C, Robel L, Barry C, Maria A-S, Pouga L, et al. Tracking social motivation systems deficits: the affective neuroscience view of autism. J Autism Dev Disord. 2015;45:3351–3363. doi: 10.1007/s10803-015-2498-2. [DOI] [PubMed] [Google Scholar]

- 76.Hull L, Petrides KV, Allison C, Smith P, Baron-Cohen S, Lai M-C, et al. “Putting on My Best Normal”: social camouflaging in adults with autism spectrum conditions. J Autism Dev Disord. 2017. [DOI] [PMC free article] [PubMed]

Data Availability Statement

Please contact the corresponding author for data requests.