Abstract

Medical staff must be able to interpret radiographs accurately at the initial reading to prevent diagnostic errors. Education in medical image interpretation is an ongoing need that is addressed by text-based and e-learning resources, whose effectiveness has been reported previously. Here, we describe the effectiveness of an e-learning platform for education in medical image interpretation. Ten third-year medical students without previous experience in chest radiography interpretation received e-learning instruction. Their diagnostic accuracy on chest radiographs was measured before and after e-learning education. We measured detection accuracy for two image groups: nodular shadows and ground glass shadows. The students were divided into two groups, each of which studied one shadow type with the e-learning system, and we analyzed the effectiveness of education for both types of shadow. The mean correct answer rate increased from 34.5% before to 72.7% after the 2-week e-learning period. Diagnosis of ground glass shadows improved significantly more than that of nodular shadows. Education using the e-learning platform is effective for the interpretation of chest radiographs, particularly for images containing ground glass shadows.

Keywords: e-Learning platform, Education, Interpretation, Chest radiography, Medical students

Introduction

Early diagnosis and treatment are important for patient recovery, as they enable lifesaving treatment in emergencies. Patients whose radiographs are interpreted in a timely manner recover better [1]. However, owing to the small number of radiologists and the increasing number of radiographic examinations performed at night, same-day interpretation of radiographs in emergencies has become difficult [1].

Interpretation of radiographs by medical assistants would have a large positive impact on clinical practice. However, medical staff receive insufficient education in image interpretation at universities and training institutions, because the interpretation of radiographs is difficult and requires considerable training [2–5]. The effectiveness of e-learning, alone and in combination with traditional teaching methods, has been reported in recent years [6–13]. This has led to wider use of textbooks and e-learning systems for radiograph interpretation, although the specific effects of these types of training remain unclear.

The initial stage of image interpretation is recognizing an abnormal shadow. In the emergency setting in particular, recognition of an abnormal shadow by medical staff strongly influences the patient's recovery time. Radiological image interpretation may involve several modalities and organs. We evaluated the effects of e-learning on students' ability to interpret chest radiographs, which are among the most common radiological images.

Materials and Methods

Subjects

Ethical review board approval was obtained for this study, and informed consent was obtained from all participants. Ten volunteers were recruited through public announcements to evaluate the e-learning system. The volunteers were third-year medical students who (1) had completed anatomy education and therefore knew the names and positions of the internal organs, and (2) had not attended any lectures on radiograph interpretation. This ensured that all subjects had comparable anatomical knowledge but no experience in radiology.

The e-Learning Platform Software Used for the Interpretation of Radiographic Images

The e-learning software used for radiograph interpretation was “Dokuei-Shinan,” produced by the non-profit medical organization Shinansha (https://160.16.228.72/navitool/full_system_en/www/main/), and it can be purchased online. The system is designed for learning radiograph interpretation and incorporates the experience of specialist physicians using semantic web technology [14]. It was built from the diagnoses of many specialist physicians and is intended for the education of beginners. The platform supports interpretation of images of various organs across different modalities. We used its chest radiograph interpretation tool, which contains 500 chest radiography cases. The cases are classified into nodular, granular, ground glass, and linear shadows and compiled into a database together with high-definition images. The software also includes a tool to test the learner’s interpretation ability.

Study Protocol

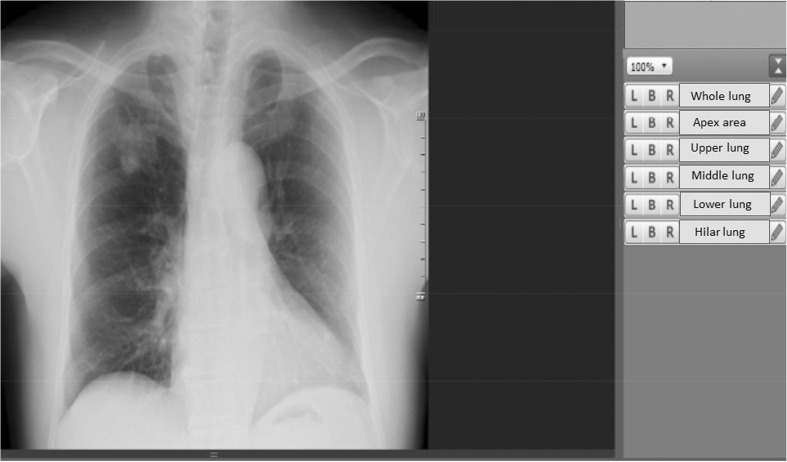

First, the subjects’ ability to evaluate chest radiographs was assessed using the test tool included in the e-learning system. For each test image, the subjects were asked, “Is there an abnormal lesion?” If they identified a lesion, this was followed by “Which area of the lung is it?”, and they chose the bronchopulmonary segment in which they thought the disease shadow was present; otherwise, no follow-up question was asked. An example of a test question is shown in Fig. 1.

Fig. 1.

An example of a question image used by the testing tool of the e-learning platform in this study. Similar images were presented to the novice students during testing

Images with nodular shadows and images with ground glass shadows were presented in random order, and the type of disease was not revealed to the subjects. The observers were free to set the viewing time, window level, window width, and magnification of the images. We calculated the correct answer rates separately for nodular shadows and ground glass shadows.
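The correct answer rate for each shadow type is the percentage of test images of that type that were answered correctly. A minimal Python sketch, assuming each response has already been scored as correct or incorrect (the paper does not state the exact scoring rule for the two-step question); the shadow-type labels and the sample responses are hypothetical:

```python
from collections import defaultdict


def correct_answer_rates(responses):
    """Return the percentage of correct answers for each shadow type.

    `responses` is an iterable of (shadow_type, is_correct) pairs, where
    `is_correct` is a bool produced by whatever scoring rule the test uses.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for shadow_type, is_correct in responses:
        totals[shadow_type] += 1
        if is_correct:
            correct[shadow_type] += 1
    # Percentage per shadow type, in the order the types were first seen.
    return {t: 100.0 * correct[t] / totals[t] for t in totals}


# Hypothetical responses from one student (not study data).
responses = [
    ("nodular", True), ("nodular", False),
    ("ground_glass", False), ("ground_glass", False),
    ("ground_glass", False), ("ground_glass", True),
]
print(correct_answer_rates(responses))  # {'nodular': 50.0, 'ground_glass': 25.0}
```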

Differences were assessed with the Kruskal-Wallis test, and P < 0.05 was considered significant. The StatMate software package (ATMS; Tokyo, Japan) was used for all statistical analyses.
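StatMate's implementation is not described here, so the following is only a minimal, standard-library Python sketch of the Kruskal-Wallis H statistic with the usual correction for tied ranks. The p-value uses the chi-square approximation with one degree of freedom, which applies only to a two-group comparison such as nodular vs ground glass shadows; this approximation can be inaccurate for very small groups. The function names and example scores are hypothetical.

```python
import math
from collections import Counter


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of tied values starting at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H statistic with the standard tie correction."""
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    sum_term = 0.0
    start = 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        sum_term += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * sum_term - 3 * (n + 1)
    # Tie correction: 1 - sum(t^3 - t) / (n^3 - n) over groups of tied values.
    tie_total = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1 - tie_total / (n ** 3 - n)
    return h / correction if correction > 0 else 0.0


def p_value_two_groups(h):
    """Upper-tail chi-square probability with 1 df (two groups only)."""
    return math.erfc(math.sqrt(max(h, 0.0) / 2))


# Hypothetical per-student correct answer rates (%), not study data.
nodular = [40, 50, 30, 45, 35]
ground_glass = [20, 25, 15, 30, 25]
h = kruskal_wallis_h(nodular, ground_glass)
print(f"H = {h:.3f}, P = {p_value_two_groups(h):.4f}")
```

Implementing the statistic directly keeps the sketch dependency-free; in practice an established statistics library would normally be preferred.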

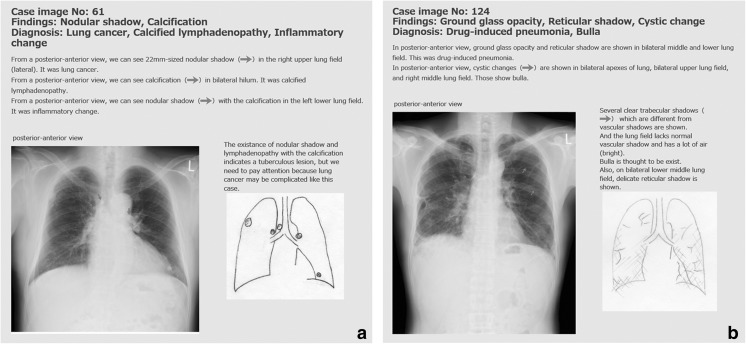

The 10 subjects were randomly divided into group A and group B. During the week after the initial test, group A studied nodular shadows and group B studied ground glass shadows, learning to interpret radiographs containing these shadows with the e-learning software. Examples of a nodular shadow and a ground glass shadow from the learning tool are shown in Fig. 2a, b. The software explained the visual pattern of each disease. The time spent learning and the learning method were left to each subject, but we instructed the subjects to view only images containing the shadow type assigned to them.

Fig. 2.

Screenshots from the e-learning platform. a Commentary screen for a nodular shadow. b Commentary screen for a ground glass shadow
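The random allocation and week-1 study assignment described above can be sketched in Python. The paper states only that the 10 subjects were divided at random; the equal 5/5 split, the seeded generator (used so that an allocation can be reproduced), and the subject IDs are assumptions for illustration, not details of the study.

```python
import random


def assign_groups(subject_ids, seed=None):
    """Randomly split subjects into groups A and B of (near-)equal size."""
    rng = random.Random(seed)  # independent, optionally seeded generator
    shuffled = list(subject_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"A": shuffled[:half], "B": shuffled[half:]}


# Week-1 study material for each group, as described in the protocol.
WEEK1_SHADOW = {"A": "nodular", "B": "ground_glass"}

subjects = [f"S{i:02d}" for i in range(1, 11)]  # hypothetical IDs
groups = assign_groups(subjects, seed=2024)
for name, members in groups.items():
    print(name, WEEK1_SHADOW[name], members)
```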

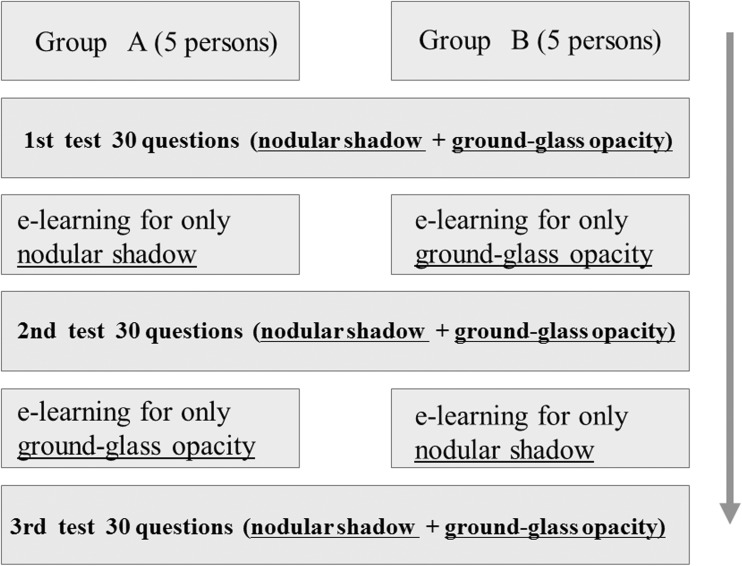

The second test was conducted 1 week after the initial test, using images different from those in the first test, and we assessed the difference in the correct answer rate between the two tests. Each group then trained for another week, this time on the other type of shadow, after which a third test was conducted. We defined the learning effect as the improvement in interpretation ability from before to after e-learning, evaluated by comparing the number of correct answers in each test. The timeline of the three tests and the learning process of the two groups are shown in Fig. 3.

Fig. 3.

The chronological scheme for the three tests and the learning process in the two groups
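The learning effect defined above is the change in each subject's correct answer rate between two tests. As a worked check on the reported figures, a mean correct answer rate rising from 34.5% to 72.7% corresponds to a mean improvement of 72.7 − 34.5 = 38.2 percentage points. The Python sketch below computes per-subject effects and their mean; it assumes rates have already been computed for each test, and the subject IDs and values are hypothetical.

```python
from statistics import mean


def learning_effects(pre, post):
    """Per-subject learning effect: post-test minus pre-test correct answer rate."""
    return {subject: post[subject] - pre[subject] for subject in pre}


# Hypothetical correct answer rates (%) for three subjects, not study data.
pre = {"S01": 30.0, "S02": 40.0, "S03": 35.0}
post = {"S01": 70.0, "S02": 75.0, "S03": 71.0}

effects = learning_effects(pre, post)
print(effects)                 # {'S01': 40.0, 'S02': 35.0, 'S03': 36.0}
print(mean(effects.values()))  # 37.0
```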

Results

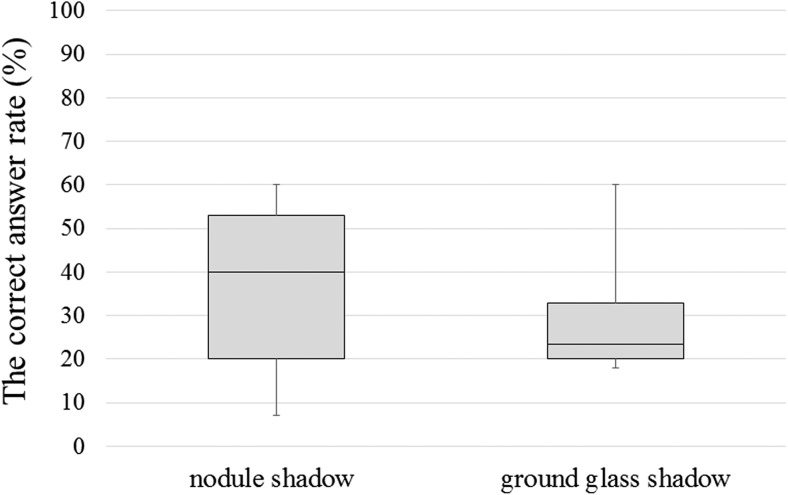

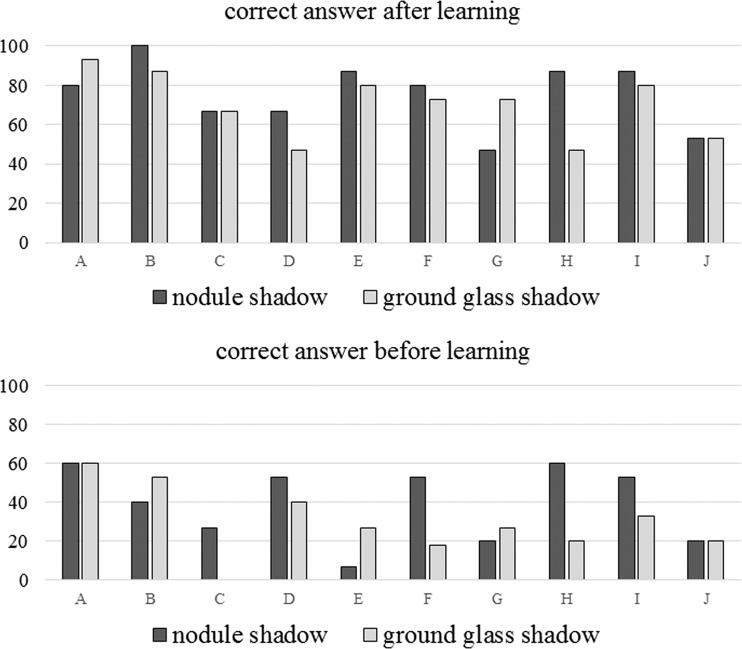

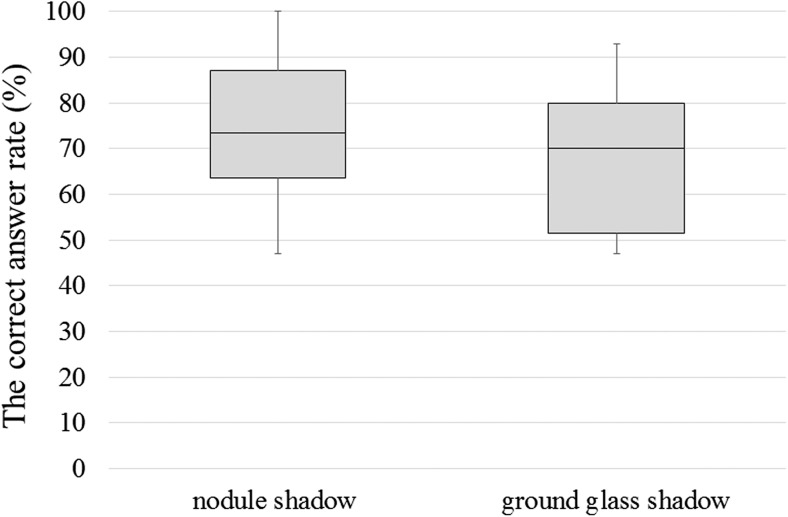

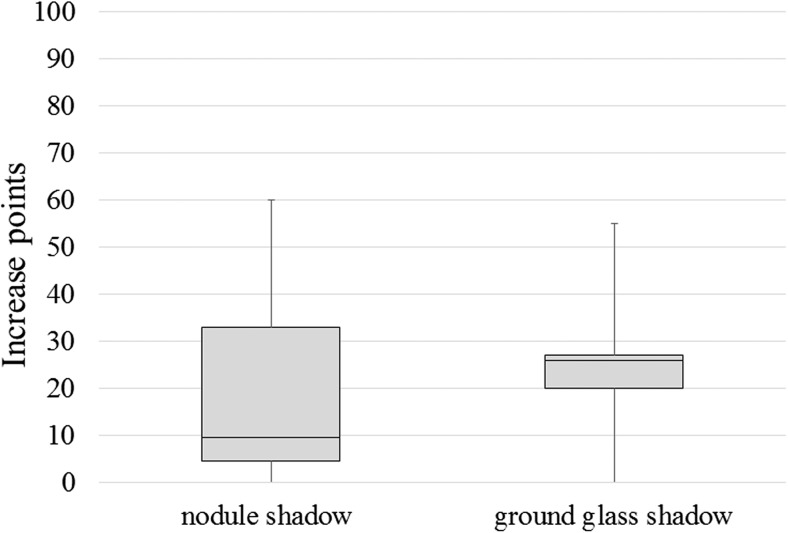

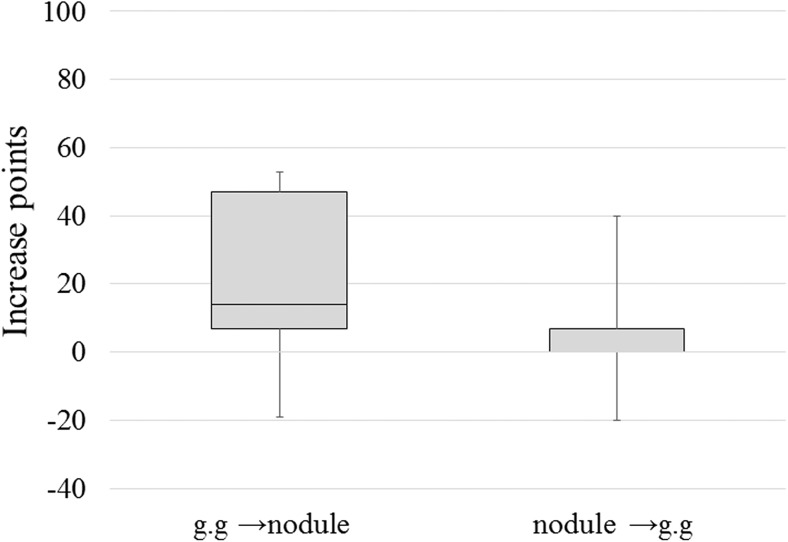

Figure 4 shows the results of the initial test, conducted before learning. The correct answer rate was 23% for images with ground glass shadows and 40% for images with nodular shadows; the rate was significantly higher for nodular shadows (P < 0.05). The correct answer rates for the initial test and for the test after 2 weeks of learning are shown in Fig. 5; the correct answer rate improved after learning in all 10 subjects. The correct answer rates after learning about each shadow type are shown in Fig. 6. The median correct answer rate was higher for nodular shadows, although this difference was not significant. The mean correct answer rate increased from 34.5% before learning to 72.7% after 2 weeks of learning. Figure 7 shows, for each shadow type, the difference in the correct answer rate between the tests before and after learning. Detection of ground glass shadows after learning about them improved significantly more than detection of nodular shadows after learning about them. Figure 8 shows the improvement in the correct answer rate for nodular shadows after learning only about ground glass shadows, and for ground glass shadows after learning only about nodular shadows; this graph thus indicates the effect of the program on a shadow type other than the one studied. Following this type of learning, the correct answer rate for ground glass shadows increased more significantly than that for nodular shadows.

Fig. 4.

The correct answer rate for the initial test before the training. The correct answer rate for ground glass shadows was 23% and that for nodule shadows was 40%. The correct answer rate for nodule shadows was higher than that for ground glass shadows (P < 0.05)

Fig. 5.

The correct answer rate for the initial test before the training and that for the third test after 2 weeks of training. The correct answer rates of all subjects improved after the training

Fig. 6.

The correct answer rate after learning about each shadow type. The median correct answer rate was higher for nodule shadows than for ground glass shadows, although the difference was not significant

Fig. 7.

Differences in the correct answer rates before and after the training. The rate of detection improved more for ground glass shadows than for nodule shadows after the training

Fig. 8.

Improvements in the correct answer rate for nodular shadows after learning only about ground glass shadows and for ground glass shadows after learning only about nodular shadows. The correct answer rate increased more significantly for ground glass shadows than for nodular shadows after learning about the other type of shadow

Discussion

Chest radiography is the most frequently performed radiological examination, but its interpretation is difficult for medical staff and students. Medical care would improve if medical staff learned to interpret chest radiographs during their university education. Many reports have described effective learning methods for the education of students and medical staff. We examined the effectiveness of e-learning for interpreting radiographs containing different types of disease shadows, namely nodular shadows and ground glass opacity. We chose these two types of lesion because they are characteristic lung lesions.

The mean correct answer rate increased from 34.5% to 72.7% after 2 weeks of training. Before training, the correct answer rate was 23% for ground glass shadows and 40% for nodular shadows, indicating that the students found ground glass shadows more difficult to interpret. After 2 weeks of training, the correct answer rate rose to 70% for both types of shadow. The effect of training was thus particularly marked for images with ground glass shadows.

We found that the learning program was particularly effective for the interpretation of ground glass shadows by the novice students. The correct answer rate for images with ground glass shadows among students who had learned only about nodular shadows was under 10%, whereas the correct answer rate for images with nodular shadows among students who had learned only about ground glass shadows was 30%. Several reports indicate that education combining e-learning and face-to-face training is effective [11, 13]. However, e-learning, which can be completed in the student’s free time, is particularly valuable for working postgraduate trainees, for whom attending face-to-face training is difficult.

This study is limited by its small sample of 10 students. Its novel aspect is the recruitment of students with no prior interpretation experience, which allowed us to test their ability to learn diagnosis and interpretation via e-learning starting from no prior knowledge. This foundational study will be developed further. The e-learning software used here includes 500 radiographs showing many abnormalities besides the lesions targeted in this study, and it can also be used with other modalities. The purpose of this study was to demonstrate the basic efficacy of e-learning software in radiology, even for students with no experience, using the two abnormalities as test samples. The test included moderately difficult radiographs, and our results suggest that e-learning improved the students’ ability to interpret even these difficult radiographs. Future studies will focus on interpreting trauma images that non-radiologists are likely to encounter, and we also plan to increase the number of cases and the types of lesion reviewed. We hope that this e-learning approach will be applied in the future to the interpretation of images of other organs across a wider range of modalities.

Conclusions

Here, we examined the effectiveness of an e-learning platform for training medical staff and students in the interpretation of chest radiographs. Education using the e-learning platform was very effective for the interpretation of chest radiographs, particularly for ground glass shadows. This software can substantially support student training.

Compliance with Ethical Standards

Ethical review board approval was obtained for this study, and informed consent was obtained from all participants.

References

1. Sardanelli F, Quarenghi M, Fausto A, et al. How many medical requests for US, body CT, and musculoskeletal MR exams in outpatients are inadequate? Radiol Med. 2005;109(3):229–233.

2. Dawes TJ, Vowler SL, Allen CM, et al. Training improves medical student performance in image interpretation. BJR. 2004;77(921):775–776. doi: 10.1259/bjr/66388556.

3. Subramaniam RM. Medical student radiology training: What are the objectives for contemporary medical practice? Acad Radiol. 2003;10(3):295–300. doi: 10.1016/S1076-6332(03)80104-6.

4. Eisen LA, Berger JS, Hegde A, Schneider RF. Competency in chest radiography: A comparison of medical students, residents, and fellows. J Gen Intern Med. 2006;21:460–465. doi: 10.1111/j.1525-1497.2006.00427.x.

5. Doubilet P, Herman PG. Interpretation of radiographs: Effect of clinical history. AJR. 1981;137:1055–1058. doi: 10.2214/ajr.137.5.1055.

6. Scarsbrook AF, Graham RNJ, Perriss RW, et al. Radiology education: A glimpse into the future. Clin Radiol. 2006;61(8):640–648. doi: 10.1016/j.crad.2006.04.005.

7. Pinto A, Selvaggi S, Sicignano G, et al. E-learning tools for education: Regulatory aspects, current applications in radiology and future prospects. Radiol Med. 2008;113(1):144–157. doi: 10.1007/s11547-008-0227-z.

8. Marshall NL, Spooner M, Galvin PL, Ti JP, McElvaney NG, Lee MJ. Informatics in radiology: Evaluation of an e-learning platform for teaching medical students competency in ordering radiologic examinations. RadioGraphics. 2011;31:1463–1474. doi: 10.1148/rg.315105081.

9. Pinto A, Brunese L, Pinto F, et al. E-learning and education in radiology. Eur J Radiol. 2011;78(3):368–371. doi: 10.1016/j.ejrad.2010.12.029.

10. Grunewald M, Heckemann RA, Gebhard H, et al. Compare radiology: Creating an interactive web-based training program for radiology with multimedia authoring software. Acad Radiol. 2003;10(3):543–553. doi: 10.1016/S1076-6332(03)80065-X.

11. Salajegheh A, Jahangiri A, Evant EO, et al. A combination of traditional learning and e-learning can be more effective on radiological interpretation skills in medical students: A pre- and post-intervention study. BMC Med Educ. 2016;16:46. doi: 10.1186/s12909-016-0569-5.

12. Choules AP. The use of elearning in medical education: A review of the current situation. Postgrad Med J. 2007;83(978):212–216. doi: 10.1136/pgmj.2006.054189.

13. Howlett D, Vincent T, Watson G, Owens E, Webb R, Gainsborough N, Fairclough J, Taylor N, Miles K, Cohen J, Vincent R. Blending online techniques with traditional face to face teaching methods to deliver final year undergraduate radiology learning content. Eur J Radiol. 2011;78(3):334–341. doi: 10.1016/j.ejrad.2009.07.028.

14. Shadbolt N, Berners-Lee T, Hall W. The Semantic Web Revisited. IEEE Intelligent Systems. 2006;21(3):96–101. doi: 10.1109/MIS.2006.62.