Abstract

Detecting genes with similar expression patterns using clustering techniques plays an important role in gene expression data analysis. Non-negative matrix factorization (NMF) is an effective method for the clustering analysis of gene expression data. However, the NMF-based method operates in Euclidean space and is usually inappropriate for revealing the intrinsic geometric structure of the data space. To overcome this shortcoming, Cai et al. proposed graph regularized non-negative matrix factorization (GNMF). Motivated by the topological structure of the GNMF-based method, we propose an improved graph regularized NMF that better reveals the geometric structure of the data space. This method, robust manifold non-negative matrix factorization (RM-GNMF), is designed for cancer gene clustering and enhances the robustness of the GNMF-based algorithm. We combine $L_{2,1}$-norm NMF with spectral clustering and conduct extensive experiments on three well-known datasets. The clustering results indicate that the proposed method outperforms previous methods, demonstrating the applicability of the RM-GNMF-based method to cancer gene clustering.

Keywords: robust, manifold, matrix factorization, gene clustering

1. Introduction

With the progress of human whole-genome and microarray technologies, it is now possible to observe the expression of numerous genes in different tissue samples simultaneously. By analyzing gene expression data, genes with varying expression across tissues, and the relationships among them, can be identified to help uncover the pathogenic mechanisms of cancers based on genetic changes [1]. Cancer classification based on gene expression data has recently become an active research topic in bioinformatics.

Because the analysis of genome-wide expression patterns can provide unique perspectives on the structure of genetic networks, clustering techniques have been used to analyze gene expression data [2,3]. Cluster analysis is among the most widespread statistical techniques for analyzing massive gene expression data. Its major task is to group genes with similar expression patterns in order to discover groups of genes with shared features or similar biological functions, giving a deeper understanding of biological phenomena such as gene function, development, cancer, and pharmacology [4].

It has been shown that non-negative matrix factorization (NMF) [5,6] outperforms hierarchical clustering (HC) and self-organizing maps (SOM) [7] when clustering cancer samples from gene expression data. Over the past few years, NMF-based methods have been used for the statistical analysis and clustering of gene expression data [8,9,10,11,12,13]. The main idea is to approximately factorize a non-negative data matrix into the product of two non-negative matrices, so that all elements of both factors are non-negative. NMF has therefore attracted considerable attention, and various variants of the original NMF have been developed by modifying the objective function or the constraints [14,15,16]. For instance, Cai et al. proposed graph regularized non-negative matrix factorization (GNMF), which exploits the neighborhood geometric structure of the data: it builds a nearest neighbor graph so that the neighborhood information of the high-dimensional space is preserved in the low-dimensional space. GNMF reveals the intrinsic geometrical structure by incorporating a Laplacian regularization term [17], which is effective for clustering problems. In addition, sparse NMF [18] imposes sparseness constraints on the basis and coefficient matrices produced by NMF so that the sparseness of the data can be reflected, and non-smooth NMF [19] achieves global or local sparseness [20] by making the basis and encoding matrices sparse simultaneously. To enhance the robustness of the GNMF-based method in gene clustering, we propose robust manifold non-negative matrix factorization (RM-GNMF), which combines the $L_{2,1}$-norm and spectral clustering with Laplacian regularization to capture the internal geometry of the data representation. This helps reveal the intrinsic geometric structure of the cancer gene data space.

This paper is organized as follows. Section 2 briefly reviews NMF and GNMF. Section 3 proposes the improved RM-GNMF algorithm. Section 4 reports experimental results and compares them with previous methods. Section 5 draws conclusions.

2. The NMF-Based and GNMF-Based Methods

NMF [5] provides a linear, non-negative approximate description of non-negative data. Consider an original data matrix $X$ of size $m\times n$, where $m$ is the number of features (genes) and $n$ is the number of samples. NMF decomposes $X$ into two non-negative matrices $W\in\mathbb{R}_{+}^{m\times r}$ and $H\in\mathbb{R}_{+}^{r\times n}$, i.e.,

$$
X\approx WH \tag{1}
$$

where $r<\min(m,n)$. For a given decomposition $X\approx WH$, the $n$ samples can be divided into $r$ classes according to the matrix $H$. Each sample is assigned to the metagene with the highest expression level in that sample: if $H_{ij}$ is the largest entry in column $j$, then sample $j$ is assigned to class $i$.
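The assignment rule above is a column-wise argmax over $H$. The following minimal NumPy sketch illustrates it on a toy coefficient matrix; the function name `assign_clusters` is our own, hypothetical choice.

```python
import numpy as np

def assign_clusters(H: np.ndarray) -> np.ndarray:
    """Assign sample j to the metagene (row) with the largest entry in column j of H.

    H has shape (r, n): r metagenes by n samples.
    """
    return np.argmax(H, axis=0)

# Toy coefficient matrix: 2 metagenes, 4 samples.
H = np.array([[0.9, 0.2, 0.7, 0.1],
              [0.1, 0.8, 0.3, 0.6]])
labels = assign_clusters(H)
print(labels.tolist())  # [0, 1, 0, 1]
```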

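Using the squared Euclidean distance between $X$ and $WH$ [21], the objective function of the NMF method is

$$
O_F=\|X-WH\|_F^{2}=\sum_{i=1}^{m}\sum_{j=1}^{n}\big(X_{ij}-(WH)_{ij}\big)^{2},\qquad \text{s.t. } W\ge 0,\ H\ge 0 \tag{2}
$$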

Following the iterative algorithms of [6], NMF updates $W$ and $H$ with the multiplicative rules

$$
W_{ik}\leftarrow W_{ik}\,\frac{(XH^{T})_{ik}}{(WHH^{T})_{ik}},\qquad H_{kj}\leftarrow H_{kj}\,\frac{(W^{T}X)_{kj}}{(W^{T}WH)_{kj}} \tag{3}
$$
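As a minimal sketch of the multiplicative rules above, the NumPy code below runs Lee–Seung updates on a random toy matrix. The small constant `eps` in the denominators (to avoid division by zero), the random initialization, and the function name are our own assumptions, not details from the paper.

```python
import numpy as np

def nmf_multiplicative(X, r, n_iter=200, eps=1e-10, seed=0):
    """Frobenius-norm NMF X ~ W @ H using multiplicative updates."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    W = rng.random((m, r)) + eps   # strictly positive initialization
    H = rng.random((r, n)) + eps
    errors = []
    for _ in range(n_iter):
        # H <- H * (W^T X) / (W^T W H)
        H *= (W.T @ X) / (W.T @ W @ H + eps)
        # W <- W * (X H^T) / (W H H^T)
        W *= (X @ H.T) / (W @ H @ H.T + eps)
        errors.append(np.linalg.norm(X - W @ H, "fro") ** 2)
    return W, H, errors

rng = np.random.default_rng(1)
X = rng.random((20, 12))            # toy non-negative "expression" matrix
W, H, errors = nmf_multiplicative(X, r=3)
```

Because the updates only multiply by non-negative ratios, $W$ and $H$ stay non-negative without any explicit projection.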

To overcome the limitation of the NMF-based method, Cai et al. [17] proposed the GNMF-based method, in which an affinity graph is constructed to encode the geometrical information, and the matrix factorization is then performed with respect to the graph structure. In contrast to NMF, GNMF adds a graph regularization term, which retains the parts-based (locally sparse) representation of NMF while preserving the similarity between the original data points after dimensionality reduction.

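There are several weighting schemes, such as 0-1 weighting, heat kernel weighting, and dot-product weighting [17]. In what follows, we consider the 0-1 weighting

$$
Q_{ij}=\begin{cases}1, & \text{if } x_j\in N_p(x_i)\ \text{or}\ x_i\in N_p(x_j),\\ 0, & \text{otherwise,}\end{cases} \tag{4}
$$

where $N_p(x_i)$ denotes the set of $p$ nearest neighbors of the data point $x_i$. Based on the weight matrix $Q$, the objective function of the GNMF method is

$$
O_{\mathrm{GNMF}}=\|X-WH\|_F^{2}+\lambda\,\mathrm{Tr}(HLH^{T}) \tag{5}
$$

where $\mathrm{Tr}(\cdot)$ denotes the trace of a matrix and $L=D-Q$ is the graph Laplacian. $D$ is a diagonal matrix whose entries are the row sums (equivalently, column sums, since $Q$ is symmetric) of $Q$, i.e., $D_{jj}=\sum_{l}Q_{jl}$ [22]. The regularization parameter $\lambda\ge 0$ controls the smoothness of the new representation. Minimizing $O_{\mathrm{GNMF}}$ iteratively yields the update rules

$$
W_{ik}\leftarrow W_{ik}\,\frac{(XH^{T})_{ik}}{(WHH^{T})_{ik}},\qquad H_{kj}\leftarrow H_{kj}\,\frac{(W^{T}X+\lambda HQ)_{kj}}{(W^{T}WH+\lambda HD)_{kj}} \tag{6}
$$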
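The sketch below implements these GNMF multiplicative updates in NumPy on a toy chain graph. The graph, the `eps` safeguard against division by zero, and the function name are illustrative assumptions; the objective is recorded so that its decrease can be checked.

```python
import numpy as np

def gnmf(X, Q, r, lam=1.0, n_iter=200, eps=1e-10, seed=0):
    """Graph regularized NMF: minimize ||X - WH||_F^2 + lam * Tr(H L H^T), L = D - Q.

    X: (m, n) non-negative data, columns are samples.
    Q: (n, n) symmetric non-negative weight matrix over the samples.
    """
    rng = np.random.default_rng(seed)
    m, n = X.shape
    D = np.diag(Q.sum(axis=1))          # degree matrix
    L = D - Q                           # graph Laplacian (used to report the objective)
    W = rng.random((m, r)) + eps
    H = rng.random((r, n)) + eps
    objective = []
    for _ in range(n_iter):
        W *= (X @ H.T) / (W @ H @ H.T + eps)
        H *= (W.T @ X + lam * (H @ Q)) / (W.T @ W @ H + lam * (H @ D) + eps)
        objective.append(np.linalg.norm(X - W @ H, "fro") ** 2
                         + lam * np.trace(H @ L @ H.T))
    return W, H, objective

rng = np.random.default_rng(2)
X = rng.random((15, 8))
# Toy chain graph over the 8 samples: sample j is linked to sample j + 1.
Q = np.zeros((8, 8))
for j in range(7):
    Q[j, j + 1] = Q[j + 1, j] = 1.0
W, H, objective = gnmf(X, Q, r=2, lam=0.5)
```

The $\lambda HQ$ term in the numerator and the $\lambda HD$ term in the denominator come from splitting the gradient of $\lambda\,\mathrm{Tr}(HLH^{T})$, namely $2\lambda H(D-Q)$, into its negative and positive parts.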

3. The RM-GNMF-Based Method for Gene Clustering

So far, we have described the NMF-based and GNMF-based methods. In what follows, we develop RM-GNMF for gene clustering by combining the $L_{2,1}$-norm and spectral clustering with Laplacian regularization.

3.1. The $L_{2,1}$-Norm

The $L_{2,1}$-norm of a matrix was initially employed as a rotational invariant $L_1$-norm [23] and has mostly been used for multi-task learning [24,25] and tensor factorization [26]. Instead of an $L_2$-norm-based loss function, which is sensitive to outliers, we adopt the $L_{2,1}$-norm-based loss function and regularization [23], for which convergence has been proved.

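To overcome the drawbacks of the NMF-based method and enhance the robustness of the GNMF-based method, we employ the $L_{2,1}$-norm for the matrix factorization in the RM-GNMF-based method. For a non-negative matrix $X$ of size $m\times n$, the $L_{2,1}$-norm of $X$ is defined as

$$
\|X\|_{2,1}=\sum_{j=1}^{n}\|x_j\|_2=\sum_{j=1}^{n}\sqrt{\sum_{i=1}^{m}X_{ij}^{2}} \tag{7}
$$

where data vectors are arranged in columns: the $L_{2,1}$-norm first computes the $L_2$-norm of each column vector and then sums these norms. Because each residual column contributes its norm rather than its squared norm, outlying samples influence the fit less than under the squared Frobenius norm. The matrix factorization task then becomes

$$
\min_{W\ge 0,\ H\ge 0}\ \|X-WH\|_{2,1} \tag{8}
$$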
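A small NumPy sketch of Equation (7), with a toy residual matrix to illustrate the robustness argument: scaling one residual column by 10 multiplies its $L_{2,1}$ contribution by 10 but its squared-Frobenius contribution by 100. The matrices and the function name are our own illustrative choices.

```python
import numpy as np

def l21_norm(X):
    """L2,1-norm: Euclidean norm of each column (data vector), then the sum over columns."""
    return float(np.sum(np.linalg.norm(X, axis=0)))

X = np.array([[3.0, 0.0],
              [4.0, 1.0]])
print(l21_norm(X))  # 6.0, i.e. ||(3, 4)|| + ||(0, 1)|| = 5 + 1

# Residual matrix with one outlying column scaled by 10.
R = np.ones((5, 4))
R_out = R.copy()
R_out[:, 0] *= 10.0
ratio_l21 = l21_norm(R_out) / l21_norm(R)                        # about 3.25
ratio_fro = np.linalg.norm(R_out) ** 2 / np.linalg.norm(R) ** 2  # about 25.75
```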

3.2. Spectral-Based Manifold Learning for Constrained GNMF

The spectral method is a classical technique that draws on analysis and algebra, and it is widely used for low-dimensional representation and clustering of high-dimensional data [27,28]. A relational matrix describing the pairwise similarity of data points is defined from the given sample dataset, and its eigenvalues and eigenvectors are computed. The appropriate eigenvectors are then selected to obtain a low-dimensional embedding of the data. Several matrices can be defined on a given graph, such as its adjacency matrix, its degree matrix, and its Laplacian matrix [22].
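The procedure just described (build a similarity matrix, compute its eigenvectors, keep the appropriate ones as an embedding) can be sketched as follows. This is a generic Laplacian-eigenmap style illustration under our own assumptions (unnormalized Laplacian $L=D-Q$, eigenvectors of the smallest eigenvalues, hypothetical function name), not the paper's implementation.

```python
import numpy as np

def spectral_embedding(Q, k):
    """Embed the n vertices of a graph with symmetric weights Q into k dimensions,
    using the eigenvectors of L = D - Q with the k smallest eigenvalues (as columns)."""
    L = np.diag(Q.sum(axis=1)) - Q
    eigvals, eigvecs = np.linalg.eigh(L)    # eigh returns ascending eigenvalues
    return eigvecs[:, :k]                   # row i is the embedding of vertex i

# Two disconnected triangles: vertices {0, 1, 2} and {3, 4, 5}.
Q = np.zeros((6, 6))
for a, b in [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5)]:
    Q[a, b] = Q[b, a] = 1.0
E = spectral_embedding(Q, k=2)
```

With two connected components, the two zero-eigenvalue eigenvectors are constant on each component, so vertices in the same triangle receive identical embeddings and the two triangles are separated.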

Based on the spectra of the matrices associated with a graph $G$, spectral graph theory reveals the information contained in $G$ and connects the discrete and continuous settings through techniques from geometry, analysis, and algebra. It has a wide range of applications in manifold learning. In this section, the $p$-nearest neighbor method is used to establish the relationship between each data point and its neighborhood.

For a data matrix $X=[x_1,\dots,x_n]\in\mathbb{R}^{m\times n}$, we treat each column of $X$ as a data point and each data point as a vertex. A $p$-nearest-neighbor graph $G$ with $n$ vertices is constructed, and the symmetric weight matrix $Q\in\mathbb{R}^{n\times n}$ is generated, where the element $Q_{ij}$ denotes the weight of the edge joining vertices $i$ and $j$ and is given by

$$
Q_{ij}=\begin{cases}1, & \text{if } x_i\in N_p(x_j)\ \text{or}\ x_j\in N_p(x_i),\\ 0, & \text{otherwise,}\end{cases} \tag{9}
$$

where $N_p(x_i)$ denotes the set of $p$ nearest neighbors of $x_i$. The matrix $Q$ thus encodes the affinity between the data points.
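A minimal NumPy sketch of the 0-1 weighted $p$-nearest-neighbor graph in Equation (9), using Euclidean distance between columns. The distance choice, the tie handling (whatever `argsort` returns), and the function name are our assumptions.

```python
import numpy as np

def knn_graph(X, p):
    """Symmetric 0-1 weight matrix of the p-nearest-neighbor graph.

    Columns of X are data points. Q[i, j] = 1 if x_j is among the p nearest
    neighbors of x_i or x_i is among those of x_j; otherwise 0.
    """
    n = X.shape[1]
    sq = np.sum(X ** 2, axis=0)
    dist = sq[:, None] + sq[None, :] - 2.0 * X.T @ X   # squared Euclidean distances
    np.fill_diagonal(dist, np.inf)                      # a point is not its own neighbor
    nearest = np.argsort(dist, axis=1)[:, :p]
    Q = np.zeros((n, n))
    for i in range(n):
        Q[i, nearest[i]] = 1.0
    return np.maximum(Q, Q.T)                           # the "or" rule makes Q symmetric

# Five one-dimensional points at 0, 1, 3, 10, 12 (one feature, five samples).
X = np.array([[0.0, 1.0, 3.0, 10.0, 12.0]])
Q = knn_graph(X, p=1)
```

Here the point at 3 has the point at 1 as its nearest neighbor, so the symmetrization adds the edge 1–3 even though the point at 1 would choose 0.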

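The manifold assumption states that if two data points $x_i$ and $x_j$ are close in the intrinsic geometric structure of the data distribution, then their representations under the new basis are also close [29]. We therefore measure the smoothness of the new representation by

$$
R=\frac{1}{2}\sum_{i,j=1}^{n}\|h_i-h_j\|_2^{2}\,Q_{ij} \tag{10}
$$

where $h_i$ and $h_j$ (the $i$-th and $j$-th columns of $H$) denote the mappings of $x_i$ and $x_j$, respectively. The degree matrix $D$ is diagonal with $D_{ii}=\sum_{j}Q_{ij}$, i.e., $D_{ii}$ is the sum of all the similarities related to $x_i$. The graph Laplacian matrix is then given by

$$
L=D-Q \tag{11}
$$

The graph embedding term can be written as

$$
R=\sum_{i=1}^{n}h_i^{T}h_i\,D_{ii}-\sum_{i,j=1}^{n}h_i^{T}h_j\,Q_{ij}=\mathrm{Tr}(HDH^{T})-\mathrm{Tr}(HQH^{T})=\mathrm{Tr}(HLH^{T}) \tag{12}
$$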
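The identity between the pairwise smoothness penalty and the trace form of the graph embedding can be checked numerically. The sketch below uses a random symmetric weight matrix and a random representation $H$ (both illustrative) and also confirms that $L=D-Q$ is positive semidefinite.

```python
import numpy as np

rng = np.random.default_rng(0)
n, r = 6, 2
Q = rng.random((n, n))
Q = (Q + Q.T) / 2                     # symmetric non-negative weights
np.fill_diagonal(Q, 0.0)
H = rng.random((r, n))                # column h_i is the representation of sample i
D = np.diag(Q.sum(axis=1))
L = D - Q

# 1/2 * sum_ij Q_ij * ||h_i - h_j||^2
pairwise = 0.5 * sum(Q[i, j] * np.sum((H[:, i] - H[:, j]) ** 2)
                     for i in range(n) for j in range(n))
trace_form = np.trace(H @ L @ H.T)    # Tr(H L H^T)
print(np.isclose(pairwise, trace_form))  # True
```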

In the RM-GNMF-based method, we combine the GNMF-based method with spectral clustering, which yields the $L_{2,1}$-norm constrained GNMF

$$
\min_{W,H}\ \|X-WH\|_{2,1}+\lambda\,\mathrm{Tr}(HLH^{T})\qquad \text{s.t. } HH^{T}=I \tag{13}
$$

where $\lambda$ is the regularization parameter. We use the augmented Lagrange multiplier (ALM) method to solve this problem.

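Introducing an auxiliary variable $Z$, we rewrite the problem in Equation (13) as

$$
\min_{Z,W,H}\ \|Z\|_{2,1}+\lambda\,\mathrm{Tr}(HLH^{T}) \tag{14}
$$

subject to the constraints $Z=X-WH$ and $HH^{T}=I$. We then define the augmented Lagrangian function

$$
\mathcal{L}(Z,W,H,\Lambda)=\|Z\|_{2,1}+\lambda\,\mathrm{Tr}(HLH^{T})+\langle\Lambda,\ Z-X+WH\rangle+\frac{\mu}{2}\|Z-X+WH\|_F^{2} \tag{15}
$$

subject to $HH^{T}=I$, where $\mu>0$ is the step size of the update and $\Lambda$ is the Lagrangian multiplier.

To optimize Equation (15), we rewrite the objective function in the equivalent form

$$
\min_{Z,W,H}\ \|Z\|_{2,1}+\lambda\,\mathrm{Tr}(HLH^{T})+\frac{\mu}{2}\Big\|Z-X+WH+\frac{\Lambda}{\mu}\Big\|_F^{2} \tag{16}
$$

subject to $HH^{T}=I$; the two objectives differ only by $\|\Lambda\|_F^{2}/(2\mu)$, which does not depend on $Z$, $W$, or $H$.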

3.3. Computation of Z

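For given $W$ and $H$, $Z$ is obtained from Equation (15) by solving the subproblem

$$
\min_{Z}\ \|Z\|_{2,1}+\frac{\mu}{2}\Big\|Z-\Big(X-WH-\frac{\Lambda}{\mu}\Big)\Big\|_F^{2} \tag{17}
$$

Dividing Equation (17) by $\mu$ shows that it is an instance of Equation (18) below with $A=X-WH-\Lambda/\mu$ and $\lambda$ replaced by $1/\mu$. We need the following Lemma to solve for $Z$; see Appendix A for a detailed proof.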

Lemma 1.

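Given a matrix $A$ and a positive scalar $\lambda$, $Z^{*}$ is the optimal solution of

$$
\min_{Z}\ \frac{1}{2}\|Z-A\|_F^{2}+\lambda\|Z\|_{2,1} \tag{18}
$$

and the $i$-th column of $Z^{*}$ is given by

$$
z_i^{*}=\begin{cases}\Big(1-\dfrac{\lambda}{\|a_i\|_2}\Big)a_i, & \text{if } \|a_i\|_2>\lambda,\\[4pt] 0, & \text{otherwise,}\end{cases} \tag{19}
$$

where $a_i$ is the $i$-th column of $A$.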
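The column-wise shrinkage in Lemma 1 (the proximal operator of the $L_{2,1}$-norm) takes a few lines of NumPy. This is a sketch with a hypothetical function name; the small floor on the column norms only guards against dividing by zero for all-zero columns.

```python
import numpy as np

def prox_l21(A, lam):
    """Solve min_Z 0.5 * ||Z - A||_F^2 + lam * ||Z||_{2,1} column by column."""
    norms = np.linalg.norm(A, axis=0)
    # scale_i = max(1 - lam / ||a_i||, 0); columns with ||a_i|| <= lam become zero
    scale = np.maximum(1.0 - lam / np.maximum(norms, 1e-300), 0.0)
    return A * scale

A = np.array([[3.0, 0.3],
              [4.0, 0.4]])
Z = prox_l21(A, lam=1.0)
# expected: first column 0.8 * (3, 4) = (2.4, 3.2); second column (norm 0.5 <= 1) is zeroed
```

Entire columns are either shrunk toward zero or removed, which is why the $L_{2,1}$ penalty acts on whole samples rather than on individual entries.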

3.4. Computation of W and H

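With the other variables fixed, we solve for the optimal $W$. The update of $W$ amounts to solving

$$
\min_{W}\ \Big\|Z-X+WH+\frac{\Lambda}{\mu}\Big\|_F^{2} \tag{20}
$$

Let $N=X-Z-\Lambda/\mu$. The problem in Equation (20) can be rewritten as

$$
\min_{W}\ \|N-WH\|_F^{2} \tag{21}
$$

Setting the partial derivative with respect to $W$ to zero and using $HH^{T}=I$, we obtain

$$
W=NH^{T}=\Big(X-Z-\frac{\Lambda}{\mu}\Big)H^{T} \tag{22}
$$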
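The $W$-subproblem is an ordinary least-squares problem in $W$. If $H$ has orthonormal rows ($HH^{T}=I$; this is an assumption here, consistent with the SVD-based $H$ update in Algorithm 1), its minimizer reduces to $W=NH^{T}$. The sketch below compares this closed form with a generic least-squares solve on random data; `N` merely stands in for the residual-type matrix of the $W$-subproblem.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n, r = 8, 10, 3
N = rng.standard_normal((m, n))          # stand-in for the matrix N of the W-subproblem
# Hypothetical H with orthonormal rows (H H^T = I), built from a reduced QR factorization.
H = np.linalg.qr(rng.standard_normal((n, r)))[0].T

W_closed = N @ H.T                                       # closed form when H H^T = I
W_lstsq = np.linalg.lstsq(H.T, N.T, rcond=None)[0].T    # generic min ||N - W H||_F
print(np.allclose(W_closed, W_lstsq))                    # True
```

In general the normal equations give $W=NH^{T}(HH^{T})^{-1}$; the orthonormality constraint removes the matrix inverse.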

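Then, we derive the optimal $H$. Substituting $W=NH^{T}$, the update problem of $H$ can be expressed as

$$
\min_{H}\ \frac{\mu}{2}\|N-NH^{T}H\|_F^{2}+\lambda\,\mathrm{Tr}(HLH^{T}) \tag{23}
$$

subject to $HH^{T}=I$. Because $HH^{T}=I$ makes $H^{T}H$ a projection, $\|N-NH^{T}H\|_F^{2}=\|N\|_F^{2}-\mathrm{Tr}(HN^{T}NH^{T})$, and we have

$$
\min_{H}\ \mathrm{Tr}\Big(H\Big(\lambda L-\frac{\mu}{2}N^{T}N\Big)H^{T}\Big)\qquad \text{s.t. } HH^{T}=I \tag{24}
$$

Therefore, the optimal $H$ is obtained from eigenvectors: its rows are the eigenvectors associated with the $r$ smallest eigenvalues of

$$
\lambda L-\frac{\mu}{2}N^{T}N \tag{25}
$$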
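Assuming the $H$-subproblem takes the form $\min_H \mathrm{Tr}(HMH^{T})$ subject to $HH^{T}=I$ for a symmetric matrix $M$ (our assumption about the structure behind the eigenvector step), Ky Fan's theorem says the minimum equals the sum of the $r$ smallest eigenvalues of $M$, attained by stacking the corresponding eigenvectors as rows. The sketch below checks this against a random feasible $H$; `M` is a hypothetical symmetric matrix.

```python
import numpy as np

def orthonormal_trace_min(M, r):
    """Minimize Tr(H M H^T) subject to H H^T = I for symmetric M.

    Returns H (r x n, eigenvectors of the r smallest eigenvalues as rows) and the minimum.
    """
    eigvals, eigvecs = np.linalg.eigh(M)      # ascending eigenvalues
    return eigvecs[:, :r].T, eigvals[:r].sum()

rng = np.random.default_rng(0)
B = rng.standard_normal((6, 6))
M = (B + B.T) / 2                             # hypothetical symmetric matrix
H, best = orthonormal_trace_min(M, r=2)

# Any other feasible H (random orthonormal rows) gives a value >= the minimum.
H_rand = np.linalg.qr(rng.standard_normal((6, 2)))[0].T
print(np.trace(H_rand @ M @ H_rand.T) >= best - 1e-10)  # True
```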

3.5. Updating of Λ and μ

The Lagrangian multiplier $\Lambda$ and the step size $\mu$ are updated as follows

| (26) |

| (27) |

where p is the nearest neighbor graph parameter. The detailed process of the RM-GNMF-based method is listed in Algorithm 1.

| Algorithm 1: The RM-GNMF-based Algorithm |

Input: the dataset $X\in\mathbb{R}^{m\times n}$, a predefined number of clusters $k$, the parameters $\lambda$ and $\mu$, the nearest neighbor graph parameter $p$, and the maximum iteration number $t_{\max}$.
Initialization: set initial values of the variables and of the iteration counter $t$.
Repeat
1. Fix the other variables and update $Z$ by Equation (17);
2. Fix the other variables and update $W$ by Equation (22);
3. Update $H$ as $H=UV^{T}$, where $U$ and $V$ contain the left and right singular vectors of the SVD;
4. Fix the other variables and update $\Lambda$ and $\mu$ by Equations (26) and (27);
5. $t=t+1$;
Until $t>t_{\max}$.
Output: the matrices $W$ and $H$.

4. Results and Discussion

In this section, we evaluate the performance of the proposed method on three gene expression datasets. We compare the RM-GNMF-based method with the NMF-based method [6], the $L_{2,1}$-NMF-based method [23], the LNMF-based method [20], and the GNMF-based method [17].

4.1. Datasets

To evaluate the performance of the proposed RM-GNMF algorithm, clustering experiments were conducted on gene expression datasets of cancer patients. Three classical datasets were used: leukemia [1], colon, and GLI_85 [30]. These gene expression datasets were downloaded from http://featureselection.asu.edu/datasets.php. The colon cancer dataset consists of the expression profiles of 2000 genes for 62 tissue samples, of which 40 are colon cancer tissues and 22 are normal tissues. The leukemia dataset consists of 7129 genes and 72 samples (47 ALL and 25 AML).

Table 1 briefly describes the experimental datasets.

Table 1.

Statistics of three gene expression datasets.

| Data Sets | Instances | Features | Classes |
|---|---|---|---|
| Colon | 62 | 2000 | 2 |
| GLI_85 | 85 | 22,283 | 2 |
| Leukemia | 72 | 7070 | 2 |

More detailed information on these datasets can be found in the relevant references, and these datasets are available for download from the reference website.

4.2. Evaluation Metrics

To evaluate the clustering results, we use clustering accuracy (ACC) and normalized mutual information (NMI) to measure the performance of the proposed algorithm.

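Clustering accuracy is calculated as

$$
\mathrm{ACC}=\frac{1}{n}\sum_{i=1}^{n}\delta\big(s_i,\ \mathrm{map}(r_i)\big) \tag{28}
$$

where $r_i$ is the cluster label of sample $x_i$, $s_i$ is the true class label of the $i$-th sample, $n$ denotes the total number of samples, and $\delta(x,y)$ is the delta function: $\delta(x,y)=1$ if $x=y$ and $\delta(x,y)=0$ otherwise. Here, $\mathrm{map}(\cdot)$ is the mapping function that maps each cluster label $r_i$ to an actual class label; the best mapping can be found by the Kuhn–Munkres method [31]. NMI is defined as

$$
\mathrm{NMI}=\frac{\displaystyle\sum_{i=1}^{N}\sum_{j=1}^{N}n_{ij}\log\frac{n\,n_{ij}}{n_i\,\hat{n}_j}}{\sqrt{\Big(\displaystyle\sum_{i=1}^{N}n_i\log\frac{n_i}{n}\Big)\Big(\displaystyle\sum_{j=1}^{N}\hat{n}_j\log\frac{\hat{n}_j}{n}\Big)}} \tag{29}
$$

where $n_i$ is the size of the $i$-th cluster, $\hat{n}_j$ is the size of the $j$-th class, $n_{ij}$ is the number of samples in the intersection of the $i$-th cluster and the $j$-th class, and $N$ denotes the number of clusters. We perform 100 experiments for each target feature dimension and report the mean ACC and NMI values.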
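Both metrics can be sketched in a few lines of Python. For ACC, this sketch finds the best label mapping by brute force over permutations, which is adequate for the two-class datasets here; the Kuhn–Munkres method cited above solves the same assignment problem in polynomial time. For NMI, it uses the square-root normalized definition, assumed to match Equation (29). Function names are our own.

```python
import numpy as np
from itertools import permutations

def clustering_accuracy(true_labels, cluster_labels):
    """ACC: best one-to-one mapping of cluster labels to class labels (brute force),
    then the fraction of samples whose mapped label equals the true label.
    Assumes the number of clusters does not exceed the number of classes."""
    s = np.asarray(true_labels)
    c = np.asarray(cluster_labels)
    clusters = np.unique(c)
    best = 0
    for perm in permutations(np.unique(s), len(clusters)):
        mapping = dict(zip(clusters.tolist(), perm))
        mapped = np.array([mapping[x] for x in c.tolist()])
        best = max(best, int(np.sum(mapped == s)))
    return best / len(s)

def nmi(true_labels, cluster_labels):
    """Normalized mutual information with square-root normalization."""
    s = np.asarray(true_labels)
    c = np.asarray(cluster_labels)
    n = len(s)
    num = 0.0
    for i in np.unique(c):
        n_i = np.sum(c == i)                     # size of cluster i
        for j in np.unique(s):
            n_hat_j = np.sum(s == j)             # size of class j
            n_ij = np.sum((c == i) & (s == j))   # size of the intersection
            if n_ij > 0:
                num += n_ij * np.log(n * n_ij / (n_i * n_hat_j))
    h_c = sum(np.sum(c == i) * np.log(np.sum(c == i) / n) for i in np.unique(c))
    h_s = sum(np.sum(s == j) * np.log(np.sum(s == j) / n) for j in np.unique(s))
    if h_c == 0 or h_s == 0:                     # single cluster or class: report 0
        return 0.0
    return float(num / np.sqrt(h_c * h_s))

truth = [0, 0, 1, 1]
print(clustering_accuracy(truth, [1, 1, 0, 0]))  # 1.0
print(clustering_accuracy(truth, [1, 1, 0, 1]))  # 0.75
```

Both metrics are invariant to how cluster labels are numbered, which is why the mapping step (for ACC) and the contingency counts (for NMI) are needed.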

4.3. Parameter Selection

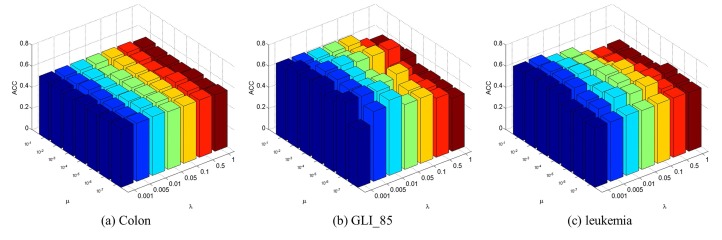

The RM-GNMF-based method involves two essential parameters: the regularization parameter $\lambda$ and the coefficient $\mu$, which determines the penalty for infeasibility.

We vary $\lambda$ and $\mu$ over predefined ranges and use cross-validation to select their best values. To show intuitively how $\lambda$ and $\mu$ affect the clustering accuracy of the RM-GNMF-based method, Figure 1 plots the variation in clustering accuracy as the two parameters change; its three subplots correspond to the three gene expression datasets. As Figure 1 shows, suitably chosen parameter values yield a higher ACC. As the regularization parameter changes, ACC varies relatively little, and the clustering accuracy is higher when its value is smaller. We therefore fix the selected values in the follow-up experiments.

Figure 1.

Influence of parameters on clustering accuracy.

4.4. Clustering Results

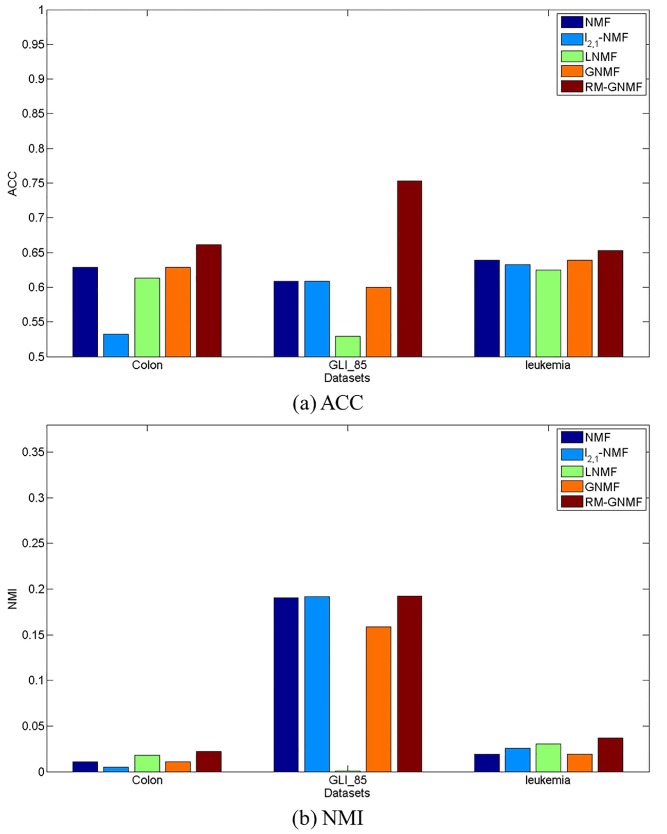

Table 2 reports the clustering results on the colon, GLI_85, and leukemia datasets; each value is the mean over 100 runs of the corresponding method.

Table 2.

Clustering results on different datasets. NMF: non-negative matrix factorization; GNMF: graph regularized non-negative matrix factorization; RM-GNMF: robust manifold non-negative matrix factorization; ACC: clustering accuracy; NMI: normalized mutual information.

| Methods | Colon ACC | Colon NMI | GLI_85 ACC | GLI_85 NMI | Leukemia ACC | Leukemia NMI |
|---|---|---|---|---|---|---|
| NMF | 0.6290 | 0.0110 | 0.6088 | 0.1906 | 0.6389 | 0.0193 |
| $L_{2,1}$-NMF | 0.5323 | 0.0048 | 0.6088 | 0.1916 | 0.6328 | 0.0258 |
| LNMF | 0.6129 | 0.0181 | 0.5294 | 0.0011 | 0.6250 | 0.0306 |
| GNMF | 0.6290 | 0.0110 | 0.6000 | 0.1584 | 0.6389 | 0.0193 |
| RM-GNMF | 0.6613 | 0.0220 | 0.7529 | 0.1925 | 0.6528 | 0.0369 |

The RM-GNMF-based method outperforms the original NMF-based method and achieves the best performance among all compared methods on all three datasets. Its clustering accuracies are 66.13%, 75.29%, and 65.28% for the colon, GLI_85, and leukemia datasets, respectively.

On every dataset, RM-GNMF attains the highest ACC and the highest NMI of the five methods (NMF, $L_{2,1}$-NMF, LNMF, GNMF, and RM-GNMF), with the largest accuracy gain on GLI_85.

As shown in Figure 2, the RM-GNMF-based method consistently yields better clustering results than the other NMF-based methods on the three original datasets.

Figure 2.

Clustering results on different datasets.

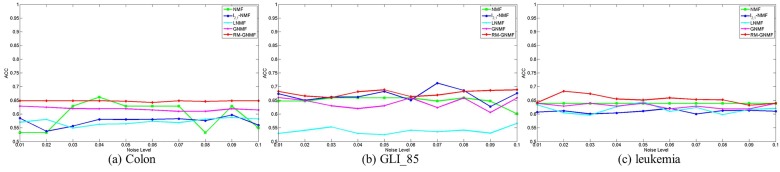

To demonstrate the robustness of our approach to data perturbations, we add uniform noise to the three gene expression datasets. A perturbed matrix $\tilde{Y}$ is generated by adding independent uniform noise:

$$
\tilde{Y}_{ij}=Y_{ij}+r_{ij}\,\max(Y) \tag{30}
$$

where $Y$ is the original matrix, each $r_{ij}$ is a random number drawn independently from a uniform distribution, and $\max(Y)$ is the maximum expression value of $Y$.
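A minimal sketch of this perturbation, assuming the entrywise form $\tilde{Y}_{ij}=Y_{ij}+r_{ij}\max(Y)$ with $r_{ij}$ drawn from $U[0,a)$. The upper bound `a` and the function name are hypothetical choices made for illustration.

```python
import numpy as np

def add_uniform_noise(Y, a, seed=0):
    """Return Y + R * max(Y), with R_ij drawn independently from U[0, a).

    Assumption: the interval [0, a) is a hypothetical choice for illustration.
    """
    rng = np.random.default_rng(seed)
    R = rng.uniform(0.0, a, size=Y.shape)
    return Y + R * Y.max()

Y = np.random.default_rng(3).random((6, 5))   # toy expression matrix
Y_noisy = add_uniform_noise(Y, a=0.1)
```

Scaling by $\max(Y)$ ties the noise level to the dynamic range of each dataset, so one value of `a` gives a comparable relative perturbation across datasets.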

The experimental results with added noise are shown in Figure 3. The clustering results of the RM-GNMF algorithm remain stable when noise is added, indicating that the algorithm is robust.

Figure 3.

Influence of noise on clustering accuracy.

To verify the results obtained in the experiments, we imported the clustering results of the compared methods into the STAC web platform (http://tec.citius.usc.es/stac/) to perform a statistical test. We selected the non-parametric Friedman test for multiple groups, with significance level $\alpha=0.05$. The results are presented in Table 3 and Table 4.
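As a sketch of what the Friedman test computes, the code below ranks the algorithms within each dataset (higher score gives a higher rank, matching Table 4, where the best method has the largest rank; ties receive average ranks) and forms $\chi^2_F=\frac{12N}{k(k+1)}\big[\sum_j \bar{R}_j^{2}-\frac{k(k+1)^{2}}{4}\big]$. The score table is hypothetical, and this is not the STAC platform's code. For a p-value, SciPy's `scipy.stats.friedmanchisquare` can be used instead.

```python
import numpy as np

def friedman_statistic(scores):
    """Friedman chi-square statistic for an (N datasets x k algorithms) score table."""
    scores = np.asarray(scores, dtype=float)
    N, k = scores.shape
    ranks = np.empty_like(scores)
    for b in range(N):
        row = scores[b]
        r = np.empty(k)
        r[np.argsort(row, kind="stable")] = np.arange(1, k + 1)
        for v in np.unique(row):             # average the ranks of tied scores
            tie = row == v
            r[tie] = r[tie].mean()
        ranks[b] = r
    mean_ranks = ranks.mean(axis=0)
    chi2 = 12.0 * N / (k * (k + 1)) * (np.sum(mean_ranks ** 2) - k * (k + 1) ** 2 / 4.0)
    return chi2, mean_ranks

scores = [[0.60, 0.62, 0.66],   # hypothetical scores: 3 datasets x 3 algorithms
          [0.55, 0.61, 0.75],
          [0.63, 0.64, 0.65]]
chi2, mean_ranks = friedman_statistic(scores)
print(chi2, mean_ranks.tolist())  # 6.0 [1.0, 2.0, 3.0]
```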

Table 3.

Friedman test (significance level of 0.05).

| Statistic | p-Value | Result |
|---|---|---|
| 7.00000 | 0.01003 | $H_0$ is rejected |

Table 4.

Ranking.

| Rank | Algorithm |
|---|---|
| 1.33333 | LNMF |
| 2.33333 | NMF |
| 2.66667 | $L_{2,1}$-NMF |
| 3.66667 | GNMF |
| 5.00000 | RM-GNMF |

These test results show that $H_0$ is rejected. Hence, the clustering results of the five algorithms differ significantly at the 0.05 level.

5. Conclusions

We have proposed the RM-GNMF-based method, which combines the $L_{2,1}$-norm with spectral-based manifold learning. The algorithm is suited to clustering cancer gene expression data because it exploits the geometric structure of the data space. Our tests on several gene expression profiling datasets of cancer patients consistently indicate that the RM-GNMF-based method achieves significant improvements over the NMF-based, $L_{2,1}$-NMF-based, LNMF-based, and GNMF-based methods in terms of cancer prediction accuracy and robustness.

Acknowledgments

This work was supported by the National Natural Science Foundation of China (Grant Nos. 61379153, 61572529, 61572284).

Appendix A

Lemma A1.

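Given a matrix $A$ and a positive scalar $\lambda$, $Z^{*}$ is the optimal solution of

$$
\min_{Z}\ \frac{1}{2}\|Z-A\|_F^{2}+\lambda\|Z\|_{2,1} \tag{A1}
$$

and the $i$-th column of $Z^{*}$ can be calculated as

$$
z_i^{*}=\begin{cases}\Big(1-\dfrac{\lambda}{\|a_i\|_2}\Big)a_i, & \text{if } \|a_i\|_2>\lambda,\\[4pt] 0, & \text{otherwise.}\end{cases} \tag{A2}
$$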

Proof.

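The objective function in Equation (A1) is equivalent to

$$
\min_{Z}\ \sum_{i}\Big(\frac{1}{2}\|z_i-a_i\|_2^{2}+\lambda\|z_i\|_2\Big) \tag{A3}
$$

which can be solved in a decoupled manner, one column at a time:

$$
\min_{z_i}\ \frac{1}{2}\|z_i-a_i\|_2^{2}+\lambda\|z_i\|_2 \tag{A4}
$$

Taking the subgradient with respect to $z_i$, we get

$$
z_i-a_i+\lambda r=0 \tag{A5}
$$

where $r$ is a subgradient vector of $\|z_i\|_2$, i.e., $r=z_i/\|z_i\|_2$ if $z_i\neq 0$ and $\|r\|_2\le 1$ otherwise. For $z_i=0$, we get

$$
\|a_i\|_2=\lambda\|r\|_2\le\lambda \tag{A6}
$$

so $z_i=0$ is optimal whenever $\|a_i\|_2\le\lambda$. For $z_i\neq 0$, we get

$$
z_i-a_i+\lambda\frac{z_i}{\|z_i\|_2}=0 \tag{A7}
$$

Hence $z_i$ is parallel to $a_i$:

$$
z_i=\gamma\,a_i \tag{A8}
$$

where $\gamma>0$. Plugging Equation (A8) into Equation (A7) gives $\gamma=1-\lambda/\|a_i\|_2$, i.e., $\|z_i\|_2=\|a_i\|_2-\lambda$, which is positive only when $\|a_i\|_2>\lambda$. Substituting this back into Equation (A8) and combining with Equation (A6), we obtain

$$
z_i^{*}=\begin{cases}\Big(1-\dfrac{\lambda}{\|a_i\|_2}\Big)a_i, & \text{if } \|a_i\|_2>\lambda,\\[4pt] 0, & \text{otherwise.}\end{cases} \tag{A9}
$$

□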

Author Contributions

Rong Zhu and Jin-Xing Liu conceived and designed the experiments; Rong Zhu performed the experiments; Rong Zhu and Yuan-Ke Zhang analyzed the data; Rong Zhu and Ying Guo wrote the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Golub T.R., Slonim D.K., Tamayo P., Huard C., Gaasenbeek M., Mesirov J.P., Coller H., Loh M.L., Downing J.R., Caligiuri M.A., et al. Molecular classification of cancer: Class discovery and class prediction by gene expression monitoring. Science. 1999;286:531–537. doi: 10.1126/science.286.5439.531.

2. Jiang D., Tang C., Zhang A. Cluster analysis for gene expression data: Survey. IEEE Trans. Knowl. Data Eng. 2004;16:1370–1386. doi: 10.1109/TKDE.2004.68.

3. Devarajan K. Nonnegative matrix factorization: An analytical and interpretive tool in computational biology. PLoS Comput. Biol. 2008;4:e1000029. doi: 10.1371/journal.pcbi.1000029.

4. Luo F., Khan L., Bastani F., Yen I.L., Zhou J. A dynamically growing self-organizing tree (DGSOT) for hierarchical clustering gene expression profiles. Bioinformatics. 2004;20:2605–2617. doi: 10.1093/bioinformatics/bth292.

5. Lee D.D., Seung H.S. Learning the parts of objects by non-negative matrix factorization. Nature. 1999;401:788. doi: 10.1038/44565.

6. Lee D.D., Seung H.S. Algorithms for non-negative matrix factorization. In: Advances in Neural Information Processing Systems. The MIT Press; Cambridge, MA, USA: 2001. pp. 556–562.

7. Brunet J.P., Tamayo P., Golub T.R., Mesirov J.P. Metagenes and molecular pattern discovery using matrix factorization. Proc. Natl. Acad. Sci. USA. 2004;101:4164–4169. doi: 10.1073/pnas.0308531101.

8. Li T., Ding C.H. Nonnegative Matrix Factorizations for Clustering: A Survey. In: Data Clustering: Algorithms and Applications. CRC Press; Boca Raton, FL, USA: 2013.

9. Ding C., He X., Simon H.D. On the equivalence of nonnegative matrix factorization and spectral clustering. In: Proceedings of the 2005 SIAM International Conference on Data Mining. SIAM; Philadelphia, PA, USA: 2005. pp. 606–610.

10. Kuang D., Ding C., Park H. Symmetric nonnegative matrix factorization for graph clustering. In: Proceedings of the 2012 SIAM International Conference on Data Mining. SIAM; Philadelphia, PA, USA: 2012. pp. 106–117.

11. Akata Z., Thurau C., Bauckhage C. Non-negative matrix factorization in multimodality data for segmentation and label prediction. In: Proceedings of the 16th Computer Vision Winter Workshop; Mitterberg, Austria. 2–4 February 2011.

12. Liu J., Wang C., Gao J., Han J. Multi-view clustering via joint nonnegative matrix factorization. In: Proceedings of the 2013 SIAM International Conference on Data Mining. SIAM; Philadelphia, PA, USA: 2013. pp. 252–260.

13. Singh A.P., Gordon G.J. Relational learning via collective matrix factorization. In: Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining; Las Vegas, NV, USA. 24–27 August 2008. pp. 650–658.

14. Huang Z., Zhou A., Zhang G. Non-negative matrix factorization: A short survey on methods and applications. In: Computational Intelligence and Intelligent Systems. Springer; Berlin, Germany: 2012. pp. 331–340.

15. Wang Y.X., Zhang Y.J. Nonnegative matrix factorization: A comprehensive review. IEEE Trans. Knowl. Data Eng. 2013;25:1336–1353. doi: 10.1109/TKDE.2012.51.

16. Kim J., He Y., Park H. Algorithms for nonnegative matrix and tensor factorizations: A unified view based on block coordinate descent framework. J. Glob. Optim. 2014;58:285–319. doi: 10.1007/s10898-013-0035-4.

17. Cai D., He X., Han J., Huang T.S. Graph regularized nonnegative matrix factorization for data representation. IEEE Trans. Pattern Anal. Mach. Intell. 2011;33:1548–1560. doi: 10.1109/TPAMI.2010.231.

18. Kim H., Park H. Sparse non-negative matrix factorizations via alternating non-negativity-constrained least squares for microarray data analysis. Bioinformatics. 2007;23:1495–1502. doi: 10.1093/bioinformatics/btm134.

19. Pascual-Montano A., Carazo J.M., Kochi K., Lehmann D., Pascual-Marqui R.D. Nonsmooth nonnegative matrix factorization (nsNMF). IEEE Trans. Pattern Anal. Mach. Intell. 2006;28:403–415. doi: 10.1109/TPAMI.2006.60.

20. Li S.Z., Hou X.W., Zhang H.J., Cheng Q.S. Learning spatially localized, parts-based representation. In: Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR); Kauai, HI, USA. 8–14 December 2001.

21. Paatero P., Tapper U. Positive matrix factorization: A non-negative factor model with optimal utilization of error estimates of data values. Environmetrics. 1994;5:111–126. doi: 10.1002/env.3170050203.

22. Chung F.R. Spectral Graph Theory. American Mathematical Soc.; Providence, RI, USA: 1997. Number 92.

23. Nie F., Huang H., Cai X., Ding C.H. Efficient and robust feature selection via joint ℓ2,1-norms minimization. In: Advances in Neural Information Processing Systems. The MIT Press; Cambridge, MA, USA: 2010. pp. 1813–1821.

24. Argyriou A., Evgeniou T., Pontil M. Multi-task feature learning. In: Advances in Neural Information Processing Systems. The MIT Press; Cambridge, MA, USA: 2007. pp. 41–48.

25. Guyon I., Weston J., Barnhill S., Vapnik V. Gene selection for cancer classification using support vector machines. Mach. Learn. 2002;46:389–422. doi: 10.1023/A:1012487302797.

26. Huang H., Ding C. Robust tensor factorization using R1 norm. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Anchorage, AK, USA. 23–28 June 2008.

27. Tao H., Hou C., Nie F., Zhu J., Yi D. Scalable multi-view semi-supervised classification via adaptive regression. IEEE Trans. Image Process. 2017;26:4283–4296. doi: 10.1109/TIP.2017.2717191.

28. Shawe-Taylor J., Cristianini N., Kandola J.S. On the concentration of spectral properties. In: Advances in Neural Information Processing Systems. The MIT Press; Cambridge, MA, USA: 2002. pp. 511–517.

29. Yin M., Gao J., Lin Z. Laplacian regularized low-rank representation and its applications. IEEE Trans. Pattern Anal. Mach. Intell. 2016;38:504–517. doi: 10.1109/TPAMI.2015.2462360.

30. Li J., Cheng K., Wang S., Morstatter F., Robert T., Tang J., Liu H. Feature Selection: A Data Perspective. arXiv. 2017. arXiv:1601.07996.

31. Lovász L., Plummer M.D. Matching Theory. Volume 367. American Mathematical Soc.; Providence, RI, USA: 2009.