Abstract

We show that in a common high-dimensional covariance model, the choice of loss function has a profound effect on optimal estimation.

In an asymptotic framework based on the Spiked Covariance model and use of orthogonally invariant estimators, we show that optimal estimation of the population covariance matrix boils down to design of an optimal shrinker η that acts elementwise on the sample eigenvalues. Indeed, to each loss function there corresponds a unique admissible eigenvalue shrinker η* dominating all other shrinkers. The shape of the optimal shrinker is determined by the choice of loss function and, crucially, by inconsistency of both eigenvalues and eigenvectors of the sample covariance matrix.

Details of these phenomena and closed form formulas for the optimal eigenvalue shrinkers are worked out for a menagerie of 26 loss functions for covariance estimation found in the literature, including the Stein, Entropy, Divergence, Fréchet, Bhattacharya/Matusita, Frobenius Norm, Operator Norm, Nuclear Norm and Condition Number losses.

Keywords: Covariance Estimation, Precision Estimation, Optimal Nonlinearity, Stein Loss, Entropy Loss, Divergence Loss, Fréchet Distance, Bhattacharya/Matusita Affinity, Quadratic Loss, Condition Number Loss, High-Dimensional Asymptotics, Spiked Covariance, Principal Component Shrinkage

1. Introduction

Suppose we observe n independent p-dimensional Gaussian vectors $X_1, \dots, X_n \sim N_p(0, \Sigma)$, with Σ = Σp the underlying p-by-p population covariance matrix. To estimate Σ, we form the empirical (sample) covariance matrix $S = S_{n,p} = \frac{1}{n}\sum_{i=1}^{n} X_i X_i'$; this is the maximum likelihood estimator. Stein [1, 2] observed that the maximum likelihood estimator S ought to be improvable by eigenvalue shrinkage.

Write $S = V\Lambda V'$ for the eigendecomposition of S, where V is orthogonal and the diagonal matrix $\Lambda = \operatorname{diag}(\lambda_1, \dots, \lambda_p)$ contains the empirical eigenvalues. Stein [2] proposed to shrink the eigenvalues by applying a specific nonlinear mapping φ, producing the estimate $\hat\Sigma_\varphi = V\varphi(\Lambda)V'$, where φ maps the space of positive diagonal matrices onto itself. In the ensuing half century, research on eigenvalue shrinkers has flourished, producing an extensive literature. We can point here only to a fraction, with pointers organized into early decades [3, 4, 5, 6, 7, 8], the middle decades [9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19], and the last decade [20, 21, 22, 23, 24, 25, 26, 27, 28, 29]. Such papers typically choose some loss function $L_p : \mathcal{S}_p^+ \times \mathcal{S}_p^+ \to [0, \infty)$, where $\mathcal{S}_p^+$ is the space of positive semidefinite p-by-p matrices, and develop a shrinker η with "favorable" risk $\mathbb{E}\, L_p(\Sigma, \hat\Sigma_\eta)$.

In high dimensional problems, p and n are often of comparable magnitude. There, the maximum likelihood estimator is no longer a reasonable choice for covariance estimation and the need to shrink becomes acute.

In this paper, we consider a popular large n, large p setting with p comparable to n, and a set of assumptions about Σ known as the Spiked Covariance Model [30]. We study a variety of loss functions derived from or inspired by the literature, and show that to each "reasonable" nonlinearity η there corresponds a well-defined asymptotic loss.

In the sibling problem of matrix denoising under a similar setting, it has been shown that there exists a unique asymptotically admissible shrinker [31, 32]. The same phenomenon is shown to exist here: for many different loss functions, we show that there exists a unique optimal nonlinearity η*, which we explicitly provide. Perhaps surprisingly, η* is the only asymptotically admissible nonlinearity, namely, it offers equal or better asymptotic loss than that of any other choice of η, across all possible Spiked Covariance models.

1.1. Estimation in the Spiked Covariance Model

Consider a sequence of covariance estimation problems, satisfying two basic assumptions.

[Asy(γ)] The number of observations n and the number of variables pn in the n-th problem follow the proportional-growth limit pn/n → γ as n → ∞, for a certain 0 < γ ≤ 1.

Denote the population and sample covariances in the n-th problem by Σ = Σpn and S = Sn,pn and assume that the eigenvalues ℓi of Σpn satisfy:

[Spike(ℓ1, …, ℓr)] The r “spikes” ℓ1 > … > ℓr ≥ 1 are fixed independently of n and pn, and ℓr+1 = … = ℓpn = 1.

The spiked model exhibits three important phenomena, not seen in classical fixed-p asymptotics, that play an essential role in the construction of optimal estimators. Drawing on results from [33, 34, 35, 36, 37, 38], we highlight:

a. Eigenvalue spreading. Consider model [Asy(γ)] in the null case ℓ1 = … = ℓr = 1. The empirical distribution of the sample eigenvalues λ1n, …, λpn converges as n → ∞ to a non-degenerate absolutely continuous distribution, the Marcenko-Pastur or 'quarter-circle' law [33]. The distribution, or 'bulk', is supported on a single interval, whose limiting 'bulk edges' are given by

$$\lambda_\pm(\gamma) = (1 \pm \sqrt{\gamma})^2. \qquad (1.1)$$

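b. Top eigenvalue bias. Consider models [Asy(γ)] and [Spike(ℓ1, …, ℓr)]. For i = 1, …, r, the leading sample eigenvalues satisfy

$$\lambda_{i,n} \xrightarrow{a.s.} \lambda(\ell_i), \qquad (1.2)$$

where the 'biasing' function is

$$\lambda(\ell) = \begin{cases} \ell + \dfrac{\gamma\,\ell}{\ell - 1}, & \ell > \ell_+(\gamma), \\[4pt] (1 + \sqrt{\gamma})^2, & \ell \le \ell_+(\gamma), \end{cases} \qquad (1.3)$$

and ℓ+(γ) is the Baik–Ben Arous–Péché transition point

$$\ell_+(\gamma) = 1 + \sqrt{\gamma}. \qquad (1.4)$$

Thus the empirical eigenvalues λi are shifted upwards from their theoretical counterparts ℓi by an asymptotically predictable amount, of a size that exceeds γ even for very large signal strengths ℓi (for ℓi > ℓ+(γ) the shift is γℓi/(ℓi − 1) > γ).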

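c. Top eigenvector inconsistency. Again consider models [Asy(γ)] and [Spike(ℓ1, …, ℓr)], noting that ℓ1 > … > ℓr are distinct. The angles between the sample eigenvectors v1n, …, vpn and the corresponding "true" population eigenvectors u1n, …, upn have non-zero limits:

$$|\langle u_{i,n}, v_{i,n} \rangle| \xrightarrow{a.s.} c(\ell_i), \qquad i = 1, \dots, r, \qquad (1.5)$$

where the cosine function is given by

$$c^2(\ell) = \begin{cases} \dfrac{1 - \gamma/(\ell - 1)^2}{1 + \gamma/(\ell - 1)}, & \ell > \ell_+(\gamma), \\[4pt] 0, & \ell \le \ell_+(\gamma). \end{cases} \qquad (1.6)$$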
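The limits (1.1)–(1.6) are easy to check by simulation. The following is a minimal NumPy sketch, not taken from the paper's code supplement: the helper names `biasing`, `cosine_limit` and `simulate_top` are our own, and the simulation assumes zero-mean Gaussian data with Σ = diag(ℓ, 1, …, 1), so that u1 = e1. For moderate n and p the printed sample quantities should be close to, but not exactly equal to, λ(ℓ) and c(ℓ).

```python
import numpy as np

def biasing(ell, gamma):
    """Limit lambda(ell) of a top sample eigenvalue, eq. (1.3)."""
    if ell <= 1.0 + np.sqrt(gamma):                 # at or below ell_+(gamma), eq. (1.4)
        return (1.0 + np.sqrt(gamma)) ** 2          # sticks to the bulk edge, eq. (1.1)
    return ell + gamma * ell / (ell - 1.0)

def cosine_limit(ell, gamma):
    """Limit c(ell) of |<u_i, v_i>|, eqs. (1.5)-(1.6); zero below the transition point."""
    if ell <= 1.0 + np.sqrt(gamma):
        return 0.0
    c2 = (1.0 - gamma / (ell - 1.0) ** 2) / (1.0 + gamma / (ell - 1.0))
    return float(np.sqrt(c2))

def simulate_top(ell, n, p, seed=0):
    """Draw n vectors from N_p(0, Sigma) with Sigma = diag(ell, 1, ..., 1), so u_1 = e_1;
    return the top sample eigenvalue and |<u_1, v_1>|."""
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n, p))
    X[:, 0] *= np.sqrt(ell)               # inflate the variance along u_1 = e_1
    S = X.T @ X / n                       # sample covariance (mean known to be zero)
    evals, evecs = np.linalg.eigh(S)      # eigenvalues in ascending order
    return evals[-1], abs(evecs[0, -1])

ell, n, p = 4.0, 1000, 500                # gamma = p / n = 0.5
gamma = p / n
lam1, cos1 = simulate_top(ell, n, p)
print(f"top eigenvalue {lam1:.3f}  vs  lambda(ell) = {biasing(ell, gamma):.3f}")
print(f"|<u1, v1>|     {cos1:.3f}  vs  c(ell)      = {cosine_limit(ell, gamma):.3f}")
```

Because these are almost-sure limits, the agreement improves as n and p grow with p/n held fixed.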

Loss functions and optimal estimation. Now consider a class of estimators for the population covariance Σ, based on individual shrinkage of the sample eigenvalues. Specifically,

$$\hat\Sigma_\eta = \sum_{i=1}^{p} \eta(\lambda_i)\, v_i v_i', \qquad (1.7)$$

where vi is the sample eigenvector with sample eigenvalue λi and η(λ) is a scalar nonlinearity, η : ℝ+ → [1, ∞), so that the same function acts on each sample eigenvalue. While this appears to be a significant restriction relative to Stein's use of vector functions φ [2], the discussion in Section 8 shows that nothing is lost in our setting by the restriction to scalar shrinkers.

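Consider a family of loss functions $L = \{L_p\}_{p=1}^{\infty}$ and a fixed nonlinearity η : [0, ∞) → ℝ. Define the asymptotic loss relative to L of the shrinkage estimator $\hat\Sigma_\eta$ in model [Spike(ℓ1, …, ℓr)] by

$$L_\infty(\ell_1, \dots, \ell_r \mid \eta) = \lim_{n \to \infty} L_{p_n}\big(\Sigma_{p_n}, \hat\Sigma_\eta(S_{n,p_n})\big), \qquad (1.8)$$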

assuming such a limit exists. If a nonlinearity η* satisfies

$$L_\infty(\ell_1, \dots, \ell_r \mid \eta^*) \le L_\infty(\ell_1, \dots, \ell_r \mid \eta) \qquad (1.9)$$

for any other nonlinearity η, any r and any spikes ℓ1, …, ℓr, and if for any η ≠ η* the inequality is strict at some choice of ℓ1, …, ℓr, then we say that η* is the unique asymptotically admissible nonlinearity (nicknamed "optimal") for the loss sequence L.

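In constructing estimators, it is natural to expect that the effect of the biasing function λ(ℓ) in (1.3) might be undone simply by applying its inverse function ℓ(λ), given for λ > λ+(γ) by

$$\ell(\lambda) = \frac{(\lambda + 1 - \gamma) + \sqrt{(\lambda + 1 - \gamma)^2 - 4\lambda}}{2}. \qquad (1.10)$$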
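To see why (1.10) inverts (1.3), substitute λ = ℓ + γℓ/(ℓ − 1) and clear denominators: ℓ² − (λ + 1 − γ)ℓ + λ = 0. Its two roots are ℓ and λ/ℓ = 1 + γ/(ℓ − 1), and ℓ is the larger one exactly when ℓ > 1 + √γ, which is why (1.10) takes the '+' root. A small Python sketch of the round trip follows; the function names are illustrative only.

```python
import math

def biasing(ell, gamma):
    """Forward map lambda(ell) of eq. (1.3), valid for ell > 1 + sqrt(gamma)."""
    return ell + gamma * ell / (ell - 1.0)

def debias(lam, gamma):
    """Inverse map ell(lambda) of eq. (1.10): the larger root of
    ell**2 - (lam + 1 - gamma) * ell + lam = 0, defined for lam > lambda_+(gamma)."""
    if lam <= (1.0 + math.sqrt(gamma)) ** 2:
        raise ValueError("lambda must lie above the bulk edge lambda_+(gamma)")
    b = lam + 1.0 - gamma
    return (b + math.sqrt(b * b - 4.0 * lam)) / 2.0

gamma = 0.3
for ell in (2.0, 5.0, 50.0):
    lam = biasing(ell, gamma)
    print(f"ell = {ell:5.1f} -> lambda = {lam:.4f} -> ell(lambda) = {debias(lam, gamma):.4f}")
```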

However, eigenvector inconsistency makes the situation more complicated (and interesting!), as we illustrate using Figure 1. Focus on the plane spanned by u1, the top population eigenvector, and by v1, its sample counterpart. We represent the top rank-one component of Σ by the vector ℓ1u1. The corresponding top rank-one component of S is represented by λ1v1. If we apply the inverse function (1.10) to λ1, we obtain ℓ(λ1)v1. Since v1 is not collinear with u1, there is a non-vanishing error that remains, even though ℓ(λ1) → ℓ1 almost surely. As the picture suggests, it is quite possible that a different amount of shrinkage, η(λ1) < ℓ(λ1), will lead to smaller error. Moreover, we will see that the optimal choice of η depends greatly on the particular error measure that is chosen.
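The claim can be made concrete in the limiting 2 × 2 picture used later in Lemma 1, with A = diag(ℓ, 1) representing Σ and B = I + (η − 1)ww′, w = (c, s), representing the estimate. This sketch assumes squared Frobenius error as the error measure (one choice among the losses discussed later) and takes γ = 1, ℓ = 4, so that c² = 2/3: debiasing (η = ℓ) gives error 6, while the smaller value η = ℓc² + s² = 3 gives error 5.

```python
import numpy as np

def blocks(ell, eta, c):
    """2x2 blocks A = diag(ell, 1) and B = I + (eta - 1) w w' with w = (c, s),
    representing Sigma and the estimate in the plane spanned by u1 and v1."""
    s = np.sqrt(1.0 - c ** 2)
    w = np.array([c, s])
    return np.diag([ell, 1.0]), np.eye(2) + (eta - 1.0) * np.outer(w, w)

gamma, ell = 1.0, 4.0
c2 = (1.0 - gamma / (ell - 1.0) ** 2) / (1.0 + gamma / (ell - 1.0))   # eq. (1.6)
c = np.sqrt(c2)
candidates = {"debias: eta = ell": ell, "shrink: eta = ell*c^2 + s^2": ell * c2 + (1.0 - c2)}
errors = {}
for label, eta in candidates.items():
    A, B = blocks(ell, eta, c)
    errors[label] = float(np.sum((A - B) ** 2))   # squared Frobenius error
    print(f"{label:28s} squared Frobenius error = {errors[label]:.4f}")
```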

Figure 1:

Shrinking empirical eigenvalue λ1 to a value η(λ1) that is smaller than the inverse function ℓ(λ1) may reduce the error of estimation.

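To give the flavor of results to be developed systematically later, we now look at four error measures in common use. The first three, based on the operator, Frobenius and nuclear norms, use the singular values σj of Δ = A − B:

$$L^{O,1}(A,B) = \|A - B\|_{op} = \max_j \sigma_j, \qquad L^{F,1}(A,B) = \|A - B\|_F^2 = \sum_j \sigma_j^2, \qquad L^{N,1}(A,B) = \|A - B\|_* = \sum_j \sigma_j. \qquad (1.11)$$

The fourth is Stein's loss, $L^{st}(A,B) = \operatorname{tr}(A^{-1}B - I) - \log\det(A^{-1}B)$, widely studied in covariance estimation [1, 9, 39].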

For convenience, we begin with the single spike model [Spike(ℓ)], so that r = 1 and $\Sigma = I + (\ell - 1)u_1u_1'$. When η is continuous, the losses have a deterministic asymptotic limit L∞(ℓ | η), defined in (1.8).

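For many losses, including (1.11), this deterministic limiting loss has a simple form, and we can evaluate, often analytically, the optimal shrinkage function, namely the shrinkage function satisfying (1.9). For example, writing ℓ = ℓ(λ), c = c(ℓ(λ)) and s = s(ℓ(λ)), for the four popular loss functions (1.11) we find that on λ > λ+(γ) the corresponding four optimal shrinkers are

$$\eta^{O,1} = \ell, \qquad \eta^{F,1} = \ell c^2 + s^2, \qquad \eta^{N,1} = \max\big(1 + (\ell - 1)(1 - 2s^2),\, 1\big), \qquad \eta^{st} = \frac{\ell}{c^2 + \ell s^2}, \qquad (1.12)$$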

where s² = 1 − c². Figure 2 shows these four optimal shrinkers as a function of the sample eigenvalue λ. These are just four examples; the full list of optimal shrinkers we discover in this paper appears in Table 2 below. In all cases, η*(λ) = 1 for λ ≤ λ+(γ). Figure 3 in Section 6 below shows the full list of optimal shrinkers when γ = 1.

Figure 2:

Vertical axis: optimal shrinkers η* from (1.12), shown as functions η*(ℓ(λ)) of the empirical eigenvalue λ, horizontal axis. Here γ = lim pn/n = 1, so λ+(γ) = 4. (Color online.)

Table 2:

Optimal shrinkers η*(λ) for 18 of the loss families L discussed. Values shown are shrinkers for λ > λ+(γ). All shrinkers obey η*(λ) = 1 for λ ≤ λ+(γ). Here, ℓ, c and s depend on λ (and implicitly on γ) according to (1.10), (1.6) and s² = 1 − c². In cases marked "N/A" the optimal shrinker does not seem to admit a simple closed form, but can be easily calculated numerically.

| Pivot | Frobenius | Operator | Nuclear |

| A − B | ℓc² + s² | ℓ | max(1 + (ℓ − 1)(1 − 2s²), 1) |

| A⁻¹ − B⁻¹ | ℓ/(c² + ℓs²) | ℓ | max(ℓ/(c² + (2ℓ − 1)s²), 1) |

| A⁻¹B − I | | N/A | |

| B⁻¹A − I | | N/A | |

| A^(−1/2) B A^(−1/2) − I | | | |

| | St | Ent | Div |

| Stein | ℓ/(c² + ℓs²) | ℓc² + s² | √(ℓ(ℓc² + s²)/(c² + ℓs²)) |

| Fréchet | | | |

| Affine | | | |

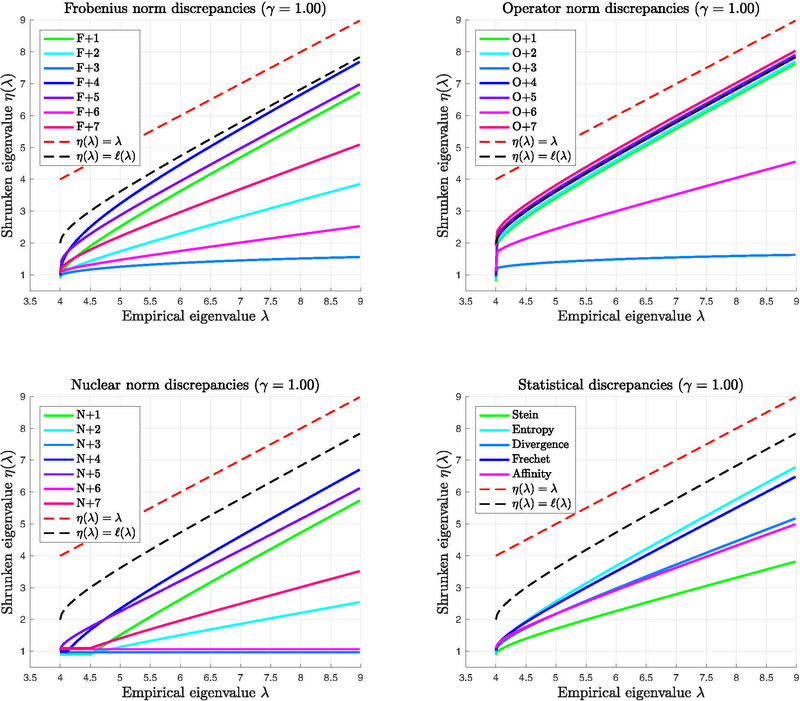

Figure 3:

Optimal Shrinkers for 26 Component Loss Functions for γ = 1 and 4 ≤ λ ≤ 10. Upper Left: Frobenius-norm-based losses; Lower Left: Nuclear-norm-based losses; Upper Right: Operator-norm-based losses; Lower Right: Statistical Discrepancies. (Color online; curves jittered in vertical axis to avoid overlap.) The supplemental article [40] contains a larger version of these plots. Reproducibility advisory: The code supplement [41] includes a script that reproduces any one of these individual curves.

The main conclusion is that the optimal shrinkage function depends strongly on the loss function chosen. The operator norm shrinker $\eta^{O,1}$ simply inverts the biasing function λ(ℓ), while the other functions shrink by much larger, and very different, amounts, with $\eta^{st}$ typically shrinking most. There are also important qualitative differences in the optimal shrinkers: $\eta^{O,1}$ is discontinuous at the bulk edge λ+(γ), where it jumps from 1 to ℓ+(γ) = 1 + √γ. The others are continuous, but $\eta^{N,1}$ has the additional feature that it shrinks a neighborhood of the bulk to 1.

Remark. The optimal shrinker also depends on γ, so we might write η*(λ, γ). In model [Asy(γ)], one can use the same γ for each problem size n. Alternatively, in the n-th problem, one might use γn = pn/n. The former choice is simpler, as η* can be regarded as a univariate function of λ, and it is the one we make in Sections 1–6. The latter choice is preferable technically, and perhaps also in practice, when one has p and n, but not γ. It does, however, require us to treat η(λ, γ) as a bivariate function; see Section 7.

1.2. Some key observations

The sections to follow construct a framework for evaluating and optimizing the asymptotic loss (1.8). We highlight here some observations that will play an important role. First, let us introduce a useful modification of (1.7) to a rank-aware shrinkage rule:

$$\hat\Sigma_{\eta,r} = \sum_{i=1}^{r} \eta(\lambda_i)\, v_i v_i' + \sum_{i=r+1}^{p} v_i v_i', \qquad (1.13)$$

where the dimension r of the spiked model is taken as known. While our main results concern estimators that naturally do not require r to be known in advance, it will be easier conceptually and technically to analyze rank-aware shrinkage rules as a preliminary step.
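A minimal NumPy sketch contrasting (1.7) and (1.13). The shrinker `eta_hyp` is a hypothetical neighborhood bulk shrinker chosen only for illustration (it equals 1 up to λ+(γ) + 0.5 and applies the inverse map (1.10) beyond that); with a single well-separated spike, the noise eigenvalues stay below that threshold and the two rules produce the same matrix, in line with [Obs. 4].

```python
import numpy as np

def shrink_estimate(S, eta):
    """Estimator (1.7): apply the scalar shrinker eta to every sample eigenvalue."""
    lam, V = np.linalg.eigh(S)
    d = np.array([eta(x) for x in lam])
    return (V * d) @ V.T                     # V diag(d) V'

def rank_aware_estimate(S, eta, r):
    """Rank-aware rule (1.13): shrink the top r sample eigenvalues, set the rest to 1."""
    lam, V = np.linalg.eigh(S)               # eigenvalues in ascending order
    d = np.ones_like(lam)
    d[-r:] = [eta(x) for x in lam[-r:]]
    return (V * d) @ V.T

# Single-spike example with Sigma = diag(ell, 1, ..., 1).
n, p, ell = 400, 200, 6.0
gamma = p / n
lam_plus = (1 + np.sqrt(gamma)) ** 2
rng = np.random.default_rng(0)
X = rng.standard_normal((n, p))
X[:, 0] *= np.sqrt(ell)
S = X.T @ X / n

def eta_hyp(x, margin=0.5):
    """Hypothetical neighborhood bulk shrinker: 1 up to lam_plus + margin,
    otherwise the inverse map ell(lambda) of (1.10)."""
    if x <= lam_plus + margin:
        return 1.0
    b = x + 1 - gamma
    return (b + np.sqrt(b * b - 4 * x)) / 2

full = shrink_estimate(S, eta_hyp)
aware = rank_aware_estimate(S, eta_hyp, r=1)
print("max |difference| between (1.7) and (1.13):", np.abs(full - aware).max())
```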

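[Obs. 1] Simultaneous block diagonalization (Lemmas 1 and 5). There exists a (random) basis W such that, for the rank-aware estimator (1.13),

$$W'\Sigma W = (A_1 \oplus \cdots \oplus A_k) \oplus I_{p-2r}, \qquad W'\hat\Sigma_{\eta,r} W = (B_1 \oplus \cdots \oplus B_k) \oplus I_{p-2r},$$

where Ai and Bi are square blocks of equal size di, and Σdi = 2r. (Here and below, A ⊕ B denotes a block-diagonal matrix with blocks A and B.)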

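[Obs. 2] Decomposable loss functions. The loss functions (1.11) and many others studied below satisfy

$$L_p(A_1 \oplus \cdots \oplus A_k,\; B_1 \oplus \cdots \oplus B_k) = \sum_{i=1}^{k} L_{d_i}(A_i, B_i),$$

or the corresponding equality with sum replaced by max.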

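[Obs. 3] Asymptotic deterministic loss (Lemmas 3 and 7). For rank-aware estimators, when η and L are suitably continuous, almost surely

$$\lim_{n \to \infty} L_{p_n}(\Sigma_{p_n}, \hat\Sigma_{\eta,r}) = \sum_{i=1}^{r} L_2\big(A(\ell_i),\, B(\eta(\lambda(\ell_i)), c(\ell_i))\big),$$

or the corresponding maximum for max-decomposable losses, where A(ℓ) and B(η, c) are the 2 × 2 matrices of (2.5).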

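[Obs. 4] Asymptotic equivalence of losses (Proposition 2). Conclusions derived for rank-aware estimators (1.13) carry over to the original estimators (1.7) because, under suitable conditions,

$$\lim_{n \to \infty} \Big[ L_{p_n}(\Sigma_{p_n}, \hat\Sigma_\eta) - L_{p_n}(\Sigma_{p_n}, \hat\Sigma_{\eta,r}) \Big] = 0 \quad \text{a.s.}$$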

This relies on the fact that in the [Spike(ℓ1, …, ℓr)] model, the sample noise eigenvalues λin, i ≥ r+1 “stick to the bulk” in an appropriate sense.

1.3. Organization of the paper

For simplicity of exposition, we assume a single spike, r = 1, in the first half of the paper. [Obs. 1], [Obs. 2] and [Obs. 3] are developed respectively in Sections 2, 3 and 4, arriving at an explicit formula for the asymptotic loss of a shrinker. Section 5 illustrates the assumptions with our list of 26 decomposable matrix loss functions. In Section 6 we use the formula to characterize the asymptotically unique admissible nonlinearity for any decomposable loss, provide an algorithm for computing the optimal nonlinearity, and provide analytical formulas for many of the 26 losses. Section 7 extends the results to the general case where r > 1 spikes are present. We develop [Obs. 4], remove the rank-aware assumption and explore some new phenomena that arise in cases where the optimal shrinker turns out to be discontinuous. In Section 8 we show, at least for Frobenius and Stein losses, that our optimal univariate shrinkage estimator, which applies the same scalar function to each sample eigenvalue, in fact asymptotically matches the performance of the best orthogonally-equivariant covariance estimator under assumption [Spike(ℓ1, …, ℓr)]. Section 9 extends to the more general spiked model with ℓr+1 = … = ℓp = σ², for σ > 0 known or unknown. Section 10 discusses our results in light of the high-dimensional covariance estimation work of El Karoui [24] and Ledoit and Wolf [26]. Some proofs and calculations are deferred to the supplementary article [40], where we also evaluate and document the strong signal (large-ℓ) asymptotics of the optimal shrinkage estimators, and the asymptotic percent improvement over naive hard thresholding of the sample covariance eigenvalues. Additional technical details and software are provided in the Code Supplement available online as a permanent URL from the Stanford Digital Repository [41].

2. Simultaneous Block-Diagonalization

We first develop [Obs. 1] in the simplest case, r = 1, assuming a rank-aware shrinker. In general, the estimator $\hat\Sigma_\eta$ and the estimand Σ are not simultaneously diagonalizable. However, in the particular case that both are rank-one perturbations of the identity, we will see that simultaneous block diagonalization is possible.

Some notation is needed. We denote the eigenvalues and eigenvectors of the spectral decomposition of $S_{n,p}$ by

$$S_{n,p} = \sum_{i=1}^{p} \lambda_{i,n}\, v_{i,n} v_{i,n}'.$$

Whenever possible, we suppress the index n and write, e.g., S, λi and vi instead. Similarly, we often write Σp or even Σ for $\Sigma_{p_n}$.

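Lemma 1. Let Σ and $\hat\Sigma$ be (fixed, nonrandom) p-by-p symmetric positive definite matrices with

$$\Sigma = U \operatorname{diag}(\ell, 1, \dots, 1)\, U', \qquad (2.1)$$

$$\hat\Sigma = V \operatorname{diag}(\eta, 1, \dots, 1)\, V', \qquad (2.2)$$

where U and V are orthogonal with first columns u1 and v1, respectively. Let $c = \langle u_1, v_1 \rangle$ and $s = \sqrt{1 - c^2}$. Then there exists an orthogonal matrix W, which depends on Σ and $\hat\Sigma$, such that

$$W'\Sigma W = A \oplus I_{p-2}, \qquad (2.3)$$

$$W'\hat\Sigma W = B \oplus I_{p-2}, \qquad (2.4)$$

where the fundamental 2 × 2 matrices A = A(ℓ) and B = B(η, c) are given by

$$A = \begin{bmatrix} \ell & 0 \\ 0 & 1 \end{bmatrix}, \qquad B = \begin{bmatrix} 1 + (\eta - 1)c^2 & (\eta - 1)cs \\ (\eta - 1)cs & 1 + (\eta - 1)s^2 \end{bmatrix}. \qquad (2.5)$$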

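Proof. Note that diag(ℓ, 1, …, 1) = I + (ℓ − 1)e1e1′, where e1 denotes the unit vector in the first co-ordinate direction, and similarly with η in place of ℓ. Writing u1 and v1 for the eigenvectors of Σ and $\hat\Sigma$ with eigenvalues ℓ and η, it is evident that

$$\Sigma = I + (\ell - 1)u_1u_1', \qquad \hat\Sigma = I + (\eta - 1)v_1v_1'. \qquad (2.6)$$

It is natural, then, to work in the "common" basis of u1 and v1. We apply one step of Gram–Schmidt if we can, setting

$$z = (v_1 - c\,u_1)/s \qquad \text{if } s > 0.$$

In the second, exceptional, case s = 0, we have v1 = ±u1, so we pick a convenient unit vector z orthogonal to u1. In either case, the columns of the p × 2 matrix W2 = [u1 z] are orthonormal and their span contains both u1 and v1. Now fill out W2 to an orthogonal matrix W = [W2 W⊥]. Observe now that if y lies in the column span of W2 and α is a scalar, then necessarily

$$W'(I + \alpha\, y y')W = \big(I_2 + \alpha\, W_2'y\, y'W_2\big) \oplus I_{p-2}.$$

The expressions (2.3)–(2.5) now follow from the rank one perturbation forms (2.6) along with $W_2'u_1 = (1, 0)'$ and $W_2'v_1 = (c, s)'$.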
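A numerical check of Lemma 1, as a sketch: `common_basis` builds W from u1 and the Gram–Schmidt direction z, completing it with NumPy's complete QR factorization (our choice; any orthonormal completion works), and `lemma1_errors` reports how far W′ΣW and W′Σ̂W are from A ⊕ I and B ⊕ I. The second call exercises the exceptional case v1 = −u1.

```python
import numpy as np

def common_basis(u1, v1):
    """Orthogonal W = [u1, z, W_perp] as in the proof of Lemma 1."""
    r = v1 - (u1 @ v1) * u1                      # Gram-Schmidt residual of v1 against u1
    if np.linalg.norm(r) > 1e-12:
        z = r / np.linalg.norm(r)
    else:                                        # exceptional case v1 = +-u1
        e = np.zeros_like(u1)
        e[np.argmin(np.abs(u1))] = 1.0           # coordinate direction least aligned with u1
        z = e - (u1 @ e) * u1
        z /= np.linalg.norm(z)
    W2 = np.column_stack([u1, z])
    Q_full, _ = np.linalg.qr(W2, mode="complete")
    return np.column_stack([W2, Q_full[:, 2:]])  # fill out W2 to an orthogonal matrix

def lemma1_errors(u1, v1, ell, eta):
    """Max deviations of W' Sigma W and W' Sigma_hat W from the block forms (2.3)-(2.5)."""
    p = u1.size
    Sigma = np.eye(p) + (ell - 1) * np.outer(u1, u1)          # rank-one forms (2.6)
    Sigma_hat = np.eye(p) + (eta - 1) * np.outer(v1, v1)
    W = common_basis(u1, v1)
    c = u1 @ v1
    s = np.linalg.norm(v1 - c * u1)              # equals sqrt(1 - c^2) for a unit vector v1
    A = np.eye(p)
    A[0, 0] = ell                                # A direct-sum I_{p-2}
    B = np.eye(p)
    B[:2, :2] += (eta - 1) * np.outer([c, s], [c, s])   # B direct-sum I_{p-2}
    return (np.abs(W.T @ Sigma @ W - A).max(),
            np.abs(W.T @ Sigma_hat @ W - B).max())

rng = np.random.default_rng(0)
u1 = rng.standard_normal(7)
u1 /= np.linalg.norm(u1)
v1 = rng.standard_normal(7)
v1 /= np.linalg.norm(v1)
print(lemma1_errors(u1, v1, 5.0, 3.0))     # generic case
print(lemma1_errors(u1, -u1, 5.0, 3.0))    # exceptional case v1 = -u1
```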

3. Decomposable Loss Functions

Here and below, by loss function Lp we mean a function of two p-by-p positive semidefinite matrix arguments obeying Lp ≥ 0, with Lp(A, B) = 0 if and only if A = B. A loss family is a sequence $L = \{L_p\}_{p=1}^{\infty}$, one for each matrix size p. We often say "loss function" when referring to the entire family. [Obs. 2] calls out a large class of loss functions which naturally exploit the simultaneous block-diagonalizability property of Lemma 1; we now develop this observation.

Definition 1. Orthogonal Invariance. We say the loss function Lp(A, B) is orthogonally invariant if for each orthogonal p-by-p matrix O,

$$L_p(A, B) = L_p(OAO', OBO').$$

For given p and a given sequence of block sizes {di} with ∑i di = p, consider block-diagonal matrix decompositions of p-by-p matrices A and B into blocks Ai and Bi of size di:

$$A = A_1 \oplus A_2 \oplus \cdots, \qquad B = B_1 \oplus B_2 \oplus \cdots. \qquad (3.1)$$

Definition 2. Sum-Decomposability and Max-Decomposability. We say the loss function Lp(A, B) is sum-decomposable if for all decompositions (3.1),

$$L_p(A, B) = \sum_i L_{d_i}(A_i, B_i).$$

We say that it is max-decomposable if for all decompositions (3.1),

$$L_p(A, B) = \max_i L_{d_i}(A_i, B_i).$$

Clearly, such loss functions can exploit the simultaneous block diagonalization of Lemma 1. Indeed,

Lemma 2. Reduction to Two-Dimensional Problem. Consider an orthogonally invariant loss function, Lp, which is sum- or max-decomposable. Suppose that Σ and $\hat\Sigma$ satisfy (2.1) and (2.2) respectively. Then

$$L_p(\Sigma, \hat\Sigma) = L_2(A, B),$$

with A and B given by (2.5).

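Proof. Lemma 1 provides a change of basis W yielding decompositions (2.3) and (2.4). From the invariance and decomposability hypotheses, and since $L_{p-2}(I, I) = 0$,

$$L_p(\Sigma, \hat\Sigma) = L_p(W'\Sigma W, W'\hat\Sigma W) = L_2(A, B) + L_{p-2}(I, I) = L_2(A, B),$$

with the sum replaced by a maximum in the max-decomposable case.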
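Sum- and max-decomposability, on which Lemma 2 rests, can be spot-checked on random block-diagonal matrices. This sketch uses squared Frobenius loss and Stein's loss (sum-decomposable) and operator-norm loss (max-decomposable), as defined in Section 5; the helper names are ours.

```python
import numpy as np

def block_diag(*blocks):
    """Assemble a block-diagonal matrix from square blocks."""
    n = sum(b.shape[0] for b in blocks)
    out = np.zeros((n, n))
    i = 0
    for b in blocks:
        k = b.shape[0]
        out[i:i + k, i:i + k] = b
        i += k
    return out

def frob2(A, B):
    """Squared Frobenius loss ||A - B||_F^2 (sum-decomposable)."""
    return float(np.sum((A - B) ** 2))

def stein(A, B):
    """Stein loss tr(A^{-1}B - I) - log det(A^{-1}B) (sum-decomposable)."""
    M = np.linalg.solve(A, B)
    return float(np.trace(M) - A.shape[0] - np.linalg.slogdet(M)[1])

def op(A, B):
    """Operator-norm loss ||A - B||_op (max-decomposable)."""
    return float(np.linalg.norm(A - B, 2))

def random_spd(k, rng):
    """A random symmetric positive definite k x k matrix."""
    G = rng.standard_normal((k, k))
    return G @ G.T + np.eye(k)

rng = np.random.default_rng(1)
sizes = [2, 3, 1]
As = [random_spd(k, rng) for k in sizes]
Bs = [random_spd(k, rng) for k in sizes]
A, B = block_diag(*As), block_diag(*Bs)
sum_frob = sum(frob2(a, b) for a, b in zip(As, Bs))
sum_stein = sum(stein(a, b) for a, b in zip(As, Bs))
max_op = max(op(a, b) for a, b in zip(As, Bs))
print(frob2(A, B) - sum_frob, stein(A, B) - sum_stein, op(A, B) - max_op)
```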

4. Asymptotic Loss in the Spiked Covariance Model

Consider the spiked model with a single spike, r = 1, namely, make assumptions [Asy(γ)] and [Spike(ℓ)]. The principal 2 × 2 block of the estimator occurring in Lemmas 1 and 2 is B(η(λ1n), c1n), where λ1n is the largest eigenvalue of Sn and $c_{1n} = |\langle u_1, v_{1n} \rangle|$ (the sign of v1n being arbitrary, we choose it so that c1n ≥ 0). If η is continuous, then the convergence results (1.2) and (1.5) imply that the principal block converges as n → ∞. Specifically,

$$B(\eta(\lambda_{1n}), c_{1n}) \xrightarrow{a.s.} B\big(\eta(\lambda(\ell)), c(\ell)\big), \qquad (4.1)$$

with the convergence occurring in all norms on 2 × 2 matrices.

In accord with [Obs. 3], we now show that the asymptotic loss (1.8) is a deterministic, explicit function of the population spike ℓ. For now, we will continue to assume that the shrinker η is rank-aware. Alternatively, we can make a different simplifying assumption on η, which will be useful in what follows:

Definition 3. We say that a scalar function η : [0, ∞) → [1, ∞) is a bulk shrinker if η(λ) = 1 when λ ≤ λ+(γ), and a neighborhood bulk shrinker if for some ε > 0, η(λ) = 1 whenever λ ≤ λ+(γ) + ε.

The neighborhood bulk shrinker condition on η is rather strong, but does hold for $\eta^{N,1}$ in (1.12), for example. (Note that our definitions ignore the lower bulk edge λ−(γ), which is of less interest in the spiked model.)

Lemma 3. A Formula for the Asymptotic Loss. Adopt models [Asy(γ)] and [Spike(ℓ)] with ℓ > ℓ+(γ). Suppose (a) that the family L = {Lp} of loss functions is orthogonally invariant and sum- or max-decomposable, and that L2 is continuous. Let $\hat\Sigma_\eta$ be given by (1.7), and let $\hat\Sigma_{\eta,1}$ be the corresponding rank-aware shrinkage rule (1.13) for r = 1. Suppose the scalar nonlinearity η is continuous on (λ+(γ), ∞). Then

$$\lim_{n \to \infty} L_{p_n}(\Sigma_{p_n}, \hat\Sigma_{\eta,1}) = L_\infty(\ell \mid \eta) := L_2\big(A(\ell),\, B(\eta(\lambda(\ell)), c(\ell))\big) \quad \text{a.s.} \qquad (4.2)$$

Furthermore, if (b) η is a neighborhood bulk shrinker, then $L_{p_n}(\Sigma_{p_n}, \hat\Sigma_\eta)$ also has this limit a.s.

Each of the 26 losses considered in this paper satisfies condition (a).

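Proof. In the rank-aware case, $\hat\Sigma_{\eta,1} = I + (\eta(\lambda_{1n}) - 1)\, v_{1n}v_{1n}'$ satisfies (2.2) with η replaced by η(λ1n). Lemma 2 implies that

$$L_{p_n}(\Sigma_{p_n}, \hat\Sigma_{\eta,1}) = L_2\big(A(\ell),\, B(\eta(\lambda_{1n}), c_{1n})\big) \xrightarrow{a.s.} L_2\big(A(\ell),\, B(\eta(\lambda(\ell)), c(\ell))\big),$$

where the limit on the right hand side follows from convergence (4.1) and the assumed continuity of L2.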

Now assume that η is a neighborhood bulk shrinker. From (1.2) we know that λ1n → λ(ℓ) > λ+(γ) almost surely. From eigenvalue interlacing (see (7.11) below) we have λ2n ≤ μ1n, where μ1n is the largest eigenvalue of a white Wishart matrix Wpn−1(n, I), and μ1n → λ+(γ) almost surely, from [42]. Let ε > 0 be small enough that λ+(γ) + ε < λ(ℓ) and λ+(γ) + ε also lies in the neighborhood shrunk to 1 by η. Hence, there exists a random variable n0 such that, almost surely, for all n ≥ n0 we have λ2n < λ+(γ) + ε, so that η(λin) = 1 for all i ≥ 2 and $\hat\Sigma_\eta = \hat\Sigma_{\eta,1}$. For such n, the first display above of this proof applies and we then obtain the second display as before.

5. Examples of Decomposable Loss Functions

Many of the loss functions that appear in the literature are Pivot-Losses. They can be obtained via the following common recipe:

Definition 4. Pivots. A matrix pivot is a matrix-valued function Δ(A, B) of two real positive definite matrices A, B such that: (i) Δ(A, B) = 0 if and only if A = B, (ii) Δ is orthogonally equivariant and (iii) Δ respects block structure, in the sense that, respectively,

$$\Delta(OAO', OBO') = O\,\Delta(A, B)\,O', \qquad (5.1)$$

$$\Delta(A_1 \oplus A_2,\; B_1 \oplus B_2) = \Delta(A_1, B_1) \oplus \Delta(A_2, B_2), \qquad (5.2)$$

for any orthogonal matrix O of the appropriate dimension.

Matrix pivots can be symmetric-matrix valued, for example Δ(A, B) = A − B, but need not be, for example Δ(A, B) = A⁻¹B − I.

Definition 5. Pivot-Losses. Let g be a non-negative function of a symmetric matrix variable that is definite: g(Δ) = 0 if and only if Δ = 0, and orthogonally invariant: g(OΔO′) = g(Δ) for any orthogonal matrix O. A symmetric-matrix valued pivot Δ induces an orthogonally-invariant pivot loss

$$L(A, B) = g(\Delta(A, B)). \qquad (5.3)$$

More generally, for any matrix pivot Δ, set $|\Delta| = (\Delta'\Delta)^{1/2}$ and define

$$L(A, B) = g(|\Delta(A, B)|). \qquad (5.4)$$

An orthogonally invariant function g depends on its matrix argument Δ or |Δ| only through its eigenvalues or singular values δ1, ..., δp. We abuse notation to write g(Δ) = g(δ1, ..., δp). Observe that if g has either of the forms

$$g(\delta_1, \dots, \delta_p) = \sum_{i=1}^{p} g_1(\delta_i) \qquad \text{or} \qquad g(\delta_1, \dots, \delta_p) = \max_{1 \le i \le p} g_1(\delta_i)$$

for some univariate g1, then the pivot loss L(A, B) = g(Δ(A, B)) (symmetric pivot) or L(A, B) = g(|Δ|(A, B)) (general pivot) is respectively sum- or max-decomposable. In case Δ is symmetric, the two definitions agree so long as g1 is an even function of δ.

5.1. Examples of Sum-Decomposable Losses

There are different strategies to derive sum-decomposable pivot-losses. First, we can use statistical discrepancies between the Normal distributions N(0, A) and N(0, B):

- Stein Loss: Let $L^{st}(A, B) = \operatorname{tr}(A^{-1}B - I) - \log\det(A^{-1}B)$. This is just twice the Kullback distance $D_{KL}(N(0,B)\,\|\,N(0,A))$. Stein's loss is a pivot-loss with respect to Δ(A, B) = A^(−1/2)BA^(−1/2) and $g(\Delta) = \sum_i g_1(\delta_i)$, where g1(δ) = δ − 1 − log δ.

- Entropy/Divergence Losses: Because the Kullback discrepancy is not symmetric in its arguments, we may consider two other losses: reversing the arguments we get Entropy loss Lent(A, B) = Lst(B, A) [11, 15], and summing the Stein and Entropy losses gives divergence loss:

$$L^{div}(A, B) = L^{st}(A, B) + L^{ent}(A, B) = \operatorname{tr}(A^{-1}B + B^{-1}A - 2I);$$

see [43, 18]. Each can be shown to be sum-decomposable, following the same argument as above.

- Bhattacharya/Matusita Affinity: Let $L^{aff}(A, B) = \tfrac{1}{2}\log\big[\det(A + B) / (2^p \det(A)^{1/2}\det(B)^{1/2})\big]$. This measures the statistical distinguishability of N(0, A) and N(0, B) based on independent observations, since $L^{aff}(A, B) = -\log \int \sqrt{\phi_A \phi_B}$, with ϕA and ϕB the densities of N(0, A) and N(0, B). Hence convergence of affinity loss to zero is equivalent to convergence of the underlying densities in Hellinger or Variation distance. This is a pivot-loss w.r.t. Δ(A, B) = A^(−1/2)BA^(−1/2) and $g_1(\delta) = \tfrac{1}{4}\log\big((2 + \delta + \delta^{-1})/4\big)$, as is seen by setting C = A^(−1/2)(A + B)B^(−1/2) and noting that C′C has the same eigenvalues as 2I + Δ + Δ⁻¹.

- Fréchet Discrepancy [46, 47]: Let Lfre(A, B) = tr(A + B − 2A^(1/2)B^(1/2)). This measures the minimum possible mean-squared difference between zero-mean random vectors with covariances A and B respectively. This is a pivot-loss w.r.t. Δ(A, B) = A^(1/2) − B^(1/2), and with g1(δ) = δ².
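The pivot representations above can be verified numerically: compute the eigenvalues δi of A^(−1/2)BA^(−1/2) and sum g1(δi). In this sketch the divergence g1 follows from adding the Stein and Entropy g1's (the eigenvalues of B⁻¹A are 1/δi), and the affinity loss is taken to be the Bhattacharyya distance −log ∫√(ϕAϕB) between N(0, A) and N(0, B); that normalization is an assumption to be checked against the exact definition used in the paper.

```python
import numpy as np

def inv_sqrt_spd(A):
    """A^{-1/2} for a symmetric positive definite matrix A."""
    w, Q = np.linalg.eigh(A)
    return (Q / np.sqrt(w)) @ Q.T

def pivot_eigs(A, B):
    """Eigenvalues delta_i of the pivot A^{-1/2} B A^{-1/2}."""
    R = inv_sqrt_spd(A)
    return np.linalg.eigvalsh(R @ B @ R)

G1 = {  # univariate g1 in the sum form g = sum_i g1(delta_i)
    "stein": lambda d: d - 1 - np.log(d),
    "divergence": lambda d: d + 1 / d - 2,        # Stein g1(d) plus Stein g1(1/d)
    "affinity": lambda d: 0.25 * np.log((2 + d + 1 / d) / 4),
}

def logdet(M):
    return np.linalg.slogdet(M)[1]

def direct_loss(A, B, name):
    """The same losses computed from their matrix definitions."""
    p = A.shape[0]
    if name == "stein":
        M = np.linalg.solve(A, B)
        return float(np.trace(M) - p - logdet(M))
    if name == "divergence":
        return float(np.trace(np.linalg.solve(A, B) + np.linalg.solve(B, A)) - 2 * p)
    # assumed normalization: Bhattacharyya distance between N(0, A) and N(0, B)
    return float(0.5 * (logdet(A + B) - p * np.log(2)) - 0.25 * (logdet(A) + logdet(B)))

rng = np.random.default_rng(0)
GA, GB = rng.standard_normal((2, 5, 5))
A, B = GA @ GA.T + np.eye(5), GB @ GB.T + np.eye(5)
for name, g1 in G1.items():
    pivot_form = float(np.sum(g1(pivot_eigs(A, B))))
    print(f"{name:10s} pivot form {pivot_form:.10f}  direct {direct_loss(A, B, name):.10f}")
```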

Second, we may obtain sum-decomposable pivot-losses L(A, B) = g(Δ(A, B)) by simply taking g to be one of the standard matrix norms:

Squared Error Loss [3, 28, 25, 26]: Let $L^{F,1}(A, B) = \|A - B\|_F^2$. This is a pivot-loss w.r.t. Δ(A, B) = A − B and with g1(δ) = δ².

Squared Error Loss on Precision [8]: Let $L(A, B) = \|A^{-1} - B^{-1}\|_F^2$. This is a pivot-loss w.r.t. Δ(A, B) = A⁻¹ − B⁻¹ and g(Δ) = tr Δ′Δ.

Nuclear Norm Loss: Let $L^{N,1}(A, B) = \|A - B\|_*$, where $\|\Delta\|_*$ denotes the nuclear norm of the matrix Δ, i.e. the sum of its singular values. This is a pivot-loss w.r.t. Δ(A, B) = A − B and g1(δ) = |δ|.

Let $L(A, B) = \|A^{-1}B - I\|_F^2$. This is a pivot-loss w.r.t. Δ(A, B) = A⁻¹B − I. It was studied in [48, 6, 10] and later work.

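Let $L^{F,7}(A, B) = \|\log(A^{-1/2}BA^{-1/2})\|_F^2$, where log(·) denotes the matrix logarithm [51, 49]. This is a pivot-loss w.r.t. Δ(A, B) = log(A^(−1/2)BA^(−1/2)) and g1(δ) = δ².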

5.2. Examples of Max-Decomposable Losses

Max-decomposable losses arise by applying the operator norm (the maximal singular value or eigenvalue of a matrix) to a suitable pivot. Here are a few examples:

Operator Norm Loss [52]: Let $L^{O,1}(A, B) = \|A - B\|_{op}$. This is a pivot-loss w.r.t. Δ(A, B) = A − B and $g(\Delta) = \max_i |\delta_i|$.

Operator Norm Loss on Precision: Let $L(A, B) = \|A^{-1} - B^{-1}\|_{op}$. This is a pivot-loss w.r.t. Δ(A, B) = A⁻¹ − B⁻¹.

Condition Number Loss: Let $L^{O,7}(A, B) = \|\log(A^{-1/2}BA^{-1/2})\|_{op}$. This is a pivot-loss w.r.t. Δ(A, B) = log(A^(−1/2)BA^(−1/2)), related to [29]. In the spiked model discussed below, $L^{O,7}$ effectively measures the condition number of A^(−1/2)BA^(−1/2).

We adopt the systematic naming scheme Lnorm,pivot where norm ∈ {F, O, N}, and pivot ∈ {1, ..., 7}. This set of 21 combinations covers the previous matrix norm examples and adds some more. Together with Stein's loss and the others based on statistical discrepancy mentioned above, we arrive at a set of 26 loss functions, Table 1, to be studied in this paper.

Table 1:

Systematic notation for the 26 loss functions considered in this paper.

| Pivot | Frobenius norm | Operator norm | Nuclear norm |

| log(A^(−1/2) B A^(−1/2)) | LF,7 | LO,7 | LN,7 |

| | St | Ent | Div |

| Stein | Lst | Lent | Ldiv |

| Fréchet | Lfre | | |

6. Optimal Shrinkage for Decomposable Losses

6.1. Formally Optimal Shrinker

Formula (4.2) for the asymptotic loss has only been shown to hold in the single spike model and only for a certain class of nonlinearities η. In fact, the same is true in the r-spike model and for a much broader class of nonlinearities η. To preserve the narrative flow of the paper, we defer the proof, which is more technical, to Section 7. Instead, we proceed under the single spike model, simply assume that L∞(ℓ | η) from (4.2) is the correct limiting loss, and draw conclusions on the optimal shape of the shrinker η.

Definition 6. Optimal Shrinker. Let L = {Lp} be a given loss family and let L∞(ℓ | η) be the asymptotic loss corresponding to a nonlinearity η, as defined in (4.2), under assumption [Asy(γ)]. If η∗ satisfies

$$L_\infty(\ell \mid \eta^*) \le L_\infty(\ell \mid \eta) \qquad \text{for all } \ell \ge 1 \text{ and all nonlinearities } \eta, \qquad (6.1)$$

and for any η ≠ η∗ there exists ℓ ≥ 1 with L∞(ℓ | η∗) < L∞(ℓ | η), then we say that η∗ is the formally optimal shrinker for the loss family L and shape factor γ, and denote the corresponding shrinkage rule by η∗(λ; γ, L).

Below, we call formally optimal shrinkers simply "optimal". By definition, the optimal shrinkage rule η∗(λ; γ, L) is the unique admissible rule, in the asymptotic sense, among rules of the form (1.7) in the single-spike model. In the single spiked model (and as we show later, generally in the spiked model) one never regrets using the optimal shrinker over any other (reasonably regular) univariate shrinker. In light of our results so far, an obvious characterization of an optimal shrinker is as follows.

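Theorem 1. Characterization of Optimal Shrinker. Let L = {Lp} be a loss family. Define

$$F(\ell, \eta) = L_2\left( \begin{bmatrix} \ell & 0 \\ 0 & 1 \end{bmatrix}, \begin{bmatrix} 1 + (\eta - 1)c^2 & (\eta - 1)cs \\ (\eta - 1)cs & 1 + (\eta - 1)s^2 \end{bmatrix} \right). \qquad (6.2)$$

Here, c = c(ℓ) and s = s(ℓ) satisfy (1.6) and s²(ℓ) = 1 − c²(ℓ). Suppose that for any ℓ > ℓ+(γ), there exists a unique minimizer

$$\eta^{**}(\ell) = \operatorname*{argmin}_{\eta \ge 1} F(\ell, \eta). \qquad (6.3)$$

Further suppose that for every ℓ ∈ [1, ℓ+(γ)] we have $\operatorname{argmin}_{\eta \ge 1} G(\ell, \eta) = 1$, where

$$G(\ell, \eta) = L_2\left( \begin{bmatrix} \ell & 0 \\ 0 & 1 \end{bmatrix}, \begin{bmatrix} 1 & 0 \\ 0 & \eta \end{bmatrix} \right). \qquad (6.4)$$

Then the shrinker

$$\eta^*(\lambda) = \begin{cases} \eta^{**}(\ell(\lambda)), & \lambda > \lambda_+(\gamma), \\ 1, & \lambda \le \lambda_+(\gamma), \end{cases}$$

where ℓ(λ) is given by (1.10), is the optimal shrinker of the loss family L.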

Many of the 26 loss families discussed in Section 5 admit a closed form expression for the optimal shrinker; see Table 2. For others, we computed the optimal shrinker numerically, by implementing in software a solver for the simple scalar optimization problem (6.3). Figure 3 portrays the optimal shrinkers for our 26 loss functions. We refer readers interested in computing specific individual shrinkers to our reproducibility advisory at the bottom of this paper, and invite the reader to explore the code supplement [41], consisting of online resources and code we offer.

6.2. Optimal Shrinkers Collapse the Bulk

We first observe that, for any of the 26 losses considered, the optimal shrinker collapses the bulk to 1. The following lemma is proved in the supplemental article [40]:

Lemma 4. Let L be any of the 26 losses mentioned in Table 1. Then the rule η**(ℓ) = 1 is unique asymptotically admissible on [1, ℓ+(γ)], namely, for every ℓ ∈ [1, ℓ+(γ)] we have G(ℓ, 1) ≤ G(ℓ, η) for all η ≥ 1, with strict inequality for at least one point in [1, ℓ+(γ)].

As part of the proof of Lemma 4, in Table 6 in the supplemental article [40], we explicitly calculate the fundamental loss function G(ℓ, η) of (6.4) for many of the loss families discussed in this paper.

To determine the optimal shrinker η∗(λ; γ, L) for each of our loss functions L, it therefore remains to determine the map ℓ ↦ η**(ℓ), or equivalently λ ↦ η∗(λ), only for ℓ > ℓ+(γ) (that is, for λ > λ+(γ)). This is our next task.

6.3. Optimal Shrinkers by Computer

The scalar optimization problem (6.3) is easy to solve numerically, so that one can always compute the optimal shrinker at any desired value λ. In the code supplement [41] we provide Matlab code to compute the optimal nonlinearity for each of the 26 loss families discussed. In the sibling problem of singular value shrinkage for matrix denoising, [53] demonstrates numerical evaluation of optimal shrinkers for the Schatten-p norm, where analytical derivation of optimal shrinkers appears to be impossible.
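A sketch of such a numerical solver for (6.3), written in Python with SciPy's bounded scalar minimizer rather than in the Matlab of the code supplement. The search interval [1, 2ℓ + 10] is our own assumption; for the two losses checked here, the minimizers ℓc² + s² (squared Frobenius loss) and ℓ/(c² + ℓs²) (Stein's loss) lie well inside it.

```python
import numpy as np
from scipy.optimize import minimize_scalar

def cos2(ell, gamma):
    """Squared cosine c^2(ell) from (1.6), for ell > 1 + sqrt(gamma)."""
    return (1 - gamma / (ell - 1) ** 2) / (1 + gamma / (ell - 1))

def blocks(ell, eta, c2):
    """The two 2x2 matrices whose loss defines F(ell, eta) in (6.2)."""
    c, s = np.sqrt(c2), np.sqrt(1 - c2)
    A = np.diag([ell, 1.0])
    B = np.eye(2) + (eta - 1) * np.outer([c, s], [c, s])
    return A, B

def frob2(A, B):
    """Squared Frobenius loss."""
    return float(np.sum((A - B) ** 2))

def stein(A, B):
    """Stein loss tr(A^{-1}B - I) - log det(A^{-1}B)."""
    M = np.linalg.solve(A, B)
    return float(np.trace(M) - A.shape[0] - np.linalg.slogdet(M)[1])

def eta_star_numeric(ell, gamma, loss, eta_max=None):
    """Numerically solve (6.3): minimize F(ell, eta) over eta in [1, eta_max]."""
    c2 = cos2(ell, gamma)
    if eta_max is None:
        eta_max = 2 * ell + 10          # assumed search interval (our choice)
    res = minimize_scalar(lambda eta: loss(*blocks(ell, eta, c2)),
                          bounds=(1.0, eta_max), method="bounded",
                          options={"xatol": 1e-10})
    return float(res.x)

for name, loss in [("squared Frobenius", frob2), ("Stein", stein)]:
    print(name, eta_star_numeric(4.0, 1.0, loss))
```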

6.4. Optimal Shrinkers in Closed Form

We were able to obtain simple analytic formulas for the optimal shrinker η∗ in each of 18 loss families from Section 5. While the optimal shrinkers are of course functions of the empirical eigenvalue λ, in the interest of space, we state the lemmas and provide the formulas in terms of the quantities c and s. To calculate any of the nonlinearities below for a specific empirical eigenvalue λ, use the following procedure:

1. If λ ≤ λ+(γ), set η∗(λ) = 1. Otherwise:

2. Calculate ℓ(λ) using (1.10).

3. Calculate c(λ) = c(ℓ(λ)) using (1.6), and s(λ) = s(ℓ(λ)) using s² = 1 − c².

4. Substitute ℓ(λ), c(λ) and s(λ) into the formula provided to get η∗(λ).
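The four steps translate directly into code. This sketch hard-codes only the four shrinkers of (1.12) ($\eta^{F,1}$, $\eta^{O,1}$, $\eta^{N,1}$, $\eta^{st}$); the function name and the `loss` keys are illustrative, and other closed forms from Table 2 could be added to the `formulas` dictionary in the same way.

```python
import math

def optimal_shrinker(lam, gamma, loss="frobenius"):
    """Closed-form optimal shrinkers of (1.12), via the four-step procedure."""
    # Step 1: eigenvalues inside the bulk are collapsed to 1.
    if lam <= (1 + math.sqrt(gamma)) ** 2:
        return 1.0
    # Step 2: invert the biasing function, eq. (1.10).
    b = lam + 1 - gamma
    ell = (b + math.sqrt(b * b - 4 * lam)) / 2
    # Step 3: squared cosine and sine, eq. (1.6) and s^2 = 1 - c^2.
    c2 = (1 - gamma / (ell - 1) ** 2) / (1 + gamma / (ell - 1))
    s2 = 1 - c2
    # Step 4: substitute into the closed form for the chosen loss.
    formulas = {
        "frobenius": ell * c2 + s2,                          # eta^{F,1}
        "operator": ell,                                     # eta^{O,1}
        "nuclear": max(1 + (ell - 1) * (1 - 2 * s2), 1.0),  # eta^{N,1}
        "stein": ell / (c2 + ell * s2),                      # eta^{st}
    }
    return formulas[loss]

lam = 16 / 3          # equals lambda(4) when gamma = 1, so ell(lam) = 4
for loss in ("operator", "frobenius", "nuclear", "stein"):
    print(loss, round(optimal_shrinker(lam, 1.0, loss), 6))
```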

The closed forms we provide are summarized in Table 2. Note that ℓ, c and s refer to the functions ℓ(λ), c(ℓ(λ)) and s(ℓ(λ)). These formulae are formally derived in a sequence of lemmas that are stated and proved in the supplemental article [40]. The proofs also show that these optimal shrinkers are unique, as in each case the optimal shrinker is shown to be the unique minimizer, as in (6.3), of (6.2). We make some remarks on these optimal shrinkers by focusing first on operator norm loss for covariance and precision matrices, for both of which the optimal shrinker is

$$\eta^*(\lambda) = \begin{cases} \ell(\lambda), & \lambda > \lambda_+(\gamma), \\ 1, & \lambda \le \lambda_+(\gamma). \end{cases} \qquad (6.5)$$

This asymptotic relationship reflects the classical fact that in finite samples, the top empirical eigenvalue is always biased upwards of the underlying population eigenvalue [54, 55]. Formally defining the (asymptotic) bias as

$$\operatorname{bias}(\lambda, \ell) = \lambda - \ell,$$

we have bias(λ(ℓ), ℓ) > 0. The formula η∗(λ) = ℓ shows that the optimal nonlinearity for operator norm loss is what we might simply call a debiasing transformation, mapping each empirical eigenvalue back to the value of its "original" population eigenvalue, and the corresponding shrinkage estimator pairs each sample eigenvector with its corresponding population eigenvalue. In words, within the top branch of (6.5), the effect of operator-norm optimal shrinkage is to debias the top eigenvalue:

$$\eta^*(\lambda_i) = \ell(\lambda_i) \xrightarrow{a.s.} \ell_i, \qquad \ell_i > \ell_+(\gamma).$$

On the other hand, within the bottom branch, the effect is to shrink the bulk to 1. In terms of Definition 3 we see that η∗ is a bulk shrinker, but not a neighborhood bulk shrinker.

One might expect asymptotic debiasing from every loss function, but, perhaps surprisingly, precise asymptotic debiasing is exceptional. In fact, none of the other optimal nonlinearities in Table 2 is precisely debiasing.

In the supplemental article [40] we also provide a detailed investigation of the large-λ asymptotics of the optimal shrinkers, including their asymptotic slopes, asymptotic shifts and asymptotic percent improvement.

7. Beyond Formal Optimality

The shrinkers we have derived and analyzed above are formally optimal, as in Definition 6, in the sense that they minimize the formal expression L∞(ℓ | η). So far we have only shown that formally optimal shrinkers actually minimize the asymptotic loss (namely, are asymptotically unique admissible) in the single-spike case, under assumptions [Asy(γ)] and [Spike(ℓ)], and only over neighborhood bulk shrinkers.

In this section, we show that formally optimal shrinkers in fact minimize the asymptotic loss in the general Spiked Covariance Model, namely under assumptions [Asy(γ)] and [Spike(ℓ1,…,ℓr)], and over a large class of bulk shrinkers, which are possibly not neighborhood bulk shrinkers.

We start by establishing the rank r analog of Lemma 1. For a vector ℓ = (ℓ1, …, ℓr), let Δr(ℓ) = diag(ℓ1, …, ℓr).

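Lemma 5. Assume that Σ and $\hat\Sigma$ are fixed matrices with

$$\Sigma = U\big(\Delta_r(\ell) \oplus I_{p-r}\big)U', \qquad \hat\Sigma = V\big(\Delta_r(\eta) \oplus I_{p-r}\big)V',$$

where U and V are orthogonal, ℓ = (ℓ1, …, ℓr) and η = (η1, …, ηr). Let Ur and Vr denote the p-by-r matrices consisting of the top r eigenvectors of Σ and $\hat\Sigma$ respectively. Suppose that [Ur Vr] has full rank 2r, and consider the QR decomposition

$$[U_r \;\; V_r] = QR,$$

where Q has 2r orthonormal columns and the 2r × 2r matrix R is upper triangular with positive diagonal entries. Let R2 denote the 2r × r submatrix formed by the last r columns of R. Fill out Q to an orthogonal matrix W = [Q Q⊥]. Then in the transformed basis we have the simultaneous block decompositions

$$W'\Sigma W = \big(\Delta_r(\ell) \oplus I_r\big) \oplus I_{p-2r}, \qquad (7.1)$$

$$W'\hat\Sigma W = \big(I_{2r} + R_2(\Delta_r(\eta) - I_r)R_2'\big) \oplus I_{p-2r}. \qquad (7.2)$$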

Proof. We start with observations about the structure of Q and R. Since the first r columns of Q are identically those of Ur, we let Zr be the p-by-r matrix such that Q = [Ur Zr]. For the same reason, R has the block structure

$$R = \begin{bmatrix} I_r & R_{12} \\ 0 & R_{22} \end{bmatrix},$$

where the matrices R12 and R22 satisfy Vr = UrR12 + ZrR22, so that

$$R_{12} = U_r' V_r, \qquad R_{22} = Z_r' V_r. \tag{7.3}$$

Since Vr has orthonormal columns, we have

$$R_{12}'R_{12} + R_{22}'R_{22} = V_r'V_r = I_r. \tag{7.4}$$

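Let H be a p × r matrix whose columns lie in the column span of Q and let Δ be an r × r diagonal matrix. Observe that

$$W'(I_p + H\Delta H')W = \begin{bmatrix} I_{2r} + Q'H\Delta H'Q & 0 \\ 0 & I_{p-2r} \end{bmatrix} = C_{2r} \oplus I_{p-2r},$$

say, since the columns of Q⊥ are orthogonal to those of H. By analogy to (2.6), we may write

$$\Sigma = I_p + U_r\,\Delta_r(\ell - 1)\,U_r', \qquad \hat{\Sigma} = I_p + V_r\,\Delta_r(\eta - 1)\,V_r', \tag{7.5}$$

and so both are of the form I + HΔH′, with H = Ur and Vr respectively. We find that

$$Q'U_r = \begin{bmatrix} I_r \\ 0 \end{bmatrix}, \qquad Q'V_r = \begin{bmatrix} R_{12} \\ R_{22} \end{bmatrix} = R_2.$$

We can then compute the value of C2r in the two cases to be given by

$$I_{2r} + \begin{bmatrix} \Delta_r(\ell - 1) & 0 \\ 0 & 0 \end{bmatrix} = \begin{bmatrix} \Delta_r(\ell) & 0 \\ 0 & I_r \end{bmatrix} \qquad\text{and}\qquad I_{2r} + R_2\,\Delta_r(\eta - 1)\,R_2',$$

respectively, which establishes (7.1) and (7.2), and hence the lemma.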
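As a numerical sanity check of Lemma 5, the sketch below builds Σ = I + UrΔr(ℓ − 1)Ur′ and Σ̂ = I + VrΔr(η − 1)Vr′ from random frames and hypothetical values of ℓ and η, and compares W′ΣW and W′Σ̂W with the block forms we read for (7.1) and (7.2): diag(ℓ1, …, ℓr, 1, …, 1) and (I2r + R2Δr(η − 1)R2′) ⊕ Ip−2r. NumPy's `np.linalg.qr` does not force a positive diagonal in R, so the first r columns of W may equal Ur only up to sign; this leaves both identities unchanged.

```python
import numpy as np

rng = np.random.default_rng(0)
p, r = 8, 2
ell = np.array([5.0, 3.0])   # hypothetical population spikes
eta = np.array([4.0, 2.5])   # hypothetical shrunken eigenvalues

# Random orthonormal p-by-r frames U_r and V_r (generic position).
U = np.linalg.qr(rng.standard_normal((p, r)))[0]
V = np.linalg.qr(rng.standard_normal((p, r)))[0]
Sigma = np.eye(p) + U @ np.diag(ell - 1.0) @ U.T
Sigma_hat = np.eye(p) + V @ np.diag(eta - 1.0) @ V.T

# Complete QR of [U_r V_r]: W is p-by-p orthogonal and its first 2r columns form Q.
W, R_full = np.linalg.qr(np.hstack([U, V]), mode="complete")
R2 = R_full[:2 * r, r:]      # last r columns of the 2r-by-2r factor R

A = W.T @ Sigma @ W
B = W.T @ Sigma_hat @ W
expected_A = np.diag(np.r_[ell, np.ones(p - r)])
expected_B = np.eye(p)
expected_B[:2 * r, :2 * r] += R2 @ np.diag(eta - 1.0) @ R2.T

ok_71 = np.allclose(A, expected_A)   # block form of W' Sigma W
ok_72 = np.allclose(B, expected_B)   # block form of W' Sigma_hat W
print(ok_71, ok_72)
```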

We intend to apply Lemma 5 to Σ and the “rank-aware” modification (1.13) of the estimator in (1.7). Assume now that this estimator, and the p × r matrix Vr,n formed by the top r eigenvectors of S (the first r columns of V), are random.

Lemma 6. The rank of [Ur Vr,n] equals 2r almost surely.

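Proof. Let Πr(V) be the projection that picks out the first r columns of an orthogonal matrix V. For a fixed r-frame Ur, we consider the event

$$A = \{ V \in O_p : \operatorname{rank}[U_r \;\; \Pi_r(V)] < 2r \},$$

where Op is the group of orthogonal p-by-p matrices. Let PΣ denote the joint distribution of the eigenvalues Λ = diag(λ1, …, λp) and eigenvectors V when S ~ Wp(n, Σ). As shown by [56], PΣ is absolutely continuous with respect to νp × μp, the product of Lebesgue measure νp on ℝ^p and Haar measure μp on Op. The set A is the zero set of the Gram determinant det([Ur Πr(V)]′[Ur Πr(V)]), a polynomial in the entries of V that does not vanish identically on either connected component of Op when p ≥ 2r, so μp(A) = 0. It follows that PΣ(A) = 0.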

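Lemma 7. Adopt models [Asy(γ)] and [Spike(ℓ1, …, ℓr)] with ℓ1 > … > ℓr > ℓ+(γ). Suppose the scalar nonlinearity η is continuous on (λ+(γ), ∞), and write Σ̂η,r for the rank-aware estimator (1.13). For each p there exists w.p. 1 an orthogonal change of basis W such that

$$W'\Sigma W = A_{2r} \oplus I_{p-2r}, \qquad W'\hat{\Sigma}_{\eta,r} W = B_{2r,n} \oplus I_{p-2r}, \tag{7.6}$$

where the 2r × 2r matrices A2r and B2r,n satisfy

$$\Pi_{2r}' A_{2r} \Pi_{2r} = \bigoplus_{i=1}^{r} A(\ell_i), \qquad \Pi_{2r}' B_{2r,n} \Pi_{2r} \xrightarrow{\text{a.s.}} \bigoplus_{i=1}^{r} B(\ell_i, \eta_i), \qquad \eta_i = \eta(\lambda(\ell_i)), \tag{7.7}$$

Π2r is the permutation matrix defined in the proof below, and the 2 × 2 matrices A(ℓ), B(ℓ, η) are defined at (2.5).

Suppose also that the family L = {Lp} of loss functions is orthogonally invariant and sum- or max-decomposable, and that B ↦ L2r(A, B) is continuous. Then

$$L_p(\Sigma_p, \hat{\Sigma}_{\eta,r}) \xrightarrow{\text{a.s.}} \sum_{i=1}^{r} L_2\big(A(\ell_i), B(\ell_i, \eta_i)\big), \tag{7.8}$$

with the sum replaced by a maximum over i in the max-decomposable case.

If η is a neighborhood bulk shrinker, then Lp(Σp, Σ̂η) for the estimator (1.7) also has this limit a.s.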

This is the rank r analog of Lemma 3. The optimal nonlinearity η∗ is continuous on [0, ∞) for all losses except the operator norm ones, for which η∗ is continuous except at λ = λ+(γ). Our result (7.7) requires only continuity on (λ+(γ), ∞) and so is valid for all 26 loss functions, as is the deterministic limit (7.8) for the rank-aware estimator (1.13). However, as we saw earlier, only the nuclear norm based loss functions yield optimal functions that are neighborhood bulk shrinkers. To show that (7.8) holds for the estimator (1.7) for most other important shrinkage functions, some further work is needed; see Section 7.1 below.

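Proof. We apply Lemma 5 to Σ and the rank-aware estimator Σ̂η,r of (1.13) on the set of probability 1 provided by Lemma 6. First, we rewrite (7.2) to show the subblocks of R:

$$W'\hat{\Sigma}_{\eta,r}W = \begin{bmatrix} I_r + R_{12}D_nR_{12}' & R_{12}D_nR_{22}' \\ R_{22}D_nR_{12}' & I_r + R_{22}D_nR_{22}' \end{bmatrix} \oplus I_{p-2r}, \qquad D_n = \Delta_r(\eta^{(n)} - 1),$$

where we write η(n) = (η(λ1,n), …, η(λr,n)) to show explicitly the dependence on n. The limiting behavior of R may be derived from (7.3) and (7.4) along with spiked model properties (1.2) and (1.5), so we have², as n → ∞,

$$R_{12} \xrightarrow{\text{a.s.}} \Delta_r(c), \qquad R_{22} \xrightarrow{\text{a.s.}} \Delta_r(s). \tag{7.9}$$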

Here c = (c(ℓ1),…, c(ℓr)) and s = (s(ℓ1),…, s(ℓr)).

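Again by (1.2), λi,n →a.s. λ(ℓi) > λ+(γ), and so continuity of η above λ+(γ) ensures that Δr(η(n) − 1) → Δr(η − 1), where η = (ηi) and ηi = η(λ(ℓi)). Together with (1.5), we obtain simplified structure in the limit: the leading 2r × 2r block of W′Σ̂η,rW, with Σ̂η,r the rank-aware estimator (1.13), converges a.s. to

$$\begin{bmatrix} I_r + \Delta_r\big((\eta - 1)c^2\big) & \Delta_r\big((\eta - 1)cs\big) \\ \Delta_r\big((\eta - 1)cs\big) & I_r + \Delta_r\big((\eta - 1)s^2\big) \end{bmatrix}, \tag{7.10}$$

with products of the vectors η − 1, c and s taken entrywise.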

To rewrite the limit in block diagonal form, let Π2r be the permutation matrix corresponding to the permutation defined by

$$(1, \ldots, 2r) \longmapsto (1,\, r+1,\, 2,\, r+2,\, \ldots,\, r,\, 2r).$$

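Permuting rows and columns in (7.1) and (7.10) using Π2r brings both matrices to block diagonal form, with r blocks of size 2 × 2, the i-th block involving only ℓi, c(ℓi), s(ℓi) and ηi. Comparing these blocks with (2.5), we obtain (7.7). Using (7.6), the orthogonal invariance and sum/max decomposability, along with the continuity of L2r(A, ·), we find that Lp(Σ, Σ̂η,r), with Σ̂η,r the rank-aware estimator, equals L2r evaluated at the leading 2r × 2r blocks in (7.6), and hence converges a.s. to the sum (or, in the max-decomposable case, the maximum) of L2(A(ℓi), B(ℓi, ηi)) over i = 1, …, r,

which completes the proof of Lemma 7.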
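The effect of Π2r can be seen in a few lines. The sketch below uses hypothetical values of ℓi, c(ℓi) and ηi, and builds the 2r × 2r limits in the form we read from (7.1) and (7.10); it assumes the 2 × 2 blocks of (2.5) are A(ℓ) = diag(ℓ, 1) and B(ℓ, η) = I2 + (η − 1)(c, s)′(c, s), which should be checked against (2.5). Indices are 0-based, so (1, r+1, 2, r+2, …) becomes [0, r, 1, r+1, …].

```python
import numpy as np

def interleave(r):
    """0-based form of (1, ..., 2r) -> (1, r+1, 2, r+2, ..., r, 2r)."""
    return np.column_stack([np.arange(r), np.arange(r, 2 * r)]).ravel()

r = 3
ell = np.array([6.0, 4.0, 2.5])          # hypothetical spikes
c = np.array([0.9, 0.8, 0.6])            # hypothetical limiting cosines c(ell_i)
s = np.sqrt(1.0 - c ** 2)                # corresponding sines s(ell_i)
d = np.array([5.0, 3.0, 1.8]) - 1.0      # eta_i - 1 for hypothetical eta_i

# 2r-by-2r limits in the original order (U_r block first, then Z_r block).
A = np.diag(np.r_[ell, np.ones(r)])
B = np.block([[np.eye(r) + np.diag(d * c**2), np.diag(d * c * s)],
              [np.diag(d * c * s), np.eye(r) + np.diag(d * s**2)]])

perm = interleave(r)
A_p = A[np.ix_(perm, perm)]               # Pi' A Pi
B_p = B[np.ix_(perm, perm)]               # Pi' B Pi

# Expected result: block diagonal, one 2x2 block per spike.
A_exp = np.zeros((2 * r, 2 * r))
B_exp = np.zeros((2 * r, 2 * r))
for i in range(r):
    blk = slice(2 * i, 2 * i + 2)
    A_exp[blk, blk] = np.diag([ell[i], 1.0])
    B_exp[blk, blk] = np.eye(2) + d[i] * np.outer([c[i], s[i]], [c[i], s[i]])

print(perm, np.allclose(A_p, A_exp), np.allclose(B_p, B_exp))
```

Because every block of (7.10) is diagonal, coordinate i interacts only with coordinate r + i, which is exactly what the interleaving places side by side.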

7.1. Removing the rank-aware condition

In this section we prove Proposition 2 below, whereby the asymptotic losses coincide for a given estimator sequence Σ̂η of (1.7) and its rank-aware versions Σ̂η,r of (1.13). This result is plausible because of two observations:

1. Null eigenvalues stick to the bulk: for i ≥ r + 1, most eigenvalues satisfy λin ≤ λ+(γ), and the few exceptions are not much larger. Hence, if η is a continuous bulk shrinker, we expect Σ̂η to be close to Σ̂η,r.

2. Under a suitable continuity assumption on the loss functions Lp, Lp(Σ, Σ̂η) should then be close to Lp(Σ, Σ̂η,r).

Observation 1 is fleshed out in two steps. The first step is eigenvalue comparison. The sample eigenvalues λin arise as eigenvalues of XX′/n when X is a pn-by-n matrix whose columns are i.i.d. draws from N(0, Σpn); by orthogonal invariance we may take Σpn to be diagonal, with the spikes in the first r coordinates. Let Π denote the projection onto the last pn − r coordinates in ℝ^pn, and let μ1n ≥ … ≥ μpn−r,n denote the eigenvalues of ΠX(ΠX)′/n. By the Cauchy interlacing theorem (e.g. [57, p. 59]), we have

$$\lambda_{in} \le \mu_{i-r,n}, \qquad i = r+1, \ldots, p_n, \tag{7.11}$$

where the (μin) are the eigenvalues of a white Wishart matrix Wpn−r(n, I).
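The comparison (7.11) is easy to check numerically. The sketch below, with hypothetical sizes and spikes, draws a spiked sample with a diagonal population covariance, drops the first r rows of X, and verifies both halves of Cauchy interlacing for this principal submatrix of XX′/n.

```python
import numpy as np

rng = np.random.default_rng(1)
p, n, r = 60, 120, 2
ell = np.array([8.0, 4.0])                        # hypothetical spikes
# Diagonal population covariance diag(ell_1, ell_2, 1, ..., 1).
scale = np.sqrt(np.r_[ell, np.ones(p - r)])
X = scale[:, None] * rng.standard_normal((p, n))  # columns i.i.d. N(0, Sigma)

lam = np.linalg.eigvalsh(X @ X.T / n)[::-1]       # lambda_1 >= ... >= lambda_p
PX = X[r:]                                        # Pi X: keep the last p - r coordinates
mu = np.linalg.eigvalsh(PX @ PX.T / n)[::-1]      # mu_1 >= ... >= mu_{p-r}

tol = 1e-10
upper = np.all(lam[r:] <= mu + tol)       # lambda_i <= mu_{i-r} for i > r
lower = np.all(mu <= lam[:p - r] + tol)   # other half of interlacing: mu_j <= lambda_j
print(upper, lower)
```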

The second step is a bound on eigenvalues of a white Wishart that exit the bulk. Before stating it, we return to an important detail introduced in the Remark concluding Section 1.1.

Definition 3 of a bulk shrinker depends on the parameter γ = lim p/n through λ+(γ). Making that dependence explicit, we obtain a bivariate function η(λ, c). In model [Asy(γ)] and in the n-th problem, we might use η(λ, cn) with either cn = γ or cn = p/n. For Proposition 1 below, it will be more natural to use the latter choice. We also modify Definition 3 as follows.

Definition 7. We call η : [0, ∞) × (0, 1] → [1, ∞) a jointly continuous bulk shrinker if η(λ, c) is jointly continuous in λ and c, satisfies η(λ, c) = 1 for λ ≤ λ+(c) and is dominated: η(λ, c) ≤ Mλ for some M and all λ.

The following result is proved in [58, Theorem 2(a)].

Proposition 1. Let denote the sample eigenvalues of a matrix distributed as WN(n, I), with N/n → γ > 0. Suppose that η(λ, c) is a jointly continuous bulk shrinker and that cn − N/n = O(n−2/3). Then for q > 0,

| (7.12) |

The continuity assumption on the loss functions may be formulated as follows. Suppose that A, B1, B2 are p-by-p positive definite matrices, with A satisfying assumption [Spike(ℓ1,…, ℓr)] and spec(Bk) = [(ηki), (vi)], thus B1 and B2 have the same eigenvectors. Set η1 = max {η11, η21} . We assume that for some q ∈ [1, ∞] and some continuous function C(ℓ1, η1 ) not depending on p, we have

| (7.13) |

whenever Condition (7.13) is satisfied for all 26 of the loss functions of Section 3, as is verified in Proposition 1 in SI.

In the next proposition we adopt the convention that the estimators Σ̂η of (1.7) and Σ̂η,r of (1.13) are constructed with a jointly continuous bulk shrinker, which we denote η(λ, cn).

Proposition 2. Adopt models [Asy(γ)] and [Spike(ℓ1, …, ℓr)]. Suppose also that the family L = {Lp} of loss functions is orthogonally invariant and sum- or max-decomposable, and satisfies continuity condition (7.13). If η(λ, cn) is a jointly continuous bulk shrinker with cn = pn/n, then

$$L_p(\Sigma_p, \hat{\Sigma}_\eta) - L_p(\Sigma_p, \hat{\Sigma}_{\eta,r}) \xrightarrow{P} 0,$$

where Σ̂η and Σ̂η,r denote the estimators (1.7) and (1.13), and so Lp(Σp, Σ̂η) converges in probability to the deterministic asymptotic loss (7.8).

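Proof. In the left side of (7.13), substitute A = Σ, B1 = Σ̂η (the estimator (1.7)) and B2 = Σ̂η,r (its rank-aware version (1.13)). By definition, Σ̂η and Σ̂η,r share the same eigenvectors. The components of η1 − η2 then satisfy

$$\eta_{1i} - \eta_{2i} = \begin{cases} 0, & i \le r, \\ \eta(\lambda_{in}, c_n) - 1, & i \ge r+1. \end{cases}$$

We now use (7.11) to compare the eigenvalues λin of the spiked model to those of a suitable white Wishart matrix to which Proposition 1 applies. The function η↑(μ, c) = max{η(λ, c) : λ ≤ μ} is nondecreasing in μ and jointly continuous; it is again a jointly continuous bulk shrinker. Hence η(λin, cn) ≤ η↑(λin, cn) ≤ η↑(μi−r,n, cn), and so

$$\sum_{i=r+1}^{p_n} \big(\eta(\lambda_{in}, c_n) - 1\big)^q \le \sum_{j=1}^{p_n - r} \big(\eta^{\uparrow}(\mu_{jn}, c_n) - 1\big)^q,$$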

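with a corresponding bound for q = ∞. From continuity condition (7.13), the difference |Lp(Σ, Σ̂η) − Lp(Σ, Σ̂η,r)| is therefore bounded by C(ℓ1, η(λ1n, cn)) times the ℓq norm of the vector (η↑(μjn, cn) − 1), j = 1, …, pn − r.

The constant C(ℓ1, η(λ1n, cn)) remains bounded by (1.2). The ℓq norm converges to 0 in probability by Proposition 1, applied to the jointly continuous bulk shrinker η↑ and the eigenvalues of Wpn−r(n, I), with N = pn − r, noting that cn − N/n = r/n = O(n−2/3).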
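The monotone envelope η↑ used in the proof is simply a running maximum. The sketch below evaluates it on a grid for a hypothetical continuous bulk shrinker that is not monotone above λ+(γ), and checks the three properties the argument relies on: η↑ ≥ η, η↑ is nondecreasing, and η↑ = 1 up to the bulk edge. Evaluating on a grid only approximates the maximum over λ ≤ μ.

```python
import numpy as np

gamma = 0.25
edge = (1.0 + np.sqrt(gamma)) ** 2                # lambda_+(gamma)
grid = np.linspace(0.0, 6.0, 601)                 # sorted lambda grid

# Hypothetical continuous bulk shrinker: equal to 1 below the edge,
# rising and then falling above it (so it is not monotone).
x = np.maximum(grid - edge, 0.0)
eta = 1.0 + x * np.exp(-x)

# eta_up(mu) = max{eta(lam) : lam <= mu}, evaluated on the grid as a running maximum.
eta_up = np.maximum.accumulate(eta)
```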

7.2. Asymptotic loss for discontinuous optimal shrinkers

Formula (6.5) showed that the optimal shrinker η*(λ, γ) for operator norm losses LO,1, LO,2 is discontinuous at λ = λ+(γ). In this section, we show that when η* is used, a deterministic asymptotic loss exists for LO,1, but not for LO,2. The reason will be seen to lie in the behavior of the optimal component loss F*(ℓ) = L2[A(ℓ), B(ℓ, η*)]. Indeed, calculation based on (6.2), (6.5) shows that for ℓ ≥ ℓ+,

as ℓ ↓ ℓ+, where indices a = 1 and −1 correspond to LO,1 and LO,2, respectively. Importantly, for a = 1, F* is strictly increasing on [ℓ+, ∞), while for a = −1 it is strictly decreasing there.

Proposition 3. Adopt models [Asy(γ)] and [Spike(ℓ1, …, ℓr)] with ℓr > ℓ+(γ). Consider the optimal shrinker η*(λ, γn) with γn = pn/n, given by (6.5), for both LO,1 and LO,2. For LO,1, the asymptotic loss is well defined:

| (7.14) |

However, for LO,2,

| (7.15) |

where W has a two-point distribution in which

and for a real Tracy-Widom variate TW1 [59].

Roughly speaking, there is positive limiting probability that the largest noise eigenvalue will exit the bulk distribution, and in such cases the corresponding component loss F*(ℓ+), which is due to noise alone, exceeds the largest component loss due to any of the r spikes, namely F*(ℓr). Essentially, this occurs because precision losses decrease as the signal strength ℓ increases. The effect is not seen for LF,2 and LN,2 because the optimal shrinkers in those cases are continuous at the bulk edge.

Proof. For the proof, write ∥·∥ for ∥·∥∞. Let W = [W1 W2] be the orthogonal change of basis matrix constructed in Lemma 7, with W1 containing the first 2r columns. We treat the two losses LO,1 and LO,2 at once using an exponent a = ±1, and write ηa(λ) for ηa(λ, γn). Thus, let

and observe that the loss of the rank-aware estimator

lies in the column span of W1. We have and the main task will be to show that for a = ±1,

| (7.16) |

Assuming the truth of this for now, let us derive the proposition. The quantities of interest in (7.14), (7.15) become

First, note from Lemma 7 that

| (7.17) |

Observe that for both a = 1 and −1,

The rescaled noise eigenvalue has a limiting real Tracy-Widom distribution with scale factor σ(γ) > 0 [60, Prop. 5.8]. Hence, using the discontinuity of the optimal shrinker η* and the square-root singularity from above

Consequently, recalling that we have

| (7.18) |

For LO,1, with a = 1, F*(ℓ) is strictly increasing, and so from (7.17) and (7.18) we obtain (7.14). For LO,2, with a = −1, F*(ℓ) is strictly decreasing, and so on the event TW1 > 0,

which leads to (7.15) and hence the main result.

It remains to prove (7.16). For a symmetric block matrix,

| (7.19) |

Apply this to with

since Hence

| (7.20) |

We now show that ∥ΔW1∥ →P 0. Using notation from Lemma 5,

Since Δvk = 0 for k = 1,…, r,

From (7.9), we have and hence is bounded. Observe that where we have set δin = η(λin, γn) − 1. Note from (6.5) that δin = 0 unless λin > λ+(γn). With Nn = #{i ≥ r + 1 : λin > λ+(γn)}, we then have

| (7.21) |

From (7.18) we have ∥Δn∥ = OP(1). Since each vi, i > r, is uniformly distributed on Sp−1, a simple union bound based on (7.23) below yields

| (7.22) |

It remains to bound Nn. From the interlacing inequality (7.11),

where {μjn} are the eigenvalues of a white Wishart matrix Wpn−r(n, I). This quantity is bounded in [58, Theorem 2(c)], which says that Ñn = Op(1). In more detail, we make the correspondences N ← pn − r, γN ← (pn − r)/n and cN ← pn/n so that cN − γN = r/n = o(n−2/3) and obtain EÑn→ c0 = 0.17.

From (7.21) and the preceding two paragraphs, we conclude that and so

Returning to (7.20), we deduce now that ∥Bn∥ ≤ ∥ΔW1∥ →P 0. From the definition of W1 we have and hence the inequalities

Now observe that ∥Cn∥ ≤ ∥Δn∥. Apply (7.19) to W′ΔW to get

and hence that ∥Cn∥ ≥ ∥Δn∥ − oP (1). Thus ∥Cn∥ = ∥Δn∥ + oP (1). Inserting these results into (7.20), we obtain

which completes the proof of (7.16), and hence of Proposition 3.

Finally, we record a concentration bound for the uniform distribution on spheres. While more sophisticated results are known [61], an elementary bound suffices for us.

Lemma 8. If U is uniformly distributed on Sn−1 and u ∈ Sn−1 is fixed, then for M > 0 and n ≥ 4,

| (7.23) |

Proof. Since (u′U)² has the Beta(1/2, (n − 1)/2) distribution,

where by Gautschi’s inequality [62, 63, (5.6.4)]

Since (1 − x/m)^m < e^−x for x, m > 0, and 4/n ≥ 2/(n − 2) for n ≥ 4,
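The distributional fact behind Lemma 8, that (u′U)² ~ Beta(1/2, (n − 1)/2) when U is uniform on Sn−1, can be checked by simulation. The sketch below samples U by normalizing standard Gaussian vectors (a standard construction), takes u = e1 without loss of generality, and compares the empirical mean, variance and 0.9-quantile with those of the Beta law. It does not reproduce the bound (7.23) itself.

```python
import numpy as np

rng = np.random.default_rng(2)
n, reps = 10, 200_000
Z = rng.standard_normal((reps, n))
U = Z / np.linalg.norm(Z, axis=1, keepdims=True)   # rows uniform on S^{n-1}
t = U[:, 0] ** 2                                   # (u'U)^2 with u = e_1

a, b = 0.5, (n - 1) / 2.0                          # Beta(1/2, (n-1)/2) parameters
beta_mean = a / (a + b)                            # equals 1/n
beta_var = a * b / ((a + b) ** 2 * (a + b + 1.0))
ref = rng.beta(a, b, size=reps)                    # direct Beta draws for comparison
print(t.mean(), beta_mean, t.var(), beta_var)
print(np.quantile(t, 0.9), np.quantile(ref, 0.9))
```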

8. Optimality Among Equivariant Procedures

The notion of optimality in asymptotic loss, with which we have been concerned so far, is relatively weak. Also, the class of covariance estimators we have considered, namely procedures that apply the same univariate shrinker to all empirical eigenvalues, is fairly restricted.

Consider the much broader class of orthogonally equivariant procedures for covariance estimation [2, 19, 64], in which estimates take the form Σ̂ = VΔV′. Here, Δ = Δ(Λ) is any diagonal matrix that depends on the empirical eigenvalues Λ in a possibly more complex way than the simple scalar elementwise shrinkage η(Λ) we have considered so far. One might imagine that the extra freedom available with more general shrinkage rules would lead to improvements in loss, relative to our optimal scalar nonlinearity; certainly the proposals of [2, 19, 26] are of this more general type.

The smallest achievable loss by any orthogonally equivariant procedure is obtained with the “oracle” procedure Σ̂oracle = VΔoracleV′, where

$$\Delta^{\mathrm{oracle}} = \arg\min_{\Delta} L_p(\Sigma, V\Delta V'), \tag{8.1}$$

the minimum being taken over diagonal matrices with diagonal entries ≥ 1. Clearly, this optimal performance is not attainable, since the minimization problem explicitly demands perfect knowledge of Σ, precisely the object that we aim to recover. This knowledge is never available to us in practice, hence the label oracle³. Nevertheless, this optimal performance is a legitimate benchmark.
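For the Frobenius loss the oracle (8.1) has a closed form, which makes the benchmark concrete. Because V is orthogonal, ∥Σ − VΔV′∥F = ∥V′ΣV − Δ∥F, so the minimization separates over the diagonal and gives Δi = max(vi′Σvi, 1), whether or not the norm is squared. The sketch below, with a hypothetical spiked Σ and helper names of our own, computes this oracle and checks that a few other admissible diagonals do no better.

```python
import numpy as np

def frobenius_oracle_diag(Sigma, V):
    """Oracle Delta for ||Sigma - V Delta V'||_F^2 over diagonal Delta with entries >= 1.
    Since V is orthogonal, the loss equals ||V' Sigma V - Delta||_F^2, which
    separates over the diagonal entries."""
    d = np.einsum("ij,ij->j", V, Sigma @ V)      # d_i = v_i' Sigma v_i
    return np.maximum(d, 1.0)

def frob_loss(Sigma, V, delta):
    return np.linalg.norm(Sigma - (V * delta) @ V.T, "fro") ** 2

rng = np.random.default_rng(3)
p, n = 40, 80
Sigma = np.diag(np.r_[6.0, 3.0, np.ones(p - 2)])   # hypothetical spiked covariance
X = np.sqrt(np.diag(Sigma))[:, None] * rng.standard_normal((p, n))
lam, V = np.linalg.eigh(X @ X.T / n)               # empirical eigenbasis V

oracle = frobenius_oracle_diag(Sigma, V)
oracle_loss = frob_loss(Sigma, V, oracle)
# Other admissible diagonals (entries >= 1) should do at least as badly.
trials = [np.maximum(lam, 1.0), np.ones(p), oracle + rng.uniform(0.0, 0.1, p)]
print(oracle_loss, [frob_loss(Sigma, V, d) for d in trials])
```

The oracle loss is strictly positive here, since the off-diagonal part of V′ΣV cannot be removed by any diagonal Δ.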

Interestingly, at least for the popular Frobenius and Stein losses, our optimal nonlinearities η* deliver oracle-level performance, asymptotically. To state the result, recall expression (6.2) for these losses.

Theorem 2. (Asymptotic optimality among all equivariant procedures.) Let L denote either the direct Frobenius loss LF,1 or the Stein loss Lst. Consider a problem sequence satisfying assumptions [Asy(γ)] and [Spike(ℓ1,…, ℓr)]. We have

where η* is the optimal shrinker for the losses LF,1 or Lst in Table 2.

In short, the shrinker η*(·), which has been designed to minimize the limiting loss, asymptotically delivers the same performance as the oracle procedure, which has the lowest possible loss, in finite n, over the entire class of orthogonally equivariant covariance estimators built from arbitrary high-dimensional shrinkage rules Δ(Λ). Since, by definition, the oracle procedure outperforms every orthogonally equivariant statistical estimator, we conclude that the estimator built from η*, itself orthogonally equivariant, is indeed optimal (in the sense of having the lowest limiting loss) among all orthogonally equivariant procedures. While we only discuss the cases LF,1 and LSt, we suspect that this theorem holds true for many of the 26 loss functions considered.

Proof. We first outline the approach. We can write Σ and Σ−1 in the form I + F , and with

where βk = ℓk − 1 for LF,1 and for LSt and . Write

| (8.2) |

For both L = LF,1 and LSt, we establish a decomposition

| (8.3) |

Here, a is a constant depending only on the loss function,

| (8.4) |

and

| (8.5) |

Decomposition (8.3) shows that the oracle estimator (8.1) may be found term by term, using just univariate minimization over each Δi. Consider the first sum in (8.3), and let denote the summand. We will show that

| (8.6) |

and that

| (8.7) |

Together (8.6) and (8.7) establish the Theorem.

Turning to the details, we begin by showing (8.3). For Frobenius loss, we have from our definitions and (8.2) that

For i ≥ r + 1, each summand in the first sum equals H(bi, Δi), and for i ≤ r, we use the decomposition bi = (ℓi − 1)c(ℓi)² + εi. We obtain decomposition (8.3) with a = −2 and

For Stein’s loss, our definitions yield

Again, for i ≥ r + 1, each summand in the first sum equals H(bi, Δi), and with bi = (ℓi − 1)c(ℓi)² + εi we obtain (8.3) with a = 1 and

It remains to verify (8.6) and (8.7). Theorem 1 says that for 1 ≤ i ≤ r,

which yields (8.6). From (8.5), we observe that in our two cases

| (8.8) |

Now, using (8.2) and (7.22), we get

From the previous two displays, we conclude

which is (8.7), and so completes the full proof.

9. Optimal Shrinkage with common variance σ² ≠ 1

Simply put, the Spiked Covariance Model is a proportional growth independent-variable Gaussian model, where all variables, except the first r, have common variance σ². Literature on the spiked model often simplifies the situation by assuming σ² = 1, as we have done in our assumption [Spike(ℓ1, …, ℓr)] above. To consider optimal shrinkage in the case of general common variance σ² > 0, assumption [Spike(ℓ1, …, ℓr)] has to be replaced by

[Spike(ℓ1, …, ℓr|σ²)] The population eigenvalues in the n-th problem, namely the eigenvalues of Σpn, are given by (ℓ1, …, ℓr, σ², …, σ²), where the number of “spikes” r and their amplitudes ℓ1 > … > ℓr ≥ 1 are fixed independently of n and pn.

In this section we show how to use an optimal shrinker, designed for the spiked model with common variance σ² = 1, in order to construct an optimal shrinker for a general common variance σ², namely, under assumptions [Asy(γ)] and [Spike(ℓ1, …, ℓr|σ²)].

9.1. σ² known

Let Σp and Sn,p be population and sample covariance matrices, respectively, under assumption [Spike(ℓ1, …, ℓr|σ²)]. When the value of σ is known, the matrices Σp/σ² and Sn,p/σ² constitute population and sample covariance matrices, respectively, under assumption [Spike(ℓ1, …, ℓr)]. Let L be any of the loss families considered above and let η be a shrinker. Define the shrinker ησ corresponding to η by

$$\eta_\sigma(\lambda) = \sigma^2\, \eta(\lambda/\sigma^2). \tag{9.1}$$

Observe that for each of the loss families we consider, Lp(σ²A, σ²B) = (σ²)^κ Lp(A, B), where κ ∈ {−2, −1, 0, 1, 2} depends on the family {Lp} alone. Write Σ̂η(S) for the estimator obtained by applying η to the eigenvalues of S. Since Sn,p and Sn,p/σ² share their eigenvectors, Σ̂ησ(Sn,p) = σ² Σ̂η(Sn,p/σ²). Hence

$$L_p\big(\Sigma_p, \hat{\Sigma}_{\eta_\sigma}(S_{n,p})\big) = \sigma^{2\kappa}\, L_p\big(\Sigma_p/\sigma^2, \hat{\Sigma}_{\eta}(S_{n,p}/\sigma^2)\big).$$

It follows that if η* is the optimal shrinker for the loss family L, in the sense of Definition 6, under Assumption [Spike(ℓ1, …, ℓr)], then η*σ is the optimal shrinker for L under Assumption [Spike(ℓ1, …, ℓr|σ²)]. Formula (9.1) therefore allows us to translate each of the optimal shrinkers given above to a corresponding optimal shrinker in the case of a general common variance σ² > 0.
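The rescaling can be illustrated for the operator-norm shrinker of Section 6 and the squared Frobenius loss, for which κ = 2. The sketch reads (9.1) as λ ↦ σ²η(λ/σ²) and checks, on one simulated unit-variance sample rescaled by a hypothetical σ², that the loss on the rescaled problem is exactly (σ²)² times the unit-variance loss. The formula ℓ(λ) = [(λ + 1 − γ) + √((λ + 1 − γ)² − 4λ)]/2 is the standard inverse of λ(ℓ) = ℓ + γℓ/(ℓ − 1), assumed here to match (6.5).

```python
import numpy as np

def op_norm_shrinker(lam, gamma):
    """Unit-variance operator-norm shrinker: ell(lambda) above lambda_+, else 1."""
    lam = np.asarray(lam, dtype=float)
    b = lam + 1.0 - gamma
    ell = (b + np.sqrt(np.maximum(b * b - 4.0 * lam, 0.0))) / 2.0
    return np.where(lam > (1.0 + np.sqrt(gamma)) ** 2, ell, 1.0)

def rescaled(eta, sigma2):
    """Shrinker for noise level sigma2 built from a unit-variance shrinker eta:
    lam -> sigma2 * eta(lam / sigma2)."""
    return lambda lam, gamma: sigma2 * eta(np.asarray(lam, dtype=float) / sigma2, gamma)

def estimate(S, shrinker, gamma):
    """Apply a shrinker to the eigenvalues of S, keeping its eigenvectors."""
    lam, V = np.linalg.eigh(S)
    return (V * shrinker(lam, gamma)) @ V.T

rng = np.random.default_rng(4)
p, n, sigma2 = 50, 100, 2.5
gamma = p / n
Sigma1 = np.diag(np.r_[9.0, 4.0, np.ones(p - 2)])      # hypothetical unit-noise problem
X = np.sqrt(np.diag(Sigma1))[:, None] * rng.standard_normal((p, n))
S1 = X @ X.T / n

loss1 = np.linalg.norm(Sigma1 - estimate(S1, op_norm_shrinker, gamma), "fro") ** 2
# Same data rescaled to noise level sigma2: Sigma = sigma2 * Sigma1, S = sigma2 * S1.
est = estimate(sigma2 * S1, rescaled(op_norm_shrinker, sigma2), gamma)
loss_sigma = np.linalg.norm(sigma2 * Sigma1 - est, "fro") ** 2
print(loss_sigma, sigma2 ** 2 * loss1)    # kappa = 2 for the squared Frobenius loss
```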

9.2. σ² unknown

In practice, even if one is willing to assume a common variance σ² and subscribe to the spiked model, the value of σ² is usually unknown. Assume however that we have a sequence of estimators σ̂²n, where for each n, σ̂²n is a real function of a pn-by-pn positive definite symmetric matrix argument. Assume further that under the spiked model with general common variance σ², namely under assumptions [Asy(γ)] and [Spike(ℓ1, …, ℓr|σ²)], the sequence of estimators is consistent in the sense that σ̂²n(Sn,pn) → σ², almost surely. For a continuous shrinker η, define a sequence of shrinkers by

$$\hat{\eta}_n(\lambda) = \hat{\sigma}_n^2\, \eta\big(\lambda/\hat{\sigma}_n^2\big), \qquad \hat{\sigma}_n^2 = \hat{\sigma}_n^2(S_{n,p_n}). \tag{9.2}$$

Again for each of the loss families we consider, almost surely,

We conclude that, using (9.2), any consistent sequence of estimators yields a sequence of shrinkers with the same asymptotic loss as the optimal shrinker for known σ². In other words, at least inasmuch as the asymptotic loss is concerned, under the spiked model there is no penalty for not knowing σ².

Estimation of σ² under Assumption [Spike(ℓ1, …, ℓr|σ²)] has been considered in [65, 66, 31], where several approaches have been proposed. As an example of a consistent sequence of estimators, we consider the following simple and robust approach based on matching of medians [32]. The underlying idea is that for a given value of n the sample eigenvalues λr+1, …, λpn form an approximate Marčenko-Pastur bulk inflated by σ², and that a median sample eigenvalue is well suited to detect this inflation, as it is asymptotically unaffected by the sample spikes λ1, …, λr.

Define, for a symmetric p-by-p positive definite matrix S with eigenvalues λ1, …, λp, the quantity

$$\hat{\sigma}^2(S) = \frac{\lambda_{\mathrm{med}}}{\mu_\gamma}, \tag{9.3}$$

where λmed is a median of λ1, …, λp and μγ is the median of the Marčenko-Pastur distribution, namely, the unique solution in λ−(γ) ≤ x ≤ λ+(γ) to the equation

$$\int_{\lambda_-(\gamma)}^{x} \frac{\sqrt{(\lambda_+(\gamma) - t)(t - \lambda_-(\gamma))}}{2\pi\gamma t}\, dt = \frac{1}{2},$$

where as before λ±(γ) = (1 ± √γ)². Note that the median μγ is not available analytically but can easily be obtained numerically, for example using remarks on the Marčenko-Pastur cumulative distribution function included in SI. Now for a sequence {Sn,pn} of sample covariance matrices, define the sequence of estimators

| (9.4) |

Lemma 9. Let σ² > 0, and assume [Asy(γ)] and [Spike(ℓ1, …, ℓr|σ²)]. Then the estimators (9.4) converge to σ² almost surely.

In summary, using (9.1) (for σ² known) or (9.2) with (9.4) (for σ² unknown), one can use the optimal shrinkers for each of the loss families discussed above, designed for the case σ = 1, to construct a shrinker that is optimal, for the same loss family, under the spiked model with common variance σ² ≠ 1.
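The median-matching estimate is short to implement. The sketch below computes μγ by midpoint-rule integration of the Marčenko-Pastur density √((λ+ − t)(t − λ−))/(2πγt) on [λ−, λ+] (a numerical choice of ours, not necessarily the method described in SI), assumes γ ∈ (0, 1], and plugs in γ = p/n. The resulting σ̂² can then be fed to a rescaled shrinker as in (9.2). The sizes, spikes and σ² in the usage lines are hypothetical.

```python
import numpy as np

def mp_median(gamma, grid=200_000):
    """Median of the Marchenko-Pastur law with ratio gamma in (0, 1],
    by midpoint-rule integration of its density on [lambda_-, lambda_+]."""
    lo, hi = (1.0 - np.sqrt(gamma)) ** 2, (1.0 + np.sqrt(gamma)) ** 2
    edges = np.linspace(lo, hi, grid + 1)
    mids = 0.5 * (edges[:-1] + edges[1:])          # avoids t = 0 when gamma = 1
    dens = np.sqrt((hi - mids) * (mids - lo)) / (2.0 * np.pi * gamma * mids)
    cdf = np.cumsum(dens * np.diff(edges))
    cdf /= cdf[-1]                                 # absorb discretization error
    return float(np.interp(0.5, cdf, edges[1:]))

def sigma2_hat(S, gamma):
    """Median-matching noise estimate: median sample eigenvalue / mu_gamma."""
    return float(np.median(np.linalg.eigvalsh(S)) / mp_median(gamma))

rng = np.random.default_rng(5)
p, n, sigma2 = 200, 400, 2.5
pop = np.r_[25.0, 12.0, sigma2 * np.ones(p - 2)]   # two spikes over common variance sigma2
X = np.sqrt(pop)[:, None] * rng.standard_normal((p, n))
est = sigma2_hat(X @ X.T / n, p / n)
print(mp_median(0.5), est)
```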

10. Discussion

In this paper, we considered covariance estimation in high dimensions, where the dimension p is comparable to the number of observations n. We chose a fixed-rank principal subspace, and let the dimension of the problem grow large. A different asymptotic framework for covariance estimation would choose a principal subspace whose rank is a fixed fraction of the problem dimension; i.e. the rank of the principal subspace is growing rather than fixed. (In the sibling problem of matrix denoising, compare the “spiked” setup [32, 31, 53] with the “fixed fraction” setup of [67].)

In the fixed fraction framework, some of the underlying phenomena remain qualitatively similar to those governing the spiked model, while new effects appear. Importantly, the relationships used in this paper, predicting the location of the top empirical eigenvalues, as well as the displacement of empirical eigenvectors, in terms of the top theoretical eigenvalues, no longer hold. Instead, a complex nonlinear relation exists between the limiting distribution of the empirical eigenvalues and the limiting distribution of the theoretical eigenvalues, as expressed by the Marčenko-Pastur (MP) relation between their Stieltjes transforms [33, 68].

Covariance shrinkage in the proportional rank model should then, naturally, make use of the so-called MP Equation. Noureddine El Karoui [24] proposed a method for debiasing the empirical eigenvalues, namely, for estimating (in a certain specific sense) their corresponding population eigenvalues; Olivier Ledoit and Sandrine Péché [25] developed analytic tools to also account for the inaccuracy of empirical eigenvectors, and Ledoit and Michael Wolf [26] have implemented such tools and applied them in this setting.

The proportional rank case is indeed subtle and beautiful. Yet, the fixed-rank case deserves to be worked out carefully. In particular, the shrinkers we have obtained here in the fixed-rank case are extremely simple to implement, requiring just a few lines of code in any scientific computing language. In comparison, the covariance estimation ideas of [24, 26], based on powerful and deep insights from MP theory, require a delicate, nontrivial effort to implement in software, and call for expertise in numerical analysis and optimization. As a result, the simple shrinkage rules we propose here may be more likely to be applied correctly in practice, and to work as expected, even in relatively small sample sizes.
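To illustrate how little code is involved, here is a minimal Python sketch of three of the optimal shrinkers and of the resulting estimator Vη(Λ)V′, for unit noise variance and γ = p/n. It assumes the standard spiked-model relations λ(ℓ) = ℓ + γℓ/(ℓ − 1), c²(ℓ) = (1 − γ/(ℓ − 1)²)/(1 + γ/(ℓ − 1)) and s² = 1 − c², which we take to be (1.2) and (1.5). The operator-norm rule η* = ℓ is the debiasing shrinker of Section 6, while the Frobenius (ℓc² + s²) and Stein (ℓ/(c² + ℓs²)) rules are as we recall them from Table 2 and should be checked against it. Function names are hypothetical; this is not the Matlab library of [41].

```python
import numpy as np

def ell_of_lambda(lam, gamma):
    """Inverse of lambda(ell) = ell + gamma*ell/(ell-1), valid for lam > lambda_+."""
    b = lam + 1.0 - gamma
    return (b + np.sqrt(np.maximum(b * b - 4.0 * lam, 0.0))) / 2.0

def c2_of_ell(ell, gamma):
    """Limiting squared cosine between top sample and population eigenvectors."""
    return (1.0 - gamma / (ell - 1.0) ** 2) / (1.0 + gamma / (ell - 1.0))

def optimal_shrinker(lam, gamma, loss="operator"):
    lam = np.atleast_1d(np.asarray(lam, dtype=float))
    out = np.ones_like(lam)                        # bulk eigenvalues are shrunk to 1
    above = lam > (1.0 + np.sqrt(gamma)) ** 2      # lambda > lambda_+(gamma)
    ell = ell_of_lambda(lam[above], gamma)
    c2 = c2_of_ell(ell, gamma)
    s2 = 1.0 - c2
    if loss == "operator":
        out[above] = ell                           # debiasing
    elif loss == "frobenius":
        out[above] = ell * c2 + s2
    elif loss == "stein":
        out[above] = ell / (c2 + ell * s2)
    else:
        raise ValueError(f"unknown loss: {loss!r}")
    return out

def shrinkage_estimate(S, n, loss="operator"):
    """V eta(Lambda) V' for the sample covariance S, with gamma taken as p/n."""
    lam, V = np.linalg.eigh(S)
    return (V * optimal_shrinker(lam, S.shape[0] / n, loss)) @ V.T

gamma = 0.5
lams = np.array([1.0, 2.5, 3.0, 4.0 + 2.0 / 3.0, 12.0])
op, fro, st = (optimal_shrinker(lams, gamma, L) for L in ("operator", "frobenius", "stein"))
print(op, fro, st)
```

A quick sanity check is the ordering ℓ ≥ ℓc² + s² ≥ ℓ/(c² + ℓs²) ≥ 1 above the bulk edge; the middle inequality follows from (ℓc² + s²)(c² + ℓs²) − ℓ = c²s²(ℓ − 1)² ≥ 0.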

An analogy can be made to shrinkage in the normal means problem, for example [69]. In that problem, often a full Bayesian model applies, and in principle a Bayesian shrinkage would provide an optimal result [70]. Yet, in applications one often wants a simple method which is easy to implement correctly, and which is able to deliver much of the benefit of the full Bayesian approach. In literally thousands of cases, simple methods of shrinkage, such as thresholding, have been chosen over the full Bayesian method for precisely that reason.

Supplementary Material

Reproducible Research.

In the code supplement [41] we offer a Matlab software library that includes:

A function to compute, to high precision, the value of each of the 26 optimal shrinkers discussed.

A function to test the correctness of each of the 18 analytic shrinker formulas provided.

Scripts that generate each of the figures in this paper, or subsets of them for specified loss functions.

Acknowledgements.

We thank Amit Singer, Andrea Montanari, Sourav Chatterjee and Boaz Nadler for helpful discussions. We also thank the anonymous referees for significantly improving the manuscript through their helpful comments. This work was partially supported by NSF DMS-0906812 (ARRA). MG was partially supported by a William R. and Sara Hart Kimball Stanford Graduate Fellowship.

Proofs and Additional Results

In the supplementary material [40] we provide proofs omitted from the main text for space considerations and auxiliary lemmas used in various proofs. Notably, we prove Lemma 4, and provide detailed derivations of the 17 explicit formulas for optimal shrinkers, as summarized in Table 2. In addition, in the supplementary material we offer a detailed study of the large-λ asymptotics (asymptotic slope and asymptotic shift) of the optimal shrinkers discovered in this paper, and tabulate the asymptotic behavior of each optimal shrinker. We also study the asymptotic percent improvement of the optimal shrinkers over naive hard thresholding of the sample covariance eigenvalues.

¹ The matrix logarithm transfers the matrices from the Riemannian manifold of symmetric positive definite matrices to its tangent space at A. It can be shown that LF,7 is the squared geodesic distance in this manifold. This metric between covariances has attracted attention, for example, in diffusion tensor MRI [49, 50].

² For simplicity, we chose the QR decomposition to make the sign of s(ℓi) positive.

³ The oracle procedure does not attain zero loss since it is “doomed” to use the eigenbasis of the empirical covariance, which is a random basis corrupted by noise, to estimate the population covariance.

References

- [1].Stein Charles. Some problems in multivariate analysis Technical report, Department of Statistics, Stanford University, 1956. [Google Scholar]

- [2].Stein Charles. Lectures on the theory of estimation of many parameters. Journal of Mathematical Sciences, 34(1):1373–1403, 1986. [Google Scholar]

- [3].James William and Stein Charles. Estimation with quadratic loss. In Proceedings of the fourth Berkeley symposium on mathematical statistics and probability, volume 1, pages 361–379, 1961. [Google Scholar]

- [4].Efron Bradley and Morris Carl. Multivariate empirical bayes and estimation of covariance matrices. The Annals of Statistics, 4(1):pp. 22–32, 1976. [Google Scholar]

- [5].Haff LR. An identity for the wishart distribution with applications. Journal of Multivariate Analysis, 9(4):531–544, 1979. [Google Scholar]

- [6].Haff LR. Empirical bayes estimation of the multivariate normal covariance matrix. The Annals of Statistics, 8(3):586–597, 1980. [Google Scholar]

- [7].Berger James. Estimation in continuous exponential families: Bayesian estimation subject to risk restrictions and inadmissibility results. Statistical Decision Theory and Related Topics III, 1:109–141, 1982. [Google Scholar]

- [8].Haff LR. Estimation of the inverse covariance matrix: Random mixtures of the inverse wishart matrix and the identity. The Annals of Statistics, pages 1264–1276, 1979. [Google Scholar]

- [9].Dey Dipak K and Srinivasan C. Estimation of a covariance matrix under stein’s loss. The Annals of Statistics, pages 1581–1591, 1985. [Google Scholar]

- [10].Sharma Divakar and Krishnamoorthy K. Empirical bayes estimators of normal covariance matrix. Sankhya: The Indian Journal of Statistics, Series A (1961–2002), 47(2):pp. 247–254, 1985. [Google Scholar]

- [11].Sinha BK and Ghosh M. Inadmissibility of the best equivariant estimators of the variance covariance matrix, the precision matrix, and the generalized variance under entropy loss. Statist. Decisions, 5:201–227, 1987. [Google Scholar]

- [12].Kubokawa Tatsuya. Improved estimation of a covariance matrix under quadratic loss. Statistics & Probability Letters, 8(1):69 – 71, 1989. [Google Scholar]

- [13].Krishnamoorthy K and Gupta AK. Improved minimax estimation of a normal precision matrix. Canadian Journal of Statistics, 17(1):91–102, 1989. [Google Scholar]

- [14].Loh Wei-Liem. Estimating covariance matrices. The Annals of Statistics, pages 283–296, 1991. [Google Scholar]

- [15].Krishnamoorthy K and Gupta AK. Improved minimax estimation of a normal precision matrix. Canadian Journal of Statistics, 17(1):91–102, 1989. [Google Scholar]

- [16].Pal N. Estimating the normal dispersion matrix and the precision matrix from a decision theoretic point of view: a review. Statistical Papers, 34(1):1–26, 1993. [Google Scholar]

- [17].Yang Ruoyong and Berger James O. Estimation of a covariance matrix using the reference prior. The Annals of Statistics, pages 1195–1211, 1994. [Google Scholar]

- [18].Gupta AK and Samuel Ofori-Nyarko. Improved minimax estimators of normal covariance and precision matrices. Statistics: A Journal of Theoretical and Applied Statistics, 26(1):19–25, 1995. [Google Scholar]

- [19].Lin SF and D Perlman M. A monte carlo comparison of four estimators for a covariance matrix In Multivariate Analysis VI (Krishnaiah PR, ed.), pages 411–429. North Holland, Amsterdam, 1985. [Google Scholar]

- [20].Daniels Michael J and Kass Robert E. Shrinkage estimators for covariance matrices. Biometrics, 57(4):1173–1184, 2001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [21].Ledoit Olivier and Wolf Michael. A well-conditioned estimator for large-dimensional covariance matrices. Journal of multivariate analysis, 88(2):365–411, 2004. [Google Scholar]

- [22].Sun D and Sun X. Estimation of the multivariate normal precision and covariance matrices in a star-shape model. Annals of the Institute of Statistical Mathematics, 57(3):455–484, 2005. cited By (since 1996)7. [Google Scholar]

- [23].Jianhua Z Huang Naiping Liu, Pourahmadi Mohsen, and Liu Linxu. Covariance matrix selection and estimation via penalised normal likelihood. Biometrika, 93(1):85–98, 2006. [Google Scholar]

- [24].Karoui Noureddine El. Spectrum estimation for large dimensional covariance matrices using random matrix theory. The Annals of Statistics, pages 2757–2790, 2008. [Google Scholar]

- [25].Ledoit Olivier and Sandrine Péché Eigenvectors of some large sample covariance matrix ensembles. Probability Theory and Related Fields, 151(1–2):233–264, 2011. [Google Scholar]

- [26].Ledoit Olivier and Wolf Michael. Nonlinear shrinkage estimation of large-dimensional covariance matrices. The Annals of Statistics, 40(2):1024–1060, 2012. [Google Scholar]

- [27].Fan Jianqing, Fan Yingying, and Lv Jinchi. High dimensional covariance matrix estimation using a factor model. Journal of Econometrics, 147(1):186–197, 2008. [Google Scholar]

- [28].Chen Yilun, Wiesel Ami, Eldar Yonina C, and Hero Alfred O. Shrinkage algorithms for mmse covariance estimation. Signal Processing, IEEE Transactions on, 58(10):5016–5029, 2010. [Google Scholar]

- [29].Won Joong-Ho, Lim Johan, Kim Seung-Jean, and Rajaratnam Bala. Condition-number-regularized covariance estimation. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [30].Johnstone Iain M. On the distribution of the largest eigenvalue in principal components analysis. The Annals of statistics, 29(2):295–327, 2001. [Google Scholar]

- [31].Shabalin Andrey a. and Nobel Andrew B.. Reconstruction of a low-rank matrix in the presence of Gaussian noise. Journal of Multivariate Analysis, 118:67–76, 2013. [Google Scholar]

- [32].Gavish M and Donoho DL. The Optimal Hard Threshold for Singular Values is 4/√3 . IEEE Transactions on Information Theory, 60(8):5040–5053, 2014. [Google Scholar]

- [33].Marčenko Vladimir A and Pastur Leonid Andreevich. Distribution of eigenvalues for some sets of random matrices. Sbornik: Mathematics, 1(4):457–483, 1967.

- [34].Baik Jinho, Ben Arous Gérard, and Péché Sandrine. Phase transition of the largest eigenvalue for nonnull complex sample covariance matrices. Annals of Probability, pages 1643–1697, 2005.

- [35].Baik Jinho and Silverstein Jack W. Eigenvalues of large sample covariance matrices of spiked population models. Journal of Multivariate Analysis, 97(6):1382–1408, 2006.

- [36].Paul Debashis. Asymptotics of sample eigenstructure for a large dimensional spiked covariance model. Statistica Sinica, 17(4):1617, 2007.

- [37].Benaych-Georges Florent and Nadakuditi Raj Rao. The eigenvalues and eigenvectors of finite, low rank perturbations of large random matrices. Advances in Mathematics, 227(1):494–521, 2011.

- [38].Bai Zhidong and Yao Jian-feng. Central limit theorems for eigenvalues in a spiked population model. Annales de l’Institut Henri Poincaré (B) Probability and Statistics, 44(3):447–474, 2008.

- [39].Konno Yoshihiko. On estimation of a matrix of normal means with unknown covariance matrix. Journal of Multivariate Analysis, 36(1):44–55, 1991.

- [40].Donoho David L., Gavish Matan, and Johnstone Iain M. Supplementary Material for “Optimal Shrinkage of Eigenvalues in the Spiked Covariance Model”. http://purl.stanford.edu/xy031gt1574, 2016.

- [41].Donoho David L., Gavish Matan, and Johnstone Iain M. Code supplement to “Optimal Shrinkage of Eigenvalues in the Spiked Covariance Model”. http://purl.stanford.edu/xy031gt1574, 2016.

- [42].Geman Stuart. A limit theorem for the norm of random matrices. Annals of Probability, 8:252–261, 1980.

- [43].Kubokawa Tatsuya and Konno Yoshihiko. Estimating the covariance matrix and the generalized variance under a symmetric loss. Annals of the Institute of Statistical Mathematics, 42(2):331–343, 1990.

- [44].Kailath Thomas. The divergence and Bhattacharyya distance measures in signal selection. Communication Technology, IEEE Transactions on, 15(1):52–60, 1967.

- [45].Matusita Kameo. On the notion of affinity of several distributions and some of its applications. Annals of the Institute of Statistical Mathematics, 19:181–192, 1967.

- [46].Olkin Ingram and Pukelsheim Friedrich. The distance between two random vectors with given dispersion matrices. Linear Algebra and its Applications, 48:257–263, 1982.

- [47].Dowson DC and Landau BV. The Fréchet distance between multivariate normal distributions. Journal of Multivariate Analysis, 12(3):450–455, 1982.

- [48].Selliah Jegadevan Balendran. Estimation and testing problems in a Wishart distribution. Department of Statistics, Stanford University, 1964.

- [49].Lenglet Christophe, Rousson Mikaël, Deriche Rachid, and Faugeras Olivier. Statistics on the manifold of multivariate normal distributions: Theory and application to diffusion tensor MRI processing. Journal of Mathematical Imaging and Vision, 25(3):423–444, 2006.

- [50].Dryden Ian L., Koloydenko Alexey, and Zhou Diwei. Non-Euclidean statistics for covariance matrices, with applications to diffusion tensor imaging. The Annals of Applied Statistics, 3(3):1102–1123, 2009.

- [51].Förstner Wolfgang and Moonen Boudewijn. A metric for covariance matrices. Quo vadis geodesia, pages 113–128, 1999.

- [52].El Karoui Noureddine. Operator norm consistent estimation of large-dimensional sparse covariance matrices. The Annals of Statistics, pages 2717–2756, 2008.

- [53].Gavish M and Donoho DL. Optimal Shrinkage of Singular Values. ArXiv 1405.7511, 2016.

- [54].van der Vaart HR. On certain characteristics of the distribution of the latent roots of a symmetric random matrix under general conditions. The Annals of Mathematical Statistics, 32(3):864–873, 1961.

- [55].Cacoullos Theophilos and Olkin Ingram. On the bias of functions of characteristic roots of a random matrix. Biometrika, 52(1/2):87–94, 1965.

- [56].James AT. Normal multivariate analysis and the orthogonal group. Annals of Mathematical Statistics, 25(1):40–75, 1954.

- [57].Bhatia Rajendra. Matrix analysis, volume 169 of Graduate Texts in Mathematics. Springer-Verlag, New York, 1997.

- [58].Johnstone Iain M. Tail sums of Wishart and GUE eigenvalues beyond the bulk edge. Australian and New Zealand Journal of Statistics, 2017. To appear.

- [59].Tracy CA and Widom H. On orthogonal and symplectic matrix ensembles. Comm Math Phys, 177:727–754, 1996.

- [60].Benaych-Georges F, Guionnet A, and Maida M. Fluctuations of the extreme eigenvalues of finite rank deformations of random matrices. Electron. J. Probab, 16:no. 60, 1621–1662, 2011.

- [61].Ledoux Michel. The concentration of measure phenomenon. Number 89. American Mathematical Society, 2001.

- [62].NIST Digital Library of Mathematical Functions. http://dlmf.nist.gov/, Release 1.0.9 of 2014-08-29. Online companion to [63].

- [63].Olver FWJ, Lozier DW, Boisvert RF, and Clark CW, editors. NIST Handbook of Mathematical Functions. Cambridge University Press, New York, NY, 2010. Print companion to [62].

- [64].Muirhead Robb J. Developments in eigenvalue estimation. In Advances in Multivariate Statistical Analysis, pages 277–288. Springer, 1987.

- [65].Kritchman Shira and Nadler Boaz. Non-parametric detection of the number of signals: Hypothesis testing and random matrix theory. Signal Processing, IEEE Transactions on, 57(10):3930–3941, 2009.

- [66].Passemier Damien and Yao Jian-feng. Variance estimation and goodness-of-fit test in a high-dimensional strict factor model. arXiv:1308.3890, 2013.

- [67].Donoho DL and Gavish M. Minimax Risk of Matrix Denoising by Singular Value Thresholding. ArXiv e-prints, 2013.

- [68].Bai Zhidong and Silverstein Jack W. Spectral analysis of large dimensional random matrices. Springer, 2010.

- [69].Donoho David L. and Johnstone Iain M. Minimax risk over ℓp-balls for ℓp-error. Probability Theory and Related Fields, 99(2):277–303, 1994.

- [70].Brown Lawrence D. and Greenshtein Eitan. Nonparametric empirical Bayes and compound decision approaches to estimation of a high-dimensional vector of normal means. The Annals of Statistics, 37(4):1685–1704, 2009.
