Abstract

Background

Congress, veterans’ groups, and the press have expressed concerns that access to care and quality of care in Department of Veterans Affairs (VA) settings are inferior to access and quality in non-VA settings.

Objective

To assess quality of outpatient and inpatient care in VA at the national level and facility level and to compare performance between VA and non-VA settings using recent performance measure data.

Main Measures

We assessed Patient Safety Indicators (PSIs), 30-day risk-standardized mortality and readmission measures, and ORYX measures for inpatient safety and effectiveness; Healthcare Effectiveness Data and Information Set (HEDIS®) measures for outpatient effectiveness; and Consumer Assessment of Healthcare Providers and Systems Hospital Survey (HCAHPS) and Survey of Healthcare Experiences of Patients (SHEP) survey measures for inpatient patient-centeredness. For inpatient care, we used propensity score matching to identify a subset of non-VA hospitals that were comparable to VA hospitals.

Key Results

VA hospitals performed on average the same as or significantly better than non-VA hospitals on all six measures of inpatient safety, all three inpatient mortality measures, and 12 inpatient effectiveness measures, but significantly worse than non-VA hospitals on three readmission measures and two effectiveness measures. The performance of VA facilities was significantly better than that of commercial HMOs and Medicaid HMOs for all 16 outpatient effectiveness measures; compared with Medicare HMOs, VA performance was significantly better for 14 measures and did not differ for two. Performance on some quality measures varied widely across VA facilities, although variation was even greater among non-VA facilities.

Conclusions

The VA system performed similarly to or better than the non-VA system on most of the nationally recognized measures of inpatient and outpatient care quality, but high variation across VA facilities indicates a need for targeted quality improvement.

KEY WORDS: veterans, Veterans Affairs, Veterans Health Administration, quality

INTRODUCTION

The Veterans Health Administration in the Department of Veterans Affairs (VA), the nation’s largest integrated healthcare system, offers comprehensive healthcare services to eligible U.S. military veterans who enroll. Congress, veterans’ groups, and the press have expressed concerns that access to care and quality of care in VA settings are inferior to access and quality in non-VA settings (Veterans Access, Choice, and Accountability Act of 2014, Public Law 113–146).1, 2

Previous studies have found that quality of care provided in VA settings compares favorably to non-VA care systems.3–7 The most recent systematic review of the published literature found that the VA often, but not always, performed better than or similarly to other systems of care with regard to the safety and effectiveness of care.8 Similarly, a recent study comparing commonly reported measures in VA and non-VA hospitals found that VA hospitals performed better than or the same as non-VA hospitals with regard to patient safety, better than non-VA hospitals with regard to mortality and readmissions, and showed mixed performance compared with non-VA hospitals on measures of patient care experiences and behavioral health.9

Building upon this prior work, we assessed quality of both outpatient and inpatient care in VA settings using nationally recognized performance measures from 2013 to 2014. We examined VA’s performance at the national level, assessed variation in performance across VA facilities, and compared performance in VA and non-VA settings in the USA.

METHODS

We analyzed quality measures related to inpatient and outpatient care, comparing VA and non-VA settings. For inpatient measures, we used propensity score matching to identify a subset of non-VA facilities with characteristics similar to those of VA facilities. For outpatient measures, we compared VA facility performance rates with rates for health plans reported in the National Committee for Quality Assurance (NCQA) State of Health Care Quality Report.10

Quality Measures and Data Sources

To select VA quality measures for inpatient and outpatient settings, we prioritized quality measures that reflect national standards, are reported for non-VA settings by national performance measurement programs (allowing for comparisons), and address safety, effectiveness, and patient-centeredness of care, three of the six domains of quality of care outlined by the Institute of Medicine.11 VA and non-VA performance measure data were not available to us for comparing the remaining three domains: timeliness, efficiency, and equity.

Inpatient Measures

To assess patient safety in the inpatient setting, we used Patient Safety Indicators (PSIs) developed by the Agency for Healthcare Research and Quality (AHRQ) to monitor adverse events and complications of care that may occur in the hospital,12 and 30-day risk-standardized mortality and readmission measures developed by the Centers for Medicare & Medicaid Services (CMS) in conjunction with the Hospital Quality Alliance,13 both for the fourth quarter of fiscal year 2014. Complete descriptions of the standard risk adjustments for the 2014 mortality and readmission measures are published elsewhere.14, 15

To assess effectiveness of care in the inpatient setting, we used ORYX measures (also known as the National Hospital Quality Measures) developed by the Joint Commission16 for the fourth quarter of fiscal year 2014.

To assess patient-centeredness of health care received in VA inpatient settings, we used measures from the VA Survey of Healthcare Experiences of Patients (SHEP) for fiscal year 2014.17 We compared VA measures from the SHEP surveys with measures for the fourth quarter of 2014 from the Consumer Assessment of Healthcare Providers and Systems (CAHPS) Hospital Survey (HCAHPS); measures on the inpatient SHEP parallel those of HCAHPS. VA inpatient SHEP data were adjusted for case mix using CMS’s recommended HCAHPS case-mix adjustment for Hospital Compare.

For non-VA facilities, data for all inpatient quality measures were downloaded from the CMS Hospital Compare website for the fourth quarter of fiscal year 2014. For VA facilities, data for inpatient quality measures were obtained from three sources. Risk-standardized readmission and mortality rates and ORYX measures were downloaded from the CMS Hospital Compare website for the fourth quarter of fiscal year 2014. AHRQ PSIs were provided by the VA Inpatient Evaluation Center for calendar year 2014. SHEP measures were provided by the VA Office of Performance Measurement for fiscal year 2014.

Outpatient Measures

To assess effectiveness of care in the outpatient setting, we analyzed 16 measures in the Healthcare Effectiveness Data and Information Set (HEDIS®) developed by the National Committee for Quality Assurance that are reported both by VA facilities and by NCQA for health plans nationwide.10 Rates of outpatient effectiveness for VA facilities were compared with rates for three non-VA groups: commercial health maintenance organizations (HMOs), Medicare HMOs, and Medicaid HMOs. The category of HMOs included HMOs, HMO/Point of Service (POS) combined, HMO/Preferred Provider Organization (PPO)/POS combined, HMO/PPO combined, and POS. HEDIS measure data were provided by the VA Office of Performance Measurement for 140 VA facilities providing outpatient care in fiscal year 2013. For non-VA care, HEDIS measure rates were extracted from an NCQA published report for 814 HMO plans during calendar year 2013.10 As there is no nationally representative source of non-VA outpatient patient experience data, we did not compare patient experiences of outpatient care in VA and non-VA facilities. Complete specifications for all measures are available from the authors upon request.

Analysis

Identifying Non-VA Hospitals

To ensure that inpatient measure performance was compared across similar hospitals, we identified a subset of non-VA hospitals with characteristics similar to those of VA hospitals. To do so, we conducted propensity-score matching, in which a facility’s propensity score is its predicted probability of being a VA facility given a set of facility characteristics (covariates). Hospital characteristics were obtained from the 2014 American Hospital Association (AHA) dataset,18 which includes facility-level characteristics for 135 VA facilities and 6332 non-VA facilities. Seven of the 135 VA facilities in the AHA database could not be linked to the CMS Hospital Compare file and were therefore not included in the analysis of CMS Hospital Compare measures. For matching VA hospitals to non-VA hospitals, we selected four facility characteristics shown to be predictive of performance on Hospital Compare measures19: bed size (< 100 beds, 100–199 beds, and 200+ beds), census division (East North Central, East South Central, Mid-Atlantic, Mountain, New England, Other, Pacific, South Atlantic, West North Central, and West South Central), location (urban, rural), and teaching status (teaching facility, nonteaching facility). A facility was categorized as urban if it was located inside a Metropolitan Statistical Area (MSA) and rural if it was outside an MSA.18 Teaching facilities included major and minor teaching hospitals: a major teaching hospital has a Council of Teaching Hospitals designation, and a minor teaching hospital has another teaching hospital designation. Facilities without a teaching hospital designation were classified as nonteaching facilities. Next, we fit a logistic regression model to compute a propensity score for each facility and matched non-VA facilities to VA facilities based on these scores.
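As an illustration of the matching step (three non-VA facilities per VA facility within the 0.0009 caliper described in the next paragraph), the Python sketch below performs greedy nearest-neighbor matching without replacement. It assumes the propensity scores have already been estimated by the logistic regression. The function name, the facility identifiers, and the scores are hypothetical, and the greedy, without-replacement design is our assumption rather than a documented detail of the study’s procedure.

```python
from typing import Dict, List


def match_three_to_one(
    va_scores: Dict[str, float],
    non_va_scores: Dict[str, float],
    ratio: int = 3,
    caliper: float = 0.0009,
) -> Dict[str, List[str]]:
    """Greedy nearest-neighbor matching on propensity scores, without replacement.

    Assumption: VA facilities are processed in sorted-ID order, and each takes the
    `ratio` closest unused non-VA facilities whose scores lie within `caliper`.
    A VA facility receives fewer than `ratio` matches if too few lie in range.
    """
    available = dict(non_va_scores)  # non-VA facilities not yet matched
    matches: Dict[str, List[str]] = {}
    for va_id in sorted(va_scores):
        p = va_scores[va_id]
        # (distance, id) pairs inside the caliper; ties broken by facility ID.
        candidates = sorted(
            (abs(p - q), non_va_id)
            for non_va_id, q in available.items()
            if abs(p - q) <= caliper
        )
        chosen = [non_va_id for _, non_va_id in candidates[:ratio]]
        for non_va_id in chosen:
            del available[non_va_id]  # each non-VA facility is used at most once
        matches[va_id] = chosen
    return matches


# Hypothetical propensity scores, for illustration only.
va = {"va1": 0.1000, "va2": 0.5000}
non_va = {"a": 0.1001, "b": 0.0995, "c": 0.1008, "d": 0.1020, "e": 0.1003,
          "f": 0.5004, "g": 0.5200}
print(match_three_to_one(va, non_va))
```

Here the call prints `{'va1': ['a', 'e', 'b'], 'va2': ['f']}`: facility "d" falls outside the caliper for "va1", and only one candidate lies close enough to "va2". Because greedy matching depends on processing order, an optimal-matching algorithm could select a somewhat different comparison set.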
Three non-VA facilities were matched to each VA facility, with a maximum allowable absolute difference between propensity scores (caliper) of 0.0009. There were no significant differences between VA and the matched non-VA facilities for any characteristic in the model, indicating that the two sets were well matched. We analyzed measures for which data were available both for VA hospitals and for the non-VA comparison hospitals. If a VA hospital had a missing value for a measure, the non-VA hospitals matched to that hospital were excluded from the analysis of that measure. If one of the matched non-VA hospitals had a missing value for a measure, the remaining two were “up-weighted” by a factor of 3/2 (1.5); if two had missing values, the remaining hospital was up-weighted by a factor of 3. This ensures that every VA hospital has the equivalent of three matched non-VA hospitals for each measure, and all analyses account for these weights. The number of VA and non-VA hospitals in the analysis also varies because of CMS reporting criteria.20 Performance data may be missing for a hospital if the number of cases did not meet the minimum required for public reporting, the measure had inadequate reliability, protection of personal health information could not be assured, there were no data for the hospital, or the hospital had no patients who met the inclusion criteria for the measure.
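The up-weighting rule amounts to a weight of 3/k on each of the k matched non-VA hospitals that report a measure, so every VA hospital contributes the equivalent of three comparators. The Python sketch below applies that rule to compute a weighted non-VA mean for one measure. The data layout and function names are hypothetical, skipping a VA hospital whose three matches are all missing is our assumption, and the numbers are illustrative only.

```python
from typing import List, Optional, Tuple


def match_weight(n_present: int, ratio: int = 3) -> float:
    """Weight for each non-missing matched hospital: 1, 1.5, or 3 when ratio == 3."""
    return ratio / n_present


def weighted_non_va_mean(
    groups: List[Tuple[Optional[float], List[Optional[float]]]], ratio: int = 3
) -> float:
    """Weighted non-VA mean for one measure.

    Each group is (VA hospital value, values of its matched non-VA hospitals);
    None marks a missing value.
    """
    total = 0.0
    weight_sum = 0.0
    for va_value, matched in groups:
        if va_value is None:
            continue  # VA value missing: its matched hospitals are excluded
        present = [v for v in matched if v is not None]
        if not present:
            continue  # assumption: no usable comparator for this VA hospital
        w = match_weight(len(present), ratio)
        total += w * sum(present)
        weight_sum += w * len(present)
    if weight_sum == 0:
        raise ValueError("no usable matched non-VA values")
    return total / weight_sum


# Hypothetical measure values for four VA hospitals and their matches.
groups = [
    (0.9, [1.0, 2.0, 3.0]),          # all three matches present: weight 1 each
    (0.7, [None, 5.0, 7.0]),         # one missing: weight 1.5 each
    (0.5, [4.0, None, None]),        # two missing: weight 3
    (None, [100.0, 100.0, 100.0]),   # VA value missing: group excluded
]
print(weighted_non_va_mean(groups))
```

The call prints `4.0`: each included VA hospital contributes a total weight of 3, so the result equals the average, across VA hospitals, of the mean of each hospital's available matches.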

Comparing VA and Non-VA Performance

For both inpatient and outpatient measures, national measure performance rates were calculated as unweighted means of the facility-level rates. We tested the statistical significance of the difference between each pair of VA and non-VA means using a t test and report the resulting P values. Standard deviations were not available for non-VA rates for HEDIS® measures; therefore, the VA standard deviation for each measure was used in the t test.
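One way to read the HEDIS comparison is as a two-sample t statistic in which the VA standard deviation stands in for both groups. The Python sketch below assumes that form (the text does not specify the exact variant) and approximates the two-sided P value with the normal distribution, which is reasonable only when the combined sample is large. The function name and the inputs in the usage example are hypothetical.

```python
import math
from typing import Tuple


def t_test_with_va_sd(mean_va: float, mean_other: float, sd_va: float,
                      n_va: int, n_other: int) -> Tuple[float, float]:
    """Return (t, approximate two-sided P) using the VA SD for both groups."""
    # Standard error of the difference in means under a common SD.
    se = sd_va * math.sqrt(1.0 / n_va + 1.0 / n_other)
    t = (mean_va - mean_other) / se
    # Normal approximation to the t distribution (large degrees of freedom).
    p_two_sided = math.erfc(abs(t) / math.sqrt(2.0))
    return t, p_two_sided


# Hypothetical inputs: means differ by 2 points, SD 10, 100 units per group.
t, p = t_test_with_va_sd(52.0, 50.0, 10.0, 100, 100)
print(round(t, 3), round(p, 3))
```

This prints `1.414 0.157`. With a statistics library available, an exact P value could instead be taken from the t distribution with n_va + n_other − 2 degrees of freedom, for example `2 * scipy.stats.t.sf(abs(t), df)`.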

RESULTS

Inpatient Care

For inpatient quality measures, we included 135 VA hospitals in the CMS Hospital Compare database and used 3-to-1 propensity-score matching to identify 402 non-VA hospitals; three of the 405 potential matches could not be made because of missing values. None of the characteristics (bed size, census division, location, and teaching status) differed significantly between the VA hospitals and the matched set of non-VA hospitals (Table 1).

Table 1.

Comparison of Characteristics of VA Hospitals and Matched Hospitals Outside VA, 2014

| Variable | VA facilities (%, N = 135) | Non-VA facilities* (%, N = 402) | P value |
|---|---|---|---|
| Bed size* | | | |
| < 100 beds | 28.2 | 28.1 | 0.993 |
| 100–199 beds | 48.9 | 48.3 | 0.899 |
| 200+ beds | 23.0 | 23.6 | 0.874 |
| Census division | | | |
| East North Central | 14.1 | 13.2 | 0.793 |
| East South Central | 7.4 | 7.5 | 0.983 |
| Mid-Atlantic | 14.1 | 14.2 | 0.976 |
| Mountain | 9.6 | 9.5 | 0.952 |
| New England | 6.7 | 6.5 | 0.936 |
| Other | 1.5 | 1.5 | 0.993 |
| Pacific | 8.9 | 10.0 | 0.719 |
| South Atlantic | 17.8 | 17.9 | 0.972 |
| West North Central | 11.1 | 11.0 | 0.958 |
| West South Central | 8.9 | 9.0 | 0.981 |
| Teaching hospital* | 81.5 | 81.6 | 0.977 |
| Urban* | 85.2 | 86.6 | 0.688 |

*For definitions and more explanation, see the “Methods” section

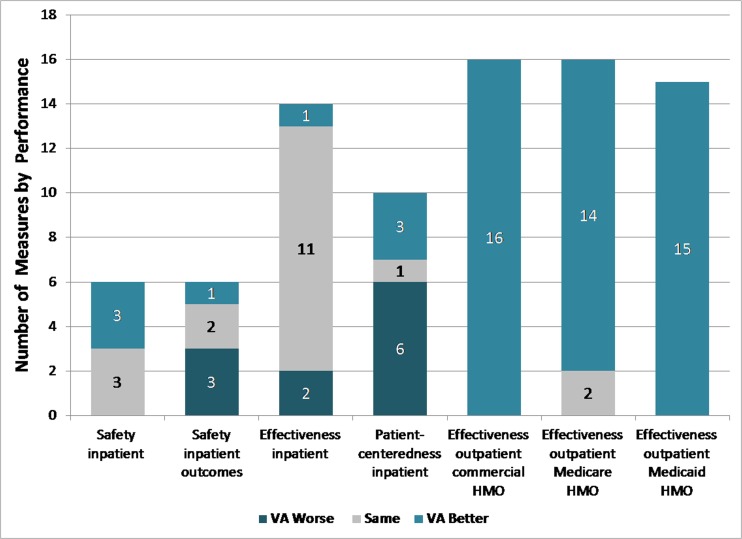

On quality measures related to inpatient care, VA hospitals performed on average the same as or significantly better than non-VA hospitals on all six measures of inpatient safety, all three inpatient mortality measures, and 12 inpatient effectiveness measures, but significantly worse than non-VA hospitals on three readmission measures and two effectiveness measures (Table 2). Compared with patient-reported experiences in non-VA hospitals, veteran-reported experiences of care in VA hospitals were, on average, significantly worse on six of ten measures and significantly better on three (Table 2). The largest difference in which VA inpatient performance was significantly lower was for the patient experience measure of pain management (6.6 percentage points; P < 0.001). The largest difference in which VA inpatient performance was significantly higher was for the patient experience measure of care transition (10.4 percentage points; P < 0.001). For reference, differences of 1, 3, and 5 percentage points are sometimes described as small, medium, and large, respectively, for CAHPS measures.21 Figure 1 summarizes how VA performance compares with non-VA performance on the inpatient and outpatient measures in Tables 2 and 3, respectively.
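To make the percentage-point differences and the 1/3/5-point benchmarks concrete, the Python sketch below computes VA minus non-VA differences for several patient experience measures in Table 2 and labels their magnitude. Treating each benchmark as a lower bound (any difference of at least 5 points counts as large, and so on) is our assumption about how the convention applies; the function names are hypothetical.

```python
from typing import Dict, Tuple

# Patient experience means from Table 2: (VA hospitals, matched non-VA hospitals).
EXPERIENCE: Dict[str, Tuple[float, float]] = {
    "Communication with nurses": (74.1, 77.8),
    "Communication about medicine": (65.1, 63.0),
    "Discharge information": (85.9, 85.8),
    "Pain management": (63.3, 69.9),
    "Care transition": (53.7, 43.3),
}


def difference_pp(va: float, non_va: float) -> float:
    """VA minus non-VA, in percentage points, rounded to one decimal."""
    return round(va - non_va, 1)


def cahps_magnitude(diff_pp: float) -> str:
    """Label |difference| with 1, 3, and 5 points as assumed lower bounds."""
    size = abs(diff_pp)
    if size >= 5:
        return "large"
    if size >= 3:
        return "medium"
    if size >= 1:
        return "small"
    return "below small"


for name, (va_rate, non_va_rate) in EXPERIENCE.items():
    d = difference_pp(va_rate, non_va_rate)
    print(f"{name}: {d:+.1f} points ({cahps_magnitude(d)})")
```

Magnitude labels are separate from statistical significance: the discharge information difference, for example, was not significant (P = 0.852).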

Table 2.

Inpatient Quality Measures: Comparison of Performance of VA Hospitals and Matched Hospitals Outside VA, Fiscal Year 2014, Quarter 4

| Measure name | VA hospitals*: number | VA hospitals*: mean | Matched hospitals outside VA system†: number | Matched hospitals outside VA system†: mean | P value‡ |
|---|---|---|---|---|---|
| Safety: patient safety indicators | | | | | |
| Composite of serious complication indicators (observed to expected)§ | 118 | 0.9 | 316 | 0.9 | 0.588 |
| In-hospital deaths per 1000 surgical discharges with serious treatable complications§ | 81 | 100.6 | 191 | 118.0 | < 0.001 |
| Iatrogenic pneumothorax§ | 117 | 0.4 | 311 | 0.4 | 0.177 |
| Postoperative pulmonary embolism or deep vein thrombosis rate§ | 111 | 3.3 | 286 | 4.6 | < 0.001 |
| Postoperative wound dehiscence§ | 100 | 1.7 | 258 | 1.9 | 0.354 |
| Accidental puncture or laceration§ | 117 | 1.7 | 311 | 2.0 | 0.002 |
| Safety: 30-day risk-standardized readmission rate | | | | | |
| Acute myocardial infarction 30-day readmission rate§ | 73 | 18.6 | 178 | 17.8 | < 0.001 |
| Heart failure 30-day readmission rate§ | 115 | 23.4 | 319 | 22.6 | < 0.001 |
| Pneumonia 30-day readmission rate§ | 117 | 18.1 | 323 | 17.5 | < 0.001 |
| Safety: 30-day risk-standardized mortality rate | | | | | |
| Acute myocardial infarction 30-day mortality rate§ | 80 | 14.3 | 201 | 14.7 | 0.066 |
| Heart failure 30-day mortality rate§ | 114 | 11.0 | 310 | 11.8 | < 0.001 |
| Pneumonia 30-day mortality rate§ | 117 | 11.6 | 323 | 11.7 | 0.482 |
| Effectiveness: ORYX measures | | | | | |
| Aspirin prescribed at discharge | 64 | 99.6 | 156 | 98.9 | 0.055 |
| Statin prescribed at discharge | 64 | 99.0 | 156 | 97.8 | 0.088 |
| Discharge instructions | 112 | 95.8 | 304 | 94.5 | 0.213 |
| Evaluation of left ventricular systolic (LVS) function | 115 | 99.8 | 315 | 98.5 | 0.043 |
| Angiotensin converting enzyme inhibitor (ACEI) or angiotensin receptor blockers (ARB) for left ventricular systolic dysfunction (LVSD) | 102 | 96.3 | 264 | 96.8 | 0.427 |
| Initial antibiotic for community-acquired pneumonia (CAP) in immunocompetent patient | 114 | 94.8 | 313 | 95.4 | 0.396 |
| Effectiveness: surgical care improvement project measures | | | | | |
| Prophylactic antibiotic received within 1 h prior to surgical incision | 96 | 96.3 | 266 | 98.5 | < 0.001 |
| Prophylactic antibiotics discontinued within 24 h after surgery end time | 96 | 97.1 | 266 | 97.8 | 0.113 |
| Surgery patients who received appropriate venous thromboembolism prophylaxis within 24 h prior to surgery to 24 h after surgery | 96 | 98.1 | 268 | 98.5 | 0.127 |
| Surgery patients on beta-blocker therapy prior to arrival who received a beta-blocker during the perioperative period | 92 | 95.9 | 251 | 96.8 | 0.460 |
| Prophylactic antibiotic selection for surgical patients | 96 | 98.2 | 266 | 98.8 | 0.059 |
| Cardiac surgery patients with controlled 6 a.m. postoperative blood glucose | 28 | 92.6 | 57 | 92.1 | 0.791 |
| Urinary catheter removed on postoperative day 1 (POD 1) or postoperative day 2 (POD 2) with day of surgery being day zero | 93 | 98.1 | 259 | 97.4 | 0.173 |
| Surgery patients with perioperative temperature management | 93 | 99.1 | 261 | 99.8 | < 0.001 |
| Patient experience measures | | | | | |
| Communication with nurses | 114 | 74.1 | 321 | 77.8 | < 0.001 |
| Communication with doctors | 114 | 77.1 | 321 | 80.3 | < 0.001 |
| Communication about medicine | 110 | 65.1 | 309 | 63.0 | 0.001 |
| Responsiveness of hospital staff | 109 | 63.0 | 306 | 64.8 | 0.024 |
| Discharge information | 113 | 85.9 | 318 | 85.8 | 0.852 |
| Pain management | 108 | 63.3 | 304 | 69.9 | < 0.001 |
| Care transition | 114 | 53.7 | 320 | 43.3 | < 0.001 |
| Cleanliness of hospital environment | 114 | 72.8 | 321 | 71.2 | 0.031 |
| Quietness of hospital environment | 114 | 55.4 | 321 | 58.9 | < 0.001 |
| Overall rating of hospital | 114 | 67.1 | 321 | 70.3 | < 0.001 |

*Rates in this column represent mean performance of VA hospitals in the fourth quarter of fiscal year 2014. Means are not weighted for the size of the eligible population and, therefore, may differ from national measure rates in VA publications, which are based on patient-level data

†Rates in this column represent performance of matched non-VA hospitals in the fourth quarter of fiscal year 2014. Means are not weighted for the size of the eligible population

‡P value based on a t test

§For this measure, lower rates are better

Figure 1.

VA compared to non-VA quality of care, by type of measure and setting. Source: RAND summary of results of VA to non-VA comparisons. Notes: Categories are defined on the basis of statistical tests for difference in means with P < 0.05 or less: VA better = VA quality of care shown to be better than non-VA; same = quality of care in VA and non-VA did not differ; VA worse = VA quality of care was shown to be worse than non-VA. Non-VA comparison data were not available for outpatient measures of patient-centeredness.

Table 3.

Outpatient Quality Measures: Comparison of Performance of Veterans Affairs (VA) Facilities and Commercial, Medicare, and Medicaid HMOs, 2013

| Measure | VA facilities* | Commercial HMOs†, ‡ | Medicare HMOs†, § | Medicaid HMOs†, ‡ |
|---|---|---|---|---|
| Preventive care | | | | |
| Tobacco use: advising smokers and tobacco users to quit | 95.9 | 77.3 | 84.6 | 75.8 |
| Breast cancer screening (50–74 years) | 86.6 | 74.3 | 71.3 | 57.9 |
| Colorectal cancer screening (50–75 years) | 81.4 | 63.3 | 64.3 | NA |
| Diabetes | | | | |
| Eye examination | 90.0 | 55.7 | 68.5 | 53.6 |
| Measurement of hemoglobin A1c (HbA1c) | 98.5 | 89.9 | 92.3 | 83.8 |
| Low-density lipoprotein (LDL) cholesterol screening | 97.1 | 84.9 | 88.9 | 76.0 |
| Medical attention for nephropathy | 95.3 | 84.5 | 91.1 | 79.0 |
| Poor glycemic control (HbA1c > 9) ‖ | 19.0 | 30.5 | 25.3 | 45.6 |
| Blood pressure control (< 140/90 mmHg) | 78.9 | 65.0 | 65.6 | 60.4 |
| LDL cholesterol control (< 100 mg/dL) | 68.2 | 46.7 | 53.8 | 33.9 |
| Hypertension | | | | |
| Controlling high blood pressure (diagnosis of hypertension, 18–85 years and < 140/90 mmHg) | 76.1 | 64.4 | 65.5 | 56.5 |
| Cardiovascular conditions | | | | |
| Persistence of beta-blocker treatment after acute myocardial infarction | 91.7 | 83.9 | 90.0 | 84.2 |
| LDL cholesterol screening | 96.0 | 86.7 | 89.6 | 81.1 |
| LDL cholesterol control (< 100 mg/dL) | 69.7 | 57.5 | 58.6 | 40.5 |
| Depression | | | | |
| Antidepressant medication management: acute phase | 70.3 | 64.4 | 66.8 | 50.5 |
| Antidepressant medication management: continuation phase | 53.6 | 47.4 | 53.3 | 35.2 |

NA, rate not reported for this subgroup

*Rates in this column represent mean performance in fiscal year 2013 of 140 VA facilities, with the exception of “Persistence of beta-blocker treatment after acute myocardial infarction” which is based on 134 VA facilities. Means are not weighted for the size of the eligible population and, therefore, may differ from national measure rates in VA publications, which are based on patient-level data

†Rates in this column represent performance in calendar year 2013 of HMO plans, including HMOs, HMO/POS combined, HMO/PPO/POS combined, HMO/PPO combined, and POS. Means are not weighted for the size of the eligible population

‡Differences between all rates in this column and VA rates are statistically significant at P < 0.001

§Differences between rates in this column and VA rates are statistically significant at P < 0.001, except for those in italics which are not significant at P < 0.05

‖For this measure, lower rates are better

Outpatient Care

The performance of VA facilities was significantly better than that of commercial HMOs for all 16 HEDIS® measures and than that of Medicaid HMOs for all 15 measures with a reported Medicaid rate; compared with Medicare HMOs, VA performance was significantly better for 14 measures and did not differ for two (Table 3). The smallest difference between VA and commercial HMOs was in the rate of antidepressant medication management during the acute phase (5.9 percentage points; P < 0.001), and the largest was in the rate of eye examinations for patients with diabetes (34.3 percentage points; P < 0.001). The smallest difference between VA and Medicare HMOs was in the rate of antidepressant medication management during the continuation phase (0.3 percentage points; not significant), and the largest was in the rate of eye examinations for patients with diabetes (21.5 percentage points; P < 0.001). The smallest difference between VA and Medicaid HMOs was in the rate of persistence of beta-blocker treatment after acute myocardial infarction (7.5 percentage points; P < 0.001), and the largest was in the rate of eye examinations for patients with diabetes (36.4 percentage points; P < 0.001).
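The smallest and largest differences reported above can be reproduced from the rates in Table 3. In the Python sketch below, the VA advantage is VA minus comparator for measures where higher is better and comparator minus VA for poor glycemic control, where lower is better; measures with an NA rate are skipped. The shortened measure labels and function names are ours.

```python
from typing import Dict, List, Optional, Tuple

# Rates from Table 3: (VA, commercial HMO, Medicare HMO, Medicaid HMO); None = NA.
RATES: Dict[str, Tuple[float, float, float, Optional[float]]] = {
    "Tobacco use: advising to quit": (95.9, 77.3, 84.6, 75.8),
    "Breast cancer screening": (86.6, 74.3, 71.3, 57.9),
    "Colorectal cancer screening": (81.4, 63.3, 64.3, None),
    "Diabetes: eye examination": (90.0, 55.7, 68.5, 53.6),
    "Diabetes: HbA1c measurement": (98.5, 89.9, 92.3, 83.8),
    "Diabetes: LDL cholesterol screening": (97.1, 84.9, 88.9, 76.0),
    "Diabetes: medical attention for nephropathy": (95.3, 84.5, 91.1, 79.0),
    "Diabetes: poor glycemic control (HbA1c > 9)": (19.0, 30.5, 25.3, 45.6),
    "Diabetes: blood pressure control": (78.9, 65.0, 65.6, 60.4),
    "Diabetes: LDL cholesterol control": (68.2, 46.7, 53.8, 33.9),
    "Hypertension: controlling high blood pressure": (76.1, 64.4, 65.5, 56.5),
    "Cardiovascular: beta-blocker persistence after AMI": (91.7, 83.9, 90.0, 84.2),
    "Cardiovascular: LDL cholesterol screening": (96.0, 86.7, 89.6, 81.1),
    "Cardiovascular: LDL cholesterol control": (69.7, 57.5, 58.6, 40.5),
    "Antidepressant management: acute phase": (70.3, 64.4, 66.8, 50.5),
    "Antidepressant management: continuation phase": (53.6, 47.4, 53.3, 35.2),
}
LOWER_IS_BETTER = {"Diabetes: poor glycemic control (HbA1c > 9)"}
COMPARATORS = ("commercial", "medicare", "medicaid")


def va_advantage(measure: str, comparator_index: int) -> Optional[float]:
    """VA advantage in percentage points (positive = VA better); None if NA."""
    va_rate, *others = RATES[measure]
    other = others[comparator_index]
    if other is None:
        return None
    diff = va_rate - other
    if measure in LOWER_IS_BETTER:
        diff = -diff  # a lower rate is better, so flip the sign
    return round(diff, 1)


def smallest_and_largest(comparator: str) -> Tuple[Tuple[str, float], Tuple[str, float]]:
    """Measures with the smallest and largest VA advantage for one comparator."""
    idx = COMPARATORS.index(comparator)
    diffs: List[Tuple[str, float]] = []
    for measure in RATES:
        d = va_advantage(measure, idx)
        if d is not None:
            diffs.append((measure, d))
    return min(diffs, key=lambda item: item[1]), max(diffs, key=lambda item: item[1])


for comparator in COMPARATORS:
    (low_name, low), (high_name, high) = smallest_and_largest(comparator)
    print(f"{comparator}: smallest {low:.1f} ({low_name}); largest {high:.1f} ({high_name})")
```

The sketch only reproduces the point differences; the significance tests behind the P values require the standard deviations described in the Methods.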

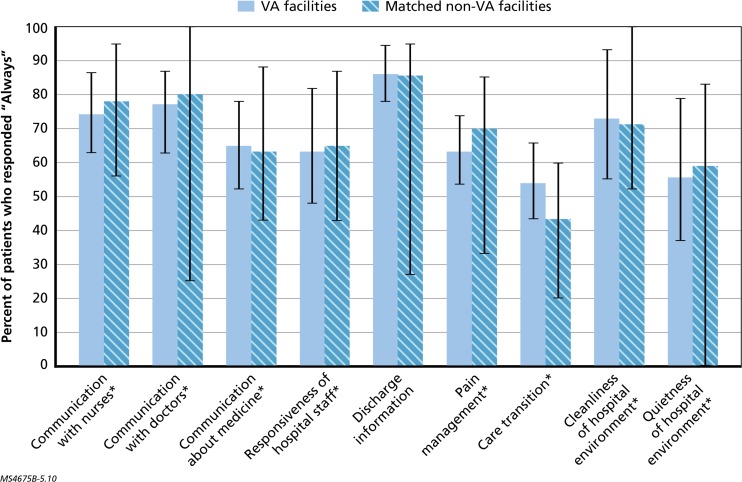

Variation

On average, VA care performed as well as or better than non-VA care on most measures. Performance varied widely across VA facilities for some measures, and even more widely across non-VA facilities. For example, there was a 50-percentage-point difference between the lowest- and highest-performing VA facilities on the FY 2014 outpatient rate of beta-blocker treatment for at least 6 months after discharge for an acute myocardial infarction hospitalization (excluding one facility with a measure rate of zero). In addition, all of the FY 2014 patient experience measures for the inpatient setting showed large differences between the lowest and highest VA facility rates, ranging from 17 percentage points for “discharge information” to 42 percentage points for “quietness of hospital environment” (Fig. 2). Variation was even greater across the matched non-VA hospitals on the same measures, ranging from 39 percentage points for “communication with nurses” to 83 percentage points for “quietness of hospital environment” (Fig. 2).

Figure 2.

National variation in VA and non-VA performance on patient experience measures for inpatient setting, FY 2014. Sources: VA facility-level data for patient experience measures for FY 2014 were obtained from the VA Office of Performance Measurement. Non-VA facility-level data for patient experience measures for quarter 4 of FY 2014 were obtained from the CMS Hospital Compare website. Notes: Minimum and maximum values for the reporting facilities in each subgroup are represented by the line extending from each bar. An asterisk next to the measure name indicates a statistically significant difference at P < 0.05 or less between VA and non-VA hospitals.

DISCUSSION

Using a broad set of measures reported in national performance measurement programs, we found that, in VA hospitals, rates of adherence to 12 of 14 recommended processes of inpatient care, risk-adjusted 30-day mortality rates, and four case-mix-adjusted patient experience measures were better than or the same as rates in a matched set of non-VA hospitals. However, risk-adjusted readmission rates were significantly worse than those in matched non-VA hospitals, as were six of ten patient experience measures for the hospital setting. We also found that rates of adherence to almost all recommended outpatient processes of care in VA facilities nationwide met or exceeded those in commercial, Medicare, and Medicaid health plans.

Our findings differ somewhat from other recent studies comparing VA and non-VA inpatient care.9, 22 For example, in contrast to a study of Hospital Compare performance data from a similar timeframe,9 we found that readmission rates were higher for AMI, heart failure, and pneumonia in VA hospitals than in non-VA hospitals; such discrepancies may be because the non-VA comparison hospitals in our analysis are a subset of all U.S. hospitals that have been matched to more closely mirror the characteristics of VA hospitals, rather than a broader set of non-VA hospitals.

There is substantial variation in quality measure performance across VA facilities, indicating that veterans in some areas are not receiving the same high-quality care that other VA facilities are able to provide. This variation is lower than that observed in private-sector health plans and hospitals, and some variation in performance across regions and VA facilities may be inevitable because of differences in patient characteristics. However, promoting more uniform quality of care across VA facilities is important for ensuring that veterans can count on high-quality care no matter which facility they use.

Our study assesses quality of care across a range of health conditions in both inpatient and outpatient settings and addresses several quality domains, including safety, effectiveness, and patient experience. For both VA and non-VA hospitals, we used data from CMS Hospital Compare for the inpatient readmission, mortality, and effectiveness measures, which minimizes concerns about comparing data collected using different methods and therefore subject to different biases; VA PSI data were provided by the VA Inpatient Evaluation Center. Although the outpatient measures were based on different data sources, the same specifications were used to derive the rates for most measures. Nonetheless, our study has several limitations. First, some quality measures are based on administrative data, which include limited clinical information; therefore, individuals might be misclassified as receiving or not receiving the recommended care.23, 24

Second, veterans may differ from patients in non-VA settings in terms of demographic characteristics (e.g., age, gender) and clinical characteristics (e.g., severity of disease, comorbidities, including mental health conditions). However, the effectiveness measures in the study focus on care recommended for all eligible patients; therefore, all patients, regardless of characteristics, should receive the recommended care, and differing patient characteristics should not bias those comparisons. The outcome measures (i.e., mortality and readmissions) that require risk adjustment for unbiased comparison between subgroups are risk-standardized using the CMS Hospital Compare methods for both VA and non-VA hospitals, although some argue that reporting of these measures is imprecise and standardized adjustments are insufficient across all hospitals.25 Variables used in the risk adjustment include age, gender, condition-specific clinical information (e.g., cardiovascular risk factors for acute myocardial infarction), and comorbidities (e.g., hypertension, diabetes, dementia).26

Third, the propensity score matching of non-VA to VA hospitals relied on a limited number of variables, so there may be differences between the two groups of hospitals and the patients they serve. In addition, a small number of VA hospitals did not appear in the AHA file and, therefore, could not be matched to non-VA hospitals and were excluded from the analyses of inpatient quality measures. To estimate the effect of this exclusion, we compared the measure rates in excluded VA hospitals with those in included VA hospitals and found that the two sets did not differ significantly.

Finally, although our study included a large number of quality measures, many aspects of VA care, such as care coordination, are not represented. In addition, quality measures focus on care provided to veterans who have successfully accessed the system; they do not examine the degree to which VA facilitates veterans’ enrollment in, or timely access to, care in the VA system.

In conclusion, in 2013–2014, on most publicly reported measures, the quality of VA inpatient care was on average the same as or better than that of non-VA inpatient care, and the quality of VA outpatient care was on average better than that of non-VA outpatient care. High variation in the performance of some quality measures across VA facilities indicates a need for targeted quality improvement to ensure that veterans receive uniformly high-quality care at all VA facilities.

Acknowledgments

The authors gratefully acknowledge Marc N. Elliott for his statistical consultation, Chris Chan and Mike Cui for their programming support, Zachary Predmore and Clare Stevens for their research assistance, and Susan Hosek, Terri Tanielian, and Robin Weinick for their managerial support and guidance.

Funding/Support

This work was completed under a subcontract from The MITRE Corporation for the U.S. Department of Veterans Affairs, as required by Section 201 of the Veterans Access, Choice, and Accountability Act of 2014. The report was prepared under Prime Contract No. HHS-M500-2012-00008I, Prime Task Order No. VA118A14F0373.

Role of the Sponsor

The funding organization had no role in the design or conduct of the study; the collection, analysis, or interpretation of the data; or the preparation or approval of the manuscript. VA had the opportunity to review the manuscript before submission, but submission for publication was not subject to VA approval.

The analyses upon which this publication is based were performed under a contract for the Department of Veterans Affairs. The content of this publication does not necessarily reflect the views or policies of the Department of Veterans Affairs nor does the mention of trade names, commercial products, or organizations imply endorsement by the U.S. Government. The authors assume full responsibility for the accuracy and completeness of the ideas presented.

Author Contributions

Drs. Anhang Price and Sloss had full access to all of the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis.

Study concept and design; drafting of the manuscript: RAP, ES.

Acquisition of data; analysis and interpretation of data: RAP, ES, CC, MC.

Critical revision of the manuscript for important intellectual content: RAP, ES, PH, CF.

Administrative, technical, or material support: PH, CF.

Study supervision: RAP, ES, PH, CF.

Compliance with Ethical Standards

Conflict of Interest

The authors declare that they do not have a conflict of interest.

References

1. Concerned Veterans for America. How to fix the VA health care system. Arlington, VA; 2015. Available at: http://fixing-veterans-health-care.legacy.cloud-pages.com/accounts/fixing-veterans-health-care/original.pdf. Accessed February 21, 2018.

2. Rein L. Cancer patients died waiting for care at troubled veterans hospital, probe finds. Washington Post. 2015. Available at: https://www.washingtonpost.com/news/federal-eye/wp/2015/10/20/lapses-in-care-delayed-urology-treatment-for-at-least-1500-veterans-leading-to-some-deaths-watchdog-finds/. Accessed February 21, 2018.

3. Asch S, Glassman P, Matula S, Trivedi A, Miake-Lye I, Shekelle PG. Comparison of quality of care in VA and non-VA settings: a systematic review. VA-ESP Project #05–226; 2010.

4. Jha AK, Perlin JB, Kizer KW, Dudley RA. Effect of the transformation of the Veterans Affairs Health Care System on the quality of care. N Engl J Med. 2003;348(22):2218–2227. doi: 10.1056/NEJMsa021899.

5. Khuri SF, Daley J, Henderson WG. The comparative assessment and improvement of quality of surgical care in the Department of Veterans Affairs. Arch Surg. 2002;137(1):20–27. doi: 10.1001/archsurg.137.1.20.

6. Matula SR, Trivedi AN, Miake-Lye I, Glassman PA, Shekelle P, Asch S. Comparisons of quality of surgical care between the US Department of Veterans Affairs and the private sector. J Am Coll Surg. 2010;211(6):823–832. doi: 10.1016/j.jamcollsurg.2010.09.001.

7. Trivedi AN, Matula S, Miake-Lye I, Glassman PA, Shekelle P, Asch S. Systematic review: comparison of the quality of medical care in Veterans Affairs and non-Veterans Affairs settings. Med Care. 2011;49(1):76–88. doi: 10.1097/MLR.0b013e3181f53575.

8. O’Hanlon C, Huang C, Sloss E, et al. Comparing VA and non-VA quality of care: a systematic review. J Gen Intern Med. 2016.

9. Blay E Jr, Delancey JE, Hewitt DB, Chung JW, Bilimoria KY. Initial public reporting of quality at Veterans Affairs vs non-Veterans Affairs hospitals. JAMA Intern Med. 2017;177(6):882–883. doi: 10.1001/jamainternmed.2017.0605.

10. National Committee for Quality Assurance. The State of Health Care Quality 2014. 2014. Available at: www.ncqa.org. Accessed February 21, 2018.

11. Institute of Medicine. Crossing the Quality Chasm: a New Health System for the 21st Century. Washington, DC: National Academies Press; 2001.

12. Agency for Healthcare Research and Quality. Patient safety indicators overview. 2015. Available at: http://www.qualityindicators.ahrq.gov/modules/psi_resources.aspx. Accessed February 21, 2018.

13. Centers for Medicare & Medicaid Services. Outcome measures (background). 2014. Available at: http://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HospitalQualityInits/OutcomeMeasures.html. Accessed February 21, 2018.

14. Centers for Medicare & Medicaid Services. 2014 measures updates and specifications report: hospital-level 30-day risk-standardized mortality measures. 2014. Available at: http://www.qualitynet.org/dcs/ContentServer?cid=1228774398696&pagename=QnetPublic%2FPage%2FQnetTier4&c=Page. Accessed February 21, 2018.

15. Centers for Medicare & Medicaid Services. 2014 measures updates and specifications report: hospital-level 30-day risk-standardized readmission measures. 2014. Available at: http://www.qualitynet.org/dcs/ContentServer?cid=1228774371008&pagename=QnetPublic%2FPage%2FQnetTier4&c=Page. Accessed February 21, 2018.

16. Joint Commission. Performance measurement for hospitals. 2015. Available at: http://www.jointcommission.org/accreditation/performance_measurementoryx.aspx. Accessed February 21, 2018.

17. U.S. Department of Veterans Affairs. VHA facility quality and safety report. 2013. Available at: http://www.va.gov/HEALTH/docs/VHA_Quality_and_Safety_Report_2013.pdf. Accessed February 21, 2018.

18. American Hospital Association. Annual survey database fiscal year 2014 documentation manual. 2014. Available at: http://www.ahadataviewer.com/book-cd-products/aha-survey/. Accessed February 21, 2018.

19. Lehrman WG, Elliott MN, Goldstein E, Beckett MK, Klein DJ, Giordano LA. Characteristics of hospitals demonstrating superior performance in patient experience and clinical process measures of care. Med Care Res Rev. 2010;67(1):38–55. doi: 10.1177/1077558709341323.

20. Centers for Medicare & Medicaid Services. Frequently asked questions: hospital value-based purchasing program. Last updated March 9, 2012. Available at: https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/Value-Based-Programs/HVBP/HVBP-FAQs.pdf. Accessed February 21, 2018.

21. Quigley DD, Elliott MN, Setodji CM, Hays RD. Quantifying magnitude of group-level differences in patient experiences with health care. Health Serv Res. 2018;53:3027–3051. doi: 10.1111/1475-6773.12828.

22. Nuti SV, Qin L, Rumsfeld JS, et al. Association of admission to Veterans Affairs hospitals vs non-Veterans Affairs hospitals with mortality and readmission rates among older men hospitalized with acute myocardial infarction, heart failure, or pneumonia. JAMA. 2016;315(6):582–592. doi: 10.1001/jama.2016.0278.

23. Maier B, Wagner K, Behrens S, et al. Comparing routine administrative data with registry data for assessing quality of hospital care in patients with myocardial infarction using deterministic record linkage. BMC Health Serv Res. 2016;16:605. doi: 10.1186/s12913-016-1840-5.

24. Tang PC, Ralston M, Arrigotti MF, Qureshi L, Graham J. Comparison of methodologies for calculating quality measures based on administrative data versus clinical data from an electronic health record system: implications for performance measures. J Am Med Inform Assoc. 2007;14(1):10–15. doi: 10.1197/jamia.M2198.

25. Baker DW, Chassin MR. Holding providers accountable for health care outcomes. Ann Intern Med. 2017;167:418–423. doi: 10.7326/M17-0691.

26. Joint Commission. Performance measurement for hospitals. 2015. Available at: http://www.jointcommission.org/specifications_manual_for_national_hospital_inpatient_quality_measures.aspx. Accessed February 21, 2018.