Abstract

Background

Social comparison feedback is an increasingly popular strategy that uses performance report cards to modify physician behavior. Our objective was to test the effect of such feedback on the ordering of routine laboratory tests for hospitalized patients, a practice considered overused.

Methods

This was a single-blinded randomized controlled trial. Between January and June 2016, physicians on six general medicine teams at the Hospital of the University of Pennsylvania were cluster randomized with equal allocation to two arms: (1) those e-mailed a summary of their routine laboratory test ordering vs. the service average for the prior week, linked to a continuously updated personalized dashboard containing patient-level details, and a snapshot of the dashboard, and (2) those who did not receive the intervention. The primary outcome was the count of routine laboratory test orders placed by a physician per patient-day. We modeled the count of orders by each physician per patient-day after the intervention as a function of trial arm and the physician's order count before the intervention, using negative binomial models with adjustment for clustering within teams.

Results

One hundred and fourteen interns and residents participated. We did not observe a statistically significant difference in adjusted reduction in routine laboratory ordering between the intervention and control physicians (physicians in the intervention group ordered 0.14 fewer tests per patient-day than physicians in the control group, 95% CI −0.56 to 0.27, p = 0.50). Physicians whose absolute ordering rate deviated from the peer rate by more than 1.0 laboratory test per patient-day reduced their laboratory ordering by 0.80 orders per patient-day (95% CI −1.58 to −0.02, p = 0.04).

Conclusions

Personalized social comparison feedback on routine laboratory ordering did not change targeted behavior among physicians, although there was a significant decrease in orders among participants who deviated more from the peer rate.

Trial registration

Clinicaltrials.gov registration: #NCT02330289.

Electronic supplementary material

The online version of this article (10.1007/s11606-018-4482-y) contains supplementary material, which is available to authorized users.

INTRODUCTION

Nearly $200 billion of annual healthcare spending in the USA has been attributed to overuse of medical interventions (tests, procedures, and therapies) that do not offer any benefit to patients.1 Routine laboratory testing, or "morning labs," for hospitalized patients has long been considered an overused practice in US healthcare. Nearly 50 years ago, a third of patients with a normal biochemical profile on admission received an average of two additional profiles during their hospital stay that did not affect clinical decision making.2 Since then, efforts to reduce laboratory overuse in the hospital have included educational interventions, decision support, utilization peer reviews, and financial incentives.3–11 However, the existing interventions have had a limited effect on laboratory test utilization for hospitalized patients.12,13 In a recent survey of internal medicine and general surgery residents, about a third reported ordering laboratory tests that they perceived as unnecessary on a daily basis.14

Social comparison feedback (SCF) is an increasingly popular strategy that uses performance report cards to modify physician behavior.15 This strategy gives participants information on their own performance compared with that of their peers, to correct misperceptions about norms that may drive overuse of some tests. Previous studies found SCF to be effective in some areas of overuse (for example, in reducing inappropriate antibiotic prescribing for acute upper respiratory infections16,17). Potential advantages of SCF over other interventions include allowing ordering providers to maintain autonomy over individual decision making, which is salient in areas of practice with high clinical uncertainty,18 and the potential for broad adoption through electronic medical record (EMR)-based dashboards that deliver automated, personalized feedback.19 Previous studies of the effect of SCF on physicians' laboratory test-ordering behaviors showed some reduction in ordering.3,6,20 However, those studies either used labor-intensive manual chart audit and in-person feedback from peers6 or combined SCF with other resource-intensive interventions such as provider education.20 With increased use of EMR-based dashboards by healthcare systems,19 automated SCF alone represents an easily scalable intervention to influence frontline providers' treatment patterns. In this study, we incorporated SCF into a personalized, EMR-based, real-time utilization dashboard, combined with a simple summary statement delivered via e-mail to internal medicine physicians in training (residents). We focused the intervention on residents because they provide direct care to patients hospitalized at teaching hospitals, who represented, for example, 53% of admissions to medicine services in 2012.21
We hypothesized that personalized SCF about routine laboratory test ordering would result in a reduction of laboratory test ordering for patients hospitalized on the general medicine services.

METHODS

Participants and Enrollment

All internal medicine interns and residents scheduled to be on general medicine services at the Hospital of the University of Pennsylvania during the study period (January 4 to June 20, 2016) were eligible to receive the intervention. Physicians pulled emergently for coverage, medical students serving as interns on their sub-internships, and rotating physicians from other departments were excluded. At the beginning of the study, all physicians scheduled to be on service during the study period were e-mailed a brief description of the study (e.g., objectives, intervention). The investigators did not collect any patient-identifiable protected health information during the study. The University of Pennsylvania Institutional Review Board reviewed and approved this study with a waiver of written documentation of consent (see Online Appendix). The study was registered on ClinicalTrials.gov (#NCT02330289).

Randomization

Each of the six general medicine teams was composed of one attending physician, one resident physician (PGY2 or PGY3), and two PGY1-level resident physicians (interns). Each physician's schedule was divided into 2-week service blocks; thus, each team block made up one cluster (one resident and two interns during a 2-week service block). During each 2-week block, we used a random number generator to randomize three of the clusters to the experimental group and three to the control group. Randomization was repeated for each of the 12 blocks during the study interval, and the teams were reassigned.
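The block-wise cluster randomization can be sketched as follows. This is a minimal illustration, not the authors' code: the team numbering, the use of Python's `random.Random`, and the seed are assumptions (the text says only that a random number generator was used). The sketch also checks the bookkeeping implied by the design: 6 teams × 12 blocks × 3 physicians yields 216 physician blocks, 108 per arm, and the January 4 to June 20, 2016 interval spans exactly 12 two-week blocks.

```python
import random
from datetime import date

N_TEAMS = 6                # general medicine teams
N_BLOCKS = 12              # 2-week service blocks in the study interval
PHYSICIANS_PER_TEAM = 3    # one supervising resident + two interns

def randomize_blocks(seed=None):
    """Assign 3 of the 6 teams to each arm, independently for every block.

    Returns {block_number: {team_number: "intervention" | "control"}}.
    """
    rng = random.Random(seed)  # hypothetical seed; the study's generator is unspecified
    schedule = {}
    for block in range(1, N_BLOCKS + 1):
        teams = list(range(1, N_TEAMS + 1))
        rng.shuffle(teams)
        half = N_TEAMS // 2
        schedule[block] = {
            team: "intervention" if i < half else "control"
            for i, team in enumerate(teams)
        }
    return schedule

def count_physician_blocks(schedule):
    """Each team-block (cluster) contributes three physician blocks to its arm."""
    counts = {"intervention": 0, "control": 0}
    for arms in schedule.values():
        for arm in arms.values():
            counts[arm] += PHYSICIANS_PER_TEAM
    return counts

schedule = randomize_blocks(seed=2016)
print(count_physician_blocks(schedule))  # {'intervention': 108, 'control': 108}

# January 4 to June 20, 2016 is 168 days, i.e., 12 two-week blocks.
print((date(2016, 6, 20) - date(2016, 1, 4)).days // 14)  # 12
```

Because exactly three teams are drawn into each arm in every block, the 108/108 split holds for any seed; only which teams land in which arm changes.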

Design and Intervention

This was a single-blinded randomized controlled trial; the investigators were blinded to randomization assignments during data collection and analysis. During the study period, 216 resident blocks were cluster randomized with equal allocation to two arms: (1) those e-mailed a summary of their routine laboratory ordering (e.g., complete blood count, basic or complete metabolic panel, liver function panel, and common coagulation tests) vs. the service average for the prior week, linked to a continuously updated personalized EMR-based dashboard containing patient-level details, and a snapshot of the dashboard, and (2) those who did not receive the intervention. The service average was calculated as the number of tests ordered across all general medicine teams divided by the number of patients over the previous 7 days. The initial e-mails were sent in the middle of each resident's 2-week service block to allow for baseline and follow-up periods and were followed by a reminder e-mail within 24 h. An example of the timeline for each 2-week block is shown in the Online Appendix. The dashboard contained real-time laboratory ordering information linked to individual patients' EMR records and is described elsewhere.22
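The service-average comparison in the e-mailed summary can be sketched as a pooled ratio. This is an illustration under assumptions: the function names and the prior-week counts are hypothetical, and the denominator follows the text's wording ("number of patients"); the actual report format is not reproduced here.

```python
def pooled_rate(team_counts):
    """Service average: tests across all teams divided by patients across all teams.

    team_counts is a list of (tests_ordered, patients) pairs for the prior 7 days.
    """
    total_tests = sum(tests for tests, _ in team_counts)
    total_patients = sum(patients for _, patients in team_counts)
    return total_tests / total_patients

def feedback_summary(own_tests, own_patients, team_counts):
    """Return (own rate, service average, own rate minus service average)."""
    own_rate = own_tests / own_patients
    service_avg = pooled_rate(team_counts)
    return own_rate, service_avg, own_rate - service_avg

# Hypothetical prior-week counts for the six teams: (routine tests, patients).
teams = [(60, 30), (60, 25), (70, 40), (40, 20), (70, 35), (60, 30)]
own_rate, service_avg, deviation = feedback_summary(35, 10, teams)
print(f"You: {own_rate:.2f}  Service average: {service_avg:.2f}  Difference: {deviation:+.2f}")
# You: 3.50  Service average: 2.00  Difference: +1.50
```

Pooling the totals, as the definition in the text implies, weights each team by its census; a simple mean of the six team rates in this example would give 2.025 instead of 2.00.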

Data, Measures, and Attribution

Variables were derived from an electronic medical records database containing all orders placed for patients admitted to the general medicine teams during the study interval (additional details about data collection and analysis are provided in the Online Appendix). The primary outcome was the count of routine laboratory orders placed by each physician per patient-day. Days were defined to align with the start of physician shifts. Based on prior literature regarding physician attribution of orders in the teaching setting,23 all orders placed by a PGY1 resident physician were attributed to that physician. PGY2 and PGY3 resident physicians, who supervised the PGY1 resident physicians on the team, were attributed all orders they entered themselves plus all orders for patients assigned to their team. Orders placed on weekend days were excluded a priori to minimize cross-contamination, because attending physicians cross-cover multiple teams on weekends.
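The attribution rule can be sketched as below. This is a minimal illustration, not the study's data pipeline: the `Order` record, the physician labels, and the orders are hypothetical, and days are simplified to calendar dates rather than the shift-aligned days used in the study.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class Order:
    order_id: int
    placed_by: str      # physician who entered the order
    patient_team: int   # team the patient was assigned to
    day: date           # simplified to a calendar date (the study aligned days with shift starts)

def attribute_orders(orders, physician, role, team):
    """Return the IDs of weekday orders attributed to one physician.

    role is "intern" (PGY1) or "resident" (supervising PGY2/PGY3).
    """
    attributed = set()  # a set avoids double-counting a resident's own orders on their team
    for order in orders:
        if order.day.weekday() >= 5:  # Saturday (5) and Sunday (6) are excluded
            continue
        if order.placed_by == physician:
            attributed.add(order.order_id)
        elif role == "resident" and order.patient_team == team:
            attributed.add(order.order_id)
    return attributed

# Hypothetical orders; January 11-12, 2016 were a Monday and Tuesday, January 9 a Saturday.
orders = [
    Order(1, "intern_a", 1, date(2016, 1, 11)),
    Order(2, "intern_b", 1, date(2016, 1, 11)),
    Order(3, "resident_r", 1, date(2016, 1, 12)),
    Order(4, "intern_a", 1, date(2016, 1, 9)),   # weekend: excluded
    Order(5, "intern_c", 2, date(2016, 1, 11)),  # different team
]
print(sorted(attribute_orders(orders, "intern_a", "intern", 1)))      # [1]
print(sorted(attribute_orders(orders, "resident_r", "resident", 1)))  # [1, 2, 3]
```

Under this rule an intern's order counts toward both the intern and the supervising resident, so physician-level counts are not additive across a team.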

Analyses

Outcomes were analyzed using an intent-to-treat approach. For each physician, we compared orders per patient-day during the 4 days after the day the feedback report was e-mailed (intervention day 0) with orders during the corresponding 4 days of the prior week, and we compared this change between the intervention and control groups. Orders placed on the intervention day were excluded. We modeled the count of orders per physician per patient-day after the intervention as a function of trial arm and the physician's order count before the intervention, using an ANCOVA-style approach.24
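The ANCOVA-style model can be illustrated with a small negative binomial regression fitted by iteratively reweighted least squares (IRLS). This is a sketch under stated assumptions, not the authors' Stata analysis: it uses the NB2 variance (mu + alpha·mu²) with the dispersion `alpha` fixed rather than estimated, enters patient-days as a log offset so coefficients describe orders per patient-day, adjusts for a baseline rate (the text says baseline order count), and omits the adjustment for clustering within teams. The function names and synthetic data are hypothetical. The arm coefficient is on the log scale (exp(b1) is a rate ratio); the paper reports absolute differences in orders per patient-day, which would need an extra step such as averaging model predictions.

```python
import math

def solve(a, b):
    """Solve the small linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_nb_ancova(y, arm, baseline, exposure, alpha=0.5, iters=100, tol=1e-10):
    """NB2 regression, log link, fixed dispersion alpha, fitted by IRLS.

    Model: log E[y] = log(exposure) + b0 + b1 * arm + b2 * baseline
    y: post-intervention order counts; exposure: post-intervention patient-days.
    Returns [b0, b1, b2].
    """
    X = [[1.0, float(a), float(base)] for a, base in zip(arm, baseline)]
    offset = [math.log(e) for e in exposure]
    beta = [math.log(sum(y) / sum(exposure)), 0.0, 0.0]  # start at the pooled rate
    for _ in range(iters):
        xtwx = [[0.0] * 3 for _ in range(3)]
        xtwz = [0.0] * 3
        for xi, yi, oi in zip(X, y, offset):
            lin = sum(bj * xj for bj, xj in zip(beta, xi))
            mu = math.exp(lin + oi)
            w = mu / (1.0 + alpha * mu)    # IRLS weight for NB2 with a log link
            z = lin + (yi - mu) / mu       # working response (offset excluded)
            for j in range(3):
                xtwz[j] += xi[j] * w * z
                for k in range(3):
                    xtwx[j][k] += xi[j] * w * xi[k]
        new_beta = solve(xtwx, xtwz)
        converged = max(abs(nb - ob) for nb, ob in zip(new_beta, beta)) < tol
        beta = new_beta
        if converged:
            break
    return beta

# Noise-free synthetic data generated from b = [0.2, -0.1, 0.3]; the fit should recover it.
arm = [0, 0, 0, 0, 1, 1, 1, 1]
baseline = [1.0, 2.0, 3.0, 1.5, 1.0, 2.5, 3.0, 2.0]
exposure = [5, 6, 4, 5, 5, 7, 4, 6]
y = [e * math.exp(0.2 - 0.1 * a + 0.3 * b) for a, b, e in zip(arm, baseline, exposure)]
beta = fit_nb_ancova(y, arm, baseline, exposure)
print("adjusted rate ratio, intervention vs. control:", round(math.exp(beta[1]), 3))
```

Adjusting for the baseline value, rather than modeling post-minus-pre change scores, is the approach described by Vickers and Altman;24 it generally gives more precise estimates when baseline and follow-up are correlated.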

We conducted several post hoc analyses using the same analytic approach to compare the following: (1) orders by day after the intervention, (2) orders by residents who did not open the e-mail vs. those who opened the e-mail, (3) orders by residents who did not access the dashboard vs. those who accessed the dashboard, (4) spillover effects of the intervention on non-targeted laboratory test ordering, and to evaluate (5) whether the magnitude and direction of the difference between each physician’s ordering rate in their feedback report and the service average had a differential effect on physician behavior.
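Post hoc analysis (5) groups intervention physicians by the direction and size of the deviation shown in their feedback report. A minimal sketch, assuming the 1.0 test per patient-day cutoff reported in Table 3; the function name is hypothetical, and because the text does not say how ties were handled, a zero deviation is labeled "lower" and a deviation of exactly 1.0 is labeled "< 1.0" here by arbitrary choice.

```python
def deviation_group(own_rate, service_avg, threshold=1.0):
    """Classify a feedback report by direction and size of the deviation.

    Rates are laboratory tests per patient-day. Ties (zero deviation, or a
    deviation of exactly `threshold`) are assigned arbitrarily in this sketch.
    """
    deviation = own_rate - service_avg
    direction = "higher" if deviation > 0 else "lower"
    size = "> 1.0" if abs(deviation) > threshold else "< 1.0"
    return direction, size

print(deviation_group(3.5, 2.0))  # ('higher', '> 1.0')
print(deviation_group(1.6, 2.0))  # ('lower', '< 1.0')
print(deviation_group(0.8, 2.0))  # ('lower', '> 1.0')
```

Direction and magnitude are separate factors: a physician ordering well below the service average falls in the "> 1.0" group just as one ordering well above it does.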

Two-sided p values < 0.05 were considered significant. All analyses were conducted using Stata 14.2 (StataCorp, College Station, TX).

RESULTS

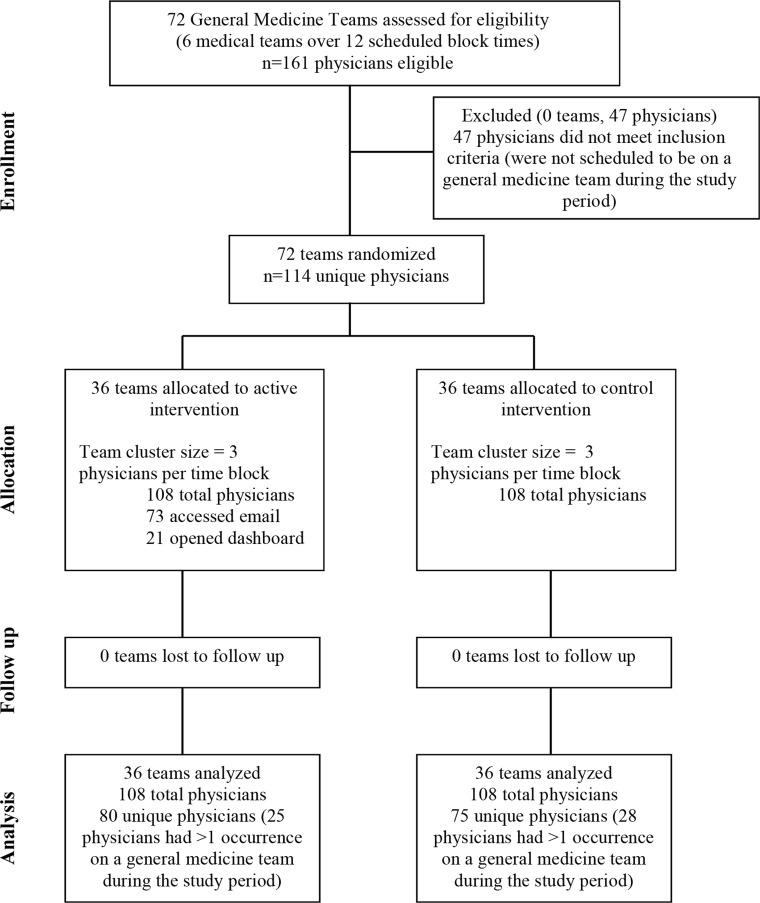

Figure 1 displays the CONSORT diagram for this study. During the study interval, 161 physicians were assessed for eligibility to participate in the study. Forty-seven were ineligible because they were not scheduled for general medicine services during the study period. The remaining 114 physicians (63 PGY1s and 51 higher-level residents) were cluster-randomized: 39 (34%) were in the intervention arm only, 34 (30%) were in the control arm only, and 41 (36%) were in both intervention and control arms during different service blocks. Nineteen (17%) were in the control arm first, followed by the intervention arm, and 22 (19%) were in the intervention arm first, followed by the control arm. Of the 108 physician blocks in the intervention group, 73 (68%) accessed feedback via e-mail and 21 (19%) accessed the detailed EMR-based dashboard. Study participants placed orders for 2042 unique patients who had 2403 hospitalizations during the study period.
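The enrollment counts reported above can be cross-checked with a few lines of arithmetic. This sketch uses only numbers stated in the text and Table 1; reading the Table 1 column totals (80 and 75) as physicians ever assigned to each arm, with the 41 crossover physicians counted in both columns, is our interpretation.

```python
assessed, ineligible = 161, 47
randomized = assessed - ineligible                 # 114 physicians
only_intervention, only_control, both = 39, 34, 41
assert only_intervention + only_control + both == randomized

def pct(n, d):
    """Percentage rounded to a whole number."""
    return round(100 * n / d)

print([pct(n, randomized) for n in (only_intervention, only_control, both)])  # [34, 30, 36]
print([pct(n, randomized) for n in (19, 22)])  # [17, 19] (crossover order)

# Physicians ever in each arm; compare with Table 1 totals (51+12+17 and 44+21+10).
print(only_intervention + both, only_control + both)  # 80 75

# Intervention physician blocks: 6 teams x 12 blocks / 2 arms x 3 physicians.
blocks = 6 * 12 // 2 * 3
print(blocks, round(100 * 73 / blocks, 1), round(100 * 21 / blocks, 1))  # 108 67.6 19.4
```

The last line shows that the 68% and 19% reported here and the 67.6% and 19.4% reported later in the Results are the same proportions at different rounding.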

Figure 1.

CONSORT diagram.

Table 1 describes physician characteristics, patient census, and ordering rates before and after the intervention. There were no statistically significant differences in physician characteristics, patient census per physician per day, or non-targeted laboratory tests between the intervention and control groups. The mean count of laboratory tests per physician per patient-day during the baseline period was higher in the intervention group (2.26, SD 1.68) than in the control group (2.04, SD 1.26; p = 0.03). After the intervention, the number of laboratory tests per physician per patient-day declined in both groups, to 1.52 (SD 1.65) in the intervention group and 1.55 (SD 1.53) in the control group (p = 0.74).

Table 1.

Physician Census and Ordering Before and After the Intervention (N = 114)

| Characteristic | Intervention | Control | p value |
|---|---|---|---|
| PGY level, n (%) | | | |
| PGY1 | 51 (63.8) | 44 (58.7) | 0.10 |
| PGY2 | 12 (15.0) | 21 (28.0) | |
| PGY3 | 17 (21.3) | 10 (13.3) | |
| Gender | | | |
| Female, n (%) | 40 (50.0) | 33 (44.0) | 0.45 |
| Patient census per day, mean (SD) | | | |
| Before intervention | 6.24 (2.95) | 6.47 (3.09) | 0.27 |
| After intervention | 5.04 (2.51) | 5.30 (2.77) | 0.56 |
| Targeted laboratory orders per patient-day, mean (SD) | | | |
| Before intervention | 2.26 (1.68) | 2.04 (1.26) | 0.03* |
| After intervention | 1.52 (1.65) | 1.55 (1.53) | 0.74 |
| Non-targeted laboratory orders per patient-day, mean (SD) | | | |
| Before intervention | 0.92 (1.10) | 0.77 (0.57) | 0.29 |
| After intervention | 0.63 (0.79) | 0.82 (0.95) | 0.19 |

*p < 0.05

After accounting for baseline ordering rates, we did not observe a statistically significant difference in the reduction of routine laboratory ordering between physicians in the intervention and control groups (Table 2). Ordering among physicians randomized to receive the intervention dropped by 0.14 more laboratory tests per patient-day after the intervention than among controls (95% CI −0.56 to 0.27, p = 0.50). Thus, the intent-to-treat analysis did not support our hypothesis that providing physicians SCF on their ordering of routine laboratory tests for hospitalized patients would reduce such ordering.

Table 2.

Adjusted Difference in Laboratory Orders per Physician per Day by Group (n = 861)

| Group (vs. control) | Difference in orders per physician per patient-day (after vs. before intervention) | 95% confidence interval | p value |
|---|---|---|---|
| Control (reference) | – | – | – |
| Intervention (intent-to-treat) | −0.14 | −0.56 to 0.27 | 0.50 |
| Intervention, post-intervention day 1 | −0.03 | −0.58 to 0.53 | 0.92 |
| Intervention, post-intervention day 2 | 0.01 | −0.56 to 0.59 | 0.97 |
| Intervention, post-intervention day 3 | −0.44 | −1.00 to 0.12 | 0.13 |
| Intervention, post-intervention day 4 | −0.10 | −0.76 to 0.57 | 0.77 |
| Physicians who did not open e-mail | 0.22 | −0.26 to 0.70 | 0.37 |
| Physicians who opened e-mail | −0.31 | −0.76 to 0.13 | 0.16 |
| Opened e-mail, post-intervention day 1 | −0.10 | −0.73 to 0.53 | 0.76 |
| Opened e-mail, post-intervention day 2 | −0.27 | −0.85 to 0.31 | 0.37 |
| Opened e-mail, post-intervention day 3 | −0.82 | −1.31 to −0.32 | 0.001* |
| Opened e-mail, post-intervention day 4 | −0.05 | −0.85 to 0.76 | 0.91 |
| Physicians who did not access dashboard | −0.06 | −0.50 to 0.37 | 0.77 |
| Physicians who accessed dashboard | −0.44 | −0.94 to 0.05 | 0.08 |
| Non-intervention laboratory orders | −0.12 | −0.68 to 0.44 | 0.68 |

Estimates reported from six models: (1) intent-to-treat (two arms) comparing control vs. intervention; (2) post hoc model (two arms) comparing intervention vs. control by day post-intervention; (3) post hoc model (three arms) comparing controls vs. physicians randomized to the intervention who opened the intervention e-mail vs. those who did not open the intervention e-mail; (4) post hoc model (three arms) comparing controls vs. physicians randomized to the intervention who accessed the dashboard vs. those who did not access the dashboard; and (5) secondary outcome: controls vs. intervention for laboratory orders not targeted by the intervention.

*p < 0.05

Overall, 67.6% of participants in the intervention group opened the e-mailed messages containing SCF information, and 19.4% accessed the electronic dashboard. After stratifying the intervention group into physicians who opened the e-mail containing their personalized SCF report and those who did not, we observed that physicians who opened the e-mail ordered 0.82 fewer laboratory tests per patient-day on day 3 after the intervention, relative to controls (95% CI −1.31 to −0.32, p = 0.001) (Table 2). Averaged over the 4-day interval after the intervention and compared with the corresponding 4-day interval before it, the change in ordering did not differ significantly among physicians who opened the e-mail, physicians who did not, and controls. After stratifying the intervention cohort by electronic dashboard use, physicians who accessed the dashboard ordered 0.44 fewer laboratory tests per patient-day in the post-intervention period than controls; however, the difference was not statistically significant (95% CI −0.94 to 0.05, p = 0.08).

Among both physicians whose baseline ordering rate was higher than that of their peers and those whose baseline ordering rate was lower, point estimates for post-intervention ordering of routine laboratory tests were lower in the intervention group than in the control group; however, we did not observe a greater decrease in ordering among physicians with higher baseline ordering rates (Table 3). Among physicians whose rates were lower than their peers' rates, physicians in the intervention group ordered 0.12 fewer laboratory tests per patient-day than the control group (95% CI −0.68 to 0.44, p = 0.67). Among physicians whose rates were higher than their peers' rates, physicians in the intervention group ordered 0.20 fewer laboratory tests per patient-day than the control group (95% CI −0.76 to 0.37, p = 0.50).

Table 3.

Change in Laboratory Orders per Physician per Patient-Day by the Magnitude and Direction of the Reported Deviation Between the Individual and Peer Team Ordering Rates on Intervention Day

| Group (vs. control) | Difference in orders per physician per patient-day (after vs. before intervention) | 95% confidence interval | p value |
|---|---|---|---|
| Control (reference) | – | – | – |
| Residents whose rate was lower than peer rates | −0.12 | −0.68 to 0.44 | 0.67 |
| Residents whose rate was higher than peer rates | −0.20 | −0.76 to 0.37 | 0.50 |
| Residents whose absolute rate deviated from peer rate by < 1.0 test per patient-day | −0.02 | −0.44 to 0.41 | 0.93 |
| Residents whose absolute rate deviated from peer rate by > 1.0 test per patient-day | −0.80 | −1.58 to −0.02 | 0.04* |

*p < 0.05

While physicians who were ordering more tests than the service average did not change their ordering behavior significantly differently from physicians who were ordering fewer tests than the service average, the magnitude of the deviation from the service average was associated with differential effects. We observed a reduction of 0.80 laboratory test orders per patient-day among physicians whose absolute ordering rate deviated from the peer rate by more than 1.0 laboratory test per patient-day (95% CI −1.58 to −0.02, p = 0.04). There was no significant difference between the intervention and control groups among physicians whose absolute ordering rate deviated from the peer average by less than 1.0 laboratory test per patient-day (physicians in the intervention group ordered 0.02 fewer laboratory tests per patient-day, 95% CI −0.44 to 0.41, p = 0.93).

DISCUSSION

Our findings did not support the hypothesis that SCF reduces the rate of routine laboratory testing for hospitalized patients. On average, physicians in both groups reduced their routine laboratory orders during the study, which may reflect local4 and national efforts to reduce overuse of routine laboratory testing for hospitalized patients. However, we observed a differential effect based on the magnitude of the absolute difference between an individual physician's ordering rate and the average for their peers: a threshold difference of one laboratory test per patient-day appeared to influence physician behavior. This finding is consistent with earlier observations that physicians with greater absolute deviation from the service average were more likely to access the dashboard.22

One possible explanation of the null result of the intent-to-treat analysis is relatively poor uptake of the intervention among physicians. Data on physician engagement with SCF are lacking. Previous studies of SCF interventions to change physician behavior either were designed to ensure that feedback was delivered through in-person interactions6 or did not measure the level of participant engagement with the feedback.20,25 In our study, only about two-thirds of participants in the intervention group accessed feedback via e-mail, and fewer than one in five accessed the EMR-based dashboard. Another possibility is that physicians were already receiving feedback or were otherwise aware of their ordering patterns relative to their peers. In fact, the Accreditation Council for Graduate Medical Education requires residency programs to provide trainees and faculty with information about the quality of care delivered to their patient population.26 Although other initiatives aiming to reduce overuse of routine laboratory tests were ongoing at our institution, those interventions were implemented at the health system level,4,14 and there was no concerted effort to provide ordering data to physicians outside of our study. Furthermore, an earlier study at the same institution showed that residents did not routinely receive feedback about their utilization of laboratory tests: only 37% of internal medicine residents reported receiving any feedback on their ordering of medical services, and 20% reported receiving such feedback regularly.23

Another possible explanation of the null finding is cross-contamination between the intervention and control groups. More than a third (36%) of the participants were on teams randomized to both intervention and control during different blocks, with about half of those participants (22 of 41) randomized to the intervention arm first, followed by the control arm. Furthermore, the single-blind, single-site design of the trial may have allowed some discussion of the intervention between the two groups.

Since we could not definitively determine a level of laboratory use that would be considered appropriate for each physician's patient mix, we did not attempt to characterize physicians' ordering, identify top performers, or provide suggestions for improvement. Those steps might have strengthened the intervention.18 However, more empiric evidence is needed to understand how differences in the design and delivery of SCF affect physician behavior. Evidence suggests that the factors influencing physician test-ordering behaviors extend well beyond physician knowledge and local practice patterns.10,27–29 Residents may be reluctant to reduce ordering because of perceived institutional or attending expectations, risk aversion, and uncertainty about potential harms (e.g., lack of cost transparency).14,30 Interventions that aim to influence physician behavior should be thoroughly tested prior to broad implementation to avoid unanticipated effects, particularly in areas of practice with high clinical uncertainty.

Unlike previous trials of SCF to change physician behavior, which required in-person feedback, our study used automated, EMR-based feedback. Although feedback delivered in person by a peer is more likely to affect physician practice behaviors,31 such feedback can be prohibitively labor-intensive. EMR-based automated feedback has the advantages of easy dissemination and replication to other areas of overuse and may take less time away from patient care. Our findings suggest some promise for this approach in an area of overuse that represents a long-standing challenge, although better design is needed. Other interventions found to be most effective in reducing routine laboratory orders limited providers' ordering abilities, either by eliminating recurrent test ordering options in the EMR32 or by adding prompts to stop continued testing during prolonged hospitalizations.33 Although such interventions are effective, healthcare organizations and practices may be hesitant to adopt them broadly because they place limits on physicians' professional autonomy, and most practices considered overused may still be necessary and appropriate in some cases. Unlike traditional audit and feedback, which involves historical comparison and target setting, SCF enables the use of contemporary peer data that are dynamic and may result in greater participant buy-in. Furthermore, EMR-based SCF may be an effective and scalable approach to high-value care teaching in graduate medical education. A review of interventions for high-value care teaching found that reflective practice incorporating feedback about performance is an important component of successful interventions.34 Adoption of EMR-based automated SCF may facilitate reflective practice by both trainees and faculty.

This study has several important limitations. First, due to the nature of physician scheduling, the possibility of spillover effects between the intervention and control groups exists. Second, the single-center design likely allowed further cross-contamination across groups and may also limit the generalizability of the findings to other settings. Third, we did not evaluate whether accessing the e-mail or dashboard meant that the participant engaged with the information, how much time was spent digesting or discussing the feedback with colleagues or supervisors, or whether the participants understood the feedback. Fourth, we did not determine the utility of each particular order or whether an order was unnecessary. Fifth, this was a single-dose intervention, which may have limited its effect. Further iterations of SCF interventions in controlled settings may help determine what works best.

In sum, an SCF intervention reporting laboratory orders did not change targeted practice among ordering providers, although there was a significant decrease in orders among participants who deviated from their peers' average by more than one laboratory order per patient-day. This suggests a potential target population for social comparison feedback interventions to reduce laboratory ordering, which should be evaluated in future studies. The effectiveness of SCF interventions using EMR-based dashboards to reduce physician use of potentially overused medical interventions may depend on efforts to increase physicians' uptake of such dashboards.

Electronic Supplementary Material

(DOCX 694 kb)

Funding Information

This work was supported in part by Career Development Award K08AG052572 (Dr. Ryskina) and the Matt Slap Pilot Research Award from the Division of General Internal Medicine at Perelman School of Medicine at the University of Pennsylvania.

Compliance with Ethical Standards

The University of Pennsylvania Institutional Review Board reviewed and approved this study with a waiver of written documentation of consent.

Conflict of Interest

Mitesh Patel is principal at Catalyst Health, a technology and behavior change consulting firm, and an advisory board member at Healthmine Services Inc. and Life.io. All other authors declare that they have no conflict of interest.

References

1. Berwick DM, Hackbarth AD. Eliminating waste in US health care. JAMA. 2012;307(14):1513–1516. doi: 10.1001/jama.2012.362.

2. Dixon RH, Laszlo J. Utilization of clinical chemistry services by medical house staff. An analysis. Arch Intern Med. 1974;134(6):1064–1067. doi: 10.1001/archinte.1974.00320240098012.

3. Axt-Adam P, van der Wouden JC, van der Does E. Influencing behavior of physicians ordering laboratory tests: a literature study. Med Care. 1993;31(9):784–794. doi: 10.1097/00005650-199309000-00003.

4. Sedrak MS, Myers JS, Small DS, et al. Effect of a price transparency intervention in the electronic health record on clinician ordering of inpatient laboratory tests: the PRICE Randomized Clinical Trial. JAMA Intern Med. 2017;177(7):939–945. doi: 10.1001/jamainternmed.2017.1144.

5. Fogarty AW, Sturrock N, Premji K, Prinsloo P. Hospital clinicians' responsiveness to assay cost feedback: a prospective blinded controlled intervention study. JAMA Intern Med. 2013;173(17):1654–1655. doi: 10.1001/jamainternmed.2013.8211.

6. Martin AR, Wolf MA, Thibodeau LA, Dzau V, Braunwald E. A trial of two strategies to modify the test-ordering behavior of medical residents. N Engl J Med. 1980;303(23):1330–1336. doi: 10.1056/NEJM198012043032304.

7. Schroeder SA, Myers LP, McPhee SJ, et al. The failure of physician education as a cost containment strategy. Report of a prospective controlled trial at a university hospital. JAMA. 1984;252(2):225–230. doi: 10.1001/jama.1984.03350020027020.

8. Feldman LS, Shihab HM, Thiemann D, et al. Impact of providing fee data on laboratory test ordering: a controlled clinical trial. JAMA Intern Med. 2013;173(10):903–908. doi: 10.1001/jamainternmed.2013.232.

9. Bates DW, Kuperman GJ, Rittenberg E, et al. A randomized trial of a computer-based intervention to reduce utilization of redundant laboratory tests. Am J Med. 1999;106(2):144–150. doi: 10.1016/S0002-9343(98)00410-0.

10. Young DW. Improving laboratory usage: a review. Postgrad Med J. 1988;64(750):283–289. doi: 10.1136/pgmj.64.750.283.

11. Wang TJ, Mort EA, Nordberg P, et al. A utilization management intervention to reduce unnecessary testing in the coronary care unit. Arch Intern Med. 2002;162(16):1885–1890. doi: 10.1001/archinte.162.16.1885.

12. Zhi M, Ding EL, Theisen-Toupal J, Whelan J, Arnaout R. The landscape of inappropriate laboratory testing: a 15-year meta-analysis. PLoS One. 2013;8(11):e78962. doi: 10.1371/journal.pone.0078962.

13. Schroeder SA. Can physicians change their laboratory test ordering behavior? A new look at an old issue. JAMA Intern Med. 2013;173(17):1655–1656. doi: 10.1001/jamainternmed.2013.7495.

14. Sedrak MS, Patel MS, Ziemba JB, et al. Residents' self-report on why they order perceived unnecessary inpatient laboratory tests. J Hosp Med. 2016.

15. Emanuel EJ, Ubel PA, Kessler JB, et al. Using behavioral economics to design physician incentives that deliver high-value care. Ann Intern Med. 2016;164(2):114–119. doi: 10.7326/M15-1330.

16. Meeker D, Linder JA, Fox CR, et al. Effect of behavioral interventions on inappropriate antibiotic prescribing among primary care practices: a randomized clinical trial. JAMA. 2016;315(6):562–570. doi: 10.1001/jama.2016.0275.

17. Persell SD, Doctor JN, Friedberg MW, et al. Behavioral interventions to reduce inappropriate antibiotic prescribing: a randomized pilot trial. BMC Infect Dis. 2016;16:373. doi: 10.1186/s12879-016-1715-8.

18. Navathe AS, Emanuel EJ. Physician peer comparisons as a nonfinancial strategy to improve the value of care. JAMA. 2016;316(17):1759–1760. doi: 10.1001/jama.2016.13739.

19. Rolnick JR, Ryskina KL. The use of individual provider performance reports by U.S. hospitals. J Hosp Med. 2018;in press.

20. Iams W, Heck J, Kapp M, et al. A multidisciplinary housestaff-led initiative to safely reduce daily laboratory testing. Acad Med. 2016;91(6):813–820. doi: 10.1097/ACM.0000000000001149.

21. Patel MS, Volpp KG, Small DS, et al. Association of the 2011 ACGME resident duty hour reforms with mortality and readmissions among hospitalized Medicare patients. JAMA. 2014;312(22):2364–2373. doi: 10.1001/jama.2014.15273.

22. Kurtzman G DJ, Epstein A, Gitelman Y, Leri D, Patel MS, Ryskina KL. Internal medicine resident engagement with a laboratory utilization dashboard: mixed methods study. J Hosp Med. 2017.

23. Dine CJ, Miller J, Fuld A, Bellini LM, Iwashyna TJ. Educating physicians-in-training about resource utilization and their own outcomes of care in the inpatient setting. J Grad Med Educ. 2010;2(2):175–180. doi: 10.4300/JGME-D-10-00021.1.

24. Vickers AJ, Altman DG. Statistics notes: analysing controlled trials with baseline and follow up measurements. BMJ. 2001;323(7321):1123–1124. doi: 10.1136/bmj.323.7321.1123.

25. Sey MSL, Liu A, Asfaha S, Siebring V, Jairath V, Yan B. Performance report cards increase adenoma detection rate. Endosc Int Open. 2017;5(7):E675–E682. doi: 10.1055/s-0043-110568.

26. ACGME. ACGME Common Program Requirements. Accreditation Council for Graduate Medical Education; 2017.

27. Sood R, Sood A, Ghosh AK. Non-evidence-based variables affecting physicians' test-ordering tendencies: a systematic review. Neth J Med. 2007;65(5):167–177.

28. Ryskina KL, Pesko MF, Gossey JT, Caesar EP, Bishop TF. Brand name statin prescribing in a resident ambulatory practice: implications for teaching cost-conscious medicine. J Grad Med Educ. 2014;6(3):484–488. doi: 10.4300/JGME-D-13-00412.1.

29. Ryskina KL, Dine CJ, Kim EJ, Bishop TF, Epstein AJ. The effect of attending practice style on generic medication prescribing by residents in the clinic setting. J Gen Intern Med. 2015;30(9):1286–1293. doi: 10.1007/s11606-015-3323-5.

30. Dine CJ, Bellini LM, Diemer G, et al. Assessing correlations of physicians' practice intensity and certainty during residency training. J Grad Med Educ. 2015;7(4):603–609. doi: 10.4300/JGME-D-15-00092.1.

31. Ivers N, Jamtvedt G, Flottorp S, et al. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2012(6):CD000259.

32. Iturrate E, Jubelt L, Volpicelli F, Hochman K. Optimize your electronic medical record to increase value: reducing laboratory overutilization. Am J Med. 2016;129(2):215–220. doi: 10.1016/j.amjmed.2015.09.009.

33. Neilson EG, Johnson KB, Rosenbloom ST, et al. The impact of peer management on test-ordering behavior. Ann Intern Med. 2004;141(3):196–204. doi: 10.7326/0003-4819-141-3-200408030-00008.

34. Stammen LA, Stalmeijer RE, Paternotte E, et al. Training physicians to provide high-value, cost-conscious care: a systematic review. JAMA. 2015;314(22):2384–2400. doi: 10.1001/jama.2015.16353.
