Abstract

Studies have linked Accountable Care Organizations (ACOs) to improved primary care, but there is little research on how ACOs affect care in other settings. We examined whether Medicare ACOs have improved hospital quality of care, specifically focusing on preventable inpatient mortality. We used 2008-2014 Healthcare Cost and Utilization Project hospital discharge data from 34 states’ Medicare ACO and non-ACO hospitals in conjunction with data from the American Hospital Association Annual Survey and the Survey of Care Systems and Payment. We estimated discharge-level logistic regression models that measured the relationship between ACO affiliation and mortality following admissions for acute myocardial infarction, abdominal aortic aneurysm (AAA) repair, coronary artery bypass grafting, and pneumonia, controlling for patient demographic mix, hospital, and year. Our results suggest that, on average, affiliation with a Medicare ACO is not associated with lower mortality rates for the studied Inpatient Quality Indicator (IQI) conditions. Stakeholders may wish to consider providing ACOs with incentives or designing new programs for ACOs to target inpatient mortality reductions.

Keywords: Medicare, Accountable Care Organizations, inpatients, mortality, quality of health care, continuity of patient care, logistic models, Healthcare Cost and Utilization Project

What do we already know about this topic?

There is some (mixed) evidence showing how Medicare Accountable Care Organizations (ACOs) are implementing organizational change and improving cost and quality on graded outcomes.

How does your research contribute to the field?

Our study is the first, to our knowledge, to examine the association between Medicare ACO affiliation and inpatient quality measures on which hospitals are not explicitly evaluated by the Medicare ACO programs.

What are your research’s implications toward theory, practice, or policy?

ACOs are supposed to effect broad-based organizational change; however, if ACOs are not improving quality for outcomes on which they are not explicitly evaluated by the ACO program, this would suggest that the ACO model is not as comprehensive as it was intended to be and that more targeted programs might be needed to improve quality along other dimensions.

Introduction

Accountable Care Organizations (ACOs) are groups of physicians, hospitals, and other health care professionals and facilities that come together to provide a continuum of care for an attributed population, typically with financial incentives to keep costs down. The goal of the ACO model is to achieve the triple aim of improving quality, reducing costs, and improving beneficiary health. The number of ACOs has grown rapidly, from 64 at the beginning of 2011 to 838 in 2016.1 Over half of this growth is due to Medicare ACOs, which were authorized by the Affordable Care Act. Commercial and Medicaid ACO arrangements have also been proliferating.

ACOs are broadly responsible for cost and quality across the continuum of care, which includes primary care, specialty care, and care received in outpatient and residential facilities and hospitals. As part of this mission, ACOs have focused in large part on primary care through efforts such as encouraging use of patient-centered medical homes,2,3 a delivery model whereby the primary care physician coordinates patient treatment that is comprehensive, team based, coordinated, accessible, and focused on quality and safety. ACO improvements in Consumer Assessment of Healthcare Providers and Systems scores, screening rates, and preventive care metrics suggest that ACO efforts to improve primary care are achieving the desired results.1,4

What is less well known is whether ACOs have improved care quality along peripheral dimensions they are not explicitly evaluated on. There is some evidence that ACOs are focusing on improving behavioral health care5 and are better managing post-acute care.6 But little is known about how ACOs impact inpatient care quality.

In this study, we used 2008-2014 hospital discharge data from 34 states to assess whether Medicare ACOs have improved inpatient quality by comparing discharges from hospitals that joined Medicare ACOs against discharges from hospitals that did not join ACOs. We examined mortality outcomes following admission for acute myocardial infarction (AMI), coronary artery bypass grafting (CABG), pneumonia, and abdominal aortic aneurysm (AAA) repair. Hospital inpatient deaths following admissions for these conditions are generally considered preventable with better inpatient care.

New Contribution

Our study is the first, to our knowledge, to examine the impact of ACOs on a set of quality dimensions beyond those that ACOs are required to report as part of Medicare’s shared savings calculations. Previous studies looked at ACO performance only with respect to quality metrics on which they are graded.7-9 Our results will help stakeholders understand whether Medicare ACOs are impacting quality beyond the metrics for which they are directly accountable. It is of interest to ensure that hospitals do not focus on graded care metrics to the detriment of other quality aspects.

Conceptual Framework

There are several reasons why Medicare ACOs may reduce inpatient mortality. Hospitals are typically part of ACO leadership or key partners, so they may push to prioritize hospital quality issues if they believe the ACO infrastructure can be used to help address them. Hospitals are known to have serious quality issues that can increase the risk of death, including medical errors, avoidable complications, and hospital-acquired infections.10,11 Medicare ACOs in particular may focus on reducing inpatient mortality because of its higher prevalence among the Medicare population than among the general populace.

Many ACOs have set out to create a “culture of quality improvement” across all their partners. For example, members of the Premier Health Care Alliance’s Accountable Care Collaborative have identified 6 components that they believe are essential to ACO success, 3 of which involve cultural changes within a hospital.12 The first, people-centered foundations, requires every level of an ACO to focus not just on care coordination but also on involving individuals in the management of their diagnosis. An example of this takes place at the Billings Clinic in Montana, a member of the Accountable Care Collaborative, which instituted a scorecard for diabetic patients, holding both doctors and patients responsible for measures throughout the year. A second change identified as necessary in a successful ACO is strong leadership to implement the cultural transformations. To this end, Summa Health Systems, another member of the Accountable Care Collaborative, instituted a governing board that consists of partners from across the ACO. This governing board is responsible for overseeing the ACO and includes committees focused on quality improvement within the ACO, as well as the overall financial viability of the ACO. The final cultural change indicated as a requirement for a successful ACO is population health and data management, which entails cultural transformation at both the leadership and practitioner level. Changes are required to support a workflow for individuals within an ACO that not only encourages the collection of data to assess progress but also creates a clinical agenda that focuses on the utilization of evidence-based care protocols. 
OSF HealthCare, a Pioneer ACO, attributes savings of $9 million to changes in the cultural transformation that they made regarding data analytics.13 ACOs invest in infrastructure that can lead to quality improvement throughout the care continuum, including dedicated staff, information systems, data-sharing mechanisms, predictive data analytics, and new communication channels to spread best practices. We hypothesize that these broad-based cultural and organizational changes ACOs are engaging in may reduce error rates across the spectrum of care provided by hospitals participating in ACOs and thus have beneficial impacts with respect to reducing Inpatient Quality Indicator (IQI) mortality rates.

On the other hand, there are some reasons to think that ACO hospitals might not reduce inpatient mortality more than non-ACO hospitals. Most saliently, there are no Medicare ACO quality metrics focused on inpatient mortality. Centers for Medicare & Medicaid Services (CMS) shared savings quality metrics focus on patient/caregiver experience, care coordination/patient safety, preventive health, and at-risk populations.14 The shared savings quality metrics may lead providers to focus less on inpatient mortality than on these other categories. It also bears emphasis that improving care quality is difficult and often proceeds slowly. Improvements in inpatient mortality may be especially difficult if the hospital had already made large gains in reducing mortality before joining or forming an ACO.

Methods

Data

We conducted a secondary data analysis of hospital discharge data to assess whether joining a Medicare ACO was associated with a reduction in hospital inpatient mortality for preventable conditions. We extracted hospital inpatient discharge records from the 34 states that contributed data to the Healthcare Cost and Utilization Project (HCUP) State Inpatient Databases (SID) continuously over 2008-2014. The 34 included states are Arizona, Arkansas, California, Colorado, Connecticut, Florida, Georgia, Hawaii, Illinois, Indiana, Iowa, Kansas, Kentucky, Maryland, Michigan, Minnesota, Missouri, Nebraska, Nevada, New Jersey, New York, North Carolina, Oklahoma, Oregon, South Carolina, South Dakota, Tennessee, Texas, Vermont, Virginia, Washington, West Virginia, Wisconsin, and Wyoming. The SID, sponsored by the Agency for Healthcare Research and Quality (AHRQ), contain the universe of hospital discharge abstracts from community nonrehabilitation (CNR) hospitals in participating states. Some states provide data from other types of hospitals, but for consistency, we limited our analysis to CNR, non-long-term-care (non-LTAC) hospitals. Each SID record provides information about the patient’s age and sex, diagnoses recorded, procedures performed during the hospital stay, status at discharge, and expected primary payer. The HCUP databases are consistent with the definition of limited data sets under the Health Insurance Portability and Accountability Act Privacy Rule and contain no direct patient identifiers. The AHRQ institutional review board does not consider use of HCUP data to constitute human subjects research.

The American Hospital Association (AHA) surveys all hospitals in the country annually, collecting information on hospital type (eg, whether it is a CNR non-LTAC hospital). A linkage file developed by AHRQ links hospitals between the AHA and HCUP databases. Starting with the 2011 survey, the AHA began asking hospitals whether they participate in an ACO and if so, whether they participate in an ACO serving Medicare, commercial, Medicaid, or other populations. Hospitals may participate in multiple ACOs.

In 2013, the AHA launched a new survey, the Survey of Care Systems and Payment (SCSP), which asks detailed questions about care coordination processes and payment models and additional questions specifically about hospitals’ ACO implementation. In this survey, hospitals with an ACO are asked to characterize their ACO governance as physician-led, hospital-led, led jointly by hospitals and physicians (jointly led), or one of several other options.

Measures

Outcomes

Our outcome measures were IQIs that do not depend on present-on-admission indicators. We used these IQIs because not all states provide present-on-admission indicators, and these IQIs provide a sufficient number of qualifying admissions to generate reliable mortality rate estimates from a large number of hospitals. IQIs capture adverse events experienced by patients admitted for specific conditions that could have been prevented with adequate inpatient care, so they can be interpreted as measures of hospital quality. We studied mortality IQIs following admissions for AMI, AAA repair, CABG, and pneumonia.

Predictors

The key predictors in our analysis are year-specific indicators interacted with indicators for the hospital being currently affiliated with a Medicare ACO, which is the only type of ACO hospital we study. Thus, these interaction terms estimate the average impact of ACO affiliation separately in each year the ACO was active. In a subanalysis, we further stratified ACOs into 2 types on the basis of governance structure: (1) hospital-led or jointly led by hospital and physician group or (2) other-led. Other-led includes, most prominently, physician-led ACOs in which the hospital did not also take a leadership role. Because the SCSP from which ACO governance structure information was extracted did not exist before 2013, we treated governance as a fixed attribute: If a hospital indicated hospital or joint leadership in the 2013 or 2014 SCSP, we assigned the hospital to that group. Otherwise, if the hospital indicated ACO participation in any year, we considered it to be other-led.
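The governance assignment rule described above can be sketched as a small function. This is a minimal illustration, not the authors' code: the function name, the dictionary inputs, and the response labels (`"hospital-led"`, `"jointly led"`) are hypothetical stand-ins for however the survey responses are actually coded.

```python
from typing import Dict, Optional

# Hypothetical labels; the survey's actual response coding is not given in the text.
HOSPITAL_OR_JOINT = {"hospital-led", "jointly led"}


def assign_governance(
    aco_by_year: Dict[int, bool],
    scsp_leadership: Dict[int, Optional[str]],
) -> str:
    """Classify a hospital into one fixed governance group.

    aco_by_year: year -> whether the hospital reported Medicare ACO participation.
    scsp_leadership: SCSP year -> reported leadership type (None if missing).
    """
    # Rule 1: hospital or joint leadership reported in the 2013 or 2014 SCSP.
    if any(scsp_leadership.get(year) in HOSPITAL_OR_JOINT for year in (2013, 2014)):
        return "hospital_or_jointly_led"
    # Rule 2: otherwise, any reported ACO participation is treated as other-led.
    if any(aco_by_year.values()):
        return "other_led"
    # Hospitals that never reported ACO participation form the comparison group.
    return "non_aco"
```

Because the group is derived once per hospital rather than per year, the same label applies to all of that hospital's discharges, including those before 2013.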

In sensitivity analyses, we allowed for differences in pre-2011 time trends between non-ACO hospitals and hospitals that went on to join ACOs, to account for the possibility that preexisting trends in IQI mortality rates may have influenced the hospital’s decision to join an ACO. However, these trends yielded implausible implied counterfactual outcome trajectories for the hospitals that went on to join ACOs (with quadratic trend difference specifications) or yielded largely insignificant coefficients on the time trend differentials (with a linear specification), so the primary results presented here do not include these trend differential terms. However, the results from these sensitivity runs are available from the authors upon request.

We controlled for the demographic profile of each discharge using 5-year age bins interacted with sex, noting that our analysis was restricted to patients aged 65 years or older and with Medicare as their primary expected payer. The models also included hospital random effects and year fixed effects.
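As a rough illustration of the demographic control, each discharge can be mapped to a single age-by-sex cell. The function name and label format below are hypothetical, and the sketch assumes no top-coding of the oldest ages, which the text does not specify.

```python
def demographic_bin(age: int, sex: str) -> str:
    """Return a label for the 5-year age bin interacted with sex, e.g. '65-69_F'."""
    if age < 65:
        # The analysis sample is limited to patients aged 65 years or older.
        raise ValueError("analysis is restricted to patients aged 65 years or older")
    lower = (age // 5) * 5  # lower edge of the 5-year bin containing this age
    return f"{lower}-{lower + 4}_{sex}"
```

In the regression, each distinct label would correspond to one indicator variable, so a discharge contributes exactly one demographic coefficient.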

Analysis

We linked discharges at hospitals in the HCUP SID to the hospital information in the AHA Annual Survey from 2008 to 2014 and to the AHA SCSP in 2013 and 2014. We limited our analysis to discharges from CNR hospitals that consistently reported data over the entire period and, as noted above, to discharges of patients aged 65 years or older with Medicare as the primary expected payer. Among hospitals that indicated any ACO affiliation, we excluded those that did not have a Medicare ACO. For each outcome, we excluded discharges from hospitals in a given year that had fewer than 10 qualifying denominator admissions from the Medicare 65+ years population. Among hospitals participating in ACOs, we further limited our data set to include only Medicare ACO hospitals that reported their ACO participation as beginning in fiscal year 2011; this allowed us to better track the dynamics of ACO impacts as hospitals gained more experience under the ACO paradigm by holding the composition of ACO hospitals fixed. We note that although the first Medicare ACO program did not start until January 2012, the proposed rule provisions for ACOs under the Medicare Shared Savings Program were announced in March 2011 and CMS issued a request for applications for the Pioneer ACO program in May 2011. Thus, the hospitals that indicated Medicare ACO participation in the 2011 survey most likely began implementing organizational changes to conform with the ACO requirements prior to the formal start of the Medicare ACO programs. Hospitals that participated in ACOs but later dropped out were included only for the years in which they were active in the ACO.
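The minimum-volume exclusion can be sketched as follows. This is an illustrative sketch only: it assumes the input already contains just the qualifying denominator admissions for one outcome, and the record keys `hospital_id` and `year` are hypothetical names.

```python
from collections import Counter
from typing import Dict, List

MIN_ADMISSIONS = 10  # threshold stated in the text


def filter_small_hospital_years(discharges: List[Dict]) -> List[Dict]:
    """Drop discharges from hospital-years with fewer than MIN_ADMISSIONS records."""
    # Count qualifying admissions per (hospital, year) cell.
    counts = Counter((d["hospital_id"], d["year"]) for d in discharges)
    # Keep only discharges whose hospital-year meets the threshold.
    return [
        d for d in discharges
        if counts[(d["hospital_id"], d["year"])] >= MIN_ADMISSIONS
    ]
```

Applying the filter per outcome explains why hospital counts in the sample differ by condition and year.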

For years in which responses from both the AHA Annual Survey and the AHA SCSP were available, hospitals were deemed to have participated in a Medicare ACO that year if they indicated Medicare ACO participation on either survey. For years in which a hospital’s ACO participation status was missing, we assigned it the value of the hospital’s most recent nonmissing response. We assigned nonparticipant status to missing responses that preceded all nonmissing responses.
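The rules for combining the two surveys and filling missing responses can be expressed compactly. The sketch below is an assumption-laden illustration: the function name and the dictionary representation (year mapped to `True`, `False`, or `None` for missing) are hypothetical.

```python
from typing import Dict, Iterable, Optional


def resolve_aco_status(
    annual: Dict[int, Optional[bool]],
    scsp: Dict[int, Optional[bool]],
    years: Iterable[int],
) -> Dict[int, bool]:
    """Resolve yearly Medicare ACO participation from two survey sources."""
    resolved = {}
    # Missing responses that precede all nonmissing responses count as nonparticipation.
    last_known = False
    for year in sorted(years):
        responses = [r for r in (annual.get(year), scsp.get(year)) if r is not None]
        if responses:
            # Participation indicated on either survey counts as participation.
            last_known = any(responses)
        # With no response this year, carry forward the most recent nonmissing value.
        resolved[year] = last_known
    return resolved
```

For example, a hospital that reports participation in 2011 and skips the 2012 survey is treated as a participant in 2012.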

Our analytic goal was to understand the relationship between ACO participation and the probability of mortality for individuals admitted with IQI-associated conditions and how this relationship evolved as ACOs gained more experience over time. We implemented the following regression design for each IQI measure:

$$E[y_i \mid \cdot] = \Lambda(\beta_d + \mu_h + \lambda_t + \delta_{at})$$

In the above model, $E[\,]$ is the expectation operator, with $\mid \cdot$ denoting conditioning on all the right-hand side terms. $i$ indexes discharges, each of which is associated with an age group by sex demographic profile ($d$), hospital ($h$), year ($t$), and ACO status of the associated hospital ($a$). $y$ is the outcome, a binary indicator for whether the IQI admission resulted in mortality. $\Lambda$ is the logistic function. Subscripted coefficients denote vectors of fixed effects along with their coefficients: $\beta_d$ represents a vector of demographic indicators and their corresponding coefficients, with all indicators set to zero except for the entry of the demographic bin into which the discharge falls. Similarly, $\mu_h$ is a vector of hospital indicators and random coefficients, and $\lambda_t$ is a vector of year indicators and coefficients. In the main analysis, $a$ contains indicators for ACO hospital and non-ACO hospital status; in the subanalysis in which ACOs are stratified by governance structure, $a$ contains indicators for hospital-led or jointly led ACOs, other-led ACOs, and non-ACO hospitals. The primary term we are interested in is the vector $\delta_{at}$, which contains the indicator variables and corresponding coefficients for ACO affiliation in each post-ACO year over 2011-2014.
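To make the link between the model's ACO-by-year coefficient and the reported odds ratios concrete, the sketch below evaluates the logistic function at a sum of demographic, hospital, year, and ACO terms. All numeric values are made up for illustration and are not estimates from the study; the function names are likewise hypothetical.

```python
import math


def logistic(x: float) -> float:
    """Standard logistic function mapping a linear index to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def mortality_probability(beta_d: float, mu_h: float, lambda_t: float,
                          delta_at: float = 0.0) -> float:
    """Expected mortality for one discharge given its additive model terms."""
    return logistic(beta_d + mu_h + lambda_t + delta_at)


def odds(p: float) -> float:
    """Convert a probability to odds."""
    return p / (1.0 - p)


# Illustrative values only (not taken from the paper's results).
baseline = mortality_probability(beta_d=-2.5, mu_h=0.1, lambda_t=-0.2)
with_aco = mortality_probability(beta_d=-2.5, mu_h=0.1, lambda_t=-0.2, delta_at=-0.1)

# Adding delta_at to the index multiplies the odds by exp(delta_at),
# which is why exponentiated coefficients are read as odds ratios.
odds_ratio = odds(with_aco) / odds(baseline)
```

An odds ratio of 1 therefore corresponds to a coefficient of zero, that is, no estimated difference between ACO and non-ACO hospitals in that year.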

Results

Our sample included between 11 547 discharges for AAA repair in 2010 and 232 637 discharges for pneumonia in 2008, with counts varying by IQI and year (Table 1). Hospital counts also varied by year and condition, with hospital-led or jointly led ACO counts ranging from 13 to 16, other-led ACO counts ranging from 26 to 64, and non-ACO counts ranging from 424 to 2015. Mortality rates declined during the time period for all 4 conditions examined.

Table 1.

Inpatient Quality Indicator Mortality Counts and Rates.

| | 2008 | 2009 | 2010 | 2011 | 2012 | 2013 | 2014 |
|---|---|---|---|---|---|---|---|
| AAA | | | | | | | |
| Discharge count | 11 883 | 11 832 | 11 547 | 11 724 | 11 945 | 11 806 | 11 743 |
| Mortality rate (%) | 5.08 | 4.70 | 4.57 | 3.97 | 3.93 | 3.61 | 3.34 |
| Hospital/jointly led ACO hospital count | 14 | 14 | 14 | 13 | 14 | 13 | 13 |
| Other-led ACO hospital count | 35 | 35 | 35 | 33 | 34 | 34 | 35 |
| Non-ACO hospital count | 671 | 664 | 662 | 649 | 665 | 651 | 641 |
| CABG | | | | | | | |
| Discharge count | 54 335 | 52 469 | 51 112 | 49 101 | 49 488 | 49 831 | 49 076 |
| Mortality rate (%) | 4.13 | 3.76 | 3.76 | 3.88 | 3.76 | 3.49 | 3.56 |
| Hospital/jointly led ACO hospital count | 13 | 13 | 13 | 14 | 13 | 13 | 13 |
| Other-led ACO hospital count | 28 | 28 | 28 | 27 | 27 | 26 | 26 |
| Non-ACO hospital count | 424 | 428 | 436 | 444 | 451 | 447 | 447 |
| AMI | | | | | | | |
| Discharge count | 117 363 | 113 445 | 115 626 | 116 677 | 122 447 | 122 188 | 121 437 |
| Mortality rate (%) | 9.27 | 8.79 | 8.55 | 8.24 | 7.90 | 7.47 | 7.26 |
| Hospital/jointly led ACO hospital count | 16 | 15 | 16 | 16 | 16 | 16 | 15 |
| Other-led ACO hospital count | 62 | 64 | 62 | 61 | 63 | 61 | 60 |
| Non-ACO hospital count | 1847 | 1823 | 1814 | 1784 | 1791 | 1762 | 1717 |
| Pneumonia | | | | | | | |
| Discharge count | 232 637 | 217 420 | 220 481 | 225 096 | 218 390 | 216 709 | 197 687 |
| Mortality rate (%) | 5.46 | 5.24 | 5.02 | 4.82 | 4.56 | 4.43 | 4.08 |
| Hospital/jointly led ACO hospital count | 16 | 16 | 16 | 16 | 16 | 16 | 16 |
| Other-led ACO hospital count | 63 | 63 | 63 | 64 | 63 | 64 | 63 |
| Non-ACO hospital count | 1983 | 1990 | 2004 | 2007 | 2015 | 2010 | 2009 |

Note. For ACO hospitals, ACOs began in 2011. AAA—average age: 76.1, percent female: 22.1. AMI—average age: 79.9, percent female: 52.0. CABG—average age: 73.7, percent female: 31.4. Pneumonia—average age: 80.2, percent female: 54.5. ACO = Accountable Care Organization; AAA = abdominal aortic aneurysm; AMI = acute myocardial infarction; CABG = coronary artery bypass grafting.
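As a quick check on the overall decline in mortality, the 2008 and 2014 rates from Table 1 can be compared directly. The sketch below only compares the endpoint years and ignores year-to-year fluctuation (for example, the CABG rate rose in 2011 before falling again); the variable and function names are illustrative.

```python
# Mortality rates (%) in 2008 and 2014, copied from Table 1.
RATES_2008_2014 = {
    "AAA": (5.08, 3.34),
    "CABG": (4.13, 3.56),
    "AMI": (9.27, 7.26),
    "Pneumonia": (5.46, 4.08),
}


def relative_decline(start: float, end: float) -> float:
    """Fractional decline from the start rate to the end rate."""
    return (start - end) / start


# Relative decline in the pooled mortality rate for each condition.
declines = {
    condition: relative_decline(start, end)
    for condition, (start, end) in RATES_2008_2014.items()
}

for condition, decline in declines.items():
    print(f"{condition}: {decline:.1%} relative decline, 2008 to 2014")
```

These are pooled rates across ACO and non-ACO hospitals, so they describe the secular trend that the year effects in the regression are meant to absorb, not an ACO effect.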

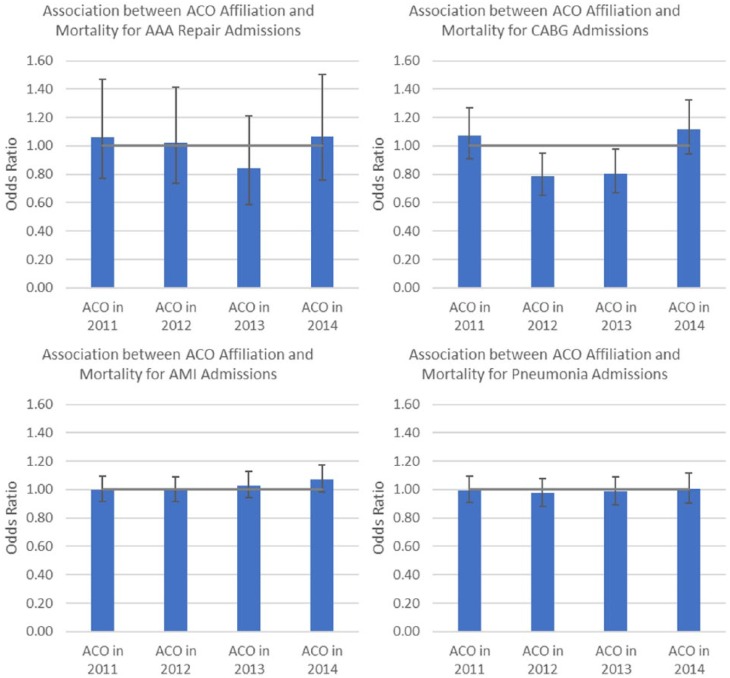

We first discuss regression results from the main analyses in which all ACOs were grouped together, presented in Figure 1. Full regression results are available in the supplemental online appendix. For AAA repair, the odds ratios (ORs) associated with joining an ACO did not differ substantively or statistically from 1 (ie, no effect), with the exception of the 2013 ACO effect, for which the OR differed substantively (OR = 0.84) but not statistically (P = .26) from 1. For CABG, the ACO effects did not exhibit a monotonic pattern; OR point estimates fluctuated from 1.07 (P = .40) in 2011 to 0.79 (P = .01) in 2012, 0.81 (P = .03) in 2013, and 1.12 (P = .20) in 2014, with only the 2012 and 2013 estimates achieving point-wise statistical significance. Given the fluctuating point estimates and the nonsignificant estimates in the surrounding years, it would be a stretch to interpret the significant middle-year effects as evidence of a true underlying association. For AMI and pneumonia, none of the estimated ACO effects deviated substantively or statistically from 1.
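To give a sense of scale for the odds ratios reported above, an OR can be converted into an implied mortality probability at a chosen baseline. The sketch below applies the 2012 CABG OR of 0.79 to the pooled 2012 CABG mortality rate of 3.76% from Table 1. Using the pooled rate as the baseline is an assumption made purely for illustration, since the model's ORs are conditional on demographics, hospital, and year.

```python
def apply_odds_ratio(baseline_probability: float, odds_ratio: float) -> float:
    """Return the probability implied by scaling the baseline odds by an odds ratio."""
    baseline_odds = baseline_probability / (1.0 - baseline_probability)
    new_odds = baseline_odds * odds_ratio
    return new_odds / (1.0 + new_odds)


# Illustrative baseline: pooled 2012 CABG mortality rate from Table 1 (assumption).
baseline_cabg_2012 = 0.0376
implied = apply_odds_ratio(baseline_cabg_2012, odds_ratio=0.79)

print(f"Baseline: {baseline_cabg_2012:.2%}, implied at OR 0.79: {implied:.2%}")
```

Because mortality is rare for these conditions, the odds ratio is close to the risk ratio, so an OR of 0.79 corresponds to roughly a one-fifth reduction in risk at this baseline.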

Figure 1.

Logistic regressions of hospital inpatient mortality among admissions for select conditions: ACOs without stratification.

Note. The odds ratios presented in this figure were obtained from logistic regressions controlling for age group (in 5-year bins) interacted with sex, and year indicators and hospital random effects. ACO = Accountable Care Organization; AAA = abdominal aortic aneurysm; AMI = acute myocardial infarction; CABG = coronary artery bypass grafting.

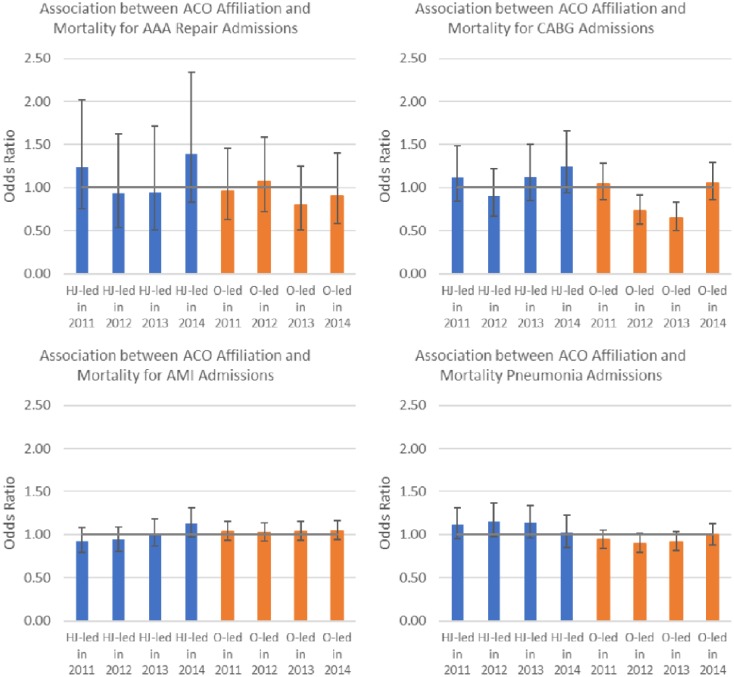

For the regressions in which ACOs are stratified by leadership, the results of which are presented in Figure 2, there were some differences in point estimates between the ACO effects estimated for hospital-led or jointly led ACOs and the corresponding ACO effects estimated for other-led ACOs. However, the majority of these differences were not statistically significant, nor did they exhibit any clear pattern. For example, considering AAA repair in 2011, the point estimate OR for hospital-led or jointly led ACOs was 1.24 with a P value of .38, while the point estimate OR for other-led ACOs was 0.96 with a P value of .85. In 2012, the directions of the OR point estimates relative to 1 were reversed for both groups compared with their 2011 counterparts: The point estimate OR for hospital-led or jointly led ACOs was 0.94 with a P value of .81, while the point estimate OR for other-led ACOs was 1.07 with a P value of .73. For CABG admissions, the OR point estimates for other-led ACOs achieved statistical significance in 2012 and 2013, but in the context of all the other nonsignificant estimates and lack of substantive patterns, one is hard-pressed to interpret these estimates as indicative of a true underlying effect.

Figure 2.

Logistic regressions of hospital inpatient mortality among admissions for select conditions: ACOs stratified by leadership.

Note. The odds ratios presented in this figure were obtained from logistic regressions controlling for age group (in 5-year bins) interacted with sex, and year indicators and hospital random effects. ACO = Accountable Care Organization; AAA = abdominal aortic aneurysm; AMI = acute myocardial infarction; CABG = coronary artery bypass grafting; HJ-led = hospital-led or jointly led by hospital and physician groups; O-led = other-led.

Discussion

Our findings suggest that ACOs have not had a noticeable impact on hospital inpatient mortality rates, at least on average. Instead, we found that ACO hospitals and non-ACO hospitals have similar mortality rate trends. These results suggest that ACOs may not be improving quality across the complete care continuum, as intended by their mission.

Despite the fact that avoidable inpatient mortality is a known issue and there are clear interventions that can reduce hospital inpatient mortality rates,15 there are reasons ACOs may not be focusing on disseminating these interventions to partner hospitals. As previously noted, hospitals may have already established infrastructure and programs to minimize preventable deaths. Hospitals have to report these metrics to CMS, and the metrics are included in public reporting efforts such as CMS Hospital Compare. ACOs may not have much to add to make these programs more successful. In fact, there may be little room for improvement in mortality rates at some hospitals. Another possibility is that ACOs may not have a direct way to engage hospital staff in efforts to reduce inpatient mortality rates. Hospital staff and specialists and surgeons with admission privileges may not be engaged in ACO activities. In addition, ACOs may partner with multiple hospitals, so efforts may be time-consuming and costly. Perhaps most important, as mentioned earlier, inpatient mortality is not a measure for which ACOs are held accountable.

Even though ACOs may not have a clear path to reducing hospital mortality, they have discretion over which hospitals are included as partners and may selectively identify high-performing hospitals, such as those that have lower mortality rates. However, our analysis provided no compelling evidence that ACO hospitals had lower inpatient mortality than non-ACO hospitals prior to ACO formation.

Our study has limitations that are important to consider when interpreting results. We relied on hospital reporting of ACO participation in the AHA Annual Survey. Although there was a very high response rate to the AHA survey, some respondents may not have accurately reported ACO participation. Also, there may be biasing characteristics associated with hospitals in ACOs; for example, higher quality hospitals may be more likely to become ACOs. However, when looking at pre-2011 trends of ACO and non-ACO hospitals, we did not find evidence that this was the case. In addition, we were not able to perform a selective assessment of inpatient mortality rates of ACO beneficiaries. Rather, we examined inpatient mortality rate trends of all Medicare patients. We may not have been able to detect change if ACOs adopted interventions to reduce mortality rates specifically for their ACO beneficiaries. However, it would be difficult for hospitals to focus efforts to reduce preventable mortality on a single patient group. Finally, it is important to note that particular ACO implementation strategies may be more successful at reducing inpatient mortality than others, which our analysis of average impacts might not have sufficient power to detect.

Our study had several strengths. We used longitudinal data (2008-2014) from the universe of hospital discharges in 34 states. We used nationally endorsed AHRQ IQI mortality rate specifications. We limited our analysis to Medicare ACOs, hypothesizing that these ACOs were most likely to have focused on mortality rates, because Medicare beneficiaries are most likely to be at risk for inpatient mortality.

In conclusion, our results suggest that the ACOs may not be associated with reductions in preventable inpatient mortality rates. It is possible that if inpatient mortality were added to the list of Medicare ACO quality measures, ACOs would focus more attention on reducing mortality. Because there are known gaps in quality at hospitals that contribute to mortality, policy makers may want to consider that possibility. The potential problem with adding inpatient mortality to the quality measure set is that some physician-led ACOs do not have hospital partners or have more limited relationships with hospitals in their networks. These ACOs may feel pressured to expand their core partners to include hospitals, which could reduce the benefit associated with being a physician-led ACO, which current results suggest is the most promising ACO configuration.1,16 It is also possible that expanding the quality measure set would add to the already heavy burden of data collection and reporting. Finally, it is important to note that even though we did not find ACOs to be improving the quality of hospital inpatient care on average, it is possible that particular ACO implementation strategies may be more successful than others, which our analysis of average impacts might not have detected.

Supplemental Material

Supplemental material, Supplemental_Tables for The Effects of Medicare Accountable Organizations on Inpatient Mortality Rates by Eli Cutler, Zeynal Karaca, Rachel Henke, Michael Head and Herbert S. Wong in INQUIRY: The Journal of Health Care Organization, Provision, and Financing

Acknowledgments

We acknowledge Minya Sheng for expert programming and Paige Jackson for editorial assistance. We thank 3 anonymous referees for their helpful comments. We also acknowledge the following Healthcare Cost and Utilization Project (HCUP) Partners: Arkansas Department of Health, Arizona Department of Health Services, California Office of Statewide Health Planning & Development, Colorado Hospital Association, Connecticut Hospital Association, Florida Agency for Health Care Administration, Georgia Hospital Association, Hawaii Health Information Corporation, Iowa Hospital Association, Illinois Department of Public Health, Indiana Hospital Association, Kansas Hospital Association, Kentucky Cabinet for Health and Family Services, Maryland Health Services Cost Review Commission, Michigan Health & Hospital Association, Minnesota Hospital Association, Missouri Hospital Industry Data Institute, North Carolina Department of Health and Human Services, Nebraska Hospital Association, Nevada Center for Health Information Analysis, New Jersey Department of Health, New York State Department of Health, Oklahoma State Department of Health, Oregon Association of Hospitals and Health Systems, South Carolina Revenue and Fiscal Affairs Office, South Dakota Association of Healthcare Organizations, Tennessee Hospital Association, Texas Health Care Information Collection, Virginia Health Information, Vermont Association of Hospitals and Health Systems, Washington State Department of Health, West Virginia Health Care Authority, Wisconsin Department of Health Services, and Wyoming Hospital Association.

Footnotes

Authors’ Note: The views expressed in this article are those of the authors and do not necessarily reflect those of the Agency for Healthcare Research and Quality or the U.S. Department of Health and Human Services. The authors have no conflict of interest.

Declaration of Conflicting Interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This study was funded by the Agency for Healthcare Research and Quality (AHRQ) under contract no. HHSA-290-2013-00002-C to develop and support the Healthcare Cost and Utilization Project (HCUP).

Supplementary Materials: We used State inpatient data from the Healthcare Cost and Utilization Project (HCUP) for this study. For information about how to obtain a copy of these data, please visit https://www.hcup-us.ahrq.gov.

ORCID iDs: Eli Cutler  https://orcid.org/0000-0003-3438-6821


Rachel Henke  https://orcid.org/0000-0001-6438-4662

https://orcid.org/0000-0001-6438-4662

References

- 1. Muhlestein D, McClellan M. Accountable Care Organizations in 2016: private and public-sector growth and dispersion. Health Affairs Blog. http://healthaffairs.org/blog/2016/04/21/accountable-care-organizations-in-2016-private-and-public-sector-growth-and-dispersion/. Published April 21, 2016. Accessed August 13, 2018.

- 2. L&M Policy Research. Evaluation of CMMI Accountable Care Organization Initiatives: Pioneer ACO Evaluation Findings From Performance Years One and Two. https://innovation.cms.gov/Files/reports/PioneerACOEvalRpt2.pdf. Published March 10, 2015. Accessed August 13, 2018.

- 3. Shortell SM, McClellan SR, Ramsay PP, Casalino LP, Ryan AM, Copeland KR. Physician practice participation in accountable care organizations: the emergence of the unicorn. Health Serv Res. 2014;49(5):1519-1536.

- 4. Centers for Medicare & Medicaid Services. Medicare Accountable Care Organizations 2015 Performance Year Quality and Financial Results. https://www.cms.gov/newsroom/mediareleasedatabase/fact-sheets/2016-fact-sheets-items/2016-08-25.html. Published 2016. Accessed August 13, 2018.

- 5. Fullerton CA, Henke RM, Crable EL, Hohlbauch A, Cummings N. The impact of Medicare ACOs on improving integration and coordination of physical and behavioral health care. Health Aff (Millwood). 2016;35(7):1257-1265.

- 6. Colla CH, Lewis VA, Bergquist SL, Shortell SM. Accountability across the continuum: the participation of postacute care providers in accountable care organizations. Health Serv Res. 2016;51:1595-1611.

- 7. Albright BB, Lewis VA, Ross JS, Colla CH. Preventive care quality of Medicare accountable care organizations: associations of organizational characteristics with performance. Med Care. 2016;54(3):326-335.

- 8. Jacob JA. Medicare ACOs improving quality of care: CMS report. JAMA. 2015;314(16):1683.

- 9. Spatz ES, Lipska KJ, Dai Y, et al. Risk-standardized acute admission rates among patients with diabetes and heart failure as a measure of quality of Accountable Care Organizations: rationale, methods, and early results. Med Care. 2016;54(5):528-537.

- 10. Kohn LT, Corrigan JM, Donaldson MS, eds. To Err Is Human: Building a Safer Health System. Washington, DC: National Academy Press; 2000.

- 11. Leape LL, Berwick DM. Five years after To Err Is Human: what have we learned? JAMA. 2005;293(19):2384-2390.

- 12. Forster AJ, Childs BG, Danmore JF, DeVore SD, Kroch EA, Lloyd DA. Accountable Care Strategies: Lessons From the Premier Health Care Alliance’s Accountable Care Collaborative. The Commonwealth Fund. https://www.commonwealthfund.org/sites/default/files/documents/___media_files_publications_fund_report_2012_aug_1618_forster_accountable_care_strategies_premier.pdf. Published 2012. Accessed August 13, 2018.

- 13. Hohulin M, Foulger R. How One Pioneer ACO Is Improving Healthcare Performance Through Analytics and Cultural Transformation. https://www.healthcatalyst.com/webinar/one-pioneer-aco-improving-healthcare-performance-analytics-cultural-transformation/. Published 2015. Accessed August 13, 2018.

- 14. Centers for Medicare & Medicaid Services. Table: 33 ACO Quality Measures. Date unknown. https://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/sharedsavingsprogram/Downloads/ACO-Shared-Savings-Program-Quality-Measures.pdf. Accessed August 13, 2018.

- 15. Whittington J, Simmonds T, Jacobsen D. Reducing Hospital Mortality Rates (Part 2). Cambridge, MA: Institute for Healthcare Improvement; 2005. (IHI Innovation Series White Paper).

- 16. McWilliams JM, Hatfield LA, Chernew ME, Landon BE, Schwartz AL. Early performance of accountable care organizations in Medicare. N Engl J Med. 2016;374(24):2357-2366.

Associated Data

Supplementary Materials

Supplemental material, Supplemental_Tables for The Effects of Medicare Accountable Organizations on Inpatient Mortality Rates by Eli Cutler, Zeynal Karaca, Rachel Henke, Michael Head and Herbert S. Wong in INQUIRY: The Journal of Health Care Organization, Provision, and Financing