Abstract

Vocal emotion perception is an important part of speech communication and social interaction. Although older adults with normal audiograms are known to be less accurate at identifying vocal emotion compared to younger adults, little is known about how older adults with hearing loss perceive vocal emotion or whether hearing aids improve the perception of emotional speech. In the main experiment, older hearing aid users were presented with sentences spoken in seven emotion conditions, with and without their own hearing aids. Listeners reported the words that they heard as well as the emotion portrayed in each sentence. The use of hearing aids improved word-recognition accuracy in quiet from 38.1% (unaided) to 65.1% (aided) but did not significantly change emotion-identification accuracy (36.0% unaided, 41.8% aided). In a follow-up experiment, normal-hearing younger listeners were tested on the same stimuli. Normal-hearing younger listeners and older listeners with hearing loss showed similar patterns in how emotion affected word-recognition performance but different patterns in how emotion affected emotion-identification performance. By contrast, previous studies have not found age-related differences between younger and older normal-hearing listeners in how emotion affected emotion-identification performance. These findings suggest that hearing loss causes changes to emotion identification beyond those that can be attributed to normal aging, and that hearing aids do not compensate for these changes.

Keywords: hearing loss, aging, emotions, speech intelligibility

Introduction

Successful communication depends on the perception of nonlinguistic as well as linguistic information. Nonlinguistic information can be conveyed through visual cues, including body gestures and facial expressions, or auditory cues, including emotional nonspeech vocalizations and emotional speech prosody. In the auditory domain, emotional nonspeech vocalizations include sounds such as laughter or sighs that convey emotion without using words, while in emotional speech, the same words may be spoken with different emotional prosodic cues that alter their meaning. For instance, the phrase "That went well" might be spoken with relief to convey that something did indeed go well, or it might be spoken with irony to convey quite the opposite meaning. A meta-analytic review has shown that older adults generally have greater difficulty than younger adults at identifying emotions from faces, body cues, and voices, though the magnitude of age-related differences varies across emotions and modalities (Ruffman, Henry, Livingstone, & Phillips, 2008). The underlying reasons for these general age-related differences in emotion identification are not yet clear. Neuroimaging studies show that brain structures involved in emotional processing such as the amygdala are fairly well preserved with age, but that older adults exhibit greater brain activity than younger adults in the prefrontal cortex during emotional processing tasks, suggesting that cognitive factors may play a role in age-related differences in performance (Nashiro, Sakaki, & Mather, 2012).
Nevertheless, studies that have examined the relationship between cognition and emotion identification have produced mixed results, with some studies showing that measures of fluid intelligence, vocabulary, and working memory predict the performance of older adults on auditory emotion identification tasks (Ruffman, Halberstadt, & Murray, 2009; Sen, Isaacowitz, & Schirmer, 2017), while other studies show that these measures do not predict performance on emotion identification tasks (Lambrecht, Kreifelts, & Wildgruber, 2012; Lima, Alves, Scott, & Castro, 2014). Finally, studies have also examined the role of sensory factors in age-related changes in emotion-identification performance, in particular, how audiometric pure-tone thresholds and psychoacoustic measures may be related to auditory emotion perception. In general, pure-tone thresholds do not predict the identification of emotional prosody in speech by older adults who have relatively normal hearing (Dupuis & Pichora-Fuller, 2015; Lambrecht et al., 2012; Mitchell, 2007). Likewise, fundamental frequency, level, and duration difference limens do not predict emotion-identification performance in older listeners with normal hearing (Dupuis & Pichora-Fuller, 2015), but they do predict older listeners’ ability to discriminate between happy and sad prosody (Mitchell & Kingston, 2014).

Thus far, studies that tested relatively normal-hearing older adults have not found strong effects of hearing ability on the perception of emotional speech prosody. However, studies that tested older adults with hearing loss have found clear effects of hearing ability on auditory emotion perception. When middle-aged adults were tested using affective sounds that included nonspeech vocalizations such as crying or laughter, listeners with mild to moderately severe hearing loss responded to affective sounds more slowly than listeners with normal hearing, and listeners with hearing loss also showed less limbic system activity in response to affective sounds compared to listeners with normal hearing (Husain, Carpenter-Thompson, & Schmidt, 2014). In another study that used similar affective sounds, older adults with mild-to-severe hearing loss had a smaller range of arousal and valence ratings and more negative valence ratings for pleasant sounds, compared to older adults with normal hearing (Picou, 2016a). Besides affecting the perception of nonspeech emotional sounds, hearing loss in older adults also seems to affect the perception of emotional prosodic cues in running speech. A recent study tested a group of older adults with a range of hearing profiles from normal-hearing to moderate hearing loss and showed that listeners with worse pure-tone average (PTA) audiometric thresholds rated segments of emotional conversational speech to have more positive valence (Schmidt, Janse, & Scharenborg, 2016).

Technological intervention using hearing aids is a standard treatment for hearing loss in adults regardless of age. It has been well established that hearing aids improve word recognition (for a systematic review, see Ferguson et al., 2017). However, it is not yet clear whether hearing aids also improve the perception of nonlinguistic speech information such as emotion, and there are at least two reasons why the benefit for word recognition might not carry over to emotion identification. First, the importance of different acoustic cues to the listener may change depending on the task. For instance, fundamental frequency is an important cue for identifying emotion (Scherer, 1995), but it is less important for word recognition (at least in a quiet listening environment). Conversely, formants provide key information for identifying vowels, but they are less important for emotion identification. Second, the changes that hearing aids make to the speech signal may be more or less helpful depending on whether the listener’s task is to recognize words or emotions. To improve speech intelligibility for most older adults, hearing aids provide amplification to increase the audibility of low-amplitude sounds, with frequency-specific increases in energy depending on the configuration of the hearing loss. In addition to amplification, hearing aids apply compression to prevent overamplification, depending on the individual’s loudness tolerance (e.g., more gain in higher frequencies for those with high-frequency hearing loss but more compression for those with greater loudness recruitment). Although amplification with amplitude compression may increase the overall audibility of the speech signal within the listener’s dynamic range, these alterations of the speech signal may hamper emotion recognition by decreasing the amplitude variation of utterances and by changing the speech spectrum. The ability of listeners with significant hearing loss to perceive vocal emotion has mostly been studied in cochlear implant users (for a review, see Jiam, Caldwell, Deroche, Chatterjee, & Limb, 2017).
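To make the point about compression concrete, the Python sketch below passes a few hypothetical syllable levels through a single-channel static compressor. The threshold (50 dB SPL), compression ratio (3:1), and gain (20 dB) are illustrative assumptions, not settings from any participant's hearing aid, and real hearing aids use multichannel compression with attack and release times that this sketch ignores. It shows only the arithmetic: above the threshold, a 23-dB spread of input levels shrinks to about 7.7 dB at the output, while every level is made louder.

```python
def compress_level(level_db, threshold_db=50.0, ratio=3.0, gain_db=20.0):
    """Output level (dB SPL) of a hypothetical single-channel static compressor.

    Below the threshold the gain is linear; above it, every `ratio` dB of
    input increase yields only 1 dB of output increase.
    """
    if level_db <= threshold_db:
        return level_db + gain_db
    return threshold_db + gain_db + (level_db - threshold_db) / ratio


def level_range(levels):
    """Difference between the loudest and softest values, in dB."""
    return max(levels) - min(levels)


# Hypothetical syllable levels (dB SPL) within one emotional utterance.
syllables_in = [55.0, 62.0, 70.0, 78.0]
syllables_out = [compress_level(x) for x in syllables_in]
print(level_range(syllables_in), round(level_range(syllables_out), 2))
```

The reduced output range is one way in which amplitude cues to emotion, such as the contrast between loud and soft syllables, could be flattened even as audibility improves.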
There has been much less work on emotion perception by hearing aid users, who would be expected to differ from cochlear implant users in their use of emotional cues such as pitch. In addition, most studies on vocal emotion perception by hearing aid users have tested listeners with hearing loss only in aided conditions (e.g., Most & Aviner, 2009; Waaramaa, Kukkonen, Stoltz, & Geneid, 2016). As expected, these studies showed that hearing aid users performed worse on emotion-identification tasks than their peers with normal hearing. Without within-subject comparisons of unaided and aided performance, however, it is not clear how much hearing aids compensate for hearing loss in terms of emotion perception.

Two studies so far have tested the perception of emotional speech in hearing aid users with and without their hearing aids, with both studies using the dimensional approach to emotion perception. In the dimensional approach, participants rate emotional stimuli on arousal and valence dimensions, with arousal referring to the mobilization of energy, ranging from calm to excited, and valence referring to the hedonic dimension of emotion, ranging from pleasant to unpleasant. The first study found that older listeners with hearing loss rated emotional speech more highly on arousal when they were aided than when they were unaided, but that hearing aids did not affect valence ratings (Schmidt, Herzog, Scharenborg, & Janse, 2016). In contrast, the second study found that hearing aids did not change listeners’ arousal ratings of emotional sounds (Picou, 2016b). The inconsistent results may be due to the use of naturalistic speech stimuli in Schmidt et al.’s (2016) study versus nonspeech affective sounds in the other (Picou, 2016b), or to the fact that the former used participants’ own hearing aids while the latter fitted participants with hearing aids for research purposes, with all digital features disabled except for feedback reduction. In any case, more data are needed on how hearing aids affect emotion perception.

No study has yet tested the perception of emotional speech in hearing aid users with and without their hearing aids using a discrete rather than a dimensional approach. A discrete approach involves identifying which emotion from a set of given emotions (e.g., anger, fear, disgust, happiness) is being conveyed by a talker. The discrete approach has been used extensively to study nonverbal communication in other populations (Ekman, 2007). One criticism of the discrete approach is that there is great variability in how each emotion may be expressed, which is not captured by a single emotion label (Barrett, Gendron, & Huang, 2009). However, a recent meta-analysis of neuroimaging studies concluded that there are reliable neural activation patterns that correspond to each of several basic emotions (anger, fear, disgust, happiness, and sadness), despite the use of different modalities (visual and auditory) and different types of stimuli (Vytal & Hamann, 2010).

In the present study, we adopted the discrete approach to emotion perception and measured the emotion-identification accuracy of older listeners with hearing loss. Besides being asked to identify the emotion conveyed in sentences, listeners were also asked to report a keyword from each sentence. The word-recognition task allowed us to check that the hearing aids were supplying the expected benefit in terms of improved speech understanding. Having a measure of accuracy for both word recognition and emotion identification allowed us to examine whether emotion-identification accuracy covaried with word-recognition accuracy, which would suggest common factors underlying performance on both tasks. We were also interested in whether emotional speech cues alter speech intelligibility, as typical audiological word recognition tests involve mostly semantically neutral content spoken using speech characterized as neutral in regard to emotion.

To summarize, the current study investigated the following research questions in a sample of older adults who were experienced hearing aid users: (a) whether hearing aids improved word-recognition accuracy for speech spoken with emotion, (b) whether hearing aids improved emotion identification, (c) whether the type of emotion affected word recognition and emotion identification, and (d) whether participant characteristics such as pure-tone thresholds, cognitive ability, and self-evaluations of hearing difficulty predicted benefit from hearing aids for both tasks.

Method

Participants

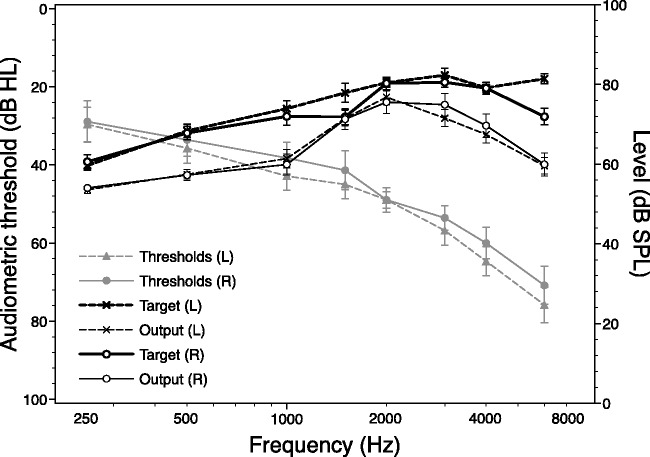

The current study was approved by the institutional review board at the University of Toronto. The data presented are from 14 participants¹ who were recruited from the community (mean age = 76.6 years, range: 67–94). All participants were native English speakers, reported no neurological, speech, or language disorders, and reported average to excellent health. Their average audiogram is shown in Figure 1. All participants were experienced hearing aid users (median = 5.5 years, range: 2–50 years), and all wore hearing aids in both ears except for one user, who wore a hearing aid only in the right ear. Participants reported being satisfied with how their hearing aids performed. Three additional participants who were initially recruited for the study were replaced because they reported being dissatisfied with their hearing aids’ sound quality after the most recent adjustments (e.g., reporting that sounds were highly distorted or had a reverberant or otherwise abnormal quality).

Figure 1.

Thresholds are referenced to the left axis and show the mean audiometric pure-tone thresholds of the participants. NAL-NL1 hearing aid targets and measured outputs are referenced to the right axis, averaged across three input levels of 55, 65, and 75 dB SPL. Standard error bars are shown.

General Procedure

All participants provided written informed consent. Participants completed the study in three sessions, with each session conducted on a different day, and they used their own hearing aids in the aided listening condition. The use of participants’ own hearing aids likely introduced some variability in the results due to different types of hearing aid processing; however, results are likely more representative of participants’ normal listening experiences insofar as they had acclimatized to these hearing aids and were satisfied with them. In the first session, a hearing instrument specialist performed otoscopy and audiometry, checked that participants’ hearing aids were in good working order (no cracks in the housing; passed a listening check), and measured participants’ hearing aid output in response to the International Speech Test Signal (Holube, Fredelake, Vlaming, & Kollmeier, 2010) using an Audioscan Verifit Model VF-1. Figure 1 shows participants’ hearing aid output, averaged across input levels of 55, 65, and 75 dB SPL. In general, participants were underfit relative to NAL-NL1 targets by an average of 8.0 dB across ears, input levels, and frequencies. The difference between participants’ fitting and target levels is typical of hearing aid users; hearing aid users generally prefer a lower gain than the prescribed targets (Wong, 2011), with one study showing that the 95% confidence interval of the difference between preferred listening levels and prescribed targets was 5.8 to 8.4 dB (Polonenko et al., 2010). In the second session, participants were tested on the listening tasks without their hearing aids. In the third session, participants were tested on the listening tasks with their hearing aids, using a different set of sentences than had been used in the previous session. Participants were free to select whichever hearing aid program they would usually use when listening to speech in daily life.
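The fit measure summarized above (measured output minus NAL-NL1 target, averaged across ears, input levels, and frequencies) can be sketched in Python as follows. The nested-dictionary layout, the function name, and all target values are hypothetical stand-ins for the Verifit measurements, which are not reproduced here; negative results indicate underfitting.

```python
from statistics import mean


def average_fit_deviation(measured, target):
    """Mean (measured - target) in dB over every (ear, input level, frequency) cell.

    Both arguments are hypothetical nested dicts: ear -> input level -> frequency -> dB.
    A negative result means the aids are underfit relative to target.
    """
    diffs = [
        measured[ear][lvl][freq] - target[ear][lvl][freq]
        for ear in target
        for lvl in target[ear]
        for freq in target[ear][lvl]
    ]
    return mean(diffs)


levels = (55, 65, 75)           # input levels in dB SPL, as in the text
freqs = (500, 1000, 2000, 4000)  # illustrative frequencies in Hz
# Illustrative targets only; each measured output is set 8 dB below target.
target = {ear: {lvl: {f: 60.0 + lvl / 5 + f / 1000 for f in freqs} for lvl in levels}
          for ear in ("left", "right")}
measured = {ear: {lvl: {f: target[ear][lvl][f] - 8.0 for f in freqs} for lvl in levels}
            for ear in target}
print(average_fit_deviation(measured, target))
```

Averaging raw dB differences weights every frequency and input level equally, which matches the description of the fit variable but ignores any weighting by speech importance.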

Listening Tasks

Stimuli

The speech materials were sentences from the younger female talker in the Toronto Emotional Speech Set (Dupuis & Pichora-Fuller, 2011). These sentences were based on the Northwestern University Auditory Test No. 6 (Tillman & Carhart, 1966) and consisted of the carrier phrase "Say the word" followed by a monosyllabic keyword (e.g., bath, bean, bought). The talker spoke each sentence in seven portrayed emotion conditions: Anger, Disgust, Fear, Happy, Neutral, Pleasant Surprise, and Sad, which were chosen to align with the emotion conditions of two speech sets used in prior studies (Castro & Lima, 2010; Paulmann, Pell, & Kotz, 2008). For each sentence in each emotion condition, the final token was chosen by a panel of listeners, with a minimum agreement of 80% between raters as to which token best represented the intended emotion (Dupuis & Pichora-Fuller, 2011). Sentences were presented at an average level of 70 dB SPL, with some item-to-item variation in level (standard deviation [SD] = 4.0 dB across the full set of 1,176 sentences). After listener data collection, logistic regression analyses were conducted with the average level of the stimulus as a continuous predictor, emotion as a categorical predictor, and accuracy of word recognition or emotion identification as a binary outcome. Neither sentence level nor the level of the target word significantly predicted word-recognition accuracy or emotion-identification accuracy.
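The post hoc check on stimulus level can be sketched as a logistic regression with a continuous level predictor and a treatment-coded emotion factor. The source does not state which software or coding scheme was used, so this NumPy implementation (Newton-Raphson, also known as iteratively reweighted least squares) and the simulated data are assumptions for illustration only. A single level predictor stands in for the sentence-level and target-word-level variables.

```python
import numpy as np

EMOTIONS = ["Anger", "Disgust", "Fear", "Happy", "Neutral", "Pleasant Surprise", "Sad"]


def design_matrix(level_db, emotion):
    """Intercept, mean-centred level (dB), and treatment-coded emotion dummies.

    The first entry of EMOTIONS is the reference category.
    """
    level_db = np.asarray(level_db, dtype=float)
    emotion = np.asarray(emotion)
    columns = [np.ones_like(level_db), level_db - level_db.mean()]
    for emo in EMOTIONS[1:]:
        columns.append((emotion == emo).astype(float))
    return np.column_stack(columns)


def fit_logistic(X, y, max_iter=50, tol=1e-10):
    """Maximum-likelihood logistic regression by Newton-Raphson (IRLS).

    Returns the coefficients and their Wald z statistics.
    """
    y = np.asarray(y, dtype=float)
    beta = np.zeros(X.shape[1])
    for _ in range(max_iter):
        p = 1.0 / (1.0 + np.exp(-(X @ beta)))
        information = X.T @ (X * (p * (1.0 - p))[:, None])
        step = np.linalg.solve(information, X.T @ (y - p))
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    # Information matrix at the final estimate gives the standard errors.
    p = 1.0 / (1.0 + np.exp(-(X @ beta)))
    information = X.T @ (X * (p * (1.0 - p))[:, None])
    se = np.sqrt(np.diag(np.linalg.inv(information)))
    return beta, beta / se


# Simulated trials only: level has no true effect on accuracy here.
rng = np.random.default_rng(0)
n_trials = 1176
emotion = rng.choice(EMOTIONS, size=n_trials)
level = rng.normal(70.0, 4.0, size=n_trials)
correct = (rng.random(n_trials) < 0.5).astype(float)

X = design_matrix(level, emotion)
beta, z = fit_logistic(X, correct)
print(f"level slope = {beta[1]:.3f} per dB, Wald z = {z[1]:.2f}")
```

Centering level keeps the intercept interpretable as the log-odds of a correct response for the reference emotion at the mean presentation level. A packaged routine such as a binomial GLM would give the same estimates.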

For this study, seven lists of 168 sentences were constructed, each consisting of 24 sentences in each of the seven emotion conditions. All seven lists contained the same 168 keywords, but the emotion in which a given keyword was spoken differed from list to list (e.g., bean would be spoken in the Angry condition in List 1 and in the Disgust condition in List 2).
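One way to realize this list design is a cyclic Latin-square rotation, sketched below in Python. The assignment rule (keyword block plus list index, modulo 7) is an assumption consistent with the description; the actual keyword-to-emotion mapping is not given, and keywords are represented by index rather than by the actual words.

```python
from collections import Counter

EMOTIONS = ("Anger", "Disgust", "Fear", "Happy", "Neutral", "Pleasant Surprise", "Sad")
PER_EMOTION = 24
N_KEYWORDS = PER_EMOTION * len(EMOTIONS)  # 168 keywords


def build_lists():
    """Return 7 lists of (keyword_index, emotion) pairs, rotated Latin-square style.

    Keyword k belongs to block k // 24; in list j that block is spoken in
    emotion (block + j) mod 7, so every keyword rotates through all emotions.
    """
    n = len(EMOTIONS)
    return [
        [(k, EMOTIONS[(k // PER_EMOTION + j) % n]) for k in range(N_KEYWORDS)]
        for j in range(n)
    ]


def emotion_counts(sentence_list):
    """Number of sentences per emotion in one list."""
    return Counter(emo for _, emo in sentence_list)


lists = build_lists()
print(emotion_counts(lists[0]))
```

Moving from one list to the next shifts each keyword block to the next emotion (e.g., Anger to Disgust), in the spirit of the bean example in the text.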

Procedure

The listening tasks were conducted while the participant was seated in a double-walled sound-attenuating booth. Sentences were presented from a loudspeaker at a distance of 1.8 m and at head height in the horizontal plane at 315° azimuth relative to the participant (i.e., 45° to the left of the participant). After hearing each sentence, participants were asked to report the keyword at the end of each sentence (word-recognition measure) and to select one of the seven emotion choices (emotion-identification measure) using a touchscreen before pressing a button that would play the next sentence. Two participants were tested on each of the seven sentence lists, with the task order counter-balanced such that half of the participants completed the word-recognition task before the emotion-identification task, and the order of tasks was reversed for the other half of the participants. An experimenter scored the participant’s verbal responses for word recognition as correct or incorrect. The participant’s emotion identification responses were automatically recorded by the computer. Participants completed 14 practice trials with their hearing aids before completing the experimental trials. The experimental trials generally took 40 min to complete, including three short breaks at 10-min intervals.
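The counterbalancing described above can be sketched as a small schedule. The text states only that two participants heard each list and that half of the participants did each task first. Pairing the two participants on a list with opposite task orders is an assumption made here for illustration, and the participant numbering is hypothetical.

```python
from collections import Counter
from itertools import product

TASK_ORDERS = ("word recognition first", "emotion identification first")


def assign_participants(n_lists=7):
    """Hypothetical schedule pairing every list with both task orders once.

    With 7 lists this gives 14 participants: 2 per list and 7 per task order.
    """
    return [
        {"participant": pid, "list": list_no, "task_order": order}
        for pid, (list_no, order) in enumerate(
            product(range(1, n_lists + 1), TASK_ORDERS), start=1
        )
    ]


schedule = assign_participants()
print(Counter(row["task_order"] for row in schedule))
```

Crossing lists with task orders in this way keeps task order from being confounded with a particular list.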

Cognitive Tests and Questionnaires on Hearing and Emotion

Participant characteristics were assessed using a battery of measures, including a health history, two cognitive tests, four self-report measures of hearing, and three self-report measures of emotion. To examine whether participants’ performance was affected by cognitive ability (Gordon-Salant & Cole, 2016; Pichora-Fuller & Singh, 2006), participants completed the Montreal Cognitive Assessment (MoCA; Nasreddine et al., 2005) and a reading working memory span task (Daneman & Carpenter, 1980). The MoCA is a screening tool for detecting mild cognitive impairment and evaluates various cognitive functions, including visuospatial abilities, short-term memory, attention, working memory, and language abilities (Nasreddine et al., 2005). MoCA scores were adjusted for education by adding one point if the participant had less than 12 years of formal education, as per the instructions in the MoCA scoring manual. In the reading working memory span task, participants were visually presented with sentences one at a time on a computer screen, followed by a recall test after each set of sentences. A set contained between two and six sentences, with set size increasing as the task progressed. After reading each sentence aloud, participants judged whether the sentence made sense and tried to remember its last word. At the end of each set, participants recalled the final words of all the sentences in that set. One point was scored for each correctly recalled sentence-final word, regardless of the order of recall. All cognitive testing was conducted while participants were wearing their hearing aids.
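The two scoring rules described above, the MoCA education adjustment and order-free recall scoring for the reading span task, can be sketched in Python. The function names are hypothetical. The cap at 30 is an assumption based on the test's maximum score, and case-insensitive word matching is likewise an assumption.

```python
from collections import Counter


def adjusted_moca(raw_score, years_education):
    """Add one point when formal education is below 12 years, as described in the text.

    Capping at 30 (the maximum score) is an assumption; the text does not mention it.
    """
    bonus = 1 if years_education < 12 else 0
    return min(30, raw_score + bonus)


def span_set_score(targets, recalled):
    """One point per correctly recalled sentence-final word, ignoring recall order."""
    overlap = Counter(w.lower() for w in targets) & Counter(w.lower() for w in recalled)
    return sum(overlap.values())


def span_total(sets):
    """Total recall score over (targets, recalled) pairs, one pair per set."""
    return sum(span_set_score(t, r) for t, r in sets)


# Illustrative responses only.
print(adjusted_moca(24, 10))
print(span_set_score(["river", "candle", "music"], ["music", "river", "table"]))
```

Using a multiset intersection means a word is credited at most as many times as it appeared among the targets of that set.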

Participants also filled out hearing-related questionnaires that are frequently used to assess self-reported hearing difficulties and benefit from hearing aids. These questionnaires were the 12-item Speech Spatial Qualities Questionnaire (SSQ12; Noble, Jensen, Naylor, Bhullar, & Akeroyd, 2013), the Hearing Handicap Inventory for Adults (HHIA; Newman, Weinstein, Jacobson, & Hug, 1990), the Abbreviated Profile of Hearing Aid Benefit (APHAB; Cox & Alexander, 1995), and the International Outcome Inventory for Hearing Aids (IOI-HA; Cox & Alexander, 2002). The SSQ12 measures self-perceived difficulty in various daily listening situations, including speech understanding in noisy or multiple-talker situations and localization of sounds in the environment. Higher scores on the SSQ12 indicate better performance (less difficulty) in various listening situations. The HHIA measures social and emotional consequences of hearing impairment on the listener, with higher scores indicating worse consequences resulting from hearing loss. The APHAB measures the amount of benefit from hearing aids by asking about ease of communication, reverberation, background noise, and the aversiveness of sounds as perceived by the listener with and without hearing aids. Because APHAB scores reflect the frequency of reported problems, a benefit score is obtained by subtracting the aided score from the unaided score, with a higher benefit score indicating more benefit from hearing aids. The IOI-HA evaluates the effectiveness of a listener’s hearing aids by asking about the amount of daily use, the amount of benefit from using the aids, and the effect of using the aids on the listener’s activities and quality of life. A higher IOI-HA score indicates a better outcome from using hearing aids.
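APHAB scores express the percentage of problems a listener reports, so lower scores are better and benefit is computed as unaided minus aided, the convention used for the APHAB benefit row in Table 1. The sketch below assumes the commonly used global score, the mean of the Ease of Communication (EC), Background Noise (BN), and Reverberation (RV) subscales. The subscale values are hypothetical.

```python
from statistics import mean


def aphab_global(subscales):
    """Global score: mean of the EC, BN, and RV subscale scores (percent of problems).

    The aversiveness (AV) subscale is excluded, following the usual convention.
    """
    return mean(subscales[key] for key in ("EC", "BN", "RV"))


def aphab_benefit(unaided, aided):
    """Unaided minus aided global score; positive values mean fewer problems when aided."""
    return aphab_global(unaided) - aphab_global(aided)


# Hypothetical subscale scores for one listener.
unaided = {"EC": 40, "BN": 60, "RV": 50, "AV": 20}
aided = {"EC": 20, "BN": 40, "RV": 30, "AV": 35}
print(aphab_benefit(unaided, aided))
```

Because aversiveness often rises with amplification, excluding AV from the global score keeps it from offsetting the communication benefit.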

Finally, participants’ self-perceived experiences of emotion were assessed to examine whether self-perceived difficulty with emotion perception was associated with performance on the emotion-identification task. These questionnaires were the Emotional Communication in Hearing Questionnaire (EMO-CHeQ; Singh, Liskovoi, Launer, & Russo, 2018), the 20-Item Toronto Alexithymia Scale (TAS20; Bagby, Parker, & Taylor, 1994), and the Positive and Negative Affect Scale (PANAS; Watson, Clark, & Tellegen, 1988). The EMO-CHeQ measures self-perceived difficulty in perceiving and producing vocal emotion, with higher scores indicating more difficulty. The TAS20 measures self-perceived difficulty in identifying and describing feelings, with higher scores indicating more difficulty. The PANAS lists 20 adjectives describing various emotions (10 positive and 10 negative) and asks to what extent the participant experiences each emotion on a typical day, on a scale from "very slightly or not at all" to "extremely." A higher total score on a subscale indicates that those emotions are experienced to a greater extent.
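PANAS subscale scoring can be sketched as two sums of 1–5 ratings, which yields the 10–50 range shown in Table 1. The item lists below are the standard positive and negative adjectives from Watson et al. (1988); the dictionary input format and function name are assumptions for illustration.

```python
POSITIVE_ITEMS = ("interested", "excited", "strong", "enthusiastic", "proud",
                  "alert", "inspired", "determined", "attentive", "active")
NEGATIVE_ITEMS = ("distressed", "upset", "guilty", "scared", "hostile",
                  "irritable", "ashamed", "nervous", "jittery", "afraid")


def panas_scores(ratings):
    """Return (positive, negative) subscale totals from 1-5 ratings.

    1 = "very slightly or not at all", 5 = "extremely"; each total ranges 10-50.
    """
    for item, value in ratings.items():
        if not 1 <= value <= 5:
            raise ValueError(f"{item}: rating {value} is outside 1-5")
    positive = sum(ratings[item] for item in POSITIVE_ITEMS)
    negative = sum(ratings[item] for item in NEGATIVE_ITEMS)
    return positive, negative


# Hypothetical respondent: 4 on every positive item, 2 on every negative item.
example = {**{i: 4 for i in POSITIVE_ITEMS}, **{i: 2 for i in NEGATIVE_ITEMS}}
print(panas_scores(example))
```

Keeping the two subscales separate matches how the PANAS results were entered into the correlational analyses.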

Statistical Analyses

Percent correct word recognition scores and percent correct emotion identification scores were transformed into Rationalized Arcsine Units (RAU) to make the data more suitable for statistical analysis, as the proportion scores obtained in typical sound identification testing tend to have variances that are correlated with the means, a non-normal distribution of scores around the mean, and nonlinearity of the scale at the extreme ends (Studebaker, 1985). RAU scores were used for all statistical analyses, while percentage scores were used for figures and reported means and SDs. For each outcome measure, an analysis of variance (ANOVA) was conducted with Listening Condition (Unaided or Aided) and Emotion (seven emotion conditions) as within-subject factors and Task Order (word recognition task first or emotion identification task first) as a between-subjects factor. Pairwise t tests with Holm correction were conducted when there were significant main effects of Emotion.

Correlational analyses were conducted between each participant characteristic and unaided and aided perceptual scores using Pearson’s correlations with Holm-Bonferroni correction. Participant characteristics were age, PTA (average of pure-tone thresholds at 0.5, 1, 2, and 4 kHz for both ears), MoCA score, reading working memory span total recall score, four hearing-related questionnaire scores, four emotion-related questionnaire scores (with PANAS results separated into positive and negative subscores), amount of hearing aid experience in years, and hearing aid fit (the difference between test output and target, averaged across ears, three input levels, and frequencies).

Results

Listening Task

Word recognition

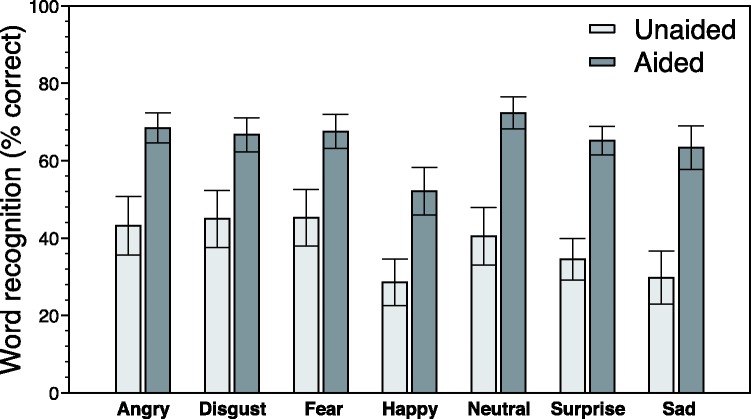

There was a significant main effect of Listening Condition on word-recognition accuracy, F(1, 12) = 28.49, p < .001, with better performance in the Aided condition (mean = 65.1%, SD = 13.8%) than in the Unaided condition (mean = 38.1%, SD = 23.4%). As seen in Figure 2, the advantage of hearing aids was consistent across emotions. There was also a significant main effect of Emotion on word-recognition accuracy, F(6, 72) = 11.60, p < .001. As shown in Figure 2, words spoken in the Happy condition were less accurately recognized than words spoken in any other Emotion condition (p values < .05) except for Sad. Words spoken in the Sad condition were also less accurately recognized than words spoken in any other Emotion condition (p values < .05) except for Happy and Pleasant Surprise. Words spoken in the Angry, Disgust, Fear, and Neutral conditions were comparable with respect to word-recognition accuracy.

Figure 2.

Word recognition accuracy across Emotion conditions in Unaided and Aided listening conditions. Standard error bars are shown.

There was no significant effect on word-recognition accuracy of Task Order, F(1, 12) = 0.34, p = .57, and no significant interaction of Task Order with Listening Condition, F(1, 12) = 0.023, p = .88, or with Emotion, F(6, 72) = 2.06, p = .069. There was no significant interaction of Listening Condition with Emotion, F(6, 72) = 2.06, p = .068, and no significant interaction of Listening Condition, Emotion, and Task Order, F(6, 72) = 1.08, p = .38.

Emotion identification

There was no significant main effect of Listening Condition on emotion-identification accuracy, F(1, 12) = 3.93, p = .071; the higher mean accuracy in the Aided condition (mean = 41.8%, SD = 16.4%) than in the Unaided condition (mean = 36.0%, SD = 13.5%) was not statistically reliable. As shown in Figure 3, there was a significant main effect of Emotion on emotion-identification accuracy, F(6, 72) = 2.39, p = .037. In pairwise comparisons, Sad was more accurately identified than all other emotions except Neutral and Pleasant Surprise. There was no significant interaction of Listening Condition with Emotion, F(6, 72) = 0.36, p = .90.

Figure 3.

Emotion identification accuracy across Emotion conditions in Unaided and Aided listening conditions. Standard error bars are shown.

There was no significant effect of Task Order, F(1, 12) = 0.09, p = .77, no significant two-way interaction of Task Order with Listening Condition, F(1, 12) = 1.34, p = .27, or with Emotion, F(6, 72) = 1.18, p = .33, and no significant three-way interaction of Listening Condition, Emotion, and Task Order, F(6, 72) = 0.59, p = .74.

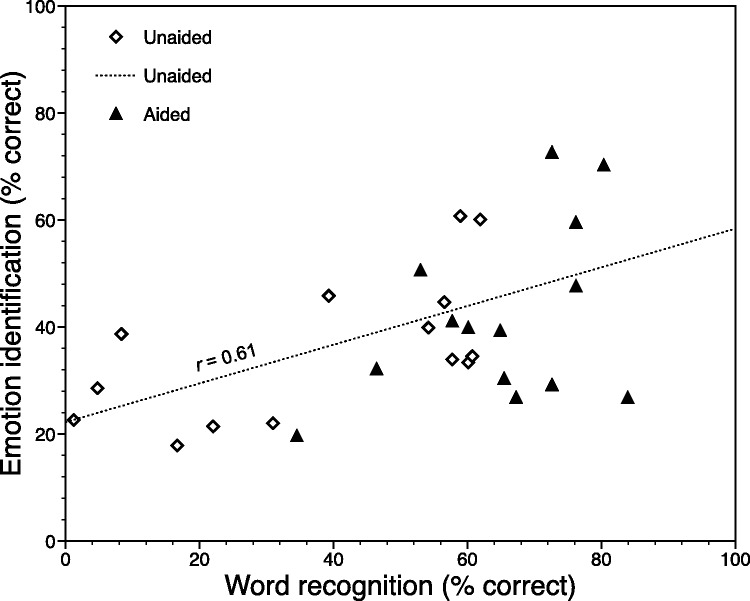

Relationship Between Word Recognition and Emotion Identification Performance

Word-recognition performance was significantly correlated with emotion-identification performance when listeners were unaided (r = .61, p = .021) but not when they were aided (r = .43, p = .12; Figure 4).
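As a worked check, assuming two-tailed tests of Pearson's r with n = 14 listeners (df = n − 2 = 12), the reported correlations convert to t statistics as follows. The resulting two-tailed p values, approximately .02 and .12, are in line with those reported.

```latex
t = \frac{r\sqrt{n-2}}{\sqrt{1-r^{2}}}, \qquad df = n - 2 = 12

\text{Unaided: } t = \frac{0.61\sqrt{12}}{\sqrt{1-0.61^{2}}} \approx \frac{2.113}{0.792} \approx 2.67

\text{Aided: } t = \frac{0.43\sqrt{12}}{\sqrt{1-0.43^{2}}} \approx \frac{1.490}{0.903} \approx 1.65
```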

Figure 4.

Scatterplot of word recognition scores against emotion identification scores for 14 older listeners in Unaided and Aided listening conditions. The regression line is for the Unaided condition only.

Results for Cognitive Tests and Hearing and Emotion Questionnaires

Table 1 shows the mean values of participants’ cognitive test results and self-report measures of hearing and emotion. On the MoCA, participants’ mean score and SD resembled the education-adjusted values reported for cognitively normal older adults aged 70 to 99 years (mean = 25.29, SD = 2.99; Malek-Ahmadi et al., 2015). Five participants (36%) scored below the MoCA cut-off of 26 (Nasreddine et al., 2005), compared to 46% in that large normative study (Malek-Ahmadi et al., 2015). Most participants reported some perceived benefit from hearing aids, with 11 of the 14 participants reporting less difficulty on the APHAB Aided component than on the APHAB Unaided component. On the HHIA, self-perceived hearing handicap varied widely: 3 of the 14 participants reported no hearing handicap at all (total score of 0), and the remaining participants reported some handicap. Most participants did not perceive themselves as having difficulty with emotional communication; 12 of the 14 participants had average EMO-CHeQ scores ranging from approximately 1 (strongly disagree) to 3 (neither agree nor disagree).

Table 1.

Mean Scores on Cognitive Tests and Hearing- and Emotion-Related Questionnaires, With SDs in Parentheses.

| Measure | Mean (SD) | Min–Max |
|---|---|---|
| MoCA (best possible score 30), adjusted for education | 25.5 (2.8) | 19–28 |
| Reading Working Memory Span (best possible score 100) | 42.0 (12.4) | 30–68 |
| APHAB global unaided score | 42.4 (14.6) | 18–72 |
| APHAB global aided score | 25.4 (17.0) | 7–59 |
| APHAB benefit (unaided − aided) | 17.0 (17.7) | −16 to 55 |
| HHIA total score (worst possible score 100) | 28.0 (21.0) | 0–64 |
| HHIA social component (worst possible score 48) | 14.0 (10.9) | 0–32 |
| HHIA emotional component (worst possible score 52) | 14.0 (11.4) | 0–38 |
| IOI-HA total score (best possible score 40) | 33.6 (3.5) | 25–39 |
| SSQ12 total score (best possible score 120) | 74.0 (19.4) | 38–102 |
| PANAS positive (best possible score 50) | 34.5 (4.9) | 29–43 |
| PANAS negative (worst possible score 50) | 14.5 (4.5) | 10–25 |
| EMO-CHeQ average score (worst possible score 5) | 2.3 (0.6) | 1.2–3.3 |
| TAS20 (worst possible score 100) | 52.8 (6.2) | 39–63 |

Note. MoCA = Montreal Cognitive Assessment; APHAB = Abbreviated Profile of Hearing Aid Benefit; IOI-HA = International Outcome Inventory for Hearing Aids; HHIA = Hearing Handicap Inventory for Adults; SSQ12 = 12-item Speech Spatial Qualities Questionnaire; PANAS = Positive and Negative Affect Scale; EMO-CHeQ = Emotional Communication in Hearing Questionnaire; TAS20 = 20-Item Toronto Alexithymia Scale; SD = standard deviation.

Relationship Between Participant Characteristics and Listening Task Performance

Pearson’s correlations were computed to examine how characteristics related to participants’ hearing, cognition, and emotion were associated with their performance in the study. A Holm-Bonferroni procedure was used to correct for multiple comparisons.

Table 2 shows the correlations between participant characteristics and measures of word-recognition accuracy and emotion-identification accuracy in unaided and aided listening conditions. For the word-recognition task, lower PTAs were associated with better word recognition in the unaided listening condition. Higher MoCA scores were associated with better word recognition in the aided listening condition. For the emotion-identification task, lower (better) EMO-CHeQ scores were associated with better unaided and aided emotion identification. Lower (better) TAS20 scores were associated with better unaided emotion identification.

Table 2.

Pearson Correlations Between Participant Characteristics and Listening Task Performance in Unaided and Aided Listening Conditions.

| Characteristic | Word recognition: Unaided | Word recognition: Aided | Emotion identification: Unaided | Emotion identification: Aided |
|---|---|---|---|---|
| Age | −0.47 | −0.42 | −0.45 | −0.40 |
| PTA | −0.90* | −0.71 | −0.49 | −0.29 |
| HA average fit | 0.04 | 0.27 | 0.10 | −0.01 |
| HA experienceᵃ | −0.28 | 0.07 | −0.19 | −0.14 |
| MoCA | 0.45 | 0.76* | 0.59 | 0.53 |
| RWM span | 0.26 | 0.29 | 0.66 | 0.66 |
| APHAB benefit | −0.37 | −0.03 | −0.16 | −0.14 |
| HHIA total | −0.39 | −0.15 | 0.08 | 0.07 |
| IOI-HA | −0.36 | 0.01 | −0.20 | −0.05 |
| SSQ12 | 0.47 | 0.57 | 0.40 | 0.33 |
| PANAS positive | 0.14 | 0.22 | 0.29 | 0.33 |
| PANAS negative | −0.16 | 0.04 | −0.56 | −0.54 |
| EMO-CHeQ | −0.36 | −0.33 | −0.77* | −0.82* |
| TAS20 | −0.60 | −0.52 | −0.76* | −0.69 |

Note. MoCA = Montreal Cognitive Assessment; PTA = pure-tone average; HA = hearing aid; APHAB = Abbreviated Profile of Hearing Aid Benefit; IOI-HA = International Outcome Inventory for Hearing Aids; HHIA = Hearing Handicap Inventory for Adults; SSQ12 = 12-item Speech, Spatial and Qualities of Hearing Scale; PANAS = Positive and Negative Affect Schedule; EMO-CHeQ = Emotional Communication in Hearing Questionnaire; TAS20 = 20-Item Toronto Alexithymia Scale; RWM = Reading Working Memory.

ᵃThe correlations between hearing aid experience and listening task performance were calculated after excluding one participant who had 50 years of hearing aid experience. Because the other participants had 2 to 15 years of hearing aid experience (median = 5 years), including this outlying data point produced a statistically significant r value that was not meaningful.

Values marked with an asterisk (*) signify p < .05 with Holm-Bonferroni correction.

Relationship Between Participant Characteristics and Amount of Hearing Aid Benefit

Hearing aid benefit scores were calculated for word recognition and emotion identification by subtracting unaided RAU scores from aided RAU scores (Aided − Unaided). For word recognition, the average benefit was +28.7 RAU (range: +6.8 to +59.8 RAU). For emotion identification, the average benefit was +5.8 RAU (range: −6.6 to +18.4 RAU). Pearson correlations with Holm correction showed no significant correlations between participant characteristics and hearing aid benefit for either word recognition or emotion identification, with one exception: higher PTAs were associated with more hearing aid benefit for word recognition (r = .75, p = .03).
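As a hedged illustration of how such benefit scores can be derived, the Python sketch below applies the rationalized arcsine transform in the form usually attributed to Studebaker (1985) and then takes Aided − Unaided. It assumes that this standard formula is the variant used here; the number of scored items per condition is not stated in this section, so `n_items` and the counts in the example are placeholders, and the function names are hypothetical.

```python
import math


def rau(n_correct, n_items):
    """Rationalized arcsine units for n_correct out of n_items (Studebaker form)."""
    # Sum of two arcsine terms, in radians.
    theta = (math.asin(math.sqrt(n_correct / (n_items + 1)))
             + math.asin(math.sqrt((n_correct + 1) / (n_items + 1))))
    # Linear rescaling so that mid-range scores roughly match percent correct.
    return (146.0 / math.pi) * theta - 23.0


def aided_benefit(aided_correct, unaided_correct, n_items):
    """Hearing aid benefit in RAU, defined as Aided minus Unaided."""
    return rau(aided_correct, n_items) - rau(unaided_correct, n_items)
```

With this formula, 50 of 100 items correct maps to 50 RAU, whereas 0 and 100 items correct map to values below 0 and above 100. This stretching near the floor and ceiling is the motivation for averaging RAU differences rather than raw percentage-point differences when listeners start from very different baseline scores.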

Discussion

The results from the current study showed that for speech spoken with emotion, hearing aids improved word recognition but did not affect emotion identification. The type of emotion was also shown to affect both word recognition and emotion identification by listeners with hearing loss.

The effect of emotion on word recognition has implications for speech testing in the laboratory and in the clinic. Speech stimuli used in laboratory and clinical testing are generally produced with a neutral tone, unlike real-world speech, which includes both neutral phrasing and various emotional inflections. The current study found that words spoken in the Happy or Sad conditions were less intelligible than words spoken in the Neutral condition, raising the possibility that real-world word-recognition performance by listeners with hearing loss may be worse than performance measured in the laboratory or clinic, depending on the talker’s vocal emotion. Importantly, the lack of an interaction between listening condition (with or without hearing aids) and emotion condition for both word recognition and emotion identification suggests that current hearing aids may process acoustic speech cues and emotional cues similarly regardless of the vocal emotion portrayed by the talker.

For the task of word recognition, correlational analyses showed that unaided performance was better when participants had better audiometric thresholds, and that participants with more hearing loss obtained more benefit from hearing aids, as would be expected. Interestingly, aided word-recognition performance was positively correlated with MoCA scores, whereas unaided word-recognition performance was not. Although the MoCA is a general cognitive screening test rather than a dedicated measure of working memory, this finding is consistent with studies in which larger working memory capacity was associated with better word recognition in difficult listening conditions such as noise (e.g., Rudner & Lunner, 2014). A growing body of evidence also suggests that hearing aid users with larger working memory capacities can take advantage of the increased audibility of words provided by fast-acting wide dynamic range compression, and that they are less negatively affected by the accompanying distortions than listeners with smaller working memory capacities (e.g., Souza, Arehart, & Neher, 2015). Thus, the relationship between aided word-recognition performance and MoCA scores in the present study is consistent with current knowledge about how cognitive ability can affect speech understanding under adverse listening conditions.

For the task of emotion identification, a key outcome measure in the study, listeners who reported fewer difficulties with emotional communication (lower EMO-CHeQ scores) performed better in both the unaided and aided conditions, and listeners who reported fewer difficulties identifying and describing feelings (lower TAS20 scores) performed better in the unaided condition. In other words, self-perceived difficulty with emotion correlated in the expected direction with emotion-identification performance. The positive correlation between word-recognition scores and emotion-identification scores suggests that common factors, whether sensory or cognitive, affect performance on both tasks.

Although the main purpose of this study was to determine whether hearing aids affect emotion identification by listeners with hearing loss, we also wished to gain some insight into whether hearing-aided listeners and normal-hearing listeners process emotional cues in a similar way. In a previous study with normal-hearing younger and older listeners using similar speech materials, there was an age-related difference in emotion-identification performance of about 17 percentage points (Dupuis & Pichora-Fuller, 2015). Despite this overall difference in accuracy, younger and older listeners with normal hearing showed similar patterns of identification accuracy across emotions (Dupuis & Pichora-Fuller, 2015); in other words, the two groups were likely using the same cues to determine which emotion was being portrayed. The performance gap between normal-hearing younger listeners and older listeners with hearing loss would likely be even greater than the gap between younger and older listeners without hearing loss, but it is difficult to predict whether the pattern of emotion identification would remain the same. Because the experimental procedures of the present study differed somewhat from those of the previous study, in which word recognition and emotion identification were not tested together for each sentence (Dupuis & Pichora-Fuller, 2015), we tested a new group of young normal-hearing listeners using the present study’s protocol so that they could be directly compared with the present group of older listeners. We expected younger listeners to outperform older listeners on both word recognition and emotion identification. The question of interest was whether the pattern of emotion identification would differ between older listeners with hearing loss and young normal-hearing listeners, which could indicate qualitatively different ways of processing vocal emotion in the two groups.

Follow-Up Study of Young Normal-Hearing Listeners

Younger adults with normal audiograms were tested using the same experimental procedure as the older listeners with hearing loss, except that younger listeners were tested only once rather than in unaided and aided conditions, and half of the younger listeners were tested in quiet while the other half were tested in noise. Because we expected the performance of younger listeners in quiet to be close to ceiling, we tested half of them in a more difficult noisy condition. A signal-to-noise ratio (SNR) of −5 dB was chosen to match the SNR used in a previous study with younger listeners (Dupuis & Pichora-Fuller, 2014).

Method

Participants were 28 healthy younger adults (mean age = 18.3 years, SD = 0.6). They had pure-tone thresholds of 20 dB HL or less at octave frequencies from 250 to 8000 Hz in both ears and no interaural differences greater than 20 dB. All had learned English in Canada before the age of 6 years. Half of the participants were tested in quiet, and the other half in the multitalker babble from the Revised Speech Perception in Noise Test (Bilger, Nuetzel, Rabinowitz, & Rzeczkowski, 1984) at −5 dB SNR. As in the previous experiment with older listeners, percent correct scores for word recognition and emotion identification were transformed to RAU. Data were analyzed separately for listeners tested in quiet and listeners tested in noise. ANOVAs were conducted with Emotion as a single within-subject factor for each outcome measure (word recognition and emotion identification). Significant effects of Emotion were further analyzed using pairwise t tests with Holm correction.
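The within-subject ANOVAs with Emotion as the single factor can be illustrated with a textbook one-way repeated-measures ANOVA. The Python sketch below is an illustration under stated assumptions rather than the authors’ analysis pipeline: `rm_anova_oneway` is a hypothetical name, it applies no sphericity correction (this section does not say whether one was used), and it returns the F statistic and degrees of freedom without computing a p-value.

```python
def rm_anova_oneway(scores):
    """One-way repeated-measures ANOVA.

    scores[i][j] is the score of subject i in condition j
    (for example, RAU scores for each Emotion condition).
    Returns (F, df_effect, df_error); no sphericity correction is applied.
    """
    n = len(scores)      # number of subjects
    k = len(scores[0])   # number of conditions
    grand = sum(sum(row) for row in scores) / (n * k)
    cond_means = [sum(row[j] for row in scores) / n for j in range(k)]
    subj_means = [sum(row) / k for row in scores]

    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    # Residual (condition-by-subject) variability serves as the error term.
    ss_error = ss_total - ss_cond - ss_subj

    df_cond = k - 1
    df_error = (k - 1) * (n - 1)
    f_stat = (ss_cond / df_cond) / (ss_error / df_error)
    return f_stat, df_cond, df_error
```

With 14 listeners and 7 Emotion conditions, the degrees of freedom are 7 − 1 = 6 and (7 − 1)(14 − 1) = 78, which matches the F(6, 78) values reported for each group of younger listeners. Removing between-subject variability (ss_subj) from the error term is what makes the within-subject design more sensitive than a between-subjects comparison with the same number of observations.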

Results

Word recognition

Young listeners in quiet performed near ceiling for word recognition (mean = 97.1%, SD = 3.4%), with performance of at least 95% in every Emotion condition. Young listeners in noise performed worse than young listeners in quiet (mean = 80.7%, SD = 11.2%). In quiet, an ANOVA with Emotion as a within-subjects factor showed a significant effect of Emotion, F(6, 78) = 2.67, p = .021; however, none of the pairwise comparisons were significant (p values > .08). In noise, there was also a significant effect of Emotion, F(6, 78) = 11.68, p < .001. As shown in Figure 5, words spoken to portray the Angry, Neutral, and Disgust emotions were recognized in noise more accurately than words spoken to portray Happy and Sad (p values < .05).

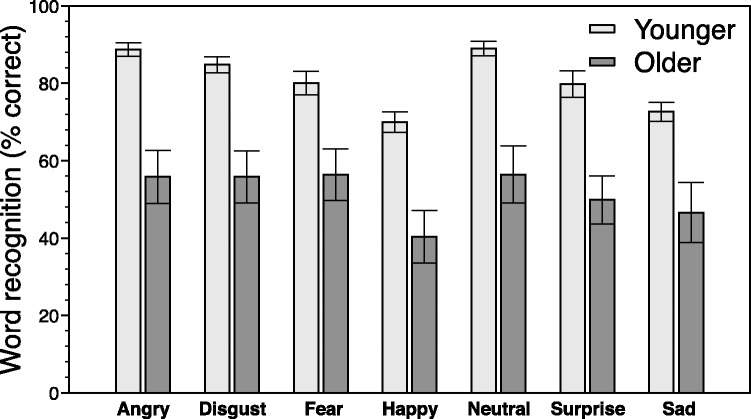

Figure 5.

Word-recognition accuracy of younger listeners in noise and older listeners with hearing loss. Data for older listeners are collapsed across Unaided and Aided conditions.

To compare the word-recognition accuracy of younger and older listeners, the Unaided and Aided scores of older listeners were collapsed (because Emotion had a similar effect in both conditions), and an ANOVA was performed with Group (younger listeners in noise vs. older listeners with hearing loss) as a between-subjects factor and Emotion condition as a within-subject factor. There were significant main effects of Group, F(1, 26) = 33.86, p < .001, and Emotion, F(6, 156) = 20.71, p < .001, on word-recognition accuracy, but the interaction between Group and Emotion was not significant, F(6, 156) = 1.99, p = .07. Overall, younger listeners in noise recognized words 29.1 percentage points more accurately than older listeners with hearing loss. As shown in Figure 5, Emotion affected the word-recognition accuracy of young normal-hearing listeners and older listeners with hearing loss in a similar way: words spoken in the Happy and Sad conditions were less intelligible than words spoken in the Fear, Disgust, Angry, and Neutral conditions.

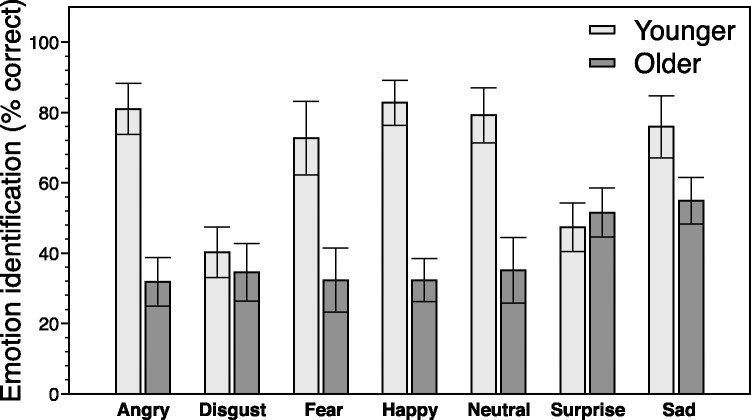

Emotion identification

Young listeners in noise identified emotions less accurately (mean = 68.4%, SD = 32.1%) than young listeners in quiet (mean = 84.7%, SD = 24.7%). For young listeners in quiet, there was a significant effect of Emotion on emotion-identification accuracy, F(6, 78) = 6.23, p < .001. Sad was identified more accurately than Pleasant Surprise (p = .01) or Disgust (p = .05), and Angry was identified more accurately than Pleasant Surprise (p = .05). For young listeners in noise, there was also a significant effect of Emotion, F(6, 78) = 7.72, p < .001: Disgust was identified less accurately than all other emotions (p values < .05) except Fear and Pleasant Surprise (p values > .1).

An ANOVA with Group (younger listeners in noise vs. older listeners with hearing loss) as a between-subjects factor and Emotion condition as a within-subject factor showed significant main effects of Group, F(1, 26) = 21.72, p < .001, and Emotion, F(6, 156) = 4.13, p < .001, on emotion-identification accuracy, as well as a significant interaction between Group and Emotion, F(6, 156) = 6.14, p < .001. Overall, young listeners in noise identified emotions 29.5 percentage points more accurately than older listeners with hearing loss. Across groups, Sad was identified more accurately than Disgust, but no other pairs of emotion conditions differed significantly. However, as shown in Figure 6, the effect of Emotion followed a different pattern for younger and older listeners. Younger listeners identified Angry and Happy more accurately than Pleasant Surprise and Disgust (p values < .05), whereas older listeners with hearing loss identified Sad more accurately than Disgust and Fear (p values < .05).

Figure 6.

Emotion-identification accuracy of younger listeners in noise and older listeners with hearing loss. Data for older listeners are collapsed across Unaided and Aided conditions.

Discussion

Emotion had a similar effect on word-recognition accuracy for younger listeners tested in noise and older listeners with hearing loss tested in quiet (aided or unaided). Words spoken in the Happy and Sad conditions were less intelligible than words spoken in the Disgust, Angry, and Neutral conditions. Thus, one interpretation of the findings is that emotion affects the speech cues for word recognition in the same way regardless of hearing status or age.

The overall gap in emotion-identification accuracy between younger listeners in quiet and older listeners with hearing loss was 46 percentage points, compared to 17 percentage points between normal-hearing younger and older listeners in a previous study (Dupuis & Pichora-Fuller, 2015). This gap narrowed when younger listeners were tested in a more difficult, noisy listening environment, but it remained relatively wide at 29 percentage points. Beyond this large overall gap, emotion also affected emotion identification differently in the two groups, unlike in a previous study with similar speech materials, in which younger and older listeners with normal hearing were affected similarly by emotion (Dupuis & Pichora-Fuller, 2015). Taken together, the previous and current findings suggest that there are changes to emotion identification by listeners with hearing loss beyond those that can be attributed to normal aging, and that hearing aids do not compensate for these changes. However, a more direct comparison between hearing-aided listeners and age-matched controls without hearing loss would be needed to fully investigate how hearing loss affects the identification of vocal emotion.

General Discussion

Although older adults with hearing loss benefited from hearing aids on a word-recognition task, they received no significant benefit on an emotion-identification task. It is clear that vocal emotion is conveyed through acoustic cues (Scherer, 1995). However, the existing literature does not make clear whether the difficulties with emotion identification experienced by older listeners with hearing loss stem from threshold hearing loss, changes in the auditory processing of suprathreshold speech signals, changes in neural circuits involved in emotion processing, changes in cognitive processing, or a combination of factors. The present study found that emotion identification by hearing aid users was not significantly correlated with listeners’ pure-tone thresholds, similar to findings for older adults with relatively normal hearing (Dupuis & Pichora-Fuller, 2015; Orbelo, Grim, Talbott, & Ross, 2005). Therefore, previous and current findings do not support a strong association between hearing thresholds and emotion-identification performance in older listeners, whether they have normal hearing or hearing loss. It is also noteworthy that amplification that supports word recognition does not mitigate difficulty with emotion identification, again suggesting that factors other than peripheral hearing loss may be relevant.

Besides auditory factors, studies have investigated whether cognitive factors contribute to the decline in emotion-identification accuracy in older adults. In the present study, neither MoCA scores nor reading working memory span scores were significantly correlated with emotion-identification performance in older adults with hearing loss. In older adults with relatively normal hearing, measures of verbal intelligence, sustained attention, and working memory did not mediate the relationship between age and emotion-identification performance (Lambrecht et al., 2012). Taken together, the findings from the current study and Lambrecht et al.’s (2012) study suggest that cognitive ability does not strongly affect emotion-identification performance, even when a listener experiences a greater degree of hearing difficulty.

Regardless of the root causes of problems with emotion identification, it is clear that listeners with hearing loss experience impairment greater than might be expected from aging alone. Listeners with hearing loss may not be explicitly aware of any problems with emotional communication, especially if they employ compensatory strategies that ameliorate some of these problems. In general, older adults do not rely more heavily than younger adults on visual cues for emotion identification (Lambrecht et al., 2012), but older adults do regulate their emotions better than younger adults and are more skilled at predicting the amount of arousal they would feel in different emotional situations (Urry & Gross, 2010). Nonetheless, difficulties with emotion identification may contribute to challenges in social functioning on top of the other communication difficulties caused by hearing loss. However, emotion perception is rarely discussed during audiological rehabilitation. Self-report measures such as the EMO-CHeQ (Singh et al., 2018) were correlated with emotion-identification performance in the present study and thus may be a useful tool to gauge a client’s difficulties with emotional communication. Given that hearing aids help with recognizing words in emotional speech but do not compensate for problems with emotion identification, it would be helpful for potential clients to have a clear understanding of which areas of speech communication will and will not benefit from the use of hearing aids. At this time, it is not clear whether the lack of benefit arises because hearing aids do not process emotional cues in a way that compensates for users’ hearing loss or because hearing aid users are unable to make use of these cues. At present, hearing aids cannot compensate for losses in some auditory abilities that may be important for emotion perception, such as frequency resolution. In addition, listeners with more severe hearing losses may not be able to use all the auditory information even if it is made audible through amplification (Ching, Dillon, & Byrne, 1998).

Conclusion

It has been well established that older adults with normal hearing are less accurate at emotion identification than younger adults. However, little is known about how well older adults with hearing loss can identify emotions conveyed in speech, or whether hearing aids improve emotion identification. In the current study, the emotion-identification performance of older adults with hearing loss was poorer than in previous studies that compared normal-hearing younger and older listeners. Although listeners benefited from using their hearing aids for word recognition of emotional speech, hearing aids provided no significant benefit for emotion identification. Pure-tone thresholds predicted the amount of hearing aid benefit for word recognition. Neither hearing thresholds nor cognitive status predicted the amount of hearing aid benefit for emotion identification, but self-reported emotion measures were related to performance on the emotion-identification task. In contrast to previous research showing that the type of vocal emotion affected younger and older normal-hearing listeners in a similar way, the type of vocal emotion affected emotion identification differently for older hearing aid users than for younger listeners. The findings from the current study suggest that older listeners with hearing loss experience changes in emotion perception beyond those expected from normal aging, which may add to the known communication challenges associated with hearing loss. The findings from this study may guide research and development teams working in the hearing aid industry and researchers and practitioners interested in new strategies for auditory rehabilitation.

Note

Previous studies on emotion identification did not compare performance within the same participants in unaided and aided conditions. Studies that compared emotion-identification performance between normal-hearing and hearing-aided participants showed an average effect size of ds = 2.47 (Most & Aviner, 2009; Most & Michaelis, 2012; Most, Weisel, & Zaychik, 1993). With this effect size, a sample of only three participants per group would be needed to achieve a power of 0.8. Other studies that compared emotion-identification performance between younger and older normal-hearing adults showed an average effect size of ds = 1.08 (Dupuis & Pichora-Fuller, 2015; Paulmann, Pell, & Kotz, 2008; Ryan, Murray, & Ruffman, 2010). With this effect size, a sample of 14 participants per group would be needed to achieve a power of 0.8. Within-subject designs generally yield a larger effect size than between-subjects designs (all other things being equal); therefore, a sample of 14 participants in the current study (each tested twice) was expected to yield sufficient power.
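The per-group sample sizes in this Note can be checked approximately with the standard normal-approximation formula for a two-sample comparison, n per group = 2 × ((z(1 − α/2) + z(1 − β)) / d)², rounded up. The Python sketch below assumes a two-sided α of .05, which the Note does not state; `n_per_group` is a hypothetical helper, and exact noncentral-t power calculations typically give a slightly larger n.

```python
import math
from statistics import NormalDist


def n_per_group(d, alpha=0.05, power=0.8):
    """Approximate n per group for a two-sided, two-sample comparison.

    Normal approximation (assumption): n = 2 * ((z_{1-alpha/2} + z_{power}) / d) ** 2,
    rounded up to the next whole participant.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / d) ** 2)
```

Under these assumptions, `n_per_group(2.47)` returns 3 and `n_per_group(1.08)` returns 14, in line with the figures above. Because the required n scales with 1/d², halving the effect size roughly quadruples the sample needed.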

Declaration of Conflicting Interests

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by a Mitacs Accelerate Cluster Grant [IT03746] awarded to Frank A. Russo, M. Kathleen Pichora-Fuller and Gurjit Singh, a Sonova Hear the World Research Chair awarded to Frank A. Russo, and a Mitacs Elevate Postdoctoral Fellowship [IT07823] awarded to Huiwen Goy.

References

- Bagby R. M., Parker J. D. A., Taylor G. J. (1994) The twenty-item Toronto Alexithymia Scale—I. Item selection and cross-validation of the factor structure. Journal of Psychosomatic Research 38(1): 23–32. DOI:10.1016/0022-3999(94)90005-1.

- Barrett L. F., Gendron M., Huang Y.-M. (2009) Do discrete emotions exist? Philosophical Psychology 22(4): 427–437. DOI:10.1080/09515080903153634.

- Bilger R. C., Nuetzel J. M., Rabinowitz W. M., Rzeczkowski C. (1984) Standardization of a test of speech perception in noise. Journal of Speech and Hearing Research 27(1): 32–48.

- Castro S. L., Lima C. F. (2010) Recognizing emotions in spoken language: A validated set of Portuguese sentences and pseudosentences for research on emotional prosody. Behavior Research Methods 42(1): 74–81. DOI:10.3758/BRM.42.1.74.

- Ching T. Y. C., Dillon H., Byrne D. (1998) Speech recognition of hearing-impaired listeners: Predictions from audibility and the limited role of high-frequency amplification. Journal of the Acoustical Society of America 103(2): 1128–1140. DOI:10.1121/1.421224.

- Cox R. M., Alexander G. C. (1995) The abbreviated profile of hearing aid benefit. Ear and Hearing 16(2): 176–186.

- Cox R. M., Alexander G. C. (2002) The International Outcome Inventory for Hearing Aids (IOI-HA): Psychometric properties of the English version. International Journal of Audiology 41(1): 30–35.

- Daneman M., Carpenter P. A. (1980) Individual differences in working memory and reading. Journal of Verbal Learning and Verbal Behavior 19: 450–466.

- Dupuis K., Pichora-Fuller M. K. (2011) Recognition of emotional speech for younger and older talkers: Behavioural findings from the Toronto Emotional Speech Set. Canadian Acoustics—Acoustique Canadienne 39(3): 182–183.

- Dupuis K., Pichora-Fuller M. K. (2014) Intelligibility of emotional speech in younger and older adults. Ear and Hearing 35(6): 695–707.

- Dupuis K., Pichora-Fuller M. K. (2015) Aging affects identification of vocal emotions in semantically neutral sentences. Journal of Speech, Language, and Hearing Research 58(3): 1061–1076. DOI:10.1044/2015_JSLHR-H-14-0256.

- Ekman P. (2007) Emotions revealed: Recognizing faces and feelings to improve communication and emotional life. New York, NY: St. Martin’s Griffin.

- Ferguson M. A., Kitterick P. T., Chong L. Y., Edmondson-Jones M., Barker F., Hoare D. J. (2017) Hearing aids for mild to moderate hearing loss in adults. Cochrane Database of Systematic Reviews 2017(9): CD012023. DOI:10.1002/14651858.CD012023.pub2.

- Gordon-Salant S., Cole S. S. (2016) Effects of age and working memory capacity on speech recognition performance in noise among listeners with normal hearing. Ear and Hearing 37(5): 593–602. DOI:10.1097/AUD.0000000000000316.

- Holube I., Fredelake S., Vlaming M., Kollmeier B. (2010) Development and analysis of an international speech test signal (ISTS). International Journal of Audiology 49(12): 891–903. DOI:10.3109/14992027.2010.506889.

- Husain F. T., Carpenter-Thompson J. R., Schmidt S. A. (2014) The effect of mild-to-moderate hearing loss on auditory and emotion processing networks. Frontiers in Systems Neuroscience 8: 10. DOI:10.3389/fnsys.2014.00010.

- Jiam N. T., Caldwell M., Deroche M. L., Chatterjee M., Limb C. J. (2017) Voice emotion perception and production in cochlear implant users. Hearing Research 352: 30–39. DOI:10.1016/j.heares.2017.01.006.

- Lambrecht L., Kreifelts B., Wildgruber D. (2012) Age-related decrease in recognition of emotional facial and prosodic expressions. Emotion 12(3): 529–539. DOI:10.1037/a0026827.

- Lima C. F., Alves T., Scott S. K., Castro S. L. (2014) In the ear of the beholder: How age shapes emotion processing in nonverbal vocalizations. Emotion 14(1): 145–160. DOI:10.1037/a0034287.

- Malek-Ahmadi M., Powell J. J., Belden C. M., O’Connor K., Evans L., Coon D. W., Nieri W. (2015) Age- and education-adjusted normative data for the Montreal Cognitive Assessment (MoCA) in older adults age 70–99. Aging, Neuropsychology, and Cognition 22(6): 755–761. DOI:10.1080/13825585.2015.1041449.

- Mitchell R. L. C. (2007) Age-related decline in the ability to decode emotional prosody: Primary or secondary phenomenon? Cognition and Emotion 21(7): 1435–1454. DOI:10.1080/02699930601133994.

- Mitchell R. L. C., Kingston R. A. (2014) Age-related decline in emotional prosody discrimination: Acoustic correlates. Experimental Psychology 61(3): 215–223. DOI:10.1027/1618-3169/a000241.

- Most T., Aviner C. (2009) Auditory, visual, and auditory-visual perception of emotions by individuals with cochlear implants, hearing aids, and normal hearing. Journal of Deaf Studies and Deaf Education 14(4): 449–464. DOI:10.1093/deafed/enp007.

- Most T., Michaelis H. (2012) Auditory, visual, and auditory-visual perceptions of emotions by young children with hearing loss versus children with normal hearing. Journal of Speech, Language, and Hearing Research 55(4): 1148–1162. DOI:10.1044/1092-4388(2011/11-0060).

- Most T., Weisel A., Zaychik A. (1993) Auditory, visual and auditory-visual identification of emotions by hearing and hearing-impaired adolescents. British Journal of Audiology 27(4): 247–253. DOI:10.3109/03005369309076701.

- Nashiro K., Sakaki M., Mather M. (2012) Age differences in brain activity during emotion processing: Reflections of age-related decline or increased emotion regulation? Gerontology 58(2): 156–163. DOI:10.1159/000328465.

- Nasreddine Z. S., Phillips N. A., Bédirian V., Charbonneau S., Whitehead V., Collin I., Cummings J. L., Chertkow H. (2005) The Montreal Cognitive Assessment, MoCA: A brief screening tool for mild cognitive impairment. Journal of the American Geriatrics Society 53(4): 695–699. DOI:10.1111/j.1532-5415.2005.53221.x.

- Newman C. W., Weinstein B. E., Jacobson G. P., Hug G. A. (1990) The hearing handicap inventory for adults: Psychometric adequacy and audiometric correlates. Ear and Hearing 11(6): 430–433. [DOI] [PubMed] [Google Scholar]

- Noble W., Jensen N. S., Naylor G., Bhullar N., Akeroyd M. A. (2013) A short form of the Speech, Spatial and Qualities of Hearing Scale suitable for clinical use: The SSQ12. International Journal of Audiology 52(6): 409–412. DOI:10.3109/14992027.2013.781278. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Orbelo D. M., Grim M. A., Talbott R. E., Ross E. D. (2005) Impaired comprehension of affective prosody in elderly subjects is not predicted by age-related hearing loss or age-related cognitive decline. Journal of Geriatric Psychiatry and Neurology 18(1): 25–32. DOI:10.1177/0891988704272214. [DOI] [PubMed] [Google Scholar]

- Paulmann S., Pell M. D., Kotz S. A. (2008) How aging affects the recognition of emotional speech. Brain and Language 104(3): 262–269. DOI:10.1016/j.bandl.2007.03.002. [DOI] [PubMed] [Google Scholar]

- Pichora-Fuller M. K., Singh G. (2006) Effects of age on auditory and cognitive processing: Implications for hearing aid fitting and audiologic rehabilitation. Trends in Amplification 10(1): 29–59. DOI:10.1177/108471380601000103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Picou E. M. (2016. a) How hearing loss and age affect emotional responses to nonspeech sounds. Journal of Speech, Language, and Hearing Research 59(5): 1233–1246. DOI:10.1044/2016_JSLHR-H-15-0231. [DOI] [PubMed] [Google Scholar]

- Picou E. M. (2016. b) Acoustic factors related to emotional responses to sound. Canadian Acoustics—Acoustique Canadienne 44(3): 126–127. [Google Scholar]

- Polonenko, M. J., Scollie, S. D., Moodie, S., Seewald, R. C., Laurnagaray, D., Shantz, J., & Richards, A. (2010). Fit to targets, preferred listening levels, and self-reported outcomes for the DSL v5.0a hearing aid prescription for adults. International Journal of Audiology, 49(8), 550–560. DOI:10.3109/14992021003713122. [DOI] [PubMed]

- Rudner M., Lunner T. (2014) Cognitive spare capacity and speech communication: A narrative overview. BioMed Research International 2014. DOI:10.1155/2014/869726.

- Ruffman T., Halberstadt J., Murray J. (2009) Recognition of facial, auditory, and bodily emotions in older adults. Journals of Gerontology—Series B Psychological Sciences and Social Sciences 64(6): 696–703. DOI:10.1093/geronb/gbp072.

- Ruffman T., Henry J. D., Livingstone V., Phillips L. H. (2008) A meta-analytic review of emotion recognition and aging: Implications for neuropsychological models of aging. Neuroscience and Biobehavioral Reviews 32(4): 863–881. DOI:10.1016/j.neubiorev.2008.01.001.

- Ryan M., Murray J., Ruffman T. (2010) Aging and the perception of emotion: Processing vocal expressions alone and with faces. Experimental Aging Research 36(1): 1–22. DOI:10.1080/03610730903418372.

- Scherer K. R. (1995) Expression of emotion in voice and music. Journal of Voice 9(3): 235–248. DOI:10.1016/S0892-1997(05)80231-0.

- Schmidt J., Janse E., Scharenborg O. (2016) Perception of emotion in conversational speech by younger and older listeners. Frontiers in Psychology 7: 781. DOI:10.3389/fpsyg.2016.00781.

- Schmidt J., Herzog D., Scharenborg O., Janse E. (2016) Do hearing aids improve affect perception? Advances in Experimental Medicine and Biology 894: 47–55. DOI:10.1007/978-3-319-25474-6_6.

- Sen A., Isaacowitz D., Schirmer A. (2017) Age differences in vocal emotion perception: On the role of speaker age and listener sex. Cognition and Emotion 32(6): 1189–1204. DOI:10.1080/02699931.2017.1393399.

- Singh G., Liskovoi L., Launer S., Russo F. A. (2018) The Emotional Communication in Hearing Questionnaire (EMO-CheQ): Development and evaluation. Ear and Hearing. Advance online publication. DOI:10.1097/AUD.0000000000000611.

- Souza P., Arehart K., Neher T. (2015) Working memory and hearing aid processing: Literature findings, future directions, and clinical applications. Frontiers in Psychology 6: 1894. DOI:10.3389/fpsyg.2015.01894.

- Studebaker G. A. (1985) A “rationalized” arcsine transform. Journal of Speech and Hearing Research 28(3): 455–462.

- Tillman T. W., Carhart R. (1966) An expanded test for speech discrimination utilizing CNC monosyllabic words: Northwestern University Auditory Test No. 6 (SAM-TR-66-55). Brooks Air Force Base, TX: USAF School of Aerospace Medicine.

- Urry H. L., Gross J. J. (2010) Emotion regulation in older age. Current Directions in Psychological Science 19(6): 352–357. DOI:10.1177/0963721410388395.

- Vytal K., Hamann S. (2010) Neuroimaging support for discrete neural correlates of basic emotions: A voxel-based meta-analysis. Journal of Cognitive Neuroscience 22(12): 2864–2885. DOI:10.1162/jocn.2009.21366.

- Waaramaa T., Kukkonen T., Stoltz M., Geneid A. (2016) Hearing impairment and emotion identification from auditory and visual stimuli. International Journal of Listening 32(3): 150–162. DOI:10.1080/10904018.2016.1250633.

- Watson D., Clark L. A., Tellegen A. (1988) Development and validation of brief measures of positive and negative affect: The PANAS scales. Journal of Personality and Social Psychology 54(6): 1063–1070.

- Wong L. L. N. (2011) Evidence on self-fitting hearing aids. Trends in Amplification 15(4): 215–225. DOI:10.1177/1084713812444009.