KEYWORDS: influenza, rapid tests, diagnostics, public health

ABSTRACT

Rapid antigen tests for influenza, here referred to as rapid influenza diagnostic tests (RIDTs), have been widely used for the diagnosis of influenza since their introduction in the 1990s due to their ease of use, rapid results, and suitability for point of care (POC) testing. However, issues related to the diagnostic sensitivity of these assays have been known for decades, and these issues gained greater attention following reports of their poor performance during the 2009 influenza A(H1N1) pandemic. In turn, serious concerns arose about the consequences of false-negative results, which could pose significant risks to both individual patient care and public health efforts. In response to these concerns, the FDA convened an advisory panel in June 2013 to discuss options to improve the regulation of the performance of RIDTs. A proposed order was published on 22 May 2014, and the final order was published on 12 January 2017, reclassifying RIDTs from class I to class II medical devices, with additional requirements to comply with four new special controls. This reclassification is a landmark achievement in the regulation of diagnostic devices for infectious diseases and has important consequences for the future of diagnostic influenza testing with commercial tests, warranting the prompt attention of clinical laboratories, health care systems, and health care providers.

INTRODUCTION

Influenza is a rapidly transmitted and highly contagious infection capable of causing severe acute respiratory disease. The clinical utility of laboratory testing for influenza includes confirming infection, distinguishing it from other respiratory agents, assessing the need for further testing, and determining whether the virus is circulating in a patient population. Prompt and accurate diagnosis helps prevent serious, complicated, or even fatal outcomes by facilitating appropriate treatment and patient management. In many health care facilities, testing also supports infection prevention efforts to mitigate nosocomial transmission through patient isolation or cohorting. The data generated by influenza testing also provide a wealth of public health information, including epidemiological information on circulating viral types and subtypes, associated disease severity, antiviral drug susceptibility, the populations affected, and special risk groups. Additionally, these data help determine the degree of match to vaccine strains, support monitoring for novel subtypes with pandemic potential, and provide key information to policy makers, emergency response officials, clinicians, the public, and the media. Poorly performing diagnostic laboratory tests therefore not only jeopardize patient management decisions but also undermine the accuracy of public health data and, in turn, the appropriateness of public health policy, guidance, and interventions.

Accurate influenza diagnosis can also help prevent unnecessary antibiotic use. From 2005 to 2006, 59% of ambulatory visits for acute respiratory tract infections in the United States resulted in an antibiotic prescription, even though most of these infections are caused by viruses (1). Antibiotics increase patients' risks of Clostridium difficile and antibiotic-resistant infections and cause an array of side effects; together, these account for 1 out of every 5 emergency department visits for adverse drug events (2). False-negative influenza tests could further contribute to these adverse events, since patients who test negative for influenza are more likely to receive an antibiotic prescription than patients who test positive (3).

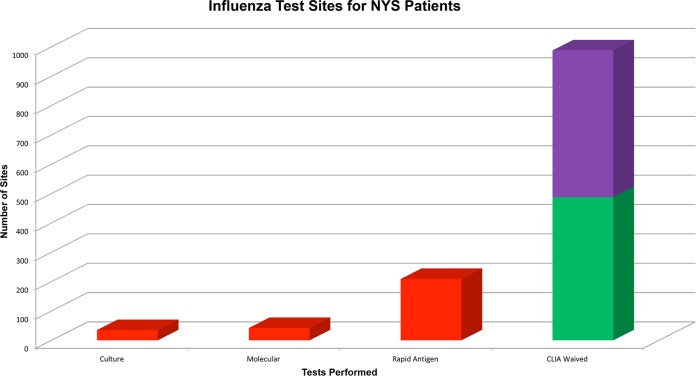

INFLUENZA TESTING LABORATORIES

There is a range of testing environments in the United States where diagnostic influenza testing is performed, with varying requirements for quality management across these facilities. Clinical laboratories that are certified under the Clinical Laboratory Improvement Amendments (CLIA) by the Centers for Medicare and Medicaid Services, accredited by the College of American Pathologists, or overseen by other agencies, such as the Clinical Laboratory Evaluation Program in New York, are required to have extensive quality management systems (QMS) in place. These QMS include extensive documentation requirements and regulations regarding staff qualifications, equipment maintenance, testing procedures, and many other aspects of laboratory operations. Further, there are requirements for annual proficiency testing on target analytes and annual competency testing of all staff. Clinical laboratories are also inspected every 2 years, and their directors almost universally hold doctoral degrees. In contrast, limited service laboratories (LSLs) and physician office laboratories (POLs) have greatly reduced QMS requirements and quality assurance oversight: far fewer operating standards and regulations, no requirement for proficiency testing, and minimal inspections, except for POLs that perform high-complexity testing. Notably, there are many times more LSLs and POLs than clinical laboratories. The extensive laboratory regulatory system in New York State (NYS) facilitates the collection of accurate data on numerous aspects of laboratory operations and testing in the state. Data collated in preparation for the 2013 FDA hearing on RIDT reclassification showed that 286 clinical laboratories were performing influenza testing, compared with 488 limited service laboratories and approximately 500 POLs performing RIDTs (Fig. 1).

FIG 1.

Categories of test sites where NYS patients are tested for influenza and the types of influenza tests performed at each type of laboratory: clinical laboratories with extensive QMS (red), limited service laboratories (green), and physician office laboratories (purple); the latter two have far fewer quality assurance (QA) requirements, as described in the text. Data are from 2013 and were presented at the public FDA hearing on the reclassification of rapid devices for influenza detection.

TEST METHODS FOR INFLUENZA

Available laboratory methods for influenza testing include serology, viral culture, antigen detection, and nucleic acid amplification tests (NAATs). Each facility's choice of method and assay depends on many factors, including staff expertise, available instrumentation, budget, the patient population served, available space, and other facility resources. Historically, choices focused on viral culture and antigen-based methods, such as RIDTs and immunofluorescent staining. For many years, however, laboratories have increasingly used NAATs, particularly reverse transcription-PCR (RT-PCR). More recently, multiplex molecular panels that test for multiple respiratory pathogens in a single, often highly automated, test have been rapidly adopted. As the data in Fig. 1 demonstrate, most clinical influenza testing is performed at POLs and LSLs using RIDTs. In NYS, influenza is a reportable result for clinical laboratories, and the test method is recorded together with the result. Data from the 2012-2013 season also showed that even among clinical laboratories with scientifically qualified staff and doctoral-qualified directors, a large proportion of sites used RIDTs extensively (Fig. 2). Overall, therefore, a major proportion of influenza testing is performed at test sites with minimal QMS and thus relies heavily on the intrinsic performance of the test kit.

FIG 2.

Positive influenza laboratory tests reported to NYS during the 2012-2013 influenza season by test type. Data were presented at the public FDA hearing on the reclassification of rapid devices for influenza detection. DFA, direct fluorescent antibody; RT-PCR, reverse transcription-PCR; RIDT, rapid influenza diagnostic test.

ADVANTAGES OF RIDTs

While RIDTs are less sensitive than other influenza detection methods, they offer three main advantages: rapid results, low cost, and simplicity, the last of which makes them usable in settings with minimal QMS. Many RIDTs have short turnaround times (TATs) of 10 to 15 min, significantly faster than most NAAT methods, which have run times of 1 h or more. Many RIDTs are “CLIA waived,” meaning that they are very simple to perform and do not require scientifically qualified personnel. For office- or clinic-based outpatient visits, POC testing can help providers establish a diagnosis without sending specimens to an offsite laboratory. Performing RIDTs at the point of care facilitates the rapid provision of anti-influenza therapy to laboratory-confirmed-positive patients while they are still physically present to receive a prescription. However, now that some rapid NAATs have received CLIA-waived status, RIDTs are no longer the only method available for POC influenza testing; some rapid NAATs provide TATs of less than 20 min (4, 5). Commercial NAATs are more expensive than RIDTs, though, and testing costs are an important consideration for health systems. Overall, test costs must be balanced against patient outcomes and global costs to the system.

GENERAL ISSUES WITH INFLUENZA DETECTION

Influenza virus detection is affected by many variables, including those intrinsic to the virus itself, as well as extrinsic factors related to sample collection, transport, storage, and testing practices.

Two key phenomena in influenza virus biology can potentially reduce the sensitivity of diagnostic assays: antigenic drift and antigenic shift. Antigenic drift occurs in both influenza A and B viruses through the accumulation of genetic mutations that can alter the immunogenicity of virus-coded antigens, resulting in seasonal influenza epidemics. In contrast, antigenic shift occurs only in influenza A viruses, when gene segments from two or more strains reassort to give rise to a novel virus, such as the 2009 pandemic influenza A(H1N1), which resulted from the reassortment of four different human, swine, and avian influenza strains within a swine host. The most significant changes occur in the hemagglutinin and neuraminidase genes, which can affect the sensitivities of molecular methods (6).

As for all infectious diagnostic tests, influenza virus detection is also highly dependent on sample collection. For optimal sensitivity, samples should be collected within 72 h of symptom onset, ideally earlier so that appropriate antiviral therapy can be given within 48 h of symptom onset. Swabs of the posterior nasopharynx are most commonly recommended for respiratory viral testing, although nasal aspirates and washes may be better tolerated by young children (7). Nasal aspirates and washes are acceptable for some FDA-cleared assays, while nasal, oral, and throat swabs are not recommended. Lower-respiratory-tract sampling may be indicated for viral pneumonia or for testing for suspected avian influenza, although these specimen sources require laboratory validation. All specimens should be promptly transported to the testing laboratory, as delays in transport or improper specimen storage can adversely affect specimen quality.

SPECIFIC CONCERNS REGARDING RIDT PERFORMANCE

General RIDT concerns.

Despite the advantages that RIDTs offer, including rapid TAT, low cost, and suitability for POC test settings, the clinical utility of these assays is limited by poor sensitivity and negative predictive value (NPV). Unlike viral culture or NAAT, antigen-based detection methods cannot achieve the lower limits of detection attained by amplified methods. RIDTs require 10⁴ to 10⁶ infectious influenza particles for a positive result (8) and are therefore not as well suited for adult specimens, which contain less virus than those from children. Due to these detection limits, RIDT performance is more heavily dependent on optimal sample collection and transportation than other methods (9, 10).

Another major concern with RIDTs is that performance can vary significantly year to year due to antigenic variation of circulating or novel strains (11, 12). Furthermore, testing is often performed by non-laboratory-trained personnel, with results determined by subjective observation and interpretation, introducing additional potential sources of error. To mitigate human error, newer formats of rapid antigen tests have been developed that utilize automated readers to augment detection and eliminate interobserver variability. These improved assays have been shown to be more sensitive than traditional-format RIDTs for both influenza A and B (13–15), with a limit of detection up to 40-fold lower depending on the comparator RIDT assay and the specific influenza strain(s) evaluated (13, 16, 17).

RIDTs are also limited by their inability to subtype influenza viruses (e.g., H1 versus H3), although many NAATs share this limitation. For hospitalized inpatients, viral subtyping is critical for patient isolation or cohorting in accordance with hospital infection control practices and in some influenza seasons has been important for the selection of appropriate antiviral treatment options. Subtyping is also essential for the accurate collection of local, regional, and national epidemiologic information to appropriately coordinate public health responses.

Published metadata studies.

Hundreds of studies have been published describing the analytic performance of RIDTs, with a wide range in reported sensitivity, dependent on many of the factors described above. The pooled sensitivity in three meta-analyses was between 51 and 67.5% (18–20). Chartrand et al. (20) found that RIDT sensitivity was higher in children (66.6%) than in adults (53.9%), higher for influenza A (64.6%) than influenza B (52.2%), and higher if culture (72.3%) rather than RT-PCR (53.9%) was used as the comparator method.

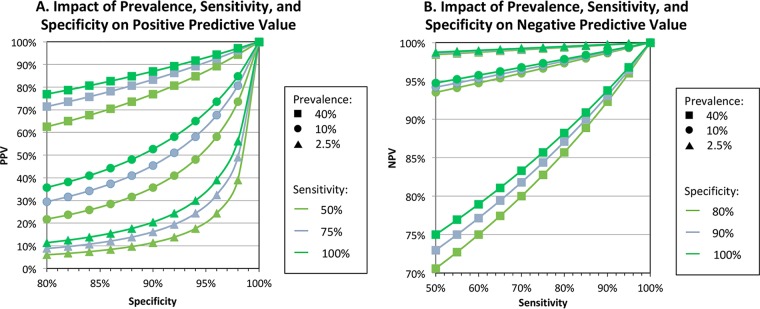

Due to the low to moderate sensitivity of influenza RIDTs, the CDC recommends reflexing samples that test negative to a more sensitive method, such as RT-PCR. Since predictive values of diagnostic tests depend on prevalence, test results must be interpreted in the context of influenza activity (see Fig. 3). When seasonal activity is high, tests with low to moderate sensitivity, like RIDTs, will have a substantial proportion of false-negative results (21). For comparison, a high-sensitivity test (e.g., sensitivity of 95%), such as NAAT, will have a good NPV when activity is either low (2.5% prevalence) or high (40% prevalence), at 99.9% versus 96.8%, respectively. In contrast, a low-sensitivity test (e.g., sensitivity of 50%) will have an adequate NPV when activity is low (NPV, 98.7%) but a significantly worse NPV when activity is high (NPV, 75.0%). Furthermore, when prevalence is low, the positive predictive value of RIDTs is poor. Thus, when the need for influenza diagnostic testing is highest, i.e., when influenza is not at high prevalence and there is less clinical certainty regarding the diagnosis of a presenting patient, RIDTs cannot be counted on for an accurate result. To mitigate these risks, the CDC recommends that anti-influenza therapy not be withheld because of a negative RIDT result when there is a firm clinical suspicion of influenza (www.cdc.gov/flu).
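The prevalence dependence described above follows directly from Bayes' theorem. The short Python sketch below computes PPV and NPV from sensitivity, specificity, and prevalence; the function name `predictive_values` is ours, not from any cited source. The text does not state the specificity behind its NPV figures, but the quoted values (99.9%, 96.8%, 98.7%, and 75.0%) are reproduced when specificity is assumed to be 100%, so that assumption is used here. The 95% specificity in the final calculation is a hypothetical value chosen only to illustrate how PPV falls at low prevalence.

```python
"""Predictive values of a diagnostic test as a function of prevalence (sketch)."""


def predictive_values(sensitivity: float, specificity: float,
                      prevalence: float) -> tuple[float, float]:
    """Return (PPV, NPV); all arguments are fractions between 0 and 1."""
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    false_pos = (1 - specificity) * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


if __name__ == "__main__":
    # Assumption: specificity = 100%, which reproduces the NPVs quoted in the text.
    for label, sens in (("high-sensitivity test (NAAT-like)", 0.95),
                        ("low-sensitivity test (RIDT-like)", 0.50)):
        for prev in (0.025, 0.40):
            _, npv = predictive_values(sens, 1.0, prev)
            print(f"{label}, prevalence {prev:.1%}: NPV {npv:.1%}")

    # Hypothetical specificity of 95%: PPV collapses when prevalence is low.
    ppv, _ = predictive_values(0.50, 0.95, 0.025)
    print(f"low-sensitivity test, prevalence 2.5%, specificity 95%: PPV {ppv:.1%}")
```

Running the script prints the four NPVs quoted in the text and, for the hypothetical low-prevalence case, a PPV of about 20%, even though the assumed specificity is high.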

FIG 3.

Impact of prevalence, sensitivity, and specificity on positive (A) and negative (B) predictive values. When sensitivity is low and prevalence is high, PPV is high and NPV is low; when sensitivity is low and prevalence is low, NPV is high and PPV is low. Influenza prevalence is usually very high for only a few weeks each year in temperate climates.

Detection of pdmH1N1.

While the low sensitivity of RIDTs has been a well-known issue for years, the topic received national attention during the 2009 influenza A(H1N1) pandemic. A worrisome report from a high-volume New York laboratory showed that the real-world sensitivity of two commercial RIDTs for this novel virus was 17.8% overall, with one assay detecting only 9.6% of positive samples (10). The authors considered viral antigenic variation to be a significant contributor to the extremely poor performance, as even direct fluorescent antibody (DFA) testing showed a sensitivity of just 48.6%. In addition, season- or hospital-specific factors may also have contributed to the poor performance, including poor-quality specimens collected by inexperienced staff and a very high workload during a busy season (22). Nevertheless, the sensitivity of a commercial NAAT instrument was 97.8% for these same suboptimal samples. A subsequent smaller study by the CDC also found unreliable sensitivity for influenza A(H1N1) strains using three different commercial RIDTs, with sensitivities ranging from 40 to 69%, depending on the manufacturer (12). Many subsequent studies also found lower RIDT sensitivity for pandemic influenza A(H1N1) than for seasonal influenza (22).

Multistrain, multikit RIDT analysis.

Given these concerns regarding the sensitivity of RIDTs, a collaborative effort by the CDC and the Medical College of Wisconsin evaluated 11 commercially available RIDTs for the detection of 23 contemporary influenza strains in order to provide a comprehensive update on RIDT analytic performance (23). Performance was found to be dependent on both the viral strain and concentration; at high viral concentrations, all strains were detected by most of the RIDTs, but performance was highly varied at moderate dilutions, and almost all the assays failed to detect any strains at low viral concentrations. These results confirmed that RIDT sensitivity can substantially vary from season to season, depending on which strains are circulating, and that many tests may not be suitable for detecting some circulating strains each year.

Detection of variant or novel influenza viruses.

There have also been longstanding concerns regarding the detection capability of RIDTs for newly emerging influenza subtypes with an elevated risk of pandemic threat. For example, an assessment of six RIDTs showed that detection limits for avian influenza A(H7N9) virus were insufficient compared with the viral loads observed in most clinical specimens, and that most of the tests would therefore not have detected the majority of positive specimens from clinical cases of influenza A(H7N9) virus (17). The performance of RIDTs for detecting variant influenza A(H3N2) strains has also been assessed and found to vary significantly by strain and by assay (24). Some RIDTs failed to detect certain variant strains at any tested dilution, again highlighting serious concerns that the tests could fail to detect the emergence of variant or novel strains during a public health emergency.

CONVENING OF FDA PANEL

In June 2013, the FDA Division of Microbiology Devices convened an advisory panel to discuss the regulation of RIDTs, considering recent studies highlighting their low sensitivity, especially within the context of the 2009 influenza A(H1N1) virus pandemic. There was significant concern at the FDA, CDC, and Biomedical Advanced Research and Development Authority (BARDA) about the risks associated with false-negative RIDT results and the potential harm that could be caused to both patients and public health efforts. Panel members included influenza experts from academia, clinical medicine, public health, and the laboratory diagnostic industry. At the public hearing, invited speakers presented detailed explanations and data describing the issues, and the floor was opened to additional comments and questions. The panel listened to and discussed a detailed explanation of the proposed reclassification of RIDTs from class I to class II medical devices, as well as details of the additional controls that could be enforced to ensure a more robust diagnostic performance by RIDTs.

FDA CLASSIFICATION OF MEDICAL DEVICES

The FDA regulates in vitro diagnostic (IVD) devices based on the device's classification, derived from the Medical Device Amendments of 1976 to the Federal Food, Drug, and Cosmetic Act. All medical devices, including IVD devices, are categorized into one of three risk-based classes that differ in the level of control needed to ensure their safety and effectiveness. Class I devices require the least regulatory oversight, whereas class III devices require the most. Most class I and all class II devices can be cleared for marketing through the 510(k) submission process, whereby a device must demonstrate that it is substantially equivalent in safety and effectiveness to a legally marketed predicate device. Class III devices, on the other hand, require a premarket approval application, and the FDA exerts greater oversight. More information can be found on the FDA website (https://www.fda.gov/medicaldevices/deviceregulationandguidance/overview/classifyyourdevice/).

CLASS I VERSUS CLASS II DEVICES

Class I medical devices are considered not to present any unreasonable risk of illness or injury to persons. About 47% of all medical devices fall into this category. Common examples include dental floss, elastic bandages, and bedpans, which all present very little risk of harm if the device is compromised. In clinical microbiology laboratories, common class I devices include incubators, multipurpose culture media, and most serologic reagents. General controls are sufficient to regulate class I devices and include registration of manufacturing facilities; listing of products; good manufacturing practices; quality system procedures that provide for notification of risks, repair, replacement, or refund; restrictions on sale, distribution, or use; and other regulatory controls, such as labeling, adverse event reporting, and reporting of misbranding or adulteration of the device. Due to their low risk, most class I devices are exempt from the 510(k) requirement, but RIDTs were never exempted, as they are IVD tests performed directly on clinical samples.

In contrast, class II medical devices pose higher potential risks than class I devices. About 43% of all medical devices are grouped into this classification. Common examples include condoms, powered wheelchairs, and pregnancy tests, all of which could pose potential harm if their safety or effectiveness were compromised. Common class II devices used in clinical microbiology laboratories include culture media for antimicrobial susceptibility testing and multiplex NAATs for respiratory pathogens. In addition to general controls, class II devices require special controls, examples of which include performance standards, postmarket surveillance, patient registries, guidelines, design controls, and other appropriate actions to mitigate risk.

HISTORY OF RIDT CLASSIFICATION

RIDTs were initially cleared for marketing as class I medical devices in the 1990s, and many were then CLIA waived in the early 2000s. At the time, there was a substantial unmet need for a rapid test that could augment the clinical diagnosis of influenza and direct appropriate therapy and patient management. Although RIDTs have long been known to be relatively insensitive, they were nonetheless FDA cleared as low-risk devices due to their utility as easy-to-use rapid tests that were available at the point of care and capable of providing a high positive predictive value during an influenza season.

However, in the context of more recent data demonstrating the poor sensitivity of some RIDTs to detect 2009 influenza A(H1N1) virus (10, 12), as well as variant and novel influenza strains (17, 24), concerns about false-negative RIDTs escalated, and their reclassification was reconsidered. Failure to correctly diagnose influenza could lead to nonprovision or delay in administering anti-influenza therapy. Not only can anti-influenza therapy mitigate symptoms and shorten the course of illness, but its use can also reduce the risk of serious sequelae of infection and mortality (25, 26).

False-negative RIDTs can also falsely reassure patients that they do not have influenza, which could increase viral transmission to personal contacts and promote further disease spread. Sensitive virus detection is also essential for collecting accurate epidemiologic information, and false-negative results could lead to inappropriately coordinated public health responses and delay the recognition of novel viruses that have pandemic potential.

With the collective data confirming longstanding concerns about RIDT sensitivity, the convened panel unanimously agreed with all aspects of the proposal, and the FDA published a proposed order to reclassify RIDTs from class I to class II medical devices with new special controls on 22 May 2014 (28).

PROPOSED SPECIAL CONTROLS

The four special controls that were proposed to mitigate the risks associated with RIDTs are the following: (i) products must demonstrate compliance with minimum performance standards (Table 1) or can no longer be marketed after 12 January 2018; (ii) an appropriate (FDA-accepted) comparator method, either viral culture or a molecular method, is required for establishing the performance of a new antigen-based RIDT; (iii) annual analytical reactivity testing must be performed with contemporary influenza strains and must meet specific criteria; and (iv) analytical testing with newly emergent strains is required in situations involving an emergency or a potential emergency to public health.

TABLE 1.

Minimum performance standards for rapid influenza virus detection tests

| Comparator method | Influenza A virus, point estimate (%) | Influenza A virus, lower bound of 95% CI (%) | Influenza B virus, point estimate (%) | Influenza B virus, lower bound of 95% CI (%) |
|---|---|---|---|---|
| Sensitivity | | | | |
| Viral culture | 90 | 80 | 80 | 70 |
| Molecular | 80 | 70 | 80 | 70 |
| Specificity | | | | |
| Viral culture | 90 | 90 | | |
| Molecular | 90 | 90 | | |

Minimum performance standards.

The proposal for minimum performance standards focused on sensitivity, since RIDT specificity has not been an issue. A review of all prospective clinical studies in support of 510(k) submissions was conducted for all RIDTs cleared for marketing since 1998, and a literature review was also performed for studies published within the preceding 5 years. Not surprisingly, real-world performance of RIDTs showed considerably poorer sensitivity than the 510(k) submission data (27). Based on these reviews, the FDA proposed the minimum performance standards shown in Table 1, including a minimum sensitivity of 80% for influenza A and B when molecular methods are used as the comparator, and a minimum sensitivity of 90% for influenza A when viral culture is used as the comparator. Of the 16 RIDTs on the market at the time, all products met the proposed specificity criteria, but only 7 of 16 RIDTs met the proposed sensitivity criteria.
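Each sensitivity cell in Table 1 carries two criteria, a point estimate and the lower bound of its 95% confidence interval, so a small study can meet the first yet fail the second. The Python sketch below checks hypothetical study counts against the sensitivity rows of Table 1. The text does not say which interval method the FDA applies; the Wilson score interval used here is our assumption, as are the function names and the example counts.

```python
"""Check hypothetical study counts against the Table 1 sensitivity standards (sketch)."""
import math

# Table 1 sensitivity criteria, in percent: (minimum point estimate,
# minimum lower bound of the 95% CI), keyed by (comparator, influenza type).
SENSITIVITY_STANDARDS = {
    ("viral culture", "A"): (90.0, 80.0),
    ("viral culture", "B"): (80.0, 70.0),
    ("molecular", "A"): (80.0, 70.0),
    ("molecular", "B"): (80.0, 70.0),
}


def wilson_lower_bound(successes: int, n: int, z: float = 1.96) -> float:
    """Lower limit (percent) of the two-sided Wilson score interval.

    Assumption: the Wilson method is an illustrative choice; the text does
    not specify the confidence-interval method used by the FDA.
    """
    p = successes / n
    denom = 1 + z**2 / n
    centre = p + z**2 / (2 * n)
    margin = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return 100 * (centre - margin) / denom


def meets_sensitivity_standard(true_pos: int, false_neg: int,
                               comparator: str, flu_type: str) -> bool:
    """True only if both the point estimate and the CI lower bound pass."""
    n = true_pos + false_neg
    if n == 0:
        raise ValueError("no comparator-positive specimens")
    point = 100 * true_pos / n
    lower = wilson_lower_bound(true_pos, n)
    min_point, min_lower = SENSITIVITY_STANDARDS[(comparator, flu_type)]
    return point >= min_point and lower >= min_lower


if __name__ == "__main__":
    # Hypothetical influenza A studies against viral culture.
    for tp, fn in ((92, 8), (45, 5)):
        n = tp + fn
        print(f"{tp}/{n}: point {100 * tp / n:.1f}%, "
              f"lower bound {wilson_lower_bound(tp, n):.1f}%, "
              f"passes: {meets_sensitivity_standard(tp, fn, 'viral culture', 'A')}")
```

Under these assumptions, detecting 92 of 100 culture-positive influenza A specimens passes (lower bound about 85.0%), whereas 45 of 50 fails: its 90% point estimate meets the threshold, but its lower bound of about 78.6% does not.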

Reference method.

While viral culture has long been considered the “gold standard” for influenza detection, the FDA recognizes that molecular methods are also suitable comparators for establishing the performance of a new device. Molecular methods are more sensitive than viral culture, although they cannot distinguish live virus from nonviable viral nucleic acids. Even though molecular methods achieve lower detection limits than viral culture, the FDA maintains that viral culture, if performed well, is still a useful reference method. Therefore, either viral culture or molecular testing can be used as a reference method, albeit with different minimum sensitivity requirements for each (see Table 1).

Annual testing with contemporary strains.

Manufacturers are required to perform annual analytical reactivity testing against contemporary human influenza viruses that are identified by the FDA in consultation with the CDC, and sourced from the CDC or an alternative provider identified by the FDA. The CDC Influenza Virus Panel for annual reactivity testing can be requested at https://www.cdc.gov/flu/dxfluviruspanel/index.htm. Testing must be performed according to a standardized protocol that is acceptable to the FDA. Test results must be made publicly available by 31 July of each year either by including them in the device labeling or through the manufacturer's public website. Nonreactivity with a particular strain must be reflected in the updated product labeling, but these updates do not have to be submitted to the FDA.

Provisions for a public health emergency.

In the event of a declared public health emergency due to an emerging novel influenza virus, manufacturers must perform analytical reactivity testing within 30 days of FDA notification of the availability of characterized virus samples. Within 60 days from the date that the FDA notifies manufacturers that characterized viral samples are available for test evaluation, manufacturers must include the influenza emergency analytical reactivity test results in the product labeling. Test results must also be made publicly available through a hyperlink on the manufacturer's website.
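Both emergency deadlines are counted from the same FDA notification date. A minimal Python sketch, assuming calendar days and using a hypothetical notification date and a hypothetical helper named `emergency_deadlines`, shows how the two windows could be tracked:

```python
from datetime import date, timedelta

# Windows described in the special controls, counted from the date the FDA
# notifies manufacturers that characterized virus samples are available.
# Assumption: windows are measured in calendar days.
TESTING_WINDOW = timedelta(days=30)
LABELING_WINDOW = timedelta(days=60)


def emergency_deadlines(notified: date) -> dict[str, date]:
    """Return the reactivity-testing and labeling deadlines for a notification date."""
    return {
        "analytical reactivity testing": notified + TESTING_WINDOW,
        "results in product labeling": notified + LABELING_WINDOW,
    }


if __name__ == "__main__":
    # Hypothetical notification date, for illustration only.
    for step, due in emergency_deadlines(date(2024, 3, 1)).items():
        print(f"{step}: due by {due.isoformat()}")
```

For a notification on 1 March 2024, this yields 31 March 2024 for testing and 30 April 2024 for labeling.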

PUBLICATION OF THE ORDER AND PUBLIC COMMENT

After publication of the proposed order, a period of public comment was opened, and 13 comments were received. Commenters expressed support for the proposed reclassification, noting the serious consequences of misdiagnosed influenza. Some comments expressed concern over the inclusion of two different comparator methods, which could lead users to make false assumptions about test performance and could also drive manufacturers to select data for submission using only the comparison method that meets minimum performance standards. The inclusion of two methods would also prevent the direct comparison of data from manufacturers who used different comparators. The FDA acknowledged these concerns but maintained the inclusion of both reference methods. A summary of public comments and FDA responses can be found in the Federal Register (28).

SCHEDULE

The FDA published the final order to reclassify RIDTs on 12 January 2017, effective 13 February 2017. Going forward, manufacturers of any new or modified RIDT must obtain 510(k) clearance and demonstrate that their product complies with the new special controls before they can be marketed.

Manufacturers of devices that had been legally marketed prior to 13 February 2017 were given a period of 1 year from the published order to comply with the special controls (compliance date, 12 January 2018). Products that already met the minimum performance standards did not have to be altered but had to be tested against the CDC panel of contemporary strains, and a written plan had to be developed for a public health emergency. Manufacturers of products that did not meet the minimum performance standards were required to modify their assays and submit a new 510(k) to demonstrate that the modified device is compliant with all of the special controls. The FDA may consider taking action against manufacturers that continue to market noncompliant devices.

CONCLUSIONS AND FUTURE DIRECTIONS

Laboratories, health care systems, and providers that currently use RIDTs should first ascertain whether the product they use meets the minimum performance standards by checking the package insert or visiting the manufacturer's website to access annual analytical reactivity test results. A list of current FDA-cleared RIDTs is also available at https://www.cdc.gov/flu/professionals/diagnosis/table-ridt.html.

The FDA required manufacturers of noncompliant devices to stop marketing them after 12 January 2018. Testing facilities that purchased noncompliant RIDTs before 12 January 2018 may continue to use those kits until they expire. Testing facilities that intend to continue using RIDTs can have some assurance that RIDTs marketed after this date comply with the special controls designed to mitigate their risks. However, they should be aware that more sensitive molecular methods for influenza diagnosis are also available.

The FDA reclassification of RIDTs will significantly reshape the influenza testing landscape, effectively leading to the discontinuation of poorly performing RIDTs and promoting the use of influenza tests with demonstrated better diagnostic performance. This landmark achievement in the regulation of diagnostic devices for infectious diseases will, we hope, set a precedent for routine performance evaluation of diagnostic devices after FDA clearance or approval, with potentially significant consequences for tests with poor clinical performance.

ACKNOWLEDGMENT

We sincerely thank Stephen Lindstrom for helpful discussions and editorial suggestions.

REFERENCES

- 1. Grijalva CG, Nuorti JP, Griffin MR. 2009. Antibiotic prescription rates for acute respiratory tract infections in US ambulatory settings. JAMA 302:758–766. doi: 10.1001/jama.2009.1163.

- 2. Budnitz DS, Pollock DA, Weidenbach KN, Mendelsohn AB, Schroeder TJ, Annest JL. 2006. National surveillance of emergency department visits for outpatient adverse drug events. JAMA 296:1858–1866. doi: 10.1001/jama.296.15.1858.

- 3. Green DA, Hitoaliaj L, Kotansky B, Campbell SM, Peaper DR. 2016. Clinical utility of on-demand multiplex respiratory pathogen testing among adult outpatients. J Clin Microbiol 54:2950–2955. doi: 10.1128/JCM.01579-16.

- 4. Binnicker MJ, Espy MJ, Irish CL, Vetter EA. 2015. Direct detection of influenza A and B viruses in less than 20 minutes using a commercially available rapid PCR assay. J Clin Microbiol 53:2353–2354. doi: 10.1128/JCM.00791-15.

- 5. Hazelton B, Gray T, Ho J, Ratnamohan VM, Dwyer DE, Kok J. 2015. Detection of influenza A and B with the Alere i Influenza A & B: a novel isothermal nucleic acid amplification assay. Influenza Other Respir Viruses 9:151–154. doi: 10.1111/irv.12303.

- 6. Klungthong C, Chinnawirotpisan P, Hussem K, Phonpakobsin T, Manasatienkij W, Ajariyakhajorn C, Rungrojcharoenkit K, Gibbons RV, Jarman RG. 2010. The impact of primer and probe-template mismatches on the sensitivity of pandemic influenza A/H1N1/2009 virus detection by real-time RT-PCR. J Clin Virol 48:91–95. doi: 10.1016/j.jcv.2010.03.012.

- 7. Ginocchio C, McAdam AJ. 2011. Current best practices for respiratory virus testing. J Clin Microbiol 49:S44–48. doi: 10.1128/JCM.00698-11.

- 8. Sakai-Tagawa Y, Ozawa M, Tamura D, Le M, Nidom CA, Sugaya N, Kawaoka Y. 2010. Sensitivity of influenza rapid diagnostic tests to H5N1 and 2009 pandemic H1N1 viruses. J Clin Microbiol 48:2872–2877. doi: 10.1128/JCM.00439-10.

- 9. Agoritsas K, Mack K, Bonsu BK, Goodman D, Salamon D, Marcon MJ. 2006. Evaluation of the Quidel QuickVue test for detection of influenza A and B viruses in the pediatric emergency medicine setting by use of three specimen collection methods. J Clin Microbiol 44:2638–2641. doi: 10.1128/JCM.02644-05.

- 10. Ginocchio CC, Zhang F, Manji R, Arora S, Bornfreund M, Falk L, Lotlikar M, Kowerska M, Becker G, Korologos D, de Geronimo M, Crawford JM. 2009. Evaluation of multiple test methods for the detection of the novel 2009 influenza A (H1N1) during the New York City outbreak. J Clin Virol 45:191–195. doi: 10.1016/j.jcv.2009.06.005.

- 11. Uyeki TM, Prasad R, Vukotich C, Stebbins S, Rinaldo CR, Ferng YH, Morse SS, Larson EL, Aiello AE, Davis B, Monto AS. 2009. Low sensitivity of rapid diagnostic test for influenza. Clin Infect Dis 48:e89–e92. doi: 10.1086/597828.

- 12. Centers for Disease Control and Prevention. 2009. Evaluation of rapid influenza diagnostic tests for detection of novel influenza A (H1N1) Virus–United States, 2009. MMWR Morb Mortal Wkly Rep 58:826–829.

- 13. Peters TR, Blakeney E, Vannoy L, Poehling KA. 2013. Evaluation of the limit of detection of the BD Veritor system flu A+B test and two rapid influenza detection tests for influenza virus. Diagn Microbiol Infect Dis 75:200–202. doi: 10.1016/j.diagmicrobio.2012.11.004.

- 14. Lee CK, Cho CH, Woo MK, Nyeck AE, Lim CS, Kim WJ. 2012. Evaluation of Sofia fluorescent immunoassay analyzer for influenza A/B virus. J Clin Virol 55:239–243. doi: 10.1016/j.jcv.2012.07.008.

- 15. Hassan F, Nguyen A, Formanek A, Bell JJ, Selvarangan R. 2014. Comparison of the BD Veritor system for Flu A+B with the Alere BinaxNOW influenza A&B card for detection of influenza A and B viruses in respiratory specimens from pediatric patients. J Clin Microbiol 52:906–910. doi: 10.1128/JCM.02484-13.

- 16. Centers for Disease Control and Prevention. 2012. Evaluation of rapid influenza diagnostic tests for influenza A (H3N2)v virus and updated case count–United States, 2012. MMWR Morb Mortal Wkly Rep 61:619–621.

- 17. Baas C, Barr IG, Fouchier RA, Kelso A, Hurt AC. 2013. A comparison of rapid point-of-care tests for the detection of avian influenza A(H7N9) virus, 2013. Euro Surveill 18:pii=20487. doi: 10.2807/ese.18.21.20487-en.

- 18. Babin SM, Hsieh YH, Rothman RE, Gaydos CA. 2011. A meta-analysis of point-of-care laboratory tests in the diagnosis of novel 2009 swine-lineage pandemic influenza A (H1N1). Diagn Microbiol Infect Dis 69:410–418. doi: 10.1016/j.diagmicrobio.2010.10.009.

- 19. Chu H, Lofgren ET, Halloran ME, Kuan PF, Hudgens M, Cole SR. 2012. Performance of rapid influenza H1N1 diagnostic tests: a meta-analysis. Influenza Other Respir Viruses 6:80–86. doi: 10.1111/j.1750-2659.2011.00284.x.

- 20. Chartrand C, Leeflang MM, Minion J, Brewer T, Pai M. 2012. Accuracy of rapid influenza diagnostic tests: a meta-analysis. Ann Intern Med 156:500–511. doi: 10.7326/0003-4819-156-7-201204030-00403.

- 21. Centers for Disease Control and Prevention. Rapid diagnostic testing for influenza: information for clinical laboratory directors. Centers for Disease Control and Prevention, Atlanta, GA. https://www.cdc.gov/flu/professionals/diagnosis/rapidlab.htm.

- 22. Landry ML. 2011. Diagnostic tests for influenza infection. Curr Opin Pediatr 23:91–97. doi: 10.1097/MOP.0b013e328341ebd9.

- 23. Centers for Disease Control and Prevention. 2012. Evaluation of 11 commercially available rapid influenza diagnostic tests–United States, 2011–2012. MMWR Morb Mortal Wkly Rep 61:873–876.

- 24. Balish A, Garten R, Klimov A, Villanueva J. 2013. Analytical detection of influenza A(H3N2)v and other A variant viruses from the USA by rapid influenza diagnostic tests. Influenza Other Respir Viruses 7:491–496. doi: 10.1111/irv.12017.

- 25. McGeer A, Green KA, Plevneshi A, Shigayeva A, Siddiqi N, Raboud J, Low DE, Toronto Invasive Bacterial Diseases Network. 2007. Antiviral therapy and outcomes of influenza requiring hospitalization in Ontario, Canada. Clin Infect Dis 45:1568–1575. doi: 10.1086/523584.

- 26. Lee N, Cockram CS, Chan PK, Hui DS, Choi KW, Sung JJ. 2008. Antiviral treatment for patients hospitalized with severe influenza infection may affect clinical outcomes. Clin Infect Dis 46:1323–1324. doi: 10.1086/533477.

- 27. US Food and Drug Administration. Executive summary: proposed reclassification of the rapid influenza detection tests, abstr CDRH. Microbiology Devices Advisory Committee Meeting, 13 June 2013, Gaithersburg, MD.

- 28. US Food and Drug Administration. 2017. Microbiology devices; reclassification of influenza virus antigen detection test systems intended for use directly with clinical specimens. FDA-2014-N-0440. 21 CFR Part 866. US Food and Drug Administration, Silver Spring, MD.