Abstract

Abundant accumulation of digital histopathological images has led to increased demand for their analysis, such as computer-aided diagnosis using machine learning techniques. However, digital pathological images and related tasks have some issues that must be considered. In this mini-review, we introduce applications of digital pathological image analysis using machine learning algorithms, address some problems specific to such analysis, and propose possible solutions.

Keywords: Histopathology, Deep learning, Machine learning, Whole slide images, Computer assisted diagnosis, Digital image analysis

1. Introduction

Pathological diagnosis has traditionally been performed by a human pathologist observing a stained specimen on a glass slide under a microscope. In recent years, attempts have been made to capture the entire slide with a scanner and save it as a digital image (whole slide image, WSI) [1]. As a large number of WSIs have accumulated, attempts have been made to analyze them with digital image analysis based on machine learning algorithms to assist tasks including diagnosis.

Digital pathological image analysis often builds on general image recognition technology (e.g., facial recognition). However, because digital pathological images and tasks have some unique characteristics, special processing techniques are often required. In this review, we describe applications of digital pathological image analysis using machine learning algorithms, the problems specific to such analysis, and possible solutions. Several recently published reviews discuss histopathological image analysis, including its history and the details of general machine learning algorithms [[2], [3], [4], [5], [6], [7]]; in this review, we provide a more pathology-oriented point of view.

Since the overwhelming victory of the team using deep learning at the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2012 [8], most image recognition techniques have been replaced by deep learning. This is also true for pathological image analysis [[9], [10], [11]]. Therefore, many techniques introduced in this review are related to deep learning, although most of them are also applicable to other machine learning algorithms.

2. Machine Learning Methods

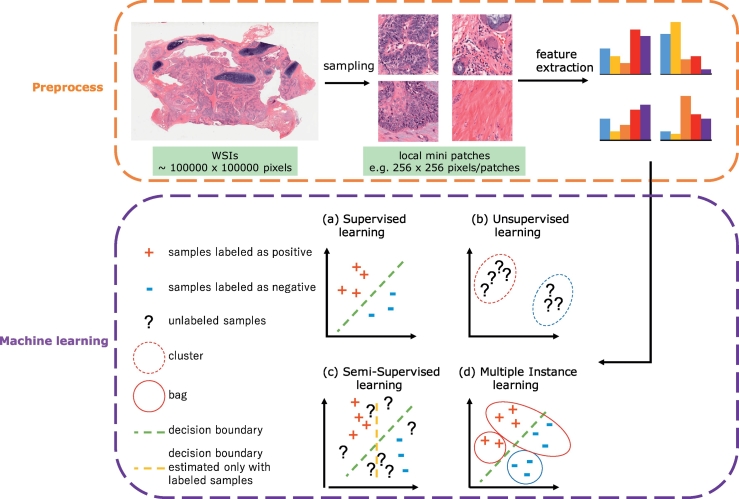

Fig. 1 shows typical steps for histopathological image analysis using machine learning. Before machine learning algorithms are applied, some pre-processing should be performed. For example, when cancer regions are detected in a WSI, small local patches of around 256 × 256 pixels are sampled from the large WSI. Feature extraction and classification into cancer and non-cancer are then performed on each local patch. The goal of feature extraction is to extract information useful for the machine learning task. Various local features such as the gray level co-occurrence matrix (GLCM) and local binary patterns (LBP) have been used for histopathological image analysis, whereas deep learning algorithms such as convolutional neural networks (CNNs) [9,10,[12], [13], [14]] incorporate feature extraction into the analysis itself, starting from raw pixels. In deep learning, features and classifiers are optimized simultaneously, and the learned features often outperform traditional features in histopathological image analysis.

Fig. 1.

Typical steps for machine learning in digital pathological image analysis. After preprocessing whole slide images, various types of machine learning algorithms could be applied including (a) supervised learning (see Section 2), (b) unsupervised learning (see Section 2), (c) semi-supervised learning (see Section 4.2.2), and (d) multiple instance learning (see Section 4.2.2). The histopathological images were obtained from The Cancer Genome Atlas (TCGA) [33].
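The patch sampling step described at the start of this section can be sketched as a generator of patch coordinates. This is a minimal sketch assuming square patches taken at a uniform stride over a slide of known size; the function name `tile_coordinates` is hypothetical, and actually reading the pixels would be delegated to a WSI reader such as OpenSlide (its `read_region` method).

```python
from typing import Iterator, Tuple


def tile_coordinates(width: int, height: int, patch: int = 256,
                     stride: int = 256) -> Iterator[Tuple[int, int]]:
    """Yield the top-left (x, y) corner of every patch x patch tile that fits
    entirely inside a width x height image. stride == patch gives
    non-overlapping tiles; a smaller stride gives overlapping ones."""
    for y in range(0, height - patch + 1, stride):
        for x in range(0, width - patch + 1, stride):
            yield x, y


# A hypothetical 1024 x 768 region tiled into 256 x 256 patches.
coords = list(tile_coordinates(1024, 768))
print(len(coords))  # 12 (4 columns x 3 rows)
```

In practice, patches that are mostly background glass are usually discarded before feature extraction; that filtering step is omitted here.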

Machine learning techniques often used in digital pathology image analysis are divided into supervised learning and unsupervised learning. The goal of supervised learning is to infer, from training data, a function that maps input images to their appropriate labels (e.g., cancer). Labels are associated with a WSI or with an object in a WSI. Algorithms for supervised learning include support vector machines, random forests, and convolutional neural networks. In contrast, the goal of unsupervised learning is to infer a function that describes hidden structure in unlabeled images. Its tasks include clustering, anomaly detection, and dimensionality reduction, and its algorithms include k-means, autoencoders, and principal component analysis. Derivatives of these two paradigms, such as semi-supervised learning and multiple instance learning, are described in Section 4.2.2.

3. Machine Learning Application in Digital Pathology

3.1. Computer-assisted Diagnosis

The most actively researched task in digital pathological image analysis is computer-assisted diagnosis (CAD), which is the basic task of the pathologist. The diagnostic process maps a WSI or multiple WSIs to one of the disease categories, so it is essentially a supervised learning task. Because the errors made by a machine learning system reportedly differ from those made by a human pathologist [14], classification accuracy could be improved by combining the two through a CAD system. CAD may also reduce variability in interpretation and prevent oversights, because it examines every pixel within a WSI.

Other diagnosis-related tasks include detection or segmentation of regions of interest (ROIs) such as tumor regions in WSIs [15,16], scoring of immunostaining [11,17], cancer staging [14,18], mitosis detection [19,20], gland segmentation [21], [22], [23], and detection and quantification of vascular invasion [24].

3.2. Content Based Image Retrieval

Content Based Image Retrieval (CBIR) retrieves images similar to a query image. In digital pathology, CBIR systems are useful in many situations, particularly in diagnosis, education, and research [25], [26], [27], [28], [29], [30], [31], [32]. For example, students and beginner pathologists can use CBIR systems for educational purposes to retrieve relevant cases or histopathological images of tissues. Such systems are also helpful to experienced pathologists, particularly when diagnosing rare cases.

Because CBIR does not necessarily require label information, unsupervised learning can be used [29]. When label information is available, supervised learning approaches could learn a better similarity measure than unsupervised approaches [27,28], because the appropriate notion of similarity between histopathological images may differ depending on how it is defined (e.g., the purpose of the search). However, preparing a sufficient amount of labeled data can be a serious problem, as described in Section 4.2.

In CBIR, not only accuracy but also fast search for similar images among numerous images is required. Therefore, techniques for dimensionality reduction of image features, such as principal component analysis, and for fast approximate nearest neighbor search, such as kd-trees and hashing [31], are utilized.
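A minimal sketch of the speed-oriented pipeline above, assuming that each image has already been summarized as a fixed-length feature vector (random numbers stand in for real features here): principal component analysis reduces the dimensionality, random-hyperplane hashing (one kind of hashing, not necessarily the method of [31]) converts each reduced vector to a short binary code, and only a short list of candidates with small Hamming distance is re-ranked exactly. All function names and sizes are illustrative assumptions.

```python
import numpy as np


def pca_fit(features: np.ndarray, n_components: int):
    """Return the mean feature vector and the top principal axes (as rows)."""
    mean = features.mean(axis=0)
    # Rows of vt are principal directions ordered by explained variance.
    _, _, vt = np.linalg.svd(features - mean, full_matrices=False)
    return mean, vt[:n_components]


def hash_codes(reduced: np.ndarray, planes: np.ndarray) -> np.ndarray:
    """Random-hyperplane hashing: one bit per plane, set when the projection is positive."""
    return (reduced @ planes.T > 0).astype(np.uint8)


rng = np.random.default_rng(0)
database = rng.normal(size=(1000, 128))        # stand-in image features
mean, axes = pca_fit(database, n_components=16)
db_reduced = (database - mean) @ axes.T        # 1000 x 16
planes = rng.normal(size=(32, 16))             # 32-bit binary codes
db_codes = hash_codes(db_reduced, planes)

# Query: a near-duplicate of database item 42.
query = database[42] + 0.01 * rng.normal(size=128)
q_reduced = (query - mean) @ axes.T
q_code = hash_codes(q_reduced[None, :], planes)[0]

# Cheap Hamming filter, then exact re-ranking of a short candidate list.
hamming = (db_codes != q_code).sum(axis=1)
candidates = np.argsort(hamming, kind="stable")[:20]
dists = np.linalg.norm(db_reduced[candidates] - q_reduced, axis=1)
best = int(candidates[np.argmin(dists)])
print(best)
```

A kd-tree over the reduced vectors (for example `scipy.spatial.cKDTree`) would be an alternative to hashing; hashing trades some accuracy for compact codes and very fast comparisons.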

3.3. Discovering New Clinicopathological Relationships

Historically, many important discoveries concerning diseases such as tumors and infectious diseases have been made by pathologists and researchers who carefully and closely observed pathological specimens. For example, H. pylori was discovered by a pathologist examining the gastric mucosa of patients with gastritis [32]. Attempts have also been made to correlate the morphological features of cancers with their clinical behavior. For example, tumor grading is important in planning treatment and determining a patient's prognosis for certain types of cancer, such as soft tissue sarcoma, primary brain tumors, and breast and prostate cancer.

Meanwhile, thanks to recent progress in the digitization of medical information and advances in genome analysis technology, large amounts of digital information such as genome information, digital pathological images, and MRI and CT images have become available [33]. By analyzing the relationships among these data, new clinicopathological relationships, for example between the morphological characteristics and the somatic mutations of a cancer, can be found [34,35]. However, because the amount of data is enormous, it is not realistic for pathologists and researchers to analyze all of these relationships manually by looking at specimens. This is where machine learning comes in. For example, Beck et al. extracted texture information from pathological images of breast cancer, analyzed it with L1-regularized logistic regression, and showed that the histology of the stroma correlates with prognosis in breast cancer [36]. Other studies include prognosis prediction from histopathological images of cancer [37], prediction of somatic mutations [13], and discovery of new gene variants related to autoimmune thyroiditis based on image QTL (quantitative trait loci) [38].
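To make the L1-regularized logistic regression mentioned above concrete, the following sketch fits such a model by proximal gradient descent on synthetic features. It is a generic solver, not Beck et al.'s pipeline; the features, labels, and hyperparameters (`lam`, `lr`, `n_iter`) are assumptions. The L1 penalty drives the weights of uninformative features exactly to zero, which is what makes the selected features interpretable as candidate morphological correlates of an outcome.

```python
import numpy as np


def soft_threshold(w: np.ndarray, t: float) -> np.ndarray:
    """Proximal operator of t * ||w||_1: shrink each weight toward zero by t."""
    return np.sign(w) * np.maximum(np.abs(w) - t, 0.0)


def l1_logistic_regression(X, y, lam=0.1, lr=0.1, n_iter=2000):
    """Minimize mean logistic loss + lam * ||w||_1 by proximal gradient descent.
    X: (n, d) image features; y: (n,) labels in {0, 1} (e.g., poor vs. good prognosis)."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))          # predicted probabilities
        grad_w = X.T @ (p - y) / n
        grad_b = np.mean(p - y)
        w = soft_threshold(w - lr * grad_w, lr * lam)   # L1 step on weights only
        b -= lr * grad_b                                # intercept is not penalized
    return w, b


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 20))                  # 20 synthetic "texture" features
true_w = np.zeros(20)
true_w[:3] = [2.0, -2.0, 1.5]                   # only the first 3 are informative
y = (X @ true_w + 0.5 * rng.normal(size=200) > 0).astype(float)
w, b = l1_logistic_regression(X, y)
print(np.nonzero(w)[0])                         # indices of the selected features
```

Libraries offer the same model; for instance, scikit-learn's `LogisticRegression` with `penalty="l1"` and `solver="liblinear"`.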

4. Problems Specific to Histopathological Image Analysis

In this section, we describe unique characteristics of pathological image analysis and computational methods to treat them. Table 1 presents an overview of papers dealing with the problems and the solutions.

Table 1.

Overview of papers dealing with problems and solutions for histopathological image analysis.

| Problem / solution | Reference |
|---|---|
| Problem: Very large image size | |
| Case-level classification summarizing patch- or object-level classification | Markov Random Field [17], Bag of Words of local structure [18] and random forest [14,39,40] |
| Problem: Insufficient labeled images | |
| GUI tools | Web server [41,42] |
| Tracking pathologists' behavior | Eye tracking [43], mouse tracking [44] and viewport tracking [45] |
| Active learning | Uncertainty sampling [42], Query-by-Committee [46], variance reduction [47] and hypothesis space reduction [48] |
| Multiple instance learning | Boosting-based [49,50], deep weak supervision [51] and structured support vector machines (SVM) [52] |
| Semi-supervised learning | Manifold learning [30] and SVM [53] |
| Transfer learning | Feature extraction [54], fine-tuning [16,55,56] |
| Problem: Different levels of magnification result in different levels of information | |
| Multiscale analysis | CNN [57], dictionary learning [58] and texture features [59] |
| Problem: WSI as orderless texture-like image | |
| Texture features | Traditional textures [[60], [61], [62], [63]] and CNN-based textures [64] |
| Problem: Color variation and artifacts | |
| Removal of color variation effect | Color normalization [65], [66], [67], [68] and color augmentation [69,70] |
| Artifact detection | Blur [71,72] and tissue folds [73,74] |

4.1. Very Large Image Size

When images of objects such as dogs or houses are classified using deep learning, small images such as 256 × 256 pixels are often used as input. Larger images usually need to be resized to a size that is still sufficient for discrimination, because increasing the input size increases the number of parameters to be estimated, the required computational power, and memory. In contrast, a WSI contains many cells and can consist of as many as tens of billions of pixels, which is usually hard to analyze as is. However, resizing an entire WSI to a small size such as 256 × 256 pixels would lose cellular-level information, resulting in a marked decrease in classification accuracy. Therefore, the entire WSI is commonly divided into partial regions of about 256 × 256 pixels (“patches”), and each patch is analyzed independently, for example to detect ROIs. Thanks to advances in computational power and memory, patch sizes are increasing (e.g., 960 × 960 pixels), which is expected to contribute to better accuracy. There is still room for improvement in how the results from individual patches are integrated. For example, because an entire WSI can contain hundreds of thousands of patches, false positives are highly likely to appear even if individual patches are classified accurately. One possible solution is regional averaging of the patch decisions, so that a region is classified as an ROI only when the ROI extends over multiple patches. However, this approach may suffer from false negatives, missing small ROIs such as isolated tumor cells [39].
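The regional averaging idea can be sketched on a grid of patch-level tumor probabilities: each patch's probability is replaced by the mean over its k × k neighborhood before thresholding. This is an illustrative sketch; the neighborhood size `k`, the 0.5 threshold, and the toy grid are assumptions, not values from [39].

```python
import numpy as np


def regional_average(prob_grid: np.ndarray, k: int = 3) -> np.ndarray:
    """Mean of each patch's tumor probability over its k x k neighborhood.
    prob_grid[i, j] is the classifier output for the patch in row i, column j.
    At the slide border, only neighbors that exist are averaged."""
    r = k // 2
    padded = np.pad(prob_grid.astype(float), r, mode="constant", constant_values=0.0)
    valid = np.pad(np.ones(prob_grid.shape), r, mode="constant", constant_values=0.0)
    h, w = prob_grid.shape
    total = np.zeros((h, w))
    count = np.zeros((h, w))
    for di in range(k):
        for dj in range(k):
            total += padded[di:di + h, dj:dj + w]
            count += valid[di:di + h, dj:dj + w]
    return total / count


grid = np.zeros((6, 6))
grid[1, 4] = 0.95            # isolated high-probability patch (possible false positive)
grid[3:6, 0:3] = 0.9         # contiguous block of high-probability patches
smoothed = regional_average(grid)
roi = smoothed > 0.5
print(roi[1, 4], roi[4, 1])  # False True
```

The same smoothing that removes the isolated false positive would also remove a genuine one-patch lesion, which is the false-negative trade-off described above.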

In some applications, such as IHC scoring, staging of lymph node metastasis for specimens or patients, and grading of prostate cancer by the Gleason score of multiple regions within one slide, more sophisticated algorithms are required to integrate patch-level or object-level decisions [14,17,18,39,40,75]. For example, for pN-staging of metastatic breast cancer, one of the tasks in Camelyon17, multiple participating teams, including ours, applied random forest classifiers to features derived from pixel- or patch-level probabilities estimated by deep learning, such as the estimated tumor size [39].
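One way to go from a patch probability map (heatmap) to a slide-level decision is to summarize the map as a small feature vector and train a classifier, such as a random forest, on those vectors. The sketch below computes three hypothetical features (maximum probability, fraction of patches called tumor, and area of the largest connected tumor region as a crude size proxy); it illustrates the idea and is not the feature set used in [39].

```python
from collections import deque

import numpy as np


def largest_component_area(mask: np.ndarray) -> int:
    """Size (in grid cells) of the largest 4-connected True region, found by BFS."""
    seen = np.zeros(mask.shape, dtype=bool)
    h, w = mask.shape
    best = 0
    for i in range(h):
        for j in range(w):
            if mask[i, j] and not seen[i, j]:
                seen[i, j] = True
                queue, area = deque([(i, j)]), 0
                while queue:
                    y, x = queue.popleft()
                    area += 1
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                            seen[ny, nx] = True
                            queue.append((ny, nx))
                best = max(best, area)
    return best


def slide_features(heatmap: np.ndarray, threshold: float = 0.5) -> list:
    """Hypothetical slide-level feature vector summarizing a patch probability map."""
    mask = heatmap > threshold
    return [
        float(heatmap.max()),          # most confident tumor patch
        float(mask.mean()),            # fraction of patches called tumor
        largest_component_area(mask),  # proxy for the size of the largest lesion
    ]


heatmap = np.zeros((5, 5))
heatmap[0:2, 0:2] = 0.8   # a 2 x 2 tumor region
heatmap[4, 4] = 0.9       # a single isolated positive patch
print(slide_features(heatmap))
```

Stacking such vectors for many slides gives a training matrix for an off-the-shelf classifier, for example scikit-learn's `RandomForestClassifier`; converting the region area to a physical size would additionally require the patch size in micrometers.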

4.2. Insufficient Labeled Images

Probably the biggest problem in pathological image analysis using machine learning is that only a small amount of training data is available. A key to the success of deep learning in general image recognition tasks is that training data are extremely abundant. Although most tasks in digital pathology, such as diagnosis, require label information at the patch or pixel level (e.g., inside or outside the boundary of cancerous regions), most WSIs carry at most case-level labels (e.g., diagnosis). Label information for general image analysis can easily be retrieved from the internet, and crowdsourced labeling is also possible because anyone can identify everyday objects and perform the labeling work. However, only pathologists can label pathological images accurately, and labeling at the regional level in huge WSIs requires a great deal of labor.

It is possible to reuse public ready-to-analyze data as training data, such as ImageNet [76] for natural images and the International Skin Imaging Collaboration [77] for macroscopic diagnosis of skin. In digital pathology, there are some public datasets containing hand-annotated histopathological images, as summarized in Tables 2 and 3. They could be useful if the purpose of the analysis, the slide condition (e.g., stain), and the image condition (e.g., magnification level and image resolution) are similar. However, because each of these datasets focuses on a specific disease or cell type, many tasks are not covered. There are also several large-scale histopathological image databases containing high-resolution WSIs: The Cancer Genome Atlas (TCGA) [78] contains over 10,000 WSIs from various cancer types, and Genotype-Tissue Expression (GTEx) [79,80] contains over 20,000 WSIs from various tissues. These databases may serve as training data for various tasks. Furthermore, both TCGA and GTEx provide genomic profiles, which could be used to investigate relationships between genotype and morphology. The problem is that the WSIs in these repositories have only case-level labels, so using them as training data requires some preprocessing or specialized machine learning algorithms that handle case-level labels.

Table 2.

Downloadable WSI database.

| Dataset or author's name | # slides or patches | Stain | Disease | Additional data |
|---|---|---|---|---|
| TCGA [33,78] | 18,462 | H&E | Cancer | Genome/transcriptome/epigenome |
| GTEx [79,80] | 25,380 | H&E | Normal | Transcriptome |
| TMAD [81,82] | 3726 | H&E/IHC | | IHC score |
| TUPAC16 [83] | 821 from TCGA | H&E | Breast cancer | Proliferation score for 500 WSIs, position for mitosis for 73 WSIs, ROI for 148 cases |
| Camelyon17 [40] | 1000 | H&E | Breast cancer (lymph node metastasis) | Mask for cancer region (in 500 WSIs with 5 WSIs per patient) |
| Köbel et al. [52,84] | 80 | H&E | Ovarian carcinoma | |
| KIMIA Path24 [85,86] | 24 | H&E/IHC and others | Various tissues | |

Table 3.

Hand annotated histopathological images publicly available.

| Dataset or paper | Image size (px) | # images | Stain | Disease | Additional data | Potential usage |
|---|---|---|---|---|---|---|
| KIMIA960 [87,88] | 308 × 168 | 960 | H&E/IHC | Various tissues | | Disease classification |
| Bio-segmentation [89,90] | 896 × 768, 768 × 512 | 58 | H&E | Breast cancer | | Disease classification |
| Bioimaging challenge 2015 [91,92] | 2040 × 1536 | 269 | H&E | Breast cancer | | Disease classification |
| GlaS [23,93] | 574–775 × 430–522 | 165 | H&E | Colorectal cancer | Mask for gland area | Gland segmentation |
| BreakHis [15,94] | 700 × 460 | 7909 | H&E | Breast cancer | | Disease classification |
| Kather et al. [88,95] | 1000 × 1000 | 100 | IHC | Colorectal cancer | Blood vessel count | Blood vessel detection |
| MITOS-ATYPIA-14 [96] | 1539 × 1376, 1663 × 1485 | 4240 | H&E | Breast cancer | Coordinates of mitoses with a confidence degree; six criteria to evaluate nuclear atypia | Mitosis detection, nuclear atypia classification |
| Kumar et al. [97,98] | 1000 × 1000 | 30 | H&E | Various cancers | Coordinates of annotated nuclear boundaries | Nuclear segmentation |
| MITOS 2012 [20,99] | 2084 × 2084, 2252 × 2250 | 100 | H&E | Breast cancer | Coordinates of mitoses | Mitosis detection |
| Janowczyk et al. [100,101] | 1388 × 1040 | 374 | H&E | Lymphoma | None | Disease classification |
| Janowczyk et al. [100,101] | 2000 × 2000 | 311 | H&E | Breast cancer | Coordinates of mitoses | Mitosis detection |
| Janowczyk et al. [100,101] | 100 × 100 | 100 | H&E | Breast cancer | Coordinates of lymphocytes | Lymphocyte detection |
| Janowczyk et al. [100,101] | 1000 × 1000 | 42 | H&E | Breast cancer | Mask for epithelium | Epithelium segmentation |
| Janowczyk et al. [100,101] | 2000 × 2000 | 143 | H&E | Breast cancer | Mask for nuclei | Nuclear segmentation |
| Janowczyk et al. [100,101] | 775 × 522 | 85 | H&E | Colorectal cancer | Mask for gland area | Gland segmentation |
| Janowczyk et al. [100,101] | 50 × 50 | 277,524 | H&E | Breast cancer | None | Tumor detection |
| Gertych et al. [22] | 1200 × 1200 | 210 | H&E | Prostate cancer | Mask for gland area | Gland segmentation |
| Ma et al. [102] | 1040 × 1392 | 81 | IHC | Breast cancer | | Tumor-infiltrating lymphocyte (TIL) analysis |
| Linder et al. [63,103] | 93–2372 × 94–2373 | 1377 | IHC | Colorectal cancer | Mask for epithelium and stroma | Segmentation of epithelium and stroma |
| Xu et al. [54] | Various sizes | 717 | H&E | Colon cancer | | |
| Xu et al. [54] | 1280 × 800 | 300 | H&E | Colon cancer | Mask for colon cancer | Segmentation |

Many studies have attempted to solve this problem. Most of the approaches fall into one of the following categories: 1) efficient increase of labeled data, 2) utilization of weak labels or unlabeled data, or 3) utilization of models or parameters learned for other tasks.

4.2.1. Efficient Labeling

One way to increase training data is to reduce the time pathologists need to specify ROIs in a WSI. Easy-to-use GUI tools help pathologists label more samples in a shorter time [41,42]. For example, Cytomine [41] not only allows pathologists to outline ROIs in WSIs with ellipses, rectangles, polygons, or freehand drawings, but also applies content-based image retrieval algorithms to speed up annotation. Another interesting idea is to localize ROIs automatically during routine diagnosis by tracking pathologists' behavior, so that the time already spent on diagnosis doubles as labeling time. This approach tracks pathologists' eye movements [43], mouse cursor positions [44], and changes in viewport [45]. However, accurately localizing ROIs from such tracking data is not always easy, because pathologists do not always spend their time looking at ROIs, and the boundary information obtained by these approaches tends to be less precise.
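Viewport tracking can be turned into candidate ROIs by accumulating how long each part of the slide was on screen. The sketch below assumes a hypothetical log of viewport rectangles with durations, spreads each record's time evenly over the grid cells it covers (so zoomed-in views count more per cell than whole-slide overviews), and keeps cells whose accumulated time is at least half the maximum. The log format, cell size, and threshold are all assumptions, not details of [45].

```python
import numpy as np


def dwell_heatmap(viewports, slide_w: int, slide_h: int, cell: int = 1024) -> np.ndarray:
    """Accumulate viewing time per grid cell of a slide.

    viewports: iterable of (x0, y0, x1, y1, seconds) records, with x1/y1
    exclusive, in level-0 pixel coordinates (a hypothetical log format).
    Each record's time is spread evenly over the cells it covers, so a
    zoomed-in view weighs more per cell than a whole-slide overview."""
    rows, cols = -(-slide_h // cell), -(-slide_w // cell)   # ceiling division
    grid = np.zeros((rows, cols))
    for x0, y0, x1, y1, seconds in viewports:
        c0, c1 = x0 // cell, min((x1 - 1) // cell, cols - 1)
        r0, r1 = y0 // cell, min((y1 - 1) // cell, rows - 1)
        n_cells = (r1 - r0 + 1) * (c1 - c0 + 1)
        grid[r0:r1 + 1, c0:c1 + 1] += seconds / n_cells
    return grid


log = [
    (0, 0, 8192, 8192, 5.0),         # 5 s on an overview of the whole slide
    (2048, 1024, 3072, 2048, 30.0),  # 30 s zoomed in on one region
]
heat = dwell_heatmap(log, slide_w=8192, slide_h=8192)
candidates = np.argwhere(heat >= 0.5 * heat.max())   # (row, col) of candidate ROI cells
print(candidates.tolist())  # [[1, 2]]
```

As the text notes, such candidates are coarse: they indicate where attention went, not where lesion boundaries lie.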

Another approach that utilizes machine learning is active learning [42], [46], [47], [48], [49], [104], [105]. It is generally effective when the cost of acquiring labeled data is high, as with pathological images. Active learning is used in supervised learning: it automatically chooses the most valuable unlabeled sample (i.e., the one expected to improve the classifier's performance most when labeled correctly and added to the training data) and displays it to pathologists for labeling. Because this approach tends to reach a given discrimination performance with fewer labeled images, the total labeling time needed for that performance is shortened [46]. Many criteria, such as uncertainty sampling [42], Query-by-Committee [46], variance reduction [47], and hypothesis space reduction [48], have been applied to select valuable unlabeled samples.
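Uncertainty sampling, one of the criteria listed above, can be sketched in a few lines: given the current classifier's predicted class probabilities for unlabeled patches, pick the patches whose predictions have the highest entropy. The function names and batch size are illustrative assumptions; in a full loop, the selected patches would be labeled by a pathologist and added to the training set, and the classifier retrained before the next round.

```python
import numpy as np


def entropy(probs: np.ndarray) -> np.ndarray:
    """Predictive entropy of each row of class probabilities."""
    p = np.clip(probs, 1e-12, 1.0)
    return -(p * np.log(p)).sum(axis=1)


def select_for_labeling(probs: np.ndarray, batch: int) -> np.ndarray:
    """Uncertainty sampling: indices of the `batch` unlabeled samples whose
    predicted class distribution is most uncertain (highest entropy)."""
    return np.argsort(-entropy(probs), kind="stable")[:batch]


# Predicted (non-cancer, cancer) probabilities for four unlabeled patches.
probs = np.array([[0.99, 0.01],
                  [0.55, 0.45],
                  [0.80, 0.20],
                  [0.50, 0.50]])
print(select_for_labeling(probs, batch=2))  # [3 1]
```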

4.2.2. Incorporating Insufficient Label

Even if the exact position of an ROI in a WSI is not known, information on the presence or absence of the ROI in the WSI may be available from the pathological diagnosis assigned to the WSI or from other WSI-level labels. These so-called weak labels are much easier to obtain than patch-level labels and are often available even when the WSIs carry no further annotation; in this setting, a WSI is regarded as a “bag” of many patches (instances). When diagnosing cancer, a WSI is labeled as cancer if at least one patch contains cancerous tissue, and as normal if none of the patches do. This is a multiple instance learning [50,106] or weakly supervised learning [49,51] problem. In a typical multiple instance learning problem, positive bags contain at least one positive instance, and negative bags contain no positive instances. The aim of multiple instance learning is to predict bag or instance labels from training data that contain only bag labels. Various multiple instance learning methods have been applied to histopathological image analysis, including boosting-based [49], support vector machine-based [52], and deep learning-based [51] approaches.
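Under the standard multiple instance learning assumption described above, a bag score can be obtained by max-pooling the instance (patch) scores, and training compares that bag score with the WSI label. This is a minimal sketch of that loss only, assuming the instance probabilities come from some patch classifier; it is not the specific method of [49], [51], or [52].

```python
import numpy as np


def bag_score(instance_probs: np.ndarray) -> float:
    """Standard MIL assumption: a bag (WSI) is positive if at least one
    instance (patch) is positive, so the bag score is the max instance score."""
    return float(np.max(instance_probs))


def mil_bag_loss(instance_probs: np.ndarray, bag_label: int) -> float:
    """Binary cross-entropy between the max-pooled bag score and the WSI label.
    Only the bag label is used; no patch-level annotation is needed."""
    p = np.clip(bag_score(instance_probs), 1e-7, 1 - 1e-7)
    return float(-(bag_label * np.log(p) + (1 - bag_label) * np.log(1 - p)))


cancer_slide = np.array([0.10, 0.05, 0.92])   # one suspicious patch
normal_slide = np.array([0.10, 0.20, 0.15])
print(bag_score(cancer_slide), mil_bag_loss(cancer_slide, 1))
print(bag_score(normal_slide), mil_bag_loss(normal_slide, 0))
```

With max-pooling, only the highest-scoring patch of each slide receives a learning signal in a given step; other pooling functions spread the signal more widely.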

In contrast, semi-supervised learning [30,53,107,108] utilizes both labeled and unlabeled data, using the unlabeled data to estimate the true distribution of the data. For example, as shown in Fig. 1(c), a decision boundary that takes only the labeled samples into account would form a vertical line, whereas one that considers both labeled and unlabeled samples would form a slanted line, which could be more accurate. Because semi-supervised learning is considered particularly effective when samples of the same class form well-separated clusters, relatively easy problems could be good targets.
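Graph-based label propagation is one concrete semi-supervised method (chosen here for illustration; [30] and [53] use manifold learning and SVMs, respectively): labels spread from the few labeled samples to unlabeled neighbors along a similarity graph until they settle. The sketch assumes a Gaussian affinity with bandwidth `sigma` and uses two synthetic clusters with one labeled sample each.

```python
import numpy as np


def label_propagation(X: np.ndarray, y: np.ndarray, sigma: float = 1.0,
                      n_iter: int = 200) -> np.ndarray:
    """Graph-based semi-supervised learning by iterative label propagation.
    X: (n, d) features of labeled + unlabeled samples.
    y: (n,) integer labels, with -1 marking unlabeled samples.
    Returns a predicted label for every sample."""
    classes = np.unique(y[y >= 0])
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    W = np.exp(-d2 / (2 * sigma ** 2))          # Gaussian affinity between samples
    np.fill_diagonal(W, 0.0)
    P = W / W.sum(axis=1, keepdims=True)        # row-normalized transition matrix
    labeled = y >= 0
    Y0 = (y[:, None] == classes[None, :]).astype(float)  # one-hot; unlabeled rows are 0
    F = Y0.copy()
    for _ in range(n_iter):
        F = P @ F                  # spread label mass to neighbors
        F[labeled] = Y0[labeled]   # clamp the known labels
    return classes[F.argmax(axis=1)]


rng = np.random.default_rng(0)
cluster_a = rng.normal(loc=(0.0, 0.0), scale=0.3, size=(20, 2))
cluster_b = rng.normal(loc=(3.0, 3.0), scale=0.3, size=(20, 2))
X = np.vstack([cluster_a, cluster_b])
y = np.full(40, -1)
y[0], y[20] = 0, 1                 # a single labeled sample per cluster
pred = label_propagation(X, y)
print(pred)
```

scikit-learn ships ready-made variants in `sklearn.semi_supervised` (`LabelPropagation`, `LabelSpreading`).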

4.2.3. Reusing Parameters from Another Task

Supervised learning with too little training data results in poor generalization performance. This is especially true in deep learning, where the number of parameters to be learned is very large. In such cases, instead of learning the entire model from scratch, learning often starts from (a part of) the parameters of a model pre-trained on another, similar task. This approach is called transfer learning. In a CNN, the layers before the final fully connected layers (typically three) are regarded as a feature extractor. The fully connected layers are often replaced by a new network suited to the target task. The parameters of the earlier layers can be used as is [54], or used as initial values, after which the network is trained partially or fully on the training data of the target task [16,55,56] (so-called fine-tuning). For pathological images, networks pre-trained on other pathological image tasks are generally not available, so networks trained on ImageNet, a database containing a vast number of general images, are often used [[16], [54], [55], [56]]. For example, Xu et al. performed classification and segmentation tasks on brain and colon pathological images using features extracted from a CNN trained on ImageNet and achieved state-of-the-art performance [54]. Although pathological images look very different from general images (e.g., cats and dogs), they share basic image structures such as lines and arcs. Because the earlier layers of a deep network capture these basic structures, models pre-trained on general images work well in histopathological image analysis. Nevertheless, if models pre-trained on a sufficient number of diverse histopathology images become available, they may outperform ImageNet pre-trained models.

4.3. Different Levels of Magnification Result in Different Levels of Information

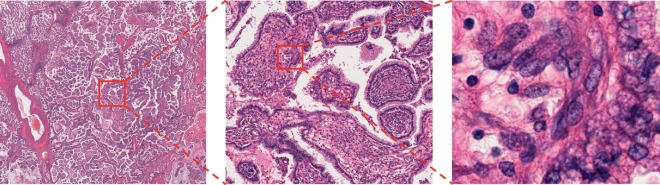

Tissues are composed of cells, and different tissues show distinct cellular features. Information on cell shape is well captured in high-power field images, whereas structural information, such as a glandular structure made of many cells, is better captured in a low-power field (Fig. 2). Because cancerous tissues show both cellular and structural atypia, images taken at each magnification contain important information. Pathologists diagnose diseases by acquiring different kinds of information, from the cellular level to the tissue level, by changing the magnification of the microscope. In machine learning as well, there are studies that utilize images at different magnifications [[57], [58], [59]]. Because, as mentioned above, it is difficult to handle images directly at their original resolution, images are often downsampled to correspond to various magnifications and then used as input. For diagnosis, the most informative magnification is still controversial [14,39,109], but accuracy is sometimes improved by inputting high- and low-magnification images simultaneously, probably depending on the type of disease and tissue and on the machine learning algorithm.

Fig. 2.

Multiple magnification levels of the same histopathological image. Each image on the right shows the region indicated by the red box in the image to its left. The leftmost image clearly shows a papillary structure, and the rightmost image clearly shows the nucleus of each cell. The histopathological images were obtained from TCGA [33].
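Extracting inputs at several magnifications can be sketched as cutting concentric crops around the same center and downsampling the wider crops so that every patch has the same pixel size, as a stand-in for switching microscope objectives. The factors (1 and 4), patch size, and function names are assumptions; the crops must lie fully inside the image because no padding is done.

```python
import numpy as np


def downsample(img: np.ndarray, factor: int) -> np.ndarray:
    """Average-pool an (H, W, C) image by an integer factor."""
    h, w, c = img.shape
    h, w = h - h % factor, w - w % factor
    blocks = img[:h, :w].reshape(h // factor, factor, w // factor, factor, c)
    return blocks.mean(axis=(1, 3))


def multiscale_patches(img: np.ndarray, cy: int, cx: int, size: int = 64,
                       factors=(1, 4)) -> list:
    """Concentric patches of equal pixel size centered at (cy, cx).
    A larger factor covers a wider field of view at lower resolution,
    mimicking a lower-power objective."""
    patches = []
    for f in factors:
        half = size * f // 2
        crop = img[cy - half:cy + half, cx - half:cx + half]
        patches.append(downsample(crop, f))
    return patches


rng = np.random.default_rng(0)
img = rng.random((1024, 1024, 3))     # stand-in for a full-resolution RGB region
patches = multiscale_patches(img, cy=512, cx=512)
print([p.shape for p in patches])
```

The resulting patches could then be fed to separate branches of a network or combined as input channels; whether the combination helps is, as noted above, task-dependent.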

4.4. WSI as Orderless Texture-like Image

Pathological images differ in nature from images of cats and dogs in that they show repetitive patterns of minimal components (usually cells). They are therefore closer to textures than to objects. CNNs acquire shift invariance to a certain extent through pooling operations. In addition, even a standard CNN can learn texture-like structure through data augmentation that shifts the tissue image by small strides. Meanwhile, there are methods that exploit texture structure more directly, such as the gray level co-occurrence matrix [110], local binary patterns [111], Gabor filter banks, and recently developed deep texture representations using CNNs [64,112]. Deep texture representations are computed from a correlation matrix of the feature maps in a CNN layer. Converting CNN features to texture representations yields invariance to cell position while retaining the good representations learned by the CNN. Another advantage of deep texture representations is that they place no constraints on the size of the input image, which suits the large size of WSIs. The boundary between texture and non-texture is unclear, but a single cell or a single structure is obviously not a texture. The better approach thus depends on the object to be analyzed.
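A deep texture representation of the kind described above can be sketched as the Gram matrix (an uncentered correlation matrix) of one layer's feature maps. The sketch uses random arrays in place of real CNN activations, which is an assumption; it shows the two properties mentioned in the text: the descriptor does not change when spatial positions are shuffled, and its size depends only on the number of channels, not on the input size.

```python
import numpy as np


def gram_texture(feature_maps: np.ndarray) -> np.ndarray:
    """Texture descriptor from one CNN layer's activations.
    feature_maps: (C, H, W) array of C channels. Returns the C x C matrix of
    channel-wise inner products, averaged over all spatial positions."""
    c, h, w = feature_maps.shape
    f = feature_maps.reshape(c, h * w)
    return f @ f.T / (h * w)


rng = np.random.default_rng(0)
fmap = rng.normal(size=(8, 10, 12))    # stand-in: 8 channels on a 10 x 12 grid
g = gram_texture(fmap)

# Shuffling spatial positions (e.g., where cells sit) leaves the descriptor unchanged.
perm = rng.permutation(10 * 12)
shuffled = fmap.reshape(8, -1)[:, perm].reshape(8, 10, 12)
print(np.allclose(g, gram_texture(shuffled)))            # True

# A larger input yields a descriptor of the same size.
print(gram_texture(rng.normal(size=(8, 30, 7))).shape)   # (8, 8)
```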

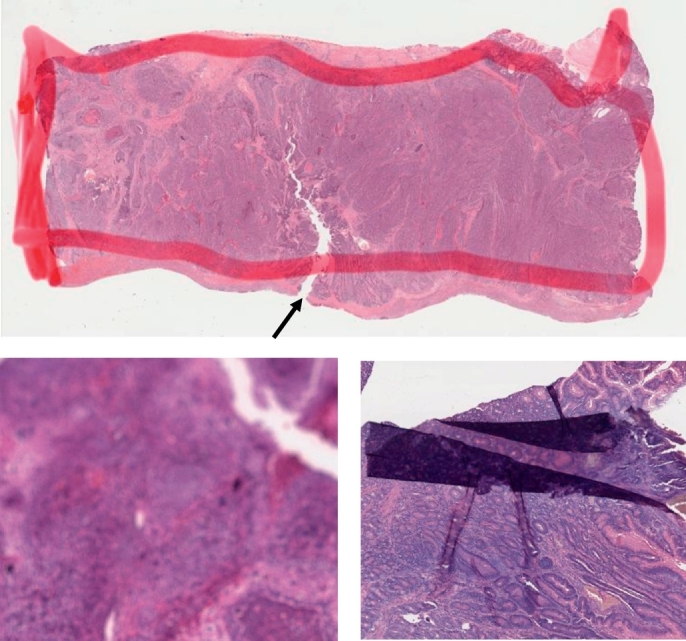

4.5. Color Variation and Artifacts

WSIs are created through multiple processes: pathology specimens are sliced, placed on a glass slide, stained with hematoxylin and eosin, and then scanned. Undesirable effects unrelated to the underlying biology can be introduced at each step. For example, tissue slices may bend and wrinkle when placed onto slides; dust may contaminate slides during scanning; blur may occur owing to differences in the thickness of tissue sections (Fig. 3); and tissue regions are sometimes marked with color markers. Because these artifacts could adversely affect interpretation, specific algorithms to detect artifacts such as blur [71] and tissue folds [73] have been proposed. Such algorithms may be used for preprocessing WSIs.

Fig. 3.

Artifacts in WSIs. Top: the tumor region is outlined with a red marker. The arrow indicates a tear, possibly formed during tissue preparation. Bottom left: blurred image. Bottom right: folded tissue section. The histopathological images were obtained from TCGA [33].
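As one simple blur check for preprocessing, the variance of the Laplacian of a grayscale patch is widely used as a sharpness score: in-focus tissue has strong local intensity changes, while blurred tissue does not. This is a generic heuristic swapped in for illustration, not necessarily the method of [71]; the blur is simulated with a box filter, and any threshold for flagging a patch would have to be tuned per scanner and stain.

```python
import numpy as np


def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of the 4-neighbor Laplacian response of a 2-D grayscale patch.
    Sharp patches have strong edges (high variance); blurred ones do not."""
    g = gray.astype(float)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def box_blur(gray: np.ndarray, k: int = 5) -> np.ndarray:
    """Simple k x k mean filter (valid region only), used here to simulate blur."""
    h, w = gray.shape
    out = np.zeros((h - k + 1, w - k + 1))
    for di in range(k):
        for dj in range(k):
            out += gray[di:di + h - k + 1, dj:dj + w - k + 1]
    return out / (k * k)


rng = np.random.default_rng(0)
sharp = rng.random((128, 128))      # stand-in for a textured, in-focus patch
blurred = box_blur(sharp)
print(laplacian_variance(sharp) > laplacian_variance(blurred))  # True
```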

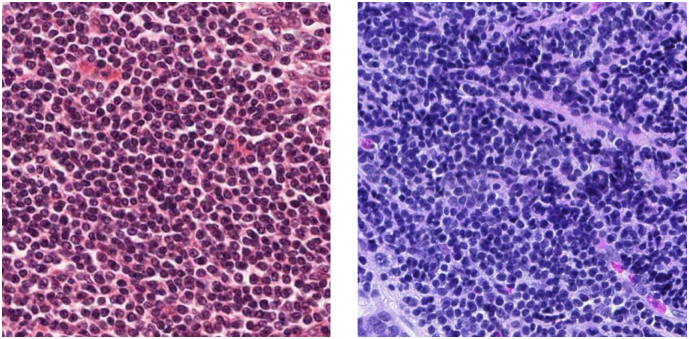

Another serious artifact is color variation, as shown in Fig. 4. Sources of variation include different lots or manufacturers of staining reagents, the thickness of tissue sections, staining conditions, and scanner models. Learning without accounting for color variation could degrade the performance of machine learning algorithms. If sufficient data on every kind of stained tissue acquired by every scanner could be incorporated, the influence of color variation on classification accuracy might become negligible; however, that seems very unlikely at the moment.

Fig. 4.

Color variation of histopathological images. Both images show lymphocytes. The histopathological images were obtained from TCGA [33].

To address this issue, various methods have been proposed, including conversion to grayscale, color normalization [[65], [66], [67], [68]], and color augmentation [69,70]. Conversion to grayscale is the easiest, but it discards the color information that pathologists use routinely. In contrast, color normalization adjusts the color values of an image pixel by pixel so that the color distribution of the source image matches that of a reference image. However, because the components and composition ratios of cells or tissues generally differ between the source and reference images, preprocessing such as nuclear detection with a dedicated algorithm is often required to account for differences in composition. For this reason, color normalization seems suitable when the WSIs analyzed in a task contain, at least partially, similar compositions of cells or tissues.
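A minimal color normalization sketch in the spirit of statistics matching (as in Reinhard-style color transfer) shifts and scales each channel of the source image so that its mean and standard deviation match those of a reference image. This is a simplification and not necessarily any of the methods in [65]–[68]: it works directly on the given channels rather than in a perceptual color space, and it ignores the composition issue discussed above, which is exactly why differing tissue compositions can bias the result.

```python
import numpy as np


def match_color_statistics(source: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Shift and scale each channel of `source` so that its mean and standard
    deviation equal those of `reference` (float arrays of shape (H, W, 3)).
    Simplified Reinhard-style matching: channels are used as given rather
    than converted to a perceptual color space first."""
    src_mean = source.mean(axis=(0, 1))
    src_std = source.std(axis=(0, 1)) + 1e-8     # avoid division by zero
    ref_mean = reference.mean(axis=(0, 1))
    ref_std = reference.std(axis=(0, 1))
    return (source - src_mean) / src_std * ref_std + ref_mean


rng = np.random.default_rng(0)
source = 0.3 + 0.4 * rng.random((32, 32, 3))     # stand-in patches in [0, 1]
reference = 0.5 + 0.2 * rng.random((32, 32, 3))
normalized = match_color_statistics(source, reference)
print(np.allclose(normalized.mean(axis=(0, 1)), reference.mean(axis=(0, 1))))  # True
```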

On the other hand, color augmentation is a kind of data augmentation that applies random changes to hue, saturation, brightness, and contrast. Its advantage lies in easy implementation regardless of the object being analyzed. Color augmentation seems suitable for WSIs with smaller color variation, because excessive color change during augmentation could cause the final classifier to lose color information. Because color normalization and color augmentation could be complementary, combining both approaches may be better.
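Color augmentation can be sketched with random brightness, contrast, and saturation factors applied to an RGB patch with values in [0, 1]. The jitter strengths are assumptions and, as noted above, should be kept small enough not to wash out color information; hue jitter is omitted for brevity. Deep learning libraries provide equivalents, for example torchvision's `ColorJitter` transform.

```python
import numpy as np


def color_augment(img: np.ndarray, rng: np.random.Generator, brightness: float = 0.1,
                  contrast: float = 0.1, saturation: float = 0.1) -> np.ndarray:
    """Randomly perturb brightness, contrast and saturation of an RGB image
    with values in [0, 1]. Each factor is drawn uniformly from
    [1 - strength, 1 + strength]; hue jitter is omitted for brevity."""
    out = img * rng.uniform(1 - brightness, 1 + brightness)               # brightness
    mean = out.mean()
    out = (out - mean) * rng.uniform(1 - contrast, 1 + contrast) + mean   # contrast
    gray = out @ np.array([0.299, 0.587, 0.114])                          # luma per pixel
    s = rng.uniform(1 - saturation, 1 + saturation)
    out = gray[..., None] + (out - gray[..., None]) * s                   # saturation
    return np.clip(out, 0.0, 1.0)


rng = np.random.default_rng(0)
patch = rng.random((64, 64, 3))                             # stand-in RGB patch
augmented = [color_augment(patch, rng) for _ in range(4)]   # four random variants
```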

5. Summary and Outlook

Digital histopathological image recognition is well suited to machine learning because the images themselves contain sufficient information for diagnosis. In this review, we raised problems in digital histopathological image analysis using machine learning. Owing to great efforts made so far, these problems are becoming tractable, but there is still room for improvement. Most of these problems are likely to be solved once a large number of well-annotated WSIs become available. Gathering WSIs from various institutes, annotating them collaboratively with the same criteria, and making these data public will boost the development of more sophisticated digital histopathological image analysis.

Finally, we suggest some potential future research topics that have not been well studied so far.

5.1. Discovery of Novel Objects

In actual diagnostic situations, unexpected objects such as aberrant tissue organization, rare tumors (thus not included in the training data), and foreign bodies could exist. However, discriminative models, including convolutional neural networks, forcibly assign such objects to one of the predefined categories. To address this problem, outlier detection algorithms such as one-class kernel principal component analysis [113] have been applied to digital pathological images, but only a few studies have addressed the problem so far. More recently, some deep learning-based methods utilizing reconstruction error [114] have been proposed for outlier detection in other domains, but they have not yet been applied to histopathological image analysis.
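Reconstruction-error outlier detection can be sketched with a linear model: principal component analysis fitted on features of known tissue types reconstructs typical samples well and unexpected ones poorly. Here PCA stands in for the deep autoencoders of [114] (and is the linear counterpart of the kernel PCA in [113]); the features, the number of components, and the 99th-percentile threshold are assumptions.

```python
import numpy as np


class PCAOutlierDetector:
    """Flag samples that a low-dimensional linear model of the training data
    reconstructs poorly (a linear stand-in for reconstruction-error methods)."""

    def __init__(self, n_components: int):
        self.n_components = n_components

    def fit(self, X: np.ndarray, quantile: float = 0.99) -> "PCAOutlierDetector":
        self.mean_ = X.mean(axis=0)
        _, _, vt = np.linalg.svd(X - self.mean_, full_matrices=False)
        self.components_ = vt[: self.n_components]
        # Errors above this training-set quantile are treated as novel.
        self.threshold_ = np.quantile(self.score(X), quantile)
        return self

    def score(self, X: np.ndarray) -> np.ndarray:
        """Squared reconstruction error of each row."""
        centered = X - self.mean_
        recon = centered @ self.components_.T @ self.components_
        return ((centered - recon) ** 2).sum(axis=1)

    def is_outlier(self, X: np.ndarray) -> np.ndarray:
        return self.score(X) > self.threshold_


rng = np.random.default_rng(0)
basis = rng.normal(size=(2, 10))
# Stand-in "known tissue" features lying near a 2-D subspace of a 10-D space.
train = rng.normal(size=(500, 2)) @ basis + 0.05 * rng.normal(size=(500, 10))
detector = PCAOutlierDetector(n_components=2).fit(train)
novel = rng.normal(size=(5, 10))        # samples unlike anything in training
print(detector.is_outlier(novel))
```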

5.2. Interpretable Deep Learning Model

Deep learning is often criticized because its decision-making process is not understandable to humans; it is therefore often described as a black box. Although human decision-making is not a complete white box either, users want to know the decision process or the basis for a decision, and understanding it could lead to new discoveries in the pathology field. This problem has not yet been fully solved, but some studies have attempted to address it, for example by jointly learning from pathological images and their diagnostic reports with an attention mechanism [115]. In other domains, the decision basis can be inferred indirectly by visualizing the response of a deep neural network [115,116] or by presenting the training images that most influenced a prediction, identified using influence functions [117].
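One simple way to visualize which regions drive a network's output is occlusion sensitivity: mask one window of the image at a time and record how much the class score drops. It is related in spirit to, but simpler than, the prediction difference analysis of [116]. The sketch below assumes NumPy and a hypothetical `predict` callable that maps an image to a scalar class score; the function name `occlusion_map`, the window size, the stride, and the fill value are illustrative choices.

```python
import numpy as np


def occlusion_map(predict, image, patch=8, stride=8, fill=0.0):
    """Return a heat map of score drops when each window is occluded.

    predict: callable mapping an image array to a scalar class score
             (for example, a trained CNN's tumor probability).
    image:   array of shape (H, W) or (H, W, C).
    A large positive value means occluding that window lowers the score,
    i.e. the window supports the prediction.
    """
    h, w = image.shape[:2]
    base = predict(image)
    rows = (h - patch) // stride + 1
    cols = (w - patch) // stride + 1
    heat = np.zeros((rows, cols))
    for i in range(rows):
        for j in range(cols):
            y, x = i * stride, j * stride
            occluded = image.copy()
            # Replace one window with a constant value.
            occluded[y:y + patch, x:x + patch] = fill
            heat[i, j] = base - predict(occluded)
    return heat


# Toy "model" whose score is the mean intensity of the top-left 8x8 corner.
toy = lambda img: float(img[:8, :8].mean())
heat = occlusion_map(toy, np.ones((32, 32)))
# heat has shape (4, 4); heat[0, 0] == 1.0 and every other entry is 0.0.
```

Occlusion needs one forward pass per window, so for large patches a coarser stride keeps the cost manageable at the price of a blurrier map.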

5.3. Intraoperative Diagnosis

Pathological diagnosis during surgery influences intraoperative decision making, and thus could be another important application of histopathological image analysis. Because the time available for intraoperative diagnosis is very limited, classification must be rapid without sacrificing accuracy. Owing to this time constraint, rapid frozen sections are used instead of formalin-fixed paraffin-embedded (FFPE) sections, which take longer to prepare. Therefore, classifiers for this purpose should be trained on frozen section slides. Few studies have analyzed frozen sections so far [118], partly because the number of WSIs suitable for analysis is insufficient and the task is more challenging than with FFPE slides.

5.4. Tumor Infiltrating Immune Cell Analysis

Because of the success of tumor immunotherapy, especially immune checkpoint blockade therapies including anti-PD-1 and anti-CTLA-4 antibodies, immune cells in the tumor microenvironment have gained substantial attention in recent years. Therefore, quantitative analysis of tumor-infiltrating immune cells in slides using machine learning techniques is likely to become an emerging theme in digital histopathological image analysis. Tasks related to this analysis include the detection of immune cells in H&E-stained images [119,120] and the detection of more specific immune cell types using immunohistochemistry [102]. Additionally, because the pattern of immune cell infiltration and the proximity of immune cells are reportedly related to cancer prognosis [121], it will also be of great importance to analyze the spatial relationships between tumor cells and immune cells, and the relationships between these data and prognosis or response to immunotherapy, using specialized algorithms such as graph-based algorithms [62,122].
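As a minimal illustration of the graph-based idea, the sketch below links each tumor cell to every immune cell within a fixed radius (a bipartite radius graph) and summarizes tumor-immune proximity. It assumes NumPy and that cell centroids and cell-type labels have already been produced by a detection step. The function name `tumor_immune_proximity`, the `"tumor"`/`"immune"` labels, and the three summary features are illustrative assumptions, not the specific graph constructions used in [62,122].

```python
import numpy as np


def tumor_immune_proximity(coords, labels, radius):
    """Summarize spatial proximity between tumor and immune cells.

    coords: array-like of shape (N, 2) with cell centroids (e.g. in micrometers).
    labels: length-N sequence of cell types, "tumor" or "immune".
    radius: distance within which a tumor-immune pair is joined by an edge.
    """
    coords = np.asarray(coords, dtype=float)
    labels = np.asarray(labels)
    tumor = coords[labels == "tumor"]
    immune = coords[labels == "immune"]
    if len(tumor) == 0 or len(immune) == 0:
        raise ValueError("need at least one tumor cell and one immune cell")

    # Pairwise Euclidean distances, shape (n_tumor, n_immune).
    dist = np.linalg.norm(tumor[:, None, :] - immune[None, :, :], axis=-1)
    nearest = dist.min(axis=1)  # nearest immune cell for each tumor cell

    return {
        # Number of edges in the bipartite radius graph.
        "n_edges": int((dist <= radius).sum()),
        "mean_nearest_immune_distance": float(nearest.mean()),
        "fraction_tumor_with_immune_neighbor": float((nearest <= radius).mean()),
    }
```

The dense distance matrix is fine for a tile but grows as (tumor cells × immune cells); for a whole slide, a spatial index such as SciPy's `cKDTree` would be the more scalable choice.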

Conflicts of Interest

None.

Acknowledgement

This study was supported by JSPS Grant-in-Aid for Scientific Research (A), No. 25710020 (SI).

References

- 1. Pantanowitz L. Digital images and the future of digital pathology. J Pathol Inform. 2010;1 doi: 10.4103/2153-3539.68332.

- 2. Shen D., Wu G., Suk H.-I. Deep learning in medical image analysis. Annu Rev Biomed Eng. 2017;19:221–248. doi: 10.1146/annurev-bioeng-071516-044442.

- 3. Bhargava R., Madabhushi A. Emerging themes in image informatics and molecular analysis for digital pathology. Annu Rev Biomed Eng. 2016;18:387–412. doi: 10.1146/annurev-bioeng-112415-114722.

- 4. Madabhushi A. Digital pathology image analysis: opportunities and challenges. Imaging Med. 2009;1:7. doi: 10.2217/IIM.09.9.

- 5. Gurcan M.N., Boucheron L., Can A., Madabhushi A., Rajpoot N., Yener B. Histopathological image analysis: a review. IEEE Rev Biomed Eng. 2009;2:147–171. doi: 10.1109/RBME.2009.2034865.

- 6. Litjens G., Kooi T., Bejnordi B.E., Setio A.A.A., Ciompi F., Ghafoorian M. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60–88. doi: 10.1016/j.media.2017.07.005.

- 7. Xing F., Yang L. Robust nucleus/cell detection and segmentation in digital pathology and microscopy images: a comprehensive review. IEEE Rev Biomed Eng. 2016;9:234–263. doi: 10.1109/RBME.2016.2515127.

- 8. Krizhevsky A., Sutskever I., Hinton G.E. ImageNet classification with deep convolutional neural networks. In: Pereira F., Burges C.J.C., Bottou L., Weinberger K.Q., editors. Adv. Neural Inf. Process. Syst. 25. Curran Associates, Inc.; 2012. pp. 1097–1105.

- 9. Hou L., Samaras D., Kurc T.M., Gao Y., Davis J.E., Saltz J.H. ArXiv150407947 Cs. 2015. Patch-based convolutional neural network for whole slide tissue image classification.

- 10. Xu J., Luo X., Wang G., Gilmore H., Madabhushi A. A deep convolutional neural network for segmenting and classifying epithelial and stromal regions in histopathological images. Neurocomputing. 2016;191:214–223. doi: 10.1016/j.neucom.2016.01.034.

- 11. Sheikhzadeh F., Guillaud M., Ward R.K. ArXiv161209420 Cs Q-Bio. 2016. Automatic labeling of molecular biomarkers of whole slide immunohistochemistry images using fully convolutional networks.

- 12. Litjens G., Sánchez C.I., Timofeeva N., Hermsen M., Nagtegaal I., Kovacs I. Deep learning as a tool for increased accuracy and efficiency of histopathological diagnosis. Sci Rep. 2016;6:26286. doi: 10.1038/srep26286.

- 13. Schaumberg A.J., Rubin M.A., Fuchs T.J. BioRxiv. 064279. 2017. H&E-stained whole slide image deep learning predicts SPOP mutation state in prostate cancer.

- 14. Wang D., Khosla A., Gargeya R., Irshad H., Beck A.H. ArXiv160605718 Cs Q-Bio. 2016. Deep learning for identifying metastatic breast cancer.

- 15. Spanhol F.A., Oliveira L.S., Petitjean C., Heutte L. 2016 Int. Jt. Conf. Neural Netw. IJCNN. 2016. Breast cancer histopathological image classification using convolutional neural networks; pp. 2560–2567.

- 16. Kieffer B., Babaie M., Kalra S., Tizhoosh H.R. ArXiv171005726 Cs. 2017. Convolutional neural networks for histopathology image classification: training vs. using pre-trained networks.

- 17. Mungle T., Tewary S., Das D.K., Arun I., Basak B., Agarwal S. MRF-ANN: a machine learning approach for automated ER scoring of breast cancer immunohistochemical images. J Microsc. 2017;267:117–129. doi: 10.1111/jmi.12552.

- 18. Wang D., Foran D.J., Ren J., Zhong H., Kim I.Y., Qi X. 2015 37th Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. EMBC. 2015. Exploring automatic prostate histopathology image gleason grading via local structure modeling; pp. 2649–2652.

- 19. Shah M., Rubadue C., Suster D., Wang D. ArXiv161003467 Cs. 2016. Deep learning assessment of tumor proliferation in breast cancer histological images.

- 20. Roux L., Racoceanu D., Loménie N., Kulikova M., Irshad H., Klossa J. Mitosis detection in breast cancer histological images: an ICPR 2012 contest. J Pathol Inform. 2013;4:8. doi: 10.4103/2153-3539.112693.

- 21. Chen H., Qi X., Yu L., Heng P.A. 2016 IEEE Conf. Comput Vis Pattern Recognit CVPR. 2016. DCAN: deep contour-aware networks for accurate gland segmentation; pp. 2487–2496.

- 22. Gertych A., Ing N., Ma Z., Fuchs T.J., Salman S., Mohanty S. Machine learning approaches to analyze histological images of tissues from radical prostatectomies. Comput Med Imaging Graph. 2015;46:197–208. doi: 10.1016/j.compmedimag.2015.08.002.

- 23. Sirinukunwattana K., Pluim J.P.W., Chen H., Qi X., Heng P.-A., Guo Y.B. Gland segmentation in colon histology images: the glas challenge contest. Med Image Anal. 2017;35:489–502. doi: 10.1016/j.media.2016.08.008.

- 24. Caie P.D., Turnbull A.K., Farrington S.M., Oniscu A., Harrison D.J. Quantification of tumour budding, lymphatic vessel density and invasion through image analysis in colorectal cancer. J Transl Med. 2014;12:156. doi: 10.1186/1479-5876-12-156.

- 25. Caicedo J.C., González F.A., Romero E. Content-based histopathology image retrieval using a kernel-based semantic annotation framework. J Biomed Inform. 2011;44:519–528. doi: 10.1016/j.jbi.2011.01.011.

- 26. Mehta N., Raja'S A., Chaudhary V. Eng. Med. Biol. Soc. 2009 EMBC 2009 Annu. Int. Conf. IEEE. IEEE; 2009. Content based sub-image retrieval system for high resolution pathology images using salient interest points; pp. 3719–3722.

- 27. Qi X., Wang D., Rodero I., Diaz-Montes J., Gensure R.H., Xing F. Content-based histopathology image retrieval using CometCloud. BMC Bioinformatics. 2014;15:287. doi: 10.1186/1471-2105-15-287.

- 28. Sridhar A., Doyle S., Madabhushi A. Content-based image retrieval of digitized histopathology in boosted spectrally embedded spaces. J Pathol Inform. 2015;6:41. doi: 10.4103/2153-3539.159441.

- 29. Vanegas J.A., Arevalo J., González F.A. 2014 12th Int. Workshop Content-Based Multimed. Index. CBMI. 2014. Unsupervised feature learning for content-based histopathology image retrieval; pp. 1–6.

- 30. Sparks R., Madabhushi A. Out-of-sample extrapolation utilizing semi-supervised manifold learning (OSE-SSL): content based image retrieval for histopathology images. Sci Rep. 2016;6 doi: 10.1038/srep27306.

- 31. Zhang X., Liu W., Dundar M., Badve S., Zhang S. Towards large-scale histopathological image analysis: Hashing-based image retrieval. IEEE Trans Med Imaging. 2015;34:496–506. doi: 10.1109/TMI.2014.2361481.

- 32. Marshall B. Helicobacter Pylori. Springer; Tokyo: 2016. A brief history of the discovery of Helicobacter pylori; pp. 3–15.

- 33. Weinstein J.N., Collisson E.A., Mills G.B., Shaw K.R.M., Ozenberger B.A., Ellrott K. The cancer genome atlas pan-cancer analysis project. Nat Genet. 2013;45:1113–1120. doi: 10.1038/ng.2764.

- 34. Molin M.D., Matthaei H., Wu J., Blackford A., Debeljak M., Rezaee N. Clinicopathological correlates of activating GNAS mutations in intraductal papillary mucinous neoplasm (IPMN) of the pancreas. Ann Surg Oncol. 2013;20:3802–3808. doi: 10.1245/s10434-013-3096-1.

- 35. Yoshida A., Tsuta K., Nakamura H., Kohno T., Takahashi F., Asamura H. Comprehensive histologic analysis of ALK-rearranged lung carcinomas. Am J Surg Pathol. 2011;35:1226–1234. doi: 10.1097/PAS.0b013e3182233e06.

- 36. Beck A.H., Sangoi A.R., Leung S., Marinelli R.J., Nielsen T.O., van de Vijver M.J. Systematic analysis of breast cancer morphology uncovers stromal features associated with survival. Sci Transl Med. 2011;3:108ra113. doi: 10.1126/scitranslmed.3002564.

- 37. Yu K.-H., Zhang C., Berry G.J., Altman R.B., Ré C., Rubin D.L. Predicting non-small cell lung cancer prognosis by fully automated microscopic pathology image features. Nat Commun. 2016;7:12474. doi: 10.1038/ncomms12474.

- 38. Barry J.D., Fagny M., Paulson J.N., Aerts H., Platig J., Quackenbush J. BioRxiv. Vol. 126730. 2017. Histopathological image QTL discovery of thyroid autoimmune disease variants.

- 39. Liu Y., Gadepalli K., Norouzi M., Dahl G.E., Kohlberger T., Boyko A. ArXiv170302442 Cs. 2017. Detecting cancer metastases on gigapixel pathology images.

- 40. CAMELYON17. https://camelyon17.grand-challenge.org

- 41. Marée R., Rollus L., Stévens B., Hoyoux R., Louppe G., Vandaele R. Collaborative analysis of multi-gigapixel imaging data using cytomine. Bioinformatics. 2016;32:1395–1401. doi: 10.1093/bioinformatics/btw013.

- 42. Interactive phenotyping of large-scale histology imaging data with HistomicsML. bioRxiv. http://www.biorxiv.org/content/early/2017/05/19/140236

- 43. Eye movements as an index of pathologist visual expertise: a pilot study. http://journals.plos.org/plosone/article?id=10.1371/journal.pone.0103447

- 44. Raghunath V., Braxton M.O., Gagnon S.A., Brunyé T.T., Allison K.H., Reisch L.M. Mouse cursor movement and eye tracking data as an indicator of pathologists' attention when viewing digital whole slide images. J Pathol Inform. 2012;3:43. doi: 10.4103/2153-3539.104905.

- 45. Mercan E., Aksoy S., Shapiro L.G., Weaver D.L., Brunyé T.T., Elmore J.G. Localization of diagnostically relevant regions of interest in whole slide images: a comparative study. J Digit Imaging. 2016;29:496–506. doi: 10.1007/s10278-016-9873-1.

- 46. Doyle S., Monaco J., Feldman M., Tomaszewski J., Madabhushi A. An active learning based classification strategy for the minority class problem: application to histopathology annotation. BMC Bioinformatics. 2011;12:424. doi: 10.1186/1471-2105-12-424.

- 47. Padmanabhan R.K., Somasundar V.H., Griffith S.D., Zhu J., Samoyedny D., Tan K.S. An active learning approach for rapid characterization of endothelial cells in human tumors. PLoS ONE. 2014;9 doi: 10.1371/journal.pone.0090495.

- 48. Zhu Y., Zhang S., Liu W., Metaxas D.N. Med Image Comput Comput-Assist Interv MICCAI Int Conf Med Image Comput Comput-Assist Interv. Vol. 17. 2014. Scalable histopathological image analysis via active learning; pp. 369–376.

- 49. Xu Y., Zhu J.-Y., Chang E.I.-C., Lai M., Tu Z. Weakly supervised histopathology cancer image segmentation and classification. Med Image Anal. 2014;18:591–604. doi: 10.1016/j.media.2014.01.010.

- 50. Xu Y., Li Y., Shen Z., Wu Z., Gao T., Fan Y. Parallel multiple instance learning for extremely large histopathology image analysis. BMC Bioinformatics. 2017;18:360. doi: 10.1186/s12859-017-1768-8.

- 51. Jia Z., Huang X., Chang E.I.-C., Xu Y. ArXiv170100794 Cs. 2017. Constrained deep weak supervision for histopathology image segmentation.

- 52. BenTaieb A., Li-Chang H., Huntsman D., Hamarneh G. A structured latent model for ovarian carcinoma subtyping from histopathology slides. Med Image Anal. 2017;39:194–205. doi: 10.1016/j.media.2017.04.008.

- 53. Peikari M., Zubovits J., Clarke G., Martel A.L. Springer; Cham: 2015. Clustering analysis for semi-supervised learning improves classification performance of digital pathology; pp. 263–270. (Mach. Learn. Med. Imaging).

- 54. Xu Y., Jia Z., Wang L.-B., Ai Y., Zhang F., Lai M. Large scale tissue histopathology image classification, segmentation, and visualization via deep convolutional activation features. BMC Bioinformatics. 2017;18:281. doi: 10.1186/s12859-017-1685-x.

- 55. Transfer learning for cell nuclei classification in histopathology images. SpringerLink. https://link.springer.com/chapter/10.1007/978-3-319-49409-8_46

- 56. Wei B., Li K., Li S., Yin Y., Zheng Y., Han Z. Breast cancer multi-classification from histopathological images with structured deep learning model. Sci Rep. 2017;7:4172. doi: 10.1038/s41598-017-04075-z.

- 57. Song Y., Zhang L., Chen S., Ni D., Lei B., Wang T. Accurate segmentation of cervical cytoplasm and nuclei based on multiscale convolutional network and graph partitioning. IEEE Trans Biomed Eng. 2015;62:2421–2433. doi: 10.1109/TBME.2015.2430895.

- 58. Romo D., García-Arteaga J.D., Arbeláez P., Romero E. vol. 9041. International Society for Optics and Photonics; 2014. A discriminant multi-scale histopathology descriptor using dictionary learning; p. 90410Q.

- 59. Doyle S., Madabhushi A., Feldman M., Tomaszewski J. Med image comput comput-assist interv MICCAI int conf med image comput comput-assist interv. Vol. 9. 2006. A boosting cascade for automated detection of prostate cancer from digitized histology; pp. 504–511.

- 60. Kather J.N., Weis C.-A., Bianconi F., Melchers S.M., Schad L.R., Gaiser T. Multi-class texture analysis in colorectal cancer histology. Sci Rep. 2016;6:27988. doi: 10.1038/srep27988.

- 61. Rexhepaj E., Agnarsdóttir M., Bergman J., Edqvist P.-H., Bergqvist M., Uhlén M. A texture based pattern recognition approach to distinguish melanoma from non-melanoma cells in histopathological tissue microarray sections. PLoS ONE. 2013;8 doi: 10.1371/journal.pone.0062070.

- 62. Doyle S., Hwang M., Shah K., Madabhushi A., Feldman M., Tomaszewski J. 2007 4th IEEE Int. Symp. Biomed. Imaging Nano Macro. 2007. Automated grading of prostate cancer using architectural and textural image features; pp. 1284–1287.

- 63. Linder N., Konsti J., Turkki R., Rahtu E., Lundin M., Nordling S. Identification of tumor epithelium and stroma in tissue microarrays using texture analysis. Diagn Pathol. 2012;7:22. doi: 10.1186/1746-1596-7-22.

- 64. Wang C., Shi J., Zhang Q., Ying S. Conf Proc Annu Int Conf IEEE Eng Med Biol Soc IEEE Eng Med Biol Soc Annu Conf 2017. 2017. Histopathological image classification with bilinear convolutional neural networks; pp. 4050–4053.

- 65. Bejnordi B.E., Litjens G., Timofeeva N., Otte-Höller I., Homeyer A., Karssemeijer N. Stain specific standardization of whole-slide histopathological images. IEEE Trans Med Imaging. 2016;35:404–415. doi: 10.1109/TMI.2015.2476509.

- 66. Ciompi F., Geessink O., Bejnordi B.E., de Souza G.S., Baidoshvili A., Litjens G. ArXiv170205931 Cs. 2017. The importance of stain normalization in colorectal tissue classification with convolutional networks.

- 67. Khan A.M., Rajpoot N., Treanor D., Magee D. A nonlinear mapping approach to stain normalization in digital histopathology images using image-specific color deconvolution. IEEE Trans Biomed Eng. 2014;61:1729–1738. doi: 10.1109/TBME.2014.2303294.

- 68. Cho H., Lim S., Choi G., Min H. ArXiv171008543 Cs. 2017. Neural stain-style transfer learning using GAN for histopathological images.

- 69. Lafarge M.W., Pluim J.P.W., Eppenhof K.A.J., Moeskops P., Veta M. ArXiv170706183 Cs. 2017. Domain-adversarial neural networks to address the appearance variability of histopathology images.

- 70. ScanNet: a fast and dense scanning framework for metastatic breast cancer detection from whole-slide images. Semantic Scholar. https://www.semanticscholar.org/paper/ScanNet-A-Fast-and-Dense-Scanning-Framework-for-Me-Lin-Chen/9484287f4d5d52d10b5d362c462d4d6955655f8e

- 71. Wu H., Phan J.H., Bhatia A.K., Shehata B., Wang M.D. Conf Proc Annu Int Conf IEEE Eng Med Biol Soc IEEE Eng Med Biol Soc Annu Conf 2015. 2015. Detection of blur artifacts in histopathological whole-slide images of endomyocardial biopsies; pp. 727–730.

- 72. Gao D., Padfield D., Rittscher J., McKay R. Med Image Comput Comput-Assist Interv MICCAI Int Conf Med Image Comput Comput-Assist Interv. Vol. 13. 2010. Automated training data generation for microscopy focus classification; pp. 446–453.

- 73. Kothari S., Phan J.H., Wang M.D. Eliminating tissue-fold artifacts in histopathological whole-slide images for improved image-based prediction of cancer grade. J Pathol Inform. 2013;4 doi: 10.4103/2153-3539.117448.

- 74. Bautista P.A., Yagi Y. Conf Proc Annu Int Conf IEEE Eng Med Biol Soc IEEE Eng Med Biol Soc Annu Conf 2009. 2009. Detection of tissue folds in whole slide images; pp. 3669–3672.

- 75. Wollmann T., Rohr K. ArXiv170707565 Cs. 2017. Automatic breast cancer grading in lymph nodes using a deep neural network.

- 76. Russakovsky O., Deng J., Su H., Krause J., Satheesh S., Ma S. ImageNet large scale visual recognition challenge. Int J Comput Vis. 2015;115:211–252.

- 77. Gutman D., Codella N.C.F., Celebi E., Helba B., Marchetti M., Mishra N. ArXiv160501397 Cs. 2016. Skin lesion analysis toward melanoma detection: a challenge at the international symposium on biomedical imaging (ISBI) 2016, hosted by the International Skin Imaging Collaboration (ISIC).

- 78. Genomic data commons data portal (legacy archive). https://portal.gdc.cancer.gov/legacy-archive/

- 79. The genotype-tissue expression (GTEx) project. Nat Genet. 2013;45:580–585. doi: 10.1038/ng.2653.

- 80. Biospecimen Research Database. https://brd.nci.nih.gov/brd/image-search/searchhome

- 81. Marinelli R.J., Montgomery K., Liu C.L., Shah N.H., Prapong W., Nitzberg M. The Stanford tissue microarray database. Nucleic Acids Res. 2008;36:D871–7. doi: 10.1093/nar/gkm861.

- 82. TMAD main menu. https://tma.im/cgi-bin/home.pl

- 83. MICCAI grand challenge: tumor proliferation assessment challenge (TUPAC16). http://tupac.tue-image.nl/

- 84. Ovarian carcinomas histopathology dataset. http://ensc-mica-www02.ensc.sfu.ca/download/

- 85. Babaie M., Kalra S., Sriram A., Mitcheltree C., Zhu S., Khatami A. ArXiv170507522 Cs. 2017. Classification and retrieval of digital pathology scans: a new dataset.

- 86. KimiaPath24: dataset for retrieval and classification in digital pathology. KIMIA Lab; 2017.

- 87. Kumar M.D., Babaie M., Zhu S., Kalra S., Tizhoosh H.R. ArXiv171001249 Cs. 2017. A comparative study of CNN, BoVW and LBP for classification of histopathological images.

- 88. KIMIA Lab: Image Data and Source Code. http://kimia.uwaterloo.ca/kimia_lab_data_Path960.html

- 89. Gelasca E.D., Byun J., Obara B., Manjunath B.S. 2008 15th IEEE Int. Conf. Image Process. 2008. Evaluation and benchmark for biological image segmentation; pp. 1816–1819.

- 90. Bio-segmentation. Center for Bio-Image Informatics, UC Santa Barbara. http://bioimage.ucsb.edu/research/bio-segmentation

- 91. Bioimaging Challenge 2015 Breast Histology Dataset. https://rdm.inesctec.pt/dataset/nis-2017-003

- 92. Classification of breast cancer histology images using convolutional neural networks. http://journals.plos.org/plosone/article?id=10.1371/journal.pone.0177544

- 93. BIALab@Warwick: GlaS Challenge Contest. https://warwick.ac.uk/fac/sci/dcs/research/tia/glascontest/

- 94. Breast Cancer Histopathological Database (BreakHis). Laboratório Visão Robótica e Imagens. https://web.inf.ufpr.br/vri/databases/breast-cancer-histopathological-database-breakhis/

- 95. Kather J.N., Marx A., Reyes-Aldasoro C.C., Schad L.R., Zöllner F.G., Weis C.-A. Continuous representation of tumor microvessel density and detection of angiogenic hotspots in histological whole-slide images. Oncotarget. 2015;6:19163–19176. doi: 10.18632/oncotarget.4383.

- 96. MITOS-ATYPIA-14 dataset. https://mitos-atypia-14.grand-challenge.org/dataset/

- 97. Kumar N., Verma R., Sharma S., Bhargava S., Vahadane A., Sethi A. A dataset and a technique for generalized nuclear segmentation for computational pathology. IEEE Trans Med Imaging. 2017;36:1550–1560. doi: 10.1109/TMI.2017.2677499.

- 98. Nucleisegmentation. http://nucleisegmentationbenchmark.weebly.com

- 99. Mitosis detection in breast cancer histological images. http://ludo17.free.fr/mitos_2012/index.html

- 100. Janowczyk A., Madabhushi A. Deep learning for digital pathology image analysis: a comprehensive tutorial with selected use cases. J Pathol Inform. 2016;7:29. doi: 10.4103/2153-3539.186902.

- 101. Janowczyk A. Andrew Janowczyk: tidbits from along the way. http://www.andrewjanowczyk.com

- 102. Ma Z., Shiao S.L., Yoshida E.J., Swartwood S., Huang F., Doche M.E. Data integration from pathology slides for quantitative imaging of multiple cell types within the tumor immune cell infiltrate. Diagn Pathol. 2017;12 doi: 10.1186/s13000-017-0658-8.

- 103. egfr colon stroma classification. http://fimm.webmicroscope.net/supplements/epistroma

- 104. Tong S., Koller D. Support vector machine active learning with applications to text classification. J Mach Learn Res. 2001;2:45–66.

- 105. Lewis D.D., Gale W.A. A sequential algorithm for training text classifiers. In: Croft B.W., van Rijsbergen C.J., editors. SIGIR ’94 Proc. Seventeenth Annu. Int. ACM-SIGIR Conf. Res. Dev. Inf. Retr. Organised Dublin City Univ. Springer London; London: 1994. pp. 3–12.

- 106. Dietterich T.G., Lathrop R.H., Lozano-Pérez T. Solving the multiple instance problem with axis-parallel rectangles. Artif Intell. 1997;89:31–71.

- 107. Miyato T., Maeda S., Koyama M., Ishii S. ArXiv170403976 Cs Stat. 2017. Virtual adversarial training: a regularization method for supervised and semi-supervised learning.

- 108. Rasmus A., Valpola H., Honkala M., Berglund M., Raiko T. ArXiv150702672 Cs Stat. 2015. Semi-supervised learning with ladder networks.

- 109. Gupta V., Bhavsar A. Breast cancer histopathological image classification: is magnification important? CVPR Workshops; 2017. pp. 769–776.

- 110. Saito A., Numata Y., Hamada T., Horisawa T., Cosatto E., Graf H.-P. A novel method for morphological pleomorphism and heterogeneity quantitative measurement: named cell feature level co-occurrence matrix. J Pathol Inform. 2016;7 doi: 10.4103/2153-3539.189699.

- 111. Ojala T., Pietikäinen M., Harwood D. A comparative study of texture measures with classification based on featured distributions. Pattern Recognit. 1996;29:51–59.

- 112. Lin T.-Y., RoyChowdhury A., Maji S. ArXiv150407889 Cs. 2015. Bilinear CNN models for fine-grained visual recognition.

- 113. One-class kernel subspace ensemble for medical image classification. SpringerLink. https://link.springer.com/article/10.1186/1687-6180-2014-17

- 114. Xia Y., Cao X., Wen F., Hua G., Sun J. 2015 IEEE Int. Conf Comput Vis ICCV. 2015. Learning discriminative reconstructions for unsupervised outlier removal; pp. 1511–1519.

- 115. Samek W., Binder A., Montavon G., Lapuschkin S., Müller K.-R. Evaluating the visualization of what a deep neural network has learned. IEEE Trans Neural Netw Learn Syst. 2017;28:2660–2673. doi: 10.1109/TNNLS.2016.2599820.

- 116. Zintgraf L.M., Cohen T.S., Adel T., Welling M. ArXiv170204595 Cs. 2017. Visualizing deep neural network decisions: prediction difference analysis.

- 117. Koh P.W., Liang P. ArXiv170304730 Cs Stat. 2017. Understanding black-box predictions via influence functions.

- 118. Abas F.S., Gokozan H.N., Goksel B., Otero J.J., Gurcan M.N. Vol. 9791. 2016. Intraoperative neuropathology of glioma recurrence: cell detection and classification. (Medical Imaging 2016: Digital Pathology).

- 119. Chen J., Srinivas C. ArXiv161203217 Cs. 2016. Automatic lymphocyte detection in H&E images with deep neural networks.

- 120. Turkki R., Linder N., Kovanen P.E., Pellinen T., Lundin J. Antibody-supervised deep learning for quantification of tumor-infiltrating immune cells in hematoxylin and eosin stained breast cancer samples. J Pathol Inform. 2016;7:38. doi: 10.4103/2153-3539.189703.

- 121. Feng Z., Bethmann D., Kappler M., Ballesteros-Merino C., Eckert A., Bell R.B. Multiparametric immune profiling in HPV− oral squamous cell cancer. JCI Insight. 2017;2 doi: 10.1172/jci.insight.93652.

- 122. Basavanhally A.N., Ganesan S., Agner S., Monaco J.P., Feldman M.D., Tomaszewski J.E. Computerized image-based detection and grading of lymphocytic infiltration in HER2+ breast cancer histopathology. IEEE Trans Biomed Eng. 2010;57:642–653. doi: 10.1109/TBME.2009.2035305.