Abstract

Integrated Information Theory (IIT) has nowadays become the most sensible general theory of consciousness. In addition to making very important statements, it opens the door to an abstract (mathematical) formulation of the theory. Given a mechanism in a particular state, IIT identifies a conscious experience with a conceptual structure, an informational object which exists, is composed of identified parts, is informative, integrated and maximally irreducible. This paper introduces a space-time continuous version of the concept of integrated information. To this aim, a graph and dynamical systems treatment is used to define, for a given mechanism in a state for which a dynamics is defined, an Informational Structure, which is associated with the global attractor of the system at each time. By definition, the informational structure determines all the past and future behavior of the system, possesses an informational nature and, moreover, enriches all the points of the phase space with cause-effect power by means of its associated Informational Field. A detailed description of its inner structure in terms of invariants and the connections between them allows us to associate a transition probability matrix with each informational structure and to develop a measure of the level of integrated information of the system.

Author summary

In this paper we introduce a space-time continuous version of the level of integrated information of a network on which a dynamics is defined. The concept of integrated information comes from the IIT of consciousness. By means of a strict mathematical formulation, we complement the existing IIT theoretical framework from a dynamical systems perspective. In other words, we develop the bases for a continuous mathematical approach to IIT by introducing a dynamical system as the driving rule of a given mechanism. We also introduce and define the concepts of Informational Structure and Informational Field as the complex network with the power to ascertain the dynamics (past and future scenarios) of the studied phenomena. The detailed description of an informational structure shows the cause-effect power of a mechanism in a state and thus provides a characterization of the quantity and quality of information, and of the way it is integrated. We first introduce how network patterns arise from dynamic phenomena on networks, leading to the concept of informational structure. Then, we formally introduce the mathematical objects supporting the theory, from graphs to informational structures, through the integration of dynamics on graphs with a global model of differential equations. After this, we formally present some of IIT's postulates associated to a given mechanism. Finally, we provide the quantitative and qualitative characterization of the integrated information, and how it depends on the geometry of the mechanism.

Introduction

Dynamical Systems and Graph Theory are naturally coupled since any real phenomenon is usually described as a complex graph in which the evolution of time produces changes in specific measures on nodes or links among them [1, 2]. In this work, the starting point is any structural network, including a parcelling of the brain, possessing an intrinsic dynamics. For brain dynamics, the collective behavior of a group of neurons can be represented as a node with a particular dynamics along time [3–6]. In general, the mathematical way to describe and characterize dynamics is by (ordinary or partial) differential equations (continuous time) [7] or difference equations (discrete time) [8]. Global models on brain dynamics are grounded on anatomical structural networks built under parcelling of the brain surface [9–11]. Indeed, they are based on systems of differential equations described on complex networks, which may include noise, delays, and time-dependent coefficients. Thus, the designed dynamical system models the activity of nodes connected to each other by a given adjacency matrix. A global dynamics emerges through simulated dynamics at each node, which is coupled to others as detailed in the anatomical structural network (see, for instance, [12] for the structural networks on primate connectivity). Then, an empirical functional network and a simulated functional network emerge by correlation or synchronization of data on the structural network [4, 13, 14], showing a similar behavior and topology after a proper fitting of the parameters in the differential equations associated to the dynamics.

We take advantage of this approach to apply some of the main results of the modern theory of dynamical systems showing that, given a dynamics on a network, there exists an object, the global attractor [15–18], determining all the asymptotic behaviour of each state of the network. The attractor exists and its nature is essentially informational, as it possesses the power to produce a curvature of the phase space, enriching every point with the information on its possible past and future dynamics. The structure of global attractors (or attracting complex networks [19, 20]), described as structures composed of invariants and connections, naturally shows that their information is structured, is composed of different parts, and may not be recoverable from the study of the information of the parts alone, which allows for a definition of integrated information.

Integrated Information Theory (IIT, [21]), created by G. Tononi [22–24], starts with a phenomenological approach to the theory of consciousness. It assumes that consciousness exists and tries to describe it by defining the axioms that it satisfies. Once the axioms are at hand, they serve to introduce the postulates that every physical mechanism has to obey in order to produce a conscious experience. This fact opens the door to the mathematization of the theory by defining and describing postulates on concrete networks where a dynamics can be defined. It is then possible to define the appropriate structured dynamics which is supposed to explain a conscious experience by preserving its axioms. The IIT approach allows one to represent a conscious experience and even to measure it quantitatively and qualitatively by the so-called integrated information Φmax, which, at the same time, indicates that at the base of consciousness there are essentially causal processes of an integrated-information nature [25]. This fact links IIT to Information Theory and the Theory of Causality [26]. On the mathematical level, the IIT approach is based on graphs consisting of logic gates and transition probabilities describing causality between consecutive discrete states on those graphs [21].

In this paper we present a continuous-time version (see Section Results for a formal description) related to IIT based on the theory of dynamical systems. IIT bases any particular experience on a mechanism, defined in a particular state, which possesses a well-defined cause-effect power. Our starting point is given by a graph describing a mechanism and, thus, a graph is first defined. However, we focus our study on the network patterns arising from dynamical phenomena. So, a dynamical system and the associated mathematical objects (global attractor, equilibrium points, unstable invariant sets) also have to be defined. As a novelty in dynamical systems theory, the global attractor (which, for the gradient case, consists of the equilibria and the heteroclinic connections between them [15, 17, 27]) is redefined as an object of informational nature, an Informational Structure (IS). An IS is a flow-invariant object of the phase space described by a set of selected invariant global solutions of the associated dynamical system, such as stationary points (equilibria) and connecting orbits among them (Fig 1). This set of invariants inside the IS creates a new structure, a new complex network with the power to ascertain the dynamics (past and future scenarios) of natural phenomena. Every IS possesses an associated Informational Field (IF), globally described from the attraction and repulsion rates on the nodes of the IS. We are able to translate the energy landscape caused by the IS and the IF into a transition probability matrix (TPM) to pass from one state to another within the system (see Section Results). Thus, the level of information of a mechanism in a state is given by the global amount of deformation of the phase space caused by the intrinsic power of the IS and IF.
The geometrical characterization of ISs can provide both the quality of the related information and, in particular, the shape in which it is integrated in the whole system, allowing us to measure the level of integrated information it contains. Thus, the quality of the information comes from the detailed study of the informational structure, which now possesses an intrinsic dynamics and changes continuously. This structure depends on the parameters of the underlying equations and can change rapidly in response to changes in those parameters (see Section Materials and methods). From this continuous approach, we are able to introduce first definitions for the postulates of existence, composition, information and integration for a mechanism in a state. There is still a gap with respect to the more elaborate formal definitions of IIT 3.0 [21] (see Section Discussion), including the composition and exclusion postulates. However, our framework naturally leads to a study of the continuous dependence between the topology of the network and the level of integrated information for a given mechanism (see Section Results).

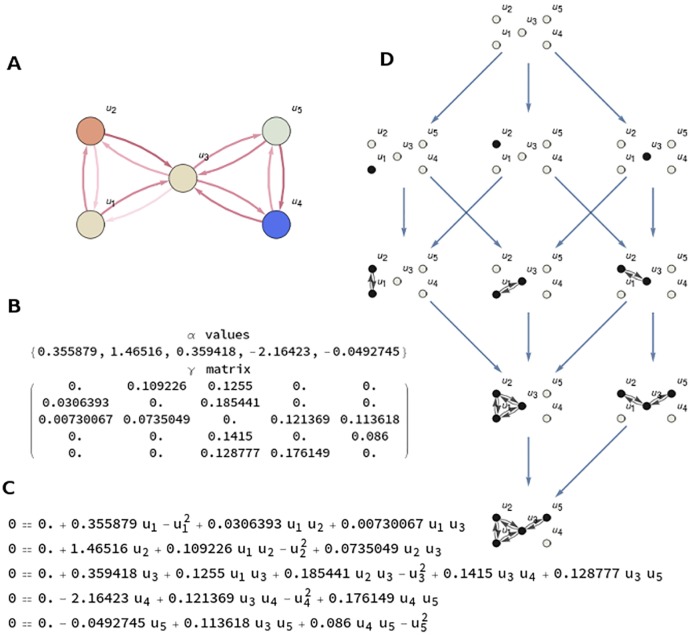

Fig 1.

A. A mechanism (graph) of two nodes where a system of two differential equations given by (7) is defined, one for each node, using the given values for the α and γ parameters. B. The Informational Structure (IS) is a new complex network made of four stationary points (equilibria) and directed links defined by global solutions. Each stationary point of the IS is a state associated to a subgraph of the original mechanism (non-null existing nodes are shown in black). The actual state of the mechanism corresponds to a state of the IS, highlighted in pink in the figure (the state where both u1 and u2 have a value greater than 0). C. (background) The associated directional field describing the tangent directions of trajectories inside the IS. The two straight lines (in orange and yellow) are the nullclines associated to the system, and they intersect at three stationary points (in addition to (0, 0)): one is a semitrivial stationary point on the X axis; the second is a semitrivial stationary point on the Y axis; the last one is the stationary point with two strictly positive values. All of the stationary points constitute the nodes of the IS. Each stationary point is hyperbolic and locally creates a field of directions towards it (stability) or away from it (instability). The informational field can be globally described by the sum of the stability and instability influences of each stationary solution passed when going from one node to another in the IS. D. Measuring the amount of information needed to link any pair of nodes allows us to define a Transition Probability Matrix (TPM) with the probability for each state of going to any other. States of the IS are denoted by the list of nodes having a value greater than 0. State (0) represents the node of the IS with both u1 and u2 equal to 0.

Materials and methods

Dynamics on graphs

Many real phenomena can be described by a set of key nodes and their associated connections, building a (generically) complex network. In this way, we can always construct a mapping between a real situation and an abstract graph describing its essential skeleton. An undirected graph is an ordered pair comprising a non-empty set V of vertices (or nodes) together with a set E of edges, each edge being a 2-element subset of V. The order of a graph is given by its number of nodes, and its size by its number of edges. A directed graph or digraph is a graph in which edges (named arcs) have orientations.

We want to study the behaviour on networks of systems of evolutionary differential equations such as

$$\frac{du}{dt} = F(u(t)), \tag{1}$$

where F is a nonlinear map from $\mathbb{R}^N$ to $\mathbb{R}^N$. For modeling purposes, we could also add, for instance, delays or stochastic terms, or make the solution u(t) also depend on a subset Ω of the three-dimensional space, i.e. u(t, x), for x ∈ Ω. Given an initial condition, we assume existence and uniqueness of solutions. If not, a multivalued approach could also be adopted.

The phase space X (in our case $\mathbb{R}^N$) represents the framework in which the dynamics described by a group of transformations S(t): X → X is developed. Given a phase space X we define a dynamical system on X as a family of non-linear operators {S(t): t ≥ 0}, with S(t): X → X, which describes the dynamics of each element u ∈ X. In particular, S(t)u0 = u(t;u0) is the solution of the differential Eq (1) at time t with initial condition u0.
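To make the operator S(t) concrete, the following minimal sketch uses a stand-in example that is not taken from the paper: the scalar logistic equation du/dt = u(1 − u), whose solution is known in closed form. The function name `flow` is hypothetical. The sketch only illustrates that S(t)u0 maps an initial condition to the state at time t and that S(t + s) = S(t)S(s).

```python
import math


def flow(t: float, u0: float) -> float:
    """S(t)u0 for the logistic equation du/dt = u * (1 - u).

    Stand-in example (assumption): valid for u0 >= 0 and t >= 0, where the
    closed-form solution is u(t) = u0 e^t / (1 - u0 + u0 e^t).
    """
    growth = math.exp(t)
    return u0 * growth / (1.0 - u0 + u0 * growth)


if __name__ == "__main__":
    u0 = 0.3
    # Semigroup property: evolving for s and then for t equals evolving for t + s.
    print(flow(3.0, u0), flow(1.0, flow(2.0, u0)))
    # Long-time behaviour: positive initial data approach the equilibrium u = 1.
    print(flow(50.0, u0))
```

For a system without a closed-form solution, `flow` would be replaced by a numerical integrator; the semigroup property then holds only up to discretization error.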

The global attractor is the central concept in dynamical system theory, since it describes all the future scenarios of a dynamical system. It is defined as follows [15–18, 28, 29]:

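A set $\mathcal{A} \subset X$ is a global attractor for {S(t): t ≥ 0} if it is

(i) compact,

(ii) invariant under {S(t): t ≥ 0}, i.e. $S(t)\mathcal{A} = \mathcal{A}$ for all t ≥ 0, and

(iii) attracts bounded subsets of X under {S(t): t ≥ 0} for the Hausdorff semidistance; that is, for all B ⊂ X bounded,

$$\lim_{t \to +\infty} \mathrm{dist}_H\big(S(t)B, \mathcal{A}\big) = 0.$$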
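The Hausdorff semidistance used in (iii) is not symmetric, which is what allows a bounded set to be attracted to a smaller set. A minimal sketch for finite point sets, assuming the usual definition dist_H(A, B) = sup over a in A of inf over b in B of |a − b| (the function name is hypothetical):

```python
import math
from typing import Iterable, Sequence

Point = Sequence[float]


def hausdorff_semidistance(a: Iterable[Point], b: Iterable[Point]) -> float:
    """Largest distance from a point of `a` to its nearest point in `b`."""
    b_points = list(b)
    return max(min(math.dist(x, y) for y in b_points) for x in a)


if __name__ == "__main__":
    small = [(0.0, 0.0)]
    large = [(0.0, 0.0), (3.0, 4.0)]
    # `small` is contained in `large`, so its semidistance to `large` vanishes,
    # while the reverse semidistance does not.
    print(hausdorff_semidistance(small, large))
    print(hausdorff_semidistance(large, small))
```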

Observe that (ii) expresses a crucial property of an attractor, as it describes a set with its own intrinsic dynamics. Moreover, (iii) indicates that this set determines all the future dynamics on the phase space X. We say that u* ∈ X is an equilibrium point (or stationary solution) for the semigroup S(t) if S(t)u* = u*, for all t ≥ 0. A stationary point is a trivial case of a global solution associated to S(t), i.e., a map ξ: ℝ → X such that ξ(t + s) = S(t)ξ(s) for all t ≥ 0, s ∈ ℝ. Stationary points are the minimal invariant objects inside a global attractor. Every invariant set is a subset of the global attractor [15]. Generically, connections among invariant sets in the attractor describe its structure [27, 30]. To this aim we need the following definitions, which also allow us to define the behaviour towards the past in a global attractor.

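The unstable set of an invariant set Ξ is defined by

$$W^u(\Xi) = \{u \in X : \text{there is a global solution } \xi:\mathbb{R}\to X \text{ with } \xi(0) = u \text{ and } \lim_{t\to-\infty}\mathrm{dist}(\xi(t), \Xi) = 0\}.$$

The stable set of an invariant set Ξ is defined by

$$W^s(\Xi) = \{u \in X : \lim_{t\to+\infty}\mathrm{dist}(S(t)u, \Xi) = 0\}.$$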

We have to think of a global attractor as a set which does not depend on initial conditions, with its own intrinsic dynamics, composed of a set of special solutions (global solutions) which connect particular invariants, thus generating a complex directed graph. Moreover, the global attractor has the following properties [17, 18]:

It is the maximal invariant set in the phase space.

It is the smallest closed attracting set.

It is made of bounded complete solutions, i.e., solutions that exist for all time and thus give information on the asymptotic past of the system.

Generically, its structure is described by invariant subsets and connecting global solutions among them [31, 32].

The Fundamental Theorem of Dynamical Systems

The Fundamental Theorem of Dynamical Systems [33] states that every dynamical system on a compact metric space X (the one defined on a global attractor, for instance) has a geometrical structure described by a (finite or countable) number (indexed by I) of sets {Ei}i∈I with an intrinsic recurrent dynamics and a gradient-like dynamics outside them. In other words, when we define a dynamical system on a graph, the attractor can always be described by a (finite or countable) number of invariants and the connections between them.

Gradient attractors

We say [15, 17, 31] that a semigroup {S(t): t ≥ 0} with a global attractor $\mathcal{A}$ and a disjoint family of isolated invariant sets E = {E1, ⋯, En} is a gradient semigroup with respect to E if there exists a continuous function $V: X \to \mathbb{R}$ such that

(i) the map $t \mapsto V(S(t)u)$, t ≥ 0, is non-increasing for each u ∈ X;

(ii) V is constant in Ei, for each 1 ≤ i ≤ n; and

(iii) V(S(t)u) = V(u) for all t ≥ 0 if and only if $u \in \bigcup_{i=1}^{n} E_i$.

In this case we call V a Lyapunov functional related to E. For gradient semigroups, the structure of the global attractor can be described as follows [15, 27]: Let {S(t): t ≥ 0} be a gradient semigroup with respect to the finite set E ≔ {E1, E2, ⋯, En}. If {S(t): t ≥ 0} has a global attractor $\mathcal{A}$, then $\mathcal{A}$ can be written as the union of the unstable manifolds related to each set in E, i.e.,

$$\mathcal{A} = \bigcup_{j=1}^{n} W^u(E_j). \tag{2}$$

When the Ej are equilibria $u_j^*$, the attractor is described as the union of the unstable manifolds associated to them:

$$\mathcal{A} = \bigcup_{j=1}^{n} W^u(u_j^*).$$

This description of a gradient system shows a geometrical picture of the global attractor, in which all the stationary points or isolated invariant sets (also called Morse sets, [31, 32]) are ordered by connections related to their level of attraction [34] or stability. They form a Morse decomposition of the global attractor [32, 33, 35–37].

When we refer to the global attractor for the dynamics on a graph, observe that each node given by a partially feasible equilibrium point in the attractor represents an attracting complex subgraph of the original one. Thus, the attractor can be understood as a new complex dynamical network describing all the possible feasible future networks [19, 20]. In particular, it contains all the information related to future scenarios of the model.

Energy levels

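Any Morse decomposition E = {E1, ⋯, En} of a compact invariant set leads to a partial order among the isolated invariant sets Ei; that is, we can define an order between two isolated invariant sets Ei and Ej if there is a chain of global solutions

$$\{\xi_\ell,\ 1 \le \ell \le r-1\} \tag{3}$$

with

$$\lim_{t\to-\infty}\mathrm{dist}(\xi_\ell(t), E_\ell) = 0 \quad\text{and}\quad \lim_{t\to+\infty}\mathrm{dist}(\xi_\ell(t), E_{\ell+1}) = 0,$$

1 ≤ ℓ ≤ r − 1, with E1 = Ei and Er = Ej.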

This implies that, given any dynamically gradient semigroup with respect to the disjoint family of isolated invariant sets E = {E1, ⋯, En}, there exists a partial order in E. In [34] (see also [38]) it is shown that there exists a Morse decomposition given by the so-called energy levels N = {N1, ⋯, Np}, p ≤ n. Each of the levels Ni, 1 ≤ i ≤ p, is made of a finite union of the isolated invariant sets in E, and N is totally ordered by the dynamics defined by (3). Indeed, the associated Lyapunov function has strictly decreasing values in any two different level-sets of N, and any two elements of E which are contained in the same element of N (same energy level) are not connected.
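One simple way to obtain a layering with these properties, given the invariant sets and the connections between them, is to place each set at the depth of the longest chain of connections reaching it. The sketch below is an illustration under that assumption, not the construction used in [34]; the function name and the string labels for the states are hypothetical.

```python
from typing import Dict, Hashable, Iterable, List, Set, Tuple


def energy_levels(
    nodes: Iterable[Hashable], arcs: Iterable[Tuple[Hashable, Hashable]]
) -> List[Set[Hashable]]:
    """Group the nodes of an acyclic directed graph into ordered levels.

    A node's level is the length of the longest chain of arcs ending at it,
    so two nodes joined by an arc never share a level. Levels are returned
    from the sources (highest energy) down to the sinks.
    """
    node_list = list(nodes)
    preds: Dict[Hashable, Set[Hashable]] = {n: set() for n in node_list}
    for src, dst in arcs:
        preds[dst].add(src)

    depth: Dict[Hashable, int] = {}

    def longest(n: Hashable) -> int:
        if n not in depth:
            depth[n] = 1 + max((longest(p) for p in preds[n]), default=-1)
        return depth[n]

    levels: Dict[int, Set[Hashable]] = {}
    for n in node_list:
        levels.setdefault(longest(n), set()).add(n)
    return [levels[k] for k in sorted(levels)]


if __name__ == "__main__":
    # Four equilibria of a two-node mechanism, labelled by their active nodes.
    states = ["0", "1", "2", "12"]
    links = [("0", "1"), ("0", "2"), ("1", "12"), ("2", "12"), ("0", "12")]
    print(energy_levels(states, links))
```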

Attracting invariant networks

The existence of a global attractor implies that when we look at the evolution in time of each ui(t) we can see the “picture” in asymptotic time drawn by all . Thus, this evolution is engaged with an invariant attracting network in which

i) Each node represents a stationary solution (or, generically, a minimal invariant subset of the global attractor), which can be described as a binary vector representing the activation or inactivation of nodes, i.e., its points constitute a subgraph of the former graph representing the modelled system.

ii) There exist oriented connections between the above subgraphs, so leading to a directed graph.

iii) Each subgraph has totally determined stability properties; i.e., it is known not only which other nodes are connected to it or to which it is connected, but also, for instance, the velocity or the tendency for the attraction.

iv) The whole invariant structure determines the long time behaviour of all solutions of the system.

In summary, behind a graph with dynamics (a mechanism in our conception) there exists a new complex network of connected subgraphs, governing all possible scenarios of the system.

Informational structures: A formal definition

The starting point in our approach is a system of connected elements where a dynamics is defined. This system is called a mechanism. The composition and exclusion postulates in IIT 3.0 allow one to consider mechanisms given by any subset of the system. Here, we focus only on the mechanism given by the whole system. A global attracting network can be characterized by the amount of information it provides to the mechanism, since the nature of a global attractor is essentially informational. Indeed, the informational nature of attractors is based on the following assertions:

They live in the phase space X, an abstract formulation for the description of the flow.

Their existence is not experimentally established, i.e., they are not necessarily related to physical experiments. They exist, associated and grounded to a particular mechanism, as a (small) compendium of “selected solutions”, which forms a complex structure and, moreover, determines the behaviour of all other solutions.

The state of a mechanism is given by the state of its nodes. If those nodes take real values, the state is given by a vector of real numbers. When a dynamical system is defined for a mechanism in a state, it has cause-effect power, meaning that it conditions the possible past and future states of the mechanism. We can find the cause-effect power of a mechanism in a state by looking at its (local) attracting invariant sets. Typically, these are stationary points or periodic orbits [32, 39–41], but they could also include invariant sets with chaotic dynamics [42–44]. Following IIT, we will measure the amount of information in the structure made of these invariants, in the sense of its power to restrict the past and the future states. In IIT language, this is intrinsic information: differences (state of a mechanism) that make a difference (restricted set of possible past and future states) within a system (see the IIT glossary in [21]).

Now we can define an Informational Structure (IS). Suppose we have a complex graph given by N nodes and links among them, and denote by Gi each of its subgraphs. An informational structure is a complex graph whose nodes are subgraphs Gi of the original graph, together with links among them, with the following properties:

Links between nodes are directed by their dynamics.

Each link has a defined weight.

Each link has a dynamical behaviour given by a model of differential equations.

Dynamics are defined by stability of nodes.

An IS (see Fig 2) becomes a natural emerging object (of informational nature) from a given mechanism in a state with intrinsic cause-effect power to the past and the future. Moreover, some properties of ISs can be given:

ISs exist and are composed of a set of simple elements. They have cause-effect power, producing intrinsic information within the mechanism.

At any instant, an IS determines the whole subset of past and future states.

The quantity of information can be measured, for any given time, by the size of the IS at time t. The quality of the information is related to the shape of the IS.

Integration can be measured by partitions of the global graph.

Fig 2.

A. Mechanism with five nodes u1, ⋯, u5 representing five interacting components, represented as a graph. B. α values and γ values (position (i, j) in the matrix represents the influence of node ui over uj) are the parameters defining the dynamics of the system. C. Nonlinear system of five equations used to calculate the stationary points of the dynamical system. D. Informational Structure (IS) associated to the mechanism. Observe that the IS is a new complex network made of directed links, related to the dynamics, between subgraphs Gi of the original one. Each node in the IS contains a stationary point of the dynamical system. Nodes of each Gi with a value greater than 0 are shown in black. Grey nodes have value 0. Arrows in the IS relate to the cause-effect power of each state, going from one state to another. The relation induced by the arcs is transitive, but only the minimal arcs needed to understand the dynamics of the IS are represented.

A global model

Lotka-Volterra models have been used to generate reproducible transient sequences in neural circuits [45–49]. In our case, for a general model for N nodes, we define a system of N differential equations given by:

| (4) |

where the matrix $\Gamma = (\gamma_{ij}) \in \mathbb{R}^{N\times N}$ is referred to as the interaction matrix. In matrix formulation, (4) reads as

| (5) |

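with $u = (u_1, \dots, u_N)^\top$ and $\alpha = (\alpha_1, \dots, \alpha_N)^\top$. Given initial data for (5), sufficient conditions for the existence and uniqueness of global solutions are well known (see, for instance, [50, 51]). The phase space for (5) is the positive orthant

$$\mathbb{R}^N_+ = \{u \in \mathbb{R}^N : u_i \ge 0,\ i = 1, \dots, N\}.$$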

This set of equations will define a dynamics on a structural graph with N nodes, taking one equation for the description of the dynamics on each of the nodes. We model the dynamics on a given mechanism from Lotka-Volterra systems since they lead to a non-linear and nontrivial class of examples where the characterization of ISs and their dependence on parameters is, to some extent, very well understood [50, 52]. Indeed, we can find, under some conditions on the parameters, that there exists a finite number of equilibria (then, with trivial recurrent behaviour) and directed connections between them, generating a hierarchical organization by level sets of equilibria ordered by connections in a gradient-like fashion [19, 20]. With respect to IIT, each IS is associated to a mechanism in a state and gives it intrinsic information.
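The finite set of equilibria can be enumerated directly: for each subset of active nodes, the remaining nodes are set to zero and a linear system is solved for the active ones. The sketch below assumes the generic Lotka-Volterra form du_i/dt = u_i(α_i − Σ_j γ_ij u_j), whose sign convention may differ from the one in (4), and uses hypothetical parameter values chosen so that the two-node example yields the equilibria (0, 0), (1, 0), (0, 1) and (2.5, 3) quoted in the caption of Fig 3. All function names are illustrative.

```python
from itertools import combinations
from typing import List, Optional, Sequence, Tuple


def solve_linear(a: List[List[float]], b: List[float]) -> Optional[List[float]]:
    """Solve a x = b by Gauss-Jordan elimination; return None if singular."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            return None
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def feasible_equilibria(
    alpha: Sequence[float], gamma: Sequence[Sequence[float]]
) -> List[Tuple[float, ...]]:
    """Non-negative equilibria of du_i/dt = u_i (alpha_i - sum_j gamma_ij u_j).

    Assumed model form. For each candidate set of active nodes, the active
    components must solve the restricted linear system and be strictly positive.
    """
    n = len(alpha)
    found = []
    for size in range(n + 1):
        for support in combinations(range(n), size):
            sub = [[gamma[i][j] for j in support] for i in support]
            rhs = [alpha[i] for i in support]
            sol = solve_linear(sub, rhs) if support else []
            if sol is None or any(x <= 1e-12 for x in sol):
                continue
            u = [0.0] * n
            for i, x in zip(support, sol):
                u[i] = x
            found.append(tuple(u))
    return found


if __name__ == "__main__":
    # Hypothetical parameters, not read from the paper's figures.
    print(feasible_equilibria([1.0, 1.0], [[1.0, -0.5], [-0.8, 1.0]]))
```

The enumeration visits 2^N candidate supports, so it is only practical for small mechanisms.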

As noted, we also want each Informational Structure to flow in time. Thus, we can consider the system of differential equations driven by time dependent sources α(t) (and/or γij(t)), given by:

| (6) |

Informational fields

Note that an IS is not only a complex network of possible configurations of a graph. It is also well organized by its connections, which show the intrinsic dynamics within the IS and, acting as a global attractor, it determines the behaviour of every particular realization in the system. In this sense, it is not only that every point in the IS possesses an amount of information, but every point of the phase space is enriched by the information given by the IS. Indeed, every state of the mechanism is determined by the Informational Field (IF) associated to the IS. See Fig 3 for an illustration of IFs. In this way, the IS can be seen just as the skeleton of a real body organized as a global vector field describing all the possible flows of information in the system. This is why, in relation to integrated information, the object to be studied is the IS together with its associated dynamics (IF). Actually, it is the IF that is really responsible for the cause-effect power of an IS.

Fig 3. Informational fields.

Informational structures are the skeleton of informational fields, the ones with the power of causality to the past and the future in the IS. For a mechanism of two nodes as in Fig 1 there exist different possible ISs. We plot four of them, related to the existence of one, three or four equilibria for the equations in (7), which depends on the values of the α and γ parameters. Observe that the IF produces a curvature of the XY-phase space, and governs the dynamics of all the states in the IF. Top left. Informational field for the informational structure in Fig 1, the one for which the four equilibria exist. Note that (0, 0) is unstable, (0, 1) and (1, 0) are saddle equilibria, and (2.5, 3) is a globally stable stationary solution. These stability and instability properties of each node, characterised by their eigenvalues and eigenvectors, define the curvature of the two-dimensional plane drawn by the IF. Top right. IF for the IS with three equilibria, (0, 0) and the semitrivial equilibria (0, 1) on the Y-axis and (1, 0) on the X-axis, with the globally stable stationary solution on the Y-axis. Bottom left. IF for the IS with three equilibria, (0, 0) and the semitrivial equilibria (0, 1) on the Y-axis and (1, 0) on the X-axis, with the globally stable stationary solution on the X-axis. Bottom right. IF where (0, 0) is the only stationary solution, which is globally stable, producing a global slope towards this stationary point.

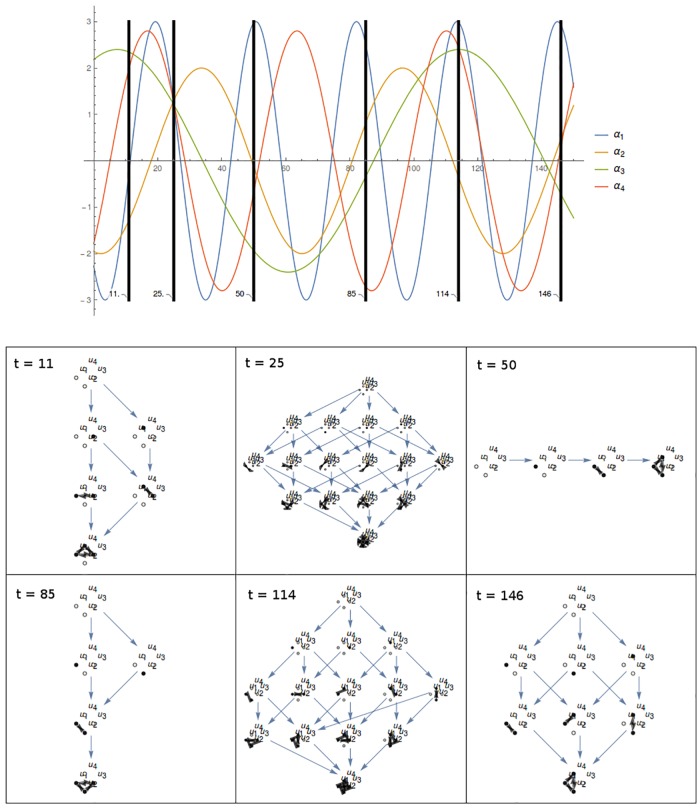

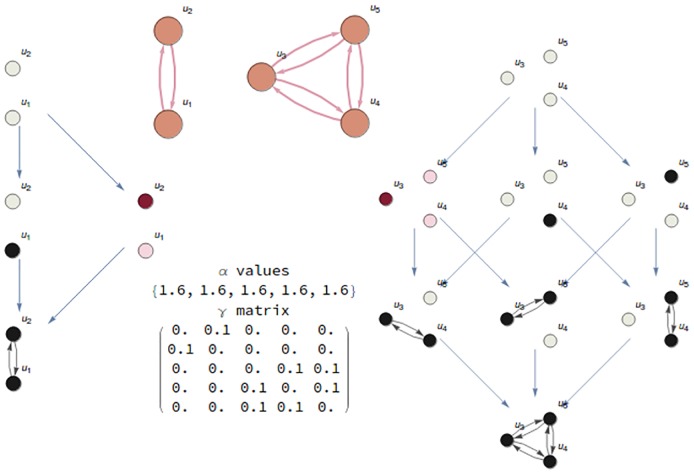

Dynamical informational structures

It seems clear that brain dynamics does not behave by converging to or stabilizing around a fixed set of invariants; rather, it can be described as a continuous flow of quick and irregular oscillations [53, 54]. We deal with this situation by introducing dynamic informational structures (DIS), i.e., a continuous time map on informational structures so that their influence can be realized at each time value (Fig 4). We can make the parameters in (4) depend on time, so that for each time we have an associated Informational Structure . Thus, we can define Dynamical Informational Structures (DIS) as a continuous flow

which gives a dynamical behaviour on the complex network of connected subgraphs given by the ISs at each time t. is the set of subsets of , where is the set of all stationary solutions. Note that induces a continuous movement of structures. The determination of the critical values at which topological and/or geometrical changes occur is crucial, and is related to bifurcation phenomena of attractors [55, 56].
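How a time-dependent parameter changes the structure can be seen in a two-node example by tracking which equilibria remain non-negative as α1 varies. The sketch below again assumes the generic form du_i/dt = u_i(α_i − Σ_j γ_ij u_j) with hypothetical γ values and a hypothetical linear source `alpha_at`; it only lists the sets of active nodes of the feasible equilibria, not the connections between them.

```python
from typing import FrozenSet, List, Sequence, Tuple

# Hypothetical interaction matrix with non-zero diagonal (assumption).
GAMMA = ((1.0, -0.5), (-0.8, 1.0))


def supports(
    alpha: Tuple[float, float], gamma: Sequence[Sequence[float]] = GAMMA
) -> List[FrozenSet[int]]:
    """Active-node sets of the non-negative equilibria of a two-node system."""
    (a1, a2), ((g11, g12), (g21, g22)) = alpha, gamma
    found = [frozenset()]  # the origin is always an equilibrium
    if a1 / g11 > 0:
        found.append(frozenset({1}))  # semitrivial equilibrium on the first axis
    if a2 / g22 > 0:
        found.append(frozenset({2}))  # semitrivial equilibrium on the second axis
    det = g11 * g22 - g12 * g21
    if det != 0:
        # Cramer's rule for the equilibrium with both nodes active.
        u1 = (a1 * g22 - g12 * a2) / det
        u2 = (g11 * a2 - g21 * a1) / det
        if u1 > 0 and u2 > 0:
            found.append(frozenset({1, 2}))
    return found


def alpha_at(t: float) -> Tuple[float, float]:
    """Hypothetical source: alpha_1 decreases linearly from 1 to -1 on [0, 1]."""
    return (1.0 - 2.0 * t, 1.0)


if __name__ == "__main__":
    for t in (0.0, 0.7, 1.0):
        print(t, [sorted(s) for s in supports(alpha_at(t))])
```

In this example the number of states drops from four to three to two as α1 decreases, which is the kind of structural change the critical values are meant to locate.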

Fig 4. Dynamic informational structures.

Changes in the parameters governing the dynamics of a mechanism produce changes in the corresponding informational structures. Actually, these changes can be proved to be continuous with respect to time when, for instance, they are associated to the global model of differential equations in (4). Top. Example for the evolution in time of αi parameters of a system of four nodes; γ parameters are fixed. Black bars select six different time points. Bottom. For a given mechanism, different ISs corresponding to the time steps shown above.

The reader wishing to retrace our formulation will find all the code developed for the implementation of the results and the generation of the technical figures herein at the Open Science Framework (https://osf.io/5tajz/).

Results

Integrated information: A continuous approach

In this section we define a preliminary version of IIT postulates: existence, composition, information, integration and exclusion. We do not try to mimic or generalize the concepts of IIT 3.0 (see Section Discussion), where they appear in a more elaborated fashion, allowing finer computations and insights. For example, IIT considers the integrated information of each subset of a mechanism (candidate set), while we always focus on the whole mechanism.

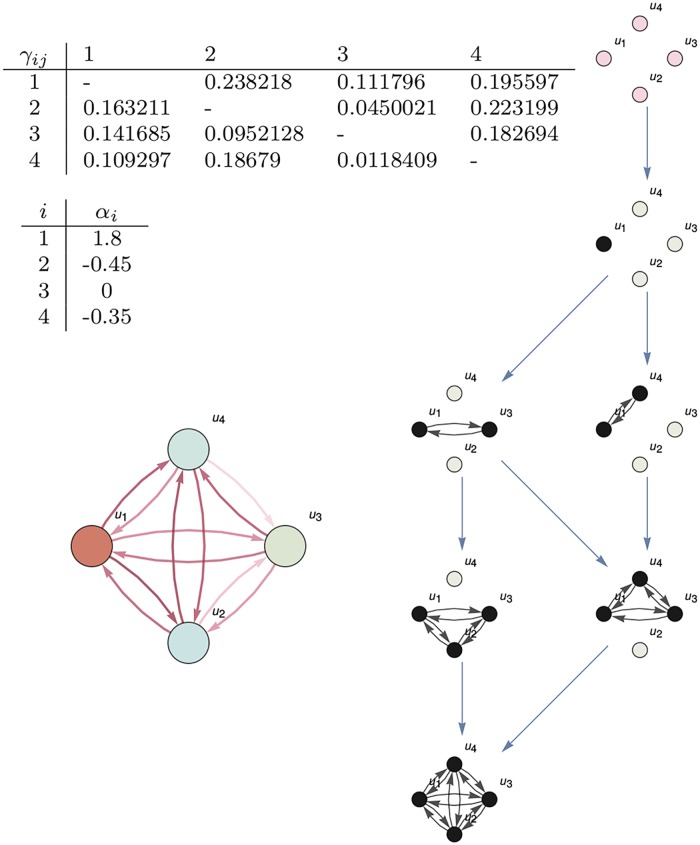

Existence: Mechanisms in a state

We consider mechanisms like in Fig 5. The mechanism contains a set of nodes with links representing the influences between them. On nodes we define a dynamical system driven by the set of differential equations (a particular case of (4)):

| (7) |

for each node ui from 1 to N (in Fig 5, N = 4). Values αi are relative to each node. Values γij represent the influence of node ui over uj.

Fig 5. Existence.

Left: The mechanism is given by a set of nodes (in the figure, u1, …, u4) and the α and γ parameters. Parameters αi affect only the dynamics of the node ui. Parameters γij model the influence of ui over uj. Right: The dynamics given by (7) on the mechanism generates an informational structure whose nodes are the non-negative solutions of the corresponding system of differential equations. This structure controls the behaviour of the system (all possible states of the mechanism). There exists a hierarchy (energy levels, represented by horizontal rows) between the points in the informational structure, with just one globally stable point and arcs between them, representing solutions going from one point to another. There is an arc from one stationary solution to another when the set of non-zero values in the first is a subset of the non-zero values in the second. For clarity, we have removed arcs going from each node to itself and all arcs that can be obtained by transitive closure of those already represented.

The dynamics of system (7) generates an attractor, which is a structured set of stationary points (or equilibria) for the system, for which there exists a globally stable stationary point (represented at the right part of Fig 5).

Colours in the nodes of Fig 5 (bottom left) go from dark blue (−3) to red (3) and represent αi values. Fig 5 (right) shows the whole attractor corresponding to the system on the left with the given α and γ parameters. We call this attractor the informational structure (IS) for the mechanism. Note that the same mechanism with different αi and γij values generates different ISs. Nodes in the IS correspond to distinguished states of the mechanism. We call them states of the IS. Each of these states is a subgraph of the mechanism in Fig 5 (bottom left) corresponding to a non-negative solution of (7). Black points represent non-zero values in the stationary solutions (i.e., ui > 0 in the equilibrium) and grey points are zero values (ui = 0). Each state of the IS governs the behaviour of a family of states of the mechanism, those with the same positive nodes. That is, the cause-effect power of each node of the IS determines the cause-effect power of the mechanism in a state represented by that node.
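The arc rule stated in the caption of Fig 5 (an arc from one state to another when the active nodes of the first are a subset of those of the second, with self-loops and transitively implied arcs removed) can be sketched as follows. States are represented by the sets of their positive nodes; the function name is hypothetical, and the sketch applies only the subset rule, without checking the underlying dynamics.

```python
from itertools import permutations
from typing import FrozenSet, Iterable, Set, Tuple

State = FrozenSet[int]


def is_arcs(states: Iterable[State]) -> Set[Tuple[State, State]]:
    """Minimal arcs of the subset relation between IS states.

    An arc a -> b is kept when a is a proper subset of b and no third state c
    of the structure satisfies a < c < b (so the arc is not implied by
    transitivity).
    """
    state_list = list(states)
    arcs = {(a, b) for a, b in permutations(state_list, 2) if a < b}
    return {
        (a, b)
        for a, b in arcs
        if not any((a, c) in arcs and (c, b) in arcs for c in state_list)
    }


if __name__ == "__main__":
    two_node_states = [frozenset(), frozenset({1}), frozenset({2}), frozenset({1, 2})]
    for src, dst in sorted(is_arcs(two_node_states), key=lambda e: (sorted(e[0]), sorted(e[1]))):
        print(sorted(src), "->", sorted(dst))
```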

Cause-effect power of a mechanism in a state

The cause-effect power of nodes (i.e., stationary points or, equivalently, states) in an informational structure is represented by defining transition probability matrices (TPMs). Given that the attractor captures the behaviour of all solutions looking to the future (t → +∞) and to the past (t → −∞), for each state we introduce two matrices, one with the transitions to the future and the other with the transitions to the past. The procedure is analogous for both distributions. Observe that we thereby approach both the informational structure and the informational field through probability distributions.

We know that local dynamics on each node (stationary solution) in the IS can be described by the associated eigenvalues and eigenvectors when we linearize (7) on nodes [57]. Indeed, given by

the associated N × N Jacobian matrix is given by , with

On each node (state of the IS) u*, we calculate the eigenvalues λi and the associated eigenvectors wi, normalised to one, of the matrix J(u*). The exponential of the negative eigenvalues gives the strength of attraction towards the node. The exponential of the positive eigenvalues gives the strength of repulsion from the node, and the repulsion directions are given by the associated eigenvectors of this node. To take into account the influence of the wi, we also take vi = abs(wi), the absolute value of wi. Thus, if u* is a saddle node with associated eigenvalues λi = λi(u*) > 0, we calculate the N-dimensional vector

| (8) |

and we define the repulsion rate from u* as

| (9) |

In the same way, if u* is a saddle node with eigenvalues λi = λi(u*) < 0,

| (10) |

and so the attraction rate to u* is given by the exponential of the sum of the N-components of vector Att(u*), i.e.

| (11) |

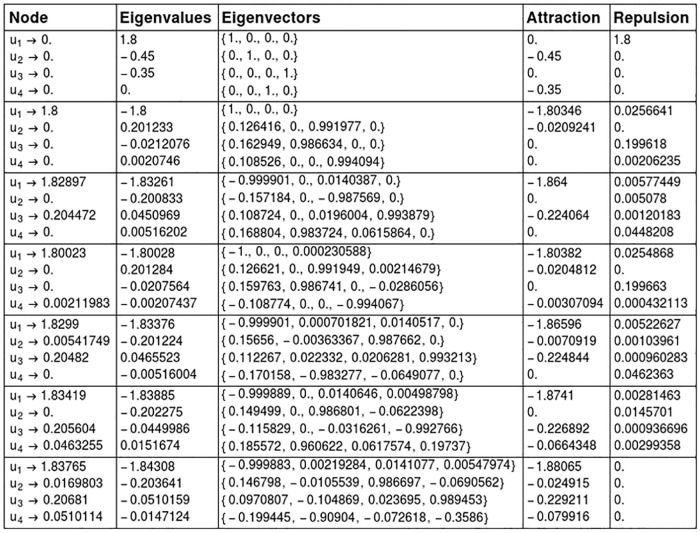

Heuristically, this functional on positive eigenvalues reflects the exponential divergence of trajectories in the unstable manifolds being pushed out from a neighbourhood of its associated node; the functional on negative eigenvalues of a node describes the exponential convergence of trajectories in the stable manifold being attracted to the node. Thereby, we reflect the degree to which the saddle fixed points (nodes) locally attract or repel trajectories to create a chain with the other nodes in the global attractor. Fig 6 shows an example of this calculation for the system in Fig 5.

Fig 6. Calculation of the eigenvalues and eigenvectors associated to each stationary solution for the system in Fig 5.

Rows correspond to the nodes (states) of the IS. States are denoted by the list of components with a value greater than 0. Thus the second state, (1.8, 0, 0, 0), is denoted by (u1). State (0, 0, 0, 0) is abbreviated as (0). Attraction is calculated from the negative eigenvalues by (10), giving an N-dimensional vector (here N = 4). Repulsion is calculated from the positive eigenvalues by (8), again giving an N-dimensional vector.
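The calculation summarised in Fig 6 can be sketched numerically. The following is a minimal illustration, not the authors' code: it assumes that the vectors in (8) and (10) are the sums of λi vi over the positive and the negative eigenvalues respectively, and that the rates in (9) and (11) are the exponentials of the sum of the components of those vectors. The function name `attraction_repulsion_rates` and the example Jacobian are hypothetical.

```python
import numpy as np


def attraction_repulsion_rates(jacobian):
    """Return (repulsion_rate, attraction_rate) for one stationary point.

    Assumed forms (the displayed equations are not reproduced in this sketch):
      Rep = sum over positive eigenvalues of lambda_i * abs(w_i)
      Att = sum over negative eigenvalues of lambda_i * abs(w_i)
    Each rate is exp(sum of the N components of the vector).
    """
    eigvals, eigvecs = np.linalg.eig(np.asarray(jacobian, dtype=float))
    eigvals = np.real(eigvals)   # sketch only: imaginary parts are ignored
    v = np.abs(eigvecs)          # v_i = abs(w_i); columns are unit-norm eigenvectors

    positive = eigvals > 0
    negative = eigvals < 0
    rep = v[:, positive] @ eigvals[positive]   # N-dimensional repulsion vector
    att = v[:, negative] @ eigvals[negative]   # N-dimensional attraction vector

    # With no positive (or no negative) eigenvalues the vector is zero,
    # so this sketch returns a rate of exp(0) = 1 in that case.
    return float(np.exp(rep.sum())), float(np.exp(att.sum()))


# Hypothetical Jacobian of a saddle: one unstable and one stable direction.
example_jacobian = np.diag([1.0, -2.0])
repulsion_rate, attraction_rate = attraction_repulsion_rates(example_jacobian)
```

The Jacobian is passed in directly so that the sketch does not depend on the particular right-hand side of (7).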

Our aim is to give a mathematical measure of the connections between IS-nodes (i.e., between vertices in the IS). We go through the informational field, which is what actually causes the deformation of the phase space leading to these connections. Note that we consider only the strengths that directly push to create a link between nodes, given by the repulsion rates around all the intermediate nodes and the attraction rate to the final node. This is not the only option we could have considered, as we could also have taken into account the attraction rates between intermediate nodes; this would not produce any relevant difference in our treatment. Specifically, if we start at an IS-node u*i which is connected in the future to u*j, with i < j, we may pass through the local dynamical action of all intermediate nodes between them. In this way, to measure the strength of the connection to the future (Sfut) from u*i to u*j, we sum the repulsion action of u*i given by (9) and the (exponential) repulsion from all intermediate nodes. Finally, we add the (exponential) attraction from u*j given by (11). Thus, the strength of the connection (to the future) from u*i to u*j can be written as

where the constant c is a correction term for the difference between the dimension of the unstable manifold of u*i and that of the stable manifold of u*j, and is given by

To measure connections in the past we follow a similar argument, changing the signs of the attraction and repulsion values: if we start at an IS-node u*j which is connected in the past to u*i, with j > i, we pass through the local dynamical action of all intermediate IS-nodes between them. In this way, to measure the strength of the connection (to the past) from u*j to u*i once we have changed signs, we sum the (exponential) attraction of u*j, the (exponential) attraction from all intermediate nodes and, finally, the (exponential) repulsion dynamics from u*i.

Normalizing these values so that their sum is 1 translates them naturally into probability distributions associated to each state, one for the past and another for the future. Thus, to build the TPM for the future, denoted TPMfut with entries TPMfut(i, j), we set

with .

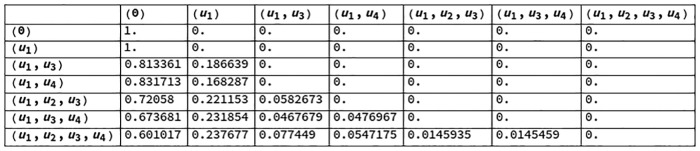

Figs 7 and 8 contain the transition probability matrices for the informational structure of Fig 5 (right) looking, respectively, at the future and at the past. States (nodes) in the informational structure are represented (with parentheses as notation) by the list of elements (nodes of the mechanism, Fig 5 left) with a value greater than 0. The state with no elements greater than 0 is represented by (0). Recall that a stationary solution u*j can be reached from u*i if and only if u*i ⊆ u*j, in the sense that solutions are represented by the set of nodes with values greater than 0.
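The normalization step and the reachability rule just recalled can be sketched as follows. This is only an illustration under stated assumptions: the connection strengths are taken as given (they are not computed here), normalization is done row by row, and the function name `future_tpm` and the numerical values in the example are hypothetical.

```python
def future_tpm(states, strength):
    """Row-normalise connection strengths into a transition probability matrix.

    states   : list of frozensets, each the set of mechanism nodes with value > 0
    strength : strength[i][j] >= 0, strength of the connection from state i to j
    A state j is treated as reachable from state i only if states[i] is a
    subset of states[j]; all other entries are set to 0 before normalising.
    """
    tpm = []
    for i, source in enumerate(states):
        row = [strength[i][j] if source <= target else 0.0
               for j, target in enumerate(states)]
        total = sum(row)
        # Leave an all-zero row untouched to avoid dividing by zero.
        tpm.append([x / total for x in row] if total > 0 else row)
    return tpm


# Hypothetical three-state structure: (0), (u1) and (u1, u2).
example_states = [frozenset(), frozenset({"u1"}), frozenset({"u1", "u2"})]
example_strength = [[1.0, 1.0, 1.0],
                    [1.0, 1.0, 1.0],
                    [1.0, 1.0, 1.0]]
example_tpm = future_tpm(example_states, example_strength)
```

A matrix for the past could be obtained in the same way by reversing the subset test, again as an assumption of this sketch.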

Fig 7. Transition probability matrix for the future of the IS in Fig 5.

Fig 8. Transition probability matrix for the past of the IS in Fig 5.

Information

Inspired by IIT, the level of information of a mechanism in a state is compared both for the past (cause information) and the future (effect information).

In order to introduce the formal definitions, consider an informational structure IS with N nodes . Also,

is the number of points in the informational structure accessible from to the future (supersets of ) and the number of accessible points from to the past (subsets of ). Note that each node in the IS determines a subset of it.

Cause information

Cause information measures the difference between the probability distributions to the past obtained by considering the knowledge of just the structure (unconstrained past, pup) and of the structure in the current state (cause repertoire, pcr). The probability distribution for the unconstrained past, pup, is obtained by considering that any node can be the actual one with the same probability. Formally,

| (12) |

where cause repertoire is directly defined by pp in the TPM (past)

| (13) |

and

Cause information is the distance between both probability distributions. The earth mover’s distance (EMD, or Wasserstein metric) [58] is used, so that

| (14) |

Effect information

Effect information is computed analogously to cause information. Formally,

| (15) |

| (16) |

| (17) |

Cause-effect information

Finally, the cause-effect information of the informational structure IS is the minimum of cause information and effect information,

| (18) |

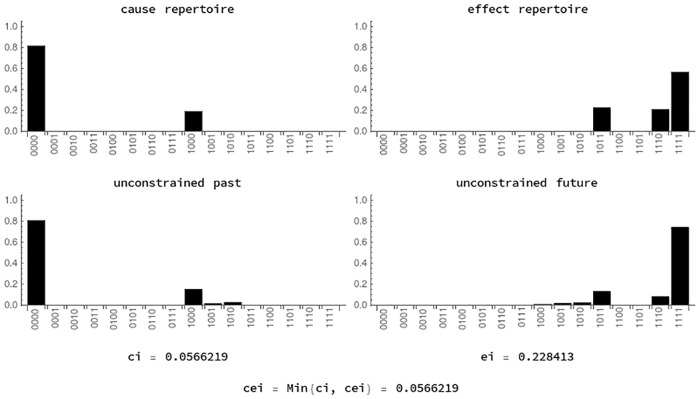

Fig 9 shows the distributions involved in the calculation of cause-effect information in state (u1, u3) of the informational structure in Fig 5 (that is, the state in which only u1 and u3 have a value greater than 0).

Fig 9. Information in state (u1, u3).

Cause and effect probabilities of both the structure (unconstrained repertoire given by (12)) and dynamics (cause repertoire from (13)) are compared by using EMD. Cause-effect information (cei) is the minimum of cause information (ci) and effect information (ei).
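The comparison of repertoires by EMD can be sketched as a small transport problem. The block below is an illustrative sketch, not the computation used for Fig 9: it assumes a Hamming distance between states (the number of mechanism nodes whose sign differs) as the ground metric, which the text does not specify, and it solves the EMD with `scipy.optimize.linprog`. The function names and all numerical distributions are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog


def hamming_ground(states):
    """Assumed ground metric: number of nodes that are positive in only one state."""
    return [[len(a ^ b) for b in states] for a in states]


def emd(p, q, ground):
    """Earth mover's distance between distributions p and q over n states.

    ground[i][j] is the cost of moving probability mass from state i to state j.
    The flow f[i, j] is stored at position i * n + j of the unknown vector.
    """
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    cost = np.asarray(ground, dtype=float).ravel()
    n = len(p)
    a_eq = np.zeros((2 * n, n * n))
    for i in range(n):
        a_eq[i, i * n:(i + 1) * n] = 1.0   # mass leaving state i equals p[i]
        a_eq[n + i, i::n] = 1.0            # mass arriving at state i equals q[i]
    result = linprog(cost, A_eq=a_eq, b_eq=np.concatenate([p, q]),
                     bounds=(0, None), method="highs")
    return float(result.fun)


def cause_effect_information(pcr, pup, per, puf, ground):
    """cei = min(ci, ei), with ci = EMD(pcr, pup) and ei = EMD(per, puf)."""
    ci = emd(pcr, pup, ground)
    ei = emd(per, puf, ground)
    return min(ci, ei)


# Hypothetical three-state example: (0), (u1) and (u1, u3).
example_states = [frozenset(), frozenset({"u1"}), frozenset({"u1", "u3"})]
example_ground = hamming_ground(example_states)
example_cei = cause_effect_information(
    pcr=[0.5, 0.5, 0.0], pup=[0.0, 0.5, 0.5],
    per=[0.0, 0.0, 1.0], puf=[0.0, 0.5, 0.5],
    ground=example_ground,
)
```

Passing the ground metric as an argument keeps the sketch usable with any other distance between states.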

Integration

Information is said to be integrated when it cannot be obtained by looking at the parts of the system but only at the system as a whole. We can measure the integration of an informational structure by trying to reconstruct it from all possible partitions.

As we are considering mechanisms with a dynamical behaviour, when a partition of a mechanism with nodes {u1, …, uN} is considered, the IS is computed by removing from the dynamics of each ui the nodes outside its own part. Partitions are computed for a mechanism at a given state u*, so in the equation for ui, any node uj in the other part is given the constant value u*j that it takes in that state. So, the IS for the partition is not computed by using (7) but

| (19) |

The informational structure of the partition contains the set of stable points of (19) together with the transition probability matrices for them, as defined above.

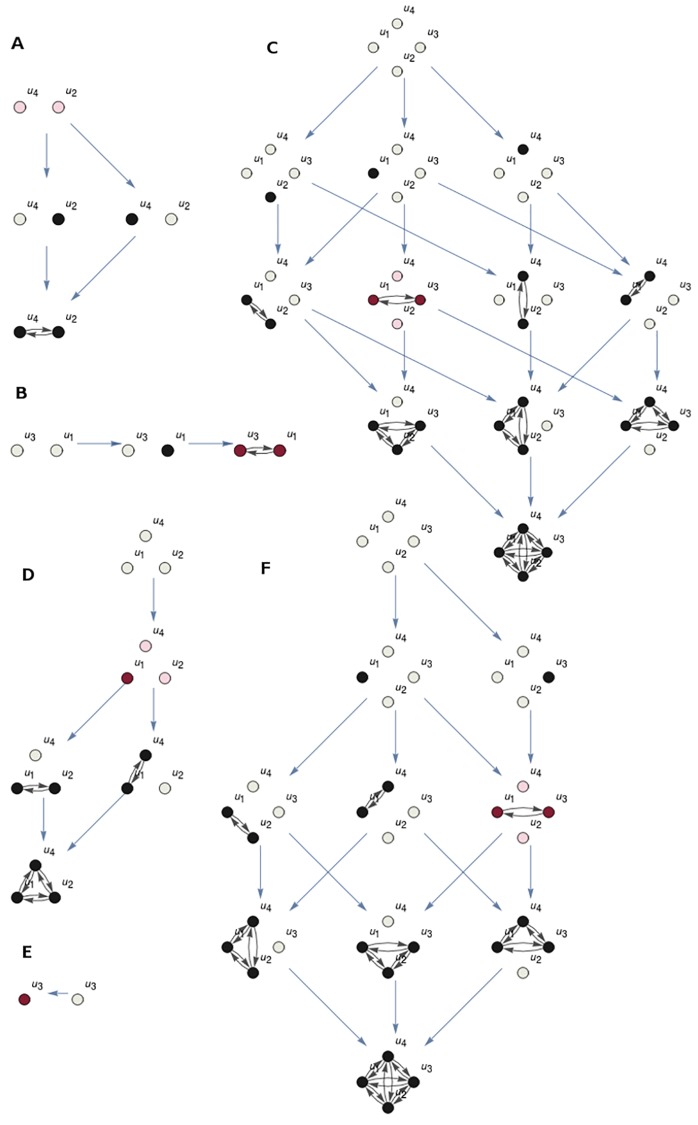

To measure integration, all partitions of the mechanism into two non-empty parts, P and its complement, are considered. Cause and effect repertoires are calculated for all of them, following (12) and (15). The partition with the cause repertoire closest to the cause repertoire of the IS is MIPcause, the minimum information partition with respect to the cause. The partition with the effect repertoire closest to that of the IS is MIPeffect. Fig 10 shows the minimum information partitions for the informational structure of Fig 5. The MIPcause is {u1, u3}/{u2, u4} (top) and the MIPeffect is {u1, u2, u4}/{u3} (bottom).

Fig 10. Minimum information partition (MIP) for state (u1, u3).

(A-C): Partition {u1, u3}/{u2, u4} of the system in Fig 5 with the cause repertoire closest to that of the complete system for state (u1, u3) (Fig 9). The ISs in A and B correspond to the submechanisms {u1, u3} and {u2, u4}, respectively. Nodes in the IS C are those in the Cartesian product of A and B. States of the ISs are highlighted in pink; observe that only u1 and u3 have values greater than 0 in the state of each IS, as the partition is for state (u1, u3). (D-F): Partition {u1, u2, u4}/{u3} with the effect repertoire closest to that of the whole system for state (u1, u3).

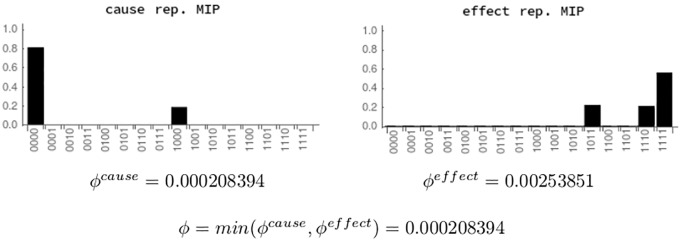

Finally, integration ϕ is given by the minimum of ϕcause and ϕeffect, where ϕcause is the distance between the cause repertoires of IS and MIPcause, and ϕeffect is the distance between the effect repertoires of IS and MIPeffect. Both distances are calculated using EMD. Fig 11 shows the calculation of ϕ for the system of Fig 5 in state (u1, u3).

Fig 11. Calculation of ϕ for state (u1, u3).

Cause and effect repertoires of the MIP (partitions for past and future) are compared to the repertoires of the informational structure of the complete system. The integration of the system is given by ϕ, the minimum of ϕcause and ϕeffect. Left: The cause repertoire of the MIPcause (Fig 10, top) at state (u1, u3). It is compared by using EMD with the cause repertoire of the whole system (Fig 9, top left), giving ϕcause = 0.000208394. Right: ϕeffect is calculated in an analogous way.
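The search over partitions described in this section can be sketched as follows. This is a minimal illustration under stated assumptions: the distances between the repertoires of each partition and those of the whole system are supplied by the caller (they are not computed here), and the function names and the numerical values in the example are hypothetical.

```python
from itertools import combinations


def bipartitions(nodes):
    """Yield every split of `nodes` into two non-empty parts (P, complement)."""
    nodes = list(nodes)
    first, rest = nodes[0], nodes[1:]
    # Keeping `first` in P avoids listing each split twice.
    for size in range(len(rest)):
        for extra in combinations(rest, size):
            part = (first,) + extra
            complement = tuple(n for n in rest if n not in extra)
            yield part, complement


def integration(nodes, cause_distance, effect_distance):
    """Return (phi, MIPcause, MIPeffect) with phi = min(phi_cause, phi_effect).

    cause_distance(partition) and effect_distance(partition) are caller-supplied
    functions giving the distance (EMD in the text) between the repertoire of
    the partitioned system and the repertoire of the whole system.
    """
    candidates = list(bipartitions(nodes))
    mip_cause = min(candidates, key=cause_distance)
    mip_effect = min(candidates, key=effect_distance)
    phi_cause = cause_distance(mip_cause)
    phi_effect = effect_distance(mip_effect)
    return min(phi_cause, phi_effect), mip_cause, mip_effect


# Hypothetical distances for a four-node mechanism.
example_nodes = ["u1", "u2", "u3", "u4"]
cause_table = {(("u1", "u3"), ("u2", "u4")): 0.1}
effect_table = {(("u1", "u2", "u4"), ("u3",)): 0.2}
example_phi, example_mip_cause, example_mip_effect = integration(
    example_nodes,
    cause_distance=lambda part: cause_table.get(part, 0.5),
    effect_distance=lambda part: effect_table.get(part, 0.5),
)
```

For N nodes this enumerates 2^(N−1) − 1 partitions, so the exhaustive search is only practical for small mechanisms.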

Exclusion

Consciousness flows at a particular spatio-temporal grain, and this is the basis for the exclusion postulate in IIT 3.0 [21], which we do not develop here (see Section Discussion). First, in a mechanism with many nodes, it may happen that not all of them are simultaneously contributing to the conscious experience. While ϕ = 0 for the whole mechanism, it may happen that ϕ > 0 for some submechanism. Indeed, it may be the case that several submechanisms of the same given mechanism are simultaneously integrating information at a given state. Moreover, consciousness flows in time, but it evolves more slowly than neuronal firing. In our conception, integrated information is related to the values given by the α and γ parameters in (7). Those parameters may be associated with the intensity of connectivity (γij) and with neuromodulators (αi), which change at a slower rate than neuronal firing [21]. In our approach, small changes in the parameters do not always imply a change in the informational structure, provided the parameters move within the same cone (see [59, 60]). This fact might explain how a conscious experience may persist while neural activity is (chaotically) changing. However, when a change moves the parameters to a different cone, the IS undergoes a bifurcation in its structure, thereby changing the level of integrated information.

Topology of a mechanism and integrated information

Although ISs and mechanisms show major quantitative and qualitative differences in the structure and topology of both networks, it is clear that ISs depend strongly on the topology of their mechanisms. To show this dependence (but not determination) between mechanisms and associated ISs, we have tried to model the continuous evolution of integrated information for simplified mechanisms. This is probably one of the virtues of our continuous approach to integrated information. In particular, we consider the cases of totally disconnected mechanisms, lattice ones, the presence of a hub, and totally connected mechanisms, showing the key role of the topology and strength of connections with respect to integrated information. To allow the comparison of the different topologies, the reference value for αi is 1.6 and for γij is 0.1. The behaviour of ϕ-cause and ϕ-effect when some of these parameters change is shown for the different mechanisms.

Totally disconnected mechanisms

The way we define the cause-effect power of a disconnected mechanism always leads to null integration. We highlight that integrated information is positive only if we connect the parts of the mechanism, and we also show how the value of integrated information depends on continuous changes in the strength of the connecting parameter.

Fig 12 shows an example of a disconnected mechanism. There are two groups of nodes, {u1, u2} and {u3, u4, u5}. Connections exist only inside each group, not from one group to the other. The consequence is that the IS of the mechanism behaves like the Cartesian product of the ISs of the two parts (left and right ISs in the figure).

Fig 12. A totally disconnected mechanism.

Top: Disconnected mechanism. Left and right: Each of the parts of the disconnected mechanism has an associated IS. The nodes of the IS of the whole mechanism (not represented in the figure) correspond to the Cartesian product of both smaller ISs. For example, the values for nodes u2 and u3 in the state (u2, u3) of the whole IS are the same as their values at states (u2) and (u3) of the left and right ISs, respectively (states highlighted in pink). Bottom: αi and γij values for the mechanism.
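The Cartesian product structure described for Fig 12 can be illustrated with a short sketch. It only combines the labels of the states (the sets of positive nodes); the lists of states used for the two smaller ISs are hypothetical and are not read from the figure, and the function name `product_states` is likewise an assumption.

```python
from itertools import product


def product_states(left_states, right_states):
    """States of the whole IS as unions of one state from each smaller IS.

    Each state is a frozenset with the mechanism nodes whose value is > 0.
    """
    return [left | right for left, right in product(left_states, right_states)]


# Hypothetical state lists for the groups {u1, u2} and {u3, u4, u5}.
left_is = [frozenset(), frozenset({"u1"}), frozenset({"u2"}),
           frozenset({"u1", "u2"})]
right_is = [frozenset(), frozenset({"u3"}), frozenset({"u3", "u4"})]
whole_is = product_states(left_is, right_is)
```

In this sketch the state (u2, u3) of the whole IS arises from pairing (u2) on the left with (u3) on the right, matching the example given in the caption.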

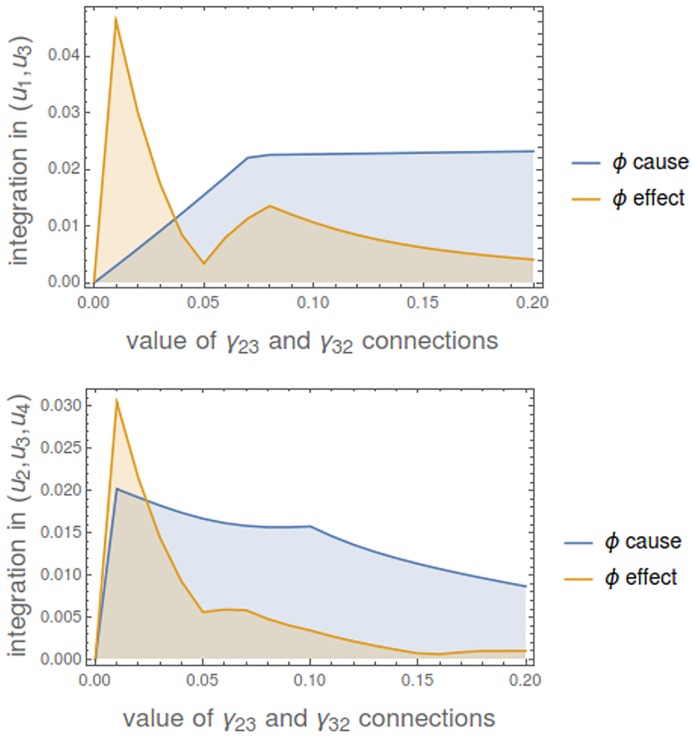

We can set the connections between u2 and u3, looking at the integration in different states of the mechanism (Fig 13). As expected, when γ23 = γ32 = 0 there is no integration.

Fig 13. Integration of the mechanism in Fig 12 as we increase the value of the connections between u2 and u3.

Values of ϕ-cause and ϕ-effect are shown. The resulting ϕ = min(ϕcause, ϕeffect) at each point is the minimum of both values. Top: Integration in state (u1, u3). Bottom: Integration in state (u2, u3, u4).

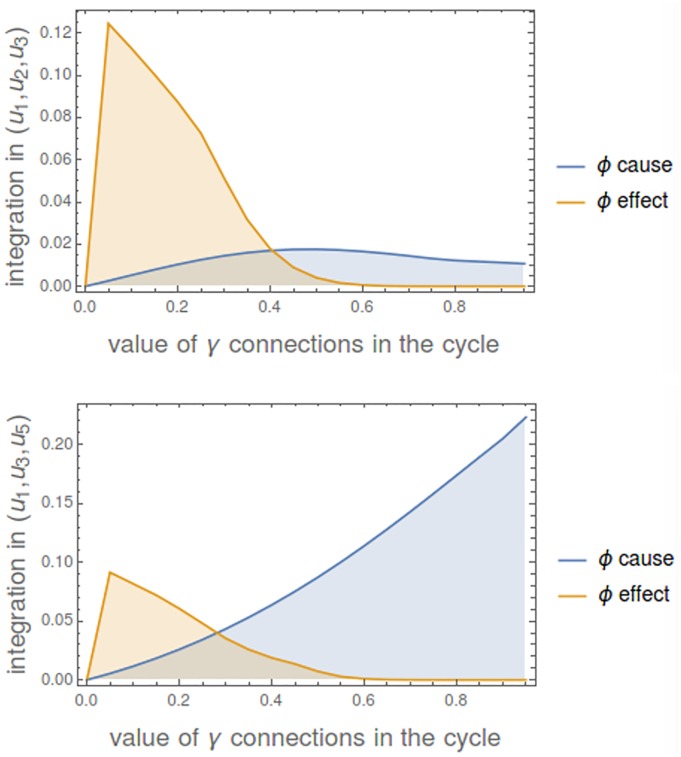

Cyclic mechanisms

Now we consider a mechanism of 5 nodes {u1, u2, u3, u4, u5} with all αi values equal to 1.6. The connections γij create a cycle, so that all of them are 0 except {γ12, γ23, γ34, γ45, γ51}, which share the same value. Fig 14 shows the changing level of integration of the mechanism in states (u1, u2, u3) and (u1, u3, u5) as the strength of the connections grows. If we look at min(ϕ-cause, ϕ-effect), it is 0 when the connections are 0, then it grows up to some maximum and then returns to 0.

Fig 14. Integration in a cyclic mechanism.

Top: Integration in state (u1, u2, u3). Bottom: Integration in state (u1, u3, u5). Note that the level of integrated information is a consequence not only of the topology of the mechanism (a lattice one in this case), but also of the strength of the connecting parameters and of the particular state. In state (u1, u2, u3) the three nodes with a positive value are consecutive, whereas in (u1, u3, u5) u1 and u5 are linked in the cycle and u3 is separated from them.

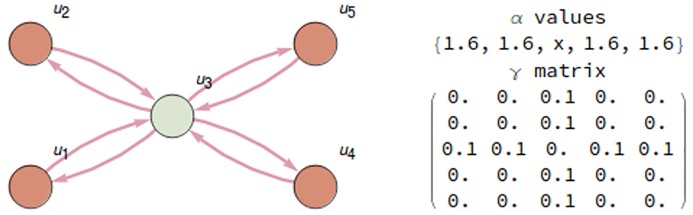

Small world mechanisms: Presence of hubs

Fig 15 shows a mechanism where node u3 has the role of a hub connecting all other nodes. Fig 16 shows the integration of the system in two different states as the value of α3 changes. Observe that in this case ϕ-effect is more sensitive than ϕ-cause to small changes in the strength of the hub connection.

Fig 15. A mechanism with a hub node u3.

Values of parameters αi and γij are shown at the right, with α3 = x acting as a variable.

Fig 16. Integration in different states of the hub mechanism of Fig 15 as α3 increases.

Top: Integration in state (u1, u3). Bottom: Integration in state (u1, u2, u3).

Totally connected mechanisms

We now consider a totally connected mechanism of 5 nodes with all γij values equal to 0.1. Fig 17 shows how integration changes as the αi values change. It is observed that, even for a totally connected mechanism, integrated information is a delicate measure which generically does not remain positive. In both states, when the αi values are greater than 2, integration (ϕ-effect) is 0.

Fig 17. Integration in a totally connected mechanism.

Parameters γij are all equal to 0.1. The evolution of ϕ-cause and ϕ-effect when αi values go from 0 to 3 is shown for two different states.
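The connection patterns of the cyclic and totally connected mechanisms used above can be written down explicitly. The sketch below is illustrative: it stores γij at position `[i - 1][j - 1]` of a nested list and assumes that "all γij" in the totally connected case refers to pairs with i ≠ j, which the text does not state. The function names are hypothetical.

```python
def cyclic_gamma(n, strength):
    """gamma for a directed cycle u1 -> u2 -> ... -> un -> u1; 0 elsewhere."""
    gamma = [[0.0] * n for _ in range(n)]
    for i in range(n):
        gamma[i][(i + 1) % n] = strength   # e.g. gamma_12, gamma_23, ..., gamma_n1
    return gamma


def fully_connected_gamma(n, strength):
    """gamma with the same strength for every ordered pair i != j (assumption)."""
    return [[0.0 if i == j else strength for j in range(n)] for i in range(n)]


# Reference values from the text: alpha_i = 1.6 and gamma_ij = 0.1, five nodes.
alpha = [1.6] * 5
gamma_cycle = cyclic_gamma(5, 0.1)
gamma_full = fully_connected_gamma(5, 0.1)
```

Sweeping `strength` (or the entries of `alpha`) over a grid of values and recomputing ϕ at each point would reproduce the kind of parameter scan shown in Figs 14 and 17, given an implementation of ϕ.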

Discussion

Dynamics on complex networks characterized by informational structures

The concept of informational structure is not only related to the understanding of brain processes and their functionality but, more broadly, is an essential tool for analyzing dynamics in complex networks [19, 20]. For instance, in Theoretical Ecology and Economics related to the modeling of mutualistic systems [61, 62], a very important subject is the study of the dependence between the topology of complex networks (lattice systems, unconnected systems, totally connected ones, random ones, “small world” networks) and the observed dynamics on their sets of solutions. The architecture of biodiversity [63, 64] thus refers to the way in which cooperative systems of plants and animals structure their connections in order to obtain a mechanism (complex graph) achieving optimal levels of robustness and life abundance. The nested organization of this kind of complex network seems to play a key role for higher biodiversity. However, it is clear that the topology of these networks does not determine all the future dynamics [65], which also seems to be coupled to other inputs such as modularity [66] or the strength of parameters [67]. This is also a very important task in Neuroscience [54, 68]. Informational structures associated to phenomena described by dynamics on complex networks contain all the future options for the evolution of the phenomena, as they possess all the information on the forwards global behaviour of every state of the system and the paths to arrive at them. Moreover, the continuous dependence on the parameters measuring the strength of connections and the characterization of the informational structure allow us to understand the evolution of the dynamics of the system as a coherent process, whose information is structured, thereby helping to explain the appearance of sudden bifurcations [69].

From a dynamical systems point of view [44], informational structures introduce several conceptual novelties in the standard theory, in particular for phenomena associated with high dimensional networks or models given by partial differential equations [15, 18, 29]. On the one hand, an informational structure has to be understood as a global attractor at each time, i.e., we do not reach this compact set as the result of the long time behaviour of solutions, but it exists as a complex set made of selected solutions which causes a curvature of the whole phase space at each time. Because we allow parameters to move in time, the informational structure also depends on time, thus showing a continuous deformation of the phase space by time evolution. It is a real space-time process explaining all the possible forwards and internal backwards dynamics. On the other hand, the understanding of attracting networks as informational objects is also new in this framework, allowing a migration of this subject into Information Theory. It is remarkable that dynamical systems range from trivial dynamics, such as global convergence to a stationary point, to the much richer ones usually referred to as chaotic dynamics [43, 44, 57] and dynamics of catastrophes [70]. While the first one can be found with total generality, attractors with chaotic dynamics can only be described in detail in low dimensional systems.

Note that some of the classical concepts of dynamical systems have been reinterpreted. In particular, the global attractor is understood as a set of selected solutions (organized in invariant sets and their connections in the past and future) which creates the informational structure. This network, moreover, produces a global transformation of the phase space (the informational field), enriching every point in the phase space with the crucial information on possible past states and possible future states. It is the continuous change in time of informational structures and fields that allows us to speak of an informational flow for which we can analyse the postulates of IIT. Our approach deals with dynamics on a graph for which a global description of the asymptotic behaviour is possible by means of the existence of a global attractor, that is, with dissipative dynamical systems for which a global attractor exists. It is important to note the Fundamental Theorem of Dynamical Systems in [33], inspired by the work of Conley, because it gives a general characterisation of the phase space by gradient and recurrent points, thus leading to a global description of the phase space as invariants and connections among them. This is the really crucial point which allows for a general framework on the kind of systems to be considered. We consider the Lotka-Volterra cooperative model for the applications to IIT because in this case we have a detailed description of the global attractor, which allows us to make concrete computations of the level of integrated information on it. Thus, in application to L-V systems, we are considering heteroclinic channels inside the global attractor. But all the treatment in the paper can be carried out for any dissipative system for which a detailed description of the global attractor is available, i.e., this description does not have to be restricted to heteroclinic channels coming from L-V models.
Gradient dynamics [15, 27, 29, 31] describes the dynamics from heteroclinic connections and associated Lyapunov functionals [29, 32], and it extends naturally to higher order systems, including infinite dimensional phase spaces [27, 34, 35]. Thus, although our description of informational structures associated to Lotka-Volterra systems is general enough to describe real complex networks, we think the concept may also be well adapted both to different topologies in the networks and to different kinds of non-linearities defining the differential equations [51, 52]. Actually, for comparison with data and experiments associated with real brain dynamics, we certainly need to allow much more complex networks as primary mechanisms as well as different kinds of dynamical rules and nonlinearities. But, in all of them, we expect to find dynamical informational structures providing the information on the global evolution of the system. The concise geometrical description of these structures and their continuous dependence on sets of parameters is a real open problem in dynamical systems theory.

A continuous approach to integrated information

We have presented a preliminary approach to the notion of integrated information from informational structures, leading to a continuous framework for a theory inspired by IIT, in particular in order to define the integrated information of a mechanism in a state. The detailed characterization of informational structures comes from strong theorems in dynamical systems theory about the characterization of global attractors. The description of causation in an informational structure by a coherent and continuous flow of integrated information is new in the literature. Indeed, the definition of TPMs between two equilibria in the IS by the strength of eigenvalues and direction of eigenvectors associated to these and intermediate equilibria produces a global analysis of the level of information contained in a particular IS. The same procedure for partitions of the associated mechanism allows a preliminary measure of integration. Moreover, the continuous dependence of the structure of ISs on parameters also opens the door to the analysis of sudden bifurcations, from an intrinsic point of view, for critical values of the parameters. The close dependence of the level of integrated information on the topology of a mechanism, the actual value of the parameters and the current state also seems very clear, pointing towards the optimality of a “small world” configuration of the brain [71].

Global brain dynamics from informational structures and fields

With respect to global brain dynamics, we think the concepts of informational structures and informational fields could serve as the abstract objects to describe functionality and global observed dynamics now described by other concepts and methodologies such as multistability [6] or metastability [72, 73]. In [53] the notion of ghost attractors is used, which would seem to fit into our informational fields and their bifurcations under the movement of the coupling parameter in the connectivity matrix. We think it could also serve as a valuable tool in line with the perspectives developed in [5]. In [54] a detailed study of different attractor repertoires is carried out for a global brain network on which a (local, global and dynamic) Hopfield model is defined. Note that, depending on the parameters, the authors observe the bifurcation of dozens or even hundreds of stationary hyperbolic fixed points, whose basins of attraction and stability properties have to be analyzed in a heuristic way by simulating thousands of realizations with different initial conditions. The comparison with empirical data occurs at the edge of multistability. All of these phenomena could naturally enter into a broader global approach, through the study of an abstract formulation of their associated informational fields, creating a network of attractors and their connections, and of their dependence on parameters, to better understand transitions and bifurcations of structures. Moreover, the continuous approach to ISs may lead, if we are able to fit them to real data of brain activity, to a useful tool to analyze functional connectivity dynamics [74].

Limitations and future work

The ability to mathematically represent human consciousness can help to understand the nature of conscious processes in the human mind, and the dynamical systems approach may indeed also be a suitable tool to start this journey towards a complementary mathematical approach to consciousness. Indeed, informational structures allow us to associate the processes underlying consciousness to a huge functional and changing set of continuous flow structures. However, we think we are still far from the aim of describing a conscious experience with the current development, which will be continued in the near future. We have introduced the postulates of IIT associated to ISs and, in particular, we have developed a first approach to definitions of information and integration, leading to the introduction of measures such as cei (cause-effect information) and ϕ (integrated information of a mechanism).

However, in order to make this approximation comparable to the latest published IIT version for discrete dynamical systems of this theory [21], a series of additional developments are required: In IIT 3.0 the exclusion postulate is applied to the causes and effects of the individual mechanisms, so that only the purview that generates the maximum value of integrated information intrinsically exists as the core cause (or core effect) of the candidate mechanism that generates a concept. In fact, a concept is defined as a mechanism in a state that specifies a maximally irreducible cause and effect, the core cause and the core effect, and both specify what the concept is about. The core cause (or effect) can be any set of elements, but only the set that generates the maximum value of integrated information in the past (or future) exists intrinsically as such. We can see the present work as a simplified particular case in which the candidates to core cause and effect, the candidate set to be a concept and the complete system match. But in general they will be different sets. In addition, elements outside the candidate set should be treated as background conditions. On the other hand, the partitions in this work isolate one part of the system from another for any instant of time. However, in line with IIT 3.0, partitions should be performed in this way: two consecutive time steps are considered (past and present or present and future) and the elements of the candidate mechanism in the present are joined to the elements of the candidate to core cause in the past (or core effect in the future) to form a set that will be separated in two, so that in each of the parts there may be elements of the two time steps or not. It is also necessary to distinguish between mechanisms for which the value of ϕ for each concept or quale sensu stricto is calculated, and systems of mechanisms for which Φ for each complex or quale sensu lato is calculated. 
For the latter, a conceptual structure or constellation of concepts is defined as the set of all the concepts generated by a system of mechanisms in a state. It is necessary to specify how the conceptual information (CI) and the integrated conceptual information Φ of that system and its corresponding constellation of concepts are calculated. To this aim an extended version of the earth mover’s distance (EMD) is used. When the exclusion postulate is applied to systems of mechanisms, the complexes are defined as maximally irreducible conceptual structures (MICS), that is, local maxima of integrated conceptual information. To do this we should use unidirectional partitions with which to verify that integrated conceptual structures are formed and to see whether, apart from the major complex, and in a non-overlapping way, minor complexes are formed. We are now working on all of these items, and expect that they will lead, at a much higher computational complexity, to a refinement of the results, also in relation to the topology of mechanisms and the integrated information.

Note that the aim of relating (informational) fields and matter by a mathematical treatment is not restricted to our approach or even to IIT. Remarkable in this line of research is the description of central neuronal systems (and, more generically, biological systems) as a gauge theory in which a Lagrangian approach to modelling motion is the crucial adopted formalism [75, 76]. Our techniques are different, since we move into a more classical approach given by dynamical systems related to differential equations. In this way we take advantage of the vast theory on the qualitative analysis of differential equations. In particular, the curvature in the phase space produced by the informational field, closely related to the local stability of stationary points given by their associated eigenvalues, is translated into dynamical properties in the physical space, as in a gauge theory approach. But the informational field, in our case, is, for each time, a global transformation of the phase space. This characterisation of an energy landscape (the phase space) evolves continuously in time. It remains an interesting and outstanding challenge to relate gauge theoretic treatments based upon information geometry (for example, the free energy principle) ([76], S3 appendix, or [77]) to our dynamical formulation; however, their common focus on information geometry (and manifold curvature) may admit some convergence in the future. This framework may provide a promising and fruitful perspective.

Thus, our approach raises many open questions and possibilities for future work. Three key problems arise at this stage, showing the need for truly interdisciplinary work in the area: how to define the level of information of a global attractor (a totally new question in dynamical systems theory), which notion of information best suits our purposes, and how this concept of information is related to causality [78] on past and future events. We need to continue the development of the dynamical systems version of IIT 3.0, finding the intrinsic representation of cause-effect repertoires and a measure to assess the quantity and quality of integrated information encoded in the informational structure. We also need to represent the evolution of this information in continuous time, and to find a way to measure the strength of attraction and repulsion through the measure and dimension of stable and unstable sets and their intersections. Finally, we need to use this notion to define a measure of integrated information compliant with IIT’s Φmax and to describe the dynamics of this measure, also by developing computer code for the evolution of informational structures.

Informational structures are firmly grounded in the topology of their associated mechanisms. In a very natural way, we can establish a (continuous) relation between structural networks and informational structures. Much further research is probably warranted on this subject, not only in neuroscience but in the broad area of dynamics on complex networks. This approach also opens the door to the development of a theory on the dependence of the structure of informational structures on parameters and on the topology of the complex graphs supporting them. To this aim, we need a theory on bifurcations of invariants and a theory on bifurcations of attractors, both in the autonomous and non-autonomous cases.

In order to compare this approach with real data, we need to develop global models for brain dynamics in which the functionality of informational structures and informational fields can be tested. We will need to use global models of brain dynamics [4, 5, 13, 74, 79], allowing the use of a brain simulator [80] which correlates with functional networks and with functional connectivity dynamics as obtained from real data on human brains.

Acknowledgments

Preliminary drafts of this paper were discussed with L. Albantakis, W. Marshall, S. Streipert and G. Tononi. The authors thank them for their valuable feedback and enlightening discussions at the Wisconsin Institute for Sleep and Consciousness. They also thank G. Deco, from Pompeu Fabra University, and Piotr Kalita, from Jagiellonian University in Krakow, for helpful discussions.

Data Availability

All code developed for the implementation of the results and the generation of the technical figures can be found at the Open Science Framework (https://osf.io/5tajz/).

Funding Statement

All the authors were supported by Ministerio de Economía, Industria y Competitividad Proyecto Explora Ciencia MTM2014-61312-EXP. JAL was also partially supported by Junta de Andalucía under Proyecto de Excelencia FQM-1492 and FEDER Ministerio de Economía, Industria y Competitividad grant MTM2015-63723-P. FJE was also supported by ACCIÓN 1 PAIUJA 2017 2018: EI_CTS02_2017 (ref: 06.26.06.20.7A). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Mortveit HS, Reidys CM. An introduction to sequential dynamical systems. Universitext. New York: Springer-Verlag; 2008.

- 2. Osipenko G. Dynamical Systems, Graphs, and Algorithms. Lecture Notes in Mathematics 1889. Berlin: Springer-Verlag; 2007.

- 3. Cabral J, Kringelbach ML, Deco G. Exploring the network dynamics underlying brain activity during rest. Progress in Neurobiology. 2014; 114: 102–131. doi: 10.1016/j.pneurobio.2013.12.005

- 4. Deco G, Jirsa VK, McIntosh AR, Sporns O, Kotter R. Key role of coupling, delay, and noise in resting brain fluctuations. Proceedings of the National Academy of Sciences of the USA. 2009; 106: 10302–10307. doi: 10.1073/pnas.0901831106

- 5. Deco G, Tononi G, Boly M, Kringelbach ML. Rethinking segregation and integration: contributions of whole-brain modelling. Nature Reviews Neuroscience. 2015; 16 (7): 430–439. doi: 10.1038/nrn3963

- 6. Kelso JA. Multistability and metastability: understanding dynamic coordination in the brain. Philosophical Transactions of the Royal Society of London: Series B, Biological Sciences. 2012; 367: 906–918. doi: 10.1098/rstb.2011.0351

- 7. Hirsch MW, Smale S, Devaney RL. Differential Equations, Dynamical Systems, and an Introduction to Chaos, third edition. Oxford: Academic Press; 2013.

- 8. Sandefur GT. Discrete Dynamical Systems: Theory and Applications. New York: Clarendon Press; 1990.

- 9. Cammoun L, Gigandet X, Meskaldji D, Thiran JP, Sporns O, Do KD, Maeder P, Meuli R, Hagmann P. Mapping the human connectome at multiple scales with diffusion spectrum MRI. Journal of Neuroscience Methods. 2012; 203: 386–397. doi: 10.1016/j.jneumeth.2011.09.031

- 10. Collins DL, Zijdenbos AP, Kollokian V, Sled JG, Kabani NJ, Holmes CJ, Evans AC. Design and construction of a realistic digital brain phantom. IEEE Transactions on Medical Imaging. 1998; 17: 463–468. doi: 10.1109/42.712135

- 11. Tzourio-Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, Mazoyer B, Joliot M. Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. NeuroImage. 2002; 15: 273–289.

- 12. Kotter R. Online retrieval, processing, and visualization of primate connectivity data from the CoCoMac database. Neuroinformatics. 2004; 2: 127–144. doi: 10.1385/NI:2:2:127

- 13. Ghosh A, Rho Y, McIntosh AR, Kotter R, Jirsa VK. Cortical network dynamics with time delays reveals functional connectivity in the resting brain. Cognitive Neurodynamics. 2008; 2: 115–120. doi: 10.1007/s11571-008-9044-2

- 14. Honey CJ, Kotter R, Breakspear M, Sporns O. Network structure of cerebral cortex shapes functional connectivity on multiple time scales. Proceedings of the National Academy of Sciences of the USA. 2007; 104: 10240–10245. doi: 10.1073/pnas.0701519104

- 15. Hale JK. Asymptotic Behavior of Dissipative Systems. Math. Surveys and Monographs. Providence: Amer. Math. Soc.; 1988.

- 16. Ladyzhenskaya OA. Attractors for semigroups and evolution equations. Cambridge: Cambridge University Press; 1991.

- 17. Robinson JC. Infinite-Dimensional Dynamical Systems. Cambridge: Cambridge University Press; 2001.

- 18. Temam R. Infinite-Dimensional Dynamical Systems in Mechanics and Physics. Berlin: Springer-Verlag; 1988.

- 19. Guerrero G, Langa JA, Suárez A. Attracting Complex Networks. In: Complex Networks and Dynamics: Social and Economic Interactions, Lecture Notes in Economics and Mathematical Systems. Springer; 2016. pp 309–327.

- 20. Guerrero G, Langa JA, Suárez A. Architecture of attractor determines dynamics on mutualistic complex networks. Nonlinear Anal. Real World Appl. 2017; 34: 17–40. doi: 10.1016/j.nonrwa.2016.07.009

- 21. Oizumi M, Albantakis L, Tononi G. From the Phenomenology to the Mechanisms of Consciousness: Integrated Information Theory 3.0. PLoS Computational Biology. 2014; 10 (5). doi: 10.1371/journal.pcbi.1003588

- 22. Tononi G. An information integration theory of consciousness. BMC Neuroscience. 2004; 5 (1). doi: 10.1186/1471-2202-5-42

- 23. Tononi G. Consciousness as integrated information: a provisional manifesto. The Biological Bulletin. 2008; 215 (3): 216–242. doi: 10.2307/25470707

- 24. Tononi G. Integrated information theory of consciousness: an updated account. Arch Ital Biol. 2012; 150 (2-3): 56–90.

- 25. Tononi G, Boly M, Massimini M, Koch C. Integrated information theory: from consciousness to its physical substrate. Nature Reviews Neuroscience. 2016. doi: 10.1038/nrn.2016.44

- 26. Albantakis L, Marshall W, Hoel E, Tononi G. What caused what? An irreducible account of actual causation. Preprint. 2017. Available from: arXiv:1708.06716.

- 27. Carvalho AN, Langa JA, Robinson JC. Attractors for infinite-dimensional non-autonomous dynamical systems. Applied Mathematical Sciences 182. New York: Springer; 2013.

- 28. Babin AV, Vishik MI. Attractors of Evolution Equations. Amsterdam: North Holland; 1992.

- 29. Henry D. Geometric theory of semilinear parabolic equations. Lecture Notes in Mathematics Vol. 840. Berlin: Springer-Verlag; 1981.

- 30. Babin AV, Vishik MI. Regular attractors of semigroups and evolution equations. J. Math. Pures Appl. 1983; 62: 441–491.

- 31. Aragão-Costa ER, Caraballo T, Carvalho AN, Langa JA. Stability of gradient semigroups under perturbation. Nonlinearity. 2011; 24: 2099–2117. doi: 10.1088/0951-7715/24/7/010

- 32. Conley C. Isolated invariant sets and the Morse index. CBMS Regional Conference Series in Mathematics Vol. 38. Providence: American Mathematical Society; 1978.

- 33. Norton DE. The fundamental theorem of dynamical systems. Commentationes Mathematicae Universitatis Carolinae. 1995; 36 (3): 585–597.

- 34. Aragão-Costa ER, Caraballo T, Carvalho AN, Langa JA. Continuity of Lyapunov functions and of energy level for a generalized gradient system. Topological Methods Nonl. Anal. 2012; 39: 57–82.

- 35. Hurley M. Chain recurrence, semiflows and gradients. J. Dyn. Diff. Equations. 1995; 7: 437–456. doi: 10.1007/BF02219371

- 36. Patrao M. Morse decomposition of semiflows on topological spaces. J. Dyn. Diff. Equations. 2007; 19 (1): 181–198. doi: 10.1007/s10884-006-9033-2

- 37. Rybakowski KP. The homotopy index and partial differential equations. Universitext. Berlin: Springer-Verlag; 1987.

- 38. Patrao M, San Martin LAB. Semiflows on topological spaces: chain transitivity and semigroups. J. Dyn. Diff. Equations. 2007; 19 (1): 155–180. doi: 10.1007/s10884-006-9032-3

- 39. Joly R, Raugel G. Generic Morse-Smale property for the parabolic equation on the circle. Ann. Inst. H. Poincaré Anal. Non Linéaire. 2010; 27 (6): 1397–1440. doi: 10.1016/j.anihpc.2010.09.001

- 40. Hale JK, Magalhães LT, Oliva WM. An introduction to infinite-dimensional dynamical systems: geometric theory. Applied Mathematical Sciences Vol. 47. New York: Springer-Verlag; 1984.

- 41. Palis J, de Melo W. Geometric theory of dynamical systems: An introduction. Translated from the Portuguese by Manning AK. New York-Berlin: Springer-Verlag; 1982.

- 42. Ott E. Chaos in Dynamical Systems. Cambridge: Cambridge University Press; 2002.

- 43. Strogatz S. Nonlinear Dynamics and Chaos. New York: Perseus Publishing; 2000.

- 44. Wiggins S. Introduction to Applied Dynamical Systems and Chaos. New York: Springer; 2003.

- 45. Afraimovich VS, Moses G, Young T. Two-dimensional heteroclinic attractor in the generalized Lotka-Volterra system. Nonlinearity. 2016; 29 (5): 1645–1667. doi: 10.1088/0951-7715/29/5/1645

- 46. Afraimovich VS, Zhigulin VP, Rabinovich MI. On the origin of reproducible sequential activity in neural circuits. Chaos. 2004; 14 (4): 1123–1129. doi: 10.1063/1.1819625

- 47. Afraimovich VS, Tristan I, Varona P, Rabinovich MI. Transient dynamics in complex systems: heteroclinic sequences with multidimensional unstable manifolds. Discontinuity, Nonlinearity and Complexity. 2013; 2 (1): 21–41.

- 48. Muezzinoglu ME, Tristan I, Huerta R, Afraimovich VS, Rabinovich MI. Transient versus attractors in complex networks. International J. of Bifurcation and Chaos. 2010; 20 (6): 1653–1675. doi: 10.1142/S0218127410026745

- 49. Rabinovich MI, Varona P, Tristan I, Afraimovich VS. Chunking dynamics: heteroclinics in mind. Frontiers in Computational Neuroscience. 2014; 8: 1–10.

- 50. Murray JD. Mathematical Biology. New York: Springer; 1993.

- 51. Takeuchi Y. Global Dynamical Properties of Lotka-Volterra Systems. Singapore: World Scientific Publishing Co. Pte. Ltd.; 1996.

- 52. Takeuchi Y, Adachi N. The existence of globally stable equilibria of ecosystems of a generalized Volterra type. J. Math. Biol. 1980; 10: 401–415. doi: 10.1007/BF00276098

- 53. Deco G, Jirsa VK. Ongoing cortical activity at rest: criticality, multistability, and ghost attractors. J Neurosci. 2012; 32 (10): 3366–3375. doi: 10.1523/JNEUROSCI.2523-11.2012

- 54. Golos M, Jirsa VK, Daucé E. Multistability in Large Scale Models of Brain Activity. PLOS Computational Biology. 2015; 11. doi: 10.1371/journal.pcbi.1004644