Abstract

As farms grow in scale, it is increasingly difficult for farmers to monitor their animals without automated support. To address this problem, we focused on a sound-based technique for monitoring laying hens. In recent years, sound analysis has become an important tool for studying the behaviour, health and welfare of animals. A surveillance system using the microphone arrays of Kinects was developed to automatically monitor birds’ abnormal vocalisations during the night. Based on the time-difference of arrival (TDOA) principle of the sound source localisation (SSL) method, Kinect sensor direction estimations were very accurate. The system had an accuracy of 74.7% in laboratory tests and 73.6% in small poultry group tests for recognising the area from which a sound came. Additionally, flocks produced an average of 40 sounds per bird during feeding time in small group tests. It was found that, on average, each normal chicken produced more than 53 sounds during the daytime (noon to 6:00 p.m.) and less than one sound at night (11:00 p.m.–3:00 a.m.). This system can be used to detect anomalous poultry status at night by monitoring the number of vocalisations and their area distributions, which provides a practical and feasible method for the study of animal behaviour and welfare.

Keywords: sound analysis, sound source localisation, Kinects, chicken, animal behaviour

1. Introduction

Information about animals can be transferred over long distances by sound. The acoustic monitoring of farm animals is non-invasive and may be used as an efficient management tool to improve animal health, welfare and the economic efficiency of farms [1,2,3,4]. In recent years, interest in the analysis of farm animal vocalisation has grown, and various attempts have been made to decode the relationship between animal sound and animal behaviour [1]. For instance, some approaches have examined the relationship between vocalisation and the health of pigs and dairy calves [2,3,5,6,7,8]. Others suggest that vocalisations could serve as indicators of poultry welfare [9,10,11]. Sound analysis systems can be used to predict feed intake by monitoring pecking sounds [12,13,14]. Sound source localisation systems can be used to detect pig respiratory disease [15]. Some researchers have focused on vocalisation analysis of small-scale poultry breeding groups to model intensive cultivation [16,17]. However, unlike the monitoring of large farm animals, real-time sound analysis or sound recognition in a large-scale henhouse remains a great challenge because many sounds are produced at the same time. The basis of the sound analysis method is the identification of particular poultry sounds. Rather than simply monitoring the whole house, this study focused on real-time poultry sound source localisation for precision research and quantitative analysis. Automatically captured abnormal sounds, such as respiratory disease symptoms (grunts, snores, and coughs) or noises from machine malfunctions at night, can help find sick chickens and solve problems quickly.

Sound source localisation (SSL) is one of the most significant sound analysis methods in acoustic research. It has been applied to video conferencing, surveillance and advanced human–computer interaction analyses [18] and for localisation of birds based on their vocalisations [19]. Microphone array technology can be used to study any animal that makes distinctive sounds [20], presenting an important and transformative tool for behavioural biologists. The logic behind placing microphones in different places is to identify the origin and direction of the incoming sound. Arrays of simultaneously recording microphones provide a useful tool for passively monitoring animals’ abnormal sounds, as well as ascertaining sound location. As the microphones are placed in different positions, the sound arrives at each microphone at a different time. By analysing the differences in sound arrival time from the source, sensors can calculate the direction and approximate distance from which the sound is coming [21,22].

This study aims to develop a non-invasive method to monitor abnormal night vocalisations of birds by using the Kinect’s SSL technology. The objectives are as follows: (i) to describe a sound source localisation method and experimental platform; (ii) to measure the accuracy of this system; and (iii) to apply the SSL method to small poultry group tests.

2. Materials and Methods

Experiments were divided into laboratory tests, in which an SSL algorithm was developed, and small poultry group tests, in which the algorithm was evaluated. All experimental procedures were conducted in conformity with Hy-Line parent stock management guides for the care and use of laboratory animals. All efforts were made to improve animal welfare and ameliorate the animals’ suffering.

2.1. Animals and Housing

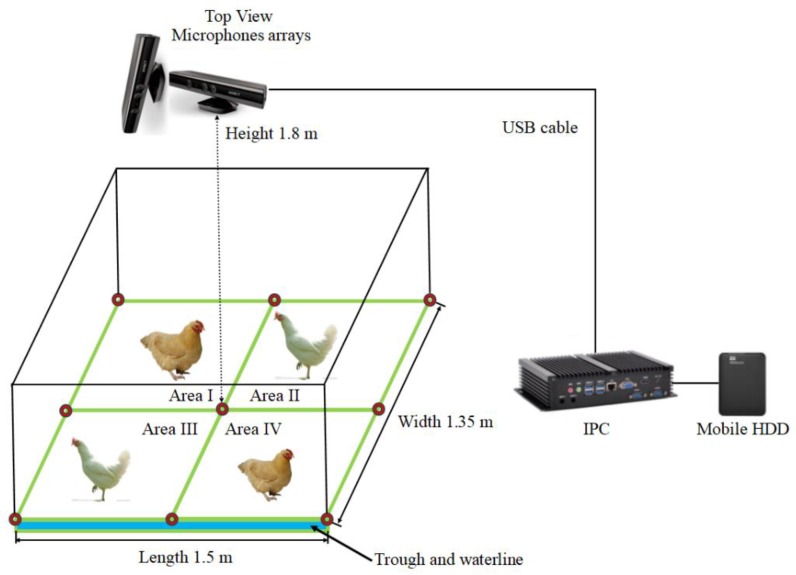

Small group tests were performed at the Shangzhuang Experimental Station of China Agricultural University, Beijing, China. Hy-Line Brown chickens (11 hens, 4 cocks) were raised on nets over a 36–38 week period, in a room measuring 7.2 m (L) × 3.4 m (W) × 3.0 m (H) with a metal door and surrounding walls made of colour steel plate. The floor-rearing area measured 1.5 m (L) × 1.35 m (W) × 1.8 m (H). The flock was fed twice a day, once between noon and 1:00 p.m. and again between 5:00 and 6:00 p.m. Room temperature was kept at 15–18 °C, and 18 h of light and 6 h of dark were provided each day. The rearing area was divided into four sub-areas: Area I was defined as a laying area; Area II as an activity area; and Areas III and IV as feeding and drinking areas (Figure 1).

Figure 1.

A schematic of the small group test platform. Note: Area I: laying area. Area II: activity area. Areas III and IV: feeding and drinking areas.

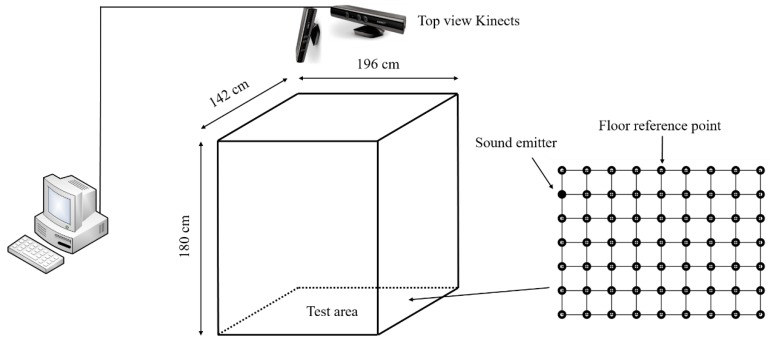

The laboratory experiment platform was similar to the small group test platform, except for the sound source and test area size. The room (7.0 m (L) × 7.0 m (W) × 3.0 m (H)) had a metal door and surrounding walls made of ferroconcrete. The test area measured 1.96 m (L) × 1.42 m (W) × 1.80 m (H). In the experiment, a mobile phone (type: Meizu MX6, Helio X20 CPU, 4 GB RAM, 3 GB + 32 GB storage capacity, Meizu Telecom Equipment Co., Ltd., Guangdong, China) was used as a sound emitter. It was placed at different locations that served as reference points (Figure 2). In this paper, we simplified animal vocalisation to a moving point sound source without considering the animal’s direction. Pre-set floor reference points, regarded as truth values, were used to measure the accuracy of a single Kinect by comparing measured values with the known sound source angles of −28°, −22°, −15°, −8°, 0°, 8°, 15°, 22° and 28°. The errors of the different angle measurements for a single Kinect were then calculated to evaluate the accuracy of this system. The selected signal was a simulated laying hen call at a 44.1 kHz sampling rate (loop playback, duration 2.48 s, stereo sound channel, 16-bit bit depth, 1411 Kbps bit rate).

Figure 2.

A schematic of the laboratory experiment platform.

2.2. Experimental Installations

Two top-view Kinect for Windows V1 sensors (Microsoft Corp., Redmond, WA, USA) were installed perpendicular to each other 1.8 m above the centre of the floor. Each Kinect was equipped with an RGB camera, a depth camera and a microphone array consisting of four microphones placed in a line (three on the right side and one on the left side), sharing a common horizontal axis. From left to right, the distances between the microphones were 149 mm, 40 mm and 37 mm [23]. The microphone array could supply four channels of 32-bit audio at a 16 kHz sampling rate. The Kinects continuously collected both digital images in JPEG format (640 × 480 pixel resolution, recorded at approximately 1 s intervals, used for manual verification) and multi-channel signal input (4-channel, 32-bit, 16,000 Hz, used for automatic sound source localisation measurements at 1 s intervals). As shown in Figure 1, the microphone arrays were connected via two USB ports to one mini-industrial personal computer (IPC, Shenzhen Konghui Intellitech Co., Ltd., Shenzhen, China) to keep the two time series coherent. The IPC was also equipped with a 2 TB USB 3.0 mobile hard disk drive (HDD, Western Digital Corporation, Lake Forest, IL, USA) to record data.

2.3. Sound Source Localisation Method

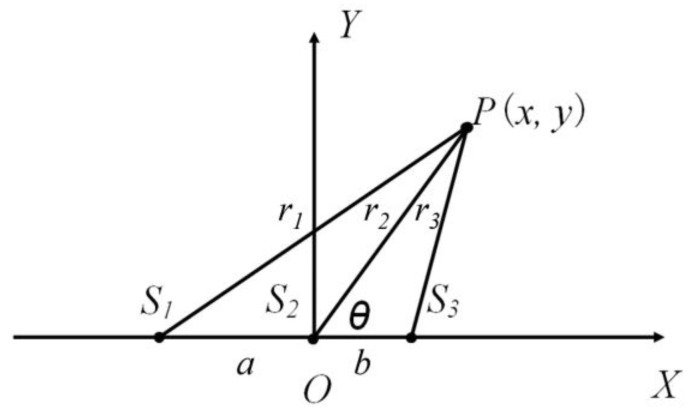

The SSL method is fundamentally based on the estimation of a sound source angle, a beam angle, and response to changes [23]. We combined functions of the Kinect Software Development Kit (SDK), such as NoiseSuppression and EchoCancellationMode, and its algorithms with the LabVIEW sound and vibration modules to realise the online localisation function in our monitoring system. The Kinect SDK functions were called for sound signal pre-processing, and the LabVIEW module was used to obtain the time delay. Because the microphones were placed in different positions, the sound arrived at different microphones at different times. As a result, the Kinect sensor could determine the direction from which the sound came. Although Kinect position estimations are unsatisfactory, its direction estimations are very accurate based on the principle of time-difference of arrival (TDOA) and the steered response power using the PHAse Transform (SRP-PHAT) localisation algorithm [24]. The reference algorithm and the Kinect beamforming algorithm both require a large amount of calculation and are not suitable for real-time signal processing, whereas the cross-correlation (CC) algorithm can realise the LabVIEW real-time localisation function with a small amount of calculation. Kinect signals were localised by extracting the time difference of the signal input at different microphones at 1 s intervals. An interval of 1 s was chosen because the movement of chickens was limited during that period. All four microphones shared a common horizontal axis, so a linear localisation could be obtained with any three microphones (Figure 3). Although the Kinect device has four microphones, only three were utilised for SSL.

Figure 3.

Linear localisation chart with any three microphones.
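The cross-correlation step mentioned above can be illustrated with a minimal sketch. It is not the LabVIEW implementation used in this study: the function name `cross_correlation_delay`, the synthetic burst signal and the 5-sample delay are assumptions made purely for illustration, and only the 16 kHz sampling rate is taken from the text.

```python
import math

SAMPLE_RATE_HZ = 16_000  # Kinect microphone array sampling rate (from the text)


def cross_correlation_delay(sig_a, sig_b, max_lag):
    """Return the lag (in samples) that maximises the cross-correlation.

    A positive lag means sig_b is a delayed copy of sig_a.
    Brute-force time-domain search; adequate for short windows.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, value in enumerate(sig_a):
            j = i + lag
            if 0 <= j < len(sig_b):
                score += value * sig_b[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


# Synthetic example (illustrative only): a decaying 1 kHz burst that reaches
# the second microphone 5 samples after the first.
burst = [
    math.exp(-i / 40.0) * math.sin(2 * math.pi * 1000 * i / SAMPLE_RATE_HZ)
    for i in range(200)
]
true_delay = 5
mic_1 = burst + [0.0] * 20
mic_2 = [0.0] * true_delay + burst + [0.0] * (20 - true_delay)

lag_samples = cross_correlation_delay(mic_1, mic_2, max_lag=10)
delay_seconds = lag_samples / SAMPLE_RATE_HZ
print(lag_samples, delay_seconds)
```

At 16 kHz, one sample corresponds to 62.5 µs, or about 21 mm of path difference at 340 m·s−1, which gives a feel for how coarse the raw delay estimate is relative to the microphone spacings.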

In Figure 3, the sound source and any three microphones are assumed to be at points P (x, y), S1 (−a, 0), S2 (0, 0) and S3 (b, 0), respectively. Point P (x, y) can be represented by the angle θ (the included angle between line S2S3 and line PS2, °) and the distance r2 between P and S2 (line segment PO, m), which can be calculated through a geometrical relationship:

| (1) |

| (2) |

| (3) |

where ri stands for the distance between the sound source point P and every microphone point Si (i = 1, 2, 3), m; a stands for the distance between point S1 and point S2, m; and b stands for the distance between points S2 and S3, m.

Assume that the sound velocity is c = 340 m·s⁻¹, that t12 is the arrival-time difference between S1 and S2 (s), and that t23 is the arrival-time difference between S2 and S3 (s). The time delay is computed automatically by Kinect adaptive methods embedded in the LabVIEW software, and the geometric relations follow from the triangle cosine theorem [25]:

| (4) |

| (5) |

| (6) |

| (7) |

Solving Equations (4)–(7) for the source angle and the distance between P and S2 gives [26,27]:

| (8) |

| (9) |
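Since the equations themselves are not reproduced here, the following sketch shows one way the three-microphone geometry can be solved. It is a reconstruction under stated assumptions, not necessarily the authors' exact formulation: the sign conventions c·t12 = r1 − r2 and c·t23 = r2 − r3, the function names `simulate_delays` and `locate`, the choice of the 149 mm and 40 mm spacings for a and b, and the test position are all assumptions. The angle is the included angle at S2 between S2S3 and PS2 (0° to 180°), as defined for Figure 3.

```python
import math

SPEED_OF_SOUND = 340.0  # m/s, the value assumed in the text


def simulate_delays(r2, theta_deg, a, b, c=SPEED_OF_SOUND):
    """Forward model: arrival-time differences for a source at (r2, theta)."""
    theta = math.radians(theta_deg)
    x, y = r2 * math.cos(theta), r2 * math.sin(theta)
    r1 = math.hypot(x + a, y)  # distance from P to S1 at (-a, 0)
    r3 = math.hypot(x - b, y)  # distance from P to S3 at (b, 0)
    return (r1 - r2) / c, (r2 - r3) / c


def locate(t12, t23, a, b, c=SPEED_OF_SOUND):
    """Recover (r2, theta in degrees) from the two time differences.

    Cosine theorem in triangles P-S2-S1 and P-S2-S3:
        r1^2 = r2^2 + a^2 + 2*a*r2*cos(theta)
        r3^2 = r2^2 + b^2 - 2*b*r2*cos(theta)
    Substituting r1 = r2 + d12 and r3 = r2 - d23 and eliminating
    cos(theta) gives the closed form used below.
    """
    d12 = c * t12  # path difference r1 - r2 (assumed sign convention)
    d23 = c * t23  # path difference r2 - r3 (assumed sign convention)
    denom = 2.0 * (b * d12 - a * d23)
    if abs(denom) < 1e-12:
        raise ValueError("range is not resolvable from these delays")
    r2 = (a * b * (a + b) - b * d12 ** 2 - a * d23 ** 2) / denom
    cos_theta = (2.0 * r2 * d12 + d12 ** 2 - a ** 2) / (2.0 * a * r2)
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding
    return r2, math.degrees(math.acos(cos_theta))


# Illustrative spacings (assumed choice of three microphones) and position.
A_SPACING, B_SPACING = 0.149, 0.040  # m
t12, t23 = simulate_delays(1.8, 60.0, A_SPACING, B_SPACING)
r2_est, theta_est = locate(t12, t23, A_SPACING, B_SPACING)
print(round(r2_est, 6), round(theta_est, 6))
```

In this formulation the denominator shrinks as the source moves away from the array, so small timing errors translate into large range errors while the angle stays comparatively stable. That behaviour is in line with the observation that direction estimates are usable whereas distance estimates are not.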

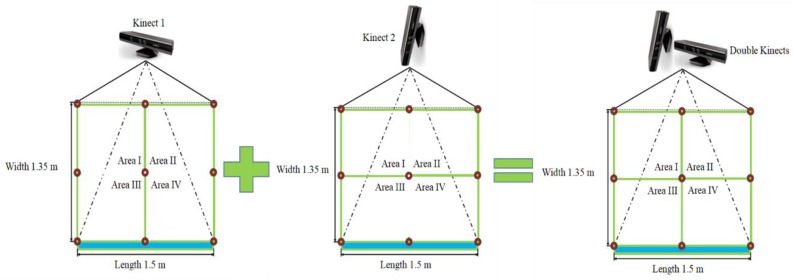

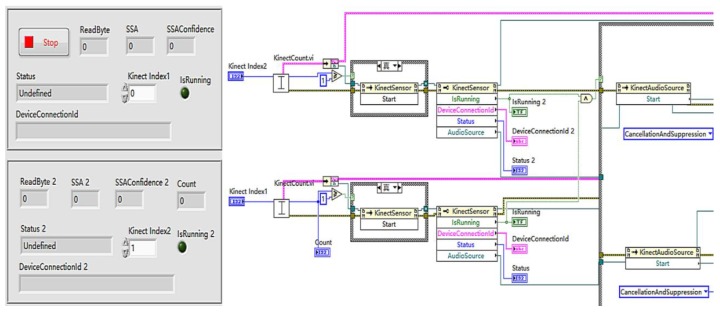

Based on the SSL method, one Kinect linear direction estimation with three microphones can determine whether the source lies to the right (angle > 0°) or left (angle < 0°), and two Kinect estimations with six microphones can synchronously localise four quadrants (Figure 4). Only Kinect direction estimations were chosen for SSL tests because its distance estimations were unsatisfactory due to their large error. The sensitivity of the Kinect microphones and their 16 kHz sampling rate are important factors that can lead to delay estimation errors [28]. The time series of the two Kinect sensors were consistent because both were connected to the same computer and shared the same measuring time. The SSL method was embedded in this online monitoring system by calling the Kinect SDK in one whole loop to realise the real-time localisation function. The SSL measurements of the two Kinects were controlled by LabVIEW, which started KinectSensor and KinectAudioSource together through invoke nodes (Kinect SDK) for synchronous acquisition at 1 s intervals (Figure 5).

Figure 4.

A schematic of Kinect quadrant estimations.

Figure 5.

LabVIEW panel (left) and block diagram (right).
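The quadrant logic described above can be sketched as follows. The labels Q1 to Q4 and the sign-to-quadrant convention are hypothetical: the actual correspondence between quadrants and Areas I–IV is defined by Figure 4 and is not assumed here. Returning `None` for an angle of exactly 0° is also an illustrative choice.

```python
def quadrant(angle_a_deg, angle_b_deg):
    """Combine the signs of two orthogonal Kinect angles into a quadrant.

    angle_a_deg: angle from the first Kinect (> 0 means right, < 0 left).
    angle_b_deg: angle from the second, perpendicular Kinect.
    Returns a hypothetical label "Q1".."Q4", or None when either angle
    is exactly 0 and the quadrant is therefore ambiguous.
    """
    if angle_a_deg == 0 or angle_b_deg == 0:
        return None
    if angle_a_deg > 0:
        return "Q1" if angle_b_deg > 0 else "Q4"
    return "Q2" if angle_b_deg > 0 else "Q3"


print(quadrant(12.0, 5.0))    # both positive
print(quadrant(-8.0, -20.0))  # both negative
```

Because the decision depends only on the sign of each angle, small errors near 0° are enough to move a source into the neighbouring quadrant.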

The accuracy of sound source localisation is computed using:

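| $\text{accuracy} = \dfrac{\text{correct number}}{\text{correct number} + \text{incorrect number}} \times 100\%$ | (10) |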

where accuracy is the ratio of successful localisations (SSL area (I–IV) in accordance with visual assessment of the existing laying hen area (I–IV) at 1 s intervals), correct number is the quantity of successful localisations, and incorrect number is the quantity of failing localisations (SSL area (I–IV) is inconsistent with visual assessment of the existing laying hen area (I–IV) at 1 s intervals).
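As a worked example, the sketch below takes accuracy to be the number of correct localisations divided by the sum of correct and incorrect localisations, expressed as a percentage, which is how the definitions above read. The function name `ssl_accuracy` is illustrative; the counts are those reported for the double-Kinect laboratory test in Table 3.

```python
def ssl_accuracy(correct, incorrect):
    """Percentage of successful localisations among all valid SSLs."""
    total = correct + incorrect
    if total == 0:
        raise ValueError("no valid SSLs to evaluate")
    return 100.0 * correct / total


# (correct, incorrect) counts per area, as reported in Table 3.
table_3 = {
    "Area I": (66, 6),
    "Area II": (52, 34),
    "Area III": (18, 3),
    "Area IV": (59, 23),
}

per_area = {area: round(ssl_accuracy(c, i), 1) for area, (c, i) in table_3.items()}
overall = round(
    ssl_accuracy(
        sum(c for c, _ in table_3.values()),
        sum(i for _, i in table_3.values()),
    ),
    1,
)
print(per_area, overall)
```

With this definition the computed values agree with the percentages listed in Table 3, including the overall 74.7%.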

2.4. SSL Data Processing and Storage

SSL output data were automatically pre-processed and analysed based on the LabVIEW NET module. Digital images were manually checked to evaluate the accuracy of sound source localisation. Additionally, null values and invalid values were filtered automatically by the LabVIEW software. Null values arose either from run-time errors in the LabVIEW SSL measurement or from the absence of sound, in which case the program returned null. The Kinect SDK provides a confidence coefficient parameter that allows the accuracy of the SSL method to be estimated. The threshold value of the confidence coefficient was set at 0.5 to obtain highly credible data. Sound source angles outside −30° to +30° were also eliminated because they were beyond our scope of interest. Ineffective angles or low confidence coefficients can cause invalid SSLs, which were detected and eliminated by a LabVIEW conditional judgement algorithm. All SSL output data were screened to determine whether they were within the effective angles (−30° to +30°) and above the confidence coefficient threshold (>0.5) [29]. The remaining data, stored in a MySQL database, were manually checked to judge whether they were in accordance with the visual assessment (successful localisation) or not (failing localisation). In addition, Grubbs gross error processing was applied only in the laboratory tests [30].
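The screening rules above can be summarised in a short sketch. It mirrors the stated thresholds (angle within −30° to +30°, confidence coefficient above 0.5, nulls discarded) but is not the LabVIEW routine itself; the `SslReading` type, the `is_valid` function and the sample readings are hypothetical, and the Grubbs step is omitted.

```python
from typing import NamedTuple, Optional


class SslReading(NamedTuple):
    """One 1 s SSL output; None stands for a null value."""
    angle_deg: Optional[float]
    confidence: Optional[float]


def is_valid(reading, max_abs_angle=30.0, min_confidence=0.5):
    """Apply the null, angle-range and confidence screening rules."""
    if reading.angle_deg is None or reading.confidence is None:
        return False  # null value: run-time error or no sound
    if abs(reading.angle_deg) > max_abs_angle:
        return False  # outside the effective angle range
    return reading.confidence > min_confidence


# Hypothetical readings, for illustration only.
readings = [
    SslReading(12.0, 0.8),    # kept
    SslReading(None, None),   # null, dropped
    SslReading(-35.0, 0.9),   # angle out of range, dropped
    SslReading(5.0, 0.5),     # confidence not above 0.5, dropped
    SslReading(-30.0, 0.51),  # on the boundary, kept
]
valid = [r for r in readings if is_valid(r)]
print(len(valid))
```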

3. Results

3.1. Laboratory Tests

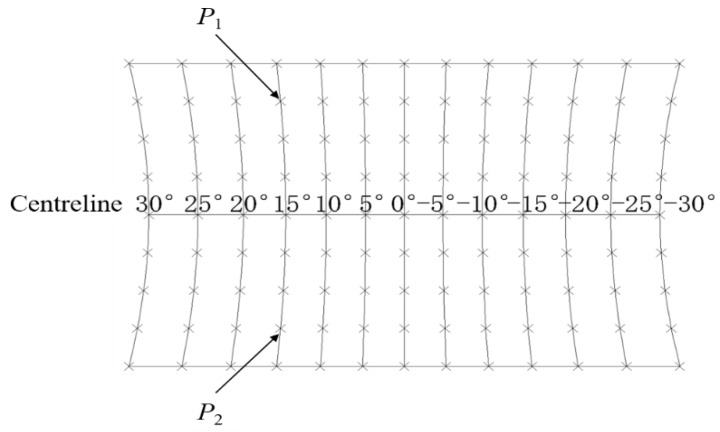

In laboratory conditions, the accuracy of this system was measured as compared to previous work. Figure 6 shows the reference values of floor reference points that were regarded as an accurate way to measure errors of different angles. The centreline is the medial axis of the captured image. Different angles are distributed unevenly along the centreline. The same sound source angle of the Kinect at different positions is nonlinear. Table 1 presents the errors of different angle measurements between the SSL method and referenced angles. On average, the absolute error of the Kinect was lower than 2°.

Figure 6.

Reference values of sound source angle for a single Kinect positioned on the centreline at 0°. P1, a random sound source point; P2, the point symmetric to P1 about the centreline, which has the same angle and distance.

Table 1.

Errors of different angle measurements for a single Kinect in the laboratory experiment.

| Angle measurement | −28° | −22° | −15° | −8° | 0° | 8° | 15° | 22° | 28° |
|---|---|---|---|---|---|---|---|---|---|
| Absolute error (°) | 1 | 2 | 2 | 2 | 0 | 2 | 2 | 1 | 0 |
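The summary statistics implied by Table 1 can be checked with a few lines. The absolute errors are those reported in the table; defining relative error as the absolute error divided by the magnitude of the reference angle is an assumption, since the text does not state a formula, and the 0° point is excluded because the ratio is undefined there.

```python
reference_angles = [-28, -22, -15, -8, 0, 8, 15, 22, 28]  # degrees, Table 1
absolute_errors = [1, 2, 2, 2, 0, 2, 2, 1, 0]             # degrees, Table 1

mean_abs_error = sum(absolute_errors) / len(absolute_errors)
max_abs_error = max(absolute_errors)

# Assumed definition: relative error = absolute error / |reference angle|.
relative_errors = {
    angle: 100.0 * err / abs(angle)
    for angle, err in zip(reference_angles, absolute_errors)
    if angle != 0
}
max_relative_error = max(relative_errors.values())

print(round(mean_abs_error, 2), max_abs_error, round(max_relative_error, 1))
```

Under this definition the largest relative error occurs at the smallest non-zero reference angles (±8°), where a 2° error weighs most heavily.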

Of the 3272 samples generated during the single Kinect test, 1087 were non-null values; of these, 1068 were valid SSLs and 19 were invalid SSLs due to ineffective angles or low confidence coefficients (≤0.5) (Table 2). Most null values arose because no sound was produced, in which case the LabVIEW program returned a null SSL measurement.

Table 2.

SSL testing information at 1 s intervals.

| Day | Time (h) | Number of SSLs | Number of Non-Null SSLs | Number of Valid SSLs | Test Type | Remarks |
|---|---|---|---|---|---|---|
| 28 October | 2 | 1550 | 458 | 450 | Laboratory | Single Kinect test |
| 29 October | 2 | 1722 | 629 | 618 | Laboratory | Single Kinect test |
| Total | 4 | 3272 | 1087 | 1068 | | |
| 30 October | 2 | 751 | 266 | 261 | Laboratory | Double Kinects test |
| 31 October | 1 | 369 | 164 | 159 | One chicken | Double Kinects test |
| Total | 3 | 1120 | 430 | 354 | | |
| 1 November | 10 | 23,149 | 2366 | 2314 | Small group | Continuous monitoring |
| 2 November | 14 | 31,459 | 2038 | 1989 | Small group | Continuous monitoring |
| 3 November | 14 | 32,099 | 1123 | 1106 | Small group | Continuous monitoring |
| 4 November | 12 | 23,959 | 2167 | 2139 | Small group | 22:00–24:00 data lost |
| 5 November | 9 | 15,540 | 2276 | 2249 | Small group | 0:00–4:00 data lost |
| 6 November | 14 | 23,903 | 3975 | 3897 | Small group | Continuous monitoring |
| 7 November | 14 | 24,910 | 992 | 976 | Small group | Continuous monitoring |
| Total | 87 | 175,019 | 14,937 | 14,670 | | |

Note: Valid SSLs mean effective angle within ±30°, confidence coefficient >0.5 and Grubbs gross error processing.

Limited by its linear microphone array, a single Kinect cannot unambiguously determine source location, since SSL results can yield two points with the same angle and distance (for instance, points P1 and P2 in Figure 6). For further analysis, two orthogonally positioned Kinects were used to realise 2D grid localisation. The accuracies of SSL with two Kinects in the laboratory experiments, as determined by the signal processing and analysis algorithm, are presented in Table 3. The mobile phone was placed at different locations in the four areas on a grid of 9 × 7 reference points, and 12 points in each area were evaluated (Figure 2). Out of the 261 position measurements in the laboratory experiments, 195 were correctly identified, a 74.7% accuracy. Out of the 72 total Area I events, the algorithm correctly recognised 66, a 91.7% accuracy. In addition, 3 of 21 events were incorrectly recognised in Area III. The accuracies for Areas II and IV were lower than those for the other areas. The mobile phone speaker in these two areas might have been closer to the centreline, around the 0° angle, which can lead to misjudgement by the Kinect’s low-sampling-rate microphones. This is probably because angle measurement is strongly affected near the division between two adjacent areas. The distances between reference points were 245 mm (L) and 237 mm (W), and the size of the mobile phone was 153.6 mm (L) × 75.2 mm (W). In addition, the direction of the mobile phone speaker might be an influencing factor to which we did not pay much attention.

Table 3.

Accuracies of sound source localisation of double Kinects in laboratory experiments.

| Source Position | Number of Correct SSLs | Number of Incorrect SSLs | Total Number | Accuracy (%) |
|---|---|---|---|---|
| Area I | 66 | 6 | 72 | 91.7 |
| Area II | 52 | 34 | 86 | 60.5 |
| Area III | 18 | 3 | 21 | 85.7 |
| Area IV | 59 | 23 | 82 | 72.0 |
| Total | 195 | 66 | 261 | 74.7 |

Note: Area partitions according to Figure 1. Correct and incorrect SSLs mean effective angle (−30° to +30°), confidence coefficient >0.5.

The accuracies of SSL in one chicken test are presented in Table 4. A hen’s chirping sounds were recorded over one hour, and 159 valid SSLs were extracted via this online monitoring system. In Area I, the algorithm correctly localised 44 sound sources, and 12 were incorrectly located as coming from other areas, yielding a localisation accuracy of 78.6%. In Area II, the algorithm correctly localised 42 out of 61 and incorrectly localised 19, yielding a 68.9% localisation accuracy. Similarly, localisation accuracies for Areas III and IV were 74.1% and 73.3%, respectively. The overall localisation accuracy in all areas, on average, was 73.6%.

Table 4.

Accuracies of SSL with two Kinects in one-chicken tests.

| Source Position | Number of Correct SSLs | Number of Incorrect SSLs | Total Number | Accuracy (%) |
|---|---|---|---|---|
| Area I | 44 | 12 | 56 | 78.6 |
| Area II | 42 | 19 | 61 | 68.9 |
| Area III | 20 | 7 | 27 | 74.1 |
| Area IV | 11 | 4 | 15 | 73.3 |
| Total | 117 | 42 | 159 | 73.6 |

Note: Area partitions according to Figure 1. Correct and incorrect SSLs mean effective angle (−30° to +30°) and confidence coefficient >0.5.

3.2. Small Group Tests

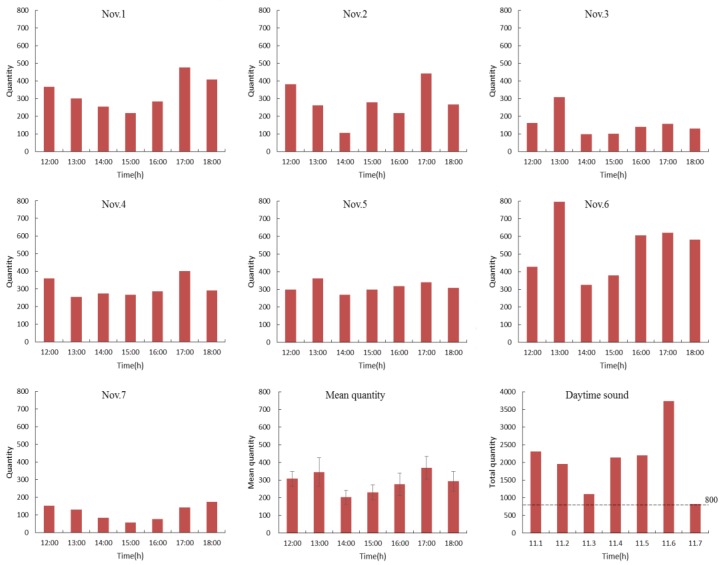

In the application of the SSL method to small poultry group tests, the number of hourly vocalisations of the flock in the horizontal plane (from noon to 6:00 p.m.) and the overall mean (mean ± SE) are presented in Figure 7 (data from 1–7 November were selected). At each 1 s interval, the SSL method records whether a sound was produced and whether it yielded a valid SSL after the data pre-processing described in Section 2.4. The number counted in an hour was taken as the number of hourly vocalisations. The number of vocalisations during feeding time was higher than at any other time, which derived from the birds’ demand or calling for food. On an average day, the flock produced more than 600 sounds (40 per chicken) during feeding time. The number of vocalisations differed considerably between days because differences in feeding time (normal or abnormal) and external stimuli can change the vocalisation of laying hens. For example, more sounds arose on 6 November. This was the result of an egg collection disrupting normal feeding (1:00 p.m.) and the ensuing stress response from the flock. Lack of food resulted in more sounds arising between 4:00 p.m. and 6:00 p.m. on 6 November. The number of hourly vocalisations and area distributions (from 11:00 p.m. to 3:00 a.m.) of the flock are presented in Figure 8. The SSL method can not only count sound production and valid SSLs, but also assign each sound to its source area for quantitative sub-area statistics. A high number of vocalisations arose in the daytime (noon to 6:00 p.m.; more than 800, or 53 per chicken), and a minority arose during the night (11:00 p.m. to 3:00 a.m.), normally fewer than 15 (1 per chicken) (Figure 7 and Figure 8).

From noon to 6:00 p.m., laying hens might rest, socialise, eat, drink and express their natural behaviour, and the higher number of vocalisations tends to occur erratically during this period [31]. Generally, the number of vocalisations during the daytime was more random than that during the night.
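The per-chicken figures quoted above follow directly from the flock size of 15 birds (11 hens and 4 cocks, Section 2.1). The short sketch below reproduces the arithmetic; the function name `per_bird` is illustrative, and the flock-level counts are the thresholds stated in the text rather than measured totals.

```python
FLOCK_SIZE = 11 + 4  # hens + cocks, from Section 2.1


def per_bird(flock_count, flock_size=FLOCK_SIZE):
    """Convert a flock-level vocalisation count to a per-bird value."""
    return flock_count / flock_size


feeding_per_bird = per_bird(600)  # feeding time, flock count above 600
daytime_per_bird = per_bird(800)  # noon to 6:00 p.m., flock count above 800
night_per_bird = per_bird(15)     # 11:00 p.m. to 3:00 a.m., fewer than 15

print(feeding_per_bird, round(daytime_per_bird, 1), night_per_bird)
```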

Figure 7.

Examples of the hourly sounds of laying chickens.

Figure 8.

Area distribution of poultry vocalisations at night.

Figure 8 shows the area distributions of poultry vocalisations at night (from 11:00 p.m. to 3:00 a.m.). In normal conditions, vocalisations produced by Hy-Line Browns can hardly be heard at night, yet abnormal environmental conditions or failing management practices can cause stress to the flock, resulting in the production of more abnormal sounds. Due to late and inadequate feeding, the animals were in a state of starvation on the nights of 5 and 6 November, which led to increased vocalisation distributions in the feeding and drinking areas (the 4th sub-chart in Figure 8). By analysing the number of poultry vocalisations and their area distributions via SSL methods, we can identify abnormal sounds (e.g., if the number of the flock’s vocalisations is obviously higher than its normal quantity at night) to find feeding problems.

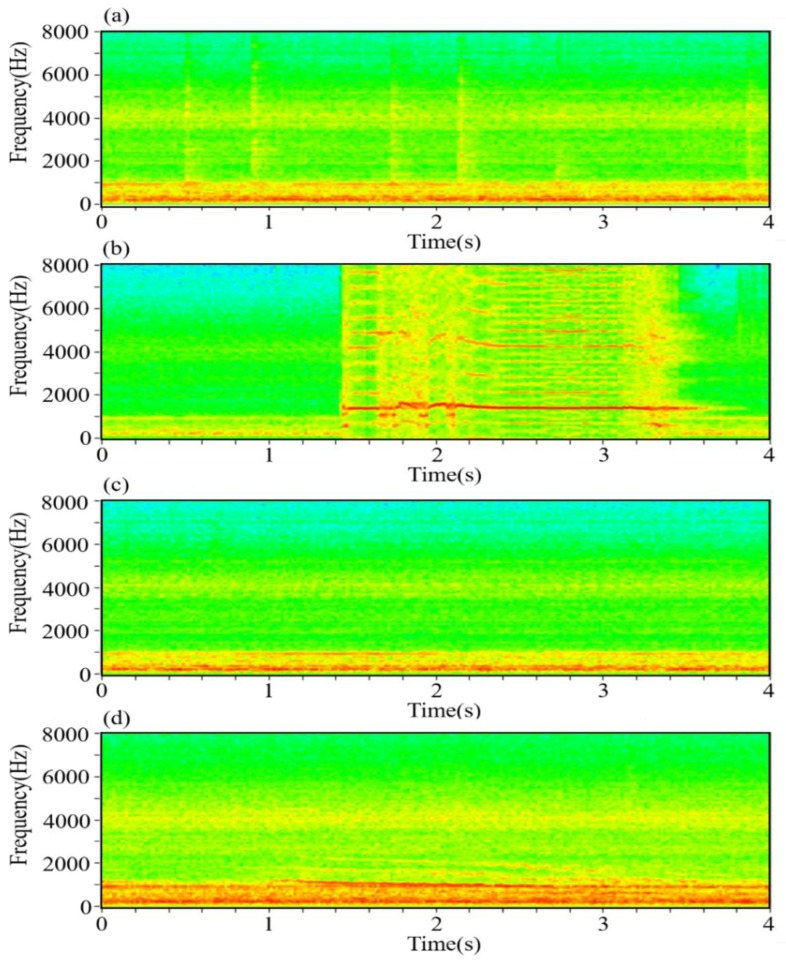

Figure 9 presents the typical night sound spectrograms. All types of night sound were classified by human subjective hearing as well as visual inspection of spectrograms. There were four main types of sound: the pecking sound for water, cock crow sound, mechanical sound and chicken grunt sound, which were easily discriminated by playing back audio recordings. Pecking sounds for water were rarely produced except during starvation events and showed a wide frequency range (1–8 kHz) and a short-time characteristic (Figure 9a). Cock crows were produced according to the circadian clock [32] and showed a wide frequency range (0–8 kHz) with a stable formant frequency and a duration of approximately 2 s (Figure 9b). Mechanical sounds produced by fans were considered noise and were present throughout the night as irregular and random signals between 0 and 1 kHz (Figure 9c) [33]. Occasionally, chicken grunt sounds could be heard and picked up by the surveillance system at night, but they were almost impossible to capture during the daytime. They showed a narrow frequency range (1–2 kHz) as well as a faint formant of 2 s duration (Figure 9d). The predominance of sound in Areas III and IV relative to the other areas can be accounted for by starvation or urging for food (e.g., hanging around the trough and waterline) on the nights of 5 November (Areas I and II, 9 times; Areas III and IV, 127 times) and 6 November (Areas I and II, 6 times; Areas III and IV, 75 times). Most of the sound that arose in Areas III and IV on these two nights was the pecking sound for water, as discriminated by human subjective evaluation (Figure 8 and Figure 9a). These actual sound source areas were detected by the SSL method, and the results were also checked by human subjective hearing as well as inspection of spectrograms in the LabVIEW sound and vibration module.

Figure 9.

Spectrograms depicting (a) pecking sound for water, (b) cock crow sound, (c) mechanical sound and (d) chicken grunt sound.
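The night-time screening idea can be sketched as a simple threshold rule. This is an illustration rather than the system's implemented logic: the alarm threshold of 15 is borrowed from the statement that a normal night yields fewer than 15 vocalisations and is treated here as a hypothetical tuning parameter, and the function name `flag_night` is invented. The area counts are those reported above for the nights of 5 and 6 November.

```python
NIGHT_BASELINE = 15  # hypothetical alarm threshold (normal nights: fewer than 15)


def flag_night(counts_by_area, baseline=NIGHT_BASELINE):
    """Return (abnormal, total, dominant_area) for one night.

    dominant_area is the area group with the most vocalisations,
    reported only when the night is flagged as abnormal.
    """
    total = sum(counts_by_area.values())
    abnormal = total > baseline
    dominant = max(counts_by_area, key=counts_by_area.get) if abnormal else None
    return abnormal, total, dominant


# Night-time counts (11:00 p.m. to 3:00 a.m.) reported in the text.
nights = {
    "5 November": {"Areas I and II": 9, "Areas III and IV": 127},
    "6 November": {"Areas I and II": 6, "Areas III and IV": 75},
}

for date, counts in nights.items():
    print(date, flag_night(counts))
```

Both nights exceed the threshold, and in both the feeding and drinking areas dominate, which matches the interpretation of a feeding problem given above. A practical system would need a baseline estimated from more than one week of data.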

4. Discussion

Owing to their computational efficiency and their robustness against mismatches of signal models and microphone errors, TDOA-based source localisation approaches are perhaps the most popular. In this study, absolute horizontal errors of the SSL method were lower than 2°, and the relative error was lower than 25.0%, within the angle measurement range of −30° to +30°. For comparison, the SRP-PHAT algorithm has shown average direction errors (horizontal and vertical) of less than 4° with a sound played by a standard PC speaker at a distance of 1.0–3.6 m [24]. The played signal was white Gaussian noise at a 44.1 kHz sampling rate; horizontal errors were lower than 2°, while vertical errors were lower than 4°. The present SSL method therefore performs better than the vertical measurement of the reference method and similarly to its horizontal measurement. In addition, the PHAT algorithm has shown a mean error of less than 6° at different frequencies (0.1–5 kHz) of sinusoidal Pings-Pause sound for microphone pairs 1 and 2 of a single Kinect computed at a distance of 1 m [34]. The inaccuracy was large on either side towards 0° and 180° (90° facing the Kinect), which differs from what was observed in this paper, where the error close to 0° (facing the Kinect) tended to be larger than that away from the centre [34,35]. This is because the reference algorithms are based on a planar-wave beam-forming model, which is more sensitive to delay estimation errors from the sides of the linear microphone array. Another beam-forming technology showed average angle errors of less than 4° with a sound box at different environmental noise levels. The range from −60° to +60° showed excellent performance at a distance of 2 m with continuous sound [35]. In short, this SSL method can accurately point to the source’s direction rather than precisely identify its position.

With an accurate source direction, it is easy to detect the sub area in which laying hens produce sound. The system can detect abnormal status of laying hens by automatically tracking and recording the flock’s number of vocalisations as well as location. For example, when lacking food, the poultry drank more water, which is different from the standard production performance suggested by Hy-Line International. The standard feed intake and water consumption are 112–116 g/bird/day and 168–232 mL/bird/day, respectively [31]. Normally, water consumption of birds is twice the amount of their feed intake. However, in abnormal feeding status or under feed restriction, water consumption is irregular [36]. Sounds of pecking for water from birds in abnormal status might be a kind of redirected behaviour when they cannot get access to food. Additionally, these vocalisations produced by pecking water nipples might be a kind of stereotyped behaviour [37]. By analysing the number of vocalisations and their area distributions via SSL, we can seek out abnormal sounds (e.g., if the flock’s number of vocalisations is higher than the normal night-time level) and find feeding problems. A real-time monitoring algorithm based on analysing image distribution indices of broilers is used in commercial chicken houses, and some of the possible applications are detection of feeding and drinking problems, detection of malfunctions in heating or ventilation and monitoring of vaccination effects [38]. In general, the SSL method can be used for the automatic detection of problems with feeding and drinking, which has not yet been reported for laying hen populations.

In addition, the lower localisation accuracy in one-chicken tests compared with laboratory tests was caused by the animal’s activities (especially fast-moving activities), as well as ambient noise. The Kinect SDK provides audio pre-processing functions including echo cancellation and automatic gain control, which can help to cope with sound wave reflection. Additionally, an improved algorithm would reduce the interval time to cope with the chickens’ fast movement in future tests. In general, the accuracy of this SSL algorithm, based on a pair of Kinects with six microphones, was above 70.0%. A reference method achieved an accuracy of 84.2% (16 out of 19 pig cough attacks) with eight microphones, which is more than the six microphones used by the present SSL method and more than the minimum of four required [15,39]. However, the fewer the microphones, the lower the SSL accuracy might be. Furthermore, the next step is to verify the acceptable accuracy of SSL at various distances and to determine 3D azimuth for application in commercial houses.

Our results support the notion that poultry make little sound at night [31,32]. It was observed in this paper that a large number of laying hens’ vocalisations arose in the daytime, whereas a small number of vocalisations normally occurred at night. During normal sleep time, birds barely express vocal behaviour, except for nocturnal birds, and only a minority of bird species are nocturnal [40,41]. In contrast, a flock of laying hens may produce many vocalisations during feeding time, with more than 600 vocalisations (or 40 per chicken) on an average day. However, the frequency of laying hens’ vocalisations during feeding time has not previously been reported. Other researchers have proposed the development of a real-time sound processing technology to accurately and continuously detect the feeding behaviours of broiler chickens, including feeding rate (g/min), feed intake (g) and meal duration (min) [12,13,14]. SSL technology could be combined with such approaches to assess animal health and welfare by automatically and continuously monitoring feeding behaviour.

5. Conclusions

A monitoring system using the microphone arrays of Kinects was developed to automatically recognise bird distributions via sound source localisation techniques. The SSL results from these Kinects had accuracies of 74.7% and 73.6% in laboratory tests and small group tests, respectively. The flock produced an average of 40 sounds per chicken during feeding time. In particular, the flock made considerably more sounds while undergoing stress or suffering from starvation. The system can successfully monitor the abnormal night vocalisations of poultry by analysing sound distribution areas. Additionally, it was found that the poultry flock stayed largely silent during their sleep time. From the area distribution of poultry vocalisations at night, abnormal management practices were easily discovered by using this monitoring system. Future work should reduce noise disturbance in order to better capture and quantify the flock’s SSL, and thus improve the associated implications for animal welfare and facility design adequacy. In addition, a large-scale flock test will be conducted, and abnormal events such as machine malfunction or the imposition of group stress will be artificially designed, to validate this system.

Acknowledgments

This work was supported by the Key Laboratory of Agricultural Engineering in Structure and Environment, which provided research farms, hardware, and technical assistance. Special thanks go to Bob Johnston for providing language help and to Wanping Zheng for proofreading the article.

Author Contributions

Methodology, X.D.; Project administration, G.T.; Writing—original draft, X.D.; Writing—review & editing, F.L. and G.T.

Funding

This work was funded by the National Key Research and Development Program of China (2016YFD0700204).

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Manteuffel G., Puppe B., Schön P.C. Vocalization of farm animals as a measure of welfare. Appl. Anim. Behav. Sci. 2004;88:163–182. doi: 10.1016/j.applanim.2004.02.012.

- 2. Exadaktylos V., Silva M., Aerts J.M., Taylor C.J., Berckmans D. Real-time recognition of sick pig cough sounds. Comput. Electron. Agric. 2008;63:207–214. doi: 10.1016/j.compag.2008.02.010.

- 3. Ferrari S., Piccinini R., Silva M., Exadaktylos V., Berckmans D., Guarino M. Cough sound description in relation to respiratory diseases in dairy calves. Prev. Vet. Med. 2010;96:276–280. doi: 10.1016/j.prevetmed.2010.06.013.

- 4. Steen K.A., Therkildsen O.R., Karstoft H., Green O. A Vocal-Based Analytical Method for Goose Behaviour Recognition. Sensors. 2012;12:3773–3788. doi: 10.3390/s120303773.

- 5. Van Hirtum A., Berckmans D. Fuzzy approach for improved recognition of citric acid induced piglet coughing from continuous registration. J. Sound Vib. 2003;266:677–686. doi: 10.1016/S0022-460X(03)00593-5.

- 6. Ferrari S., Silva M., Guarino M., Aerts J.M., Berckmans D. Cough sound analysis to identify respiratory infection in pigs. Comput. Electron. Agric. 2008;64:318–325. doi: 10.1016/j.compag.2008.07.003.

- 7. Guarino M., Jans P., Costa A., Aerts J.M., Berckmans D. Field test of algorithm for automatic cough detection in pig houses. Comput. Electron. Agric. 2008;62:22–28. doi: 10.1016/j.compag.2007.08.016.

- 8. Silva M., Exadaktylos V., Ferrari S., Guarino M., Aerts J.M., Berckmans D. The influence of respiratory disease on the energy envelope dynamics of pig cough sounds. Comput. Electron. Agric. 2009;69:80–85. doi: 10.1016/j.compag.2009.07.002.

- 9. Zimmerman P.H., Koene P. The effect of frustrative nonreward on vocalisations and behaviour in the laying hen, Gallus gallus domesticus. Behav. Process. 1998;44:73–79. doi: 10.1016/S0376-6357(98)00035-7.

- 10. Evans C.S., Evans L. Chicken food calls are functionally referential. Anim. Behav. 1999;58:307–319. doi: 10.1006/anbe.1999.1143.

- 11. Wauters A.M., Richard-Yris M.A. Mutual influence of the maternal hen’s food calling and feeding behavior on the behavior of her chicks. Dev. Psychobiol. 2002;41:25–36. doi: 10.1002/dev.10042.

- 12. Aydin A., Bahr C., Viazzi S., Exadaktylos V., Buyse J., Berckmans D. A novel method to automatically measure the feed intake of broiler chickens by sound technology. Comput. Electron. Agric. 2014;101:17–23. doi: 10.1016/j.compag.2013.11.012.

- 13. Aydin A., Bahr C., Berckmans D. A real-time monitoring tool to automatically measure the feed intakes of multiple broiler chickens by sound analysis. Comput. Electron. Agric. 2015;114:1–6. doi: 10.1016/j.compag.2015.03.010.

- 14. Aydin A., Berckmans D. Using sound technology to automatically detect the short-term feeding behaviours of broiler chickens. Comput. Electron. Agric. 2016;121:25–31. doi: 10.1016/j.compag.2015.11.010.

- 15. Silva M., Ferrari S., Costa A., Aerts J.M., Guarino M., Berckmans D. Cough localization for the detection of respiratory diseases in pig houses. Comput. Electron. Agric. 2008;64:286–292. doi: 10.1016/j.compag.2008.05.024.

- 16. Yu L.G., Teng G.H., Li B.M., Lao F.D., Cao Y.F. Classification Methods of Vocalization for Laying Hens in Perch System. Trans. Chin. Soc. Agric. Mach. 2013;9:236–242.

- 17. Cao Y.F., Chen H.Q., Teng G.H., Zhao S.M., Li Q.W. Detection of Laying Hens Vocalization Based on Power Spectral Density. Trans. Chin. Soc. Agric. Mach. 2015;2:276–280.

- 18. Cai W.P., Wang S.K., Wu Z.Y. Accelerated steered response power method for sound source localization using orthogonal linear array. Appl. Acoust. 2010;71:134–139. doi: 10.1016/j.apacoust.2009.07.015.

- 19. Bower J.L., Clark C.W. A Field Test of the Accuracy of a Passive Acoustic Location System. Bioacoustics. 2005;15:1–14. doi: 10.1080/09524622.2005.9753535.

- 20. Mennill D.J., Battiston M., Wilson D.R., Foote J.R., Doucet S.M. Field test of an affordable, portable, wireless microphone array for spatial monitoring of animal ecology and behaviour. Methods Ecol. Evol. 2012;3:704–712. doi: 10.1111/j.2041-210X.2012.00209.x.

- 21. Mennill D.J., Vehrencamp S.L. Context-Dependent Functions of Avian Duets Revealed by Microphone-Array Recordings and Multispeaker Playback. Curr. Biol. 2008;18:1314–1319. doi: 10.1016/j.cub.2008.07.073.

- 22. Blumstein D.T., Mennill D.J., Clemins P., Girod L., Yao K., Patricelli G., Deppe J.L., Krakauer A.H., Clark C., Cortopassi K.A., et al. Acoustic monitoring in terrestrial environments using microphone arrays: Applications, technological considerations and prospectus. J. Appl. Ecol. 2011;48:758–767. doi: 10.1111/j.1365-2664.2011.01993.x.

- 23. Jana A. Kinect for Windows SDK Programming Guide. Packt Publishing Ltd.; Birmingham, UK: 2012.

- 24. Seewald L.A., Gonzaga L., Veronez M.R., Minotto V.P., Jung C.R. Combining SRP-PHAT and two Kinects for 3D Sound Source Localization. Expert Syst. Appl. 2014;41:7106–7113. doi: 10.1016/j.eswa.2014.05.033.

- 25. Kunin V., Turqueti M., Saniie J., Oruklu E. Direction of Arrival Estimation and Localization Using Acoustic Sensor Arrays. J. Sens. Technol. 2011;1:71–80. doi: 10.4236/jst.2011.13010.

- 26. Jin Y., Yang R.Z. Research Status and Prospect of the Acoustic Localization Techniques. Audio Eng. 2007;31:4–8. (In Chinese)

- 27. Benesty J., Chen J., Huang Y. Microphone Array Signal Processing. Springer; Berlin/Heidelberg, Germany: 2008. pp. 181–184.

- 28. Rascon C., Fuentes G., Meza I. Lightweight multi-DOA tracking of mobile speech sources. Eurasip J. Audio Speech. 2015. doi: 10.1186/s13636-015-0055-8.

- 29. Galatas G., Ferdous S., Makedon F. Multi-modal Person Localization and Emergency Detection Using The Kinect. Int. J. Adv. Res. Artif. Intell. 2013;2:41–46.

- 30. Grubbs F.E. Sample Criteria for Testing Outlying Observations. Ann. Math. Stat. 1950;21:27–58. doi: 10.1214/aoms/1177729885.

- 31. Hy-Line Parent Stock Management Guides. Available online: http://www.hyline.com/aspx/general/dynamicpage.aspx?id=255 (accessed on 17 April 2016).

- 32. Shimmura T., Yoshimura T. Circadian clock determines the timing of rooster crowing. Curr. Biol. 2013;23:R231–R233. doi: 10.1016/j.cub.2013.02.015.

- 33. Cao Y.F., Yu L.G., Teng G.H., Zhao S.M., Liu X.M. Feature extraction and classification of laying hens’ vocalization and mechanical noise. Trans. Chin. Soc. Agric. Eng. 2014;18:190–197.

- 34. Reddy V.R., Deshpande P., Dasgupta R. Robotics Audition using Kinect; Proceedings of the 6th International Conference on Automation, Robotics and Applications (ICARA); Queenstown, New Zealand. 17–19 February 2015; New York, NY, USA: IEEE; pp. 34–41.

- 35. Wang S., Yang P., Sun H. Design and Implementation of Auditory System for Mobile Robot Based on Kinect Sensor; Proceedings of the 12th World Congress on Intelligent Control and Automation (WCICA); Guilin, China. 12–15 June 2016; New York, NY, USA: IEEE; pp. 3094–3098.

- 36. Tiecheng L. The importance and method of feed restriction in laying hens’ growing period. Mod. Anim. Husb. Sci. Technol. 2018;1:24. (In Chinese)

- 37. Kuhne F., Sauerbrey A.F.C., Adler S. The discrimination-learning task determines the kind of frustration-related behaviours in laying hens (Gallus gallus domesticus). Appl. Anim. Behav. Sci. 2013;148:192–200. doi: 10.1016/j.applanim.2013.09.003.

- 38. Kashiha M., Pluk A., Bahr C., Vranken E., Berckmans D. Development of an early warning system for a broiler house using computer vision. Biosyst. Eng. 2013;116:36–45. doi: 10.1016/j.biosystemseng.2013.06.004.

- 39. Spiesberger J.L. Hyperbolic location errors due to insufficient numbers of receivers. J. Acoust. Soc. Am. 2001;109:3076–3079. doi: 10.1121/1.1373442.

- 40. Digby A., Towsey M., Bell B.D., Teal P.D. Temporal and environmental influences on the vocal behaviour of a nocturnal bird. J. Avian Biol. 2014;45:591–599. doi: 10.1111/jav.00411.

- 41. Perrault K., Lobert L.M., Ehnes M., Foote J.R. Nocturnal singing in a temperate bird community. J. Ornithol. 2014;155:1059–1062. doi: 10.1007/s10336-014-1077-1.