Abstract

Laplacian mixture models identify overlapping regions of influence in unlabeled graph and network data in a scalable and computationally efficient way, yielding useful low-dimensional representations. By combining Laplacian eigenspace and finite mixture modeling methods, they provide probabilistic or fuzzy dimensionality reductions or domain decompositions for a variety of input data types, including mixture distributions, feature vectors, and graphs or networks. Optimal recovery by the algorithm is shown analytically for a nontrivial class of cluster graphs. Heuristic approximations for scalable high-performance implementations are described and empirically tested. Connections to PageRank and community detection in network analysis demonstrate the wide applicability of this approach. The origins of fuzzy spectral methods, beginning with generalized heat or diffusion equations in physics, are reviewed and summarized. Comparisons to other dimensionality reduction and clustering methods for challenging unsupervised machine learning problems are also discussed.

Introduction

Extracting meaningful knowledge from large and nonlinearly-connected data structures is of primary importance for efficiently utilizing data. Big data problems (e.g. > 1 GB/s) often contain superpositions of multiple distinct processes, sources, or latent factors. Estimating or inferring the component distributions or statistical factors is called the mixture problem.

Methods for solving mixture problems are known as mixture models [1], and in machine learning they are used to define Bayes classifiers [2]. Mixture models are a widely applicable pattern recognition and dimensionality reduction approach for extracting meaningful content from large and complex datasets. Only finite mixture models are described here, although countably or uncountably infinite numbers of mixture components are also possible [3]. In terms of dimensionality reduction methods, Laplacian mixture models provide global and non-hierarchical analyses of massive datasets using scalable algorithms.

0.1 Laplacian eigenspace methods

Eigensystems of Laplacian matrices are widely used by spectral clustering methods [4]. Spectral clustering methods typically use the eigenvectors with small-magnitude eigenvalues as a basis onto which the data are projected, and then apply some other clustering method to the projected item coordinates [5].

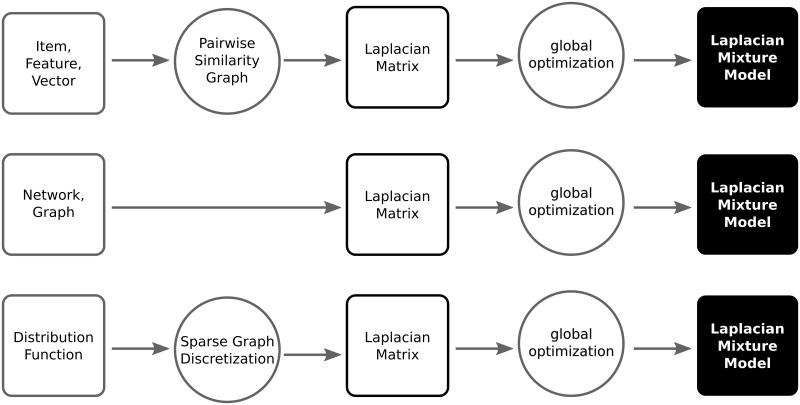

In addition to graph/network data, Laplacian eigenspace methods can be applied to both discrete observation data and continuous mixture density function data, as shown in section 0.6. As Fig 1 shows, feature vectors or item data are mapped to a graph via a distance or similarity measure [6], and mixture density data are mapped to a graph by finite-difference approximations of the differential operator on a discrete grid or mesh. Both feature vector data and continuous mixture density data are mapped to graph data as a preprocessing step prior to spectral graph cluster analysis. For such applications, simple graphs are sufficient, meaning that no self-loops or multiple edges of the same type are allowed.

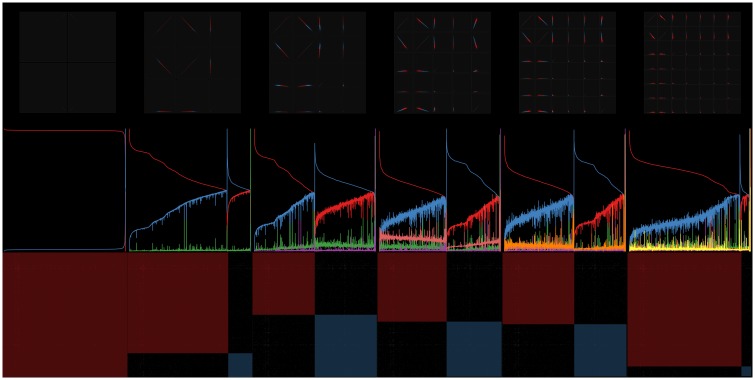

Fig 1. Laplacian mixture modeling flow. Gray squares show input datatypes and their mapping to Laplacian matrices (black square). Circles show processing steps, and the solid black square shows the output model after globally optimizing the Laplacian eigenspace.

When clustering data items, pairwise similarity or distance measures describe the regions of data space or subgraphs that represent closely related items. In this context data are vectors, e.g. feature vectors in machine learning applications. Laplacian eigenspace methods fall into the class of pairwise distance based clustering methods when data vectors are input. It is the choice of this pairwise similarity or distance measure that is of utmost importance in creating accurate and useful results when generating Laplacian matrices from data items. One area of active research is in optimizing or learning the distance function based on some training data [7].
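The mapping from feature vectors to a weighted graph can be sketched as follows. This is a minimal illustration only: the Gaussian kernel, the helper name `gaussian_adjacency`, and the bandwidth `sigma` are assumptions made for the example, not choices prescribed here, and any similarity measure with values in [0, 1] could be substituted.

```python
import math

def gaussian_adjacency(points, sigma=1.0):
    """Weighted adjacency matrix with a_ij = exp(-||x_i - x_j||^2 / (2 sigma^2)).

    The Gaussian kernel is an assumed example of a pairwise similarity measure.
    Diagonal entries stay zero so that the resulting graph is simple (no self-loops).
    """
    n = len(points)
    adj = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            sq_dist = sum((a - b) ** 2 for a, b in zip(points[i], points[j]))
            weight = math.exp(-sq_dist / (2.0 * sigma ** 2))
            adj[i][j] = adj[j][i] = weight  # symmetric similarity
    return adj

# Two tight pairs of points that are far apart from each other.
points = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0)]
A = gaussian_adjacency(points)
```

With these toy points, the weights within each pair are close to one and the weights between pairs are close to zero, so the choice of similarity measure directly shapes the cluster structure that the Laplacian can express.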

Negative Laplacian matrices are also known as transition rate matrices or (infinitesimal) generators of continuous-time Markov chains (CTMCs), as first noted by [8]. Their exponentials are also referred to as heat kernels by analogy to the continuous heat equations that involve the continuous Laplace operator [9–11]. Heat kernels are also known as diffusion kernels, and have the same eigenvectors as Laplacians for discrete state spaces, or eigenfunctions for continuous state spaces [12, 13].

For many practical purposes, assuming that the chains are irreducible, meaning that there is a path connecting every pair of states or nodes in the corresponding graph, does not lose any generality. Strongly connected graphs correspond to irreducible chains, and reducible chains can be broken into subchains and analyzed independently. Finite-state CTMCs contain embedded discrete-time Markov chains whose stochastic (Markov transition probability) matrices have properties closely related to those of Laplacian matrices. Given the holding times for each state, the stochastic matrix of the embedded chain is equivalent to the corresponding Laplacian for the CTMC.

On simple strongly connected networks, these matrices share the same first eigenvector (with different eigenvalues), called the Perron-Frobenius (PF) eigenvector or stationary probability distribution of the chain. For non-symmetric Laplacians, the PF eigenvector can be either a right/column or a left/row eigenvector, depending on the matrix indexing convention used (right/column eigenvectors are used here). In many cases of interest the Laplacians are symmetric, making this distinction irrelevant. According to the Perron-Frobenius theorem, the PF eigenvector is always nonnegative and can be interpreted as a probability distribution.

Laplacian matrices define distributions through their PF eigenvectors, and they can in turn be defined from distributions by constructing a matrix with a matching PF eigenvector. This equivalence between distributions and Laplacian matrices provides a natural and useful bridge between probability distributions and Laplacian eigenspaces. The duality between Laplacian matrices and probability distributions can be used for the purposes of statistical analyses and unsupervised machine learning. Their spectral decompositions provide data-dependent bases for describing patterns that represent global, nonhierarchical structures in the underlying graph.
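One way to build a matrix whose zero-eigenvalue right eigenvector matches a given distribution is sketched below. The construction (off-diagonal entries −w_ij / f_j for symmetric weights w, with diagonals chosen so that each column sums to zero) is an assumption made for illustration and is not necessarily the construction used for the results reported here; the name `laplacian_from_distribution` is hypothetical.

```python
def laplacian_from_distribution(f, w):
    """Build a generator-style Laplacian L with L f = 0.

    f : strictly positive probabilities, one per node.
    w : symmetric nonnegative weight matrix (assumed connectivity pattern).
    Off-diagonals are -w[i][j] / f[j]; each diagonal entry makes its column sum to zero.
    """
    n = len(f)
    lap = [[0.0] * n for _ in range(n)]
    for j in range(n):
        for i in range(n):
            if i != j:
                lap[i][j] = -w[i][j] / f[j]
        lap[j][j] = -sum(lap[i][j] for i in range(n) if i != j)
    return lap

f = [0.1, 0.2, 0.3, 0.4]
# Path graph 0-1-2-3 with unit symmetric weights.
w = [[0, 1, 0, 0],
     [1, 0, 1, 0],
     [0, 1, 0, 1],
     [0, 0, 1, 0]]
lap = laplacian_from_distribution(f, w)
# Components of L f; each should vanish up to rounding error.
residual = [sum(lap[i][j] * f[j] for j in range(4)) for i in range(4)]
```

Because w is symmetric, each component of the product reduces to a difference of two equal sums of weights, so f is a right eigenvector with eigenvalue zero for any positive f.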

Laplacian mixture models are one way of probabilistically solving the multiple Laplacian eigenvector problem, as section 0.2 describes. They generate probabilistic mixture models directly from Laplacian eigenspaces by optimally combining other eigenvectors with the Perron-Frobenius eigenvector.

0.2 Mixture models

Distinct component processes generate superpositions of overlapping component distributions when observed in aggregate, creating a mixture distribution. The mixture problem is not easy to solve in general, in part because it is so open-ended and difficult to define objectively in real-world contexts. In 1894, Karl Pearson stated that the analytical difficulties, even for the case n = 2, are so considerable that it may be questioned whether the general theory could ever be applied in practice to any numerical case [14]. Current unmixing or separation algorithms still cannot predict the number of components directly from observations of the mixture without additional information, or else they are parametric approaches that restrict components to fixed functional forms, which are often unrealistic assumptions, especially in high-dimensional spaces [3].

Methods for separating the components of nonnegative mixtures of the form

$$f(x) = \sum_{k=1}^{m} a_k f_k(x) \tag{1}$$

are called mixture models, where m is the number of mixture components and x ∈ Ω is an element of an index set Ω, which may be continuous or discrete. All of the results presented here for the continuous variable cases carry over to the discrete cases by replacing integrals with summations for numerical accessibility.

Since all continuous problems must be discretized for numerical applications, the focus is on discrete variables x with continuous problems saved for the appendix. For all practical problems, it is safe to assume f(x) is normalized as a probability distribution without loss of generality.

The fk(x) are known as the mixture components or component probability distributions of each independent process or signal source, and are also assumed to be normalized without loss of generality. The ak ∈ [0, 1] are the weights or mixing proportions summing to one and forming a discrete probability distribution P(k) = ak, which is known as the class prior distribution in the context of probabilistic or Bayes classifiers [2].

Finite mixture models can be used to define probabilistic classifiers and vice versa. From exact knowledge of {f, f1, …, fm, a1, …, am}, the posterior conditional distribution of an optimal Bayes classifier for any observed y ∈ Ω can be expressed as

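$$\begin{aligned} P(k \mid y) &= \frac{P(y \mid k)\,P(k)}{P(y)} && (2) \\ &= \frac{a_k f_k(y)}{f(y)}, \qquad k = 1, \ldots, m, && (3) \end{aligned}$$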

forming a partition of unity over the space of observations or data [15]. The component distributions fk(x) can be understood as the class conditional distributions P(x∣k) and f(x) as the evidence P(x) in the context of Bayes classifiers and supervised machine learning. As probabilistic/Bayes classifiers form partitions of unity (2) from finite mixture models (1), so do finite mixture models form partitions of unity as well. In other words, partitions of unity can be defined first, without any reference to finite mixture models (1), as

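$$\sum_{k=1}^{m} p_k(x) = 1, \qquad x \in \Omega, \tag{4}$$

subject to the constraints

$$0 \leq p_k(x) \leq 1, \qquad k = 1, \ldots, m. \tag{5}$$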
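As a small numerical illustration, the posterior probabilities of a discrete two-component mixture can be computed with Bayes' rule and checked to be nonnegative and to sum to one at every point. The weights and component distributions below are made-up toy values, and the function name `posteriors` is hypothetical.

```python
def posteriors(weights, components, x):
    """Posterior P(k | x) = a_k f_k(x) / f(x) for a discrete finite mixture."""
    joint = [a * fk[x] for a, fk in zip(weights, components)]  # a_k f_k(x)
    evidence = sum(joint)                                      # f(x)
    return [value / evidence for value in joint]

a = [0.25, 0.75]        # mixing proportions a_k (toy values)
f1 = [0.7, 0.2, 0.1]    # component distribution f_1 on a 3-point domain
f2 = [0.1, 0.3, 0.6]    # component distribution f_2
p = [posteriors(a, [f1, f2], x) for x in range(3)]
```

Each row of `p` holds the conditional probabilities of the two components at one point of the domain, so the rows are exactly the values of a partition of unity evaluated on Ω.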

To connect mixture models with partitions of unity, the mixture components and weights from mixture models (1) can be related to (4) for k = 1, …, m according to

$$a_k = \int_\Omega p_k(x)\, f(x)\, dx, \qquad f_k(x) = \frac{p_k(x)\, f(x)}{a_k}, \tag{6}$$

with ak = ∑x∈Ω pk(x) f(x) for discrete Ω cases. This explicitly shows the formal equivalence of partitions of unity (4) as discriminative versions of finite mixture models (1). In this case, the partition of unity can be interpreted as pk(x) = P(k∣x), the mixture component conditional probabilities.
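The conversion from a partition of unity back to mixture weights and components can be sketched for the discrete case as follows. The sketch assumes the relations a_k = ∑ p_k(x) f(x) and f_k(x) = p_k(x) f(x) / a_k, which follow from Bayes' rule; the toy values and the name `mixture_from_partition` are illustrative.

```python
def mixture_from_partition(p, f):
    """Recover weights a_k and components f_k from p_k(x) and f(x).

    p : list of m lists, p[k][x] = conditional probability of component k at x.
    f : mixture distribution over the discrete domain.
    """
    n = len(f)
    weights = [sum(pk[x] * f[x] for x in range(n)) for pk in p]
    components = [
        [pk[x] * f[x] / ak for x in range(n)]
        for pk, ak in zip(p, weights)
    ]
    return weights, components

p = [[1.0, 0.5, 0.0],
     [0.0, 0.5, 1.0]]     # partition of unity on a 3-point domain (toy values)
f = [0.2, 0.4, 0.4]       # mixture distribution
a, comps = mixture_from_partition(p, f)
# Recombine: sum_k a_k f_k(x) should reproduce f(x).
recombined = [sum(a[k] * comps[k][x] for k in range(2)) for x in range(3)]
```

The recovered weights sum to one, each component is normalized, and the weighted sum of the components reproduces the original mixture.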

Partitions of unity (4) do not explicitly involve f(x) the mixture distribution, or require it as an input. In other words, the component conditional probabilities can still be computed even if knowledge of f(x) the underlying mixture distribution is not available or is not required. This makes the partition of unity form of mixture models (4) very useful in practice, since they apply even in cases where estimating f(x) is not relevant or available such as cluster analysis, graph partitioning, and domain decomposition. Therefore, mixture models can be considered as a special case of partitions of unity applied to the separation of mixture probability distributions.

In applications such as cluster analysis or graph partitioning, unlabeled data are automatically assigned to various groups known as clusters to distinguish them. Clusters can be understood as unsupervised analogs of classes from supervised machine learning. In cluster analysis, computing a partition of unity of the form (4) provides a probabilistic or fuzzy clustering or partition. The components of the partition of unity can be interpreted as conditional probabilities over the clusters or partitions, analogous to the class conditional distributions (2) of Bayes classifiers.

For such problems the mixture components {fk(x)} are not relevant, and only the cluster conditional probabilities {pk(x)} are needed. Formally, they can be viewed as mixture models via (4) where f(x) is constant, i.e. the uniform distribution over the domain Ω. Soft clusterings are most useful when insights into the global structures of data spaces or networks are of interest. Hard clustering algorithms, such as k-means, do not provide any information about the global, nonhierarchical relationships between items or nodes in a graph. Rather than being dichotomous as implied by their names, soft and hard clustering approaches are complementary, and may be used together in one analysis to answer different questions about the same dataset.

Materials and methods

Finite mixture models of the form (1) are easy to understand and interpret due to their probabilistic definition. This motivates hybridizing finite mixture models and Laplacian eigenspace methods, as described in this section.

0.3 Notation

Let V ≡ {1, …, N} be the set of ordered vertices of a simple strongly connected graph G with weight function w: V × V → [0, 1], and w(i, j) = 0 if no edge between vertices i and j exists. Let A be the weighted adjacency matrix of G with aij = w(i, j), and let d ≡ eT A and D ≡ diag(d) be the corresponding diagonal weighted degree matrix, where e represents the column vector of all ones. The weighted Laplacian of graph G for some fixed ordering on the vertices V is given by

$$\Delta_{uv} = \begin{cases} d_v & \text{if } u = v, \\ -a_{uv} & \text{if } u \neq v, \end{cases} \tag{7}$$

where dv is the weighted vertex degree of the v-th vertex, and u = 1, …, N, v = 1, …, N. In matrix notation this becomes Δ = D − A. Using this definition, the Perron-Frobenius vector corresponds to the right column eigenvector of Δ with eigenvalue zero.
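A minimal sketch of the matrix form Δ = D − A is given below for a small weighted graph stored as nested lists. The example weights are arbitrary, and the function name `graph_laplacian` is illustrative.

```python
def graph_laplacian(adj):
    """Return the Laplacian D - A for a weighted adjacency matrix (nested lists).

    Degrees follow d = e^T A (column sums), so every column of the result sums to zero.
    """
    n = len(adj)
    degrees = [sum(adj[u][v] for u in range(n)) for v in range(n)]
    return [
        [(degrees[v] if u == v else 0.0) - adj[u][v] for v in range(n)]
        for u in range(n)
    ]

# Symmetric weighted triangle; for symmetric weights the constant vector
# is the eigenvector with eigenvalue zero.
A = [
    [0.0, 0.5, 1.0],
    [0.5, 0.0, 0.2],
    [1.0, 0.2, 0.0],
]
laplacian = graph_laplacian(A)
row_sums = [sum(row) for row in laplacian]  # components of the Laplacian times e
```

For this symmetric example every row sum vanishes up to rounding, which confirms that the constant vector lies in the null space of Δ.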

Let ϕi(x), i = 0, …, N − 1 denote the right eigenvectors of N × N Laplacian matrices Δ of simple strongly connected weighted or unweighted graphs, and similarly for their continuous eigenfunction analogs where N = ∞. Since the Laplacian matrices Δ are assumed to be normal, their right eigenvectors form a complete orthonormal basis over Ω, the discrete or continuous domain. Assume that these eigenvectors are ordered according to ascending eigenvalue magnitude. The Laplacian mixture modeling algorithm estimates the true finite mixture distribution components fk(x), k = 1, …, m from (1) directly from {ϕ0, …, ϕm − 1}, the first m right eigenvectors of normal Laplacian matrices. For now, assume that m is known or given as a constant, with m ≪ N in many dimensionality reduction problems [16, 17]. Section 0.6 describes methods for estimating m using existing model selection techniques in cases where it is not known.

Although the algorithm is defined for continuous domains, the scope is limited to discrete problems here, to focus on the most practical situations. The discrete input is the set of eigenvectors 1, …, m of Δ, the weighted graph Laplacian. Unweighted graphs occur for binary weights w: V × V → {0, 1} that are all zeros or ones, which can be considered a special case of continuous weights on the unit interval. The assumption that w has a maximum value of 1 can be made without loss of generality.

Three types of input data and their corresponding analysis problems are considered:

Graph or network data are converted to Laplacian matrices via their weighted adjacency matrices, and the problem is to infer the optimal fuzzy assignments or soft partitioning for community detection and centrality scoring.

Feature vector data are converted into Laplacian form using pairwise similarity or distance measures, and the problem is to estimate the conditional mixture probabilities.

Sampled values of a mixture density function f(x), for which the resulting Laplacian is designed so that f(x) ≡ ϕ0(x), the Perron-Frobenius or first Laplacian eigenvector, and the problem is to estimate the mixture components.

The vector of fuzzy spectral estimates for the true values pk(x) from (4) can be defined in terms of a nonlinear optimization problem for M, the square invertible m × m matrix of expansion coefficients.

Minimizing the error or nondeterminicity of the model from a Bayes classifier standpoint (2) serves as an objective for determining the optimal matrix M* having the least total overlap of the macrostate boundaries allowable by the subspace spanned by the selected right eigenvectors. The sum of the expected values of the squares of the conditional probabilities

$$\sum_{k=1}^{m} \int_\Omega p_k^2(x)\, f(x)\, dx \tag{8}$$

equals one if and only if they are perfectly binary or deterministic. Therefore the deviation of the expected squares of the conditional probabilities from unity serves as a measure of the squared error i.e. fuzziness, overlap, or nondeterminicity.

0.4 Definition

Let the loss function 0 ≤ L < 1 represent this expected error or nondeterminicity, i.e. areas of fuzziness or overlap where the conditional probabilities are non-binary,

$$L \equiv 1 - \sum_{k=1}^{m} \int_\Omega p_k^2(x)\, f(x)\, dx, \tag{9}$$

which is equivalent to the probabilistic classifier’s expected error using a quadratic loss function [2]. (∫Ω • dx can be replaced by ∑x∈Ω • in discrete cases.) This objective function definition connects Laplacian eigenspace methods and probabilistic/Bayes classifiers (2) derived from finite mixture models.
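For discrete domains, the loss can be sketched as one minus the f-weighted sum of squared conditional probabilities, following the verbal description above. The function name `expected_overlap_loss` and the toy inputs are illustrative assumptions.

```python
def expected_overlap_loss(p, f):
    """Discrete loss: 1 - sum_k sum_x p_k(x)^2 f(x).

    p : list of m lists, p[k][x] = conditional probability of component k at x.
    f : probability distribution over the discrete domain.
    """
    n = len(f)
    return 1.0 - sum(pk[x] ** 2 * f[x] for pk in p for x in range(n))

f = [0.25, 0.25, 0.25, 0.25]            # uniform distribution on four points
crisp = [[1.0, 1.0, 0.0, 0.0],
         [0.0, 0.0, 1.0, 1.0]]          # binary (deterministic) assignments
fuzzy = [[0.5, 0.5, 0.5, 0.5],
         [0.5, 0.5, 0.5, 0.5]]          # maximally overlapping assignments

loss_crisp = expected_overlap_loss(crisp, f)
loss_fuzzy = expected_overlap_loss(fuzzy, f)
```

Binary assignments give a loss of zero, while the uniform two-component assignment gives 1 − 1/m = 0.5, the largest value attainable for m = 2.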

The minimum expected error condition

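$$M^{*} \equiv \underset{M}{\arg\min}\; L(M) \tag{10}$$

subject to the partition of unity constraints

$$p_k(x) \geq 0, \qquad \sum_{k=1}^{m} p_k(x) = 1, \qquad x \in \Omega,\; k = 1, \ldots, m, \tag{11}$$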

selects maximally crisp (non-overlapping) or minimally fuzzy (overlapping) decision boundaries among classifiers formed by the span of the selected right eigenvectors. This objective function attempts to minimize the expected overlap or model error between the component distributions of the mixture distribution f(x) ≡ ϕ0(x) defined by the PF eigenvector of the input Laplacian. Minimizing the loss L(M) occurs over the matrix of expansion coefficients for the eigenspace spanned by the selected right eigenvectors which serve as an orthogonal basis. The eigenbasis provides linear inequality constraints defining a feasible region in terms of a convex hull over M ∈ GL(m) the expansion coefficient parameter space.

Therefore

| (12) |

provides the following definition of a Laplacian mixture model via (6):

| (13) |

where M* solves (10) and (11), a linearly constrained concave quadratic optimization problem.

0.5 Global optimization

In terms of probabilistic classifiers, the loss function L(M) represents the mean squared error of the probabilistic classifier derived from the mixture model specified by each value of M. By minimizing L(M), Laplacian mixture models provide spectrally regularized minimum mean squared error separations for a variety of input data types, as shown in Fig 1.

For any viable value of m, the number of mixture components, the column vector valued function

| (14) |

is numerically optimized over M to compute the Laplacian mixture model. The optimum is determined via global optimization of L(M) over the set of coordinate transformation matrices M satisfying the partition of unity constraints (11), which yields M*, the globally optimized model parameters.

In matrix notation, any feasible model can be written in terms of M and the column vector of basis functions

| (15) |

as

| (16) |

subject to

| (17) |

where e = (1 1 ⋯ 1)T and e1 = (1 0 ⋯ 0)T are m × 1 column vectors.

Now the objective function L(M) can be expressed in terms of ω and f(x) ≡ ϕ0(x) as

| (18) |

| (19) |

| (20) |

| (21) |

a concave quadratic function where tr(M^T M) is equal to ‖M‖_F^2, the squared Frobenius norm of M, the eigenbasis transformation matrix.

Weighted graph Laplacians may not always be normal matrices, in which case the eigenvectors may not be orthogonal. In many cases, the Laplacian can be symmetrized prior to running the Laplacian mixture modeling algorithm. Directed weighted graphs with combinatorially symmetric adjacency matrices correspond to finite ergodic (i.e. irreducible and aperiodic) Markov chains satisfying detailed balance. Combinatorially symmetric graph Laplacians can be symmetrized by conjugation with diagonal matrices, analogous to a change of variables in the corresponding heat equation [18, 19]. Reversing the change of variables after computing the Laplacian mixture model allows the same form as above to be used in this more general context. This type of symmetrized Laplacian has the same eigenvalues as the original unnormalized graph Laplacian, unlike the standard symmetric normalized graph Laplacian [20].

These results allow the linearly constrained global optimization problem for M* to be stated as

| (22) |

The linear inequality constraints Mϕ ≥ 0 encode all of the problem-dependent data from the input Laplacian via

| (23) |

This linearly constrained concave quadratic problem is an archetypal example of an NP-hard problem because of the combinatorial number of vertex solutions defined by the convex polytope formed by the constraints. Statistically useful solutions are not guaranteed to exist, and even when they do, approximating the solution to (22) requires specialized numerical algorithms. Simple yet nontrivial problems where this optimization problem can be solved analytically, yielding optimal models, are shown next. This provides evidence for the usefulness of Laplacian mixture models, and highlights some of their spectral graph theoretic properties.

0.6 Interpolating cluster graphs

In this section, Laplacian mixture models are shown to perform optimally on a type of graph that can be called interpolating cluster graphs. Cluster graphs are unions of complete graphs, and have block diagonal adjacency matrices, corresponding to disjoint subgraphs. Complete graphs have adjacency matrices whose off-diagonal elements are all equal to one, corresponding to fully interconnected vertices. Cluster and complete graphs are both well studied and understood from the perspective of spectral graph theory [21].

Interpolating them allows spectral graph theoretic results for cluster and complete unweighted graphs to be extended to cluster-weighted complete graphs. Suppose that K is the number of blocks, Nk ∈ N≥2, k = 1, …, K, are the vertex counts for each cluster, and N ≡ ∑k Nk is the total vertex count. Let the family of interpolating cluster graphs be defined by their adjacency matrix form:

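$$B \equiv (1 - \varepsilon)\left(e_N e_N^T - I_N\right) + \varepsilon A, \tag{24}$$

$$A \equiv \bigoplus_{k=1}^{K} \left(e_{N_k} e_{N_k}^T - I_{N_k}\right). \tag{25}$$

The matrix I_n denotes the n-by-n identity matrix and e_n e_n^T, with e_n ∈ R^n, denotes the outer product of the real vector of all ones with itself, i.e. the rank-one n-by-n matrix of all ones.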

Although the term “interpolating graph” was introduced here for the specific case of interpolating between cluster graphs and complete graphs, the concept can be defined for any two arbitrary graphs with the same nodes. In general, given two N × N graph adjacency matrices A0 and A1, an interpolating graph adjacency matrix Ainterp = (1 − ε)A0 + εA1 can be defined as their convex sum. Using this form, interpolating cluster graphs 24 can be written B = (1 − ε)Acomplete + εAcluster, where Acomplete and Acluster are the respective N × N adjacency matrices for a complete graph and a cluster graph. This shows that interpolating cluster graphs form a connection between complete and cluster graphs.

Therefore, interpolating graphs can be seen as a specific type of graph perturbation. Graph perturbations such as those involving mass-spring networks have been studied in [22–24]. Studying the properties of interpolating graphs in more depth may be of theoretical interest, but that is not the focus of this article.

The adjacency matrices of interpolating cluster graphs interpolate between the adjacency matrices of cluster graphs and the adjacency matrices of complete graphs via ε ∈ [0, 1], a separation parameter. Interpolating cluster graphs are complete graphs when the separation parameter ε equals zero, and they are cluster graphs for ε = 1. In between, for 0 < ε < 1, interpolating cluster graphs provide models of groups of highly similar vertices representing homogeneous network communities.

When ε = 1, B = A, a block diagonal adjacency matrix corresponding to a cluster graph that is a union of K smaller complete Nk-vertex graphs. Results from spectral graph theory show that for cluster graphs, the zero eigenvalue has multiplicity K and that the connected components of the graph can be directly identified from the position of their nonzero elements. All of the information about the structure of cluster graphs is contained in the K-dimensional Laplacian eigenspace associated with the zero eigenvalue.

With ε = 0, B = Acomplete, the adjacency matrix of a complete N-vertex graph with uniform edge weights. Spectral graph theory shows that the multiplicity of the 2nd eigenvalue of a complete graph is N − 1, and its value is N.

The 2nd Laplacian eigenvalue, called the Fiedler value, measures the connectivity of the graph and can be used to find optimal partitions [25]. When the Fiedler value has multiplicity one, its corresponding eigenvector is known as the Fiedler vector. The eigendecomposition of interpolating cluster graphs was found to have a simple analytically solvable form.

Interpolating cluster graphs have a 2nd Laplacian eigenvalue with multiplicity K − 1, so in some sense, they have K − 1 Fiedler vectors. This makes using the Fiedler vector to identify the corresponding cluster partitions a more challenging problem. In this section, Laplacian mixture models are shown to optimally solve it.

For any 0 ≤ ε < 1, the graph is connected, the zero eigenvalue of B has multiplicity one, and the eigenvector with eigenvalue zero is the constant vector. The one-dimensional Laplacian eigenspace associated with the zero eigenvalue no longer contains any information about the cluster structure of the graph. In addition, the 2nd eigenvalue equals N(1 − ε) with multiplicity K − 1, and the eigenvectors associated with this eigenvalue are not uniquely determined. It is no longer obvious how to infer the cluster assignments of each vertex directly from the eigenvectors associated with the first K eigenvalues.
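The spectral claims above can be checked numerically on a small instance. The sketch below builds B = (1 − ε)Acomplete + εAcluster for assumed cluster sizes 3, 4, and 5 with ε = 0.3 and computes the Laplacian spectrum with NumPy; the sizes, the value of ε, and the helper names are arbitrary choices for illustration.

```python
import numpy as np

def interpolating_cluster_adjacency(sizes, eps):
    """Adjacency matrix (1 - eps) * A_complete + eps * A_cluster."""
    n = sum(sizes)
    complete = np.ones((n, n)) - np.eye(n)
    cluster = np.zeros((n, n))
    start = 0
    for size in sizes:
        stop = start + size
        cluster[start:stop, start:stop] = 1.0  # fully connected block
        start = stop
    cluster -= np.eye(n)                       # remove self-loops
    return (1.0 - eps) * complete + eps * cluster

def laplacian(adj):
    """Graph Laplacian D - A with degrees taken as column sums."""
    return np.diag(adj.sum(axis=0)) - adj

sizes, eps = [3, 4, 5], 0.3
N, K = sum(sizes), len(sizes)
B = interpolating_cluster_adjacency(sizes, eps)
eigenvalues = np.linalg.eigvalsh(laplacian(B))  # returned in ascending order
```

In this instance the smallest eigenvalue is zero, the next K − 1 = 2 eigenvalues both equal N(1 − ε) = 8.4, and the remaining eigenvalues are strictly larger.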

For interpolating cluster graphs, the Laplacian mixture modeling optimization problem (22) can be analytically solved, as shown below. Laplacian mixture models recover the structure of interpolating cluster graphs exactly, converting the first K eigenvectors into binary conditional probabilities for belonging to each cluster. Although the eigenvectors associated with the 2nd eigenvalue are not uniquely determined, expressions for them can be defined to encode the transition rates of conserved quantities between blocks.

Choosing the first cluster as a reference for concreteness, the analytic expressions for these K − 1 eigenvectors, ϕij, where i = 1, …, N, and j = 0, …, K − 1, are given by:

| (26) |

The corresponding 2nd eigenvalue with multiplicity K − 1 equals N(1 − ε). These expressions can be easily verified e.g. by direct substitution or using symbolic manipulation tools for specific choices of K.

Although the eigenvectors listed above are not mutually orthogonal, they are orthogonal to the constant vector, since they sum to zero. They are also linearly independent, and can therefore be used to prove the optimality of the Laplacian mixture model for this graph. Putting

| (27) |

| (28) |

solves the Laplacian mixture modeling problem explicitly, in closed-form for any finite K < ∞ number of blocks.

These binary conditional probabilities form an indicator matrix for the cluster assignments of each vertex. That is, pij = 1 if vertex i is in cluster j and 0 otherwise, providing an exact solution to the cluster-weighted complete graph analysis problem. This corresponds to an ideal solution with no fuzziness in the assignments of each vertex to a single cluster. The objective function value L from (9) reaches its lower bound of zero for such binary mixture component conditional probabilities. Laplacian mixture models therefore have provable optimality properties on interpolating cluster graphs.
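That a binary indicator matrix lies in the span of the first K eigenvectors can be illustrated numerically. The sketch below is not the closed-form solution (27)–(28) and does not run the optimization: it uses the known cluster labels to pick one representative vertex per cluster and inverts the resulting K × K matrix, which is an assumption made purely for demonstration. The cluster sizes and ε are arbitrary.

```python
import numpy as np

sizes, eps = [3, 4, 5], 0.3
K = len(sizes)
labels = np.repeat(np.arange(K), sizes)          # known cluster label of each vertex

# Interpolating cluster graph: weight 1 within clusters, 1 - eps between clusters.
same_cluster = labels[:, None] == labels[None, :]
B = np.where(same_cluster, 1.0, 1.0 - eps)
np.fill_diagonal(B, 0.0)
lap = np.diag(B.sum(axis=0)) - B

# First K eigenvectors (ascending eigenvalues); they are constant on each cluster.
_, vectors = np.linalg.eigh(lap)
phi = vectors[:, :K]                             # N x K eigenvector matrix

# One representative row per cluster (uses the known labels, for illustration only).
representatives = [int(np.argmax(labels == k)) for k in range(K)]
R = phi[representatives, :]                      # K x K, invertible
M = np.linalg.inv(R).T                           # so that p^T = M phi^T
p = phi @ M.T                                    # N x K conditional probabilities
```

Because every row of `phi` coincides with the representative row of its cluster, the product returns the cluster indicator matrix up to rounding error, so each vertex is assigned to exactly one cluster with probability one.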

For this analytically solvable case, Laplacian mixture models exactly recover the block diagonal structure of the interpolating cluster graphs, for all cluster numbers K, cluster sizes Nk, and separation parameters ε ∈ (0, 1). For any fixed K, this can be easily verified directly or via symbolic manipulation tools. Rigorous proofs, using mathematical induction, are beyond the scope of this article. Having closed-form solutions to Laplacian mixture modeling problems on arbitrarily large graphs and cluster numbers allows validation of numerical solvers.

In the limit of vanishing separation parameter ε, the graph approaches a complete graph without any cluster structure. When ε becomes arbitrarily close to zero, the 2nd eigenvalue becomes arbitrarily close to the higher eigenvalues, and the gap between the 2nd and higher eigenvalues becomes arbitrarily small. Laplacian mixture models remain exact for this class of graphs regardless of the magnitude of the spectral gap, i.e. even when there are no sparse cuts. These analytic results show that there are cases where Laplacian spectral gap presence is sufficient but not necessary for the first K eigenvectors to be useful in identifying graph structure.

Theoretical worst-case analysis suggests that exact solutions to the NP-hard global optimization problem involved cannot, in general, be computed efficiently. Nevertheless, useful approximate solutions have been developed for density function estimate, feature vector, and graph data during the course of numerical testing. Numerical examples are presented next to demonstrate the flexibility, versatility, and other features of Laplacian mixture modeling approaches.

Results and discussion

The NP-hard nature of the linearly constrained concave quadratic global optimization problem makes exhaustively computing all possible models impractical, because the number of vertices in the convex hull defined by the linear inequality constraints grows geometrically with increasing model dimension. Empirical testing suggests that for most applications this theoretical obstacle can be avoided in practice, and that accurate solution-sampling strategies can be implemented scalably to approximate the global optimizer on large datasets. In part, this is due to the detailed information contained in each of the factors or mixture components identified, owing to their fuzzy/overlapping/probabilistic form.

Laplacian mixture model components identify large-scale regions of the graph in such a way that each region covers the entire graph. Each mixture component, factor, or dimension provides information about the entire graph whose weighting favors the region it corresponds to. As demonstrated in this section, it is possible to efficiently compute a reasonable range of components or dimensions even on relatively large graphs, making the NP-hard aspects of the global optimization problem circumventable.

Optimizing the sampling strategy for approximately solving the linearly constrained concave quadratic global optimization problem is an open problem not discussed here. The global optimization search parameters that define the sampling are used as inputs for the modified Frank-Wolfe heuristic, which involves solving a set of linear programming problems and is described in detail in [26]. Since the search parameters are not data-dependent, and for the sake of consistency when comparing different runs, they were precomputed and stored in a lookup table, so computing them does not contribute any significant overhead to the optimization runtimes. The current strategy is to gain confidence in the reliability of the approximant by slightly oversampling the solution space, so that the same approximant is located multiple times using different search parameters.

Because of numerical limits of finite-precision arithmetic, it is impossible to perfectly enforce the constraint that the transformation matrix M* be invertible. One way of dealing with this issue is to apply an even stricter constraint that often makes sense for data analysis applications. This constraint requires that all factors contain at least one item whose probability for that factor is larger than all other factors.

This means that hard-thresholding the conditional factor probabilities must not create any degenerate clusters with zero items assigned to them. It is a reasonable criterion for applications where each factor represents an independent observable signal-generating process. Therefore, the optimal solution is chosen by minimizing the loss function L(M) over all non-degenerate models. Many of the computed solutions are degenerate, an aspect of the NP-hard optimization problem that is partially circumvented in practical situations by using heuristics to accurately approximate the solution.

Algorithm 1 lists the pseudocode describing the actual operations performed for all of the numerical results presented here. In this section, several numerical examples are presented to illustrate some Laplacian mixture model sampling strategies and demonstrate their efficacy on both synthetic and real-world problems. The results show high levels of performance across a range of problem types without encountering problems such as numerical instability or sensitivity to small variations of tunable parameters. All model computation run-times were less than 24 hours using Matlab on a dual 2.40 GHz Intel Xeon E5-2640 CPU running the 64-bit Windows 10 operating system with 128 GB of RAM.

Algorithm 1 Compute p for N × m Laplacian eigenvector matrix ϕ

1: procedure LaplacianMixtureModel (ϕ, S)

2: Initialize scalar Lmin ← ∞

3: Initialize m × m array M*

4: for s ∈ S do ⊳ Run the modified Frank-Wolfe heuristic

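5: Ms ← output of the modified Frank-Wolfe heuristic [26] for search parameters s

6: pT ← Ms ϕT

7: Initialize 1 × m array c ← 0

8: for i = 1, …, N do ⊳ Run the degeneracy check

9: j ← index of the largest entry in row i of p

10: cj ← cj + 1

11: end for

12: if every entry of c is positive then

13: Compute L(Ms)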

14: else

15: L(Ms) ← ∞

16: end if

17: if L(Ms) < Lmin then

18: Lmin ← L(Ms)

19: M* ← Ms

20: end if

21: end for

22: pT ← M*ϕT

23: Return {p, M*}

24: end procedure
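The selection loop of Algorithm 1 can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions, not the Matlab implementation used for the results: the candidate matrices stand in for the outputs of the modified Frank-Wolfe heuristic, and `loss` is a hypothetical callable standing in for L(M). The function names are also hypothetical.

```python
from math import inf


def transform(M, phi):
    """Return p with p^T = M phi^T, i.e. p[i][j] = sum_k M[j][k] * phi[i][k]."""
    return [[sum(m_jk * x_k for m_jk, x_k in zip(row_m, row_phi))
             for row_m in M]
            for row_phi in phi]


def is_nondegenerate(p):
    """True if every factor is the hard-threshold winner for at least one item."""
    m = len(p[0])
    counts = [0] * m
    for row in p:
        j = max(range(m), key=lambda k: row[k])  # ties go to the lowest index
        counts[j] += 1
    return min(counts) > 0


def select_model(phi, candidates, loss):
    """Pick the lowest-loss non-degenerate candidate transformation.

    phi        : N x m list of lists (Laplacian eigenvectors as columns)
    candidates : iterable of m x m matrices (assumed heuristic outputs)
    loss       : callable M -> float (assumed stand-in for L(M))
    """
    l_min, m_star = inf, None
    for M in candidates:
        p = transform(M, phi)
        value = loss(M) if is_nondegenerate(p) else inf
        if value < l_min:
            l_min, m_star = value, M
    p_star = transform(m_star, phi) if m_star is not None else None
    return p_star, m_star
```

Degenerate candidates receive an infinite loss, so they can never replace a previously found non-degenerate model, mirroring lines 12 through 20 of the pseudocode.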

Data are cleared from memory after the sparse similarity matrix is computed to conserve resources. Eigenvector estimates for the smallest-magnitude eigenvalues of the Laplacian are computed using the Matlab function eigs, a sparse iterative solver. The similarity matrix may be cleared from memory after computing the first m eigenvectors, before the spectral mixture models are computed.

Noisy interpolating cluster graphs

The analytic solution of the spectral mixture modeling problem for interpolating cluster graphs provides provable optimality results for this class of idealized noise-free graphs. Performance evaluation in the presence of noise is also necessary in order for these theoretical results to be meaningful on real graph datasets, which contain noise.

It is not possible to test every possible combination of cluster number, cluster size distribution, noise level, and noise type. Nevertheless, testing with a few varied noise levels and combinations is sufficient to provide evidence for the stability and robustness of the algorithm performance.

To demonstrate the algorithm’s multiscale pattern recognition capabilities, five log-linearly spaced cluster sizes were chosen, ranging from 2 to 10,000 vertices. Six varied noise levels corresponding to choices of the α uniform noise parameter were also tested. Uniform noise was symmetrically added to noise-free interpolating cluster graph adjacency matrices B(ε) according to

| (29) |

to generate noisy adjacency matrices, where u is a sample from the uniform distribution over the unit interval. This form ensures that all elements of the noisy adjacency matrices lie between zero and one. (Matlab code implementing the generation of these noisy interpolating cluster graphs is available upon request.)

Sparsifying the graph prior to analysis by using a cutoff parameter on the nearest neighbors of each vertex is common in practice. See [27] for details on the intuition and justification for this sparsification parameter. Five different values of the kNN nearest neighbor parameter and three different values of the kmin outlier removal parameter were applied to validate the methodology subsequently used for real world data. The entire test was repeated for both choices of the outlier noise parameter, Nout = 0 and Nout = 1.

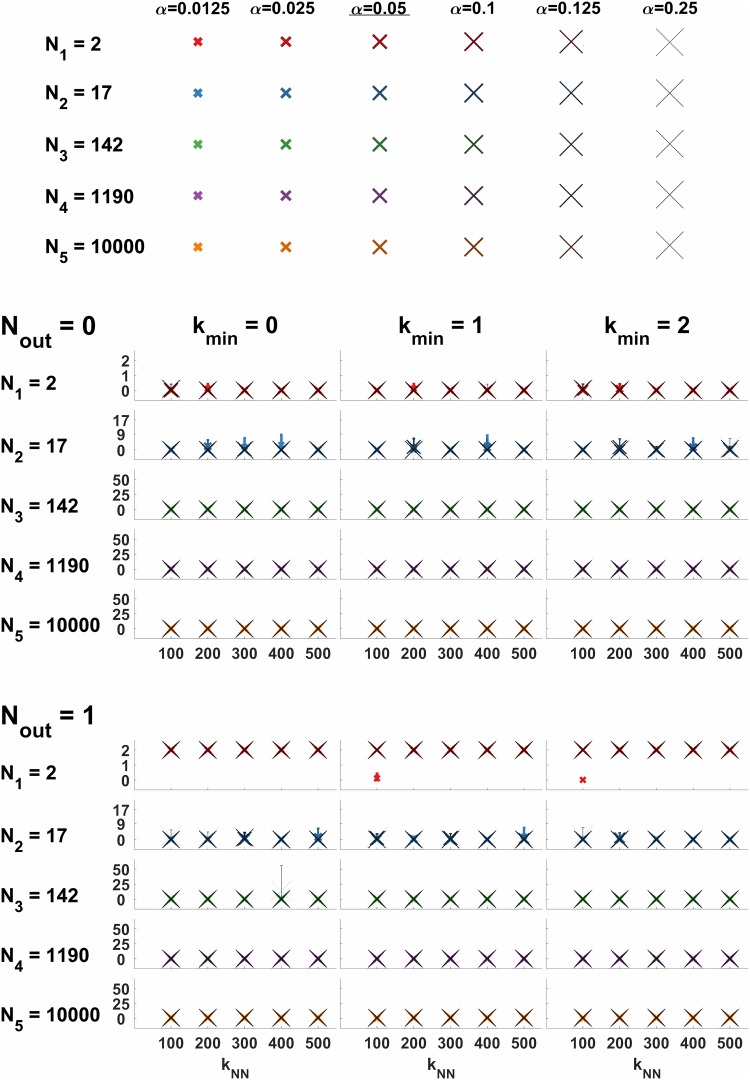

For each of the resulting 180 {α, kNN, kmin, Nout} parameter combinations, ten randomized samples were drawn using the same pseudorandom seed to allow direct comparisons to be made. Panels of Fig 2 show the number of errors vs. kNN as errorbar overlay plots, representing a total of 1800 runs. Markers represent average labeling errors after assigning each vertex to one of the 5 mixture components identified. Error bar lengths indicate one sample standard deviation, and colors correspond to the different cluster sizes as labeled.

Fig 2. Overlay plot matrix of number of errors vs. kNN, representing a total of 1800 Laplacian mixture modeling algorithm runs.

Noisy interpolating cluster graph matrices were randomly generated for each run. Markers represent sample averages of labeling error numbers, after assigning each vertex to one of the 5 mixture components identified. Marker sizes correspond to uniform noise level α as indicated by the marker key on top of the plot matrix. Error bar lengths indicate one sample standard deviation. Colors correspond to the different log-linearly spaced cluster sizes (2, 17, 142, 1190, 10⁴) as labeled. Columns correspond to different values of kmin, the minimum strongly-connected neighbor number. The outlier noise level is indicated by the Nout parameter separating the upper and lower sections of the plot matrix.

In the absence of outliers (Nout = 0), perfect accuracy was achieved for all noise levels on cluster sizes larger than 100. For some choices of kNN, for any choice of kmin, perfect accuracy was obtained for all cluster sizes over all samples, showing that setting kmin > 0 does not introduce algorithm errors. In fact, perfect accuracy was achieved at Nout = 1 for all cluster sizes and noise levels using kmin = 1 for several values of kNN.

For one outlier (Nout = 1), the accuracy of the algorithm on the smallest cluster size of 2 suffers significantly at all noise levels, as shown in the lower section of Fig 2. In this case, setting kmin = 1 significantly improved the accuracy of the smallest cluster size with 2 vertices and low noise levels (top panel, middle column), reducing the average error count to 0.1.

Fig 2 shows that although high levels of noise make small cluster sizes difficult to recover, overall, the best accuracy occurs with kmin = 1 and kNN = 100. The improvements in accuracy shown for Nout = 1 using kmin = 1 were also verified using higher values of Nout (data not shown). This indicates that the algorithm’s optimal recovery property for cluster graphs is highly robust to noise and is not overly sensitive to the choice of kNN and kmin parameters. It also supports the methodology of using kmin > 0 and kNN < N for real data, as described in subsequent sections.

Data clustering/fusion

Only the eigenvectors with small magnitude eigenvalues are necessary to compute Laplacian mixture models, which are of lower dimensionality than the original data. The original data are not used as direct inputs to the algorithm, allowing efficient use of memory resources and storage after the initial preprocessing steps are completed. The maximum number of eigenvectors to compute is data and application dependent, and there are almost as many different conceptions and definitions of communities in network analysis as there are problem areas. All of the steps of the algorithm are listed in Algorithm 1 for the sake of clarity and reproducibility.

Once computed, the resulting Laplacian mixture models can be used as lower-dimensional or reduced representations of the original data. This type of dimensionality reduction occurs as part of a larger machine learning system, e.g. for supervised machine learning algorithm training problems such as classification. It can also be used for low-rank approximations in linear and multilinear (tensor) regression problems. In these cases, it is the details of the larger system that this dimensionality reduction step is embedded within that determine the amount of information loss that occurs.

This example involves single-cell expression profile data using a technique known as Drop-seq that can easily generate over 10,000 measurements per 50,000-cell tissue sample [28]. Cell contents are suspended within droplets, and the mRNAs are captured on microbeads with unique DNA barcodes that allow single-cell analyses to be done in parallel without losing the association to the individual cells captured [29]. Tissues such as the retina have specialized and differentiated cell types that have been identified as histologically distinct, and single-cell expression data potentially provides a means of matching cell types with unique gene expression features. One of the issues in the field is its exploratory nature, requiring unsupervised machine learning approaches since there are no “ground truth” data or labels available (E.Z. Macosko, personal communication).

Probabilistic models can infer whether some set of low-dimensional factors can explain the various expression profiles measured. This example represents one of the first nonhierarchical analyses of single-cell expression and potentially paves the way for useful biological insights into the structure of cellular expression patterns.

To tune the structures recognized by the first m Laplacian eigenvectors, a minimum weight parameter was introduced. This parameter sets a floor on each item’s maximum similarity: if an item’s maximum similarity was less than this value, it was raised to this value, so that every item is connected to at least one other item, preventing weakly-connected graphs. The minimum weight parameter was set to the 99th percentile value over all pairs for the purpose of illustrating the method. According to [30], this parameter should be varied across some range of interest and consensus information extracted from the ensemble.
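The minimum weight step can be illustrated with a small sketch. It assumes a dense symmetric similarity matrix stored as a list of lists, a nearest-rank percentile definition, and that the strongest link of a weakly connected item is raised symmetrically; none of these details are specified in the text, and the function names are hypothetical.

```python
from math import ceil


def nearest_rank_percentile(values, q):
    """Nearest-rank percentile of a non-empty list, with q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, ceil(len(ordered) * q / 100))
    return ordered[rank - 1]


def apply_min_weight(S, q=99):
    """Raise each item's strongest similarity to at least the q-th percentile.

    S is a symmetric n x n similarity matrix (n >= 2) with an ignored diagonal.
    Returns the adjusted copy and the minimum weight that was used.
    """
    n = len(S)
    pairs = [S[i][j] for i in range(n) for j in range(i + 1, n)]
    w_min = nearest_rank_percentile(pairs, q)
    out = [row[:] for row in S]
    for i in range(n):
        # Strongest neighbor of item i in the original matrix.
        j_best = max((j for j in range(n) if j != i), key=lambda j: S[i][j])
        if S[i][j_best] < w_min:
            out[i][j_best] = w_min
            out[j_best][i] = w_min  # keep the matrix symmetric
    return out, w_min
```

Only the single strongest link of each weakly connected item is changed, so the number of nonzero entries in the matrix does not grow.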

In addition to the minimum weight parameter, there are many possible choices of normalization for the measurements prior to fitting or learning the model in an unsupervised manner. Euclidean distances are well tested, but in multidimensional spaces they are sensitive to the choice of normalization. To identify unpredicted biological information, ensemble clustering approaches are recommended, where multiple perturbations of the cluster analysis are made, including the parameters of clustering [30]. The denoised normalization developed for the example shown below is suggested as an additional perturbation that may be used in future ensemble clustering settings, not as a general improvement for all cases.

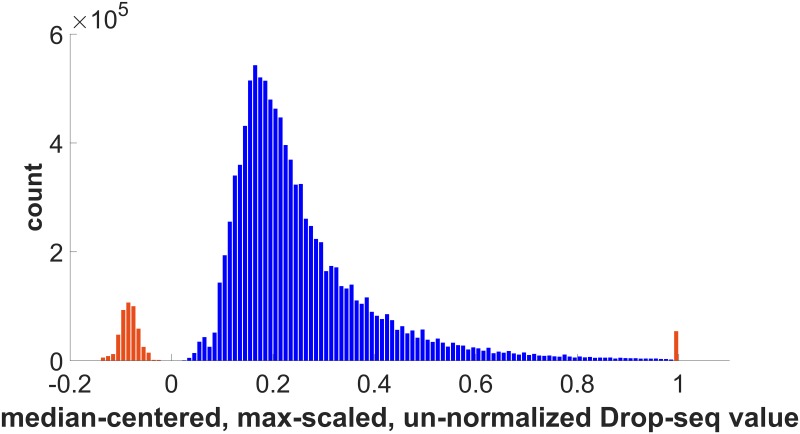

The motivation for developing a denoised normalization is provided by the histogram in Fig 3, which shows the distribution of un-denoised median-centered and max-scaled data. After subtracting the median value, 9 cells were all zeros, i.e. these cells probably contained no useful data and were not included in the subsequent analyses to avoid spurious clusters. (The indices of these all-zero cells in the dataset are: 4583, 6148, 13026, 15439, 17395, 24267, 26655, 28148, and 43383.)

Fig 3. Histogram of 10,900,036 nonzero Drop-seq datapoints after subtracting median and dividing by max value for each cell.

Bins containing negative values on the left and the bin containing one on the right are colored orange to indicate outlier distributions and contain < 5% of the data. Bins containing values between 0 and 0.995 are colored in blue and capture > 95% of the data. Only the data from the bins shown in blue was used for subsequent data clustering steps.

The bulk of the data (> 95%) occurs in the region containing the broad peak shown in the blue colored bins, lying between the orange colored bins containing negative values and the bin containing a value of one. Bins containing negative values, and the bin containing the value of one, in orange, do not match the shape of the portion shown in blue containing most of the data, and appear to belong to other distributions that should be analyzed separately or treated as measurement artifacts or noise. For the purposes of the example shown here, the points indicated by orange bins in Fig 3 are treated as outliers and removed as a denoising step, prior to performing any data clustering. Since this denoising step removes less than 5% of the total data, it is reasonable to assume it does not affect the biological relevance of the results, when the goal is to obtain a view of global patterns contained in the entire dataset as a whole. The saturated values indicated by the orange bin on the right cannot be distinguished from artifacts due to contamination or sensor malfunction. Removing them is a conservative choice in order to focus on the dominant mode of the distribution and to make the analysis less sensitive to potential outliers.

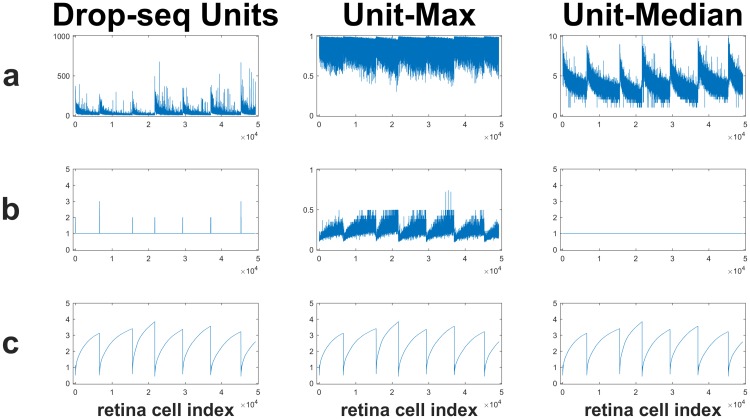

Another reason for using the denoised normalization developed here is because the normalization used in [28] contains features that may adversely affect statistical analyses that involve Euclidean distances. Fig 4 compares the denoised unit-max and unit-median normalizations for the 49299 cells (columns) and 24657 genes (rows) used here to the normalization in [28]. The bottom row c shows the median of the unnormalized data columns, corresponding to retina cell types, for reference to line up the relevant features in the top two rows. Rows a and b show the max and median of each cell type, respectively. The left column shows the Drop-seq normalization and the middle and right columns respectively show the denoised unit-max and unit-median normalizations used here.

Fig 4. Comparison of normalization in [28] (left column) to the denoised unit-max and unit-median normalizations used here (middle and right columns).

The bottom row c shows the median of the same unnormalized columns that were input into both normalization procedures. The middle row b shows the median of the normalized values for each column of data, where columns correspond to retina cell types. Row a shows the maximum normalized value for each column of data.

Note the alignment of the local minima of the unnormalized medians shown in row c with the artificial trends shown in rows a and b of the left column. In particular, the median shows a value of 1 except at the instabilities that occur wherever the bottom row has local minima. These spikes in the median shown in the middle row are enhanced by quantization noise, which can also adversely affect Euclidean distance based analyses. This shows that the Drop-seq normalization may be unstable and contains outliers wherever the unnormalized median reaches a local minimum.

The top row of the left column shows that the trend in the max of the normalized values creates outliers around the local minima shown in the bottom row. This suggests that their normalization may potentially be amplifying noise to accentuate the nonlinear trend shown in row a of the left column. Dividing by small values is well known to increase numerical instabilities using finite-precision arithmetic. Rows a and b of the middle and right columns show the denoised normalizations used here. The same denoising step was used for both unit-max and unit-median normalizations.

Additive offset noise was removed by subtracting the median value, and multiplicative gain noise was removed by scaling max values to one, after correcting the offset. After gain and offset correction, outlier noise was then identified as negative and unity values and removed. The unit-median normalization was obtained from the denoised unit-max normalization by dividing all columns by the median of their nonzero values. As the left and right columns of the top row of Fig 4 show, this makes the distribution more similar to that of the original Drop-seq normalization. The top row of Fig 4 also shows that the unit-max and unit-median normalizations (middle and right columns, respectively) are distributed within a smaller range than the Drop-seq normalization (left column).
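The offset, gain, and outlier steps described above can be sketched for a single cell (one column of the data matrix). The sketch below makes several assumptions that the text does not state: zeros are treated as missing measurements and left untouched, the median is taken over nonzero entries, and "removed" is implemented by setting outliers to zero. The function names are hypothetical.

```python
from statistics import median


def denoise_unit_max(cell):
    """Offset-correct, gain-correct, and denoise one cell's expression values."""
    nonzero = [v for v in cell if v != 0]
    if not nonzero:
        return [0.0] * len(cell)
    med = median(nonzero)
    # Offset correction: subtract the median from the measured (nonzero) values.
    shifted = [(v - med) if v != 0 else 0.0 for v in cell]
    peak = max(shifted)
    if peak <= 0:
        return [0.0] * len(cell)  # nothing left above the median
    # Gain correction: scale so that the maximum value equals one.
    scaled = [v / peak for v in shifted]
    # Denoising: negative and unity values are treated as outliers and zeroed.
    return [v if 0 < v < 1 else 0.0 for v in scaled]


def unit_median(denoised_cell):
    """Divide a denoised unit-max cell by the median of its nonzero values."""
    nonzero = [v for v in denoised_cell if v != 0]
    if not nonzero:
        return list(denoised_cell)
    med = median(nonzero)
    return [v / med for v in denoised_cell]
```

Because the scaled maximum is exactly one, the saturated value in each cell is always among the entries that get zeroed, which matches the treatment of the right-hand orange bin in Fig 3.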

Unlike the Drop-seq normalization, the denoised unit-max and unit-median normalizations are unquantized and cannot be interpreted as representing physical molecular copy numbers. Denoising makes the data more appropriate for Euclidean distance based data analysis, which is sensitive to outliers. This makes the denoised normalizations potentially better for statistical analyses involving Euclidean distances such as k-means, which become more sensitive to outliers as the dimensionality of the embedding space increases. Prior to analysis using pairwise Euclidean distance driven approaches, future studies may also convert these offset and gain corrected and denoised values to Z-scores, providing an additional clustering perturbation for use in ensemble approaches as described in [30].

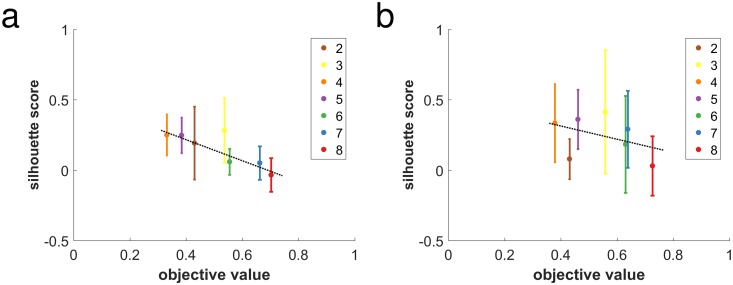

Fig 4 suggests that the denoised unit-max normalization may provide better results when using Euclidean distances for pattern recognition because the resulting distribution is more compact. This justifies using the unquantized denoised normalization shown in the middle and right columns for the Laplacian mixture model examples shown here, although more thorough studies using ensemble clustering approaches are needed to validate these results in the future. In order to compare the denoised unit-max and denoised unit-median normalizations, the residuals of a linear fit to the plot of silhouette score vs. objective value were compared. Fig 5 shows the silhouette scores for the 2-through-8 factor Laplacian mixture models generated for the (denoised) unit-max (a) and (denoised) unit-median (b) normalizations. The dashed line shows the robust linear fit identified from the silhouette scores, indicating a negatively sloping trend suggesting that higher factor models may be overfitting. Linear fit residuals for the unit-max normalization (a) are noticeably smaller in magnitude than those for the unit-median normalization (b), suggesting it may be more stable for Euclidean-distance based network analyses. Therefore all subsequent results shown here made use of the denoised unit-max normalization. After computing the denoised unit-max normalization, 66 columns were found to have constant values and were removed prior to clustering, and 8653 (35%) of denoised rows were found to be duplicates and removed. An input data matrix of 49,233-by-16,004 values was left for computing the Laplacian that was input into the global optimization algorithm determining the corresponding Laplacian mixture model.

Fig 5. 2-through-8 factor model silhouette score estimates computed by averaging over 10 sets of randomly subsampled cells (2000 cells per sample) vs. optimal objective value for (a) unit-max and (b) unit-median denoised normalizations.

Dashed lines indicate the robust linear fit computed using iteratively reweighted least squares. The 3-factor silhouette scores (yellow) were consistently outlying above the linear trend shown by the dashed line for both normalizations, and the 7-factor solution (blue) is the highest dimensional model with positive residual.

The k-nearest neighbor method with corresponding parameter kNN was used to set the maximum number of nonzero entries in any row or column of the resulting pairwise similarity matrix. This ensures sparsity and prevents instabilities in the Laplacian eigenvector structure.
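One way to cap the number of nonzero entries in every row and column is a mutual k-nearest-neighbor rule, sketched below. The text does not say which kNN variant was used, so the mutual rule, the dense list-of-lists storage, and the function name are all assumptions made for illustration.

```python
def mutual_knn_sparsify(S, k):
    """Keep S[i][j] only if i and j are each among the other's k nearest.

    S is a symmetric n x n similarity matrix (larger means more similar).
    The result is symmetric, has a zero diagonal, and has at most k nonzero
    entries in any row or column.
    """
    n = len(S)
    top = []
    for i in range(n):
        others = sorted((j for j in range(n) if j != i),
                        key=lambda j: S[i][j], reverse=True)
        top.append(set(others[:k]))
    return [[S[i][j] if (j in top[i] and i in top[j]) else 0.0
             for j in range(n)]
            for i in range(n)]
```

A union rule (keep the entry if either item lists the other) is a common alternative, but it can leave more than k nonzero entries in some rows, which is why the mutual rule is used in this sketch.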

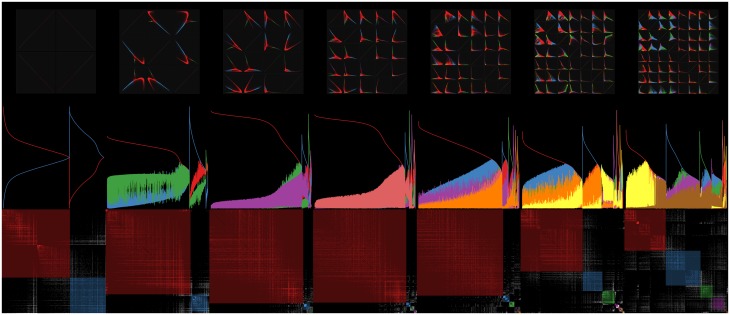

Empirically, when a useful model can be computed, it is typically part of a sequence of models beyond which acceptable (nondegenerate) solutions cannot be found. The rigorous mathematical explanation for this is not yet fully understood but is discussed in more detail in section 0.6. Since no 9-factor solutions were found, the search was truncated at m = 8. Fig 6 shows the optimized 2-through-8 factor models for the unit-max normalization. Since the 7-factor solution showed the highest dimensionality with positive residual, it was chosen to visualize in more detail.

Fig 6. Sequence of models for the unit-max normalized Drop-seq retina cell profiles published on GEO (ID GSE63472).

Top row shows scatterplot matrices colored by thresholded cluster assignment index for 2-8 factor models. Middle row shows corresponding factor conditional probability line plots sorted by max assignment index. Diagonal blocks on images in the bottom row show the corresponding sorted input similarity matrix revealing hidden structure in the unlabeled data.

Fig 7 shows a zoom in on the scatterplot matrix for the m = 7 solution shown in the top row of Fig 6. Subsequent hierarchical or other hard-clustering algorithms can be applied using these 7-dimensional conditional probabilities as feature vectors in order to generate non-overlapping clusters or communities if needed.

Fig 7. Grouped scatterplot matrix of conditional probabilities for the 7-dimensional unit-max normalized Drop-seq retina cell Laplacian mixture model.

Axes are autoscaled inside the interval [0, 1] for all panels. Horizontal axes are aligned by columns, and vertical axes are aligned by rows. Colors indicate max probability assignment index showing the corresponding hard clustering generated by thresholding. Hard clustering assignment counts were for the overlapping modules detected.

The hard cluster assignments generated by thresholding, indicated by colors, appear visually reasonable, suggesting that potentially informative overlapping communities were detected. Unlike many other community detection algorithms based on global optimization, Laplacian mixture models can identify communities with sizes that are different orders of magnitude.

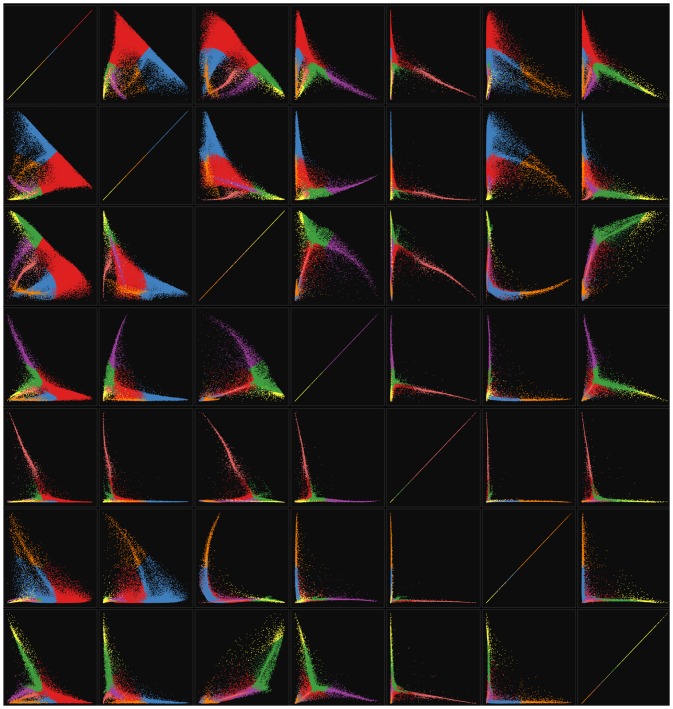

The grouped scatterplots for the 1st-vs-2nd conditional probabilities from the top row of Fig 7 are plotted separately in Fig 8. These highlight the differences between the models in terms of their ability to separate the data into potentially informative structures identified in the Drop-seq retinal cell profiles. From a signal processing and graph partitioning standpoint, higher factor numbers provide more fidelity and parallelization at the expense of compression ratio. Once such a sequence of models has been computed, standard model selection techniques from machine learning and statistics are directly applicable. The problem of model selection will not be dealt with in detail here since the focus is to demonstrate the basic approach. Once ground-truth data are available, these models can be selected for biological usefulness.

Fig 8. Grouped scatterplots of conditional probabilities for components 1 vs. 2 from the 3-through-8 factor unit-max normalized Drop-seq retina cell Laplacian mixture models.

Plots are in order of ascending model dimension (3 through 8) from left-to-right, top-to-bottom. Colors indicate max probability assignment index showing the corresponding hard clustering generated by thresholding. Axes are autoscaled inside the interval [0, 1] for all panels.

Graph/Network analysis

Unlike data clustering using symmetric item pairwise-similarity matrices as input, graph clustering accepts weighted adjacency matrices that are often not symmetric but can be symmetrized. Biological networks, social patterns, and the World Wide Web are just a few of the many real world problems that can be mathematically represented and topologically studied in terms of community detection [31].

Generally, network community detection tries to infer functional communities from their distinct structural patterns [32]. Functional definitions of network communities are based on common function or role that the community members share. Examples of functional communities are interacting proteins that form a complex, or a group of people belonging to the same social circle.

Modularity is one quantity that, when maximized, provides a measure of communities potentially having different properties such as node degree, clustering coefficient, betweenness, centrality, etc., from that of the average network. Because modules of many different sizes often occur, the potential limits of multiresolution modularity and all other methods using global optimization have been suggested by [33]. In particular, methods based on global optimization have been suspected of being incapable of finding communities at many different sizes or scales simultaneously.

Although Laplacian mixture models are a global optimization type method, they are inherently multiscale since higher dimensional eigenspaces encode multiresolution dynamics from a Markov process interpretation. In order to illustrate the promise of using Laplacian mixture models to provide useful global, nonhierarchical views of large graphs, the E. coli genome was analyzed as a test problem.

E. coli is a bacterial model organism in biology that has a relatively small genome but (like all genomes) one that still contains many transcribed regions with unknown functions. Using interactome data provided by the HitPredict protein-protein interaction database [34], one large connected component was identified for analysis, containing 3257 out of 3351 total genes/proteins.

In order to prepare the weighted adjacency matrix for the analysis, the top kmin connections were strengthened using the 0.99 quantile over all weights, and then a nearest-neighbor cutoff of kNN = 125 was applied. Next, rows of the weighted adjacency matrix were normalized to sum to one, to reduce the influence of proteins with stronger connections on average. Finally, because protein-protein interactions are mutual by nature, the matrix was symmetrized by taking the elementwise maximum between it and its transpose.
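The last two preparation steps, row normalization followed by symmetrization with the elementwise maximum, can be sketched as follows. The dense list-of-lists storage and the function names are assumptions for illustration; the kmin strengthening and kNN cutoff steps are not shown.

```python
def row_normalize(A):
    """Scale each row of a nonnegative matrix to sum to one (zero rows kept)."""
    out = []
    for row in A:
        total = sum(row)
        out.append([v / total for v in row] if total > 0 else list(row))
    return out


def symmetrize_max(A):
    """Return the elementwise maximum of a square matrix and its transpose."""
    n = len(A)
    return [[max(A[i][j], A[j][i]) for j in range(n)] for i in range(n)]
```

Note that after symmetrization the rows no longer sum to one in general: each edge keeps the larger of its two normalized weights.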

To select appropriate values of kmin and kNN, a grid search was performed by computing all five-factor models over the ranges kmin = 3, …, 5 and kNN = 1, …, 192, where 192 is the maximum degree for the entire graph. The largest cluster size after hard thresholding by maximum conditional probabilities was plotted as shown in Fig 9. For this dataset, a sequence of 2-through-7 factor solutions was found using kmin = 4 and kNN = ∞, keeping all of the edges from the original dataset. Fig 10 provides the same three views of the sequence of models as for the Drop-seq analysis above. Since the primary input was interaction data and no pairwise distances were computed, silhouette scores are not available. Standard model selection techniques can be applied at this point, but are not performed here since the focus is on demonstrating Laplacian mixture modeling approaches. It is possible to gain some insight into model quality by examining the contributions of the individual model components to the squared loss or uncertainty score. Table 1 lists the component contributions to the loss function, with lower values being more favorable. The 6-factor model was the only one having two components less than 0.5 and all components less than 0.95. The hard-thresholded sizes of this model’s components were 1869, 1362, 11, 7, 6, and 2. Component sizes spanning different orders of magnitude suggest the ability to detect multiscale communities despite belonging to the class of global optimization type methods. The next steps in developing this method could include delving into the possible biological significance of the patterns identified by Laplacian mixture models in an unsupervised way by analyzing the Homo sapiens interactome.

Fig 9. Max cluster size of 5-factor models vs. kNN for E. coli protein-protein interaction data.

Colors correspond to different values of kmin. The plot shows that kmin = 4 was the lowest value to show a reasonably large size for the 2nd-largest cluster after hard thresholding the conditional probabilities.

Fig 10. E. coli interactome analysis (3257 proteins with 20239 pairwise interactions) showing 2-through-7 factor models.

Top panel shows grouped scatterplot matrices, middle row shows conditional probability line plots, and bottom panel shows corresponding graph adjacency matrices sorted by max conditional probability value.

Table 1. Component losses of Laplacian mixture models for the E. coli interactome network dataset.

| dimensionality | component 1 | component 2 | component 3 | component 4 | component 5 | component 6 | component 7 |
|---|---|---|---|---|---|---|---|
| 2 | 0.912 | 0.016 | | | | | |
| 3 | 0.840 | 0.355 | 0.576 | | | | |
| 4 | 0.824 | 0.510 | 0.904 | 0.431 | | | |
| 5 | 0.473 | 0.519 | 0.901 | 0.823 | 0.895 | | |
| 6 | 0.478 | 0.943 | 0.489 | 0.824 | 0.924 | 0.890 | |
| 7 | 0.864 | 0.924 | 0.907 | 0.935 | 0.673 | 0.956 | 0.361 |
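The informal criterion applied to Table 1 in the text (at least two component losses below 0.5 and all component losses below 0.95) can be checked mechanically. The values below are copied from Table 1; the function name and the default thresholds as parameters are illustrative choices, and this check is not a substitute for standard model selection.

```python
# Component losses from Table 1, keyed by model dimensionality.
component_losses = {
    2: [0.912, 0.016],
    3: [0.840, 0.355, 0.576],
    4: [0.824, 0.510, 0.904, 0.431],
    5: [0.473, 0.519, 0.901, 0.823, 0.895],
    6: [0.478, 0.943, 0.489, 0.824, 0.924, 0.890],
    7: [0.864, 0.924, 0.907, 0.935, 0.673, 0.956, 0.361],
}


def meets_criterion(losses, low=0.5, high=0.95, min_low=2):
    """True if at least min_low losses are below low and all are below high."""
    n_low = sum(1 for v in losses if v < low)
    return n_low >= min_low and all(v < high for v in losses)


selected = [m for m, losses in component_losses.items()
            if meets_criterion(losses)]
print(selected)
```

Running the check on the tabulated values selects only the 6-factor model, in agreement with the statement in the text.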

Density estimation

Nonparametric mixture density separation is a challenging problem in statistics. In the context of mixture density function separation or unmixing problems, Laplacian mixture models fall into the class of partial differential equation (PDE)-based methods. The connections of Laplacian mixture models to PDE “coarse-graining” methods are described in more detail in the appendix.

These differential equations allow Laplacian eigenspaces to be defined from input mixture density estimates as described below. The resulting Laplacian mixture models define globally optimized mixture component estimates directly from the spectral information contained in the discretized PDE.

This synthetic mixture density separation example allows unambiguous evaluation of the performance of different tuning parameter choices. For the first example, a randomized three-component mixture f(x) ∝ f1(x) + f2(x) + f3(x) was constructed, with one component made from each of three types of radial basis functions with randomized covariance transforms: Gaussians, Laplace distributions, and hyperbolic-secant functions. Since these components do not share any common parametrization, using any one class of distribution to compute the unmixing will result in errors. This simple yet nontrivial example provides a test of the accuracy of the Laplacian mixture modeling approach for nonparametric density function unmixing.

Discretized density function values are taken as input for Laplacian mixture model computation via direct approximation of a class of heat equations known as Smoluchowski equations in physics, as described in section 0.6. As explained in [35], the input mixture density estimate f(x) is uniformly sampled on a discrete grid or lattice of points using neighbor indices Ii, i = 1, …, N. Non-boundary off-diagonal Laplacian matrix values are then set according to Eq (30) below, where β ∈ (0, ∞) acts as a scaling parameter with the interpretation of inverse absolute temperature in statistical physics:

| (30) |

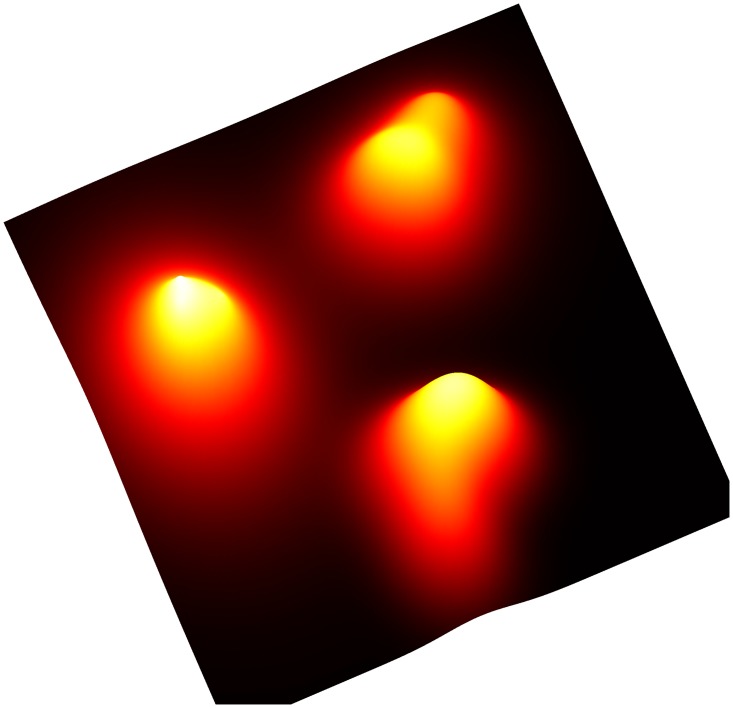

Fig 11 shows an image of f(x) evaluated at 40,000 Cartesian gridpoints in two dimensions with colors indicating the value of f(x) at each point. Algorithm performance can be improved by tuning the β parameter. The topological structure of macrostate splitting as β increases from a sufficiently small nonzero value towards ∞ has been useful for solving challenging global optimization problems [36]. Such homotopy-related aspects of macrostate theory are not explored here for the sake of brevity.

Fig 11. Gaussian/Laplace/hyperbolic-secant mixture density function surface plot colored by probability density.

Each of the three separable components was constructed by adding randomly generated anisotropic radial functions with either Gaussian, Laplace, or hyperbolic-secant radial profiles. Finally, these randomized components were superimposed to generate the final mixture distribution shown here.

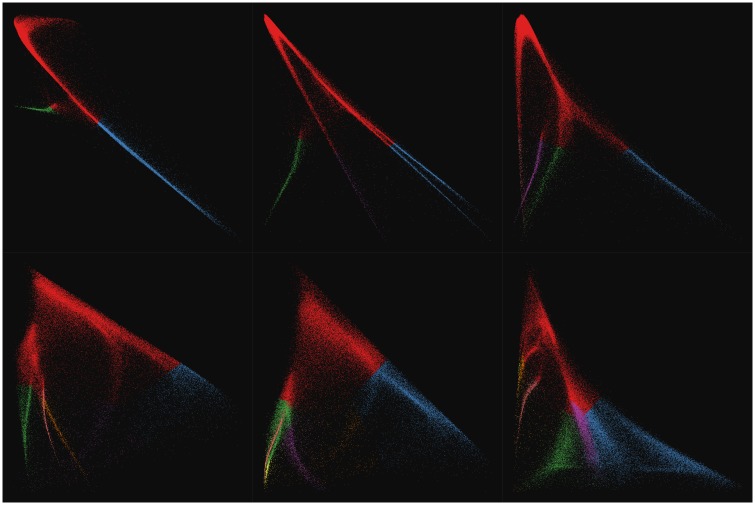

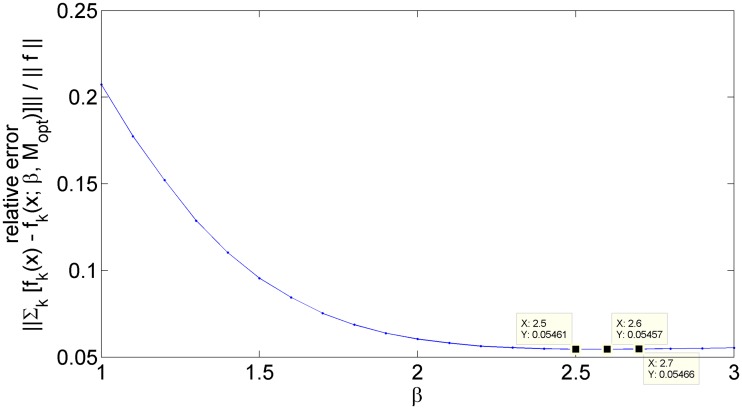

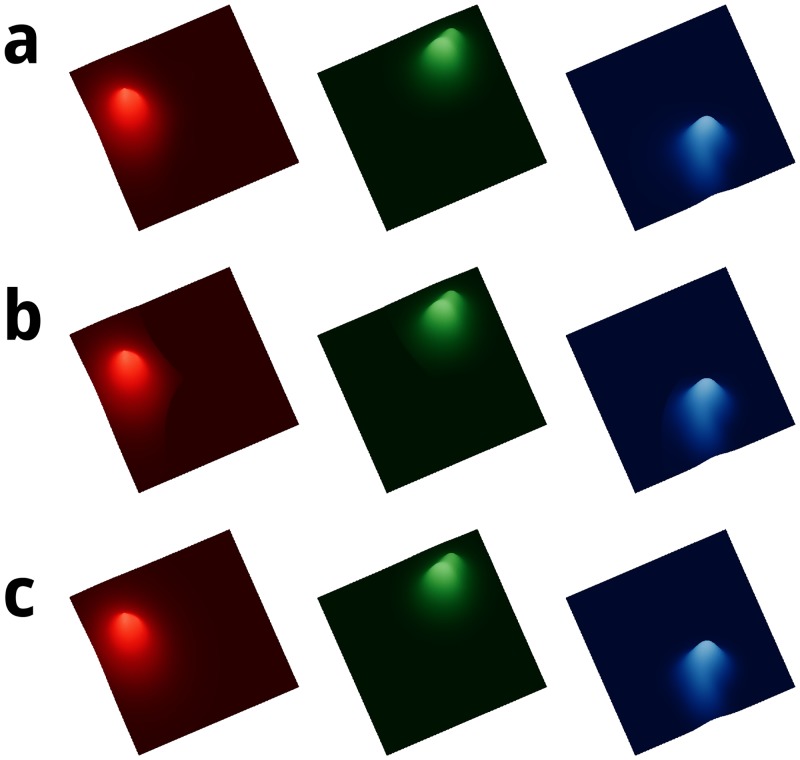

Since the true solution was known for this test problem, the relative error vs. β was optimized with a one-dimensional grid search as shown in Fig 12. Fig 13 shows the optimized Laplacian mixture model for this test problem. Rows a and b of Fig 13 appear acceptable with no visible mixing across components. Column 2 of Table 2 shows the relative errors for the probabilistic/unthresholded and hard-thresholded optimal β = 2.6 solutions. Notice that at the optimal β = 2.6, hard-thresholding these components along their decision boundaries increases their error value compared to the original soft/probabilistic/fuzzy model (0.0718 vs. 0.0541), whereas at the untuned value β = 1 the hard-thresholded error is the lower of the two (0.1803 vs. 0.2078).

Fig 12. Relative error of the m = 3 Laplacian mixture model for the Gaussian/Laplace/hyperbolic-secant mixture test problem vs. β.

The minimum at β = 2.6 is indicated, flanked by datatips.

Fig 13. Optimally-scaled β = 2.6 Laplacian mixture model components for the Laplace (red)/hyperbolic-secant (green)/Gaussian (blue) 2-D test problem.

Row a: unthresholded Laplacian mixture model components, Row b: hard-thresholded components, Row c: original (unmixed) components. The β = 2.6 column of Table 2 lists the corresponding relative errors.

Table 2. Relative errors of Laplacian mixture models for the Gaussian/Laplace/hyperbolic-secant mixture density function separation/unmixing test problem.

| | β = 1 | β = 2.6 |
|---|---|---|
| no threshold | 0.2078 | 0.0541 |
| hard threshold | 0.1803 | 0.0718 |

Conclusion

Laplacian mixture models are a new tool for probabilistic/fuzzy spectral clustering and graph/network analysis because they nonhierarchically identify large separable regions and their interconnections. This provides high-level soft partitions or dissections of big/massive data in their entirety without using any iterative/localized seed points. Their versatility and flexibility come at the cost of computational and model selection challenges. Since their original formulation in [37], many implementation challenges have been overcome and their connections to other Laplacian eigenspace methods have been developed, leading to the current reformulation including new loss functions and notation.

Many possible models can be computed, and model selection is an important consideration in order to select the subset of models that are most appropriate for a given application. In some applications such as compression or denoising, minimizing the number of components, partitions, clusters, or factors is more important than perfect reproduction of the original signal. Other applications such as recovery of a reference signal might benefit from choosing the largest number of factors that is computationally feasible. The optimal choice of model may also be constrained by the resources available for a given application. Model selection is application dependent, and the examples presented here may not provide the best results for every problem but may be useful as a guide for future studies.

Laplacian eigenspaces impose constraints on the space of possible models that can be defined, providing a form of spectral regularization. In the context of data clustering, eigenspace structures are determined by the choice of distance or similarity measure and the choice of parameters used for this measure. For graph/network analysis, the process of computing a weighted adjacency matrix can be adjusted to fine-tune the corresponding Laplacian eigenspace structure. Applications involving unmixing mixture distribution function estimates can tune a parameter in the corresponding partial differential equation used to define the Laplacian.

Other potential applications not demonstrated in the examples section include more accurate information retrieval, search, and recommender systems. The PageRank or Google algorithm and its personalized or localized variants have become standard methods in these application areas [38]. Originally, PageRank was used for ranking search results according to overall graph centrality score as given by the Perron-Frobenius eigenvector. The original PageRank algorithm provided a scalable and practical method for large graph datasets such as the World Wide Web, but nodes with similar centrality scores might not belong to the same region of the graph. Later, personalized and localized variants of the original PageRank algorithm were developed to address this issue, but these introduce bias from the choice of seed nodes or locations and lose the global breadth of the original PageRank method. Laplacian mixture models may provide a regional PageRank variant, refining the original PageRank centrality scores according to the regions of the graph encoded by the mixture components, a possibility left for future work.
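The observation that similar centrality scores do not imply a shared region can be illustrated with a minimal sketch of PageRank by power iteration. The damping factor of 0.85, the function name, and the toy graph (two triangles joined by a single edge) are assumptions chosen for illustration and are not taken from the examples in this paper.

```python
import numpy as np

def pagerank(adj, damping=0.85, tol=1e-12, max_iter=1000):
    # Power iteration for the stationary vector of the damped random walk
    adj = np.asarray(adj, dtype=float)
    n = adj.shape[0]
    out = adj.sum(axis=1, keepdims=True)
    safe = np.where(out > 0, out, 1.0)
    # Row-stochastic transitions; nodes without out-links jump uniformly
    P = np.where(out > 0, adj / safe, 1.0 / n)
    r = np.full(n, 1.0 / n)
    for _ in range(max_iter):
        r_next = damping * (r @ P) + (1.0 - damping) / n
        converged = np.abs(r_next - r).sum() < tol
        r = r_next
        if converged:
            break
    return r

# Two triangles {0, 1, 2} and {3, 4, 5} joined by the edge 2-3
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]
adj = np.zeros((6, 6))
for i, j in edges:
    adj[i, j] = adj[j, i] = 1.0

scores = pagerank(adj)
print(np.round(scores, 4))
```

By symmetry, nodes 0 and 5 receive the same score although they sit in different triangles, so the score alone does not identify which region a node belongs to.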

Regardless of the application or type of input data structure, optimizing the structure of Laplacian eigenspaces can be challenging to do manually and may be difficult to fully automate. While Laplacian mixture models are nonparametric in terms of the data-dependent functions their eigenspaces support, in practice there are tuning parameters involved for processing raw input data. Several strategies for semi-automatically optimizing the Laplacian eigenspace structure were presented here, and more work in this area will be done in the future.

Laplacian eigenspaces explain all of the nonequilibrium dynamics defined by the Markov chain generated by the corresponding Laplacian, and hence have dynamic interpretations. They also have physical interpretations, where the Perron-Frobenius (PF) eigenvector represents a fixed point of the differential equation from a dynamical systems perspective. The physical and dynamical systems interpretations of Laplacian eigenspaces complement their statistical and algebraic interpretations, revealing new connections between ideas from previously separate fields. Laplacian mixture models are an example of how combining ideas from physics and statistics provides valuable new data analysis algorithms, where many connections remain to be found.

During the global optimization step, many more models are computed and then only a subset (often one) is selected from these samples. Different loss functions can affect which solution(s) are accepted from the output of the global optimization algorithm, and the quadratic loss function used here can be modified freely depending on the application details. The squared loss function has been empirically verified to provide reasonably good models for a wide variety of data inputs, and similarly validating other loss functions would be of value. It also generates a linearly-constrained concave quadratic global optimization problem which has been well-studied in the literature and can be approximately solved with high accuracy for certain convex hull geometries.

The provably optimal recovery results for noise-free interpolating cluster graphs provide an absolute mathematical reference for the algorithm’s performance in ideal settings. Performance comparisons to other community detection and spectral graph partitioning algorithms are needed, with a wider variety of test datasets. Subsequent studies will focus on thorough evaluation of various performance metrics to establish empirically the pros and cons of this algorithm compared to other algorithms.

Future studies will focus on empirically developing a better understanding of how the geometry of the convex hull formed by the linear inequality constraints relates to the statistical qualities of the resulting models. The next step will be a detailed experimental analysis of the pros and cons of Laplacian mixture models for item data, graph/network data, and density/distribution function data using a thorough set of benchmarks. Perhaps one day this story will circle back to its mathematical origins in Perron-Frobenius theory and create a picture connecting mathematics, physics, statistics, and machine learning. An intuitive formal theory to guide the development has been presented here, but the rigorous theory is incomplete.

Appendix

Macrostates

The original formulation of Laplacian mixture models comes from the definition of macrostates of classical stochastic systems, in equation (24) of [39, page 9990], restated as

| (31) |

where the coordinate vector R from the original statement has been replaced by x and k replaces the index α, to match the notation used here. The basis functions appearing in (31) are equivalent to the Laplacian eigenfunctions. The mixture components Φk(x) were originally defined by equation (26) of [39], after substituting α ← k as in (31), as

| (32) |

Multiplying both sides by ψ0(x) to match the definition

| (33) |

recovers the form of (1), a finite mixture model, in terms of the Laplacian eigenspace.

Macrostates were originally created to rigorously define both the concept of metastability and the physical mixture component distributions based on slow and fast time scales in the relaxation dynamics of nonequilibrium distributions to stationary states [39]. Mixture models are used for linearly separating these stationary or Boltzmann distributions in systems with nonconvex potential energy landscapes where minima on multiple size scales occur, e.g. high-dimensional overdamped drift-diffusions, such as macromolecules in solution. Proteins that fold, unfold, and aggregate in aqueous solution are one type of biological macromolecule that can be described in terms of overdamped drift-diffusions [39].

Transitions between states belonging to different components of a mixture occur on relatively slow timescales in such systems, making them appear as the distinct discrete states of a finite-state continuous time Markov process when measured over appropriate timescales. Such systems are called metastable [39, 40].

In the macrostate definition, the variable x is continuous and the Markov process is a continuous-state, continuous-time type known as a drift-diffusion in physics. Mathematically, drift-diffusions are described as a type of continuous-time Markov process analogous to CTMCs, and samples or stochastic realizations of drift-diffusions are described by systems of stochastic differential equations known as Langevin equations [40]. For the purposes of using Laplacian mixture models, it is sufficient to know that the eigenvectors of the Laplacian are analogous to the eigenfunctions of Smoluchowski or heat/diffusion operators [10–12].

Smoluchowski equations

The Laplacian mixture component estimates are defined as expansions of the Laplacian eigenvectors. For continuous mixture density functions, the continuous-space partial differential equation (PDE) analogs of Laplacian matrices are known as drift-diffusion or Smoluchowski operators [40], which define a type of heat equation.

Smoluchowski equations have the form

| (34) |

and belong to a class of reversible continuous-time, continuous-state Markov processes used to describe multiscale and multidimensional physical systems that can exhibit metastability [40].

The potential energy function V(x) determines the deterministic drift forces acting on ensemble members, i.e. sample paths or realizations of this stochastic process, and can often be viewed as a fixed parameter that defines the system structure. Compared to free diffusion (Brownian motion), the drift forces bias the temporal evolution of initial distributions P(x, 0) to flow towards regions of lower energy as t increases.

Technically there is a different Smoluchowski-type heat equation for each distinct choice of V(x). Hence the plural form should be used generally although this is often overlooked in the literature.

Smoluchowski operators

The elliptic differential operator

| (35) |

has exp(−βV(x)) as an eigenfunction with eigenvalue zero, also called the stationary state or unnormalized Boltzmann distribution. It is easy to evaluate L0[exp(−βV(x))] directly to verify that it equals zero, satisfying the eigenvalue equation.
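The zero-eigenvalue check can be written out in one line. The sketch below assumes the standard Smoluchowski form of the operator with a constant diffusion coefficient D, which is an assumption made here for illustration; the notation and prefactors may differ from those of Eq (35).

```latex
\begin{aligned}
L_0[P] &= D\,\nabla\cdot\bigl(\nabla P + \beta\,P\,\nabla V(x)\bigr), \\
L_0\bigl[e^{-\beta V}\bigr]
  &= D\,\nabla\cdot\bigl(-\beta\,e^{-\beta V}\,\nabla V + \beta\,e^{-\beta V}\,\nabla V\bigr) = 0 .
\end{aligned}
```

The gradient of the Boltzmann factor exactly cancels the drift term, so the probability flux vanishes everywhere, which is the detailed balance condition for this process.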

L0 is a normal operator and therefore a similarity transform S⁻¹ L0 S to a self-adjoint form L exists [40]. S has the simple form of a multiplication operator with kernel exp(−βV(x)/2), giving

| (36) |

The stationary state of L from Eq (36) is denoted

| (37) |

and is used along with other eigenfunctions of L in the separation/unmixing of the Boltzmann distribution into m macrostates, analogous to the Perron-Frobenius eigenvector in Laplacian mixture models. Adapting the notation slightly from [39], the eigenfunctions of L are denoted and the eigenvalues are denoted as .
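For reference, the symmetrized operator and its stationary state can be worked out explicitly. This sketch assumes the standard Smoluchowski form L0[P] = D∇·(∇P + βP∇V) with a constant diffusion coefficient D and the multiplication operator S = exp(−βV/2); these are assumptions made for illustration, and the notation and normalization may differ from Eqs (36) and (37).

```latex
\begin{aligned}
L &= S^{-1} L_0\, S
   = D\left[\nabla^2 + \frac{\beta}{2}\,\nabla^2 V(x)
     - \frac{\beta^2}{4}\,\lvert\nabla V(x)\rvert^2\right], \\
\psi_0(x) &\propto e^{-\beta V(x)/2}, \qquad L\,\psi_0 = 0, \qquad
\psi_0(x)^2 \propto e^{-\beta V(x)} .
\end{aligned}
```

In this form L is a Laplacian plus a multiplication operator, so it is manifestly self-adjoint, and the square of its stationary state is the unnormalized Boltzmann distribution.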

Discrete approximation

For sufficiently low dimensional nonnegative functions evaluated on evenly-spaced grids, the discrete approximation of (34) can be used via a nearest-neighbor Laplacian approximation to construct a sparse approximation of L in (36) with reflecting boundary conditions as described in [35]. The discrete approximation approach is useful for applications where the mixture function f(x) can be evaluated on a grid such as density estimates generated by histograms or kernel density estimation. This was the method used for the numerical example described in Section 0.6.

Discrete approximations can also be applied to nonnegative signals such as spectral density estimates and two- and three-dimensional images sampled on evenly-spaced nodes after preprocessing to remove random noise. Since discrete approximations of Smoluchowski or heat equations are microscopically reversible continuous-time Markov chains (CTMCs), macrostate models can also be constructed by embedding input data into Markov chains.

Just like in the continuous case for (35), discrete transition rate matrices for time reversible processes are similar to symmetric matrices. Similarly their eigenvalues and eigenvectors are real and the eigenvectors corresponding to distinct eigenvalues are orthogonal.
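These properties can be checked numerically with a minimal sketch. It assumes one common reversible nearest-neighbor discretization, in which the rate from gridpoint j to a neighboring gridpoint i is sqrt(p_i / p_j) with p = f^β, and it handles reflecting boundaries by omitting missing neighbors. This choice, the sign convention, the function name, and the test density are assumptions made for illustration and are not necessarily the discretization of Eq (30) or [35].

```python
import numpy as np

def reversible_rate_matrix(f, beta):
    # Nearest-neighbor CTMC generator on a 2-D grid with stationary vector f**beta
    p = np.asarray(f, dtype=float) ** beta
    ny, nx = p.shape
    n = ny * nx
    idx = np.arange(n).reshape(ny, nx)
    pf = p.ravel()
    W = np.zeros((n, n))
    # Index pairs of horizontally and vertically adjacent gridpoints
    pairs = [(idx[:, :-1].ravel(), idx[:, 1:].ravel()),
             (idx[:-1, :].ravel(), idx[1:, :].ravel())]
    for a, b in pairs:
        W[a, b] = np.sqrt(pf[a] / pf[b])   # rate from b to a
        W[b, a] = np.sqrt(pf[b] / pf[a])   # rate from a to b
    # Diagonal chosen so that every column sums to zero (probability conserved)
    W[np.arange(n), np.arange(n)] = -W.sum(axis=0)
    return W, pf

# Illustrative strictly positive density: two bumps plus a small floor
y, x = np.mgrid[-3:3:12j, -3:3:12j]
f = np.exp(-((x + 1.5) ** 2 + y ** 2)) + np.exp(-((x - 1.5) ** 2 + y ** 2)) + 1e-3

W, p = reversible_rate_matrix(f, beta=2.0)
s = np.sqrt(p)
L = W * s[None, :] / s[:, None]      # similarity transform S^-1 W S, S = diag(s)
eigvals = np.linalg.eigvalsh(L)      # real eigenvalues in ascending order

print("max |W p|      :", np.abs(W @ p).max())
print("symmetric L    :", np.allclose(L, L.T))
print("top eigenvalues:", eigvals[-3:])
```

A dense array is used here only for clarity on a small grid; for large grids a sparse matrix format would be the natural choice, since each row has at most five nonzero entries in two dimensions.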

Previous formulations

The macrostate data clustering algorithm is an earlier formulation developed in [37, 41]. Detailed comparisons between the two formulations are not included here because the previous methods required customized algorithm implementations that limited their practicality. These earlier papers did not explicitly mention that macrostates are a type of finite mixture model of the form (1), nor did they mention the Bayes classifier posterior form and probabilistic interpretations of (2).

Previous formulations used a different objective function and a different global optimization solver. The objective function was a logarithm of the geometric mean uncertainty which led to a nonlinear optimization problem that was not as well-studied as quadratic programs.

Here, the original objective function from [39] is used, providing a more standard concave quadratic programming (QP) problem that can be more easily solved. Linearly constrained concave QPs can be solved using established heuristics such as modified Frank-Wolfe type procedures [26].
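The idea behind Frank-Wolfe type heuristics for concave QPs can be sketched on a deliberately simplified problem. The example below minimizes a concave quadratic over the probability simplex, where the linear subproblem reduces to picking the vertex with the smallest gradient entry. The simplex constraint, the unit step, the multi-start strategy, and all names are assumptions made for illustration; this is not the modified procedure of [26] nor the constraint set used for Laplacian mixture models.

```python
import numpy as np

def concave_quadratic(x, Q, c):
    # f(x) = -0.5 x^T Q x + c^T x, concave when Q is positive semidefinite
    return -0.5 * x @ Q @ x + c @ x

def frank_wolfe_simplex(Q, c, x0, max_iter=100, tol=1e-12):
    # Unit-step Frank-Wolfe: jump to the vertex minimizing the linearization.
    # For a concave objective each accepted jump does not increase f.
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        grad = -Q @ x + c
        vertex = np.zeros_like(x)
        vertex[np.argmin(grad)] = 1.0
        if grad @ (vertex - x) >= -tol:   # no descent direction: local solution
            break
        x = vertex
    return x

Q = np.diag([1.0, 2.0, 3.0])
c = np.zeros(3)

# Several starting points: the three vertices and the centroid
starts = list(np.eye(3)) + [np.full(3, 1.0 / 3.0)]
candidates = [frank_wolfe_simplex(Q, c, s) for s in starts]
x_best = min(candidates, key=lambda x: concave_quadratic(x, Q, c))

print("local solutions:", [c_.tolist() for c_ in candidates])
print("best:", x_best.tolist(), "value:", concave_quadratic(x_best, Q, c))
```

Every vertex is a local solution in this toy problem, which is why several starting points are tried and only the best candidate is kept.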

Another difference is that in [37, 41] only unbounded inverse quadratic similarity measures and soft Gaussian thresholds that do not directly control sparsity were tested. Here, other choices of similarity/distance measures are tested and the use of hard thresholding is examined to directly control sparsity of the resulting Laplacian matrix used as the primary input into the algorithm.

The previous formulation defined in [37] did not mention the applications to density function unmixing/separation via (13) or the connection to discrete approximations of Smoluchowski equations as described in Section 0.6.

Data spectroscopy