Abstract

Facial emotion recognition is a critical aspect of human communication. Since abnormalities in facial emotion recognition are associated with social and affective impairment in a variety of psychiatric and neurological conditions, identifying the neural substrates and psychological processes underlying facial emotion recognition will help advance basic and translational research on social-affective function. Ventromedial prefrontal cortex (vmPFC) has recently been implicated in deploying visual attention to the eyes of emotional faces, although there is mixed evidence regarding the importance of this brain region for recognition accuracy. In the present study of neurological patients with vmPFC damage, we used an emotion recognition task with morphed facial expressions of varying intensities to determine (1) whether vmPFC is essential for emotion recognition accuracy, and (2) whether instructed attention to the eyes of faces would be sufficient to improve any accuracy deficits. We found that vmPFC lesion patients are impaired, relative to neurologically healthy adults, at recognizing moderate intensity expressions of anger and that recognition accuracy can be improved by providing instructions of where to fixate. These results suggest that vmPFC may be important for the recognition of facial emotion through a role in guiding visual attention to emotionally salient regions of faces.

Keywords: Facial expression, Emotion recognition, Prefrontal cortex, Lesion, Neuropsychology

1. Introduction

Facial emotion recognition is a fundamental building block of human communication that relies on multiple psychological sub-processes and neural substrates (Adolphs, 2002). Given the ubiquity of facial emotion signals in human communication (Ekman & Friesen, 1971), it follows that impairments in facial emotion recognition might be associated with abnormal social behavior, ranging from difficulties with non-verbal communication and mental state inference to failing to appreciate the effect of one's actions on others. Indeed, abnormalities in processing or recognizing facial expressions have been reported for a variety of conditions, including autism spectrum disorders (Pelphrey et al., 2002), schizophrenia (Kohler et al., 2003), depression (Anderson et al., 2011), psychopathy (Blair et al., 2004), traumatic brain injury (Croker & McDonald, 2005), multiple sclerosis (Henry et al., 2009), and childhood maltreatment (Masten et al., 2008; Pollak & Kistler, 2002). Furthermore, studies suggest that treatments aimed at modifying how individuals attend to facial expressions of emotion can reduce symptom severity in anxiety disorders (Eldar et al., 2012; Hakamata et al., 2010). Thus, identifying the neural substrates and psychological processes underlying facial emotion recognition and attention will help advance basic and translational research on social-affective function.

As researchers continue making progress in mapping out the neural circuitry subserving facial emotion recognition, the role of ventromedial prefrontal cortex (vmPFC) in this function has been a topic of some debate. It has long been established that patients with lesions in vmPFC exhibit social behavior abnormalities that indicate an emotion processing impairment (Barrash, Tranel, & Anderson, 2000; Damasio, 1996; Eslinger & Damasio, 1985), but the precise nature of the emotional impairment in this population remains unclear. A number of studies have specifically addressed facial emotion recognition in this population, with mixed results. There are multiple reports that patients with vmPFC lesions are unimpaired at recognizing emotional expressions (Hornak et al., 2003; Wolf, Philippi, Motzkin, Baskaya, & Koenigs, 2014). However, several studies have demonstrated a general emotion recognition impairment in patients with vmPFC lesions, relative to either healthy or brain-damaged comparison (BDC) populations (Heberlein, Padon, Gillihan, Farah, & Fellows, 2008; Hornak, Rolls, & Wade, 1996; Shamay-Tsoory, Tomer, Berger, & Aharon-Peretz, 2003; Shaw et al., 2005; Tsuchida & Fellows, 2012). In addition to the equivocal results of lesion studies, vmPFC activation in healthy subjects is less reliably observed in functional magnetic resonance imaging studies of facial emotion processing than other brain regions implicated in emotion recognition, such as the amygdala (Fusar-Poli et al., 2009). One potential explanation for these equivocal findings might be related to the intensity of emotional expressions in the stimuli. Studies reporting null findings have frequently used high intensity, exaggerated facial expressions that lack ecological validity and may introduce a ceiling effect as a result of the ease with which individuals can identify these expressions (Wolf et al., 2014). In contrast, studies reporting emotion recognition impairments amongst individuals with vmPFC lesions have used less intense morphed emotional expressions, putatively making the recognition task more difficult (Heberlein et al., 2008; Tsuchida & Fellows, 2012). It is possible that use of more ecologically valid, lower intensity expression stimuli is necessary for detecting emotion recognition impairments in vmPFC lesion patients.

In contrast to the mixed findings regarding the role of vmPFC in emotion recognition, a substantial body of research has clearly implicated the amygdala, a set of nuclei in the medial temporal lobe, in recognizing emotional content from facial expressions. The amygdala and vmPFC share strong bidirectional projections by way of the uncinate fasciculus (Ghashghaei & Barbas, 2002), suggesting that these regions may be two nodes in a network subserving emotion recognition. Results from both non-human primate research (Gothard, Battaglia, Erickson, Spitler, & Amaral, 2007) and human neuroimaging studies (Whalen et al., 2004; Whalen et al., 1998) converge on a role for the amygdala in processing facial expressions. Additionally, human lesion studies indicate that the amygdala contributes to the control of visual attention during emotion recognition by guiding fixations towards emotionally salient regions of the face, specifically the eyes. Adolphs et al. (2005) showed that a patient with bilateral amygdala lesions was impaired at recognizing fearful expressions when allowed to freely view the faces, and this patient also made fewer fixations than comparison subjects to the eyes of emotional faces. However, her recognition accuracy was rescued to levels commensurate with healthy performance when she was instructed to actively attend to the eyes of fearful expressions. This combination of results suggests that the pattern of fixations when viewing an emotional expression can causally affect the viewer's ability to recognize that expression.

Similar to the effect of amygdala damage leading to reduced fixations to the eyes of emotional faces, previous work has established that patients with vmPFC lesions also make fewer fixations than comparison subjects to the eyes during emotion recognition, particularly for fearful expressions (Wolf et al., 2014). Given that the amygdala and vmPFC share strong reciprocal connections (Ghashghaei & Barbas, 2002), if patients with vmPFC lesions have an emotion recognition impairment, this impairment may be a direct result of patients with vmPFC lesions making fewer fixations to the eyes of emotional faces (Wolf et al., 2014), as is the case for patients with amygdala damage (Adolphs et al., 2005). It might therefore be expected that effortful attention to the eyes of emotional faces could improve emotion recognition accuracy in patients with vmPFC lesions.

In the present study we used an emotion recognition task with morphed expressions of varying intensities to determine whether or not vmPFC plays a role in emotion recognition and if vmPFC contributes to guiding fixations towards emotionally salient regions of the face. First, we test the hypothesis that vmPFC plays a role in emotion recognition of low and moderate intensity expressions. Because prior work has shown that patients with vmPFC lesions fixate to the eyes of fearful faces less than comparison subjects (Wolf et al., 2014), we specifically hypothesized that patients with vmPFC lesions would have impaired recognition of fear at low and moderate intensities. Second, we test the hypothesis that vmPFC, like the amygdala, contributes to guiding fixations to emotionally salient regions of the face. Specifically, we hypothesized that any emotion recognition impairment in patients with vmPFC lesions would be alleviated by effortful attention to the eyes of emotional faces.

2. Material and methods

2.1. Participants

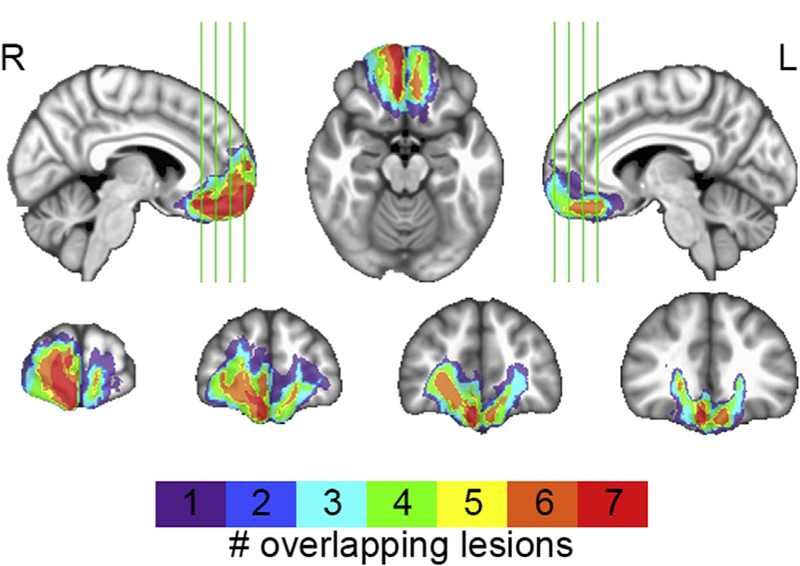

The target lesion group consisted of seven neurosurgical patients with extensive parenchymal changes, largely confined to vmPFC, where vmPFC is defined as Brodmann areas 11, 12, 25, 32, and the medial portion of 10 below the level of the genu of the corpus callosum (Fig. 1). Five vmPFC lesion patients had bilateral damage, while two had damage restricted to the right hemisphere. All bilateral vmPFC lesion patients had large anterior cranial fossa meningiomas that underwent gross total tumor resection. Both unilateral vmPFC lesion patients had right anterior cerebral artery aneurysms treated by surgery for clipping, one following subarachnoid hemorrhage.

Fig. 1 – vmPFC lesion overlap. Color indicates the number of overlapping lesions at each voxel.

Five neurosurgical patients who had focal lesions outside of vmPFC comprised a BDC group. Three patients had undergone surgery for aneurysm clipping following subarachnoid hemorrhage in the right anterior temporal lobe. The remaining two BDC patients underwent tumor resection, one in the cerebellum and one in dorsomedial frontal cortex. The inclusion of these BDC patients allows us to rule out the possibility that differences in emotion recognition accuracy observed in the vmPFC lesion group could be due to anatomically nonspecific effects of brain damage or a history of related medical issues (e.g., craniotomy, edema, seizure, past medications, etc.).

All vmPFC lesion and BDC patients' surgeries were performed in adulthood and all experimental data were collected at least three months after surgery, during the chronic phase of recovery. Testing was performed within seven years of surgery. All neurosurgical patients were recruited through a patient registry established through the University of Wisconsin, Department of Neurological Surgery.

Twenty-five neurologically healthy adults, matched in age and sex to the vmPFC lesion group, participated in the study as a healthy comparison group. The healthy comparison group had no history of brain damage, neurological or psychiatric illness, or current use of psychoactive medication. One healthy comparison participant was excluded as a statistical outlier based on deficient emotion recognition performance during the free viewing block of the experimental task (see Section 2.3. Emotion recognition task below). This resulted in a final healthy comparison group size of n = 24. Healthy comparison subjects were recruited through community advertisement. All participants had normal or corrected to normal vision. All participants provided informed consent.

2.2. Lesion segmentation and image normalization

Structural images for vmPFC patients were obtained at least three months after surgery. For all vmPFC lesion patients, lesions were visually identified and manually segmented. For the five patients with bilateral lesions, high-resolution (1 mm³) T1-weighted anatomical magnetic resonance images were available. In these cases, lesion boundaries were drawn in native space to include areas with evidence of gross tissue damage or signal abnormalities. A T2*-weighted FLAIR anatomical image was used to identify additional damage surrounding the core lesion area not apparent on the T1-weighted image. T1-weighted anatomical images were preprocessed with the FreeSurfer image analysis suite (http://www.nmr.mgh.harvard.edu/freesurfer) to remove non-brain tissue, as previously described (Segonne et al., 2004). The resulting skull-stripped anatomical images were diffeomorphically aligned to the Montreal Neurological Institute (MNI) coordinate system using a symmetric normalization algorithm (Avants & Gee, 2004) with constrained cost-function masking to prevent warping of tissue within the lesion mask (Brett, Leff, Rorden, & Ashburner, 2001). For the two unilateral vmPFC lesion patients, MRI data were not available due to the presence of aneurysm clips. For these patients, lesions were segmented by inspecting CT images available from medical records and tracing areas with evidence of tissue damage onto an MNI template brain in relation to anatomical landmarks. A lesion overlap map (Fig. 1) was computed as the sum of lesion masks for all vmPFC lesion patients in MNI space.
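The overlap computation described above amounts to a voxelwise sum of binary lesion masks that share one template grid. The following is a minimal sketch of that idea only; the function name `lesion_overlap` and the toy masks are hypothetical, the masks are flattened to short lists for readability, and the actual analysis would have operated on full 3D volumes with neuroimaging software.

```python
def lesion_overlap(masks):
    """Voxelwise sum of binary lesion masks defined on the same flattened grid."""
    if not masks:
        raise ValueError("need at least one lesion mask")
    n_voxels = len(masks[0])
    if any(len(mask) != n_voxels for mask in masks):
        raise ValueError("all masks must be defined on the same grid")
    # zip(*masks) walks the masks voxel by voxel; the sum counts lesioned patients.
    return [sum(voxel_values) for voxel_values in zip(*masks)]


# Toy data (made up): three patients, five voxels each, 1 = lesioned.
toy_masks = [
    [1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
]
overlap = lesion_overlap(toy_masks)
print(overlap)  # [1, 3, 2, 1, 0]
```

Each value in the result is the number of patients whose lesion includes that voxel, which is the quantity the color scale of an overlap map encodes.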

2.3. Emotion recognition task

The emotion recognition task employed expressions of fear, anger, happiness, sadness, disgust, and surprise from the Pictures of Facial Affect set (Ekman & Friesen, 1976). Images of varying emotional intensity were generated by morphing a neutral face with a full intensity expression depicted by the same model. Morph intensities were generated in 10% increments ranging from 10% (i.e., 90% neutral) to 100% (Marsh, Yu, Pine, & Blair, 2010). Participants saw a total of 360 static images during the task, where each of the 6 expressions was depicted by each of 6 models (3 male, 3 female) at 10 intensity levels.

The emotion recognition task was broken into 3 blocks. In each block 120 images were randomly selected, without replacement, with the requirement that all intensities of each emotion be displayed once by a male model and once by a female model. All participants completed the three blocks in the same order: free viewing, attend-to-mouth, and attend-to-eyes. The attend-to-eyes condition was always presented last to ensure that participants did not identify focusing on the eyes as an optimal emotion recognition strategy that could be used in other blocks of the experiment. In the free viewing block, participants were instructed to look anywhere on the face to help them determine what expression was on the face, while in the attend-to-mouth and attend-to-eyes blocks participants were instructed to focus only on those regions of the face, respectively. Each trial in the free viewing block began with a fixation cross in the center of the screen presented for 1.5 sec, followed by a face presented for 500 msec such that the tip of the nose was coincident with the previous location of the fixation cross. The course of the trial was identical in the attend-to-mouth and attend-to-eyes conditions, except that the fixation cross was shifted down or up on the screen to appear where the mouth or eyes, respectively, would subsequently appear. After each trial subjects were asked to identify the expression on the face as one of the six basic emotions (fear, anger, happiness, sadness, disgust, or surprise). Subjects provided their responses using a keyboard. The duration of the experimental task was approximately 25 min.
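One way to satisfy the block constraints described above (360 images, three blocks of 120, every emotion and intensity shown once by a male and once by a female model per block, no image repeated) is to deal the three same-sex models out across the three blocks for each emotion and intensity. The sketch below illustrates that scheme; it is not the presentation software used in the study, and the model identifiers, block labels, and function name are hypothetical.

```python
import random

EMOTIONS = ["fear", "anger", "happiness", "sadness", "disgust", "surprise"]
INTENSITIES = list(range(10, 101, 10))  # 10%, 20%, ..., 100%
MODELS = {"male": ["M1", "M2", "M3"], "female": ["F1", "F2", "F3"]}  # hypothetical IDs
BLOCKS = ["free_viewing", "attend_to_mouth", "attend_to_eyes"]  # fixed block order


def build_blocks(seed=None):
    """Assign all 360 images to three blocks of 120 without replacement."""
    rng = random.Random(seed)
    blocks = {name: [] for name in BLOCKS}
    for emotion in EMOTIONS:
        for intensity in INTENSITIES:
            for sex, models in MODELS.items():
                # Shuffle the three same-sex models and give one to each block,
                # so each block shows this emotion/intensity once per sex.
                shuffled = rng.sample(models, k=len(models))
                for block_name, model in zip(BLOCKS, shuffled):
                    blocks[block_name].append((emotion, intensity, sex, model))
    for trials in blocks.values():
        rng.shuffle(trials)  # randomize trial order within each block
    return blocks


blocks = build_blocks(seed=0)
print({name: len(trials) for name, trials in blocks.items()})
```

Because each of the 6 x 10 x 2 emotion, intensity, and sex combinations contributes exactly one image to every block, the block sizes come out at 120 by construction rather than by rejection sampling.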

2.4. Supplementary cognitive tasks

All vmPFC lesion patients completed an abbreviated form of the Wechsler Adult Intelligence Scale-IV (WAIS) (Wechsler, 2008) to estimate IQ and its subcomponents. The mean WAIS score for vmPFC lesion patients was 99.93 (SD = 8.70, range = 91–112), indicating that vmPFC lesion patients were in the normal range of IQ scores. All vmPFC lesion patients and BDC patients completed the Trail Making Test (Reitan & Wolfson, 1985) to test for differences in visual search abilities and working memory. All participants completed the Wide Range Achievement Test 4 Blue Reading subtest (Wilkinson & Robertson, 2006) as an additional IQ estimate. Results of these neuropsychological measures are presented in Table 1.

Table 1 – Group demographics.

| Group | Sex | Age | IQ | Trails A | Trails B-A |
|---|---|---|---|---|---|
| Healthy | 12 M, 12 F | 61.88 (3.88) | 111.92* (6.26) | NA | NA |
| BDC | 2 M, 3 F | 58.60 (9.15) | 102.00 (9.19) | 32.95 (7.55) | 46.63 (30.56) |
| vmPFC | 4 M, 3 F | 56.57 (9.61) | 102.00 (11.15) | 39.93 (11.60) | 52.76 (26.22) |

Age = age at time of testing. IQ = estimated IQ based on Wide Range Achievement Test 4 Blue Reading subtest (Wilkinson & Robertson, 2006). Trails A = Trail Making Test (Reitan & Wolfson, 1985) Part A time to completion (sec). Trails B-A = Trail Making Test Part B time to completion (sec) minus Part A time to completion. Means are presented with SD in parentheses.

\* Significantly greater than both BDC (W = 19.5, p = .020) and vmPFC patients (W = 132.5, p = .023).

2.5. Statistical analyses

All analyses were performed in R. Emotion recognition accuracy was calculated as the number of correct responses divided by the number of trials for a given emotion in a given intensity bin. Intensity was binned as low (10–30%), moderate (40–60%), and high (70–100%). Due to the small sample sizes of patient groups, we performed non-parametric tests to address study hypotheses. For the free viewing block we used a two-tailed Kruskal–Wallis test to detect an emotion recognition accuracy difference amongst groups within a given emotion and intensity level. If this multi-group test was significant, it was followed by between group comparisons with Mann–Whitney U tests, to address the hypothesis that the vmPFC lesion group would have impaired emotion recognition relative to comparison groups. This manner of “protected” testing helps limit the number of statistical tests performed throughout the analysis. Finally, to address the hypothesis that vmPFC lesion patients' emotion recognition impairments could be improved by effortful attention to the eyes of emotional faces, for any emotion and intensity in which the vmPFC group had impaired recognition relative to comparison groups, we used a Mann–Whitney U test to determine if the vmPFC group's performance in the attend-to-eyes block was improved relative to the free viewing and attend-to-mouth blocks.
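The analysis pipeline described above can be sketched as follows. This is an illustration in Python rather than the authors' R code: all function names and the toy accuracy values are made up, the high bin is assumed to run to 100%, the Kruskal–Wallis p-value uses the closed-form χ² tail that holds only for three groups (df = 2), and the follow-up step returns Mann–Whitney U statistics without p-values.

```python
import math
from collections import Counter
from itertools import combinations


def intensity_bin(intensity):
    """Map a morph intensity (10-100%) onto the low / moderate / high bins."""
    if 10 <= intensity <= 30:
        return "low"
    if 40 <= intensity <= 60:
        return "moderate"
    if 70 <= intensity <= 100:
        return "high"
    raise ValueError(f"unexpected intensity: {intensity}")


def accuracy(trials, emotion, bin_name):
    """Proportion correct; each trial is (shown_emotion, intensity, response)."""
    cell = [t for t in trials if t[0] == emotion and intensity_bin(t[1]) == bin_name]
    return sum(t[2] == t[0] for t in cell) / len(cell)


def midranks(values):
    """1-based ranks of the pooled values; tied values share their average rank."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def kruskal_wallis(groups):
    """Tie-corrected Kruskal-Wallis H for a list of samples (not all values equal)."""
    pooled = [x for group in groups for x in group]
    n = len(pooled)
    ranks = midranks(pooled)
    total, start = 0.0, 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        total += rank_sum ** 2 / len(group)
        start += len(group)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    ties = sum(c ** 3 - c for c in Counter(pooled).values())
    return h / (1 - ties / (n ** 3 - n))


def mann_whitney_u(x, y):
    """Number of (x, y) pairs with x > y, counting ties as one half."""
    return sum((a > b) + 0.5 * (a == b) for a in x for b in y)


def protected_test(groups, alpha=0.05):
    """Omnibus test first; pairwise U statistics only if the omnibus is significant."""
    h = kruskal_wallis(list(groups.values()))
    p = math.exp(-h / 2)  # chi-square upper tail, valid for exactly three groups
    if p >= alpha:
        return h, p, {}
    pairs = {(a, b): mann_whitney_u(groups[a], groups[b])
             for a, b in combinations(groups, 2)}
    return h, p, pairs


# Toy data (made up): per-subject accuracies for one emotion and intensity bin.
toy_groups = {
    "vmPFC": [0.17, 0.25, 0.33],
    "BDC": [0.42, 0.50, 0.58],
    "healthy": [0.67, 0.75, 0.83],
}
h_stat, p_value, pairwise = protected_test(toy_groups)
toy_trials = [("anger", 40, "anger"), ("anger", 50, "fear"),
              ("anger", 60, "anger"), ("anger", 10, "sadness")]
moderate_anger = accuracy(toy_trials, "anger", "moderate")
```

R's `wilcox.test` reports the Mann–Whitney statistic for its first sample as `W`, so the W values given in the Results presumably correspond to a count of this kind.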

3. Results

3.1. Free viewing

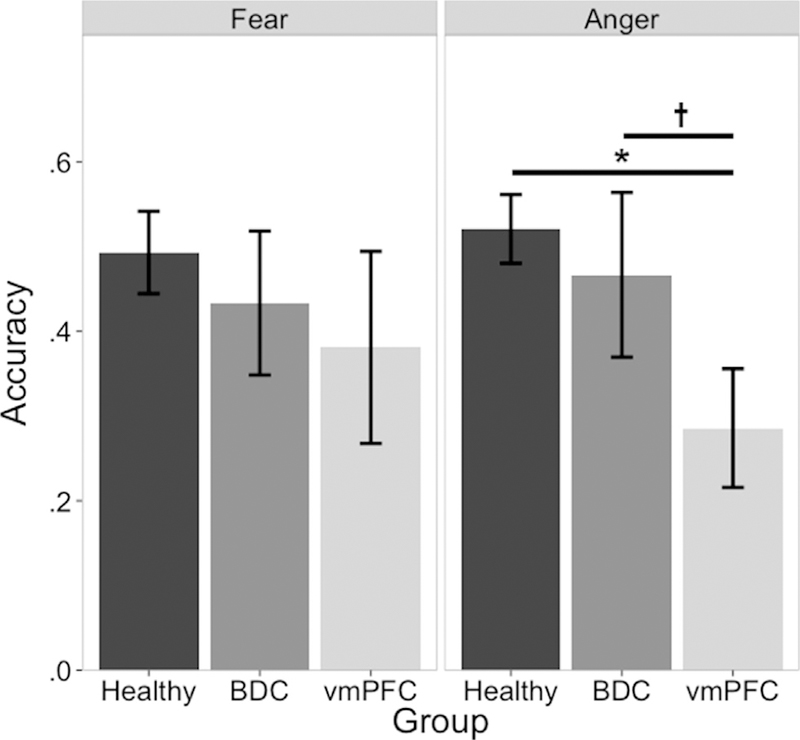

There were no significant group differences in emotion recognition accuracy of any emotion at low intensities (all p > .107; results of all intensities and conditions available in Supplementary Materials). At moderate intensities, there were no significant group differences in emotion recognition accuracy for fear (χ² = 1.15, p = .562; Fig. 2), surprise (χ² = .80, p = .669), disgust (χ² = .70, p = .705), happiness (χ² = 2.35, p = .308), or sadness (χ² = 2.57, p = .277). However, there was a significant group difference for anger at moderate intensity (χ² = 7.10, p = .029; Fig. 2). Consistent with the hypothesis that the vmPFC lesion group would have impaired recognition of moderate intensity emotion relative to comparison groups, the vmPFC group had significantly lower recognition accuracy of moderate intensity anger than the healthy comparison group (W = 136, p = .012) and showed a trend towards lower accuracy than the BDC group (W = 28, p = .086), while the two comparison groups did not significantly differ (W = 45, p = .387). Thus, the only recognition deficit specific to the vmPFC lesion group was for moderate intensity angry faces.
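As a consistency check on the statistics above: with three groups, the Kruskal–Wallis statistic is referred to a χ² distribution with 2 degrees of freedom, whose upper-tail probability has the closed form exp(−χ²/2). The short sketch below recomputes the moderate intensity p-values from the reported statistics. The function name is hypothetical, and small discrepancies in the third decimal are expected because the reported statistics are rounded to two decimals.

```python
import math


def chi2_upper_tail_df2(statistic):
    """Upper-tail probability of a chi-square variable with 2 degrees of freedom."""
    return math.exp(-statistic / 2)


# (chi-square, p) as reported for moderate intensity expressions, free viewing.
reported = {
    "fear": (1.15, 0.562),
    "surprise": (0.80, 0.669),
    "disgust": (0.70, 0.705),
    "happiness": (2.35, 0.308),
    "sadness": (2.57, 0.277),
    "anger": (7.10, 0.029),
}

recomputed = {emotion: chi2_upper_tail_df2(stat) for emotion, (stat, _) in reported.items()}
for emotion, (stat, p) in reported.items():
    print(f"{emotion:9s} chi2 = {stat:4.2f}  reported p = {p:.3f}  "
          f"recomputed p = {recomputed[emotion]:.3f}")
```

The recomputed values agree with the reported ones to within rounding, which supports reading each test as a three-group comparison with 2 degrees of freedom.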

Fig. 2 – Group effects of free viewing moderate intensity fear and anger. Left panel: there were no group differences for recognition of moderate intensity fear in the free viewing condition. Right panel: vmPFC lesion patients had lower recognition accuracy than either comparison group. *p < .05, †p < .1.

3.2. Gaze manipulation

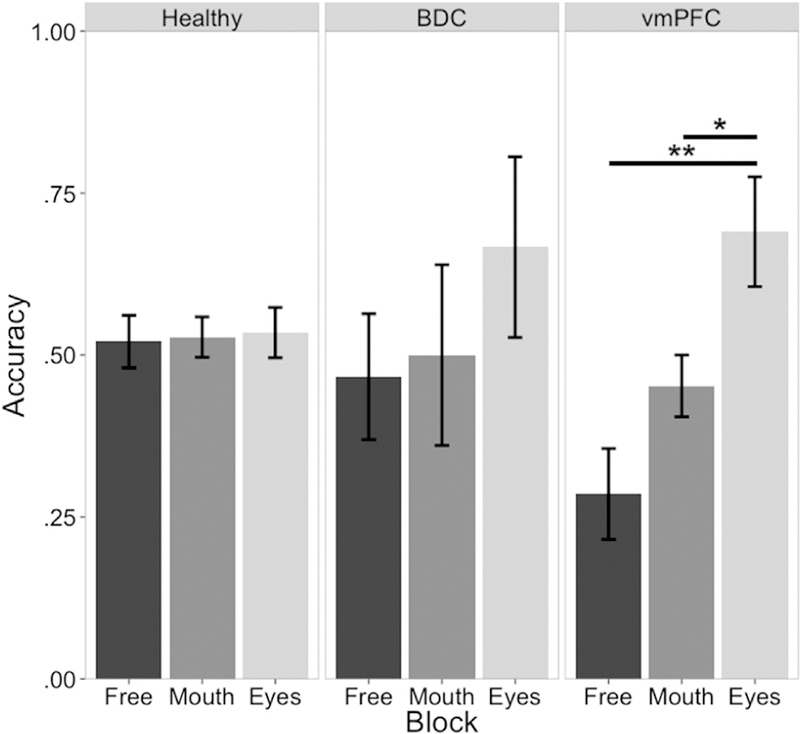

Consistent with the hypothesis that effortful attention to the eyes of emotional faces would improve vmPFC lesion patients' emotion recognition impairments, the vmPFC lesion group showed better moderate intensity anger recognition in the attend-to-eyes block relative to the attend-to-mouth block (W = 40, p = .049) and the free viewing block (W = 45, p = .009) (Fig. 3). There was also a trend towards vmPFC lesion patients having improved moderate intensity anger recognition in the attend-to-mouth block relative to the free viewing block (W = 9.5, p = .054). This performance improvement was not observed in either comparison group; the healthy comparison group did not have significantly better moderate intensity anger recognition accuracy in the attend-to-eyes block relative to the attend-to-mouth block (W = 287.5, p = .999) or to the free viewing block (W = 287.5, p = .999). Likewise, the BDC group also did not have significantly higher moderate intensity anger recognition accuracy in the attend-to-eyes block relative to the attend-to-mouth block (W = 17, p = .398) or to the free viewing block (W = 17.5, p = .338). Finally, the attend-to-eyes instruction raised the vmPFC lesion group's performance to normal; there was no significant difference between groups for moderate intensity anger recognition during the attend-to-eyes block (χ² = 3.64, p = .162).

Fig. 3 – Moderate intensity anger recognition across groups and conditions. There was no moderate intensity anger recognition accuracy improvement in the attend-to-eyes condition relative to the free viewing condition for either healthy comparison subjects (left panel) or BDC patients (middle panel). Ventromedial PFC lesion patients' free viewing moderate intensity anger recognition impairment was rescued to normal in the attend-to-eyes condition. *p < .05, **p < .01.

4. Discussion

The present study examined the extent to which vmPFC contributes to the recognition of emotional expressions across different intensities. Our specific hypothesis of a low or moderate intensity fear recognition impairment amongst patients with vmPFC lesions was not supported; patients with vmPFC lesions had comparable recognition accuracy of fear relative to comparison subjects across intensities. However, consistent with the notion that vmPFC plays a role in emotion recognition, patients with vmPFC lesions were impaired at recognizing moderate intensity anger relative to comparison subjects. Moreover, patients with vmPFC lesions improved to normal levels of recognition accuracy when paying effortful attention to the eyes of moderate intensity anger faces. In addition to broadening the literature on vmPFC's role in emotion recognition, the gaze manipulation results offer novel insight on the precise contributions of vmPFC during the complex process of identifying facial expressions; vmPFC may contribute to the spatial deployment of visual attention during emotion recognition.

An important remaining question is the specificity of the emotion recognition impairment in patients with vmPFC damage. Several studies have identified emotion recognition impairments in individuals with vmPFC damage, although the specificity of the impairment differs across studies. Three studies of patients with vmPFC lesions found general emotion recognition impairments across all basic emotions (Heberlein et al., 2008; Hornak et al., 1996; Tsuchida & Fellows, 2012). Similar to the present study, a case study of a patient with a right vmPFC lesion reported an impairment in recognizing anger and disgust (Blair & Cipolotti, 2000). It is thus still unclear whether there is a general emotion recognition impairment related to vmPFC damage, or if this impairment is specific to negative emotions such as anger. Notably, some studies reporting more general impairments in patients with vmPFC damage have employed a multidimensional emotion rating task (Heberlein et al., 2008; Tsuchida & Fellows, 2012), in which each stimulus is rated for the presence of each of the basic emotions, rather than a forced-choice labeling task like the current study. One possible explanation for the discrepancy in generality of impairment may be that patients with vmPFC lesions are most profoundly impaired at anger recognition, but are also impaired at recognizing other emotions to a lesser degree that is only detectable with a dependent measure more sensitive than forced-choice labeling. The current study supports the view that vmPFC is important for emotion recognition, at least for anger.

Previous work has implicated visual attention to the eyes as a key factor in emotion recognition (Adolphs et al., 2005; Eisenbarth & Alpers, 2011; Smith, Cottrell, Gosselin, & Schyns, 2005). In the current study we found that exogenously directed attention to the eyes of moderate intensity anger faces was sufficient to improve recognition accuracy for patients with vmPFC damage, suggesting that the emotion recognition deficit associated with vmPFC damage is due in part to failing to deploy visual attention to emotionally informative regions of the face. This notion is further supported by previous work from our lab showing that patients with vmPFC damage make fewer fixations to the eyes of emotional faces during emotion recognition (Wolf et al., 2014). High spatial frequency information from the eyes of emotional faces is critical for discriminating fear, sadness, and anger from other emotions (Adolphs et al., 2005; Smith et al., 2005). These findings, together, indicate that vmPFC may play a role in deploying visual attention to emotionally salient regions of the face.

It is interesting to note that a similar pattern of findings has previously been observed in a patient with bilateral amygdala lesions (Adolphs et al., 2005; Adolphs, Tranel, Damasio, & Damasio, 1994). Given that this pattern of results has now separately been observed in patients with amygdala lesions (for fear) and patients with vmPFC lesions (for anger), it seems that both of these brain regions contribute to emotion recognition and fixating to emotionally salient regions of the face. It is likely that the amygdala and vmPFC actively exchange information during emotion recognition, and one result of this communication is the prototypic pattern of fixations to the eyes (Eisenbarth & Alpers, 2011). That these two regions share information during emotion recognition also fits well with current theories of affective visual stimulus processing, which have proposed that the amygdala helps coordinate cortical networks to evaluate the biological significance of visual stimuli (Pessoa & Adolphs, 2010). Additionally, a number of studies implicate vmPFC in affective and value-based decision-making (Bechara, Damasio, Damasio, & Anderson, 1994; Plassmann, O'Doherty, & Rangel, 2007; Plassmann, O'Doherty, & Rangel, 2010), and vmPFC is theorized to generate affective meaning for stimuli (Roy, Shohamy, & Wager, 2012). One possibility within these frameworks is that vmPFC provides affective value information to the amygdala about emotional expressions, and both regions need to be intact for healthy fixation patterns. Future research is necessary to more directly investigate the role of vmPFC during emotion recognition and address the hypothesis that vmPFC signals the affective value of visual stimuli.

While the current study provides novel evidence of vmPFC's role in emotion recognition, it is not without limitations. One limitation, present in the majority of human lesion work, is the inability to determine whether observed differences in the vmPFC lesion patient group are due to loss of gray matter in vmPFC or due to damage to fibers passing through vmPFC. One recent lesion study in non-human primates reported that emotion regulation abnormalities were only observed in animals that received ventromedial aspiration lesions, which also damage adjacent white matter (Rudebeck, Saunders, Prescott, Chau, & Murray, 2013), but these measures were normal in animals that received fiber-sparing excitotoxic lesions in orbitofrontal cortex. However, animals in this study that received excitotoxic orbitofrontal cortex lesions were still impaired in value-based decision-making. It remains unclear to what extent vmPFC gray matter, versus the underlying white matter, is necessary for affective value judgments in humans.

Another aspect of the current study that warrants discussion is the modest significance of the group difference in moderate intensity anger recognition during free viewing. Given the number of emotions tested, this anger recognition difference would not survive a traditional multiple comparisons correction, such as the Bonferroni method. However, the observed effect is consistent with previous findings from other labs (Blair & Cipolotti, 2000; Heberlein et al., 2008; Tsuchida & Fellows, 2012). Additionally, the effect sizes of the vmPFC group's difference relative to the healthy and BDC groups were r = .45 and r = .52, respectively, indicating medium to large effect sizes, despite the group difference between vmPFC and BDC patients being limited to a trend. One likely explanation for the modest significance of the moderate intensity anger recognition difference across groups is the size of the vmPFC lesion patient sample. The present study employed stringent inclusion criteria that required patients to have damage localized to vmPFC, without substantial involvement of dorsomedial PFC, lateral PFC, or temporal lobe. Studies with larger samples of vmPFC patients have included subjects with more widely distributed damage. Additionally, the novel contribution of the present study is demonstrating that emotion recognition amongst vmPFC lesion patients can be improved with effortful fixation to the eyes of emotional faces. This effect was demonstrably large and specific to vmPFC lesion patients, further underscoring the likely presence of true anger recognition impairments under free viewing conditions for patients with vmPFC lesions.
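The text does not state how the effect sizes were computed. A common convention for rank-based tests is r = |Z| / √N, with Z taken from the normal approximation to the Mann–Whitney statistic. The sketch below applies that convention to the reported W values, assuming group sizes of 24 healthy, 5 BDC, and 7 vmPFC participants, no tie or continuity correction, and one Bonferroni test per emotion. The function name is hypothetical, and the results should be read as approximations, not as a reproduction of the reported figures.

```python
import math


def effect_size_r(u, n1, n2):
    """r = |Z| / sqrt(N), Z from the normal approximation to U (no tie correction)."""
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u
    return abs(z) / math.sqrt(n1 + n2)


r_vs_healthy = effect_size_r(136, 24, 7)  # vmPFC vs. healthy comparison
r_vs_bdc = effect_size_r(28, 5, 7)        # vmPFC vs. BDC

# Bonferroni threshold, assuming one omnibus test per emotion (six tests).
bonferroni_alpha = 0.05 / 6
anger_survives = 0.029 < bonferroni_alpha

print(f"r vs. healthy = {r_vs_healthy:.2f}, r vs. BDC = {r_vs_bdc:.2f}")
print(f"Bonferroni alpha = {bonferroni_alpha:.4f}, anger survives: {anger_survives}")
```

Under these assumptions the approximation gives values near .44 and .49, close to but not identical with the reported .45 and .52; a tie correction or a different effect size formula could account for the gap. The omnibus p = .029 for anger exceeds the assumed threshold of roughly .008, in line with the statement that the difference would not survive Bonferroni correction.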

The interpretation of these data is also limited by the lack of counterbalancing of task order. While the present results are consistent with exogenous direction of attention improving emotion recognition in patients with vmPFC lesions, we cannot rule out the possibility that this improvement across conditions is due to a practice effect. To prevent participants from identifying fixating on the eyes as an optimal strategy, the attend-to-eyes condition was always presented last. This aspect of study design ensures the integrity of the free viewing and attend-to-mouth conditions, but introduces practice as a potential confound. Although there is no evidence of a practice effect in the healthy or BDC groups (Fig. 3), it is possible that the vmPFC lesion group was specifically susceptible to practice. As such, we consider these results preliminary evidence that warrants replication in future work with counterbalanced task order.

Finally, it is worth noting that this study is limited by suboptimal matching across groups on IQ. Emotion recognition ability is known to relate to intellectual ability in both healthy (Mayer & Geher, 1996) and clinical populations (Buitelaar, Wees, Swaab-Barneveld, & Gaag, 1999). Given this, it is possible that the moderate intensity anger recognition impairment observed in patients with vmPFC lesions was driven by differences in IQ. It is worth noting, however, that the vmPFC and BDC groups were well matched on IQ and there was still a trend towards vmPFC patients having lower moderate intensity anger recognition. Nonetheless, this finding warrants replication in a sample adequately matched for IQ.

In sum, the present study adds to a growing literature identifying a role for vmPFC in recognizing emotional facial expressions, and critically expands this literature by demonstrating that emotion recognition impairments present in patients with vmPFC damage stem from a failure to fixate to emotionally salient regions of the face. These results implicate vmPFC in a broader network for emotion recognition. Given that vmPFC dysfunction is hypothesized to be involved in the neuropathogenesis of a number of psychiatric disorders (Blair, 2007; Etkin & Wager, 2007; Hamani et al., 2011; Myers-Schulz & Koenigs, 2012), further research into the specific role of vmPFC in emotion recognition and its contribution to visual attention can help yield advances in developing targeted treatments for multiple disorders of social and affective function.

Acknowledgments

The authors would like to thank James Blair for graciously providing the stimuli used in the present study, Jaryd Hiser for assisting in participant scheduling, and Julian Motzkin for assisting with lesion tracing.

Funding

Support for RCW was provided by the National Institutes of Health Training Grant (T32-GM007507) and the National Science Foundation Graduate Research Fellowship (DGE1256259). Funding sources did not have any direct role in study design, analysis or interpretation of data, or preparation of the manuscript.

Abbreviation:

- BDC: brain damaged comparison

Footnotes

The authors declare no competing financial interests.

REFERENCES

- Adolphs R (2002). Neural systems for recognizing emotion. Current Opinion in Neurobiology, 12, 169–177.

- Adolphs R, Gosselin F, Buchanan TW, Tranel D, Schyns P, & Damasio AR (2005). A mechanism for impaired fear recognition after amygdala damage. Nature, 433, 68–72.

- Adolphs R, Tranel D, Damasio H, & Damasio A (1994). Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature, 372, 669–672.

- Anderson IM, Shippen C, Juhasz G, Chase D, Thomas E, Downey D, et al. (2011). State-dependent alteration in face emotion recognition in depression. The British Journal of Psychiatry: the Journal of Mental Science, 198, 302–308.

- Avants B, & Gee JC (2004). Geodesic estimation for large deformation anatomical shape averaging and interpolation. NeuroImage, 23(Suppl 1), S139–S150.

- Barrash J, Tranel D, & Anderson SW (2000). Acquired personality disturbances associated with bilateral damage to the ventromedial prefrontal region. Developmental Neuropsychology, 18, 355–381.

- Bechara A, Damasio AR, Damasio H, & Anderson SW (1994). Insensitivity to future consequences following damage to human prefrontal cortex. Cognition, 50, 7–15.

- Blair RJR (2007). The amygdala and ventromedial prefrontal cortex in morality and psychopathy. Trends in Cognitive Sciences, 11, 387–392.

- Blair RJR, & Cipolotti L (2000). Impaired social response reversal. A case of ‘acquired sociopathy’. Brain, 123(Pt 6), 1122–1141.

- Blair RJR, Mitchell D, Peschardt K, Colledge E, Leonard R, Shine J, et al. (2004). Reduced sensitivity to others' fearful expressions in psychopathic individuals. Personality and Individual Differences, 37, 1111–1122.

- Brett M, Leff AP, Rorden C, & Ashburner J (2001). Spatial normalization of brain images with focal lesions using cost function masking. NeuroImage, 14, 486–500.

- Buitelaar JK, Wees MVD, Swaab-Barneveld H, & Gaag RJVD (1999). Verbal memory and performance IQ predict theory of mind and emotion recognition ability in children with autistic spectrum disorders and in psychiatric control children. Journal of Child Psychology and Psychiatry, 40, 869–881.

- Croker V, & McDonald S (2005). Recognition of emotion from facial expression following traumatic brain injury. Brain Injury, 19, 787–799.

- Damasio AR (1996). The somatic marker hypothesis and the possible functions of the prefrontal cortex. Philosophical Transactions of the Royal Society B: Biological Sciences, 351, 1413–1420.

- Eisenbarth H, & Alpers GW (2011). Happy mouth and sad eyes: Scanning emotional facial expressions. Emotion, 11, 860–865.

- Ekman P, & Friesen WV (1971). Constants across cultures in the face and emotion. Journal of Personality and Social Psychology, 17, 124–129.

- Ekman P, & Friesen WV (1976). Pictures of facial affect. Palo Alto: Consulting Psychologists Press.

- Eldar S, Apter A, Lotan D, Edgar KP, Naim R, Fox NA, et al. (2012). Attention bias modification treatment for pediatric anxiety disorders: A randomized controlled trial. The American Journal of Psychiatry, 169, 213–220.

- Eslinger PJ, & Damasio AR (1985). Severe disturbance of higher cognition after bilateral frontal lobe ablation: Patient EVR. Neurology, 35, 1731–1741.

- Etkin A, & Wager TD (2007). Functional neuroimaging of anxiety: A meta-analysis of emotional processing in PTSD, social anxiety disorder, and specific phobia. The American Journal of Psychiatry, 164, 1476–1488.

- Fusar-Poli P, Placentino A, Carletti F, Landi P, Allen P, Surguladze S, et al. (2009). Functional atlas of emotional faces processing: A voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. Journal of Psychiatry & Neuroscience, 34, 418–432.

- Ghashghaei HT, & Barbas H (2002). Pathways for emotion: Interactions of prefrontal and anterior temporal pathways in the amygdala of the rhesus monkey. Neuroscience, 115, 1261–1279.

- Gothard KM, Battaglia FP, Erickson CA, Spitler KM, & Amaral DG (2007). Neural responses to facial expression and face identity in the monkey amygdala. Journal of Neurophysiology, 97, 1671–1683.

- Hakamata Y, Lissek S, Bar-Haim Y, Britton JC, Fox NA, Leibenluft E, et al. (2010). Attention bias modification treatment: A meta-analysis toward the establishment of novel treatment for anxiety. Biological Psychiatry, 68, 982–990.

- Hamani C, Mayberg H, Stone S, Laxton A, Haber S, & Lozano AM (2011). The subcallosal cingulate gyrus in the context of major depression. Biological Psychiatry, 69, 301–308.

- Heberlein AS, Padon AA, Gillihan SJ, Farah MJ, & Fellows LK (2008). Ventromedial frontal lobe plays a critical role in facial emotion recognition. Journal of Cognitive Neuroscience, 20, 721–733.

- Henry JD, Phillips LH, Beatty WW, McDonald S, Longley WA, Joscelyne A, et al. (2009). Evidence for deficits in facial affect recognition and theory of mind in multiple sclerosis. Journal of the International Neuropsychological Society, 15, 277–285.

- Hornak J, Bramham J, Rolls ET, Morris RG, O'Doherty J, Bullock PR, et al. (2003). Changes in emotion after circumscribed surgical lesions of the orbitofrontal and cingulate cortices. Brain, 126, 1691–1712.

- Hornak J, Rolls ET, & Wade D (1996). Face and voice expression identification in patients with emotional and behavioural changes following ventral frontal lobe damage. Neuropsychologia, 34, 247–261.

- Kohler CG, Turner TH, Bilker WB, Brensinger CM, Siegel SJ, Kanes SJ, et al. (2003). Facial emotion recognition in schizophrenia: Intensity effects and error pattern. The American Journal of Psychiatry, 160, 1768–1774.

- Marsh AA, Yu HH, Pine DS, & Blair RJR (2010). Oxytocin improves specific recognition of positive facial expressions. Psychopharmacology, 209, 225–232.

- Masten CL, Guyer AE, Hodgdon HB, McClure EB, Charney DS, Ernst M, et al. (2008). Recognition of facial emotions among maltreated children with high rates of posttraumatic stress disorder. Child Abuse & Neglect, 32, 139–153.

- Mayer JD, & Geher G (1996). Emotional intelligence and the identification of emotion. Intelligence, 22, 89–113.

- Myers-Schulz B, & Koenigs M (2012). Functional anatomy of ventromedial prefrontal cortex: Implications for mood and anxiety disorders. Molecular Psychiatry, 17, 132–141.

- Pelphrey KA, Sasson NJ, Reznick JS, Paul G, Goldman BD, & Piven J (2002). Visual scanning of faces in autism. Journal of Autism and Developmental Disorders, 32, 249–261.

- Pessoa L, & Adolphs R (2010). Emotion processing and the amygdala: From a ‘low road’ to ‘many roads’ of evaluating biological significance. Nature Reviews. Neuroscience, 11, 773–783.

- Plassmann H, O'Doherty J, & Rangel A (2007). Orbitofrontal cortex encodes willingness to pay in everyday economic transactions. The Journal of Neuroscience, 27, 9984–9988.

- Plassmann H, O'Doherty JP, & Rangel A (2010). Appetitive and aversive goal values are encoded in the medial orbitofrontal cortex at the time of decision making. The Journal of Neuroscience, 30, 10799–10808.

- Pollak SD, & Kistler DJ (2002). Early experience is associated with the development of categorical representations for facial expressions of emotion. Proceedings of the National Academy of Sciences, 99, 9072–9076.

- Reitan RM, & Wolfson D (1985). The Halstead-Reitan neuropsychological test battery: Theory and clinical interpretation (Vol. 4). Reitan Neuropsychology.

- Roy M, Shohamy D, & Wager TD (2012). Ventromedial prefrontal-subcortical systems and the generation of affective meaning. Trends in Cognitive Sciences, 16, 147–156.

- Rudebeck PH, Saunders RC, Prescott AT, Chau LS, & Murray EA (2013). Prefrontal mechanisms of behavioral flexibility, emotion regulation and value updating. Nature Neuroscience, 16, 1140–1145.

- Segonne F, Dale AM, Busa E, Glessner M, Salat D, Hahn HK, et al. (2004). A hybrid approach to the skull stripping problem in MRI. NeuroImage, 22, 1060–1075.

- Shamay-Tsoory SG, Tomer R, Berger BD, & Aharon-Peretz J (2003). Characterization of empathy deficits following prefrontal brain damage: The role of the right ventromedial prefrontal cortex. Journal of Cognitive Neuroscience, 15, 324–337.

- Shaw P, Bramham J, Lawrence EJ, Morris R, Baron-Cohen S, & David AS (2005). Differential effects of lesions of the amygdala and prefrontal cortex on recognizing facial expressions of complex emotions. Journal of Cognitive Neuroscience, 17, 1410–1419.

- Smith ML, Cottrell GW, Gosselin F, & Schyns PG (2005). Transmitting and decoding facial expressions. Psychological Science, 16, 184–189.

- Tsuchida A, & Fellows LK (2012). Are you upset? Distinct roles for orbitofrontal and lateral prefrontal cortex in detecting and distinguishing facial expressions of emotion. Cerebral Cortex, 22, 2904–2912.

- Wechsler D (Ed.). (2008). Wechsler adult intelligence scale (Fourth Edition). San Antonio, TX: Pearson.

- Whalen PJ, Kagan J, Cook RG, Davis FC, Kim H, Polis S, et al. (2004). Human amygdala responsivity to masked fearful eye whites. Science, 306, 2061.

- Whalen PJ, Rauch SL, Etcoff NL, McInerney SC, Lee MB, & Jenike MA (1998). Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. The Journal of Neuroscience: the Official Journal of the Society for Neuroscience, 18, 411–418.

- Wilkinson GS, & Robertson G (2006). Wide range achievement test (WRAT4). Lutz: Psychological Assessment Resources.

- Wolf RC, Philippi CL, Motzkin JC, Baskaya MK, & Koenigs M (2014). Ventromedial prefrontal cortex mediates visual attention during facial emotion recognition. Brain, 137, 1772–1780.
