Abstract

Our ability to make sense of the auditory world results from neural processing that begins in the ear, goes through multiple subcortical areas, and continues in the cortex. The specific contribution of the auditory cortex to this chain of processing is far from understood. Although many of the properties of neurons in the auditory cortex resemble those of subcortical neurons, they show somewhat more complex selectivity for sound features, which is likely to be important for the analysis of natural sounds, such as speech, in real-life listening conditions. Furthermore, recent work has shown that auditory cortical processing is highly context-dependent, integrates auditory inputs with other sensory and motor signals, depends on experience, and is shaped by cognitive demands, such as attention. Thus, in addition to being the locus for more complex sound selectivity, the auditory cortex is increasingly understood to be an integral part of the network of brain regions responsible for prediction, auditory perceptual decision-making, and learning. In this review, we focus on three key areas that are contributing to this understanding: the sound features that are preferentially represented by cortical neurons, the spatial organization of those preferences, and the cognitive roles of the auditory cortex.

Keywords: auditory cortex, receptive field, model, map, cognition, plasticity

Introduction

Our seemingly effortless ability to localize, distinguish, and recognize a vast array of natural sounds, including speech and music, results from the neural processing that begins in the inner ear and continues through a complex sequence of subcortical and cortical brain areas. Many aspects of hearing, such as computation of the cues that enable sound sources to be localized or their pitch to be extracted, rely on the processing that takes place in the brainstem and other subcortical structures. It is widely thought, however, that the auditory cortex plays a critical role in the perception of complex sounds. Although this partly reflects the emergence of new response properties, such as sensitivity to combinations of sound features, it is striking how similar many of the properties of neurons in the primary auditory cortex (A1) are to those of subcortical neurons 1. Equally important is the growing realization that auditory cortical processing, in particular, is highly context-dependent and integrates auditory (and other sensory) inputs with information about an individual’s current internal state, including their arousal level, focus of attention, and motor planning, as well as their past experience 2. The auditory cortex is therefore an integral part of the network of brain regions responsible for generating meaning from sounds, auditory perceptual decision-making, and learning.

In this review, we focus on three key areas of auditory cortical processing where there has been progress in the last few years. First, we consider what sound features A1 neurons represent, highlighting recent attempts to improve receptive field models that can predict the responses of neurons to natural sounds. Second, we examine the distribution of those stimulus preferences within A1 and across the hierarchy of auditory cortical areas, focusing on the extent to which this conforms to canonical principles of columnar organization and functional specialization that are the hallmark of visual and somatosensory processing. Finally, we look at the cognitive role of auditory cortex, highlighting its involvement in prediction, learning, and decision making.

Spectrotemporal receptive fields

The tuning properties of sensory neurons are defined by their receptive fields, which describe the stimulus features to which they are most responsive. Reflecting the spectral analysis that begins in the inner ear, auditory neurons are most commonly characterized by their sensitivity to sound frequency. The spectrotemporal receptive field 3, 4 (STRF) is the dominant computational tool for characterizing the responses of auditory neurons. Most widely used as part of a linear-nonlinear (LN) model, an STRF comprises a set of coefficients that describe how the response of the neuron at each moment in time can be modelled as a linear weighted sum of the recent history of the stimulus power in different spectral channels. Owing to their simplicity, STRFs can be reliably estimated by using randomly chosen structured stimuli, such as ripples 5–7, and relatively small amounts of data. They can then be used, in combination with a static output nonlinearity, to describe and predict neural responses to arbitrary sounds 8, making them invaluable tools in understanding the computational roles of single neurons and whole neuronal populations. However, STRFs do not accurately capture the full complexity of the behavior of auditory neurons; for example, multiple studies have shown that there are differences between STRFs estimated by using standard synthetic stimuli and those estimated by using natural sounds 9–11, suggesting that these models systematically fail to fully describe neural responses to complex stimuli.
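To make the LN formulation concrete, the following is a minimal Python/NumPy sketch, not taken from any of the studies cited here. It simulates a hypothetical neuron whose response is a rectified, linearly weighted sum of the recent history of a white-noise "spectrogram", and then recovers the STRF from stimulus and response by ridge-regularized least squares. The stimulus, the "true" STRF, the number of time lags, the rectifying output nonlinearity, and the ridge penalty `alpha` are all illustrative assumptions.

```python
import numpy as np


def lagged_design(spec, n_lags):
    """Stack the recent stimulus history into a design matrix.

    spec has shape (n_freq, n_time). Row t of the result holds spec[:, t],
    spec[:, t - 1], ..., spec[:, t - n_lags + 1], with zeros before t = 0.
    """
    n_freq, n_time = spec.shape
    X = np.zeros((n_time, n_freq * n_lags))
    for lag in range(n_lags):
        shifted = np.zeros_like(spec)
        shifted[:, lag:] = spec[:, :n_time - lag]
        X[:, lag * n_freq:(lag + 1) * n_freq] = shifted.T
    return X


def ln_response(spec, strf):
    """LN model: weighted sum of the recent spectrogram, then a rectifying output nonlinearity."""
    n_lags = strf.shape[0]
    drive = lagged_design(spec, n_lags) @ strf.reshape(-1)
    return np.maximum(drive, 0.0)


def fit_strf_ridge(spec, resp, n_lags, alpha):
    """Estimate the linear STRF by ridge (L2-penalized) least squares."""
    X = lagged_design(spec, n_lags)
    X = X - X.mean(axis=0)
    y = resp - resp.mean()
    A = X.T @ X + alpha * np.eye(X.shape[1])
    w = np.linalg.solve(A, X.T @ y)
    return w.reshape(n_lags, spec.shape[0])


rng = np.random.default_rng(0)
n_freq, n_lags, n_time = 16, 8, 5000
spec = rng.standard_normal((n_freq, n_time))  # white-noise stand-in for a spectrogram

# Hypothetical STRF: excitation in channel 8 at a 1-bin delay, weaker delayed inhibition in channel 10.
true_strf = np.zeros((n_lags, n_freq))
true_strf[1, 8] = 1.0
true_strf[3, 10] = -0.5

resp = ln_response(spec, true_strf)
est = fit_strf_ridge(spec, resp, n_lags, alpha=10.0)
corr = np.corrcoef(est.ravel(), true_strf.ravel())[0, 1]
print(f"correlation between true and estimated STRF: {corr:.2f}")
```

Because the toy stimulus is Gaussian white noise, the linear estimate recovers the STRF up to a scale factor despite the rectifying nonlinearity. With natural sounds, whose strong spectrotemporal correlations make the regression worse conditioned, the choice of penalty matters much more.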

Multiple stimulus dimensions

Standard STRF models contain a single receptive field—one set of spectrotemporal weighting coefficients that describes a single time-varying spectral pattern to which the neuron is sensitive. There is no reason, however, to believe that the spectrotemporal selectivity of auditory neurons is so simple. Artificial neural networks are built on the principle that arbitrarily complex computations can be constructed from simple neuron-like computing elements, and cortical neurons are likely to have similarly complex selectivity based on nonlinear combination of the responses of afferent neurons with simpler selectivity. Capturing this complexity requires STRF models that nonlinearly combine the responses of neurons to multiple stimulus dimensions.

With a maximally informative dimensions approach, it has been found that multiple stimulus dimensions are required to describe the responses of neurons in A1 12, 13, whereas a similar approach requires only a single dimension to describe most neurons in the inferior colliculus (IC) in the midbrain 14. This suggests that neuronal complexity is higher in the cortex and that this complexity can be captured by nonlinear combination of the responses of multiple simpler units, a finding that also applies to high-level neurons in songbirds 15, 16. Two recent articles have successfully shown that neural networks are an effective way to model these interactions. Harper et al. 17 used a two-layer perceptron to describe the behavior of neurons in ferret auditory cortex, and Kozlov and Gentner 16 used a similar network to describe high-level auditory neurons in the starling. To achieve this, both studies used neural networks of intermediate complexity, thus avoiding an explosion in the number of parameters that must be fitted. They also used careful regularization, in which the model is optimized subject to a penalty on parameter values. Regularization effectively reduces the number of free parameters, meaning that models can be fitted accurately using smaller datasets 18. This approach enabled both studies to show that auditory neurons can be better described by a model that takes into account the tuning of multiple afferent neurons (hidden units), suggesting that the selectivity of individual neurons is the result of a combination of information about multiple stimulus dimensions.
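The core idea of such network models can be sketched as below. This is a hypothetical minimal version in Python/NumPy, assuming sigmoid hidden units (each with its own STRF-like filter), a linear output unit, an L2 penalty weighted by `lam`, and plain gradient descent on a simulated neuron that responds to either of two features. It illustrates the structure and the regularized objective only; it is not the architecture, fitting procedure, or data of Harper et al. or Kozlov and Gentner.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def nrf_forward(X, params):
    """Hidden units apply STRF-like filters (columns of W) and a sigmoid; the output sums them."""
    W, b, v, c = params
    H = sigmoid(X @ W + b)   # (n_time, n_hidden) hidden-unit activity
    return H, H @ v + c      # predicted response, shape (n_time,)


def nrf_loss_and_grads(X, y, params, lam):
    """Mean squared error plus an L2 penalty on filter and output weights, with gradients."""
    W, b, v, c = params
    H, r = nrf_forward(X, params)
    err = r - y
    loss = 0.5 * np.mean(err ** 2) + 0.5 * lam * (np.sum(W ** 2) + np.sum(v ** 2))
    e = err / X.shape[0]
    dZ = np.outer(e, v) * H * (1.0 - H)   # backpropagate through the hidden sigmoid
    grads = (X.T @ dZ + lam * W, dZ.sum(axis=0), H.T @ e + lam * v, e.sum())
    return loss, grads


rng = np.random.default_rng(1)
n_time, n_inputs, n_hidden = 2000, 20, 4
X = rng.standard_normal((n_time, n_inputs))   # stand-in for a lagged-spectrogram design matrix
f1, f2 = np.zeros(n_inputs), np.zeros(n_inputs)
f1[3], f2[12] = 2.0, 2.0
# Hypothetical neuron that responds to either of two spectrotemporal features.
y = sigmoid(X @ f1 - 1.0) + sigmoid(X @ f2 - 1.0)

params = [0.1 * rng.standard_normal((n_inputs, n_hidden)), np.zeros(n_hidden),
          0.1 * rng.standard_normal(n_hidden), 0.0]
lr, lam = 0.5, 1e-4
losses = []
for step in range(500):
    loss, grads = nrf_loss_and_grads(X, y, params, lam)
    losses.append(loss)
    params = [p - lr * g for p, g in zip(params, grads)]
print(f"training loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

Each column of `W` plays the role of one hidden unit's receptive field, and the output weights `v` set how those units are combined; `lam` trades goodness of fit against weight magnitude.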

Other studies using synthetic stimuli also indicate that cortical neurons integrate different sound features, including frequency, spectral bandwidth, level, amplitude modulation over time, and spatial location 19, 20. By using an online optimization procedure (see earlier work by deCharms et al. 21) to dynamically generate sounds that varied along these dimensions, the authors of these studies were able to efficiently search stimulus space and constrain their model within a few minutes of stimulus presentation. They also found that individual neurons multiplex information about multiple stimulus dimensions and that neuronal responses to multidimensional stimuli could not be predicted simply from responses to low-dimensional stimuli.

Taken together, these studies suggest that the successor of the STRF will be a model that allows multiple STRF-like elements to be combined in nonlinear ways. The development of such models will require large datasets that include neuronal responses to complex, natural stimuli.

Dynamic changes in tuning

Static multidimensional models capture complex selectivity for the recent spectral structure of sounds. However, several studies have highlighted the importance of dynamic changes in the response properties of auditory cortical neurons 22–26. Most real-life soundscapes are characterized by constant changes in the statistics of the sounds that reach the ears. Neurons rapidly adapt to stimulus statistics (for example, stimulus probability, contrast, and correlation structure) and adjust their sensitivity in response to behavioral requirements 27–29. This can produce representations that are invariant to some changes in sound features and robustly selective for other features 30.

Several authors have incorporated such adaptation by adding a nonlinear input stage to the standard LN model 25, 31–33. Willmore et al. 33 explicitly incorporated the behavior of afferent neurons into a model of A1 spectrotemporal tuning. In both the auditory nerve 34 and IC 35, dynamic coding occurs in the form of adaptation to mean sound level. When the mean sound level is high, neurons shift their dynamic ranges upwards, so that neuronal responses are relatively invariant to changes in background level. Because these structures are precursors of the auditory cortex, it makes sense to incorporate IC adaptation into the input stage of a model of auditory cortex, and Willmore et al. 33 found that this improved the performance of their model of cortical neurons.
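One simple way to build mean-level adaptation into a model's input stage is to subtract a running average of the recent level in each frequency channel before the spectrogram reaches the STRF. The sketch below assumes a log-level (dB) spectrogram and an exponentially weighted running mean with a hypothetical time constant `tau_bins`; it illustrates the principle of shifting the dynamic range with the background level and is not the specific input stage used by Willmore et al.

```python
import numpy as np


def adapt_to_mean_level(spec_db, tau_bins):
    """Subtract an exponentially weighted running mean level from each frequency channel.

    spec_db has shape (n_freq, n_time) in dB; tau_bins is the adaptation time constant in bins.
    """
    alpha = 1.0 / tau_bins
    running = spec_db[:, 0].copy()
    out = np.empty_like(spec_db)
    for t in range(spec_db.shape[1]):
        running += alpha * (spec_db[:, t] - running)   # leaky integrator tracks the recent mean
        out[:, t] = spec_db[:, t] - running
    return out


rng = np.random.default_rng(2)
n_freq, n_time, tau = 8, 2000, 20
spec_db = rng.normal(0.0, 5.0, size=(n_freq, n_time))   # level fluctuations
spec_db[:, :1000] += 40.0    # quiet background for the first half
spec_db[:, 1000:] += 70.0    # background level steps up by 30 dB
adapted = adapt_to_mean_level(spec_db, tau)
print(f"before step: {adapted[:, 200:1000].mean():.2f}, "
      f"at step: {adapted[:, 1000].mean():.2f}, after: {adapted[:, 1200:].mean():.2f}")
```

After the 30 dB step, the adapted output shows a brief transient and then returns to fluctuating around zero within a few time constants, so a downstream STRF sees the level fluctuations rather than the absolute background level.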

Neurons in ferret A1 show compensatory adaptation to sound contrast (that is, the variance of the sound level distribution) 24, 36. When the contrast of the input to a given neuron is high, the gain of the neuron is reduced, thereby making it relatively insensitive to changes in sound level. When the contrast of the input is low, the gain of the neuron rises, increasing its sensitivity. This adaptive coding therefore tends to compensate for changes in sound contrast. Adaptation to the mean and variance of sound level is particularly beneficial for the representation of dynamic sounds against a background of constant noise. If the noise is statistically stationary (that is, the mean and variance are fixed), then the effect of adaptation is to minimize the responses of cortical neurons to the background. Thus, the neuronal responses depend mainly on the dynamic foreground sound and are relatively invariant to its contrast. This enables cortical neurons to represent complex sounds using a code that is relatively robust to the presence of background noise. Similar noise robustness has been shown independently in the songbird 37 and in decoding studies applied to populations of mammalian cortical neural responses 30, 38.
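The compensatory gain change can be illustrated with a toy rate-level function. In this sketch, which is an assumption rather than a fitted model of ferret A1, contrast is taken as the standard deviation of sound levels (in dB), the gain is set to `k / (sd + sigma_floor)`, and the neuron's normalized rate is a sigmoid of the level relative to the mean. Without adaptation, a high-contrast input drives the sigmoid into saturation most of the time; with the contrast-dependent gain, far fewer responses saturate.

```python
import numpy as np


def adaptive_gain(levels_db, k=2.0, sigma_floor=1.0):
    """Gain falls as contrast (s.d. of recent sound levels, in dB) rises; the floor avoids division by zero."""
    return k / (np.std(levels_db) + sigma_floor)


def rate_level(levels_db, gain, mean_db):
    """Sigmoidal rate-level function (normalized rate) whose slope is set by the gain."""
    return 1.0 / (1.0 + np.exp(-gain * (levels_db - mean_db)))


def fraction_saturated(rates, margin=0.05):
    """Fraction of responses pinned near the floor or ceiling of the rate-level function."""
    return np.mean((rates < margin) | (rates > 1.0 - margin))


rng = np.random.default_rng(3)
mean_db = 50.0
low = rng.normal(mean_db, 2.0, 10_000)    # low-contrast level distribution
high = rng.normal(mean_db, 10.0, 10_000)  # high-contrast level distribution

g_low, g_high = adaptive_gain(low), adaptive_gain(high)
sat_fixed = fraction_saturated(rate_level(high, g_low, mean_db))      # gain left at its low-contrast value
sat_adapted = fraction_saturated(rate_level(high, g_high, mean_db))   # gain adapted to the high contrast
print(f"gain: {g_low:.2f} (low contrast) vs {g_high:.2f} (high contrast)")
print(f"saturated responses at high contrast: {sat_fixed:.2f} fixed vs {sat_adapted:.2f} adapted")
```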

Normative models

To fully understand how sounds are represented by neurons in the auditory cortex, we need to ask why this particular representation has been selected, whether by evolution or by developmental processes. One approach to addressing this question is to build normative computational models. Normative models embody certain constraints that are hypothesized to be important for determining the neural representation. By building normative models and comparing their representations with real physiological representations, it is possible to test hypotheses about which constraints determine the structure of the neural codes used in the brain. For example, Olshausen and Field 39 built a neural network and trained it to produce a sparse, generative representation of natural visual scenes. Once trained, the network’s units exhibited receptive fields with many similarities to those found in neurons in the primary visual cortex (V1), suggesting that V1 itself may be optimized to produce sparse representations of natural visual scenes.
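A sparse, generative objective of this kind can be written as reconstructing an input x from a dictionary Phi with coefficients a while penalizing the coefficients, for example by minimizing 0.5 * ||x - Phi a||^2 + lam * ||a||_1. The sketch below shows only the inference step for that L1-penalized objective, using the iterative shrinkage-thresholding algorithm (ISTA), a standard solver that is not the procedure used by Olshausen and Field. The dictionary is random and fixed; in a full model it would also be learned from natural images or sounds, and its columns would then be compared with measured receptive fields. All sizes and the penalty `lam` are illustrative.

```python
import numpy as np


def soft_threshold(z, t):
    """Shrink each entry toward zero by t, setting small entries exactly to zero."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)


def sparse_code(x, Phi, lam, n_iter=1000):
    """Infer coefficients a minimizing 0.5*||x - Phi a||^2 + lam*||a||_1 with ISTA."""
    L = np.linalg.norm(Phi, 2) ** 2      # Lipschitz constant of the gradient of the squared error
    a = np.zeros(Phi.shape[1])
    for _ in range(n_iter):
        grad = Phi.T @ (Phi @ a - x)
        a = soft_threshold(a - grad / L, lam / L)
    return a


rng = np.random.default_rng(4)
n_dim, n_atoms = 64, 128
Phi = rng.standard_normal((n_dim, n_atoms))
Phi /= np.linalg.norm(Phi, axis=0)       # unit-norm dictionary elements ("receptive fields")
a_true = np.zeros(n_atoms)
support = [5, 40, 99]
a_true[support] = [1.5, -2.0, 1.0]
x = Phi @ a_true                         # an input generated by three active elements
a_hat = sparse_code(x, Phi, lam=0.05)
print("largest coefficients at:", sorted(np.argsort(np.abs(a_hat))[-3:].tolist()))
```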

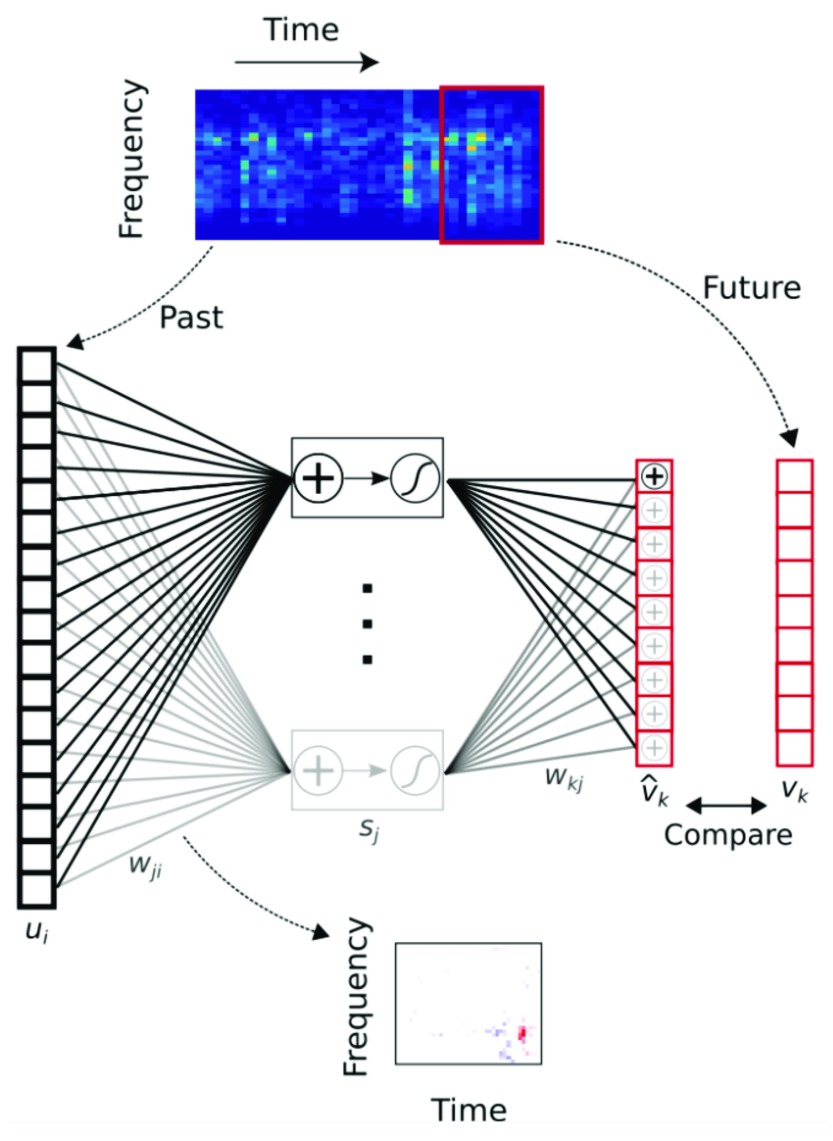

Carlson et al. 40 trained a neural network so that it produced a sparse, generative representation of natural auditory stimuli. They found that the model learned STRFs whose spectral structure resembled that of real neurons in the auditory system, suggesting that the auditory system may be optimized for sparse representation. Carlin and Elhilali 41 showed that optimizing model neural responses to produce a code based on sustained firing rates also revealed feature preferences similar to those of auditory neurons. Recently, Singer et al. 42 observed that the temporal structure of the STRFs in sparse coding models (which generally have envelopes that are symmetrical in time) is very different from that of real neurons in both V1 and A1, where neurons are typically most selective for recent stimulation and have envelopes that decay into the past. These authors trained networks to predict the immediate future of either natural visual or auditory stimuli and found that the networks developed receptive fields that closely matched those of real visual and auditory cortical neurons, including their asymmetric temporal envelopes (Figure 1). This suggests that neurons in these areas may be optimized to predict the immediate future of sensory stimulation. Such a representation may be advantageous for acting efficiently in the world, because neurons inevitably introduce delays in information transmission.

Figure 1. Neuronal selectivity in the auditory cortex is optimized to represent sound features in the recent sensory past that best predict immediate future inputs.

A feedforward artificial neural network was trained to predict the immediate future of natural sounds (represented as cochleagrams describing the spectral content over time) from their recent past. This temporal prediction model developed spectrotemporal receptive fields that closely matched those of real auditory cortical neurons. A similar correspondence was found between the receptive fields produced when the model was trained to predict the next few video frames in clips of natural scenes and the receptive field properties of neurons in primary visual cortex. Model nomenclature: s_j, hidden unit output; u_i, input (the past); v_k, target output (the true future); v̂_k, output (the predicted future); w_ji, input weights (analogous to cortical receptive fields); w_kj, output weights. Reprinted from Singer et al. 42.
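The temporal prediction principle can be illustrated with a deliberately simplified, linear version of the model in Figure 1: ridge regression that predicts the next frame of a "cochleagram" from the previous `n_past` frames, with the regression weights playing the role of input weights (receptive fields). The data here are a toy assumption (independent first-order autoregressive channels), for which the optimal predictor depends only on the most recent frame, so the learned weights are concentrated at the shortest lag and the temporal envelope is asymmetric by construction. Reproducing the receptive fields reported by Singer et al. would require natural sounds and a nonlinear hidden layer.

```python
import numpy as np


def past_future_pairs(spec, n_past):
    """Pair each window of the n_past most recent frames with the frame that follows it.

    Input columns are ordered lag * n_freq + channel, with lag 0 the most recent frame.
    """
    n_freq, n_time = spec.shape
    X = np.empty((n_time - n_past, n_past * n_freq))
    Y = np.empty((n_time - n_past, n_freq))
    for i, t in enumerate(range(n_past - 1, n_time - 1)):
        past = spec[:, t - n_past + 1:t + 1][:, ::-1]   # reverse so column 0 is frame t
        X[i] = past.T.reshape(-1)
        Y[i] = spec[:, t + 1]
    return X, Y


def fit_temporal_prediction(X, Y, alpha=1.0):
    """Linear, ridge-regularized predictor of the next frame; column k holds the weights for output k."""
    A = X.T @ X + alpha * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ Y)


rng = np.random.default_rng(5)
n_freq, n_time, n_past = 8, 20_000, 6
# Toy "cochleagram": each channel is an independent AR(1) process, x[t] = 0.8 x[t-1] + noise.
spec = np.zeros((n_freq, n_time))
for t in range(1, n_time):
    spec[:, t] = 0.8 * spec[:, t - 1] + rng.standard_normal(n_freq)

X, Y = past_future_pairs(spec, n_past)
W = fit_temporal_prediction(X, Y)
rf = W[:, 3].reshape(n_past, n_freq)   # weights onto the unit that predicts channel 3
envelope = np.abs(rf).sum(axis=1)      # total weight magnitude at each lag into the past
print(np.round(envelope, 3))
```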

As discussed above, STRFs do not provide a complete description of the stimulus preferences of auditory neurons. It is therefore important to extend the normative approach to more complex models in order to generate hypotheses about what nonlinear combinations of features we might expect to find in the auditory system. To address this question, Młynarski and McDermott 43 trained a hierarchical, generative neural network to represent natural sounds and found that some units in the second layer of the model developed sensitivity to combinations of elementary sound features, whereas others developed opponency between sound features. By revealing how different stimulus parameters may interact to determine neuronal response properties, these modelling approaches can generate testable hypotheses about the possible roles played by auditory neurons at different levels of the processing hierarchy.

Origin of auditory cortical tuning properties

The receptive field properties of auditory cortical neurons are derived from their ascending thalamic inputs and are shaped by recurrent synaptic interactions between excitatory neurons as well as by local inhibitory inputs. The importance of ascending inputs in determining the functional organization of the auditory pathway is illustrated by the presence of tonotopic maps at each processing level, which have their origin in the decomposition of sounds into their individual frequencies along the length of the cochlea in the inner ear. The presence of tonotopic maps in the cortex can be demonstrated by using a variety of methods, including functional brain imaging in humans 44. However, this is a relatively coarse approach, and the much finer spatial resolution provided by in vivo two-photon calcium imaging suggests that the spatial organization varies across the layers of A1 and that frequency tuning in the main thalamorecipient layer 4 is more homogeneous than in the upper cortical layers 45. Whilst this laminar transformation is consistent with a possible integration of inputs in the superficial layers, which might contribute to the emergence of sensitivity to multiple sound features, it turns out that the thalamic input map to A1 is surprisingly imprecise 46. The significance of this for the generation of cortical response properties remains to be investigated, but heterogeneity in the frequency selectivity of ascending inputs might provide a substrate for the contextual modulation of cortical response properties.

In the last few years, progress has been made in determining how the local circuitry of excitatory and inhibitory neurons contributes to auditory cortical response properties 47–50. For example, both parvalbumin-expressing and somatostatin-expressing inhibitory interneurons in layers 2/3 have been implicated in regulating the frequency selectivity of A1 neurons 48, 50, while manipulating the activity of parvalbumin-expressing neurons, the most common type of cortical interneuron, has been shown to alter the behavioral performance of mice on tasks that rely on their ability to discriminate different sound frequencies 49. Furthermore, local inhibitory interneurons are involved in mediating the way A1 responses change according to the recent history of stimulation 51–54, and it is likely that dynamic interactions between different cell types are responsible for much of the context-dependent modulation that characterizes the way sounds are processed in the auditory cortex. Thus, in addition to being present at subcortical levels, adaptive coding of auditory information can arise de novo from local circuit interactions in the cortex.

Beyond tonotopy

A tonotopic representation is defined by a systematic variation in the frequency selectivity of the neurons from low to high values. A long-standing and controversial question regarding the functional organization of A1 and other brain areas that contain frequency maps is how neuronal sensitivity to other stimulus features is represented across the isofrequency axes. In the visual cortex of carnivores and primates, neuronal preferences for different stimulus features—orientation, spatial frequency and eye of stimulation—are overlaid so that they are all represented at each location within a two-dimensional map of the visual field 55. In a similar vein, functional magnetic resonance imaging (fMRI) studies in humans 56 and macaque monkeys 57 have revealed that core auditory fields, including A1, contain a gradient in sensitivity to the rate of amplitude modulation, which is arranged orthogonally to the tonotopic map. In other words, a temporal map seems to exist within the cortical representation of each sound frequency.

By contrast, analysis of the activity of large samples of neurons within the upper layers of mouse auditory cortex has failed to provide evidence for such topography. Instead, neurons displaying similar frequency tuning bandwidth 58, preferred sound level 58, and sensitivity to changes in sound level 59 or to differences in level between the ears 60 (an important cue for localizing sound sources) form intermingled clusters across the auditory cortex. Within the isofrequency domain, clearer evidence for segregated processing modules with distinct spectral integration properties or preferences for other sound features has been obtained in monkeys 61 and cats 62. It therefore remains possible that the more diffuse spatial arrangement observed in mice is a general property of sensory cortex in this species 63, or more generally of animals with relatively small brains. Although multiple, interleaved processing modules for behaviorally relevant sound features may exist to varying degrees in different species, our understanding of how they map onto the tonotopic organization of A1 or other auditory areas is far from complete.

Vertical clustering of neurons with similar tuning properties also represents a potentially important aspect of the functional organization of the cerebral cortex. Compared with other sensory systems, there has been less focus on the columnar organization of auditory cortex. As previously mentioned, there is evidence for layer-specific differences in the frequency representation in auditory cortex 45 and this has been shown to extend to other response properties 64, 65. At the same time, ensemble activity within putative cortical columns may play a role in representing auditory information 66, 67.

Representing sound features in multiple cortical areas

As with other sensory modalities, the auditory cortex is subdivided into a number of separate areas, which can be distinguished on the basis of their connections, response properties, and (to some extent) the auditory perceptual deficits that result from localized damage. That these areas are organized hierarchically is clearly illustrated by neuroimaging evidence in humans for the involvement of sequential cortical regions in transforming the spectral features of speech into its semantic content 68, 69. Furthermore, by training a neural network model on speech and music tasks, Kell et al. 70 found that the best-performing model architecture processed speech and music in separate pathways, in keeping with fMRI responses in human non-A1 71. Neuroimaging evidence in monkeys also suggests that analysis of auditory motion may involve different pathways from those engaged during the processing of static spatial information 72. In both human and non-human primates, the concept of distinct ventral and dorsal processing streams is widely accepted. These were initially assigned to “what” and “where” functions, respectively, but their precise functions, and the extent to which they interact, continue to be debated 73–76.

Evidence for a division of labor among the multiple areas that comprise the auditory cortex extends to other species, as illustrated, for example, by the finding that anatomically segregated regions of mouse auditory cortex can be distinguished by differences in the frequency selectivity of the neurons and in their sensitivity to frequency-modulated sweeps 77. Nevertheless, the question of whether a particular aspect of auditory perception is localized to one or more of those areas is, in some ways, ill posed. Given the extensive subcortical processing that takes place and the growing evidence for multidimensional receptive field properties in early cortical areas 19, 20, 78, it is likely that neurons convey information about multiple sound attributes. Indeed, recent neuroimaging studies have demonstrated widespread areas of activation in response to variations in spatial 79 or non-spatial 80 parameters, adding to earlier electrophysiological recordings which indicated that sensitivity to pitch, timbre, and location is distributed across several cortical areas 81.

Decoding auditory cortical activity

Even where different sound features are apparently encoded by the same cortical regions, differences in the evoked activity patterns may enable them to be distinguished. For example, auditory cortical neurons can unambiguously represent more than one stimulus parameter by independently modulating their spike rates within distinct time windows 78. Furthermore, a recent fMRI study demonstrated that although variations in pitch or timbre—two key aspects of sound identity—activate largely overlapping cortical areas, they can be distinguished by using multivoxel pattern analysis 80. Studies like these hint at how the brain might solve the problem of perceptual invariance—recognizing, for example, the melody of a familiar tune even when it is played on different musical instruments irrespective of where they are located.
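The logic of multivoxel pattern analysis can be sketched with simulated data. In this hypothetical example, two conditions (labelled "pitch" and "timbre" only for illustration) evoke the same mean activation across 100 voxels but different fine-grained spatial patterns; a correlation-based nearest-centroid classifier, one simple choice among many, is trained on half of the trials and tested on the other half. The number of voxels and trials, the pattern strength, and the noise level are all assumptions.

```python
import numpy as np


def correlation_classify(trials, centroids):
    """Assign each trial pattern to the centroid with which it is most correlated."""
    labels = []
    for trial in trials:
        r = [np.corrcoef(trial, c)[0, 1] for c in centroids]
        labels.append(int(np.argmax(r)))
    return np.array(labels)


rng = np.random.default_rng(6)
n_vox, n_trials = 100, 20
# Two hypothetical conditions with the same mean activation but different spatial patterns.
patterns = []
for _ in range(2):
    z = rng.standard_normal(n_vox)
    patterns.append(1.0 + 0.5 * (z - z.mean()))
data = [p + rng.standard_normal((n_trials, n_vox)) for p in patterns]   # noisy single trials

# Split-half: estimate centroids from the first half, classify the second half.
half = n_trials // 2
centroids = [d[:half].mean(axis=0) for d in data]
test = np.vstack([d[half:] for d in data])
truth = np.repeat([0, 1], n_trials - half)
accuracy = np.mean(correlation_classify(test, centroids) == truth)
mean_activation = [d.mean() for d in data]
print(f"pattern accuracy: {accuracy:.2f}; "
      f"mean activation: {mean_activation[0]:.3f} vs {mean_activation[1]:.3f}")
```

The mean activation is nearly identical for the two conditions, so a univariate comparison would not separate them, whereas the spatial pattern does.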

This type of approach is part of a growing trend to investigate the decoding or reconstruction of sound features from the measured responses of populations of neurons (or other multidimensional measures of brain activity, such as fMRI voxel responses 82 or electroencephalography signals). Indeed, Yildiz et al. 83 found that a normative decoding model of auditory cortex described neuronal response dynamics better than some classic encoding models that define the relationship between the stimulus and the neural response. It is therefore possible that the responses of populations of cortical neurons can be more accurately understood in terms of decoding rather than encoding.

The application of decoding techniques has provided valuable insights into how neural representations change under different sensory conditions, such as in the presence of background noise 30, 38, 84, 85, or when specific stimuli are selectively attended 86–89. Decoding techniques can also help to identify the size of the neural populations within the cortex from which auditory information needs to be read out in order to account for behavior 90–92 and the way in which stimulus features are represented there. For example, evidence from electrophysiological 93, 94, two-photon calcium imaging 60 and fMRI 95, 96 studies is all consistent with an opponent-channel model in which the location of sounds in the horizontal plane, at least based on interaural level differences, is decoded from the relative activity of contralaterally and ipsilaterally tuned neurons within each hemisphere. Furthermore, based on the accuracy with which spectrotemporal modulations in a range of natural sounds could be reconstructed from high-resolution fMRI signals, Santoro et al. 97 concluded that even early stages of the human auditory cortex may be optimized for processing speech and voices.
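The opponent-channel readout can be sketched as follows. The sketch assumes two hypothetical populations with mirror-image sigmoidal tuning to interaural level difference (ILD), one preferring contralateral and one preferring ipsilateral sounds, and decodes ILD from their normalized rate difference by inverting it on a lookup grid. The slope, maximum rate, and grid are illustrative choices, not values from the studies cited.

```python
import numpy as np


def channel_rates(ild_db, slope=0.15, max_rate=50.0):
    """Rates of hypothetical contralateral- and ipsilateral-preferring channels."""
    contra = max_rate / (1.0 + np.exp(-slope * ild_db))   # rises as the sound moves contralaterally
    ipsi = max_rate / (1.0 + np.exp(slope * ild_db))      # mirror-image tuning
    return contra, ipsi


def decode_ild(contra, ipsi, grid=np.linspace(-30.0, 30.0, 601)):
    """Read out ILD from the normalized rate difference by inverting it on a lookup grid."""
    c_grid, i_grid = channel_rates(grid)
    index_grid = (c_grid - i_grid) / (c_grid + i_grid)   # increases monotonically with ILD
    index = (contra - ipsi) / (contra + ipsi)
    return np.interp(index, index_grid, grid)


true_ild = np.array([-20.0, -5.0, 0.0, 10.0, 25.0])
contra, ipsi = channel_rates(true_ild)
decoded = decode_ild(contra, ipsi)
decoded_quieter = decode_ild(0.5 * contra, 0.5 * ipsi)   # both channels scaled by the same factor
print(np.round(decoded, 2), np.round(decoded_quieter, 2))
```

Because the readout depends on the normalized difference between the channels, scaling both channels by the same factor (for example, through a change in overall sound level that affects both equally) leaves the decoded ILD unchanged.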

Auditory scene analysis

Separating a sound source of interest from a dynamic mixture of potentially competing sounds is a fundamental function of the auditory system. In order to perceptually isolate a sound source as a distinct auditory object 98, its constituent acoustic features like pitch, timbre, intensity, and location need to be individually analyzed and then grouped together to form a coherent perceptual representation. Although neural computations underlying scene analysis have been demonstrated at different subcortical levels 99–101, the cortex is the hub where stimulus-driven feature segregation and top-down attentional selection mechanisms converge 102. Indeed, several recent studies suggest a critical role for the auditory cortex in forming stable perceptual representations based on grouping and segregation of spectral, spatial, and temporal regularities in the acoustic environment 103–115.

Whilst cortical spike-based accounts of auditory segregation of narrowband signals rely on mechanisms of tonotopicity, adaptation, and forward suppression 116, recent work has highlighted the importance of temporal coherence 113, 117–119 and oscillatory sampling 120–122 in driving dynamic segregation of attended broadband stimuli. Results from human electrocorticography experiments suggest that low-level auditory cortex encodes spectrotemporal features of selectively attended speech 86, 87. Speech representations derived from high-gamma (75- to 150-Hz) local field potential activity in non-A1 of subjects listening to two speakers talking simultaneously are dominated by the spectrotemporal features of whichever speaker attention is drawn to 86. Moreover, the attention-modulated responses were found to predict the accuracy with which target words were correctly detected in the two-speaker speech mixture, revealing a neural correlate of “cocktail party” listening 86. Attentional modulation of the representation of multiple speech sources increases along the cortical hierarchy 114, and a similar finding has been reported for the emergence of task-dependent spectrotemporal tuning in neurons in ferret cortex 123.
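The temporal coherence principle can be illustrated by grouping frequency channels whose envelopes rise and fall together. In this toy sketch, two independent, slowly varying sources each drive four interleaved channels, and channels are grouped greedily by thresholding the correlation between their envelopes. The envelope model, noise level, and `threshold` are assumptions, and the sketch is not one of the published temporal coherence models.

```python
import numpy as np


def smooth_noise(rng, n_time, width=25):
    """Slowly varying random envelope: white noise smoothed by a boxcar of `width` bins."""
    noise = rng.standard_normal(n_time + width - 1)
    return 1.0 + np.convolve(noise, np.ones(width) / width, mode="valid")


def group_by_coherence(envelopes, threshold=0.5):
    """Greedy grouping: each unassigned channel seeds a group of unassigned channels coherent with it."""
    corr = np.corrcoef(envelopes)
    labels = np.full(envelopes.shape[0], -1)
    next_label = 0
    for ch in range(envelopes.shape[0]):
        if labels[ch] >= 0:
            continue
        members = (corr[ch] > threshold) & (labels < 0)
        labels[members] = next_label
        next_label += 1
    return labels


rng = np.random.default_rng(7)
n_time, n_channels = 4000, 8
source_a, source_b = smooth_noise(rng, n_time), smooth_noise(rng, n_time)
envelopes = np.empty((n_channels, n_time))
for ch in range(n_channels):
    source = source_a if ch % 2 == 0 else source_b   # interleave the channels driven by each source
    gain = rng.uniform(0.5, 1.5)
    envelopes[ch] = gain * source + 0.05 * rng.standard_normal(n_time)
labels = group_by_coherence(envelopes)
print(labels)
```

Because the two sources drive interleaved channels, the grouping is based on coherence over time rather than on proximity in frequency.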

Prediction

The predictability of sounds plays an important role in processing natural sound sequences like speech and music 124. A prominent generative model of perception is based on the concept of predictive coding; that is, the brain learns to minimize the prediction error between internal predictions of sensory input and the external sensory input 125, 126. This model has been successfully applied to explain the encoding of pitch in a hierarchical fashion in distributed areas of the auditory cortex 127. Furthermore, distinct oscillatory signatures for the key variables of predictive coding models, including surprise, prediction error, prediction change, and prediction precision, have all been demonstrated in the auditory cortex 128. Most of the work in this area at the level of individual neurons has focused on stimulus-specific adaptation, a phenomenon in which particular sounds elicit stronger responses when they are rarely encountered than when they are common 22. Recent work has indicated that A1 neurons exhibit deviance or surprise sensitivity 129 and encode prediction error 124 and that prediction error signals increase along the auditory pathway 130. Furthermore, the auditory cortex encodes predictions for not only “what” kind of sensory event is to occur but also “when” it may occur 131.
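Stimulus-specific adaptation is commonly measured with an oddball design, in which the same sound is presented as a rare deviant in one block and as a common standard in another, and the difference is summarized by an index such as (deviant - standard) / (deviant + standard). The sketch below simulates this with a hypothetical model in which each frequency channel has its own depressing resource that recovers between stimuli; the depletion and recovery rates are assumptions. Channel-specific adaptation alone is enough to produce a positive index here, so the sketch does not distinguish adaptation from the prediction error accounts discussed above.

```python
import numpy as np


def adapting_responses(sequence, n_channels, depletion=0.3, recovery=0.1):
    """Response to each stimulus, assuming each frequency channel has its own depressing resource."""
    resource = np.ones(n_channels)
    out = np.empty(len(sequence))
    for i, ch in enumerate(sequence):
        resource += recovery * (1.0 - resource)   # partial recovery since the previous stimulus
        out[i] = resource[ch]                      # response scales with the available resource
        resource[ch] *= 1.0 - depletion            # only the stimulated channel adapts
    return out


def oddball_sequence(n, p_deviant, standard, deviant, rng):
    """Random sequence in which `deviant` occurs with probability p_deviant, else `standard`."""
    is_deviant = rng.random(n) < p_deviant
    return np.where(is_deviant, deviant, standard)


rng = np.random.default_rng(8)
n, p_dev = 2000, 0.1
f1, f2 = 0, 1
# Block A: f1 is the common standard and f2 the rare deviant; block B swaps their roles.
seq_a = oddball_sequence(n, p_dev, f1, f2, rng)
seq_b = oddball_sequence(n, p_dev, f2, f1, rng)
resp_a, resp_b = adapting_responses(seq_a, 2), adapting_responses(seq_b, 2)
dev_resp = resp_a[seq_a == f2].mean()   # response to f2 when it is rare
std_resp = resp_b[seq_b == f2].mean()   # response to the same sound when it is common
ssa_index = (dev_resp - std_resp) / (dev_resp + std_resp)
print(f"deviant {dev_resp:.2f}, standard {std_resp:.2f}, SSA index {ssa_index:.2f}")
```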

Behavioral engagement and auditory decision-making

Further evidence for dynamic processing in the auditory cortex has been obtained from studies demonstrating that engagement in auditory tasks modulates both spontaneous 132, 133 and sound-evoked 92, 133–135 activity, as well as correlations between the activity of different neurons 92, 136, in ways that enhance behavioral performance. Furthermore, changes in task reward structure can alter A1 responses to otherwise identical sounds 28, and other studies suggest that the auditory cortex is part of the network of brain regions involved in maintaining perceptual representations during memory-based tasks 137, 138. Although the source of these modulatory signals is not yet fully understood, accumulating evidence suggests the involvement of top-down inputs from areas such as the parietal and frontal cortices 88, 139–141 as well as neuromodulatory systems. In addition, inputs from motor cortex have been shown to suppress both spontaneous and evoked activity in the auditory cortex during movement 142, 143.

The notion of the auditory cortex as a high-level cognitive processor is also supported by investigations into perceptual decision-making. Traditionally, decision making is a function that has been attributed to parietal and frontal cortex in association with subcortical structures like the basal ganglia. However, recent work suggests that the auditory cortex not only encodes physical attributes of task-relevant stimuli but also represents behavioral choice and decision-related signals 92, 144–146. Tsunada et al. 147 provided direct evidence for a role for auditory cortex in decision making: they found that microstimulation of the anterolateral but not the middle lateral belt region of the macaque auditory cortex biased behavioral responses toward the choice associated with the preferred sound frequency of the neurons at the site of stimulation.

The auditory cortex and learning

An ability to learn and remember features in complex acoustic scenes is crucial for adaptive behavior, and the auditory cortex, through the plasticity of its sound processing, is an integral part of the circuitry responsible for these essential functions. Robust learning often occurs without conscious awareness and persists for a long time. Agus et al. 148 demonstrated that human participants can rapidly learn to detect a particular token of repeated white noise and remember the same pattern for weeks 149 in a completely unsupervised fashion. Functional brain imaging experiments have implicated the auditory cortex as well as the hippocampus in encoding memory representations for such complex scenes. The underlying mechanisms of this rapid formation of robust acoustic memories are not clear, but recent psychoacoustical experiments suggest a computation based on encoding summary statistics 150.

Recognizing structure in complex sequences through passive exposure is also essential for acquiring the rules that govern speech and language 151. Electrophysiological recordings from songbird forebrain areas that correspond to the mammalian auditory cortex have revealed evidence for statistical learning, expressed as lower spike rates for familiar than for novel sequences 152. After passive exposure to sequences of nonsense speech sounds that followed an artificial grammar, violations of this grammar activated homologous cortical areas 153 and modulated hierarchically nested coupling between low-frequency phase and high-gamma amplitude in the auditory cortex in a very similar way in humans and monkeys 154. Interestingly, this form of oscillatory coupling is thought to underlie speech processing in the human auditory cortex 155, suggesting that it may represent an evolutionarily conserved strategy for analyzing sound sequences.
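Coupling between low-frequency phase and high-gamma amplitude can be quantified in several ways. The Python sketch below is one illustration, not the analysis used in the studies cited here: it computes the modulation index introduced by Tort and colleagues. The signal is band-pass filtered into a low-frequency band and a high-gamma band, the Hilbert transform yields the low-frequency phase and the high-gamma amplitude envelope, and the index measures how far the phase-binned mean amplitude departs from a uniform distribution (0 means no coupling). The band limits (2–6 Hz and 60–120 Hz), the filter order, the 18 phase bins, and the synthetic test signals are illustrative assumptions.

```python
import numpy as np
from scipy.signal import butter, hilbert, sosfiltfilt


def bandpass(x, low, high, fs, order=4):
    """Zero-phase Butterworth band-pass filter (second-order sections for numerical stability)."""
    sos = butter(order, [low, high], btype="band", fs=fs, output="sos")
    return sosfiltfilt(sos, x)


def modulation_index(x, fs, phase_band=(2.0, 6.0), amp_band=(60.0, 120.0), n_bins=18):
    """Tort-style phase-amplitude modulation index, in [0, 1].

    Band limits and bin count are illustrative assumptions, not values
    taken from the studies discussed in the text.
    """
    # Instantaneous phase of the slow band; amplitude envelope of the high-gamma band.
    phase = np.angle(hilbert(bandpass(x, *phase_band, fs)))
    amp = np.abs(hilbert(bandpass(x, *amp_band, fs)))

    # Mean high-gamma amplitude within each slow-phase bin.
    edges = np.linspace(-np.pi, np.pi, n_bins + 1)
    bins = np.clip(np.digitize(phase, edges) - 1, 0, n_bins - 1)
    mean_amp = np.array([amp[bins == k].mean() for k in range(n_bins)])

    # Treat the binned amplitudes as a distribution; KL divergence from uniform, normalised by log(n_bins).
    p = mean_amp / mean_amp.sum()
    kl = np.sum(p * np.log(p * n_bins))
    return kl / np.log(n_bins)


if __name__ == "__main__":
    fs = 1000.0
    t = np.arange(0.0, 20.0, 1.0 / fs)
    rng = np.random.default_rng(0)
    slow = np.sin(2 * np.pi * 4 * t)   # hypothetical 4 Hz rhythm
    fast = np.sin(2 * np.pi * 80 * t)  # hypothetical 80 Hz high-gamma carrier
    noise = 0.1 * rng.standard_normal(t.size)

    coupled = slow + 0.5 * (1 + slow) * fast + noise  # gamma amplitude follows slow phase
    uncoupled = slow + 0.5 * fast + noise              # constant gamma amplitude

    print(f"coupled MI:   {modulation_index(coupled, fs):.4f}")
    print(f"uncoupled MI: {modulation_index(uncoupled, fs):.4f}")
```

A coupled signal should give an index well above that of the uncoupled control. In practice, statistical significance is usually assessed against surrogate data (for example, time-shifted amplitude series), which this sketch omits.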

Not all learning, however, occurs without supervision; reinforcement in the form of reward or punishment is also key to successful learning. As in other sensory modalities, training can improve auditory detection and discrimination abilities, including linguistic and musical abilities 156. A number of studies have reported that auditory perceptual learning is associated with changes in the stimulus-encoding response properties of A1 neurons 157. These changes often entail an expansion in the representation of the trained stimuli, and the extent of this representational plasticity is thought to encode both the behavioral importance of these stimuli and the strength of the associative memory 158. Nevertheless, there have been few attempts to show that A1 plasticity is required for auditory perceptual learning. Indeed, training-induced changes in the functional organization of A1 have been found to disappear over time, even though improvements in behavioral performance are retained 159, 160. However, a recent study in which gerbils were trained on an amplitude-modulation detection task found both that the magnitude and time course of cortical and behavioral plasticity were closely correlated and that inactivating the auditory cortex reduced learning without affecting detection thresholds 161. Cortical inactivation has also been shown to impair training-dependent adaptation to the altered spatial cues produced by plugging one ear 162. This is consistent with physiological evidence that the encoding of different spatial cues in the auditory cortex is experience-dependent, changing in ways that can explain the recovery of localization accuracy following exposure to abnormal inputs 94, 163, 164.

The alterations in cortical response properties that accompany perceptual learning are likely to be driven by top-down inputs that determine which stimulus features are attended 165. Several neuromodulatory systems, including the cholinergic basal forebrain 166, 167 and the noradrenergic locus coeruleus 168, 169, play an important role in auditory processing and plasticity and appear to provide the auditory cortex with reinforcement signals and information about behavioral context. It has recently been shown that cholinergic inputs to the auditory cortex primarily target inhibitory interneurons 170, 171, which suggests a possible mechanism by which the balance of cortical excitation and inhibition is transiently altered during learning 172.

Involvement in learning is probably one of the most important functions of the auditory cortex, and there is growing evidence that its outputs to other brain areas play a specific role in this process. For instance, selective strengthening of auditory corticostriatal synapses has been observed over the course of learning an auditory discrimination task 173, while the integrity of A1 neurons that project to the IC is required for adaptation to hearing loss in one ear 174. Indeed, it is likely that descending corticofugal pathways play a more general role in auditory learning 175, 176 as well as in other aspects of auditory perception and behavior 177–180.

Conclusions

The studies outlined in this article show how neurons in the auditory cortex encode sounds in ways that are directly relevant to behavior. Auditory cortical processing depends not only on the sounds themselves but also on the individual’s internal state, such as the level of arousal, and the sensory and behavioral context in which sounds are detected. Therefore, to understand how cortical neurons process specific sound features, we have to consider the complexity of the auditory scene and the presence of other sensory cues as well as factors such as motor activity, experience, and attention. Indeed, the auditory cortex seems to play a particularly important role in learning and in constructing memory-dependent perceptual representations of the auditory world.

We are now beginning to understand the computations performed by auditory cortical neurons as well as the role of long-range inputs and local cortical circuits, including the participation of specific cell types, in those computations. Research over the last few years has also provided insights into the way information flows within and between different auditory cortical areas as well as the complex interplay between the auditory cortex and other brain areas. In particular, through the extensive network of descending projections from the auditory cortex, cortical activity can influence almost every subcortical processing stage in the auditory pathway, but we are only beginning to understand how those pathways contribute to auditory function. Further progress in this field will require the application of coordinated computational and experimental approaches, including the increased use of methods for measuring, manipulating, and decoding activity patterns from populations of neurons.

Editorial Note on the Review Process

F1000 Faculty Reviews are commissioned from members of the prestigious F1000 Faculty and are edited as a service to readers. In order to make these reviews as comprehensive and accessible as possible, the referees provide input before publication and only the final, revised version is published. The referees who approved the final version are listed with their names and affiliations but without their reports on earlier versions (any comments will already have been addressed in the published version).

The referees who approved this article are:

Shihab Shamma, Department of Electrical & Computer Engineering, University of Maryland, College Park, Maryland, USA; The Institute for Systems Research, University of Maryland, College Park, Maryland, USA

Christoph Schreiner, UCSF Center for Integrative Neuroscience, University of California San Francisco, San Francisco, USA

Funding Statement

Our research is supported by Wellcome grants WT108369/Z/2015/Z (AK and BW) and WT106084/Z/14/Z (ST).

The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

[version 1; referees: 2 approved]

References

- 1. King AJ, Nelken I: Unraveling the principles of auditory cortical processing: Can we learn from the visual system? Nat Neurosci. 2009;12(6):698–701. 10.1038/nn.2308

- 2. Kuchibhotla K, Bathellier B: Neural encoding of sensory and behavioral complexity in the auditory cortex. Curr Opin Neurobiol. 2018;52:65–71. 10.1016/j.conb.2018.04.002

- 3. de Boer R, Kuyper P: Triggered correlation. IEEE Trans Biomed Eng. 1968;15(3):169–79. 10.1109/TBME.1968.4502561

- 4. Aertsen AM, Johannesma PI: The spectro-temporal receptive field. A functional characteristic of auditory neurons. Biol Cybern. 1981;42(2):133–43. 10.1007/BF00336731

- 5. Schreiner CE, Calhoun BM: Spectral envelope coding in cat primary auditory cortex: Properties of ripple transfer functions. Aud Neurosci. 1994;1:39–61.

- 6. Shamma SA, Versnel H, Kowalski N: Ripple analysis in the ferret primary auditory cortex. I. Response characteristics of single units to sinusoidally rippled spectra. Aud Neurosci. 1995;1:233–254.

- 7. Shamma SA: Auditory cortical representation of complex acoustic spectra as inferred from the ripple analysis method. Netw Comput Neural Syst. 2009;7(3):439–76. 10.1088/0954-898X_7_3_001

- 8. Shamma SA, Versnel H: Ripple analysis in the ferret primary auditory cortex. II. Prediction of unit responses to arbitrary spectral profiles. Aud Neurosci. 1995;1:255–270.

- 9. Theunissen FE, Sen K, Doupe AJ: Spectral-temporal receptive fields of nonlinear auditory neurons obtained using natural sounds. J Neurosci. 2000;20(6):2315–31. 10.1523/JNEUROSCI.20-06-02315.2000

- 10. Woolley SM, Fremouw TE, Hsu A, et al.: Tuning for spectro-temporal modulations as a mechanism for auditory discrimination of natural sounds. Nat Neurosci. 2005;8(10):1371–9. 10.1038/nn1536

- 11. Nagel KI, Doupe AJ: Organizing principles of spectro-temporal encoding in the avian primary auditory area field L. Neuron. 2008;58(6):938–55. 10.1016/j.neuron.2008.04.028

- 12. Sharpee T, Rust NC, Bialek W: Analyzing neural responses to natural signals: maximally informative dimensions. Neural Comput. 2004;16(2):223–50. 10.1162/089976604322742010

- 13. Atencio CA, Sharpee TO: Multidimensional receptive field processing by cat primary auditory cortical neurons. Neuroscience. 2017;359:130–41. 10.1016/j.neuroscience.2017.07.003

- 14. Atencio CA, Sharpee TO, Schreiner CE: Receptive field dimensionality increases from the auditory midbrain to cortex. J Neurophysiol. 2012;107(10):2594–603. 10.1152/jn.01025.2011

- 15. Kozlov AS, Gentner TQ: Central auditory neurons display flexible feature recombination functions. J Neurophysiol. 2014;111(6):1183–9. 10.1152/jn.00637.2013

- 16. Kozlov AS, Gentner TQ: Central auditory neurons have composite receptive fields. Proc Natl Acad Sci U S A. 2016;113(5):1441–6. 10.1073/pnas.1506903113

- 17. Harper NS, Schoppe O, Willmore BD, et al.: Network Receptive Field Modeling Reveals Extensive Integration and Multi-feature Selectivity in Auditory Cortical Neurons. PLoS Comput Biol. 2016;12(11):e1005113. 10.1371/journal.pcbi.1005113

- 18. David SV, Mesgarani N, Shamma SA: Estimating sparse spectro-temporal receptive fields with natural stimuli. Network. 2007;18(3):191–212. 10.1080/09548980701609235

- 19. Chambers AR, Hancock KE, Sen K, et al.: Online stimulus optimization rapidly reveals multidimensional selectivity in auditory cortical neurons. J Neurosci. 2014;34(27):8963–75. 10.1523/JNEUROSCI.0260-14.2014

- 20. Sloas DC, Zhuo R, Xue H, et al.: Interactions across Multiple Stimulus Dimensions in Primary Auditory Cortex. eNeuro. 2016;3(4): pii: ENEURO.0124-16.2016. 10.1523/ENEURO.0124-16.2016

- 21. deCharms RC, Blake DT, Merzenich MM: Optimizing sound features for cortical neurons. Science. 1998;280(5368):1439–43. 10.1126/science.280.5368.1439

- 22. Ulanovsky N, Las L, Nelken I: Processing of low-probability sounds by cortical neurons. Nat Neurosci. 2003;6(4):391–8. 10.1038/nn1032

- 23. Schneider DM, Woolley SM: Extra-classical tuning predicts stimulus-dependent receptive fields in auditory neurons. J Neurosci. 2011;31(33):11867–78. 10.1523/JNEUROSCI.5790-10.2011

- 24. Rabinowitz NC, Willmore BD, Schnupp JW, et al.: Contrast gain control in auditory cortex. Neuron. 2011;70(6):1178–91. 10.1016/j.neuron.2011.04.030

- 25. David SV, Shamma SA: Integration over multiple timescales in primary auditory cortex. J Neurosci. 2013;33(49):19154–66. 10.1523/JNEUROSCI.2270-13.2013

- 26. Williamson RS, Ahrens MB, Linden JF, et al.: Input-Specific Gain Modulation by Local Sensory Context Shapes Cortical and Thalamic Responses to Complex Sounds. Neuron. 2016;91(2):467–81. 10.1016/j.neuron.2016.05.041

- 27. Fritz J, Shamma S, Elhilali M, et al.: Rapid task-related plasticity of spectrotemporal receptive fields in primary auditory cortex. Nat Neurosci. 2003;6(11):1216–23. 10.1038/nn1141

- 28. David SV, Fritz JB, Shamma SA: Task reward structure shapes rapid receptive field plasticity in auditory cortex. Proc Natl Acad Sci U S A. 2012;109(6):2144–9. 10.1073/pnas.1117717109

- 29. David SV: Incorporating behavioral and sensory context into spectro-temporal models of auditory encoding. Hear Res. 2018;360:107–23. 10.1016/j.heares.2017.12.021

- 30. Rabinowitz NC, Willmore BD, King AJ, et al.: Constructing noise-invariant representations of sound in the auditory pathway. PLoS Biol. 2013;11(11):e1001710. 10.1371/journal.pbio.1001710

- 31. Ahrens MB, Linden JF, Sahani M: Nonlinearities and contextual influences in auditory cortical responses modeled with multilinear spectrotemporal methods. J Neurosci. 2008;28(8):1929–42. 10.1523/JNEUROSCI.3377-07.2008

- 32. David SV, Mesgarani N, Fritz JB, et al.: Rapid synaptic depression explains nonlinear modulation of spectro-temporal tuning in primary auditory cortex by natural stimuli. J Neurosci. 2009;29(11):3374–86. 10.1523/JNEUROSCI.5249-08.2009

- 33. Willmore BD, Schoppe O, King AJ, et al.: Incorporating Midbrain Adaptation to Mean Sound Level Improves Models of Auditory Cortical Processing. J Neurosci. 2016;36(2):280–9. 10.1523/JNEUROSCI.2441-15.2016

- 34. Wen B, Wang GI, Dean I, et al.: Dynamic range adaptation to sound level statistics in the auditory nerve. J Neurosci. 2009;29(44):13797–808. 10.1523/JNEUROSCI.5610-08.2009

- 35. Dean I, Harper NS, McAlpine D: Neural population coding of sound level adapts to stimulus statistics. Nat Neurosci. 2005;8(12):1684–9. 10.1038/nn1541

- 36. Rabinowitz NC, Willmore BD, Schnupp JW, et al.: Spectrotemporal contrast kernels for neurons in primary auditory cortex. J Neurosci. 2012;32(33):11271–84. 10.1523/JNEUROSCI.1715-12.2012

- 37. Moore RC, Lee T, Theunissen FE: Noise-invariant neurons in the avian auditory cortex: Hearing the song in noise. PLoS Comput Biol. 2013;9(3):e1002942. 10.1371/journal.pcbi.1002942

- 38. Mesgarani N, David SV, Fritz JB, et al.: Mechanisms of noise robust representation of speech in primary auditory cortex. Proc Natl Acad Sci U S A. 2014;111(18):6792–7. 10.1073/pnas.1318017111

- 39. Olshausen BA, Field DJ: Emergence of simple-cell receptive field properties by learning a sparse code for natural images. Nature. 1996;381(6583):607–9. 10.1038/381607a0

- 40. Carlson NL, Ming VL, DeWeese MR: Sparse codes for speech predict spectrotemporal receptive fields in the inferior colliculus. PLoS Comput Biol. 2012;8(7):e1002594. 10.1371/journal.pcbi.1002594

- 41. Carlin MA, Elhilali M: Sustained firing of model central auditory neurons yields a discriminative spectro-temporal representation for natural sounds. PLoS Comput Biol. 2013;9(3):e1002982. 10.1371/journal.pcbi.1002982

- 42. Singer Y, Teramoto Y, Willmore BD, et al.: Sensory cortex is optimized for prediction of future input. eLife. 2018;7: pii: e31557. 10.7554/eLife.31557

- 43. Młynarski W, McDermott JH: Learning Midlevel Auditory Codes from Natural Sound Statistics. Neural Comput. 2018;30(3):631–69. 10.1162/neco_a_01048

- 44. Saenz M, Langers DR: Tonotopic mapping of human auditory cortex. Hear Res. 2014;307:42–52. 10.1016/j.heares.2013.07.016

- 45. Winkowski DE, Kanold PO: Laminar transformation of frequency organization in auditory cortex. J Neurosci. 2013;33(4):1498–508. 10.1523/JNEUROSCI.3101-12.2013

- 46. Vasquez-Lopez SA, Weissenberger Y, Lohse M, et al.: Thalamic input to auditory cortex is locally heterogeneous but globally tonotopic. eLife. 2017;6: pii: e25141. 10.7554/eLife.25141

- 47. Oviedo HV, Bureau I, Svoboda K, et al.: The functional asymmetry of auditory cortex is reflected in the organization of local cortical circuits. Nat Neurosci. 2010;13(11):1413–20. 10.1038/nn.2659

- 48. Li LY, Ji XY, Liang F, et al.: A feedforward inhibitory circuit mediates lateral refinement of sensory representation in upper layer 2/3 of mouse primary auditory cortex. J Neurosci. 2014;34(41):13670–83. 10.1523/JNEUROSCI.1516-14.2014

- 49. Aizenberg M, Mwilambwe-Tshilobo L, Briguglio JJ, et al.: Bidirectional Regulation of Innate and Learned Behaviors That Rely on Frequency Discrimination by Cortical Inhibitory Neurons. PLoS Biol. 2015;13(12):e1002308. 10.1371/journal.pbio.1002308

- 50. Kato HK, Asinof SK, Isaacson JS: Network-Level Control of Frequency Tuning in Auditory Cortex. Neuron. 2017;95(2):412–423.e4. 10.1016/j.neuron.2017.06.019

- 51. Chen IW, Helmchen F, Lütcke H: Specific Early and Late Oddball-Evoked Responses in Excitatory and Inhibitory Neurons of Mouse Auditory Cortex. J Neurosci. 2015;35(36):12560–73. 10.1523/JNEUROSCI.2240-15.2015

- 52. Natan RG, Briguglio JJ, Mwilambwe-Tshilobo L, et al.: Complementary control of sensory adaptation by two types of cortical interneurons. eLife. 2015;4: pii: e09868. 10.7554/eLife.09868

- 53. Natan RG, Rao W, Geffen MN: Cortical Interneurons Differentially Shape Frequency Tuning following Adaptation. Cell Rep. 2017;21(4):878–90. 10.1016/j.celrep.2017.10.012

- 54. Phillips EAK, Schreiner CE, Hasenstaub AR: Cortical Interneurons Differentially Regulate the Effects of Acoustic Context. Cell Rep. 2017;20(4):771–8. 10.1016/j.celrep.2017.07.001

- 55. Hübener M, Shoham D, Grinvald A, et al.: Spatial relationships among three columnar systems in cat area 17. J Neurosci. 1997;17(23):9270–84. 10.1523/JNEUROSCI.17-23-09270.1997

- 56. Barton B, Venezia JH, Saberi K, et al.: Orthogonal acoustic dimensions define auditory field maps in human cortex. Proc Natl Acad Sci U S A. 2012;109(50):20738–43. 10.1073/pnas.1213381109

- 57. Baumann S, Joly O, Rees A, et al.: The topography of frequency and time representation in primate auditory cortices. eLife. 2015;4:e03256. 10.7554/eLife.03256

- 58. Bandyopadhyay S, Shamma SA, Kanold PO: Dichotomy of functional organization in the mouse auditory cortex. Nat Neurosci. 2010;13(3):361–8. 10.1038/nn.2490

- 59. Deneux T, Kempf A, Daret A, et al.: Temporal asymmetries in auditory coding and perception reflect multi-layered nonlinearities. Nat Commun. 2016;7: 12682. 10.1038/ncomms12682

- 60. Panniello M, King AJ, Dahmen JC, et al.: Local and Global Spatial Organization of Interaural Level Difference and Frequency Preferences in Auditory Cortex. Cereb Cortex. 2018;28(1):350–69. 10.1093/cercor/bhx295

- 61. Recanzone GH, Schreiner CE, Sutter ML, et al.: Functional organization of spectral receptive fields in the primary auditory cortex of the owl monkey. J Comp Neurol. 1999;415(4):460–81. 10.1002/(SICI)1096-9861(19991227)415:4<460::AID-CNE4>3.0.CO;2-F

- 62. Atencio CA, Schreiner CE: Spectrotemporal processing in spectral tuning modules of cat primary auditory cortex. PLoS One. 2012;7(2):e31537. 10.1371/journal.pone.0031537

- 63. Ringach DL, Mineault PJ, Tring E, et al.: Spatial clustering of tuning in mouse primary visual cortex. Nat Commun. 2016;7: 12270. 10.1038/ncomms12270

- 64. Atencio CA, Schreiner CE: Laminar diversity of dynamic sound processing in cat primary auditory cortex. J Neurophysiol. 2010;103(1):192–205. 10.1152/jn.00624.2009

- 65. Morrill RJ, Hasenstaub AR: Visual Information Present in Infragranular Layers of Mouse Auditory Cortex. J Neurosci. 2018;38(11):2854–62. 10.1523/JNEUROSCI.3102-17.2018

- 66. Schaefer MK, Kössl M, Hechavarría JC: Laminar differences in response to simple and spectro-temporally complex sounds in the primary auditory cortex of ketamine-anesthetized gerbils. PLoS One. 2017;12(8):e0182514. 10.1371/journal.pone.0182514

- 67. See JZ, Atencio CA, Sohal VS, et al.: Coordinated neuronal ensembles in primary auditory cortical columns. eLife. 2018;7: pii: e35587. 10.7554/eLife.35587

- 68. Overath T, McDermott JH, Zarate JM, et al.: The cortical analysis of speech-specific temporal structure revealed by responses to sound quilts. Nat Neurosci. 2015;18(6):903–11. 10.1038/nn.4021

- 69. de Heer WA, Huth AG, Griffiths TL, et al.: The Hierarchical Cortical Organization of Human Speech Processing. J Neurosci. 2017;37(27):6539–57. 10.1523/JNEUROSCI.3267-16.2017

- 70. Kell AJE, Yamins DLK, Shook EN, et al.: A Task-Optimized Neural Network Replicates Human Auditory Behavior, Predicts Brain Responses, and Reveals a Cortical Processing Hierarchy. Neuron. 2018;98(3):630–644.e16. 10.1016/j.neuron.2018.03.044

- 71. Norman-Haignere S, Kanwisher NG, McDermott JH: Distinct Cortical Pathways for Music and Speech Revealed by Hypothesis-Free Voxel Decomposition. Neuron. 2015;88(6):1281–96. 10.1016/j.neuron.2015.11.035

- 72. Poirier C, Baumann S, Dheerendra P, et al.: Auditory motion-specific mechanisms in the primate brain. PLoS Biol. 2017;15(5):e2001379. 10.1371/journal.pbio.2001379

- 73. Cohen YE, Bennur S, Christison-Lagay K, et al.: Functional Organization of the Ventral Auditory Pathway. Adv Exp Med Biol. 2016;894:381–8. 10.1007/978-3-319-25474-6_40

- 74. Albouy P, Weiss A, Baillet S, et al.: Selective Entrainment of Theta Oscillations in the Dorsal Stream Causally Enhances Auditory Working Memory Performance. Neuron. 2017;94(1):193–206.e5. 10.1016/j.neuron.2017.03.015

- 75. Da Costa S, Clarke S, Crottaz-Herbette S: Keeping track of sound objects in space: The contribution of early-stage auditory areas. Hear Res. 2018;366:17–31. 10.1016/j.heares.2018.03.027

- 76. Rauschecker JP: Where, When, and How: Are they all sensorimotor? Towards a unified view of the dorsal pathway in vision and audition. Cortex. 2018;98:262–8. 10.1016/j.cortex.2017.10.020

- 77. Issa JB, Haeffele BD, Young ED, et al.: Multiscale mapping of frequency sweep rate in mouse auditory cortex. Hear Res. 2017;344:207–22. 10.1016/j.heares.2016.11.018

- 78. Walker KM, Bizley JK, King AJ, et al.: Multiplexed and robust representations of sound features in auditory cortex. J Neurosci. 2011;31(41):14565–76. 10.1523/JNEUROSCI.2074-11.2011

- 79. Ortiz-Rios M, Azevedo FAC, Kuśmierek P, et al.: Widespread and Opponent fMRI Signals Represent Sound Location in Macaque Auditory Cortex. Neuron. 2017;93(4):971–983.e4. 10.1016/j.neuron.2017.01.013

- 80. Allen EJ, Burton PC, Olman CA, et al.: Representations of Pitch and Timbre Variation in Human Auditory Cortex. J Neurosci. 2017;37(5):1284–93. 10.1523/JNEUROSCI.2336-16.2016

- 81. Bizley JK, Walker KM, Silverman BW, et al.: Interdependent encoding of pitch, timbre, and spatial location in auditory cortex. J Neurosci. 2009;29(7):2064–75. 10.1523/JNEUROSCI.4755-08.2009

- 82. Pasley BN, David SV, Mesgarani N, et al.: Reconstructing speech from human auditory cortex. PLoS Biol. 2012;10(1):e1001251. 10.1371/journal.pbio.1001251

- 83. Yildiz IB, Mesgarani N, Deneve S: Predictive Ensemble Decoding of Acoustical Features Explains Context-Dependent Receptive Fields. J Neurosci. 2016;36(49):12338–50. 10.1523/JNEUROSCI.4648-15.2016

- 84. Christison-Lagay KL, Bennur S, Cohen YE: Contribution of spiking activity in the primary auditory cortex to detection in noise. J Neurophysiol. 2017;118(6):3118–31. 10.1152/jn.00521.2017

- 85. Malone BJ, Heiser MA, Beitel RE, et al.: Background noise exerts diverse effects on the cortical encoding of foreground sounds. J Neurophysiol. 2017;118(2):1034–54. 10.1152/jn.00152.2017

- 86. Mesgarani N, Chang EF: Selective cortical representation of attended speaker in multi-talker speech perception. Nature. 2012;485(7397):233–6. 10.1038/nature11020

- 87. Zion Golumbic EM, Ding N, Bickel S, et al.: Mechanisms underlying selective neuronal tracking of attended speech at a "cocktail party". Neuron. 2013;77(5):980–91. 10.1016/j.neuron.2012.12.037

- 88. Rodgers CC, DeWeese MR: Neural correlates of task switching in prefrontal cortex and primary auditory cortex in a novel stimulus selection task for rodents. Neuron. 2014;82(5):1157–70. 10.1016/j.neuron.2014.04.031

- 89. Fuglsang SA, Dau T, Hjortkjær J: Noise-robust cortical tracking of attended speech in real-world acoustic scenes. Neuroimage. 2017;156:435–44. 10.1016/j.neuroimage.2017.04.026

- 90. Bathellier B, Ushakova L, Rumpel S: Discrete neocortical dynamics predict behavioral categorization of sounds. Neuron. 2012;76(2):435–49. 10.1016/j.neuron.2012.07.008

- 91. Ince RA, Panzeri S, Kayser C: Neural codes formed by small and temporally precise populations in auditory cortex. J Neurosci. 2013;33(46):18277–87. 10.1523/JNEUROSCI.2631-13.2013

- 92. Francis NA, Winkowski DE, Sheikhattar A, et al.: Small Networks Encode Decision-Making in Primary Auditory Cortex. Neuron. 2018;97(4):885–897.e6. 10.1016/j.neuron.2018.01.019

- 93. Stecker GC, Harrington IA, Middlebrooks JC: Location coding by opponent neural populations in the auditory cortex. PLoS Biol. 2005;3(3):e78. 10.1371/journal.pbio.0030078

- 94. Keating P, Dahmen JC, King AJ: Complementary adaptive processes contribute to the developmental plasticity of spatial hearing. Nat Neurosci. 2015;18(2):185–7. 10.1038/nn.3914

- 95. Derey K, Valente G, de Gelder B, et al.: Opponent Coding of Sound Location (Azimuth) in Planum Temporale is Robust to Sound-Level Variations. Cereb Cortex. 2016;26(1):450–64. 10.1093/cercor/bhv269

- 96. McLaughlin SA, Higgins NC, Stecker GC: Tuning to Binaural Cues in Human Auditory Cortex. J Assoc Res Otolaryngol. 2016;17(1):37–53. 10.1007/s10162-015-0546-4

- 97. Santoro R, Moerel M, De Martino F, et al.: Reconstructing the spectrotemporal modulations of real-life sounds from fMRI response patterns. Proc Natl Acad Sci U S A. 2017;114(18):4799–804. 10.1073/pnas.1617622114

- 98. Griffiths TD, Warren JD: What is an auditory object? Nat Rev Neurosci. 2004;5(11):887–92. 10.1038/nrn1538

- 99. Pressnitzer D, Sayles M, Micheyl C, et al.: Perceptual organization of sound begins in the auditory periphery. Curr Biol. 2008;18(15):1124–8. 10.1016/j.cub.2008.06.053

- 100. Kondo HM, Kashino M: Involvement of the thalamocortical loop in the spontaneous switching of percepts in auditory streaming. J Neurosci. 2009;29(40):12695–701. 10.1523/JNEUROSCI.1549-09.2009

- 101. Yao JD, Bremen P, Middlebrooks JC: Emergence of Spatial Stream Segregation in the Ascending Auditory Pathway. J Neurosci. 2015;35(49):16199–212. 10.1523/JNEUROSCI.3116-15.2015 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 102. Shamma SA, Elhilali M, Micheyl C: Temporal coherence and attention in auditory scene analysis. Trends Neurosci. 2011;34(3):114–23. 10.1016/j.tins.2010.11.002 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 103. Ding N, Simon JZ: Emergence of neural encoding of auditory objects while listening to competing speakers. Proc Natl Acad Sci U S A. 2012;109(29):11854–9. 10.1073/pnas.1205381109 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 104. Middlebrooks JC, Bremen P: Spatial stream segregation by auditory cortical neurons. J Neurosci. 2013;33(27):10986–1001. 10.1523/JNEUROSCI.1065-13.2013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 105. Fishman YI, Steinschneider M, Micheyl C, et al. : Neural representation of concurrent harmonic sounds in monkey primary auditory cortex: Implications for models of auditory scene analysis. J Neurosci. 2014;34(37):12425–43. 10.1523/JNEUROSCI.0025-14.2014 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 106. Fishman YI, Micheyl C, Steinschneider M: Neural Representation of Concurrent Vowels in Macaque Primary Auditory Cortex. eNeuro. 2016;3(3):ENEURO.0071-16.2016. 10.1523/ENEURO.0071-16.2016

- 107. Christison-Lagay KL, Gifford AM, Cohen YE: Neural correlates of auditory scene analysis and perception. Int J Psychophysiol. 2015;95(2):238–45. 10.1016/j.ijpsycho.2014.03.004

- 108. Riecke L, Sack AT, Schroeder CE: Endogenous Delta/Theta Sound-Brain Phase Entrainment Accelerates the Buildup of Auditory Streaming. Curr Biol. 2015;25(24):3196–201. 10.1016/j.cub.2015.10.045

- 109. Teki S, Griffiths TD: Brain Bases of Working Memory for Time Intervals in Rhythmic Sequences. Front Neurosci. 2016;10:239. 10.3389/fnins.2016.00239

- 110. Teki S, Barascud N, Picard S, et al.: Neural Correlates of Auditory Figure-Ground Segregation Based on Temporal Coherence. Cereb Cortex. 2016;26(9):3669–80. 10.1093/cercor/bhw173

- 111. Tóth B, Kocsis Z, Háden GP, et al.: EEG signatures accompanying auditory figure-ground segregation. NeuroImage. 2016;141:108–19. 10.1016/j.neuroimage.2016.07.028

- 112. Alain C, Arsenault JS, Garami L, et al.: Neural Correlates of Speech Segregation Based on Formant Frequencies of Adjacent Vowels. Sci Rep. 2017;7:40790. 10.1038/srep40790

- 113. Lu K, Xu Y, Yin P, et al.: Temporal coherence structure rapidly shapes neuronal interactions. Nat Commun. 2017;8:13900. 10.1038/ncomms13900

- 114. Puvvada KC, Simon JZ: Cortical Representations of Speech in a Multitalker Auditory Scene. J Neurosci. 2017;37(38):9189–96. 10.1523/JNEUROSCI.0938-17.2017

- 115. Shiell MM, Hausfeld L, Formisano E: Activity in Human Auditory Cortex Represents Spatial Separation Between Concurrent Sounds. J Neurosci. 2018;38(21):4977–84. 10.1523/JNEUROSCI.3323-17.2018

- 116. Fishman YI, Steinschneider M: Formation of auditory streams. In: The Oxford Handbook of Auditory Science: The Auditory Brain. Oxford University Press; 2010:215–246.

- 117. Teki S, Chait M, Kumar S, et al.: Segregation of complex acoustic scenes based on temporal coherence. eLife. 2013;2:e00699. 10.7554/eLife.00699

- 118. Krishnan L, Elhilali M, Shamma S: Segregating complex sound sources through temporal coherence. PLoS Comput Biol. 2014;10(12):e1003985. 10.1371/journal.pcbi.1003985

- 119. O'Sullivan JA, Power AJ, Mesgarani N, et al.: Attentional Selection in a Cocktail Party Environment Can Be Decoded from Single-Trial EEG. Cereb Cortex. 2015;25(7):1697–706. 10.1093/cercor/bht355

- 120. Lakatos P, Musacchia G, O'Connell MN, et al.: The spectrotemporal filter mechanism of auditory selective attention. Neuron. 2013;77(4):750–61. 10.1016/j.neuron.2012.11.034

- 121. VanRullen R, Zoefel B, Ilhan B: On the cyclic nature of perception in vision versus audition. Philos Trans R Soc Lond B Biol Sci. 2014;369(1641):20130214. 10.1098/rstb.2013.0214

- 122. Morillon B, Schroeder CE: Neuronal oscillations as a mechanistic substrate of auditory temporal prediction. Ann N Y Acad Sci. 2015;1337:26–31. 10.1111/nyas.12629

- 123. Atiani S, David SV, Elgueda D, et al.: Emergent selectivity for task-relevant stimuli in higher-order auditory cortex. Neuron. 2014;82(2):486–99. 10.1016/j.neuron.2014.02.029

- 124. Rubin J, Ulanovsky N, Nelken I, et al.: The Representation of Prediction Error in Auditory Cortex. PLoS Comput Biol. 2016;12(8):e1005058. 10.1371/journal.pcbi.1005058

- 125. Rao RP, Ballard DH: Predictive coding in the visual cortex: A functional interpretation of some extra-classical receptive-field effects. Nat Neurosci. 1999;2(1):79–87. 10.1038/4580

- 126. Friston K: A theory of cortical responses. Philos Trans R Soc Lond B Biol Sci. 2005;360(1456):815–36. 10.1098/rstb.2005.1622

- 127. Kumar S, Sedley W, Nourski KV, et al.: Predictive coding and pitch processing in the auditory cortex. J Cogn Neurosci. 2011;23(10):3084–94. 10.1162/jocn_a_00021

- 128. Sedley W, Gander PE, Kumar S, et al.: Neural signatures of perceptual inference. eLife. 2016;5:e11476. 10.7554/eLife.11476

- 129. Polterovich A, Jankowski MM, Nelken I: Deviance sensitivity in the auditory cortex of freely moving rats. PLoS One. 2018;13(6):e0197678. 10.1371/journal.pone.0197678

- 130. Parras GG, Nieto-Diego J, Carbajal GV, et al.: Neurons along the auditory pathway exhibit a hierarchical organization of prediction error. Nat Commun. 2017;8(1):2148. 10.1038/s41467-017-02038-6

- 131. Jaramillo S, Zador AM: The auditory cortex mediates the perceptual effects of acoustic temporal expectation. Nat Neurosci. 2011;14(2):246–51. 10.1038/nn.2688

- 132. Buran BN, von Trapp G, Sanes DH: Behaviorally gated reduction of spontaneous discharge can improve detection thresholds in auditory cortex. J Neurosci. 2014;34(11):4076–81. 10.1523/JNEUROSCI.4825-13.2014

- 133. Carcea I, Insanally MN, Froemke RC: Dynamics of auditory cortical activity during behavioural engagement and auditory perception. Nat Commun. 2017;8:14412. 10.1038/ncomms14412

- 134. Niwa M, Johnson JS, O'Connor KN, et al.: Active engagement improves primary auditory cortical neurons' ability to discriminate temporal modulation. J Neurosci. 2012;32(27):9323–34. 10.1523/JNEUROSCI.5832-11.2012

- 135. von Trapp G, Buran BN, Sen K, et al.: A Decline in Response Variability Improves Neural Signal Detection during Auditory Task Performance. J Neurosci. 2016;36(43):11097–106. 10.1523/JNEUROSCI.1302-16.2016

- 136. Downer JD, Niwa M, Sutter ML: Task engagement selectively modulates neural correlations in primary auditory cortex. J Neurosci. 2015;35(19):7565–74. 10.1523/JNEUROSCI.4094-14.2015

- 137. Huang Y, Matysiak A, Heil P, et al.: Persistent neural activity in auditory cortex is related to auditory working memory in humans and nonhuman primates. eLife. 2016;5:e15441. 10.7554/eLife.15441

- 138. Kumar S, Joseph S, Gander PE, et al.: A Brain System for Auditory Working Memory. J Neurosci. 2016;36(16):4492–505. 10.1523/JNEUROSCI.4341-14.2016

- 139. Cusack R: The intraparietal sulcus and perceptual organization. J Cogn Neurosci. 2005;17(4):641–51. 10.1162/0898929053467541

- 140. Teki S, Chait M, Kumar S, et al.: Brain bases for auditory stimulus-driven figure-ground segregation. J Neurosci. 2011;31(1):164–71. 10.1523/JNEUROSCI.3788-10.2011

- 141. Winkowski DE, Nagode DA, Donaldson KJ, et al.: Orbitofrontal Cortex Neurons Respond to Sound and Activate Primary Auditory Cortex Neurons. Cereb Cortex. 2018;28(3):868–79. 10.1093/cercor/bhw409

- 142. Schneider DM, Nelson A, Mooney R: A synaptic and circuit basis for corollary discharge in the auditory cortex. Nature. 2014;513(7517):189–94. 10.1038/nature13724

- 143. Zhou M, Liang F, Xiong XR, et al.: Scaling down of balanced excitation and inhibition by active behavioral states in auditory cortex. Nat Neurosci. 2014;17(6):841–50. 10.1038/nn.3701

- 144. Niwa M, Johnson JS, O'Connor KN, et al.: Activity related to perceptual judgment and action in primary auditory cortex. J Neurosci. 2012;32(9):3193–210. 10.1523/JNEUROSCI.0767-11.2012

- 145. Bizley JK, Walker KM, Nodal FR, et al.: Auditory cortex represents both pitch judgments and the corresponding acoustic cues. Curr Biol. 2013;23(7):620–5. 10.1016/j.cub.2013.03.003

- 146. Runyan CA, Piasini E, Panzeri S, et al.: Distinct timescales of population coding across cortex. Nature. 2017;548(7665):92–6. 10.1038/nature23020

- 147. Tsunada J, Liu AS, Gold JI, et al.: Causal contribution of primate auditory cortex to auditory perceptual decision-making. Nat Neurosci. 2016;19(1):135–42. 10.1038/nn.4195

- 148. Agus TR, Thorpe SJ, Pressnitzer D: Rapid formation of robust auditory memories: Insights from noise. Neuron. 2010;66(4):610–8. 10.1016/j.neuron.2010.04.014

- 149. Viswanathan J, Rémy F, Bacon-Macé N, et al.: Long Term Memory for Noise: Evidence of Robust Encoding of Very Short Temporal Acoustic Patterns. Front Neurosci. 2016;10:490. 10.3389/fnins.2016.00490

- 150. McDermott JH, Schemitsch M, Simoncelli EP: Summary statistics in auditory perception. Nat Neurosci. 2013;16(4):493–8. 10.1038/nn.3347

- 151. Yaron A, Hershenhoren I, Nelken I: Sensitivity to complex statistical regularities in rat auditory cortex. Neuron. 2012;76(3):603–15. 10.1016/j.neuron.2012.08.025

- 152. Lu K, Vicario DS: Statistical learning of recurring sound patterns encodes auditory objects in songbird forebrain. Proc Natl Acad Sci U S A. 2014;111(40):14553–8. 10.1073/pnas.1412109111

- 153. Wilson B, Kikuchi Y, Sun L, et al.: Auditory sequence processing reveals evolutionarily conserved regions of frontal cortex in macaques and humans. Nat Commun. 2015;6:8901. 10.1038/ncomms9901

- 154. Kikuchi Y, Attaheri A, Wilson B, et al.: Sequence learning modulates neural responses and oscillatory coupling in human and monkey auditory cortex. PLoS Biol. 2017;15(4):e2000219. 10.1371/journal.pbio.2000219

- 155. Giraud AL, Poeppel D: Cortical oscillations and speech processing: emerging computational principles and operations. Nat Neurosci. 2012;15(4):511–7. 10.1038/nn.3063

- 156. White EJ, Hutka SA, Williams LJ, et al.: Learning, neural plasticity and sensitive periods: implications for language acquisition, music training and transfer across the lifespan. Front Syst Neurosci. 2013;7:90. 10.3389/fnsys.2013.00090

- 157. Irvine DRF: Plasticity in the auditory system. Hear Res. 2018;362:61–73. 10.1016/j.heares.2017.10.011

- 158. Bieszczad KM, Weinberger NM: Representational gain in cortical area underlies increase of memory strength. Proc Natl Acad Sci U S A. 2010;107(8):3793–8. 10.1073/pnas.1000159107

- 159. Reed A, Riley J, Carraway R, et al.: Cortical map plasticity improves learning but is not necessary for improved performance. Neuron. 2011;70(1):121–31. 10.1016/j.neuron.2011.02.038

- 160. Elias GA, Bieszczad KM, Weinberger NM: Learning strategy refinement reverses early sensory cortical map expansion but not behavior: Support for a theory of directed cortical substrates of learning and memory. Neurobiol Learn Mem. 2015;126:39–55. 10.1016/j.nlm.2015.10.006

- 161. Caras ML, Sanes DH: Top-down modulation of sensory cortex gates perceptual learning. Proc Natl Acad Sci U S A. 2017;114(37):9972–7. 10.1073/pnas.1712305114

- 162. Nodal FR, Bajo VM, King AJ: Plasticity of spatial hearing: behavioural effects of cortical inactivation. J Physiol. 2012;590(16):3965–86. 10.1113/jphysiol.2011.222828

- 163. Keating P, Dahmen JC, King AJ: Context-specific reweighting of auditory spatial cues following altered experience during development. Curr Biol. 2013;23(14):1291–9. 10.1016/j.cub.2013.05.045

- 164. Trapeau R, Schönwiesner M: The Encoding of Sound Source Elevation in the Human Auditory Cortex. J Neurosci. 2018;38(13):3252–64. 10.1523/JNEUROSCI.2530-17.2018