Abstract

Introduction

Autism spectrum disorder (ASD) is characterised by impairments in social communication. Core symptoms are deficits in social looking behaviours, including limited visual attention to faces and sensitivity to eye gaze cues. We designed an intervention game using serious game mechanics for adolescents with ASD. It is designed to train individuals with ASD to discover that the eyes, and shifts in gaze specifically, provide information about the external world. We predict that the game will increase understanding of gaze cues and attention to faces.

Methods and analysis

The Social Games for Adolescents with Autism (SAGA) trial is a preliminary, randomised controlled trial comparing the intervention game with a waitlist control condition. Thirty-four adolescents (10–18 years) with ASD, a Full-Scale IQ between 70 and 130 and a minimum second grade reading level, and their parents, will be randomly assigned in equal numbers to the intervention or the control condition following baseline assessments. Intervention participants will be instructed to play the computer game at home for ~30 min, three times a week. All families are tested in the lab on all measures at baseline and approximately 2 months following randomisation. Primary outcomes are assessed with eye tracking to measure sensitivity to eye gaze cues and social visual attention to faces; secondary outcomes are assessed with questionnaires to measure social skills and autism-like behaviours. The analyses will focus on evaluating the feasibility, safety and preliminary effectiveness of the intervention.

Ethics and dissemination

SAGA is approved by the Institutional Review Board at Pennsylvania State University (00005097). Findings will be disseminated via scientific conferences and peer-reviewed journals and to participants via newsletter. The intervention game will be available to families in the control condition after the full data are collected and if analyses indicate that it is effective.

Trial registration number

Keywords: mental health

Strengths and limitations of this study

This is a randomised controlled trial that employs an immersive computer game with serious game mechanics to maximise opportunities for adolescents with autism spectrum disorder (ASD) to discover the functional utility of eye gaze cues.

This intervention targets the developmental period of adolescence when eye gaze cues are especially important to changing social demands and when there are declining developmental trajectories in ASD.

Multiple eye tracking/behavioural metrics will be measured to assess improvements in social looking behaviour, which is a core symptom of ASD.

Given the nature of this design, the inability to use a completely blinded procedure is a limitation.

Introduction

Autism spectrum disorder (ASD) is a neurodevelopmental disorder characterised by impairments in social communicative behaviours. Core symptoms of these impairments are deficits in social looking behaviours including limited visual attention to faces and sensitivity to eye gaze cues.1 Reduced visual attention to faces is one of the earliest behavioural indicators of autism,2–4 persists across the lifespan5–7 and may serve as a reliable predictor of general social impairments in ASD.8 It is related to difficulties recognising face identity9 and emotional expressions10 and interferes with learning in domains outside of face perception as well.11–13 Similarly, reduced understanding of eye gaze cues is present in infants later diagnosed with autism14 and persists through the first two decades of life.12 15 16 It also has long-term consequences for understanding goal-directed behaviour,6 17 18 learning language and social communication.19 20 People with ASD have difficulty computing the trajectory of eye gaze, understanding the referential nature of gaze, and assigning social relevance to gazed-at objects.17 18 This deficit impacts the ability to use eye gaze direction to predict the actions and intentions of others.

One hypothesis about the underlying mechanism for these deficits suggests that individuals with ASD avoid looking at faces because doing so leads to an increased negative emotional response, as indexed by increased activation in the amygdala.21 However, a review of the literature suggests that there is little support for this hypothesis.22 Also, recent neuroimaging findings suggest that the neural systems for face processing are not impaired in autism; they are just tuned differently (ie, they exhibit typical levels of activation when looking at animal, but not human, faces).23 Together, these findings suggest the need to consider other mechanisms for atypical social looking behaviour in ASD; we hypothesise an early disruption in the learning environment for individuals with autism that contributes to this altered tuning of the face processing system. Although the origin of this disruption is not clear, one hypothesis is that it emerges from atypical coordination between early developing subcortical social orienting and later developing cortical social perception systems.24 The long-term developmental consequence of this disrupted learning environment is that it could deprive individuals with autism of the opportunity to learn about the functional significance of social signals, like eye gaze, from the face. Accordingly, this atypical developmental context and learning cycle could lead to a state in which the face and eyes are not meaningful25 to people with autism. Using this conceptual framework, we hypothesise that it may be possible to, in part, retune the face processing system by employing an intervention that encourages individuals with ASD to focus visual attention on faces and discover the functional significance of eye gaze cues. We propose to train individuals with ASD to discover that the eyes, and shifts in gaze specifically, provide critical information about the world around them. 
Our prediction is that attention to faces, particularly in more social contexts, will also improve as a result of increased understanding of how to interpret eye gaze cues. The hope is that such training may begin to ameliorate core symptoms of ASD and potentially facilitate aspects of social functioning (eg, face processing and social communication).

Existing studies have employed computer-based interventions for children and adolescents with ASD with the goal of improving aspects of face processing behaviour.26–29 Most of these interventions, however, have not been very successful in producing long-term changes in behaviour for several reasons. First, they often target multiple components of face processing behaviour, including accuracy of gazed-at objects,29 30 but do not isolate the effects of the intervention's active ingredients on the outcome measures. Second, they often use highly repetitive and specific learning trials, which can lead to inflexible learning and behaviour in autism.31 Third, none of the existing interventions evaluated changes in social visual attention, which is a diagnostic feature of ASD, using eye tracking measures as outcome behaviours. Fourth, although some of these studies have demonstrated learning during the course of the intervention, they have had only limited success in showing evidence of clinical change, particularly in real-world social skills.32 We innovate beyond these previous computer-based interventions by embedding eye gaze direction cues within simulated social interactions with computer-animated characters and embedding these interactions in an age-appropriate narrative storyline. This simulates the way social information cues are used in the real world and, we posit, is more likely to generalise to real-world behaviour. We designed the intervention game for adolescents because our prior work suggests that adolescence is an important window of opportunity for altering declining developmental trajectories in autism.33–40

Current serious game intervention

We propose an intervention strategy that employs evidence-based ‘serious game’ mechanics (eg, storylines, long-term goals, and scaling difficulty) to design a learning environment that maximises opportunities for adolescents with ASD to discover the functional utility of eye gaze cues. Serious games are unique intervention tools that are designed to promote learning of targeted skills that are difficult and not rewarding for participants with the goal of improving real-life outcomes.32 The game mechanics that are especially relevant for enhancing motivation in serious games include immersive storylines, goals directed around targeted skills, rewards and feedback about goal progress, increasing levels of difficulty, individualised training, and the provision of choice.32 We designed a serious game in which participants discover that eye gaze cues are useful for guiding their own goal-directed behaviour to solve problems in the game. Participants learn to interpret non-verbal behaviours (eg, pointing, shoulder turns, head turning, and eye gaze cues) of game characters for the purpose of solving narrative-related quests (ie, mixing a potion in the chemistry lab to get gum off a locker in school). The game increases in task difficulty in response to successful demonstration of skills. Initial levels of the game allow participants to use multiple non-verbal behavioural cues to solve problems and increasingly focus on learning how to use eye gaze cues exclusively over time and with practice. This transitions to requiring participants to determine the direction of eye gaze cues with more precision, avoid highly salient objects that are not target gazed-at objects and differentiate predictive (eg, looking at an object of interest) from non-predictive (eg, avatar looking up as if to think before acting) eye gaze cues. 
Finally, in the most advanced levels of the game, participants learn to process eye gaze cues in episodes of joint attention between two avatars with all the same levels of complexity as are presented with the single avatar.

Aims and objectives

The aims of this study are to assess the feasibility and safety of this serious game intervention and examine the initial evidence for its effectiveness in altering sensitivity to eye gaze and social visual attention to faces in adolescents with ASD. The preliminary randomised controlled trial (RCT) will be conducted to answer the following questions:

Is it possible to recruit and randomise participants into the serious game intervention versus a waitlist control condition?

Do adolescents engage with the game at the intended level (playing 90 min/week for 2 months)?

Is the intervention tolerable and safe (ie, does retention remain high across all data collection points with minimal to no adverse events)?

Does sensitivity to eye gaze improve disproportionately in the intervention compared with the waitlist control group?

Does social visual attention to faces improve disproportionately in the intervention compared with the waitlist control group?

The trial will also allow exploratory analyses of changes in social skills and autism behaviours between the intervention and waitlist control group as a secondary measure of effectiveness of the intervention.

Methods

Study design

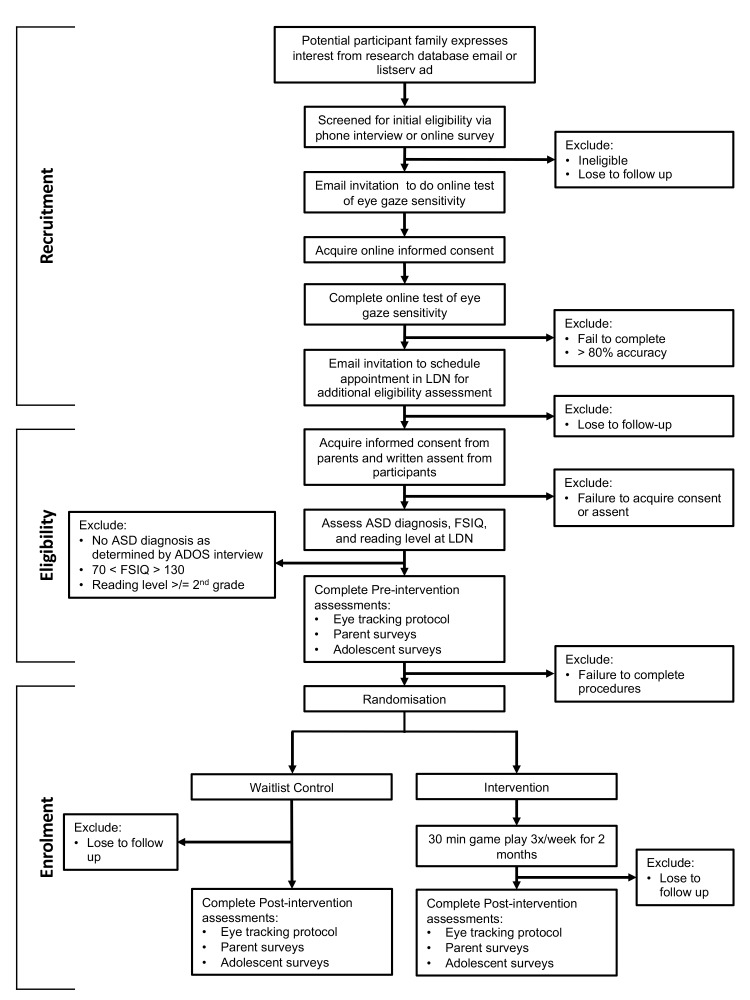

This study will be a preliminary, experimental RCT including an experimental group and a waitlist control group. The experimental group will consist of adolescents with ASD who will play an immersive computer game for 90 minutes a week over 2 months in their own home. This ‘dose’ of treatment was estimated based on the tolerance and relative amount of training required to evince learning in prior face-processing intervention studies of children and adolescents [28, Scherf, Whyte, Minshew & Behrmann, ‘Adolescents with autism learn to individuate novel objects holistically: Replicated Longitudinal Intervention Studies’] and adults41 with ASD. The goal is for participants to obtain a minimum of 10 hours of training specifically on eye gaze tasks across the 2-month training period, which may require a total of 15–20 hours of total game play. For this early trial, we will compare outcomes to a waitlist control group composed of adolescents with autism receiving treatment as usual in the community. The flow of participants through the study is shown in figure 1. These methods are reported following the Standard Protocol Items: Recommendations for Interventional Trials guidelines.42

Figure 1.

CONSORT diagram for SAGA protocol. ASD, autism spectrum disorder; CONSORT, Consolidated Standards of Reporting Trials; SAGA, Social Games for Adolescents with Autism.

Setting

The study assessments will be conducted in the USA in the Laboratory of Developmental Neuroscience at Penn State University, University Park, Pennsylvania, and the intervention, itself, will be executed in the homes of intervention participants.

Participants

Inclusion criteria

Inclusion criteria are: (1) parent/caregiver of an adolescent with a diagnosis of ASD, (2) parent/caregiver and adolescent with ASD both native English speakers, (3) adolescent with ASD aged between 10 years and 18 years at enrolment, (4) adolescent has normal vision and hearing with correction as indicated by parent report, (5) adolescent is able to use a computer for the purposes of game play, (6) adolescent scores ≤80% correct (ie, 0.5 SD less than the mean of typically developing (TD) adolescents; M=85.6%, SD=9.0%) on the online eye gaze screening task, (7) ASD diagnosis of adolescent confirmed in the lab via the Autism Diagnostic Observation Schedule (ADOS),43 (8) Full Scale IQ (FSIQ) of adolescent determined to be between 70 and 130 on the Kaufman Brief Intelligence Test,44 (9) reading ability of adolescent determined to be at least a second grade level as assessed by the Oral and Written Language Scales,45 (10) adolescent is capable of cooperating with testing, and (11) parent/caregiver and adolescent both consent/assent to participate in the research.

Exclusion criteria

Exclusion criteria are: (1) adolescent has had seizures within the previous 2 years, (2) family lacks stable home internet, (3) parent or adolescent refuses to consent/assent to take part in the research, (4) adolescent is 18 years and has a legal guardian, prohibiting him or her from legally consenting, or (5) adolescent is 18 years and cannot understand the consent (ie, fails consent quiz).
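As an illustration only, the criteria that reduce to numeric or yes/no rules could be encoded as in the sketch below. This is not the study's screening software: the `Candidate` record and its field names are hypothetical, and criteria that need clinical or legal judgement (ADOS confirmation, reading level, consent/assent) are deliberately left out. The helper `half_sd_below_td_mean` reproduces the arithmetic behind the screening cut-off (85.6 − 0.5 × 9.0 ≈ 81.1), which the protocol states as ≤80%.

```python
from dataclasses import dataclass

# Thresholds taken from the inclusion/exclusion criteria above.
MIN_AGE, MAX_AGE = 10, 18
MIN_FSIQ, MAX_FSIQ = 70, 130
SCREEN_CUTOFF_PCT = 80.0          # eligible if screening accuracy <= 80%
TD_MEAN_PCT, TD_SD_PCT = 85.6, 9.0


@dataclass
class Candidate:
    """Hypothetical screening record; field names are illustrative."""
    age_years: int
    fsiq: int
    screen_accuracy_pct: float
    seizures_last_2_years: bool
    stable_home_internet: bool


def half_sd_below_td_mean() -> float:
    """Value 0.5 SD below the TD mean, before the protocol's 80% cut-off."""
    return TD_MEAN_PCT - 0.5 * TD_SD_PCT


def passes_numeric_criteria(c: Candidate) -> bool:
    """Check only the criteria that are simple numeric or yes/no rules."""
    return (
        MIN_AGE <= c.age_years <= MAX_AGE
        and MIN_FSIQ <= c.fsiq <= MAX_FSIQ
        and c.screen_accuracy_pct <= SCREEN_CUTOFF_PCT
        and not c.seizures_last_2_years
        and c.stable_home_internet
    )
```

A candidate who passes this check would still need the in-lab assessments before being considered eligible.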

Sample size

A meta-analysis indicates that computer-based interventions for individuals with ASD generally have a medium effect size (Cohen’s d=0.47).46 Power calculations indicate that with a sample size of 34 (17 per group), and an expected correlation between the pretest/posttest measures of 0.58, we will have statistical power of 0.80 to detect a medium effect size for the expected group (intervention and control) × time (preintervention and postintervention) interaction at α=0.05 in this repeated-measures design.

Recruitment

Our primary recruitment approach will be to recruit families who have registered with research databases like the Interactive Autism Network Research Database at the Kennedy Krieger Institute, Baltimore, and autismMatch at the Center for Autism Research, Philadelphia. Recruitment will proceed via a three-step process (see figure 1). First, the initial inclusion/exclusion criteria will be determined via brief phone interview or by completing an online survey. Second, eligible adolescents will be invited to take an online test of sensitivity to eye gaze through a secure website (Testable.org). Participants view complex images of an actor in a naturalistic scene looking at one of many possible objects and have to identify the target gazed-at object from a list of four labels. We have used this task previously to investigate the influence of autistic-like traits on sensitivity to detect eye gaze cues in typically developing (TD) adults.47 To evaluate the developmental appropriateness of the task, we tested a sample of 50 TD adolescents (ages 11–17 years). The TD adolescents performed above chance (M=85%, SD=9%) and below ceiling levels, which indicates sensitivity of the task to measure eye gaze cues in adolescents. Therefore, ASD participants who score at least 0.5 SD below the TD adolescent mean (≤80%) on this online screening task will be invited to be evaluated for the remaining set of inclusion criteria. Third, we will obtain written informed consent from the parent and 18-year-old adolescents and written assent from the adolescents aged 10–17 years to participate in the study (see supplementary appendices for consent/assent). Only after both consent and assent are obtained will we administer the remaining eligibility assessments. Participants who meet the final eligibility criteria will be invited to continue with the pre-test procedures.

Randomisation procedures

Following completion of the preintervention testing procedures, the principal investigator, who will not be involved in testing participants, will randomise participants in a 1:1 ratio into either the intervention game or waitlist control condition. The randomisation list will be computer-generated prior to the enrolment of any participants and will be stratified by sex and Full Scale IQ (>100 and <100). None of the researchers collecting data will have access to the randomisation list.
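A computer-generated, stratified 1:1 list of this kind could be produced along the lines of the sketch below. It is a minimal illustration, not the study's actual procedure: the protocol does not say how the list is built, so the use of permuted blocks of two, the stratum labels, and the function names are all assumptions.

```python
import random
from itertools import product

ARMS = ("intervention", "waitlist")


def permuted_block_list(n_blocks: int, rng: random.Random) -> list[str]:
    """Allocation list built from shuffled blocks of two (assumed block size)."""
    allocations = []
    for _ in range(n_blocks):
        block = list(ARMS)
        rng.shuffle(block)          # random order of the two arms within the block
        allocations.extend(block)
    return allocations


def build_stratified_lists(n_blocks_per_stratum: int, seed: int) -> dict:
    """One pre-generated list per sex x FSIQ stratum (labels are illustrative)."""
    rng = random.Random(seed)       # fixed seed makes the list reproducible
    strata = product(("female", "male"), ("FSIQ<100", "FSIQ>100"))
    return {s: permuted_block_list(n_blocks_per_stratum, rng) for s in strata}
```

Blocking within each stratum keeps the two arms balanced after every second participant in that stratum, which matters in a sample as small as 34.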

Blinding procedures

Given the design of the study, parents and adolescents will know the condition to which they have been assigned. However, researchers involved in data collection will be blinded from condition assignment during the preintervention data collection session as these data will be collected prior to randomisation. Also, the research team is not involved in the randomisation process. The research team members who are involved in ensuring the fidelity of the intervention are not involved in data collection procedures. Although we will attempt to limit unblinding, it is not possible for researchers involved in data collection to be completely blinded to the assignment of participant condition at the postintervention visit as we cannot prohibit participants from talking to researchers about their experience in the study. Importantly, the primary outcome measures are believed to be robust to investigator bias.

Intervention conducted in the experimental group

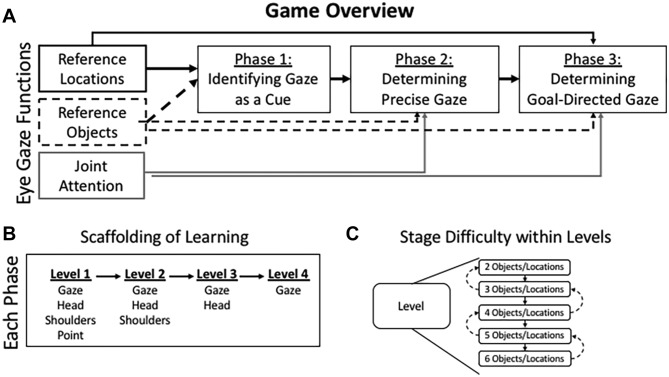

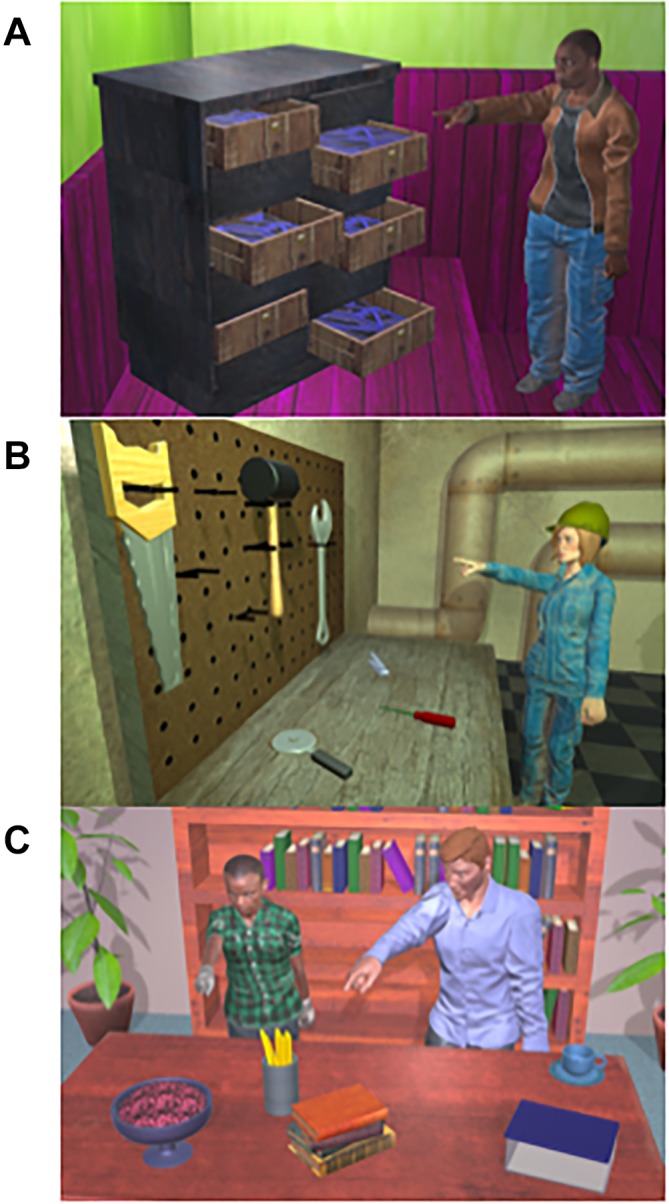

The intervention video game is designed to engage and shape learning of sensitivity to eye gaze information and social attention to faces. Specifically, the game is structured around learning to use eye gaze as a cue for (1) directional reference (eg, put the object there; see figure 2A for a screenshot from the game), first when gaze cues are highly predictive and subsequently when they are embedded in noisy cues that are not predictive of directional information; (2) reference to a specific object identity (eg, grab that one; see figure 2B), first when gaze cues are highly predictive and subsequently when they are embedded in noisy cues that are not predictive of object information; and (3) joint attention episodes when two people (ie, avatars) engage in mutual gaze with each other and then engage in joint attention on the same specific object (eg, hey Matt, look at that one; see figure 2C), first when gaze cues are highly predictive and subsequently when they are embedded in noisy cues that are not predictive of joint attention. The game is organised around three phases (see figure 3A), which are composed of multiple levels (see figure 3B), which are themselves composed of multiple stages (see figure 3C). Figure 3 provides a schematic illustration of the structure of the game.

Figure 2.

Screenshots from multiple training conditions in the intervention game. (A). The avatar is instructing the participant to select 1 of 5 possible drawer locations using pointing, shoulder direction, head direction, and gaze cues in a room scene. (B). The avatar is directing the participant to select 1 of 6 possible tools to put on the peg board using pointing, shoulder direction, head direction, and gaze cues in a tool shed scene. (C). The two avatars are engaged in an episode of joint attention on the bowl and are inviting the participant to select it from 5 possible objects using pointing, shoulder direction, head direction, and gaze cues in a library scene.

Figure 3.

Schematic illustration of the intervention game structure. The game is designed to train learning about three functional uses of eye gaze cues including the use of gaze to reference locations and objects in the world via a single informant and in episodes of joint attention between multiple informants (A). The game is organised around three sequential phases. The tasks in phase 1 are structured to help participants learn that eye gaze is an important cue to solving problems in the game. The tasks in phase 2 help participants learn to estimate precise gaze trajectories by making target gazed-at objects closer together and to ignore salient objects that are not the target gazed-at object. Episodes of joint attention are also introduced in phase 2 in which participants have to determine the target object that two avatars are looking at together. This is difficult because the timing of the non-verbal cues to identify the object is not perfectly synchronous between the two avatars. In phase 3, the tasks are structured around helping participants learn the difference between a goal-directed gaze cue (eg, looking at a target object to solve a puzzle) and a non-goal-directed gaze cue (eg, looking around at all the objects before deciding which one to select). To complete a phase of the game, participants must finish all levels within a phase. Each phase has multiple levels (B). Levels are defined by the number of non-verbal cues avatars use to guide participants to solve puzzles in the game. Easy levels have multiple cues. Level progression increasingly focuses learning to use eye gaze cues exclusively by stripping away other cues. Within each level, there are six stages (C). Each stage represents the number of potential objects or locations that the participant has to discriminate between based on the cue from the avatar. 
In the easiest stage, the participant chooses between two objects or locations that the avatar is pointing, directing shoulders, head, and gaze to (as in level 1), whereas in stage 6, the participant chooses between six possible objects or locations that the avatar could be referring to with the non-verbal cue(s). Within each stage, participants have five trials. They must perform with 80% accuracy to advance to the next stage, and they must finish all stages within a level before they can progress to the next level within a phase. When they do not reach 80% accuracy within a stage, they are returned to the previous stage to reinforce the learning where they were recently successful. Sometimes that means they are returned to later stages of previous levels.

The game is an adventure game with embedded gamification techniques in which participants solve mysteries in a 3D environment that is programmed in Unity (https://unity3d.com/unity). The core training mechanisms are delivered via character interactions, with participants learning skills via simulated social interactions with avatars in the game as participants solve problems related to the game narrative. Each of these puzzles has variable elements so that they can be dynamically altered with different objects, locations, and levels of difficulty. Moreover, they are executed with a variety of characters and environmental contexts to support generalised learning opportunities.

The training paradigm is much like a perceptual staircase paradigm in which participants start at a phase (figure 3A), level (figure 3B), and stage (figure 3C) meant to be easily processed and accomplished by all participants. Individuals advance through stages within levels and then between levels and phases, until they hit a threshold where their skills plateau (see figure 3C). When participants fail a stage, they go back to the preceding stage (and potentially phase or level) where they succeeded and must complete it before progressing to the failed stage/level/phase again. This keeps participants challenged without becoming too frustrated and allows them to practice and learn new skills. When participants repeat a stage or level, they do so in a new context with new avatars to foster generalisation of the learnt skills. If players are unable to progress from the easiest levels, they are redirected to remedial training in which more explicit guidance is afforded about how eye gaze cues provide information about objects and locations in the local environment (once completed, they are returned to the main game). See online supplementary figures 1–3 for the full Unified Modeling Language (UML) diagrams illustrating progression through the game.
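The staircase rule can be sketched as a small state-transition function. This is an illustrative reading of the rules given in this section and in the figure 3 legend (six stages per level, five trials per stage, 80% accuracy to advance, one step back on failure), not the game's Unity code. The function name is hypothetical, and phase boundaries, the remedial training branch and the change of context on repeats are omitted.

```python
STAGES_PER_LEVEL = 6
TRIALS_PER_STAGE = 5
PASS_ACCURACY = 0.80


def next_position(level: int, stage: int, n_correct: int) -> tuple[int, int]:
    """Return the (level, stage) to play next; both are 1-indexed."""
    passed = n_correct / TRIALS_PER_STAGE >= PASS_ACCURACY
    if passed:
        if stage < STAGES_PER_LEVEL:
            return level, stage + 1             # advance within the level
        return level + 1, 1                     # level finished, start the next
    if stage > 1:
        return level, stage - 1                 # step back within the level
    if level > 1:
        return level - 1, STAGES_PER_LEVEL      # back to last stage of prior level
    return level, stage                         # already at the easiest position
```

With five trials per stage, the 80% criterion means at least four correct responses; three or fewer sends the player back one step.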


Difficulty increases in several ways and is all controlled by choices of the individual participant, within design constraints of the game. First, the number of locations or objects that the avatars reference gradually increases across stages so that the precision of gaze sensitivity has to improve (see figure 3C). Second, in the early levels of each phase of the game, participants are provided with multiple kinds of non-verbal social cues in their interactions with the avatars (see figure 3B). For example, in the earliest levels of phase 1 of the game, the avatars simultaneously point, orient their shoulders, turn their head, and shift their eye gaze as cues to direct participants to solve quests in the game. As play progresses, learning is scaffolded by slowly removing the non-gaze social behaviours; ultimately avatars only direct participants via eye gaze cues (see figure 3B). Third, once a participant has mastered the easiest levels of gaze shifts, the levels increase in difficulty by reducing the spacing between the objects (requiring more precise tracking of gaze trajectory) and by increasing the salience of the non-target objects (requiring increasing focus of attention on the target gazed-at object) as participants move into the more advanced phases of the game. Finally, at the most advanced phase and levels of the game, it is necessary to learn to ignore non-predictive shifts of gaze (eg, looking up pensively, looking across all objects before landing on target object) and only focus on the predictive gaze shifts (see figure 3A).

Outcome measures

Feasibility outcomes

To measure intervention feasibility, in addition to participant attrition, we will report the mean number of sessions, total number of minutes played, total number of minutes engaged in eye gaze tasks, frequency of each level visited in the game, and accuracy of performance within each level of the game. The feasibility of the testing procedures will also be assessed. We will report adherence rates, means and SD for each outcome measure separately for each group in the preintervention and postintervention testing sessions. This will allow us to assess potential floor or ceiling effects in any of our measures, collect information relevant for determining effect sizes, and estimate sample sizes for a full trial.

Safety outcomes

The intervention is expected to have minimal risk, because it is designed from an empirically informed approach, administered remotely, designed to flexibly accommodate participants’ schedule, and is semi-supervised. However, potential adverse events and unintended effects occurring during testing or the intervention period will be reported and explored. A Data Safety Monitoring Board (DSMB) will be instituted (see Study Monitoring). Additionally, self-report and behavioural measures will be used to monitor unanticipated risks. This includes a usability questionnaire about the intervention game experience in which participants rate multiple aspects of game play on a Likert scale (eg, Experience was fun; I felt discouraged) at the postintervention testing session. Procedures are in place to monitor suicidal ideation and self-injurious behaviour among adolescents and to make recommendations about care based on the assessment outcome.

Primary outcomes (intervention effectiveness)

Primary and secondary outcomes will be measured at both the preintervention and postintervention sessions. We hypothesise that the intervention will improve eye gaze and social visual attention behaviours; therefore, all the primary measures of the intervention effectiveness are assessed with eye tracking technology. The analyses will focus on time spent looking at faces, which include the eyes, and gazed-at objects in the stimuli. Limiting the analyses to time spent looking at eyes may underestimate the effectiveness of the intervention, if adolescents only learn social communication cues related to turns of head, which are correlated with gaze cues, for example. Also, defining eye-specific areas of interest in dynamic stimuli can be imprecise and unreliable. The secondary measures evaluate changes in autism-like behaviours, social competence and problematic behaviours, which may be indirectly impacted by the intervention.

Visual attention to faces

This task is similar to that previously described.48 Participants passively view six 42-second clips from age-appropriate movies of social interactions with two or more characters that are matched by adult raters on emotional intensity and valence, number of visible faces, and amount of time faces are present. Four of the movies are unique at each time point (pre and post) and two movies repeat across time points to assess reliability of measurement. The dependent measures include the average gaze time to faces and the proportion of total gaze time to faces.

Eye gaze sensitivity

We will assess eye gaze sensitivity in two tasks. In the static version of the task, participants view still images (n=40) of an actor in a naturalistic scene looking at one of many possible objects, as in the online eye gaze screening task.17 47 Each image is displayed on a computer screen for 4 s. Participants must then identify the specific object that the person is looking at from a list of four labels presented on a subsequent screen. The dependent measures include both performance accuracy and the ratio of average gaze time to the target object versus average gaze time to non-target objects. Twenty-six images are unique at each time point and 14 images repeat across time points. None of the stimuli used in the online screening task will be used in the preintervention or postintervention testing sessions.

To measure sensitivity to real-time eye gaze cues, we will create a dynamic version of this static task that is modelled after dynamic stimuli used to test infant joint attention.15 On each trial, participants watch a movie of an actress looking into the camera, then directing her gaze to a target object, holding the gaze on the target object for several seconds, and returning her gaze back to the camera. At the end of each trial, participants identify the target gazed-at object from a list of four labels presented on a subsequent screen. The dependent measures include performance accuracy, gaze shifts between the face and target and non-target objects,15 and ratio of average gaze time to the target object versus average gaze time to non-target objects. Twenty videos are unique at each time point and six videos repeat across time points.
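One possible way to compute the target versus non-target gaze ratio for a single trial is sketched below. The protocol does not specify the exact computation, so this is only one reading: gaze time is summed per area of interest (AOI), and the target total is divided by the mean total across the non-target objects. The input format, a list of `(aoi_label, duration_ms)` pairs, and the function names are assumptions.

```python
def dwell_by_aoi(fixations: list[tuple[str, float]]) -> dict[str, float]:
    """Sum gaze time (ms) per AOI label for one trial."""
    totals: dict[str, float] = {}
    for label, duration_ms in fixations:
        totals[label] = totals.get(label, 0.0) + duration_ms
    return totals


def target_ratio(
    fixations: list[tuple[str, float]],
    target: str,
    nontargets: list[str],
) -> float:
    """Target gaze time divided by mean gaze time per non-target object.

    Non-target objects that were never fixated count as 0 ms.
    Returns infinity when no non-target object was fixated at all.
    """
    totals = dwell_by_aoi(fixations)
    target_time = totals.get(target, 0.0)
    nontarget_mean = sum(totals.get(n, 0.0) for n in nontargets) / len(nontargets)
    if nontarget_mean == 0:
        return float("inf")
    return target_time / nontarget_mean
```

Passing the full list of non-target objects, rather than only those that were fixated, keeps unfixated objects in the denominator so the ratio is comparable across trials with the same number of objects.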

Secondary outcomes (intervention effectiveness)

Autism, social, and problem behaviour questionnaires

To assess if the intervention influences autism symptoms, social skills, and adaptive functioning, parents and adolescents will complete the Social Skills Improvement System (SSIS)49 and parents will complete the Social Responsiveness Scale, 2nd Edition (SRS-2).50 On the SSIS measures, total scores will be computed separately for social skills and problem behaviour domains. Higher scores indicate the presence of more of these behaviours. We will compute the total score on the SRS-2; higher scores reflect more social impairment.

Patient and public involvement

KSS has been working with adolescents with ASD and their families in research settings for 15 years. The decision to design an intervention that targets sensitivity to eye gaze cues has been informed by her personal interactions with families and their desire to improve adaptive social skills in their children. The decision to employ serious game mechanics was informed by positive feedback from adolescents with ASD who were tested in previous home-based computerised interventions [Scherf, Whyte, Minshew & Behrmann, ‘Adolescents with autism learn to individuate novel objects holistically: Replicated Longitudinal Intervention Studies’]. The staff training and testing procedures used in this protocol, including accommodations in the testing rooms (ie, lighting, seating) and strategies for working with participants, are all informed by experiences and conversations with previous study participants with autism. Several adolescents with autism provided feedback to us about the intervention game during its development in pilot testing. Autism family networks will be used to facilitate recruitment into the study as described in the Recruitment section. We will inform participating families about findings from the study in the form of a newsletter (see Ethics and Dissemination). We will assess the burden of the intervention with a usability questionnaire (see Safety Outcomes). We thank all the families who have helped inform the development of this study.

Data collection

Intervention data

Strategies for maximising the fidelity of the video game intervention include: (1) establishing minimum computer requirements for participants, (2) designing instructional videos for participants about the game, (3) designing a web page portal for participants to find frequently asked questions about the game and submit electronic help tickets for technical problems, (4) establishing a texting reminder system on scheduled game play days for participants, (5) establishing a protocol for contacting parents when participants miss scheduled sessions of game play, (6) providing explicit directions to parents that no one else in the home is to play the intervention game, and (7) paying participants $5 for every 30 min of game play up to $200. Throughout the intervention, log files are generated for each participant with feasibility data (see Feasibility outcomes) for each day of game play. Log files will be uploaded every 8 min onto a secure, password-protected server that only designated research personnel can access. Data from the log files will be summarised across days and sessions for each participant.
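The compensation rule in item (7) and the per-participant summary of log data can be sketched as follows. This is an illustration only: it assumes that payment accrues per completed 30 min block of accumulated play, which the protocol does not state, and the record layout, participant IDs and dates are hypothetical.

```python
from collections import defaultdict

def compensation_usd(minutes_played, rate_usd=5, block_min=30, cap_usd=200):
    """Payment for accumulated play time, capped at cap_usd.

    Assumption: only completed blocks of block_min minutes are paid.
    """
    completed_blocks = int(minutes_played // block_min)
    return min(completed_blocks * rate_usd, cap_usd)

def total_minutes_by_participant(log_records):
    """Sum minutes of game play per participant across days."""
    totals = defaultdict(float)
    for participant_id, _day, minutes in log_records:
        totals[participant_id] += minutes
    return dict(totals)

# Hypothetical log records: (participant ID, day, minutes played)
records = [
    ("P01", "day 1", 30),
    ("P01", "day 3", 35),
    ("P02", "day 1", 20),
]
totals = total_minutes_by_participant(records)
payments = {pid: compensation_usd(m) for pid, m in totals.items()}
```

Under these assumptions the $200 cap is reached after 40 completed blocks, that is, 20 hours of play.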

Eye tracking data

Eye tracking data will be collected using a Tobii X2-60 eye tracker, which has a sampling rate of 60 Hz and approximate accuracy of 0.4° and precision of 0.34°. This eye tracker allows for bright and dark pupil eye tracking and small head movements, maximising comfort during testing (ie, no chin rest required). A nine-point automatic calibration procedure will be employed prior to each task to customise and accurately estimate gaze point calculations. To reduce fatigue and restlessness, we will incorporate multiple breaks in the eye tracking protocol for participants. To acclimate participants to the testing room and eye tracking equipment, we will include a 10 min warm-up procedure and provide participants with an overview of the schedule of testing events. Based on pilot data collection with TD children, we estimate that the entire procedure for eye tracking will last approximately 70–90 min. The fidelity of the eye tracking data will be assessed through the quality of the calibration procedure. Eye gaze samples in which there is no recordable information from at least one eye on the stimulus will be quantified as missing data. Measurement error of the eye tracking data will be minimised by having the same small number of highly trained researchers collect the data at both the preintervention and postintervention sessions.
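The missing-data rule can be illustrated with a short sketch. It reads the rule as: a sample is missing when neither eye provides recordable information. That reading is an assumption, as are the per-sample validity flags and the function name, which do not correspond to any particular eye tracker software interface.

```python
def missing_proportion(samples):
    """Proportion of samples in which neither eye yields usable data.

    samples: sequence of (left_valid, right_valid) booleans, one pair
    per eye tracking sample (assumed layout).
    """
    samples = list(samples)
    if not samples:
        raise ValueError("no samples recorded")
    missing = sum(1 for left, right in samples if not (left or right))
    return missing / len(samples)

# Hypothetical validity flags for four consecutive samples
flags = [(True, True), (True, False), (False, False), (False, True)]
prop_missing = missing_proportion(flags)
```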

Questionnaire data

Parents complete questionnaires while adolescents are tested in the eye tracking protocol. Adolescents complete the questionnaires following completion of the eye tracking protocol. The SSIS is clinically relevant and is sensitive to changes in social abilities from behavioural interventions in individuals with ASD.51 The SRS-2 is a reliable and valid measure of social impairment and repetitive behaviour as a single quantitative trait52 and also includes multiple questions specifically related to eye gaze behaviours and face-processing abilities. Missing data will be handled in accordance with the standardised SSIS/SRS-2 procedures.

Data management

All analyses will be completed using standard statistical software (eg, R2). Data will be scored and entered into the programme for further preprocessing/data reduction and backed up in multiple locations (ie, lab server and back-up hard drive). Data will be deidentified and stored in password-protected partitions of cloud and lab servers. Only research project investigators and staff approved to work on the project and listed in the Institutional Review Board (IRB) protocol will have access to the identified data.

Prior to statistical analyses, all the data will be investigated for deviations from normality and transformed if necessary, and we will examine and manage statistical outlier data points (>2 SD from the group mean) where appropriate. Following randomisation, and after the preintervention data are collected, we will determine whether the intervention and waitlist control groups differ on any demographic characteristics (eg, age, FSIQ, ADOS total score and online eye gaze screening scores). Variables with reliable differences will be submitted to the subsequent analyses of group differences as covariates.
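The outlier criterion can be sketched as a simple flagging step. This sketch assumes the sample SD is used and that values are only flagged for inspection rather than removed automatically; the function name and example scores are hypothetical.

```python
from statistics import mean, stdev

def flag_outliers(values, n_sd=2.0):
    """Return one boolean per value: True if it lies more than n_sd
    sample standard deviations from the group mean."""
    group_mean = mean(values)
    group_sd = stdev(values)  # sample SD; requires at least two values
    return [abs(v - group_mean) > n_sd * group_sd for v in values]

# Hypothetical scores for one group
scores = [10, 11, 9, 10, 12, 8, 10, 30]
flags = flag_outliers(scores)
```

Because an extreme value inflates both the mean and the SD, this rule is conservative in small samples.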

For the primary study outcomes, we will use linear mixed effects modelling to test the effectiveness of the intervention on outcome measures while accounting for repeated measures (ie, timepoint). Additionally, we plan to model variability in both stimulus items and participants by including them as random factors in our statistical models. For each dependent variable, we will fit a model with group (intervention and control) and timepoint (preintervention and postintervention) as fixed factors and age, IQ, and ADOS total score as covariates. The amount of missing eye gaze data will also be submitted as a covariate in analyses of intervention effectiveness.
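One possible specification of such a model is written out below as a sketch, not as the definitive analysis. It assumes a continuous outcome, random intercepts only for participants and stimulus items, and a group-by-timepoint interaction as the test of the intervention effect; the interaction term and all symbols are assumptions introduced here for illustration.

```latex
\begin{align*}
y_{ijt} ={}& \beta_0 + \beta_1\,\mathrm{Group}_i + \beta_2\,\mathrm{Time}_t
           + \beta_3\,(\mathrm{Group}_i \times \mathrm{Time}_t) \\
         & + \beta_4\,\mathrm{Age}_i + \beta_5\,\mathrm{IQ}_i
           + \beta_6\,\mathrm{ADOS}_i + \beta_7\,\mathrm{Missing}_{it} \\
         & + u_i + v_j + \varepsilon_{ijt}, \\
u_i \sim{}& \mathcal{N}(0, \sigma_u^2), \qquad
v_j \sim \mathcal{N}(0, \sigma_v^2), \qquad
\varepsilon_{ijt} \sim \mathcal{N}(0, \sigma^2)
\end{align*}
```

Here $y_{ijt}$ is the outcome for participant $i$ on stimulus item $j$ at timepoint $t$, $u_i$ and $v_j$ are the participant and item random intercepts, and $\mathrm{Missing}_{it}$ is the amount of missing eye gaze data. Under this specification, $\beta_3$ captures whether change from preintervention to postintervention differs between groups. A binary outcome such as trial-level accuracy would instead call for a logistic link.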

Study monitoring

A DSMB will be established and will be composed of independent researchers who have expertise complementary to the aims of the project. We will meet with the DSMB prior to enrolling participants in the study and biannually for the duration of the intervention to review the safety and tolerance of the intervention for our participants. Any adverse events will be reported to both the DSMB and the Penn State IRB.

Ethics and dissemination

Results will be disseminated to the scientific community at scientific conferences and in the form of empirical articles in peer-reviewed scientific journals. Results will also be reported to the funding agency (National Institutes of Mental Health) annually and to ClinicalTrials.gov. Participants will be invited to share deidentified data acquired from this study with the National Institutes of Health Data Archive. Finally, we will present summaries of the findings in the form of a newsletter to study participants, and the intervention game will be made available to families in the waitlist control condition after the full data have been collected if analyses indicate that it is effective.

Discussion

This intervention game may have great potential for translation and dissemination. By combining serious game design principles with intervention science, the resulting intervention game has the potential to be highly motivating, scalable to individual skill level, inexpensive, engaging, and accessible by adolescents in their own homes at their own convenience. Although we are enthusiastic about this approach, we do note several limitations of this study. First, we are not able to fully blind the researchers during the postintervention testing session; however, we think the outcome measures are likely to be fairly robust to experimenter bias. Second, our ability to estimate the feasibility of the intervention is potentially influenced by the fact that our participants are compensated for their time. Importantly, given that the intervention game was designed to foster intrinsic motivation, we expect that participants will want to play the game because it is interesting and motivating. Also, in order to be compensated for the full amount that is offered to participants, they have to play 25% more sessions (100) than the maximum we are asking them to play (72) and 75% more than the minimum (24) we are asking them to play. Therefore, given the nature of the intervention game and compensation schedule, we think that the influence of financial compensation in this study will be less of a concern than it might be in other studies.

In the future, we will continue to develop the intervention game with the goal of testing it against an active control game, evaluating if it is effective for improving a broader range of face processing behaviours that are difficult for individuals with ASD (eg, face identity recognition) and improving social skills in ASD. These data, including the generation of effect size estimates, will inform a future confirmatory clinical trial. More generally, these goals represent significant innovation in the design of RCTs for computer-based interventions for autism and may help advance theory and clinical practice.

bmjopen-2018-023682supp002.pdf (168.4KB, pdf)

bmjopen-2018-023682supp003.pdf (94.8KB, pdf)

Supplementary Material

Footnotes

Contributors: KSS, EMW, JMS and CFG secured funding to support this project. KSS, EMW and JMS conceived and designed the study. KSS, EMW, JMS and BJ designed the intervention game. JWG will be responsible for testing participants. DE, JMS, CFG and KSS are responsible for designing the procedures to manage the fidelity of the intervention. JWG and DE will be responsible for managing the data generated during the project. JWG and KSS will conduct the statistical analyses of the data. KSS and JWG drafted the manuscript and all authors reviewed the manuscript for intellectual content and approved the final version.

Funding: This work is supported by National Institutes of Mental Health (R61-MH110624; PI KSS).

Competing interests: EMW was at the Department of Psychology at Pennsylvania State University when she contributed to this work. She is currently working for Daybreak Games in San Diego, California.

Patient consent: Next of kin consent obtained.

Ethics approval: Pennsylvania State University Institutional Review Board (IRB# 00005097).

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. American Psychiatric Association. Diagnostic and statistical manual of mental disorders: DSM-5. Washington, DC: American Psychiatric Association, 2013.

- 2. Chawarska K, Macari S, Shic F. Decreased spontaneous attention to social scenes in 6-month-old infants later diagnosed with autism spectrum disorders. Biol Psychiatry 2013;74:195–203. doi:10.1016/j.biopsych.2012.11.022

- 3. Falck-Ytter T, Bölte S, Gredebäck G. Eye tracking in early autism research. J Neurodev Disord 2013;5:28. doi:10.1186/1866-1955-5-28

- 4. Jones W, Klin A. Attention to eyes is present but in decline in 2-6-month-old infants later diagnosed with autism. Nature 2013;504:427–31. doi:10.1038/nature12715

- 5. Bird G, Press C, Richardson DC. The role of alexithymia in reduced eye-fixation in Autism spectrum conditions. J Autism Dev Disord 2011;41:1556–64. doi:10.1007/s10803-011-1183-3

- 6. Guillon Q, Hadjikhani N, Baduel S, et al. Visual social attention in autism spectrum disorder: insights from eye tracking studies. Neurosci Biobehav Rev 2014;42:279–97. doi:10.1016/j.neubiorev.2014.03.013

- 7. Jarrold W, Mundy P, Gwaltney M, et al. Social attention in a virtual public speaking task in higher functioning children with autism. Autism Res 2013;6:393–410. doi:10.1002/aur.1302

- 8. Papagiannopoulou EA, Chitty KM, Hermens DF, et al. A systematic review and meta-analysis of eye-tracking studies in children with autism spectrum disorders. Soc Neurosci 2014;9:1–23. doi:10.1080/17470919.2014.934966

- 9. Parish-Morris J, Chevallier C, Tonge N, et al. Visual attention to dynamic faces and objects is linked to face processing skills: a combined study of children with autism and controls. Front Psychol 2013;4:185. doi:10.3389/fpsyg.2013.00185

- 10. Bal E, Harden E, Lamb D, et al. Emotion recognition in children with autism spectrum disorders: relations to eye gaze and autonomic state. J Autism Dev Disord 2010;40:358–70. doi:10.1007/s10803-009-0884-3

- 11. Cornew L, Dobkins KR, Akshoomoff N, et al. Atypical social referencing in infant siblings of children with autism spectrum disorders. J Autism Dev Disord 2012;42:2611–21. doi:10.1007/s10803-012-1518-8

- 12. Pickard KE, Ingersoll BR. Brief report: High and low level initiations of joint attention, and response to joint attention: differential relationships with language and imitation. J Autism Dev Disord 2015;45:262–8. doi:10.1007/s10803-014-2193-8

- 13. Freeman SF, Gulsrud A, Kasari C. Brief report: linking early joint attention and play abilities to later reports of friendships for children with ASD. J Autism Dev Disord 2015;45:2259–66. doi:10.1007/s10803-015-2369-x

- 14. Bedford R, Elsabbagh M, Gliga T, et al. Precursors to social and communication difficulties in infants at-risk for autism: gaze following and attentional engagement. J Autism Dev Disord 2012;42:2208–18. doi:10.1007/s10803-012-1450-y

- 15. Falck-Ytter T, Fernell E, Hedvall AL, et al. Gaze performance in children with autism spectrum disorder when observing communicative actions. J Autism Dev Disord 2012;42:2236–45. doi:10.1007/s10803-012-1471-6

- 16. Fletcher-Watson S, Leekam SR, Benson V, et al. Eye-movements reveal attention to social information in autism spectrum disorder. Neuropsychologia 2009;47:248–57. doi:10.1016/j.neuropsychologia.2008.07.016

- 17. Riby DM, Hancock PJ, Jones N, et al. Spontaneous and cued gaze-following in autism and Williams syndrome. J Neurodev Disord 2013;5:13. doi:10.1186/1866-1955-5-13

- 18. Vivanti G, McCormick C, Young GS, et al. Intact and impaired mechanisms of action understanding in autism. Dev Psychol 2011;47:841–56. doi:10.1037/a0023105

- 19. Kasari C, Gulsrud A, Freeman S, et al. Longitudinal follow-up of children with autism receiving targeted interventions on joint attention and play. J Am Acad Child Adolesc Psychiatry 2012;51:487–95. doi:10.1016/j.jaac.2012.02.019

- 20. Gulsrud AC, Hellemann GS, Freeman SF, et al. Two to ten years: developmental trajectories of joint attention in children with ASD who received targeted social communication interventions. Autism Res 2014;7:207–15. doi:10.1002/aur.1360

- 21. Dalton KM, Nacewicz BM, Johnstone T, et al. Gaze fixation and the neural circuitry of face processing in autism. Nat Neurosci 2005;8:519–26. doi:10.1038/nn1421

- 22. Falck-Ytter T, von Hofsten C. How special is social looking in ASD: a review. Prog Brain Res 2011;189:209–22. doi:10.1016/B978-0-444-53884-0.00026-9

- 23. Whyte EM, Behrmann M, Minshew NJ, et al. Animal, but not human, faces engage the distributed face network in adolescents with autism. Dev Sci 2016;19:306–17. doi:10.1111/desc.12305

- 24. Johnson MH. Autism: demise of the innate social orienting hypothesis. Curr Biol 2014;24:R30–R31. doi:10.1016/j.cub.2013.11.021

- 25. Klin A, Jones W, Schultz R, et al. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Arch Gen Psychiatry 2002;59:809–16. doi:10.1001/archpsyc.59.9.809

- 26. Faja S, Webb SJ, Jones E, et al. The effects of face expertise training on the behavioral performance and brain activity of adults with high functioning autism spectrum disorders. J Autism Dev Disord 2012;42:278–93. doi:10.1007/s10803-011-1243-8

- 27. Golan O, Baron-Cohen S. Systemizing empathy: teaching adults with Asperger syndrome or high-functioning autism to recognize complex emotions using interactive multimedia. Dev Psychopathol 2006;18:591–617. doi:10.1017/S0954579406060305

- 28. Tanaka JW, Wolf JM, Klaiman C, et al. Using computerized games to teach face recognition skills to children with autism spectrum disorder: the Let’s Face It! program. J Child Psychol Psychiatry 2010;51:944–52. doi:10.1111/j.1469-7610.2010.02258.x

- 29. Hopkins IM, Gower MW, Perez TA, et al. Avatar assistant: improving social skills in students with an ASD through a computer-based intervention. J Autism Dev Disord 2011;41:1543–55. doi:10.1007/s10803-011-1179-z

- 30. Rice LM, Wall CA, Fogel A, et al. Computer-assisted face processing instruction improves emotion recognition, mentalizing, and social skills in students with ASD. J Autism Dev Disord 2015;45:2176–86. doi:10.1007/s10803-015-2380-2

- 31. Harris H, Israeli D, Minshew N, et al. Perceptual learning in autism: over-specificity and possible remedies. Nat Neurosci 2015;18:1574–6. doi:10.1038/nn.4129

- 32. Whyte EM, Smyth JM, Scherf KS. Designing serious game interventions for individuals with autism. J Autism Dev Disord 2015;45:3820–31. doi:10.1007/s10803-014-2333-1

- 33. Scherf KS, Behrmann M, Dahl RE. Facing changes and changing faces in adolescence: a new model for investigating adolescent-specific interactions between pubertal, brain and behavioral development. Dev Cogn Neurosci 2012;2:199–219. doi:10.1016/j.dcn.2011.07.016

- 34. Garcia NV, Scherf KS. Emerging sensitivity to socially complex expressions: a unique role for adolescence? Child Dev Perspect 2015;9:84–90. doi:10.1111/cdep.12114

- 35. Motta-Mena NV, Scherf KS. Pubertal development shapes perception of complex facial expressions. Dev Sci 2017;20:e12451. doi:10.1111/desc.12451

- 36. Scherf KS, Behrmann M, Minshew N, et al. Atypical development of face and greeble recognition in autism. J Child Psychol Psychiatry 2008;49:838–47. doi:10.1111/j.1469-7610.2008.01903.x

- 37. Scherf KS, Luna B, Minshew N, et al. Location, location, location: Alterations in the functional topography of face- but not object- or place-related cortex in adolescents with autism. Front Hum Neurosci 2010;4:26. doi:10.3389/fnhum.2010.00026

- 38. Scherf KS, Luna B, Kimchi R, et al. Missing the big picture: impaired development of global shape processing in autism. Autism Res 2008;1:114–29. doi:10.1002/aur.17

- 39. Scherf KS, Elbich D, Minshew N, et al. Individual differences in symptom severity and behavior predict neural activation during face processing in adolescents with autism. Neuroimage Clin 2015;7:53–67. doi:10.1016/j.nicl.2014.11.003

- 40. Picci G, Scherf KS. A two-hit model of autism: adolescence as the second hit. Clin Psychol Sci 2015;3:349–71. doi:10.1177/2167702614540646

- 41. Damiano C, Churches O, Ring H, et al. The development of perceptual expertise for faces and objects in autism spectrum conditions. Autism Res 2011;4:297–301. doi:10.1002/aur.205

- 42. Chan AW, Tetzlaff JM, Altman DG, et al. SPIRIT 2013 statement: defining standard protocol items for clinical trials. Ann Intern Med 2013;158:200–7. doi:10.7326/0003-4819-158-3-201302050-00583

- 43. Rutter M, DiLavore PC, Risi S, et al. Autism diagnostic observation schedule: ADOS-2. Los Angeles, CA: Western Psychological Services, 2012.

- 44. Kaufman AS, Kaufman NL. Kaufman Brief Intelligence Test. 2nd ed. Circle Pines, MN: AGS, 2004.

- 45. Carrow-Woolfolk E. OWLS, Oral and written language scales. NCS Pearson Incorporated, 1995.

- 46. Grynszpan O, Weiss PL, Perez-Diaz F, et al. Innovative technology-based interventions for autism spectrum disorders: a meta-analysis. Autism 2014;18:346–61. doi:10.1177/1362361313476767

- 47. Whyte EM, Scherf KS. Gaze following is related to the broader autism phenotype in a sex-specific way: building the case for distinct male and female autism phenotypes. Clin Psychol Sci 2018;6:280–7. doi:10.1177/2167702617738380

- 48. Rice K, Moriuchi JM, Jones W, et al. Parsing heterogeneity in autism spectrum disorders: visual scanning of dynamic social scenes in school-aged children. J Am Acad Child Adolesc Psychiatry 2012;51:238–48. doi:10.1016/j.jaac.2011.12.017

- 49. Gresham FM, Elliott SN. Social Skills Improvement System: Rating Scales. Bloomington, MN: Pearson Assessments, 2008.

- 50. Constantino JN, Gruber CP. Social Responsiveness Scale Manual SRS-2. 2nd ed. Los Angeles, CA: Western Psychological Services, 2012.

- 51. Anagnostou E, Jones N, Huerta M, et al. Measuring social communication behaviors as a treatment endpoint in individuals with autism spectrum disorder. Autism 2015;19. doi:10.1177/1362361314542955

- 52. R Core Team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing, 2018. https://www.R-project.org/
