Abstract

Background:

Apraxia of Speech (AOS) has been associated with deviations in consonantal voice-onset-time (VOT), but studies of vowel acoustics have yielded conflicting results. However, a speech motor planning disorder that is not bound by phonological categories is expected to affect vowel as well as consonant articulations.

Aims:

We measured consonant VOTs and vowel formants produced by a large sample of stroke survivors, and assessed to what extent these variables and their dispersion are predictive of AOS presence and severity, based on a scale that uses clinical observations to rate gradient presence of AOS, aphasia, and dysarthria.

Methods & Procedures:

Picture-description samples were collected from 53 stroke survivors, including unimpaired speakers (12) and speakers with primarily aphasia (19), aphasia with AOS (12), primarily AOS (2), aphasia with dysarthria (2), and aphasia with AOS and dysarthria (6). The first three formants were extracted from vowel tokens bearing main stress in open-class words, as well as VOTs for voiced and voiceless stops. Vowel space was estimated as reflected in the formant centralization ratio. Stepwise Linear Discriminant Analyses were used to predict group membership, and ordinal regression to predict AOS severity, based on the absolute values of these variables, as well as the standard deviations of formants and VOTs within speakers.

Outcomes and Results:

Presence and severity of AOS were most consistently predicted by the dispersion of F1, F2, and voiced-stop VOT. These phonetic-acoustic measures do not correlate with aphasia severity.

Conclusions:

These results confirm that AOS affects articulation across the board and does not selectively spare vowel production.

Keywords: Apraxia of Speech, Aphasia, Vowel Formants, Speech Acoustics, Voice Onset Time

Introduction

Characterised by speech sound distortions, distorted substitutions, reduced speech rate, and dysprosody, apraxia of speech (AOS) is considered to be a disruption of speech motor planning (Ballard, Granier, & Robin, 2000; Haley, Jacks, de Riesthal, Abou-Khalil, & Roth, 2012; Maas, Gutierrez, & Ballard, 2014; McNeil, Doyle, & Wambaugh, 2000; Ogar, Slama, Dronkers, Amici, & Gorno-Tempini, 2005; Rosenbek, Kent, & LaPointe, 1984; Strand, Duffy, Clark, & Josephs, 2014; Ziegler, 2002). However, comorbidity of AOS with aphasia means that most speakers with AOS produce a mix of errors generated at different functional levels of production planning (Code, 1998; Den Ouden, 2011; Ziegler, Aichert, & Staiger, 2012).

Speakers with AOS commonly produce speech errors that might be confused with errors arising from problems with phonological encoding, for instance in the case of literal paraphasias for which it is challenging to distinguish articulatory distortions that happen to cross a category boundary from actual phonemic substitutions generated at a phonological planning level. Likewise, some articulatory distortions go undetected, because of listeners’ categorical perception, and other articulatory distortions may result from incomplete pre-articulatory self-corrections of phonemic substitutions. This impedes the objective assessment of the relative severity of the impairments (Kent & Kim, 2003). Therefore, in order to characterize the nature of the AOS deficit more objectively, as well as to aid in diagnostic differentiation from aphasic phonological output problems, a wide range of studies have used acoustic measurements to quantify features of disordered speech output. Indeed, articulatory and acoustic studies have revealed correlations between the presence of AOS and consonant production accuracy. With respect to vowel articulation and acoustics, however, study results are more equivocal, with some studies reporting no systematic relation between vowel productions and AOS (Galluzzi, Bureca, Guariglia, & Romani, 2015; Jacks, Mathes, & Marquardt, 2010).

Multiple studies have compared speakers with AOS and aphasia by focusing on the acoustic analysis of speech production. For example, a substantial number of studies have considered measurements of Voice Onset Time (VOT), the time between release of the articulatory constriction and the onset of vocal fold vibration during plosive consonant production (Auzou et al., 2000). These studies have generally shown VOT to be a fairly good predictor of AOS, indicative of timing problems between laryngeal and supralaryngeal motor control (e.g., Blumstein, Cooper, Goodglass, Statlender, & Gottlieb, 1980; Gandour & Dardarananda, 1984; Itoh et al., 1982; Kent & Rosenbek, 1983; Mauszycki, Dromey, & Wambaugh, 2007; Seddoh et al., 1996; Whiteside, Robson, Windsor, & Varley, 2012). In earlier studies, absolute VOT values outside of the language-specific ranges for voiced versus voiceless stops would commonly be interpreted as phonetic distortions, reflecting an articulatory disorder, whereas VOT distortions that remained within phonemic ranges would be interpreted as reflecting categorical phonemic selection errors (Auzou, et al., 2000). Since this approach based on absolute VOT values does not fully take into account the gradient nature of VOT distortion, within-subject variance of VOT productions may be a better measure of unstable articulatory motor planning (Mauszycki, et al., 2007; Seddoh, et al., 1996; Whiteside, et al., 2012).

Fewer instrumental studies have focused on vowel articulation, and their results have been less straightforward. Vowel duration has been noted to be longer in AOS (Maas, Mailend, & Guenther, 2015; Seddoh, et al., 1996; Vergis et al., 2014), but absolute segment duration is also a function of speech and articulation rate and not necessarily a measure of articulatory motor control (see Haley & Overton, 2001). Other acoustic measures may be more sensitive to articulatory impairment. Vowels are acoustically differentiated based on their formants, frequency peaks in the sound spectrum, which are a function of the shape of the speaker’s supralaryngeal articulatory space. Both the absolute values of these formants and their relative distance from one another determine the vowel that is categorically perceived by the listener. While some studies looking at vowel formants suggest particular articulatory problems with vowels in AOS (Jacks, 2008; Kent & Rosenbek, 1983; Maas, et al., 2015), others have not yielded significant differences between AOS and aphasic or unimpaired speech (Haley, Ohde, & Wertz, 2001; Jacks, et al., 2010; Ryalls, 1986).

Jacks et al. (2010) investigated whether vowel production is more variable in adults with apraxia compared to unimpaired speakers. Elicited data were repetitions of English vowels in a monosyllabic /hVC/ context by speakers with AOS, and these were compared to unimpaired speech data from existing databases. Focusing on the first two formants for each vowel production, Jacks et al. compared absolute average vowel formant values, Euclidean distance (the distance between vowels in a standardised vowel space), and within-subject formant variability between apraxic and unimpaired speakers. In line with their expectations, they found that absolute means of vowel formants as well as Euclidean distances were similar between unimpaired speakers and participants with AOS, confirming at the very least that there are no unidirectional consistent patterns in vowel articulation changes in AOS. Contrary to predictions, however, within-subject formant variability was also not found to differ between speakers with AOS and unimpaired speakers. Jacks et al. (2010) concluded that production of monophthongal vowels in words was stable, i.e., unimpaired, in their participants with AOS. Yet, they also allowed for possible limitations; the study only investigated monophthongs in short words in a small sample of speakers with AOS (n=7). Furthermore, we note that stimuli were repetitively elicited, which may have led to normalization and greater consistency within vowel productions compared to what might be found in spontaneous speech.

Haley et al. (2001) did not find systematic differences in vowel formant dispersion or absolute formant values between speakers with and without AOS (and aphasia) either, although they did note some deviant patterns in individual speakers. In this study, too, the speech samples analysed were from targeted elicitations in which the words ‘hid’ and ‘head’ were produced in the carrier phrase ‘The word ____’. Earlier, Ryalls (1986), using a similar single-word elicitation task, also had not found significant differences in formant dispersion nor absolute formant values between speakers with ‘anterior aphasia’, ‘posterior aphasia’ and a group of unimpaired speakers. Numerically, though, formant dispersion appeared to be greater in both groups of aphasic speakers, compared to the control speakers.

A recent study by Basilakos et al. (in press) used a multivariate analysis to assess the predictive strength of a number of acoustic measures on the classification of AOS in a sample of 57 stroke survivors with and without AOS and Aphasia. AOS classification accuracy was greater than 95%, with the strongest predictors being speech signal amplitude modulations in frequency ranges that are associated with fast articulatory transitions (16–32 Hz) and the prosodic contour of connected speech (1–2 Hz), whereas the predictive value of the range that reflects regular syllable-size transitions (4–8 Hz) was much lower. The latter range would likely be most sensitive to inconsistent vowel productions, so these results again suggest vowels are relatively stable in AOS. In the same study, however, VOT variability was not shown to be a predictor of AOS either, when entered into a model together with the other acoustic variables. This study used a wide range of acoustic variables, to optimize the differential diagnosis of AOS versus aphasia without AOS, but it did not include vowel formant measures.

If AOS is essentially a generalised speech motor planning deficit, rather than a phonological access or encoding problem, there is no straightforward reason why vowel articulations should be categorically spared. Consonants are articulatorily more complex than vowels, so they are more susceptible to distortions (Galluzzi, et al., 2015), but that is merely a matter of degree. Articulatory vowel distortions may be more subtle and less likely to cross phoneme boundaries, but should still be reflected in the acoustic signal.

Given variable results of experiments on vowel production in aphasia and AOS, the present study focused on the extent to which AOS is reflected in increased vowel formant dispersion. We hypothesised that if AOS is an articulatory (motor) planning deficit, this should also be reflected systematically in increased variance in vowel formants, which rely on subtle temporal and positional interactions between articulators, and a stable vowel space. With respect to the latter, we also explored whether the size of an individual’s vowel space might be affected by AOS and/or dysarthria, in that the deficit might cause all articulations to be generally more ‘centred’ and less enunciated. Contracted vowel space has indeed been observed in speakers with dysarthria, though not systematically in AOS (Fletcher, McAuliffe, Lansford, & Liss, 2017; Kent & Kim, 2003). Kent and Rosenbek (1983), however, do draw attention to an individual speaker with AOS who shows “centralisation” (242), i.e., a lack of differentiation between vowels and a tendency to produce a central diphthong in place of other vowels.

We measured formants in vowels produced during spontaneous speech by stroke survivors with and without AOS in a subsample of the data analysed by Basilakos et al. (in press), and assessed to what extent vowel formant characteristics, compared to consonant VOT characteristics, were predictive of aphasia, AOS and dysarthria, as well as AOS severity, based on the Apraxia of Speech Rating Scale (ASRS; Strand, et al., 2014). Note that the study by Basilakos et al. (in press) had a different objective, namely to derive a maximally predictive model, based on acoustic variables, to optimize the objective classification of AOS. Basilakos et al. did not include the vowel formant measures that are the topic of the current study, partly because these did not show up in the existing literature as predictive of AOS, as noted above. By contrast, we suggest that articulatory instability for vowels and consonants should be reflected in formant dispersion and VOT dispersion, respectively.

Our focus in the current study was on the status of vowel versus consonant acoustics in AOS. However, our sample included a small number of stroke survivors clinically diagnosed as having dysarthria (concomitant with either aphasia and/or AOS), so we extended our analysis to explore the predictive value of these same acoustic variables for that classification as well, as a secondary and exploratory goal.

Methods

Participants

Stroke survivors (N=53; 20 female, 33 male; mean age=61 years, range 32–82) were recruited as part of a larger study at the University of South Carolina. The original study included 57 participants, but data from 4 participants were excluded from the analysis, due to severe difficulties with vowel identification and delimitation in the speech samples. Most participants were characterised as aphasic (39) and classified by type according to the Western Aphasia Battery-Revised (WAB-R; Kertesz, 2007), supplemented with clinical judgment by two certified speech-language pathologists: anomic (13), Broca’s (15), conduction (7), Wernicke’s (2), global (1), combination of Wernicke’s and conduction (1). Fourteen participants were not classified as having aphasia based on their performance on the WAB-R (AQ > 93.8). Severity of aphasia was rated on the WAB-R Aphasia Quotient (AQ) scale, with a mean of 68.7 (range 20.1–93.4) for the speakers classified as having aphasia (mean 76.4, range 20.1–99.6 for the full sample). Presence of AOS and dysarthria was established with the ASRS (Strand et al., 2014), which presents deficit-specific characteristics (16 ‘primary distinguishing features’ per deficit type) that are each rated for presence and severity on a 5-point scale. According to ASRS criteria, for a diagnosis of AOS, a patient must have at least one primary distinguishing AOS feature, and an overall score greater than or equal to 8 on these features is most reliably associated with AOS. Inter-rater reliability of the ASRS scores was established using a two-way mixed consistency single-measures intraclass correlation coefficient (ICC) between two raters. Speech samples from six individuals were randomly selected and the secondary rater was blind to the primary rater’s scores. The ICC for the sum of all ASRS ratings was 0.90, which is considered “good” to “excellent” (Cicchetti, 1994).
For the intra-rater reliability, one rater (AB) classified 6 samples twice, also yielding an ICC of 0.90. Based on these item-specific scores and clinical judgement of the overall effect of these production difficulties on each patient’s communication abilities, we rated the presence and severity of aphasic phonological output symptoms, dysarthria and AOS on a 5-point scale with 0 being least severe (absence of symptoms) and 4 being most severe (see also Basilakos et al., 2015). AOS severity in the sample varied with 33 speakers having score 0 (no AOS), 5 speakers with score 1, 4 speakers with score 2, 6 speakers with score 3, and 5 speakers with score 4 (most severe).
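As a rough illustration of the reliability statistic named above, the sketch below computes a two-way, consistency-type, single-measures ICC (often labelled ICC(3,1)) from a samples-by-raters matrix. The function name and the example ratings are hypothetical; they are not the study's data, and the original analysis may have been run in a statistics package with slightly different options.

```python
def icc_consistency_single(ratings):
    """Two-way consistency, single-measures ICC (ICC(3,1)).

    ratings: list of rows, one row per rated sample, one column per rater.
    """
    n = len(ratings)       # number of rated samples
    k = len(ratings[0])    # number of raters
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Partition the total sum of squares into samples, raters, and residual.
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)


# Hypothetical summed ASRS scores for six samples and two raters (not study data).
example = [[4, 5], [12, 11], [20, 22], [0, 1], [9, 9], [15, 13]]
print(round(icc_consistency_single(example), 3))
```

Because this is the consistency form, a constant offset between raters does not lower the coefficient; only disagreement in the ordering and spacing of the scores does.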

Within the participant sample, subjects were grouped based on impairment, the prevalence of one impairment, or the combination of disorders: unimpaired (12), primarily aphasia (19), aphasia with AOS (12), primarily AOS (2), aphasia with dysarthria (2), aphasia with AOS and dysarthria (6). Table 1 shows the gender and age distribution between these groups. Gender distribution was not equal (Chi2(5)=11.186; p<.05, with Yates’ correction), which is a minor concern that we address as a limitation below. Age did not differ significantly between the groups (Kruskal-Wallis, Chi2(5)=6.817, p=.235).

Table 1.

Gender and age distribution in the six classification groups

| Group (n) | Female | Male | Mean Age (range) |
|---|---|---|---|
| Unimpaired (12) | 8 | 4 | 64.4 (39–82) |
| Primarily Aphasia (19) | 4 | 15 | 61.4 (45–80) |
| Aphasia + AOS (12) | 4 | 8 | 60.8 (46–77) |
| Primarily AOS (2) | 2 | 0 | 45 (44–46) |
| Aphasia + Dysarthria (2) | 1 | 1 | 56.5 (56–57) |
| Aphasia + AOS + Dysarthria (6) | 1 | 5 | 59.7 (41–69) |
| Total | 20 | 33 | 60.9 (39–82) |
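The gender-by-group test can be retraced from the counts in Table 1. The sketch below computes a plain Pearson chi-square statistic (no continuity correction) for a groups-by-gender table; the function name is ours, and the p-value is left out because the sketch uses only the standard library.

```python
# Female and male counts per group, copied from Table 1.
counts = {
    "Unimpaired": (8, 4),
    "Primarily Aphasia": (4, 15),
    "Aphasia + AOS": (4, 8),
    "Primarily AOS": (2, 0),
    "Aphasia + Dysarthria": (1, 1),
    "Aphasia + AOS + Dysarthria": (1, 5),
}


def pearson_chi_square(table):
    """Return (chi-square statistic, degrees of freedom) for a contingency table."""
    rows = list(table.values())
    n_cols = len(rows[0])
    col_totals = [sum(row[j] for row in rows) for j in range(n_cols)]
    grand = sum(col_totals)
    chi2 = 0.0
    for row in rows:
        row_total = sum(row)
        for j, observed in enumerate(row):
            expected = row_total * col_totals[j] / grand
            chi2 += (observed - expected) ** 2 / expected
    df = (len(rows) - 1) * (n_cols - 1)
    return chi2, df


chi2, df = pearson_chi_square(counts)
# The critical value for df=5 at alpha=.05 is about 11.07.
print(f"chi2({df}) = {chi2:.3f}")  # chi2(5) = 11.186
```

Several expected cell counts in this table are well below 5, so the statistic should be read with the usual caution for sparse tables.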

Data collection and extraction of variables

Picture-description speech samples from the participants were used to measure speech production deficits during connected speech. The speech samples were elicited with the “Cookie Theft” picture (BDAE; Goodglass, Kaplan, & Barresi, 2000), the “Circus” picture (ABA-2; Dabul, 2000), and the “Picnic” picture (WAB-R; Kertesz, 2007). Speech samples were manually organised into sentences, words, consonants, and vowels, labelled on separate tiers in Praat phonetic analysis software (Boersma, 2001). Voice Onset Time (VOT) data were extracted for word-initial plosives in voiced and voiceless consonants separately, by marking the interval from the beginning of the burst release to the onset of voicing (see Basilakos, et al., in press; Basilakos, 2016). For the present study, vowel boundaries were identified manually, based on the visible formant structure (Ordin & Polyanskaya, 2015; Peterson & Lehiste, 1960). Vowel onset boundaries were placed at the onset of visible voicing. In cases of glottalisation (slowed vocal fold vibration), the onset boundary was placed at the point where the vocal fold vibration became faster and more regular. In cases where a vowel followed a stop or a fricative, the onset was placed at the beginning of the visible F2. Vowel offset boundaries were identified by termination of the formant structure or an abrupt change in amplitude that preceded the onset of a consonant. The most reliable way to determine the offset was to move the cursor to different spots and listen: once only aspiration was heard, the offset was marked. If a vowel boundary could not be identified because of external noise, that individual vowel token was excluded. Identified vowels were then labelled using the SAMPA transcription convention (Wells, 1997). For the present study, all vowel identifications and boundary markings were performed by one of the authors (EG).
Reliability was assessed by having another author (DdO) perform the same task on a subset of the data (100 trials) and assessing whether the outcome measures extracted from the resulting tokens, F1, F2 and F3, differed significantly. No differences approached significance, showing that the vowel boundaries were consistently marked and/or that the extraction of formants at the vowel midpoint is robust to slight boundary inconsistencies.

A dedicated Praat script (Lennes, 2003) was used to extract first (F1), second (F2), and third (F3) formants from the midpoint of stressed monophthongs and diphthongs in content words (total tokens identified: 10146). Due to the inherent formant variance in diphthongs, only monophthongs bearing primary stress were included in the final analysis: /ɑ, æ, ɛ, i, ɪ, ɒ, ɔ, ʊ, ʌ, u, ɜ/ (total tokens: 7171). To reduce participant-specific variance (Adank, Smits, & van Hout, 2004), formants were normalised to the Bark scale using the algorithm described by Traunmüller (1990). We applied vowel-intrinsic normalisation, rather than a vowel-extrinsic method as proposed by Nearey (1978) or Lobanov (1971), because the latter rely on the integrity of the full vowel inventory and not all our samples contained a ‘full set’ of vowels. As a measure of individual vowel formant dispersion, standard deviations around the means were computed for normalised F1, F2 and F3 for all vowels separately, and then averaged for each individual.
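The normalisation and dispersion steps can be sketched as follows. The conversion uses the commonly cited Traunmüller (1990) approximation without its low- and high-frequency end corrections. The function names, the use of the sample standard deviation (n−1 denominator), and the example tokens are assumptions made for illustration, not details confirmed by the study.

```python
from statistics import mean, stdev


def hz_to_bark(f_hz):
    """Traunmüller (1990) Hz-to-Bark approximation (end corrections omitted)."""
    return 26.81 * f_hz / (1960.0 + f_hz) - 0.53


def speaker_dispersion(tokens_by_vowel):
    """Mean of the per-vowel standard deviations of Bark-converted values.

    tokens_by_vowel maps a vowel label to one speaker's values (in Hz) for a
    single formant. Vowels with fewer than two tokens are skipped, because a
    standard deviation is undefined for them.
    """
    per_vowel_sd = [
        stdev([hz_to_bark(f) for f in values])
        for values in tokens_by_vowel.values()
        if len(values) >= 2
    ]
    return mean(per_vowel_sd)


# Hypothetical F1 tokens (Hz) for one speaker, keyed by SAMPA-style labels.
f1_tokens = {"i": [290, 310, 335], "A": [690, 720, 760, 705], "u": [320]}
print(round(speaker_dispersion(f1_tokens), 3))
```

Averaging per-vowel standard deviations, rather than pooling all tokens, keeps differences between vowel categories from inflating the dispersion estimate.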

Individual vowel space was estimated as a formant centralisation ratio (FCR), based on the first two formants of three vowels, /ɑ, i, u/, using the method described in Sapir et al. (2010; see also Karlsson & van Doorn, 2012), given in (1). Note that the relation between vowel space and formant centralisation is inverse, so a higher FCR (increased centralisation) indicates a reduced vowel space:

$$\mathrm{FCR} = \frac{F2_{\text{u}} + F2_{\text{ɑ}} + F1_{\text{i}} + F1_{\text{u}}}{F2_{\text{i}} + F1_{\text{ɑ}}} \tag{1}$$
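As a small worked example, the function below computes the formant centralisation ratio as it is commonly defined following Sapir et al., FCR = (F2u + F2ɑ + F1i + F1u) / (F2i + F1ɑ). The formant values are illustrative numbers for a hypothetical speaker, and the source does not state whether raw or Bark-converted means were entered, so the choice of Hz here is an assumption.

```python
def formant_centralisation_ratio(f1, f2):
    """FCR = (F2u + F2a + F1i + F1u) / (F2i + F1a).

    f1 and f2 map the corner vowels "i", "a", "u" to a speaker's mean formants.
    """
    return (f2["u"] + f2["a"] + f1["i"] + f1["u"]) / (f2["i"] + f1["a"])


# Illustrative mean formants in Hz for a hypothetical speaker (not study data).
f1 = {"i": 270, "a": 730, "u": 300}
f2 = {"i": 2290, "a": 1090, "u": 870}
print(round(formant_centralisation_ratio(f1, f2), 3))  # 0.838

# If all three vowels collapsed onto one central point (500 Hz, 1500 Hz),
# the ratio would rise to 2.0, which is why higher values mean a smaller space.
central_f1 = {"i": 500, "a": 500, "u": 500}
central_f2 = {"i": 1500, "a": 1500, "u": 1500}
print(formant_centralisation_ratio(central_f1, central_f2))  # 2.0
```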

The Discriminant and Ordinal Regression analyses assume normality of the independent variables, but this assumption was violated by Voiced-stop VOT, Voiced-stop VOT SD, Voiceless-stop VOT, Voiceless-stop VOT SD, and F3 SD (Shapiro-Wilk tests, p<.05), while F1 SD and F2 SD were close to violating the assumption of normality (Shapiro-Wilk, p=.051 and .057, respectively). After Lognormal (Ln) transformation of these variables, only Voiceless-stop VOT SD still violated the assumption of normality (Shapiro-Wilk test, p=.002; all other variables: p>.225). All variables were converted to z-scores, to remove scale differences. To summarise, the variables entered into the analyses were Voiced-stop VOT(Ln), Voiceless-stop VOT(Ln), Voiced-stop VOT SD(Ln), Voiceless-stop VOT SD(Ln), F1(Bark), F2(Bark), F3(Bark), F1 SD(Bark, Ln), F2 SD(Bark, Ln), F3 SD(Bark, Ln), and FCR. Participant-specific variables entered were Age, WAB-R-AQ, ASRS-aphasia-severity, ASRS-AOS-severity, and ASRS-dysarthria-severity.
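The transformation steps described above (natural-log transform followed by conversion to z-scores) can be sketched in a few lines. The function name and the example values are hypothetical, and the sample standard deviation is assumed for the z-scores.

```python
import math
from statistics import mean, stdev


def ln_then_z(values):
    """Natural-log transform a positive-valued variable, then z-score it."""
    logged = [math.log(v) for v in values]
    m, s = mean(logged), stdev(logged)
    return [(x - m) / s for x in logged]


# Hypothetical per-speaker VOT standard deviations in ms (right-skewed).
vot_sd_ms = [6.0, 7.5, 8.0, 9.5, 12.0, 30.0]
z_scores = ln_then_z(vot_sd_ms)
print([round(z, 2) for z in z_scores])
```

The log step pulls in the long right tail before standardising, so a single extreme speaker has less leverage on the resulting z-scores.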

Analyses

We first conducted a multiple-correlation analysis, which we report as it may be of interest for further studies, as well as relevant to the interpretation of our subsequent analyses. WAB-R-AQ, ASRS-aphasia, ASRS-AOS, and ASRS-dysarthria had non-normal distributions that could not be improved with log-transformations, so we used Spearman’s rho to assess all correlations. Given the exploratory nature of this initial test, we assessed the two-tailed significance of these correlations without correction for multiple comparisons, though correlations that survive Bonferroni correction (p<.0004) are marked in the results (Table 2). As the gender distribution was not equal between the classification groups, we also assessed the independent effect of gender on our outcome measures, through independent sample t-tests, except for Voiceless-stop VOT SD, which was assessed with a Mann-Whitney U-test (because of its non-normal distribution). Gender effects were not of specific interest in light of our research question here, but are important to note where they may affect our outcomes.
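To make the correlation step concrete, the sketch below computes Spearman's rho as a Pearson correlation on average ranks and derives the Bonferroni-corrected threshold for all pairwise correlations among 16 variables. The function names and example data are ours; a statistics package would normally be used for the p-values.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / sqrt(var_x * var_y)


def bonferroni_alpha(n_variables, alpha=0.05):
    """Per-test alpha when every pair among n_variables is tested."""
    n_pairs = n_variables * (n_variables - 1) // 2
    return alpha / n_pairs


# Hypothetical AOS severity ratings (0-4) and F1 SD values for eight speakers.
severity = [0, 0, 1, 2, 2, 3, 4, 4]
f1_sd = [0.41, 0.52, 0.48, 0.60, 0.55, 0.71, 0.69, 0.80]
print(round(spearman_rho(severity, f1_sd), 3))
print(bonferroni_alpha(16))  # 0.05 / 120, roughly 0.0004
```

With 16 variables there are 120 distinct pairs, which is where the corrected threshold of about .0004 comes from.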

Table 2.

Spearman’s rho correlation coefficients between age, aphasia severity, apraxia of speech severity, dysarthria severity, and acoustic consonant and vowel characteristics (n=53).

| | | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Age | 1 | | | | | | | | | | | | | | | |
| 2 | WAB-R AQ | .151 | 1 | | | | | | | | | | | | | | |
| 3 | ASRS: Aphasia | −.166 | −.889*** | 1 | | | | | | | | | | | | | |
| 4 | ASRS: Apraxia | −.120 | −.447** | .367** | 1 | | | | | | | | | | | | |
| 5 | ASRS: Dysarthria | −.080 | −.149 | .043 | .413** | 1 | | | | | | | | | | | |
| 6 | Voiced VOT (Ln) | −.080 | −.001 | .042 | .119 | −.014 | 1 | | | | | | | | | | |
| 7 | Voiced VOT SD (Ln) | −.109 | −.046 | .010 | .302* | .146 | .483*** | 1 | | | | | | | | | |
| 8 | Voiceless VOT (Ln) | −.084 | −.126 | .267 | .034 | −.344* | .185 | .202 | 1 | | | | | | | | |
| 9 | Voiceless VOT SD (Ln) | .017 | −.256 | .300* | .239 | −.001 | .117 | .146 | .499*** | 1 | | | | | | | |
| 10 | F1 (Bark) | −.131 | −.053 | .043 | .321* | .065 | −.255 | −.110 | .064 | .154 | 1 | | | | | | |
| 11 | F1 SD (Bark) | −.305* | −.150 | .245 | .410** | .090 | .087 | .016 | .113 | .098 | .577*** | 1 | | | | | |
| 12 | F2 (Bark) | −.169 | −.084 | .088 | .193 | .021 | .233 | −.061 | −.074 | −.046 | .381** | .538*** | 1 | | | | |
| 13 | F2 SD (Bark) | −.035 | −.210 | .168 | .306* | .082 | −.276* | .112 | .205 | .198 | .126 | .001 | −.452** | 1 | | | |
| 14 | F3 (Bark) | −.275* | −.189 | .177 | .250 | −.042 | .319* | .189 | .186 | .046 | .200 | .317* | .549*** | −.066 | 1 | | |
| 15 | F3 SD (Bark) | −.081 | .090 | −.100 | .227 | .097 | −.190 | .075 | −.011 | −.008 | .357** | .077 | −.147 | .301* | −.277* | 1 | |
| 16 | FCR | −.070 | −.118 | .108 | −.066 | −.073 | .286* | .036 | −.018 | .071 | −.046 | −.156 | .276* | −.523*** | .199 | −.076 | 1 |

\* = significant at the 0.05 level (2-tailed, uncorrected);

** = significant at the 0.01 level (2-tailed, uncorrected);

*** = significant at the 0.0004 level (2-tailed, p<.05 with Bonferroni correction);

Abbreviations: WAB-R AQ = Western Aphasia Battery-Revised Aphasia Quotient; ASRS = Apraxia of Speech Rating Scale; VOT = Voice Onset Time; Ln = Lognormal transformed; FCR = Formant Centralisation Ratio

To assess the extent to which our variables predict group membership, we conducted Stepwise Linear Discriminant Analyses (LDA, SPSS v. 24), with leave-one-out cross-validation. Variables were entered when they minimised Wilks’ lambda at an F value with p<.05, and removed at p>.10. We conducted multiple LDAs, so as to approach the predictive values of our consonant and vowel acoustics from different angles, thus zeroing in on the variables that are most consistently associated with AOS. The first LDA was for a three-way grouping, between (1) speakers with AOS with or without aphasia or dysarthria (n=20), (2) speakers without AOS, but with aphasia with or without dysarthria (n=21) and (3) stroke survivors without speech or language impairment (n=12). The second LDA was binary and compared only speakers with AOS (with or without aphasia and dysarthria, n=20) versus speakers with aphasia (without AOS, but with possible dysarthria, n=21). The third LDA was also binary, comparing speakers with AOS (n=20) to speakers with conduction aphasia (who have a phonological output problem, n=8). We then conducted three other binary LDAs, on aphasia (with or without AOS, n=39) compared to speakers without aphasia (n=14), AOS (with or without aphasia, n=20) compared to speakers without AOS (n=33), and dysarthria (with or without some level of AOS, all dysarthric speakers also had aphasia, n=8) compared to speakers without dysarthria (n=45). For any variables that were part of a successfully predictive model, we conducted follow-up t-tests, with Bonferroni correction where relevant, to evaluate group differences.
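The leave-one-out logic behind the cross-validated classification rates can be illustrated with a deliberately small sketch: a two-class linear discriminant on two predictors, written with the standard library only. It assumes equal prior probabilities and a pooled covariance matrix, and it does not implement the stepwise Wilks' lambda selection performed in SPSS. All names and data points are hypothetical.

```python
from statistics import mean


def fit_lda(X, y):
    """Fit a two-class linear discriminant on 2-D points (pooled covariance)."""
    labels = sorted(set(y))
    groups = {c: [p for p, lab in zip(X, y) if lab == c] for c in labels}
    means = {c: (mean([p[0] for p in g]), mean([p[1] for p in g]))
             for c, g in groups.items()}
    sxx = sxy = syy = 0.0
    for c, g in groups.items():
        mx, my = means[c]
        for px, py in g:
            sxx += (px - mx) ** 2
            sxy += (px - mx) * (py - my)
            syy += (py - my) ** 2
    dof = len(X) - len(labels)
    sxx, sxy, syy = sxx / dof, sxy / dof, syy / dof
    det = sxx * syy - sxy ** 2
    inverse = ((syy / det, -sxy / det), (-sxy / det, sxx / det))
    return means, inverse


def predict(model, point):
    """Assign the class with the highest linear discriminant score (equal priors)."""
    means, inv = model

    def score(m):
        # x' S^-1 m - 0.5 * m' S^-1 m
        sm = (inv[0][0] * m[0] + inv[0][1] * m[1],
              inv[1][0] * m[0] + inv[1][1] * m[1])
        return point[0] * sm[0] + point[1] * sm[1] - 0.5 * (m[0] * sm[0] + m[1] * sm[1])

    return max(means, key=lambda c: score(means[c]))


def leave_one_out_accuracy(X, y):
    """Refit the model n times, each time classifying the single held-out case."""
    hits = 0
    for i in range(len(X)):
        model = fit_lda(X[:i] + X[i + 1:], y[:i] + y[i + 1:])
        hits += predict(model, X[i]) == y[i]
    return hits / len(X)


# Hypothetical z-scored (Voiced-VOT SD, F1 SD) pairs; not study data.
no_aos = [(-0.8, -0.5), (-0.3, -1.0), (-1.1, 0.2), (0.1, -0.4), (-0.6, -0.9)]
aos = [(0.9, 0.7), (0.4, 1.2), (1.3, 0.1), (0.2, 0.8), (1.0, 1.1)]
X = no_aos + aos
y = ["no AOS"] * len(no_aos) + ["AOS"] * len(aos)
print(leave_one_out_accuracy(X, y))
```

Because each case is classified by a model that never saw it, the leave-one-out rate is typically lower than the rate obtained when the model is fitted and tested on the same cases, which matches the pattern in the results reported here.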

Finally, we used Ordinal Regression (SPSS v. 24) to assess the extent to which our variables predict AOS severity, as quantified with the ASRS. Model fit for the full model was compared against the fit for the intercept-only model with a Likelihood Ratio (LR) Chi-Square test. The significance of the contribution of individual predictors, based on their parameter estimates, was assessed with Wald Chi-square tests.

Results

Correlations

Table 2 shows the multiple correlation results. Age did not correlate with aphasia, AOS, or dysarthria severity, but did correlate negatively with F3 and with F1 SD. Aphasia severity as captured by the WAB-R AQ was correlated with AOS severity (the correlation is negative, as a lower score on the WAB-R AQ indicates more severe aphasia), but not with any of the acoustic measures. The ASRS aphasia rating, however, was also correlated with Voiceless-VOT SD (as well as highly correlated with WAB-R AQ and AOS severity). AOS severity further correlated with Voiced-VOT SD, mean F1, F1 SD, and F2 SD. Dysarthria severity was negatively correlated with Voiceless VOT. Formant centralisation (FCR) correlated negatively with F2 SD and positively with Voiced VOT. Voiced VOT also correlated negatively with F2 SD, which was in turn correlated with F3 SD. F1 correlated with F2 and with F3 SD. F2 correlated further with F3 and with F1 SD. F3 correlated further with Voiced-VOT, as well as with F1 SD. Furthermore, it must be noted that the mean VOTs for both voiced and voiceless consonants correlated with their own standard deviations and this also goes for F1 and its standard deviation and (negatively) for F3 and F2 and their standard deviations.

Gender effects

VOT for voiced consonants was significantly shorter for females (25.6ms) than for males (29.5ms; t(51)=2.137, p<.05). We tested gender effects on formant values both before and after normalisation. The raw values for F1 were trending towards a difference between the genders prior to normalisation (F1 female 540Hz, F1 male 509Hz, t(51)=1.741, p=.088), and this difference became significant after conversion to the Bark scale (t(51)=2.143, p=.037). No other gender differences were close to significant (all p>0.12). Reflecting the unequal distribution between males and females over the unimpaired and aphasic groups, there was a gender difference in WAB-R AQ (females 83.8, males 71.8, U=200, p<.05). However, there was no difference in severity of AOS or dysarthria between the genders (U=321, p=.849; U=304.5, p=.452, respectively).

Stepwise Linear Discriminant Analyses

Because of the non-normal distribution of Voiceless-VOT SD even after Lognormal transformation, we ran all the analyses below both with and without this variable. The results did not differ depending on whether Voiceless-VOT SD was included, so we report only the analyses in which it was included.

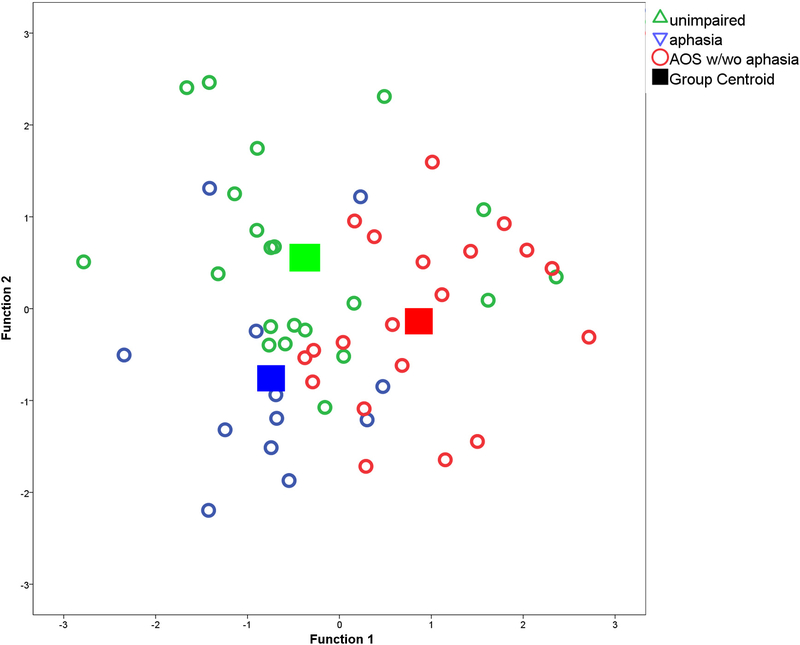

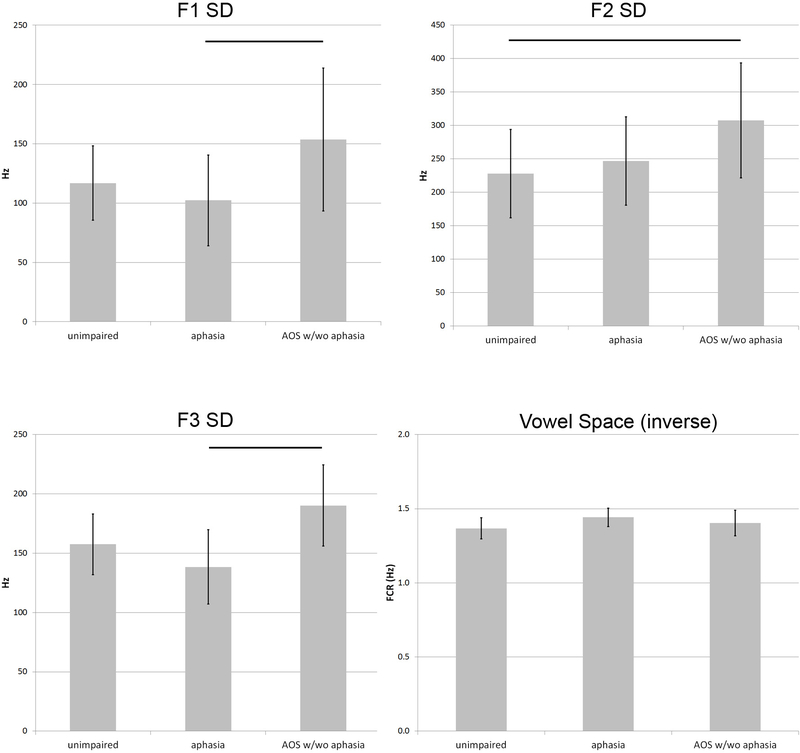

AOS vs. Aphasia-only vs. Unimpaired-speech

Three-way classification success between stroke survivors with AOS (with or without aphasia), aphasia-only, or without speech/language impairment was 60.4% (50.9% cross-validated), when four acoustic measures were entered: F1 SD, F2 SD, F3 SD and FCR. Table 3 provides the cross-validated classification results, and Figure 1 shows how the two functions based on these measures separated the three groups. The bar graphs in Figure 2 show that the mean F1 SD was higher in speakers with AOS than in the other two groups (significantly different between speakers with AOS and speakers with aphasia only: t(30)=2.587; p<.05), as was the mean F2 SD (significantly different between speakers with AOS and unimpaired speakers: t(39)=3.252; p<.05), and the mean F3 SD (significantly different between speakers with AOS and speakers with aphasia only: t(39)=2.341; p<.05). Note that the bar graphs show the raw data values, to facilitate interpretation, whereas the statistical analyses are based on the normalised data. The unimpaired speakers had the lowest FCR and the speakers with aphasia had the highest FCR, but the group differences were not significant for this variable. No other variables improved classification significantly.

Table 3.

Classification results (cross-validated) for the three-way stepwise linear discriminant analysis predicting group-membership of stroke survivors with AOS, aphasia and without speech-language impairment. 50.9% of cross-validated grouped cases were correctly classified.

| Actual group | Predicted: unimpaired (%) | Predicted: aphasia (%) | Predicted: AOS w/wo aphasia (%) | Total |
|---|---|---|---|---|
| unimpaired | 8 (66.7) | 2 (16.7) | 2 (16.7) | 12 |
| aphasia | 9 (42.9) | 8 (38.1) | 4 (19.0) | 21 |
| AOS w/wo aphasia | 6 (30.0) | 3 (15.0) | 11 (55.0) | 20 |
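The cross-validated percentages in Table 3 follow directly from the cell counts. The short sketch below recomputes the overall and per-group classification rates; the variable and function names are ours.

```python
# Rows: actual group. Columns: predicted unimpaired, aphasia, AOS (from Table 3).
confusion = {
    "unimpaired": [8, 2, 2],
    "aphasia": [9, 8, 4],
    "AOS w/wo aphasia": [6, 3, 11],
}


def overall_accuracy(matrix):
    """Share of all cases that fall on the diagonal (correctly classified)."""
    rows = list(matrix.values())
    correct = sum(row[i] for i, row in enumerate(rows))
    return correct / sum(sum(row) for row in rows)


def per_group_accuracy(matrix):
    """Share of each actual group that was assigned to its own group."""
    return {name: row[i] / sum(row) for i, (name, row) in enumerate(matrix.items())}


print(f"{overall_accuracy(confusion):.1%}")  # 50.9%
for group, rate in per_group_accuracy(confusion).items():
    print(f"{group}: {rate:.1%}")
```

The per-group rates make it clear that the overall figure is carried by the unimpaired and AOS groups, while fewer than half of the speakers with aphasia only were assigned to their own group.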

Figure 1.

Canonical plot of the discriminant functions for the three-way stepwise linear discriminant analysis predicting group-membership of stroke survivors with AOS, aphasia and without speech-language impairment. The two ‘functions’ each consist of different weightings for the parameter values of the four acoustic measures that together predict group membership.

Figure 2.

Means and standard errors (2x) for the four acoustic measures that together predict group membership between stroke survivors with AOS, aphasia and without speech-language impairment. Lines between bars indicate significant pairwise differences between the groups (t-tests with Bonferroni correction; p<.05).

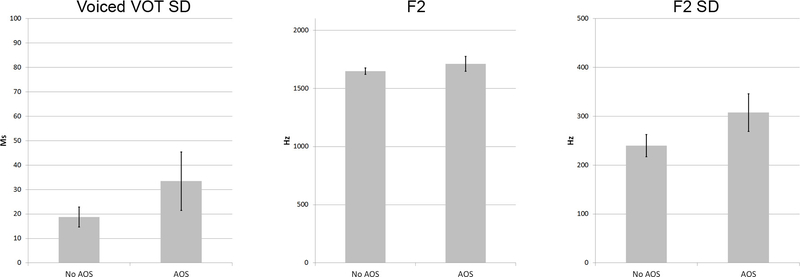

AOS

Binary classification between speakers with and without AOS was achieved with 75.5% success (73.6% cross-validated), by entering Voiced-VOT SD, mean F2, and F2 SD. After cross-validation, classification was correct for 16/20 (80%) speakers with AOS and for 23/33 (69.7%) of speakers without AOS. Figure 3 shows how all three measures were higher in speakers with AOS than in stroke survivors without AOS, though none of these differences were significant by themselves. No other variables improved classification significantly.

Figure 3.

Means and standard errors (2x) for the three acoustic measures that together predict group-membership between stroke survivors with and without AOS.

AOS vs. Aphasia-only

Excluding the unimpaired stroke survivors, binary classification of speakers with AOS with or without aphasia from speakers with aphasia only was achieved with 75.6% success (70.7% cross-validated), by entering the mean F1 SD and F3 SD values. After cross-validation, classification was correct for 16/20 (80%) speakers with AOS, and 13/21 (61.9%) speakers with aphasia only. No other variables improved classification significantly.

AOS vs. Conduction Aphasia

The binary classification between speakers with AOS and speakers with Conduction Aphasia (none of whom were diagnosed with AOS) was successful in 92.9% of cases (82.1% cross-validated), based on the variables Voiced-VOT SD and F1 SD, which were both higher in speakers with AOS (t(25.946)=4.705; p<.05, and t(26)=2.579; p<.05, respectively). Classification was correct for 7/8 speakers with conduction aphasia (87.5%) and for 16/20 speakers with AOS (80%). No other variables improved classification significantly.

Aphasia

The binary LDA aimed at predicting speakers with versus without aphasia did not yield variables that significantly improved classification over chance.

Dysarthria

The binary LDA aimed at predicting speakers with versus without dysarthria was successful in 77.4% of cases (75.5% cross-validated), based on the mean Voiceless VOT and the Voiced-VOT SD. The VOT for voiceless consonants was significantly shorter in speakers with dysarthria (t(39)=3.112; p<.05).

Ordinal Regression

We used ordinal regression to assess which (if any) combination of our acoustic factors predicts AOS severity, as rated with the ASRS. The model fit based on these factors was significantly better than that of the model based on the intercept alone (χ²(11)=39.630, p<.001; Nagelkerke’s pseudo-R² = .581). Table 4 provides the parameter estimates for the factors that contributed significantly to prediction of AOS severity. Note that these parameter estimates reflect the relative importance of the factors to the predictive model, but they cannot be directly interpreted in terms of absolute milliseconds or Hertz, as they are based on z-scores (and natural-log transformations for some variables). In order of importance, the four acoustic factors that contributed significantly to the prediction of AOS severity (p<.05) were F2 SD, Voiceless VOT, F1 SD, and Voiced VOT SD. Parameter estimates for these factors were all positive, with the exception of Voiceless VOT, so that a shorter VOT for voiceless consonants was predictive of more severe AOS. Results for the other variables were not different if Voiceless VOT SD was excluded from the input.

Table 4.

Parameter estimates and Wald statistics for the ordinal regression analysis of the relation between AOS severity, as rated with the Apraxia of Speech Rating Scale (ASRS, Strand et al., 2014), and acoustic consonant and vowel measures. Variables shown contribute significantly to the prediction of AOS severity (p<.05).

| | | Coefficient | S.E. of Coefficient | Wald | Sign. | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|---|
| Threshold | ASRS AOS Severity = 0 | 1.235 | 0.51 | 5.855 | .016 | 0.235 | 2.236 |
| | ASRS AOS Severity = 1 | 1.978 | 0.557 | 12.629 | <.001 | 0.887 | 3.069 |
| | ASRS AOS Severity = 2 | 2.696 | 0.621 | 18.832 | <.001 | 1.479 | 3.914 |
| | ASRS AOS Severity = 3 | 4.445 | 0.901 | 24.31 | <.001 | 2.678 | 6.212 |
| Location | F2 SD (Bark, Ln) | 2.372 | 0.696 | 11.615 | .001 | 1.008 | 3.736 |
| | Voiceless VOT (Ln) | -1.2 | 0.474 | 6.406 | .011 | -2.129 | -0.271 |
| | F1 SD (Bark, Ln) | 1.843 | 0.785 | 5.513 | .019 | 0.305 | 3.382 |
| | Voiced VOT SD (Ln) | 0.897 | 0.408 | 4.825 | .028 | 0.097 | 1.697 |

Ln = natural-log transformed
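To make the relation between the columns of Table 4 explicit, the sketch below recomputes the Wald statistic, its p-value, and the 95% confidence interval from each coefficient and standard error. It assumes the conventional definitions (Wald = (coefficient / S.E.)², referred to a chi-square distribution with one degree of freedom, and a normal-approximation interval); the function names are illustrative and not taken from any statistics package. Because the tabulated coefficients are rounded to three decimals, the recomputed values agree with the table only to within rounding.

```python
import math

# (coefficient, standard error) pairs copied from the Location rows of Table 4.
location_estimates = {
    "F2 SD (Bark, Ln)": (2.372, 0.696),
    "Voiceless VOT (Ln)": (-1.2, 0.474),
    "F1 SD (Bark, Ln)": (1.843, 0.785),
    "Voiced VOT SD (Ln)": (0.897, 0.408),
}

Z_95 = 1.959964  # two-sided 95% critical value of the standard normal


def wald_statistic(coef, se):
    """Squared ratio of a coefficient to its standard error."""
    return (coef / se) ** 2


def wald_p_value(wald):
    """Upper-tail probability of a chi-square variable with 1 df."""
    return math.erfc(math.sqrt(wald / 2.0))


def confidence_interval(coef, se, z=Z_95):
    """Normal-approximation interval: coefficient plus/minus z * S.E."""
    return coef - z * se, coef + z * se


if __name__ == "__main__":
    for name, (coef, se) in location_estimates.items():
        wald = wald_statistic(coef, se)
        lower, upper = confidence_interval(coef, se)
        print(f"{name}: Wald={wald:.3f}, p={wald_p_value(wald):.3f}, "
              f"95% CI=[{lower:.3f}, {upper:.3f}]")
```

An interval that excludes zero, as is the case for all four Location rows, corresponds to a coefficient that is significant at the .05 level.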

Discussion

For the discussion of our multiple correlations analysis, we focus here on the correlations between the acoustic and diagnostic measures, rather than on the correlations between the acoustic measures themselves. The latter are naturally of interest, but more relevant to the interpretation of the subsequent LDAs and regression analyses and inherently taken into account there. Severity of AOS was correlated with acoustic measures of both consonant (VOT) and vowel (formant) production in stroke survivors. Speakers with more severe AOS tend to have a raised F1 and increased F1 and F2 dispersion, as well as increased VOT dispersion for voiced stops. The latter indicates a generally unstable interaction between oral and laryngeal articulators during consonant production, and the F1 and F2 dispersions similarly indicate a lack of articulatory consistency in oral space.

The raised first formant may be an interesting consequence of a more general change in articulatory setting, however. The frequency of F1 is largely related to tongue height, so that a generally more open vowel articulation, with lowered mandible, would be reflected in a raised F1. Haley et al. (2001) cite dissertation work by Keller (1975) that noted a similar raised F1 in 10 speakers with different types of aphasia (without information on presence of AOS), and relate it to a tendency for higher vowels to be replaced by lower vowels. We note that a more open articulatory pattern is consistent with the ‘articulatory groping’ behaviour that is often taken as a clinical indicator of AOS (though possibly more so in European than in North-American clinical practice). In our experience, this searching behaviour often manifests as repeated widening of the mouth (lowering of the jaw), but at this point we can only speculate as to whether this is related to the systematically raised F1 observed here. It is clearly not the case that our speakers with AOS showed a generally wider or reduced vowel space, as shown by the absence of a correlation with FCR. In fact, this indirect vowel space measure did not correlate with any diagnostic characteristics independently, although it likely contributed to the separation between unimpaired and aphasic speakers in the three-way LDA, where aphasic speakers were shown to have a marginally higher FCR, i.e., a smaller vowel space. As this finding falls outside the scope of the present research question, we do not pursue it here.
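For readers less familiar with the FCR, the following sketch illustrates why a higher value indicates a smaller (more centralised) vowel space. It uses the ratio proposed by Sapir et al. (2010), FCR = (F2u + F2a + F1i + F1u) / (F2i + F1a). The formant values are invented for illustration and are not data from this study, and the sketch does not attempt to reproduce the normalisation applied in our analyses.

```python
def formant_centralization_ratio(f1_i, f2_i, f1_u, f2_u, f1_a, f2_a):
    """FCR after Sapir et al. (2010).

    The numerator holds formants that rise when vowels centralise;
    the denominator holds formants that fall when vowels centralise.
    f2_i and f1_a are the only corner-vowel values in the denominator.
    """
    return (f2_u + f2_a + f1_i + f1_u) / (f2_i + f1_a)


# Hypothetical corner-vowel means in Hz (illustrative values only).
peripheral = dict(f1_i=300, f2_i=2300, f1_u=320, f2_u=900, f1_a=750, f2_a=1200)
centralised = dict(f1_i=400, f2_i=2000, f1_u=400, f2_u=1100, f1_a=650, f2_a=1300)

if __name__ == "__main__":
    print(f"peripheral vowels:  FCR = {formant_centralization_ratio(**peripheral):.3f}")
    print(f"centralised vowels: FCR = {formant_centralization_ratio(**centralised):.3f}")
```

With these invented values, the centralised set yields the higher ratio, which is the direction of the marginal difference described above for the speakers with aphasia.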

It is of marginal interest that the severity of aphasia is correlated with AOS severity. The WAB-R AQ is known to be highly dependent on fluency scores (based on spontaneous speech and picture description), which are naturally correlated with AOS severity. The less fluent a speaker, the lower their WAB AQ score and the higher their AOS severity rating is likely to be. Likewise, the correlations between the ASRS-apraxia severity rating and the ASRS-aphasia and ASRS-dysarthria ratings show once again that strict classification into these syndromes based on perceptual analysis is challenging, with speech output symptoms likely overlapping.

Normalised absolute F1 values and absolute VOT values for voiced consonants were affected by gender, with F1 values being higher in females and Voiced-VOT values being shorter. Voiced-consonant VOTs were not further implicated as predictors in any of our analyses, but mean F1 did correlate positively with AOS severity independently. However, the gender distribution among our speakers with AOS weighed towards males rather than females (7/20 females, see Table 1), so it is unlikely that this correlation is driven by gender imbalance. Therefore, although we do treat it as a limitation of the present study that the gender distribution between aphasic and non-aphasic speakers was not more symmetric, there is no indication that this may have affected the particular outcomes presented here. Acoustic measures of speech naturally interact with gender, and any normalisation procedure that aims to reduce such effects runs the risk of also reducing non-gender effects of interest. In particular, however, there is little reason to assume that the VOT and formant dispersion measures would be inherently gender-specific, except potentially indirectly through the correlation between means and standard deviations. It is these dispersion measures that we expected to be the best reflection of articulatory instability.
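A dispersion measure of this kind can be sketched as follows. The example converts formant values from Hertz to Bark using the approximation of Traunmüller (1990), takes the within-speaker standard deviation, applies a natural-log transformation, and z-scores the result across speakers. The order of these steps is our reading of the labels in Table 4 ("Bark, Ln") and should be treated as an assumption; the speaker labels and token values are invented for illustration.

```python
import math
import statistics


def hz_to_bark(f_hz):
    """Traunmüller (1990) approximation of the Bark scale."""
    return 26.81 * f_hz / (1960.0 + f_hz) - 0.53


def log_dispersion(formant_hz):
    """Natural log of the within-speaker SD of Bark-converted formant values."""
    bark = [hz_to_bark(f) for f in formant_hz]
    return math.log(statistics.stdev(bark))


def z_scores(values):
    """Standardise values to mean 0 and (sample) SD 1 across speakers."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return [(v - mean) / sd for v in values]


# Hypothetical F1 tokens (Hz) for three invented speakers.
f1_tokens = {
    "stable": [500, 510, 495, 505, 500, 490],
    "moderate": [480, 540, 450, 560, 500, 520],
    "variable": [400, 650, 520, 300, 700, 480],
}

if __name__ == "__main__":
    names = list(f1_tokens)
    dispersions = [log_dispersion(f1_tokens[n]) for n in names]
    for name, z in zip(names, z_scores(dispersions)):
        print(f"{name}: z-scored ln(F1 SD in Bark) = {z:.2f}")
```

Because the measure is computed within each speaker, a speaker with a high or low mean formant value does not automatically receive a high dispersion score; only token-to-token variability raises it.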

As revealed by the three-way discriminant analysis and by the binary discriminant analyses assessing predictors for the presence of AOS (versus all others in the sample, aphasic speakers, and conduction aphasic speakers), the deficit is indeed primarily characterised by increased dispersion of vowel formants, in particular of F1 and F2, as well as increased dispersion of voiced-stop VOTs. This confirms the main premise of the present study that vowel articulation stability is measurably affected in AOS, at minimum to the same extent as consonant articulation stability. The binary classification between speakers with and without AOS in the full sample also revealed speakers with AOS to display an elevated absolute F2. The second formant has less straightforward articulatory substrates than the first, discussed above, but it is largely determined by tongue position on the horizontal plane (front to back), particularly for front vowels, while lip and pharyngeal sections of the vocal tract are also known to contribute to F2 (e.g. Lee, Shaiman, & Weismer, 2016). Increased F2 variance as a predictor of the presence of AOS may therefore reflect imprecision in horizontal positioning of the tongue for vowel articulations, while the rise in absolute F2 values suggests more frontal articulation patterns in speakers with AOS. It is of interest that Kent and Rosenbek (1983) draw attention to one of their AOS participants, who produces the /u/ in zoo with an “inappropriately high” (p. 239) frequency, so that it falls within the normal range of /i/. Visual inspection of their summary data (Kent & Rosenbek, 1983: 243) also suggests that their 7 speakers often produce vowels with a higher than ‘normal’ F2. Together with the raised F1 pattern noted earlier, this may capture a tendency for speakers with AOS to produce more frontal and open/low articulations, compared to unimpaired speakers or speakers with aphasia only.

Figures 1 and 2, as well as the binary discriminant analysis on the presence of aphasia, confirm that aphasic speakers are not characterized by consistent articulatory (acoustic) deviances or instability. That is, these acoustic measures do not distinguish them from stroke survivors without speech or language impairment. This is in line with the notion that literal paraphasias in aphasia are primarily generated through selection errors, rather than articulatory planning or execution errors.

In the present study, presence of dysarthria was predicted above chance by reduced VOTs in voiceless consonants, as well as by the dispersion of voiced-consonant VOTs. We do point to the small number of speakers with dysarthria in our sample (n=8) and the fact that they formed a subset of the speakers with AOS and/or aphasia. In addition, type of ‘dysarthria’ was not further specified in our sample, though all participants suffered from a single cerebral stroke, so that the resulting dysarthrias are by definition of the upper motor neuron type (Darley, Aronson & Brown, 1969). The finding of reduced VOTs in speakers with dysarthria is in line with previous reports, particularly in speakers with spastic dysarthria, one type of upper motor neuron dysarthria (Hardcastle, Barry, & Clark, 1985; Kent & Kim, 2003; Morris, 1989). Auzou et al. (2000) note that consonants with long lags, such as English voiceless stops, are considered more complex articulations than consonants with short lags, because of the careful timing required between the oral stop and laryngeal closure and the more complex muscle activity involved with vocal fold adduction for these consonants.

The analysis that is perhaps clinically most relevant is the binary LDA classification between speakers with AOS and speakers with conduction aphasia (see also Seddoh et al., 1996). Conduction aphasia is characterised by the production of many phonemic paraphasias, often in the form of sequences of approximations to the word form target. Such phonological output errors are typically challenging to distinguish from apraxic speech errors (e.g., Code, 1998; Den Ouden, 2011; Ziegler, Aichert, & Staiger, 2012). The successful distinction between the representatives of these two output problems in our sample was based on greater dispersion of both voiced-consonant VOTs and F1 in the speakers with AOS. Note that the primary objective of the present study was not to optimize the clinical diagnosis of AOS relative to other output impairments, but rather to assess to what extent vowel acoustics are affected by AOS. The successful classification of 7/8 speakers with conduction aphasia and 16/20 speakers with AOS, based on stability of both consonant and vowel acoustics, confirms that phonemic production is relatively stable in (conduction) aphasia, likely reflecting phonological planning and selection problems, but unstable in AOS, likely reflecting speech motor planning problems.

Finally, the ordinal regression analysis of factors predicting AOS severity confirms the role of increased F1 and F2 dispersion as characteristic of AOS and as markers of severity, together with VOT dispersion for voiced consonants. In addition, voiceless-stop VOTs were found to be consistently shorter in speakers with more severe AOS, just as in the speakers with dysarthria in our sample. We again point to the fact that 6/8 dysarthric speakers in our sample were also diagnosed with AOS, and 6/20 speakers with AOS had concomitant dysarthria. For this reason, we re-ran the ordinal regression analysis excluding the 8 speakers with dysarthria (χ²(11)=34.238, p<.001; Nagelkerke’s pseudo-R² = .610). In that case, two variables remain that significantly predict AOS severity: Voiced-VOT dispersion (β=1.922, Wald=5.294; p<.05) and F1 dispersion (β=2.191, Wald=4.480; p<.05). Therefore, it is likely that the reduced voiceless-stop VOTs are primarily a characteristic of dysarthria, rather than AOS.

To address the apparent inconsistencies in identified predictive variables between the subsequent analyses we have presented here, please note that the LDA and ordinal regression analyses we have conducted, just like the analyses conducted by Basilakos et al. (in press), assess various variables at the same time, importantly taking into account their intercorrelations, so that the outcome reflects the strongest predictors that contribute to classification independently. This emphatically does not mean that other variables may not be correlated or associated with the deficit, but only that these do not contribute significantly more to the predictive model, given the presence of the most independently predictive variables. The methods, therefore, are good tools by which to identify predictive variables out of a specific set, which was the objective here, but not suited to exclude the possibility of other variables being associated with the deficits under investigation.

We note that earlier studies by Ryalls (1986), Haley et al. (2001), and Jacks et al. (2010) unexpectedly did not find correlations between vowel formant dispersion and AOS, and speculate that this may have been due to the nature of the elicitation task used in these studies, with deliberately elicited specific target forms and repetitions of similar syllables. This may have functioned similarly to a training mechanism, inducing greater stability than what is revealed in our less restricted discourse analyses. If this is correct, it entails support for the use of repetitive articulatory speech-motor exercises as a treatment approach for AOS (Wambaugh, Nessler, Cameron, & Mauszycki, 2012). Mauszycki et al. (2010) reached opposite conclusions in a study on the influence of repetitive-task elicitation on error consistency in AOS (no increased consistency), but their analysis was based on narrow phonetic transcription as opposed to acoustic measurements, so it is possible that acoustic detail captured here was not measured in that study.

The present study was aimed specifically at assessing the effects of AOS on vowel acoustics, as previous analyses of vowel acoustics have not yielded consistent results, possibly leading to an impression that vowels may be relatively unimpaired in this disorder. Our analyses show that both presence and severity of AOS are indeed reflected in vowel acoustics, as well as consonant acoustics.

Though not the object of this investigation, vowel formant acoustic measures may contribute to the classification of speech motor output disorders. However, we also note that the classification success of our LDAs, though better than chance, is certainly not sufficiently high to warrant reliance on formant and VOT measures alone in the diagnosis or quantification of AOS. Rather, we advocate that vowel acoustics be added to a larger toolbox of instrumental measures, if the aim is to classify speech motor disorders as objectively as possible. The high classification success achieved by Basilakos et al. (in press) shows that this is a promising venture, made possible through the increased availability of large datasets and automated acoustic measures of speech.

Conclusion

We investigated the extent to which articulatory problems with vowels are associated with the presence and severity of AOS, relative to problems with consonant articulation. Presence and severity of AOS were rated based on a scale (Strand et al., 2014) that takes into account the common co-morbidity of AOS with aphasia and, to a lesser extent, dysarthria. The most consistent predictors of both the presence and severity of AOS, across our different analyses, turn out to be dispersion of F1, F2, and voiced-consonant VOTs. The apraxic deficit, therefore, does appear to be across-the-board, affecting vowel articulation as well as consonant articulation. The deficit is primarily characterised by articulatory instability, i.e., a lack of consistency between articulations, within speakers. Vowel space itself was not consistently affected in AOS, dysarthria or aphasia in the current sample. Acoustic measures of both consonants and vowels may improve classification of motor speech impairments after stroke, and differentiation from aphasic output problems.

Acknowledgments

This work was supported by the National Institute on Deafness and Other Communication Disorders (P50 DC014664, PI JF; T32 DC014435, AB). The authors thank Grigori Yourganov, PhD, for consultation on statistical methods.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Adank P, Smits R, & van Hout R (2004). A comparison of vowel normalization procedures for language variation research. The Journal of the Acoustical Society of America, 116(5), 3099–3107. doi: 10.1121/1.1795335

- Auzou P, Ozsancak C, Morris RJ, Jan M, Eustache F, & Hannequin D (2000). Voice onset time in aphasia, apraxia of speech and dysarthria: a review. Clinical Linguistics & Phonetics, 14(2), 131–150.

- Ballard K, Granier J, & Robin D (2000). Understanding the nature of apraxia of speech: Theory, analysis, and treatment. Aphasiology, 969–995.

- Basilakos A, Yourganov G, Den Ouden DB, Fogerty D, Rorden C, Feenaughty L, & Fridriksson J (in press). A multivariate analytic approach to the differential diagnosis of apraxia of speech. J Speech Lang Hear Res.

- Basilakos AA (2016). Towards Improving the Evaluation of Speech Production Deficits in Chronic Stroke. PhD dissertation, University of South Carolina.

- Basilakos A, Rorden C, Bonilha L, Moser D, & Fridriksson J (2015). Patterns of Poststroke Brain Damage That Predict Speech Production Errors in Apraxia of Speech and Aphasia Dissociate. Stroke, 46, 1561–1566.

- Blumstein SE, Cooper WE, Goodglass H, Statlender S, & Gottlieb J (1980). Production deficits in aphasia: A voice-onset time analysis. Brain and Language, 9, 153–170.

- Boersma P (2001). Praat, a system for doing phonetics by computer. Glot International, 5(9/10), 341–345.

- Code C (1998). Models, theories and heuristics in apraxia of speech. Clinical Linguistics and Phonetics, 12(1), 47–65.

- Cicchetti DV (1994). Guidelines, criteria, and rules of thumb for evaluating normed and standardized assessment instruments in psychology. Psychological Assessment, 6(4), 284.

- Dabul BL (2000). Apraxia Battery for Adults (2nd ed.). Austin, TX: Pro-Ed.

- Darley FL, Aronson AE, & Brown JR (1969). Differential Diagnostic Patterns of Dysarthria. J Speech Hear Res, 12(2), 246–269. doi: 10.1044/jshr.1202.246

- Den Ouden DB (2011). Phonological disorders. In Botma B, Kula NC & Nasukawa K (Eds.), Continuum Companion to Phonology (pp. 320–340). London, New York: Continuum.

- Fletcher AR, McAuliffe MJ, Lansford KL, & Liss JM (2017). Assessing Vowel Centralization in Dysarthria: A Comparison of Methods. J Speech Lang Hear Res, 60(2), 341–354. doi: 10.1044/2016_jslhr-s-15-0355

- Galluzzi C, Bureca I, Guariglia C, & Romani C (2015). Phonological simplifications, apraxia of speech and the interaction between phonological and phonetic processing. Neuropsychologia, 71, 64–83. doi: 10.1016/j.neuropsychologia.2015.03.007

- Gandour J, & Dardarananda R (1984). Voice Onset Time in Aphasia: Thai. II. Production. Brain and Language, 23(2), 177–205. doi: 10.1016/0093-934x(84)90063-4

- Goodglass H, Kaplan E, & Barresi B (2000). Boston Diagnostic Aphasia Examination (3rd ed.). Pearson.

- Haley KL, Jacks A, de Riesthal M, Abou-Khalil R, & Roth HL (2012). Toward a Quantitative Basis for Assessment and Diagnosis of Apraxia of Speech. Journal of Speech Language and Hearing Research, 55(5), S1502–S1517. doi: 10.1044/1092-4388(2012/11-0318)

- Haley KL, Ohde RN, & Wertz RT (2001). Vowel quality in aphasia and apraxia of speech: Phonetic transcription and formant analyses. Aphasiology, 15(12), 1107–1123. doi: 10.1080/02687040143000519

- Haley KL, & Overton HB (2001). Word length and vowel duration in apraxia of speech: The use of relative measures. Brain and Language, 79(3), 397–406. doi: 10.1006/brln.2001.2494

- Hardcastle WJ, Barry RAM, & Clark CJ (1985). Articulatory and Voicing Characteristics of Adult Dysarthric and Verbal Dyspraxic Speakers: An Instrumental Study. British Journal of Disorders of Communication, 20(3), 249–270.

- Itoh M, Sasanuma S, Tatsumi IF, Murakami S, Fukusao Y, & Suzuki T (1982). Voice Onset Time Characteristics in Apraxia of Speech. Brain and Language, 17(2), 193–210. doi: 10.1016/0093-934x(82)90016-5

- Jacks A (2008). Bite Block Vowel Production in Apraxia of Speech. Journal of Speech, Language, and Hearing Research, 51(4), 898–913.

- Jacks A, Mathes K, & Marquardt T (2010). Vowel acoustics in adults with apraxia of speech. J Speech Lang Hear Res, 53(1), 61–74. doi: 10.1044/1092-4388(2009/08-0017)

- Karlsson F, & van Doorn J (2012). Vowel formant dispersion as a measure of articulation proficiency. Journal of the Acoustical Society of America, 132(4), 2633–2641. doi: 10.1121/1.4746025

- Keller E (1975). Vowel errors in aphasia. Unpublished doctoral dissertation, University of Toronto, Canada.

- Kent RD, & Kim YJ (2003). Toward an acoustic typology of motor speech disorders. Clinical Linguistics & Phonetics, 17(6), 427–445. doi: 10.1080/0269920031000086248

- Kent RD, & Rosenbek JC (1983). Acoustic patterns of apraxia of speech. Journal of Speech and Hearing Research, 26, 231–249.

- Kertesz A (2007). Western Aphasia Battery - Revised. San Antonio, TX: Pearson.

- Lobanov BM (1971). Classification of Russian vowels spoken by different speakers. Journal of the Acoustical Society of America, 49, 606–608.

- Lee J, Shaiman S, & Weismer G (2016). Relationship between tongue positions and formant frequencies in female speakers. J Acoust Soc Am, 139(1), 426–440. doi: 10.1121/1.4939894

- Lennes M (2003). Collect_formant_data_from_files.praat [Praat script], from http://www.helsinki.fi/~lennes/praat-scripts/public

- Lisker L, & Abramson AS (1964). A Cross-Language Study of Voicing in Initial Stops: Acoustical Measurements. Word: Journal of the International Linguistic Association, 20(3), 384–422.

- Maas E, Gutierrez K, & Ballard KJ (2014). Phonological encoding in apraxia of speech and aphasia. Aphasiology, 28(1), 25–48. doi: 10.1080/02687038.2013.850651

- Maas E, Mailend ML, & Guenther FH (2015). Feedforward and Feedback Control in Apraxia of Speech: Effects of Noise Masking on Vowel Production. Journal of Speech Language and Hearing Research, 58(2), 185–200. doi: 10.1044/2014_jslhr-s-13-0300

- Mauszycki SC, Dromey C, & Wambaugh JL (2007). Variability in apraxia of speech: A perceptual, acoustic, and kinematic analysis of stop consonants. Journal of Medical Speech-Language Pathology, 15(3), 223–242.

- Mauszycki SC, Wambaugh JL, & Cameron RM (2010). Variability in apraxia of speech: Perceptual analysis of monosyllabic word productions across repeated sampling times. Aphasiology, 24(6), 838–855.

- McNeil MR, Doyle PJ, & Wambaugh J (2000). Apraxia of Speech: A treatable disorder of motor planning and programming. In Nadeau SE, Rothi LJG & Crosson B (Eds.), Aphasia and Language: Theory to Practice (pp. 221–266). New York, London: The Guilford Press.

- Morris RJ (1989). VOT and Dysarthria: A Descriptive Study. Journal of Communication Disorders, 22(1), 23–33. doi: 10.1016/0021-9924(89)90004-X

- Nearey TM (1978). Phonetic Feature Systems for Vowels. Indiana: Indiana University Linguistics Club.

- Ogar J, Slama H, Dronkers N, Amici S, & Gorno-Tempini M (2005). Apraxia of speech: an overview. Neurocase, 11(6), 427–432. doi: 10.1080/13554790500263529

- Ordin M, & Polyanskaya L (2015). Perception of speech rhythm in second language: the case of rhythmically similar L1 and L2. Frontiers in Psychology, 6, 316. doi: 10.3389/fpsyg.2015.00316

- Peterson GE, & Lehiste I (1960). Duration of Syllable Nuclei in English. Journal of the Acoustical Society of America, 32(6), 693–703. doi: 10.1121/1.1908183

- Rosenbek JC, Kent RD, & LaPointe LL (1984). Apraxia of Speech: An Overview and Some Perspectives. In Rosenbek JC, McNeil MR & Aronson AE (Eds.), Apraxia of Speech: Physiology, Acoustics, Linguistics, Management (pp. 1–72). San Diego: College-Hill Press.

- Ryalls JH (1986). An Acoustic Study of Vowel Production in Aphasia. Brain and Language, 29(1), 48–67. doi: 10.1016/0093-934x(86)90033-7

- Sapir S, Ramig LO, Spielman JL, & Fox C (2010). Formant Centralization Ratio: A Proposal for a New Acoustic Measure of Dysarthric Speech. Journal of Speech Language and Hearing Research, 53(1), 114–125. doi: 10.1044/1092-4388(2009/08-0184)

- Seddoh SAK, Robin DA, Sim HS, Hageman C, Moon JB, & Folkins JW (1996). Speech timing in apraxia of speech versus conduction aphasia. Journal of Speech and Hearing Research, 39(3), 590–603.

- Strand EA, Duffy JR, Clark HM, & Josephs K (2014). The Apraxia of Speech Rating Scale: a tool for diagnosis and description of apraxia of speech. J Commun Disord, 51, 43–50. doi: 10.1016/j.jcomdis.2014.06.008

- Traunmüller H (1990). Analytical expressions for the tonotopic sensory scale. Journal of the Acoustical Society of America, 88, 97–100.

- Vergis MK, Ballard KJ, Duffy JR, McNeil MR, Scholl D, & Layfield C (2014). An acoustic measure of lexical stress differentiates aphasia and aphasia plus apraxia of speech after stroke. Aphasiology, 28(5), 554–575. doi: 10.1080/02687038.2014.889275

- Wambaugh JL, Nessler C, Cameron R, & Mauszycki SC (2012). Acquired Apraxia of Speech: The Effects of Repeated Practice and Rate/Rhythm Control Treatments on Sound Production Accuracy. Am J Speech Lang Pathol, 21(2), S5–27. doi: 10.1044/1058-0360(2011/11-0102)

- Wells JC (1997). SAMPA computer readable phonetic alphabet. In Gibbon D, Moore R & Winski R (Eds.), Handbook of Standards and Resources for Spoken Language Systems (Vol. Part IV, section B). Berlin and New York: Mouton de Gruyter.

- Whiteside SP, Robson H, Windsor F, & Varley R (2012). Stability in Voice Onset Time Patterns in a Case of Acquired Apraxia of Speech. Journal of Medical Speech-Language Pathology, 20(1), 17–28. PMID: 25614728

- Ziegler W (2002). Psycholinguistic and Motor Theories of Apraxia of Speech. Seminars in Speech and Language, 23, 231–244.

- Ziegler W, Aichert I, & Staiger A (2012). Apraxia of Speech: Concepts and Controversies. J Speech Lang Hear Res, 55(5), S1485–1501. doi: 10.1044/1092-4388(2012/12-0128)