Abstract

BACKGROUND:

Assessment of adherence to study medications is a common challenge in clinical research. Counting unused study medication is the predominant method by which adherence is assessed in outpatient clinical trials but it has limitations that include questionable validity and burdens on research personnel.

PURPOSE:

To compare capsule counts, patient questionnaire responses, and plasma drug levels as methods of determining adherence in a clinical trial that had 2056 participants and used centralized drug distribution and patient follow-up.

METHODS:

Capsule counts from study medication bottles returned by participants and responses to questions regarding adherence during quarterly telephone interviews were averaged and compared. Both measures were compared to plasma drug levels obtained at the 3-month study visit of patients in the treatment group. Counts and questionnaire responses were converted to adherence rates (doses taken divided by days elapsed) and were categorized by stringent (≥85.7%) and liberal (≥71.4%) definitions. We calculated the prevalence-adjusted bias-adjusted kappa to assess agreement between the two measures.

RESULTS:

Using a pre-paid mailer, participants returned 76.0% of study medication bottles to the central pharmacy. Both capsule counts and questionnaire responses were available for 65.8% of participants and were used to assess adherence. Capsule counts identified more patients who were under-adherent (18.8% by the stringent definition and 7.5% by the liberal definition) than self-reports did (10.4% by the stringent definition and 2.1% by the liberal definition). The prevalence-adjusted bias-adjusted kappa was 0.58 (stringent) and 0.83 (liberal), indicating fair and very good agreement, respectively. Both measures were also in agreement with plasma drug levels determined at the 3-month visit (capsule counts: p = 0.005 for the stringent and p = 0.003 for the liberal definition; questionnaire: p = 0.002 for both adherence definitions).

LIMITATIONS:

Inconsistent bottle returns and incomplete notations of medication start and stop dates resulted in missing data but exploratory missing data analyses showed no reason to believe that the missing data resulted in systematic bias.

CONCLUSIONS:

Depending upon the definition of adherence, there was fair to very good agreement between questionnaire results and capsule counts among returned study bottles, confirmed by plasma drug levels. We conclude that a self-report of medication adherence is potentially comparable to capsule counts as a method of assessing adherence in a clinical trial, if a relatively low adherence threshold is acceptable, but adherence should be confirmed by other measures if a high adherence threshold is required.

Background

Assessment of how well patients adhere to prescribed medications is a challenge in clinical research as well as clinical practice [1–3]. It is important in clinical research for several reasons, including evaluation of the effect of treatment, drug safety and tolerability, identification of dose-response relationships, estimation of pharmacokinetic parameters, and optimization of dosing intervals and frequency [2–5]. Adherence assessment is a critical element in interpreting clinical trial results because, like loss to follow-up, non-adherence reduces statistical power [6,7].

Food and Drug Administration (FDA) regulations governing clinical evaluations of drugs require sponsors to monitor participant compliance but do not specify a particular method [8,9]. Guidelines promulgated by the International Conference on Harmonization in Guidance E8: General Considerations for Clinical Trials also indicate, ‘Methods used to evaluate patient usage of the test drug should be specified in the protocol and the actual usage documented’ [10]. Authors of recent reviews advocate using multiple methods of assessing adherence in research because there is no ‘gold standard’ in this area [1,2,11,12]. However, a systematic review of randomized controlled clinical trials published in 26 high impact factor medical journals over 2 years found that only 18 of 192 (9.4%) publications reviewed used two or more different methods of assessment concurrently [7].

The principal methods of assessing adherence in clinical research can be categorized as direct or indirect. Direct methods include analysis of blood or urine drug, metabolite, or marker levels and direct administration or observation of the participant taking the medication. Indirect methods include counts of doses and volumetric measurements of medication remaining in dispensing containers, self-reporting by participants (interview questioning, diaries, written questionnaires), and electronic devices that monitor the times when medication containers are opened [2,13]. Although counting doses is the predominant method used in outpatient research because of its simplicity, it has limitations, such as burdens placed on patients and research personnel, as well as debatable validity [4]. Adherence has been shown to decrease over time, emphasizing the importance of repeated assessment, particularly in clinical trials that employ infrequent and/or centralized follow-up evaluations [14,15].

For these reasons, assessment of adherence was an important component of our randomized trial (‘The Homocysteine Study’ HOST [16,17]) which was designed to combine an enrollment and a 3-month visit with quarterly telephone follow-up and centralized study drug dispensing for the remainder of the trial. In this report, we compare capsule counts, self-reports by participants (telephone questionnaire responses), and plasma drug concentrations as measures of adherence in the HOST trial.

Methods

The HOST trial was a multi-center, randomized, double-blind, placebo-controlled trial of vitamin-induced lowering of plasma homocysteine in patients with end-stage renal disease or advanced chronic kidney disease [16,17]. Participants from 36 geographically diverse medical centers were enrolled and follow-up was primarily by telephone. The study drug, a capsule containing 40 mg folic acid, 100 mg pyridoxine hydrochloride, and 2 mg cyanocobalamin, or placebo, was dispensed by mail from a central pharmacy.

After enrollment, HOST participants returned to the clinic for a 3-month follow-up visit at which a blood sample was obtained to measure plasma folate concentration. Every 3 months thereafter, participants were interviewed by phone and mailed a new supply of the study drug (100 capsules). Participants were furnished with a pre-addressed, postage-paid envelope and instructed to write start and stop dates on the old bottle label, return the bottle to the central pharmacy, and immediately begin using the new bottle of study capsules.

During the quarterly follow-up telephone calls, participants were asked the following questions adapted from a portion of the Brief Medication Questionnaire (BMQ) [18]:

‘Are you still taking the study medication?’

‘People sometimes forget to take their study medicine or take less study medicine than was ordered because they feel they need less. How many times in the past week did you take less than one study pill a day?’

‘People sometimes take more study medication because they feel like taking more. How many times in the past week did you take an extra study capsule?’

Self-reported adherence rates were calculated from responses to the second question.


The central pharmacy tracked bottles of study drug returned by participants using a unique, participant-specific bar code on each label. Capsules remaining in returned bottles were counted by an automated counter. Adherence rates from capsule counts were calculated from the counts of remaining capsules in returned bottles and number of days elapsed between the start and stop dates recorded by the participants on the label.
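As a rough illustration of the capsule-count calculation described above, the following Python sketch assumes one capsule per day was prescribed and that each bottle held the 100 capsules mentioned earlier. The function name, the day-counting convention, and the example dates are hypothetical and are not taken from the HOST protocol.

```python
from datetime import date

CAPSULES_PER_BOTTLE = 100  # quarterly supply described in the text


def capsule_count_adherence(remaining: int, start: date, stop: date,
                            dispensed: int = CAPSULES_PER_BOTTLE) -> float:
    """Return doses presumed taken per day elapsed, as a percentage.

    Assumes one capsule per day and counts elapsed days as stop - start
    (an illustrative convention, not necessarily the one used in HOST).
    """
    days_elapsed = (stop - start).days
    if days_elapsed <= 0:
        raise ValueError("stop date must be after start date")
    if not 0 <= remaining <= dispensed:
        raise ValueError("implausible capsule count")
    doses_taken = dispensed - remaining
    return 100.0 * doses_taken / days_elapsed


# Hypothetical bottle: 22 capsules left after 90 days of use.
rate = capsule_count_adherence(remaining=22,
                               start=date(2002, 1, 1),
                               stop=date(2002, 4, 1))
print(f"{rate:.1f}%")  # 86.7%
```

Bottles with missing or implausible dates raise an error here, which mirrors the exclusion of such bottles from the analysis.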


Based on the questionnaires and the capsule counts, we calculated the percentage of prescribed doses presumed to be taken using each assessment method. We defined adherence categories using ‘stringent’ and ‘liberal’ definitions as follows:

The stringent definition

Under-adherent: <85.7%, which equals more than one missed dose in the past week

Adherent: ≥85.7%, which equals no more than one missed dose in the past week

The liberal definition

Under-adherent: <71.4%, which equals more than two missed doses in the past week

Adherent: ≥71.4%, which equals no more than two missed doses in the past week

The thresholds were chosen because they correspond exactly to the smallest units that could be discerned from the questionnaire: 85.7% is 6 of 7 daily doses in a week and 71.4% is 5 of 7. Capsule counts and questionnaire responses for each participant were averaged over the centralized follow-up phase of the study (after the 3-month study visit). Bottles with missing, unreadable, or implausible start or stop dates were excluded. Data from 14 quarters of this phase were included in the analysis. We excluded the final year of follow-up because of small sample sizes and sporadic bottle returns during the closeout period that were not representative of other study periods.
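The categorization above can be sketched in Python as follows. This is a minimal illustration that assumes one prescribed dose per day and averages the per-quarter rates for a participant; the function names and the example responses are hypothetical, and the exact averaging procedure used in HOST may differ.

```python
from statistics import mean

STRINGENT = 6 / 7  # no more than one missed dose in the past week (about 85.7%)
LIBERAL = 5 / 7    # no more than two missed doses in the past week (about 71.4%)


def self_report_rate(missed_doses_past_week: int) -> float:
    """Adherence rate implied by the number of missed doses in the past week."""
    if not 0 <= missed_doses_past_week <= 7:
        raise ValueError("missed doses must be between 0 and 7")
    return (7 - missed_doses_past_week) / 7


def classify(rate: float, threshold: float) -> str:
    """Label a rate as adherent or under-adherent for a given threshold."""
    return "adherent" if rate >= threshold else "under-adherent"


# Hypothetical participant: missed 0, 1, and 3 doses in three quarterly interviews.
quarterly_missed = [0, 1, 3]
average_rate = mean([self_report_rate(m) for m in quarterly_missed])

print(round(100 * average_rate, 1))       # 81.0
print(classify(average_rate, STRINGENT))  # under-adherent
print(classify(average_rate, LIBERAL))    # adherent
```

The same participant can therefore be under-adherent by the stringent definition and adherent by the liberal one.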

In a second analysis, the capsule counts and questionnaire responses were compared with plasma folate levels obtained at the 3-month visit in participants assigned to the active treatment group. Adherence over the course of the study was also compared with the 3-month plasma levels in the treatment group. Research has shown that the ingestion of folic acid in high doses results in a large increase in plasma folate levels [17,19]. Therefore, high plasma drug levels should be associated with medication adherence in HOST. Capsule counts and questionnaire responses obtained at the study sites during the 3-month study visit were used in the 3-month plasma folate level analysis.

The human rights committee at the West Haven Cooperative Studies Program Coordinating Center and the institutional review boards of all participating sites approved the HOST study, and all participants provided written informed consent.

Statistical analysis

Cohen’s kappa (κ) statistic was calculated to determine the level of agreement between the capsule count and questionnaire responses using both stringent and liberal adherence definitions [20]. Because κ is highly dependent on prevalence it can be misleadingly low if not corrected for prevalence. We therefore also report a prevalence-adjusted bias-adjusted kappa (PABAK) [21].
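To make the relationship between these statistics concrete, the sketch below computes Cohen's κ, PABAK, and the bias and prevalence indices for a 2 × 2 agreement table. It uses the stringent-definition cell percentages reported in Table 1 as input; the function name is hypothetical, the indices are taken as absolute values, and the published figures were presumably computed from raw counts, so small rounding differences are possible.

```python
def agreement_stats(a: float, b: float, c: float, d: float) -> dict:
    """Agreement statistics for a 2 x 2 table.

    a = adherent by both measures, d = under-adherent by both measures,
    b and c = the two discordant cells.
    """
    n = a + b + c + d
    observed = (a + d) / n                 # observed agreement
    row_adherent = (a + b) / n             # adherent by questionnaire
    col_adherent = (a + c) / n             # adherent by capsule count
    expected = (row_adherent * col_adherent
                + (1 - row_adherent) * (1 - col_adherent))  # chance agreement
    return {
        "kappa": (observed - expected) / (1 - expected),
        "pabak": 2 * observed - 1,          # PABAK for a 2 x 2 table
        "bias_index": abs(b - c) / n,
        "prevalence_index": abs(a - d) / n,
    }


# Stringent-definition cell percentages from Table 1.
stats = agreement_stats(a=74.9, b=14.7, c=6.3, d=4.1)
print({name: round(value, 2) for name, value in stats.items()})
# {'kappa': 0.17, 'pabak': 0.58, 'bias_index': 0.08, 'prevalence_index': 0.71}
```

With a prevalence index this high, chance agreement is already about 75%, which is why κ is low even though the two measures agree for 79% of participants.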

Since the plasma folate levels were skewed, the nonparametric Wilcoxon rank-sum test was used in the second analysis to determine whether 3-month capsule count and questionnaire response adherence categories were associated with the distribution of plasma folate levels. The same test was used to compare 3-month plasma folate levels with mean patient adherence levels over the course of the study follow-up period. In addition, 3-month plasma folate levels were examined for correlation with 3-month capsule count and questionnaire response adherence data as continuous measures by calculating Pearson’s product-moment correlation coefficient (r).
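For readers unfamiliar with the rank-sum test, the following Python sketch shows the idea using the large-sample normal approximation. It is an illustration only: the function name and the folate values are made up, ties receive midranks but no tie or continuity correction is applied, and the SAS procedure used in the study may compute the p-value differently.

```python
import math


def wilcoxon_rank_sum(x, y):
    """Two-sided Wilcoxon rank-sum test via the normal approximation.

    Returns (W, z, p), where W is the sum of the ranks of sample x
    in the pooled data.
    """
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    n = len(pooled)
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        midrank = (i + j) / 2 + 1  # average of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = midrank
        i = j + 1
    n1, n2 = len(x), len(y)
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return w, z, p


# Made-up plasma folate values (ng/mL), not study data.
under_adherent = [310, 950, 1200, 1500]
adherent = [1400, 2100, 2300, 2600, 2900, 3100]
w, z, p = wilcoxon_rank_sum(under_adherent, adherent)
print(w, round(z, 2), round(p, 3))
```

Because the test uses only ranks, a few very high folate values in a skewed distribution do not dominate the comparison.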

We also used univariate and step-wise linear and logistic regression analyses to explore the relationships between 21 independent variables including medication side effect reports, baseline demographic and clinical characteristics previously associated with medication adherence [22], and the bottle return rate (number of bottles returned by each participant/number of bottles shipped to each participant × 100) as well as capsule count and self-report adherence levels to determine if there were any characteristics that were related to both missing data and the outcomes (adherence). These analyses were conducted to determine if missing data were missing at random or were non-ignorable and could have resulted in bias. The two-sided, unadjusted alpha levels were set at p ≤ 0.05 for the Wilcoxon rank-sum test and the linear and logistic regression analyses. SAS version 9.1 was used for these analyses (SAS Institute Inc., Cary, NC).

Results

Between September 2001 and October 2003, 2056 participants were enrolled in the HOST trial (1032 were randomized to active treatment and 1024 to placebo). Participants were followed for a median of 3.2 years. During the 14 quarters included in the analysis, 21,297 bottles of study medication were shipped to patients. Of these, 16,196 (76.0%) were returned to the central pharmacy for capsule counts. Unreadable start or stop dates required discarding 3168 bottles (19.6% of returned bottles). Of the 2056 randomized participants, 1745 (84.9%) had questionnaire results and 1378 (67.0%) had capsule count results. The final capsule count-questionnaire analysis set represented adherence data from 1352 (65.8%) HOST participants, 670 (49.6%) randomized to active treatment and 682 (50.4%) randomized to placebo, who returned at least one bottle and had at least one questionnaire response. Factors that contributed to missing data included the substantial proportion of HOST participants in both treatment groups who died during the course of the trial (43% in each group) and a smaller proportion who withdrew before the end of the trial (9.9% in the active treatment arm and 13.0% in the placebo arm) [17]. Delays in ascertaining deaths and withdrawals resulted in mailing bottles of study medication to participants who had already left the study.

By the stringent definition, capsule counts identified more participants who were under-adherent (18.8%) than self-reports (10.4%) did (Table 1). The PABAK was 0.58 (Table 1).

Table 1.

Agreement between adherence by capsule count vs questionnaire by stringent and liberal criteria (N = 1352 patients returning bottles and responding to questionnaires for each analysis)

| Questionnaire responses | Capsule counts, stringent^a: Adherent (%) | Capsule counts, stringent^a: Under (%) | Capsule counts, stringent^a: Total (%) | Capsule counts, liberal^b: Adherent (%) | Capsule counts, liberal^b: Under (%) | Capsule counts, liberal^b: Total (%) |
|---|---|---|---|---|---|---|
| Adherent (%) | 74.9 | 14.7 | 89.6 | 90.8 | 7.1 | 97.9 |
| Under (%) | 6.3 | 4.1 | 10.4 | 1.6 | 0.4 | 2.1 |
| Total (%) | 81.2 | 18.8 | 100.0 | 92.5^c | 7.5 | 100.0 |

^a Adherent, ≥85.7%; Cohen's kappa, 0.17; and PABAK, 0.58 (bias index = 0.08, prevalence index = 0.71).

^b Adherent, ≥71.4%; Cohen's kappa, 0.06; and PABAK, 0.83 (bias index = 0.05, prevalence index = 0.90).

^c Total does not sum due to rounding.

As expected, the liberal definition resulted in categorizing more participants as adherent by both measures (Table 1). The level of agreement between the two measures of adherence was also higher using the liberal definition (PABAK = 0.83) (Table 1).

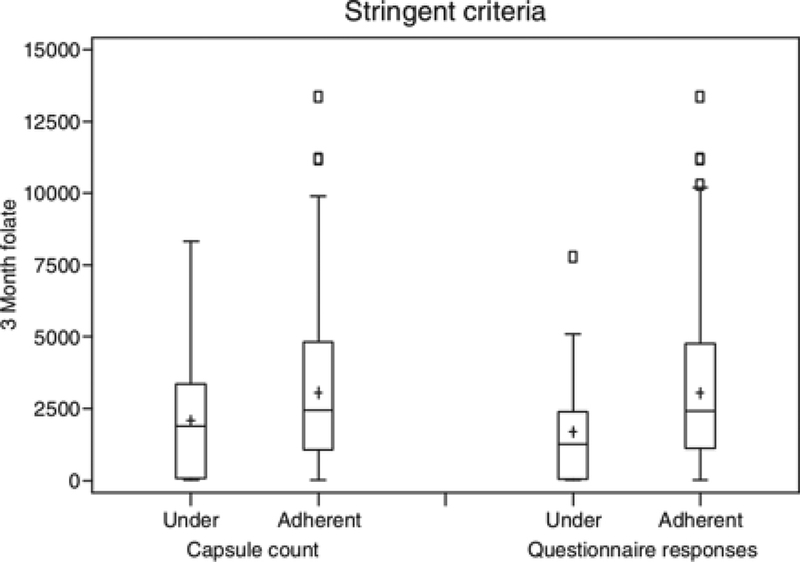

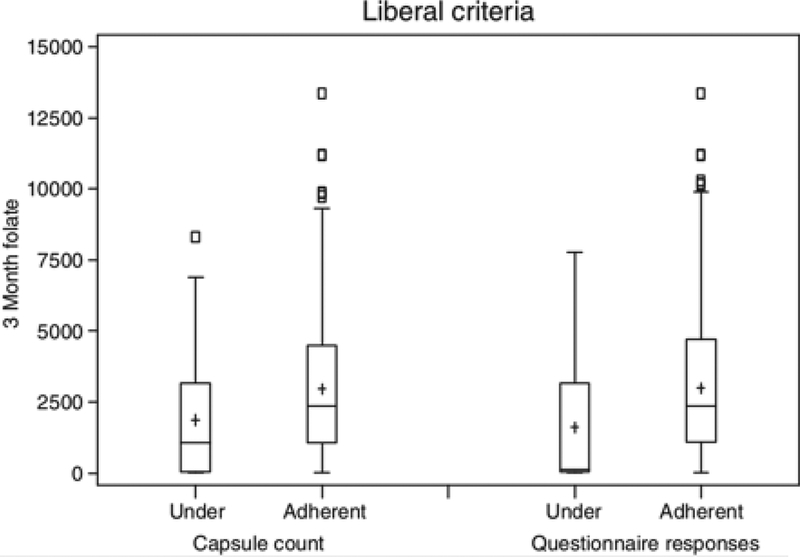

The plasma folate levels of the active treatment group at the 3-month visit were matched with capsule counts and questionnaire responses from that visit (N = 256 matched observations for the capsule count and 424 observations for the questionnaire analyses). Both capsule counts and questionnaire responses were closely associated with the median plasma folate levels using the stringent adherence definition categories (p = 0.005 and p = 0.002, respectively) and liberal adherence definition categories (p = 0.003 and p = 0.002, respectively) (Figures 1 and 2). Folate levels were also correlated at the 3-month visit with capsule counts (r = 0.20, p = 0.002) and questionnaire responses (r = 0.14, p = 0.003) examined as continuous measures. In addition, the 3-month plasma folate levels were significantly related to median adherence over the course of the study for capsule counts and questionnaire responses (respectively, stringent: p = 0.004 and p ≤ 0.001; liberal: p = 0.006 and p = 0.013).

Figure 1.

Plasma folate (ng/mL) of participants in the treatment group at 3 months (ordinate) compared to capsule counts in bottles returned at the 3-month visit and to questionnaire responses at the 3-month visit. The results are divided into ‘under-adherent’ (<85.7% of doses taken) and ‘adherent’ (≥85.7% of doses taken) by the stringent definition. The median folate values for the under-adherent (n = 64) and adherent (n = 192) groups according to capsule count were 1888 and 2452, respectively (p = 0.005). The median folate values for the under-adherent (n = 27) and adherent (n = 397) groups according to questionnaires were 1255 and 2424, respectively (p = 0.002).

Notes: The central line in the box indicates the 50th percentile (median) value; the + indicates the mean; the box represents the interquartile range (IQR) (25th and 75th percentiles), the ends of the whiskers represent the maximum value within 1.5 times the IQR, and the small boxes represent outlier values greater than 1.5 times the IQR.

Figure 2.

Plasma folate (ng/mL) of participants in the treatment group at 3 months (ordinate) compared to capsule counts in bottles returned at the 3-month visit and to questionnaire responses at the 3-month visit. The results are divided into ‘under-adherent’ (<71.4% of doses taken) and ‘adherent’ (≥71.4% of doses taken) by the liberal definition. The median folate values for the under-adherent (n = 34) and adherent (n = 222) groups according to capsule count were 1063 and 2365, respectively (p = 0.003). The median folate values for the under-adherent (n = 10) and adherent (n = 414) groups according to questionnaires were 133 and 2359, respectively (p = 0.002). Notes: As given in Figure 1.

Exploratory regression analyses of adherence-related variables, conducted to evaluate whether data were missing at random or could have contributed to bias, revealed several variables associated with missing data (as well as morbidity and mortality). However, only one of the 21 tested variables had a statistically significant relationship to both missing data and an adherence measure, indicating that the missing data are likely missing at random and not informatively missing.

Discussion

Few studies have evaluated patient medication adherence using three different methods simultaneously, including plasma drug levels. In their review of adherence assessment techniques used in drug trials reported in three major journals over a 12-year period, Jayaraman and colleagues found that capsule counts and self-report were the most common methods (33% and 25%, respectively) [3]. Drug level assays were used in 14% of trials. A combination of methods, usually counts and self-reports, was employed in 16%. Gossec et al. [7] confirmed these relative findings in their recent review of methods of assessing adherence in randomized controlled trials in six chronic diseases.

There is some disagreement in the literature regarding the appropriate number, cut-off points, and descriptions of agreement categories for kappa statistics [23]. According to Byrt, in considering a comparison free of prevalence and bias effects (kappa = PABAK), a kappa of 0.41–0.60 should be interpreted as fair, 0.61–0.80 as good, and 0.81–0.92 as very good agreement [23]. Thus, the PABAK values in this study indicate a fair (stringent definition) to very good (liberal definition) agreement between capsule count and questionnaire methods. These results were confirmed by the significant correlation between plasma folate levels and both capsule counts and questionnaire responses, suggesting that the questionnaire may be a good substitute for capsule counts in certain clinical trials. The self-report questionnaire method is better at identifying adherent patients than non-adherent patients, however. This result is consistent with the findings that capsule counts identified more patients who were under-adherent. Many patients fail to report nonadherence when questioned [2].

Our findings are consistent with some but not all reports on adherence. Matsui et al. [5] compared counts and patient diaries with electronic monitoring using the Medication Event Monitoring System (MEMS), which records the date and time a medication bottle is opened, to evaluate adherence to oral iron chelation therapy in 15 patients over 2 years. There was no difference between adherence determined from counts or diaries. MEMS-assessed adherence was lower than that determined by either counts or diaries. The authors suggested this could be partially explained by problems the patients had with MEMS that would lead to falsely low estimates. Liu et al. [24] found significant correlations between interview, pill count, and MEMS in their study of 108 outpatients receiving antiretroviral treatment. Svarstad et al. [18] tested the validity of the BMQ by comparing it to MEMS and found a correlation between the rate of dose omission by the BMQ and the rate of omission recorded by the MEMS. They also reported that the rate of dose omissions by counts for the month following enrollment (11%) was correlated with the MEMS rate (13%; r = 0.66, p = 0.001).

The results of other studies are more equivocal. Choo et al. [25] compared adherence rates for hypertension therapy using a questionnaire adapted from the BMQ, pharmacy records, counts, and MEMS. These investigators found high rates of self-reported adherence. Questions about forgotten doses were predictive of MEMS results, although the patient reports were imprecise. The authors suggested that patient-reported adherence is qualitatively informative. While there was only a moderate correlation between manual and MEMS counts (r = 0.52), the median adherence proportions by the two methods were similar. In a randomized study of elderly ambulatory care patients, Grymonpre and colleagues [26] reported that the pill count method demonstrated lower mean adherence compared to that determined by self-report. The authors attributed this discrepancy in part to early refills and assessing pill count adherence using only one bottle per patient.

It is difficult to draw conclusions from the findings of previous studies comparing adherence assessment methods because of methodological shortcomings [27]. For example, it is difficult to compare ‘self-reports’ to other methods because the questions asked of patients were framed differently, recall periods varied, and three different techniques of ‘self reports’ were used – interview, patient diary, and questionnaire [2]. These categories may be blurred further because an ‘interview’ may use a structured questionnaire, as in our study. Unless the adherence measurement technique is specified in detail, differing results are difficult to interpret. The distinction between interviews that likely employed a structured questionnaire and those that were flexible and less controlled may not be clear in review articles [1,27,28]. Methodological ambiguities and inconsistent assessment techniques in studies using self-reporting result in conflicting conclusions. Despite these limitations, it is widely accepted that self-reports and pill counts overestimate adherence [1,2,22].

Even biochemical methods to assess adherence, such as analysis of blood or urine drug, metabolite, or marker levels, have limitations. Unless samples are analyzed serially, these methods can only provide qualitative information about adherence by confirming that a study medication was taken sometime in the recent past [22]. Even when the pharmacokinetic parameters of the study drug are characterized well, uncertainty with respect to dose timing and individual variations in volume of distribution, serum protein levels, and drug metabolism prevent the use of drug or marker levels to quantify adherence [2].

Limitations of our study include our comparison of adherence over different time periods (1 week using the questionnaire, 3 months using the capsule counts, and at the 3-month visit using the plasma folate levels) but because the questionnaire and counts were repeated measures and the results for each participant were averaged over the course of the study, we believe the comparisons are valid for the purpose of assessing average adherence during the course of a clinical trial.

Other limitations include missing data owing to withdrawals, deaths, inability to locate participants after changes of address and telephone numbers and other temporary or permanent loss to follow-up, missed questionnaire adherence responses, inconsistent bottle returns, missing or unusable start and stop dates, and the inability to match participants’ plasma folate levels with capsule counts at the 3-month visit because of failure to return bottles. Although over 70% of dispensed bottles were included in our calculation of the adherence rate reported in HOST [17], the inconsistency in bottle returns and start–stop date documentation by participants prevented us from calculating adherence using dispensed bottles for all participants who had questionnaire responses. Despite quarterly phone call reminders and written instructions with each bottle, not all participants returned their bottles or recorded the start and stop dates consistently. HOST’s centralized follow-up design may have contributed to this difficulty. Additional reinforcement and other aids, for example, coupling a bottle-tracking system with specific reminders for bottles that were not returned, might improve the response rate but resources for the trial were limited (part of the reason for the centralized follow-up design) and it was not possible to include these personalized reminders in HOST. Moreover, HOST participants had a significant disease burden and multiple co-morbidities that undoubtedly contributed to the amount of missing and unusable returned bottles and missing questionnaires through participant mortality, morbidity, and study withdrawals.

While there were missing data in this study, the results from our analyses comparing baseline variables with missing data and medication adherence (one of 21 tested variables was significantly related to both missing data and an adherence measure) showed that it is unlikely that there was a systematic relationship between missing data and medication adherence that might have biased the study results. Given the number of tests conducted, a small number of variables associated with both missing data and an adherence measure could be expected due to chance alone. We note that the amount of missing data in our study is not substantially different from that reported in other studies. Curtin et al. [29], in a study of adherence to oral medications by hemodialysis patients (similar to HOST participants), found that 51% of their measures of adherence to antihypertensive therapy were usable in their analysis comparing different adherence measures (49% of the data were missing). Similarly, Hox and De Leeuw reported in their meta-analysis of 45 studies comparing completion rates in mailed, telephone, and face-to-face surveys that telephone surveys had an average completion rate of 67.2% (32.8% of the data were missing) [30].

To what extent our results can be extrapolated to studies of interventions using drugs with unknown tolerability or narrow therapeutic windows or to studies with a different questionnaire or frequency of follow-up is uncertain. It is worth noting that requiring the return of used medication bottles has value beyond that of assessing adherence and accounting for medication. It reinforces the importance of adherence to participants and the key role they play in the successful conduct of the study. This may be particularly valuable in trials with limited in-person follow-up.

In summary, our results show that a self-report questionnaire is potentially a reliable substitute for capsule counts. For some studies, however, particularly those involving drugs with a narrow therapeutic window or with unknown tolerability or studies in which a high-adherence threshold is required, it may be better to employ capsule counts or other independent measures of adherence since these measures may also improve adherence as well as provide evidence that may be useful in assessing the tolerability of the study drug.


Acknowledgments

• Funding

This study was sponsored by the Cooperative Studies Program, Department of Veterans Affairs Office of Research and Development, and was supported in part by PamLab LLC and Abbott Laboratories.

Footnotes

• Disclaimer Neither PamLab nor Abbott Laboratories was involved in the design or conduct of the study, the collection, management, analysis, and interpretation of the data, or review or approval of the manuscript.

Collaborators (175)

Warren S, Raisch D, Campbell H, Guarino P, Kaufman J, Petrokaitis E, Goldfarb D, Gaziano J, Jamison R, Jamison R, Gaziano J, Cxypoliski R, Goldfarb D, Guarino P, Hartigan P, Helmuth M, Kaufman J, Warren S, Peduzzi P, Levinsky N, Kopple J, Brown B Jr, Cheung A, Detre K, Greene T, Klag M, Motulsky A, Marottoli R, Allore H, Beckwith D, Farrell W, Feldman R, Mehta R, Neiderman J, Perry E, Kasl S, Zeman M, Young E, Belanger K, Lopez R, Grunsten N, Sanders P, Killian D, Shanklin N, Kaufman J, Dibbs E, Langhoff E, Tyrrell D, Crowley S, Piexoto A, DeLorenzo A, Joncas C, Miller RT, Kaelin K, Markulin M, Dev D, Sajgure D, Saklayen M, Neff H, Shook S, Chonchol M, Levi M, Clegg L, Singleton A, Abu-Hamdan D, Bey-Knight L, King K, Jones E, Palant C, Sheriff H, Simmons C, Sinks J, Wingo C, McAllister B, Sallustio J, Smith N, Popli S, Linnerud P, Zuluaga A, Dolson G, Tasby G, Agarwal R, Lewis R, Jasinski D, Sachs N, Veneck D, Rehman S, Basu A, Ingram K, Paul R, Raymond J, Holmes K, Wiegmann T, Abdallah J, Nagaria A, Smith D, Renft A, Finnigan T, Feldman G, Schmid S, Cooke C, Wall B, Patterson R, Contreras G, Jaimes E, O’Brien G, Ma K, Rust J, Otterness S, Batuman V, Kulivan C, Goldfarb D, Shea M, Modersitzki F, Dixon T, Pastoriza E, Titus L, Jamison R, Cook I, Anderson M, Palevsky P, Salai P, Anderson S, Walczyk J, Lindner A, Stehman-Breen C, Pascual E, Nugent-Carney J, Thomson S, Mullaney S, Dingsdale J, Rosado C, Azcona-Vilchez C, Betcher S, Rewakowski C, Cohen D, Flanagin G, Steffe J, Lohr J, Fucile N, Yohe L, Blumenthal S, Schmidt D, Jamison R, Helmuth M, Georgette G, Shannon T, Johnson H, Martin M, Gaziano J, Cxypoliski R, Best N, Pastore N, Jones D, Rayne A, Castrillon Z, Sather M, Warren S, Raisch D, Campbell H, Badgett C, Day J, Taylor Z, Peduzzi P, Antonelli M, Guarino P, Hartigan P, Durant L, Jobes E, Joyner S, Perry M, Petrokaitis E, Zellner S, O’Leary TJ, Huang GD.

References

- 1.Osterberg L, Blaschke T. Adherence to medication. N Engl J Med 2005; 353:487–97. [DOI] [PubMed] [Google Scholar]

- 2.Farmer KC. Methods for measuring and monitoring medication regimen adherence in clinical trials and clinical practice. Clin Ther 1999; 21: 1074–90. [DOI] [PubMed] [Google Scholar]

- 3.Jayaraman S, Rieder MJ, Matsui DM. Compliance assessment in drug trials: has there been improvement in two decades?. Can J Clin Pharmacol 2005; 12:e251–53. [PubMed] [Google Scholar]

- 4.Friedman LM, Furberg CD, DeMets DL. Participant adherence Fundamentals of Clinical Trials (3rd edn, Chapter 13). Springer-Verlag, New York, 1998. [Google Scholar]

- 5.Matsui D, Hermann C, Klein J, et al. Critical comparison of novel and existing methods of compliance assessment during a clinical trial of an oral iron chelator. J Clin Pharmacol 1994; 34: 944–49. [DOI] [PubMed] [Google Scholar]

- 6.Boudes P. Drug compliance in therapeutic trials: a review. Control Clin Trials 1998; 19: 257–68. [DOI] [PubMed] [Google Scholar]

- 7.Gossec L, Tubach F, Dougados M, Ravaud P. Reporting of adherence to medication in recent randomized controlled trials of 6 chronic diseases: a systematic literature review. Am J Med Sci 2007; 334: 248–54. [DOI] [PubMed] [Google Scholar]

- 8.FDA Guidance for Industry. General considerations for the clinical evaluation of drugs. 1974. Available at:http://www.fda.gov/downloads/Drugs/GuidanceComplianceRegulatoryInformation/Guidances/ucm071682.pdf (accessed 27 May 2011).

- 9.21 CFR 312.23(a)(6) and 21 CFR 312.56. In: Code of Federal Regulations.

- 10.FDA Guidance for Industry. ICH guideline on general considerations for clinical trials (E8). In: 62 Fed Reg 66113; 17 December 1997. [Google Scholar]

- 11. Spilker B. Methods of assessing and improving patient compliance in clinical trials. In Spilker B (ed.). Guide to Clinical Trials. Raven Press, Ltd, New York, NY, 1991, 102–14.

- 12. Zelikovsky N, Schast A. Eliciting accurate reports of adherence in a clinical interview: development of the medical adherence measure. Pediatr Nurs 2008; 34: 141–46.

- 13. Cramer JA, Mattson RH, Prevey ML, et al. How often is medication taken as prescribed? A novel assessment technique. JAMA 1989; 261: 3273–77.

- 14. Cramer JA, Scheyer RD, Mattson RH. Compliance declines between clinic visits. Arch Intern Med 1990; 150: 1509–10.

- 15. Feinstein AR. On white-coat effects and the electronic monitoring of compliance. Arch Intern Med 1990; 150: 1377–78.

- 16. Jamison RL, Hartigan P, Gaziano JM, et al. Design and statistical issues in the homocysteinemia in kidney and end stage renal disease (HOST) study. Clin Trials 2004; 1: 451–60.

- 17. Jamison RL, Hartigan P, Kaufman JS, et al. Effect of homocysteine lowering on mortality and vascular disease in advanced chronic kidney disease and end-stage renal disease: a randomized controlled trial. JAMA 2007; 298: 1163–70.

- 18. Svarstad BL, Chewning BA, Sleath BL, Claesson C. The Brief Medication Questionnaire: a tool for screening patient adherence and barriers to adherence. Patient Educ Couns 1999; 37: 113–24.

- 19. Sunder-Plassmann G, Fodinger M, Buchmayer H, et al. Effect of high dose folic acid therapy on hyperhomocysteinemia in hemodialysis patients: results of the Vienna multicenter study. J Am Soc Nephrol 2000; 11: 1106–16.

- 20. Cohen J. A coefficient of agreement for nominal scales. Educ Psychol Meas 1960; 20: 37–46.

- 21. Byrt T, Bishop J, Carlin JB. Bias, prevalence and kappa. J Clin Epidemiol 1993; 46: 423–29.

- 22. Krueger KP, Berger BA, Felkey B. Medication adherence and persistence: a comprehensive review. Adv Ther 2005; 22: 313–56.

- 23. Byrt T. How good is that agreement? Epidemiology 1996; 7: 561.

- 24. Liu H, Golin CE, Miller LG, et al. A comparison study of multiple measures of adherence to HIV protease inhibitors. Ann Intern Med 2001; 134: 968–77.

- 25. Choo PW, Rand CS, Inui TS, et al. Validation of patient reports, automated pharmacy records, and pill counts with electronic monitoring of adherence to antihypertensive therapy. Med Care 1999; 37: 846–57.

- 26. Grymonpre RE, Didur CD, Montgomery PR, Sitar DS. Pill count, self-report, and pharmacy claims data to measure medication adherence in the elderly. Ann Pharmacother 1998; 32: 749–54.

- 27. Nichol MB, Venturini F, Sung JC. A critical evaluation of the methodology of the literature on medication compliance. Ann Pharmacother 1999; 33: 531–40.

- 28. Garber M, Nau D, Erickson S, et al. The concordance of self-report with other measures of medication adherence. A summary of the literature. Med Care 2004; 42: 649–52.

- 29. Curtin RB, Svarstad BL, Keller TH. Hemodialysis patients’ noncompliance with oral medications. ANNA J 1999; 26: 307–16.

- 30. Hox JJ, De Leeuw ED. A comparison of nonresponse in mail, telephone, and face-to-face surveys. Applying multilevel modeling to meta-analysis. Qual Quant 1994; 28: 329–44.
