Abstract

Objects often appear with some amount of occlusion. We fill in missing information using local shape features even before attending to those objects—a process called amodal completion. Here we explore the possibility that knowledge about common realistic objects can be used to “restore” missing information even in cases where amodal completion is not expected. We systematically varied whether visual search targets were occluded or not, both at preview and in search displays. Button-press responses were longest when the preview was unoccluded and the target was occluded in the search display. This pattern is consistent with a target-verification process that uses the features visible at preview but does not restore missing information in the search display. However, visual search guidance was weakest whenever the target was occluded in the search display, regardless of whether it was occluded at preview. This pattern suggests that information missing during the preview was restored and used to guide search, thereby resulting in a feature mismatch and poor guidance. If this process were preattentive, as with amodal completion, we should have found roughly equivalent search guidance across all conditions because the target would always be unoccluded or restored, resulting in no mismatch. We conclude that realistic objects are restored behind occluders during search target preview, even in situations not prone to amodal completion, and this restoration does not occur preattentively during search.

Keywords: visual search, eye movements, occlusion, target template, perceptual filling-in

Introduction

Through a process called amodal completion, we act as though we can see occluded parts of objects even though there is no actual percept of the occluded area. We presume that the object continues behind the occluder and that unseen parts exist. For example, we treat the black square in Figure 1A as being a complete square behind a white bar. The two disconnected black fragments are grouped together and responded to as though they are a unified, completed object.

Figure 1.

Restoring missing information from complex objects is likely to involve more processes than amodal completion. Classic examples of cases where amodal-completion processes (A) are engaged and (B) are not. In everyday contexts (C), some objects will engage traditional amodal-completion processes, but many will not. We can still, however, behave as though we know what missing information is present for the majority of these objects. These filling-in processes technically meet the definition of amodal completion (completing missing information without a resulting percept) but are qualitatively different from the processes in (A), because they do not link contours across two sides of an occluder. (D) An extreme case, in which half of an object is occluded and the visible contours cannot relate behind the occluder in a way that would allow them to merge (Tse, 1999b). As in (B), low-level completion processes (in which contours are matched to linked parts across an occluder) are not expected here. However, through knowledge of the object category, we know what the missing information is likely to be. Experiment 1 in the current study uses stimulus arrangements resembling (D).

We do not, however, act as though we can see the hidden part of the square extending behind the white bar in Figure 1B. The same grouping process also explains this difficulty, because there is no fragment on the other side of the occluder to link to the visible fragment. Completion is prevented because amodal completion proceeds through the linking of matching fragments (Rensink & Enns, 1998) or because this shape allows for too many interpretations (Singh, 2004).

Amodal completion is generally thought to proceed through several stages of autonomous organizational mechanisms (Rensink & Enns, 1998). First, the process begins with boundary assignment: For completion to work, any edge created by occluders must be assigned to the occluder rather than the occluded object. To complete the black square in Figure 1A, the visual system must identify that the edges along the sides of the white bar exist because of the white bar and are not part of the black square. Then fragments that are separated by occluders are linked or grouped together, to varying degrees depending on the type of occluder (more strongly with 3-D than with 2-D occluders). This linking occurs through figural, shape-based properties such as local contours and the presence of T- or L-junctions. Global cues may also be important at this stage (Plomp, Nakatani, Bonnardel, & Leeuwen, 2004; Van Lier, Van der Helm, & Leeuwenberg, 1995). Finally, these linked fragments are functionally treated as belonging to the same object. The result is some form of representation on which we can act as though at least some missing information is actually there. Yet we have no conscious, salient visual percept of information behind the occluder—that is, we do not actually see the missing parts of objects.

Prior results suggest that the amodal-completion process is very fast, early, and preattentive, and that it is completed within around 300 ms across a wide variety of stimulus sets. Although the speed of completion varies across tasks and stimuli, changes in behavioral data plateau around 250–300 ms (Plomp et al., 2004; Shore & Enns, 1997). With simple stimuli (e.g., squares, circles, and bars), amodal completion begins very quickly: Differences resulting from amodal completion have been found as early as 15 ms after stimulus onset (Murray, Foxe, Javitt, & Foxe, 2004), with the representation of the stimulus unfolding further over time (Rauschenberger, Liu, Slotnick, & Yantis, 2006). This process is preattentive (Davis & Driver, 1998; Rensink & Enns, 1998). With realistic images, completion follows a similarly rapid time course: Differences in event-related potentials due to completion emerge at least as early as 120–180 ms after stimulus onset (Johnson & Olshausen, 2005).

But is there also a role for higher level cognitive information? In Figure 1C, as in real life, we act as though the cars and the truck are complete objects. This is often despite the lack of matching fragments on opposite sides of the occluders. Such matching fragments—or edges that meet behind an occluder—are necessary for amodal completion (Rensink & Enns, 1998; Tse, 1999a, 1999b), and yet we can report that these objects extend beyond their occluders. This process, which has been called recognition from partial information (Kellman, 2003), probably involves using higher level information (such as global symmetry) to recognize that a part might belong to a complete (but occluded) object.

Note that because there is no salient visual percept of the missing area, and these objects are functionally treated as complete (at least to the extent of affecting subjective reports), this process technically falls under the traditional operational definition of amodal completion. However, to distinguish these different mechanisms, we will use the term amodal completion to refer only to amodal completion as it is traditionally understood—the linking of fragments by completing contours using shape-based features. Amodal completion is presumed to operate using specific underlying processes and under very specific sets of circumstances that may not apply to higher level processes. We will refer to higher level mechanisms as restoration and the output of this process as a restored object representation. When discussing the process of inferring missing information more generally, we will use the term filling in. Thus, amodal completion is the rapid, preattentive shape-based filling-in process that has been extensively studied with simple stimuli. When more realistic stimuli are used, a higher level restoration process might also serve to fill in objects.

Although some researchers have suggested that these restoration and amodal-completion processes are different (Guttman & Kellman, 2004; Kellman, 2003), the details of this distinction have not been thoroughly explored. Amodal completion uses local shape features; occurs rapidly and early (Rensink & Enns, 1998), preattentively and in parallel across the search display (Davis & Driver, 1998); and does so in an obligatory way, since it occurs even when it is ultimately detrimental to task performance (Davis & Driver, 1998; He & Nakayama, 1992; Rauschenberger & Yantis, 2001; Rensink & Enns, 1998). Which of these aspects, if any, are also true of restoration?

The present study has two broad and interrelated aims. The first is to explore the role of object-category representation on restoration. To the extent that we have learned basic-level categorical features for a target object, it is possible that features can be generated for the parts of that object that are occluded. Do these restoration processes only work to allow subjective reporting of an object's existence, or can the restored features also guide our attention and affect our goal-directed behavior? A second aim of this study is to test the possibility that preattentive features may be sufficient for the visual system to interpolate missing information and restore occluded parts of objects. Specifically, we test whether restoration can occur preattentively across an array of objects in a visual-search task or whether it requires each object to be attended before filling in occurs.

Guidance and restoration in visual search

Visual search typically involves attention (and gaze) being serially guided to locations having the greatest match between the visual features from the search display and the top-down features from a target template (the features of the search target held in memory after they are either provided by a cue or preview or learned throughout the experiment; e.g., Wolfe, 1994). When filling in makes a distractor look more like a target object, participants will be more likely to attend to those distractor objects, thus slowing target detection. In general, search is fast when distractors are dissimilar to the target and slower and less guided when they are similar to the target (Alexander & Zelinsky, 2011, 2012; Duncan & Humphreys, 1989; Treisman, 1991). These effects are graded, with better matches between the target template and the target as it appears in the search display resulting in targets being more quickly fixated and more likely to be the first objects fixated (Schmidt & Zelinsky, 2009; Vickery, King, & Jiang, 2005; Wolfe & Horowitz, 2004). Because these better matches—and the resulting improvements in the direction of eye movements to the target—are thought to be the result of a stronger guidance signal (Wolfe, 1994; Wolfe, Cave, & Franzel, 1989), for the remainder of this article we will refer to two measures as reflecting increases in the strength of the guidance signal: increases in the rate at which the target is the first object fixated, and faster times to fixate the target.
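To make the template-matching idea concrete, here is a toy sketch of our own (not Guided Search itself and not the model used in this study): each object is summarized by a hypothetical feature vector, an object's priority is its cosine similarity to the target template, and attention visits objects in order of decreasing priority. The feature values and the function names `cosine_similarity` and `visit_order` are invented for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def visit_order(template, items):
    """Indices of display items sorted from best to worst template match (a toy guidance signal)."""
    scores = [cosine_similarity(template, features) for features in items]
    return sorted(range(len(items)), key=lambda i: scores[i], reverse=True)

# Hypothetical 4-feature descriptions: item 0 is the target, items 1-3 are same-category distractors.
template = [1.0, 0.8, 0.2, 0.6]
display = [
    [1.0, 0.8, 0.2, 0.6],  # target, identical to the template
    [0.9, 0.1, 0.7, 0.5],
    [0.2, 0.9, 0.4, 0.1],
    [0.5, 0.5, 0.9, 0.9],
]
order = visit_order(template, display)
print(order)
```

In this toy, a closer template-target match raises the target's priority relative to the distractors, which is the graded relationship the paragraph describes; real guidance signals are noisy, so the best match is only more likely, not certain, to be fixated first.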

Surprisingly little is known about the target template, despite decades of research. What features can guide search are underspecified (Wolfe & Horowitz, 2004, 2017), and it is still unclear precisely how those features are then consolidated into a target template. The target template is thought to reside in visual working memory (Woodman, Luck, & Schall, 2007), where load is known to affect amodal completion (H. Lee & Vecera, 2005), which suggests that target templates might use at least some filled-in information. To the extent that the target template includes restored features, this suggests that target templates are formed at least partly from higher level information rather than just the pixels that are presented, including features other than the basic visual features which have traditionally been considered as forming the template.

In two experiments, the present study examines whether restoration occurs during search tasks, both at preview and in parallel across visual search displays. In doing so, we are extending the literature relating amodal completion and visual search to a different, higher level filling-in mechanism, and extending the stimulus class previously used to study filling-in processes in visual search contexts to real-world objects. We rule out possible roles of other filling-in processes in our data in Experiment 1 by placing occluders such that they cover half of an object and objects do not extend across the occluders, and in Experiment 2 by removing half of the object, rather than using a visible occluder. In both cases, there are no fragments that can be linked across occluders, and low-level amodal completion should not engage—as in Figure 1D. These cases do, however, provide a situation where higher level restoration mechanisms may provide valuable information to the visual system and are therefore likely to be used.

In the present work, we measured completion in terms of how directly gaze was guided to targets that appeared occluded or not in the search display. We chose this approach, rather than creating displays where completion could make the target more similar to distractors, because it allows for the same distractors to be counterbalanced across conditions (removing item-specific confounds that different distractors could create) and provides us with a direct measure (eye movements) rather than solely inferential response-time measures of guidance. There is substantial evidence that attention and eye movements are actively directed or guided toward objects that are more similar to target objects (for reviews on guidance of, respectively, eye movements and attention, see Chen & Zelinsky, 2006; Wolfe, 1994).

Unlike previous studies of occlusion in visual search tasks, in which participants searched for the same target across blocks of trials (He & Nakayama, 1992; Rauschenberger & Yantis, 2001; Rensink & Enns, 1998; Wolfe et al., 2011), the present study manipulated occlusion of the target at two phases of the search task: during the preview and during the search display. Occlusion during preview, at the time of target encoding, has not previously been manipulated independently of occlusion during the actual search display. This manipulation may provide some insight into the character of the target template, while simultaneously avoiding a potential confound. Specifically, if the preview is not explicitly manipulated, then it is not clear what representation participants are using. If the task is to search for a square occluded by a circle with no spatial separation, what would participants search for? They might use the unique feature (the notch), which would speed search, or they might use a filled-in target template (a square behind a circle), which would result in inefficient search because of its similarity to the distractors. That inefficient search would be due not to preattentive filling in (or any completion in the search display) but rather to the use of a different target definition. The present design both avoids this potential confound and, importantly, tests whether restored features can inform the target template. Do participants use a restored description of a target when it is occluded at preview?

Experiment 1

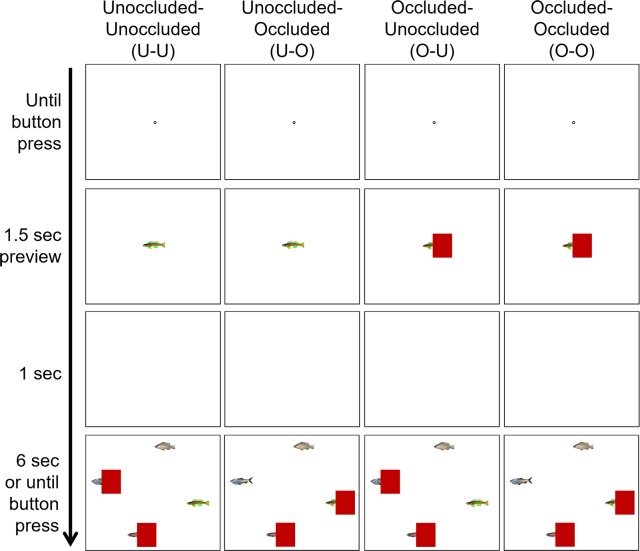

In Experiment 1 we independently varied whether the target was occluded during preview and in the search display. The target could be unoccluded at preview and then either unoccluded in the search display (U-U) or occluded (U-O). Similarly, it could be occluded at preview and then either unoccluded in the search display (O-U) or occluded (O-O). See Figure 2 for a schematic of the procedure. These four conditions enabled us to assess restoration in terms of match or mismatch between the target representation encoded from the preview and the target representation as encoded from the search display.

Figure 2.

The procedure from Experiment 1. The target was always present and always shared the same category (e.g., fish) as the distractors.

At both the preview and search phases of a trial, there are several possible interpretations of an occluded target. One possibility is that occluded objects in the preview or the search display (or both) might be either fully or partially restored into whole objects. Another possibility is that an occluded object (at preview or in the search display) could be treated as half of an object, with no restoration. Because guidance varies directly with the degree of match between a top-down representation of the target formed at preview and the target in the search display (Alexander & Zelinsky, 2012), we can ask whether the target is restored at either phase during search by measuring guidance. If restoration happens at one phase and not the other, the resulting mismatch would decrease guidance relative to conditions where mismatch is not expected. If, similar to amodal completion, restoration occurs preattentively and in parallel across the visual field, then objects in the preview and the search display would always appear complete. Therefore, the target template would always match the search display: Participants would always search for whole (unoccluded or restored) objects, and all objects in the search display would be whole (unoccluded or restored). To the extent that this occurs, we will find strong search guidance in all conditions, with no effects of preview or display occlusion.

If, however, restoration is not preattentive, then the objects in the search display will not be restored before eye movements are made toward the target. The preview, however, could still be restored, and the target template might include restored features, because the preview is always presented where the participant is looking and presumably attending. The restored preview would then mismatch the target in occluded search displays (O-O and U-O), resulting in weaker attentional guidance to the target. Performance in the U-U and O-U conditions, however, should be good; in both cases, a whole or restored target template would match the whole unoccluded target in the search display. This match would result in a strong guidance signal. If restoration requires attention, we predict that, irrespective of whether the target is occluded during preview, search guidance will be poorest whenever the target is occluded in the search display (U-O and O-O).

Restoration might also occur in the search display after the object is attended. Visual search tasks are often decomposed into two stages (Alexander, Schmidt, & Zelinsky, 2014; Alexander & Zelinsky, 2012; Castelhano, Pollatsek, & Cave, 2008): an early stage in which attention is guided to an object, and a later verification or recognition stage in which participants determine whether the attended object is the target. Overall reaction times and accuracy are poor indicators of which process is affecting search. To distinguish between the early, preattentive aspects of search and the later recognition and verification aspects, we use eye tracking to derive two measures: the time between the onset of the search display and the first fixation on the target (time to target), and the time participants take to make their response after fixating the target (verification time), an epoch that should mainly reflect target recognition. If restoration occurs after objects are fixated, we would expect the preview to be restored, because participants fixate the preview. Then, after onset of the search display, we predict two things. First, in measures of guidance before the target is fixated, we will find relatively poor guidance whenever the target is occluded in the search display (U-O and O-O), because participants have an unoccluded or restored template that mismatches the occluded target in the search display. Second, in measures of verification time, we predict roughly equal performance across preview conditions, because after guidance is complete and the target has been fixated, the target in the search display is restored. The restored or unoccluded search display would then match the restored or unoccluded preview in all conditions, but only for measures assessing performance after the target is fixated (verification time). Verification times may be longer on trials where the target is occluded in the search display (relative to unoccluded search displays), as the restoration process would presumably take some additional time.

Another possibility is that restoration may not occur at all, even while the objects are attended. If restoration is not automatic, participants may choose not to restore the targets. The complex objects we are using as targets may also be too difficult to restore. As a result, if restoration does not occur, performance should depend only on the visible aspects of the objects. This would result in better performance whenever the representations match (O-O, U-U, and O-U) compared to when they do not (U-O). Note that the O-U condition consists of matching representations if the occluder is treated as task irrelevant and outside the effective set size of the search task. The target features from the visible half of the occluded preview are present in the unoccluded search display, leading to a perfect match. In the U-O condition, however, participants may search for features from the half of the previewed target that becomes occluded in the search display, leading to a poor match. This is also true if participants use more holistic features (such as global shape) or other features using information from both halves of the objects. Note that poor performance in U-O is evidence for a lack of restoration in the search display but does not rule out the possibility of restoration during the preview (which would be evident in a main effect of display condition).
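The predictions from the three accounts above can be summarized in a small worked model. This is a sketch under stated assumptions, not the authors' analysis: a representation is a hypothetical set of feature labels (whole object or visible half), and guidance is predicted to be good when every template feature is available in the display target (a subset rule that encodes the assumption, stated above, that the occluder is ignored, so a half-object template still matches an unoccluded target). The labels and function names are ours.

```python
# Hypothetical feature-set labels standing in for target representations.
WHOLE = frozenset({"left_half", "right_half"})
# When occluded, only one half is visible. On O-O trials the same side is occluded
# at preview and in the search display, so a single label suffices.
HALF = frozenset({"left_half"})

CONDITIONS = ("U-U", "U-O", "O-U", "O-O")

# (restored at preview?, restored in the search display before fixation?)
HYPOTHESES = {
    "preattentive restoration": (True, True),
    "restoration requires attention": (True, False),
    "no restoration": (False, False),
}

def representation(occluded: bool, restored: bool) -> frozenset:
    """Whole if the object is unoccluded or restored; otherwise only the visible half."""
    return WHOLE if (not occluded or restored) else HALF

def good_guidance(condition: str, restore_preview: bool, restore_display: bool) -> bool:
    """Assumed rule: guidance is good when every template feature is present in the display target."""
    preview, display = condition.split("-")
    template = representation(preview == "O", restore_preview)
    target = representation(display == "O", restore_display)
    return template <= target  # subset test on feature sets

def predicted_poor_guidance(hypothesis: str) -> list:
    """Conditions in which the template/display mismatch predicts weak guidance."""
    restore_preview, restore_display = HYPOTHESES[hypothesis]
    return [c for c in CONDITIONS if not good_guidance(c, restore_preview, restore_display)]

for name in HYPOTHESES:
    print(name, predicted_poor_guidance(name))
```

Under this encoding, preattentive restoration predicts no mismatch, attention-dependent restoration predicts weak guidance in U-O and O-O, and no restoration predicts a mismatch only in U-O. Setting both flags to `True` for the post-fixation stage corresponds to the verification-stage account, in which verification times should be roughly equal across preview conditions.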

Method

Participants

Sixteen students from Stony Brook University, with a mean age of 20 years, participated in exchange for course credit. All participants reported normal or corrected-to-normal vision. All participants provided written informed consent in adherence with the principles of the Declaration of Helsinki.

Stimuli and apparatus

Target and distractor objects were selected from the Hemera Photo-Objects collection (Hemera Technologies, Gatineau, Canada) and resized to ∼2.7° of visual angle. Nine different categories of objects were included: cars, fish, butterflies, teddy bears, chairs, flowers, bottles, guns, and swords (see Supplementary Materials for images of the selected objects). Each exemplar from a given category appeared only once throughout the experiment. One target and three distractors from the same category appeared equally spaced (∼12.2° center-to-center distance between neighbors) in each search display, positioned on an imaginary circle with a radius of ∼8.7°.
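As a quick check on the display geometry, the center-to-center distance between neighboring objects placed evenly on a circle is the chord 2r·sin(π/n). The sketch below treats degrees of visual angle as flat coordinates, which is a small-angle approximation we are assuming; with r ≈ 8.7° and n = 4 it gives about 12.3°, in line with the approximate ∼12.2° reported above given that both figures are rounded.

```python
import math

def neighbor_distance(radius_deg: float, n_items: int) -> float:
    """Chord length between adjacent items evenly spaced on a circle.

    Assumes visual-angle coordinates can be treated as a flat plane
    (small-angle approximation).
    """
    return 2.0 * radius_deg * math.sin(math.pi / n_items)

print(round(neighbor_distance(8.7, 4), 2))
```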

Gaze position was recorded using an EyeLink 1000 eye tracker (SR Research, Ottawa, Canada) sampling at 1,000 Hz with default saccade-detection settings. Participants used a chin rest and were seated 60 cm from the screen. Trials were displayed on a Dell Trinitron CRT monitor at a resolution of 1,024 × 768 pixels.
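For readers reproducing the stimuli, the physical size of an object subtending θ degrees at viewing distance d is 2d·tan(θ/2). At the 60-cm distance used here, a ∼2.7° object is about 2.83 cm wide. Converting to pixels additionally requires the monitor's physical width, which is not reported above, so `screen_width_cm` in this sketch is a hypothetical parameter.

```python
import math

def size_cm(visual_angle_deg: float, distance_cm: float) -> float:
    """Physical extent that subtends visual_angle_deg at distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(visual_angle_deg) / 2.0)

def size_px(visual_angle_deg: float, distance_cm: float,
            screen_width_cm: float, screen_width_px: int) -> float:
    """Extent in pixels; screen_width_cm is an assumed value, not taken from the study."""
    return size_cm(visual_angle_deg, distance_cm) * screen_width_px / screen_width_cm

print(round(size_cm(2.7, 60), 2))
# Hypothetical 40-cm-wide visible area at 1,024 pixels across:
print(round(size_px(2.7, 60, 40.0, 1024), 1))
```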

Design and procedure

Participants searched for a previewed target in four-object search displays within a two-by-two experimental design: On 50% of trials, half of the preview was covered by a red occluder, and on 50% of trials targets were half occluded by the same red shape in the search display. This led to four preview/search conditions: target occluded at preview and in the search display (O-O), target unoccluded both at preview and in the search display (U-U), target occluded at preview but not in the search display (O-U), and vice versa (U-O). Preview condition was blocked and the order of these blocks counterbalanced across participants. Display condition was interleaved, so that whether the target was occluded or not at preview did not cue whether it would be occluded in the search display. The side of occlusion (left or right) was counterbalanced across participants and conditions, as was the assignment of targets to occlusion condition (U-U, U-O, O-U, O-O). In the O-O condition, the object was occluded on the same side at preview and in the search display. Each participant completed a total of 144 trials (36 per condition).
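The structure of this design (preview condition blocked, display condition interleaved within blocks, 36 trials per condition) can be sketched as a trial-list generator. This is a hypothetical reconstruction, not the authors' experiment code: the block-order rule (alternating by participant parity) and the even left/right split of occlusion side within each condition are our assumptions, and the counterbalanced assignment of specific target exemplars to conditions is not modeled.

```python
import random
from collections import Counter

def build_trial_list(participant_id, n_per_condition=36, seed=None):
    """Return one participant's trials as dicts (hypothetical reconstruction of the design).

    Preview condition is blocked; display condition and occlusion side are shuffled
    within each block. Assumes n_per_condition is even so that left/right sides split evenly.
    """
    rng = random.Random(seed)
    # Assumed counterbalancing rule: even-numbered participants get the unoccluded-preview
    # block first, odd-numbered participants the occluded-preview block first.
    block_order = ["U", "O"] if participant_id % 2 == 0 else ["O", "U"]
    trials = []
    for preview in block_order:
        block = [
            {"condition": f"{preview}-{display}", "preview": preview,
             "display": display, "side": side}  # side = which half an occluder covers, if any
            for display in ("U", "O")
            for side in ("left", "right")
            for _ in range(n_per_condition // 2)
        ]
        rng.shuffle(block)  # interleave display conditions within the block
        trials.extend(block)
    return trials

trials = build_trial_list(participant_id=0, seed=1)
print(Counter(t["condition"] for t in trials))
```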

Each trial began with the participant fixating a central dot and pressing a button on the controller to initiate the trial (Figure 2). A target preview then appeared for 1.5 s, which was either half occluded by a red shape (O-U and O-O) or unoccluded (U-U and U-O). This was followed by a blank screen (appearing for 1 s) and then a search display, consisting of three distractors and the target, which was either half occluded (O-O and U-O) or unoccluded (U-U and O-U). In this experiment, distractor objects shared the same category as the target, requiring participants to search for a specific target and not just the target category.

Half of the items in the search display were always occluded. Because trials where the target was occluded in the search display were interleaved with trials where it was not, and because these trials occurred equally often, the presence of occluders in the search display could not cue participants toward items that were likely to be the target. Similarly, occlusion of the target at preview did not signal whether it would be occluded in the search display.

Participants were required to press a button while fixating the target. If they pressed the button while not fixating any object (i.e., while looking at a blank area of the screen or while their eyes were closed), an error signal sounded and they were reminded to look at the target when pressing the button. If participants were fixating a distractor when they pressed the button, the trial was counted as an error, an error signal sounded, and the word “incorrect” appeared on screen for 500 ms. Trials timed out after 6 s; 0.17% of trials timed out.
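The response rules above amount to labeling each button press by where gaze was at that moment. A minimal sketch follows; the function and label names are hypothetical. Because the text does not say whether presses made while fixating no object ended the trial, they receive their own label rather than being folded into errors.

```python
from typing import Optional

TIMEOUT_MS = 6000  # trials timed out after 6 s

def classify_response(fixated_obj: Optional[int], target: int, rt_ms: Optional[float]) -> str:
    """Label a trial from the object fixated at the button press.

    fixated_obj: index of the fixated object, or None if gaze was on a blank area
    (or the eyes were closed). rt_ms: press time from display onset, or None if no press.
    """
    if rt_ms is None or rt_ms > TIMEOUT_MS:
        return "timeout"
    if fixated_obj is None:
        return "no_object"  # error tone plus a reminder to look at the target
    if fixated_obj == target:
        return "correct"
    return "error"  # distractor fixated: error tone and "incorrect" shown for 500 ms

print(classify_response(fixated_obj=2, target=2, rt_ms=1250))
```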

Results and discussion

In Experiment 1, we asked whether restoration operates preattentively and in parallel, in visual search with realistic objects in a context where other filling-in processes should not occur. In this case, the target template would always match the search display—both always either unoccluded or restored—and we predicted strong search guidance in all conditions, with no effects of preview or display occlusion. If restoration occurs but requires attention, search guidance will always be worse whenever the target is occluded in the search display, regardless of whether or not it was occluded during the preview (U-O and O-O): The preview will be unoccluded or restored and will be matched against a search display that is not restored. If restoration never occurs, performance will be poor in U-O as a result of mismatch (half of the object is missing) but good in O-O, U-U, and O-U, where the representations match.

The false alarm rate was very low in all conditions (<3%). Accuracy was 1% lower for occluded search displays, F(1, 15) = 9.23, p < 0.01, and marginally higher for occluded previews, F(1, 15) = 4.66, p = 0.05. Preview and display type did not interact (p = 0.21). We interpret this pattern cautiously, however, because accuracy was near ceiling in this experiment, which may be masking an interaction: Accuracy in the U-O condition was numerically lower than in the other three conditions (97.0% vs. 98.1%–98.8%). Error trials were excluded from all subsequent analyses.
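Every effect in this 2 × 2 within-subject design has one numerator degree of freedom, so each F(1, n − 1) equals the square of a one-sample t test on the corresponding per-participant contrast. The partial eta squared values reported in the analyses that follow can also be recovered from F and its degrees of freedom as ηp² = F·df_effect / (F·df_effect + df_error); for example, F(1, 15) = 19.72 gives ηp² ≈ 0.57. The sketch below illustrates both relationships; the cell means are made-up numbers, not data from this study, and this is an illustration rather than the authors' analysis software.

```python
import math
import statistics

def contrast_F(contrast_scores):
    """F(1, n - 1) for a within-subject contrast: the squared one-sample t against zero.

    Raises statistics.StatisticsError for fewer than two participants and
    ZeroDivisionError if every participant has the same contrast score.
    """
    n = len(contrast_scores)
    se = statistics.stdev(contrast_scores) / math.sqrt(n)
    t = statistics.mean(contrast_scores) / se
    return t * t, (1, n - 1)

def rm_anova_2x2(cell_means):
    """cell_means: one dict per participant mapping "U-U", "U-O", "O-U", "O-O" to a mean."""
    preview = [(d["O-U"] + d["O-O"]) - (d["U-U"] + d["U-O"]) for d in cell_means]
    display = [(d["U-O"] + d["O-O"]) - (d["U-U"] + d["O-U"]) for d in cell_means]
    interaction = [(d["U-O"] - d["U-U"]) - (d["O-O"] - d["O-U"]) for d in cell_means]
    return {"preview": contrast_F(preview),
            "display": contrast_F(display),
            "interaction": contrast_F(interaction)}

def partial_eta_squared(F, df_effect, df_error):
    """Effect size recovered from an F ratio and its degrees of freedom."""
    return F * df_effect / (F * df_effect + df_error)

# Hypothetical cell means for four participants (illustration only).
example = [
    {"U-U": 1, "U-O": 3, "O-U": 1, "O-O": 1},
    {"U-U": 1, "U-O": 4, "O-U": 1, "O-O": 1},
    {"U-U": 2, "U-O": 4, "O-U": 2, "O-O": 2},
    {"U-U": 1, "U-O": 4, "O-U": 2, "O-O": 2},
]
results = rm_anova_2x2(example)
print(results["interaction"])
print(round(partial_eta_squared(19.72, 1, 15), 2))
```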

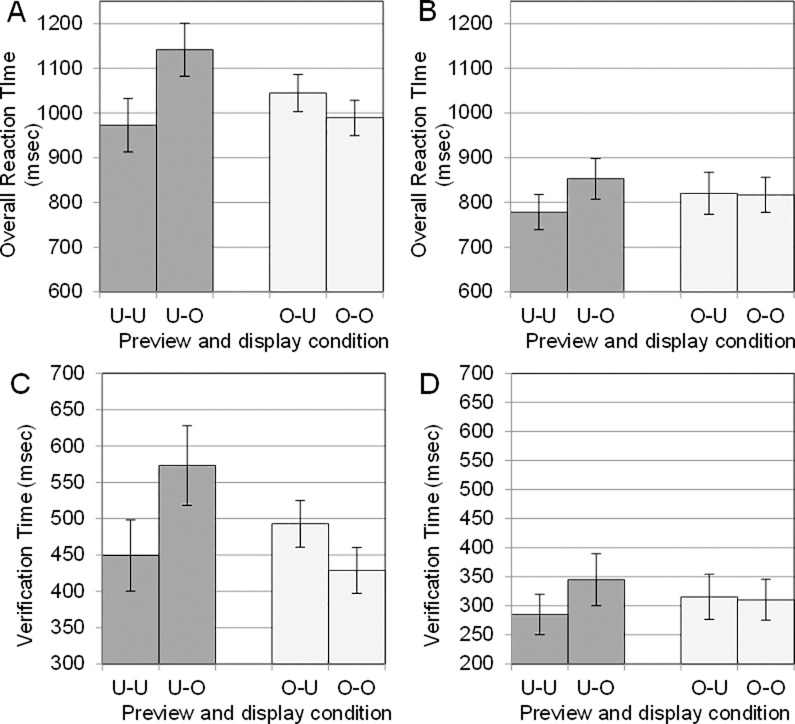

A 2 × 2 within-subject analysis of variance (preview condition × display condition) on reaction times (RTs) revealed a significant Preview condition × Display condition interaction, F(1, 15) = 19.72, p < 0.001, ηp² = 0.57. Post hoc paired-samples t tests indicated that the interaction was driven by the U-O condition: RTs in that condition were significantly longer than those in the U-U and O-O conditions, t(15) ≥ 3.5, p < 0.05, and marginally longer than those in O-U, t(15) = 2.0, p = 0.07 (Figure 3A). Underlying these differences in RT might be differences in search guidance, target verification, or both. To further specify the effects of occlusion in our task, we therefore decomposed overall manual RTs into the time taken by participants to first fixate the target (time to target) and the time to make their manual response after fixating the target (verification time).
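The decomposition just described splits each manual RT at the onset of the first fixation on the target. A minimal sketch, assuming fixation onsets are timed from search-display onset and each fixation has already been assigned to an object index (or to none); the `Fixation` record and `decompose_rt` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Fixation:
    onset_ms: float       # fixation onset, relative to search-display onset
    obj: Optional[int]    # index of the fixated object, or None for a blank area

def decompose_rt(fixations: List[Fixation], target: int,
                 rt_ms: float) -> Optional[Tuple[float, float]]:
    """Split a manual RT into (time to target, verification time).

    Time to target is the onset of the first fixation on the target; verification
    time is the remainder of the RT. Returns None if the target was never
    fixated before the response.
    """
    for fx in sorted(fixations, key=lambda f: f.onset_ms):
        if fx.obj == target and fx.onset_ms <= rt_ms:
            return fx.onset_ms, rt_ms - fx.onset_ms
    return None

trial = [Fixation(0, None), Fixation(230, 2), Fixation(510, 0)]
print(decompose_rt(trial, target=0, rt_ms=940))
```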

Figure 3.

Measures that include verification processes suggest that the target was not restored in the search display, as indicated by poor performance in U-O. Overall reaction times, from search display onset to the participant's response, were longest in the U-O condition in Experiment 1 (A) and longer in U-O than in U-U in Experiment 2 (B). Verification time, from the first fixation on the target until the participant's manual response, was similarly longest in U-O, both for Experiment 1 (C) and for Experiment 2 (D). Error bars indicate one standard error of the mean. See Figure 4A and 4B for the time-to-target component of reaction time.

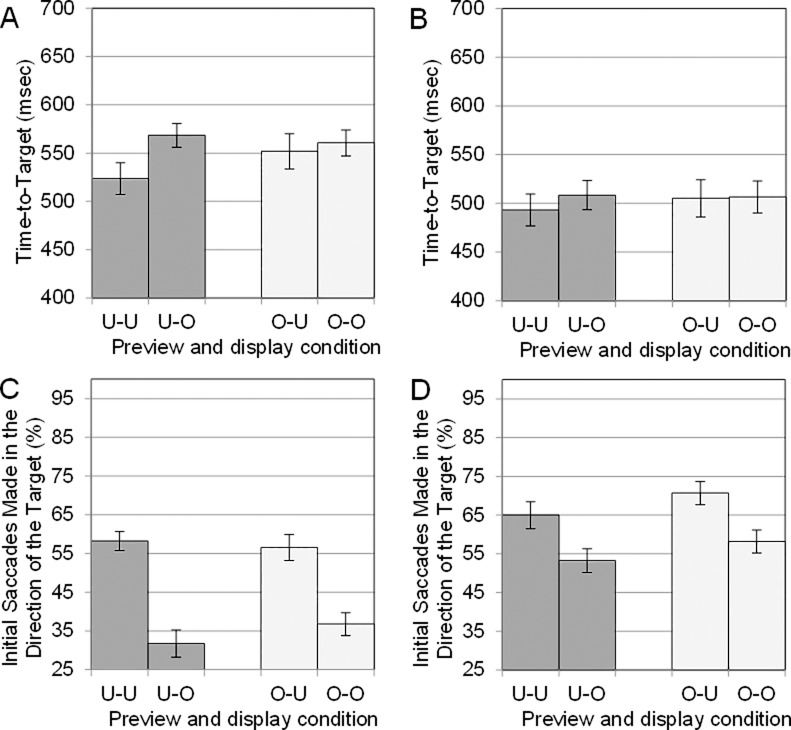

Turning first to guidance, we found that time to target was similar regardless of preview occlusion. This might suggest that participants restored the target at preview, but that restoration did not occur in the search display, at least prior to fixating the target in this task. Preview condition and display condition did not interact in time to target, F(1, 15) = 2.4, p = 0.15, ηp² = 0.14 (see Figure 4A). Time to target was marginally longer when targets were occluded in the search display, F(1, 15) = 4.1, p = 0.06, ηp² = 0.21, but not reliably affected by preview condition, F(1, 15) = 0.61, p = 0.45, ηp² = 0.04.

Figure 4.

Guidance was relatively poor when the target was occluded in the search display (U-O and O-O), suggesting that restoration is not preattentive. In Experiment 1, this was true both for time to target (A) and for initial saccade direction (C). In Experiment 2, no effect was found for time to target (B), but a generally similar pattern to Experiment 1 was found for initial saccade direction (D). Chance is 25% for saccade direction, and guidance was better than chance in all conditions. Error bars indicate one standard error of the mean.

While time-to-target differences are likely due to guidance effects, this measure can also be affected by the time needed to reject each fixated distractor. Initial saccade direction is therefore a more conservative and stringent measure of search guidance: Participants either looked initially toward the target (and thus were guided) or did not. We calculated initial saccade direction by dividing the display into four equal 90° slices, each centered on an object. If the endpoint of the first saccade landed in a slice, the saccade was counted as being directed toward that object. If saccades were directed at random, 25% would land in each slice; this chance rate provides the baseline against which guidance is assessed.
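The slice-based scoring can be sketched as follows. With four equally spaced objects, assigning a saccade endpoint to the object whose direction is nearest is equivalent to using 90° slices centered on each object. The object directions used here (0°, 90°, 180°, 270°) and the function names are hypothetical, since the exact angular positions of the display items are not given above.

```python
import math

def angular_distance(a_deg, b_deg):
    """Smallest absolute difference between two directions, in degrees (0 to 180)."""
    return abs((a_deg - b_deg + 180.0) % 360.0 - 180.0)

def first_saccade_object(endpoint, start, object_angles_deg):
    """Index of the object whose 90-degree slice contains the first saccade's endpoint.

    Endpoint, start, and object_angles_deg must share one coordinate frame
    (screen coordinates with y pointing down flip the sign of every angle).
    A zero-amplitude saccade (endpoint == start) has no direction and is not handled.
    """
    direction = math.degrees(math.atan2(endpoint[1] - start[1], endpoint[0] - start[0]))
    distances = [angular_distance(direction, a) for a in object_angles_deg]
    return distances.index(min(distances))

def proportion_to_target(endpoints, start, object_angles_deg, target_indices):
    """Proportion of trials whose first saccade was directed toward the target (chance = 0.25)."""
    hits = sum(first_saccade_object(e, start, object_angles_deg) == t
               for e, t in zip(endpoints, target_indices))
    return hits / len(endpoints)

objects = [0.0, 90.0, 180.0, 270.0]  # hypothetical object directions around fixation
print(first_saccade_object((5, 1), (0, 0), objects))
```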

The pattern of initial saccade directions was consistent with the time-to-target data and suggests that participants were restoring the occluded features of the target during preview and comparing these restored target templates to unrestored search displays. As in the other measures, no main effect of preview condition was found, F(1, 15) = 0.34, p = 0.57, ηp² < 0.03 (with a trend toward a Preview condition × Display condition interaction, p = 0.09, ηp² = 0.29), again suggesting that participants restored the target during preview. There was a main effect of display condition, F(1, 15) = 29.91, p < 0.001, ηp² = 0.67, with participants being over 20% less likely to direct initial saccades toward the target in U-O and O-O than in U-U and O-U (see Figure 4C).

Importantly, the weaker guidance in U-O and O-O is not due to a bias toward looking at unoccluded items: Through the course of the trial, distractors were fixated 36% more frequently when the target was occluded in the search display (suggesting stronger guidance on target-unoccluded trials), but occluded distractors were fixated just as frequently as unoccluded ones, ts(15) < 0.8, ps > 0.44.

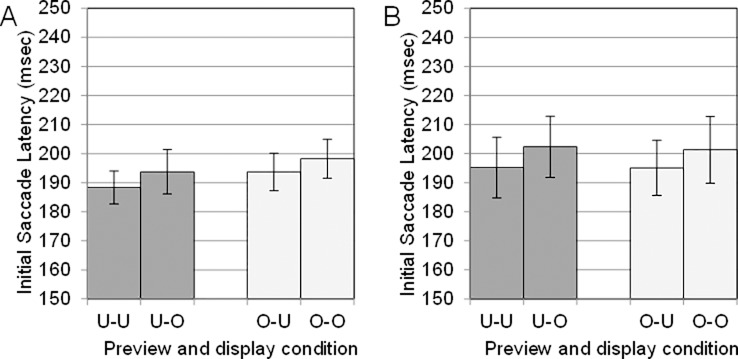

We confirmed that these guidance effects in the initial-saccade-direction measure were not due to a speed/accuracy trade-off by analyzing initial saccade latency, the time from search display onset until the first saccade (see Figure 5A). The only significant effect in initial saccade latency was a main effect of display condition (p < 0.05, ηp2 = 0.34): Latencies were shorter for unoccluded displays, in which guidance was strongest. All other comparisons were nonsignificant (ps > 0.34). A speed/accuracy trade-off would predict the opposite pattern, with longer latencies accompanying more accurate initial saccades, so these data are not consistent with such a trade-off.

Figure 5.

Patterns in initial saccade latencies were not consistent with a speed/accuracy trade-off. Unoccluded displays (where guidance was strongest—see Figure 4) had the shortest initial saccade latencies, in Experiment 1 (A) and Experiment 2 (B). Error bars indicate one standard error of the mean.

Our data suggest that when an occluded target is presented at preview, the template used to guide search includes at least some features restored from the occluded parts of the preview. They further suggest that, unlike amodal completion, restoration does not occur preattentively in the search display: Missing information in the search display is not restored before those objects are fixated, if it is restored at all. An alternative explanation is that no missing information was restored, but that half of an object provides enough features to form a target template as effective as one formed from the whole object. However, the poorer guidance in O-O relative to O-U (see Figure 4C), t(15) = 4.02, p < 0.01, demonstrates that this is not the case: A template formed from just half of an object, with no restoration of missing features, should match that half of the object perfectly whenever it is present in the search display, regardless of whether an occluder is also present.

Restoration also did not occur after gaze was directed to the target: The verification process used only the aspects of the object that were visible at preview.¹ Verification time showed a Preview condition × Display condition interaction, F(1, 15) = 25.69, p < 0.001, ηp2 = 0.63, with the longest verification times in U-O, ts(15) ≥ 1.95, all ps ≤ 0.07 (Figure 3C). This was not due to a speed/accuracy trade-off: Accuracy in U-O was numerically (though not significantly) lower than in the other conditions, so the slowest condition was not also the most accurate. Instead, this pattern is consistent with a verification process that checks for the target features that were visible during preview, with no restoration. Half of those features were then occluded in the search display on U-O trials, and the longer verification times suggest that the search display was also not restored for verification. The longer verification times in U-O relative to O-O are especially informative. One might expect the whole-object preview to prime restoration processes in the U-O search displays, resulting in faster, not slower, verification times. Instead, these data, which mirror the overall pattern of RTs, suggest that participants were not restoring the search display during verification. Importantly, the difference between the pattern of effects in verification time and that in the guidance measures also suggests that verification relies on a different representation than the target template.

In Experiment 1 participants were required to locate a specific target among other objects from the same category. Despite this being a common search scenario, it raises concerns that participants may have restored previews more than they otherwise would have because specific information about the target was necessary to distinguish it from the same-category distractors. In Experiment 2 we addressed this possibility by having participants search for targets among distractors from different object categories. If we find the same pattern of results as in Experiment 1, we can conclude that our findings did not depend on the high demands placed on the target representation formed at preview. Finding a different pattern of results would suggest that restoration at preview is not an automatic process with these stimuli but rather one that depends on the demands of the search task.

Experiment 1 also used salient red occluders. Although these were placed in a way that should limit amodal completion or known filling-in processes other than restoration, it is possible that amodal completion was still weakly engaged. Partial filling in from amodal completion could produce the data pattern we observed if, when only weakly engaged, amodal completion were both not preattentive and able to provide features to the target template. Because the current study focuses on restoration, we chose to rule out this explanation by creating displays in which amodal completion is even less likely to engage, rather than by exploring these possible attributes of amodal completion. Specifically, in Experiment 2 we removed the occluders completely, deleting half of the object instead of presenting an explicit occluder. In the absence of an occluder and depth information, amodal completion should not engage. If we find the same patterns in Experiment 2 despite this change, we can conclude that our data patterns are not driven by occluder type or even by the presence of occluders.

Experiment 2

In Experiment 1, we found that participants did not restore occluded stimuli in the search display but did restore their target templates. This suggests that, with realistic objects, there is a restoration process that requires attention. Because the standard conception of amodal completion holds that it is preattentive, this points to a qualitatively different filling-in process that follows different rules. Previous work has suggested the existence of such a process (Kellman, 2003). We show that this process does not merely affect subjective reports, as previously reported, but also has behavioral consequences for visual search performance and the direction of eye movements. There are, however, several alternative explanations that need to be addressed.

First, searching for a target among distractors from the target's basic-level category (as in Experiment 1) forced participants to rely on specific visual features that distinguish the target from the other category members. It is possible that people do not normally restore objects at preview and did so in Experiment 1 only for this reason. To test this, in Experiment 2 we attempted to replicate these results in a search task using distractors from random basic-level object categories. In this context, participants could potentially base their judgments on less precise visual features. Under these conditions, restoration at preview should be less necessary, because the features visible on the unoccluded half of the target would almost always be sufficient to distinguish it from other random objects. If participants did not restore the preview, the occluded preview would remain occluded in the target template and would then match the occluded search display in the O-O condition, whereas the unoccluded preview in the U-O condition would not match the occluded target; performance would then be poorer in U-O than in O-O. If participants do restore the preview, we should instead find equivalent performance in both conditions, because the same template would be compared against the occluded search display in both U-O and O-O.

Second, the evidence for restoration reported in Experiment 1 could potentially be due to weak engagement of amodal-completion processes. To remove engagement of amodal completion or other low-level filling in that might occur through the presence of occluders, we removed either the left or right half of objects in Experiment 2 rather than placing red occluders in front of them as in Experiment 1. With the elimination of the explicit occluders, the depth cues are also eliminated. For consistency with Experiment 1, we will continue to refer to these conditions as occluded and will keep the same O-O, U-U, U-O, and O-U condition labels.

With simple stimuli, completion during search does not occur without explicit occluders (Rensink & Enns, 1998). Similar differences between explicit occluders and deleted pixels have been found with realistic stimuli in recognition tasks (Johnson & Olshausen, 2005). If we observe filling in with occluders (Experiment 1) but not with deleted pixels (Experiment 2), this would suggest that amodal completion may have contributed to the filling in observed in Experiment 1. If instead we find comparable patterns of guidance in Experiments 1 and 2, this would show that restoration is robust to the absence of depth cues in a way that amodal completion is not. This would argue strongly against any possibility that our results are simply due to amodal completion and would further suggest the involvement of a qualitatively different process.

Eliminating explicit occluders addresses another question: Can the poorer guidance in U-O and O-O in Experiment 1 be attributed to the presence of occluders? When occluders are present, the search displays may be more crowded or cluttered, which may lead to poorer guidance (Neider & Zelinsky, 2008; Wolfe & Horowitz, 2017). In occluded displays, the shape being occluded might also be grouped with the occluding shape as a result of their sharing a boundary (Plomp et al., 2004; Wolfe et al., 2011), due to the inherent confound between occlusion and proximity with the occluder. This could lead to a disruption in detection or discrimination of the target part or less efficient search (but see Rensink & Enns, 1998). Our occluders were also salient—and potentially distracting (although saccades were not directed more often to occluded distractors). It might be the case that targets were preattentively restored but the preattentive guidance signals were swamped by the barrage of signals originating from the salient occluders. Replicating our evidence against preattentive filling in in the absence of salient occluders, and indeed in the absence of any visible occluders at all, would argue against these possibilities.

By again varying target occlusion both at preview and in the search display, we test whether restoration occurred by comparing performance between conditions; if restoration occurred at preview, guidance will be worse whenever the target is occluded in the search display (U-O and O-O) and we should find no effect of preview occlusion. Finding this pattern would demonstrate the existence of restoration with realistic objects in the absence of depth cues and occluders, arguing for the extraordinary robustness of this effect. Poorer performance in U-O than in O-O, however, would suggest that no form of completion or restoration is engaged, and that the restoration found in Experiment 1 occurs only with the presence of explicit occluders or the requirement that participants use exemplar-specific visual features to discriminate between targets and distractors.
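The competing predictions above can be made concrete with a deliberately simple toy model, offered only as an illustration and not as the authors' model. It assumes that a target's features split into a left half (L) and a right half (R); that the same half (here R) is hidden at preview and in the search display on occluded trials; that restoration, when it occurs, recovers the hidden half perfectly; and that guidance scales with the proportion of template features visible on the search-display target. All names are hypothetical.

```python
FULL = frozenset({"L", "R"})   # features of the left and right halves of the target
LEFT_ONLY = frozenset({"L"})   # what remains visible when the right half is hidden


def template_features(preview, restore):
    """Features in the target template built at preview ('U' or 'O')."""
    if preview == "U" or restore:
        return FULL  # whole object seen, or hidden half restored (idealized as perfect)
    return LEFT_ONLY


def display_features(display):
    """Target features visible in the search display; nothing is restored here."""
    return FULL if display == "U" else LEFT_ONLY


def match(preview, display, restore):
    """Proportion of template features that are visible on the target in the display."""
    template = template_features(preview, restore)
    return len(template & display_features(display)) / len(template)


def predictions(restore):
    """Predicted guidance for the four Preview-Display conditions."""
    return {f"{p}-{d}": match(p, d, restore) for p in "UO" for d in "UO"}


print("restoration:   ", predictions(restore=True))
print("no restoration:", predictions(restore=False))
```

With restoration, the model yields a pure display effect (U-O and O-O at 0.5, U-U and O-U at 1.0). Without restoration, only U-O drops to 0.5, which is the U-O deficit the text treats as evidence against any form of completion or restoration. Perfect restoration is an idealization of this sketch.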

Method

Sixteen students participated in exchange for course credit. All were Stony Brook University undergraduates (mean age of 19 years) and reported normal or corrected-to-normal vision. None of the participants from Experiment 1 participated in Experiment 2.

The design, equipment, and procedure were identical to Experiment 1 with the following exceptions. First, new stimuli were selected from the Hemera Photo-Objects collection. Rather than being restricted to the nine object categories used in Experiment 1, these objects were drawn from a wide assortment of categories, with the constraint that the target and distractor categories could not overlap on a given trial. For example, if the target on a given trial was a duck, no other duck appeared as a distractor in the search display. Second, the red occluders used in Experiment 1 were replaced with occluders that matched the color of the background, so that occluded objects appeared to be missing half of their pixels.
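The category constraint on stimulus selection can be sketched as below. The sketch assumes four objects per display (one target and three distractors, consistent with the 25% chance level), a hypothetical `stimuli` dictionary mapping category names to exemplar identifiers, and uniform random sampling. It enforces only the constraint stated in the text, so distractors may share a category with one another.

```python
import random


def build_trial(stimuli, n_distractors=3, rng=None):
    """Choose a target and distractors drawn only from other categories.

    `stimuli` maps category names to lists of exemplar identifiers.
    Returns (target_category, target, distractors).
    """
    if rng is None:
        rng = random.Random()
    target_category = rng.choice(sorted(stimuli))
    target = rng.choice(stimuli[target_category])
    # Every exemplar outside the target's category is eligible as a distractor.
    pool = [item
            for category, items in stimuli.items() if category != target_category
            for item in items]
    distractors = rng.sample(pool, n_distractors)  # sampled without replacement
    return target_category, target, distractors
```

Passing a seeded `random.Random` instance as `rng` makes a trial list reproducible, which is convenient when the same sequence must be regenerated for counterbalancing checks.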

Results and discussion

Accuracy and overall RT results were consistent with Experiment 1, although RTs in Experiment 2 were much faster overall because the lower target–distractor similarity made the search task easier (Alexander & Zelinsky, 2012). The false-alarm rate was very low, less than 4% in all conditions. Error trials were excluded from further analyses. Overall RT was significantly affected by display condition, F(1, 15) = 5.83, p < 0.05, ηp2 = 0.28, but not by preview condition, F(1, 15) = 0.01, p = 0.92, ηp2 = 0.001. There was a Preview condition × Display condition interaction, F(1, 15) = 9.20, p < 0.01, ηp2 = 0.38 (Figure 3B). The following analyses again decompose these overall RTs into separate time-to-target and target-verification epochs.
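The decomposition of RT into epochs can be sketched as follows. The sketch assumes the definitions commonly used in eye-movement search studies rather than ones stated in this section: Time to target runs from search-display onset to the start of the first fixation on the target, and verification time runs from that moment to the button press. The `Fixation` record and the function name are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Fixation:
    onset_ms: float   # fixation start, relative to search-display onset
    on_target: bool   # True if this fixation landed on the target object


def decompose_rt(fixations: List[Fixation], rt_ms: float) -> Optional[Tuple[float, float]]:
    """Split a correct trial's RT into (time_to_target, verification_time).

    Returns None when the target was never fixated on that trial.
    """
    for fixation in fixations:  # fixations in temporal order
        if fixation.on_target:
            time_to_target = fixation.onset_ms
            return time_to_target, rt_ms - time_to_target
    return None
```

By construction the two epochs sum to the overall RT, so an RT effect can be attributed to guidance, to verification, or to both.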

The time-to-target data from Experiment 2 did not yield differences between conditions. There was no main effect of either preview condition, F(1, 15) = 0.37, p = 0.55, ηp2 = 0.02, or display condition, F(1, 15) = 1.15, p = 0.30, ηp2 = 0.07, nor a Preview condition × Display condition interaction, F(1, 15) = 0.89, p = 0.36, ηp2 = 0.06 (Figure 4B). The lack of an effect of preview occlusion suggests that targets were restored during preview, as in Experiment 1. However, without the corresponding drop in performance in occluded search displays (U-O and O-O), this is a null effect that must be interpreted with caution. We also cannot conclude from this measure that restoration was not also happening in the search display. However, because time to target is a relatively coarse measure of guidance, affected also by verification processes, and because so little detailed information about the specific target was needed to perform this task, it may be that we were simply not able to observe effects in the time-to-target measure. We therefore also analyzed the percentage of initial saccades directed to the target.

Consistent with Experiment 1, the initial-saccade-direction results indicate that the target was restored at preview but not restored preattentively in the search display (see Figure 4D). Initial saccade direction was strongly affected by display condition, F(1, 15) = 28.59, p < 0.001, ηp2 = 0.66, and there was no Preview condition × Display condition interaction, F(1, 15) = 0.04, p = 0.85, ηp2 = 0.00. Unlike in Experiment 1, however, initial saccade direction was also significantly affected by preview condition, F(1, 15) = 6.74, p < 0.05, ηp2 = 0.31, and in an unexpected direction: Participants were slightly better at guiding their initial eye movement to the target when only half of the target was shown at preview. This small benefit for half objects may simply reflect greater scrutiny of the occluded preview in order to recognize the object. Regardless, the absence of poorer guidance with occluded previews demonstrates restoration during the preview and argues against the possibility that restoration in this task required the presence of an explicit occluder, at least during preview.

We again ruled out potential speed/accuracy trade-offs that could cause differences in initial saccade direction by analyzing initial saccade latencies. No reliable effects were found, ps > 0.11, ηp2 < 0.1. These patterns of initial saccade direction cannot be explained in terms of a speed/accuracy trade-off.

The pattern of verification times in Experiment 2 was generally consistent with Experiment 1, again suggesting that participants were not using restored features during verification (see Figure 3D). As before, there was a Preview condition × Display condition interaction, F(1, 15) = 10.36, p < 0.01, ηp2 = 0.41, accompanied by a main effect of display condition, F(1, 15) = 6.03, p < 0.05, ηp2 = 0.29. There was no reliable effect of preview condition, F(1, 15) = 0.01, p = 0.93, ηp2 = 0.00. Mean verification time was numerically longest in the U-O condition, and this was significantly longer than in the U-U condition, t(15) = 3.56, p < 0.01. However, it did not reliably differ between U-O and either O-O, t(15) = 1.26, p = 0.23, or O-U, t(15) = 0.99, p = 0.34. These results suggest that verification judgments were again based only on visible information—without any restoration—during the preview and in the search display.

In summary, Experiment 2 provides further evidence both against the preattentive restoration of targets in the search display and for the restoration of occluded previews. Participants appear to have restored the preview both in Experiment 1 and, at least as expressed in initial saccade direction, in Experiment 2. That this is true in both experiments demonstrates that these effects are not sensitive to the types of occlusion used in this study and suggests that restoration is robust in contexts where information is missing but depth cues do not provide a means for interpolating contours and other low-level information. The similar occlusion effects in the search display across Experiments 1 and 2 suggest that the lack of preattentive restoration observed in Experiment 1 was not due to some peculiarity intrinsic to large, red occluders. The only plausible explanation for the poorer guidance toward occluded targets is that these targets were not preattentively restored. Moreover, guidance and verification data from a control experiment using the same-category distractors from Experiment 1 but the missing-half occluders from Experiment 2 produced the same data patterns as those reported in Experiment 1,² further suggesting that our results were not due to an artifact introduced by the occluders themselves. In no case did we find that objects were restored preattentively in the search display.

General discussion

People often subjectively report that objects continue behind occluders. Here we show that this information can also be used to guide our eye movements during visual search. We introduced a new oculomotor-based paradigm for investigating filling in during search, one that allows the dissociation not only of filling-in effects at preview from those occurring in the search display but also of effects of filling in on search guidance from those on target verification. We used this paradigm to explore how the visual system handles occluded or otherwise missing information in the context of realistic stimuli, which little previous work has explored (but see Johnson & Olshausen, 2005; Vrins, de Wit, & van Lier, 2009). Our findings extend existing work on this topic in several respects, demonstrating that—with realistic objects—a qualitatively different kind of filling in, which we call restoration, provides inferred features that are included in the target template used to guide visual search.

We demonstrated restoration in two experiments where amodal completion would not have been possible. Our findings suggest that different mechanisms can be recruited for complex stimuli, which we believe to involve the use of category identity as an additional source of information for filling in. For example, when half of a car is visible at preview, participants may recognize it as a car and use their learned category knowledge to represent it in their visual working memory as a whole car. Relatedly, previous work has shown that amodal completion is affected by the semantic properties of stimuli (Vrins et al., 2009) and stimulus familiarity (Plomp et al., 2004). These previous studies have interpreted these effects as higher level influences affecting amodal completion. The present study, however, demonstrates restoration in cases where low-level amodal completion is expected to fail (Rensink & Enns, 1998), and the results suggest that restoration and amodal completion are quite different: Although restoration may interact with and facilitate amodal completion (Plomp et al., 2004; Vrins et al., 2009), it may also be independently recruited to fill in objects that otherwise cannot be filled in.

Restoration is similar to amodal completion in that both result in an ability to function as though hidden features can be seen, without an actual corresponding visual percept. Data from Experiment 1 suggest that when participants were presented with occluded previews of real-world targets, the target representations they formed and used to guide their search included information from the occluded halves of the objects. This was demonstrated by the stronger guidance found in O-U and U-U relative to U-O and O-O. This pattern also suggests that restoration, like amodal completion, is obligatory: When the preview was occluded, participants used a restored target template even though the target would then appear occluded in the search display on 50% of trials. Restoration is unlikely to be a perfect process, and although the missing information might be helpful on some trials in which the search display is unoccluded (as evidenced by a slight improvement in U-U relative to O-U), this small improvement is a poor trade-off for the large drop in performance with occluded search displays.

There are several striking differences between restoration and amodal completion. First, restoration engages even in situations where completion does not. Completion typically does not occur when occluders are placed at an end of an object rather than covering a middle segment of it (Rensink & Enns, 1998; for a discussion of other stimulus situations where cues might lead to completion despite occluders at the end of an object, see Tse, 1999b). Restoration, however, occurred despite this kind of occluder placement (Experiment 1) and in the complete absence of the depth cues that normally benefit completion (Experiment 2). These arrangements should not engage contour-based completion mechanisms; a different mechanism is therefore needed to explain the demonstrated effects.

We discovered a second striking difference between restoration and amodal completion: the speed of these processes. If restoration is fast and preattentive, then occluded search displays should be restored prior to fixation on any objects, making these restored features available to guide search. Our data failed to support this prediction of a fast and preattentive restoration process: Search was guided less strongly to targets that were occluded in the search display. This suggests either that objects must be serially attended to be restored, or that restoration might have too long a time course to influence initial eye movements. Although the speed of amodal completion varies with the task and stimuli used (Plomp et al., 2004; Shore & Enns, 1997), completion effects have been found as early as 15 ms with simple stimuli (Murray et al., 2004) and at least as early as 120–180 ms with realistic images (Johnson & Olshausen, 2005). If restoration is substantially slower than that, it would not be expected to affect guidance in this task (or, more generally, the early eye movements in other search tasks): Mean initial saccade latencies were ∼190 ms in all conditions of the current study (see Figure 5). This slower time course of restoration in our data is consistent with findings that event-related potentials elicited by occluded shapes have a slower time course for interpretations in which higher level knowledge might play a role than for interpretations involving only structural completions (Hazenberg & van Lier, 2016). These timing differences between amodal completion and restoration are precisely what one would expect if restoration relies on higher level categorical knowledge. One presumably cannot use categorical knowledge of the target category without first identifying the target and knowing what category it belongs to! Therefore, one would expect completion to be faster than restoration.

We believe that the restored target template uses category information from long-term memory. There are two different ways that we envision this happening. First, it may be that specific visual features that were visible from unoccluded parts of the target were elaborated with categorical features to produce a hybrid category-specific guiding template. This proposed hybrid target template might explain the relatively poor performance we observed in the Experiment 1 O-O condition (relative to U-U and O-U; see Figure 4C). Participants would search not only for the visible half of the target but also for the categorical features that would likely be found in the target's occluded half. As an example of our proposal, when previewed with the front half of a car, categorical knowledge might also make the rear wheel, the trunk, and so on, available to guide search. This kind of hybrid template is consistent with work on categorical search, which shows that search guidance improves with the amount of information included in a categorical target label (Schmidt & Zelinsky, 2009). What is new about the current suggestion is the idea that guidance may integrate categorical target information with information about the specific features of a given target, making this suggestion similar in principle to proposals that top-down and bottom-up information is integrated for the purpose of guiding search (Adeli, Vitu, & Zelinsky, 2017; Peters & Itti, 2007; Torralba, Oliva, Castelhano, & Henderson, 2006; Wolfe, 1994).

Second, the information may be purely categorical: The missing information may cause participants to discard the visual target template and instead activate a subordinate categorical template (e.g., “a trout”) or a basic-level categorical template (“fish,” which would be effective in Experiment 2). That categorical representation of the target might then bias target features in the visual input to guide attention. In this case, the target would not technically be restored or filled in; instead, a different, categorical representation would be used in place of the previewed pixels. If this latter possibility is correct, the small guidance decreases in O-U relative to U-U would be due to the relative imprecision of the categorical template formed in the O-U condition, which would not perfectly match any individual exemplar target. However, the better guidance we found with occluded previews in Experiment 2 (Figure 4D) is not expected with purely categorical templates, unless the categorical templates were better primed by occluded previews. This better priming might have happened if participants scrutinized the visible halves of occluded previews in anticipation of a more difficult target-recognition decision. We therefore cannot determine conclusively from the present data whether participants were using purely categorical templates. Note that the current data are also consistent with a third interpretation, based on predictive coding (T. S. Lee & Mumford, 2003; Rao & Ballard, 1999). To the extent that a categorical representation of a target can be considered a prediction, the missing information due to object occlusion at preview might generate a prediction error, one that is minimized by completing the target (Rao & Ballard, 1999). Distinguishing between these processes will be an important direction for future work.

Finally, our data demonstrated a dissociation between the information used for target guidance and the information used for target recognition: A restored representation (or a categorical representation) was used to guide eye movements during search but not to recognize the target once it was fixated. This distinction between representations used for guidance and those used for recognition makes sense in the real world. Because one never knows which regions of a target will be occluded, it makes sense to search using as complete a representation of the target as possible. Searching for only the left half of your car when only its right half is in view might make you late for work. However, if your task were to decide whether a specific car was your friend's based on a photograph of only that car's left side, then even if you had a reasonable idea of what the missing half might look like, the best strategy to ensure success would be to match the left side of each car that you find to the photo. Information that was visible during target encoding is simply more reliable than information that was not, because restoration can introduce reconstruction errors.

Our data suggest that both of these representations are used, but at different phases of the search task: Guidance uses a restored representation, whereas verification relies only on the visible regions of the target that are unoccluded at preview and search. This reliance by verification on visually previewed information explains the comparatively poor performance found in the U-O condition; verification suffers only when target information that was visible at preview is not visible in the search display. Such a distinction between guidance and verification is also consistent with the modal model conception of visual search, one that assumes that guidance and recognition are separate processes that simply interact as part of a repeating guidance–recognition cycle (Wolfe, 1994). According to this view, simple visual features are used to guide search to a likely target candidate, with this guidance operation then followed by the use of potentially different, and presumably more powerful, features to decide whether the attended or fixated object is a target or a distractor. This historical conception of search has been challenged by modeling approaches that use essentially the same preattentively available features to perform both search subtasks (Elazary & Itti, 2010; Navalpakkam & Itti, 2005; Zelinsky, 2008), leading to claims in the literature that search guidance and target recognition may be one and the same process (Maxfield & Zelinsky, 2012; Nakayama & Martini, 2011; Zelinsky, Peng, Berg, & Samaras, 2013). An additional important direction for future work will be to determine whether the information used for target guidance and verification is qualitatively different, or whether it is fundamentally the same but subject to different decision criteria: It may be that the occasional guidance error resulting from a poorly completed target preview carries little cost and can be tolerated, whereas potential recognition errors are costlier and cannot. 
Guidance may use a relatively broad set of features, but recognition may use more conservative decision criteria and a narrower set of features, relying only on those that were extracted directly from the previewed regions of the target and producing the pattern of verification data observed in the present study.

Supplementary Material

Acknowledgments

We thank Peter Kwon, Shiv Munaswar, Sarah Osborn, and Thitapa Shinaprayoon for their help with data collection. This work was supported by NIH Grant R01-MH063748 to GJZ.

Commercial relationships: none.

Corresponding author: Robert G. Alexander.

Email: robert.alexander@downstate.edu.

Address: Department of Psychology, Stony Brook University, Stony Brook, NY, USA.

Footnotes

¹ Given that this is a negative result, we cannot rule out the possibility that some information was extrapolated for verification purposes but was not sufficient to create differences in verification time. Nevertheless, this would still mean that completion is less important for verification than it is for search guidance.

² To confirm that there are no differences that are simply masked by manipulating two aspects of the task in the same experiment, we also ran a control version where we removed half of the object (as in Experiment 2) but used the stimuli from Experiment 1. The results of the control experiment confirmed that participants restored missing information despite the absence of explicit occluders. Specifically, main effects of display condition were again found for both time to target, F(1, 15) = 14.29, p < 0.05, ηp2 = 0.49, and initial saccade direction, F(1, 15) = 18.51, p = 0.001, ηp2 = 0.55; and preview condition again had no effect on either time to target, F(1, 15) = 1.09, p = 0.31, ηp2 = 0.07, or initial saccade direction, F(1, 15) < 0.01, p = 0.94, ηp2 = 0.000.

Contributor Information

Robert G. Alexander, Email: robert.alexander@downstate.edu.

Gregory J. Zelinsky, Email: gregory.zelinsky@stonybrook.edu.

References

- Adeli H, Vitu F, Zelinsky G. J. A model of the superior colliculus predicts fixation locations during scene viewing and visual search. The Journal of Neuroscience. (2017);37(6):1453–1467. doi: 10.1523/JNEUROSCI.0825-16.2016.

- Alexander R. G, Schmidt J, Zelinsky G. J. Are summary statistics enough? Evidence for the importance of shape in guiding visual search. Visual Cognition. (2014);22(3–4):595–609. doi: 10.1080/13506285.2014.890989.

- Alexander R. G, Zelinsky G. J. Visual similarity effects in categorical search. Journal of Vision. (2011);11(8):9, 1–15. doi: 10.1167/11.8.9.

- Alexander R. G, Zelinsky G. J. Effects of part-based similarity on visual search: The Frankenbear experiment. Vision Research. (2012);54:20–30. doi: 10.1016/j.visres.2011.12.004.

- Castelhano M. S, Pollatsek A, Cave K. R. Typicality aids search for an unspecified target, but only in identification and not in attentional guidance. Psychonomic Bulletin & Review. (2008);15(4):795–801. doi: 10.3758/pbr.15.4.795.

- Chen X, Zelinsky G. J. Real-world visual search is dominated by top-down guidance. Vision Research. (2006);46(24):4118–4133. doi: 10.1016/j.visres.2006.08.008.

- Davis G, Driver J. Kanizsa subjective figures can act as occluding surfaces at parallel stages of visual search. Journal of Experimental Psychology: Human Perception and Performance. (1998);24(1):169–184. doi: 10.1037/0096-1523.24.1.169.

- Duncan J, Humphreys G. W. Visual search and stimulus similarity. Psychological Review. (1989);96(3):433–458. doi: 10.1037/0033-295x.96.3.433.

- Elazary L, Itti L. A Bayesian model for efficient visual search and recognition. Vision Research. (2010);50(14):1338–1352. doi: 10.1016/j.visres.2010.01.002.

- Guttman S. E, Kellman P. J. Contour interpolation revealed by a dot localization paradigm. Vision Research. (2004);44(15):1799–1815. doi: 10.1016/j.visres.2004.02.008.

- Hazenberg S. J, van Lier R. Disentangling effects of structure and knowledge in perceiving partly occluded shapes: An ERP study. Vision Research. (2016);126:109–119. doi: 10.1016/j.visres.2015.10.004.

- He Z. J, Nakayama K. Surfaces versus features in visual search. Nature. (1992);359:231–233. doi: 10.1038/359231a0.

- Johnson J. S, Olshausen B. A. The recognition of partially visible natural objects in the presence and absence of their occluders. Vision Research. (2005);45(25):3262–3276. doi: 10.1016/j.visres.2005.06.007.

- Kellman P. J. Interpolation processes in the visual perception of objects. Neural Networks. (2003);16(5):915–923. doi: 10.1016/S0893-6080(03)00101-1.

- Lee H, Vecera S. P. Visual cognition influences early vision: The role of visual short-term memory in amodal completion. Psychological Science. (2005);16(10):763–768. doi: 10.1111/j.1467-9280.2005.01611.x.

- Lee T. S, Mumford D. Hierarchical Bayesian inference in the visual cortex. Journal of the Optical Society of America A. (2003);20(7):1434–1448. doi: 10.1364/josaa.20.001434.

- Maxfield J. T, Zelinsky G. J. Searching through the hierarchy: How level of target categorization affects visual search. Visual Cognition. (2012);20(10):1153–1163. doi: 10.1080/13506285.2012.735718.

- Murray M. M, Foxe D. M, Javitt D. C, Foxe J. J. Setting boundaries: Brain dynamics of modal and amodal illusory shape completion in humans. The Journal of Neuroscience. (2004);24(31):6898–6903. doi: 10.1523/JNEUROSCI.1996-04.2004.

- Nakayama K, Martini P. Situating visual search. Vision Research. (2011);51(13):1526–1537. doi: 10.1016/j.visres.2010.09.003.

- Neider M. B, Zelinsky G. J. Exploring set size effects in scenes: Identifying the objects of search. Visual Cognition. (2008);16(1):1–10.

- Peters R. J, Itti L. Beyond bottom-up: Incorporating task-dependent influences into a computational model of spatial attention. (2007, June). Paper presented at the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN.

- Plomp G, Nakatani C, Bonnardel V, van Leeuwen C. Amodal completion as reflected by gaze durations. Perception. (2004);33(10):1185–1200. doi: 10.1068/p5342x.

- Rao R. P, Ballard D. H. Predictive coding in the visual cortex: A functional interpretation of some extra-classical receptive-field effects. Nature Neuroscience. (1999);2(1):79–87. doi: 10.1038/4580.

- Rauschenberger R, Liu T, Slotnick S. D, Yantis S. Temporally unfolding neural representation of pictorial occlusion. Psychological Science. (2006);17(4):358–364. doi: 10.1111/j.1467-9280.2006.01711.x.

- Rauschenberger R, Yantis S. Masking unveils pre-amodal completion representation in visual search. Nature. (2001);410(6826):369–372. doi: 10.1038/35066577.

- Rensink R. A, Enns J. T. Early completion of occluded objects. Vision Research. (1998);38(15–16):2489–2505. doi: 10.1016/s0042-6989(98)00051-0.

- Schmidt J, Zelinsky G. J. Search guidance is proportional to the categorical specificity of a target cue. The Quarterly Journal of Experimental Psychology. (2009);62(10):1904–1914. doi: 10.1080/17470210902853530.

- Shore D. I, Enns J. T. Shape completion time depends on the size of the occluded region. Journal of Experimental Psychology: Human Perception and Performance. (1997);23(4):980–998. doi: 10.1037//0096-1523.23.4.980.

- Singh M. Modal and amodal completion generate different shapes. Psychological Science. (2004);15(7):454–459. doi: 10.1111/j.0956-7976.2004.00701.x.

- Torralba A, Oliva A, Castelhano M. S, Henderson J. M. Contextual guidance of eye movements and attention in real-world scenes: The role of global features in object search. Psychological Review. (2006);113(4):766–786. doi: 10.1037/0033-295X.113.4.766.

- Treisman A. Search, similarity, and integration of features between and within dimensions. Journal of Experimental Psychology: Human Perception and Performance. (1991);17(3):652–676. doi: 10.1037//0096-1523.17.3.652.

- Tse P. U. Complete mergeability and amodal completion. Acta Psychologica. (1999a);102(2–3):165–201. doi: 10.1016/s0001-6918(99)00027-x.

- Tse P. U. Volume completion. Cognitive Psychology. (1999b);39(1):37–68. doi: 10.1006/cogp.1999.0715.

- Van Lier R, Van der Helm P, Leeuwenberg E. Competing global and local completions in visual occlusion. Journal of Experimental Psychology: Human Perception and Performance. (1995);21(3):571–583. doi: 10.1037//0096-1523.21.3.571.

- Vickery T. J, King L.-W, Jiang Y. Setting up the target template in visual search. Journal of Vision. (2005);5(1):8, 81–92. doi: 10.1167/5.1.8.

- Vrins S, de Wit T. C, van Lier R. Bricks, butter, and slices of cucumber: Investigating semantic influences in amodal completion. Perception. (2009);38(1):17–29. doi: 10.1068/p6018.

- Wolfe J. M. Guided search 2.0: A revised model of visual search. Psychonomic Bulletin & Review. (1994);1(2):202–238. doi: 10.3758/BF03200774.

- Wolfe J. M, Cave K. R, Franzel S. L. Guided search: An alternative to the feature integration model for visual search. Journal of Experimental Psychology: Human Perception and Performance. (1989);15(3):419–433. doi: 10.1037//0096-1523.15.3.419.

- Wolfe J. M, Horowitz T. S. What attributes guide the deployment of visual attention and how do they do it? Nature Reviews Neuroscience. (2004);5(6):495–501. doi: 10.1038/nrn1411.

- Wolfe J. M, Horowitz T. S. Five factors that guide attention in visual search. Nature Human Behaviour. (2017);1:0058. doi: 10.1038/s41562-017-0058.

- Wolfe J. M, Reijnen E, Horowitz T. S, Pedersini R, Pinto Y, Hulleman J. How does our search engine “see” the world? The case of amodal completion. Attention, Perception, & Psychophysics. (2011);73(4):1054–1064. doi: 10.3758/s13414-011-0103-0.

- Woodman G. F, Luck S. J, Schall J. D. The role of working memory representations in the control of attention. Cerebral Cortex. (2007);17(Suppl. 1):i118–i124. doi: 10.1093/cercor/bhm065.

- Zelinsky G. J. A theory of eye movements during target acquisition. Psychological Review. (2008);115(4):787–835. doi: 10.1037/a0013118.

- Zelinsky G. J, Peng Y, Berg A. C, Samaras D. Modeling guidance and recognition in categorical search: Bridging human and computer object detection. Journal of Vision. (2013);13(3):30, 1–20. doi: 10.1167/13.3.30.
