Abstract

Simulation-based learning is an effective technique for teaching nursing students skills and knowledge related to patient deterioration. This study examined students' acquisition of theoretical knowledge about symptoms, pathophysiology, and nursing actions after implementing an educational intervention during simulation-based learning. A quasi-experimental study compared theoretical knowledge between two groups of students before and after implementation of the intervention. The intervention introduced the following new components to the existing technique: a knowledge test prior to the simulation, video-recording of the performance, and a structured observation form used by students and facilitator during observation and debriefing. The intervention group had significantly higher scores than the control group on a knowledge test conducted after the simulations. In both groups, scores were highest on knowledge of symptoms and lowest on knowledge of pathophysiology; the intervention group had significantly higher scores than the control group on both topics. Students' theoretical knowledge of patient deterioration may be enhanced by improving the students' prerequisites for learning and by strengthening debriefing after simulation.

1. Introduction

Simulation-based learning (SBL) is a technique [1] widely used in nursing education, and a multitude of studies support its use as an educational tool for achieving a wide range of learning outcomes [2]. One important outcome of nursing education is improved recognition and management of patient deterioration; these are essential nursing skills that students should begin to develop while in school [3], and students need a wide range of knowledge to recognize and act upon the signs of deterioration.

The relationship between theory and practice is a complex challenge in professional education. This is widely documented and commonly termed a “gap” [4]. To reduce this gap, theoretical knowledge and practical experience must be integrated. SBL is a pedagogical approach that can be considered a “third learning space” between coursework and practicums; this approach may bring the content and process of theoretical work and practical training closer to each other [5].

SBL has been reported to be a more effective teaching strategy than classroom teaching for the development of assessment skills for the care of patients with deteriorating conditions [6]. Simulation provides an opportunity to be exposed to critical scenarios and can highlight the clinical signs and symptoms the students will have to deal with in these situations [7]. It gives inexperienced students the opportunity to use their knowledge in a simulated situation that mirrors the clinical context without the risk of harming actual patients [8]. Situated learning theory claims that learning is influenced by the context in which it occurs [9]. Tun, Alinier, Tang, and Kneebone [10] argue that the aspect of fidelity may hinge on the learners' perceived realism of the context and that a simulation may seem realistic to students who lack experience. Lavoie, Pepin, and Cossette [11] call for educational interventions that can enhance nursing students' ability to recognize signs and symptoms in patient deterioration situations.

Many studies claim that SBL may improve theoretical knowledge acquisition [12–16]. A review by Foronda, Liu, and Bauman [17] suggested that simulation was an effective andragogical method for teaching skills and knowledge and called for more research to strengthen the evidence related to what types of nursing knowledge and nursing content could be effectively developed through SBL.

A review of empirical studies of educational interventions related to deteriorating patient situations showed that few used objective assessment as an outcome measure: only just over one-third measured improvement in knowledge, skills, and/or technical performance [18]. On the other hand, a plethora of studies are concerned with the students' experiences during SBL; these studies have found that students generally show a high degree of satisfaction with SBL as an educational technique because they often experience increased knowledge and confidence [3, 19, 20]. At the same time, however, there is a broad tendency for nursing students to overestimate their skills and knowledge in self-reports [21]. An essential component of quality assurance in nursing education thus remains to evaluate students' knowledge acquisition [22].

The present study compared acquisition of theoretical knowledge by two cohorts of nursing students in the course of six simulated scenarios on patient deterioration before and after the implementation of an educational intervention during simulation-based learning. The intervention aimed to improve students' learning prerequisites and strengthen debriefing in simulation. The aim of the study was to explore whether there was a difference in students' knowledge level before and after the educational intervention. The following research questions were developed:

What are the differences in posttest scores on the knowledge test between the control group and the intervention group?

What are the differences between stimulus (pre-) and posttest scores in the intervention group?

2. Methods

2.1. Intervention

In this study, we present an intervention to enhance students' theoretical knowledge via simulation-based learning and measure this development using an objective assessment. The intervention was inspired by the First2Act model, as described by Buykx and colleagues [3, 20]. First2Act was developed to improve nurses' emergency management skills [20], and it comprises five components: developing core knowledge, assessment, simulation, reflective review, and performance feedback. These components were set on the basis of experiential learning theory and empirical pedagogical literature (e.g., [23, 24]). A lack of theory-based research in simulation hampers the development of coherence and external validity in this field of research [25]. The present study uses First2Act as an explicit theoretical framework. Its distinct components are pedagogically founded and are hypothesized to enhance learning in advanced simulation [20]. Due to the importance of feedback in simulation-based learning [26], the feedback processes were enhanced beyond those introduced by First2Act [20].

The simulations were conducted before the students' first clinical practicum in hospital medical or surgical units. Simulation training before a practicum can, if the experiences reflect the way knowledge and actions will be used in actual practice, provide the students with authentic activities that mirror the forthcoming experiences in the real world of nursing [9]. In both cohorts, the students participated in a total of six scenarios in which the patient developed a deteriorating condition, namely angina pectoris, cardiac arrest, hypoglycemia, postoperative bleeding, worsening of obstructive lung disease, and ileus. The scenarios were inspired by scenarios already created by the National League for Nursing and Laerdal, a medical company (Laerdal Medical Corporation 2008), and refined in collaboration with practicing nurses to suit a Norwegian context. The scenarios were carried out over two days, meaning that the students were given repeated opportunities to collaborate on the assessment and treatment of deteriorating patients and to go through the cycles of reflection before, in, and on action [27]. The students were organized into previously established learning groups, each consisting of 5–9 students. Students in both cohorts had completed theoretical education on pathology, nursing subjects, and basic life support and had learned a variety of practical nursing skills in the simulation center.

Two students acted as nurses in each scenario, with one as the leading nurse; the remaining students were observers or next-of-kin. All students played the role of leading nurse at least once during the simulations, and most did so twice. The students received a short synopsis of the six scenarios one week before the simulation to give them the opportunity to prepare. During simulation, one of the faculty members had the role of facilitator, while another operated the manikin VitalSim (Laerdal Medical, Norway). Table 1 details the structure of the scenario simulation in both cohorts participating in the study.

Table 1.

Structure of the scenario simulation for both cohorts.

| | 2013 cohort: original scenario simulation | 2014 cohort: intervention |
|---|---|---|
| Briefing | 15 minutes | 15 minutes |
| Simulation | 10 minutes. Students observed in accordance with overall learning outcomes | 10 minutes. Video-recording of nurse actors. Observation according to a structured observation form |
| Debriefing | 20 minutes. Facilitator-led debriefing. Learning outcomes provided the basis for reflection | Session 1 (15 minutes): viewing of video-recording by nurse actors; observers and facilitator/operator discussed observation and planned their feedback. Session 2 (20 minutes): facilitator-led feedback according to the checklist |

Our intervention introduced the following new components to the existing procedure.

(1) A knowledge test with multiple-choice questions (MCQs) was conducted one week before simulation as a stimulus for learning, to give the students the opportunity to prepare in advance. The questions covered core knowledge associated with each of the scenarios; students received individual electronic feedback giving the correct answers.

(2) The simulation performance was video-recorded on an iPad (Table 1).

(3) While observers and facilitators in the 2013 cohort gave feedback in relation to general learning outcomes, a structured observation form was developed for the 2014 cohort that covered scenario-specific observations and actions (Table 1), for example, measuring blood pressure, correct medication administration, when to call for help, and priority of actions.

(4) The debriefing was divided into two sessions: First, the students who had performed the simulation watched the video-recording, allowing for an assessment of their own performance (Table 1). Meanwhile, the observers planned and discussed the feedback they would provide the performing students, with reference to the structured observation form, and the facilitator and operator did likewise. The observation form described correct nursing actions and observations related to scenario-specific learning outcomes. Second, a facilitator-led debriefing was conducted following the framework described in Debriefing Assessment for Simulation in healthcare (DASH)® [28]. The new observation form also guided the facilitators during debriefing.

2.2. Study Design

This study used a two-group quasi-experimental design [29] that compared students' knowledge acquisition between a control and an intervention group. The control group experienced simulated scenarios according to their existing study program, while the intervention group experienced simulated scenarios based on a new pedagogical design, implemented one year later.

2.3. Sample and Setting

All students in the second year of their bachelor's degree in nursing at a university college in Norway were invited to participate in the study in 2013 (99 students) and in 2014 (91 students). The students were informed about the study in class and on the institution's digital learning platform. In December 2013, the 68 students who agreed to join the study participated in the scenario simulations as the control group; of these, 60 agreed to participate in the posttest. In December 2014, the 69 students who agreed to join took part in the scenario simulations as the intervention group; of these, 53 agreed to participate in the posttest. Of these 53 students, 40 participated in the stimulus test conducted before the simulation.

2.4. Development of the Instrument

The instrument had two sections. The first section collected demographic data: age, gender, whether the students had worked in the health service and, if so, for how long, and whether they had experience with simulation. The second section consisted of a multiple-choice questionnaire (MCQ) developed to function as the stimulus test as well as the posttest (Table 2).

Table 2.

Multiple-choice questionnaire.

| | Multiple choice questions – tick off the alternatives (the required number) you consider correct. |
|---|---|
| | Theme: Angina pectoris |
| 1 | How does Nitroglycerin (NG) work? Tick off the two alternatives you consider to be correct. |
| | (i) Reduces the transfer of pain in the nervous system |
| | (ii) Reduces venous flow to the heart |
| | (iii) Improves the level of oxygen (pO2) in the blood |
| | (iv) Can trigger dizziness and fall in blood pressure |
| 2 | What are the two symptoms that can be present during an attack of angina pectoris? Tick off the two alternatives you consider correct. |
| | (i) Chest pain decreases after intake of NG |
| | (ii) The patient's lips turn cyanotic |
| | (iii) Frequence of pulse and blood pressure will decrease |
| | (iv) The patient may become winded/breathless during exertion |
| 3 | Range in prioritized order the actions you would perform with a patient admitted to hospital with angina pectoris (1 most important – 4 least important) |
| | (i) Administration of oxygen |
| | (ii) Insertion of peripheral vein cannula |
| | (iii) Blood sampling and ECG |
| | (iv) Administration of nitroglycerin |
| | Theme: Cardiac arrest |
| 4 | Which statements are correct? Tick off two alternatives. |
| | (i) The most common cause of cardiac arrest is acute heart infarction |
| | (ii) Cardiac arrest implies that the infarction is large |
| | (iii) Resuscitation is effective whether it starts at once or after a few minutes |
| | (iv) Abnormal breathing in an unconscious patient indicates cardiac arrest |
| 5 | Which two statements about heart compression are correct? |
| | (i) Depth of compression should be 5-6 cm |
| | (ii) Number of compressions should be at least 100/minute |
| | (iii) The most important to prioritize the first minutes after cardiac arrest is effective heart compression |
| | (iv) Resuscitation should always start with 30 compressions in a row |
| 6 | Range in prioritized order actions with a patient with cardiac arrest |
| | (i) Alert others (call) |
| | (ii) Heart compression |
| | (iii) Ventilation |
| | (iv) Check if the patient has gotten a pulse again |
| | Theme: Hypovolemia/bleeding |
| 7 | Which two statements are correct concerning bleeding and blood transfusion? |
| | (i) Blood transfusion is required if hgb falls 20% |
| | (ii) Bleeding through the bandage after a hip operation indicates a large loss of blood |
| | (iii) Reactions to a transfusion may normally occur the first 15 minutes after the transfusion has started |
| | (iv) Blood transfusion is normally required when hgb-values < 7g/100ml |
| 8 | What are the symptoms of blood loss/development of shock? Tick off two alternatives you consider correct. |
| | (i) Low blood pressure (<90 mmHg) |
| | (ii) Warm and red skin color |
| | (iii) Slow and irregular pulse |
| | (iv) Increasing apathy/confusion |
| 9 | Tick off two actions you consider most important to prioritize with a patient developing shock |
| | (i) Administration of oxygen |
| | (ii) Insert a urinary catheter |
| | (iii) Intravenous hydration |
| | (iv) Raise head-end of bed to ease ventilation/respiration |
| | Theme: COPD |
| 10 | What is the meaning of a COPD patient's habitual spO2 values? Tick off/mark the alternative that is correct (one tick). |
| | (i) The patient's spO2 values during the best phase of the disease |
| | (ii) The patient's spO2 values during worsening of COPD |
| | (iii) The patient's spO2 values with COPD grade 3 or 4 |
| 11 | Which symptoms are typical during acute worsening of COPD? Tick off two alternatives you consider correct. |
| | (i) Restless and anxious patient |
| | (ii) Low values of O2 (spO2) and Co2 (spCo2) |
| | (iii) Inspirational stridor (difficult to breathe in) |
| | (iv) Expirational stridor (difficult to breathe out) |
| 12 | Tick off two actions you consider most important to prioritize with a patient with worsening COPD |
| | (i) Administer prescribed medications |
| | (ii) Abundant administration of oxygen |
| | (iii) Create a calming environment |
| | (iv) Measure O2 and CO2 in blood sample before treatment begins |
| | Theme: Diabetes/hypoglycemia |
| 13 | Which keywords match type-1 diabetes? Tick off two alternatives you consider correct. |
| | (i) Auto-immune disease |
| | (ii) Non-existent production of insulin |
| | (iii) Insulin resistance |
| | (iv) Part loss of insulin production |
| 14 | What are the symptoms in a patient with a mild degree of hypoglycemia? Tick off two alternatives you consider correct. |
| | (i) Loss of consciousness |
| | (ii) Hunger |
| | (iii) Diplopia |
| | (iv) Shivering |
| 15 | How do you handle an unconscious diabetic patient? Tick off one alternative. |
| | (i) As if the patient had hypoglycemia (give sugar) |
| | (ii) As if the patient had hyperglycemia (give insulin) |
| | (iii) Never treat the patient before you know the values of sugar in the blood |
| | Theme: Ileus/hypovolemia |
| 16 | Which situations can lead to hypovolemia? Tick off two alternatives you consider correct. |
| | (i) Cancer in the bowels |
| | (ii) The normal passage of intestinal content has stopped |
| | (iii) Paralysis of the bowels |
| | (iv) Feces leaks into the abdominal cavity |
| 17 | Which symptoms may be present during hypovolemia? Tick off two alternatives you consider correct. |
| | (i) Extended abdomen or dry mucous membranes |
| | (ii) Standing skin folds |
| | (iii) Abundant light-colored urine |
| | (iv) High blood pressure |
| 18 | Which two actions are the most important when one suspects that a patient has ileus? |
| | (i) Administer pain medication |
| | (ii) Administer a laxative |
| | (iii) Aspiration of ventricular content |
| | (iv) Careful stimulation of the bowels with soup |

The questionnaire consisted of 18 questions related to the deterioration of the patient's condition, 3 from each of the 6 different scenarios that students simulated. Six questions covered pathophysiology, for example, “Which situations can lead to hypovolemia?”; five questions covered symptoms, for example, “What are the two symptoms that can be present during an attack of angina pectoris?”; five questions covered nursing actions, for example “How do you handle an unconscious diabetic patient?”; and two questions covered prioritization of nursing actions, for example, “Range in prioritized order actions with a patient with cardiac arrest.” Of the 18 questions, 14 had 4 answer options, where students should mark off 2 correct answers; 2 had 3 answer options, where students should mark off 1 correct answer; and in the last 2 questions students were required to rank 4 answer options. The MCQ was developed by the facilitators, and content validity was established by experienced practicing nurses. The instrument had medium internal consistency, with Cronbach's alpha of 0.62 for the control group, 0.73 for the intervention group, and 0.62 for the stimulus test. These somewhat low numbers may be because the instrument focused on multiple content areas but had only 18 questions.
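For readers unfamiliar with the internal-consistency statistic reported above, the following is a minimal sketch of how Cronbach's alpha can be computed from an item-score matrix. The function name `cronbach_alpha` and the small data set are hypothetical and purely illustrative; they are not the study data, and the study's own values were obtained with SPSS.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    n_respondents = len(scores)
    k = len(scores[0])
    if n_respondents < 2 or k < 2:
        raise ValueError("need at least two respondents and two items")
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical scores: 5 respondents x 4 items, 1 = correct, 0 = incorrect.
example = [
    [1, 1, 0, 1],
    [0, 0, 0, 1],
    [1, 1, 1, 1],
    [0, 1, 0, 0],
    [1, 0, 1, 1],
]
print(f"alpha = {cronbach_alpha(example):.2f}")
```

Because alpha rises with the number of items as well as with their intercorrelation, a short test spanning several content areas tends to yield moderate values, which is in line with the explanation offered above.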

2.5. Data Collection and Analysis

Both posttests were completed as paper-and-pencil tests on a written form immediately after the last scenario simulation. This format was chosen to achieve the highest possible response rate [30]. The stimulus test for the intervention group was completed electronically through a digital learning platform one week before the SBL started.

Data analysis was performed with SPSS, version 22. Homogeneity between the groups was tested with descriptive summary statistics; then, knowledge scores were analyzed with descriptive statistics, and comparisons between the control and intervention groups were conducted with independent-samples t-tests. A paired-samples t-test was used to assess the difference between stimulus test and posttest mean knowledge scores in the intervention group. Effect size was computed using Cohen's d [31]. Age-related differences in scores were analyzed with analysis of variance (ANOVA).

2.6. Ethical Considerations

The study was approved by the university college where it was conducted and the Norwegian Social Science Data Services (project number 36135). Return of the questionnaire was considered to constitute consent to participate.

3. Results

Participant characteristics are shown in Table 3.

Table 3.

Characteristics of the participants.

| | Control (n = 60) | Intervention (n = 53) | t-test/χ² (p-values) |
|---|---|---|---|
| Age (M ± SD) | 23.5 ± 5.6 | 24.5 ± 6.7 | p = 0.355ᵃ |
| Gender | | | |
| Female, n (%) | 55 (91.7) | 50 (94.3) | χ² = 0.306 |
| Male, n (%) | 5 (8.3) | 3 (5.7) | p = 0.584 |
| Work experience in health care before starting the nursing bachelor | | | |
| Yes, n (%) | 47 (78.3) | 45 (84.9) | χ² = 0.803 |
| No, n (%) | 13 (21.7) | 8 (15.1) | p = 0.370 |
| Years of work experience in health care (M ± SD) | 1.3 ± 1.5 | 2.4 ± 3.7 | p = 0.038ᵃ |
| Experience with critically ill patients | | | |
| Yes, n (%) | 21 (36.2) | 19 (37.3) | χ² = 0.013 |
| No, n (%) | 37 (63.8) | 32 (62.7) | p = 0.910 |
| Experience with simulation | | | |
| Yes, n (%) | 6 (10) | 5 (9.6) | χ² = 0.005 |
| No, n (%) | 54 (90) | 47 (90.4) | p = 0.946 |

ᵃ t-test.

There was homogeneity on all tested characteristics between the two groups of students who participated in the posttest, with the exception of years of work experience (Table 3). We did not control for the differences in years of work experience at baseline.
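As an illustration of the categorical comparisons in Table 3, the sketch below recomputes a Pearson χ² statistic for a 2 × 2 table from the reported counts. It assumes an uncorrected test (no continuity correction) with one degree of freedom; the article does not state which variant SPSS was asked to report, and the function name `pearson_chi2_2x2` is hypothetical.

```python
import math


def pearson_chi2_2x2(a, b, c, d):
    """Uncorrected Pearson chi-square for the 2 x 2 table [[a, b], [c, d]].

    Returns (chi2, p), where p is the upper-tail probability for df = 1.
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For one degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Counts from Table 3: rows are control and intervention groups.
gender = pearson_chi2_2x2(55, 5, 50, 3)      # female / male
work = pearson_chi2_2x2(47, 13, 45, 8)       # prior work experience yes / no

print(f"gender: chi2 = {gender[0]:.3f}")
print(f"work experience: chi2 = {work[0]:.3f}, p = {work[1]:.3f}")
```

Under these assumptions the statistics for gender and for prior work experience come out at the values listed in Table 3, which suggests that the reported tests were uncorrected Pearson χ² tests.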

Mean scores and standard deviations were calculated among all students who completed the knowledge test. Posttest knowledge scores were significantly higher in the intervention group (M = 11.2, SD = 3.5) than in the control group (M = 8.9, SD = 3.2), p < 0.001. Effect size was d = 0.68, considered a medium-sized effect [22].
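The effect size can be approximated from the summary statistics alone. The sketch below assumes the pooled-standard-deviation form of Cohen's d and a pooled-variance independent-samples t statistic; the article does not specify which variant was used, and the function names are hypothetical. Recomputing from the rounded means and standard deviations gives a d of about 0.69, close to the reported 0.68; the small gap is presumably due to rounding of the inputs.

```python
import math


def pooled_sd(n1, sd1, n2, sd2):
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))


def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Cohen's d: mean difference divided by the pooled standard deviation."""
    return (m2 - m1) / pooled_sd(n1, sd1, n2, sd2)


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Independent-samples t statistic assuming equal variances."""
    se = pooled_sd(n1, sd1, n2, sd2) * math.sqrt(1 / n1 + 1 / n2)
    return (m2 - m1) / se


# Summary statistics reported in the text (control first, then intervention).
control = (8.9, 3.2, 60)
intervention = (11.2, 3.5, 53)

d = cohens_d(*control, *intervention)
t = pooled_t(*control, *intervention)
print(f"d = {d:.2f}, t = {t:.2f}, df = {control[2] + intervention[2] - 2}")
```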

The participants were divided into three age categories. Mean knowledge score for participants <22 years old in the control group was 8.5 (n=37) and in the intervention group 10.5 (n=31); participants aged 22–26 years had mean score of 9.1 (n=15) in the control group and 11.8 (n=8) in the intervention group; and mean score for participants >26 years was 10.6 (n=8) in the control group and 12.4 (n=14) in the intervention group. These differences were not statistically significant.

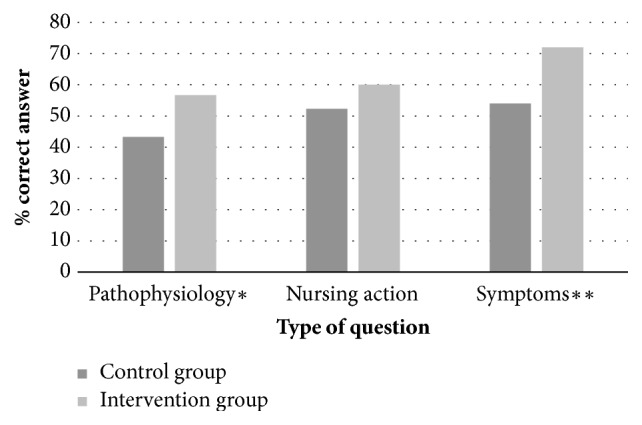

The intervention group had significantly higher scores than the control group on questions concerning pathophysiology knowledge (p=0.001) and knowledge of symptoms (p<0.001) (Figure 1). Within both groups, questions concerning pathophysiology had lower scores than questions about symptoms and nursing actions.

Figure 1.

Posttest knowledge scores before and after the intervention. ∗p=0.001 ∗∗p<0.001.

Forty students in the intervention group completed both the stimulus test and the posttest; their scores were significantly higher on the posttest than on the stimulus test (M = 12.0, SD = 3.2 versus M = 8.9, SD = 3.1), p < 0.001. Effect size was d = 1.1, considered a large effect [29].

4. Discussion

The purpose of the study was to examine the role of the educational intervention in the students' acquisition of knowledge. The knowledge test results show significantly greater improvement in posttest scores in the intervention group than in the control group. One component of the intervention was the introduction of a stimulus test, and because we wanted to investigate the effect of this component, no such test was given to the control group. It was found that there was a significant improvement in posttest scores compared with scores on the stimulus test. In the following, we will discuss the specific elements of the intervention that may have impacted the difference in the results between the two groups.

First, introduction of a knowledge test before the scenario simulation may stimulate students to strengthen their cognitive learning and is one aspect of participant preparation as described in the International Nursing Association for Clinical Simulation and Learning (INACSL) “Standards of Best Practice: Simulation℠” [32]. This is because a stimulus for learning can make the students more aware of both the knowledge they have and the knowledge they lack. It can thus serve as a “repetition trigger” prompting students to brush up on relevant topics such as pathophysiology as a preparation for simulation exercises. Baseline theoretical knowledge is necessary for competence acquisition through SBL [33]. Similarly, Flood and Higbie [34] found that a relevant didactic lecture could be useful to strengthen cognitive learning on blood transfusion. Discovering one's own lack of knowledge in a test conducted prior to the simulation may encourage more preparation and may furthermore increase students' attention during simulation and debriefing because they recognize the relevance of the test content as they practice and reflect. This strengthened knowledge base gives more substance to students' reflections and problem analysis during debriefing and thereby improves their knowledge development [23].

Viewing of video-recordings by students in the roles of nurses was the second component of the intervention that we see as potentially helpful. Video-assisted debriefing led by a facilitator is often used in SBL, although its effect is uncertain [35]. By completing the scenario and then watching their own video-recorded performance immediately afterward, the students who had acted as nurses got an opportunity to assess themselves; similar to the stimulus test, self-assessment can make students aware of both the knowledge they possess and the knowledge they lack to help them perform necessary actions. Video-based self-assessment in particular can help students develop awareness of their strengths and weaknesses [36]. However, it has been reported that some students “felt ashamed” when watching themselves onscreen [37]; to preclude this, we decided that our participants should view their performance alone, without interference of teacher and peers, so as to focus on learning, not on the judgment from others. Thereby, the students who had acted in the scenario were also given the opportunity to gain the observer's perspective. We expected that this would have reduced stress and thereby provided the opportunity for improved preparation before debriefing, also leaving them readier to focus on knowledge development together with their peers during the debriefing session.

The third new element of the intervention was the structured observation form, which focused on specific actions in each scenario. In this study, both the observers and the facilitator used the same observation form, which may have contributed to a clear focus on knowledge of the signs of deteriorating conditions; when students respond appropriately and then verbalize their deliberations, knowledge application is taken to be demonstrated [33]. An observation form functions as a tool that mediates learning [38, 39] and draws students' attention to the importance of change in patients' condition. The use of observation tools has been reported to engage the observer in learning [40–42] and facilitate observational learning by focusing on important aspects [43–45]. The observation form may have triggered assessments of actions based on specific professional knowledge rather than an overall approach.

A schedule of six scenarios in the course of two days afforded the students the chance for repetitive practice of important actions involved in handling deteriorating patients. Although the scenarios were different, the focus remained on key observations and actions to counteract deterioration, allowing ample practice for observing and handling common events such as low blood pressure or oxygen deficiency. Repetition is recommended as a best practice in learning [46]. Marton [47] argues that students need to be exposed not only to similarities but also to differences, in order to connect knowledge to different situations. The observation form highlighted key observations and may have helped the students to verbalize these aspects, make them explicit, and thereby promote transfer of knowledge from one situation to another. The students were exposed to many variations through the scenarios, and this may have improved their knowledge about symptoms, explaining why they had the highest score on symptoms.

Students' knowledge scores increased with age, in both groups. The finding was not significant but could indicate a trend. Though Shinnick, Woo, and Evangelista [48] claim that age is not a predictor of knowledge gain, increasing age may nevertheless indicate greater beneficial experience; thus, the stimulus test may be of even greater importance to younger students as a stimulus to learning—perhaps especially during SBL, for which baseline theoretical knowledge is one of several necessary antecedents [3, 33].

Both groups had the highest scores on knowledge of symptoms, lower on appropriate nursing actions, and lowest on knowledge of pathophysiology. We can only speculate about this finding: it may be easier to acquire knowledge about symptoms and actions because this type of knowledge can be enhanced through visualization, that is, by handling the actual symptoms of deteriorating patients, watching oneself on video, and taking the observer role. The use of manikins can be advantageous in this regard because symptoms can be portrayed via the manikin's software, which can increase students' attention to the symptoms. It is also possible that pathophysiology requires a deeper understanding, meaning that it involves knowledge as justification for action. Recognizing symptoms in time is an important part of identifying signs of deteriorating conditions [12], and therefore high scores on knowledge of symptoms are a valuable finding. The intervention group had significantly higher scores than the control group on knowledge of pathophysiology and symptoms. This indicates that the new components of stimulus test, video viewing, and observation forms positively influenced the students' acquisition of knowledge. Both groups of students had limited clinical experience at this point in their education, which may explain why they did not achieve higher scores in general.

5. Limitations of the Study

The findings of this study may be of interest to educators because enhancing students' knowledge acquisition is an increasingly important issue in SBL. The results of this study, however, are based on a small sample recruited from only one school of nursing, which limits their generalizability. We used a convenience sample in this study, and the intervention group had on average 1.1 years more work experience than the control group. It is possible, though difficult to gauge, that this difference in work experience influenced the students' overall scores. However, there was no significant difference in the two student groups' experience with critically ill patients.

Although the use of MCQs is a common approach in knowledge assessment, there are discussions about whether they really fit the purpose [49, 50]. Here, because the stimulus test and posttest consist of the same questions, we are aware that students may remember correct answers from the stimulus test and therefore score higher on the posttest. This may mean that students have primarily gained knowledge from the stimulus test and not the other components of the intervention. Nevertheless, the significant increase in scores between the stimulus test and posttest in the intervention group suggests that the other components are also decisive for the students' knowledge acquisition. In addition, knowledge was measured only once after the simulation, thus yielding no information on long-term knowledge retention. Finally, correct answers on MCQs do not necessarily correspond with students' actions in real situations of patient deterioration.

6. Conclusion

Students' knowledge scores were compared before and after an educational intervention during SBL. The results showed significantly greater improvement of scores in the intervention group than in the control group. Based on these findings, we assume that a pedagogical underpinning of SBL that emphasizes improving students' prerequisites for learning and strengthening the debriefing can positively influence students' knowledge acquisition.

Data Availability

The underlying data will be available through the USN Research Data Archive, DOI 10.23642/usn.6148562.

Conflicts of Interest

The authors declare that there are no conflicts of interest.

References

- 1. Gaba D. The future vision of simulation in health care. BMJ Quality & Safety. 2004;13:i2–i10. doi: 10.1136/qshc.2004.009878.

- 2. Nehring W. M., Lashley F. R. Nursing Simulation: A Review of the Past 40 Years. Simulation & Gaming. 2009;40(4):528–552. doi: 10.1177/1046878109332282.

- 3. Buykx P., Cooper S., Kinsman L., et al. Patient deterioration simulation experiences: Impact on teaching and learning. Collegian. 2012;19(3):125–129. doi: 10.1016/j.colegn.2012.03.011.

- 4. Hatlevik I. K. R. The theory-practice relationship: Reflective skills and theoretical knowledge as key factors in bridging the gap between theory and practice in initial nursing education. Journal of Advanced Nursing. 2012;68(4):868–877. doi: 10.1111/j.1365-2648.2011.05789.x.

- 5. Laursen P. F. Multiple bridges between theory and practice. In: Jens-Christian Smeby M. S., editor. From vocational to professional education: educating for social welfare. London, UK: Routledge; 2015. pp. 89–104.

- 6. Merriman C. D., Stayt L. C., Ricketts B. Comparing the effectiveness of clinical simulation versus didactic methods to teach undergraduate adult nursing students to recognize and assess the deteriorating patient. Clinical Simulation in Nursing. 2014;10(3):e119–e127. doi: 10.1016/j.ecns.2013.09.004.

- 7. Kelly M. A., Forber J., Conlon L., Roche M., Stasa H. Empowering the registered nurses of tomorrow: Students' perspectives of a simulation experience for recognising and managing a deteriorating patient. Nurse Education Today. 2014;34(5):724–729. doi: 10.1016/j.nedt.2013.08.014.

- 8. DeBourgh G. A., Prion S. K. Using Simulation to Teach Prelicensure Nursing Students to Minimize Patient Risk and Harm. Clinical Simulation in Nursing. 2011;7(2):e47–e56. doi: 10.1016/j.ecns.2009.12.009.

- 9. Onda E. L. Situated Cognition: Its Relationship to Simulation in Nursing Education. Clinical Simulation in Nursing. 2012;8(7):e273–e280. doi: 10.1016/j.ecns.2010.11.004.

- 10. Tun J. K., Alinier G., Tang J., Kneebone R. L. Redefining Simulation Fidelity for Healthcare Education. Simulation & Gaming. 2015;46(2):159–174. doi: 10.1177/1046878115576103.

- 11. Lavoie P., Pepin J., Cossette S. Development of a post-simulation debriefing intervention to prepare nurses and nursing students to care for deteriorating patients. Nurse Education in Practice. 2015;15(3):181–191. doi: 10.1016/j.nepr.2015.01.006.

- 12. Fisher D., King L. An integrative literature review on preparing nursing students through simulation to recognize and respond to the deteriorating patient. Journal of Advanced Nursing. 2013;69(11):2375–2388. doi: 10.1111/jan.12174.

- 13. Lapkin S., Levett-Jones T., Bellchambers H., Fernandez R. Effectiveness of Patient Simulation Manikins in Teaching Clinical Reasoning Skills to Undergraduate Nursing Students: A Systematic Review. Clinical Simulation in Nursing. 2010;6(6):e207–e222. doi: 10.1016/j.ecns.2010.05.005.

- 14. Oh P.-J., Jeon K. D., Koh M. S. The effects of simulation-based learning using standardized patients in nursing students: A meta-analysis. Nurse Education Today. 2015;35(5):e6–e15. doi: 10.1016/j.nedt.2015.01.019.

- 15. Shin S., Park J.-H., Kim J.-H. Effectiveness of patient simulation in nursing education: Meta-analysis. Nurse Education Today. 2015;35(1):176–182. doi: 10.1016/j.nedt.2014.09.009.

- 16. Yuan H. B., Williams B. A., Fang J. B., Ye Q. H. A systematic review of selected evidence on improving knowledge and skills through high-fidelity simulation. Nurse Education Today. 2012;32(3):294–298. doi: 10.1016/j.nedt.2011.07.010.

- 17. Foronda C., Liu S., Bauman E. B. Evaluation of simulation in undergraduate nurse education: An integrative review. Clinical Simulation in Nursing. 2013;9(10):e409–e416. doi: 10.1016/j.ecns.2012.11.003.

- 18. Connell C. J., Endacott R., Jackman J. A., Kiprillis N. R., Sparkes L. M., Cooper S. J. The effectiveness of education in the recognition and management of deteriorating patients: A systematic review. Nurse Education Today. 2016;44:133–145. doi: 10.1016/j.nedt.2016.06.001.

- 19. Lapkin S., Levett-Jones T., Gilligan C. A systematic review of the effectiveness of interprofessional education in health professional programs. Nurse Education Today. 2013;33(2):90–102. doi: 10.1016/j.nedt.2011.11.006.

- 20. Buykx P., Kinsman L., Cooper S., et al. FIRST2ACT: Educating nurses to identify patient deterioration - A theory-based model for best practice simulation education. Nurse Education Today. 2011;31(7):687–693. doi: 10.1016/j.nedt.2011.03.006.

- 21. Liaw S. Y., Scherpbier A., Rethans J.-J., Klainin-Yobas P. Assessment for simulation learning outcomes: A comparison of knowledge and self-reported confidence with observed clinical performance. Nurse Education Today. 2012;32(6):e35–e39. doi: 10.1016/j.nedt.2011.10.006.

- 22. Bailey P. H., Mossey S., Moroso S., Cloutier J. D., Love A. Implications of multiple-choice testing in nursing education. Nurse Education Today. 2012;32(6):e40–e44. doi: 10.1016/j.nedt.2011.09.011.

- 23. Boud D., Falchikov N. Aligning assessment with long-term learning. Assessment & Evaluation in Higher Education. 2006;31(4):399–413. doi: 10.1080/02602930600679050.

- 24. Kolb A. Y., Kolb D. A. Learning styles and learning spaces: enhancing experiential learning in higher education. Academy of Management Learning and Education (AMLE). 2005;4(2):193–212. doi: 10.5465/amle.2005.17268566.

- 25. Rourke L., Schmidt M., Garga N. Theory-based research of high fidelity simulation use in nursing education: A review of the literature. International Journal of Nursing Education Scholarship. 2010;7(1, article no. 11). doi: 10.2202/1548-923X.1965.

- 26. Issenberg S. B., McGaghie W. C., Petrusa E. R., Gordon D. L., Scalese R. J. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Medical Teacher. 2005;27(1):10–28. doi: 10.1080/01421590500046924.

- 27. Schön D. A. Educating the Reflective Practitioner. San Francisco, Calif, USA: Jossey-Bass; 1987.

- 28. Simon R., Raemer D. B., Rudolph J. W. Debriefing Assessment for Simulation in Healthcare (DASH)© Rater’s Handbook. Boston, Mass, USA: Center for Medical Simulation; 2010.

- 29. Polit D. F., Beck C. T. Nursing Research: generating and assessing evidence for nursing practice. 10th ed. Philadelphia, Penn, USA: Wolters Kluwer; 2017.

- 30. Hohwü L., Lyshol H., Gissler M., Jonsson S. H., Petzold M., Obel C. Web-based versus traditional paper questionnaires: a mixed-mode survey with a nordic perspective. Journal of Medical Internet Research. 2013;15(8):e173. doi: 10.2196/jmir.2595.

- 31. Cohen J. Statistical Power Analysis for the Behavioral Sciences. Hillsdale, NJ, USA: L. Erlbaum Associates; 1988.

- 32. International Nursing Association for Clinical Simulation & Learning. INACSL Standards of Best Practice: SimulationSM: Simulation Design. Clinical Simulation in Nursing. 2016;12:5–12.

- 33. Hansen J., Bratt M. Competence Acquisition Using Simulated Learning Experiences: A Concept Analysis. Nursing Education Perspectives. 2015;36(2):102–107. doi: 10.5480/13-1198.

- 34. Flood L. S., Higbie J. A comparative assessment of nursing students' cognitive knowledge of blood transfusion using lecture and simulation. Nurse Education in Practice. 2016;16(1):8–13. doi: 10.1016/j.nepr.2015.05.008.

- 35. Levett-Jones T., Lapkin S. A systematic review of the effectiveness of simulation debriefing in health professional education. Nurse Education Today. 2014;34(6):e58–e63. doi: 10.1016/j.nedt.2013.09.020.

- 36. Yoo M. S., Son Y. J., Kim Y. S., Park J. H. Video-based self-assessment: Implementation and evaluation in an undergraduate nursing course. Nurse Education Today. 2009;29(6):585–589. doi: 10.1016/j.nedt.2008.12.008.

- 37. Pereira J., Echeazarra L., Sanz-Santamaría S., Gutiérrez J. Student-generated online videos to develop cross-curricular and curricular competencies in Nursing Studies. Computers in Human Behavior. 2014;31(1):580–590. doi: 10.1016/j.chb.2013.06.011.

- 38. Säljö R. Lärande i praktiken: ett sociokulturellt perspektiv. Stockholm, Sweden: Prisma; 2000.

- 39. Wenger E. Communities of practice: learning, meaning, and identity. Learning in doing: social, cognitive, and computational perspectives. Cambridge, UK: Cambridge University Press; 1998.

- 40. Schaar G. L., Ostendorf M. J., Kinner T. J. Simulation: Linking quality and safety education for nurses competencies to the observer role. Clinical Simulation in Nursing. 2013;9(9):e407–e410. doi: 10.1016/j.ecns.2013.08.001.

- 41. Reime M. H., Johnsgaard T., Kvam F. I., et al. Learning by viewing versus learning by doing: A comparative study of observer and participant experiences during an interprofessional simulation training. Journal of Interprofessional Care. 2017;31(1):51–58. doi: 10.1080/13561820.2016.1233390.

- 42. Wighus M., Bjørk I. T. An educational intervention to enhance clinical skills learning: Experiences of nursing students and teachers. Nurse Education in Practice. 2018;29:143–149. doi: 10.1016/j.nepr.2018.01.004.

- 43. Krogh C. L., Ringsted C., Kromann C. B., et al. Effect of engaging trainees by assessing peer performance: A randomised controlled trial using simulated patient scenarios. BioMed Research International. 2014;2014:7. doi: 10.1155/2014/610591.

- 44. Kaplan B. G., Abraham C., Gary R. Effects of participation vs. observation of a simulation experience on testing outcomes: implications for logistical planning for a school of nursing. International Journal of Nursing Education Scholarship. 2012;9(1, Article 14). doi: 10.1515/1548-923X.2398.

- 45. Stegmann K., Pilz F., Siebeck M., Fischer F. Vicarious learning during simulations: Is it more effective than hands-on training? Medical Education. 2012;46(10):1001–1008. doi: 10.1111/j.1365-2923.2012.04344.x.

- 46. Cook D. A., Hamstra S. J., Brydges R., et al. Comparative effectiveness of instructional design features in simulation-based education: Systematic review and meta-analysis. Medical Teacher. 2013;35(1):e867–e898. doi: 10.3109/0142159X.2012.714886.

- 47. Marton F. Sameness and difference in transfer. Journal of the Learning Sciences. 2006;15(4):499–535. doi: 10.1207/s15327809jls1504_3.

- 48. Shinnick M. A., Woo M., Evangelista L. S. Predictors of Knowledge Gains Using Simulation in the Education of Prelicensure Nursing Students. Journal of Professional Nursing. 2012;28(1):41–47. doi: 10.1016/j.profnurs.2011.06.006.

- 49. Kardong-Edgren S., Lungstrom N., Bendel R. VitalSim® Versus SimMan®: A Comparison of BSN Student Test Scores, Knowledge Retention, and Satisfaction. Clinical Simulation in Nursing. 2009;5(3):e105–e111. doi: 10.1016/j.ecns.2009.01.007.

- 50. Levett-Jones T., Lapkin S., Hoffman K., Arthur C., Roche J. Examining the impact of high and medium fidelity simulation experiences on nursing students' knowledge acquisition. Nurse Education in Practice. 2011;11(6):380–383. doi: 10.1016/j.nepr.2011.03.014.
