Abstract

Background

Policy encourages health care providers to listen and respond to feedback from patients, expecting that it will enhance care experiences. Enhancement of patients’ experiences may not yet be a reality, particularly in primary health care settings.

Objective

To identify the issues that influence the use and impact of feedback in this context.

Design and Setting

A realist synthesis of studies of the use of patient feedback within primary health care settings.

Methods

Structured review of published studies between 1971 and January 2015.

Results

Eighteen studies were reported in 20 papers. Eleven studies reported patient survey scores as a primary outcome. There is little evidence that formal patient feedback led to enhanced experiences. The likelihood of patient feedback to health care staff stimulating improvements in future patients’ experiences appears to be influenced predominantly by staff perceptions of the purpose of such feedback; the validity and type of data that are collected; where, when and how the data are presented to primary health care teams or practitioners; and teams’ capacity to change.

Conclusions

There is limited research into how patient feedback has been used in primary health care practices or its usefulness as a stimulant to improve health care experience. Using a realist synthesis approach, we have identified a number of contextual and intervention-related factors that appear to influence the likelihood that practitioners will listen to, act on and achieve improvements in patient experience. Consideration of these may support research and improvement work in this area.

Keywords: Access and evaluation, health care, health care quality, patient advocacy, patient satisfaction, quality assurance, quality of health care, total quality management

Introduction

Policy makers, consumers and the general public are in favour of public reporting of quality of health care but research shows that such information is rarely searched for or used by consumers or health care purchasers and often misunderstood by consumers (1).

In the UK it is argued that public reporting of quality of care, and in particular patient feedback about health care experiences, is needed to enhance the transparency and accountability of health care (2–6). Listening and responding to patients’ feedback about their experiences of health care is viewed as fundamental to achieving safe, effective and person-centred care (5,7–13).

Internationally, patient evaluations of care have become a key feature in health care quality systems, and patient survey ratings have existed in the quality and outcomes framework within general practice contracts in the UK since 2004. Patient experience reports for all GP practices in the UK are now publicly available.

UK policy assumes that such sharing of patient feedback with general practice teams will improve future care experiences (2,3,14,15). Variability in patients’ reported evaluations of general practice care continues to prevail however (16,17). While perhaps a reflection of variability in care experience, there is indication that much of this variability can be alternatively explained by socio-demographic factors. In particular, patient ratings of GP-patient communication have been found to be significantly influenced by age, gender, housing, ethnicity, employment status, the GP practice and practice size as well as random error (18–20). This ‘noise’ associated with the variance in patients’ experiences of care limits our ability to fully understand the role of feedback as a stimulant for improving care experiences.

The lack of change in survey scores is often attributed to the methodological challenges associated with surveys, namely their ceiling effect (21), and their use as a proxy indicator of the quality of GP care has been contested by GPs. GPs have raised concerns about the validity, reliability and appropriateness of patient experience ratings. Many have questioned the representativeness of survey samples, and the validity of, and biases they perceive to be inherent in, survey items and survey administration processes (22,23). Statistical examination of survey responses has, however, demonstrated that such concerns are largely unfounded. Correlations all but disappear when factors such as gender, age, ethnicity, housing and employment status are adjusted for (18,24).

In addition to GP concerns about the validity and reliability of survey results, evidence from studies examining the impact of patient feedback at a health care system level remains equivocal. Some have reported some impact (13,25) but impact is more likely to be related to other incentives (mainly linked to GP contracts in the UK) than the influence of the feedback itself (23,25,26).

Few would disagree however with the importance of ensuring positive experiences of health care and indeed we know that patient evaluations of their experiences of care can be influenced by improvements in care provision (24). However, how effective patient feedback is as a stimulant for improvement or how best the impact of this intervention that has considerable costs might be optimized remains unclear.

To date, most studies examining the influence of patient feedback at practice or practitioner level have concentrated on practitioners’ views of patient feedback or considered patient evaluations of care as a sole outcome. Other possible impacts at practitioner or practice level have not been clearly synthesized, and the ways in which patient feedback operates at these levels are thus poorly understood. We therefore conducted a realist synthesis to address this evidence gap. Our research questions were:

Q1 Is the use of formal patient feedback at the individual health care team or practitioner level associated with changes in future patients’ experiences in primary health care settings?

Q2 What appears to influence the use of formal patient feedback in primary health care teams?

Q3 What appears to influence the effectiveness of efforts (stimulated by patient feedback) aimed at improving future patients’ experiences in primary health care?

The term ‘patient feedback’ is often used to describe a range of concepts including patient satisfaction, experience, involvement, expectations, preferences, evaluation and patient reported outcome measures often causing confusion. We use the term ‘patient feedback’ to describe various forms of formal feedback including patients’ satisfaction levels, experience, views, accounts and evaluations of general practice care in relation to accessibility, continuity, quality of consultations and customer care.

Methods

A realist synthesis is an explanatory analysis of evidence to seek to understand what works for whom, in what circumstances, and how. Realist syntheses test programme theories—explanations of how an intervention is meant to work and its anticipated outcome(s) (official expectations) against empirical findings (27–29). Programme theories are derived by exploratory work with key stakeholders and/or analysis of intervention documentation (28). They are then refined through analysis of empirical evidence such that the context (C) in which the intervention is placed and how this affects reasoning and actions (Mechanisms—M) of the receivers of the intervention and how mechanisms influence outcomes (O) are made explicit. Such explanations are typically expressed as CMO configurations: C + M = O (28,29).
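As a reading aid, the CMO shorthand can be pictured as a simple record with three fields. The sketch below is a minimal illustration only: the class name `CMOConfiguration`, the method `as_formula` and the example wording are assumptions introduced here, and the example is placeholder text rather than one of the configurations derived in this review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CMOConfiguration:
    """One context-mechanism-outcome statement (hypothetical helper, not from the paper)."""

    context: str    # C: the setting in which the intervention is placed
    mechanism: str  # M: reasoning and actions of those receiving the intervention
    outcome: str    # O: what follows when the mechanism operates in that context

    def as_formula(self) -> str:
        # Mirrors the shorthand "C + M = O" used in the text.
        return f"{self.context} + {self.mechanism} = {self.outcome}"


# Placeholder wording for illustration only; not a finding of this review.
example = CMOConfiguration(
    context="feedback shared for developmental purposes",
    mechanism="staff judge the data to be credible",
    outcome="staff attempt to change practice",
)
print(example.as_formula())
```

Keeping the three elements as separate fields makes it explicit that an outcome is always read against a stated context and mechanism, which is the point of the realist notation.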

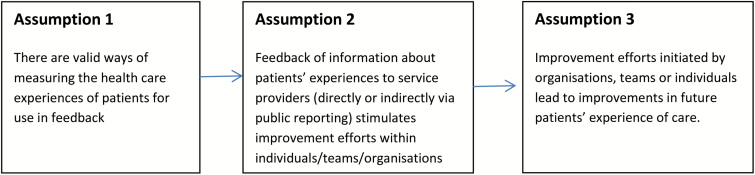

We derived our initial programme theory about the impact of patient feedback in general practice by using narrative policy analysis principles (30) to analyse NHS health policy/guidance documents relating to the use of patient feedback from 1998 to 2012 [Quality and Outcomes Framework (QOF) was introduced in 2004. However policy decisions prior to its introduction were also examined in an attempt to understand the implicit or explicit assumptions that led to the introduction of patient surveys as part of the QOF.]. Examination of the content in these policy documents uncovered three official assumptions by policy makers (Fig. 1).

Figure 1.

Initial programme theory.

The literature search process was similar to the search strategy used in a systematic review. Searches were conducted in the Cochrane Systematic Review library, MEDLINE, PsycINFO and CINAHL databases between 1971 and January 2015 to identify systematic reviews, research studies or quality improvement reports. The search strategy was as follows:

#1 patient evaluation$ OR patient feedback OR patient N2 feedback OR patient satisfaction OR patient N2 satisfaction OR patient rating$ OR patient N2 rating$ OR patient survey OR patient N2 survey OR patient view$ OR patient N2 view$ OR patient prefer$ OR patient N2 prefer OR patient complaint$ OR patient OR patient experience$ OR patient N2 experience$

#2 (MM ‘Quality Indicators, Health Care’) OR (MM ‘Total Quality Management’) OR (MM ‘Quality Assurance, Health Care’) OR (MM ‘Management Quality Circles’) OR (MM ‘Health Care Quality, Access, and Evaluation’) OR (MM ‘Quality of Health Care’)

#1 and #2
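The way the two term sets combine can be sketched in a few lines of code. This is an illustrative sketch only: the helper `or_block` is hypothetical, only a small subset of the terms from #1 and #2 is shown, and the operators are copied as written in the strategy above rather than validated against any particular database interface.

```python
def or_block(terms):
    """Join search terms with OR and wrap the result in parentheses (hypothetical helper)."""
    return "(" + " OR ".join(terms) + ")"


# Subset of the free-text terms in #1, copied as written above.
free_text_terms = ["patient feedback", "patient N2 feedback", "patient satisfaction"]

# Subset of the subject headings in #2, copied as written above.
subject_headings = ['MM "Total Quality Management"', 'MM "Quality of Health Care"']

# "#1 and #2": a record must match at least one term from each set.
query = or_block(free_text_terms) + " AND " + or_block(f"({h})" for h in subject_headings)
print(query)
```

Broad OR blocks combined with a single AND favour sensitivity over precision, which fits the large number of records retrieved and the hand filtering described for this review.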

We included:

1. Studies that examined how patient feedback to primary health care teams about their experiences of using the service has been used

2. Studies that examined the impact of patient feedback within primary health care services

We excluded papers that met the following exclusion criteria:

1. Studies about patients’ experiences of ill health that do not include feedback about the person’s or population’s experiences of using the health service, e.g. papers about lived experience of living with specific conditions.

2. Studies where it cannot be clearly ascertained whether patient feedback was shared with health care staff, e.g. studies that examine trends over time, where changes can be influenced by a number of variables including contractual requirements for service provision and delivery.

3. Studies that focus on the patients’ experiences of a particular treatment or intervention

4. Methodological papers that only discuss the development of measures of patient experience or satisfaction.

5. Studies of the impact of quality reports which include a range of quality measures, one of which is patient experience scores.

6. Studies that focus on the use of patient feedback within medical education including post graduate training, e.g. GP registrar training.

7. Studies that focus on patient reported outcomes measures (PROMs).

8. Discussion papers or editorials about how health care providers have used patient feedback and/or the impact of such feedback.
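The screening logic implied by the two lists above (meet at least one inclusion criterion and trigger no exclusion criterion) can be sketched as follows. This is a minimal sketch under stated assumptions: the class `ScreenedPaper`, its field names and the function `is_included` are hypothetical and are not taken from the authors' data extraction form.

```python
from dataclasses import dataclass, field


@dataclass
class ScreenedPaper:
    """Screening decisions recorded for one full-text paper (hypothetical structure)."""

    examines_use_of_feedback: bool = False     # inclusion criterion 1
    examines_impact_of_feedback: bool = False  # inclusion criterion 2
    # Numbers (1-8) of any exclusion criteria the paper meets.
    exclusion_reasons: list[int] = field(default_factory=list)


def is_included(paper: ScreenedPaper) -> bool:
    """Include a paper if it meets either inclusion criterion and no exclusion criterion."""
    meets_inclusion = paper.examines_use_of_feedback or paper.examines_impact_of_feedback
    return meets_inclusion and not paper.exclusion_reasons


# A paper on impact with no exclusions is included; one that focuses on PROMs
# (exclusion criterion 7) is not, even though it meets an inclusion criterion.
print(is_included(ScreenedPaper(examines_impact_of_feedback=True)))
print(is_included(ScreenedPaper(examines_use_of_feedback=True, exclusion_reasons=[7])))
```

Recording the exclusion criterion numbers, rather than a single yes/no flag, keeps the reason for each exclusion available when reviewers resolve disagreements.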

Databases were searched by the first author (DJB). Screening of titles and abstracts was undertaken by DJB, with BG and VE each checking a sample of 100. Full papers were independently assessed against the inclusion/exclusion criteria by all authors; disagreements were resolved through discussion. Included papers’ reference lists were reviewed to identify further studies. All studies were assessed for rigour and relevance (27,31) and key details were collated in a structured data extraction form (available from author on request).

A narrative synthesis of study findings was undertaken because the heterogeneity of studies precluded a meaningful quantitative synthesis (32). Within the synthesis, we first examined the studies, specifically looking for evidence that either confirmed or refuted each of the programme theory assumptions. To guide this process we used working hypotheses, derived initially from the programme theory and then inductively as we analysed the data. The following example describes the process, which was repeated for each assumption, in more detail.

Example of testing working hypotheses

To examine assumption 3—‘improvement efforts initiated by organizations, teams or individuals lead to improvements in future patients’ experience of health care’—we collated all data relating to the impact of improvement efforts; looked for frequency of evidence of improvement in patients’ experiences and purposefully looked for evidence of other impact types. On noticing that patient experiences rarely improved we developed a working hypothesis that impact was not always seen as a result of teams’ efforts. We then explored the factors detailed in papers that appeared to make this more or less likely. The factors identified were then classified as context or mechanisms in accordance with the definitions provided by Pawson and Tilley (33) where context is the culture where the intervention is placed and mechanisms are reasoning and actions of the social actors.

Data from this process were used to populate a CMO configuration and refine our initial programme theory, both of which we introduce a little later. These seek to explain the conditions in which patient feedback would fire particular mechanisms that were more likely to lead to improved patient experiences.

Results

The electronic search identified 8718 (not de-duplicated) publications. Twenty-seven full-text articles were independently assessed by DJB, VE and BG and 15 met the inclusion criteria. Three further papers were found in reference lists of included papers (Fig. 2).

Figure 2.

Literature review flowchart.

Twenty papers reported data from 18 studies. For clarity we subsequently refer to findings from the 18 studies, which were conducted in the UK, Netherlands, USA and Hong Kong.

Summary of study designs

We found one systematic review of the impact of feedback at the individual practitioner level (34). All but one primary study in this review used patient surveys. All primary studies reported patient questionnaires as the mode of patient feedback to clinicians or teams. Eleven intervention studies, of which three were quality improvement reports, examined the impact of patient feedback on future experience (6,8,9,11,35–41,45). Six studies examined GP practice staff views and reports of use of patient feedback (22,42–46). One (10) surveyed practices to identify their survey tools and improvements.

Most (9/11) of the intervention studies used survey instruments that elicited patient assessments of both physician and practice performance. These included:

General Practice Assessment Questionnaire (GPAQ) (9)

Consumer Assessments of Healthcare Providers and Systems (CAHPS) (8,11)

Chronically Ill Patients Evaluate General Practice (CEP) (36,37)

Visit rating questionnaire (VRQ) (38)

Physician Office visit survey (7)

The other intervention studies used bespoke patient satisfaction surveys (39,41).

Half (9/18) the studies examined the impact of written feedback at practice (i.e. organization) level (4,7–9,11,22,35,38,41). Two provided it at individual practitioner level only (36,40). Five (6,31,44–46) examined written feedback provided at both practice and individual practitioner level. The systematic review (34) included studies using a range of feedback methods and the method in which survey data was fed back was unreported in 2 studies (10,39). Ten of the 13 primary studies that reported details of how feedback was presented provided practice/practitioners’ feedback alongside comparative scores (7–9,22,35,36,38,40,42,45). Three provided teams or individuals with their own data without any comparisons (39,41,44).

The majority (10) of studies combined feedback with other interventions: quality improvement posters (38); quality improvement collaborative (8,11); physician reimbursement (7,22); improvement guide including determinants of patient satisfaction (36,40), quality improvement meetings (35,41,45) template actions plans and web-based local comparative patient experience data (9) and public reporting (46).

Primary outcomes fell into two main categories: (i) patient experience and (ii) staff reports of experience of receiving or using feedback. Patient experience scores were the primary outcome measured in 11 studies (7,8,11,34–40,46). Apart from Kibbe (41), who evaluated the impact of feedback by auditing an aspect of poor experience (continuity of care) and monitoring complaints rates, all other studies considered impact via staff reports and/or views and/or experiences of being provided with patient feedback (6,9,10,22,31,42,44–46).

Q1. Is the use of formal patient feedback associated with changes in future patients’ experiences in primary health care settings?

Regardless of instruments used, methods of feedback, associated interventions or context, we found that formal patient feedback to general practice teams/practitioners had either a negligible or very weak impact on future patient experience scores. Studies either reported no statistical improvements (35,36); very few, small, non-statistically significant changes in aspects of experience (8) or mixed picture of increases and decreases in scores within or across practices (7,34,39,40,48).

Q2. What appears to influence the use of formal patient feedback in primary health care teams?

Contrary to assumption 1 in our programme theory, we found significant professional concerns over the validity of patient feedback. Other factors that moderated the extent to which feedback stimulated practice change were the perceived purpose of feedback; the type of data collected and its presentation; the timeliness of feedback; the context in which staff work; and staff resistance to patient feedback.

Perceived purpose

Where teams sought patients’ evaluations themselves, perceived benefits were consistently reported by staff (10,39,41). Perceptions amongst those who received patient feedback gathered by others were more mixed (6,22,31,35,36,38,44). GPs reported being anxious that feedback data would be used to judge their quality of care rather than serve development purposes. English GPs perceived their national survey to be a political tool with questions biased to elicit negative evaluations (22).

Type of data and its presentation

Teams were more likely to attempt to change practice when survey findings: (i) were presented in accessible formats (9,11,37,44,45); (ii) included appropriate reference points (6); (iii) reported experience scores alongside importance ratings (8); (iv) were care process and practitioner specific (6–8,37,41,45); (v) were shared in ways that were acceptable to the individual GP (11,36,45); (vi) included patient comments (31,45); and (vii) highlighted issues already known to the practice (11,39,44). Even when all of these conditions were satisfied, however, GPs in one randomized controlled trial perceived significantly less need to alter their own practice after receipt of such feedback (37) and, in another study, a minority of GPs had poorer job satisfaction and more negative views of patient evaluations of care after receiving their feedback (45).

Validity of patient feedback

Some clinicians expressed concern about: the reliability and validity of some measures of patients’ experiences (6,22,31,45); lack of adjustment of scores for socio-demographic variables; ratings resistant to change (22,31,44); and conflation of patients’ views of one consultation with overall evaluations of doctors’ personalities or evaluations of the practice (22,31,42,44). Questions considered important by GPs were found to enhance perceived validity (11,44) as were surveys that facilitated ‘digging deeper’ into poorer ratings (7,11,41). Challenging of data validity was not reported when data was adjusted for confounding variables (11,36).

Timeliness

The effect of the time between patient feedback being sought and shared with practitioners is unclear. In the one study where it was considered, some teams complained of the delay between data collection and reporting, deeming monthly feedback that had been delayed as historic and unnecessary to address. Others never referred to their monthly data after receiving baseline data, yet their patient experience ratings increased, although not to a statistically significant degree (11).

Context

A lack of leadership commitment to quality improvement, absence of structures to support listening to feedback (11,42), feedback not being viewed as an important indicator of quality, lack of patient feedback within quality/leadership agendas (11,35,39,41,42,44) and lack of external or internal resources available to address patient concerns (22,42,44) were cited by health care staff as key barriers in their workplace contexts to making improvements in practice in response to patient feedback.

Staff resistance to patient feedback

Scepticism over the quality of patient feedback data was reported in six studies (6,22,31,35,37,44). GPs were often resistant to feedback, particularly to relational aspects of patient experience (11,22,31,37,45,46). Some GPs perceived tensions between providing patients with a good experience and providing good clinical care (31). They often dismissed feedback as inaccurate/incomplete representations of reality and challenged how feedback had been gathered, particularly if it demonstrated a need for improvement (11,22,31,44).

The congruence between patient feedback and medical staff’s opinions of service quality appears to influence responses to feedback. Positive feedback, and critical feedback that reflected practitioners’ views, were perceived as motivating by GPs (39,41,44) and galvanized change efforts (6,39,41,44). When feedback was critical of services and contrary to practitioners’ views, medical staff tended to question the data, methodology or small sample sizes (6,11,22,31,35).

Q3. What appears to influence the effectiveness of efforts (stimulated by patient feedback) aimed at improving future patients’ experiences in primary health care?

Many studies reported attempts to improve services in response to feedback received (7,9–11,35,37,39,44,46). Commencement of change appears to be influenced by fit between the proposed improvements and the strategic priorities of departments/organizations (39,41,44), few competing priorities and access to facilitation (11). Teams that had external facilitation support, or that had attempted modest, non-complex changes using tight management controls, appeared to be most likely to implement changes (6,11,46). Budgetary restrictions, lack of time and high workload requirements were also reported as barriers (6,9,11,42,44). The impact of financial incentives remains unclear as the one study that used them had equivocal results (7).

Discussion

Summary

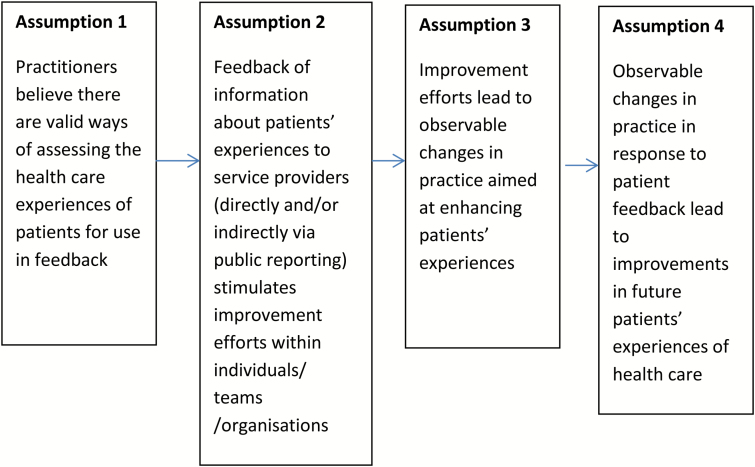

We found that policy assumptions about the transformative capacity of patient feedback (initial programme theory, Fig. 1) were not consistently empirically demonstrated in studies that examined its impact at general practice or practitioner level. Our realist synthesis shows that responses to and the impact of patient feedback at practice or practitioner level were moderated by a number of factors, and improvement efforts rarely resulted in improved patient experience survey scores. We refined our programme theory in light of our findings (Fig. 3) and produced a CMO configuration (Fig. 4) which details the contextual conditions (C) that appear to be necessary if patient feedback is to stimulate changes in practice (M) and if such changes are to result in improvements in patients’ experiences of care (O).

Figure 3.

Refined programme theory.

Figure 4.

Proposed CMO—how patient feedback leads to improvements in future patients’ experiences of care.

Critically, we found that although external facilitation or managerial support shows some promise in supporting teams to initiate improvement efforts (11), assumption 3 of our programme theory was not upheld, i.e. changes in future patients’ experience scores were not observed. We have therefore included an additional step to reflect the fact that staff often report efforts to improve patient experience following receipt of formal patient feedback, recognizing that this may not always result in changes in patients’ experiences of care. Some of this appears to be due to the lack of discriminatory ability of surveys. Equally, however, many contextual factors and internal staff beliefs about formal feedback tools and methods either promote or hinder engagement in improvement efforts.

Strengths and limitations

We focused only on published studies and did not consider the grey literature. The heterogeneity of studies only permitted a narrative synthesis of impact. We have not included studies of the impact of other types of patient feedback or engagement that are potentially better suited to supporting improvements in service delivery (47–49).

Prior to this study there has been no structured literature review of the impact of formal patient feedback in general practice at a practice or practitioner level. Our search strategy was very sensitive, and we maximized its comprehensiveness by filtering by hand rather than using electronic filters.

The use of realist review principles permitted this study to go beyond assessing impact to systematically examining the range of factors affecting the use and impact of practice and practitioner level patient experience data. It therefore provides a useful addition to what is known about how and why formal patient feedback impacts on the quality of GP care.

Comparison with existing literature

Hospital-based studies have reached similar conclusions to ours, indicating that significant attention needs to be paid to the quality and type of data if staff resistance to listening to patient feedback and using it to inform their work is to be overcome (50–52).

Implications for research and practice

Patient surveys may play a role in accountability, transparency and patient choice in health provision and perhaps a role in identifying areas in need of improvement. The feedback that can be obtained in patient experience or satisfaction surveys, even when combined with intense and focused improvement efforts, has not been found so far to result in significant improvements in future patients’ experiences at a practice or practitioner level. The literature is scant however, and we caution that absence of evidence of effectiveness is not the same as evidence of ineffectiveness.

Responsiveness of instruments to any actual change, face validity of questionnaires and socio-cultural inclusiveness of administration strategies need further investigation if professional scepticism of patient surveys is to be minimized (31). Professional scepticism could also be reduced if the focus of patient feedback programmes was formative and agreements on how data should be analysed, adjusted and shared were reached between regulators/survey providers and health care staff (52,53).

Inclusion of qualitative patient feedback that explains ratings, presented at the practitioner level, could also enhance the attention paid to it in practice. Change at the individual practitioner level, and in particular to the interpersonal aspects of practice, has been reported as difficult, so attention also needs to be paid to supporting staff to consider patients’ views of their service as valid and potentially different to their own, and to adjust their practice when this is indicated.

To our knowledge there have been no studies of the range of ways (beyond patient surveys) in which primary health care teams gain an understanding of their patients’ experiences and what they do in everyday practice to enhance such experiences. Until now, much of what is recommended is from reports by practitioners of what might/would be helpful or from what they have found useful/not useful in specific studies where feedback has been an intervention. Research focused on how practices gather, pay attention to, and respond to formal patient feedback would support the identification of ways in which primary health care teams can work to improve patients’ experiences of their service (54).

Declaration

Funding: Chief Scientist Office Doctoral Training Fellowship in Health Services and Health of the Public Research (Ref: DTF/08/07).

Ethical approval: none.

Conflict of interest: none.

References

- 1. Marshall MN, Romano PS, Davies HT. How do we maximize the impact of the public reporting of quality of care?Int J Qual Health Care 2004; 16 (suppl 1): i57–63. [DOI] [PubMed] [Google Scholar]

- 2. The Scottish Government. Implementing the quality strategy for NHSScotland in primary care. In: DoH (ed). Edinburgh, UK, 2010. http://www.gov.scot/Resource/Doc/321597/0103382.pdf (accessed on 11 July 2017). [Google Scholar]

- 3. Darzi A. High Quality Care for All: NHS Next Stage Review Final Report. London, UK: DoH, 2008. [Google Scholar]

- 4. NHS Scotland. Partnerships for Care: Scotland’s Health White Paper. 2003. http://www.gov.scot/Resource/Doc/47032/0013897.pdf (accessed on 23 June 2017). [Google Scholar]

- 5. The Scottish Government. Better Health, Better Care: Action Plan. 2007. http://1nj5ms2lli5hdggbe3mm7ms5.wpengine.netdna-cdn.com/files/2010/03/pnsscot.pdf (accessed on 11 July 2017). [Google Scholar]

- 6. Carter M, Greco M, Sweeney K et al. . Impact of systematic patient feedback on general practices, staff, patients and primary care trusts. Education for Primary Care 2004; 15; 30–8. [Google Scholar]

- 7. Carey RG. Improving patient satisfaction: a control chart case study. J Ambul Care Manage 2002; 25: 78–83. [DOI] [PubMed] [Google Scholar]

- 8. Davies E, Shaller D, Edgman-Levitan S et al. . Evaluating the use of a modified CAHPS survey to support improvements in patient-centred care: lessons from a quality improvement collaborative. Health Expect 2008; 11: 160–76. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Chanter C, Ashmore S, Mandair S. Improving the patient experience in general practice with the General Practice Assessment Questionnaire (GPAQ). Qual Prim Care 2005; 13: 225–32. [Google Scholar]

- 10. Hearnshaw H, Baker R, Cooper A et al. . The costs and benefits of asking patients for their opinions about general practice. Fam Pract 1996; 13: 52–8. [DOI] [PubMed] [Google Scholar]

- 11. Davies E, Cleary PD. Hearing the patient’s voice? Factors affecting the use of patient survey data in quality improvement. Qual Saf Health Care 2005; 14: 428–32. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12. The Scottish Executive. Patient Focus, public involvement. 2001. http://www.gov.scot/Resource/Doc/158744/0043087.pdf (accessed on 11 July 2017). [Google Scholar]

- 13. UK Government. Local Government and Public Involvement in Health Act. 200. 7. http://www.legislation.gov.uk/ukpga/2007/28/pdfs/ukpgaen_20070028_en.pdf (accessed on 11 July 2017). [Google Scholar]

- 14. The Scottish Government. The Patients’ Rights (Scotland) Act. 2011. http://www.legislation.gov.uk/asp/2011/5/contents (accessed on 11 July 2017). [Google Scholar]

- 15. Department of Health. The NHS Outcomes Framework 2013/14. London, UK: 2012. https://www.gov.uk/government/uploads/system/uploads/attachment_data/file/213055/121109-NHS-Outcomes-Framework-2013-14.pdf (accessed on 11 July 2017). [Google Scholar]

- 16. Allan J, Schattner P, Stocks N et al. . Does patient satisfaction of general practice change over a decade?BMC Fam Pract 2009; 10: 13. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17. Elliott MN, Kanouse DE, Burkhart Q et al. . Sexual minorities in England have poorer health and worse health care experiences: a national survey. J Gen Intern Med 2015; 30: 9–16. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18. Salisbury C, Wallace M, Montgomery AA. Patients’ experience and satisfaction in primary care: secondary analysis using multilevel modelling. BMJ 2010; 341: c5004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19. Lyratzopoulos G, Elliott M, Barbiere JM et al. . Understanding ethnic and other socio-demographic differences in patient experience of primary care: evidence from the English General Practice Patient Survey. BMJ Qual Saf 2012; 21: 21–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20. Burt J, Lloyd C, Campbell J et al. . Variations in GP-patient communication by ethnicity, age, and gender: evidence from a national primary care patient survey. Br J Gen Pract 2016; 66: e47–52. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21. Ahmed F, Burt J, Roland M. Measuring patient experience: concepts and methods. Patient 2014; 7: 235–41.

- 22. Asprey ACJ, Newbould J, Cohn S et al. Challenges to the credibility of patient feedback in primary healthcare settings: a qualitative study. Br J Gen Pract 2013; 63: 200–98.

- 23. Ahmed F, Abel GA, Lloyd CE et al. Does the availability of a South Asian language in practices improve reports of doctor-patient communication from South Asian patients? Cross sectional analysis of a national patient survey in English general practices. BMC Fam Pract 2015; 16: 55.

- 24. Campbell SM, Kontopantelis E, Reeves D et al. Changes in patient experiences of primary care during health service reforms in England between 2003 and 2007. Ann Fam Med 2010; 8: 499–506.

- 25. Richards N, Coulter A. Is the NHS becoming more patient centred? Trends from the national surveys of NHS patients in England 2001–2007. 2007. http://www.yearofcare.co.uk/sites/default/files/pdfs/99_Trends_2007_final[1].pdf (accessed on 11 July 2017).

- 26. Kontopantelis E, Roland M, Reeves D. Patient experience of access to primary care: identification of predictors in a national patient survey. BMC Fam Pract 2010; 11: 61.

- 27. Pawson R, Greenhalgh T, Harvey G et al. Realist review–a new method of systematic review designed for complex policy interventions. J Health Serv Res Policy 2005; 10 (suppl 1): 21–34.

- 28. Rycroft-Malone J, McCormack B, Hutchinson AM et al. Realist synthesis: illustrating the method for implementation research. Implement Sci 2012; 7: 33.

- 29. Wong G, Westhorp G, Pawson R et al. Realist Synthesis: RAMESES Training Materials, 2013. http://www.ramesesproject.org/media/Realist_reviews_training_materials.pdf (accessed on 19 November 2015).

- 30. Prior L, Hughes D, Peckham S. The discursive turn in policy analysis and the validation of policy stories. J Soc Policy 2012; 41: 271–89.

- 31. Edwards A, Elwyn G, Hood K et al. Judging the ‘weight of evidence’ in systematic reviews: introducing rigour into the qualitative overview stage by assessing Signal and Noise. J Eval Clin Pract 2000; 6: 177–84.

- 32. Centre for Reviews and Dissemination, University of York. Systematic reviews: CRD’s guidance for undertaking reviews in health care. University of York, 2009. http://www.york.ac.uk/inst/crd/pdf/Systematic_Reviews.pdf (accessed on 23 January 2015).

- 33. Pawson R, Tilley N. Realistic Evaluation. London, UK: Sage, 1997.

- 34. Reinders ME, Ryan BL, Blankenstein AH et al. The effect of patient feedback on physicians’ consultation skills: a systematic review. Acad Med 2011; 86: 1426–36.

- 35. Greco M, Carter M, Powell R et al. Does a patient survey make a difference? Educ Prim Care 2004; 15: 183–9.

- 36. Vingerhoets E, Wensing M, Grol R. Feedback of patients’ evaluations of general practice care: a randomised trial. Qual Health Care 2001; 10: 224–8.

- 37. Wensing M, Elwyn G. Improving the quality of health care: methods for incorporating patients’ views in health care... third of three articles. BMJ 2003; 326: 877–9.

- 38. Isenberg SF, Stewart MG. Utilizing patient satisfaction data to assess quality improvement in community-based medical practices. Am J Med Qual 1998; 13: 188–94.

- 39. Tam J. Linking quality improvement with patient satisfaction: a study of a health service centre. Marketing Intelligence & Planning 2007; 25: 732–45.

- 40. Kirschner K, Braspenning J, Maassen I et al. Improving access to primary care: the impact of a quality-improvement strategy. Qual Saf Health Care 2010; 19: 248–51.

- 41. Kibbe DC, Bentz E, McLaughlin CP. Continuous quality improvement for continuity of care. J Fam Pract 1993; 36: 304–8.

- 42. Geissler KH, Friedberg MW, SteelFisher GK et al. Motivators and barriers to using patient experience reports for performance improvement. Med Care Res Rev 2013; 70: 621–35.

- 43. Edwards A, Evans R, White P et al. Experiencing patient-experience surveys: a qualitative study of the accounts of GPs. Br J Gen Pract 2011; 61: e157–66.

- 44. Boiko O, Campbell JL, Elmore N et al. The role of patient experience surveys in quality assurance and improvement: a focus group study in English general practice. Health Expect 2015; 18: 1982–94.

- 45. Heje HN, Vedsted P, Olesen F. General practitioners’ experience and benefits from patient evaluations. BMC Fam Pract 2011; 12: 116.

- 46. Friedberg MW, SteelFisher GK, Karp M et al. Physician groups’ use of data from patient experience surveys. J Gen Intern Med 2011; 26: 498–504.

- 47. Zaslavsky AM, Zaborski LB, Cleary PD. Plan, geographical, and temporal variation of consumer assessments of ambulatory health care. Health Serv Res 2004; 39: 1467–85.

- 48. Bleich SN, Ozaltin E, Murray CK. How does satisfaction with the health-care system relate to patient experience? Bull World Health Organ 2009; 87: 271–8.

- 49. Gayet-Ageron A, Agoritsas T, Schiesari L et al. Barriers to participation in a patient satisfaction survey: who are we missing? PLoS One 2011; 6: e26852.

- 50. Tasa K, Baker GR, Murray M. Using patient feedback for quality improvement. Qual Manag Health Care 1996; 4: 55–67.

- 51. Niles N, Tarbox G, Schults W et al. Using qualitative and quantitative patient satisfaction data to improve the quality of cardiac care. Jt Comm J Qual Improv 1996; 22: 323–35.

- 52. Barr JK, Giannotti TE, Sofaer S et al. Using public reports of patient satisfaction for hospital quality improvement. Health Serv Res 2006; 41: 663–82.

- 53. Riiskjaer E, Ammentorp J, Nielsen JF et al. Patient surveys–a key to organizational change? Patient Educ Couns 2010; 78: 394–401.

- 54. Ivers N, Jamtvedt G, Flottorp S et al. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev 2012: CD000259.