With recent breakthroughs in artificial intelligence, computer-aided diagnosis (CAD) for colonoscopy is gaining increasing attention. CAD allows automated detection and classification (i.e., pathological prediction) of colorectal polyps during real-time endoscopy, potentially helping endoscopists to avoid missing and mischaracterizing polyps. Although the evidence has not caught up with technological progress, CAD has the potential to improve the quality of colonoscopy, with some CAD systems for polyp classification achieving diagnostic performance exceeding the threshold required for optical biopsy. The present article provides an overview of this topic from the perspective of endoscopists, with a particular focus on evidence, limitations, and clinical applications.

Introduction

Several studies have shown that colonoscopy is associated with a reduction in colorectal cancer mortality. This benefit is based on the detection and resection of any neoplastic polyps; however, polyps can be missed during screening colonoscopy and endoscopists may not be able to differentiate between neoplastic and non-neoplastic polyps. Polyp miss rates as high as 20 % have been reported for high-definition colonoscopy 1, while a large prospective trial of optical biopsy of small colon polyps using narrow-band imaging (NBI) showed that the accuracy of physicians was only 80 % in diagnosing detected polyps as adenomas, even after a physician training program 2.

To overcome these limitations, computer-aided diagnosis (CAD) is attracting more attention because it may help endoscopists to avoid missing and mischaracterizing polyps. CAD for colonoscopy is generally designed to extract various features from a colonoscopic image/movie and output the predicted polyp location or pathology based on machine learning. The term “machine learning” refers to a fundamental function of artificial intelligence, whereby a computer can be trained to learn (in this case, recognize or characterize polyps) through repetition and experience (exposure to a large number of annotated polyp images). Ideally, the output of CAD is expressed in real time on the monitor, immediately assisting the endoscopist’s decision-making.

What can we expect of CAD in clinical practice?

CAD for colonoscopy is expected to improve the adenoma/polyp detection rate and the accuracy of optical biopsy during colonoscopy. Furthermore, successful implementation of CAD into screening colonoscopy may help to minimize variations in the adenoma detection rate (ADR) among endoscopists or centers. CAD could also play a role in training both novice and more experienced endoscopists who need to improve their ADR.

Overview of the technology

Automated detection of colorectal polyps

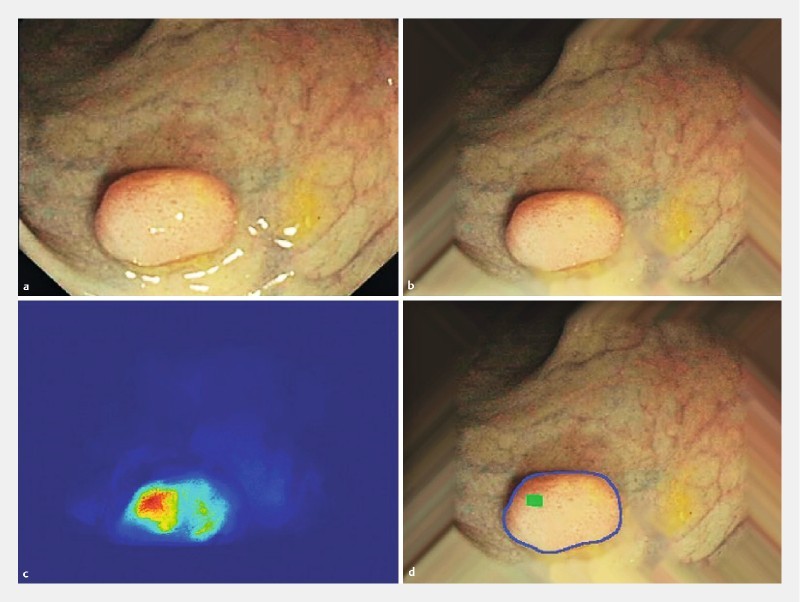

Automated detection is designed to alert endoscopists using a marker or sound when artificial intelligence technology suspects the presence of a polyp on the screen during colonoscopy (Fig. 1). Automated polyp detection is receiving attention because improved detection of adenomatous polyps contributes to lower rates of interval cancers, with a 1 % increase in the ADR reportedly being associated with a 3 % decrease in the risk of cancer 4.
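To make the reported ADR relationship concrete, the following minimal sketch converts an ADR improvement into a relative cancer risk. It assumes, purely for illustration, that the 3 % decrease per percentage point compounds multiplicatively; the function name and this compounding assumption are ours and are not taken from the cited study.

```python
def relative_cancer_risk(adr_increase_points: float,
                         decrease_per_point: float = 0.03) -> float:
    """Relative cancer risk after raising the ADR by some percentage points.

    Assumption (illustrative only): each 1-point ADR increase multiplies
    the risk by (1 - decrease_per_point).
    """
    if adr_increase_points < 0:
        raise ValueError("adr_increase_points must be non-negative")
    return (1.0 - decrease_per_point) ** adr_increase_points


for points in (1, 5, 10):
    risk = relative_cancer_risk(points)
    print(f"ADR +{points} points: relative risk {risk:.3f}")
```

Under this assumption, a 10-point ADR gain corresponds to roughly a quarter lower risk, which illustrates why even modest improvements in detection are considered clinically meaningful.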

Fig. 1. Automated polyp detection 3. The energy map based on valleys of image intensity correctly detected the location of a polyp.

An ideal automated polyp detection system requires not only high sensitivity for detecting polyps but also a fast processing time for image analysis and on-screen labeling, so that the endoscopist is alerted in real time regarding the presence of a polyp. The first CAD model was reported by Karkanis et al. in 2003 5. Although this model provided a sensitivity of 90 % in the detection of adenomatous polyps, analysis was based on static images; therefore, the technology was impractical for real-time analysis of a video stream. A number of subsequent studies focused on improving both the accuracy and speed of CAD systems. Tajbakhsh et al. 6 reported an 88 % sensitivity for polyp detection and, more importantly, demonstrated video image analysis with a latency of only 0.3 seconds. This achievement is notable in that nearly real-time automated detection with acceptable sensitivity was realized. However, the authors evaluated only 10 unique polyps in recorded video segments that were analyzed retrospectively, so this relatively high diagnostic sensitivity cannot necessarily be generalized to clinical practice.
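The practical meaning of a given latency can be illustrated by expressing it in video frames. The sketch below assumes a 30 frames-per-second video stream and treats a lag of at most one frame as "real time"; both values are illustrative assumptions and are not taken from the cited studies.

```python
def frames_elapsed(latency_s: float, fps: float = 30.0) -> int:
    """Approximate number of video frames shown while one frame is analyzed."""
    return round(latency_s * fps)


def is_real_time(latency_s: float, fps: float = 30.0,
                 max_lag_frames: int = 1) -> bool:
    """True if the analysis lag stays within the assumed frame budget."""
    return frames_elapsed(latency_s, fps) <= max_lag_frames


lag = frames_elapsed(0.3)  # the 0.3-second latency mentioned above
print(f"0.3 s latency at 30 fps is about {lag} frames of lag")
```

Under these assumptions, a 0.3-second latency corresponds to a lag of about nine frames, which is consistent with describing such a system as nearly, rather than fully, real time.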

Dramatic advances in this research field are now expected owing to the emergence of deep learning, which will be reviewed below.

Automated classification of colorectal polyps

Automated classification is designed to output the predicted pathology (e.g., neoplastic or non-neoplastic) of detected polyps, helping endoscopists to make an appropriate optical diagnosis and permitting selective resection of neoplastic polyps. Avoiding unnecessary polypectomy of hyperplastic polyps could represent an important cost-saving strategy (saving an estimated 33 million dollars yearly in the United States) 7.

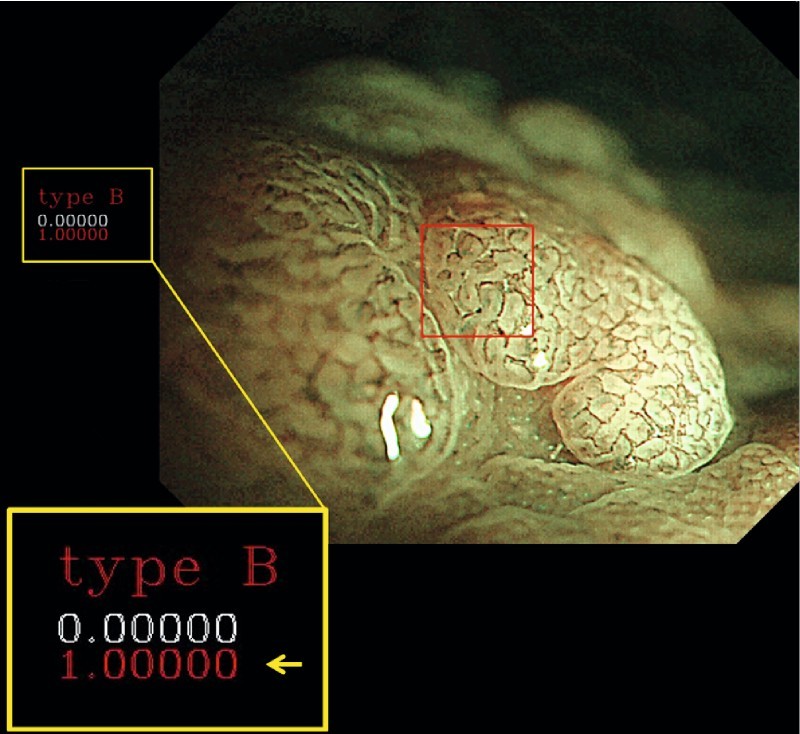

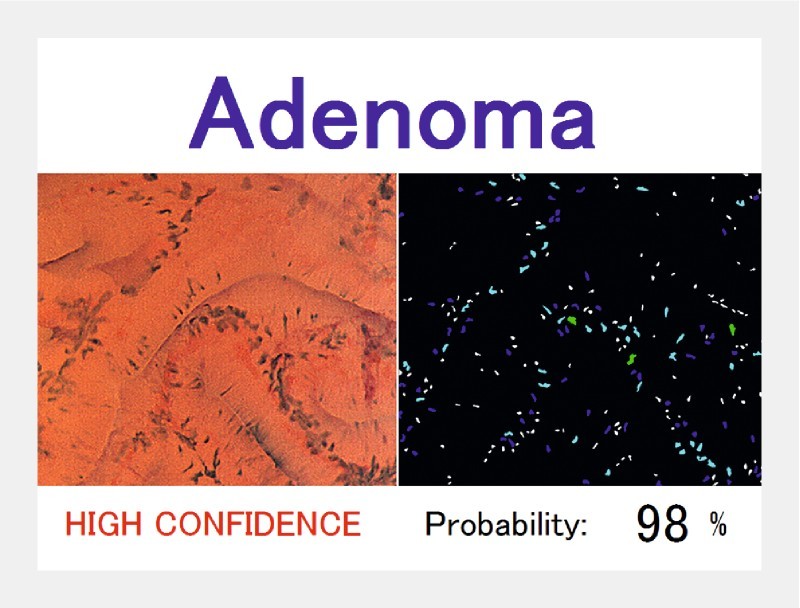

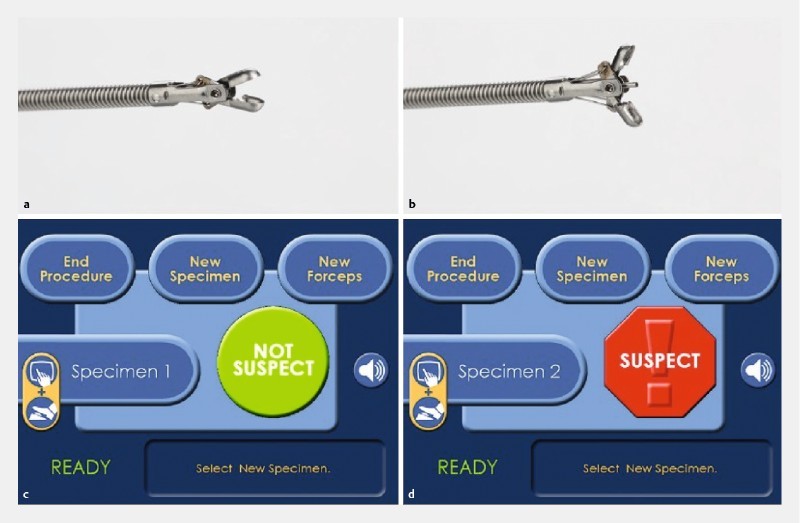

In contrast to automated detection, the principal targets of automated classification are currently advanced imaging modalities such as magnifying NBI (Fig. 2), endocytoscopy (CF-Y-0058; prototype from Olympus Co.) (Fig. 3), and laser-induced fluorescence spectroscopy (WavSTAT4; Pentax Corp., Tokyo, Japan) (Fig. 4). When using these modalities, endoscopists are required to indicate the region of interest by either centering the polyp 8 or placing the polyp in contact with the endoscope 9 10 to obtain the CAD outputs.

Fig. 2. Automated classification of a polyp using magnifying narrow-band imaging 8. The polyp classification, along with the probability, is generated in real time. In this image, the predicted classification is type B, which is representative of an adenoma.

Fig. 3. Automated classification of a polyp using endocytoscopy 9. The image features are acquired from nuclear morphologies, and the pathology of the polyp is predicted in real time with its probability. When the probability is > 90 %, the mark “HIGH CONFIDENCE” is shown.

Fig. 4. Automated characterization using laser-induced fluorescence spectroscopy, namely the WavSTAT4 system 10. The resulting autofluorescence signal from the polyp is analyzed in real time using a proprietary software algorithm, and within 1 second is classified as “Not suspect (of adenoma)” or “Suspect (of adenoma).”

Combinations of these advanced imaging modalities and CAD systems have led to the development of real-time artificial intelligence-assisted optical biopsy. In fact, some of these CAD systems 8 9 10 provided > 90 % negative predictive values for the histologic findings of adenomatous polyps in the rectosigmoid colon when diagnosed with “high confidence,” which is the threshold established by the Preservation and Incorporation of Valuable endoscopic Innovations (PIVI) for a “leave and not resect” strategy 11 .
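The way a high-confidence negative predictive value (NPV) is checked against the 90 % threshold can be sketched as follows. The `Prediction` structure, the 0.90 confidence cut-off, and the sample data are hypothetical and serve only to illustrate the calculation; whether the threshold boundary is inclusive is also an assumption here.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Prediction:
    predicted_neoplastic: bool  # CAD output
    probability: float          # CAD confidence in its own output (0 to 1)
    truly_neoplastic: bool      # histology, used as the gold standard


def high_confidence_npv(predictions: List[Prediction],
                        confidence_cutoff: float = 0.90) -> Optional[float]:
    """NPV among high-confidence 'non-neoplastic' calls (None if there are none)."""
    negatives = [p for p in predictions
                 if not p.predicted_neoplastic
                 and p.probability > confidence_cutoff]
    if not negatives:
        return None
    true_negatives = sum(1 for p in negatives if not p.truly_neoplastic)
    return true_negatives / len(negatives)


def meets_npv_threshold(npv: Optional[float], threshold: float = 0.90) -> bool:
    """Compare the NPV with the assumed 90 % threshold (inclusive)."""
    return npv is not None and npv >= threshold


# Hypothetical data: 10 high-confidence negative calls, 9 of them correct,
# plus one low-confidence negative call that is excluded from the NPV.
sample = (
    [Prediction(False, 0.95, False)] * 9
    + [Prediction(False, 0.93, True)]
    + [Prediction(False, 0.60, True)]
)
npv = high_confidence_npv(sample)
print(f"High-confidence NPV: {npv:.2f}, meets threshold: {meets_npv_threshold(npv)}")
```

Restricting the calculation to high-confidence calls mirrors the way the threshold is described above; low-confidence predictions would instead be referred for conventional histology.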

The application of CAD to magnifying NBI was initially reported by Tischendorf et al. 12 and Gross et al. 13 in 2010 and 2011, respectively. In their model, nine vessel features (length, brightness, perimeter, and others) were extracted from NBI images for machine learning, providing an accuracy of 85.3 % in differentiation between neoplastic and non-neoplastic polyps. However, these studies were based on off-site assessment of still images. Following these studies, Takemura et al. 14 and Kominami et al. 8 reported a more robust algorithm suitable for real-time clinical use, offering in vivo classification of polyps during endoscopy ( Fig.2 ).
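The general idea of feature-based machine learning described above can be illustrated with a deliberately simple classifier. The sketch below uses a nearest-centroid rule on three hypothetical, normalized features; it is not the algorithm used in the cited studies, and all feature values are invented for illustration.

```python
import math
from statistics import mean


def fit_centroids(samples):
    """Average the feature vectors of each class (the 'training' step)."""
    grouped = {}
    for features, label in samples:
        grouped.setdefault(label, []).append(features)
    return {
        label: [mean(column) for column in zip(*vectors)]
        for label, vectors in grouped.items()
    }


def classify(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids,
               key=lambda label: math.dist(centroids[label], features))


# Hypothetical, normalized (vessel length, brightness, perimeter) triples.
training = [
    ((0.80, 0.30, 0.75), "neoplastic"),
    ((0.70, 0.35, 0.85), "neoplastic"),
    ((0.20, 0.70, 0.25), "non-neoplastic"),
    ((0.30, 0.65, 0.15), "non-neoplastic"),
]
centroids = fit_centroids(training)
prediction = classify(centroids, (0.75, 0.30, 0.70))
print(prediction)
```

In this kind of approach the features are chosen by the developers in advance, which is the main point of contrast with deep learning methods that learn features directly from raw images.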

Endocytoscopy, which enables contact microscopy ( × 500) for in vivo cellular imaging, has also been intensively investigated for automated polyp classification. Endocytoscopy is considered particularly suitable for application with CAD systems because it provides focused, consistent images of fixed size that enable easier and more robust image analysis by CAD. The CAD models reported by Mori et al. 9 and Misawa et al. 15 were based on automated extraction of ultra-magnified nuclear/vessel features followed by machine-learning analysis, which resulted in approximately 90 % accuracy for identification of adenomas with only a 0.2-second latency after capturing an image. However, endocytoscopes are not available worldwide because they are prototypes.

Another promising modality for automated classification is laser-induced autofluorescence spectroscopy 10 . This CAD system is incorporated into a standard biopsy forceps; therefore, endoscopists can resect diminutive polyps immediately after obtaining the pathological prediction by CAD ( Fig. 4 ).

Emergence of deep learning

With traditional artificial intelligence technologies, achieving > 90 % sensitivity in polyp detection is challenging under real-life endoscopic conditions, and accurate polyp classification has not been demonstrated without advanced endoscopic imaging modalities. In this regard, newer “deep learning” approaches in artificial intelligence may offer important improvements over earlier generations of machine-learning CAD systems.

Deep learning is a new and more sophisticated machine-learning method that, for computer vision, has the advantage of being able to “learn” from large datasets of raw images without needing “instruction” with regard to which specific image features to look for. Deep learning approaches to image recognition have led to higher accuracy and faster processing times. Although the clinical application of deep learning is still very limited, automated detection of lung cancer using deep learning for computed tomography (CT) images may soon be commercially available (Enlitic Inc., San Francisco, California, USA).

With respect to CAD for colonoscopy, the potential of deep learning has recently been explored in several ex vivo studies 16 17 18 . Byrne et al. 18 applied deep learning to real-time recognition of neoplastic polyps, resulting in 83 % accuracy using colonoscopy videos. This achievement is notable for two reasons. First, deep learning enabled the simultaneous detection and classification of polyps. Second, the researchers succeeded in automated polyp classification using a standard colonoscope, not a magnifying endoscope or endocytoscope. Real-time detection and classification using standard endoscopic equipment is clearly the approach that would have the largest impact on endoscopic practice. Ongoing research in this area is crucially important to refine this technology and strengthen the clinical evidence base.

Clinical studies

Beyond refining the technical aspects of polyp detection and classification, the effectiveness and value of CAD systems will require careful evaluation in clinical trials before routine clinical application can be recommended. Several recent and important clinical studies have laid the groundwork for future clinical research in this field.

Four prospective studies and nine retrospective studies have been published on automated polyp classification (Table 1). A prospective trial conducted by Kominami et al. 8 is a well-designed study that focused on in vivo automated classification using magnifying NBI (Fig. 2). A total of 41 patients with 118 colorectal lesions underwent assessment by one endoscopist with the real-time use of CAD; the endoscopist was not blinded to the endoscopic images. The diagnostic accuracy of CAD for differentiation of adenomas was 93.2 %, using the pathological findings of the resected specimen as the gold standard. This study was notable because the recommendation for follow-up colonoscopy based on pathology and the real-time CAD prediction were identical in 92.7 % of cases, meeting the PIVI threshold for the “resect and discard” strategy 8. However, the numbers of involved lesions and endoscopists were too small to generalize the results to clinical practice.
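The agreement between CAD-based and pathology-based surveillance recommendations can be computed as a simple proportion. In the sketch below, the per-patient intervals are hypothetical and were chosen only so that 38 of 41 recommendations agree, which reproduces the 92.7 % figure arithmetically; the 90 % agreement threshold is our reading of the “resect and discard” criterion and should be checked against the PIVI document 11.

```python
def agreement_rate(cad_intervals, pathology_intervals):
    """Fraction of patients with identical surveillance recommendations."""
    if not cad_intervals or len(cad_intervals) != len(pathology_intervals):
        raise ValueError("need two non-empty lists of equal length")
    matches = sum(c == p for c, p in zip(cad_intervals, pathology_intervals))
    return matches / len(cad_intervals)


# Hypothetical surveillance intervals (years) for 41 patients.
pathology = [3] * 15 + [5] * 26
cad = [3] * 15 + [5] * 23 + [3] * 3  # the last three recommendations differ
rate = agreement_rate(cad, pathology)
print(f"Agreement: {rate:.1%}, meets assumed 90 % threshold: {rate >= 0.90}")
```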

Table 1. Summary of preclinical and clinical studies involving computer-aided diagnosis (CAD) for colonoscopy (experimental studies excluded).

| Reference | Year | Type of CAD | Endoscopic modality | Study design | Subjects | Accuracy¹ |
| --- | --- | --- | --- | --- | --- | --- |
| Fernandez-Esparrach 3 | 2016 | Automated detection | White-light endoscopy | Retrospective study | 31 lesions | –² |
| Takemura 19 | 2010 | Automated classification | Magnifying chromoendoscopy | Retrospective study | 134 images | 99 % |
| Tischendorf 12 | 2010 | Automated classification | Magnifying NBI | Post hoc analysis of prospectively acquired data | 209 lesions | 85 % |
| Gross 13 | 2011 | Automated classification | Magnifying NBI | Post hoc analysis of prospectively acquired data | 434 lesions | 93 % |
| Takemura 14 | 2012 | Automated classification | Magnifying NBI | Retrospective study | 371 lesions | 97 % |
| Kominami 8 | 2016 | Automated classification | Magnifying NBI | Prospective study | 118 lesions | 93 % |
| Mori 20 | 2015 | Automated classification | Endocytoscopy | Retrospective study | 176 lesions | 89 % |
| Mori 9 | 2016 | Automated classification | Endocytoscopy | Retrospective study | 205 lesions | 89 % |
| Misawa 15 | 2016 | Automated classification | Endocytoscopy combined with NBI | Retrospective study | 100 images | 90 % |
| Andre 21 | 2012 | Automated classification | Confocal laser endomicroscopy | Retrospective study | 135 lesions | 90 % |
| Kuiper 22 | 2015 | Automated classification | Laser-induced autofluorescence spectroscopy | Prospective study | 207 lesions | 74 % |
| Rath 10 | 2015 | Automated classification | Laser-induced autofluorescence spectroscopy | Prospective study | 137 lesions | 85 % |
| Aihara 23 | 2013 | Automated classification | Autofluorescence imaging | Prospective study | 102 lesions | –³ |
| Inomata 24 | 2013 | Automated classification | Autofluorescence imaging | Post hoc analysis of prospectively acquired data | 163 lesions | 83 % |

NBI, narrow-band imaging.

¹ Accuracy is expressed in terms of the differentiation of adenomas from non-neoplastic lesions.

² The sensitivity and specificity were 70.4 % and 72.4 %, respectively. No description regarding accuracy was found in this study.

³ The sensitivity and specificity were 94.2 % and 88.9 %, respectively. No description regarding accuracy was found in this study.
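For the studies that report only sensitivity and specificity, overall accuracy cannot be recovered without knowing the proportion of positive cases in the test set. The sketch below shows the standard relationship; the prevalence values are hypothetical and are not taken from the cited studies.

```python
def accuracy_from_rates(sensitivity: float, specificity: float,
                        prevalence: float) -> float:
    """Overall accuracy as a prevalence-weighted mean of sensitivity and specificity."""
    if not 0.0 <= prevalence <= 1.0:
        raise ValueError("prevalence must be between 0 and 1")
    return sensitivity * prevalence + specificity * (1.0 - prevalence)


# Sensitivity and specificity from the table footnotes; prevalence is assumed.
for sens, spec in ((0.704, 0.724), (0.942, 0.889)):
    for prevalence in (0.3, 0.5, 0.7):
        acc = accuracy_from_rates(sens, spec, prevalence)
        print(f"sens={sens}, spec={spec}, prevalence={prevalence}: accuracy={acc:.3f}")
```

Whatever the prevalence, the accuracy must lie between the specificity and the sensitivity, so the missing values in the table can at least be bounded.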

While computer vision and engineering groups have published most of the early work on automated polyp detection, a recent study conducted by Fernandez-Esparrach et al. 3 is now the first and only physician-initiated clinical study in this field. In this ex vivo study, the authors assessed their CAD model using 24 colonoscopy videos containing 31 polyps and obtained a sensitivity and specificity of > 70 % for polyp detection ( Fig.1 ). The polyp location was marked by expert endoscopists as a gold standard.

Their proposed model was notable in two respects. First, their model was useful for the detection of small flat lesions, which are the most difficult to detect endoscopically. Their model was most effective for polyps observed zenithally (i.e., from above), when the complete polyp boundary could be viewed (vs. tangentially or horizontally, when only the edge of the polyp may be visible). Second, the performance of automated polyp detection was not negatively affected by poor bowel preparation because most types of fecal content did not fit the appearance model of polyps. However, their study had several limitations in its design: it was a retrospective analysis, involved off-site evaluation of the CAD system, and included a small sample size of videos.

What type of evidence do we need?

As a first step, prospective clinical trials based on real-time assessment are required. Real-time automated detection/classification is being realized with improvements in computer power and deep learning applications, which can enable in vivo (not off-site) prospective trials in this field. To accelerate clinical implementation, researchers should subsequently proceed to a randomized controlled trial evaluating the adenoma/polyp detection rate, withdrawal time, and other clinical parameters with and without CAD. The potential detrimental effects of CAD must also be assessed, including the potential for a more prolonged procedure time and the impact of false positives (i.e., identification of non-polyps). In automated polyp classification, outcome measures should also focus on the threshold proposed by the PIVI criteria 11 because this type of technology is designed to help in vivo optical biopsy. The broader goal of research on CAD for colonoscopy is to determine whether these technologies can lead to detectable improvements in important endpoints such as the occurrence of interval cancers or cancer-related mortality.
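A comparison of ADR with and without CAD in a randomized trial could, in its simplest form, be analyzed as a difference between two proportions. The sketch below uses a pooled two-proportion z-test; the patient counts are hypothetical, and a real trial would follow a prespecified statistical analysis plan.

```python
import math


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int):
    """Return (difference, z statistic, two-sided p-value) for two proportions."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1.0 - pooled) * (1.0 / n1 + 1.0 / n2))
    if se == 0.0:
        raise ValueError("standard error is zero; the test is undefined")
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal tail
    return p1 - p2, z, p_value


# Hypothetical trial: adenomas found in 150/500 patients with CAD
# versus 120/500 patients without CAD.
diff, z, p_value = two_proportion_z_test(150, 500, 120, 500)
print(f"ADR difference: {diff:.1%}, z = {z:.2f}, p = {p_value:.3f}")
```

The same structure applies to secondary outcomes expressed as proportions, whereas continuous outcomes such as withdrawal time would need a different test.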

What problems are to be solved?

Technological problems

The most important technological issue is the limited data for machine learning. Generally, CAD requires “big data” to obtain excellent performance; for example, deep learning required 10 million training images to recognize various images of a cat with high reliability 25. However, the currently investigated CAD systems for colonoscopy have used at most 6000 images for machine learning 9. A “big data” approach that employs deep learning to improve CAD in colonoscopy will likely require multicenter collaborations to collect sufficient numbers of images and videos.

Legal concerns

CAD is already being used in routine mammography for identification of suspicious lesions, and concerns have developed over whether CAD can be used retrospectively to determine whether a cancer should have been detected on an image 26. Additionally, researchers have discussed whether all CAD-marked images, whether true-negative, false-negative, true-positive, or false-positive, should be stored to record evidence of the clinical decisions made by the physician. Taking these concerns into consideration, appropriate legal support is needed to accelerate the implementation of CAD into clinical endoscopy practice. Public guidance for the development of CAD devices is now available from the Food and Drug Administration in the United States and the Ministry of Economy, Trade and Industry of Japan; however, these documents do not establish legally enforceable responsibilities.

Roadmap to implement CAD into clinical practice

The following five stages should be cleared to implement CAD into the clinical practice of colonoscopy: (i) product development, (ii) feasibility studies, (iii) clinical trials, (iv) regulatory approval, and (v) insurance reimbursement.

The most important factor for “(i) product development” and “(ii) feasibility studies” is the continued growth of medical-engineering collaborations in this field. In addition, learning from CAD-related progress in other domains, ranging from CT colonography and capsule endoscopy to mammography, will be beneficial because the diagnostic algorithms and technological barriers have many aspects in common 27. Research on automated polyp detection currently remains focused on the early product development and feasibility stages.

The essential factor for “(iii) clinical trials” and “(iv) regulatory approval” is close coordination between academic research teams and industry. This collaboration is mandatory because industry expertise and resources are required for late-stage product development and regulatory approval. Research on automated polyp classification has already entered these later stages; one CAD system using laser-induced autofluorescence spectroscopy has already obtained regulatory approval in both the USA and the European Union and is now commercially available (WavSTAT4; Pentax Corp., Tokyo, Japan) 10.

Finally, “(v) insurance reimbursement” is a critical step to ensure the widespread use of artificial intelligence-assisted medicine. To the best of our knowledge, no CAD system is yet reimbursed by national or private insurance, although acquisition of insurance reimbursement clearly contributes to widening the market for new products. Notably, the Japanese government officially announced that it is considering adding some incentives for the use of artificial intelligence-assisted medicine to the national insurance system by 2020.

Conclusion

With recent breakthroughs in artificial intelligence, CAD is gaining traction as a novel approach to improve the quality of colonoscopy. However, technological, medical, and practical barriers lie ahead before widespread clinical use will be a consideration. In order to overcome these barriers, the following measures are necessary: (i) continued productive collaboration between the medical field and experts in engineering and computer vision research, (ii) clinical initiatives to acquire “big data” for machine learning in colonoscopy, (iii) evaluation of the evidence for colonoscopic CAD through rigorous clinical trials, and (iv) addressing legal and insurance reimbursement issues. We anticipate that each of these hurdles will be overcome during the next several years, which will open the door to the clinical application of CAD in colonoscopy.

Footnotes

Competing interests: None

References

- 1. Kumar S, Thosani N, Ladabaum U et al. Adenoma miss rates associated with a 3-minute versus 6-minute colonoscopy withdrawal time: a prospective, randomized trial. Gastrointest Endosc. 2016 doi: 10.1016/j.gie.2016.11.030.

- 2. Rees C J, Rajasekhar P T, Wilson A et al. Narrow band imaging optical diagnosis of small colorectal polyps in routine clinical practice: the Detect Inspect Characterise Resect and Discard 2 (DISCARD 2) study. Gut. 2017;66:887–895. doi: 10.1136/gutjnl-2015-310584.

- 3. Fernandez-Esparrach G, Bernal J, Lopez-Ceron M et al. Exploring the clinical potential of an automatic colonic polyp detection method based on the creation of energy maps. Endoscopy. 2016;48:837–842. doi: 10.1055/s-0042-108434.

- 4. Corley D A, Jensen C D, Marks A R et al. Adenoma detection rate and risk of colorectal cancer and death. NEJM. 2014;370:1298–1306. doi: 10.1056/NEJMoa1309086.

- 5. Karkanis S A, Iakovidis D K, Maroulis D E et al. Computer-aided tumor detection in endoscopic video using color wavelet features. IEEE Trans Inf Technol Biomed. 2003;7:141–152. doi: 10.1109/titb.2003.813794.

- 6. Tajbakhsh N, Gurudu S R, Liang J. Automated polyp detection in colonoscopy videos using shape and context information. IEEE Trans Med Imaging. 2016;35:630–644. doi: 10.1109/TMI.2015.2487997.

- 7. Hassan C, Pickhardt P J, Rex D K. A resect and discard strategy would improve cost-effectiveness of colorectal cancer screening. Clin Gastroenterol Hepatol. 2010;8:865–869 e861–e863. doi: 10.1016/j.cgh.2010.05.018.

- 8. Kominami Y, Yoshida S, Tanaka S et al. Computer-aided diagnosis of colorectal polyp histology by using a real-time image recognition system and narrow-band imaging magnifying colonoscopy. Gastrointest Endosc. 2016;83:643–649. doi: 10.1016/j.gie.2015.08.004.

- 9. Mori Y, Kudo S E, Chiu P W et al. Impact of an automated system for endocytoscopic diagnosis of small colorectal lesions: an international web-based study. Endoscopy. 2016;48:1110–1118. doi: 10.1055/s-0042-113609.

- 10. Rath T, Tontini G E, Vieth M et al. In vivo real-time assessment of colorectal polyp histology using an optical biopsy forceps system based on laser-induced fluorescence spectroscopy. Endoscopy. 2016;48:557–562. doi: 10.1055/s-0042-102251.

- 11. Rex D K, Kahi C, O'Brien M et al. The American Society for Gastrointestinal Endoscopy PIVI (Preservation and Incorporation of Valuable Endoscopic Innovations) on real-time endoscopic assessment of the histology of diminutive colorectal polyps. Gastrointest Endosc. 2011;73:419–422. doi: 10.1016/j.gie.2011.01.023.

- 12. Tischendorf J J, Gross S, Winograd R et al. Computer-aided classification of colorectal polyps based on vascular patterns: a pilot study. Endoscopy. 2010;42:203–207. doi: 10.1055/s-0029-1243861.

- 13. Gross S, Trautwein C, Behrens A et al. Computer-based classification of small colorectal polyps by using narrow-band imaging with optical magnification. Gastrointest Endosc. 2011;74:1354–1359. doi: 10.1016/j.gie.2011.08.001.

- 14. Takemura Y, Yoshida S, Tanaka S et al. Computer-aided system for predicting the histology of colorectal tumors by using narrow-band imaging magnifying colonoscopy (with video). Gastrointest Endosc. 2012;75:179–185. doi: 10.1016/j.gie.2011.08.051.

- 15. Misawa M, Kudo S E, Mori Y et al. Characterization of colorectal lesions using a computer-aided diagnostic system for narrow-band imaging endocytoscopy. Gastroenterology. 2016;150:1531–1532. doi: 10.1053/j.gastro.2016.04.004.

- 16. Li T, Cohen J, Craig M et al. A novel computer vision program accurately identifies colonoscopic colorectal adenomas. Gastrointest Endosc. 2016;83:AB482.

- 17. Zhang R, Zheng Y, Mak T W et al. Automatic detection and classification of colorectal polyps by transferring low-level CNN features from nonmedical domain. IEEE J Biomed Health Inform. 2017;21:41–47. doi: 10.1109/JBHI.2016.2635662.

- 18. Byrne M F, Rex D K, Chapados N et al. Artificial intelligence (AI) in endoscopy-deep learning for optical biopsy of colorectal polyps in real-time on unaltered endoscopic videos. United European Gastroenterol J. 2016;4:A155.

- 19. Takemura Y, Yoshida S, Tanaka S et al. Quantitative analysis and development of a computer-aided system for identification of regular pit patterns of colorectal lesions. Gastrointest Endosc. 2010;72:1047–1051. doi: 10.1016/j.gie.2010.07.037.

- 20. Mori Y, Kudo S, Wakamura K et al. Novel computer-aided diagnostic system for colorectal lesions by using endocytoscopy (with videos). Gastrointest Endosc. 2015;81:621–629. doi: 10.1016/j.gie.2014.09.008.

- 21. Andre B, Vercauteren T, Buchner A M et al. Software for automated classification of probe-based confocal laser endomicroscopy videos of colorectal polyps. World J Gastroenterol. 2012;18:5560–5569. doi: 10.3748/wjg.v18.i39.5560.

- 22. Kuiper T, Alderlieste Y A, Tytgat K M et al. Automatic optical diagnosis of small colorectal lesions by laser-induced autofluorescence. Endoscopy. 2015;47:56–62. doi: 10.1055/s-0034-1378112.

- 23. Aihara H, Saito S, Inomata H et al. Computer-aided diagnosis of neoplastic colorectal lesions using 'real-time' numerical color analysis during autofluorescence endoscopy. Eur J Gastroenterol Hepatol. 2013;25:488–494. doi: 10.1097/MEG.0b013e32835c6d9a.

- 24. Inomata H, Tamai N, Aihara H et al. Efficacy of a novel auto-fluorescence imaging system with computer-assisted color analysis for assessment of colorectal lesions. World J Gastroenterol. 2013;19:7146–7153. doi: 10.3748/wjg.v19.i41.7146.

- 25. Le Q V, Ranzato M A, Matthieu D et al. Building high-level features using large scale unsupervised learning. In: Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP); 2013 May 26–31; Vancouver, Canada: IEEE; 2013: 8595–8598.

- 26. Philpotts L E. Can computer-aided detection be detrimental to mammographic interpretation? Radiology. 2009;253:17–22. doi: 10.1148/radiol.2531090689.

- 27. Yuan Y, Meng M Q. Deep learning for polyp recognition in wireless capsule endoscopy images. Med Phys. 2017;44:1379–1389. doi: 10.1002/mp.12147.