Abstract

Children with autism spectrum disorders (ASD) often exhibit impairments in communication and social interaction, and thus face various social challenges in collaborative activities. Given the cost of ASD intervention and the lack of access to trained clinicians, technology-assisted ASD intervention has gained momentum in recent years. In this paper, we present a novel social interaction platform for ASD intervention based on a collaborative virtual environment (CVE). CVE technology for ASD intervention may enable a novel low-cost intervention environment that fosters collaboration with peers and provides flexibility in communication. The presented Communication-Enhancement CVE system, Hand-in-Hand, allows two children to play a series of interactive games in a virtual reality environment, collaboratively moving virtual objects with simple hand gestures that are tracked in real time by cameras. Further, these games are designed to promote natural communication and cooperation between the users via a Communication-Enhancement mode that allows users to share information and discuss game strategies using gaze- and voice-based communication. The results of a feasibility study with 12 children with ASD and 12 typically developing peers show that the system was well accepted by children both with and without ASD, improved their cooperation in game play, and demonstrated potential for fostering their communication and collaboration skills.

Index Terms: Autism intervention, collaborative virtual reality system, communication enhancement, social communication and interaction

I. Introduction

Autism spectrum disorders (ASD), characterized by deficits in communication and social interaction together with restricted, repetitive and stereotyped patterns of behavior, represent a range of neurodevelopmental disabilities [1–3]. One in 68 children in the US is diagnosed with ASD [4, 5], and prevalence among school-aged children (6–17 years) increased from 1.16% to 2.00% between 2007 and 2012 [6]. Many children with ASD have difficulty developing the social competence required to interact appropriately with peers, which may lead to poor social-emotional reciprocity, misuse of verbal or non-verbal behaviors, and inappropriate relationships [7, 8]. Research has shown that, compared with typically developing (TD) peers, children with ASD may experience greater loneliness and more difficulty developing satisfying friendships and social networks, even though they themselves want social involvement [9, 10]. The cascading effects of these social challenges can also prevent children with ASD from living independently, limiting their opportunities and access to training resources across systems of care.

Although ASD is a lifelong disorder with no known cure, several studies have shown that children with ASD can learn how to act in social situations when they can repeatedly practice specific scenarios [5, 11–16]. However, traditional educational interventions for ASD are costly, inaccessible and inefficient due to limited resources and weak motivation [17–20]. In recent years, computer-based interventions have shown potential because of their low cost, their appeal to children with ASD, and their relatively broad accessibility. Many children with ASD exhibit a natural affinity for computer technologies, which leads to a higher level of engagement and fewer disruptive behaviors in computer-based interactions [20, 21]. In particular, virtual reality (VR) technologies allow children with ASD to actively participate in interactive and immersive simulated situations [22, 23]. Several VR-based systems have been developed to teach important living skills, such as driving [24] and social skills, to children with ASD, and the results suggest that children were able to appropriately understand, use and react to virtual environments, with the possibility of transferring these skills to real life. Bernardini et al. designed a game, called ECHOES, in which children with autism could interact with an autonomous virtual agent and practice social communication skills through a touch screen; their results showed a positive impact of the ECHOES environment on children with autism [25]. Ke et al. examined the potential effect of a VR-based social interaction program on the interaction and communication performance of children with high-functioning autism and found improved social competence measures after the intervention [26]. Smith et al. tested the efficacy of a VR job interview training program for adults with ASD and concluded that VR training could be a feasible tool for improving job interview skills [27]. Kandalaft et al. investigated the feasibility of a VR social cognition training intervention for young adults with high-functioning autism and found positive effects of the VR intervention on theory of mind and emotion recognition [28].

While VR-based intervention is promising, as the above literature indicates, it is difficult to implement complex, flexible interaction similar to peer-based interaction within traditional VR-based paradigms. Recent evidence suggests that children can show improvements in learning, communication and sociability when working or playing collaboratively with others [29]. Traditional VR environments usually depend on preprogrammed, rigid interactions with virtual avatars or objects and thus do not scale well to complex adaptive interaction. Collaborative virtual environments (CVEs), on the other hand, address this shortcoming by enabling multiple users in distributed locations to interact freely with one another within a shared virtual setting over a computer network. Users can navigate and control the shared virtual world as well as communicate (verbally or non-verbally) to share information [30], so CVEs can support peer-based interaction. Considering these advantages of CVEs over traditional virtual environments, we set out to design a CVE for children with ASD that fosters social interaction with two purposes: (1) granting users active control over interactions, which gives children with ASD an opportunity to interact with others in a simple and less stressful environment; and (2) supporting collaborative games that encourage children with ASD to participate in interactive work and conversation.

In recent years, CVEs have been employed to enhance social understanding and skills in children with ASD. Schmidt et al. developed a game-based learning environment for youth with ASD to learn computational thinking and social skills while working together to solve problems with virtual programmable robots [31]. Weiss et al. created the TalkAbout CVE program to help children acquire social conversation skills [32]. Cheng et al. developed a CVE in which students talked with a virtual teacher to learn social techniques in the context of social scenarios [33]. In another study, Cheng et al. also simulated several animated social scenarios in a CVE to enhance the understanding of empathy in children with ASD [34]. Stichter et al. allowed youth with high-functioning autism (HFA) to engage in collaborative tasks (e.g., building a restaurant) in a shared CVE called iSocial [35]. Millen et al. designed a CVE that encouraged students with ASD to take part in participatory design activities [36]. Several other works used touch-based tabletop displays with multiple inputs and responses for collaborative activities to provide face-to-face interaction among users [32, 37, 38]. For instance, Battocchi et al. used a tabletop puzzle game capable of enforcing collaboration to facilitate cooperative behaviors in children with ASD [38]. All these studies indicate the promise of CVE systems for improving social competence in children with ASD. Building upon this body of previous work, our system offers new contributions by providing opportunities for flexible and varied collaboration, enhanced communication, and objective measurement of collaboration and communication.

In this paper, we present the design and development of a novel CVE, called the Hand-in-Hand (HIH) Communication-Enhancement CVE system, for children with ASD. The system supports naturalistic social interaction, promotes communication within game play, and gathers objective data on users’ performance and communication in real time (Fig. 1). A preliminary design for part of the HIH system was previously presented in a conference paper [39]. In addition to expanding and improving all technical details, the current work extends that paper in several major ways: it (1) introduces a new Communication-Enhancement mode that provides gaze- and voice-based communication within game play, creating greater opportunity to enhance social communication; (2) reports new experiments with many more participants (12 ASD-TD pairs instead of 3); and (3) substantially expands the Results section to present the contributions of this work in the context of existing work. The primary contributions of the current work are two-fold: (1) the design of a novel CVE platform, Hand-in-Hand (HIH), to promote collaborative game play in children with ASD, and (2) a feasibility study of the system with children with ASD and their TD peers. HIH is unique in that it requires two children to dynamically and simultaneously coordinate their manual actions to move virtual objects, and its games can be played with or without verbal and gaze-contingent communication between the players. To our knowledge, no such system currently exists.

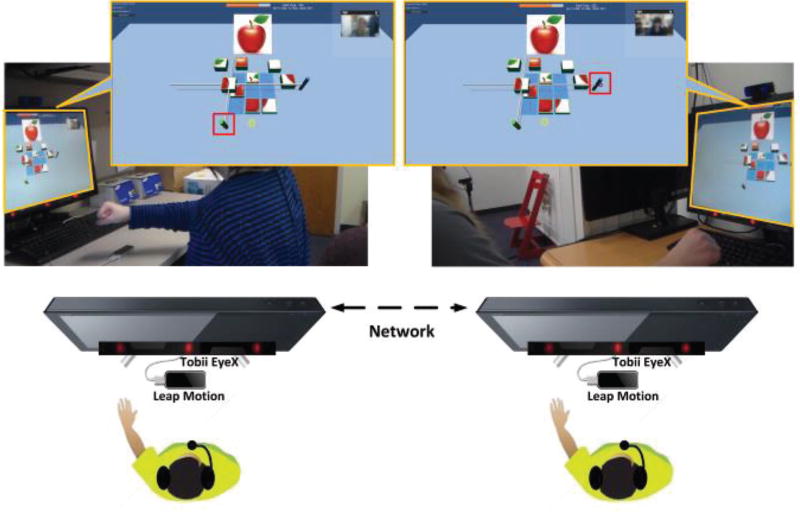

Fig. 1.

Two players wearing headphones in separate locations play the Puzzle Game in the HIH CVE system via Leap Motion controllers. Each player controls one virtual handle (marked by the red square) of the Move Tool. A Tobii EyeX tracker mounted at the bottom of each monitor tracks the player’s eye gaze on his/her screen.

The rest of the paper is organized as follows: Section II describes the architecture of the HIH CVE system. Section III presents the details of the feasibility study, followed by the results and discussion in Section IV. Finally, Section V summarizes the contributions of the current work along with its limitations and future potential.

II. HIH CVE System Design

The primary goal of the HIH platform was to design a CVE platform that would provide opportunities for two players to collaboratively complete interactive games. It was designed specifically so that players situated in distant locations could use dynamic and simultaneous hand coordination to grab and move the virtual objects together. While puzzle games have been used in the literature to foster collaboration [38, 40], games requiring dynamic motor coordination have not yet been explored to the best of our knowledge. Although not a hallmark of the disorder, many children with ASD have deficits in motor control in addition to social interaction [41, 42] and HIH is designed to provide opportunities to teach both these skills simultaneously. An additional significant feature that distinguishes our puzzle games from others is that they require relatively complex cooperation between two players. That is, instead of collaboratively moving a puzzle piece toward the destination direction along the same axis, each player separately controls one movement direction (either horizontal or vertical), which is different from the destination direction. The combination of their movements leads to the puzzle piece being correctly placed. Thus, players need to think not only about their own movement, but also need to pay attention to their partner’s operation to make sure their actions are coordinated.
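To make the split-axis interaction concrete, here is a minimal Python sketch; it is not the HIH implementation, which was built in Unity3D. It assumes that each frame delivers a horizontal displacement from one player and a vertical displacement from the other. The names `PuzzlePiece`, `apply_split_axis_move` and `is_placed`, and the 0.05 placement tolerance, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PuzzlePiece:
    x: float
    y: float


def apply_split_axis_move(piece: PuzzlePiece, dx_horizontal: float, dy_vertical: float) -> PuzzlePiece:
    """Move a piece using one axis from each player.

    dx_horizontal comes only from the player controlling the horizontal
    direction, dy_vertical only from the player controlling the vertical
    direction, so a diagonal path needs input from both players.
    """
    piece.x += dx_horizontal
    piece.y += dy_vertical
    return piece


def is_placed(piece: PuzzlePiece, target_x: float, target_y: float, tolerance: float = 0.05) -> bool:
    # Hypothetical snapping tolerance, in scene units.
    return abs(piece.x - target_x) <= tolerance and abs(piece.y - target_y) <= tolerance


# Usage: the target lies diagonally at (1, 1); both players move in small steps.
piece = PuzzlePiece(0.0, 0.0)
for _ in range(10):
    apply_split_axis_move(piece, dx_horizontal=0.1, dy_vertical=0.1)
print(is_placed(piece, 1.0, 1.0))  # True
```

Because neither displacement alone can reach a diagonal target, the placement check succeeds only when both players contribute, which mirrors the coordination requirement described above.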

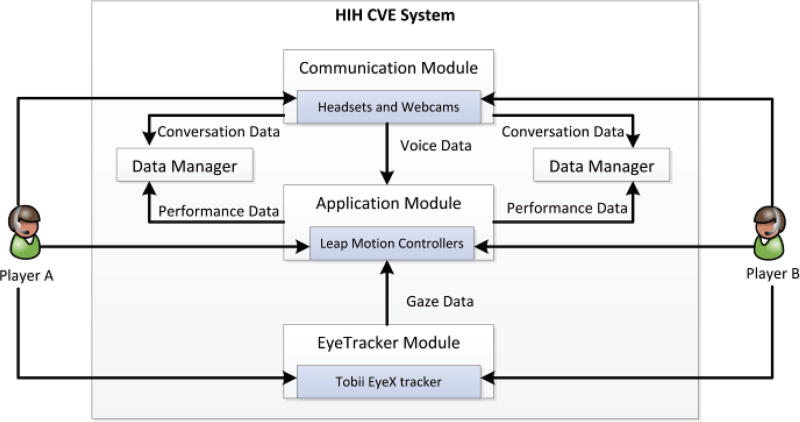

Within the CVE, collaborative games were developed to foster flexible communication and interaction between the players. The whole system was built with the game development engine Unity3D (https://unity3d.com/) and consists of three interacting modules and two data managers (Fig. 2). The Application Module, the primary part of the system, manages game connection and game execution. It exchanges and synchronizes data between the two applications running on distant computers so that the two players can simultaneously manipulate virtual objects in the same game. It also ensures that the games function properly according to predefined logic. The Communication Module enables real-time video and audio communication between distant players and provides voice data to the Application Module. The EyeTracker Module obtains each player’s gaze data in real time; combined with the voice data, these gaze data are used to achieve gaze- and voice-contingent communication in the games. The Data Managers record the performance and conversation data of each player for offline analysis.

Fig. 2.

The HIH Communication-Enhancement CVE system architecture, which includes three major modules and two data managers for each user. The hardware devices associated with each module are shown within the modules. Labeled arrows indicate the data flow in the system.

Each player interacting with this system is equipped with one Leap Motion controller (https://www.leapmotion.com/), a headset, a webcam, and one Tobii EyeX tracker (http://www.tobii.com/xperience/products/). The Leap Motion device, a camera-based user input system, recognizes the players’ hand locations and gestures, which serve as control signals to manipulate virtual objects in the CVE. The headset and webcam are used for audio-visual communication, while the Tobii EyeX tracker acquires the players’ gaze information.

In the following subsections, we discuss each part of the system in detail, presenting the hardware, the software platform and the implementation approaches used in the design.

A. Application Module

Game design is the core of the Application Module, because the game type and logic can affect the behaviors and communication of the players. First, we considered collaborative modes that would promote interaction between the users. Because collaborative work requires concerted intentions and actions among partners, the games were designed so that players move virtual objects collaboratively toward a common objective, and the degree of collaborative manipulation required can be varied across games. At the same time, we wanted each player to contribute equally to these movements. To achieve this, we created collaborative tools that both help and require each player to take part in the collaborative manipulations. Each collaborative tool has two handles, each controlled by one player to move virtual objects. To make the use of the tool more natural, we further allowed users to virtually grasp the tool handles with their hands. We chose the Leap Motion controller, which tracks a user’s hand in real time, to enable hand control in the games. We believe that such a device is likely to foster the practice of realistic manipulation behaviors, such as grabbing and moving, which may be helpful for children with ASD. Finally, we designed a series of collaborative games played with the Leap Motion devices. These games were developed with Unity3D because it integrates easily with the Leap Motion controller and supports interactive game experiences. Some virtual objects and pictures in the games were obtained from free online repositories, while others were developed with Autodesk Maya (https://www.autodesk.com/products/maya/overview).

1) Leap Motion Controllers and Collaborative Tools

The Leap Motion controller is a gesture-based interaction device that allows players to control tools in a more naturalistic way and to feel more immersed in the games. This small device (3 × 1.2 × 0.5 inches) is easy to use: it is simply placed in front of the player (Fig. 1). When the player holds his/her hand above it within its detection range (a field of view of approximately 150 degrees and a range of 25 to 600 millimeters), it tracks the 3D locations of the hand and fingers and recognizes gestures with high speed and accuracy [43]. Two virtual collaborative tools, the Move Tool (Fig. 1) and the Collection Tool (Figs. 4 and 5), were developed with the Leap Motion controller application programming interface (API) and operate on the real-time hand data of the two players. To simplify the hand behaviors required for manipulating the tools, players only needed to learn three gestures: (1) close the hand to grasp the handle; (2) open the hand to release the handle; and (3) move the hand to manipulate a virtual object using the handle. The tool can control objects in the CVE only once both players have grabbed their handles and move their hands toward the same target. For instance, to move a puzzle piece to the correct location with the Move Tool, both players must first select the same puzzle piece and then move it together, each player controlling one movement direction (horizontal or vertical). Without the partner’s help, a puzzle piece can be neither selected nor moved. The Collection Tool also requires the players to cooperate, because the tool does not move or collect virtual objects unless both handles move consistently.
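The gesture-to-handle mapping and the two tools' cooperation rules can be sketched as follows. This is an illustrative Python sketch under stated assumptions, not the authors' Unity code and not the Leap Motion API. Each hand is reduced to a hypothetical closure value in [0, 1] with a hypothetical grasp threshold of 0.8. "Moving consistently" for the Collection Tool is modeled, again as an assumption, by requiring the two handle velocities to have a cosine similarity of at least 0.9.

```python
import math
from dataclasses import dataclass
from typing import Tuple

GRAB_THRESHOLD = 0.8  # hypothetical: closure at or above this counts as a closed hand

Vec2 = Tuple[float, float]


@dataclass
class Handle:
    grasped: bool = False

    def update(self, hand_closure: float) -> None:
        # Gesture 1: a closed hand grasps the handle; gesture 2: an open hand releases it.
        # Gesture 3 (moving the hand) would then drive the grasped handle's position.
        self.grasped = hand_closure >= GRAB_THRESHOLD


def move_tool_active(a: Handle, b: Handle) -> bool:
    # The Move Tool can select or move a piece only while both players hold their handles.
    return a.grasped and b.grasped


def collection_tool_velocity(va: Vec2, vb: Vec2, min_cosine: float = 0.9) -> Vec2:
    """Return the tool velocity, or (0, 0) when the two handles disagree."""
    na, nb = math.hypot(*va), math.hypot(*vb)
    if na == 0 or nb == 0:
        return (0.0, 0.0)  # one handle is idle: the tool does not move
    cosine = (va[0] * vb[0] + va[1] * vb[1]) / (na * nb)
    if cosine < min_cosine:
        return (0.0, 0.0)  # handles pull in different directions
    # Handles agree: move with the average of the two handle velocities.
    return ((va[0] + vb[0]) / 2, (va[1] + vb[1]) / 2)
```

A single threshold keeps the sketch short; a real controller might add hysteresis so that a hand hovering near the threshold does not flicker between grasped and released.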

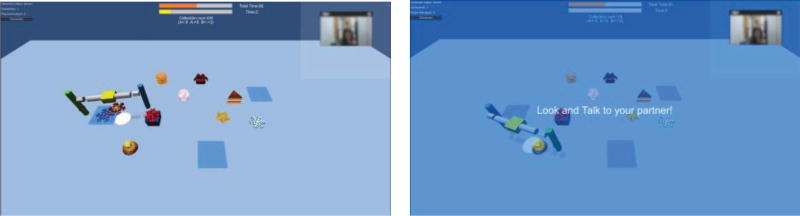

Fig. 4.

The Collection Game with Communication-Enhancement functionality. After players collect one toy (e.g., the red snowflake in the left picture) using the Collection Tool, the game pauses and displays the cue “Look and Talk to your partner!” (right picture).

Fig. 5.

The Delivery Game with Communication-Enhancement functionality. After players deliver one star (e.g., to the left target area in the left picture), the game pauses and displays the cue “Look and Talk to your partner!” (middle picture). If both players quickly look and talk to each other, they are rewarded with an additional target near the starting point (e.g., the right target, marked with a black circle and worth 3 points, in the right picture), giving them a chance to increase their scores.

Collaborative tools combined with the Leap Motion device provide realistic manipulation as well as instant visual feedback on the players’ operations. The states of the handles (e.g., motion and orientation) reflect each player’s actions and intentions, which helps players understand their partner’s operations and likely promotes better cooperation, even in a distributed setting.

2) Collaborative Games Design

We designed three collaborative games in this work: Puzzle Games (PG), Collection Games (CG) and Delivery Games (DG). The games were designed to meet the following specifications: they should (1) be easy to learn and engaging for children; (2) be goal-oriented and time-limited to motivate active behaviors; (3) involve visuo-spatial collaborative activities; and (4) foster extensive interaction and communication.

All of these games require two players to collaboratively move virtual objects to correct locations using the collaborative tools within a time limit (5 minutes for the Puzzle Games and 3 minutes for the Collection and Delivery Games). Players score every time they successfully place a virtual object in the correct location. In the Puzzle Game, two players are required to assemble 9 separate puzzle pieces according to the provided target picture (e.g., the “apple” picture in Fig. 1). The Collection Games (Fig. 4) require two players to bring 9 scattered toys to the collection areas marked with toy pictures. In the Delivery Games (Fig. 5), two players must deliver several stars (at most 7) to available destinations with different rewards (e.g., 4, 6 and 10 points) while avoiding the red-striped “dangerous” areas. These are the same games that we briefly discussed in [39], with one major exception: we designed and integrated a Communication-Enhancement mode into the Collection Games and the Delivery Games to create audio-visual communication opportunities between the players. Fig. 3 shows the major modes of these games.

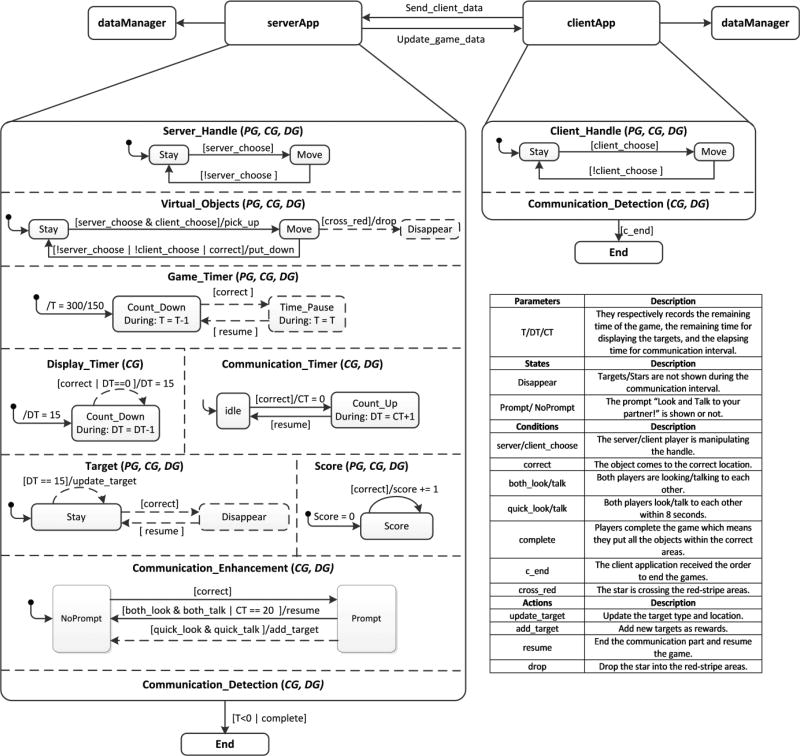

Fig. 3.

The Statechart diagram showing major modes developed for implementing the collaborative games. Some modes are used in all the games, while some other modes (displayed by dashed line squares) are only needed in some games. The bottom-right table explains several elements displayed in the diagram.

All of these collaborative games require simultaneous manipulation by two players in distributed locations. We used a server-client architecture for real-time data exchange and synchronization to maintain consistent game states across the connected applications. The serverApp performs the main game computation and control, while the clientApp only handles local handle control and communication detection. The local data from the clientApp, including the client handle states and the client player’s voice and gaze data, are sent to the serverApp, which runs the game logic, updates the game states based on the data from both applications, and propagates the updated data to the clientApp at a regular frequency (50 Hz).
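A minimal sketch of the server-authoritative update described above follows. It assumes a single horizontal handle coordinate per player and replaces the real network layer with in-process method calls. `ClientInput`, `GameState` and `ServerApp` are hypothetical names; only the 50 Hz rate comes from the text.

```python
from dataclasses import dataclass, replace

TICK_HZ = 50             # update frequency stated in the text
TICK_DT = 1.0 / TICK_HZ  # 0.02 s between server updates


@dataclass
class ClientInput:
    """Data the clientApp reports each frame (field names are hypothetical)."""
    handle_x: float = 0.0
    talking: bool = False
    looking: bool = False


@dataclass
class GameState:
    tick: int = 0
    server_handle_x: float = 0.0
    client_handle_x: float = 0.0
    both_communicating: bool = False


class ServerApp:
    """Authoritative copy of the game state; the client only sends local input."""

    def __init__(self) -> None:
        self.state = GameState()
        self.latest_client = ClientInput()

    def receive(self, msg: ClientInput) -> None:
        # Keep only the newest client message; older ones are stale.
        self.latest_client = msg

    def step(self, server_handle_x: float, server_talking: bool, server_looking: bool) -> GameState:
        c, s = self.latest_client, self.state
        s.tick += 1
        s.server_handle_x = server_handle_x
        s.client_handle_x = c.handle_x
        s.both_communicating = server_talking and server_looking and c.talking and c.looking
        # Return a copy so the snapshot sent to the client cannot mutate server state.
        return replace(s)


server = ServerApp()
server.receive(ClientInput(handle_x=0.3, talking=True, looking=True))
for _ in range(TICK_HZ):  # one second of simulated server ticks
    snapshot = server.step(0.1, server_talking=True, server_looking=True)
print(snapshot.tick, snapshot.client_handle_x, snapshot.both_communicating)  # 50 0.3 True
```

Keeping the game logic on the serverApp means both screens render the same authoritative state, and the clientApp never has to resolve conflicting manipulations on its own.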

In the serverApp, several concurrent modes implement the game logic according to the different game specifications. The Handle mode maps a player’s manipulations to the states of the handles of the collaborative tools. The Virtual_Objects mode changes the virtual objects’ locations depending on the states of the collaborative tools. The Score mode manages and displays the players’ scores. The Game_Timer mode tracks the remaining game time, and the Target mode manages the targets’ states (e.g., locations and numbers).

In addition to these basic modes, the Collection Games have a Display_Timer mode that records the elapsed time for displaying one target; players are given at most 15 seconds to collect each target object. When the players successfully collect the target object or the display time runs out, the Display_Timer mode resets the display time (15 seconds) and the Target mode updates the target by randomly showing a new target in one of the collection areas.
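The Display_Timer behavior can be sketched as a small countdown object. This Python sketch is hypothetical: the class and method names are illustrative, the number of collection areas is a free parameter, and only the 15-second window and the reset-on-collect-or-timeout rule come from the text.

```python
import random
from typing import Optional

DISPLAY_SECONDS = 15.0  # per-target display window stated in the text


class CollectionTargets:
    def __init__(self, n_areas: int, rng: Optional[random.Random] = None) -> None:
        self.n_areas = n_areas
        self.rng = rng or random.Random()
        self.remaining = DISPLAY_SECONDS
        self.current_area = self.rng.randrange(n_areas)

    def _new_target(self) -> None:
        # Reset the display timer and show a new target in a random collection area.
        self.remaining = DISPLAY_SECONDS
        self.current_area = self.rng.randrange(self.n_areas)

    def update(self, dt: float, collected: bool) -> bool:
        """Advance by dt seconds; return True if the target changed this frame."""
        self.remaining -= dt
        if collected or self.remaining <= 0:
            self._new_target()
            return True
        return False


# Usage: with no collection, the target changes on the 15th one-second update.
targets = CollectionTargets(n_areas=4, rng=random.Random(0))
changed = [targets.update(1.0, collected=False) for _ in range(15)]
print(changed.index(True))  # 14
```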

In addition, to promote audio-visual communication between the players, the Collection and Delivery Games require additional modes and states. Both games include a Communication_Enhancement mode that gives the players an opportunity to communicate after each successful collection or delivery. The Communication_Detection mode detects whether the players are talking and looking at each other, and the Communication_Timer mode limits each communication interval to at most 20 seconds. During the communication interval, the game is paused and displays the prompt “Look and Talk to your partner!” to encourage communication (Figs. 4 and 5). When the communication time runs out or both players look and talk to each other, the game resumes. Specifically, the Collection Games add more targets as rewards, allowing players to improve their scores, as soon as both players start looking and talking to each other within the first 8 seconds. Players are thus encouraged to actively communicate with their partners.
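The pause-and-resume logic of the communication interval can be sketched as follows, assuming that the Communication_Detection mode supplies per-frame boolean look and talk flags for each player. The class name and return labels are hypothetical; the 20-second limit and the 8-second reward window are taken from the text.

```python
COMM_WINDOW = 20.0   # maximum paused communication interval (s)
REWARD_WINDOW = 8.0  # reward if both players look and talk within this time (s)


class CommunicationPause:
    def __init__(self) -> None:
        self.elapsed = 0.0
        self.active = True

    def update(self, dt: float, a_looking: bool, a_talking: bool,
               b_looking: bool, b_talking: bool) -> str:
        """Return 'paused', 'resume' or 'resume_with_reward'."""
        if not self.active:
            raise RuntimeError("communication interval already ended")
        self.elapsed += dt
        if a_looking and a_talking and b_looking and b_talking:
            # Both players communicate: end the pause, rewarding a quick response.
            self.active = False
            return "resume_with_reward" if self.elapsed <= REWARD_WINDOW else "resume"
        if self.elapsed >= COMM_WINDOW:
            # Time is out: resume the game without a reward.
            self.active = False
            return "resume"
        return "paused"  # keep showing "Look and Talk to your partner!"
```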

3) Collaborative Strategies

To win these games, players have to talk and cooperate with each other. First, the game description introduces only the basic game rules, so players need to figure out how to work collaboratively with their partners. For example, they are told that each player controls one handle, but not which handle belongs to whom; they must find this out either by trial and error or by discussing it with their partners. During the experiments, we found that many players told their partners which handle to control, and even how to control it, once they had learnt the skill themselves.

Second, specific game strategies can enhance information sharing and game-based discussion. In the Puzzle Games, players were sometimes confused by the partial pictures of the target and debated where individual puzzle pieces should go. Because a puzzle piece can only be picked up when both players are manipulating the collaborative tool, and each player controls only one of the movement directions (horizontal or vertical) of the piece, they have to first jointly decide which puzzle piece to move and where to put it. In one of the Collection Games, the target picture is visible to only one player, who must share this information with the partner to make sure they move in the same direction. In another Collection Game, two target pictures appear simultaneously in the game space, but each is visible to only one of the players. The two players may thus move toward different targets if they do not discuss the target they see or the direction they want to move. Manipulation conflicts are therefore likely when there is little or no communication between the players. This was intentional: it implicitly encourages more information sharing which, in turn, could lead to more communication. In the Delivery Games, there are several paths to several destinations with different rewards, so the two players must discuss to which destination to deliver the star and which path to take. Additionally, these destinations are surrounded by several stationary or moving “dangerous” strips, so players need to discuss how to coordinate the direction and speed of their movements to avoid them.

The newly designed Communication-Enhancement mode provides a short interval in which players can chat freely with each other during games. During this interval, players talked about the game objectives, the skills needed to play the games and the next steps they should take, evaluated their partner’s performance, and so on.

4) Use of Gaze and Voice Data

The Communication_Detection mode was designed to infer whether the two players were looking at and talking to each other. This mode obtains each player’s gaze and voice data in real time: as mentioned before, the gaze data come from the EyeTracker Module, while the voice data come from the Communication Module.

The software associated with the Tobii EyeX tracker provides methods to access gaze data in real time, which can be used to check whether a player’s gaze falls within a region of interest (ROI). In our games, the partner’s live video feed is placed at the top right corner of the screen, where it does not overlap with the other ROIs of the game. Once a player’s gaze enters this top-right region, the player is judged to be looking at his/her partner.
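The "look" check reduces to a point-in-rectangle test. The sketch below assumes, hypothetically, that the partner video occupies the top-right 25% × 25% of the screen and that gaze points arrive in pixels with the origin at the top-left corner. It does not use the Tobii EyeX SDK, whose calls are not described in the text; `Rect`, `PARTNER_VIDEO_ROI` and `is_looking_at_partner` are illustrative names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rect:
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


# Hypothetical layout in normalized screen coordinates (0..1, origin at top-left):
# the partner's live video occupies the top-right quarter-by-quarter corner.
PARTNER_VIDEO_ROI = Rect(0.75, 0.0, 1.0, 0.25)


def is_looking_at_partner(gaze_x: Optional[float], gaze_y: Optional[float],
                          screen_w: int, screen_h: int,
                          roi: Rect = PARTNER_VIDEO_ROI) -> bool:
    """Return True when a gaze point (in pixels) falls inside the partner-video ROI."""
    if gaze_x is None or gaze_y is None:  # tracker lost the eyes this frame
        return False
    return roi.contains(gaze_x / screen_w, gaze_y / screen_h)


print(is_looking_at_partner(1800, 100, 1920, 1080))  # True
print(is_looking_at_partner(960, 540, 1920, 1080))   # False
```

Normalizing by the screen size keeps the ROI definition independent of the monitor resolution.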

Talking was detected by calculating the volume and fundamental frequency of the sound captured by the microphone. The volume reflects the loudness of a sound and increases with the amplitude of the sound wave, while the fundamental frequency is the lowest frequency of a periodic sound, which for speech corresponds to the vibration rate of the vocal folds. When people talk, the volume and dominant frequency change significantly compared with silence. The volume of a sound and its dominant frequency (used here as an estimate of the fundamental frequency) can be computed by

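$$\text{volume} = 20\log_{10}\!\left(\frac{\text{rmsValue}}{\text{refValue}}\right), \qquad \text{frequency} = N_{\max} \times \text{freqResolution} \tag{1}$$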

where rmsValue is the root mean square (RMS) of the sound amplitude, refValue is the RMS value corresponding to 0 dB, Nmax is the index of the spectrum element with the maximum amplitude, and freqResolution is the frequency resolution of the spectrum.
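As a worked example of Equation (1), with volume = 20 log10(rmsValue/refValue) and frequency = Nmax × freqResolution, consider assumed values that are not given in the text: a 48 kHz sample rate, a 1024-bin spectrum spanning 0 Hz to the Nyquist frequency (as with Unity's GetSpectrumData, where each bin covers half the sample rate divided by the number of bins), an RMS amplitude of 0.05, refValue = 0.001, and a spectral peak at bin 9.

$$
\text{freqResolution} = \frac{48000/2}{1024} = 23.4375\ \text{Hz}, \qquad
\text{frequency} = 9 \times 23.4375 \approx 210.9\ \text{Hz}
$$

$$
\text{volume} = 20\log_{10}\frac{0.05}{0.001} = 20\log_{10}50 \approx 33.98\ \text{dB}
$$

Both values would fall inside the talk-detection thresholds given below (≥30 dB and 170–300 Hz). Note that the dB figure depends entirely on the chosen refValue.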

The Unity3D functions GetOutputData and GetSpectrumData give developers access to the amplitude samples and the frequency spectrum of audio data, respectively, which makes it possible to compute the volume and dominant frequency of sound from the above equations. In our previous study [39], we collected voice samples from the participants and analyzed the range of volumes and dominant frequencies of their voices to design the thresholds for the current study. The volume threshold was set to ≥30 dB and the fundamental frequency range to 170–300 Hz (the upper limit was set to avoid noise). A player is judged to be talking when both his/her voice volume and frequency fall within these ranges.
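The talk test can be sketched in Python with NumPy. This is an illustration under assumptions, not the authors' Unity code. `numpy.fft.rfft` stands in for Unity's GetSpectrumData, and raw sample arrays stand in for GetOutputData. The reference value `REF_VALUE = 1e-3` is hypothetical: the text does not state refValue, and the 30 dB threshold only has meaning relative to it. The spectral peak is used as the fundamental-frequency estimate, as in Equation (1).

```python
import numpy as np

VOLUME_THRESHOLD_DB = 30.0       # from the text
PITCH_RANGE_HZ = (170.0, 300.0)  # from the text
REF_VALUE = 1e-3                 # hypothetical: RMS amplitude mapped to 0 dB


def volume_db(samples: np.ndarray, ref_value: float = REF_VALUE) -> float:
    rms_value = float(np.sqrt(np.mean(np.square(samples))))
    # Floor the RMS to avoid log10(0) for digital silence.
    return float(20.0 * np.log10(max(rms_value, 1e-12) / ref_value))


def dominant_frequency(samples: np.ndarray, sample_rate: float) -> float:
    spectrum = np.abs(np.fft.rfft(samples))
    n_max = int(np.argmax(spectrum))              # index of the peak bin (Nmax)
    freq_resolution = sample_rate / len(samples)  # bin spacing of rfft
    return n_max * freq_resolution


def is_talking(samples: np.ndarray, sample_rate: float) -> bool:
    low, high = PITCH_RANGE_HZ
    loud_enough = volume_db(samples) >= VOLUME_THRESHOLD_DB
    return loud_enough and low <= dominant_frequency(samples, sample_rate) <= high


# Usage: a 200 Hz tone stands in for voiced speech; silence is rejected.
fs = 8000
t = np.arange(2000) / fs
voice_like = 0.1 * np.sin(2 * np.pi * 200 * t)
print(is_talking(voice_like, fs), is_talking(np.zeros(2000), fs))  # True False
```

Requiring both conditions mirrors the rule in the text: loud noise outside the pitch band and quiet in-band hum are both rejected.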

B. Communication Module and EyeTracker Module

The Communication Module supports video and audio communication between players using Skype (http://www.skype.com/en/). When using our system, one player makes a video call to his/her partner to establish the communication channel, through which players can discuss game strategies and share information in real time. Each player’s voice is captured by the microphone in real time and used in the Communication_Detection mode to detect whether the player is talking.

The EyeTracker Module obtains the player’s gaze information with the Tobii EyeX eye tracker, which tracks the gaze point as the player’s eyes scan the screen. The tracker is mounted at the bottom of the monitor screen and integrated with Unity to transmit gaze data to the Communication_Detection mode for online “look” detection.

C. Data Managers

Data managers were developed to save objective gaming data as well as the conversations between players for offline analysis. Gaming data, such as completed pieces, cooperative efficiency and total play time, indicate a player’s performance and cooperation. Audio recordings of the conversations between the two players are transcribed verbatim and used to analyze communication between the players (Table I).

TABLE I.

Metrics of Performance and Communication

| Measure | Description |
|---|---|
| Completed pieces (/minute) | The number of puzzle pieces matched to the target in one game, per minute. |
| Cooperative efficiency (%) | The time spent collaboratively manipulating the puzzle piece divided by the total manipulation time. |
| Total play time (s) | The time spent playing one game (the maximum allowed play time of the Puzzle Game was 300 s). |
| Back-and-forth sentences (/minute) | One back-and-forth sentence was defined as one player speaking and his/her partner responding. |
| Word count of one player (/minute) | The total number of words that a player spoke in one game divided by the total play time. |
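The Table I metrics can be computed from logged times and a turn-ordered transcript, as in the Python sketch below. The function names are hypothetical. Counting every change of speaker as one back-and-forth sentence is an assumed operationalization of the table's definition, not necessarily the coding scheme the authors used.

```python
from typing import List, Tuple

Utterance = Tuple[str, str]  # (speaker_id, transcribed text), in spoken order


def per_minute(count: float, play_time_s: float) -> float:
    return count / (play_time_s / 60.0)


def completed_pieces_per_minute(completed: int, play_time_s: float) -> float:
    return per_minute(completed, play_time_s)


def cooperative_efficiency(collab_time_s: float, total_manip_time_s: float) -> float:
    """Collaborative manipulation time as a percentage of total manipulation time."""
    return 100.0 * collab_time_s / total_manip_time_s if total_manip_time_s > 0 else 0.0


def back_and_forth_per_minute(utterances: List[Utterance], play_time_s: float) -> float:
    # Assumption: each change of speaker (one player speaks, the partner responds)
    # counts as one back-and-forth sentence.
    exchanges = sum(1 for prev, cur in zip(utterances, utterances[1:]) if cur[0] != prev[0])
    return per_minute(exchanges, play_time_s)


def words_per_minute(utterances: List[Utterance], speaker: str, play_time_s: float) -> float:
    words = sum(len(text.split()) for who, text in utterances if who == speaker)
    return per_minute(words, play_time_s)


transcript = [("A", "which piece first"), ("B", "the top left one"),
              ("B", "I move right"), ("A", "ok go")]
print(back_and_forth_per_minute(transcript, 120.0))  # 1.0
print(words_per_minute(transcript, "A", 120.0))      # 2.5
print(cooperative_efficiency(90.0, 120.0))           # 75.0
print(completed_pieces_per_minute(9, 300.0))         # 1.8
```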

III. Feasibility Study

The goal of this study was to conduct a preliminary evaluation of the system’s ability to foster collaborative activities among participants. We collected pilot data on collaborative actions to assess change within our small sample and to validate the capacity of the system to address our main hypotheses.

A. Participants

We recruited 12 children with ASD and 12 TD children for the feasibility study of the HIH CVE system with and without the Communication_Enhancement mode. Each child with ASD was paired with a TD child matched on age and gender to form a group (12 groups in total). We did not form ASD-ASD pairs because children with ASD more commonly interact with TD peers in daily life, and the goals of ASD intervention include improving the relationships of children with ASD with their TD peers.

We conducted two studies with the HIH CVE system: Study 1 tested the system without the Communication_Enhancement mode, and Study 2 tested it with the Communication_Enhancement mode. The studies were approved by the Vanderbilt University Institutional Review Board. Both sets of experiments were conducted after obtaining assent from the participants and consent from their caregivers, and under the supervision of trained ASD therapists and experimenters. The participants’ parents first completed ASD symptom measures: the Social Responsiveness Scale (SRS) [44] and the Social Communication Questionnaire (SCQ) [45]. Afterwards, the two participants went to separate experimental rooms and played games together within the shared CVE over the local area network, as shown in Fig. 1. Table II summarizes the participants’ characteristics. All participants spoke with flexible phrase speech and could complete all aspects of game play without adult prompting or support.

TABLE II.

Participants’ Characteristics

| Measure, Mean (SD) | Study 1 ASD (n=6) | Study 1 TD (n=6) | Study 2 ASD (n=6) | Study 2 TD (n=6) |
|---|---|---|---|---|
| Age (years) | 12.38 (2.60) | 12.60 (2.66) | 12.12 (3.59) | 13.15 (3.77) |
| SRS-2 total raw score | 108 (17.13) | 41 (24.11) | 105.8 (13.4) | 12.5 (6.87) |
| SRS-2 T-score | 80.5 (7.80) | 53.33 (9.27) | 81 (5.94) | 42.5 (3.45) |
| SCQ current total score | 19.7 (11.35) | 7 (8.70) | 13.5 (4.54) | 1.33 (1.25) |

B. Procedure

At the beginning of each experiment, the experimenters explained the procedure and devices (e.g., Leap Motion controller, Tobii EyeX tracker, camera, and headset) to the participants and taught them how to interact with the Leap Motion controller. Participants in separate rooms then each calibrated their eye tracker and established an audio and video communication channel with their partner via Skype.

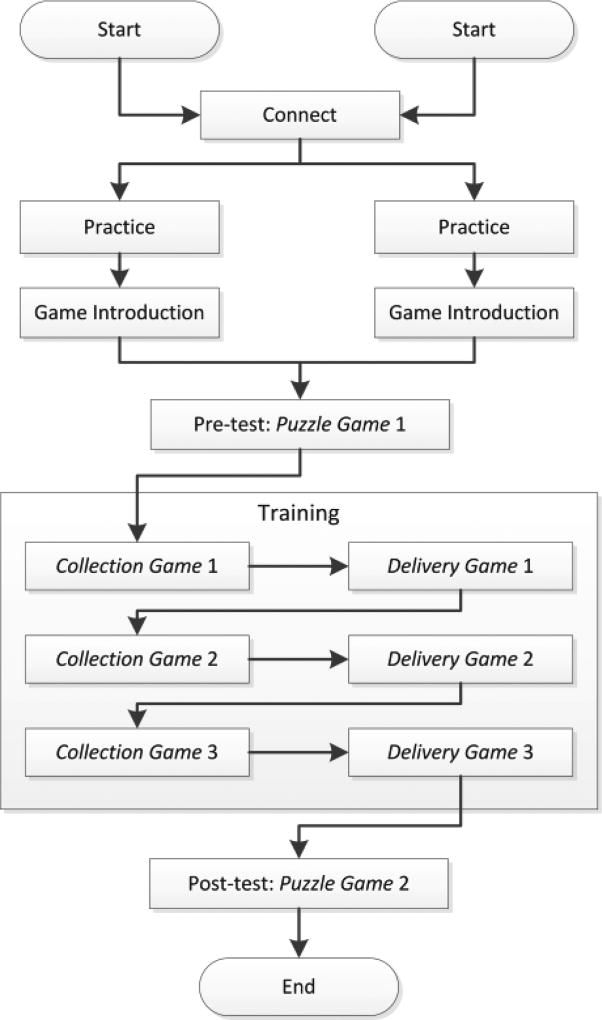

Fig. 6 shows a simplified flowchart of the whole experimental procedure. The two running applications were first connected via their IP addresses. Participants then practiced handle operations in an independent practice game under the guidance of the experimenters. Because players had to control handles in all games, they repeatedly practiced grabbing, moving, and releasing a handle in this practice game. They were not allowed to proceed to the next session, “Game Introduction,” which presented the rules of each game with illustrative images and text, until they were comfortable with the handle controls.

Fig. 6.

Flowchart of the experimental procedure.

After both participants became familiar with the collaborative games, they entered the game session, which consisted of eight games loaded automatically in a predefined order. Participants were required to complete these games collaboratively without help or intervention from the experimenters or therapists. Puzzle Games served as the pre- and post-test to assess changes in participants’ performance and communication. The training session consisted of 3 Collection Games and 3 Delivery Games designed to enhance participants’ social understanding and collaborative skills. The training games differed from one another and became progressively more difficult, requiring more discussion between participants. In addition, the Communication-Enhancement mode slowed down the pace of the game and created more opportunities for communication. Table III lists the main configuration differences among the games. The variety of game types was intended to keep participants engaged and motivated to negotiate with their partners. After completing all games, each participant filled out a questionnaire giving feedback on engagement, performance, and communication, as well as suggestions for improving the system. Before leaving, each participant received a gift card as compensation.
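The fixed eight-game order described above can be sketched as a simple schedule. This is a minimal illustration, not the HIH implementation: the list names and the `load_game` callback are hypothetical, and we assume (the text does not say) that Puzzle Game 1 is the pre-test, Puzzle Game 2 is the post-test, and the Collection Games precede the Delivery Games during training.

```python
from typing import Callable, List

# Hypothetical encoding of the predefined game order.
# Assumptions: Puzzle Game 1 = pre-test, Puzzle Game 2 = post-test,
# and the three Collection Games come before the three Delivery Games.
PRE_TEST: List[str] = ["Puzzle Game 1"]
TRAINING: List[str] = (
    [f"Collection Game {i}" for i in (1, 2, 3)]
    + [f"Delivery Game {i}" for i in (1, 2, 3)]
)
POST_TEST: List[str] = ["Puzzle Game 2"]
GAME_SESSION: List[str] = PRE_TEST + TRAINING + POST_TEST


def run_session(load_game: Callable[[int, str], None],
                games: List[str] = GAME_SESSION) -> None:
    """Load each game automatically, one after another, in the fixed order.

    `load_game` is a hypothetical stand-in for the engine's scene-loading step;
    it receives the 1-based position and the game name.
    """
    for position, name in enumerate(games, start=1):
        load_game(position, name)


if __name__ == "__main__":
    run_session(lambda pos, name: print(pos, name))
```

Keeping the order as data rather than hard-coding scene transitions makes it easy to confirm that every pair sees the same sequence.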

TABLE III.

Game Configuration Differences

| Games | Differences |
|---|---|
| Puzzle Game 1 | Target picture is “Lemon”. |
| Puzzle Game 2 | Target picture is “Apple”. |
| Collection Game 1 | In each round, the target picture is visible to both players. |
| Collection Game 2 | In each round, the target picture is visible to only one of the players. |
| Collection Game 3 | In each round, two target pictures appear simultaneously, each visible to only one of the players. |
| Delivery Game 1 | Obstacles move horizontally or vertically. |
| Delivery Game 2 | Obstacles spin around fixed centers. |
| Delivery Game 3 | Obstacles move in several complex patterns. |

IV. Results

Here we first present the system validation results, which demonstrate that the HIH Communication-Enhancement CVE system functioned robustly and stably. We then present the feasibility study results from two perspectives, participants’ experience (subjective) and participants’ performance (objective), and compare the results of Study 1 and Study 2 to examine the advantages of the HIH Communication-Enhancement CVE system in fostering collaborative gameplay skills and social communication in children with ASD.

A. System Validation Results

The nature of a CVE requires stable, real-time data exchange and synchronization. We therefore first evaluated the network communication performance of the system by analyzing the rate of remote procedure calls (RPCs). In Unity3D, RPCs transmit data to a remote machine, so the RPC rate indicates the data transmission rate over the network. Six tests were conducted on two computers with 3.7 GHz processors (8 GB and 16 GB of RAM, respectively) and NVIDIA Quadro K600 GPUs (60 Hz refresh rate). Table IV shows the results for each game. The average network throughput of both the server and the client was around 50 Hz for all games, matching the predefined transmission rate. This rate was sufficient to avoid noticeable latency in the connected games.
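A minimal sketch of how a transmission rate such as those in Table IV could be computed from logged RPC arrival times. It assumes each machine records the arrival time (in seconds) of every received RPC; the function names are hypothetical, and this is not the authors' measurement code.

```python
from statistics import mean, stdev
from typing import List, Sequence


def average_rate_hz(timestamps: Sequence[float]) -> float:
    """Overall message rate (Hz): number of intervals divided by elapsed time."""
    ts = sorted(timestamps)
    if len(ts) < 2 or ts[-1] <= ts[0]:
        raise ValueError("need at least two distinct arrival times")
    return (len(ts) - 1) / (ts[-1] - ts[0])


def windowed_rates_hz(timestamps: Sequence[float], window_s: float = 1.0) -> List[float]:
    """Message count per complete window of `window_s` seconds, expressed in Hz.

    Arrivals after the last complete window are ignored.
    """
    ts = sorted(timestamps)
    if not ts:
        raise ValueError("no arrival times")
    start = ts[0]
    n_windows = int((ts[-1] - start) // window_s)
    counts = [0] * n_windows
    for t in ts:
        k = int((t - start) // window_s)
        if k < n_windows:
            counts[k] += 1
    return [c / window_s for c in counts]


if __name__ == "__main__":
    # Synthetic log: one RPC every 20 ms for 10 s (nominal 50 Hz), not study data.
    arrivals = [i / 50 for i in range(501)]
    per_second = windowed_rates_hz(arrivals)
    print(average_rate_hz(arrivals), mean(per_second), stdev(per_second))
```

For the synthetic log, both functions report 50 Hz. Per-window rates also expose short stalls that an overall average would hide, which is what the SD columns in Table IV summarize.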

TABLE IV.

Test Results of Data Transmission Rate (Hz)

| Games | Server Mean | Server SD | Client Mean | Client SD |
|---|---|---|---|---|
| Pre-test | 50.19 | 0.30 | 50.20 | 0.31 |
| Training 1 | 50.02 | 0.01 | 50.02 | 0.01 |
| Training 2 | 50.05 | 0.41 | 50.06 | 0.41 |
| Training 3 | 50.02 | 0.01 | 50.05 | 0.06 |
| Training 4 | 50.02 | 0.01 | 50.05 | 0.06 |
| Training 5 | 50.29 | 0.63 | 50.30 | 0.62 |
| Training 6 | 49.84 | 0.43 | 50.02 | 0.01 |
| Post-test | 50.20 | 0.68 | 50.21 | 0.68 |

We also measured the accuracy of eye gaze detection in the ROI to verify that the Communication-Enhancement mode functioned as designed. Six volunteers took part in a measurement task in which each of them looked at the ROI 10 times for 10 s each and at the non-ROI area 10 times for 10 s each. Table V shows the gaze detection accuracy for each volunteer. Eye gaze detection had an average accuracy of 91.26% for the ROI and 99.61% for the non-ROI area. Because the detected behavior, “looking at the partner,” was a continuous action, an accuracy above 90% was deemed acceptable for the system.
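The accuracy measure can be sketched as the percentage of gaze samples in each 10 s trial that are classified as the instructed target. This assumes a rectangular ROI in screen coordinates and per-sample (x, y) gaze points from the tracker; the rectangle format and function names are hypothetical. The last lines reproduce the overall averages quoted above from the per-volunteer means in Table V.

```python
from statistics import mean, stdev
from typing import Sequence, Tuple

Point = Tuple[float, float]
Rect = Tuple[float, float, float, float]  # (left, top, right, bottom), assumed screen coordinates


def in_roi(point: Point, roi: Rect) -> bool:
    """True if a gaze point falls inside the rectangular ROI (edges inclusive)."""
    x, y = point
    left, top, right, bottom = roi
    return left <= x <= right and top <= y <= bottom


def trial_accuracy(samples: Sequence[Point], roi: Rect, looking_at_roi: bool) -> float:
    """Percentage of gaze samples in one trial classified as the instructed target."""
    correct = sum(in_roi(p, roi) == looking_at_roi for p in samples)
    return 100.0 * correct / len(samples)


def summarize(accuracies: Sequence[float]) -> Tuple[float, float]:
    """Mean and sample SD of accuracy over a volunteer's trials (e.g., 10 trials)."""
    return mean(accuracies), stdev(accuracies)


# Per-volunteer means transcribed from Table V.
ROI_MEANS = [89.78, 95.46, 87.76, 84.54, 92.42, 97.6]
NON_ROI_MEANS = [99.56, 100, 98.94, 100, 99.82, 99.32]

if __name__ == "__main__":
    print(round(mean(ROI_MEANS), 2), round(mean(NON_ROI_MEANS), 2))  # 91.26 99.61
```

Scoring non-ROI trials as "not in the ROI" is what makes the high non-ROI accuracy meaningful: it measures false detections of "looking at the partner."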

TABLE V.

Accuracy of Eye Gaze Detection (%)

| Volunteers | ROI Mean | ROI SD | Non-ROI Mean | Non-ROI SD |
|---|---|---|---|---|
| 1 | 89.78 | 0.52 | 99.56 | 0.14 |
| 2 | 95.46 | 0.47 | 100 | 0 |
| 3 | 87.76 | 0.43 | 98.94 | 0.29 |
| 4 | 84.54 | 0.69 | 100 | 0 |
| 5 | 92.42 | 0.43 | 99.82 | 0.06 |
| 6 | 97.6 | 0.43 | 99.32 | 0.22 |

B. Participants’ feedback

We collected participants’ feedback on the system with a 10-item questionnaire. Table VI presents these questions together with participants’ answers in the two studies, allowing their experience with the HIH CVE system to be compared with and without the Communication-Enhancement mode.

TABLE VI.

Participants’ Feedback From Two Feasibility Studies

| Question (1–6) | Study 1 ASD (n = 6): Mean (Min/Max) | SD | Study 1 TD (n = 6): Mean (Min/Max) | SD | Study 2 ASD (n = 6): Mean (Min/Max) | SD | Study 2 TD (n = 6): Mean (Min/Max) | SD |
|---|---|---|---|---|---|---|---|---|
| 1. Like the games? | 3.67 (1/5) | 1.25 | 3.33 (1/4) | 1.11 | 4.67 (4/5) | 0.47 | 4.33 (4/5) | 0.47 |
| 2. Did you do well? | 4.00 (2/5) | 1.00 | 3.33 (1/4) | 1.11 | 4.33 (4/5) | 0.47 | 3.83 (2/5) | 0.90 |
| 3. Did partner do well? | 3.50 (2/5) | 1.12 | 3.17 (1/4) | 1.07 | 4.50 (4/5) | 0.50 | 4.67 (4/5) | 0.47 |
| 4. Important to talk? | 4.00 (2/5) | 1.00 | 4.33 (3/5) | 0.75 | 4.83 (4/5) | 0.37 | 4.67 (4/5) | 0.47 |
| 5. Important to cooperate? | 4.83 (4/5) | 0.37 | 4.83 (4/5) | 0.37 | 4.50 (4/5) | 0.50 | 4.83 (4/5) | 0.37 |
| 6. Easy to work together? | 3.50 (1/5) | 1.38 | 2.83 (1/5) | 1.34 | 3.33 (1/5) | 1.37 | 3.67 (3/5) | 0.75 |

| Question (7–10) | Choices | ASD-TD in Study 1: Total (ASD/TD) | ASD-TD in Study 2: Total (ASD/TD) |
|---|---|---|---|
| 7. How often did you talk? | “Very often” | 10 (5/5) | 10 (5/5) |
| | “Only when I needed” | 2 (1/1) | 2 (1/1) |
| | “Very little” | 0 | 0 |
| 8. Which was most useful to learn how to play? | “Talking with my partner” | 6 (3/3) | 9 (4/5) |
| | “Reading game instructions” | 1 (0/1) | 2 (1/1) |
| | “By trying several times” | 5 (3/2) | 1 (1/0) |
| 9. Which was most useful to win? | “Working closely with my partner” | 11 (5/6) | 10 (4/6) |
| | “My personal performance” | 1 (1/0) | 1 (1/0) |
| | “Understanding the game rules” | 0 | 1 (1/0) |
| 10. Did you play better? | “We played better by the end” | 3 (2/1) | 11 (5/6) |
| | “Stayed the same” | 8 (3/5) | 1 (1/0) |
| | “We played worse” | 1 (1/0) | 0 |

The first six questions used a five-point Likert scale. The answers indicated that participants in both studies liked the games (Q1: Mean ≥ 3.33), felt that they themselves (Q2: Mean ≥ 3.33) and their partners (Q3: Mean ≥ 3.17) played well, recognized the importance of communication (Q4: Mean ≥ 4) and cooperation (Q5: Mean ≥ 4.5), and felt it was not hard to work with their partners in the games (Q6: Mean ≥ 2.83).

In addition, participants in Study 2 gave more positive feedback than participants in Study 1 for most of these questions (overall mean across Q1–Q6: 4.35 in Study 2 vs. 3.78 in Study 1). In Q1, participants in Study 2 showed considerably more interest in the games (ASD = 4.67, TD = 4.33) than those in Study 1 (ASD = 3.67, TD = 3.33). In Q2, participants in Study 2 (ASD = 4.33, TD = 3.83) rated their own performance slightly higher than those in Study 1 (ASD = 4, TD = 3.33). In Q3, participants in Study 2 also rated their partners’ performance higher (ASD = 4.50, TD = 4.67) than those in Study 1 (ASD = 3.5, TD = 3.17). Considering Q2 and Q3 together, participants in Study 2 rated their partners higher than themselves (Q3 > Q2), which may reflect the social nicety of appreciating a partner’s work; this pattern was not seen in Study 1 (Q3 < Q2). In Q4, participants in Study 2 rated the importance of communicating with their partners higher (ASD = 4.83, TD = 4.67) than those in Study 1 (ASD = 4, TD = 4.33). In Q5, all participants rated the importance of cooperation as 4 or 5. In Q6, all groups except the TD participants in Study 1 felt it was easy to work together with their partners. From our observations, one challenge in cooperation was responding correctly or quickly to partners’ questions or commands, which was sometimes difficult for some participants, most of whom had ASD. For example, when one participant asked, “Which object do you want to do next?”, the partner answered only “That one,” without specifying the object. The Communication-Enhancement mode increased opportunities for communication and allowed participants to share information in a timely manner and to understand their partners’ ideas and feelings, which we believe contributed to the better game experience in Study 2.
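The overall means quoted above (4.35 vs. 3.78) can be reproduced from Table VI by averaging the twelve cell means for Q1–Q6 in each study. We assume an unweighted average of cell means; because every group has n = 6, this matches the mean of all individual ratings up to the rounding of the table entries.

```python
from statistics import mean

# Per-question means (Q1-Q6) transcribed from Table VI.
TABLE_VI_MEANS = {
    "Study 1": {"ASD": [3.67, 4.00, 3.50, 4.00, 4.83, 3.50],
                "TD":  [3.33, 3.33, 3.17, 4.33, 4.83, 2.83]},
    "Study 2": {"ASD": [4.67, 4.33, 4.50, 4.83, 4.50, 3.33],
                "TD":  [4.33, 3.83, 4.67, 4.67, 4.83, 3.67]},
}


def overall_mean(study: str) -> float:
    """Unweighted mean of the 12 cell means (6 questions x 2 groups) for one study."""
    groups = TABLE_VI_MEANS[study]
    return mean(groups["ASD"] + groups["TD"])


for study in TABLE_VI_MEANS:
    print(study, round(overall_mean(study), 2))
# Study 1 3.78
# Study 2 4.35
```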

The last four questions were multiple-choice questions; the answers were counted and are shown in Table VI in the form “Total number (ASD number/TD number).” Across both studies, most participants reported that they talked “very often” in the games (Q7: 20 of 24), that talking with their partners was the most useful way to learn how to play (Q8: 15 of 24), that working closely with their partners was the most useful way to win (Q9: 21 of 24), and that they played better by the end (Q10: 14 of 24).
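The totals out of 24 can be checked by summing the Study 1 and Study 2 counts in Table VI. The sketch below transcribes those counts as (ASD, TD) pairs; the data layout and function names are our own illustration. It also verifies that every question has 12 respondents per study.

```python
from typing import Dict, List, Tuple

# Counts transcribed from Table VI, Q7-Q10: for each answer choice,
# [(ASD, TD) in Study 1, (ASD, TD) in Study 2].
Counts = List[Tuple[int, int]]
TABLE_VI_CHOICES: Dict[str, Dict[str, Counts]] = {
    "Q7": {"Very often": [(5, 5), (5, 5)],
           "Only when I needed": [(1, 1), (1, 1)],
           "Very little": [(0, 0), (0, 0)]},
    "Q8": {"Talking with my partner": [(3, 3), (4, 5)],
           "Reading game instructions": [(0, 1), (1, 1)],
           "By trying several times": [(3, 2), (1, 0)]},
    "Q9": {"Working closely with my partner": [(5, 6), (4, 6)],
           "My personal performance": [(1, 0), (1, 0)],
           "Understanding the game rules": [(0, 0), (1, 0)]},
    "Q10": {"We played better by the end": [(2, 1), (5, 6)],
            "Stayed the same": [(3, 5), (1, 0)],
            "We played worse": [(1, 0), (0, 0)]},
}


def total_across_studies(question: str, choice: str) -> int:
    """Number of the 24 participants (both studies, both groups) who chose `choice`."""
    return sum(asd + td for asd, td in TABLE_VI_CHOICES[question][choice])


def respondents(question: str, study: int) -> int:
    """Participants answering `question` in study 0 (Study 1) or 1 (Study 2)."""
    return sum(sum(counts[study]) for counts in TABLE_VI_CHOICES[question].values())


print(total_across_studies("Q7", "Very often"),                       # 20
      total_across_studies("Q8", "Talking with my partner"),          # 15
      total_across_studies("Q9", "Working closely with my partner"),  # 21
      total_across_studies("Q10", "We played better by the end"))     # 14
```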

However, participants in the two studies answered several questions differently. In Q8, most participants in Study 2 (9: ASD = 4, TD = 5) thought “talking with my partner” was the best way to learn how to play the games. In Study 1, by contrast, 6 participants (ASD = 3, TD = 3) chose “talking with my partner,” while 5 participants (ASD = 3, TD = 2) chose “by trying several times.” These results suggest that the Communication-Enhancement mode used in Study 2 may have increased information sharing and game-related discussion. In Q10, most participants in Study 2 (11: ASD = 5, TD = 6) felt they played better by the end, whereas only 3 participants in Study 1 (ASD = 2, TD = 1) felt the same and most (8: ASD = 3, TD = 5) felt their play stayed the same. These responses are consistent with the performance results (discussed in detail below): participants in Study 2 increased the number of completed puzzle pieces more (by 1.27 per minute) than those in Study 1 (by 1.03 per minute).

C. Participants’ performance

We conducted statistical analyses of participants’ game performance and communication in the pre- and post-test. Note that the pre- and post-test games (Puzzle Games) differed from the training games in game rules, objectives, and use of collaborative tools, both to avoid habituation effects and to observe whether the training generalized. We compared the performance and communication metrics between the pre- and post-test using the two-tailed Wilcoxon signed-rank test (α = .05) [46] and evaluated effect sizes using Cohen’s d [47]. Because normality could not be assumed for this small sample (N = 6 pairs per study), we used the nonparametric Wilcoxon signed-rank test; we considered it more conservative than the t-test and therefore less likely to overstate an effect. Tables VII and VIII present the results for Study 1 and Study 2, respectively. Only the cooperative efficiency in Study 2 improved significantly between the pre- and post-test (W = 0, p = .0313, |d| = 1.5394). Nevertheless, participants’ performance tended to improve after the training session, in some cases with large effect sizes, as reflected in the increased number of completed puzzle pieces, increased cooperative efficiency, and reduced total play time. Most participants (except the TD participants in Study 1) also spoke more in the post-test.
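With N = 6 pairs, there are only 2^6 = 64 equally likely sign patterns under the null hypothesis, so the smallest attainable two-sided p-value is 2/64 ≈ .0313, the value reported for cooperative efficiency in Study 2. The sketch below computes the exact two-sided p-value by counting sign patterns. It assumes all n differences are nonzero and untied (ties, as in W = 1.5, need a different treatment). For n = 6 it gives 2/64, 4/64, 6/64, and 10/64 for W = 0, 1, 2, and 3, matching the tabulated values .0313, .0625, .0938, and .1563.

```python
from typing import List


def signed_rank_null_counts(n: int) -> List[int]:
    """counts[s] = number of the 2**n sign patterns whose positive-rank sum equals s.

    Under H0 each rank 1..n is equally likely to carry a + or - sign, so this is
    the subset-sum count of {1, ..., n}, computed with a 0/1 knapsack recurrence.
    """
    total = n * (n + 1) // 2
    counts = [1] + [0] * total
    for r in range(1, n + 1):
        for s in range(total, r - 1, -1):  # iterate downward so each rank is used once
            counts[s] += counts[s - r]
    return counts


def exact_wilcoxon_p(w: int, n: int) -> float:
    """Two-sided exact p-value for signed-rank statistic `w` (n nonzero, untied differences)."""
    total = n * (n + 1) // 2
    if not isinstance(w, int) or not 0 <= w <= total:
        raise ValueError("w must be an integer in [0, n(n+1)/2]")
    t = min(w, total - w)  # smaller of the two rank sums
    lower_tail = sum(signed_rank_null_counts(n)[: t + 1])
    return min(1.0, 2 * lower_tail / 2 ** n)


if __name__ == "__main__":
    for w in (0, 1, 2, 3):
        print(w, exact_wilcoxon_p(w, 6))
```

The coarse grid of attainable p-values (steps of 2/64 near the tail) is one reason so few comparisons reach significance with six pairs, even when effect sizes are large.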

TABLE VII.

Participants’ Performance Comparison in Study 1

| Variables | Pre-test Mean | Pre-test SD | Post-test Mean | Post-test SD | Diff. | W | p-value | \|d\| |
|---|---|---|---|---|---|---|---|---|
| Completed pieces (/minute) | 1.12 | 0.78 | 2.15 | 1.34 | 1.03 | 1.5 | .0938 | 0.9390 |
| Cooperative efficiency (%) | 53.38 | 16.76 | 60.51 | 17.88 | 7.13 | 3 | .1563 | 0.4110 |
| Total play time (s) | 288 | 25 | 224 | 65.11 | −64 | 10 | .1250 | 1.2890 |
| Word count of participants with ASD (/minute) | 34.21 | 30.77 | 36.53 | 26.64 | 2.32 | 8 | .6875 | 0.0804 |
| Word count of TD participants (/minute) | 38.44 | 13.75 | 35.30 | 15.54 | −3.14 | 15 | .4375 | 0.2142 |
| Back-and-forth sentences (/minute) | 3.71 | 2.00 | 2.82 | 1.76 | −0.89 | 13 | .1875 | 0.4671 |
| Aggregate score | 1.12 | 0.50 | 1.52 | 0.88 | 0.40 | 3 | .1563 | 0.5649 |

TABLE VIII.

Participants’ Performance Comparison in Study 2

| Variables | Pre-test Mean | Pre-test SD | Post-test Mean | Post-test SD | Diff. | W | p-value | \|d\| |
|---|---|---|---|---|---|---|---|---|
| Completed pieces (/minute) | 1.18 | 0.86 | 2.45 | 1.48 | 1.27 | 1 | .0625 | 1.05 |
| Cooperative efficiency (%) | 36.98 | 22.92 | 68.61 | 17.87 | 31.63 | 0 | .0313* | 1.5394 |
| Total play time (s) | 289 | 21.25 | 242 | 85.11 | −47 | 9 | .2500 | 0.7604 |
| Word count of participants with ASD (/minute) | 28.65 | 19.59 | 33.66 | 17.24 | 5.01 | 7 | .5625 | 0.2714 |
| Word count of TD participants (/minute) | 35.91 | 36.87 | 52.62 | 26.23 | 16.71 | 3 | .1563 | 0.5224 |
| Back-and-forth sentences (/minute) | 3.73 | 3.54 | 6.00 | 2.88 | 2.27 | 3 | .1563 | 0.7033 |
| Aggregate score | 1.12 | 0.70 | 2.02 | 1.08 | 0.90 | 2 | .0938 | 0.9857 |

*p < .05

Comparing cooperation across the two studies, we found that participants in Study 2 achieved greater improvements. First, participants in Study 2 increased the number of completed puzzle pieces by 1.27 per minute (W = 1, p = .0625, |d| = 1.05, large effect size), whereas the increase in Study 1 was 1.03 per minute (W = 1.5, p = .0938, |d| = 0.9390, large effect size). In addition, cooperative efficiency in Study 2 improved significantly, with a very large effect size (W = 0, p = .0313, |d| = 1.5394). Participants in Study 2 cooperated more efficiently by the end, with an increase of 31.63 percentage points, compared with an increase of only 7.13 percentage points in Study 1 (W = 3, p = .1563, |d| = 0.4110, small effect size). These results suggest that participants in Study 2, who used the system with the Communication-Enhancement mode, cooperated and played better after the training session than participants in Study 1.
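The text does not state which variant of Cohen's d was used. As an assumption, the sketch below uses the standardized mean change with an average-variance denominator, (post − pre) / sqrt((SD_pre² + SD_post²) / 2); applied to the summary statistics in Tables VII and VIII it reproduces the reported values to within rounding (e.g., about 1.539 vs. 1.5394 for cooperative efficiency in Study 2). The labels follow Cohen's conventional benchmarks of 0.2, 0.5, and 0.8; values far above 0.8 are sometimes called "very large," as in the text.

```python
from math import sqrt


def cohens_d_from_summary(mean_pre: float, sd_pre: float,
                          mean_post: float, sd_post: float) -> float:
    """Standardized mean change: (post - pre) / sqrt((sd_pre**2 + sd_post**2) / 2).

    One common variant for pre/post designs; other variants divide by the SD
    of the paired differences and give different values.
    """
    pooled = sqrt((sd_pre ** 2 + sd_post ** 2) / 2)
    return (mean_post - mean_pre) / pooled


def effect_label(d: float) -> str:
    """Cohen's conventional benchmarks for |d|: 0.2 small, 0.5 medium, 0.8 large."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "negligible"


# Cooperative efficiency in Study 2 (Table VIII); reported |d| = 1.5394.
d = cohens_d_from_summary(36.98, 22.92, 68.61, 17.87)
print(round(d, 3), effect_label(d))
```

Because this d ignores the pairing, it does not depend on how strongly pre- and post-test scores are correlated; that makes it comparable across the two studies but different from a paired-difference effect size.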

Total play time partially reflects how difficult the games were for the participants. However, participants were given ample time (at most 300 seconds) to complete the games, so they were not under time pressure; sometimes they talked for a while before manipulating the puzzle pieces. Participants in both studies spent less time in the post-test (Study 1: W = 10, p = .1250, |d| = 1.2890, large effect size; Study 2: W = 9, p = .2500, |d| = 0.7604, medium effect size).

Comparing communication across the two studies, we found that participants in Study 2 communicated more frequently than those in Study 1 in terms of back-and-forth sentences (full sentences and phrases). We counted the number of back-and-forth exchanges to assess how often the partners communicated in the games. Back-and-forth exchanges between paired participants in Study 2 increased by 2.27 per minute (W = 3, p = .1563, |d| = 0.7033, medium effect size), whereas in Study 1 they decreased by 0.89 per minute (W = 13, p = .1875, |d| = 0.4671, small effect size). We also counted the words each participant spoke in the games. Although participants with ASD in Study 2 spoke fewer words than those in Study 1 (Study 2: pre 28.65, post 33.66 words/minute; Study 1: pre 34.21, post 36.53 words/minute), their word count increased more (by 5.01 vs. 2.32 words/minute). Notably, the word count of TD participants decreased by 3.14 per minute in Study 1 but increased substantially, by 16.71 per minute, in Study 2. These results suggest that participants in Study 2, who used the system with the Communication-Enhancement mode, were more likely to communicate with their partners by the end.

To further understand the performance results, we also calculated an aggregate score that integrated four performance measures: completed pieces, cooperative efficiency, total play time, and back-and-forth sentences. Each measure was first normalized using min-max scaling, and the normalized values were summed to produce the aggregate score (range: [0, 4]). In both studies, participants achieved the same mean aggregate score (1.12) in the pre-test. However, participants in Study 2 reached a higher post-test score (2.02) than those in Study 1 (1.52), and their pre-to-post improvement had a large effect size (W = 2, p = .0938, |d| = 0.9857). This may indicate that participants in Study 2 improved more with respect to cooperation and communication.
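A minimal sketch of the aggregate score described above. Two details are not specified in the text and are assumptions here: which observations are pooled for min-max scaling (all values passed in are pooled together), and how total play time is oriented (we flip it so that shorter play time scores higher). The example values are hypothetical, not study data.

```python
from typing import Dict, List, Sequence


def min_max(values: Sequence[float]) -> List[float]:
    """Rescale values linearly to [0, 1]; a constant measure maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def aggregate_scores(measures: Dict[str, Sequence[float]],
                     lower_is_better: Sequence[str] = ()) -> List[float]:
    """Sum of min-max normalized measures per observation, in [0, len(measures)].

    measures: name -> values, one value per observation (e.g., per pair and test),
    with all lists in the same order. Measures named in `lower_is_better` are
    flipped (1 - x) so that a higher aggregate score always means better performance.
    """
    lengths = {len(v) for v in measures.values()}
    if len(lengths) != 1:
        raise ValueError("all measures need the same, nonzero number of observations")
    totals = [0.0] * lengths.pop()
    for name, values in measures.items():
        scaled = min_max(values)
        if name in lower_is_better:
            scaled = [1.0 - s for s in scaled]
        for i, s in enumerate(scaled):
            totals[i] += s
    return totals


# Hypothetical values for three observations (not study data).
example = {
    "completed_pieces": [1.0, 2.0, 3.0],
    "cooperative_efficiency": [40.0, 55.0, 70.0],
    "total_play_time": [300.0, 250.0, 200.0],
    "back_and_forth": [2.0, 4.0, 6.0],
}
print(aggregate_scores(example, lower_is_better=["total_play_time"]))  # [0.0, 2.0, 4.0]
```

With four measures, each contributing at most 1, the score spans [0, 4], matching the stated range.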

D. Preliminary Analysis of Conversations

We analyzed participants’ conversations offline to examine whether their conversations changed between the pre- and post-test. We divided conversation content into two categories: game-oriented conversation and social conversation.

Game-oriented content included sharing of game information and directives for manipulating virtual objects, both of which were necessary to accomplish the game objectives. Game information was shared in two ways: (1) spontaneous information sharing and (2) question-and-answer. Because participants could not directly observe their partners’ operations, they generally told their partners of their intentions and actions proactively, for example, “I have my hand on the handle,” “Wait, I’m trying to grab it,” “I think we need to take the piece out,” “This time is an apple,” and “You are supposed to grab the green thing.” Participants also requested information from their partners, for example, “take this one?”, “Which piece would you want to go first?”, “Where should it go?”, and “Can you describe to me what you see? Because I can’t see your circle.” Manipulation directives such as “go down,” “one more up,” “go and grab it,” and “put this right here” occurred frequently in their conversations, reflecting the information-sharing requirements embedded in the game rules. We found that one of the paired participants sometimes took the role of a “leader” who issued the manipulation directives.

Social content included sharing of social information and evaluations of partners. Some participants, including those with ASD, introduced themselves to their partners during the game, and some discussed games they played in their daily lives. Participants also encouraged their partners or praised their good performance. For example, upon completing a game they often said “We did it,” “Perfect,” “Good job,” or “We are doing good,” and their partners always gave similar responses. At the beginning of a game, they would say “I bet we can get this time,” “We will do a good job,” or “We can win this time if we work together as a team.” During the game, they would say “that’s good,” “We get it so right,” or “Let’s do this, bro. You are my man.” Sometimes they even joked about clumsy actions, for example, “you are not very good at this” and “You talk about like you making pizza.”

Through the conversation analysis, we found that although some participants reacted slowly or described things less accurately, they could be encouraged and prompted to improve their behaviors or to share information with their partners. These collaborative games therefore appear to have the potential to provide a space for spontaneous communication that encourages conversation in a natural way.

V. Conclusion and Future Work

This paper presents the development and evaluation of the Hand-in-Hand (HIH) Communication-Enhancement CVE system, which provides a naturalistic social interaction platform for children with ASD and their peers, increases opportunities for communication and cooperation within collaborative games, and collects quantitative data on participants’ collaborative and communicative performance. The feasibility study tested the acceptability of the system among children with ASD and provided a preliminary assessment of the system. Results showed that participants enjoyed the collaborative games and cooperated progressively better in them. Participants also emphasized the importance of communicating and cooperating with their partners in order to win. The Communication-Enhancement mode appeared to facilitate spontaneous conversations between participants, and the performance analysis suggested that participants communicated more frequently with their partners by the end. In addition, we found that participants could be positively influenced by their partners while playing. For instance, when one participant with ASD could not correctly answer his/her partner’s question (“Where will the present go?”), the partner asked a new question (“The left area, middle area, or the right area?”); the child with ASD then understood how to answer and replied, “The right one.” Such spontaneous conversations could help children with ASD practice verbal behaviors in a natural and visual way. We believe that the results of this feasibility study warrant a fully powered intervention study to rigorously assess the intervention effects of this novel system.

In the future, the HIH Communication-Enhancement CVE system will be further improved to provide a more naturalistic collaborative gameplay platform. We are currently designing a CVE with haptic interfaces that can provide physical feedback to the user, and we expect such a haptic CVE system to increase the sense of cooperation between partners. Additionally, user studies with more participants are needed to assess the practical value of the system for children with ASD. To explore the system’s influence on participants’ communication ability, we plan to continue analyzing participants’ conversations in terms of game-oriented and social content and to perform a statistical analysis of changes in conversation content.

Acknowledgments

We are grateful for the support provided by NIH grants 1R01MH091102-01A1 and 1R21MH111548-01 for this research. The authors are solely responsible for the contents and opinions expressed in this manuscript.

Biographies

Huan Zhao received the B.S. degree in automation from Xi’an Jiaotong University, Xi’an, China, in 2012, and the M.S. degree in electrical engineering from Vanderbilt University, Nashville, TN, USA, in 2016. From 2012 to 2014, she did research at the Institute of Artificial Intelligence and Robotics, Xi’an Jiaotong University. She is currently pursuing the Ph.D. degree in electrical engineering at Vanderbilt University, Nashville, TN, USA.

Her research interests lie in the design and development of systems for special needs using the technologies of virtual reality, human-machine interaction and robotics.

Amy R. Swanson received the M.A. degree in social science from the University of Chicago, Chicago, IL, USA, in 2006.

Currently she is a Clinical/Translational Research Coordinator at Vanderbilt Kennedy Center’s Treatment and Research Institute for Autism Spectrum Disorders, Nashville, TN, USA.

Amy S. Weitlauf received her Ph.D. in Psychology from Vanderbilt University, Nashville, TN, USA, in 2011.

She is a licensed clinical psychologist with the Vanderbilt Kennedy Center’s Treatment and Research Institute for Autism Spectrum Disorders and is an Assistant Professor of Pediatrics at Vanderbilt University Medical Center.

Zachary E. Warren received the Ph.D. degree from the University of Miami, Miami, FL, USA, in 2005.

Currently he is an Associate Professor of Pediatrics and Psychiatry at Vanderbilt University. He is the Director of the Treatment and Research Institute for Autism Spectrum Disorders at the Vanderbilt Kennedy Center.

Nilanjan Sarkar (S’92–M’93–SM’04) received the Ph.D. degree in mechanical engineering and applied mechanics from the University of Pennsylvania, Philadelphia, PA, USA, in 1993.

In 2000, Dr. Sarkar joined Vanderbilt University, Nashville, TN, where he is currently a Professor of mechanical engineering and electrical engineering and computer science. His current research interests include human–robot interaction, affective computing, dynamics, and control. Dr. Sarkar is a Fellow of the ASME.

Contributor Information

Huan Zhao, Electrical Engineering and Computer Science Department, Vanderbilt University, Nashville, TN 37212 USA (huan.zhao@vanderbilt.edu).

Amy R. Swanson, Treatment and Research Institute for Autism Spectrum Disorders, Vanderbilt Kennedy Center, Nashville, TN 37203 USA (amy.r.swanson@vanderbilt.edu)

Amy S. Weitlauf, Treatment and Research Institute for Autism Spectrum Disorders, Vanderbilt Kennedy Center, Nashville, TN 37203 USA (amy.s.weitlauf@vanderbilt.edu)

Zachary E. Warren, Treatment and Research Institute for Autism Spectrum Disorders, Vanderbilt Kennedy Center, Nashville, TN 37203 USA (zachary.e.warren@vanderbilt.edu)

Nilanjan Sarkar, Mechanical Engineering Department, Vanderbilt University, Nashville, TN 37212 USA (nilanjan.sarkar@vanderbilt.edu).

References

- 1.Baio J. Prevalence of Autism Spectrum Disorders: Autism and Developmental Disabilities Monitoring Network, 14 Sites, United States, 2008. Morbidity and Mortality Weekly Report. Surveillance Summaries. Volume 61, Number 3. Centers for Disease Control and Prevention. 2012 [PubMed] [Google Scholar]

- 2.Kim YS, Leventhal BL, Koh Y-J, Fombonne E, Laska E, Lim E-C, Cheon K-A, Kim S-J, Kim Y-K, Lee H. Prevalence of autism spectrum disorders in a total population sample. American Journal of Psychiatry. 2011;168:904–912. doi: 10.1176/appi.ajp.2011.10101532. [DOI] [PubMed] [Google Scholar]

- 3.Johnson CP, Myers SM. Identification and evaluation of children with autism spectrum disorders. Pediatrics. 2007;120:1183–1215. doi: 10.1542/peds.2007-2361. [DOI] [PubMed] [Google Scholar]

- 4.Home C. Prevalence of Autism Spectrum Disorder Among Children Aged 8 Years—Autism Developmental Disabilities Monitoring Network, 11 Sites. United States, 2010. 2014 [PubMed] [Google Scholar]

- 5.Maglione MA, Gans D, Das L, Timbie J, Kasari C. Nonmedical interventions for children with ASD: Recommended guidelines and further research needs. Pediatrics. 2012;130:S169–s178. doi: 10.1542/peds.2012-0900O. [DOI] [PubMed] [Google Scholar]

- 6.Blumberg SJ, Bramlett MD, Kogan MD, Schieve LA, Jones JR, Lu MC. Changes in prevalence of parent-reported autism spectrum disorder in school-aged US children: 2007 to 2011–2012. National health statistics reports. 2013;65:1–7. [PubMed] [Google Scholar]

- 7.Stichter JP, O’Connor KV, Herzog MJ, Lierheimer K, McGhee SD. Social competence intervention for elementary students with Aspergers syndrome and high functioning autism. Journal of autism and developmental disorders. 2012;42:354–366. doi: 10.1007/s10803-011-1249-2. [DOI] [PubMed] [Google Scholar]

- 8.Carter EW, Common EA, Sreckovic MA, Huber HB, Bottema-Beutel K, Gustafson JR, Dykstra J, Hume K. Promoting social competence and peer relationships for adolescents with autism spectrum disorders. Remedial and Special Education. 2013 0741932513514618. [Google Scholar]

- 9.Locke J, Ishijima EH, Kasari C, London N. Loneliness, friendship quality and the social networks of adolescents with high-functioning autism in an inclusive school setting. Journal of Research in Special Educational Needs. 2010;10:74–81. [Google Scholar]

- 10.Bauminger N, Kasari C. Loneliness and friendship in high-functioning children with autism. Child development. 2000:447–456. doi: 10.1111/1467-8624.00156. [DOI] [PubMed] [Google Scholar]

- 11.Myers SM, Johnson CP. Management of children with autism spectrum disorders. Pediatrics. 2007;120:1162–1182. doi: 10.1542/peds.2007-2362. [DOI] [PubMed] [Google Scholar]

- 12.Francis K. Autism interventions: a critical update. Developmental Medicine & Child Neurology. 2005;47:493–499. doi: 10.1017/s0012162205000952. [DOI] [PubMed] [Google Scholar]

- 13.Warren Z, McPheeters ML, Sathe N, Foss-Feig JH, Glasser A, Veenstra-VanderWeele J. A systematic review of early intensive intervention for autism spectrum disorders. Pediatrics. 2011;127:e1303–e1311. doi: 10.1542/peds.2011-0426. [DOI] [PubMed] [Google Scholar]

- 14.Crooke PJ, Hendrix RE, Rachman JY. Brief report: Measuring the effectiveness of teaching social thinking to children with Asperger syndrome (AS) and high functioning autism (HFA) Journal of autism and developmental disorders. 2008;38:581–591. doi: 10.1007/s10803-007-0466-1. [DOI] [PubMed] [Google Scholar]

- 15.Lopata C, Thomeer ML, Volker MA, Nida RE, Lee GK. Effectiveness of a manualized summer social treatment program for high-functioning children with autism spectrum disorders. Journal of autism and developmental disorders. 2008;38:890–904. doi: 10.1007/s10803-007-0460-7. [DOI] [PubMed] [Google Scholar]

- 16.McPheeters ML, Warren Z, Sathe N, Bruzek JL, Krishnaswami S, Jerome RN, Veenstra-VanderWeele J. A systematic review of medical treatments for children with autism spectrum disorders. Pediatrics. 2011;127:e1312–e1321. doi: 10.1542/peds.2011-0427. [DOI] [PubMed] [Google Scholar]

- 17.Ploog BO, Scharf A, Nelson D, Brooks PJ. Use of computer-assisted technologies (CAT) to enhance social, communicative, and language development in children with autism spectrum disorders. Journal of Autism and Developmental Disorders. 2013;43:301–322. doi: 10.1007/s10803-012-1571-3.

- 18.Wainer AL, Ingersoll BR. The use of innovative computer technology for teaching social communication to individuals with autism spectrum disorders. Research in Autism Spectrum Disorders. 2011;5:96–107.

- 19.Williams C, Wright B, Callaghan G, Coughlan B. Do children with autism learn to read more readily by computer assisted instruction or traditional book methods? A pilot study. Autism. 2002;6:71–91. doi: 10.1177/1362361302006001006.

- 20.Shane HC, Albert PD. Electronic screen media for persons with autism spectrum disorders: Results of a survey. Journal of Autism and Developmental Disorders. 2008;38:1499–1508. doi: 10.1007/s10803-007-0527-5.

- 21.Pennington RC. Computer-assisted instruction for teaching academic skills to students with autism spectrum disorders: A review of literature. Focus on Autism and Other Developmental Disabilities. 2010;25:239–248.

- 22.Parsons S, Cobb S. State-of-the-art of virtual reality technologies for children on the autism spectrum. European Journal of Special Needs Education. 2011;26:355–366.

- 23.Strickland D. Virtual reality for the treatment of autism. Studies in Health Technology and Informatics. 1997:81–86.

- 24.Wade J, Bian D, Zhang L, Swanson A, Sarkar M, Warren Z, Sarkar N. Design of a Virtual Reality Driving Environment to Assess Performance of Teenagers with ASD. In: Universal Access in Human-Computer Interaction. Universal Access to Information and Knowledge. Springer; 2014. pp. 466–474.

- 25.Bernardini S, Porayska-Pomsta K, Smith TJ. ECHOES: An intelligent serious game for fostering social communication in children with autism. Information Sciences. 2014;264:41–60.

- 26.Ke F, Im T. Virtual-reality-based social interaction training for children with high-functioning autism. The Journal of Educational Research. 2013;106:441–461.

- 27.Smith MJ, Ginger EJ, Wright K, Wright MA, Taylor JL, Humm LB, Olsen DE, Bell MD, Fleming MF. Virtual reality job interview training in adults with autism spectrum disorder. Journal of Autism and Developmental Disorders. 2014;44:2450–2463. doi: 10.1007/s10803-014-2113-y.

- 28.Kandalaft MR, Didehbani N, Krawczyk DC, Allen TT, Chapman SB. Virtual reality social cognition training for young adults with high-functioning autism. Journal of Autism and Developmental Disorders. 2013;43:34–44. doi: 10.1007/s10803-012-1544-6.

- 29.Mevarech ZR, Silber O, Fine D. Learning with computers in small groups: Cognitive and affective outcomes. Journal of Educational Computing Research. 1991;7:233–243.

- 30.Churchill EF, Snowdon D. Collaborative virtual environments: an introductory review of issues and systems. Virtual Reality. 1998;3:3–15.

- 31.Schmidt M, Beck D. Computational Thinking and Social Skills in Virtuoso: An Immersive, Digital Game-Based Learning Environment for Youth with Autism Spectrum Disorder. In: International Conference on Immersive Learning; 2016. pp. 113–121.

- 32.Weiss PL, Gal E, Zancanaro M, Giusti L, Cobb S, Millen L, Hawkins T, Glover T, Sanassy D, Eden S. Usability of technology supported social competence training for children on the autism spectrum. In: 2011 International Conference on Virtual Rehabilitation (ICVR); 2011. pp. 1–8.

- 33.Cheng Y, Ye J. Exploring the social competence of students with autism spectrum conditions in a collaborative virtual learning environment – The pilot study. Computers & Education. 2010;54:1068–1077.

- 34.Cheng Y, Chiang H-C, Ye J, Cheng L-h. Enhancing empathy instruction using a collaborative virtual learning environment for children with autistic spectrum conditions. Computers & Education. 2010;55:1449–1458.

- 35.Stichter JP, Laffey J, Galyen K, Herzog M. iSocial: Delivering the social competence intervention for adolescents (SCI-A) in a 3D virtual learning environment for youth with high functioning autism. Journal of Autism and Developmental Disorders. 2014;44:417–430. doi: 10.1007/s10803-013-1881-0.

- 36.Millen L, Cobb S, Patel H, Glover T. Collaborative virtual environment for conducting design sessions with students with autism spectrum conditions. In: Proc. 9th Intl Conf. on Disability, Virtual Reality and Assoc. Technologies; 2012. pp. 269–278.

- 37.Hourcade JP, Bullock-Rest NE, Hansen TE. Multitouch tablet applications and activities to enhance the social skills of children with autism spectrum disorders. Personal and Ubiquitous Computing. 2012;16:157–168.

- 38.Battocchi A, Pianesi F, Tomasini D, Zancanaro M, Esposito G, Venuti P, Ben Sasson A, Gal E, Weiss PL. Collaborative Puzzle Game: a tabletop interactive game for fostering collaboration in children with Autism Spectrum Disorders (ASD). In: Proceedings of the ACM International Conference on Interactive Tabletops and Surfaces; 2009. pp. 197–204.

- 39.Zhao H, Swanson A, Weitlauf A, Warren Z, Sarkar N. A Novel Collaborative Virtual Reality Game for Children with ASD to Foster Social Interaction. In: International Conference on Universal Access in Human-Computer Interaction; 2016. pp. 276–288.

- 40.Zhang L, Gabriel-King M, Armento Z, Baer M, Fu Q, Zhao H, Swanson A, Sarkar M, Warren Z, Sarkar N. Design of a Mobile Collaborative Virtual Environment for Autism Intervention. In: International Conference on Universal Access in Human-Computer Interaction; 2016. pp. 265–275.

- 41.Ming X, Brimacombe M, Wagner GC. Prevalence of motor impairment in autism spectrum disorders. Brain and Development. 2007;29:565–570. doi: 10.1016/j.braindev.2007.03.002.

- 42.Provost B, Lopez BR, Heimerl S. A comparison of motor delays in young children: autism spectrum disorder, developmental delay, and developmental concerns. Journal of Autism and Developmental Disorders. 2007;37:321–328. doi: 10.1007/s10803-006-0170-6.

- 43.Guna J, Jakus G, Pogacnik M, Tomazic S, Sodnik J. An analysis of the precision and reliability of the leap motion sensor and its suitability for static and dynamic tracking. Sensors. 2014;14:3702–3720. doi: 10.3390/s140203702.

- 44.Constantino JN, Gruber CP. The Social Responsiveness Scale. Los Angeles: Western Psychological Services; 2002.

- 45.Rutter M, Bailey A, Lord C. The Social Communication Questionnaire: Manual. Western Psychological Services; 2003.

- 46.Woolson R. Wilcoxon Signed-Rank Test. Wiley Encyclopedia of Clinical Trials; 2008.

- 47.Cohen J. Statistical power analysis for the behavioral sciences (revised ed.). New York: Academic Press; 1977.