Abstract

Purpose

The primary purpose of this study was to assess speech understanding in quiet and in diffuse noise for adult cochlear implant (CI) recipients utilizing bimodal hearing or bilateral CIs. Our primary hypothesis was that bilateral CI recipients would demonstrate less effect of source azimuth in the bilateral CI condition due to symmetric interaural head shadow.

Method

Sentence recognition was assessed for adult bilateral (n = 25) CI users and bimodal listeners (n = 12) in three conditions: (1) source location certainty regarding fixed target azimuth, (2) source location uncertainty regarding roving target azimuth, and (3) Condition 2 repeated, allowing listeners to turn their heads, as needed.

Results

(a) Bilateral CI users exhibited relatively similar performance regardless of source azimuth in the bilateral CI condition; (b) bimodal listeners exhibited higher performance for speech directed to the better hearing ear even in the bimodal condition; (c) the unilateral, better ear condition yielded higher performance for speech presented to the better ear versus speech to the front or to the poorer ear; (d) source location certainty did not affect speech understanding performance; and (e) head turns did not improve performance. The results confirmed our hypothesis that bilateral CI users exhibited less effect of source azimuth than bimodal listeners. That is, they exhibited similar performance for speech recognition irrespective of source azimuth, whereas bimodal listeners exhibited significantly poorer performance with speech originating from the poorer hearing ear (typically the nonimplanted ear).

Conclusions

Bilateral CI users overcame ear and source location effects observed for the bimodal listeners. Bilateral CI users have access to head shadow on both sides, whereas bimodal listeners generally have interaural asymmetry in both speech understanding and audible bandwidth, which limits the head shadow benefit obtained from the poorer ear (generally the nonimplanted ear). In summary, we found that, in conditions with source location uncertainty and increased ecological validity, bilateral CI performance was superior to bimodal listening.

Without data-driven, patient-specific guidelines for determining bilateral cochlear implant (CI) candidacy, clinicians will continue to struggle to make clinical recommendations regarding retention of residual acoustic hearing in the nonimplanted ear versus pursuit of a second CI. This is one of the most common questions posed to clinicians working in CI programs, as even the best performing CI users experience considerable difficulty with speech understanding in everyday listening environments that include diffuse noise and/or reverberation. In fact, our highest-performing patients generally struggle with speech understanding at signal-to-noise ratios (SNRs) for which individuals with normal hearing would achieve ceiling-level performance, such as +5 dB SNR (e.g., Buss, Calandruccio, & Hall, 2015; Summers, Makashay, Theodoroff, & Leek, 2013; Vermeire et al., 2016).

There are several options for CI listeners that may help improve speech understanding in noise. Some of these options involve signal preprocessing strategies designed to enhance speech and/or reduce noise in adverse listening environments (Brockmeyer & Potts, 2011; Gifford, Olund, & DeJong, 2011; Potts & Kolb, 2014; Rakszawski, Wright, Cadieux, Davidson, & Brenner, 2016; Wolfe, Schafer, John, & Hudson, 2011); remote microphones, including wireless and DM or FM technology (Assmann & Summerfield, 2004; De Ceulaer et al., 2016; Dorman et al., 2015; Fitzpatrick, Seguin, Schramm, Armstrong, & Chenier, 2009; Schafer, Romine, Musgrave, Momin, & Huynh, 2013); and induction loops and an integrated telecoil (Julstrom & Kozma-Spytek, 2014; Schafer et al., 2013).

Hearing With Two Ears: Bimodal Hearing Versus Bilateral CI

Another option for improving hearing relates to the clinically recommended intervention: specifically, whether the clinician recommends bimodal hearing (CI plus contralateral hearing aid [HA]) or pursuit of a second CI. Hearing with two ears, either via bilateral CIs or a CI paired with a contralateral HA, provides distinct advantages in various listening conditions. For listeners using a bimodal hearing configuration (CI plus contralateral HA), significant bimodal benefit over CI alone (or HA alone) is noted both for speech in quiet and in noise (e.g., Dunn, Tyler, & Witt, 2005; Gifford et al., 2014; R. J. M. van Hoesel, 2012; Zhang, Dorman, & Spahr, 2010). Bimodal localization abilities, however, are not well defined, as reports conflict: some studies show significant bimodal benefit (e.g., Choi et al., 2017; Potts & Litovsky, 2014), whereas others demonstrate little to no benefit over unilateral hearing alone (e.g., Dorman, Loiselle, Cook, Yost, & Gifford, 2016; Potts & Litovsky, 2014). The primary theories underlying bimodal benefit for speech understanding are segregation, glimpsing, and head shadow. Segregation implicates the use of low-frequency acoustic hearing, primarily fundamental frequency (F0) periodicity (i.e., voice pitch), which affords the listener a comparison of electric and acoustic stimuli to separate the target signal from the background noise (e.g., Chang, Bai, & Zeng, 2006; Kong, Stickney, & Zeng, 2005; Qin & Oxenham, 2006). Glimpsing assumes that different yet complementary electric and acoustic stimulation across ears (likely implicating the availability of temporal fine structure in the acoustic hearing ear) allows the target to be “glimpsed” during spectral and/or temporal troughs in the background noise (e.g., Brown & Bacon, 2009; Kong & Carlyon, 2007; Li & Loizou, 2008).

Head shadow, or better ear listening, is generally a high-frequency, level-based cue produced by a frequency-dependent difference in the SNR across ears. Head shadow does not require two functional ears, as individuals with unilateral hearing can benefit, provided that the noise originates from the side of the poorer ear. Because head shadow is primarily a level-dependent cue, which has greater magnitude for high-frequency stimuli, many bimodal listeners with sloping high-frequency loss in the acoustic hearing ear will not be able to obtain equivalent head shadow benefit for each ear. In fact, for frequencies below 1000 Hz (the spectral range to which most bimodal listeners will have access in the HA ear), the maximum possible interaural level difference (ILD) is approximately 5 dB (Kuhn, 1977; Macaulay, Hartmann, & Rakerd, 2010; Yost, 2000). Several studies have documented asymmetry in head shadow for monaural listening and for bimodal listening in cases where the audible bandwidth and speech understanding are considerably different across ears (Ching, Incerti, & Hill, 2004; Dunn et al., 2005; Gifford, Dorman, Sheffield, Teece, & Olund, 2014; Morera et al., 2005; Potts, Skinner, Litovsky, Strube, & Kuk, 2009; Pyschny et al., 2014). The implication is that the poorer hearing ear, typically the HA ear, will not derive as much benefit from head shadow as the better hearing CI ear.
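To make the asymmetry argument concrete, the sketch below treats the head shadow benefit available to an ear as the largest ILD within the frequency bands that ear can use. This is a deliberately crude model, not the authors' analysis: the per-band ILD values are illustrative placeholders (chosen only to respect the roughly 5-dB ceiling below 1000 Hz cited above), not measured head-related data, and the function and constant names are hypothetical.

```python
# Illustrative per-band ILDs (dB) for a lateral source. These are placeholder
# values, not measurements; bands below 1000 Hz stay under the ~5-dB ceiling.
ILD_DB_BY_BAND_HZ = {250: 2.0, 500: 4.0, 2000: 10.0, 4000: 15.0}


def usable_head_shadow_db(audible_bands_hz, ild_by_band=ILD_DB_BY_BAND_HZ):
    """Largest ILD (dB) an ear can exploit, given the bands audible to it.

    Crude by design: it ignores band importance for speech and simply takes
    the maximum ILD among the bands the ear can hear.
    """
    usable = [ild_by_band[f] for f in audible_bands_hz if f in ild_by_band]
    return max(usable, default=0.0)


# Hypothetical bimodal listener: the CI ear conveys the full range, while the
# HA ear (sloping high-frequency loss) only has audibility up to 500 Hz.
ci_ear = usable_head_shadow_db([250, 500, 2000, 4000])
ha_ear = usable_head_shadow_db([250, 500])
print(f"CI ear: {ci_ear} dB, HA ear: {ha_ear} dB")  # CI ear: 15.0 dB, HA ear: 4.0 dB
```

Even this simplified model reproduces the qualitative point: an ear restricted to low frequencies can only exploit the small low-frequency ILDs.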

For listeners with bilateral CIs, significant bilateral benefit over either CI alone is noted both for speech in quiet and in noise (e.g., Buss et al., 2008; Gifford et al., 2014; Litovsky, Parkinson, Arcaroli, & Sammeth, 2006; R. J. M. van Hoesel, 2012). For speech in quiet or in colocated noise, research has typically demonstrated equivalent or even less benefit for bilateral CI as compared with bimodal hearing, given the complementary yet different information provided in a bimodal hearing configuration (e.g., R. J. M. van Hoesel, 2012). Bilateral CI localization, however, is significantly better than either unilateral CI alone (e.g., Dorman, Loiselle et al., 2016; Grantham et al., 2007; Potts & Litovsky, 2014). The primary underlying mechanism driving bilateral CI benefit for speech understanding and localization is availability of ILD cues (Dorman et al., 2013, 2014; Grantham, Ashmead, Ricketts, Haynes, & Labadie, 2008; Laback, Pok, Baumgartner, Deutsch, & Schmid, 2004; Loiselle, Dorman, Yost, Cook, & Gifford, 2016; Seeber & Fastl, 2008; R. J. M. van Hoesel & Tyler, 2003). Given the presence of ILD cues for bilateral CI users, head shadow is not only present but is also generally more symmetrical across ears (e.g., Buss et al., 2008; Gifford et al., 2014; Litovsky et al., 2006; Pyschny et al., 2014).

Bilateral ≠ Binaural

There is little-to-no evidence of true binaural hearing for bimodal listeners. Though bimodal listeners have access to fine timing cues via periodicity and temporal fine structure in the acoustic hearing ear, this information is not well transmitted by the CI (e.g., Francart, Lenssen, & Wouters, 2011; Francart, Van den Bogaert, Moonen, & Wouters, 2009) and is thus not available across ears. Similarly, bilateral CI recipients demonstrate little-to-no evidence of true binaural hearing. The reason is that bilateral CI recipients have limited access to fine structure interaural time differences (ITDs) outside of controlled laboratory conditions (e.g., Francart et al., 2009; Grantham et al., 2008; Laback et al., 2004; Majdak, Laback, & Baumgartner, 2006) due to the lack of temporal fine structure at the relatively high channel stimulation rates used in commercial CI sound processing (typically 900 pulses per second or higher), spectral mismatches across ears, and lack of processor synchronization. Envelope ITDs are present; however, without processor synchronization, and given the potential for variable channel and overall stimulation rates across ears as well as channel interaction, envelope ITDs are generally not well resolved (e.g., Kan, Jones, & Litovsky, 2015; Kerber & Seeber, 2013; R. J. van Hoesel, 2004; R. van Hoesel et al., 2008).
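The distinction between fine-structure and envelope ITDs can be illustrated with a short signal-processing sketch. It extracts a crude envelope from each ear's signal (full-wave rectification plus a moving-average filter, chosen for simplicity and not a model of any commercial CI processor's envelope extraction) and finds the interaural lag that best aligns the two envelopes. Because only the envelopes are compared, the 4-kHz carrier fine structure plays no role, loosely mirroring the loss of fine structure in CI processing. All function names and signal parameters are hypothetical.

```python
import numpy as np


def envelope(x: np.ndarray, win: int) -> np.ndarray:
    """Crude envelope: full-wave rectification, then a moving-average low-pass."""
    return np.convolve(np.abs(x), np.ones(win) / win, mode="same")


def envelope_itd_samples(left: np.ndarray, right: np.ndarray,
                         max_lag: int, win: int) -> int:
    """Lag (samples) maximizing the cross-covariance of the two envelopes.

    Positive values mean the right-ear envelope lags the left-ear envelope.
    A fixed central segment of the left envelope is compared with shifted
    segments of the right envelope, so every lag uses the same sample count.
    """
    env_l, env_r = envelope(left, win), envelope(right, win)
    n = len(env_l)
    ref = env_l[max_lag:n - max_lag]
    ref = ref - ref.mean()
    best_lag, best_score = 0, -np.inf
    for lag in range(-max_lag, max_lag + 1):
        seg = env_r[max_lag + lag:n - max_lag + lag]
        score = float(np.dot(ref, seg - seg.mean()))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def am_tone(time_s: np.ndarray) -> np.ndarray:
    """4-kHz carrier (fine structure) with a 100-Hz amplitude modulation (envelope)."""
    return np.sin(2 * np.pi * 4000 * time_s) * (1 + 0.8 * np.sin(2 * np.pi * 100 * time_s))


fs = 48_000
t = np.arange(9_696) / fs
left = am_tone(t)
right = am_tone(t - 0.0005)  # right ear delayed by 0.5 ms (24 samples at 48 kHz)
lag = envelope_itd_samples(left, right, max_lag=48, win=48)
print(lag, "samples =", 1000 * lag / fs, "ms")
```

In a real bilateral CI system, unsynchronized processors and mismatched stimulation rates would perturb the envelope timing at each ear, which is exactly what this idealized two-channel example leaves out.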

Group Communication Environments: Fluctuating Source Azimuth

On the basis of published research, we could expect that, in social gatherings where the target source varies among a group of potential talkers, unilateral CI recipients will struggle in conditions for which (a) noise is directed toward the implanted ear (Choi et al., 2017; Gifford et al., 2014; Litovsky et al., 2006) and (b) the target is directed toward the poorer ear (Bernstein, Schuchman, & Rivera, 2017). Indeed, both conditions could occur simultaneously, which would place the unilateral CI recipient in an extremely challenging environment. The unilateral CI disadvantage is thought to be due primarily to asymmetry in head shadow across ears, as described above (e.g., R. J. M. van Hoesel, 2012).

The effect of source azimuth on speech understanding in noisy environments may appear trivial, given that most adult listeners with two functioning ears, particularly those with hearing loss, should instinctively turn their heads toward the perceived signal of interest so that they can take advantage of both head-orientation benefits (e.g., Grange & Culling, 2016; Kock, 1950) and audiovisual gain (e.g., Sumby & Pollack, 1954). However, research has demonstrated that signals placed at 0° may yield neither the most favorable SNR nor the highest levels of speech understanding for HAs (Festen & Plomp, 1986; Mantokoudis et al., 2011; Pumford, Seewald, Scollie, & Jenstad, 2000; Ricketts, 2000) and CI processors (Aronoff, Freed, Fisher, Pal, & Soli, 2011; Kolberg, Sheffield, Davis, Sunderhaus, & Gifford, 2015) housed in a behind-the-ear configuration. Thus, this phenomenon is important to investigate, as it may influence patient counseling and recommendations for technology and intervention. Indeed, research has documented benefits for speech recognition resulting from turning one's head 30° to 60° away from the talker for listeners with normal hearing (Grange & Culling, 2016) and for individuals with bilateral sensorineural hearing loss wearing HAs (Archer-Boyd, Holman, & Brimijoin, 2018). This head-orientation benefit capitalizes on head shadow, or better ear listening, by orienting the head so that the target's angle of incidence falls in the region of greatest interaural level differences (Macaulay et al., 2010).

Kolberg et al. (2015) assessed the effect of source azimuth for unilateral and bilateral CI conditions in which the target speech was randomly roved from 0° to ±90° in a semidiffuse restaurant noise. They showed that the bilateral listening condition yielded higher speech understanding for all source azimuths, with the greatest improvement observed for speech directed to the poorer hearing ear. The “bilateral” condition, however, included both bimodal and bilateral CI recipients. Though the article reported only mean data, for the seven bilateral CI recipients (Kolberg et al., 2015), the benefit afforded by two CIs varied across source azimuths, ranging from a few percentage points up to 15 percentage points for speech directed toward the poorer ear. For the two bimodal listeners, the benefit afforded by adding the HA to the CI was also quite variable; however, its magnitude was considerably smaller than that observed for the bilateral recipients, ranging from a decrement in performance up to a benefit of 8 percentage points (Kolberg et al., 2015). The greatest bimodal benefit was observed for speech originating from the front (8 percentage points), followed by speech presented toward the HA ear (5 percentage points). For speech directed toward the CI ear, both bimodal listeners exhibited a decrement in performance (ranging from −7.5 to −11.2 percentage points) in the bimodal condition as compared with the CI-alone condition. This discrepancy between the bilateral and bimodal listeners, however, could have been attributable to the small sample size; thus, no generalizations could be made.

The primary goal of this study was to expand upon the results reported by Kolberg et al. (2015) for a larger sample of adult bilateral and bimodal listeners allowing for better definition of the effects and a comparison across the two hearing configurations. Our primary hypothesis was that the effect of source azimuth on speech understanding would be significantly different across the groups such that (a) the bilateral CI recipients would demonstrate less effect of source azimuth in the bilateral CI condition due to symmetrical head shadow across ears and (b) the bimodal listeners would demonstrate significantly poorer performance when speech was directed to the poorer hearing ear.

The Effect of Source Location Certainty on Speech Understanding

As discussed previously, multitalker environments are commonplace, and most studies examining speech understanding in noise for individuals with CIs have used stimuli that were presented from a fixed location, most commonly at 0°. The effect of roving source azimuth for adults with CI(s) using auditory-only stimuli has not been thoroughly investigated. Furthermore, there are no published data relevant to the effect of source location certainty with respect to the target stimulus for individuals with CIs and/or HAs.

Kidd et al. (2005) investigated the effect of source certainty for individuals with normal hearing. They presented three simultaneous messages from three source azimuths: −60°, 0°, and +60°. One of the sentences in each trial was randomly assigned as the target on the basis of the call sign to which the listener was primed. Listeners were then provided with information regarding the likelihood of the target originating from a specific location with probability estimates ranging from complete certainty to chance. They found that performance was significantly higher for conditions in which the listener was certain of the impending source azimuth for the target stimulus (Kidd, Arbogast, Mason, & Gallun, 2005). Brungart and Simpson (2007) investigated the effect of source location certainty on speech understanding for dichotic listening in listeners with normal hearing. They showed that source location certainty yielded significant benefit for speech understanding in the presence of three- and four-talker distracters with a mean improvement of 20 percentage points (Brungart & Simpson, 2007).

Davis et al. (2016) investigated the effect of source location certainty on speech understanding in noise for young individuals with normal hearing, using a target source that was presented at 0°, presented at −60°, or moving in the listener's left hemifield. In all conditions, the distracter consisted of two different sentences colocated with the source, whether at 0°, at −60°, or moving in the listener's left hemifield (Davis, Grantham, & Gifford, 2016). They found no overall effect of source location certainty on speech understanding; however, they did observe a significant interaction between source location certainty and motion, with source location certainty yielding significantly better outcomes in two conditions.

We do not fully understand the effects of source location certainty for speech understanding, even for listeners with normal hearing. To date, there are no published studies investigating the effect of source location certainty for speech understanding with CI recipients. Hence, the secondary goal of this study was to investigate the effect of source location certainty on speech understanding performance for listeners with CIs. On the basis of previous studies discussed here, our hypothesis was that a priori knowledge of the target source would yield significantly higher performance compared with conditions in which the location of the source was roved randomly between 0° and ±90°.

Experiment 1: Effect of Source Azimuth and Source Location Certainty for Bilateral and Bimodal Listeners

Method

Participants

Study participants included 37 adult CI recipients who were recruited and participated in the study in accordance with an institutional review board approval obtained at Vanderbilt University and Arizona State University. Participants' ages ranged from 29 to 81 years with a mean of 55.2 years. Inclusion criteria required the following: (a) a minimum of 6 months' experience with each implanted ear prior to participation; (b) the most recent generation processor at the time of data collection for each implanted ear, which was the Harmony for AB, Nucleus 5 (CP810) for Cochlear, and Opus2 for MED-EL; and (c) for bimodal participants, aidable hearing at 250 Hz, defined as an audiometric threshold < 80 dB HL. The 250-Hz threshold criterion was chosen because 250 Hz is the lowest frequency for which we are able to verify HA output for various HA prescriptive fitting targets and because acoustic hearing low-pass filtered at 250 Hz is the minimum bandwidth for which significant additive bimodal benefit can be observed (Sheffield & Gifford, 2014; Zhang et al., 2010). Of the 37 participants, 12 were bimodal listeners and 25 were bilateral CI recipients. Complete demographic information is provided in Tables 1 and 2 for the bilateral and bimodal participants, respectively.
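The three inclusion criteria can be expressed as a small screening function. This is a sketch under stated assumptions: the `Candidate` record and its field names are hypothetical, a single implanted ear is taken to identify a bimodal candidate, and processor currency is reduced to a yes/no flag rather than a check against the specific processor models listed above.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Candidate:
    """Hypothetical record holding only the fields the criteria need."""
    months_per_implanted_ear: Sequence[float]  # device experience for each CI
    current_generation_processors: bool        # newest processor on every CI
    threshold_250_hz_db_hl: Optional[float] = None  # nonimplanted ear (bimodal only)


def meets_inclusion_criteria(c: Candidate) -> bool:
    """Apply criteria (a) to (c) from the Participants section."""
    if not c.months_per_implanted_ear:
        return False
    # Criterion (a): at least 6 months of experience with each implanted ear.
    enough_experience = all(m >= 6 for m in c.months_per_implanted_ear)
    # Assumption: one implanted ear means the candidate listens bimodally.
    is_bimodal = len(c.months_per_implanted_ear) == 1
    # Criterion (c): aidable hearing at 250 Hz, i.e., a threshold below 80 dB HL.
    aidable = (not is_bimodal) or (
        c.threshold_250_hz_db_hl is not None and c.threshold_250_hz_db_hl < 80
    )
    # Criterion (b) is represented by the processor flag.
    return enough_experience and c.current_generation_processors and aidable


print(meets_inclusion_criteria(Candidate([12], True, 60.0)))  # True
print(meets_inclusion_criteria(Candidate([24, 5], True)))     # False
```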

Table 1.

Demographic and device information for the 25 bilateral CI participants.

| Participants | Age at testing (years) | Gender | Manufacturer | SNR used for testing (dB) | CNC (% correct) first CI | CNC (% correct) second CI | CNC (% correct) bilateral |
|---|---|---|---|---|---|---|---|
| 1* | 51 | female | AB | 10 | 80 | 78 | 92 |
| 2* | 29 | male | AB | 5 | 42 | 68* | 78 |
| 3* | 81 | female | AB | 5 | 76 | 78 | 76 |
| 4* | 55 | female | Cochlear | 5 | 74 | 88 | 92 |
| 5* | 68 | male | Cochlear | 12 | 32 | 72* | 72 |
| 6* | 45 | male | MED-EL | 5 | 84 | 78 | 86 |
| 7* | 62 | female | MED-EL | 2 | 84 | 66 | 90 |
| 8 | 61 | female | Cochlear | 10 | 66 | 60 | 74 |
| 9 | 37 | female | Cochlear | 10 | 60 | 62 | 84 |
| 10 | 41 | male | Cochlear | 10 | 72 | 68 | 80 |
| 11 | 74 | male | AB | 15 | 10 | 56* | 58 |
| 12 | 65 | male | MED-EL | 11 | 86 | 76 | 86 |
| 13 | 58 | male | AB | 15 | 70 | 43* | 74 |
| 14* | 52 | female | MED-EL | 2 | 62 | 48 | 54 |
| 15* | 43 | male | MED-EL | 8 | 76 | 76 | 86 |
| 16* | 33 | female | MED-EL | 5 | 86 | 76 | 86 |
| 17 | 81 | male | MED-EL | 10 | 58 | 56 | 74 |
| 18* | 54 | female | MED-EL | 5 | 66 | 64 | 80 |
| 19* | 60 | male | MED-EL | 12 | 76 | 74 | 84 |
| 20* | 39 | female | MED-EL | 7 | 84 | 74 | 88 |
| 21* | 78 | female | MED-EL | 7 | 66 | 48 | 88 |
| 22* | 66 | female | MED-EL | 3 | 82 | 72 | 78 |
| 23* | 45 | male | MED-EL | 5 | 80 | 68 | 80 |
| 24* | 58 | female | MED-EL | 15 | 48 | 40 | 40 |
| 25* | 46 | female | AB | 5 | 96 | 88 | 90 |
| M (SD) | 55.3 (14.6) | — | — | 8.0 (4.0) | 68.6 (19.2) | 67.0 (13.0) | 78.8 (12.4) |

Note. Asterisk by participant number indicates participation in both Experiments 1 and 2. Asterisk for the second CI-only CNC score indicates that the second CI score was significantly different from the first CI score. CI = cochlear implant; SNR = signal-to-noise ratio; CNC = consonant nucleus consonant; AB = Advanced Bionics.

Table 2.

Demographic and device information for the 12 bimodal participants.

| Participants | Age at testing (years) | Gender | Manufacturer | SNR used for testing (dB) | CNC (% correct) HA only | CNC (% correct) CI only | CNC (% correct) bimodal |
|---|---|---|---|---|---|---|---|
| 1* | 57 | male | MED-EL | 5 | 64 | 70 | 78 |
| 2* | 70 | female | Cochlear | 5 | 34 | 96* | 94 |
| 3* | 53 | male | Cochlear | 12 | 18 | 88* | 100 |
| 4 | 41 | female | AB | 15 | 0 | 88* | 82 |
| 5* | 42 | female | Cochlear | 8 | 50 | 90* | 86 |
| 6* | 64 | male | AB | 10 | 0 | 90* | 96 |
| 7* | 65 | female | AB | 10 | 16 | 78* | 82 |
| 8 | 57 | female | Cochlear | 10 | 54 | 58 | 96 |
| 9 | 36 | female | Cochlear | 12 | 72 | 36* | 82 |
| 10 | 53 | female | Cochlear | 7 | 52 | 46 | 82 |
| 11 | 62 | male | MED-EL | 5 | 80 | 86 | 92 |
| 12 | 60 | female | Cochlear | 10 | 26 | 66* | 80 |
| M (SD) | 55.0 (10.5) | — | — | 9.1 (3.2) | 38.8 (27.2) | 74.3 (19.3) | 87.5 (7.6) |

Note. Asterisk by participant number indicates participation in both Experiments 1 and 2. Asterisk for the CI-only CNC score indicates that the CI score was significantly different from the HA-only score. SNR = signal-to-noise ratio; CNC = consonant nucleus consonant; HA = hearing aid; CI = cochlear implant; AB = Advanced Bionics.

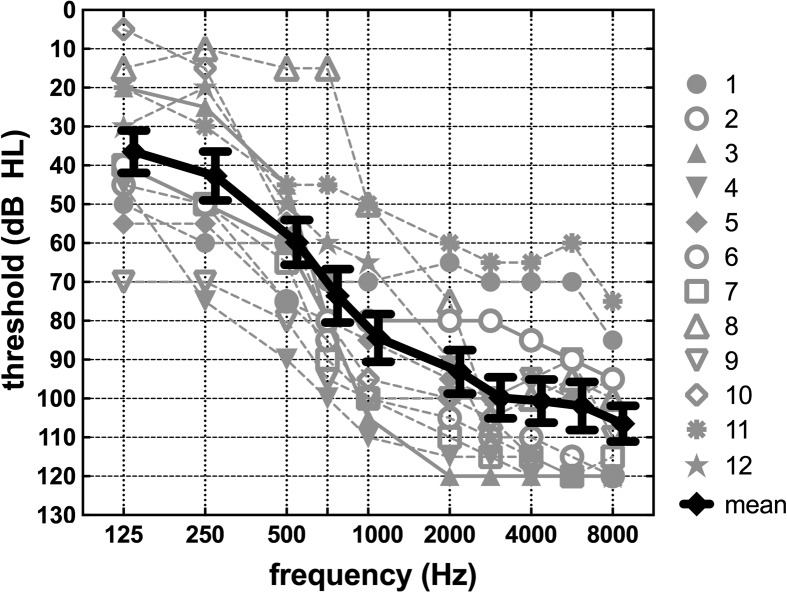

Individual and mean audiometric thresholds for the nonimplanted ears of the 12 bimodal participants are shown in Figure 1. On average, audiometric thresholds obtained via air conduction were consistent with a moderate sloping to profound loss. Though we do not report bone conduction thresholds here, all losses were sensorineural in nature. At the individual level, audiometric thresholds ranged from normal hearing in the low frequencies with precipitously sloping loss to a severe–profound loss at all frequencies. The mean low-frequency pure-tone average (125, 250, and 500 Hz) was 46.1 dB HL, and the mean pure-tone average (500, 1000, and 2000 Hz) was 81.1 dB HL.
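The two pure-tone averages reported above are simple means over fixed frequency sets. A minimal sketch follows; the function and constant names are hypothetical, the example audiogram is invented (not study data), and the handling of no-response thresholds, which the text does not describe, is left out.

```python
from statistics import mean


def pure_tone_average(thresholds_db_hl: dict, freqs_hz) -> float:
    """Mean air-conduction threshold (dB HL) across the requested frequencies."""
    missing = [f for f in freqs_hz if f not in thresholds_db_hl]
    if missing:
        raise KeyError(f"no threshold recorded at {missing} Hz")
    return mean(thresholds_db_hl[f] for f in freqs_hz)


LOW_FREQ_PTA_HZ = (125, 250, 500)
PTA_HZ = (500, 1000, 2000)

# Hypothetical sloping audiogram for one nonimplanted ear (not study data).
audiogram = {125: 30, 250: 40, 500: 60, 1000: 80, 2000: 95, 4000: 110}
print(round(pure_tone_average(audiogram, LOW_FREQ_PTA_HZ), 1))  # 43.3
print(round(pure_tone_average(audiogram, PTA_HZ), 1))           # 78.3
```

A group value such as the 46.1-dB HL low-frequency average would then be the mean of these per-ear averages across the 12 bimodal listeners.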

Figure 1.

Individual and mean audiometric thresholds for the 12 bimodal participants. Error bars represent ±1 SEM.

Stimuli

The Texas Instruments/Massachusetts Institute of Technology (TIMIT) sentence materials (e.g., Dorman, Spahr, Loizou, Dana, & Schmidt, 2005; King, Firszt, Reeder, Holden, & Strube, 2012; Lamel, Kassel, & Seneff, 1986) were presented at 65 dBA from a single loudspeaker. The TIMIT corpus used for this experiment included 34 lists of equal intelligibility, each containing 20 sentences. These 34 lists were assembled by Dorman et al. (2005) from the original, much larger TIMIT corpus. The 34 lists comprise 680 sentences spoken by 301 different talkers (94 female, 207 male). Each list is made up of 20 sentences spoken by 18 to 20 different talkers, with an equivalent talker-sex distribution averaged across all 34 lists. The mean fundamental frequency for the male and female talkers was 121 Hz and 204 Hz, respectively. The R-SPACE proprietary restaurant noise was presented from the remaining seven of the eight loudspeakers during sentence presentation, so the sentences and noise were never colocated. The presentation level of the background restaurant noise was determined on an individual basis to yield approximately 50% correct TIMIT sentence recognition on a full 20-sentence list (range: 30% to 67%) for the best-aided condition with speech at 0° azimuth. All listeners were first assessed at an SNR of +5 dB. If the sentence recognition score was less than 30% or higher than 70% after the first 10 sentences of the list, the SNR was initially increased or decreased by 5 dB, respectively, and a unique list was presented at the new SNR. If this SNR did not yield performance in the desired range, the experimenter varied the SNR in smaller increments until performance fell within this range. With the exception of the eight bilateral and three bimodal listeners who achieved criterion performance at the starting SNR of +5 dB, all other listeners required two to three sentence lists to determine the SNR for experimentation. The final SNR used for all assessments ranged from +2 to +15 dB, with a mean of +8.3 dB (see Tables 1 and 2).
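The SNR-setting rule above can be sketched as follows. Because the speech was fixed at 65 dBA, changing the SNR amounts to changing the noise level. The 5-dB first step and the 30%/70% bounds come from the text; the size of the later, smaller steps is not reported, so `step_db` is left as a parameter and any value other than 5 dB is an assumption. Function names are hypothetical.

```python
from typing import Optional

SPEECH_LEVEL_DBA = 65.0  # TIMIT sentences were fixed at 65 dBA


def noise_level_dba(snr_db: float, speech_dba: float = SPEECH_LEVEL_DBA) -> float:
    """Noise level that yields the requested SNR when speech level is fixed."""
    return speech_dba - snr_db


def next_snr(snr_db: float, pct_correct: float, step_db: float = 5.0,
             low: float = 30.0, high: float = 70.0) -> Optional[float]:
    """SNR for the next list, or None if the score already falls in range.

    Scores below `low` make the task easier (raise the SNR); scores above
    `high` make it harder (lower the SNR).
    """
    if pct_correct < low:
        return snr_db + step_db
    if pct_correct > high:
        return snr_db - step_db
    return None


snr = 5.0                                   # every listener started at +5 dB
print(next_snr(snr, 20.0))                  # 10.0
print(noise_level_dba(next_snr(snr, 20.0)))  # 55.0
print(next_snr(snr, 50.0))                  # None
```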

Procedure

Experimentation was completed in a single-walled sound booth using the Revitronix R-SPACE system. Each of the eight loudspeakers was placed 24 in. from the listener's head to simulate a realistic restaurant setting like those described in detail in previous studies (e.g., Compton-Conley, Neuman, Killion, & Levitt, 2004; Revit, Killion, & Compton-Conley, 2007). The eight loudspeakers surrounded the listener in a 360° circle, with 45° separation between adjacent loudspeakers.
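The loudspeaker layout and the rule that noise plays from every loudspeaker except the target's can be written down compactly. This sketch assumes azimuths are indexed in 45° steps from 0° (front), matching the 0°, 90°, and 270° target positions used here; the rotation direction of the azimuth convention is not specified in the text and is not modeled. Names are hypothetical.

```python
SPEAKER_SPACING_DEG = 45
SPEAKER_AZIMUTHS_DEG = tuple(range(0, 360, SPEAKER_SPACING_DEG))  # 8 loudspeakers
RADIUS_M = 24 * 0.0254  # 24 in. from the listener's head, in metres


def noise_loudspeakers(target_azimuth_deg: int) -> list:
    """All loudspeakers except the one presenting the target sentence."""
    if target_azimuth_deg not in SPEAKER_AZIMUTHS_DEG:
        raise ValueError(f"no loudspeaker at {target_azimuth_deg} degrees")
    return [az for az in SPEAKER_AZIMUTHS_DEG if az != target_azimuth_deg]


print(SPEAKER_AZIMUTHS_DEG)    # (0, 45, 90, 135, 180, 225, 270, 315)
print(round(RADIUS_M, 3))      # 0.61
print(noise_loudspeakers(90))  # [0, 45, 135, 180, 225, 270, 315]
```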

Sentences were presented in the R-SPACE restaurant noise for the two following conditions:

Randomly from either 0°, 90°, or 270° (one list of 20 sentences to each location for a 60-sentence block).

Fixed from either 0°, 90°, or 270° (one list of 20 sentences to each location)—the listener was aware of the source location prior to testing each 20-sentence list.

For the two conditions listed above, all participants were tested with each ear alone and in the best-aided condition (bilateral CI or bimodal). Thus, scoring across all conditions required 18 of the 34 equivalent TIMIT lists (two certainty conditions × three source azimuths × three listening conditions), which were chosen randomly for each participant. Three lists (4, 10, and 29) were excluded for all participants because of explicit content. The order of listening conditions was randomized by the test administrators prior to experimentation. Before testing began, each listener completed one full practice list presented at 0°, with noise originating from the other seven loudspeakers. All participants had previously participated in various laboratory listening experiments and were thus familiar with speech-in-noise testing, though none had previously been tested with the TIMIT sentences. All listeners were instructed to look forward at the loudspeaker placed at 0° regardless of source azimuth and to repeat what they had heard following each sentence. The listener wore a transmitting lapel microphone, and the experimenters, who had normal hearing, wore earphones connected to it to help ensure that the listeners' spoken responses were audible and that ambient noise levels in the laboratory did not interfere with sentence scoring.
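A per-participant list assignment consistent with this description can be sketched as below: 18 test cells, each drawn without replacement from the 31 lists that remain after excluding lists 4, 10, and 29. This is an illustration, not the study's randomization code. The listening-condition labels are hypothetical, the practice and SNR-setting lists are not modeled, and in the roving condition the three azimuth lists would be intermixed into one 60-sentence block rather than run separately.

```python
import itertools
import random

EXCLUDED_LISTS = {4, 10, 29}  # omitted for explicit content
ELIGIBLE_LISTS = [n for n in range(1, 35) if n not in EXCLUDED_LISTS]  # 31 lists

CERTAINTY = ("fixed", "roving")
LISTENING = ("ear 1 alone", "ear 2 alone", "best-aided")  # hypothetical labels
AZIMUTHS_DEG = (0, 90, 270)


def assign_lists(seed=None) -> dict:
    """Map each (certainty, listening, azimuth) cell to a distinct random list."""
    rng = random.Random(seed)
    cells = list(itertools.product(CERTAINTY, LISTENING, AZIMUTHS_DEG))
    chosen = rng.sample(ELIGIBLE_LISTS, k=len(cells))  # without replacement
    return dict(zip(cells, chosen))


plan = assign_lists(seed=1)
print(len(plan))  # 18
```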

In addition to speech-in-noise testing in the R-SPACE system, we also obtained estimates of monosyllabic word recognition for all listeners. The consonant nucleus consonant (CNC; Peterson & Lehiste, 1962) stimuli were presented in quiet at a calibrated level of 60 dBA from a single loudspeaker placed approximately 1.1 m from the listener, consistent with recommendations from the Minimum Speech Test Battery (Minimum Speech Test Battery, 2011) for adult CI users. All participants were assessed with each ear alone and in the bilateral best-aided condition (bilateral CI or bimodal). Prior to experimentation for each listener, all stimuli were calibrated in the free field using the substitution method with a Larson Davis SoundTrack LxT or 800B sound level meter.

Hearing Equipment Verification

Prior to testing, the HAs for the non-CI ear of all bimodal participants were verified to provide NAL-NL1 target audibility for 60- and 70-dB SPL speech. This protocol ensured that speech was audible in the nonimplanted ear at the presentation levels used in the current study. If an individual's HA was not meeting NAL-NL1 targets, the HA was reprogrammed prior to experimentation. Further, we obtained aided audiometric detection thresholds to frequency-modulated pure tones for all implanted ears to ensure that detection levels were between 20 and 30 dB HL for octave and interoctave frequencies between 250 and 6000 Hz (Firszt, Holden, Skinner, & Tobey, 2004; Holden, Skinner, & Fourakis, 2007; James, Blamey, & Martin, 2002; Skinner, Fourakis, Holden, Holden, & Demorest, 1999; Skinner, Holden, Holden, Demorest, & Fourakis, 1997). If thresholds exceeded 30 dB HL for a particular frequency or range of frequencies, the participant's threshold (T) levels were increased for electrodes with the corresponding frequency assignment, and aided thresholds were retested to ensure detection at 30 dB HL or less.
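The aided-threshold check amounts to flagging any test frequency whose detection threshold exceeds 30 dB HL. A minimal sketch, assuming the usual octave and interoctave set from 250 to 6000 Hz; the mapping from a flagged frequency to specific electrodes depends on each manufacturer's frequency allocation and is not modeled. The function name and the example thresholds are hypothetical.

```python
# Octave and interoctave test frequencies from 250 to 6000 Hz (assumed set).
TEST_FREQS_HZ = (250, 500, 750, 1000, 1500, 2000, 3000, 4000, 6000)
MAX_AIDED_DB_HL = 30


def frequencies_needing_t_level_change(aided_db_hl: dict) -> list:
    """Test frequencies whose aided threshold exceeds 30 dB HL or was not measured."""
    return [f for f in TEST_FREQS_HZ
            if aided_db_hl.get(f, float("inf")) > MAX_AIDED_DB_HL]


# Hypothetical aided thresholds for one implanted ear (not study data).
aided = {250: 25, 500: 20, 750: 25, 1000: 20, 1500: 25,
         2000: 25, 3000: 30, 4000: 35, 6000: 40}
print(frequencies_needing_t_level_change(aided))  # [4000, 6000]
```

Treating an unmeasured frequency as a failure is a conservative design choice: it forces a retest rather than silently passing a gap in the aided audiogram.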

Results

Monosyllabic Word Recognition

CNC word recognition was obtained in an effort to quantify participant outcomes on a standardized clinical measure of speech understanding. As shown in Tables 1 (bilateral CI) and 2 (bimodal), CNC word recognition abilities were variable across participants. For the HA-alone condition of the bimodal listeners, word recognition ranged from 0% to 80% with a mean of 38.8% correct. For the unilateral CI-alone condition (considering each implanted ear for all 37 participants), word recognition ranged from 10% to 96% with a mean score of 69.1% correct. For the bimodal hearing configuration, word recognition ranged from 78% to 100% with a mean score of 87.5% correct. For the bilateral CI condition, word recognition ranged from 40% to 92% with a mean score of 78.8% correct. Tables 1 and 2 also indicate, via an asterisk next to the second CI or CI-only score for the bilateral CI and bimodal groups, respectively, which participants exhibited significantly different monosyllabic word recognition across the two ears. Using the 95% confidence intervals for test–retest variability using 50-word lists (Thornton & Raffin, 1978), we found that 67% of bimodal listeners exhibited significant interaural asymmetry, whereas only 16% of bilateral CI users exhibited significant interaural asymmetry in word recognition.
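The two asymmetry percentages follow directly from the asterisks in Tables 1 and 2. The short bookkeeping check below counts the flagged participants in each group; it is not a re-analysis of the Thornton and Raffin (1978) criterion itself, and the variable names are hypothetical.

```python
# Participants flagged with an asterisk in Tables 1 and 2 as having
# significantly different unilateral CNC scores across ears.
FLAGGED = {"bilateral CI": {2, 5, 11, 13}, "bimodal": {2, 3, 4, 5, 6, 7, 9, 12}}
GROUP_SIZE = {"bilateral CI": 25, "bimodal": 12}

for group, flagged in FLAGGED.items():
    pct = 100 * len(flagged) / GROUP_SIZE[group]
    print(f"{group}: {len(flagged)}/{GROUP_SIZE[group]} = {pct:.0f}%")
# bilateral CI: 4/25 = 16%
# bimodal: 8/12 = 67%
```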

CNC scores were then grouped by poorer ear, better ear, and bilateral best-aided condition; here, bilateral best-aided refers to the two-eared condition, whether accomplished via bilateral CIs or bimodal listening. Better and poorer ears were categorized on the basis of CNC word scores; we did not, however, account for whether the performance difference across ears was statistically significant using 95% confidence intervals for individual test–retest variability (Thornton & Raffin, 1978). Bilateral Participant 15 exhibited equivalent CNC word recognition across the two ears; however, this individual reported that his first implanted ear was his “better hearing ear,” and thus, patient report was used for categorization for this one participant. Note that, for two of the 12 bimodal listeners (9 and 10), the better ear was the HA ear. Because ears were categorized according to their performance rather than the device used, the HA ear was characterized as the better hearing ear for bimodal Participants 9 and 10. We completed a two-way, repeated-measures analysis of variance (ANOVA) with listener group (bimodal vs. bilateral) and listening condition (poorer ear, better ear, and bilateral best-aided condition) as the independent variables and CNC word score, in percent correct, as the dependent variable. Statistical analysis revealed no effect of listener group, F(1, 35) = 1.3, p = .27, ηp² = .04, a significant effect of listening condition, F(2, 70) = 75.2, p < .001, ηp² = .68, and a significant interaction, F(2, 70) = 20.8, p < .001, ηp² = .37. Holm-Sidak post hoc analyses revealed a significant difference in CNC word recognition between the bilateral and bimodal groups for the poorer ear alone, t(36) = 4.9, p < .001, d = −1.2.
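The categorization rule can be summarized as: label ears by their unilateral CNC score, regardless of device, and fall back on patient report only for a tie. A minimal sketch with hypothetical names; the examples reuse bimodal Participant 9 (Table 2) and bilateral Participant 15 (Table 1).

```python
from typing import Dict, Optional, Tuple


def classify_ears(cnc_by_ear: Dict[str, float],
                  reported_better: Optional[str] = None) -> Tuple[str, str]:
    """Return (better_ear, poorer_ear) from unilateral CNC word scores.

    Ears are labelled by performance, not by device, so an HA ear can be the
    better ear. Tied scores fall back on the listener's own report.
    """
    if len(cnc_by_ear) != 2:
        raise ValueError("expected scores for exactly two ears")
    (ear_a, score_a), (ear_b, score_b) = cnc_by_ear.items()
    if score_a == score_b:
        if reported_better not in cnc_by_ear:
            raise ValueError("tied scores need a reported better ear")
        better = reported_better
    else:
        better = ear_a if score_a > score_b else ear_b
    poorer = ear_b if better == ear_a else ear_a
    return better, poorer


print(classify_ears({"HA": 72, "CI": 36}))                          # ('HA', 'CI')
print(classify_ears({"first CI": 76, "second CI": 76}, "first CI"))  # ('first CI', 'second CI')
```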

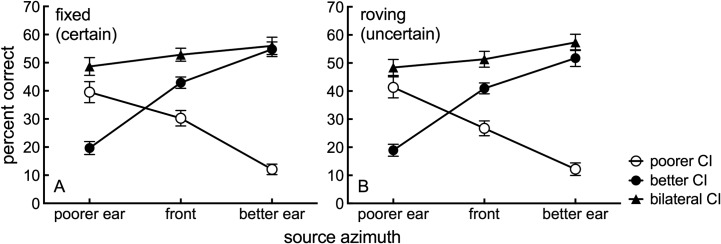

Sentence Understanding in Noise: Source Location Certainty

Data for the bilateral CI and bimodal listeners are shown in Figures 2 and 3, respectively. In each figure, the left-hand panel (A) displays mean sentence recognition scores for fixed source azimuth testing, in which the listener was certain of the target speech location. The right-hand panel (B) displays mean scores for the randomly roving source azimuth conditions, in which the listener was uncertain of the target speech location. Statistical analysis examined the interaction effects of listener group (bilateral and bimodal), listening condition (better ear, poorer ear, and bilateral), source location certainty, and source azimuth on TIMIT sentence recognition in noise. A linear mixed model incorporating all four variables revealed no statistically significant four-way interaction among listener group, listening condition, source location certainty, and source azimuth, F(4, 321.3) = 0.125, p = .973, ηp² = .01. There was, however, a significant three-way interaction among listener group, listening condition, and source azimuth, F(4, 321.3) = 2.47, p = .045, ηp² = .03. This three-way interaction was probed post hoc with two-way (group × source azimuth) repeated-measures ANOVAs within each listening condition.

Figure 2.

Mean TIMIT sentence recognition, in percent correct, for bilateral CI users as a function of source azimuth for the poorer CI (unfilled circles), better CI (filled circles), and bilateral CI (filled triangles) conditions. Panel A displays mean performance for conditions in which the source was fixed (source location certainty), and Panel B displays mean performance for roving source azimuth (listener uncertainty). Error bars represent ±1 SEM. CI = cochlear implant.

Figure 3.

Mean TIMIT sentence recognition, in percent correct, for bimodal listeners as a function of source azimuth for the poorer ear (unfilled circles; hearing aid ear for 10 of 12 bimodal listeners), better ear (filled circles; CI ear for 10 of 12 bimodal listeners), and bimodal (filled triangles) conditions. Panel A displays mean performance for conditions in which the source was fixed (source location certainty), and Panel B displays mean performance for roving source azimuth (listener uncertainty). Error bars represent ±1 SEM. HA = hearing aid; CI = cochlear implant.

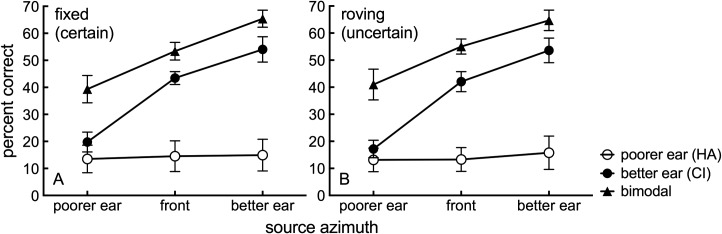

Listening Condition and Source Azimuth

Figure 4 displays mean sentence recognition scores averaged across source location certainty for the bilateral CI and bimodal groups represented as unfilled and filled symbols, respectively. We chose to investigate the effects of listening condition and source azimuth across the groups to determine whether bilateral CIs offer advantages as compared with bimodal hearing.

Figure 4.

Mean TIMIT sentence recognition, in percent correct, as a function of source azimuth for the poorer ear (circles), better ear (squares), and bilateral best-aided (triangles) conditions. Filled and unfilled symbols represent the bimodal and bilateral CI listeners, respectively. Scores displayed here were averaged across source location certainty. Error bars represent ±1 SEM. CI = cochlear implant.

Poorer Hearing Ear

For the poorer ear alone, a two-way, repeated-measures ANOVA across the bilateral CI and bimodal groups revealed a significant effect of group, F(1, 35) = 6.1, p = .018, ηp² = .15, a significant effect of source azimuth, F(2, 35) = 28.6, p < .001, ηp² = .62, and a significant interaction, F(2, 70) = 11.5, p < .001, ηp² = .25. Mean sentence recognition scores for the poorer hearing ear of the bilateral CI recipients were 39.4, 27.1, and 13.0 percent correct for speech originating from the poorer ear, front, and better ear, respectively. Mean scores for the poorer hearing ear of the bimodal listeners were 17.6, 13.9, and 11.4 percent correct for speech directed toward the poorer ear, front, and better ear, respectively. Post hoc testing using an all-pairwise multiple comparison procedure (Holm-Sidak) revealed a significant difference between the groups when speech originated from the poorer ear (t36 = 4.0, p < .001, d = −1.2) and the front (t36 = 2.4, p = .021, d = −0.9) but not when speech originated from the better hearing ear (t36 = 0.29, p = .77, d = −0.05). Post hoc analyses were also completed for the effect of source azimuth. For the bilateral CI group, all three source azimuths yielded significantly different sentence understanding scores (poorer vs. front: t24 = 4.7, p < .001, d = 0.7; poorer vs. better: t24 = 11.2, p < .001, d = 1.8; front vs. better: t24 = 6.5, p < .001, d = 1.3). For the bimodal group, sentence understanding did not differ across source azimuth (poorer vs. front: t11 = 0.9, p = .59, d = −0.2; poorer vs. better: t11 = 1.6, p = .24, d = −0.3; front vs. better: t11 = 0.7, p = .49, d = −0.2).
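The Holm-Sidak procedure used for these post hoc comparisons is a step-down method: p-values are tested from smallest to largest against Sidak-adjusted critical levels, 1 − (1 − α)^(1/(m − k)) for the k-th smallest of m comparisons (counting k from 0), and testing stops at the first comparison that fails. The sketch below illustrates that logic only. The p-values in the example are hypothetical rather than taken from this study, and the sketch makes no attempt to reproduce the output format of the statistical software the authors used.

```python
def holm_sidak(p_values: list[float], alpha: float = 0.05) -> list[bool]:
    """Holm-Sidak step-down test; returns True (reject H0) per input p-value."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # smallest p first
    reject = [False] * m
    for rank, idx in enumerate(order):
        # Sidak-adjusted critical level for the m - rank comparisons still in play
        critical = 1.0 - (1.0 - alpha) ** (1.0 / (m - rank))
        if p_values[idx] <= critical:
            reject[idx] = True
        else:
            break  # step-down: all larger p-values are retained as well
    return reject


# Hypothetical unadjusted p-values for three pairwise azimuth comparisons
print(holm_sidak([0.004, 0.020, 0.300]))
```

Here the first two comparisons pass their critical levels (about .0170 and .0253) and the third fails against .05. The step-down structure gives slightly more power than a single Sidak or Bonferroni threshold while still controlling the familywise error rate.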

Better Hearing Ear

For the better ear alone, statistical analysis revealed no effect of group, F(1, 35) = 0.000002, p = .99, ηp² = .0000001, a significant effect of source azimuth, F(2, 35) = 161.72, p < .001, ηp² = .90, and no interaction, F(2, 70) = 0.31, p = .74, ηp² = .009. Mean sentence recognition scores for the better hearing ear of the bilateral CI recipients were 19.9, 42.6, and 52.2 percent correct for speech originating from the poorer ear, front, and better ear, respectively. Mean scores for the better hearing ear of the bimodal listeners were 18.5, 42.5, and 53.7 percent correct for speech directed toward the poorer ear, front, and better ear, respectively. Holm-Sidak post hoc analyses were completed for source azimuth with the better hearing ear alone. For the bilateral CI group, all three source azimuths yielded significantly different sentence understanding scores (poorer vs. front: t24 = 9.9, p < .001, d = 2.0; poorer vs. better: t24 = 14.8, p < .001, d = 2.6; front vs. better: t24 = 5.0, p < .001, d = 0.9). Similarly, for the bimodal group, all three source azimuths yielded significantly different scores (poorer vs. front: t11 = 7.3, p < .001, d = 2.1; poorer vs. better: t11 = 10.7, p < .001, d = 2.5; front vs. better: t11 = 3.4, p = .001, d = 0.8).

Bilateral Best-Aided Hearing Condition (Bilateral CI or Bimodal Listening)

For the bilateral best-aided condition (bilateral CI or bimodal), statistical analysis revealed no effect of participant group, F(1, 35) = 0.04, p = .84, ηp² = .001, a significant effect of source azimuth, F(2, 35) = 35.0, p < .001, ηp² = .67, and a significant interaction, F(2, 70) = 9.6, p < .001, ηp² = .22. Mean sentence recognition scores in the bilateral best-aided condition for the bilateral CI recipients were 47.8, 53.2, and 55.5 percent correct for speech originating from the poorer ear, front, and better ear, respectively. Mean scores in the bilateral best-aided condition for the bimodal listeners were 40.0, 54.2, and 65.0 percent correct for speech directed toward the poorer ear, front, and better ear, respectively. Post hoc analyses (Holm-Sidak) revealed that, for the bilateral CI group, sentence understanding was significantly lower for speech directed to the poorer ear than for speech to the front (t24 = 2.9, p = .03, d = 0.3) or to the better ear (t24 = 3.8, p = .002, d = 0.5), but there was no difference between speech directed to the front and to the better ear (t24 = 1.3, p = .29, d = 0.2). For the bimodal group, all three source azimuths yielded significantly different sentence understanding scores (poorer vs. front: t11 = 4.7, p < .001, d = 1.0; poorer vs. better: t11 = 8.2, p < .001, d = 1.6; front vs. better: t11 = 3.5, p = .002, d = 1.0). Thus, the bilateral CI group was less affected by source azimuth than the bimodal group, as evidenced by the absence of a significant difference between bilateral CI users' scores for speech to the front and speech to the better hearing ear.

Comparisons Between Best-Aided Condition and Better Hearing Ear

Finally, we investigated whether the best-aided condition yielded significantly higher outcomes than the better hearing ear alone for both the bilateral CI and bimodal listeners. We compared the two listening conditions (bilateral best-aided vs. better ear) at each of the three source azimuths using three paired-samples t tests. A Bonferroni correction for multiple comparisons set the criterion for significance at .05/3 ≈ .017. For the bilateral CI recipients, the bilateral condition yielded significantly higher performance than the better hearing ear alone for all source azimuths. Details of the paired t-test analyses are as follows:

Source location, poorer ear: t24 = 9.4, p = 1.55 × 10⁻⁹, d = 2.1.

Source location, front: t24 = 6.1, p = 2.95 × 10⁻⁶, d = 0.8.

Source location, better ear: t24 = 2.6, p = .015, d = 0.3.

The same pattern was observed for the bimodal listeners: the bimodal condition yielded significantly higher performance than the better hearing ear alone for all source azimuths. Details of the paired t-test analyses are as follows:

Source location, poorer ear: t11 = 4.1, p = .002, d = 1.4.

Source location, front: t11 = 2.8, p = .016, d = 1.1.

Source location, better ear: t11 = 3.7, p = .004, d = 0.8.
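The Bonferroni criterion described for these paired comparisons is simply α divided by the number of tests per group. The short check below, a sketch using only the p-values reported above, confirms that all six comparisons fall below .05/3; the variable names are illustrative.

```python
ALPHA = 0.05
N_COMPARISONS = 3  # one paired t test per source azimuth, within each group
critical = ALPHA / N_COMPARISONS  # about .0167, reported as .017

# p-values as reported for the best-aided vs. better-ear comparisons
reported_p = {
    ("bilateral CI", "poorer ear"): 1.55e-9,
    ("bilateral CI", "front"): 2.95e-6,
    ("bilateral CI", "better ear"): 0.015,
    ("bimodal", "poorer ear"): 0.002,
    ("bimodal", "front"): 0.016,
    ("bimodal", "better ear"): 0.004,
}

for (group, source), p in reported_p.items():
    verdict = "significant" if p < critical else "not significant"
    print(f"{group}, source at {source}: p = {p:.3g} -> {verdict}")
```

Two of the comparisons (p = .015 and p = .016) sit close to the criterion, but both remain below it whether the exact value (.0167) or the rounded value (.017) is used.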

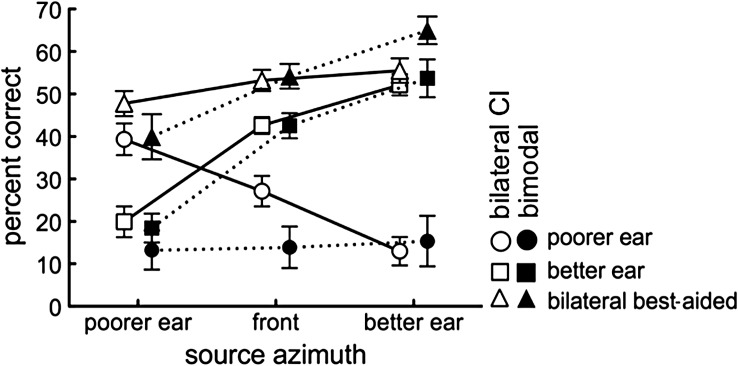

Experiment 2: The Effect of Source Azimuth for Sentence Understanding With Roving Source Azimuth When Listeners Are Allowed to Use Head Turns

Experiment 1 investigated the effects of listening condition, source azimuth, and source location certainty on sentence understanding in semidiffuse noise. All listeners had been instructed to look forward at the speaker located at 0° throughout testing. This could be considered a contrived condition, as listeners with two functioning ears often instinctively turn their heads toward the signal of interest in communicative environments. In fact, nearly all listeners commented that they would have achieved better scores had they been allowed to turn their heads to face the perceived source. Consequently, the primary purpose of Experiment 2 was to investigate the effect of source azimuth in the bilateral best-aided condition (bilateral CI or bimodal) when listeners were allowed to use head turns. Listeners were instructed not to move forward or to either side, so as not to alter the SNR, which was fixed across source azimuth. Otherwise, participants were instructed to rotate their heads in whatever way they saw fit to maximize hearing whenever they detected a change in source azimuth. This represented an “uncertain” condition using the bilateral best-aided listening configuration.

Method

Study participants for Experiment 2 were a 24-participant subset of the 37 adult CI recipients who participated in Experiment 1; those who participated in both experiments are indicated by an asterisk next to the participant label in Tables 1 and 2. Of the 24 listeners participating in Experiment 2, 18 were bilateral CI users and 6 were bimodal listeners. The stimuli and procedures were identical to those described for Experiment 1; however, for Experiment 2, we assessed sentence understanding only in the bilateral best-aided configuration, allowing head turns, with a roving (uncertain) source azimuth. No lists were repeated for any participant, because far more lists were available than there were listening conditions, even for those participating in both experiments. Every participant used head turns, as verified by experimenter observation during sentence scoring.

Results

Effect of Head Turn for Improving Speech Understanding With Roving Source Azimuth

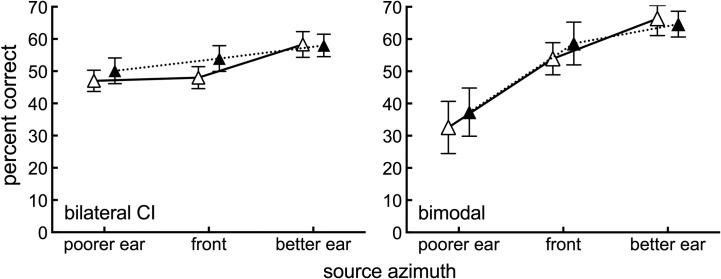

Mean data for the bilateral CI and bimodal listeners are shown in Figure 5. The “no head turn” data were also shown in Figure 4; however, the mean “no head turn” data in Figure 5 represent only the 24-listener sample participating in this experiment. We completed a linear mixed-model analysis of the main and interaction effects of listener group (bilateral CI and bimodal), source azimuth, and head turn behavior on TIMIT sentence recognition in noise. As in the first experiment, there was a statistically significant main effect of azimuth on recognition scores, F(2, 96.4) = 16.29, p < .001, ηp² = .25. Neither the main effect of listener group, F(1, 121.8) = 0.27, p = .61, ηp² = .002, nor the main effect of head turn behavior, F(1, 121.8) = 0.16, p = .69, ηp² = .001, was statistically significant. Furthermore, no statistically significant interaction of listener group or azimuth with head turn behavior was observed (p > .48, ηp² = .015). These results suggest that, in the absence of visual cues, head turn behavior, in which a listener attempts to obtain a more favorable signal by using gross localization abilities to manipulate the relative angle of incidence, did not significantly improve speech understanding for either bilateral CI or bimodal listeners.

Figure 5.

Mean TIMIT sentence recognition, in percent correct, for the different source azimuths for bilateral CI users on the left and bimodal listeners on the right. The filled symbols represent conditions for which the listener was utilizing head turns, and the unfilled symbols represent conditions for which the listener was asked to look straight ahead at the front speaker. Error bars represent ±1 SEM. CI = cochlear implant.

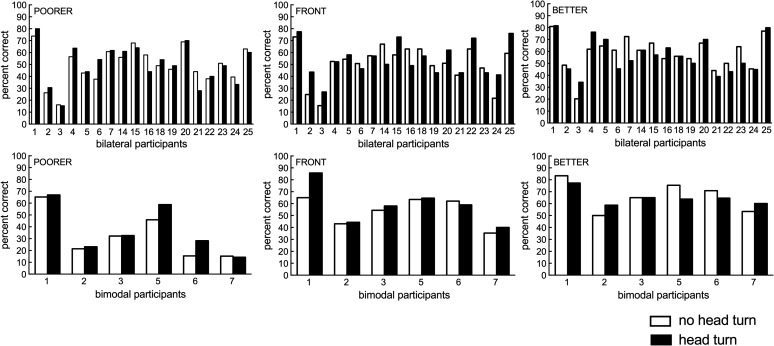

Individual data for the bilateral CI and bimodal listeners are plotted in Figure 6, with the source location indicated in the upper left-hand corner of each panel. Correlation analyses examined whether the degree of head-turn benefit was related to CNC word recognition or to the degree of interaural asymmetry in CNC word recognition. No significant correlations were observed for any of the comparisons (p > .05 in all cases).

Figure 6.

Individual TIMIT sentence recognition, in percent correct, obtained in the best-aided condition (bilateral cochlear implant and bimodal) for bilateral cochlear implant and bimodal listeners. The unfilled bars represent conditions in which the listeners did not use head turns, and the filled bars represent conditions in which the listeners were allowed to turn their heads throughout testing.

Discussion

Outcomes: Bilateral CI > Bimodal

Without data-driven, patient-specific guidelines for determining bilateral CI candidacy, clinicians will continue to struggle to make recommendations regarding retention of bimodal hearing versus bilateral implantation. Making this situation even more difficult is the fact that approximately 60% of current adult CI candidates have aidable acoustic hearing, mostly in the low frequencies, in one or both ears (Dorman & Gifford, 2010). Although only minimal acoustic hearing is required to observe significant bimodal benefit for speech recognition (e.g., Dunn et al., 2005; Gifford, Dorman, McKarns, & Spahr, 2007; Mok, Galvin, Dowell, & McKay, 2010; Schafer, Amlani, Paiva, Nozari, & Verret, 2011; Sheffield & Gifford, 2014; Zhang et al., 2010), the widespread availability of bilateral cochlear implantation in the United States allows for two viable interventions for our current unilateral CI recipients: (a) continued use of an HA in the non-CI ear for bimodal hearing or (b) pursuit of a second CI for bilateral electric hearing. Of course, the ideal intervention would be bilateral cochlear implantation with bilateral hearing preservation, theoretically allowing access to both interaural timing and level cues (e.g., Dorman et al., 2013; Dorman, Loiselle et al., 2016; Gifford et al., 2015; Moteki, Kitoh, Tsukada, Iwasaki, Nishio, & Usami, 2015). However, given that hearing preservation cannot be guaranteed, even with minimally traumatic surgical techniques and atraumatic electrodes, we generally take a conservative approach to clinical recommendations for surgical interventions. Several studies have demonstrated no difference between bilateral CI and bimodal listening on clinical measures of speech understanding in the best-aided condition, with most listeners demonstrating significant benefit from the addition of a second ear via either HA or CI (e.g., Cullington & Zeng, 2011; Dorman & Gifford, 2010; Dunn et al., 2005; Gifford et al., 2014, 2015).
In fact, bimodal listeners may derive greater benefit from adding an HA in the nonimplanted ear than bilateral listeners obtain from a second CI (relative to the best CI ear) on clinical measures of speech understanding using a single loudspeaker placed at 0° (R. J. M. van Hoesel, 2012). This is thought to reflect the better underlying spectral resolution of the HA ear relative to the CI ear (Zhang, Spahr, Dorman, & Saoji, 2013), as well as additional cues that electric hearing does not provide, such as periodicity and temporal fine structure. Recent studies of high-performing bimodal listeners who pursued a second CI demonstrated significant improvements in speech understanding following bilateral implantation in various environments, including speech-shaped noise (Luntz et al., 2014) and high levels of spectrotemporally complex noise (Gifford et al., 2015). Similarly, in a large multicenter study, Blamey et al. (2015) demonstrated that bilateral CI users (n = 86) significantly outperformed bimodal listeners (n = 589) on standard clinical measures of speech understanding in quiet and in noise.

For the bilateral CI recipients in the current study, (a) speech understanding was significantly higher in the bilateral CI condition than with the better CI alone for all source azimuths, and (b) in the bilateral CI condition, speech understanding did not differ significantly between speech originating from the front and from the better ear, but speech originating from the poorer ear yielded significantly poorer scores than either. For the bimodal listeners, (a) speech understanding was significantly higher in the bimodal condition than with the better hearing ear alone for all source azimuths, and (b) speech understanding differed significantly across all source azimuths, both for the better hearing ear alone and in the bimodal condition, with the best performance for speech directed toward the better hearing ear, which was the CI ear for 10 of 12 bimodal listeners. Compared with bimodal hearing, bilateral cochlear implantation allowed listeners to largely overcome the ear and source location effects exhibited by bimodal listeners, though bilateral CI users did exhibit significantly poorer speech understanding with speech directed to the poorer ear (mean decrement of 5.4 to 7.7 percentage points).
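The 5.4 to 7.7 percentage-point decrement for bilateral CI users follows directly from the best-aided group means reported in the Results, and the same arithmetic shows how much wider the spread across azimuths is for the bimodal group. The sketch below only subtracts group means; the significance of each difference comes from the post hoc tests, not from this calculation. Function names are illustrative.

```python
# Mean best-aided TIMIT scores (percent correct) reported in the Results
best_aided_means = {
    "bilateral CI": {"poorer ear": 47.8, "front": 53.2, "better ear": 55.5},
    "bimodal": {"poorer ear": 40.0, "front": 54.2, "better ear": 65.0},
}


def poorer_ear_decrements(means: dict[str, float]) -> dict[str, float]:
    """Percentage-point drop for speech at the poorer ear vs. each other source."""
    poorer = means["poorer ear"]
    return {src: round(score - poorer, 1)
            for src, score in means.items() if src != "poorer ear"}


def azimuth_spread(means: dict[str, float]) -> float:
    """Range of mean scores across source azimuths, in percentage points."""
    return round(max(means.values()) - min(means.values()), 1)


for group, means in best_aided_means.items():
    print(group, poorer_ear_decrements(means), azimuth_spread(means))
```

For the bilateral CI group the spread across azimuths is 7.7 points, whereas for the bimodal group it is 25.0 points (40.0% to 65.0%), which is the contrast summarized in the Conclusions.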

The ability of the bilateral CI recipients to overcome ear and source effects is most likely attributable to better ear listening, or head shadow. Because head shadow is a monaural level-based cue, listeners need just one hearing ear to benefit from it, provided that the distracter originates from the side of the poorer ear. We maintain that bilateral CI users are able to overcome the ear and source effects exhibited by the bimodal listeners because they have greater symmetry between ears in both speech understanding performance and audible bandwidth and, hence, have access to head shadow on both sides. The across-ear symmetry in monosyllabic word recognition for the bilateral CI and bimodal listeners is displayed in Tables 1 and 2.
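The better-ear argument above can be made concrete with a deliberately simplified toy model. It assumes (hypothetically, not from this study) that diffuse noise reaches both ears at the same level, that speech from one side reaches the far ear attenuated by a fixed head-shadow value, and that a listener effectively uses whichever usable ear has the best SNR. The 6-dB shadow, the 0-dB nominal SNR, and the treatment of the poorer ear as entirely unusable are illustrative assumptions; real ears are never perfectly symmetric or perfectly unusable, so the model captures only the direction of the effect.

```python
# Toy better-ear ("head shadow") model. All numbers are hypothetical:
# diffuse noise is assumed equal at both ears, speech from one side reaches
# the near ear at NOMINAL_SNR_DB and the far ear SHADOW_DB lower, and speech
# from the front reaches both ears equally.
SHADOW_DB = 6.0       # hypothetical head-shadow attenuation at the far ear
NOMINAL_SNR_DB = 0.0  # hypothetical SNR at an unshadowed ear


def ear_snrs(source: str) -> dict[str, float]:
    """SNR (dB) at each ear for a source at 'left', 'front', or 'right'."""
    if source == "front":
        return {"left": NOMINAL_SNR_DB, "right": NOMINAL_SNR_DB}
    far_ear = "right" if source == "left" else "left"
    return {source: NOMINAL_SNR_DB, far_ear: NOMINAL_SNR_DB - SHADOW_DB}


def better_ear_snr(source: str, usable_ears: set[str]) -> float:
    """Best SNR among the ears that can effectively convey speech."""
    snrs = ear_snrs(source)
    return max(snrs[ear] for ear in usable_ears)


symmetric = {"left", "right"}  # e.g., two comparably performing CIs
asymmetric = {"right"}         # extreme case: only one ear conveys speech well

for src in ("left", "front", "right"):
    print(src, better_ear_snr(src, symmetric), better_ear_snr(src, asymmetric))
```

With two usable ears, the best available SNR is the same wherever the talker is; with one usable ear, speech from the opposite side costs the full head-shadow attenuation. This mirrors, in idealized form, the flatter azimuth function of the bilateral CI group and the steeper one of the bimodal group.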

Another possibility is that bilateral CI users were able to use both head shadow and binaural unmasking of speech, the latter of which is also commonly referred to as squelch (Koenig, 1950). Binaural unmasking of speech refers to the overall improvement in speech understanding obtained by adding an ear with a poorer SNR, and it depends upon both interaural time and level differences. The majority of reports in the literature have demonstrated little-to-no evidence of binaural unmasking of speech for bilateral CI users (e.g., Buss et al., 2008; Gifford et al., 2014; Litovsky et al., 2006; Schleich, Nopp, & D'Haese, 2004). Researchers implicate the envelope-based signal processing of CI sound processors as the primary reason that interaural timing differences are not well resolved. That is, fine-structure timing is not well preserved by current sound processing strategies with high channel stimulation rates, such as those used by the listeners in the current study.

Source Location Certainty and Head Turn

We found no effect of source location certainty or of head turns on sentence recognition in noise for any of the source azimuths or listening configurations. The former finding was consistent with Davis et al. (2016) but in contrast to two previous studies demonstrating a significant benefit of source location certainty for listeners with normal hearing (Brungart & Simpson, 2007; Kidd et al., 2005). The lack of improvement with source location certainty in the current study may relate to differences in the target population between the current and previous studies. To our knowledge, this is the first published study investigating the effect of source location certainty on speech understanding in CI recipients. CI recipients have very different peripheral processing than listeners with normal hearing, particularly with respect to spectral resolution. Thus, it is possible that even a priori knowledge of the source location was not enough to overcome the difficulty of the task. Furthermore, participants received no more practice with the certain conditions than with the roving (uncertain) conditions, whereas some previous studies provided considerable training (e.g., Brungart & Simpson, 2007; Kidd et al., 2005).

With respect to head turns, we are not aware of any previous studies that have explicitly investigated the effects of head turn behaviors on speech understanding with roving sources; thus, this is the first instance of such a report. There are, however, previous studies documenting head orientation benefit for speech recognition in noise for listeners with normal hearing (Grange & Culling, 2016) and listeners with hearing loss using HAs (Archer-Boyd et al., 2018). Further, Freeman, Culling, Akeroyd, and Brimijoin (2017) demonstrated that listeners with normal hearing are able to orient their head rotation to at least partially compensate for a moving target. In the current study, though there was a trend toward higher performance for speech at 0° for both listener groups, this did not reach statistical significance. This finding was surprising from the perspective of face validity given that nearly all listeners commented that the task was much simpler when allowed to use head turns. However, it is very possible that the subjective reports reflected listening effort, which can be entirely independent of speech understanding performance (e.g., Houben, van Doorn-Bierman, & Dreschler, 2013; Pals, Sarampalis, van Rijn, & Başkent, 2015; Picou & Ricketts, 2014).

Limitations

Though the bilateral CI users generally overcame ear and azimuth effects, the differences observed between bilateral CI and bimodal listeners may, in part, be due to relatively small sample sizes and unequal group sizes. We tried for several years to recruit bimodal participants who had audiometric thresholds better than 80 dB HL at 250 Hz; because bilateral CI users make up a growing share of the adult implant population, we had difficulty recruiting samples of equivalent size. Given other recent reports of significantly higher outcomes for bilateral CI users than for bimodal listeners in both across-subjects (Blamey et al., 2015) and within-subjects (Gifford et al., 2015) designs, this effect is likely to hold for bimodal listeners with interaural asymmetry in speech understanding performance and audible bandwidth.

As alluded to in the previous paragraph, another potential reason for the difference in performance between the bilateral CI and bimodal listeners was the asymmetry in speech understanding across the CI and HA ears of the bimodal listeners. Though we have proposed this as the primary reason for the superior performance of the bilateral CI users, it is a byproduct of how the bimodal sample was composed. More listeners with highly asymmetric hearing loss are pursuing cochlear implantation in the poorer hearing ear (e.g., Firszt, Holden, Reeder, Cowdrey, & King, 2012), including adults with unilateral hearing loss (e.g., Grossmann et al., 2016; Firszt, Holden, Reeder, Waltzman, & Arndt, 2012; Zeitler et al., 2015). In the current dataset, 67% of the bimodal listeners exhibited significant interaural asymmetry in monosyllabic word understanding, in contrast to just 16% of bilateral CI users (see Tables 1 and 2). For bimodal listeners with greater symmetry in speech understanding and audible bandwidth across the HA and CI ears, we may observe more similar outcomes across ears and source azimuths for bimodal and bilateral CI users. Thus, a limitation of the current study was that most bimodal listeners had highly asymmetric speech understanding and audibility across ears. Though this is characteristic of the bimodal listeners we generally encounter in the clinical environment, future research should compare speech understanding in these complex environments for bilateral CI users and for bimodal listeners with greater symmetry across ears, including CI recipients with single-sided deafness. Such an investigation is critical if we are to determine a definitive criterion for the amount of residual hearing and speech understanding in the non-CI ear that would warrant a second CI, thereby optimizing speech understanding in the bilateral, best-aided condition.

Finally, the across-group differences may also reflect our use of auditory-only conditions in both experiments. In the majority of real-world communication settings, even listeners with normal hearing use visual cues to aid speech understanding in noise (e.g., Sumby & Pollack, 1954). Indeed, R. J. M. van Hoesel (2015) demonstrated a highly significant audiovisual benefit for seven adult bilateral CI recipients when assessing speech understanding with a roving target. Dorman, Liss, et al. (2016) reported similar findings with a stationary target: bilateral CI (n = 4) and bimodal (n = 17) listeners derived significant benefit from the addition of visual cues. Furthermore, they showed that the degree of audiovisual benefit was nearly identical across the listener groups. Because they did not provide audiometric data for the nonimplanted ear or the degree of interaural asymmetry in performance for the bimodal listeners, it is unclear whether their bimodal participants were similar to the group studied here. Also, their bimodal listener data were compared with a small sample of bilateral CI recipients. Thus, we do not fully understand whether bimodal listeners, particularly those with considerable interaural asymmetry in performance and audible bandwidth, derive the same magnitude of audiovisual benefit as bilateral CI recipients. Further research is warranted in this area, as this question holds great clinical relevance for patient counseling and for clinical guidance regarding the pursuit of a second implant.

Conclusions

The primary purpose of this study was to investigate differences between bimodal and bilateral CI users in individual-ear and bilateral best-aided speech understanding, measured with monosyllabic word recognition and with sentence recognition in semidiffuse noise. Sentence recognition was assessed in conditions in which participants were either certain or uncertain of the source azimuth of the sentence stimuli. A third condition investigated the effect of allowing the listener to turn his or her head in the randomly roving source condition. Results were as follows:

The best-aided condition (bilateral CI or bimodal listening) yielded significantly higher speech understanding than the better hearing ear alone for all source locations.

With the better hearing ear alone, speech directed to the better hearing ear yielded significantly higher speech understanding than either speech to the front or speech to the poorer hearing ear.

Bimodal listeners exhibited significantly higher performance with speech directed to the better hearing ear as compared with speech to the front or to the poorer hearing ear even in the bimodal, best-aided condition.

Bilateral CI users exhibited more similar levels of speech understanding across source azimuth in the bilateral CI condition; however, speech directed to the poorer hearing ear yielded significantly poorer performance than with speech to the front or to the better hearing ear.

A priori knowledge of the source origin did not affect speech understanding performance for either group.

Allowing participants to use head turns did not result in significantly different speech understanding as compared with the head fixed condition.

In summary, bilateral CI users exhibited more similar speech understanding for all sources (bilateral mean scores ranging from 48% to 56%), whereas bimodal listener performance was significantly impacted by both ear and source azimuth effects (bimodal mean scores ranging from 40% to 65%). We attribute this to the bilateral CI users having access to head shadow, or better ear listening, on both sides, whereas the bimodal listeners more often had interaural asymmetry in speech understanding and audible bandwidth. The clinical and real-world implications of these outcomes are that, in complex group listening environments, bilateral cochlear implantation (a) provides listeners with more symmetrical hearing and speech understanding across ears, affording significantly higher levels of speech understanding, and (b) may eliminate the need for preferential seating, which is often critical for successful communication with bimodal hearing.

Acknowledgments

This work was supported by National Institutes of Health Grant R01 DC009404, awarded to René Gifford, and National Institutes of Health Grant R01 DC010821, awarded to René Gifford and Michael Dorman. Portions of this dataset were presented at the 2012 meeting of the American Auditory Society in Scottsdale, AZ; the 12th International Conference on Cochlear Implants and Other Implantable Auditory Technologies meeting in Baltimore, MD; the 2016 Maximizing Performance in CI Recipients: Programming Concepts meeting in New York, NY; and the 2017 Conference on Implantable Auditory Prostheses in Tahoe City, CA.

References

- Archer-Boyd A. W., Holman J. A., & Brimijoin W. O. (2018). The minimum monitoring signal-to-noise ratio for off-axis signals and its implications for directional hearing aids. Hearing Research, 357, 64–72.

- Aronoff J. M., Freed D. J., Fisher L. M., Pal I., & Soli S. D. (2011). The effect of different cochlear implant microphones on acoustic hearing individuals' binaural benefits for speech perception in noise. Ear and Hearing, 32(4), 468–484.

- Assmann P., & Summerfield Q. (2004). The perception of speech under adverse conditions. In Greenberg S., Ainsworth W. A., & Fay R. R. (Eds.), Speech processing in the auditory system (pp. 231–308). New York, NY: Springer.

- Bernstein J. G. W., Schuchman G. I., & Rivera A. L. (2017). Head shadow and binaural squelch for unilaterally deaf cochlear implantees. Otology & Neurotology, 38, e195–e202.

- Blamey P. J., Maat B., Başkent D., Mawman D., Burke E., Dillier N., … Lazard D. S. (2015). A retrospective multicenter study comparing speech perception outcomes for bilateral implantation and bimodal rehabilitation. Ear and Hearing, 36, 408–416.

- Brockmeyer A. M., & Potts L. (2011). Evaluation of different signal processing options in unilateral and bilateral cochlear freedom implant recipients using R-Space background noise. Journal of the American Academy of Audiology, 22(2), 65–80.

- Brown C. A., & Bacon S. P. (2009). Low-frequency speech cues and simulated electric-acoustic hearing. The Journal of the Acoustical Society of America, 125, 1658–1665.

- Brungart D. S., & Simpson B. D. (2007). Cocktail party listening in a dynamic multitalker environment. Perception & Psychophysics, 69(1), 79–91.

- Buss E., Calandruccio L., & Hall J. W. (2015). Masked sentence recognition assessed at ascending target-to-masker ratios: Modest effects of repeating stimuli. Ear and Hearing, 36, e14–e22.

- Buss E., Pillsbury H. C., Buchman C. A., Pillsbury C. H., Clark M. S., … Barco A. L. (2008). Multicenter U.S. bilateral MED-EL cochlear implantation study: Speech perception over the first year of use. Ear and Hearing, 29, 20–32.

- Chang J. E., Bai J. Y., & Zeng F. G. (2006). Unintelligible low-frequency sound enhances simulated cochlear-implant speech recognition in noise. IEEE Transactions on Biomedical Engineering, 53, 2598–2601.

- Ching T. Y., Incerti P., & Hill M. (2004). Binaural benefits for adults who use hearing aids and cochlear implants in opposite ears. Ear and Hearing, 25, 9–21.

- Choi J. E., Moon I. J., Kim E. Y., Park H. S., Kim B. K., Chung W. H., … Hong S. H. (2017). Sound localization and speech perception in noise of pediatric cochlear implant recipients: Bimodal fitting versus bilateral cochlear implants. Ear and Hearing, 38, 426–440.

- Compton-Conley C. L., Neuman A. C., Killion M. C., & Levitt H. (2004). Performance of directional microphones for hearing aids: Real world versus simulation. Journal of the American Academy of Audiology, 15(6), 440–455.

- Cullington H. E., & Zeng F. G. (2011). Comparison of bimodal and bilateral cochlear implant users on speech recognition with competing talker, music perception, affective prosody discrimination, and talker identification. Ear and Hearing, 32, 16–30.

- Davis T. J., Grantham D. W., & Gifford R. H. (2016). Effect of motion on speech recognition. Hearing Research, 337, 80–88.

- De Ceulaer G., Bestel J., Mulder H., Goldbeck F., de Varebeke S. P., & Govaerts P. J. (2016). Speech understanding in noise with the Roger Pen, Naida CI Q70 processor, and integrated Roger 17 receiver in a multi-talker network. European Archives of Oto-Rhino-Laryngology, 273(5), 1107–1114.

- Dorman M. F., Cook S., Spahr A., Zhang T., Loiselle L., Schramm D., … Gifford R. H. (2015). Factors constraining the benefit to speech understanding of combining information from low-frequency hearing and a cochlear implant. Hearing Research, 322, 107–111.

- Dorman M. F., & Gifford R. H. (2010). Combining acoustic and electric stimulation in the service of speech recognition. International Journal of Audiology, 49, 912–919.

- Dorman M. F., Liss J., Wang S., Berisha V., Ludwig C., & Natale S. C. (2016). Experiments on auditory–visual perception of sentences by users of unilateral, bimodal, and bilateral cochlear implants. Journal of Speech, Language, and Hearing Research, 59, 1505–1519.

- Dorman M. F., Loiselle L., Cook S. J., Yost W. A., & Gifford R. H. (2016). Sound source localization by normal-hearing listeners, hearing-impaired listeners and cochlear implant listeners. Audiology & Neurotology, 21, 127–131.

- Dorman M. F., Loiselle L., Stohl J., Yost W. A., Spahr A., Brown C., & Cook S. (2014). Interaural level differences and sound source localization for bilateral cochlear implant patients. Ear and Hearing, 35, 633–640.

- Dorman M. F., Spahr A. J., Loiselle L. H., Zhang T., Cook S. J., Brown C., & Yost W. A. (2013). Localization and speech understanding by a patient with bilateral cochlear implants and bilateral hearing preservation. Ear and Hearing, 34, 245–248.

- Dorman M. F., Spahr A. J., Loizou P. C., Dana C. J., & Schmidt J. S. (2005). Acoustic simulations of combined electric and acoustic hearing (EAS). Ear and Hearing, 26(4), 371–380.

- Dunn C. C., Tyler R. S., & Witt S. A. (2005). Benefit of wearing a hearing aid on the unimplanted ear in adult users of a cochlear implant. Journal of Speech, Language, and Hearing Research, 48(3), 668–680.

- Festen J. M., & Plomp R. (1986). Speech-reception threshold in noise with one and two hearing aids. The Journal of the Acoustical Society of America, 79(2), 465–471.

- Firszt J. B., Holden L. K., Reeder R. M., Cowdrey L., & King S. (2012). Cochlear implantation in adults with asymmetric hearing loss. Ear and Hearing, 33, 521–533.

- Firszt J. B., Holden L. K., Reeder R. M., Waltzman S. B., & Arndt S. (2012). Auditory abilities after cochlear implantation in adults with unilateral deafness: A pilot study. Otology & Neurotology, 33, 1339–1346.

- Firszt J. B., Holden L. K., Skinner M. W., & Tobey E. A. (2004). Recognition of speech presented at soft to loud levels by adult cochlear implant recipients of three cochlear implant systems. Ear and Hearing, 25(4), 375–387.

- Fitzpatrick E. M., Seguin C., Schramm D., Armstrong S., & Chenier J. (2009). The benefits of remote microphone technology for adults with cochlear implants. Ear and Hearing, 30(5), 590–599.

- Francart T., Lenssen A., & Wouters J. (2011). Sensitivity of bimodal listeners to interaural time differences with modulated single- and multiple-channel stimuli. Audiology & Neurotology, 16, 82–92.

- Francart T., Van den Bogaert T., Moonen M., & Wouters J. (2009). Amplification of interaural level differences improves sound localization in acoustic simulations of bimodal hearing. The Journal of the Acoustical Society of America, 126, 3209–3213.

- Freeman T. C. A., Culling J. F., Akeroyd M. A., & Brimijoin W. O. (2017). Auditory compensation for head rotation is incomplete. Journal of Experimental Psychology: Human Perception and Performance, 43, 371–380.

- Gifford R. H., Dorman M. F., McKarns S. A., & Spahr A. J. (2007). Combined electric and contralateral acoustic hearing: Word and sentence recognition with bimodal hearing. Journal of Speech, Language, and Hearing Research, 50, 835–843.

- Gifford R. H., Dorman M. F., Sheffield S. W., Teece K., & Olund A. P. (2014). Availability of binaural cues for bilateral implant recipients and bimodal listeners with and without preserved hearing in the implanted ear. Audiology & Neurotology, 19(1), 57–71.

- Gifford R. H., Driscoll C. L. W., Davis T. J., Fiebig P., Micco A., & Dorman M. F. (2015). A within-subject comparison of bimodal hearing, bilateral cochlear implantation, and bilateral cochlear implantation with bilateral hearing preservation: High-performing patients. Otology & Neurotology, 36(8), 1331–1337.

- Gifford R. H., Olund A. P., & DeJong M. D. (2011). Improving speech perception in noise for children with cochlear implants. Journal of the American Academy of Audiology, 22(9), 623–632.

- Grange J. A., & Culling J. F. (2016). The benefit of head orientation to speech intelligibility in noise. The Journal of the Acoustical Society of America, 139, 703–712.

- Grantham D. W., Ashmead D. H., Ricketts T. A., Haynes D. S., & Labadie R. F. (2008). Interaural time and level difference thresholds for acoustically presented signals in post-lingually deafened adults fitted with bilateral cochlear implants using CIS+ processing. Ear and Hearing, 29, 33–44.

- Grantham D. W., Ashmead D. H., Ricketts T. A., Labadie R. F., & Haynes D. S. (2007). Horizontal-plane localization of noise and speech signals by postlingually deafened adults fitted with bilateral cochlear implants. Ear and Hearing, 28(4), 524–541.

- Grossmann W., Brill S., Moeltner A., Mlynski R., Hagen R., & Radeloff A. (2016). Cochlear implantation improves spatial release from masking and restores localization abilities in single-sided deaf patients. Otology & Neurotology, 37, 658–664.

- Holden L. K., Skinner M. W., & Fourakis M. S. (2007). Effect of increased IIDR in the Nucleus Freedom cochlear implant system. Journal of the American Academy of Audiology, 18, 777–793.

- Houben R., van Doorn-Bierman M., & Dreschler W. A. (2013). Using response time to speech as a measure for listening effort. International Journal of Audiology, 52, 753–761.

- James C. J., Blamey P. J., & Martin L. F. (2002). Adaptive dynamic range optimization for cochlear implants: A preliminary study. Ear and Hearing, 23, 49S–58S.

- Julstrom S., & Kozma-Spytek L. (2014). Subjective assessment of cochlear implant users' signal-to-noise ratio requirements for different levels of wireless device usability. Journal of the American Academy of Audiology, 25(10), 952–968.

- Kan A., Jones H. G., & Litovsky R. Y. (2015). Effect of multi-electrode configuration on sensitivity to interaural timing differences in bilateral cochlear-implant users. The Journal of the Acoustical Society of America, 138, 3826–3833.

- Kerber S., & Seeber B. U. (2013). Localization in reverberation with cochlear implants: Predicting performance from basic psychophysical measures. Journal of the Association for Research in Otolaryngology, 14, 379–392.

- Kidd G., Arbogast T. L., Mason C. R., & Gallun F. J. (2005). The advantage of knowing where to listen. The Journal of the Acoustical Society of America, 118(3 Pt 1), 1614–1625.

- King S. E., Firszt J. B., Reeder R. M., Holden L. K., & Strube M. (2012). Evaluation of TIMIT sentence list equivalency with adult cochlear implant recipients. Journal of the American Academy of Audiology, 23(5), 313–331.

- Kock W. (1950). Binaural localization and masking. The Journal of the Acoustical Society of America, 22, 801–804.

- Koenig W. (1950). Subjective effects in binaural hearing [Letter to the editor]. The Journal of the Acoustical Society of America, 22, 61–62.

- Kolberg E. R., Sheffield S. W., Davis T. J., Sunderhaus L. S., & Gifford R. H. (2015). Cochlear implant microphone location affects speech recognition in diffuse noise. Journal of the American Academy of Audiology, 26(1), 51–58.

- Kong Y. Y., & Carlyon R. P. (2007). Improved speech recognition in noise in simulated binaurally combined acoustic and electric stimulation. The Journal of the Acoustical Society of America, 121, 3717–3727.

- Kong Y. Y., Stickney G. S., & Zeng F. G. (2005). Speech and melody recognition in binaurally combined acoustic and electric hearing. The Journal of the Acoustical Society of America, 117, 1351–1361.

- Kuhn G. F. (1977). Model for the interaural time differences in the azimuthal plane. The Journal of the Acoustical Society of America, 62, 157–167.

- Laback B., Pok S. M., Baumgartner W. D., Deutsch W. A., & Schmid K. (2004). Sensitivity to interaural level and envelope time differences of two bilateral cochlear implant listeners using clinical sound processors. Ear and Hearing, 25, 488–500.

- Lamel L., Kassel R., & Seneff S. (1986, January). Speech database development: Design and analysis of the acoustic–phonetic corpus. Paper presented at the DARPA Speech Recognition Workshop, Palo Alto, CA.

- Li N., & Loizou P. C. (2008). A glimpsing account for the benefit of simulated combined acoustic and electric hearing. The Journal of the Acoustical Society of America, 123, 2287–2294.

- Litovsky R. Y., Parkinson A., Arcaroli J., & Sammeth C. (2006). Simultaneous bilateral cochlear implantation in adults: A multicenter clinical study. Ear and Hearing, 27(6), 714–730.