Abstract

Purpose:

This study investigated the most efficient means of measuring pain intensity and pain interference with the highest level of measurement reliability, comparing ecological momentary assessment (EMA) to end of day (EOD) data in individuals with spinal cord injury.

Methods:

EMA (five times throughout the day) and EOD ratings of pain and pain interference were collected over a seven-day period. Multilevel models were used to examine the reliability of both EOD and EMA assessments by determining the amount of variability in these assessments over the course of a week (EOD) or a day (EMA). A multilevel version of the Spearman-Brown Prophecy formula was used to estimate values for reliability.

Results:

Findings indicated that a minimum of 5 days of EOD assessments was needed to achieve excellent reliability (≥ .90) for Pain Intensity and a minimum of 3 days of assessments was needed to achieve excellent reliability for Pain Interference. For EMA assessments, a minimum of one observation per day over 6 days, 2 over 4 days, 3 over 3 days, or 4 over 2 days is needed to achieve excellent reliability for Pain Intensity ratings. For Pain Interference ratings, a minimum of one observation per day over 5 days, 2 over 3 days, or 3 over 2 days is needed to achieve excellent reliability.

Conclusions:

These findings can help researchers and clinicians balance the cost/benefit tradeoffs of these different types of assessments by providing specific cutoffs for the numbers of each type of assessment that are needed to achieve excellent reliability.

Keywords: feasibility, spinal cord injury, ecological momentary assessment, daily diaries, pain, pain interference

The inherent subjectivity of pain necessitates its assessment by self-report. The most commonly used self-report measures involve asking the person to recall his or her pain (e.g., average, worst, best) over a defined time frame (e.g., the past 24 hours, week, month). However, recall of pain is fraught with problems and is a more complex task than it appears on its face,1 with evidence for the influence of peak and recency effects on recalled pain ratings.2 Ecological momentary assessment (EMA), or experience sampling, has been used to collect participants’ immediate experiences in real-world settings, minimizing recall bias and increasing measurement reliability.3,4 This approach is considered the most accurate means of capturing pain ratings and is generally regarded as the “gold standard” of assessment.4–7 Recent work suggests that EMA can also substantially improve reliability of measurement. For example, a recent study showed that a composite of five once-daily pain ratings resulted in measurement reliability of 0.90.5 Improving measurement reliability from the commonly accepted threshold of 0.70 to 0.90, for instance, provides a substantial boost to study power and decreases required sample size by 22%.8 In addition to improving measurement reliability, EMA facilitates within-person examination of factors associated with pain and provides a more granular view of treatment responsiveness.9
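To make the sample-size figure concrete, a hedged back-of-the-envelope illustration follows. It assumes (this is an assumption introduced here, not a derivation taken from the cited source) that measurement error attenuates a standardized effect by the square root of the reliability, so that the required sample size scales inversely with reliability. The symbols $N$, $\delta$, and $\rho_{xx}$ are notation introduced only for this sketch.

```latex
\delta_{\text{obs}} = \delta_{\text{true}} \sqrt{\rho_{xx}}
\quad\Rightarrow\quad
N \propto \frac{1}{\delta_{\text{obs}}^{2}} \propto \frac{1}{\rho_{xx}},
\qquad
\frac{N_{0.90}}{N_{0.70}} = \frac{0.70}{0.90} \approx 0.78
```

Under that assumption, moving from a reliability of 0.70 to 0.90 lowers the required sample size by roughly 22%, which is consistent with the figure quoted above.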

End of day (EOD) diaries are an alternative method to assess symptoms like pain; while they do not capture self-report in real-time, they do require recall of temporally proximal (same day) experiences. EOD diaries have the potential to reduce respondent burden and costs relative to EMA methods, and in chronic pain research they have been shown to have a high degree of correlation (≥0.90) with EMA ratings.6,10 However, it may not be the case that a single EOD assessment is more accessible and/or acceptable to respondents compared to multiple within-day EMA assessments. Indeed, our previous research has suggested lower missing data rates in EMA compared to EOD diaries11, which we speculated was at least partly due to the different technology platforms for each type of assessment - a wrist-worn device for EMA and web-based EOD diaries.

Decisions about pain assessment methods for any given research or clinical application are influenced by many factors, including consideration of respondent burden, sampling density needed to address the research question, required technology to complete the assessment, and the available time frame for assessment. Although previous research has established the superiority of EMA relative to recall measures in terms of reliability and study power, there is little information about how EMA compares to EOD data collection methods in terms of reliability. Such information can help investigators and clinicians to make informed decisions about measurement protocols. This study investigated the most efficient means of measuring pain intensity and pain interference, comparing EMA to EOD data, with the highest level of measurement reliability. This study was conducted in a sample of persons with chronic pain and spinal cord injury (SCI), a condition where chronic pain is a highly prevalent, intractable, distressing and disabling secondary condition.12–20 The feasibility of EMA in SCI has been previously demonstrated.11,21 Pain and pain interference data were collected using a 7-day EMA and EOD diary protocol.22 We determined the number of EOD assessments needed to reach a reliability coefficient of ≥0.90 for pain intensity and pain interference, as well as the minimum number of EMA observations required to achieve a reliability coefficient of ≥0.90 for both pain intensity and pain interference. We also examined the impact that time of day had on the estimated reliability for the EMA assessments.

Methods

Participants

Individuals with a medically documented SCI23 were recruited across three different treatment facilities (the University of Michigan, Wayne State University/Rehabilitation Institute of Michigan, and the University of Washington) for inclusion in this study. To be eligible, participants had to be at least 18 years old, able to comprehend and speak English at a 6th grade reading level, able to provide informed consent, and at least one year post-SCI. Participants were also required to endorse ≥ 4/10 average pain (on a 0 to 10 scale where 0 is no pain at all and 10 is the worst imaginable pain) in the past month and to be able to use a computer mouse/touchscreen and a wrist-worn monitor. Current inpatient treatment and atypical sleep/wake patterns were exclusion criteria. Participants were recruited as a part of a prospective observational study22 that examined how chronic pain acceptance relates to pain, distress, well-being, and functioning in the daily lives of individuals with chronic pain and SCI. All data were collected in accordance with, and with the approval of, each institution’s Institutional Review Board, and written documentation of consent was obtained from each participant.

We used the International Standards for Neurological Classification of SCI24 to characterize participants as paraplegic (impairment or loss of motor and/or sensory function in the thoracic, lumbar or sacral [but not cervical] segments of the spinal cord secondary to damage of neural elements within the spinal canal), or tetraplegic (impairment or loss of motor and/or sensory function in the cervical segments of the spinal cord due to damage of neural elements within the spinal canal). Participants were characterized as either complete (an absence of sensory and motor function in the lowest sacral segment) or incomplete (partial preservation of sensory and/or motor function is found below the neurological level and includes the lowest sacral segment).

Study Procedures

All study participants completed EMA and EOD assessments over a 7-day period. For the purposes of the current analysis, we compared EOD reports of pain intensity and pain interference collected using a daily survey diary with multiple EMAs of pain intensity and pain interference completed over a 7-day period. Details for the larger study protocol are reported elsewhere.11,22

EOD Assessments.

Participants completed an EOD daily diary that included measures of pain intensity and pain interference (described, below) each of 7 nights before bed by logging on to an online-data collection site (Assessment CenterSM).

Pain Intensity was measured using an adapted version of the Patient Reported Outcome Measurement Information System (PROMIS)25–28 Pain Intensity - Short Form 3a.29 This short form has three items: “how intense was your pain at its worst?”; “how intense was your average pain?”; and “what is your level of pain right now?”. The response timeframe was changed from “in the past 7 days…” to “today…”. Participants rated each item on a Likert scale (from 1 to 5). Scores were on a T metric (M = 50, SD = 10), with higher scores indicating higher pain intensity.

Pain Interference was measured using the Spinal Cord Injury - Quality of Life (SCI-QOL) measurement system30–32 Pain Interference 10-item short form.33 Items assess self-reported consequences of pain on emotional, physical and recreational activities. Items were adapted in that the context was changed from “In the past 7 days…” to “Today…”. Participants rated each item on a Likert scale (from 1 “not at all” to 5 “always”). Scores were on a T metric (M = 50, SD = 10), with higher scores indicating higher levels of pain interference.

Ecological Momentary Assessment.

EMA data that included measures of pain intensity and pain interference (described below) were collected using a wrist-worn accelerometer enhanced with a user interface for entry of self-report data (i.e., the PRO-Diary; CamNTech, Cambridge, UK). During the 7-day period, participants entered self-reported ratings of pain and pain interference 5 times throughout the day: upon waking, 11am, 3pm, 7pm, and bedtime. An audible alarm alerted participants to enter ratings at 11am, 3pm, and 7pm, and participant initiated ratings (with no prompt) at wake and bed times. Wake time was defined as the time that he/she became fully awake for the day, regardless of whether or not they remained in bed. Bedtime was defined as the time that they turned out the light or intended to go to sleep, not the time they necessarily got into bed. Pain and pain interference items were adapted from the “right now” item of the widely used Brief Pain Inventory34–37 for use in this study.

Momentary Pain Intensity was assessed by asking “What is your level of pain right now?” Responses ranged from 0 = “no pain” to 10 = “worst pain imaginable.”

Momentary Pain Interference was assessed by asking “How much is your pain interfering with what you are doing right now?” Responses ranged from 0 = “no interference” to 10 = “totally interfering.”

Data Analyses

Preliminary Analyses

Data distribution statistics were calculated in order to describe the sample and to check for assumptions underlying the primary analyses.

Primary Analyses

Multilevel models (MLM) were used to examine the reliability for both EOD and EMA assessments to determine the amount of variability in these assessments over the course of a week (i.e., EOD assessments) or day (i.e., for EMA ratings). For EOD analyses, we examined days (1–7) nested within people. For EMA analyses, we examined occasions (1–5) nested within days (1–7), nested within people. In addition, in order to determine whether or not time of day influenced reliability coefficients for EMA ratings, the MLM models were rerun separately for morning (Time 1), midday (Time 3) and evening (Time 5) ratings. Specifically, for each time point (Time 1, Time 3, and Time 5) the MLM examined days (1–7) nested within people. All multilevel models were unconditional variance component models; other than days and occasions, there were no other independent variables included in the model. These full information maximum likelihood mixed-effects MLMs are considered the standard for handling missing data that is characteristic of this type of study design.38 In addition, a multilevel version of the Spearman-Brown Prophecy formula was used in order to estimate values for reliability.39 Minimal acceptable reliability was specified as ≥ 0.70.40,41 Results also report the variation of scores for assessments at each time point. For these analyses, score variation reflects the square-root of the variance estimates that were generated in the associated MLM models. All statistical analyses were performed using MLwiN 2.27.42
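As a hedged sketch of what a multilevel Spearman-Brown projection looks like for the nesting described above, the following uses a common variance-components form. The notation is introduced here and is not taken from the cited source: $\sigma^2_{p}$ is the between-person variance, $\sigma^2_{d}$ the day-level variance (EMA models only), $\sigma^2_{e}$ the lowest-level residual variance, $D$ the number of days, and $K$ the number of occasions per day.

```latex
R_{\text{EMA}}(D, K) = \frac{\sigma^2_{p}}{\sigma^2_{p} + \dfrac{\sigma^2_{d}}{D} + \dfrac{\sigma^2_{e}}{D K}},
\qquad
R_{\text{EOD}}(D) = \frac{\sigma^2_{p}}{\sigma^2_{p} + \dfrac{\sigma^2_{e}}{D}}
= \frac{D\,\rho}{1 + (D - 1)\,\rho},
\quad
\rho = \frac{\sigma^2_{p}}{\sigma^2_{p} + \sigma^2_{e}}
```

The two-level expression reduces to the classic Spearman-Brown formula, with the single-assessment reliability $\rho$ playing the role of the intraclass correlation.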

Normality was assessed using normal quantile plots and through an examination of the distribution of responses for the dependent variables for the presence of floor and ceiling effects. The data were reasonably well approximated by a normal distribution for EMA and EOD Pain Intensity, and EOD Pain Interference. For EMA Pain Interference, although the data were reasonably well approximated by a normal distribution, there was a floor effect (for 30% of all observations). While there are models that address the presence of a substantial number of zeros, we elected to retain our analytic approach for consistency across measures.

Sample Size Considerations

Sample size considerations were based on sampling needs for moderation analyses that are reported elsewhere (n = 102 participants were needed to detect a medium effect size between two groups - high pain acceptance and low pain acceptance - on the association between pain and functioning).22

Results

Participant Descriptive Statistics

One hundred thirty-one individuals participated in the 7-day home monitoring period (29.0% paraplegia complete, 25.2% paraplegia incomplete, 7.6% tetraplegia complete, 29.8% tetraplegia incomplete, 8.4% missing). The majority of participants were male (74%) and Caucasian (74%); 19.1% were African American, 1.5% were Asian, and 3.1% were American Indian or Multiracial (race was missing for 2.3% of the sample). SCI was most frequently the result of a motor vehicle accident (33.6%), followed by falls (19.8%), gunshot wounds or other violence (16%), diving and other sports/recreation (both at 7.6%), and medical/surgical accidents (3.8%). Almost half of participants used a manual wheelchair (47.3%), followed by a power wheelchair (28.2%), using a cane or walker (12.2%), walking without assistance (10.7%), and being pushed by someone else (1.5%).

Primary Analyses

Table 1 provides descriptive data for the EOD and EMA assessments for each day of the home monitoring period. For both EOD and EMA assessments, pain ratings were stable and modest in terms of intensity. Furthermore, ~20% (or less) of the data was missing on any given day, which has been previously reported.11

Table 1.

Descriptive Data for End of Day (EOD) and Ecological Momentary Assessment (EMA) Data (N = 131)

| Measure | Day 1 | Day 2 | Day 3 | Day 4 | Day 5 | Day 6 | Day 7 | % at Floor | % at Ceiling |
|---|---|---|---|---|---|---|---|---|---|
| EOD | | | | | | | | | |
| Pain Intensity** | 50.9 (6.5); 20.3% | 51.2 (6.3); 20.3% | 52.4 (7.0); 20.3% | 51.1 (6.8); 20.3% | 51.0 (6.9); 20.3% | 51.2 (7.0); 20.3% | 50.7 (7.9); 20.3% | 1.7% | 0.7% |
| Pain Interference** | 56.8 (7.2); 21.1% | 57.2 (6.9); 21.1% | 57.2 (8.0); 21.1% | 56.3 (7.7); 21.1% | 56.5 (7.3); 21.1% | 56.2 (8.1); 21.1% | 55.9 (7.7); 21.1% | 8.5% | 3.1% |
| EMA | | | | | | | | | |
| Pain Intensity*** | 3.9 (2.4); 16.5% | 4.0 (2.4); 16.5% | 4.0 (2.4); 16.5% | 4.1 (2.3); 16.5% | 4.2 (2.3); 16.5% | 4.0 (2.4); 16.5% | 4.0 (2.4); 16.5% | 8% | 0.6% |
| Pain Interference*** | 2.4 (2.5); 17.6% | 2.5 (2.5); 17.6% | 2.5 (2.5); 17.6% | 2.5 (2.6); 17.6% | 2.6 (2.5); 17.6% | 2.5 (2.6); 17.6% | 2.5 (2.6); 17.6% | 32.9% | 1.0% |

Note. EOD = end of day; EMA = ecological momentary assessment. Day columns show M (SD); % missing. Floor and ceiling effects are for the 7-day period.

** scores are on a T metric (M=50, SD=10)

*** ratings are on a scale of 0–10; in all cases, higher scores indicate worse pain

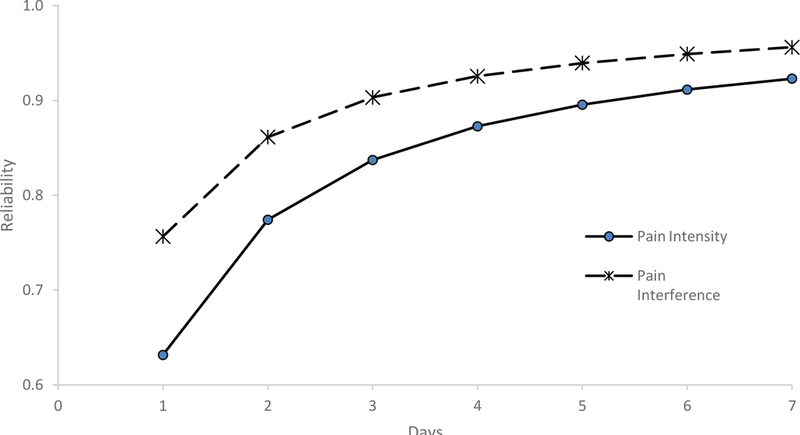

Multilevel models were fit for EOD measures (see Table 2). For pain interference, on average, scores varied by 3.67 points (~.5 of a standard deviation as scores are on a T metric) over the course of the seven-day monitoring period (as noted above, score variation reflects the square-root of the variance estimate provided in Table 2). For EOD pain intensity, on average, scores varied by 4.25 points (possible scores can range from 3–15) over the course of the seven-day period. At the individual level, pain interference scores varied by 6.46 points and pain intensity scores varied by 5.57 points. Table 3 and Figure 1 provide reliability data for EOD ratings of pain intensity and pain interference. For pain intensity, a minimum of 2 days of assessments was needed to achieve adequate reliability (≥ 0.70), while a minimum of 5 days of assessments was needed to achieve excellent reliability (≥ 0.90). For pain interference, a single assessment yielded adequate reliability, while a minimum of 3 days of assessments was needed to achieve excellent reliability.
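The arithmetic behind these EOD figures can be sketched in a few lines of Python. This is a minimal illustration, not the authors' MLwiN procedure: it assumes the two-level Spearman-Brown form (person variance divided by person variance plus day-level error variance over the number of days), plugs in the Pain Intensity variance estimates from Table 2, and rounds to two decimals as the tables do. The function names are hypothetical.

```python
import math

# Variance components for EOD Pain Intensity, copied from Table 2.
VAR_PERSON = 30.97  # "Individual" random effect (between persons)
VAR_DAY = 18.08     # "Day - Error" random effect (within person, across days)


def eod_reliability(var_person: float, var_day: float, n_days: int) -> float:
    """Projected reliability of a person's mean over n_days daily ratings."""
    return var_person / (var_person + var_day / n_days)


def min_days_for(target: float, var_person: float, var_day: float,
                 max_days: int = 7):
    """Smallest number of days whose reliability, rounded to two decimals
    (an assumption mirroring the tables), reaches the target; None if
    max_days is not enough."""
    for n_days in range(1, max_days + 1):
        if round(eod_reliability(var_person, var_day, n_days), 2) >= target:
            return n_days
    return None


if __name__ == "__main__":
    # Score variation is the square root of the variance estimate.
    print("day-level score variation:", round(math.sqrt(VAR_DAY), 2))
    for n_days in range(1, 8):
        print(n_days, round(eod_reliability(VAR_PERSON, VAR_DAY, n_days), 2))
    print("days for adequate reliability:",
          min_days_for(0.70, VAR_PERSON, VAR_DAY))
    print("days for excellent reliability:",
          min_days_for(0.90, VAR_PERSON, VAR_DAY))
```

With these inputs the sketch gives 0.63 for a single day and 0.90 (after rounding) for five days, in line with the Pain Intensity values reported for the EOD assessments. Applied to the Pain Interference components, the same formula gives slightly lower values than the published ones (about 0.76 rather than 0.78 for one day), so it is best read as an approximation of the reported procedure, not a reproduction of it.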

Table 2.

Model Estimates for End of Day (EOD) Pain Intensity and Pain Interference Assessments (N = 125)

| Effect | Pain Intensity: Estimate | Pain Intensity: SE | Pain Intensity: p | Pain Interference: Estimate | Pain Interference: SE | Pain Interference: p |
|---|---|---|---|---|---|---|
| Random Effects | | | | | | |
| Individual | 30.97 | 4.37 | 0.0000 | 41.73 | 5.63 | <.00001 |
| Day - Error | 18.08 | 1.06 | 0.0000 | 13.44 | 0.79 | <.00001 |
| Fixed Effects | | | | | | |
| Intercept | 51.37 | 0.53 | 0.0000 | 56.94 | 0.60 | <.00001 |

Table 3.

Reliability Data for End of Day (EOD) Assessments (N = 125)

| Day | Pain Intensity r (SE) | Pain Intensity n | Pain Interference r (SE) | Pain Interference n |
|---|---|---|---|---|
| 1 | 0.63 (0.04) | 106 | 0.78 (0.03) | 105 |
| 2 | 0.77 (0.03) | 106 | 0.88 (0.02) | 104 |
| 3 | 0.84 (0.02) | 94 | **0.91 (0.01)** | 98 |
| 4 | 0.87 (0.02) | 102 | **0.93 (0.01)** | 101 |
| 5 | **0.90 (0.01)** | 99 | **0.95 (0.01)** | 96 |
| 6 | **0.91 (0.01)** | 104 | **0.96 (0.01)** | 104 |
| 7 | **0.92 (0.01)** | 100 | **0.96 (0.01)** | 100 |

Note. Bolding indicates excellent reliability (i.e., reliability coefficient ≥ .90)

Figure 1. Reliability Data for End of Day (EOD) Assessments (N = 125).

This figure shows the estimated reliability coefficients for both of the pain assessments (Pain Intensity and Pain Interference) over the course of the seven-day period.

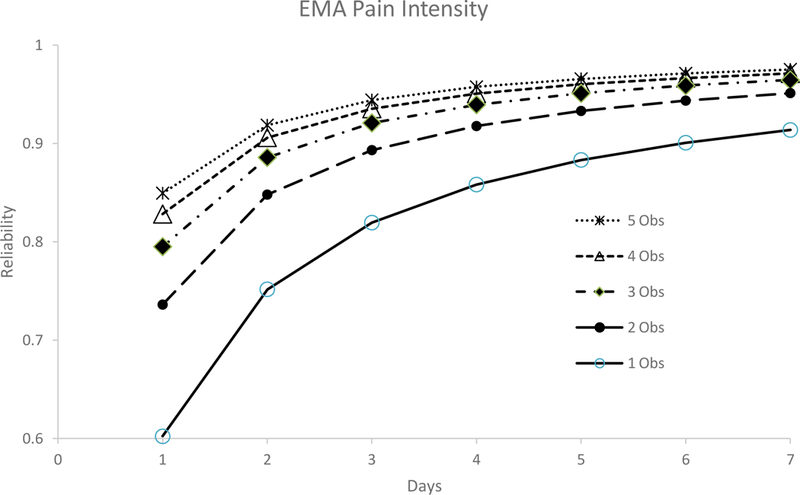

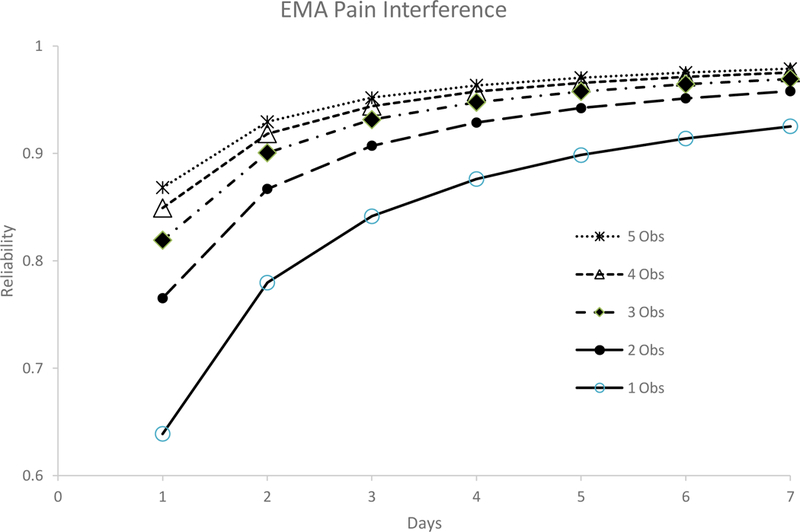

Multilevel models were also fit for EMA measures (see Table 4). Specifically, for pain interference, on average, scores varied by 1.47 points (possible scores can range from 0–10) over the course of the day (as noted above, score variation reflects the square-root of the variance estimate provided in Table 4). EMA pain intensity scores, on average, varied by 1.44 points (possible scores can range from 0–10) over the course of the day. At the day level (within person), pain interference scores varied by 0.45 points and pain intensity scores varied by 0.44 points over the course of the seven-day period. At the individual level (between persons), pain interference scores varied by 2.04 points and pain intensity scores varied by 1.86 points. Table 5 and Figures 2A and 2B provide reliability data for the EMA ratings of pain intensity and pain interference. For pain intensity, at least 2 assessments were needed (either two assessments within the same day or a single assessment on each of two days) to achieve adequate reliability, whereas a minimum of one observation per day over 6 days, 2 observations over 4 days, 3 observations over 3 days, or 4 observations over 2 days was needed to achieve excellent reliability. For pain interference, at least 2 assessments were needed (either two assessments within the same day or a single assessment on each of two days) to achieve adequate reliability, whereas a minimum of one observation per day over 5 days, 2 observations over 3 days, or 3 observations over 2 days was needed to achieve excellent reliability. In addition, reliability coefficients did not differ according to time of day (morning, midday, and evening; Table 6).
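The day-by-observation trade-off described above can be explored with a short Python sketch. It is an illustration under stated assumptions, not the authors' MLwiN analysis: it assumes a three-level Spearman-Brown form in which day-level variance is divided by the number of days and occasion-level variance by the total number of observations, uses the variance estimates from Table 4, and rounds to two decimals as the tables do. The function and dictionary names are hypothetical.

```python
# Variance components from Table 4: (individual, day, occasion - error).
VARIANCE_COMPONENTS = {
    "pain_intensity": (3.45, 0.19, 2.08),
    "pain_interference": (4.17, 0.20, 2.16),
}


def ema_reliability(var_person: float, var_day: float, var_occasion: float,
                    n_days: int, n_per_day: int) -> float:
    """Projected reliability of a person's mean over n_days days with
    n_per_day momentary ratings on each day."""
    error = var_day / n_days + var_occasion / (n_days * n_per_day)
    return var_person / (var_person + error)


def min_days_by_density(components, target: float = 0.90,
                        max_days: int = 7, max_per_day: int = 5) -> dict:
    """For each number of ratings per day, the fewest days whose reliability
    (rounded to two decimals, an assumption mirroring the tables) reaches
    the target; None if max_days is not enough."""
    result = {}
    for n_per_day in range(1, max_per_day + 1):
        result[n_per_day] = next(
            (d for d in range(1, max_days + 1)
             if round(ema_reliability(*components, d, n_per_day), 2) >= target),
            None,
        )
    return result


if __name__ == "__main__":
    for measure, components in VARIANCE_COMPONENTS.items():
        print(measure, min_days_by_density(components))
```

Under these assumptions the sketch returns 6, 4, 3, 2, and 2 days for one to five daily Pain Intensity ratings, and 5, 3, 2, 2, and 2 days for Pain Interference. Some of these combinations sit right at the threshold (for example, one Pain Interference rating per day over 5 days is about 0.898 before rounding), so a protocol that needs a firm margin may want an extra day or observation.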

Table 4.

Model Estimates for Ecological Momentary Assessment (EMA) Pain Intensity and Pain Interference (N = 130)

| Effect | Pain Intensity: Estimate | Pain Intensity: SE | Pain Intensity: p | Pain Interference: Estimate | Pain Interference: SE | Pain Interference: p |
|---|---|---|---|---|---|---|
| Random Effects | | | | | | |
| Individual | 3.45 | 0.44 | 0.0000 | 4.17 | 0.53 | <.00001 |
| Day | 0.19 | 0.04 | 0.0000 | 0.20 | 0.04 | <.00001 |
| Occasion - Error | 2.08 | 0.06 | 0.0000 | 2.16 | 0.06 | <.00001 |
| Fixed Effects | | | | | | |
| Intercept | 4.02 | 0.17 | 0.0000 | 2.54 | 0.18 | <.00001 |

Table 5.

Reliability data for Ecological Momentary Assessments (EMAs; N = 130)

| Day | Total # of observations | Observation 1 r (SE) | Observation 2 r (SE) | Observation 3 r (SE) | Observation 4 r (SE) | Observation 5 r (SE) |
|---|---|---|---|---|---|---|
| Pain Intensity | | | | | | |
| 1 | 555 | 0.60 (0.03) | 0.74 (0.03) | 0.80 (0.02) | 0.83 (0.02) | 0.85 (0.02) |
| 2 | 549 | 0.75 (0.02) | 0.85 (0.02) | 0.89 (0.01) | **0.91 (0.01)** | **0.92 (0.01)** |
| 3 | 524 | 0.82 (0.02) | 0.89 (0.01) | **0.92 (0.01)** | **0.94 (0.01)** | **0.94 (0.01)** |
| 4 | 541 | 0.86 (0.02) | **0.92 (0.01)** | **0.94 (0.01)** | **0.95 (0.01)** | **0.96 (0.01)** |
| 5 | 533 | 0.88 (0.01) | **0.93 (0.01)** | **0.95 (0.01)** | **0.96 (0.01)** | **0.97 (0.00)** |
| 6 | 529 | **0.90 (0.01)** | **0.94 (0.01)** | **0.96 (0.01)** | **0.97 (0.00)** | **0.97 (0.00)** |
| 7 | 507 | **0.91 (0.01)** | **0.95 (0.01)** | **0.96 (0.00)** | **0.97 (0.00)** | **0.98 (0.00)** |
| Pain Interference | | | | | | |
| 1 | 548 | 0.64 (0.04) | 0.77 (0.03) | 0.82 (0.03) | 0.85 (0.02) | 0.87 (0.02) |
| 2 | 546 | 0.78 (0.03) | 0.87 (0.02) | **0.90 (0.02)** | **0.92 (0.01)** | **0.93 (0.01)** |
| 3 | 521 | 0.84 (0.02) | **0.91 (0.02)** | **0.93 (0.01)** | **0.94 (0.01)** | **0.95 (0.01)** |
| 4 | 539 | 0.88 (0.02) | **0.93 (0.01)** | **0.95 (0.01)** | **0.96 (0.01)** | **0.96 (0.01)** |
| 5 | 531 | **0.90 (0.02)** | **0.94 (0.01)** | **0.96 (0.01)** | **0.97 (0.01)** | **0.97 (0.01)** |
| 6 | 525 | **0.91 (0.01)** | **0.95 (0.01)** | **0.96 (0.01)** | **0.97 (0.01)** | **0.98 (0.00)** |
| 7 | 503 | **0.93 (0.01)** | **0.96 (0.01)** | **0.97 (0.01)** | **0.98 (0.00)** | **0.98 (0.00)** |

Note. Bolding indicates excellent reliability (i.e., reliability coefficient ≥ .90)

Figure 2A. Reliability data for Ecological Momentary Assessments (EMAs) of Pain Intensity (N = 130).

This figure shows the estimated reliability coefficients for each of the five different Ecological Momentary Assessment (EMA) ratings that were provided each day for Pain Intensity over the course of the seven-day period.

Figure 2B. Reliability data for Ecological Momentary Assessments (EMAs) of Pain Interference (N = 130).

This figure shows the estimated reliability coefficients for each of the five different Ecological Momentary Assessment (EMA) ratings that were provided each day for Pain Interference over the course of the seven-day period.

Table 6.

Reliability data for Morning, Midday and Evening Ecological Momentary Assessments (EMAs; N = 130)

| Day | Morning: Pain Intensity | Morning: Pain Interference | Midday: Pain Intensity | Midday: Pain Interference | Evening: Pain Intensity | Evening: Pain Interference |
|---|---|---|---|---|---|---|
| 1 | 0.67 | 0.69 | 0.68 | 0.69 | 0.68 | 0.66 |
| 2 | 0.80 | 0.82 | 0.81 | 0.82 | 0.81 | 0.80 |
| 3 | 0.86 | 0.87 | 0.86 | 0.87 | 0.87 | 0.86 |
| 4 | 0.89 | 0.90 | 0.89 | 0.90 | 0.90 | 0.89 |
| 5 | 0.91 | 0.92 | 0.91 | 0.92 | 0.91 | 0.91 |
| 6 | 0.93 | 0.93 | 0.93 | 0.93 | 0.93 | 0.92 |
| 7 | 0.94 | 0.94 | 0.94 | 0.94 | 0.94 | 0.93 |

Discussion

The primary purpose of this analysis was to investigate the most efficient methods of measuring pain and pain interference with the highest level of measurement reliability while minimizing respondent burden in a sample of persons with SCI and chronic pain. Specifically, our findings indicated that the number of assessments needed to establish excellent reliability differed by both modality (EOD versus EMA assessment) and measure (pain intensity or pain interference).

The data provided in Tables 3 and 5 can be used to optimize the assessment of pain using EMA and EOD assessments. For example, while a reliability of 0.90 may provide an adequate balance between study power and precision,8 a clinician/researcher may elect a lower cutoff (i.e., good reliability or > .80) to balance costs (e.g., increased participant burden, monetary expense for data capture) associated with utilizing this type of methodology.

In addition, the minimum number of assessments required to achieve adequate, good, or excellent reliability was consistently lower for Pain Interference than Pain Intensity. For the EOD assessments, this is most likely due to the fact that daily pain interference was assessed with a 10-item short form whereas the measure for daily pain intensity was much shorter (i.e., 3 items). Specifically, the greater the number of items on a scale, the more reliable the measurement, according to classical test theory methodology.43 It is also possible that Pain Interference may be a less psychometrically robust assessment of pain. The presence of a floor effect for EMA Pain Interference would support this premise. In addition, it is also possible that Pain Interference is less robust given the fact that this rating is, by definition, grounded within the context of activity (which may make subjective ratings more variable given comprehension or varying levels of daily activities, as well as varying expectations about activity involvement). As such, it seems plausible that this contextual element would increase between-subject variability, which in turn would be associated with a decrease in measurement reliability. Future work could consider using different items (of the same length) to examine whether reliability and sensitivity could be improved when examining pain in persons with SCI. It is unclear why fewer assessments were needed for Pain Interference than for Pain Intensity for the EMA assessments; this difference warrants further investigation. Regardless, these findings support the notion that there are distinct aspects of pain that can be measured in SCI.17,44

While EMA has been touted as a superior approach to the assessment of pain because responses are less reliant on memory, which may be biased,45 it can have associated costs for both the researcher and the participant. For example, EMA requires hardware for monitoring and data capture and more sophisticated analytical approaches, and it is potentially a time burden for study participants. Furthermore, high response frequency for EMA assessments raises concerns for compliance (although in this sample missing data rates for EMA assessments [~16%] were generally lower than the missing data for EOD assessments [~20%]). Data from this study suggest that both EMA and EOD assessments of pain are reliable, that the actual number of ratings per type of modality is generally similar, and that the timing of the EMA assessments does not impact reliability. For example, for pain intensity, 5 EOD assessments are needed to achieve excellent reliability (i.e., one assessment on each of 5 days), relative to EMA assessments of pain intensity, which would require between 6 (1 assessment on each of 6 days) and 8 assessments (2 observations on each of 4 days). Similarly, for pain interference, 3 observations are needed to achieve excellent reliability for EOD assessments, relative to between 5 (1 rating on each of 5 days) and 6 (2 observations on each of 3 days or 3 observations on each of 2 days) for EMA assessments. Thus, depending on the time frame for any given protocol, EMA may prove advantageous over EOD or vice versa depending on specific study design needs. Findings also support previous research that highlights the importance of multiple ratings to improve measurement sensitivity and specificity of pain.5 More specifically, understanding the number of assessments needed to achieve excellent reliability, regardless of assessment modality (EOD or EMA), can maximize the statistical precision and sensitivity of pain assessments in individuals with SCI.

The number of items that are administered, as well as the administration format that is used (i.e., computer adaptive test or static short forms), are additional factors that warrant further consideration in the optimization of the assessment of pain. As mentioned above, the number of assessments needed to establish adequate, good, or excellent reliability was consistently lower for Pain Interference than Pain Intensity, and we suspect that the smaller number of Pain Intensity items may at least partially account for this finding (at least for the EOD assessments). Thus, using measures that include more items may counterbalance the need for requiring more assessments to achieve greater measurement reliability. As such, more information is needed to optimize the balance between the incremental administrative burden of adding additional items and the number of independent observations needed to achieve different levels of measurement reliability. Given that the EOD measures in this study offer a computer adaptive test (CAT) administration option, it is possible that the CAT administration may offer a solution for optimizing this balance (given that CAT can be specified to administer a minimum and maximum number of items and can be programmed to stop administration once a pre-specified standard error is met).

There are also several limitations to this study and its results. First, as with many other studies in SCI, our sample is predominantly male, limiting the generalizability of findings for females with SCI. Furthermore, while we assessed EMA and EOD ratings of pain, this study did not include the same measures during the in-person baseline or follow-up assessments, precluding our ability to compare these types of ratings with the type of solitary assessment of pain that is characteristic of many study designs (that include baseline, interim, and follow-up assessments). In addition, the relatively high rates of missing data for both EMA [~16%] and EOD assessments [~20%] raise a potential concern. These concerns are somewhat mitigated by the fact that they are only slightly higher than missing data rates in other clinical populations (~10%) without the degree of impairment seen in SCI.46–49 Furthermore, the validity of both the EOD and EMA assessments has not been established. For example, while EMA methods are often considered the “gold standard”, there are no data to support or refute this claim and it is possible that EMA and recall measures of pain, for instance, might be tapping distinct constructs altogether.3 Fortunately, the potential concern of reactivity or temporal shifts in ratings was not present in these data (a summary of these analyses is provided elsewhere50). In addition, the presence of a floor effect for EMA Pain Interference presented an analytical challenge in our data. While we elected to retain our approach for consistency across the different measures, further investigation of using statistical models that address the presence of a substantial number of zeros could be considered. In addition, all analyses used a modified version of the PROMIS measures, one in which the time frame of the original items (“in the last 7 days”) was removed; yet, scores were derived using the original calibration data that included this time frame. Thus, the calibration data may over- or under-estimate the pain ratings of participants in this study. Finally, future work is needed to directly compare EMA and EOD assessments to determine the effect that EMA reports may have on same day summative reporting, as well as the overall validity of these types of data, which is complicated by the issues raised earlier in this paragraph.

Despite these limitations, this study provides important information for optimizing the assessment of pain using EMA and EOD assessments. The data provided herein can help researchers and clinicians balance the cost/benefit tradeoffs of these different types of assessments by providing specific cutoffs for the numbers of each type of assessment that are needed to achieve excellent reliability. Future study designs can consider these recommendations so as to minimize the participant assessment burden that comes with having to complete repeat assessments of pain. Furthermore, findings highlight how pain in SCI is multifaceted, and thus ratings of pain intensity (while typically the most common type of pain assessment in clinical care) do not capture the full breadth of the pain experience in individuals with SCI. Finally, consideration of how pain interferes with daily life and activities is critical for a richer understanding of the clinical implications that pain has on functioning in SCI.

Compliance with Ethical Standards:

Acknowledgments, Funding and Disclosures

We thank Siera Goodnight, Kristen Pickup, Daniela Ristova-Trendov, Christopher Garbaccio, Jessica Mackelprang-Carter, and Angela Garza for collecting and managing these data. A sincere thanks to all of our study participants for their effort in being in this study. Research reported in this publication was supported by the Craig H. Neilsen Foundation under award number 287372 (PI: Kratz). The content is solely the responsibility of the authors and does not necessarily represent the views of the Craig H. Neilsen Foundation. Dr. Kratz was supported during manuscript preparation by a grant from the National Institute of Arthritis and Musculoskeletal and Skin Diseases (award number K01AR064275). The authors have no conflicts of interest to report.

Funding: Research reported in this publication was supported by a grant from the Craig H. Neilsen Foundation under award number 287372 (PI: Kratz). The content is solely the responsibility of the authors and does not necessarily represent the views of the Craig H. Neilsen Foundation.

Footnotes

Conflict of Interest: The authors have no conflicts of interest to report.

Ethical approval: All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed consent: Informed consent was obtained from all individual participants included in the study.

References

- 1. Broderick JE, Stone AA, Calvanese P, Schwartz JE, Turk DC. Recalled pain ratings: a complex and poorly defined task. J Pain. 2006;7(2):142–149.

- 2. Schneider S, Stone AA, Schwartz JE, Broderick JE. Peak and end effects in patients’ daily recall of pain and fatigue: a within-subjects analysis. J Pain. 2011;12(2):228–235.

- 3. Shiffman S, Stone AA, Hufford MR. Ecological momentary assessment. Annu Rev Clin Psychol. 2008;4:1–32.

- 4. Schneider S, Stone AA. Ambulatory and diary methods can facilitate the measurement of patient-reported outcomes. Qual Life Res. 2016;25(3):497–506.

- 5. Heapy A, Dziura J, Buta E, Goulet J, Kulas JF, Kerns RD. Using multiple daily pain ratings to improve reliability and assay sensitivity: how many is enough? J Pain. 2014;15(12):1360–1365.

- 6. Broderick JE, Schwartz JE, Schneider S, Stone AA. Can End-of-day reports replace momentary assessment of pain and fatigue? J Pain. 2009;10(3):274–281.

- 7. Salaffi F, Sarzi-Puttini P, Atzeni F. How to measure chronic pain: New concepts. Best Pract Res Cl Rh. 2015;29(1):164–186.

- 8. Perkins DO, Wyatt RJ, Bartko JJ. Penny-wise and pound-foolish: The impact of measurement error on sample size requirements in clinical trials. Biol Psychiat. 2000;47(8):762–766.

- 9. Stone AA, Broderick JE. Real-time data collection for pain: appraisal and current status. Pain Med. 2007;8 Suppl 3:S85–93.

- 10. Stone AA, Broderick JE, Schwartz JE. Validity of average, minimum, and maximum end-of-day recall assessments of pain and fatigue. Contemp Clin Trials. 2010;31(5):483–490.

- 11. Kratz AL, Kalpakjian CZ, Hanks RA. Are intensive data collection methods in pain research feasible in those with physical disability? A study in persons with chronic pain and spinal cord injury. Qual Life Res. 2017;26(3):587–600.

- 12. Siddall PJ, McClelland JM, Rutkowski SB, Cousins MJ. A longitudinal study of the prevalence and characteristics of pain in the first 5 years following spinal cord injury. Pain. 2003;103(3):249–257.

- 13. Dijkers M, Bryce T, Zanca J. Prevalence of chronic pain after traumatic spinal cord injury: A systematic review. J Rehabil Res Dev. 2009;46(1):13–29.

- 14. Siddall PJ, Loeser JD. Pain following spinal cord injury. Spinal Cord. 2001;39(2):63–73.

- 15. Cardenas DD, Jensen MP. Treatments for chronic pain in persons with spinal cord injury: A survey study. J Spinal Cord Med. 2006;29(2):109–117.

- 16. Budh CN, Osteraker AL. Life satisfaction in individuals with a spinal cord injury and pain. Clin Rehabil. 2007;21(1):89–96.

- 17. Cruz-Almeida Y, Alameda G, Widerstrom-Noga EG. Differentiation between pain-related interference and interference caused by the functional impairments of spinal cord injury. Spinal Cord. 2009;47(5):390–395.

- 18. Ataoglu E, Tiftik T, Kara M, Tunc H, Ersoz M, Akkus S. Effects of chronic pain on quality of life and depression in patients with spinal cord injury. Spinal Cord. 2013;51(1):23–26.

- 19. Finnerup NB. Pain in patients with spinal cord injury. Pain. 2013;154 Suppl 1:S71–76.

- 20. Mehta S, McIntyre A, Janzen S, Loh E, Teasell R, Spinal Cord Injury Rehabilitation Evidence T. Systematic Review of Pharmacologic Treatments of Pain After Spinal Cord Injury: An Update. Arch Phys Med Rehabil. 2016;97(8):1381–1391 e1381.

- 21. Kalpakjian CZ, Farrell DJ, Albright KJ, Chiodo A, Young EA. Association of daily stressors and salivary cortisol in spinal cord injury. Rehabil Psychol. 2009;54(3):288–298.

- 22. Kratz AL, Ehde DM, Bombardier CH, Kalpakjian CZ, Hanks RA. Pain Acceptance Decouples the Momentary Associations Between Pain, Pain Interference, and Physical Activity in the Daily Lives of People With Chronic Pain and Spinal Cord Injury. J Pain. 2017;18(3):319–331.

- 23. Kirshblum SC, Burns SP, Biering-Sorensen F, et al. International standards for neurological classification of spinal cord injury (revised 2011). J Spinal Cord Med. 2011;34(6):535–546.

- 24. Kirshblum SC, Waring W, Biering-Sorensen F, et al. Reference for the 2011 revision of the International Standards for Neurological Classification of Spinal Cord Injury. J Spinal Cord Med. 2011;34(6):547–554.

- 25. Riley WT, Rothrock N, Bruce B, et al. Patient-reported outcomes measurement information system (PROMIS) domain names and definitions revisions: further evaluation of content validity in IRT-derived item banks. Qual Life Res. 2010;19(9):1311–1321.

- 26. Cella D, Riley W, Stone A, et al. The Patient-Reported Outcomes Measurement Information System (PROMIS) developed and tested its first wave of adult self-reported health outcome item banks: 2005–2008. J Clin Epidemiol. 2010;63(11):1179–1194.

- 27. Czajkowski SM, Cella D, Stone AA, Amtmann D, Keefe F. Patient-Reported Outcomes Measurement Information System (Promis): Using New Theory and Technology to Improve Measurement of Patient-Reported Outcomes in Clinical Research. Ann Behav Med. 2010;39:46–46.

- 28. Cella D, Rothrock N, Choi S, Lai JS, Yount S, Gershon R. Promis Overview: Development of New Tools for Measuring Health-Related Quality of Life and Related Outcomes in Patients with Chronic Diseases. Ann Behav Med. 2010;39:47–47.

- 29. Donders J, Tulsky DS, Zhu J. Criterion validity of new WAIS-II subtest scores after traumatic brain injury. J Int Neuropsych Soc. 2001;7(7):892–898.

- 30. Tulsky DS, Kisala PA, Victorson D, et al. Methodology for the development and calibration of the SCI-QOL item banks. J Spinal Cord Med. 2015;38(3):270–287.

- 31. Tulsky DS, Kisala PA, Victorson D, et al. Overview of the Spinal Cord Injury-Quality of Life (SCI-QOL) measurement system. J Spinal Cord Med. 2015;38(3):257–269.

- 32. Tulsky DS, Kisala PA. The Spinal Cord Injury-Quality of Life (SCI-QOL) measurement system: Development, psychometrics, and item bank calibration. J Spinal Cord Med. 2015;38(3):251–256.

- 33. Cohen ML, Kisala PA, Dyson-Hudson TA, Tulsky DS. Measuring pain phenomena after spinal cord injury: Development and psychometric properties of the SCI-QOL Pain Interference and Pain Behavior assessment tools. J Spinal Cord Med. 2017:1–14.

- 34. Cleeland CS. Measurement of pain by subjective report. In: Chapman CR, Loeser JD, eds. Advances in Pain Research and Therapy. Vol 12. New York, NY: Raven Press; 1989:391–403.

- 35. Cleeland CS, Ryan KM. Pain assessment: global use of the Brief Pain Inventory. Ann Acad Med Singapore. 1994;23(2):129–138.

- 36. Osborne TL, Jensen MP, Ehde DM, Hanley MA, Kraft G. Psychosocial factors associated with pain intensity, pain-related interference, and psychological functioning in persons with multiple sclerosis and pain. Pain. 2007;127(1–2):52–62.

- 37. Osborne TL, Raichle KA, Jensen MP, Ehde DM, Kraft G. The reliability and validity of pain interference measures in persons with multiple sclerosis. J Pain Symptom Manage. 2006;32(3):217–229.

- 38. Schafer JL, Graham JW. Missing data: our view of the state of the art. Psychol Methods. 2002;7(2):147–177.

- 39. Raudenbush SW, Bryk AS. Hierarchical Linear Models in Social and Behavioral Research: Applications and Data Analysis Methods. 2nd ed. Thousand Oaks, CA: Sage Publications; 1992.

- 40. Cohen J. Statistical power analysis for the behavioral sciences. 2nd ed. New York: Academic Press; 1988.

- 41. DeVellis R. Scale development: Theory and applications. 4th ed. Los Angeles, CA: Sage; 2017.

- 42. Rasbash J, Steele F, Browne WJ, Goldstein H. User’s Guide to MLwiN, v2.26. University of Bristol: Centre for Multilevel Modelling; 2012.

- 43. Nunnally J, Bernstein I. Psychometric theory. New York, NY: McGraw-Hill; 1994.

- 44. Felix ER, Cruz-Almeida Y, Widerstrom-Noga EG. Chronic pain after spinal cord injury: what characteristics make some pains more disturbing than others? J Rehabil Res Dev. 2007;44(5):703–715.

- 45. Stone A, Shiffman S, Atienza A, Nebling L, eds. The science of real-time data capture: Self-reports in health research. New York: Oxford University; 2007.

- 46. Stone AA, Shiffman S, Schwartz JE, Broderick JE, Hufford MR. Patient compliance with paper and electronic diaries. Control Clin Trials. 2003;24(2):182–199.

- 47. Stone AA, Shiffman S, Schwartz JE, Broderick JE, Hufford MR. Patient non-compliance with paper diaries. Brit Med J. 2002;324(7347):1193–1194.

- 48. Stone AA, Broderick JE, Schwartz JE, Shiffman S, Litcher-Kelly L, Calvanese P. Intensive momentary reporting of pain with an electronic diary: reactivity, compliance, and patient satisfaction. Pain. 2003;104(1–2):343–351.

- 49. Kratz AL, Davis MC, Zautra AJ. Attachment predicts daily catastrophizing and social coping in women with pain. Health Psychol. 2012;31(3):278–285.

- 50. Kratz AL, Ansari S, Duda M, et al. Feasibility of the E4 Wristband to Assess Sleep in Adults with and without Fibromyalgia. 36th Annual Scientific Meeting of the American Pain Society; 2017; Pittsburgh, PA.