1. Background

Central venous catheterization (CVC) is a medical procedure performed over five million times a year in the U.S. that allows doctors to deliver medication and nutrition and to take measurements not possible through noninvasive means. During this procedure, a catheter is inserted through the skin into the internal jugular vein (IJ), subclavian vein, or femoral vein, with the tip placed near the heart. Unfortunately, some CVC patients experience adverse effects, including pneumothorax, hematoma, hemothorax, accidental arterial puncture, and thrombosis [1]. Currently, medical residents are trained in CVC using mannequin patient simulators, and the concept of “see one, do many, do one competently, and teach everyone” is widespread [2]. Virtual reality simulators such as the Mediseus Epidural simulator have shown great potential as medical training tools because they can expose trainees to a much wider variety of medical scenarios than static patient simulators [3].

This paper introduces the design and accuracy of a two-part haptic virtual reality training system for the insertion of a CVC needle into the IJ. The system uses a Geomagic Touch (Rock Hill, SC) haptic feedback robot and a 3D Guidance trakSTAR electromagnetic tracking system by Northern Digital, Inc. (Waterloo, ON). It allows the user to “scan” the neck using a virtual ultrasound and then feel the haptic forces that occur during needle insertion.

2. Methods

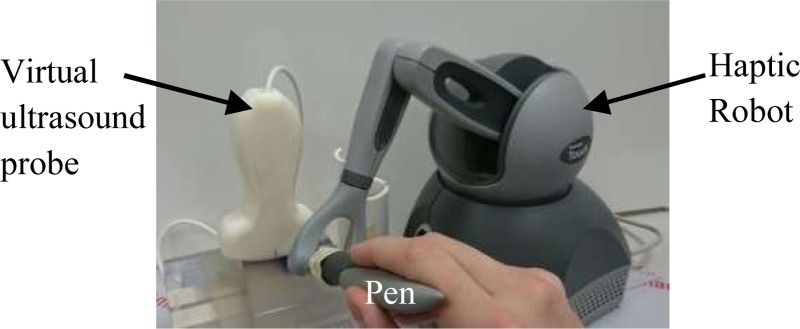

The design of the CVC haptic robot simulator is divided into two components: a haptic robot arm and pen representing the syringe, and a position tracking system simulating an ultrasound probe, as shown in Fig. 1. The system is programmed in MATLAB and Simulink, interfacing with Quanser's QUARC real-time control software. All imagery for the virtual ultrasound is rendered in VRML through MATLAB.

Fig. 1.

Prototype haptic robotic CVC trainer with virtual ultrasound probe, haptic needle, and scanning surface

2.1. Robotic Haptic Needle.

The major component of the CVC haptic robot simulator is the haptic robot arm, which provides realistic force feedback to the user, as shown in Fig. 1. The Geomagic Touch provides 3 degrees of freedom of force feedback along with 6 degrees of freedom of position sensing. Using the needle insertion characterizations developed in Gordon et al. [4], which characterize needle force as a piecewise exponential function, this haptic feedback is programmed into the device to give the feeling of a needle being inserted into tissue. The virtual needle tip is projected 8 cm away from the robotic pen tip. Small bounding forces are also applied perpendicular to the direction of insertion to restrict lateral movement while the needle is in the virtual tissue. The force characterization equations are interchangeable to allow for a variety of needle insertion scenarios, including scar tissue and excess fat tissue.
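As an illustration of how a piecewise exponential force profile and a lateral bounding force might be evaluated, the following is a minimal Python sketch. The segment form `a * exp(b * (depth - start)) + c`, the `Segment` fields, the spring-like lateral force, and every numeric value are assumptions made for illustration; they are not the coefficients or the exact functional form of Gordon et al. [4].

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """One exponential piece of the profile (all values hypothetical)."""
    start_mm: float  # insertion depth at which this piece becomes active
    a: float         # scale, in N
    b: float         # growth rate, in 1/mm
    c: float         # offset, in N


def insertion_force(depth_mm, segments):
    """Axial resisting force (N) at a given depth; zero outside the tissue.

    `segments` must be sorted by increasing start_mm.
    """
    if depth_mm <= 0.0:
        return 0.0
    active = None
    for seg in segments:
        if depth_mm >= seg.start_mm:
            active = seg  # keep the deepest segment already reached
    if active is None:
        return 0.0
    return active.a * math.exp(active.b * (depth_mm - active.start_mm)) + active.c


def lateral_force(offset_mm, stiffness_n_per_mm=0.2):
    """Spring-like bounding force pushing the pen back toward the insertion axis."""
    return -stiffness_n_per_mm * offset_mm


# Illustrative profile: force builds until a puncture at 10 mm, then drops.
PROFILE = [
    Segment(start_mm=0.0, a=0.1, b=0.3, c=-0.1),
    Segment(start_mm=10.0, a=0.5, b=0.05, c=0.0),
]
```

Swapping `PROFILE` for a different list of segments corresponds to the interchangeable force characterizations described above, for example a stiffer first segment to suggest scar tissue.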

When a simulated needle insertion is performed, Simulink exports the position of the ultrasound probe, the position of the needle tip, the angle of the needle, the final distance from the needle tip to the vein center, and the overall test time to MATLAB. These data can then be analyzed to detect known problematic procedure behaviors, such as multiple insertion attempts, and to determine the performance of the user.
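One way such an analysis could flag multiple insertion attempts is to count how often the needle tip enters the tissue in the logged depth trace. The sketch below is written in Python rather than MATLAB; the function name, the depth convention (positive values are inside the tissue), and the sample trace are hypothetical.

```python
def count_insertion_attempts(depths_mm, skin_mm=0.0):
    """Count transitions of the needle tip from outside to inside the tissue.

    depths_mm: needle-tip depth samples in time order (positive = inside tissue).
    skin_mm:   depth value treated as the skin surface.
    """
    attempts = 0
    inside = False
    for depth in depths_mm:
        if depth > skin_mm and not inside:
            attempts += 1   # tip has just entered the tissue
            inside = True
        elif depth <= skin_mm and inside:
            inside = False  # tip has been fully withdrawn
    return attempts


# Hypothetical trace: one insertion, a full withdrawal, then a second insertion.
trace = [-5.0, -1.0, 2.0, 6.0, 3.0, -2.0, -4.0, 1.0, 8.0, 12.0]
attempts = count_insertion_attempts(trace)
```

A partial withdrawal that stays below the skin surface is not counted as a new attempt here; whether it should be is a scoring decision this sketch does not settle.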

2.2. Virtual Ultrasound Device.

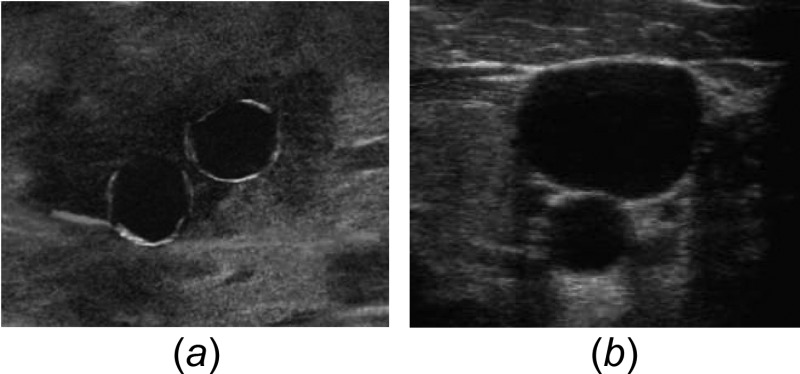

The creation of the virtual ultrasound image begins with the visualization of 3D space as a 2D cutting plane. The IJ and carotid artery (CA) are represented by two circles in a VRML virtual world with 16 circumferential reference points. These reference points can then be scaled in the x and y directions to vary the initial shapes and sizes of the vessels and allow for deformation in the vessel shape. Using the rotation of the 3D tracking device as reference, the images of the vessels stretch in the x and y directions. This mimics how an ultrasound plane through the vessels appears when placed in the transverse and longitudinal directions. The needle can then interact with the position of these data points to simulate vessel flexibility. Virtual patient anatomy can be easily varied by moving the vessel positions in the virtual tissue.
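The reference-point representation can be sketched as follows. This Python example generates a 16-point outline and scales it in x and y. The `1 / cos(tilt)` stretch rule is an assumption based on the cross section of a cylinder cut by a tilted plane; the source does not state the rule actually used, and the function names and the `max_stretch` cap are hypothetical.

```python
import math


def vessel_outline(center, radius_mm, scale_x=1.0, scale_y=1.0, n_points=16):
    """Return n_points (x, y) reference points on a scaled circle."""
    cx, cy = center
    points = []
    for k in range(n_points):
        angle = 2.0 * math.pi * k / n_points
        points.append((cx + scale_x * radius_mm * math.cos(angle),
                       cy + scale_y * radius_mm * math.sin(angle)))
    return points


def stretch_for_tilt(tilt_deg, max_stretch=20.0):
    """Stretch factor for a plane tilted tilt_deg away from the transverse view.

    Assumes a cylindrical vessel, so the cut is an ellipse whose long axis
    grows as 1 / cos(tilt). The factor is capped so that a longitudinal
    view (90 deg) stays finite.
    """
    c = abs(math.cos(math.radians(tilt_deg)))
    if c < 1.0 / max_stretch:
        return max_stretch
    return 1.0 / c


# Transverse view of a 5 mm radius vessel, then the same vessel tilted 60 deg.
transverse = vessel_outline((0.0, 0.0), 5.0)
tilted = vessel_outline((0.0, 0.0), 5.0, scale_x=stretch_for_tilt(60.0))
```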

An essential component of the ultrasound is the visualization of the needle crossing the ultrasonic plane. A line projected by the robot arm's pen represents the needle in the virtual space. The ultrasound plane is represented by a point at the position of the tracker and a plane normal vector projected from this point. Using the needle line and ultrasound plane, the 3D line–plane intersection is found. Then, using the linear transformation in Eq. (1), the intersection point on the 2D ultrasound image is found, where T is a 2 × 3 transformation matrix consisting of the x- and y-axis vectors in 3D space, I is the 3D location of the needle–plane intersection, and p is the 3D location of the ultrasound probe. Finally, the needle tip is represented by a small rectangle in the previously created ultrasound virtual world.

| (1) |
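The geometry described above can be sketched in a few lines of Python. The sketch assumes that Eq. (1) takes the form T(I − p), which is what the definitions of T, I, and p suggest, with the rows of T being the image x- and y-axis unit vectors. The function names are hypothetical, and the needle is treated as an infinite line rather than a finite segment.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def sub(a, b):
    """Component-wise difference a - b."""
    return tuple(x - y for x, y in zip(a, b))


def line_plane_intersection(line_point, line_dir, plane_point, plane_normal,
                            eps=1e-9):
    """Return the 3D point where the line meets the plane, or None if parallel."""
    denom = dot(plane_normal, line_dir)
    if abs(denom) < eps:
        return None  # needle runs parallel to the ultrasound plane
    t = dot(plane_normal, sub(plane_point, line_point)) / denom
    return tuple(p + t * d for p, d in zip(line_point, line_dir))


def to_image_coords(intersection, probe_pos, x_axis, y_axis):
    """Apply the assumed form T(I - p); x_axis and y_axis are the rows of T."""
    rel = sub(intersection, probe_pos)
    return (dot(x_axis, rel), dot(y_axis, rel))


# Probe at (10, 0, 0) with its image plane in world x-y (normal along z).
probe = (10.0, 0.0, 0.0)
hit = line_plane_intersection((1.0, 2.0, -5.0), (0.0, 0.0, 1.0),
                              probe, (0.0, 0.0, 1.0))
image_xy = to_image_coords(hit, probe, (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
```

In a full implementation the intersection would also be checked against the needle length and the image bounds before the marker is drawn.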

A high-fidelity simulated ultrasound image was created as shown in Fig. 2. The background of the ultrasound was created as a static compilation of the ultrasound images taken from the region around IJ procedures. These images were blended to give a generic yet realistic look. Overlaid on the virtual vessels were images of a CA and IJ. These were cropped to fit the vessels in the image.

Fig. 2.

Ultrasound image of IJ and CA made by (a) virtual synthetic rendering and (b) actual ultrasound probe in human tissue [5]

To further increase the fidelity, an ultrasound transducer handle for the tracker, as shown in Fig. 1, was 3D printed from ABS plastic. By attaching the tracking probe to the transducer, the location and rotation of the transducer are tracked in real time. To verify the accuracy of the transducer rotation, measurements were taken using a digital level.

3. Results

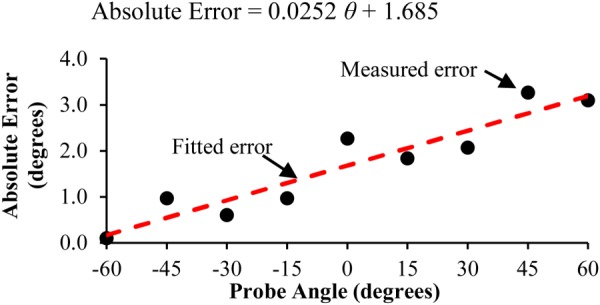

Owing to the accuracy of the tracker used in the virtual ultrasound, sensed movements in the x, y, and z directions are accurate to within 1.4 mm. The average error in the probe angle (θ) of rotation about the z-axis was measured to be 1.7 deg. The error in the probe angle fit the trend line in Eq. (2) with an R² value of 0.88, as shown in Fig. 3.

Fig. 3.

Absolute error between actual probe angle and sensed angle

| (2) |
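For readers who want to reproduce this kind of accuracy check, the following Python sketch computes a mean absolute angle error and the R² of a least-squares trend line. A straight-line fit is assumed only for illustration, since the form of Eq. (2) is not reproduced here. The function names are hypothetical, and the sample numbers are placeholders, not measurements from this study.

```python
def mean_abs_error(actual, sensed):
    """Average absolute difference between paired readings."""
    return sum(abs(a - s) for a, s in zip(actual, sensed)) / len(actual)


def linear_fit(x, y):
    """Ordinary least-squares line; returns (slope, intercept)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(x, y, slope, intercept):
    """Coefficient of determination of the fitted line."""
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


# Placeholder data: level-measured angles (deg) and absolute errors (deg).
angles = [0.0, 10.0, 20.0, 30.0]
errors = [1.0, 2.0, 3.0, 4.0]
slope, intercept = linear_fit(angles, errors)
fit_quality = r_squared(angles, errors, slope, intercept)
```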

When the needle crosses the 3D ultrasound plane, the needle appears in the correct location on the 2D ultrasound image. When the virtual needle tip makes contact with the vein, the vein deforms most at the location of the needle and partially deforms around that location. If the probe is pressed into the scanning surface, the vein compresses in the 2D image. The vein deformation is rendered independently of the image of the needle crossing the plane. A medical resident who trains first-year residents in the CVC procedure described the vein compressibility and deformation as being very similar to the actual procedure.
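The localized deformation described above, largest at the needle and tapering around it, could be approximated by displacing each vessel reference point with a distance-based weight. The Gaussian falloff, the `falloff_mm` parameter, and the function name in this Python sketch are assumptions for illustration; the source does not specify the weighting used.

```python
import math


def deform_outline(points, contact, push, falloff_mm=2.0):
    """Displace vessel reference points near a needle contact point.

    points:  list of (x, y) reference points on the vessel wall.
    contact: (x, y) location where the needle tip touches the wall.
    push:    (dx, dy) displacement applied in full at the contact point.
    Points farther from the contact move less (Gaussian weight).
    """
    deformed = []
    for x, y in points:
        distance = math.hypot(x - contact[0], y - contact[1])
        weight = math.exp(-((distance / falloff_mm) ** 2))
        deformed.append((x + weight * push[0], y + weight * push[1]))
    return deformed


# Top wall point is pushed 1 mm down; the opposite wall barely moves.
wall = [(0.0, 5.0), (0.0, -5.0)]
dented = deform_outline(wall, contact=(0.0, 5.0), push=(0.0, -1.0))
```

Compression from probe pressure could be handled separately, for instance by reducing the y scale of the whole outline.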

A variety of haptic force characterizations have been generated using expert estimation and the method of Gordon et al. [4]. These equations were then implemented in the program. For comparison, the feel of the simulated needle forces was compared with the feel of an actual catheter needle insertion into the tissue of a CAE Healthcare Blue Phantom Ultrasound Central Line Mannequin (Sarasota, FL). In the direction of insertion, the needle forces experienced were very similar and were deemed adequate for CVC simulation by a resident trainer of CVC insertion procedures. It was noted that the mannequin felt slightly firmer due to the limited strength and response time of the Geomagic Touch haptic robot.

4. Interpretation

From the results, it was concluded that the synthetically generated ultrasound image gives a realistic rendering of an ultrasound environment, the spatial position and angle of the haptic pen and virtual ultrasound are accurate, and the haptic feeling of the robot is adequate for resident training. After a short adaptation period, a medical resident was able to use the virtual ultrasound and haptic robot effectively. This is important because the device learning curve needs to be minimal so that residents can focus on learning the procedure.

To increase the fidelity of the system, several key improvements will be made. Some will focus on the software: improving the ultrasound background image, allowing the background to vary as the probe moves parallel to the vessels, and having the background vary as the probe and needle apply pressure to the virtual tissue. Others will focus on the physical interaction of the resident with the haptic robot, replacing the haptic pen with a syringe-like handle so that residents can practice properly holding a syringe and aspirating during the procedure. These improvements will help this device be an effective training tool for CVC.

Acknowledgment

The research reported in this publication was supported by the National Heart, Lung, and Blood Institute of the National Institutes of Health under Award No. R01HL127316. We would like to thank Blue Phantom for loaning a CVC mannequin simulator.

Accepted and presented at The Design of Medical Devices Conference (DMD2016), April 11–14, 2016, Minneapolis, MN, USA.

Contributor Information

David Pepley, Department of Mechanical and Nuclear Engineering, The Pennsylvania State University, State College, PA 16801.

Mary Yovanoff, Department of Industrial Engineering, The Pennsylvania State University, State College, PA 16801.

Katelin Mirkin, Hershey Medical Center, The Pennsylvania State University, State College, PA 16801.

Scarlett Miller, Department of Engineering Design and Industrial Engineering, The Pennsylvania State University, State College, PA 16801.

David Han, Hershey Medical Center, The Pennsylvania State University, State College, PA 16801.

Jason Moore, Department of Mechanical and Nuclear Engineering, The Pennsylvania State University, State College, PA 16801.

References

- [1]. McGee, D. C., and Gould, M. K., 2003, “Current Concepts—Preventing Complications of Central Venous Catheterization,” New Engl. J. Med., 348(12), pp. 1123–1133. 10.1056/NEJMra011883

- [2]. Vozenilek, J., Huff, J. S., Reznek, M., and Gordon, J. A., 2004, “See One, Do One, Teach One: Advanced Technology in Medical Education,” Acad. Emerg. Med., 11(11), pp. 1149–1154. 10.1197/j.aem.2004.08.003

- [3]. Coles, T. R., Meglan, D., and John, N. W., 2011, “The Role of Haptics in Medical Training Simulators: A Survey of the State of the Art,” IEEE Trans. Haptics, 4(1), pp. 51–66. 10.1109/TOH.2010.19

- [4]. Gordon, A., Kim, I., Barnett, A. C., and Moore, J. Z., “Needle Insertion Force Model for Haptic Simulation,” ASME Paper No. MSEC2015-9352. 10.1115/MSEC2015-9352

- [5]. Hayden, G., 2015, “Procedural Ultrasound Images,” Medical University of South Carolina, Charleston, SC. http://www.emergencyultrasoundteaching.com/image_galleries/procedural_images/index.php