Abstract

Visual evoked potentials (VEPs) can be measured in the EEG as a response to a visual stimulus. Commonly, VEPs are displayed by averaging multiple responses to a certain stimulus, or a classifier is trained to identify the response to a certain stimulus. While this traditional approach is limited to a set of predefined stimulation patterns, we present a method that models the general process of VEP generation and can therefore be used both to predict arbitrary visual stimulation patterns from the EEG and to predict how the brain responds to arbitrary stimulation patterns. We demonstrate how this method can be used to model single-flash VEPs, steady-state VEPs (SSVEPs), or VEPs to complex stimulation patterns. We further show that the method can be used for a high-speed BCI in an online scenario, where it achieved an average information transfer rate (ITR) of 108.1 bit/min. Furthermore, in an offline analysis, we show the flexibility of the method, which allows a virtually unlimited number of targets to be modulated with any desired trial duration, resulting in a theoretically possible ITR of more than 470 bit/min.

Introduction

Visual evoked potentials (VEPs) are electrical potentials that can be measured by electroencephalography (EEG) as the brain’s responses to a visual stimulus. VEPs are used in a variety of fields ranging from clinical diagnostics [1] over basic research [2] to their application in a Brain-Computer Interface (BCI) [3].

The idea of a VEP-based BCI was originally developed by Sutter [4], who proposed “the visual evoked response as a communication channel” and envisioned the use of VEPs for a BCI-controlled keyboard. Sutter implemented such a VEP-based BCI in 1992 where he used 64 visual stimuli that were modulated by a complex stimulation pattern.

Today, VEP-based BCIs are either based on the original idea of Sutter and use complex stimulation patterns (also called codes) to elicit a code-modulated VEP (cVEP) [5, 6], or they use visual stimulation with a specific frequency, which evokes steady-state VEPs (SSVEPs) [7]. Although the two methods differ in how the stimulation pattern is constructed, both depend on the construction of a stimulus-specific template, which restricts the number of possible targets. For example, a state-of-the-art cVEP BCI speller uses a 63 bit m-sequence that is circularly shifted by 2 bits for each target, thereby allowing a total of 32 targets. In order to increase the number of targets, the shift must be decreased or the sequence length must be increased, which decreases either performance or speed. Another approach is to group several targets and use a different m-sequence for each group [8, 9], but this approach is also limited, as the number of equal-sized m-sequences is limited. For SSVEP BCIs, there are also approaches to increase the number of targets, for example multiple frequencies sequential coding [10] or multi-phase cycle coding [11], but both show reduced information transfer rates.

One notable exception that investigates a more general approach to visual stimulation, not restricted to predefined patterns, is the work by Thielen et al. [12]. Their model is based on the assumption that the response to a complex stimulation pattern is a linear superposition of individual single-flash VEPs [13]. Thielen et al. therefore developed a convolution model that breaks down a complex stimulation pattern into smaller subcomponents and models the response to the complex pattern as a superposition of the responses to those subcomponents. While this approach allows for a more flexible prediction model, the stimulation patterns are not fully arbitrary, as they can only be composed of short and long pulses.

It should be noted that the usability of stimulation patterns is restricted by the brain. Herrmann [14] has shown that brain responses can only be found for stimulation frequencies of up to 90 Hz. Furthermore, the stimulation rate is limited by the hardware used for stimulus presentation, such as a computer monitor. Therefore, “arbitrary stimulation patterns” should be interpreted as a huge set of possible stimulation patterns relative to the stimulation rate used.

In this paper, we present a model (EEG2Code) that is able to predict arbitrary stimulation patterns from the EEG, and its backward model (Code2EEG), which is able to predict the brain response to arbitrary stimulation patterns. We show that both models can be used for high-speed BCI control using any desired trial duration with a virtually unlimited number of targets. First, we explain the training and prediction of both models, followed by an explanation of how both models can be used for BCI control. As we used (pseudo)random stimulation patterns, we also explain how they are generated. We then describe the experimental setup, including the hardware, software and presentation layout, followed by the preprocessing steps. The results first show the stimulation pattern prediction (EEG2Code) and the brain response prediction (Code2EEG); after that, the BCI performance is shown for both the online experiment and the offline analysis, in which the trial duration and the number of targets were varied. Finally, we discuss the results and give an outlook on future work.

Materials and methods

EEG2Code model

Training

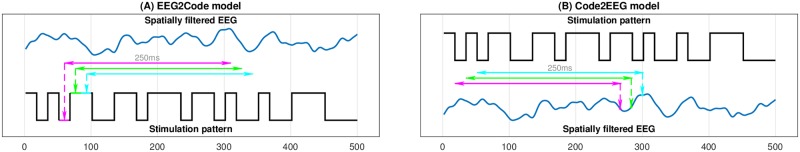

The model is based on ridge regression and uses the EEG signal to predict the stimulation pattern during an arbitrary stimulation. For training, the stimulation pattern is always a fully random binary sequence presented at a rate of 60 bit/s. The most prominent parts of a VEP are N1, P1 and N2, the negative/positive potentials with peaks at around 70 ms, 100 ms and 140 ms (post-stimulus), respectively. As the complete VEP lasts for around 250 ms, we use a 250 ms window of spatially filtered EEG data as predictor and the corresponding bit of the stimulation pattern (0 = black, 1 = white) as response to train the ridge regression model. The window is shifted sample-wise over the data during a trial, which means that 250 ms of EEG data after the end of the trial are required; otherwise, the last 250 ms of a stimulation pattern cannot be predicted. Fig 1A depicts this procedure with a bit-wise window shift for simplicity. The coefficients β of the ridge regression model and its bias term β0 can be calculated by

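$$\beta = \left(X^{T}X + \lambda I\right)^{-1} X^{T} y \tag{1}$$

$$\beta_0 = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - x_i\,\beta\right) \tag{2}$$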

where X (the predictor) is an n × k matrix, with n the number of windows and k the window length (number of samples), and y (the responses) is an n × 1 vector containing the corresponding bit of the stimulation sequence for each window. I is the identity matrix and λ the regularization parameter, which was not optimized but set to 0.001. Since a window has a length of k = 150 samples at the used sampling rate of s = 600 Hz, the output is a coefficient vector of length 150, with one coefficient for each input sample, plus the constant bias term β0. The number of windows n depends on the number of trials N, the average trial duration d, the window length k and the sampling rate s:

$$n = N \cdot \left(d \cdot s - k\right) \tag{3}$$

As described in section Data acquisition, we used N = 96 and d = 4 s, resulting in n = 216,000 windows.
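As a rough illustration of the training step, the following sketch fits a ridge model in closed form on a toy data set using only the Python standard library. It is not the implementation used in the study: it assumes the common formulation in which predictors and responses are mean-centered before solving, so that the bias term is not regularized, and it assumes that each trial contributes d · s − k windows, which reproduces the stated n = 216,000. The helper names `count_windows`, `solve_linear` and `fit_ridge` and the toy data are hypothetical.

```python
def count_windows(n_trials, trial_s, rate_hz, window_len):
    """Total number of windows, assuming d*s - k windows per trial."""
    return n_trials * (round(trial_s * rate_hz) - window_len)


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    size = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, size):
            factor = m[r][col] / m[col][col]
            for c in range(col, size + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * size
    for i in range(size - 1, -1, -1):
        tail = sum(m[i][c] * x[c] for c in range(i + 1, size))
        x[i] = (m[i][size] - tail) / m[i][i]
    return x


def fit_ridge(X, y, lam=0.001):
    """Return (beta0, beta) for ridge regression on mean-centered data."""
    n, k = len(X), len(X[0])
    x_mean = [sum(row[j] for row in X) / n for j in range(k)]
    y_mean = sum(y) / n
    Xc = [[row[j] - x_mean[j] for j in range(k)] for row in X]
    yc = [v - y_mean for v in y]
    # Regularized Gram matrix X^T X + lambda * I and right-hand side X^T y.
    gram = [
        [sum(Xc[i][a] * Xc[i][b] for i in range(n)) + (lam if a == b else 0.0)
         for b in range(k)]
        for a in range(k)
    ]
    rhs = [sum(Xc[i][a] * yc[i] for i in range(n)) for a in range(k)]
    beta = solve_linear(gram, rhs)
    beta0 = y_mean - sum(x_mean[j] * beta[j] for j in range(k))
    return beta0, beta


# Toy data generated from y = 1 + 2*x1 - 3*x2 (two "samples" per window).
X = [[0, 0], [1, 0], [0, 1], [1, 1], [2, 1], [1, 2]]
y = [1 + 2 * a - 3 * b for a, b in X]
beta0, beta = fit_ridge(X, y, lam=0.001)
n_windows = count_windows(n_trials=96, trial_s=4, rate_hz=600, window_len=150)
print(n_windows, round(beta0, 2), [round(b, 2) for b in beta])
```

With k = 150 and n = 216,000, a numerical library would be the practical choice; the pure-Python solver is only meant to make the closed-form solution explicit.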

Fig 1. Training of the regression models.

A: EEG2Code model. Each 250 ms window of the spatially filtered EEG data is projected to the corresponding bit (1 or 0) of the stimulation pattern. B: Code2EEG model. Each 250 ms window of the modulation pattern is projected to the corresponding value of the spatially filtered EEG data.

Prediction

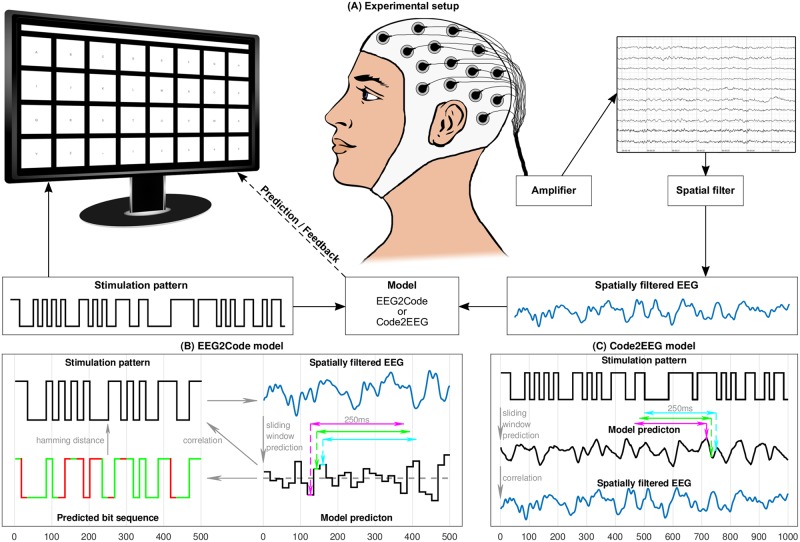

After training, the model is able to predict the bits of the stimulation pattern. Fig 2B depicts the procedure, in which the measured EEG data is spatially filtered and the trained regression model is used to predict each 250 ms window (sample-wise shifted). The regression model predicts a real number yi for each window i

$$y_i = \beta_0 + \sum_{j=1}^{k} \beta_j\, x_j \tag{4}$$

where β0 is the constant term and β1…k are the coefficients for the window samples x1…k. The predicted real values yi can in turn be interpreted as binary values by a simple threshold method: each value greater than or equal to 0.5 is set to binary 1, and to 0 otherwise. Afterwards, the predicted binary sequence is compared to the stimulation pattern using the Hamming distance. The binary transformation is only done to determine the bit prediction accuracy, not for BCI control (see section BCI control).
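The prediction step can be sketched as follows. This is a minimal illustration under stated assumptions, not the original implementation: the function names, the two-sample window and the toy coefficients are hypothetical, whereas the 0.5 threshold and the Hamming-distance comparison follow the description above.

```python
def predict_windows(signal, beta0, beta):
    """Slide a len(beta)-sample window over the signal, one prediction per shift."""
    k = len(beta)
    return [
        beta0 + sum(b * x for b, x in zip(beta, signal[i:i + k]))
        for i in range(len(signal) - k + 1)
    ]


def binarize(values, threshold=0.5):
    """Values greater than or equal to the threshold become 1, all others 0."""
    return [1 if v >= threshold else 0 for v in values]


def bit_accuracy(predicted, target):
    """Fraction of matching bits, i.e. 1 minus the normalized Hamming distance."""
    if len(predicted) != len(target):
        raise ValueError("sequences must have the same length")
    mismatches = sum(p != t for p, t in zip(predicted, target))
    return 1 - mismatches / len(target)


# Toy example: a 2-sample window with equal weights instead of 150 samples.
signal = [0.2, 0.9, 0.7, 0.1, 0.3]
values = predict_windows(signal, beta0=0.0, beta=[0.5, 0.5])
bits = binarize(values)
accuracy = bit_accuracy(bits, [1, 1, 0, 1])
print(bits, accuracy)
```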

Fig 2. Setup of the BCI experiment and model prediction.

A: The presentation layout as shown on the monitor. It has 32 targets labeled alphabetically from A to Z, followed by ‘_’ and the numbers 1 to 5. The targets are separated by blank black space, and above the targets is the text field showing the written text during the experiment. Each target is modulated with its own random stimulation pattern. During a trial, the participant has to focus on a target. A spatial filter is applied to the measured EEG. The model predicts the target, which is indicated to the participant by highlighting the target in yellow, and the letter is appended to the text field above the keyboard. B: The EEG2Code model predicts an arbitrary stimulation pattern. A 250 ms window is slid sample-wise over the spatially filtered EEG signal. For simplicity, this is shown bit-wise using 3 exemplary windows. The trained model predicts a real number for each window. Each value above 0.5 (gray dashed line) is interpreted as binary 1, and as 0 otherwise. The resulting bit sequence can be compared (Hamming distance) to the stimulation pattern (match = green, mismatch = red). For target selection, we used the correlation coefficient between the model prediction and the stimulation patterns of all targets; the target with the highest correlation is chosen as the predicted target. C: The Code2EEG model predicts the brain’s response to an arbitrary stimulation pattern by sliding the 250 ms window over the stimulation pattern. For BCI control, the model predicts the brain responses to the stimulation patterns of all targets, which in turn are compared to the measured EEG using the correlation coefficient. The target with the highest correlation is chosen as the predicted target.

Code2EEG model

Training

Like the EEG2Code model, the Code2EEG model is based on ridge regression and trained on fully random stimulation patterns, but it predicts the EEG response from a stimulation pattern. We again use 250 ms windows (150 samples), and the equations are the same as Eqs 1 and 2, except that the predictor X contains the windows of the stimulation pattern and the response y is the spatially filtered EEG data at the corresponding time point. Fig 1B depicts this procedure for three exemplary windows.

Again, the output of the ridge regression is a coefficient vector of length 150, and λ was set to 0.001.

Prediction

After training, the model is able to predict the brain response to an arbitrary stimulation pattern. The equation is the same as Eq 4, but x1…xk are the k samples of the stimulation pattern window. The model prediction y can then be compared to the measured (and spatially filtered) EEG, for which we used the correlation coefficient. Fig 2C depicts the procedure; in this case, the real stimulation pattern is used to predict the brain response.

Modelling brain response

As described, the model can predict the brain response to an arbitrary stimulation pattern. We therefore also tested the prediction of the brain response to a single-flash stimulus, a 30 Hz SSVEP stimulus, and an m-sequence. For this, an additional participant (not included in the BCI experiment) performed the spatial filter and training sessions, followed by 120 single-flash trials and 120 SSVEP trials with a stimulation frequency of 30 Hz, where each trial lasted for 1 s. Both the single-flash pattern and the 30 Hz pattern were presented using a single target in the middle of the screen, which had the same size as the targets of the matrix keyboard layout (see section Presentation layout). The m-sequences were presented as part of the data collection used for spatial filter generation (see section Spatial filter).

BCI control

For BCI control, each target is modulated with its own (random) stimulation sequence, and a method is needed to choose the correct target among all others. For the EEG2Code model, we used the correlation coefficient between the model prediction and the modulation patterns of all targets. The target with the highest correlation is selected. The pattern prediction accuracy could be used instead, but as shown in our previous study [15], binarizing the prediction discards information, which in turn reduces performance.

The Code2EEG model predicts the EEG response to the modulation patterns of all targets and compares each prediction to the measured EEG data. Again, the target with the highest correlation is chosen as the correct target.
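The target selection rule can be sketched as below. The example assumes the Pearson correlation coefficient and uses hypothetical names and toy values; for the Code2EEG direction, the same comparison would be applied between the measured EEG and the predicted responses of all targets.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a constant sequence carries no information
    return cov / sqrt(var_a * var_b)


def select_target(prediction, patterns):
    """Return the index of the best-correlating pattern and all scores."""
    scores = [pearson(prediction, p) for p in patterns]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores


# Toy example with three targets and a real-valued model prediction.
patterns = [
    [0, 1, 1, 0, 1, 0],
    [1, 1, 0, 0, 1, 1],
    [0, 0, 1, 1, 0, 1],
]
prediction = [0.9, 0.8, 0.3, 0.2, 0.7, 0.6]
best, scores = select_target(prediction, patterns)
print(best, [round(s, 3) for s in scores])
```

Working on the real-valued prediction rather than on thresholded bits keeps the information that the binarization would discard.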

During the online experiment, feedback is given to the participant by coloring the selected target in yellow for 100 ms and adding the corresponding letter to the text field.

Varying trial duration and number of targets

As both models are based on a sliding window approach, the trial duration can be varied. We therefore analyzed the BCI performance for trial lengths from 0.5 s to 2 s.

Furthermore, the models are trained on fully random stimulation patterns. It is therefore not required to use the same stimulation patterns for training and testing; instead, arbitrary stimulation patterns can be used, which in turn allows the number of targets to be varied. Additional targets simply get another random stimulation pattern. With a trial length of 2 s and a refresh rate of 60 Hz, there are $2^{120} \approx 1.33 \times 10^{36}$ different stimulation patterns, which is therefore the upper bound for the number of targets with 2 s trials. Using the data collected in the online experiment, we simulated BCI control with up to 500,000 targets in steps of 50 targets. In the online experiment, we predicted the stimulation pattern from the EEG and compared the prediction to the stimulation sequences of the 32 targets to select the one with the highest accuracy. For the simulation, we compared the prediction not only to the 32 targets, but also generated additional random stimulation sequences for targets 33 to 500,000 and compared the prediction to the sequences of all targets. While the correct target has to be selected out of 32 targets in the online experiment, it has to be selected out of up to 500,000 targets in the simulation, which essentially makes this a multiclass classification problem with 500,000 classes.
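The upper bound on the number of distinct patterns follows directly from the number of bits per trial. The short sketch below reproduces this arithmetic; the function name is hypothetical.

```python
def pattern_count(trial_s, rate_hz=60):
    """Number of distinct binary patterns for one trial: 2 ** (bits per trial)."""
    bits = round(trial_s * rate_hz)
    return 2 ** bits


count = pattern_count(2.0)   # 120 bits per 2 s trial at 60 Hz
formatted = f"{count:.2e}"
print(formatted)
```

Even the 500,000 simulated targets use only a vanishing fraction of this pattern space.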

Modulation patterns

Random modulation patterns

During the experiment, the MT19937 [16] random number generator was used to generate the random modulation patterns. At each monitor refresh, a random integer (0 or 1) is generated for each target. The binary sequence of a target is therefore random, without deliberate repetitions, and is generated continuously at a rate of 60 bit/s. The pattern generation can be repeated or varied by using the same or a different random seed, respectively.
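A minimal sketch of this generation scheme is shown below. It relies on the fact that Python's `random.Random` is a Mersenne Twister (MT19937) generator; the function name and seeds are hypothetical, and because seeding and bit extraction differ between environments, the sequences will not match those presented in the experiment.

```python
import random


def generate_patterns(n_targets, n_frames, seed):
    """Draw one random bit per target at every monitor refresh."""
    rng = random.Random(seed)  # Mersenne Twister (MT19937) under the hood
    patterns = [[] for _ in range(n_targets)]
    for _ in range(n_frames):            # one iteration per refresh (60 Hz)
        for target_bits in patterns:
            target_bits.append(rng.getrandbits(1))
    return patterns


# 32 targets, 2 s trial at 60 Hz = 120 frames.
first = generate_patterns(32, 120, seed=1)
repeat = generate_patterns(32, 120, seed=1)   # same seed: identical patterns
other = generate_patterns(32, 120, seed=2)    # different seed: new patterns
print(first == repeat, first == other)
```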

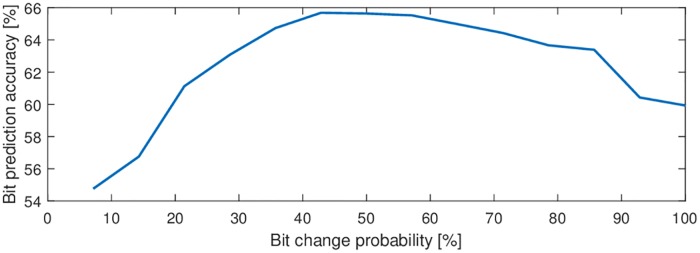

Optimized modulation patterns

In our previous study [17] we found that the number of bit changes is a crucial property of stimulation patterns that leads to different performances. We analyzed the pattern prediction accuracy of all 250 ms (15 bit) subsequences and found a maximum accuracy for sub-sequences with 7 bit changes. We repeated the analysis using the data of the current study and confirmed these findings. The pattern prediction accuracy of 15 bit subsequences is best for sequences with 6 to 8 bit changes (Fig 3), meaning a bit change probability of approximately 50% at a stimulation rate of 60 Hz.

Fig 3. Influence of the bit change probability using the EEG2Code model.

The accuracies are averaged using all 250 ms (15 bit) sub-sequences of random modulation trials grouped by the bit change probability. For example, a bit change probability of 100% means that each successive bit changes from 0 to 1 or 1 to 0, respectively. The maximum bit prediction accuracy is reached between approximately 40% to 60%, meaning an average of 6 to 8 bit changes. Therefore, we used only 15 bit subsequences with 7 bit changes for the optimized modulation patterns.

We therefore generated the set of all 15 bit sequences with 7 bit changes, which results in a total of 6,864 bit sequences. As we use a correlation measure to determine the correct target, we filtered those sequences. For this, we generated 100,000 subsets of 150 randomly chosen sequences out of the 6,864 bit sequences and took the subset with the lowest average correlation between the sequences in the subset. The resulting subset has an average correlation of -0.004 (SD = 0.276) between any sub-sequence and all others. The subset allows $150^{T/250\,\mathrm{ms}}$ different targets to be modulated, where T is the trial duration in milliseconds (under the assumption that T/250 ms is a positive integer).
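The construction of the optimized patterns can be sketched as follows. The enumeration reproduces the count of 6,864 sequences; the subset search is shown at a much smaller scale than the 100,000 draws of 150 sequences described above and assumes that "lowest average correlation" means the lowest mean pairwise Pearson correlation. All function names and the seed are hypothetical.

```python
import random
from itertools import combinations, product
from math import sqrt


def bit_changes(bits):
    """Number of positions where consecutive bits differ."""
    return sum(a != b for a, b in zip(bits, bits[1:]))


def sequences_with_changes(length=15, changes=7):
    """All binary sequences of the given length with exactly `changes` bit changes."""
    return [b for b in product((0, 1), repeat=length) if bit_changes(b) == changes]


def pearson(a, b):
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)


def mean_pairwise_correlation(subset):
    pairs = list(combinations(subset, 2))
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)


def pick_subset(candidates, subset_size, n_draws, seed=0):
    """Keep the random subset with the lowest mean pairwise correlation."""
    rng = random.Random(seed)
    best, best_score = None, float("inf")
    for _ in range(n_draws):
        subset = rng.sample(candidates, subset_size)
        score = mean_pairwise_correlation(subset)
        if score < best_score:
            best, best_score = subset, score
    return best, best_score


def target_capacity(trial_ms, subset_size=150, segment_ms=250):
    """Number of targets when each 250 ms segment is any sequence of the subset."""
    if trial_ms <= 0 or trial_ms % segment_ms:
        raise ValueError("trial duration must be a positive multiple of the segment")
    return subset_size ** (trial_ms // segment_ms)


candidates = sequences_with_changes()
best, best_score = pick_subset(candidates, subset_size=10, n_draws=20)
print(len(candidates), round(best_score, 3), target_capacity(2000))
```

The count can also be verified by hand: the first bit is free (2 choices) and 7 of the 14 transitions are changes, giving 2 · C(14, 7) = 6,864.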

Experimental setup

Hardware & Software

The BCI system consists of a g.USBamp (g.tec, Austria) EEG amplifier, two personal computers (PCs), a Brainproducts Acticap system with 32 channels and an LCD monitor (BenQ XL2430-B) for stimulus presentation. Participants were seated approximately 80 cm in front of the monitor.

PC1 is used for the presentation on the LCD monitor, which is set to a refresh rate of 60 Hz and its native resolution of 1920 × 1080 pixels. A stimulus can be either black or white, which can be represented by 0 or 1 in a binary sequence, and is synchronized with the refresh rate of the LCD monitor. The timings of the monitor refresh cycles are synchronized with the EEG amplifier via the parallel port.

PC2 is used for data acquisition and analysis. As a general framework for recording the data of the EEG amplifier we used BCI2000 [18] and the data processing is done with MATLAB [19]. The amplifier sampling rate was set to 600 Hz, resulting in 10 samples per frame/stimulus. Additionally, a TCP network connection was established to PC1 in order to send instructions to the presentation layer and to get the modulation patterns of the presented stimuli.

We used a 32-electrode layout. 30 electrodes were located at Fz, T7, C3, Cz, C4, T8, CP3, CPz, CP4, P5, P3, P1, Pz, P2, P4, P6, PO9, PO7, PO3, POz, PO4, PO8, PO10, O1, POO1, POO2, O2, OI1h, OI2h, and Iz. The remaining two electrodes were used for electrooculography (EOG), one between the eyes and one left of the left eye. The ground electrode (GND) was positioned at FCz and the reference electrode (REF) at Oz.

Presentation layout

We used MATLAB [19] and the Psychtoolbox [20] for the presentation layer. Our layout is a 4 × 8 matrix keyboard layout (32 targets in total) as shown in Fig 2A, where the targets are labeled alphabetically from A to Z, followed by ‘_’ and the numbers 1 to 5. The targets are separated by blank black space, and above the targets is a text field showing the written text. The targets flicker between black (binary 0) and white (binary 1) according to the binary stimulation pattern.

Participants

The study was approved by the local ethics committee of the Medical Faculty at the University of Tübingen and conformed to the guidelines of the Declaration of Helsinki. Written informed consent was obtained from all participants. To test the system, 9 healthy subjects (5 female) were recruited. All subjects had normal or corrected-to-normal vision. Their age ranged from 18 to 23 years. Each subject participated in one session and completed the whole experiment. None of the subjects had participated in other VEP EEG studies before.

Data acquisition

Initially, the participants had to perform 2 runs to generate a spatial filter (see section Spatial filter). The training phase was split into 3 runs, but with varying trial duration, 5 s, 4 s, and 3 s, respectively. The testing phase was split into 14 runs with a trial duration of 2 s. The runs were alternated using random stimulation patterns and optimized stimulation patterns.

During all runs the inter-trial time was set to 0.75 s and the participants had to perform 32 trials in lexicographic order (see section Presentation layout).

Preprocessing

Frequency filter

The recorded EEG data is bandpass filtered by the amplifier between 0.1 Hz and 60 Hz using a Chebyshev filter of order 8, and an additional 50 Hz notch filter is applied.

Correcting raster latencies

Standard computer monitors (CRT, LCD) cause raster latencies because the image is built up line by line at a speed that depends on the refresh rate. As VEPs are affected by these latencies, which decreases BCI performance, we corrected the raster latencies by shifting the EEG data with respect to the vertical position of the target on the screen. For example, with a refresh rate of 60 Hz, the image build-up takes about 16 ms. A target in the (vertical) center of the screen is thereby shown 8 ms after the first pixel is shown, which means that the EEG has to be shifted by 8 ms to correct for that latency. During BCI control, it is not known which character the user wants to select, and its position is therefore unknown. For this reason, the EEG data is shifted with respect to the target against which it is compared. A more detailed description is given in our previous work [21].
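The latency arithmetic can be sketched as follows. The example assumes a linear top-to-bottom image build-up over the full refresh period (ignoring any blanking interval) and that the correction is applied by discarding the leading EEG samples; the function names and defaults are hypothetical and only mirror the setup values given in this paper (1080 lines, 60 Hz refresh rate, 600 Hz sampling rate).

```python
def raster_latency_ms(y_pixel, screen_height=1080, refresh_hz=60):
    """Delay between the first pixel and row y, assuming linear build-up."""
    frame_ms = 1000 / refresh_hz
    return y_pixel / screen_height * frame_ms


def latency_in_samples(y_pixel, sampling_hz=600, screen_height=1080, refresh_hz=60):
    """Raster latency of a row, rounded to whole EEG samples."""
    latency = raster_latency_ms(y_pixel, screen_height, refresh_hz)
    return round(latency * sampling_hz / 1000)


def shift_eeg(eeg, n_samples):
    """Drop the first n_samples so the EEG aligns with the target's actual onset."""
    return eeg[n_samples:]


center_ms = raster_latency_ms(540)        # target in the vertical screen center
center_samples = latency_in_samples(540)
aligned = shift_eeg(list(range(10)), center_samples)
print(round(center_ms, 2), center_samples, aligned)
```

During BCI control, this shift would be recomputed for every candidate target before its pattern is compared to the EEG, as the attended target is not known in advance.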

Spatial filter

Recent studies [6, 22] have shown increased classification accuracy when spatial filters are used to improve the signal-to-noise ratio of the brain signals. As random stimulation is not suitable for spatial filter training, an m-sequence with low auto-correlation is used for target modulation. The spatial filter is trained using canonical correlation analysis (CCA) as described in a previous work [23], except that the presentation layout and stimulation duration differ. The presentation layout is as described above, and the participants had to perform 32 trials (one per target), where one trial lasts for 3.15 s followed by 1.05 s for gaze shifting. As the modulation pattern has a length of 63 bits (1.05 s), we obtained 96 sequence cycles per participant, which in turn are used for training the spatial filter. The spatial filter is then used for the following experiment.
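To illustrate what a 63 bit m-sequence with low auto-correlation looks like, the sketch below generates one with a 6-bit linear feedback shift register. The generator polynomial used in the study is not stated; the example assumes the primitive polynomial x^6 + x + 1, and all function names are hypothetical. It also shows the 2-bit circular shift per target that cVEP spellers typically use.

```python
def m_sequence(n_bits=6, taps=(0, 1)):
    """One period of an LFSR sequence with recurrence a[n+6] = a[n] XOR a[n+1]."""
    state = [1] + [0] * (n_bits - 1)     # any non-zero start state works
    out = []
    for _ in range(2 ** n_bits - 1):
        out.append(state[0])
        feedback = 0
        for t in taps:
            feedback ^= state[t]
        state = state[1:] + [feedback]
    return out


def circular_shift(seq, shift):
    shift %= len(seq)
    return seq[shift:] + seq[:shift]


def circular_autocorrelation(seq, lag):
    """Autocorrelation of the sequence mapped to -1/+1, for a circular lag."""
    bipolar = [2 * b - 1 for b in seq]
    shifted = circular_shift(bipolar, lag)
    return sum(a * b for a, b in zip(bipolar, shifted))


code = m_sequence()
off_peak = {circular_autocorrelation(code, lag) for lag in range(1, len(code))}
targets = [circular_shift(code, 2 * t) for t in range(32)]  # 2-bit shift per target
print(len(code), sum(code), off_peak, len(code) / 60)
```

The autocorrelation is 63 at lag 0 and -1 at every other lag, which is why shifted copies of the same sequence remain easy to distinguish.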

Performance evaluation

For the pattern prediction of the EEG2Code model, the binary values of the predicted patterns were compared to the stimulation patterns by using the Hamming distance. Because the distances are 1’s and 0’s, the averaged Hamming distance of all samples corresponds to the accuracy of how much of the stimulation pattern can be predicted correctly.

The prediction of the brain responses is evaluated by using the correlation coefficient between the predicted EEG and the spatially filtered EEG.

The BCI control performance of both models is evaluated using the accuracy of correctly predicted targets.

In addition to the accuracies, we calculated the corresponding information transfer rates (ITRs) [24]. The ITR can be computed with the following equation:

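$$\mathrm{ITR} = \left(\log_2 N + P \log_2 P + (1 - P)\,\log_2\frac{1 - P}{N - 1}\right) \cdot \frac{60}{T} \tag{5}$$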

with N being the number of classes, P the accuracy, and T the time in seconds required for one prediction. The ITR is given in bits per minute (bpm).

It is important to note that we used the ITR for two different scenarios: For the pattern prediction of the EEG2Code model the values are: N = 2 and T = 1/60 s, as each bit was predicted individually. For BCI control N equals the number of targets and T the trial duration including the inter-trial time.
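The ITR computation for both scenarios can be sketched as follows, assuming the commonly used definition with N classes, accuracy P and T seconds per prediction, scaled to bits per minute. The function name is hypothetical, and the terms that become 0 · log2(0) at P = 1 or P = 0 are treated as zero.

```python
from math import log2


def itr_bpm(n_classes, accuracy, seconds_per_prediction):
    """Information transfer rate in bits per minute."""
    if n_classes < 2 or not 0 <= accuracy <= 1:
        raise ValueError("need at least 2 classes and an accuracy in [0, 1]")
    bits = log2(n_classes)
    if accuracy > 0:
        bits += accuracy * log2(accuracy)
    if accuracy < 1:
        bits += (1 - accuracy) * log2((1 - accuracy) / (n_classes - 1))
    return bits * 60 / seconds_per_prediction


# BCI control: 32 targets, 2 s trial plus 0.75 s inter-trial time.
itr_perfect = itr_bpm(32, 1.0, 2.75)
itr_s9 = itr_bpm(32, 0.848, 2.75)
# Pattern prediction: each bit is a two-class decision made every 1/60 s.
itr_bits = itr_bpm(2, 0.691, 1 / 60)
print(round(itr_perfect, 1), round(itr_s9, 1), round(itr_bits, 1))
```

Because the inputs here are rounded accuracies, the results can deviate slightly from ITRs computed on unrounded values.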

Comparison to a cVEP BCI speller

In order to compare the method to a state-of-the-art cVEP BCI speller, we used the runs of the spatial filter training. As we used three m-sequence cycles per trial during the spatial filter runs, the trials were split resulting in 192 trials. A randomized 10-fold cross-validation was performed by using the one-class support vector machine method with a spatial filter obtained by CCA as described in a previous work [22].

Using the EEG2Code model, we analyzed the trials in two different ways. On the one hand, we used the model trained during the online experiment (with randomized stimulation patterns) and made a target prediction for each of the 192 trials. On the other hand, we also performed a randomized 10-fold cross-validation and trained a model on those trials in each fold.

Results

To summarize, the method presented in this paper allows prediction models to be created in two directions: on the one hand, the EEG2Code model can be used to predict the visual stimulation pattern based on the EEG; on the other hand, the Code2EEG model can be used to predict the brain response (the VEP measured by EEG) for a given visual stimulation pattern. Both models can be used for BCI control, for which multiple stimuli (i.e. targets) are modulated with random patterns, as depicted in Fig 2. For data acquisition, we performed an online BCI control experiment. Additionally, we performed an offline analysis to determine the performance of the stimulation pattern prediction and the brain response prediction. Furthermore, we analyzed the BCI performance for varying trial durations and numbers of targets. The results are shown in the following subsections.

Stimulation pattern prediction

When using random visual stimulation, the EEG2Code model is able to predict the stimulation pattern with an average accuracy of 64.6% (the percentage of correctly predicted bits in the bit-vector), which corresponds to an ITR of 232 bit per minute (bpm). For the best subject, the model is able to predict 69.1% of the stimulation pattern correctly, which corresponds to an ITR of 389.9 bpm.

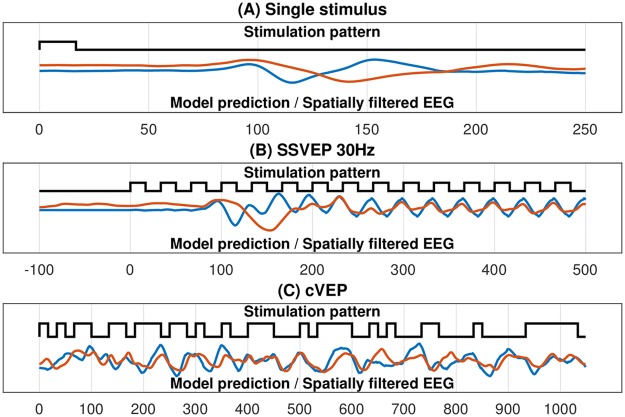

Brain response prediction

Using the Code2EEG model, we can predict the EEG response to a visual stimulation pattern. As an example, the model trained on random visual stimulation was used to predict the response to a single light flash of 16.6 ms, to a 30 Hz SSVEP pattern, and to a cVEP m-sequence pattern (Fig 4). When predicting the EEG response to random visual stimulation patterns, the average correlation between the prediction and the measured EEG is r = 0.384, with a maximum correlation of r = 0.509 for subject S1 (see Table 1 for details).

Fig 4. Predicted brain responses of the Code2EEG model compared to the measured EEG.

The black line represents the stimulation pattern, the blue line the predicted brain response and the red line the spatially filtered EEG (120 trials averaged). A: Single stimulus pattern lasting for 1/60 s. B: 30 Hz SSVEP pattern. C: cVEP pattern (m-sequence).

Table 1. Results of the model prediction.

| Subject | EEG2Code ACC [%] | EEG2Code ITR [bpm] | EEG2Code Pt | Code2EEG r | Code2EEG Pt |
|---|---|---|---|---|---|
| S1 | 69.1 | 389.9 | 7.3e−15 | 0.509 | 2.8e−17 |
| S2 | 64.5 | 222.4 | 1.1e−05 | 0.338 | 2.7e−04 |
| S3 | 63.7 | 196.5 | 2.8e−03 | 0.364 | 8.0e−03 |
| S4 | 65.6 | 257.8 | 4.8e−11 | 0.396 | 1.1e−04 |
| S5 | 66.3 | 282.7 | 8.0e−11 | 0.464 | 8.9e−16 |
| S6 | 67.1 | 308.2 | 1.4e−16 | 0.409 | 8.5e−08 |
| S7 | 63.7 | 196.8 | 3.7e−07 | 0.270 | 4.4e−08 |
| S8 | 60.9 | 124.5 | 8.3e−04 | 0.344 | 9.1e−06 |
| S9 | 60.2 | 109.4 | 1.7e−03 | 0.257 | 3.2e−03 |
| mean | 64.6 | 232.0 | 6.0e−04 | 0.384 | 1.3e−03 |

Shown are the average results of all subjects, with the best results in bold font. For the EEG2Code model, the accuracy (ACC) indicates how many bits of the stimulation pattern can be predicted correctly. The ITR is calculated using Eq 5 with N = 2 and T = 1/60 s. Pt are the average p-values under the hypothesis that the correlation between the model prediction and the stimulation pattern is equal to zero. For the Code2EEG model, the correlation (r) between the model prediction and the measured EEG data is given, as well as the average Pt.

Brain-Computer Interface control

As mentioned, both models can be used for BCI control. In the following, we show the online performance in addition to results from an offline analysis in which the trial duration and the number of targets were varied.

Online results

The EEG2Code model was tested in an online BCI with a trial length of 2 s. An inter-trial time of 0.75 s was chosen because previous experiments have shown that 0.5 s is too short for untrained users. The participants, who had never used a BCI before, had to perform 7 runs with fully random stimulation patterns and 7 runs with optimized stimulation patterns. During each run, the participants had to spell each letter in lexicographic order, meaning 32 trials per run and a total of 224 trials per condition. Table 2 shows the target prediction accuracies and the corresponding ITRs. When using completely random stimulation patterns, the average accuracy of target selection is 97.8%, which corresponds to 103.9 bpm. As we found that modulation patterns with a specific number of bit changes lead to better results [15], optimized modulation sequences (details in the Materials and methods section) were also tested, leading to an accuracy of 98.0% (108.1 bpm). Due to a ceiling effect, the difference between random and optimized stimulation patterns is not significant.

Table 2. Results of the online BCI experiment.

| Subject | Optimized ACC [%] | Optimized ITR [bpm] | Random ACC [%] | Random ITR [bpm] |
|---|---|---|---|---|
| S1 | 100.0 | 109.1 | 100.0 | 109.1 |
| S2 | 99.6 | 107.7 | 99.1 | 106.5 |
| S3 | 99.1 | 106.5 | 96.4 | 100.4 |
| S4 | 100.0 | 109.1 | 100.0 | 109.1 |
| S5 | 100.0 | 109.1 | 99.1 | 106.5 |
| S6 | 100.0 | 109.1 | 100.0 | 109.1 |
| S7 | 99.6 | 107.7 | 98.2 | 104.3 |
| S8 | 99.1 | 106.5 | 96.9 | 101.3 |
| S9 | 84.8 | 79.3 | 90.6 | 89.2 |
| mean | 98.0 | 108.1 | 97.8 | 103.9 |

Accuracies (ACC) and information transfer rates (ITR) of the online BCI experiment (EEG2Code model). Shown are the average results of all subjects using random stimulation patterns and optimized stimulation patterns. ITRs are calculated using Eq 5 with N = 32 and T = 2.75s (including an inter-trial time of 0.75s).

Varying trial duration and number of targets

The following results are based on the runs with optimized stimulation patterns. Furthermore, for the ITR calculation we used an inter-trial time of 0.5 s, as this is sufficient for experienced participants.

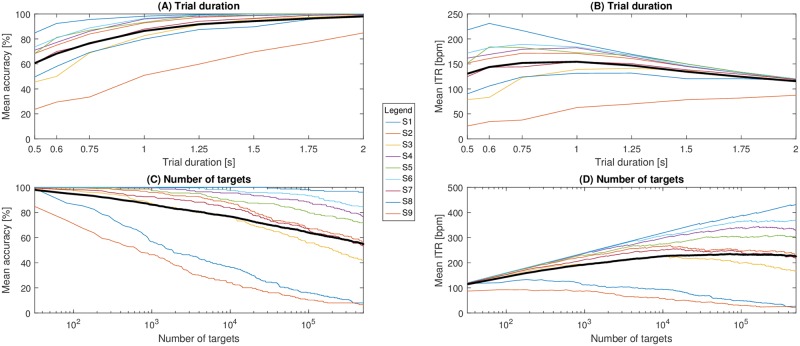

Using the EEG2Code model with 32 targets, varying the trial length from 0.5 s to 2 s shows that the average accuracy increases with longer trials, but the ITR reaches its optimum of 154.3 bpm at a trial duration of 1 s. It is worth mentioning that S1 achieved an ITR of 231.1 bpm with a trial duration of 600 ms, which corresponds to an accuracy of 92.41%. The Code2EEG model is generally less accurate, reaching only 94.4% with 2 s trials, but still has an optimum average ITR of 146.6 bpm at a trial length of 0.75 s. More detailed results for the EEG2Code model are shown in Fig 5A and 5B and for the Code2EEG model in Figs A and B in S1 Fig.

Fig 5. BCI performance using the EEG2Code model.

Shown are the accuracies and ITRs (Eq 5 with N the number of targets and T the trial duration including 0.5 s inter-trial time). Each colored line is one subject and the thick black line represents the mean of all subjects. A and B: using varying trial durations. C and D: using varying number of targets (logarithmic scale).

As both models are not limited to fixed stimulation patterns, but work with arbitrary patterns, the number of targets can be increased. We simulated a BCI with up to 500,000 targets in steps of 50 targets. Fig 5C and 5D shows the accuracies and ITRs of the EEG2Code model relative to the number of targets. The averaged optimum ITR was at 235.3 bpm using 71,930 targets, although the optimum varied largely between subjects. For subject S1 the ITR was still increasing at half a million targets with an an accuracy of 96.3% and an ITR of 432 bpm. Furthermore, S1 achieved an accuracy of 100% for up to 29,500 targets. In S2 Fig are detailed results for S1 showing the target variation for all trial durations, revealing a maximum ITR of 474.5 bpm using 472,700 targets and a trial duration of 1.5 s, which would be, to the best of our knowledge, the highest reported ITR of an offline BCI.

Using the Code2EEG model, the maximum average ITR of 183.8 bpm is reached with 9,600 targets at an average accuracy of 64.93%. Adding more targets decreases the ITR for most of the participants. Detailed results for the Code2EEG model can be found in Figs C and D in S1 Fig.

Comparison to a cVEP BCI speller

A within-subject comparison to a state-of-the-art cVEP BCI speller method [22] was performed using the m-sequence trials of the spatial filter run. The results are listed in Table 3. Using the EEG2Code model trained on trials with random stimulation patterns, an average target prediction accuracy of 89.7% was reached, which corresponds to an ITR of 155.3 bpm (with N = 32 and T = 1.55 s). It should be noted that this model was not trained on any of the m-sequence trials. The EEG2Code model trained on only m-sequence trials performs better, with an accuracy of 90.8% (ITR: 158.6 bpm). Using the OCSVM method, the average accuracy is 93.2% (ITR: 166.5 bpm), but these results are not significantly better than the results of the EEG2Code model trained on only m-sequences (p > 0.05, paired t-test).

Table 3. Performance comparison using m-sequence stimulation for cVEP BCI.

| Subject | EEG2Code (trained on random sequences) | EEG2Code (trained on m-sequences) | OCSVM [22] (trained on m-sequences) |
|---|---|---|---|
| S1 | 100.0% | 100.0% | 100.0% |
| S2 | 94.8% | 97.9% | 98.9% |
| S3 | 50.0% | 39.0% | 53.7% |
| S4 | 100.0% | 99.5% | 100.0% |
| S5 | 98.4% | 98.4% | 98.4% |
| S6 | 95.3% | 96.3% | 96.8% |
| S7 | 97.4% | 98.4% | 97.9% |
| S8 | 86.5% | 91.6% | 93.2% |
| S9 | 84.9% | 95.8% | 99.5% |
| mean | 89.7% | 90.8% | 93.2% |

Shown are the accuracies (ACC) of the target prediction on data where m-sequences were used for stimulation. For EEG2Code, we used both the model trained on random stimulation patterns during the online experiment and the models trained on m-sequence trials using a randomized 10-fold cross-validation (CV). These results are compared to the results of the one-class support vector machine (OCSVM), also obtained with a randomized 10-fold CV. The results are averaged over 192 trials with a duration of 1.05 s.

Discussion

In this paper, we presented a method that models the process of VEP generation and can be used in two directions: to predict the EEG response to a visual stimulation pattern (Code2EEG) or to predict the visual stimulation pattern from the EEG (EEG2Code). Contrary to previous methods [12, 25], the presented method works with arbitrary stimulation patterns, although it is only trained on a limited set of stimulation patterns. We used random stimulation patterns for training because we assume that they cover most of the possible VEP responses.

Using the EEG2Code model, we have demonstrated that a stimulation pattern presented at 60 Hz can be predicted with an average accuracy of 64.6%, which corresponds to an ITR of 232 bpm (with a maximum of 69.1% and 389.9 bpm). It should be noted that these ITRs cannot be compared to ITRs during BCI control but rather serve as a theoretical measure of the information that can be extracted from the EEG data. Additionally, for the ITR we used T = 1/60 s, which is slightly biased due to the required window length of 250 ms; however, the effective T approaches 1/60 s and the bias decreases with increasing trial duration.
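As a rough cross-check of these values, the bit-wise ITR can be worked out by hand. This is a sketch under the assumption that Eq 5 is the standard ITR definition [24], evaluated with N = 2 (each bit is either 0 or 1), the maximum accuracy P = 0.691 and T = 1/60 s:

```latex
\begin{aligned}
B &= \log_2 N + P \log_2 P + (1-P)\log_2\frac{1-P}{N-1} \\
  &= 1 + 0.691 \log_2 0.691 + 0.309 \log_2 0.309 \approx 0.108\ \text{bit}, \\
\mathrm{ITR} &= B \cdot \frac{60}{T} \approx 0.108 \cdot 3600 \approx 389\ \text{bpm}.
\end{aligned}
```

This is close to the reported maximum of 389.9 bpm; the small remaining difference plausibly stems from rounding of the accuracy. Note how little information each single bit carries (about 0.1 bit), so that the high ITR results from the rate of 60 bits per second.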

When using the Code2EEG model to predict the EEG response to a certain stimulation pattern, the correlation between the recorded EEG and the predicted EEG response is r = 0.384. It should be noted that the Code2EEG model only predicts the evoked response in the EEG, and not the noise present in the EEG, so that the correlation between the prediction and the evoked response should actually be higher. On the other hand, a correlation of r = 0.384 means that r² = 14.75% of the variance in the recorded EEG can be explained by the Code2EEG model, thereby giving a lower bound for the signal-to-noise ratio (SNR) of the visual evoked response in the EEG. This is confirmed by comparing the model prediction of the cVEP pattern to the averaged, and thereby noise-reduced, recorded EEG (Fig 4C), which resulted in a correlation of r = 0.551.

As the presented approach is based on the assumption of linearity in the VEP generation process [13, 26], we have shown that most of the VEP response to arbitrary stimulation patterns can be explained by a superposition of single VEP responses. Interestingly, however, the duration of the predicted single-flash VEP response and the onset of the predicted 30 Hz SSVEP response are shortened in time compared to the recorded VEP responses, whereas the sinusoidal part of the 30 Hz SSVEP response matches well. It seems that there is an initially slowed response after a longer stimulation pause, which the model cannot reflect because it is trained using random stimulation patterns in which longer stimulation pauses are unlikely. When using a model trained on sequences with fewer than 3 bit changes, the onset matches better for all stimulation patterns, whereas the remaining prediction matches worse (S3 Fig). This could be evidence that VEP generation is a non-linear process. Furthermore, this could be the reason for the worse stimulation pattern prediction for sequences with fewer bit changes (Fig 3).
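The linearity assumption can be made concrete with a small sketch. Assuming a linear, time-invariant model, the response to any binary stimulation pattern is the convolution of the pattern with a single-flash response, so the response to a combined pattern equals the sum of the responses to its parts. The kernel below is a made-up damped oscillation and not a fitted VEP; using one sample per 60 Hz frame is likewise an assumption for illustration.

```python
import numpy as np

def predict_response(pattern: np.ndarray, flash_response: np.ndarray) -> np.ndarray:
    """Superimpose one copy of the single-flash response per active bit."""
    return np.convolve(pattern, flash_response)[: len(pattern)]

# Hypothetical single-flash response: damped 15 Hz oscillation over 250 ms at 60 Hz
t = np.arange(15) / 60.0
flash_response = np.exp(-t / 0.08) * np.sin(2 * np.pi * 15 * t)

# Single flash lasting one frame, and a 30 Hz flicker (on/off on alternating frames)
single_flash = np.zeros(60)
single_flash[0] = 1.0
ssvep_30hz = np.tile([1.0, 0.0], 30)

flash_vep = predict_response(single_flash, flash_response)
ssvep = predict_response(ssvep_30hz, flash_response)

# Superposition: the response to a + b equals the sum of the individual responses
a = np.zeros(60)
a[[0, 10]] = 1.0
b = np.zeros(60)
b[25] = 1.0
is_linear = np.allclose(
    predict_response(a + b, flash_response),
    predict_response(a, flash_response) + predict_response(b, flash_response),
)
print(is_linear)
```

A model of this kind cannot, by construction, produce a response that depends on how long the preceding stimulation pause was, which is the kind of deviation described above.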

We have also shown that both models can be used for controlling a BCI. When using the EEG2Code model for an online BCI, we achieved an average ITR of 108 bpm and thereby, with an ITR > 100 bpm, fall into the category of high-speed BCIs. However, the performance of our online EEG2Code BCI is below the best results reported for a cVEP BCI (144 bpm [5]) or an SSVEP BCI (267 bpm [7]). It should be noted that the BCI used in the online setup was not optimized to achieve a high ITR, but to be comfortably usable by a BCI-naive person and to provide the data required for the offline analysis. In the offline analysis we have shown that the parameters can be optimized, for example by reducing the trial duration, to achieve an average ITR of 154.3 bpm and up to 231.1 bpm for the best subject. For the best subject, we found a theoretical maximum ITR of 474.5 bpm, although we only simulated results for up to 500,000 targets and the ITR was still rising at that point, so it is likely that the maximum is even higher.

Compared to other types of BCIs, such a high number of possible targets is a unique feature of the EEG2Code BCI. There are other approaches to increasing the number of targets, such as using multiple m-sequences in cVEP BCIs [8, 9] or using sequential coding strategies in SSVEP BCIs [10, 11], but both are still limited and there is, to the best of our knowledge, no other method allowing a virtually unlimited number of possible targets (e.g. 96.3% accuracy for 500,000 targets with a trial length of 2 s for the best subject S1).

With 1000 targets, the average accuracy is still around 90% and drops to around 55% for half a million targets, with the best subject still achieving >95% accuracy. As the Dictionary of Chinese Variant Form compiled by the Taiwan (ROC) Ministry of Education in 2004 contains 106,230 individual characters, this BCI approach would theoretically allow each character of that dictionary to be selected individually. This thought is purely theoretical, however, as there are practical limitations, such as displaying such a large number of targets or finding one among them. Furthermore, increasing the number of targets without increasing the monitor size results in smaller targets, which will also reduce the performance. Nevertheless, those theoretical ITRs are still interesting as they provide a lower bound for the maximum amount of information that is contained in EEG data.

The Code2EEG model resulted in a lower BCI performance compared to the EEG2Code model, but with a trial length of 0.75 s it achieved an average ITR of 146.5 bpm, which is three times higher than the ITR of the re-convolution BBVEP model of Thielen et al. [12], which achieved an average ITR of 48.4 bpm.

It should be noted that the theoretical ITRs obtained in the offline analysis are not meant to compete with state-of-the-art BCI methods, but only to show how much information can be extracted from the EEG with our model. Regarding the comparison to state-of-the-art methods, we performed a within-subject comparison of our EEG2Code model to a state-of-the-art cVEP BCI speller [22] using the same data. The results using the EEG2Code model trained on m-sequence trials are slightly worse, but not significantly (p > 0.05, paired t-test). A comparison to the BCI performance using the optimized random stimulation patterns with 1 s trial duration reveals similar results: with an ITR of 154.3 bpm, the performance is slightly worse. In return, the EEG2Code model using random stimulation patterns offers full flexibility for BCI application design, as both the number of targets and the trial duration can be varied freely. Especially the latter allows the method to be used for an asynchronous BCI, for example by only classifying once a specific correlation is reached, which will be addressed in a future study.

Given the indications of non-linearity discussed above, we expect a better pattern prediction of the EEG2Code model when using a non-linear method. Achieving accuracies above 75% would allow the use of error-correction codes, as known from coding theory. This in turn would make it possible to encode arbitrary information as a binary sequence, transfer it directly through visual stimuli, decode it and correct possible errors. More robust and fully flexible BCI applications could then be built by using the brain as a data transfer channel.
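To illustrate why a higher bit accuracy makes error correction attractive, the sketch below uses the simplest possible scheme, a repetition code with majority voting. This scheme is an assumption chosen for illustration only, as the text does not specify a particular code. It further assumes independent bit errors, which may not hold for EEG-based predictions.

```python
from math import comb

def majority_vote_accuracy(bit_accuracy: float, repetitions: int) -> float:
    """Probability that the majority of independent bit predictions is correct."""
    if repetitions < 1 or repetitions % 2 == 0:
        raise ValueError("use a positive odd number of repetitions to avoid ties")
    p, n = bit_accuracy, repetitions
    # Sum the binomial probabilities of more than half of the bits being correct
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k)
               for k in range(n // 2 + 1, n + 1))

# Effective accuracy per information bit when each bit is sent 1, 3, 5 or 9 times
for n in (1, 3, 5, 9):
    print(n, round(majority_vote_accuracy(0.75, n), 3))
```

Under these assumptions, sending each bit three times raises an accuracy of 75% to about 84%, at the cost of a threefold lower raw bit rate; more efficient codes from coding theory would trade rate for reliability more favorably.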

Finally, the approach to create a general model for the generation of VEPs could also be applied to other stimulation paradigms like sensory or auditory stimulation. Also, such an approach could be utilized for electrical stimulation for therapeutic means, where optimal stimulation parameters need to be found to evoke a certain response [27].

Supporting information

S1 Fig. Shown are the accuracies and ITRs (including 0.5 s inter-trial time). Each colored line is one subject and the thick black line represents the mean of all subjects. A and B: using varying trial durations. C and D: using varying number of targets (logarithmic scale).

(TIF)

S2 Fig. Shown are the accuracies and ITRs (including 0.5 s inter-trial time) using varying number of targets for varying trial durations. Each colored line represents a different trial duration. The maximum ITR of 474.5 bpm is reached using a trial duration of 1.5 s and 472,700 additional targets.

(TIF)

S3 Fig. For the training, only stimulation patterns with < 3 bit changes are used. The black line represents the stimulation pattern, the blue line the predicted brain response and the red line the spatially filtered EEG (120 trials averaged). A: Single stimulus pattern lasting for 1/60 s. B: 30 Hz SSVEP pattern. C: cVEP pattern.

(TIF)

Data Availability

All files are available from the figshare database: https://doi.org/10.6084/m9.figshare.7058900.

Funding Statement

This work was supported by the Deutsche Forschungsgemeinschaft (DFG; grant SP-1533\2-1; www.dfg.de). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Chirapapaisan N, Laotaweerungsawat S, Chuenkongkaew W, Samsen P, Ruangvaravate N, Thuangtong A, et al. Diagnostic value of visual evoked potentials for clinical diagnosis of multiple sclerosis. Documenta Ophthalmologica. 2015;130(1):25–30. doi:10.1007/s10633-014-9466-6

- 2. Blake R, Logothetis NK. Visual competition. Nature Reviews Neuroscience. 2002;3(1):13. doi:10.1038/nrn701

- 3. Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain–computer interfaces for communication and control. Clinical neurophysiology. 2002;113(6):767–791. doi:10.1016/S1388-2457(02)00057-3

- 4. Sutter EE. The visual evoked response as a communication channel. In: Proceedings of the IEEE Symposium on Biosensors; 1984. p. 95–100.

- 5. Spüler M, Rosenstiel W, Bogdan M. Online Adaptation of a c-VEP Brain-Computer Interface (BCI) Based on Error-Related Potentials and Unsupervised Learning. PLOS ONE. 2012;7(12):1–11.

- 6. Bin G, Gao X, Wang Y, Li Y, Hong B, Gao S. A high-speed BCI based on code modulation VEP. Journal of neural engineering. 2011;8(2):025015. doi:10.1088/1741-2560/8/2/025015

- 7. Chen X, Wang Y, Nakanishi M, Gao X, Jung TP, Gao S. High-speed spelling with a noninvasive brain–computer interface. Proceedings of the National Academy of Sciences. 2015;112(44):E6058–E6067. doi:10.1073/pnas.1508080112

- 8. Wei Q, Liu Y, Gao X, Wang Y, Yang C, Lu Z, et al. A novel c-VEP BCI paradigm for increasing the number of stimulus targets based on grouping modulation with different codes. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2018.

- 9. Liu Y, Wei Q, Lu Z. A multi-target brain-computer interface based on code modulated visual evoked potentials. PloS one. 2018;13(8):e0202478. doi:10.1371/journal.pone.0202478

- 10. Zhang Y, Xu P, Liu T, Hu J, Zhang R, Yao D. Multiple frequencies sequential coding for SSVEP-based brain-computer interface. PloS one. 2012;7(3):e29519. doi:10.1371/journal.pone.0029519

- 11. Tong J, Zhu D. Multi-phase cycle coding for SSVEP based brain-computer interfaces. Biomedical engineering online. 2015;14(1):5. doi:10.1186/1475-925X-14-5

- 12. Thielen J, van den Broek P, Farquhar J, Desain P. Broad-Band visually evoked potentials: re (con) volution in brain-computer interfacing. PloS one. 2015;10(7):e0133797. doi:10.1371/journal.pone.0133797

- 13. Capilla A, Pazo-Alvarez P, Darriba A, Campo P, Gross J. Steady-State Visual Evoked Potentials Can Be Explained by Temporal Superposition of Transient Event-Related Responses. PLOS ONE. 2011;6(1):1–15. doi:10.1371/journal.pone.0014543

- 14. Herrmann CS. Human EEG responses to 1–100 Hz flicker: resonance phenomena in visual cortex and their potential correlation to cognitive phenomena. Experimental brain research. 2001;137(3-4):346–353. doi:10.1007/s002210100682

- 15. Nagel S, Rosenstiel W, Spüler M. Random visual evoked potentials (rVEP) for Brain-Computer Interface (BCI) Control. In: Proceedings of the 7th International Brain-Computer Interface Conference; 2017. p. 349–354.

- 16. Matsumoto M, Nishimura T. Mersenne twister: a 623-dimensionally equidistributed uniform pseudo-random number generator. ACM Transactions on Modeling and Computer Simulation (TOMACS). 1998;8(1):3–30. doi:10.1145/272991.272995

- 17. Nagel S, Rosenstiel W, Spüler M. Finding optimal stimulation patterns for BCIs based on visual evoked potentials. In: Proceedings of the 7th International Brain-Computer Interface Meeting. BCI Society; 2018. p. 164–165.

- 18. Schalk G, McFarland DJ, Hinterberger T, Birbaumer N, Wolpaw JR. BCI2000: a general-purpose brain-computer interface (BCI) system. IEEE Transactions on biomedical engineering. 2004;51(6):1034–1043. doi:10.1109/TBME.2004.827072

- 19. MATLAB. version 9.3 (R2017b). Natick, Massachusetts: The MathWorks Inc.; 2017.

- 20. Brainard DH, Vision S. The psychophysics toolbox. Spatial vision. 1997;10:433–436. doi:10.1163/156856897X00357

- 21. Nagel S, Dreher W, Rosenstiel W, Spüler M. The effect of monitor raster latency on VEPs, ERPs and Brain–Computer Interface performance. Journal of neuroscience methods. 2018;295:45–50. doi:10.1016/j.jneumeth.2017.11.018

- 22. Spüler M, Rosenstiel W, Bogdan M. One Class SVM and Canonical Correlation Analysis increase performance in a c-VEP based Brain-Computer Interface (BCI). In: Proceedings of 20th European Symposium on Artificial Neural Networks (ESANN 2012). Bruges, Belgium; 2012. p. 103–108.

- 23. Spüler M, Walter A, Rosenstiel W, Bogdan M. Spatial filtering based on canonical correlation analysis for classification of evoked or event-related potentials in EEG data. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2014;22(6):1097–1103. doi:10.1109/TNSRE.2013.2290870

- 24. Wolpaw JR, Ramoser H, McFarland DJ, Pfurtscheller G. EEG-based communication: improved accuracy by response verification. IEEE transactions on Rehabilitation Engineering. 1998;6(3):326–333. doi:10.1109/86.712231

- 25. Cardona J, Caicedo E, Alfonso W, Chavarriaga R, Millán JdR. Superposition model for steady state visually evoked potentials. In: Systems, Man, and Cybernetics (SMC), 2016 IEEE International Conference on. IEEE; 2016. p. 004477–004482.

- 26. Lalor EC, Pearlmutter BA, Reilly RB, McDarby G, Foxe JJ. The VESPA: a method for the rapid estimation of a visual evoked potential. Neuroimage. 2006;32(4):1549–1561. doi:10.1016/j.neuroimage.2006.05.054

- 27. Walter A, Naros G, Spüler M, Gharabaghi A, Rosenstiel W, Bogdan M. Decoding stimulation intensity from evoked ECoG activity. Neurocomputing. 2014;141:46–53. doi:10.1016/j.neucom.2014.01.048
