Abstract

Artificial intelligence (AI) enables machines to provide unparalleled value in a myriad of industries and applications. In recent years, researchers have harnessed artificial intelligence to analyze large-volume, unstructured medical data and perform clinical tasks, such as the identification of diabetic retinopathy or the diagnosis of cutaneous malignancies. Applications of artificial intelligence techniques, specifically machine learning and more recently deep learning, are beginning to emerge in gastrointestinal endoscopy. The most promising of these efforts have been in computer-aided detection and computer-aided diagnosis of colorectal polyps, with recent systems demonstrating high sensitivity and accuracy even when compared to expert human endoscopists. AI has also been utilized to identify gastrointestinal bleeding, to detect areas of inflammation, and even to diagnose certain gastrointestinal infections. Future work in the field should concentrate on creating seamless integration of AI systems with current endoscopy platforms and electronic medical records, developing training modules to teach clinicians how to use AI tools, and determining the best means for regulation and approval of new AI technology.

Keywords: Artificial intelligence, Machine learning, Gastrointestinal endoscopy, Computer-assisted decision making, Computer-aided detection, Colonic polyps, Colonoscopy, Computer-aided diagnosis, Colorectal adenocarcinoma

Core tip: Artificial intelligence (AI) appears poised to transform several industries, including clinical medicine. Recent advances in AI technology, namely the improvement in computational power and advent of deep learning, will lead to the near-term availability of clinically relevant applications in gastrointestinal endoscopy, such as real-time, high-accuracy colon polyp detection and classification and fast, automatic processing of wireless capsule endoscopy images. Applications of AI toward gastrointestinal endoscopy will likely exponentially rise in the coming years, and attention should be paid toward regulation, approval, and effective implementation of this powerful technology.

INTRODUCTION

Artificial intelligence (AI) has transformed information technology by unlocking large-scale, data-driven solutions to what once were time-intensive problems. Over the past few decades, researchers have successfully demonstrated how AI can improve our ability to perform medical tasks, ranging from the identification of diabetic retinopathy to the diagnosis of cutaneous malignancies[1,2]. As the medical community’s understanding and acceptance of AI grow, so too does our imagination of the many ways in which it can improve patient care, expedite clinical processes, and relieve the burden on medical professionals.

Gastroenterology is a field that requires physicians to perform a myriad of clinical skills, ranging from dexterous manipulation and navigation of endoscopic devices and visual identification and classification of disease to data-driven clinical decision-making. In recent years, AI tools have been designed to help physicians in performing these tasks. Research groups have shown how deep learning can assist with a variety of skills from colonic polyp detection to analysis of wireless capsule endoscopy (WCE) images[3,4]. As the number of applications of AI in gastroenterology expands, it is important to understand the extent of our success and the hurdles that lie ahead. In this review, we aim to (1) provide a brief overview of artificial intelligence technology; (2) describe the ways in which AI has been applied to gastroenterology thus far; (3) discuss what value AI offers to this field; and finally (4) comment on future directions of this technology.

ARTIFICIAL INTELLIGENCE TECHNOLOGY

Artificial intelligence is machine intelligence that mimics human cognitive function[5]. Research in AI began in the 1950s with the earliest applications being in board games, logical reasoning, and simple algebra. Interest in the field grew over the next few decades due to the exponential increase in computational power and data volume.

Machine learning is an artificial intelligence technique in which computers use data to improve their performance in a task without explicit instruction[6]. Examples of machine learning include an application that learns to identify and discard spam emails or a thermostat that learns household temperature preferences over time. Machine learning is often classified into two categories - supervised and unsupervised learning. In supervised learning, a machine is trained with data that contain pairs of inputs and outputs[7]. The machine learns a function to map the inputs to outputs, which can then be applied toward new examples. Linear and logistic regression, which are often employed in clinical research, are examples of supervised machine learning because they produce a regression function that correlates inputs to outputs based on observed data. In unsupervised learning, machines are given data inputs that are not explicitly paired to labels or outputs[7]. The machine is tasked with finding its own structure and patterns from the set of objects. An example of unsupervised learning is clustering, in which a system creates clusters of similar data points from a large data set.
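The distinction between the two categories can be illustrated with a minimal sketch. The function names and toy data below are hypothetical and chosen only for illustration: the first function learns a mapping from labelled input-output pairs (supervised), while the second groups unlabelled values into two clusters (unsupervised).

```python
# Supervised: learn a mapping from labelled (input, output) pairs.
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Unsupervised: find structure in inputs that carry no labels.
def two_means_1d(values, iterations=20):
    """Split 1-D values into two clusters with a basic k-means loop (k = 2)."""
    centers = [min(values), max(values)]
    for _ in range(iterations):
        groups = ([], [])
        for v in values:
            nearest = 0 if abs(v - centers[0]) <= abs(v - centers[1]) else 1
            groups[nearest].append(v)
        # Keep the old center if a cluster happens to be empty.
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers


# Toy data: outputs generated by y = 2x + 1, and two obvious groups of values.
slope, intercept = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
centers = two_means_1d([1.0, 1.2, 0.8, 9.0, 9.5, 8.5])
```

In the supervised case the learned function can be applied to new inputs; in the unsupervised case the cluster centers are a structure the algorithm found without being told what the groups mean.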

Feature learning refers to a set of techniques within machine learning that asks machines to automatically identify features within raw data as opposed to the features being explicitly labeled[8]. This technique enables machines to learn features and infer functions between inputs and outputs without being provided the features in advance. A subset of feature learning is deep learning, which harnesses neural networks modeled after the biological nervous system of animals. Deep learning is especially valuable in clinical medicine because medical data often consist of unstructured text, images, and videos that are not easily processed into explicit features.

Machine learning, and more specifically deep learning, has been widely applied in tasks such as gaming, weather, security, and media. Recent notable examples include AlphaGo beating the world’s premier Go player, facial recognition within iPhone images, and automatic text generation[9-11].

Deep learning has also shown significant promise in performing clinical tasks. Researchers from Stanford trained a deep convolutional neural network (CNN) on 129450 skin lesion images representing 2032 different diseases, and showed that the network performed on par with 21 board-certified dermatologists in distinguishing keratinocyte carcinomas from benign seborrheic keratoses and malignant melanomas from benign nevi[2]. Other research groups have applied machine learning to identify diabetic retinopathy from fundus photographs, classify proliferative breast lesions as benign or malignant, and predict clinical orders[12-14].
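For readers unfamiliar with CNNs, the core operation of a convolutional layer can be sketched in a few lines. This is a simplified illustration, not the architecture used in any of the cited studies: a small kernel slides across the image and produces a feature map, which is then passed through a nonlinearity. In a trained network the kernel weights are learned from data; here a hand-written vertical-edge kernel is assumed for illustration.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` without padding and return the feature map.

    As in most deep learning libraries, this is technically cross-correlation
    (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Rectified linear unit: negative responses are set to zero."""
    return [[max(0, v) for v in row] for row in feature_map]


# Toy image with a dark-to-bright vertical edge, and a hand-set edge kernel.
toy_image = [[0, 0, 1, 1]] * 3
edge_kernel = [[-1, 1]]
feature_map = relu(conv2d_valid(toy_image, edge_kernel))
```

The feature map responds only where the intensity changes from dark to bright. Stacking many such learned filters is what allows a CNN to build its own features from raw images.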

APPLICATIONS OF AI IN GASTROENTEROLOGY

Automatic colonic polyp detection

Automatic colon polyp detection has been one of the primary areas of interest for applications of artificial intelligence in gastrointestinal endoscopy. Generally speaking, automatic polyp detection constructs are designed to alert the endoscopist to the presence of a polyp on the screen through either a digital visual marker or sound.

Numerous studies have demonstrated that endoscopists with higher adenoma detection rates during screening colonoscopy more effectively protect their patients from subsequent risk of colonic cancer[15,16]. Corley et al[15], for example, in their evaluation of 314872 colonoscopies performed by 136 gastroenterologists showed that every 1.0% increase in adenoma detection rate was associated with a 3.0% decrease in the risk of cancer (hazard ratio, 0.97; 95%CI: 0.96 to 0.98). However, adenoma miss rates during screening colonoscopy remain relatively high, and have been estimated to be anywhere from 6%-27%[17]. Reasons for missing polyps are myriad, and can include inadequate mucosal inspection (for instance behind folds in the right colon), lack of recognition of subtle mucosal findings representing flat polyps, and variable prep quality. Importantly, there is evidence that some missed polyps are actually present on the visual field, but are not recognized by the endoscopist[18-20].
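To give a sense of scale for this association, the sketch below compounds the reported hazard ratio over several percentage points of adenoma detection rate (ADR). It assumes that the per-point hazard ratio of 0.97 acts multiplicatively, as in a log-linear proportional hazards model, and that the relationship holds across the range considered. Both are illustrative assumptions rather than results reported by Corley et al, and the function name is hypothetical.

```python
def relative_hazard(adr_gain_points, hr_per_point=0.97):
    """Relative hazard of cancer after raising ADR by `adr_gain_points` points.

    Assumes the per-point hazard ratio compounds multiplicatively; this is an
    illustrative extrapolation, not a finding of the cited study.
    """
    return hr_per_point ** adr_gain_points


for gain in (1, 5, 10):
    print(f"+{gain} points ADR -> relative hazard {relative_hazard(gain):.2f}")
```

Under these assumptions, modest gains in ADR accumulate into a clinically meaningful reduction in hazard, which is the motivation for tools that help endoscopists detect more adenomas.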

In the past two decades, several computer-aided detection (CADe) techniques have been proposed to assist endoscopists in the detection of polyps that would otherwise have been missed[21-24]. The ideal automatic polyp detection tool must have (1) high sensitivity for detection of polyps; (2) a low rate of false positives; and (3) low latency so that polyps can be tracked and identified in near-real time. This last objective eluded researchers until recently, as automatic polyp detection during live or recorded video can be affected by camera motion, strong light reflections, lack of focus of the traditionally used wide-angle lens, variation in polyp size, location and morphology, and the presence of vascular patterns, bubbles, fecal material and other distractors that may produce false positives[25].

CADe in optical colonoscopy was first utilized and validated using still images obtained from endoscopic videos. Most of the modalities described below utilize some combination of the following techniques: pre-processing of an image or series of images to discard noise, a feature extraction tool that identifies and extracts a feature or mix of features within the image (e.g., texture, shape or color), and a machine learning or deep learning classifier that uses these features to identify polyps[25].
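The three stages described above can be sketched as a toy pipeline. This is a deliberately simplified illustration on a small grayscale grid, not any published system: the median filter, the variance-based texture measure, the threshold value, and all function names are assumptions made for the example, and the hand-set rule stands in for a trained classifier.

```python
from statistics import mean, median, pvariance


def denoise(image):
    """Pre-processing: 3x3 median filter; border pixels are left unchanged."""
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            window = [image[r + dr][c + dc]
                      for dr in (-1, 0, 1) for dc in (-1, 0, 1)]
            out[r][c] = median(window)
    return out


def extract_features(image):
    """Feature extraction: mean brightness and a crude texture measure."""
    pixels = [p for row in image for p in row]
    return {"brightness": mean(pixels), "texture": pvariance(pixels)}


def classify(features, texture_threshold=100.0):
    """Classification: hypothetical hand-set rule in place of a trained model."""
    return "suspicious" if features["texture"] > texture_threshold else "normal"


def analyze_frame(image):
    """Run the three stages in order on one frame."""
    return classify(extract_features(denoise(image)))


flat_frame = [[50] * 5 for _ in range(5)]
edge_frame = [[0, 0, 200, 200] for _ in range(4)]
```

In a real system each stage is far more elaborate, but the separation of concerns is the same: cleaner input, a compact description of the image, and a decision made on that description.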

A number of methods for CADe were proposed in the 1990s. Early attempts included the use of region-growing methods - a pixel-based image segmentation approach - for the extraction of large intestinal lumen contours and for the detection of lower gastrointestinal tract pathology[21-23]. By the end of the 1990s, research efforts mostly combined texture, color, or mixed analysis methods with intelligent pattern classification to aid in the detection of lesions in static endoscopic images[23]. These efforts included work targeting both microscopic features and macroscopic characteristics of lesions within the colon in order to predict the likelihood of neoplastic and pre-neoplastic lesions[26,27]. The concurrent development of neural networks helped push the field forward. Early grey-level texture analysis of endoscopic images included utilization of texture spectrum[24], co-occurrence matrices[28,29], Local Binary Pattern (LBP)[30], and wavelet-domain co-occurrence matrix features[31]. Using this last approach, Karkanis et al[31] developed one of the earliest examples of polyp detection software. Known as CoLD (Colorectal Lesions Detector), the software utilized second-order statistical features, calculated on the wavelet transformation of each image to discriminate amongst regions of normal or abnormal tissue. An artificial neural network performed the classification of these features, obtained from still images alone, and the work achieved a detection accuracy of more than 95%[32,33].
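As an illustration of one of the texture descriptors named above, the following is a minimal sketch of the basic 8-neighbour Local Binary Pattern. The neighbour ordering and the use of "greater than or equal to" for the comparison are conventions assumed here; this is not the implementation used in the cited work.

```python
def lbp_code(image, r, c):
    """8-neighbour Local Binary Pattern code for the interior pixel at (r, c)."""
    center = image[r][c]
    # Neighbours visited clockwise, starting at the top-left corner.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    code = 0
    for bit, (dr, dc) in enumerate(offsets):
        if image[r + dr][c + dc] >= center:
            code |= 1 << bit  # set this bit when the neighbour is not darker
    return code


def lbp_histogram(image):
    """Histogram of LBP codes over all interior pixels (256 possible codes)."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            hist[lbp_code(image, r, c)] += 1
    return hist


flat_patch = [[5, 5, 5], [5, 5, 5], [5, 5, 5]]
top_bright_patch = [[9, 9, 9], [1, 5, 1], [1, 1, 1]]
```

The histogram of codes over a region serves as the texture feature vector that is then passed to a classifier.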

Other groups developed methods that utilized color features. Tjoa and Krishnan[34] combined texture spectrum and color histogram features to broadly analyze colon status as “normal” or “abnormal”. In 2003, Karkanis et al[35] used a color feature extraction scheme built on wavelet decomposition (Color Wavelet Covariance or CWC) to develop a computer-aided detection method with a higher sensitivity than previous methods that were built on grey-level features or color-texture inputs. The CWC method demonstrated a 90% sensitivity and 97% specificity for polyp detection when utilized on high-resolution endoscopy video frames[35]. In 2005, Zheng et al[36] created an intelligent clinical decision support tool that utilized a Bayesian fusion scheme combining color, texture and luminal contour information for the detection of bleeding lesions and luminal irregularities in endoscopic images. In 2006, Iakovidis et al[23] developed a pattern recognition framework that accepted standard low-resolution video input and achieved a detection accuracy of greater than 94.5%.

These early works were based on the analysis of static endoscopic images and video frames. Subsequent work focused on translating polyp detection methods to real-time video analysis. In 2016, Tajbakhsh et al[37] developed a CADe system that used a hybrid context-shape approach, whereby context information was used to remove non-polypoid structures from analysis and shape information was used to localize polyps. Using this system, Tajbakhsh et al[37] reported an 88% sensitivity for real-time polyp detection. Perhaps more importantly, this group showed a latency, defined as the time from the first appearance of a polyp in the video to the time of its first detection by the software system, of only 0.3 s. The limitation to this study was its retrospective nature and limited clinical generalizability, as the system was tested on only twenty-five unique polyps[37].
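The latency definition used here can be made explicit with a short sketch. The per-frame boolean lists, the frame rate, and the function name are hypothetical inputs chosen for illustration; they are not taken from the cited study.

```python
def detection_latency_seconds(polyp_visible, polyp_detected, fps):
    """Time from the first frame showing a polyp to its first detection.

    `polyp_visible` and `polyp_detected` are per-frame booleans of equal length.
    Returns None if the polyp never appears or is never detected.
    """
    if True not in polyp_visible:
        return None
    first_visible = polyp_visible.index(True)
    for frame in range(first_visible, len(polyp_detected)):
        if polyp_detected[frame]:
            return (frame - first_visible) / fps
    return None


# Hypothetical clip at 10 frames per second: the polyp appears at frame 2
# and is first flagged by the software at frame 5.
visible = [False, False, True, True, True, True]
detected = [False, False, False, False, False, True]
latency = detection_latency_seconds(visible, detected, fps=10)
```

Measured this way, latency depends on both the per-frame processing time and how many frames the system needs before it commits to a detection.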

Subsequent work in optical colonoscopy focused on validating real-time polyp detection modalities on larger colonoscopy image databases. Fernández-Esparrach et al[38] developed a method for utilizing energy maps based on localization of polyps and their boundaries - a so-called Window Median Depth of Valleys Accumulation (WM-DOVA) energy map method. Using this method on 24 videos containing 31 different polyps, this group demonstrated a sensitivity of 70.4% and a specificity of 72.4% for detection of polyps[38]. Wang et al[25] developed a method that utilized edge-cross section visual features and a rule-based classification to detect “polyp edges”. This Polyp-Alert software was trained on 8 full colonoscopy videos and subsequently tested on 53 randomly selected full videos. The system correctly detected 42 of 43 (97.7%) polyps on the screen and did so with very little latency. However, the software had an average of 36 false-positives per colonoscopy video analyzed[25]. False positives commonly resulted from protruding folds, the appendiceal orifice and ileocecal valve, and areas of the colon with residual fluid[25].

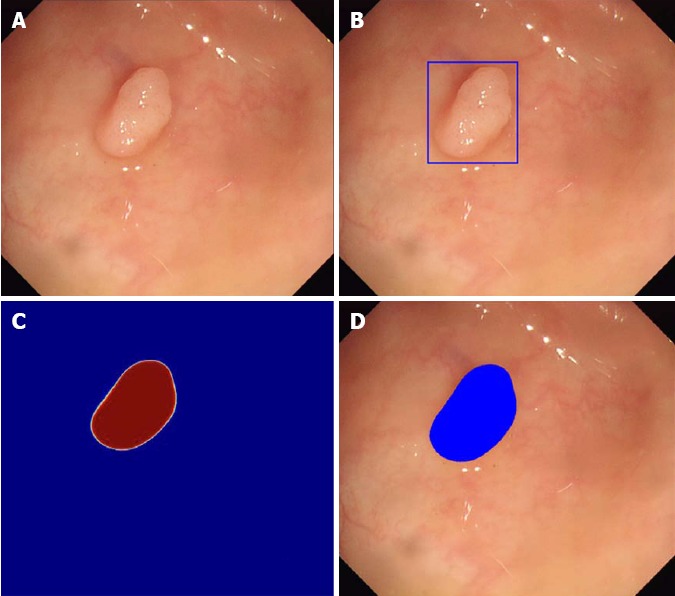

Both of these approaches were based on traditional machine learning methods with explicit feature specification. More recently, several groups have begun to incorporate deep learning methods into CAD systems. At Digestive Disease Week 2016, Li et al[39] presented perhaps the first example of a deep learning system for polyp detection. This group trained a convolutional neural network on 32305 colonoscopy images, and achieved an accuracy of 86% and sensitivity of 73% for polyp detection[39]. This study was instrumental in showing that a deep learning-based computer vision program could accurately identify the presence of colorectal adenomas from colonoscopic images. Wang et al[40] presented their deep learning polyp detection software at the 2017 World Congress of Gastroenterology. This system, built on a SegNet architecture, was developed using a retrospective set of 5545 endoscopist-annotated images from colonoscopies performed in China and subsequently validated prospectively using 27461 colonoscopy images from 1235 patients (Figure 1)[40]. It is currently being tested in a single-center prospective feasibility study[40]. More recently, Misawa et al[41] developed a deep learning-based AI system, which was trained on 105 polyp-positive and 306 polyp-negative videos. The system was tested on a separate data set, and was able to detect 94% of polyps with a false positive detection rate of 60%[41].

Figure 1.

Automatic polyp detection by Wang et al[40]. A: Original image obtained during colonoscopy; B: Automatic detection by box method; C: Probability map whereby red indicates high probability of polyp and blue indicates low probability of polyp; D: Automatic detection by paint method whereby blue coloring indicates location of polyp.

Deep learning methods hold the promise of increasing diagnostic accuracy and processing large amounts of data quickly. Future work must continue to develop methods that balance a high sensitivity with low latency and improved false positive rates.

Optical biopsy

Once a lesion has been detected, computational analysis may help predict polyp histology without the need for tissue biopsy, a subfield sometimes referred to as computer-aided diagnosis (CADx). The field of optical biopsy is several decades old, but the addition of deep learning and the increasing complexity of computational analytic methods have led to recent developments in this field. The ability to diagnose small polyps such as diminutive adenomas in situ via optical diagnosis may allow for adenomas to be resected and discarded rather than sent for sometimes unnecessary histopathologic examination[42]. This “resect and discard” strategy has been estimated to promise upwards of $33 million in savings per year in the United States alone[43]. A similar “diagnose and disregard” strategy has been suggested for diminutive polyps such as hyperplastic polyps in the rectosigmoid colon, where non-neoplastic polyps are identified via optical biopsy and left in place.

Historically, advanced imaging modalities have been the main areas of investigation for optical biopsy. These include chromoendoscopy, narrow spectra technologies (Narrow Band Imaging, i-Scan, and Fujinon intelligent color enhancement), endocytoscopy, and laser-induced fluorescence spectroscopy. In Japan, chromoendoscopy, defined as the topical application of stains or pigments to improve tissue localization during endoscopy, is widely used to further characterize small polyps during standard screening and surveillance colonoscopy[44]. The Kudo pit-pattern is one of the most widely known classification systems used to classify and predict the histopathology of a given lesion[27]. Takayama et al[45] found that chromoendoscopy combined with magnifying endoscopy (in this case an endoscope that magnified images by a factor of 40) achieved a sensitivity for the diagnosis of dysplastic crypt foci of 100%.

Narrow band imaging (NBI) is another endoscopic optical modality where blue and green light is used to enhance the mucosal detail of a polyp in order to better characterize vessel size and pattern[46]. The NBI International Colorectal Endoscopic (NICE) classification uses color, vessels and surface pattern to differentiate between hyperplastic and adenomatous histology[47]. However, NBI, like chromoendoscopy, has been shown to have significant interobserver and intraobserver variability[48,49]. Interobserver variance generally stems from differences in expertise, while intraobserver variance is affected by experience, personal well-being, levels of distraction, and stress[50].

Inter- and intraobserver variance and steep learning curves have likely contributed to the slow pace of adoption of these techniques beyond specialized medical centers. The use of CADx modalities may allow for decreased variance amongst providers, increased standardization, and, perhaps most importantly, more widespread adoption by non-experts in the field[51]. Following a similar developmental trajectory as the field of automatic polyp detection (CADe), the first CADx systems were developed using static colonoscopic images and image series. In 2010, Tischendorf et al[50] developed a computer-based analysis algorithm for colorectal polyps using magnifying NBI, with a subsequent automatic classification scheme using machine learning. This system achieved a sensitivity of 90% compared to a human sensitivity of 93.8% when using the same database of 209 polyp images (with corresponding biopsy)[50]. In a follow-up study on smaller polyps in 2011, Gross et al[52] reported a 95% sensitivity in the computer-based algorithm group compared to a 93.4% sensitivity in a human expert group and 86.0% sensitivity in a human non-expert group. Both of these studies were limited, however, in that they involved off-site computer analysis of static images.

Subsequent work by Takemura et al[53] and Kominami et al[54] translated machine learning methods to real-time clinical use. Takemura et al[53] developed custom software (HuPAS version 3.1, Hiroshima University, Hiroshima, Japan) that utilized a “bag-of-features” representation of NBI images and hierarchical k-means clustering of local features. In an initial study using static images, this group showed a sensitivity of 97.8%, specificity of 97.9%, and accuracy of 97.8% for diagnosis of neoplastic lesions. Diagnostic concordance between the computer-aided classification system and the two experienced endoscopists was 98.7%[53]. In a follow-up study, this same group developed real-time software to automatically recognize polyps, and then analyze and classify them as neoplastic or non-neoplastic[54]. This approach yielded a sensitivity of 93.0%, a specificity of 93.3%, an accuracy of 93.2%, and concordance between the image recognition software and human endoscopic diagnosis of 97.5%[54]. Though this was a study of just 41 patients with 118 colorectal lesions, it was the first of its kind to demonstrate that CADx in real time is feasible and comparable to human diagnostics using magnified NBI.
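The idea behind a “bag-of-features” representation can be sketched as follows. Each local descriptor extracted from an image is assigned to its nearest “visual word”, and the image is summarized by the normalized histogram of word counts. In practice the vocabulary is obtained by clustering descriptors from training images (hierarchical k-means in the system described above); here a tiny hand-written vocabulary and two-dimensional descriptors are assumed purely for illustration, and none of the names below come from the cited software.

```python
def nearest_word(descriptor, vocabulary):
    """Index of the vocabulary entry closest to `descriptor` (squared Euclidean)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(range(len(vocabulary)),
               key=lambda i: sq_dist(descriptor, vocabulary[i]))


def bag_of_features(descriptors, vocabulary):
    """Normalized histogram of visual-word assignments for one image."""
    counts = [0] * len(vocabulary)
    for d in descriptors:
        counts[nearest_word(d, vocabulary)] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else counts


# Hypothetical vocabulary of two visual words and four local descriptors.
vocabulary = [(0.0, 0.0), (10.0, 10.0)]
descriptors = [(1.0, 0.0), (0.0, 1.0), (9.0, 9.0), (11.0, 10.0)]
histogram = bag_of_features(descriptors, vocabulary)
```

The resulting fixed-length histogram can be fed to a conventional classifier regardless of how many local features each image contained.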

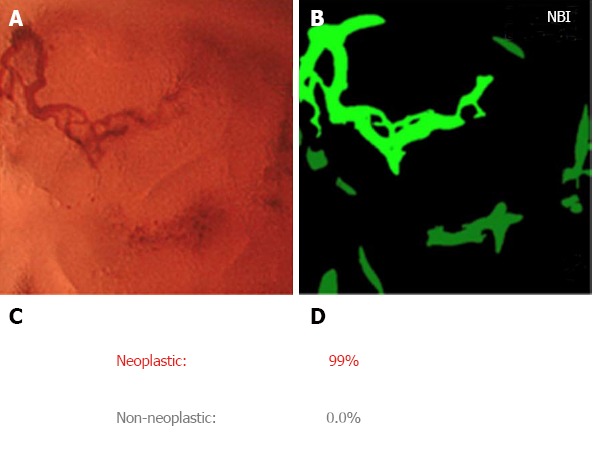

Several other advanced endoscopy imaging modalities have similarly benefited from advances in CAD. Endocytoscopy (EC) is an ultra-high magnification technique that provides images of surface epithelial structures at cellular resolution[55]. In 2015, Mori et al[56] developed the EC-CAD system, a machine-learning CAD system that uses nuclear segmentation and feature extraction to predict pathologic classification (i.e., non-neoplastic, adenoma and cancer, unable to diagnose). In a pilot study consisting of images from 176 polyps and 152 patients, the system showed a sensitivity of 92.0% and specificity of 79.5% compared to a sensitivity of 92.7% and specificity of 91% by expert endoscopists[56]. Misawa et al[57] then developed an EC system that utilized NBI rather than dye staining, and developed a machine learning CAD system referred to as AI-assisted endocytoscopy to analyze EC-NBI images produced by this instrument. This system uses texture analysis and automatic vessel extraction, which is analyzed by a support vector machine and outputs a 2-class diagnosis (non-neoplastic or neoplastic) in real time with a 0.3 second latency[57]. In a recent validation study using 100 randomly selected images of colorectal lesions, the AI-assisted endocytoscopy achieved a sensitivity of 85% for the diagnosis of adenomatous polyps, a specificity of 98%, and an accuracy of 90% (Figure 2)[57]. Mori et al[58] recently reported on the results of a prospective study further studying the AI-assisted endocytoscopy system. This single-center study in Yokohama, Japan involved 88 men and women with 126 polyps. The system demonstrated a sensitivity of 97%, specificity of 67%, accuracy of 83%, and positive and negative predictive values of 78% and 95% with extremely low latency.

Figure 2.

Output from artificial intelligence-assisted endocytoscopy system by Misawa et al[57]. A: Input from endocytoscopy with narrow band imaging; B: Extracted vessel image whereby green light represents extracted vessel image; C: System outputs diagnosis of neoplastic or non-neoplastic; D: Probability of diagnosis calculated by support vector machine classifier. NBI: narrow band imaging.

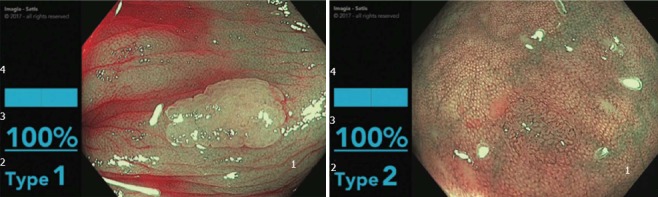

With the advent of deep learning, real-time optical analysis of polyps may be possible using white light alone, without the aid of advanced endoscopic imaging modalities such as chromoendoscopy, NBI, endocytoscopy or laser-induced autofluorescence spectroscopy (Table 1). In 2017, Byrne et al[59] developed and trained a deep convolution neural network (DCNN) on both unaltered white-light and NBI colonoscopy video recordings (Figure 3). The network was tested on 125 videos of consecutively encountered diminutive polyps, and achieved a 94% accuracy of classification for 106 of the 125 videos (for 19 polyps the system was unable to reach a credibility score threshold of ≥ 50%). For these 106 polyp videos, the system was able to detect adenomas with a sensitivity of 98% and a specificity of 83%[59]. Furthermore, the model worked in quasi real-time with a delay of just 50 ms per frame[59]. This work is also significant in that it achieved the diagnostic thresholds of the Preservation and Incorporation of Valuable Endoscopic Innovations initiative of the American Society for Gastrointestinal Endoscopy. This initiative states that in order for optical biopsy to support the “resect and discard” or “diagnose and leave” strategies, there must be ≥ 90% agreement for post-polypectomy surveillance intervals for the “resect and discard” strategy, and ≥ 90% negative predictive value (NPV) for adenomatous histology for the “diagnose and leave” strategy[60].
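The interaction between a credibility threshold and the NPV criterion can be sketched as follows. This is an illustrative model only: the record layout, label strings, and function names are assumptions, the example cases are made up, and the code does not reproduce how the cited system computes its credibility score.

```python
NON_NEOPLASTIC = "non-neoplastic"
ADENOMA = "adenoma"


def confident_only(cases, threshold=0.5):
    """Drop cases whose credibility score is below the threshold (system abstains)."""
    return [c for c in cases if c["credibility"] >= threshold]


def negative_predictive_value(cases):
    """Share of polyps called non-neoplastic that histology confirms as such."""
    called_negative = [c for c in cases if c["predicted"] == NON_NEOPLASTIC]
    if not called_negative:
        return None
    correct = sum(c["histology"] == NON_NEOPLASTIC for c in called_negative)
    return correct / len(called_negative)


def meets_diagnose_and_leave(cases, threshold=0.5, required_npv=0.90):
    """Check the >= 90% NPV criterion on the cases the system was willing to call."""
    npv = negative_predictive_value(confident_only(cases, threshold))
    return npv is not None and npv >= required_npv


# Made-up cases for illustration only; not data from any study.
example_cases = (
    [{"predicted": NON_NEOPLASTIC, "histology": NON_NEOPLASTIC, "credibility": 0.9}] * 9
    + [
        {"predicted": NON_NEOPLASTIC, "histology": ADENOMA, "credibility": 0.8},
        {"predicted": NON_NEOPLASTIC, "histology": ADENOMA, "credibility": 0.3},
        {"predicted": ADENOMA, "histology": ADENOMA, "credibility": 0.95},
    ]
)
```

In this toy data set, abstaining on the low-credibility case raises the NPV from 9/11 to 9/10, which shows why performance figures for such systems should be read together with the number of cases on which the system declined to give an answer.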

Table 1.

Summary of clinical studies involving computer-aided detection and computer-aided diagnosis in real time (during live colonoscopy or video recording)

| Reference | Year | Type of CAD | Endoscopic modality/input | Processing modality | Study design | Sensitivity | Specificity | Accuracy | Latency | Notes |
|---|---|---|---|---|---|---|---|---|---|---|
| Wang et al[25] | 2015 | CADe | White-light endoscopy | Polyp-edge detection algorithm and shot extraction | Retrospective | - | - | 97.7%<sup>1</sup> | 0.02 s | 36 false positives per video |
| Fernández-Esparrach et al[38] | 2016 | CADe | White-light endoscopy | WM-DOVA | Retrospective | 70.4%<sup>2</sup> | 72.4%<sup>2</sup> | - | - | Accuracy and latency not reported for this study |
| Tajbakhsh et al[37] | 2016 | CADe | White-light endoscopy | Hybrid context-shape extractor, edge mapping | Retrospective | 88.0%<sup>2</sup> for CVC-ColonDB; 48.0% for ASU-Mayo | - | - | 0.3 s | 0.1 false positives per frame |
| Wang et al[40] | 2017 | CADe | White-light endoscopy | Deep learning, built on SegNet architecture | Retrospective | 91.6%<sup>2</sup> | 96.3%<sup>2</sup> | 100.0%<sup>1</sup> | 0.04 s | |
| Misawa et al[41] | 2018 | CADe | White-light endoscopy | Deep learning, built on a DCNN | Retrospective | 90.0%<sup>2</sup> | 63.3%<sup>2</sup> | 76.5%<sup>1</sup> | - | |
| Kominami et al[54] | 2016 | CADx | Magnifying NBI | Bag-of-features representation, SVM output | Prospective | 93.0%<sup>3</sup> | 93.3%<sup>3</sup> | 93.2%<sup>4</sup> | - | 97.5% concordance between automatic diagnosis and endoscopic diagnosis |
| Komeda et al[75] | 2017 | CADx | A mix of white-light endoscopy, NBI and chromoendoscopy | Deep learning, built on a CNN | Retrospective | - | - | 75.1%<sup>5</sup> | | |
| Byrne et al[59] | 2017 | CADx | White-light endoscopy and NBI | Deep learning, built on a DCNN | Retrospective | 98.0%<sup>3,6</sup> | 83.0%<sup>3,6</sup> | 94.0%<sup>4</sup> | 0.05 s | For 19 polyps the system was unable to reach a credibility score threshold of ≥ 50% |
| Mori et al[58] | 2017 | CADx | Endocytoscopy and NBI | Texture analysis, automatic vessel extraction, SVM output | Prospective | 97.0%<sup>3</sup> | 67.0%<sup>3</sup> | 83.0%<sup>4</sup> | | |

<sup>1</sup>Tracking accuracy or detection rate, defined as number of polyps detected by software/total number of polyps present in videos;

<sup>2</sup>Sensitivity and specificity for the detection of polyps;

<sup>3</sup>Sensitivity and specificity for the diagnosis of neoplastic versus non-neoplastic lesions;

<sup>4</sup>Accuracy defined as differentiation of adenomas from non-neoplastic lesions;

<sup>5</sup>Accuracy of a 10-fold cross-validation is 0.751, where the accuracy is the ratio of the number of correct answers over the number of all the answers produced by the CNN;

<sup>6</sup>Sensitivity and specificity in this case are calculated based on histology of 106/125 polyps in the video test set. For the remaining 19 polyps the system was unable to reach a credibility score threshold of ≥ 50%.

CADx: Computer-aided diagnosis; CADe: Computer-aided detection; SVM: Support vector machine; WM-DOVA: Window median depth of valleys accumulation; NBI: Narrow band imaging; CNN: Convolution neural network; DCNN: Deep convolution neural network.

Figure 3.

Automatic polyp classification system. 1: Input from narrow band imaging; 2: Computer diagnosis of NICE type 1 (hyperplastic) vs NICE type 2 (adenomatous); 3: Probability of diagnosis; 4: Computer determined confidence in diagnosis probability. Obtained with permission from Dr. Michael Byrne (Division of Gastroenterology at Vancouver General Hospital and UBC).

Future work in this field must by necessity continue to refine sensitivity, specificity, accuracy, PPV and NPV of real-time optical classification methods while working to combine CADe and CADx modalities.

EGD and capsule endoscopy

Compared to applications in colonic polyp detection and classification, there have been fewer applications of deep learning in other areas of gastroenterology. However, the existing applications deserve recognition for their novelty and promise. One notable application is the use of a CNN to diagnose Helicobacter pylori (H. pylori) infection by analysis of gastrointestinal endoscopy images[61]. H. pylori is strongly linked to gastritis, gastroduodenal ulcers, and gastric cancer, so prompt and effective diagnosis and eradication of this infection is important[62]. Existing diagnostic methods for H. pylori infection, including urea breath testing and stool antigen testing, are highly sensitive and specific, but can be logistically difficult to schedule and process. In the study by Itoh et al[61], researchers developed a CNN trained on 149 gastrointestinal endoscopy images and tested on 30 images. The resulting sensitivity and specificity of the CNN for detection of H. pylori infection were both 86.7%, with an AUC of 0.956, which is significantly better than the performance of human endoscopists[61,63].

Deep learning with convolutional neural networks has also been applied toward endoscopic detection of gastric cancer. In 2018, Hirasawa et al[64] constructed a CNN-based diagnostic system which was trained on more than 13000 endoscopic images of gastric cancer. The system was then tested on 2296 images and, in just 47 s, correctly diagnosed 71 of 77 gastric cancer lesions for a sensitivity of 92.2%. However, the positive predictive value was only 30.6% as a result of a large number of false positives. This study highlights the potential of deep learning systems to accurately and quickly detect cancer. One can expect that with more training data and improved computational hardware, both the accuracy and analysis speed will only improve.
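The relationship between these figures can be checked with simple arithmetic. The sketch below back-calculates the denominators implied by the reported percentages: the number of cancer lesions implied by 71 correct diagnoses at 92.2% sensitivity, and the number of positive calls implied by a positive predictive value of 30.6%. The function name is hypothetical, and the derived counts are approximations inferred from rounded percentages rather than numbers quoted from the study.

```python
def implied_denominator(true_positives, reported_rate):
    """Denominator implied by a count of true positives and a reported rate.

    For sensitivity the denominator is the number of true lesions;
    for positive predictive value it is the number of positive calls.
    """
    return true_positives / reported_rate


true_positives = 71

# Sensitivity = TP / (TP + FN)  ->  total lesions is about TP / sensitivity.
implied_lesions = implied_denominator(true_positives, 0.922)

# PPV = TP / (TP + FP)  ->  total positive calls is about TP / PPV.
implied_positive_calls = implied_denominator(true_positives, 0.306)
implied_false_positives = implied_positive_calls - true_positives

print(f"lesions ~ {implied_lesions:.1f}, "
      f"false positives ~ {implied_false_positives:.0f}")
```

The calculation makes the trade-off concrete: a system tuned to miss few cancers can still generate more than two false alarms for every true detection, which matters for how such a tool would be used in practice.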

Several studies have demonstrated applications of deep learning in wireless capsule endoscopy (WCE). A major challenge of WCE for busy clinicians is the time-intensive nature of reviewing the large volume of unstructured images that each study produces. Deep learning addresses both aspects of this problem: it provides quick analysis of large-volume data and uses representation learning to extract its own features from unstructured images. Capsule endoscopy can be used to identify mucosal changes characteristic of celiac disease, but visual diagnosis has low sensitivity[65]. Zhou et al[66] trained a CNN using capsule endoscopy clips from patients with and without celiac disease, and reported a sensitivity and specificity of 100% for distinguishing celiac disease patients from controls in a testing set of ten patients. Further, the study found that the evaluation confidence of the system was correlated with the severity of the small bowel mucosal lesions.

Deep learning in WCE has also been shown to be effective in detection of small bowel bleeding. The first several studies to demonstrate computer-aided diagnosis of bleeding from WCE images used RGB and color texture feature extraction to help distinguish areas of bleeding from non-bleeding[67-69]. More recent studies, including by Xiao et al[70] and Hassan et al[71], used deep learning and feature learning to achieve sensitivities and specificities as high as 99% for detection of gastrointestinal (GI) bleeding. Further research and validation of these models may allow for a fast and highly effective means of detecting GI bleeding, with less work for the interpreting physician.

Similar image processing methods have even been applied to infectious disease detection in WCE. He et al[72] developed a CNN to detect hookworms, a cause of chronic infection affecting an estimated 740 million people in areas of poverty[72,73]. Hookworm infections cause chronic intestinal blood loss resulting in iron-deficiency anemia and hypoalbuminemia, and are especially dangerous in children and women of reproductive age due to their adverse effects in pregnancy[73]. In this study, He et al[72] tested a CNN on 440000 WCE images, and developed a system with high sensitivity and accuracy for hookworm detection. Applications of deep learning to hookworm detection and diagnosis of other infectious diseases in the gastrointestinal tract may provide significant clinical value worldwide, especially in low-resource settings, if the cost of capsule endoscopy can be substantially lowered.

VALUE OF AI IN GASTROENTEROLOGY

As seen from the examples of CAD in gastroenterology described above, there are numerous potential benefits to the development and integration of CADx and CADe systems in everyday practice. In general, using artificial intelligence as an adjunct to standard practices within GI has the potential to improve the speed and accuracy of diagnostic testing while relieving human providers of time-intensive tasks. In addition, CAD systems are not subject to some of the pitfalls of human-based diagnosis, such as inter- and intraobserver variance and fatigue.

We are entering an age where CAD tools, applied in academic research settings, can at least match, and sometimes exceed, human performance for the detection or diagnosis of endoscopic findings in a variety of modalities within gastroenterology[74]. Current prospective studies generally utilize CADe and CADx as a “second reader”, where information derived from CAD systems serves to support the endoscopist’s diagnosis. When used in this fashion, CAD modalities can assist human providers with time-intensive, data-rich tasks. Several studies have shown that human observation of standard colonoscopy video by either nurses or trainees may increase an individual provider’s polyp and adenoma detection rates[18-20]. The CADe systems described above, when integrated into daily practice, may offer a reliable and ever-vigilant “second observer,” which could provide particular value for junior gastroenterologists or endoscopists with low adenoma detection rates[38].
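The “second reader” arrangement can be pictured as a simple reconciliation step: findings reported by the CAD system are compared against those already noted by the endoscopist, and only the unmatched CAD findings are raised for review. The following is a minimal sketch of that idea, not a description of any system cited here. The `Finding` type, the frame-based matching rule, and the tolerance of 15 frames are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    frame: int   # video frame index where the finding was seen
    label: str   # finding type, e.g. "polyp"


def second_reader_alerts(endoscopist, cad, frame_tolerance=15):
    """Return CAD findings that have no matching endoscopist finding.

    Two findings match when they share a label and lie within
    `frame_tolerance` frames of each other (a hypothetical rule).
    """
    alerts = []
    for c in cad:
        matched = any(
            e.label == c.label and abs(e.frame - c.frame) <= frame_tolerance
            for e in endoscopist
        )
        if not matched:
            alerts.append(c)
    return alerts


# Hypothetical example data: the CAD system sees one extra polyp.
endoscopist_findings = [Finding(120, "polyp"), Finding(900, "polyp")]
cad_findings = [
    Finding(125, "polyp"),
    Finding(450, "polyp"),
    Finding(905, "polyp"),
]

for alert in second_reader_alerts(endoscopist_findings, cad_findings):
    print(f"CAD-only finding at frame {alert.frame}: {alert.label}")
```

In this arrangement the CAD output supports the endoscopist’s diagnosis rather than replacing it: matched findings pass through silently, and the clinician decides what to do with each CAD-only alert.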

FUTURE DIRECTIONS

As applications of artificial intelligence in gastroenterology continue to increase, there are several areas of interest that we believe will hold significant value in the future. First, the technical integration of artificial intelligence systems with existing electronic medical records (EMR) and endoscopy platforms will be important to optimize clinical workflow. New AI applications must be able to easily “read in” data from a video input or EMR, allowing the systems to use the data for training and real-time decision support. Seamless integration in the endoscopy suite will be crucial in encouraging clinician adoption.

Second, AI systems must continue to expand their library of clinical applications. As discussed in this review, there are several promising studies that demonstrate how AI can improve our performance on clinical tasks such as polyp identification, detection of small bowel bleeding, and even endoscopic recognition of H. pylori and hookworm infection. Future research should continue to identify new clinical tasks that are well-suited to machine learning tools. For example, analysis of WCE for diagnosis of celiac disease suggests that similar methodologies may be effective in diagnosing inflammatory bowel disease or providing more objective scoring of mucosal IBD activity during treatment. From a performance perspective, AI systems in clinical endoscopy will need to eliminate latency in detection to facilitate the real-world applicability of these technologies.
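The latency requirement can be made concrete as a per-frame time budget: to keep pace with live video, each frame must be processed before the next one arrives. The sketch below illustrates the arithmetic only. The frame rate of 25 frames per second and the processing times are hypothetical values chosen for the example, not measurements from any cited system.

```python
def frame_budget_ms(frames_per_second: float) -> float:
    """Time available to process one frame without falling behind."""
    return 1000.0 / frames_per_second


def frames_over_budget(latencies_ms, frames_per_second):
    """Indices of frames whose processing time exceeded the budget."""
    budget = frame_budget_ms(frames_per_second)
    return [i for i, t in enumerate(latencies_ms) if t > budget]


# ASSUMPTION: hypothetical per-frame processing times in milliseconds.
measured_ms = [21.0, 30.5, 41.2, 28.0, 55.9]
# ASSUMPTION: hypothetical video frame rate; budget is 40 ms per frame.
fps = 25.0

late = frames_over_budget(measured_ms, fps)
print(f"budget per frame: {frame_budget_ms(fps):.1f} ms")
print(f"frames over budget: {late}")
```

A system that regularly exceeds this budget must either drop frames or display detections after the endoscope has moved on, which is the practical concern behind the call to reduce detection latency.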

Third, further research is needed to understand the ethical and pragmatic considerations involved in the integration of artificial intelligence tools in gastroenterology practice. To begin, what is the general physician sentiment toward artificial intelligence? Is AI considered a threat or a tool by the gastroenterology community? A deeper understanding of the end-user is crucial to dictating how these tools should be designed and deployed. If AI tools are accepted by physicians, how will we train individuals to use these technologies effectively? Will the learning curve for using these systems be prohibitive? If so, further research is needed to describe the most effective training methods for physician practices beginning to adopt AI technology. In today’s technology-driven environment, data security is of utmost importance, especially when dealing with protected health information. As the number of AI tools increases, so too should our efforts toward designing security systems and encryption methods to safeguard clinical data. Finally, the clinical community needs to decide on standards for approval and regulation of new AI technologies, and to consider their potential legal implications, including for medical malpractice.

CONCLUSION

Artificial intelligence is an exciting new frontier for clinical gastroenterology. Artificial intelligence techniques like deep learning allow for expedited processing of large-volume unstructured data, and in doing so enable machines to assist clinicians in important tasks, such as polyp detection and classification. Several research groups have shown how artificial intelligence techniques can provide significant clinical value in gastroenterology, and the number of applications will likely continue to expand as computational power and algorithms improve. As the field evolves, a watchful eye is needed to ensure that security, regulation, and ethical standards are upheld.

Footnotes

Conflict-of-interest statement: Dr. Tyler Berzin is a consultant for Boston Scientific and Medtronic, and Dr. Yuichi Mori has received speaking honoraria from Olympus Corp. No other conflicts of interest to declare.

Manuscript source: Invited manuscript

Peer-review started: May 10, 2018

First decision: June 6, 2018

Article in press: June 30, 2018

Specialty type: Gastroenterology and hepatology

Country of origin: United States

Peer-review report classification

Grade A (Excellent): A

Grade B (Very good): B, B

Grade C (Good): 0

Grade D (Fair): 0

Grade E (Poor): 0

P- Reviewer: Poskus T, Shi H, Zhang QS; S- Editor: Wang JL; L- Editor: A; E- Editor: Wu YXJ

Contributor Information

Muthuraman Alagappan, Center for Advanced Endoscopy, Beth Israel Deaconess Medical Center, Harvard Medical School, Boston, MA 02215, United States.

Jeremy R Glissen Brown, Center for Advanced Endoscopy, Beth Israel Deaconess Medical Center, Harvard Medical School, Boston, MA 02215, United States.

Yuichi Mori, Digestive Disease Center, Showa University Northern Yokohama Hospital, Yokohama, Japan.

Tyler M Berzin, Center for Advanced Endoscopy, Beth Israel Deaconess Medical Center, Harvard Medical School, Boston, MA 02215, United States. tberzin@bidmc.harvard.edu.

References

1. Ting DSW, Cheung CY, Lim G, Tan GSW, Quang ND, Gan A, Hamzah H, Garcia-Franco R, San Yeo IY, Lee SY, et al. Development and Validation of a Deep Learning System for Diabetic Retinopathy and Related Eye Diseases Using Retinal Images From Multiethnic Populations With Diabetes. JAMA. 2017;318:2211–2223. doi: 10.1001/jama.2017.18152.

2. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, Thrun S. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–118. doi: 10.1038/nature21056.

3. Mori Y, Kudo SE, Berzin TM, Misawa M, Takeda K. Computer-aided diagnosis for colonoscopy. Endoscopy. 2017;49:813–819. doi: 10.1055/s-0043-109430.

4. Yuan Y, Meng MQ. Deep learning for polyp recognition in wireless capsule endoscopy images. Med Phys. 2017;44:1379–1389. doi: 10.1002/mp.12147.

5. Poole DL, Mackworth AK, Goebel R. Computational intelligence: A logical approach. New York: Oxford University Press; 1998.

6. Mitchell TM. Machine learning. New York: McGraw-Hill; 1997. p. 414.

7. Russell SJ, Norvig P. Artificial intelligence: a modern approach (3rd Edition). Upper Saddle River: Prentice Hall; 2010. p. 1132.

8. Bengio Y, Courville A, Vincent P. Representation learning: a review and new perspectives. IEEE Trans Pattern Anal Mach Intell. 2013;35:1798–1828. doi: 10.1109/TPAMI.2013.50.

9. Silver D, Schrittwieser J, Simonyan K, Antonoglou I, Huang A, Guez A, Hubert T, Baker L, Lai M, Bolton A, et al. Mastering the game of Go without human knowledge. Nature. 2017;550:354–359. doi: 10.1038/nature24270.

10. Computer Vision Machine Learning Team. An On-device Deep Neural Network for Face Detection. Apple Machine Learning J. 2017:1.

11. Sutskever I, Martens J, Hinton G. Generating text with recurrent neural networks. Proceedings of the 28th International Conference on Machine Learning; 2011 Jun 28-Jul 2; Bellevue, Washington, USA.

12. Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, Venugopalan S, Widner K, Madams T, Cuadros J, et al. Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs. JAMA. 2016;316:2402–2410. doi: 10.1001/jama.2016.17216.

13. Radiya-Dixit E, Zhu D, Beck AH. Automated Classification of Benign and Malignant Proliferative Breast Lesions. Sci Rep. 2017;7:9900. doi: 10.1038/s41598-017-10324-y.

14. Chen JH, Alagappan M, Goldstein MK, Asch SM, Altman RB. Decaying relevance of clinical data towards future decisions in data-driven inpatient clinical order sets. Int J Med Inform. 2017;102:71–79. doi: 10.1016/j.ijmedinf.2017.03.006.

15. Corley DA, Jensen CD, Marks AR, Zhao WK, Lee JK, Doubeni CA, Zauber AG, de Boer J, Fireman BH, Schottinger JE, et al. Adenoma detection rate and risk of colorectal cancer and death. N Engl J Med. 2014;370:1298–1306. doi: 10.1056/NEJMoa1309086.

16. Coe SG, Wallace MB. Assessment of adenoma detection rate benchmarks in women versus men. Gastrointest Endosc. 2013;77:631–635. doi: 10.1016/j.gie.2012.12.001.

17. Ahn SB, Han DS, Bae JH, Byun TJ, Kim JP, Eun CS. The Miss Rate for Colorectal Adenoma Determined by Quality-Adjusted, Back-to-Back Colonoscopies. Gut Liver. 2012;6:64–70. doi: 10.5009/gnl.2012.6.1.64.

18. Aslanian HR, Shieh FK, Chan FW, Ciarleglio MM, Deng Y, Rogart JN, Jamidar PA, Siddiqui UD. Nurse observation during colonoscopy increases polyp detection: a randomized prospective study. Am J Gastroenterol. 2013;108:166–172. doi: 10.1038/ajg.2012.237.

19. Lee CK, Park DI, Lee SH, Hwangbo Y, Eun CS, Han DS, Cha JM, Lee BI, Shin JE. Participation by experienced endoscopy nurses increases the detection rate of colon polyps during a screening colonoscopy: a multicenter, prospective, randomized study. Gastrointest Endosc. 2011;74:1094–1102. doi: 10.1016/j.gie.2011.06.033.

20. Buchner AM, Shahid MW, Heckman MG, Diehl NN, McNeil RB, Cleveland P, Gill KR, Schore A, Ghabril M, Raimondo M, et al. Trainee participation is associated with increased small adenoma detection. Gastrointest Endosc. 2011;73:1223–1231. doi: 10.1016/j.gie.2011.01.060.

21. Krishnan SM, Tan CS, Chan KL, editors. Closed-boundary extraction of large intestinal lumen. Proceedings of the 16th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; 1994 Nov 3-6; Baltimore, USA. Piscataway, NJ: IEEE Service Center, 1994.

22. Krishnan SM, Yang X, Chan KL, Kumar S, Goh PMY, editors. Intestinal abnormality detection from endoscopic images. Proceedings of the 20th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; 1998 Nov 1-1; Hong Kong, China. Piscataway, NJ: IEEE Service Center, 1998.

23. Iakovidis DK, Maroulis DE, Karkanis SA. An intelligent system for automatic detection of gastrointestinal adenomas in video endoscopy. Comput Biol Med. 2006;36:1084–1103. doi: 10.1016/j.compbiomed.2005.09.008.

24. Karkanis S, Galousi K, Maroulis D, editors. Classification of endoscopic images based on texture spectrum. Proceedings of Workshop on Machine Learning in Medical Applications, Advance Course in Artificial Intelligence-ACAI99; 1999 Jul 15; Chania, Greece.

25. Wang Y, Tavanapong W, Wong J, Oh JH, de Groen PC. Polyp-Alert: near real-time feedback during colonoscopy. Comput Methods Programs Biomed. 2015;120:164–179. doi: 10.1016/j.cmpb.2015.04.002.

26. Esgiar AN, Naguib RN, Sharif BS, Bennett MK, Murray A. Microscopic image analysis for quantitative measurement and feature identification of normal and cancerous colonic mucosa. IEEE Trans Inf Technol Biomed. 1998;2:197–203. doi: 10.1109/4233.735785.

27. Kudo S, Tamura S, Nakajima T, Yamano H, Kusaka H, Watanabe H. Diagnosis of colorectal tumorous lesions by magnifying endoscopy. Gastrointest Endosc. 1996;44:8–14. doi: 10.1016/s0016-5107(96)70222-5.

28. Karkanis S, Magoulas GD, Grigoriadou M, Schurr M, editors. Detecting abnormalities in colonoscopic images by textural description and neural networks. Proceedings of Workshop on Machine Learning in Medical Applications, Advance Course in Artificial Intelligence-ACAI99; 1999 Jul 15; Chania, Greece.

29. Magoulas GD, Plagianakos VP, Vrahatis MN. Neural network-based colonoscopic diagnosis using on-line learning and differential evolution. Applied Soft Computing. 2004;4:369–379.

30. Wang P, Krishnan SM, Kugean C, Tjoa MP, editors. Classification of endoscopic images based on texture and neural network. Proceedings of the 23rd IEEE Engineering in Medicine and Biology; 2001 Oct 25-28; Istanbul, Turkey. Piscataway, NJ: IEEE Service Center, 2001.

31. Karkanis SA, Magoulas GD, Iakovidis DK, Karras DA, Maroulis DE, editors. Evaluation of textural feature extraction schemes for neural network-based interpretation of regions in medical images. Proceedings of the IEEE International Conference on Image Processing; 2001 Oct 7-10; Thessaloniki, Greece. Piscataway, NJ: IEEE Service Center, 2001.

32. Karkanis SA, Iakovidis DK, Karras DA, Maroulis DE, editors. Detection of lesions in endoscopic video using textural descriptors on wavelet domain supported by artificial neural network architectures. Proceedings of the IEEE International Conference on Image Processing; 2001 Oct 7-10; Thessaloniki, Greece. Piscataway, NJ: IEEE Service Center, 2001.

33. Maroulis DE, Iakovidis DK, Karkanis SA, Karras DA. CoLD: a versatile detection system for colorectal lesions in endoscopy video-frames. Comput Methods Programs Biomed. 2003;70:151–166. doi: 10.1016/s0169-2607(02)00007-x.

34. Tjoa MP, Krishnan SM. Feature extraction for the analysis of colon status from the endoscopic images. Biomed Eng Online. 2003;2:9. doi: 10.1186/1475-925X-2-9.

35. Karkanis SA, Iakovidis DK, Maroulis DE, Karras DA, Tzivras M. Computer-aided tumor detection in endoscopic video using color wavelet features. IEEE Trans Inf Technol Biomed. 2003;7:141–152. doi: 10.1109/titb.2003.813794.

36. Zheng MM, Krishnan SM, Tjoa MP. A fusion-based clinical decision support for disease diagnosis from endoscopic images. Comput Biol Med. 2005;35:259–274. doi: 10.1016/j.compbiomed.2004.01.002.

37. Tajbakhsh N, Gurudu SR, Liang J. Automated Polyp Detection in Colonoscopy Videos Using Shape and Context Information. IEEE Trans Med Imaging. 2016;35:630–644. doi: 10.1109/TMI.2015.2487997.

38. Fernández-Esparrach G, Bernal J, López-Cerón M, Córdova H, Sánchez-Montes C, Rodríguez de Miguel C, Sánchez FJ. Exploring the clinical potential of an automatic colonic polyp detection method based on the creation of energy maps. Endoscopy. 2016;48:837–842. doi: 10.1055/s-0042-108434.

39. Li T, Cohen J, Craig M, Tsourides K, Mahmud N, Berzin TM. The Next Endoscopic Frontier: A Novel Computer Vision Program Accurately Identifies Colonoscopic Colorectal Adenomas. Gastrointestinal Endoscopy. 2016;83:AB482.

40. Wang P, Xiao X, Liu J, Li L, Tu M, He J, Hu X, Xiong F, Xin Y, Liu X. A Prospective Validation of Deep Learning for Polyp Auto-detection during Colonoscopy. World Congress of Gastroenterology 2017; 2017 Oct 13-18; Orlando, USA.

41. Misawa M, Kudo SE, Mori Y, Cho T, Kataoka S, Yamauchi A, Ogawa Y, Maeda Y, Takeda K, Ichimasa K, et al. Artificial Intelligence-Assisted Polyp Detection for Colonoscopy: Initial Experience. Gastroenterology. 2018;154:2027–2029.e3. doi: 10.1053/j.gastro.2018.04.003.

42. Wilson AI, Saunders BP. New paradigms in polypectomy: resect and discard, diagnose and disregard. Gastrointest Endosc Clin N Am. 2015;25:287–302. doi: 10.1016/j.giec.2014.12.001.

43. Hassan C, Pickhardt PJ, Rex DK. A resect and discard strategy would improve cost-effectiveness of colorectal cancer screening. Clin Gastroenterol Hepatol. 2010;8:865–869, 869.e1-869.e3. doi: 10.1016/j.cgh.2010.05.018.

44. Fennerty MB. Tissue staining. Gastrointest Endosc Clin N Am. 1994;4:297–311.

45. Takayama T, Katsuki S, Takahashi Y, Ohi M, Nojiri S, Sakamaki S, Kato J, Kogawa K, Miyake H, Niitsu Y. Aberrant crypt foci of the colon as precursors of adenoma and cancer. N Engl J Med. 1998;339:1277–1284. doi: 10.1056/NEJM199810293391803.

46. Gono K, Obi T, Yamaguchi M, Ohyama N, Machida H, Sano Y, Yoshida S, Hamamoto Y, Endo T. Appearance of enhanced tissue features in narrow-band endoscopic imaging. J Biomed Opt. 2004;9:568–577. doi: 10.1117/1.1695563.

47. Hewett DG, Kaltenbach T, Sano Y, Tanaka S, Saunders BP, Ponchon T, Soetikno R, Rex DK. Validation of a simple classification system for endoscopic diagnosis of small colorectal polyps using narrow-band imaging. Gastroenterology. 2012;143:599–607.e1. doi: 10.1053/j.gastro.2012.05.006.

48. Rogart JN, Jain D, Siddiqui UD, Oren T, Lim J, Jamidar P, Aslanian H. Narrow-band imaging without high magnification to differentiate polyps during real-time colonoscopy: improvement with experience. Gastrointest Endosc. 2008;68:1136–1145. doi: 10.1016/j.gie.2008.04.035.

49. Sikka S, Ringold DA, Jonnalagadda S, Banerjee B. Comparison of white light and narrow band high definition images in predicting colon polyp histology, using standard colonoscopes without optical magnification. Endoscopy. 2008;40:818–822. doi: 10.1055/s-2008-1077437.

50. Tischendorf JJ, Gross S, Winograd R, Hecker H, Auer R, Behrens A, Trautwein C, Aach T, Stehle T. Computer-aided classification of colorectal polyps based on vascular patterns: a pilot study. Endoscopy. 2010;42:203–207. doi: 10.1055/s-0029-1243861.

51. Byrne MF, Shahidi N, Rex DK. Will Computer-Aided Detection and Diagnosis Revolutionize Colonoscopy? Gastroenterology. 2017;153:1460–1464.e1. doi: 10.1053/j.gastro.2017.10.026.

52. Gross S, Trautwein C, Behrens A, Winograd R, Palm S, Lutz HH, Schirin-Sokhan R, Hecker H, Aach T, Tischendorf JJ. Computer-based classification of small colorectal polyps by using narrow-band imaging with optical magnification. Gastrointest Endosc. 2011;74:1354–1359. doi: 10.1016/j.gie.2011.08.001.

53. Takemura Y, Yoshida S, Tanaka S, Kawase R, Onji K, Oka S, Tamaki T, Raytchev B, Kaneda K, Yoshihara M, et al. Computer-aided system for predicting the histology of colorectal tumors by using narrow-band imaging magnifying colonoscopy (with video). Gastrointest Endosc. 2012;75:179–185. doi: 10.1016/j.gie.2011.08.051.

54. Kominami Y, Yoshida S, Tanaka S, Sanomura Y, Hirakawa T, Raytchev B, Tamaki T, Koide T, Kaneda K, Chayama K. Computer-aided diagnosis of colorectal polyp histology by using a real-time image recognition system and narrow-band imaging magnifying colonoscopy. Gastrointest Endosc. 2016;83:643–649. doi: 10.1016/j.gie.2015.08.004.

55. Inoue H, Kudo SE, Shiokawa A. Technology insight: Laser-scanning confocal microscopy and endocytoscopy for cellular observation of the gastrointestinal tract. Nat Clin Pract Gastroenterol Hepatol. 2005;2:31–37. doi: 10.1038/ncpgasthep0072.

56. Mori Y, Kudo SE, Wakamura K, Misawa M, Ogawa Y, Kutsukawa M, Kudo T, Hayashi T, Miyachi H, Ishida F, et al. Novel computer-aided diagnostic system for colorectal lesions by using endocytoscopy (with videos). Gastrointest Endosc. 2015;81:621–629. doi: 10.1016/j.gie.2014.09.008.

57. Misawa M, Kudo SE, Mori Y, Nakamura H, Kataoka S, Maeda Y, Kudo T, Hayashi T, Wakamura K, Miyachi H, et al. Characterization of Colorectal Lesions Using a Computer-Aided Diagnostic System for Narrow-Band Imaging Endocytoscopy. Gastroenterology. 2016;150:1531–1532.e3. doi: 10.1053/j.gastro.2016.04.004.

58. Mori Y, Kudo S, Misawa M, Takeda K, Ichimasa K, Ogawa Y, Maeda Y, Kudo T, Wakamura K, Hayashi T, et al. Diagnostic yield of “artificial intelligence”-assisted endocytoscopy for colorectal polyps: a prospective study. United European Gastroenterol J. 2017;5:A1–A160.

59. Byrne MF, Chapados N, Soudan F, Oertel C, Linares Pérez M, Kelly R, Iqbal N, Chandelier F, Rex DK. Real-time differentiation of adenomatous and hyperplastic diminutive colorectal polyps during analysis of unaltered videos of standard colonoscopy using a deep learning model. Gut. 2017;pii:gutjnl-2017-314547. doi: 10.1136/gutjnl-2017-314547.

60. Rex DK, Kahi C, O’Brien M, Levin TR, Pohl H, Rastogi A, Burgart L, Imperiale T, Ladabaum U, Cohen J, et al. The American Society for Gastrointestinal Endoscopy PIVI (Preservation and Incorporation of Valuable Endoscopic Innovations) on real-time endoscopic assessment of the histology of diminutive colorectal polyps. Gastrointest Endosc. 2011;73:419–422. doi: 10.1016/j.gie.2011.01.023.

61. Itoh T, Kawahira H, Nakashima H, Yata N. Deep learning analyzes Helicobacter pylori infection by upper gastrointestinal endoscopy images. Endosc Int Open. 2018;6:E139–E144. doi: 10.1055/s-0043-120830.

62. Goodwin CS. Helicobacter pylori gastritis, peptic ulcer, and gastric cancer: clinical and molecular aspects. Clin Infect Dis. 1997;25:1017–1019. doi: 10.1086/516077.

63. Bah A, Saraga E, Armstrong D, Vouillamoz D, Dorta G, Duroux P, Weber B, Froehlich F, Blum AL, Schnegg JF. Endoscopic features of Helicobacter pylori-related gastritis. Endoscopy. 1995;27:593–596. doi: 10.1055/s-2007-1005764.

64. Hirasawa T, Aoyama K, Tanimoto T, Ishihara S, Shichijo S, Ozawa T, Ohnishi T, Fujishiro M, Matsuo K, Fujisaki J, et al. Application of artificial intelligence using a convolutional neural network for detecting gastric cancer in endoscopic images. Gastric Cancer. 2018;21:653–660. doi: 10.1007/s10120-018-0793-2.

65. Petroniene R, Dubcenco E, Baker JP, Ottaway CA, Tang SJ, Zanati SA, Streutker CJ, Gardiner GW, Warren RE, Jeejeebhoy KN. Given capsule endoscopy in celiac disease: evaluation of diagnostic accuracy and interobserver agreement. Am J Gastroenterol. 2005;100:685–694. doi: 10.1111/j.1572-0241.2005.41069.x.

66. Zhou T, Han G, Li BN, Lin Z, Ciaccio EJ, Green PH, Qin J. Quantitative analysis of patients with celiac disease by video capsule endoscopy: A deep learning method. Comput Biol Med. 2017;85:1–6. doi: 10.1016/j.compbiomed.2017.03.031.

67. Pan G, Yan G, Song X, Qiu X. BP neural network classification for bleeding detection in wireless capsule endoscopy. J Med Eng Technol. 2009;33:575–581. doi: 10.1080/03091900903111974.

68. Fu Y, Zhang W, Mandal M, Meng MQ. Computer-aided bleeding detection in WCE video. IEEE J Biomed Health Inform. 2014;18:636–642. doi: 10.1109/JBHI.2013.2257819.

69. Li B, Meng MQ. Computer-aided detection of bleeding regions for capsule endoscopy images. IEEE Trans Biomed Eng. 2009;56:1032–1039. doi: 10.1109/TBME.2008.2010526.

70. Xiao J, Meng MQ. A deep convolutional neural network for bleeding detection in Wireless Capsule Endoscopy images. Conf Proc IEEE Eng Med Biol Soc. 2016;2016:639–642. doi: 10.1109/EMBC.2016.7590783.

71. Hassan AR, Haque MA. Computer-aided gastrointestinal hemorrhage detection in wireless capsule endoscopy videos. Comput Methods Programs Biomed. 2015;122:341–353. doi: 10.1016/j.cmpb.2015.09.005.

72. He JY, Wu X, Jiang YG, Peng Q, Jain R. Hookworm Detection in Wireless Capsule Endoscopy Images With Deep Learning. IEEE Trans Image Process. 2018;27:2379–2392. doi: 10.1109/TIP.2018.2801119.

73. Hotez PJ, Brooker S, Bethony JM, Bottazzi ME, Loukas A, Xiao S. Hookworm infection. N Engl J Med. 2004;351:799–807. doi: 10.1056/NEJMra032492.

74. East JE, Vleugels JL, Roelandt P, Bhandari P, Bisschops R, Dekker E, Hassan C, Horgan G, Kiesslich R, Longcroft-Wheaton G, et al. Advanced endoscopic imaging: European Society of Gastrointestinal Endoscopy (ESGE) Technology Review. Endoscopy. 2016;48:1029–1045. doi: 10.1055/s-0042-118087.

75. Komeda Y, Handa H, Watanabe T, Nomura T, Kitahashi M, Sakurai T, Okamoto A, Minami T, Kono M, Arizumi T, et al. Computer-Aided Diagnosis Based on Convolutional Neural Network System for Colorectal Polyp Classification: Preliminary Experience. Oncology. 2017;93 Suppl 1:30–34. doi: 10.1159/000481227.