Abstract

We consider perceptual learning -- experience-induced changes in the way perceivers extract information. Often neglected in scientific accounts of learning and in instruction, perceptual learning is a fundamental contributor to human expertise and is likely crucial in domains where humans show remarkable levels of attainment, such as chess, music, and mathematics. In Section II, we give a brief history and discuss the relation of perceptual learning to other forms of learning. In Section III, we consider several specific phenomena, illustrating the scope and characteristics of perceptual learning, including both discovery and fluency effects. We describe abstract perceptual learning, in which structural relationships are discovered and recognized in novel instances that do not share constituent elements or basic features. In Section IV, we consider primary concepts that have been used to explain and model perceptual learning, including receptive field change, selection, and relational recoding. In Section V, we consider the scope of perceptual learning, contrasting recent research, focused on simple sensory discriminations, with earlier work that emphasized extraction of invariance from varied instances in more complex tasks. Contrary to some recent views, we argue that perceptual learning should not be confined to changes in early sensory analyzers. Phenomena at various levels, we suggest, can be unified by models that emphasize discovery and selection of relevant information. In a final section, we consider the potential role of perceptual learning in educational settings. Most instruction emphasizes facts and procedures that can be verbalized, whereas expertise depends heavily on implicit pattern recognition and selective extraction skills acquired through perceptual learning. 
We consider reasons why perceptual learning has not been systematically addressed in traditional instruction, and we describe recent successful efforts to create a technology of perceptual learning in areas such as aviation, mathematics, and medicine. Research in perceptual learning promises to advance scientific accounts of learning, and perceptual learning technology may offer similar promise in improving education.

Keywords: perceptual learning, expertise, pattern recognition, automaticity, cognition, education

I. Introduction

On a good day, the best human chess grandmaster can beat the world’s best chess-playing computer. The computer program is no slouch; every second, it examines upwards of 200 million possible moves. Its makers incorporate sophisticated methods for evaluating positions, and they implement strategies based on advice from grandmaster consultants. Yet, not even this formidable array of techniques gives the computer a clear advantage over the best human player.

If chess performance were based on raw search, the human would not present the slightest problem for the computer. Estimates of human search through possible moves in chess suggest that even the best players examine on the order of 4 possible move sequences, each about 4 plies deep (where a ply is a single turn by one side, so that two plies make one full move). That estimate is per turn, not per second, and a single turn may take many seconds. Assuming the computer were limited to 10 sec of search per turn, the human would be at a disadvantage of about 1,999,999,984 moves searched per turn.
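The arithmetic behind the 1,999,999,984 figure can be checked in a few lines. This is a minimal Python sketch. It assumes, as the text appears to, that each ply in each of the human's 4 sequences counts as one move searched (4 × 4 = 16). It also assumes the computer searches 200 million moves per second for 10 seconds per turn.

```python
# Back-of-the-envelope check of the human-vs-computer search gap per turn.
COMPUTER_MOVES_PER_SEC = 200_000_000  # "upwards of 200 million" per second
SECONDS_PER_TURN = 10                 # assumed search budget per turn
HUMAN_SEQUENCES = 4                   # ~4 move sequences considered
HUMAN_PLIES_PER_SEQUENCE = 4          # each ~4 plies deep


def search_gap(moves_per_sec, seconds, sequences, plies):
    """Return (computer moves, human moves, difference) for one turn."""
    computer = moves_per_sec * seconds
    human = sequences * plies  # counts every ply of every sequence once
    return computer, human, computer - human


computer, human, gap = search_gap(
    COMPUTER_MOVES_PER_SEC, SECONDS_PER_TURN, HUMAN_SEQUENCES, HUMAN_PLIES_PER_SEQUENCE
)
print(f"computer: {computer:,} moves per turn")  # prints: computer: 2,000,000,000 moves per turn
print(f"human: {human} moves per turn")          # prints: human: 16 moves per turn
print(f"gap: {gap:,} moves per turn")            # prints: gap: 1,999,999,984 moves per turn
```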

Given this disparity, how is it possible for the human to outplay the machine? The accomplishment suggests information processing abilities of remarkable power but mysterious nature. Whatever the human is doing, it is, at its best, roughly equivalent to 2 billion moves of raw search per turn. “Magical” would not seem too strong a description for such abilities.

We have not yet said what abilities these are, but before doing so, we add one more striking observation. Biological systems often display remarkable structures and capacities that have emerged as evolutionary adaptations to serve particular functions. Compared to machines that fly, for example, the capabilities of a dragonfly or hummingbird (or even the lowly mosquito) are astonishing. Yet the information processing capabilities we are considering may be seen as all the more remarkable because they do not appear to be adaptations specialized for one particular task. We did not evolve to play chess. It is more likely that human attainments in chess are consequences of highly general abilities that contribute to learned expertise in many domains. Such abilities may have evolved for more ecological tasks, but they are of such power and generality that humans can become remarkably good in almost any domain involving complex structure.

What abilities are these? They are abilities of perceptual learning. The effects we are describing arise from experience-induced changes in the way perceivers pick up information. With practice in any domain, humans become attuned to the relevant features and structural relations that define important classifications, and over time we come to extract these with increasing selectivity and fluency. As a contrast, consider: Most artificial sensing devices that exist, or those we might envision, would have fixed characteristics. If they functioned properly, their performance on the 1000th trial of picking up some information would closely resemble their performance on the first trial. Not so in human perception. Rather, our extraction of information changes adaptively to optimize performance on particular tasks. A large and growing research literature suggests that such changes are pervasive in perception and that they profoundly affect tasks from the pickup of minute sensory detail to the extraction of complex and abstract relations that underwrite symbolic thought. Perceptual learning thus furnishes a crucial basis of human expertise, from accomplishments as commonplace as skilled reading to those as rarefied as expert air traffic control, radiological diagnosis, grandmaster chess, and creative scientific insight.

In this paper, we give an overview of perceptual learning, an area of learning long neglected both in scientific theory and research and in educational practice. Our consideration of perceptual learning will proceed as follows. In the second section, we provide some brief historical background on perceptual learning and some taxonomic considerations, contrasting and relating it to other types of learning. In the third section, we consider some instructive examples of perceptual learning, indicating its influence across a range of levels and tasks, and arguing that the information processing changes brought about by perceptual learning can be usefully categorized as discovery and fluency effects. In the fourth section, we consider explanations and modeling concepts for perceptual learning, and we use this information to consider the scope of perceptual learning in the fifth section. As its role and scope in human expertise become clearer, its absence from conventional instructional settings becomes more paradoxical. In a final section, we discuss these issues and the potential for improving education by using perceptual learning techniques.

II. Perceptual Learning in Context

Perceptual Learning and Taxonomies of Learning

Perceptual learning can be defined as “an increase in the ability to extract information from the environment, as a result of experience and practice with stimulation coming from it” (Gibson, 1969, p. 3). With sporadic exceptions, this kind of learning has been neglected in scientific research on learning. Researchers in animal learning have focused on conditioning or associative learning phenomena – connections between responses and stimuli. Most work on human learning and memory has focused on encoding of items in memory (declarative knowledge) or learning sequences of actions (procedural learning).

Perceptual learning is not encompassed by any of these categories. It works synergistically with them all, so much so that it often constitutes a missing link, concealed in the murky background issues of other learning research. In stimulus-response approaches to animal and human learning, it is axiomatic that the “stimulus” is part of the physical world and can be described without reference to internal variables in the organism. Used in this way, “stimulus” sidesteps a set of thorny issues. For an organism, a physical event is not a stimulus unless it is detected. And what kind of stimulus it is will depend on which properties are registered and how it is classified. Like the sound made (or not) by the proverbial tree falling in the forest, “stimulus” has two meanings, and they are not interchangeable. The tone or light programmed by the experimenter is a physical stimulus, but whether a psychological stimulus is present and what its characteristics are depends on the organism’s perceptual capacities.

When stimuli are chosen to be obvious, work in associative learning can occur without probing the fine points of perception and attention. The problem of perceptual learning, however, is that with experience, the organism’s pick-up of information changes. In its most fascinating instances, perceptual learning leads the perceiver to detect and distinguish features, differences, or relations not previously registered at all. Two initially indistinguishable stimuli can come to be readily distinguished, even in basic sensory acuities, such as those tested by your optometrist. In higher level tasks, the novice chess player may be blind to the impending checkmate that jumps out at the expert, and the novice art critic may lack the expert’s ability to detect the difference between the brush strokes in a genuine Renoir painting and those in a forgery. Perceptual learning is not the attachment of a stimulus to a response, but rather the discovery of new structure in stimulation.

Perceptual learning is also not procedural knowledge. Some learned visual scanning routines for specialized tasks may be procedural, but much perceptual learning can be shown in improved pickup of information in presentations so brief that no set of fixations or scan pattern could drive the relevant improvements. In general, the relation between perceptual learning and procedures is flexible. The perceptual expertise of an instrument flight instructor, for example, may allow her to notice at a glance that the aircraft has drifted 200 feet above the assigned altitude. If she is flying the plane, the correct procedure would be to lower the nose and descend. If she is instructing a student at the controls, the proper procedure may be a gentle reminder to check the altimeter. In examples like this one, the perceptual information so expertly extracted can be mapped onto various responses. Another difference is that in many descriptions (e.g., Anderson, Corbett, Fincham, Hoffman, & Pelletier, 1992), procedures consist of sets of steps that are conscious, at least initially in learning. Availability to consciousness is often not an obvious characteristic of changes in sensitivity that arise in perceptual learning.

Finally, it should be clear that perceptual learning does not consist of learning declarative information. Besides the fact that structures extracted by experts in a domain often cannot be verbally explained, the effect of learning is to change the capacity to extract. This idea has been discussed in instructional contexts (Bereiter & Scardamalia, 1998). Most formal learning contexts implicitly follow a “mind as container” metaphor (see Section VI below), with learning as the transfer of declarative knowledge – facts and concepts that can be verbalized. Perceptual learning effects instead involve the “mind as pattern recognizer” (Bereiter & Scardamalia, 1998).

Looking back at our example of chess, some readers may be puzzled by our emphasis on perceptual processes in what appears to be a high-level domain involving explicit reasoning and perhaps language. Although reasoning is certainly involved in chess expertise, it is precisely the difficulty of accounting for human competence within the computational limitations of human reasoning that makes chess, and many other domains of expertise, so fascinating. The most straightforward ideas about explicit reasoning in chess are those that have been successfully formalized and implemented in classic artificial intelligence work. Given a position description (a node in a problem space), there are certain allowable moves, and these may be considered in terms of their value via some evaluation function. Search through the space for the best move is computationally unwieldy, but may be aided by heuristics that prune the search tree. But as we noted earlier, human search of this sort is severely limited and easily dwarfed by computer chess programs. Perhaps more fertile efforts to connect these concepts to human chess playing lie in reasoning about positions as a basis for heuristically guiding search. It is unlikely, however, that the synergy of pattern recognition and reasoning can be explained by explicit symbolic processes, such as those mediated by language. If chess expertise were based on explicit knowledge, the grandmaster consultants to the developers of chess-playing programs would have long since incorporated the relevant patterns used by the best human players. This has not been feasible because much of the relevant pattern knowledge is not verbally accessible. These are some of the reasons that classic studies of chess expertise (e.g., DeGroot, 1965; Chase & Simon, 1973) have pointed to the crucial importance of perception of structure and a more limited role for explicit reasoning (at least relative to our preconceptions!). 
We believe that similar conclusions apply to many high-level domains of human competence.
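The classic search procedure described above can be sketched as minimax search with alpha-beta pruning. It has three ingredients: allowable moves from a node, an evaluation function applied at the leaves, and pruning that skips branches which cannot affect the choice. This is a generic Python illustration, not a model of human play or of any particular chess program. The tree shape and the leaf scores are hypothetical stand-ins for evaluation-function values.

```python
from typing import List, Optional, Union

# A node is either a leaf score (hypothetical evaluation-function value,
# from the first player's point of view) or a list of child nodes.
Tree = Union[int, List["Tree"]]


def alphabeta(node: Tree, maximizing: bool, alpha: float = float("-inf"),
              beta: float = float("inf"), visited: Optional[List[int]] = None) -> float:
    """Minimax value of `node`, skipping branches that cannot change the result.

    `visited` records the leaves actually evaluated, so the pruning is visible.
    """
    if visited is None:
        visited = []
    if isinstance(node, int):
        visited.append(node)
        return node
    if maximizing:
        value = float("-inf")
        for child in node:
            value = max(value, alphabeta(child, False, alpha, beta, visited))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # the opponent would never allow this line
        return value
    value = float("inf")
    for child in node:
        value = min(value, alphabeta(child, True, alpha, beta, visited))
        beta = min(beta, value)
        if alpha >= beta:
            break  # we already have a better option elsewhere
    return value


tree = [[3, 5, 2], [6, 9], [1, 7, 4]]  # root to move, opponent replies
leaves: List[int] = []
best = alphabeta(tree, maximizing=True, visited=leaves)
print(best, len(leaves))  # prints: 6 6
```

On this toy tree, the search returns 6 after evaluating six of the eight leaves. Once the third branch's first leaf scores 1, the remaining leaves of that branch cannot raise the root's value, so they are skipped.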

The counterintuitive aspects of perception vs. reasoning in expertise derive both from excessive expectations of reasoning and from misunderstandings of the nature of perception. These issues are not new. Max Wertheimer, in his classic work Productive Thinking, discussed formal logical and associative approaches to reasoning and argued that neither encompasses what is perhaps the most crucial process: the apprehension of relations. It was the belief of the Gestalt psychologists, such as Wertheimer, that this apprehension is rooted in perception. Although the point is still not sufficiently appreciated, perception itself is abstract and relational. If we see two trees next to each other, one twice as tall as the other, there are many accurate descriptions that may be extracted via perception. The shade of green of the leaves and the texture of the bark are concrete features. But more abstract structure, such as the ratio of the height of one tree to the other, is just as “real,” as are the informational variables in stimulation that make it perceivable. This is a deeply important point, one that has proven decisive in modern theories of perception (Gibson, 1969, 1979; Marr, 1982; Michotte, 1952). In recovering the connectivity of a non-rigid, moving entity from otherwise meaningless points of light (Johansson, 1973) or perceiving causal relations from stimulus relations (Michotte, 1952), it is obvious that apprehending the abstract structure of objects, arrangements and events is an important, perhaps the most important, goal of perceptual processing.

Still, the foregoing analyses leave some questions unanswered. One might ask why humans play chess (and understand chemistry, etc.) whereas animals do not. Some answers to this question are peripheral to our interests here, such as the fact that instructing new players about rules, procedures, and basic strategy is greatly facilitated by language. Here again, however, the fact that one can produce in a short time novice players who can recite the rules and moves of chess flawlessly contrasts with the fact that no verbal instruction suffices to produce grandmasters. More pertinent is the issue of the pickup of abstract structural relations in perception. Might this faculty differ between humans and most animals? Might humans be more disposed to find abstract structure than animal perceivers? Might language and symbolic functions facilitate the salience of information and help guide pattern extraction? These are fascinating possibilities, which we take up below.

Perceptual Learning and the Origins of Perception

The phrase “perceptual learning” has been used in a number of ways. In classical empiricist views of perceptual development, all meaningful perception (e.g., perception of objects, motion, and spatial arrangement) was held to arise from initially meaningless sensations. Meaningful perception was thought to derive from associations among sensations (e.g., Locke, 1690/1971; Berkeley, 1709/1910; Titchener, 1902) and with action (Piaget, 1952). In this view, most of perceptual development early in life consists of perceptual learning. This point of view, dominant through most of the history of philosophy and experimental psychology, was based primarily on logical arguments (about the ambiguity of visual stimuli) and on the apparent helplessness of human infants in the first 6 months of life. Young infants’ lack of coordinated activity was at once an ingredient of a dogma about early perception and an obstacle to its direct study.

Over the past several decades, experimental psychologists developed methods of testing infant perception directly. Although infants do not do much, they perceive quite a lot. And they do deploy visual attention, via eye and head movements. With appropriate techniques, these tendencies, as well as electrophysiological and other methods, can be used to reveal a great deal about early perception (for a review see Kellman & Arterberry, 1998).

What this research has shown is that the traditional empiricist picture of perceptual development is incorrect. Although perception becomes more precise with age and experience, basic capacities of all sorts – such as the abilities to perceive objects, faces, motion, three-dimensional space, and the directions of sounds, and to coordinate the senses in perceiving events – arise primarily from innate or early-maturing mechanisms (Bushnell, Sai & Mullin, 1989; Held, 1985; Kellman & Spelke, 1983; Meltzoff & Moore, 1977; Slater, Mattock & Brown, 1990).

Despite its lack of viability as an account of early perceptual development, the idea that learning may allow us to attach meaning to initially meaningless sensations has been suggested to characterize perceptual learning throughout the lifespan. In a classic article, Gibson & Gibson (1955) criticized this view and contrasted it with another.

They called the traditional view – that of adding meaning to sensations via associations and past experience – an enrichment view. We must enrich and interpret current sensations by adding associated sensations accumulated from prior experience. In Piaget’s (1952; 1954) more action-oriented version of this account, it is also the association of perception and action that leads to meaningful perception of objects, space and events.

The Gibsons noticed a curious fact about enrichment views: The more learning occurs, the less perception will be in correspondence with the actual information present in a situation. This is the case because with more enrichment, the current stimuli play a smaller role in determining the percept. More enrichment means more reliance on previously acquired information. Such a view has been held by generations of scholars who have characterized perception as a construction (Berkeley, 1709/1910; Locke, 1690/1971), a hypothesis (Gregory, 1972), an inference (Brunswik, 1956) or an act of imagination based on past experience (Helmholtz, 1864/1962).

The Gibsons suggested that the truth may be quite the opposite. Experience might make perceivers better at using currently available information. With practice, perception might become more, not less, in correspondence with the given information. They called this view of perceptual learning differentiation. Differentiation, or the discovery of distinguishing features, may play an important role in various creative and cognitive processes. Through discovery, undifferentiated concepts come to have better-defined boundaries (Jung, 1923). Creativity, it has been argued, involves connecting various well-differentiated concepts to unconscious representations of knowledge, like instincts and emotion (Perlovsky, 2006). In any situation, there is a wealth of available information to which perceivers might become sensitive. Learning as differentiation is the discovery and selective processing of the information most relevant to a task. This includes filtering relevant from irrelevant information, but also discovery of higher-order invariants that govern some classification. Perceptual learning is conceived of as a process that leads to task-specific improvement in the extraction of what is really there.

In our review, we focus on this notion of perceptual learning, that is, learning of the sort that constitutes an improvement in the extraction of information. Most contemporary perceptual learning research, although varying on other dimensions, fits squarely within the differentiation camp and would be difficult to interpret as enrichment. Although contemporary computational vision approaches that emphasize use of Bayesian priors in determining perceptual descriptions may be considered updated, more quantitative, versions of traditional enrichment views, the use of enrichment as an account of learning by the individual is rare in perceptual learning research today. This is partly due to the consistent emphasis on perceptual learning phenomena that involve improvements in sensitivity (rather than changes in bias). Also, enrichment is not much reflected in contemporary perceptual learning work because there is little evidence of perception being substantially influenced by accumulation of priors ontogenetically (i.e., during learning by the individual). Current Bayesian approaches to perception often suggest that priors have been acquired during evolutionary time (e.g., Purves, Williams, Nundy, & Lotto, 2004). This emphasis on evolutionary origins is much more consistent with what is known about early perceptual development (Kellman & Arterberry, 1998).

The contemporary focus on differentiation in perceptual learning can also be understood in terms of signal detection theory (SDT). SDT is a psychological model of how biological systems can detect signals in the presence of noise. It is closely related to the concept of signal-to-noise ratio in telecommunications (e.g., Cover & Thomas, 1991), but also considers potential response biases of subjects that can arise due to, for example, the costs associated with failing to detect a signal or mistaking noise for a signal. More specifically, SDT offers mathematical techniques for quantifying sensitivity independent of response bias from perceptual data, where sensitivity represents accuracy in detecting or discriminating and response bias represents the tendencies of an observer to use the available response categories in the presence of uncertainty.
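A minimal sketch of how SDT separates the two quantities, assuming the standard equal-variance Gaussian model. Sensitivity d' is the difference between the z-transformed hit rate (proportion of "yes" responses when the signal is present) and false-alarm rate (proportion of "yes" responses to noise alone). The criterion c is minus half their sum. The function name `sdt_measures` and the two criterion placements in the usage example are illustrative choices, not taken from the text.

```python
from statistics import NormalDist


def sdt_measures(hit_rate: float, false_alarm_rate: float) -> tuple:
    """Equal-variance Gaussian SDT: return (d_prime, criterion_c).

    Both rates must lie strictly between 0 and 1; otherwise the z-transform
    is undefined and NormalDist.inv_cdf raises an error.
    """
    z = NormalDist().inv_cdf          # inverse of the standard normal CDF
    zh, zf = z(hit_rate), z(false_alarm_rate)
    d_prime = zh - zf                 # separation of the two distributions
    c = -0.5 * (zh + zf)              # 0 = unbiased; negative = lenient "yes"
    return d_prime, c


# Same hypothetical observer sensitivity (d' = 1.5), two criterion placements.
nd = NormalDist()
for k in (0.5, 1.0):  # cutoff on the decision axis; noise ~ N(0, 1), signal ~ N(1.5, 1)
    hit = 1 - nd.cdf(k - 1.5)
    fa = 1 - nd.cdf(k)
    d, c = sdt_measures(hit, fa)
    print(f"k={k}: hit={hit:.3f}, FA={fa:.3f}, d'={d:.2f}, c={c:.2f}")
```

Both placements yield d' = 1.5, while c moves from -0.25 to 0.25. The hit and false-alarm rates change together, but the separation of the underlying distributions does not.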

A well-established fact of SDT is that stimulus frequency affects response bias, not sensitivity (e.g., Wickens, 2002). Consider an experiment in which the observer must say red or blue for a stimulus pattern presented on each trial, and the discrimination is difficult (so that accuracy is well below perfect). For an ambiguous stimulus, observers will be more likely to respond blue if “blue” is the correct answer on 90% of trials than if “blue” is the correct answer on 50% of trials. SDT analysis will reveal that sensitivity is the same, and what has changed is response bias. Such frequency effects contain the essential elements both of associative “enrichment” theories of perceptual learning and of the use of Bayesian priors in generating perceptual responses.
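The frequency effect can be made concrete with an ideal observer under equal-variance Gaussian assumptions. The sketch below is in Python. Its function names and the fixed d' = 1 are hypothetical choices, and treating the observer as accuracy-maximizing is an assumption. For each base rate of "blue", it places the cutoff where the prior-weighted likelihoods are equal. It then recovers d' and c from the resulting response probabilities.

```python
import math
from statistics import NormalDist

N = NormalDist()
D_PRIME = 1.0  # hypothetical, fixed sensitivity; red ~ N(0, 1), blue ~ N(D_PRIME, 1)


def optimal_criterion(d_prime: float, p_blue: float) -> float:
    """Accuracy-maximizing cutoff: respond "blue" when the observation exceeds it."""
    return math.log((1 - p_blue) / p_blue) / d_prime + d_prime / 2


def response_rates(d_prime: float, k: float) -> tuple:
    """P("blue" | blue stimulus) and P("blue" | red stimulus) for cutoff k."""
    return 1 - N.cdf(k - d_prime), 1 - N.cdf(k)


def recover(p_blue_given_blue: float, p_blue_given_red: float) -> tuple:
    """Recover (d', c) from the two response probabilities."""
    zh, zf = N.inv_cdf(p_blue_given_blue), N.inv_cdf(p_blue_given_red)
    return zh - zf, -0.5 * (zh + zf)


for p_blue in (0.5, 0.9):
    k = optimal_criterion(D_PRIME, p_blue)
    hit, fa = response_rates(D_PRIME, k)
    d, c = recover(hit, fa)
    print(f"P(blue)={p_blue}: P('blue'|blue)={hit:.3f}, "
          f"P('blue'|red)={fa:.3f}, d'={d:.2f}, c={c:.2f}")
```

When "blue" is correct on 90% of trials, the cutoff moves toward the red distribution, so the observer says "blue" more often to both stimuli. The recovered d' stays at 1, while c becomes strongly negative (ln(1/9), about -2.20, under these assumptions).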

Although there are interesting phenomena associated with such changes in response bias (including optimizing perceptual decisions), perceptual learning research today is much more concerned with the remarkable fact that practice actually changes sensitivity. Sensitivity in SDT is a function of the difference between the observer’s probability of saying “blue” given a blue stimulus and the probability of saying “blue” given a red stimulus (in the standard index d', the difference between their z-transformed values). The perceptual learning effects we will consider are typically improvements in sensitivity or facility in dealing with information available to the senses. As we will see, such improvements can come in the form of finer discriminations or discovery and selective use of higher-order structure.

A Brief History of Perceptual Learning

This notion of perceptual learning as improvement in the pick-up of information has not been a mainstay in the psychology of learning. Still, it has made cameo appearances. William James devoted a section of his Principles of Psychology to “the improvement of discrimination by practice” (James, 1890, Vol. I, p. 509). Clark Hull, the noted mathematical learning theorist, did his dissertation in 1918 on a concept learning experiment in which slightly deformed Chinese characters were used. In each of twelve categories of characters, differing exemplars shared some invariant structural property. Subjects learned to associate a sound (as the name of each category) using 6 instances of each category and were trained to a learning criterion. A test phase with 6 new instances showed that learning had led to the ability to classify novel instances accurately (Hull, 1920). This ability to extract invariance from instances and to respond selectively to classify new instances marks Hull’s study not only as a concept formation experiment but also as a perceptual learning experiment.

Hull’s later contributions to learning theory had little to do with this early work, a bit of an irony, as Eleanor Gibson, who did the most to define the modern field of perceptual learning, was later a student in Hull’s laboratory. She found Hull’s laboratory preoccupied with conditioning phenomena when she did her dissertation there in 1938 (Szokolszky, 2003). It was Gibson and her students who made perceptual learning a highly visible area of research in the 1950s and 1960s, much of it summarized in a classic review of the field. By the mid-1970s, this area had become quiescent. One reason was that much of the research at the time had shifted focus to infant perception and cognition. Most of this research focused on characterizing basic perceptual capacities of young infants rather than on perceptual learning processes. Research specifically directed at perceptual learning in infancy has become more common recently, however (e.g., Fiser & Aslin, 2002; Saffran, Loman, & Robertson, 2000; Gomez & Gerken, 2000).

In the last decade and a half, there has been an explosion of research on perceptual learning. This newest period of research has distinctive characteristics. It has centered almost exclusively on learning effects involving elementary sensory discriminations. The research has produced remarkable findings of marked improvements on almost any sensory task brought about by discrimination practice. The focus on basic sensory discriminations has been motivated by interest in cortical plasticity and attempts to connect behavioral and physiological data. This focus differs from earlier work on perceptual learning, as we consider below, and relating perceptual learning work spanning different tasks and levels poses a number of interesting challenges and opportunities. One goal of this review is to highlight phenomena and principles appearing at different levels and in different tasks that point toward a unified understanding of perceptual learning.

III. Some Perceptual Learning Phenomena

To give some idea of its scope and characteristics, we consider a few examples of perceptual learning phenomena. Our examples come primarily from visual perception. They span a range of levels, from simple sensory discriminations to higher-level perceptual learning effects more relevant to real-world expertise.

Highlighting these examples may also illustrate that perceptual learning phenomena can be organized into two general categories – discovery and fluency effects (Kellman, 2002). Discovery involves some change in the bases of response: Selecting new information relevant for a task, amplifying relevant information, or suppressing information that is irrelevant are cases of discovery. Fluency effects involve changes, not in what is extracted, but in the ease of extraction. When relevant information has been discovered, continued practice allows its pickup to become less demanding of attention and effort. In the limit, it becomes automatic -- relatively insensitive to attentional load (Schneider & Shiffrin, 1977).

The distinction between discovery and fluency is not always clear-cut or easily assessed, as these effects typically co-occur in learning. Particular dependent variables may be sensitive to both. Improved sensitivity to a feature or relation would characterize discovery, whereas improved speed, load insensitivity, or dual-task performance would naturally seem to be fluency effects. But improved speed may also be a consequence of discovering a higher-order invariant that provides a shortcut for the task (a discovery effect). Conversely, when performance is measured under time constraints, fluency improvements may influence measures of sensitivity or accuracy, because greater fluency allows more information to be extracted in a fixed time. The distinction between discovery and fluency is nevertheless important; understanding perceptual learning will require accounts of how new bases of response are discovered and how information extraction becomes more rapid and less demanding with practice.

The examples we consider illustrate a variety of perceptual learning effects but do not comprise a comprehensive treatment of phenomena in the field. Also, there are a number of interesting issues in the field that fall outside the scope of this article. These include several factors that affect perceptual learning, such as the effects of sleep (e.g., Karni & Sagi, 1993; Stickgold, James, & Hobson, 2000), the importance or irrelevance of feedback (e.g., Shiu & Pashler, 1992; Fahle & Edelman, 1993; Petrov, Dosher, & Lu, 2005), and even whether or not subjects need to be perceptually aware of the stimuli (Watanabe, Nanez, & Sasaki, 2001; Seitz & Watanabe, 2003). For these and other issues not treated here, we refer the interested reader to more inclusive reviews (Gibson, 1969; Goldstone, 1998; Kellman, 2002; Fahle & Poggio, 2002).

Basic Visual Discriminations

What if someone were to tell you that your visual system’s maximal ability to resolve fine detail could improve with practice – by orders of magnitude? This may surprise you, as you might expect that basic limits of resolution, such as discriminating two lines with slightly different orientations, would rest on mechanisms that are fixed. Because basic characteristics of resolution affect many higher-level processes, we might expect on evolutionary grounds that observers’ efficiency for extracting detail is optimal within biophysical constraints. In fact, this is not the case – not for orientation sensitivity or for a variety of other basic visual sensitivities. Appropriately structured perceptual learning can produce dramatic changes in basic sensitivities.

Vernier acuity – judging the alignment of two small line segments – is a frequently studied task that reveals the power of perceptual learning. In a typical Vernier task, two lines, one above the other, are shown on each trial, and the observer indicates whether the upper line is to the left or right of the lower line. In untrained subjects, Vernier acuity is already remarkably precise. So precise, in fact, that it is not uncommon for subjects to be able to detect misalignments of less than 10 arc seconds (Westheimer & McKee, 1978). Because this detection threshold is smaller than the aperture diameter of one foveal cone photoreceptor, this acuity, along with others, has been labelled a “hyperacuity” (Westheimer, 1975).
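To give a sense of scale, a visual angle θ can be converted to a physical extent with the standard relation size = 2 · distance · tan(θ/2). In this Python sketch, the 1 m viewing distance is an assumption chosen only for illustration. The 10 arc second value is the threshold cited above, and the function name is hypothetical.

```python
import math


def visual_angle_to_size(arcsec: float, distance_mm: float) -> float:
    """Physical extent (mm) that subtends `arcsec` at `distance_mm`."""
    theta_rad = math.radians(arcsec / 3600)  # arc seconds -> degrees -> radians
    return 2 * distance_mm * math.tan(theta_rad / 2)


offset_mm = visual_angle_to_size(10, 1000)  # 10 arc seconds viewed from an assumed 1 m
print(f"{offset_mm:.4f} mm")  # prints: 0.0485 mm
```

At that assumed distance, a detectable misalignment of 10 arc seconds corresponds to a lateral offset of roughly 0.05 mm.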

Hyperacuity phenomena have posed challenges to vision modelers. Recent modeling efforts suggest that the basic acuity limits of the human visual system are achieved in part by exploiting information that arises from the non-linear response properties of retinal and cortical cells responding to near-threshold signals in the presence of noise (Hongler, de Meneses, Beyeler, and Jacot, 2003; Hennig, Kerscher, Funke, and Worgotter, 2002). Yet despite the neural sophistication already required to achieve hyperacuity, Vernier thresholds improve substantially with training over thousands of trials. Saarinen & Levi (1995), for example, found as much as a 6-fold decrease in threshold after 8000 trials of training. Similar improvements have been found for motion discrimination (Ball & Sekuler, 1982) and simple orientation sensitivity (Shiu & Pashler, 1992; Vogels & Orban, 1985).

Visual Search

A task with more obvious ecological relevance is visual search. Finding or discriminating a target object hidden among distractors, or in noise, characterizes a number of tasks encountered by visually guided animals, including detection of food and predators. Studies of perceptual learning in various visual search tasks show that experience leads to robust gains in sensitivity and speed. Karni & Sagi (1993) had observers search for obliquely oriented lines in a field of horizontal lines. The target, always in one quadrant of the visual field, consisted of three oblique lines arrayed either vertically or horizontally, and the subject’s task on each trial was to report which configuration was present. The exposure time (SOA) needed for reliable discrimination decreased from about 200 ms in session 1 to about 50 ms in session 15. Sessions were spaced 1–3 days apart, and training effects persisted years later. Data from their study are shown in Figure 1.

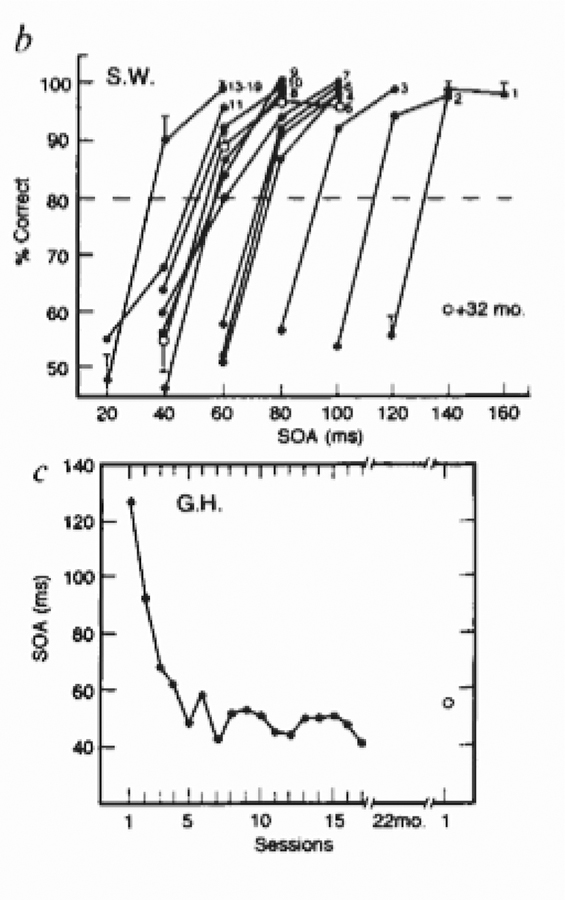

Figure 1.

Practice-induced improvements in visual search (Karni & Sagi, 1993). All data are from a single subject. Top: Across sessions (spaced 1–3 days apart), the subject showed significant reductions in the exposure time (SOA) necessary to discriminate between two targets presented among distractors. Separate curves represent performance in different sessions. The leftward shift of the curves with practice indicates that progressively shorter presentations were needed to achieve a given accuracy level. Between sessions, performance remained relatively constant, and it was unchanged 32 months after the final training session. Bottom: Exposure time (SOA) necessary to reach threshold performance (~80% correct) as a function of training session.

The learning observed by Karni & Sagi was specific to location and to the orientation of the background elements. This specificity is observed in many perceptual learning tasks that use basic discriminations, a small number of unchanging stimulus patterns, and fixed presentation characteristics. Lack of transfer to untrained retinal locations, to the untrained eye, and to different stimulus values is often taken to indicate a low-level locus of learning. Learning that is specific to a retinal position, for example, may involve early cortical levels in which the responses of neural units are retinotopic (mapped to particular retinal positions), rather than higher-level areas in which units show some degree of positional invariance. Other studies of perceptual learning, however, have shown robust transfer. In visual search, Ahissar & Hochstein (2000) showed that learning to detect a single line element hidden in an array of parallel line segments of a different orientation could generalize to positions at which the target was never presented. Sireteanu & Rettenbach (2000) described learning effects in which serial (sequential) search tasks become parallel or nearly so with training; such effects often generalize across eyes, retinal locations, and tasks. These inconsistent findings complicate the idea that lack of transfer implies a low-level site of learning, and the basic inference that specificity indicates learning at early processing levels has itself been argued to be a fallacy (Mollon & Danilova, 1996). We discuss this issue in relation to general views of perceptual learning in Section V.

Automaticity in Search

Earlier work on perceptual learning in visual search provides a classic example of improvements in fluency. In a series of studies, Schneider & Shiffrin (1977) had subjects judge whether any member of a target set of letters appeared at the corners of rectangular arrays presented in sequence. Attentional load was manipulated by varying the number of items in the target set and the number of items in each frame of the series. (Specifically, load was defined as the product of the number of possible target items and the number of possible locations to be searched.) Early in learning, or when targets and distractors were interchangeable across trials, performance was highly load-sensitive: searching for more items or checking more locations took longer. Subjects trained with a consistent mapping between targets and distractors (i.e., an item could appear as one or the other but never both) not only became much more efficient at the task but also came to perform it equally well over a range of target set sizes and array sizes. Because performance became load-insensitive, Schneider & Shiffrin labeled this type of performance automatic processing.
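The load manipulation and the contrast between load-sensitive and load-insensitive performance can be made concrete with a toy timing model. In the Python sketch below, the base time and per-comparison cost are hypothetical numbers chosen only for illustration; the sketch assumes that controlled search compares every possible target with every frame location, whereas automatic detection of a consistently mapped target does not depend on load. It is not Schneider & Shiffrin’s own model.

```python
def search_load(target_set_size, frame_size):
    """Load as defined in the text: possible targets x possible locations."""
    return target_set_size * frame_size

def predicted_time_ms(target_set_size, frame_size, automatic,
                      base_ms=400.0, ms_per_comparison=40.0):
    """Hypothetical timing: controlled search pays a cost for every comparison."""
    if automatic:
        return base_ms  # consistent mapping: detection is load-insensitive
    return base_ms + ms_per_comparison * search_load(target_set_size, frame_size)

for m, f in [(1, 1), (2, 2), (4, 4)]:
    print(m, f, predicted_time_ms(m, f, automatic=False),
          predicted_time_ms(m, f, automatic=True))
```

Plotting the two columns against load would give a rising line for controlled search and a flat line for automatic detection, the qualitative signature described above.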

Unitization in Shape Processing

Some perceptual learning effects on visual search suggest that the representation of targets, distractors, or both, can change due to experience. Consider the results of Sireteanu & Rettenbach (2000), in which a previously serial visual search comes to be performed in parallel as a result of training. The classic interpretation of this result is that training caused the target of the visual search to become represented as a visual primitive, or feature (Treisman & Gelade, 1980). More generally, this result suggests that conjunctions of features can become represented as features themselves, i.e., they become a unit.

Unitization, like “chunking” (Miller, 1956), refers to processes by which information comes to be encoded or processed more efficiently. The idea is that the information capacity of various cognitive functions is fixed and limited. We can, however, learn to use the available capacity more efficiently by forming higher-level units based on relations discovered through learning.
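The capacity argument can be illustrated with a simple segmentation routine. The Python sketch below greedily parses a letter string into the longest units found in a learned vocabulary; the vocabulary and the span limit of 7 (a nod to Miller’s “seven, plus or minus two”) are hypothetical choices for illustration, and greedy longest-match parsing is our assumption, not a claim about how unitization works in the studies discussed here.

```python
SPAN = 7  # rough item limit, after Miller's (1956) "seven, plus or minus two"

def chunk(sequence, vocabulary):
    """Greedy longest-match segmentation of a sequence into learned units."""
    units, i = [], 0
    max_len = max((len(u) for u in vocabulary), default=1)
    while i < len(sequence):
        # Try the longest learned unit first; fall back to a single element.
        for size in range(min(max_len, len(sequence) - i), 0, -1):
            piece = sequence[i:i + size]
            if size == 1 or piece in vocabulary:
                units.append(piece)
                i += size
                break
    return units

letters = "FBICIAUSA"
learned = {"FBI", "CIA", "USA"}   # hypothetical learned units
novice, expert = chunk(letters, set()), chunk(letters, learned)
print(len(novice), len(novice) <= SPAN)   # 9 separate letters: over the span
print(len(expert), len(expert) <= SPAN)   # 3 learned units: within the span
```

The same nine letters occupy nine slots for a learner without the units but only three for a learner who has them, which is the sense in which learned units make better use of a fixed capacity.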

Goldstone (2000) studied unitization using simple 2D shapes like those shown in Figure 2. Each shape is formed from 5 separate parts. Subjects were trained to sort these shapes into categories based on the presence of a single component, a conjunction of 5 components, or a conjunction of 5 components in a specific order. Performance, measured by response time, improved with training; although categorization based on a single component was fastest, the greatest improvement due to training occurred for categorization based on 5 parts in a specific order. Goldstone suggested that this improvement arose because the shapes came to be represented in a new way: through training, the original representation was replaced with a new, more efficient, chunk-like representation built from parts that had always been present in the stimuli but had not previously been perceived as a unit. A detailed analysis of response times indicated that the improvement for the unitized shapes was greater than would be predicted from improvements in processing the individual components, furnishing strong evidence that new perceptual units were being used.
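The logic of such a response-time comparison can be sketched as follows; we do not claim this reproduces Goldstone’s actual analysis. Suppose the five parts were detected independently and in parallel, and a response had to wait until all of them were detected. Then the probability of having responded by time t would be the product of the five single-part cumulative distributions. Observed conjunctive response times whose cumulative distribution lies reliably above that product are hard to explain by improved processing of separate parts. In the Python sketch below, all response times are simulated from hypothetical parameters.

```python
import bisect
import random

def ecdf(samples):
    """Empirical cumulative distribution function of a list of RTs."""
    data = sorted(samples)
    return lambda t: bisect.bisect_right(data, t) / len(data)

rng = random.Random(1)
N, PARTS = 2000, 5

def component_rt():
    # Hypothetical single-part detection time (ms): fixed base + exponential.
    return 300.0 + rng.expovariate(1 / 100.0)

# Single-part conditions, one per part (simulated data, not Goldstone's).
single = [ecdf([component_rt() for _ in range(N)]) for _ in range(PARTS)]

def predicted_all(t):
    """Independent, parallel, exhaustive prediction: all parts detected by t."""
    p = 1.0
    for F in single:
        p *= F(t)
    return p

# Two simulated "observed" conjunctive conditions.
separate = ecdf([max(component_rt() for _ in range(PARTS)) for _ in range(N)])
unitized = ecdf([component_rt() for _ in range(N)])  # whole shape read as one unit

grid = range(300, 1000, 10)
gap_separate = max(separate(t) - predicted_all(t) for t in grid)
gap_unitized = max(unitized(t) - predicted_all(t) for t in grid)
print(f"{gap_separate:.3f} {gap_unitized:.3f}")
```

When the simulated observer really processes the parts separately, its distribution stays close to the prediction; when the whole shape is detected as a single unit, its distribution rises well above it.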

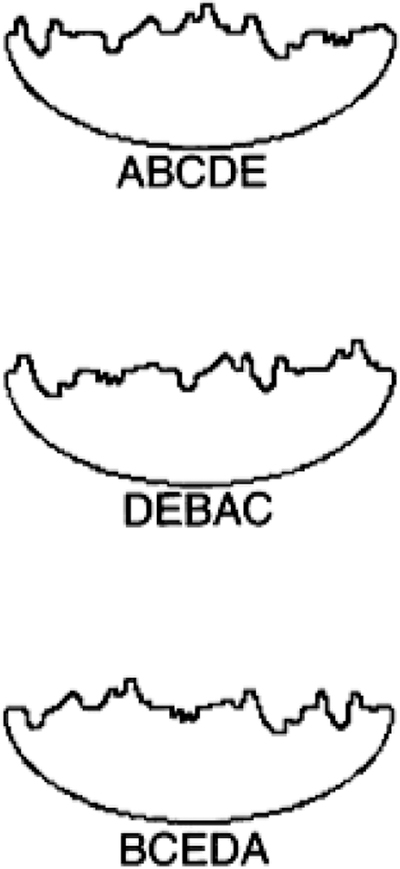

Figure 2.

Stimuli used in the unitization experiment of Goldstone (2000). Each top contour is composed of 5 contour segments (labeled A-E). Categorization training in which a consistent arrangement (e.g., ABCDE) was discriminated from items differing by one component led to performance indicating the formation of a new perceptual unit or the discovery of higher-order shape invariants (see text).

An interesting question about this and other evidence for perceptual unitization or chunking is whether basic elements have merely become cemented together perceptually or whether a higher-order invariant has been discovered that spans multiple elements and replaces their separate coding (Gibson, 1969). In the top display in Figure 2, for example, the learner may come to encode relations among the positions of peaks or valleys in the contour. Such relations are not defined when individual components are considered separately. It is possible that unitization phenomena in general depend on discovery of higher-order pattern relations, but this idea deserves further study.

Perceptual Learning in Real-World Tasks

In recent years, perceptual learning research has most often focused on basic sensory discriminations. Both the focus and the style of this research contrast with real-world learning tasks, in which it would be rare for a learner to have thousands of trials discriminating two simple displays that differ minimally on some elementary attribute. Ecologically, the function of perceptual learning is almost certainly to improve information extraction from richer, multidimensional stimuli, where even stimuli falling into the same category show a range of variation. Learning in such tasks requires that the learner come to extract invariant properties from instances having variable characteristics. As E. Gibson put it, “It would be hard to overemphasize the importance for perceptual learning of the discovery of invariant properties which are in correspondence with physical variables. The term ‘extraction’ will be applied to this process, for the property may be buried, as it were, in a welter of impinging stimulation.” (Gibson, 1969, p. 81).

These aspects of real-world tasks at once indicate why perceptual learning is so important and why it is task-specific. For a given purpose, not all properties of an object are relevant. In fact, for understanding causal relations and structural similarities, finding the relevant structure is a key to thinking and problem solving (Duncker, 1945; Wertheimer, 1957). For different tasks, the relevant properties will vary. For example, in classifying human beings, the relevant properties differ in an employment interview and in an aircraft weight and balance calculation (at least for most jobs). Often, perceptual learning in real-world situations involves discovery of relations and some degree of abstraction. Perceptual learning that ferrets out dimensions and relations crucial to particular tasks underwrites not only remarkable improvements in orientation discrimination but also the expertise that empowers a sommelier or successful day-trader. In understanding human expertise, these factors play a greater role than is usually suspected; conversely, the burden of explanation placed on the learning of facts, rules, or techniques is often exaggerated, and the dependence of these latter processes on perceptual learning goes unnoticed. Some examples may illustrate this idea.

Chess Expertise.

Chess is a fascinating domain in which to study perceptual learning, for several reasons. One is that, as mentioned earlier, humans can reach astonishing levels of expertise. Another is that the differences between mid-level players and masters tend not to involve explicit knowledge about chess. De Groot (1965) and Chase & Simon (1973) studied chess masters, intermediate players, and novices to determine how masters perform at such a high level. Masters did not differ from novices or mid-level players in the number of moves considered, search strategies, or depth of search. Rather, the differences appeared to be perceptual learning effects: exceptional abilities to encode positions and relations on the board rapidly and accurately. These abilities were tested in experiments in which players were given brief exposures to chess positions and had to recreate them with a set of pieces on an empty board. Relative to intermediate or novice players, a chess master seemed to encode larger chunks, to pick up important chess relations within these chunks, and to require very few exposures to recreate a board fully. One might wonder whether masters are simply people with superior visual memory, a skill that allowed them to excel at chess. A control experiment (Chase & Simon, 1973) suggested that this is not the case. When pieces were placed randomly on the board, the master performed no better than a novice or mid-level player at recreating the positions. (In fact, there was a slight tendency for the master to perform worse than the others.) These results suggest that the master differs in having advanced skills in extracting structural patterns specific to chess.

The Word Superiority Effect.

It is comforting to know that perceptual expertise is not the sole province of grandmasters, as few of us would qualify. Common to almost all adults is a domain in which extensive practice leads to high expertise: fluent reading. An intriguing indicator of the power of perceptual learning in reading is the word superiority effect. Suppose a participant is asked to judge on each trial whether the letter R or L is presented. Suppose further that each presentation is a brief exposure and that the exposure duration is varied across trials. We can use such a method to determine an exposure time that allows the observer to be correct about 80% of the time.
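How the 80%-correct exposure time is found is left open in the text. One standard possibility is an adaptive staircase. A 3-down/1-up rule (shorten the exposure after three consecutive correct responses, lengthen it after any error) settles where the probability of three correct responses in a row is one half, that is, at 0.5^(1/3), or about 79.4% correct, close to the criterion described here. The Python sketch below runs such a staircase against a simulated observer whose logistic psychometric function, midpoint, and slope are all hypothetical.

```python
import math
import random

def p_correct(exposure_ms, midpoint_ms=60.0, slope=0.08):
    """Hypothetical two-alternative observer: 50% guessing rising toward 100%."""
    return 0.5 + 0.5 / (1.0 + math.exp(-slope * (exposure_ms - midpoint_ms)))

def staircase(n_trials=3000, start_ms=200.0, step_ms=4.0, seed=1):
    """3-down/1-up staircase on exposure time; returns mean of late reversals."""
    rng = random.Random(seed)
    exposure, run, last_dir, reversals = start_ms, 0, None, []
    for _ in range(n_trials):
        if rng.random() < p_correct(exposure):
            run += 1
            if run < 3:
                continue              # need three correct in a row before stepping
            run, direction = 0, -1    # harder: shorter exposure
        else:
            run, direction = 0, +1    # easier: longer exposure
        if last_dir is not None and direction != last_dir:
            reversals.append(exposure)
        last_dir = direction
        exposure = max(step_ms, exposure + direction * step_ms)
    late = reversals[-40:]
    return sum(late) / len(late)

# Exposure at which this simulated observer is exactly 79.4% correct.
target = 60.0 - math.log(1 / (2 * 0.5 ** (1 / 3) - 1) - 1) / 0.08
print(f"staircase estimate {staircase():.1f} ms vs. target {target:.1f} ms")
```

Running the same procedure once with single letters and once with words would yield the two exposure times whose comparison defines the effect described next.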

Now suppose that, instead of R or L, the observer’s task is to judge whether “DEAR” or “DEAL” is presented on each trial. Again, we find the exposure time that produces 80% accuracy. Comparing this condition to the single-letter condition reveals a remarkable effect first studied by Reicher (1969) and Wheeler (1970). (For an excellent review, see Baron, 1978.) The exposure time allowing 80% performance is substantially shorter for the words than for the individual letters. Note that the word frame provides no information about the correct choice, as it is identical for both alternatives; in both the single-letter and word conditions, the discrimination must be based on the R vs. the L. Somehow, the presence of an uninformative word context allows the discrimination to be made more rapidly.

One might suppose that the word superiority effect comes from readers having learned the overall shapes of familiar words: if practice with particular words leads to encoding of each word’s overall form, beyond its individual letters, that whole-word structure might be processed more rapidly. It turns out that this explanation is not correct. The most stunning evidence on this point is that the effect is not restricted to familiar words; it also occurs for pronounceable nonwords (e.g., GEAL vs. GEAD, but not GKBL vs. GKBD). That most of the effect is preserved with pronounceable nonwords suggests that learning has led to the extraction and rapid processing of structural regularities characteristic of English spelling patterns. If the word superiority effect results from perceptual learning of such higher-order regularities, beginning readers should not show it. This proves to be the case (Feitelson & Razel, 1984).
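The notion of “structural regularities characteristic of English spelling” can be illustrated with a letter-bigram model. The Python sketch below is only an illustration: the tiny word list is hypothetical, add-one smoothing is one arbitrary choice, and we do not suggest that readers compute these statistics explicitly or that this is the mechanism behind the word superiority effect. Even so, it assigns a much higher regularity score to pronounceable nonwords such as GEAL and GEAD than to GKBL and GKBD, although none of them appears in its training list.

```python
import math
from collections import Counter

def train_bigrams(words):
    """Count letter bigrams; '^' and '$' mark the start and end of each word."""
    pairs, contexts = Counter(), Counter()
    for word in words:
        padded = "^" + word.upper() + "$"
        for a, b in zip(padded, padded[1:]):
            pairs[(a, b)] += 1
            contexts[a] += 1
    return pairs, contexts

def regularity(string, pairs, contexts, n_symbols=27):
    """Mean add-one-smoothed log P(next | previous); higher = more English-like.
    n_symbols counts the possible next symbols: 26 letters + end marker."""
    padded = "^" + string.upper() + "$"
    steps = list(zip(padded, padded[1:]))
    logp = sum(math.log((pairs[(a, b)] + 1) / (contexts[a] + n_symbols))
               for a, b in steps)
    return logp / len(steps)

corpus = ["DEAR", "DEAL", "REAL", "MEAL", "HEAL", "SEAL", "GEAR",
          "NEAR", "READ", "BEAD", "LEAD", "HEAD", "GOAL", "GEL"]  # hypothetical toy lexicon
pairs, contexts = train_bigrams(corpus)
for s in ["GEAL", "GEAD", "GKBL", "GKBD"]:
    print(s, round(regularity(s, pairs, contexts), 2))
```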

Interaction of Fluency and Discovery Processes.

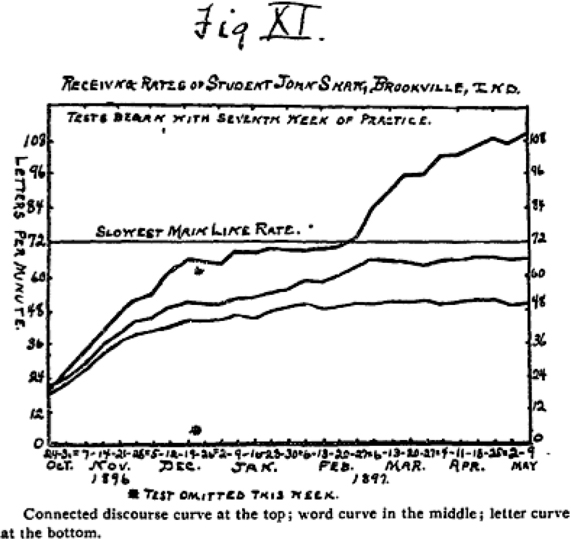

It has long been suspected that fluency and discovery processes interact in the development of expertise. Writing in Psychological Review in 1897, Bryan & Harter proposed that automatizing the pickup of basic structure serves as a foundation for discovering higher-order relationships (Bryan & Harter, 1897). These investigators studied learning in telegraphic receiving. When characters received per minute (in Morse Code) were plotted against weeks of practice, a typical, negatively accelerated learning curve appeared, reaching asymptote after some weeks. With continued practice, however, many subjects showed a new learning curve, rising from the plateau of the first. For some subjects, after even more practice, a third learning curve ultimately emerged. Each learning curve raised performance to a substantially higher level than before.

What could account for this remarkable topography of learning? When Bryan & Harter asked their subjects to describe their activity at different points in learning, the responses suggested that the information being processed differed considerably across stages. Those on the first learning curve reported that they were concentrating on the way letters of English mapped onto the dots and dashes of Morse Code. Those on the second reported that the dots and dashes making up letters had become automatic for them; now they were focusing on word structure. Finally, learners at the highest level reported that common words had become automatic; they were now focusing on message structure. To test these introspective reports, Bryan & Harter presented learners in the second phase with sequences of letters that did not form words. Under these conditions, performance returned to the asymptotic level of the first learning curve. When the most advanced learners were presented with sequences of words that did not form messages, their performance returned to the asymptotic level of the second learning curve. These results corroborated the learners’ introspective reports.

The Bryan & Harter results serve as a good example for distinguishing discovery and fluency effects in perceptual learning. Both are involved in the telegraphers’ learning. While a learning curve is rising, we may assume that at least part of what is occurring is that learners are discovering new structure that enables better performance. The acquisition of new bases of response (discovery) is clearly shown in the tests Bryan & Harter conducted with meaningless letter and word sequences. Also noticeable, however, are long periods of asymptotic performance at one level before a new learning curve emerges. In Figure 3 (top curve), such a period continues for nearly a month of practice. Given that performance is not changing during such periods, the information being extracted is unlikely to be changing either. What, then, is changing? Arguably, controlled, attentionally demanding processing is giving way to automatic processing (Schneider & Shiffrin, 1977). If the information being extracted is in fact constant, this change would appear to be a pure fluency effect. Both the discovery of structure and the automatizing of its pickup appear to be necessary to pave the way for the discovery of higher-level structure.

Although the universality of three separable learning curves in telegraphic receiving has been questioned (Keller, 1958), Bryan & Harter’s data indicate the use of higher-order structure by advanced learners and suggest that discovery of such structure is a limited-capacity process. Automatizing the processing of basic structure at one level frees attentional capacity to discover higher-level structure, which can in turn become automatic, allowing discovery of even higher-level information, and so on. This cycle of discovery and fluency in perceptual learning, in which higher and higher levels of structure are discovered and then automatized, may account for the seemingly magical levels of human expertise that sometimes arise from years of sustained experience, as in chess, mathematics, music, and science. These insights from the classic Bryan & Harter study are reflected in more recent work, such as demonstrations that expertise involves learning across multiple time scales in pure motor skill development (Liu, Mayer-Kress, and Newell, 2006) and that automatizing basic skills supports the development of higher-level expertise in areas such as reading (Samuels and Flor, 1997) and mathematics (Gagne, 1983). These principles are increasingly reflected in modern applications of perceptual learning to training procedures (e.g., Clawson, Healy, Ericsson, and Bourne, 2001). Bryan & Harter’s study offers a compelling suggestion about how discovery and fluency processes interact. Their 1897 article ends with a memorable claim: “Automaticity is not genius, but it is the hands and feet of genius.”
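The proposed cycle can be caricatured with a toy simulation. In the Python sketch below, which is purely illustrative, each level of structure (letters, words, messages) contributes a negatively accelerated (exponential) gain, and, following the interpretation above, learning at a new level begins only after the level below has been discovered and then automatized over a further period of flat performance. All gains and durations are hypothetical; the sketch reproduces the qualitative pattern of rises and plateaus, not Bryan & Harter’s data.

```python
import math

def staged_curve(weeks, gains=(20.0, 25.0, 30.0), tau=3.0,
                 learn_weeks=12.0, automatize_weeks=6.0):
    """Toy model (all parameters hypothetical): each level of structure can be
    discovered only after the previous level has been learned and automatized."""
    onsets = [k * (learn_weeks + automatize_weeks) for k in range(len(gains))]
    return [sum(gain * (1.0 - math.exp(-(t - onset) / tau))
                for gain, onset in zip(gains, onsets) if t > onset)
            for t in weeks]

weeks = range(0, 61)
curve = staged_curve(weeks)
for w in (6, 12, 17, 24, 35, 48, 60):
    print(w, round(curve[w], 1))
```

In this caricature the plateaus are periods in which what is extracted does not change but its pickup is being automatized, which is exactly the fluency interpretation offered for Bryan & Harter’s plateaus.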

Abstract Perceptual Learning

Two important tenets of contemporary theories of perception are that perceptual systems are sensitive to higher-order relationships (Gibson, 1979; Marr, 1982) and that they produce as outputs abstract descriptions of reality (Marr, 1982; Michotte, 1952), that is, descriptions of physical objects, shapes, spatial relations, and events. These modern ideas contrast with pervasive earlier views that sensory systems produce initially meaningless sensations, which acquire meaning only through association and inference.

Sensitivity to abstract relations is reflected in perceptual learning as well. It is worth making clear what we mean by abstract, as the term has a variety of uses. For our purposes, abstract information may be illustrated as follows. Suppose we hear and encode a melody. Encoding the information that the first note has fundamental frequency f1 is a concrete encoding. Encoding that the second note has a fundamental frequency higher than that of the first note by some amount k is encoding a relation. Encoding that the second note is higher than the first by an octave is encoding an abstract relation. The idea is that the abstract encoding depends on a concrete value only conditionally: for an octave, whatever the first frequency is, the second must be twice that. In general, a relation is abstract if it involves binding the value of a variable. (In this example, the first frequency can be any frequency x, but the second must then be 2x.) Our notion of abstract information is close to J. Gibson’s notion of higher-order invariants (Gibson, 1950).

Relations and abstract relations were central to Gestalt psychology (e.g., Wertheimer, 1923/1938; Koffka, 1935), and our melody example borrows one of the Gestaltists’ classic examples. If you hear a new melody and remember it, what is it that you have learned? If learning were confined to a concrete, sensory level, it would consist of a temporal series of sound frequencies. But this description would not capture what most listeners learn. Most of us (except those with “perfect pitch”) will not retain the particular frequencies (or, more accurately, the sensations of pitch corresponding to those frequencies). Yet you will seamlessly recognize the melody as the same if you hear it later, even if it has been transposed to a different key. That the melody retains its identity despite changes in all of its constituent frequencies indicates that it is encoded in terms of abstract relations. Conversely, most listeners would have little ability to identify later the key in which the melody was first played.
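The contrast between concrete and abstract encodings of a melody can be stated compactly. In the Python sketch below, the concrete code is the list of note frequencies, and the relational code is the list of successive pitch ratios expressed in semitones (12 times the base-2 logarithm of each ratio). Transposition multiplies every frequency by the same factor, which changes every concrete value while leaving every ratio, and hence the relational code, unchanged. The melody and its rounded equal-tempered frequencies are illustrative choices.

```python
import math

def concrete_code(freqs_hz):
    """Concrete encoding: the fundamental frequencies themselves."""
    return list(freqs_hz)

def relational_code(freqs_hz):
    """Abstract encoding: successive pitch intervals in semitones."""
    return [12 * math.log2(b / a) for a, b in zip(freqs_hz, freqs_hz[1:])]

melody = [261.63, 293.66, 329.63, 261.63]        # roughly C4 D4 E4 C4 (illustrative)
transposed = [f * 2 ** (5 / 12) for f in melody]  # same tune, up a perfect fourth
octave_up = [2 * f for f in melody]               # every note doubled, as in the text

print([round(x, 2) for x in relational_code(melody)])
print(concrete_code(melody) == concrete_code(transposed))  # concrete codes differ
```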

In vision, abstract relations are pervasive in our encoding of shape. A miniature plastic replica of an elephant is easily recognizable as an elephant. This ability is more remarkable than it first appears: it involves extracting abstract relations and using them to classify an object despite novel (and starkly conflicting) concrete features. (No real elephants are made of plastic or fit in the palm of your hand.) Seeing the miniature elephant as appropriately shaped involves abstract relations, such as the proportions of the body, trunk, and ears, applied to a novel set of concrete size values. Perceptual learning about shape seems to have this property in any situation in which shape invariance is required across changes in constituent elements, size, or other properties (what the Gestalt psychologists called “transposition” phenomena).

For melodies and for some aspects of shape perception, abstract relations are salient even in initial encoding (although it is possible that such invariance is itself initially discovered through learning). In other contexts, learning faces a larger challenge: some higher-order invariance that determines a classification must be discovered over a longer period (Gibson, 1969). Whether the relevant relations “pop out” or are discovered gradually, the pickup of abstract structure is common in human perceptual learning, and it presents challenges for modeling perceptual learning.

There has not been much work on perceptual learning of abstract relations. Numerous studies have involved shape perception (Gibson, 1969), but few have specifically examined invariance over transformations after learning. Intuitively, work on caricature (Ryan & Schwartz, 1956) and on recognition of people and events in point-light displays (in which, e.g., a person with small lights attached to the major joints of the body is filmed walking in the dark; Johansson, 1973) can be seen as studying the effects of abstract perceptual learning processes.

Although the sensory discrimination tasks used in most contemporary perceptual learning research do not appear to involve abstract perceptual learning, such learning may be more pervasive in them than suspected. An interesting example comes from the work of Nagarajan, Blake, Wright, Byl, and Merzenich (1998). They trained subjects on an interval discrimination for vibratory pulses. The sensory (concrete) aspects of this learning would be expected to involve tactile signals sent to somatosensory cortex. After training, however, learning transferred to other parts of the trained hand and to the same position on the contralateral hand. More remarkably, learning also transferred to auditory stimuli with the same intervals. What was learned, then, appears to have been the time interval: an abstract relation among inputs, with the particular inputs being incidental. The idea that even apparently low-level tasks may involve relational structure is also reflected in recent work suggesting that perceptual learning may operate not directly on sensory analyzers but only through representations based on perceptual constancy (Garrigan & Kellman, 2008). We discuss that work more thoroughly in Section V.

Research suggests that perceptual learning of abstract relations is a basic characteristic of human perceptual learning from very early in life. Marcus, Vijayan, Bandi Rao, & Vishton (1999) familiarized 7-month-old infants with syllable sequences in which the first and last elements matched, such as “li na li” or “ga ti ga”. Afterwards, infants showed a novelty response (longer attention) to a new string such as “wo fe fe” but attended less to a new string that fit the abstract pattern of the earlier items, such as “wo fe wo”. Similar results have been obtained with somewhat older infants (Gomez & Gerken, 2000). These findings suggest an early ability to discover abstract relationships, although speech stimuli may be somewhat special in this respect (Saffran & Griepentrog, 2001).
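The abstract rule in this study can be expressed as a pattern over variables rather than over particular syllables, in the sense of variable binding described earlier. The Python sketch below is a hypothetical illustration of that description, not a model of how infants learn: it replaces each syllable by the position of its first occurrence, so “li na li” and “wo fe wo” map to the same ABA signature while “wo fe fe” maps to ABB.

```python
def pattern_signature(syllables):
    """Map each item to the index of its first occurrence (A=0, B=1, ...)."""
    first_seen = {}
    return tuple(first_seen.setdefault(s, len(first_seen)) for s in syllables)

# Hypothetical illustration: familiarize on ABA strings, then test new ones.
familiar = {pattern_signature(s.split()) for s in ["li na li", "ga ti ga"]}

def is_novel(string):
    """True if a test string violates the familiarized abstract pattern."""
    return pattern_signature(string.split()) not in familiar

print(is_novel("wo fe wo"), is_novel("wo fe fe"))
```

Because the signature discards the syllables themselves, a test string made entirely of new syllables can still match the familiarized pattern, which is what makes the encoding abstract.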

That learning often latches onto abstract relations is important in attaining behaviorally relevant descriptions of our environment: For thought and action, it is often the case that encoding relations and abstract relations is more crucial than encoding sensory particulars (Gibson, 1979; Koffka, 1935; von Hornbostel, 1927). Whether this holds true depends on the task and environment, of course.

IV. Explaining and Modeling Perceptual Learning Phenomena

How have researchers sought to explain and model perceptual learning? The question matters not only for understanding human performance but also for artificial systems. Understanding how learners discover invariance among variable instances would be valuable both for creating learning devices and for explaining human abilities. We currently have no good machine learning algorithms that can learn from several examples to classify new instances of dogs, cats, and squirrels correctly, as 4-year-old humans do routinely. Even understanding how basic acuities improve with practice presents interesting modeling challenges. Here we describe several foundational ideas that have been proposed and illustrated by empirical studies, models, or both.

Receptive Field Modification

Consistent with the focus of much research is the idea that low-level perceptual learning might work by modifying the receptive fields of the cells that initially encode the stimulus. For example, individual cells could adapt to become more sensitive to important features, effectively recruiting more cells for a particular purpose, making some cells more specifically tuned to the task at hand, or both. Viewed another way, receptive field modification can be thought of as a way to exclude irrelevant information. A detector that is sensitive to two similar orientations might develop narrower sensitivity to facilitate discrimination between the two.
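The last point, that narrower tuning can aid discrimination, can be checked with a single-unit calculation. The Python sketch below assumes Gaussian orientation tuning, a unit that prefers one of the two orientations to be discriminated (10° vs. 14°, the example used later in this section), and fixed additive response noise; all of these are assumptions for illustration. Discriminability (d′) is computed as the difference in mean response to the two stimuli divided by the noise standard deviation.

```python
import math

def tuning(theta_deg, preferred_deg, width_deg):
    """Assumed Gaussian tuning curve with peak response 1."""
    return math.exp(-((theta_deg - preferred_deg) ** 2) / (2 * width_deg ** 2))

def single_unit_dprime(preferred_deg, width_deg, s1=10.0, s2=14.0, noise_sd=0.1):
    """d' for telling s1 from s2 using one unit with fixed (hypothetical) noise."""
    diff = tuning(s1, preferred_deg, width_deg) - tuning(s2, preferred_deg, width_deg)
    return abs(diff) / noise_sd

for width in (20.0, 10.0, 3.0):
    print(width, round(single_unit_dprime(10.0, width), 2))
```

Narrowing does not help every unit, however: a unit preferring 12°, midway between the two stimuli, responds equally to both and has d′ of zero at any tuning width.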

The idea of receptive field modification in early cortical areas would fit with some known properties of perceptual learning. In vision, specificity to retinal location and to particular ranges of stimulus dimensions (e.g., orientation) would be consistent with known properties of cells in early cortical areas (V1 and V2). Consistent with some perceptual learning results, the effects of changing a cell’s receptive field would be long-lasting compared to other adaptation effects. As a cell’s receptive field becomes more specifically tuned, it may also become more resistant to future experience-induced changes, because it would sample the statistics of the environment less broadly.

Evidence for receptive field change has been found using single-cell recording techniques in primates. In monkey somatosensory cortex, there is high variability in the size of the representations of the digits (Merzenich et al., 1987), at least some of which may be due to effects of experience. Perceptual learning can dramatically alter both the total amount of cortex devoted to a particular representation and the size of the receptive fields of individual cells. Recanzone et al. (1992) trained owl monkeys to discriminate different frequencies of tactile vibration. After training, the cortical area corresponding to the trained digits increased 1.5- to 3-fold. Other studies have shown that a task requiring fine dexterity, such as retrieving food pellets from small receptacles, resulted in smaller receptive fields for cells corresponding to the tips of the digits used in the task (Xerri et al., 1999). Presumably, smaller receptive fields would lead to finer tactile acuity and thereby improve performance in retrieving the pellets.

In the auditory domain, Recanzone, Schreiner & Merzenich (1993) showed that monkeys trained on a difficult frequency discrimination improved over several weeks. Mapping of primary auditory cortex after training showed that receptive fields of cortical cells responding to the task-relevant frequencies were larger than before training.

An example of a specific model that attempts to explain learning effects by receptive field change is the vision model of Vernier hyperacuity proposed by Poggio, Fahle, & Edelman (1992). Their model begins with a network of non-oriented units receiving input from simulated photoreceptors. The photoreceptor layer of their model remains static, while the second layer is an optimized representation of the photoreceptor activity using radial basis functions. This second layer represents cells whose receptive field structure changes to more effectively utilize task-relevant information from the photoreceptors.
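A minimal sketch in the spirit of this architecture, not a reproduction of Poggio, Fahle, & Edelman’s model, appears below in Python with NumPy. It assumes a fixed, noise-free layer of receptors sampling two blurred line segments, a second layer of Gaussian radial basis units centered on a few example stimuli, and a linear read-out fitted by least squares to estimate the offset. Receptor spacing, blur, the number of basis units, and their width are all hypothetical choices; and, unlike the model described above, only the read-out weights are learned here, while the basis layer stays fixed.

```python
import numpy as np

RECEPTORS = np.arange(15.0)  # fixed photoreceptor positions, spacing 1 (assumption)
BLUR = 1.0                   # optical blur s.d., in receptor spacings (assumption)

def receptor_layer(offset, center=7.0):
    """Static first layer: responses of an upper and a lower receptor row."""
    upper = np.exp(-(RECEPTORS - (center + offset / 2)) ** 2 / (2 * BLUR ** 2))
    lower = np.exp(-(RECEPTORS - (center - offset / 2)) ** 2 / (2 * BLUR ** 2))
    return np.concatenate([upper, lower])

def rbf_layer(X, centers, width=0.1):
    """Second layer: Gaussian radial basis units centered on example stimuli."""
    d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-d2 / (2 * width ** 2))

rng = np.random.default_rng(0)
centers = np.array([receptor_layer(o) for o in np.linspace(-0.5, 0.5, 11)])

# Fit the read-out on random offsets smaller than one receptor spacing.
train = rng.uniform(-0.5, 0.5, 200)
H = rbf_layer(np.array([receptor_layer(o) for o in train]), centers)
weights, *_ = np.linalg.lstsq(H, train, rcond=None)

def judge(offsets):
    """Estimated offset; its sign is the left/right Vernier response."""
    X = np.array([receptor_layer(o) for o in offsets])
    return rbf_layer(X, centers) @ weights

test = np.concatenate([rng.uniform(-0.5, -0.05, 100), rng.uniform(0.05, 0.5, 100)])
accuracy = np.mean(np.sign(judge(test)) == np.sign(test))
print(f"left/right accuracy: {accuracy:.2f}")
```

All test offsets are between 0.05 and 0.5 of a receptor spacing, so correct left/right judgments here are hyperacuity judgments in the sense defined earlier; adding receptor noise would be the natural next step for studying how thresholds change with training.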

Selection and Differentiation

An alternative approach for modeling perceptual learning is the idea of selection. Selection describes learning to preferentially use some subset of the information available for making a decision. This notion was at the core of Eleanor Gibson’s work, so much so that she often used “differentiation learning” as synonymous with perceptual learning. Summing up the approach, she said:

It has been argued that what is learned in perceptual learning are distinctive features, invariant relationships, and patterns; that these are available in stimulation; that they must, therefore, be extracted from the total stimulus flux. … From the welter of stimulation constantly impinging on the sensory surfaces of an organism, there must be selection. (Gibson, 1969, p. 119.)

A specific proposal put forth by Gibson was that perceptual learning works by discovering distinguishing (or distinctive) features. Distinguishing features are task-specific: they are those features that provide the contrasts relevant to some classification. Thus, in learning to discriminate objects, the learner will tend to select information along dimensions that distinguish the objects, rather than forming better overall descriptions of the objects (Pick, 1965).

In contemporary perceptual learning work with basic sensory discriminations, selection is also a viable candidate for explaining many results. Imagine an experiment in which the learner improves at distinguishing two Gabor patches (or lines) that differ slightly in orientation. On each trial the learner must decide which of the two patches was presented. Now envision in cortex an array of orientation-sensitive units responsive at the relevant stimulus positions. Some units are activated by either stimulus, some more by one stimulus than the other, and others by only one stimulus. Suppose the learner’s decision initially takes all of these responses into account, giving each equal weight. As learning proceeds, the weights of these inputs are gradually altered: units that discriminate poorly between the stimuli are given less weight, whereas those that discriminate strongly are given more. It may even be the case that the best discriminators are not the units that initially give the largest response to either stimulus. For example, suppose the learner is to discriminate lines at 10° vs. 14°, and suppose orientation-sensitive units respond somewhat to inputs within ±6° of their preferred orientations. In this simple example, analyzers centered at 7° and 17° may be more informative than units centered at 10° or 14°, because each would be activated by only one of the two displays.
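The numerical example above can be run as a toy simulation. The sketch makes several assumptions. Units are binary and respond whenever the stimulus lies within ±6° of their preferred orientation. A hypothetical bank of units prefers 0° to 24°. The decision weights are updated by a delta (least-mean-squares) rule. This is not Petrov, Dosher & Lu's (2005) model. Only the read-out weights change; the analyzers themselves stay fixed.

```python
TOLERANCE = 6.0              # assumed: units respond within +/-6 deg of their preference
STIM_A, STIM_B = 10.0, 14.0  # the two orientations to be discriminated (deg)
PREFS = list(range(25))      # hypothetical units preferring 0..24 deg

def responses(stimulus):
    """Binary responses of all units: 1 if the stimulus is within tolerance of the preference."""
    return [1.0 if abs(stimulus - p) <= TOLERANCE else 0.0 for p in PREFS]

def reweight(lr=0.02, epochs=500):
    """Batch delta-rule reweighting of a fixed bank of analyzers.
    Targets are -1 for STIM_A and +1 for STIM_B; the analyzers never change."""
    weights = [1.0 / len(PREFS)] * len(PREFS)  # start by weighting every unit equally
    patterns = [(responses(STIM_A), -1.0), (responses(STIM_B), +1.0)]
    for _ in range(epochs):
        grads = [0.0] * len(weights)
        for x, target in patterns:
            error = target - sum(w * xi for w, xi in zip(weights, x))
            for i, xi in enumerate(x):
                grads[i] += error * xi
        weights = [w + lr * g for w, g in zip(weights, grads)]
    return weights

w = reweight()
for p in (7, 10, 14, 17):
    print(f"unit preferring {p:2d} deg: weight {w[PREFS.index(p)]:+.3f}")
```

After training, the units preferring 7° and 17°, each driven by only one of the two orientations, carry far larger weights than the units preferring 10° and 14°. Those units respond to both stimuli and so cannot tell them apart.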

Recently, Petrov, Dosher & Lu (2005) presented experimental and modeling results indicating that in a low-level task (orientation discrimination of two Gabor patches in noise), perceptual learning was best described not by receptive field modification but by selective reweighting. They argued that even in simple discrimination paradigms, perceptual learning might be explained as the discovery of which analyzers best perform a classification and the increased weighting of those analyzers. In their model, encodings at the lowest level remain unchanged.

This approach raises an interesting set of issues. Earlier models of perceptual learning posited that the changes occurred in the earliest encodings, because these areas have the same specificity (e.g., to position and orientation) that has been reported in the perceptual learning literature. Petrov et al. point out, however, that specificity in perceptual learning requires only that some part of the neural system responsible for a particular decision be specific, not that the changes driving perceptual learning occur in the specific units themselves. The solution, they argue, again involves selection. Learning can occur through changes in higher-level structures and still show specificity, provided that those structures read the outputs of lower-level units that are specific to visual field location, orientation, or some other stimulus attribute. Specificity can thus arise from differentially selecting information from specific units, and can therefore arise from changes in higher-level, abstract representations of the relevant stimuli.
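The argument can be illustrated with a toy reweighting model extended to two retinal locations. In this sketch (all names and values hypothetical), every analyzer is specific to one location and one orientation, responding within an assumed ±6° of its preference. The analyzers never change. Learning alters only the weights from the analyzers to a single decision unit, using a delta rule. Training at one location never activates the analyzers at the other, so their weights are untouched. The learned discrimination is therefore location-specific even though all of the change happened at the read-out stage.

```python
TOLERANCE = 6.0              # assumed tuning half-width (deg)
STIM_A, STIM_B = 10.0, 14.0  # orientations to discriminate (deg)
LOCATIONS = ("left", "right")
UNITS = [(loc, p) for loc in LOCATIONS for p in range(25)]  # location-specific analyzers

def responses(location, orientation):
    """Only analyzers at the stimulated location and within tolerance respond."""
    return [1.0 if loc == location and abs(orientation - p) <= TOLERANCE else 0.0
            for loc, p in UNITS]

def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def train(weights, location, lr=0.02, epochs=500):
    """Delta rule on analyzer-to-decision weights; the analyzers stay fixed."""
    patterns = [(responses(location, STIM_A), -1.0), (responses(location, STIM_B), +1.0)]
    w = list(weights)
    for _ in range(epochs):
        grads = [0.0] * len(w)
        for x, target in patterns:
            err = target - dot(w, x)
            for i, xi in enumerate(x):
                grads[i] += err * xi
        w = [wi + lr * g for wi, g in zip(w, grads)]
    return w

def separation(w, location):
    """Difference in decision-unit output between the two orientations at a location."""
    return dot(w, responses(location, STIM_B)) - dot(w, responses(location, STIM_A))

w = train([1.0 / len(UNITS)] * len(UNITS), "left")
print("trained location:  ", round(separation(w, "left"), 3))
print("untrained location:", round(separation(w, "right"), 3))
```

The decision unit separates the orientations well at the trained location and not at all at the untrained one, although no analyzer was modified.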

To demonstrate lack of transfer unambiguously, many perceptual learning experiments use two conditions (one for learning and one for transfer testing) that involve distinct neural structures, e.g., stimuli at two mutually orthogonal orientations. In these experiments, lack of transfer means that training in one condition does not enhance performance (or enhances it less) in the other condition. This setup, Petrov et al. argue, is poorly suited to discriminating between the receptive field modification hypothesis and the selective reweighting hypothesis. In this type of experiment, training in each condition involves distinct neural representations at the lowest level and distinct connections between these representations and higher-level “decision units”. Since both the representations and the connections are distinct, specificity could result from modification at either stage, so it cannot distinguish between the receptive field hypothesis (changes at the level of representation) and the selective reweighting hypothesis (changes in the connections).

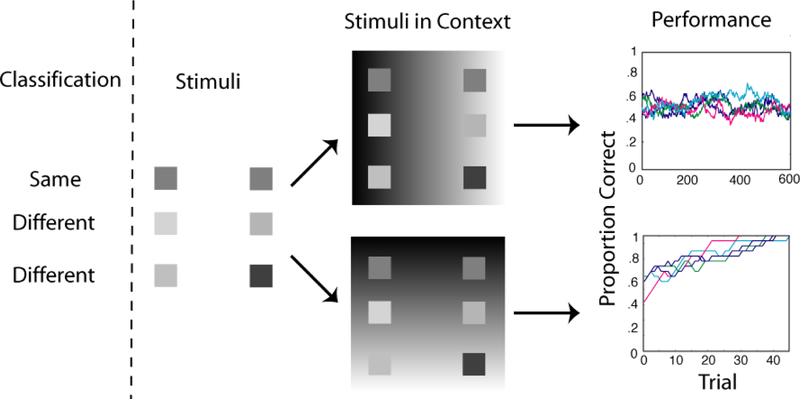

In the Petrov et al. experiments, instead of a different stimulus in each condition, the same stimulus was presented in a different context in each condition. In this case the representation at the lowest level is the same (i.e., the same units in V1), and the connections from these units to higher-level units could be shared or distinct. Their experimental data were well described by a model with a single representation of the stimulus, with training effects occurring in distinct connections between the representation and the decision units.

This recent work adds to earlier results that seemed discrepant with hypotheses of receptive field change. Ahissar, Laiwand, Kozminsky, & Hochstein (1998) trained subjects in a pop-out visual search task with a target of one orientation and distractors of another orientation. After learning had occurred, they swapped the target and distractor orientations and again trained subjects to criterion. Finally, they switched back to the original orientations and found that performance was neither better nor worse than at the end of the first session of training. If training had modified the receptive fields of orientation-sensitive units in V1, they argued, then switching target and distractor orientations after training should have interfered with the earlier learning. Yet the earlier learning was preserved, and it coexisted with the later learning. These results are more consistent with a model in which a particular task leads to selective use of particular analyzers, while the underlying analyzers themselves remain unchanged. This kind of result also addresses a deep concern about the idea of receptive field modification in vision: given that the earliest cells in the striate pathway (beginning with cortical area V1) provide the inputs for many visual functions, including contour, texture, object, space, and motion perception, task-specific learning that altered receptive fields at this level would be expected to affect or compromise many other visual functions. The fact that such effects do not seem to occur is comforting for visual health but less so for explanations of perceptual learning in terms of receptive field change.
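A toy comparison makes the logic of the swap experiment concrete. The sketch assumes fixed binary orientation analyzers, each responding within ±6° of its preference, and contrasts two hypothetical read-out schemes trained with a delta rule. The first is a single set of weights shared by both tasks, standing in loosely for a shared stage that training must modify. The second uses separate task-specific weights reading from the same unchanged analyzers. This illustrates the argument only and is not a model of Ahissar et al.'s data.

```python
TOLERANCE = 6.0         # assumed tuning half-width (deg)
PREFS = list(range(25))  # fixed analyzers preferring 0..24 deg; never modified below

def responses(orientation):
    return [1.0 if abs(orientation - p) <= TOLERANCE else 0.0 for p in PREFS]

def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def train(weights, target_ori, distractor_ori, lr=0.02, epochs=500):
    """Delta rule: drive the output to +1 for the target and -1 for the distractor."""
    patterns = [(responses(target_ori), 1.0), (responses(distractor_ori), -1.0)]
    w = list(weights)
    for _ in range(epochs):
        grads = [0.0] * len(w)
        for x, t in patterns:
            err = t - dot(w, x)
            for i, xi in enumerate(x):
                grads[i] += err * xi
        w = [wi + lr * g for wi, g in zip(w, grads)]
    return w

def accuracy(w, target_ori, distractor_ori):
    """Fraction of the two stimuli whose output sign matches its role."""
    hits = (dot(w, responses(target_ori)) > 0) + (dot(w, responses(distractor_ori)) < 0)
    return hits / 2

start = [0.0] * len(PREFS)
task1, task2 = (10.0, 14.0), (14.0, 10.0)  # task 2 swaps target and distractor

# One shared read-out, trained on task 1 and then on the swapped task 2.
shared = train(train(start, *task1), *task2)
print("shared read-out, task 1 after task 2:", accuracy(shared, *task1))

# Task-specific read-outs over the same unchanged analyzers.
readouts = {"task1": train(start, *task1), "task2": train(start, *task2)}
print("task-specific read-out, task 1 after task 2:", accuracy(readouts["task1"], *task1))
```

With one shared set of weights, training on the swapped task erases the first task, and accuracy on it falls to zero. With task-specific read-outs, performance on the first task survives intact, mirroring the coexistence of old and new learning that Ahissar et al. observed.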

The notion of selective reweighting corresponds nicely to Gibson’s earlier account of perceptual learning as selection and the learning of distinguishing features. It raises the fascinating possibility that perceptual learning at all levels can be modeled as selection. One difference is that Gibson talked about selection of relevant stimulus information, whereas Petrov et al. (2005) and others cast the selection notion in terms of selection and weighting of analyzers within perceptual systems. We believe these two versions of selection are two sides of the same coin. To be used, information must be encoded, and the function of encoding processes is to obtain information. Perceptual learning processes, we might say, select information relevant to a task by weighting heavily the analyzers that encode it. (Trying to distinguish more deeply between selection of information and selection of analyzers is much like puzzling over whether we really “see” objects in the world or see electrical signals in our brains. For discussion, including a claim that we see the world, not cortical signals, see Kellman & Arterberry, 1998, pp. 10–11.)

Relational Recoding

Neither receptive field modification nor simple selection from concrete inputs can account for the substantial part of human perceptual learning that is abstract. Take the idea of learning what a square is. A given square, having a specific size and viewed on a particular part of the retina, activates a number of oriented units in visual cortex. Suppose the learner is given category information that this pattern is a square. Using well-known connectionist learning concepts, this feedback could be used to strengthen the connection of each activated oriented unit with the label “square.” One result of this approach is that with sufficient learning (to stabilize weights in the network), the network would come to accurately classify this instance if it recurred. Moreover, with appropriate design, the network would also be able to respond “square” to a display that contained a large part, but not all, of the previously activated units. These kinds of learned classification and generalization results are readily obtainable by well-established methods common in machine learning.
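The associative scheme just described can be sketched in a few lines. Everything here is hypothetical. Units are labeled by position and orientation. Learning simply increments the connection from each active unit to the label, a Hebbian-style rule. A display counts as a member of the category when it activates at least an assumed 70% of the label's learned weight.

```python
from collections import defaultdict

def square_units(x0, y0, size):
    """Hypothetical oriented units (x, y, orientation) driven by a square's edges."""
    units = set()
    for i in range(size):
        units.add((x0 + i, y0, "horizontal"))         # bottom edge
        units.add((x0 + i, y0 + size, "horizontal"))  # top edge
        units.add((x0, y0 + i, "vertical"))           # left edge
        units.add((x0 + size, y0 + i, "vertical"))    # right edge
    return units

class LabelAssociator:
    """Hebbian-style association of active units with a category label."""

    def __init__(self, lr=0.1):
        self.lr = lr
        self.weights = defaultdict(dict)  # label -> {unit: connection weight}

    def learn(self, active_units, label):
        # Strengthen the connection from every active unit to the label.
        w = self.weights[label]
        for unit in active_units:
            w[unit] = w.get(unit, 0.0) + self.lr

    def match(self, active_units, label):
        # Fraction of the label's total learned weight that this display activates.
        w = self.weights[label]
        total = sum(w.values())
        return sum(w.get(u, 0.0) for u in active_units) / total if total else 0.0

    def is_member(self, active_units, label, threshold=0.7):
        return self.match(active_units, label) >= threshold

clf = LabelAssociator()
trained = square_units(0, 0, 10)
clf.learn(trained, "square")

partial = set(sorted(trained)[8:])  # the same square with 8 of its 40 units missing
print(clf.match(trained, "square"), clf.is_member(partial, "square"))
```

A display that activates 32 of the 40 trained units still scores about 0.8 and is accepted as a square. This is the kind of generalization to partial overlap described above.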

There is another level to the problem, however, and as we noted earlier, it is one with deep roots. At the turn of the last century, Gestalt psychologists criticized their structuralist predecessors for the idea that complex perceptions, such as perception of an object, are obtained by associating together local sensory elements. Their famous axiom “The whole is different from the sum of the parts” was aptly illustrated in many domains that are commonplace for perceivers but profoundly puzzling for the structuralist strategy of aggregating sensory elements or, in our era, for the weighting of concrete detector inputs. A square, for example, is not the sum of the activations triggered by any particular square. Another square may be smaller, rotated, or displaced on the eye, and each of these transformations activates a different population of oriented units in early cortical areas. Moreover, we readily recognize squares made of little green dots, or squares made of sequences of lines oriented obliquely along the edges of a square. A square made in this way may have no elementary units in common with an earlier example of a square, whereas another pattern that shares 90% of the activated units with an earlier example may not be a square at all. Being a square has to do with relations among element positions, not with the elements themselves.
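The point about shared elements can be made numerically. In this sketch, a figure is represented, hypothetically, by the set of grid positions its outline activates. Similarity to a learned square is the fraction of that square's units that a new figure also activates.

```python
def square_outline(x0, y0, size):
    """Integer grid points on the outline of an axis-aligned square (hypothetical 'activated units')."""
    pts = set()
    for i in range(size + 1):
        pts |= {(x0 + i, y0), (x0 + i, y0 + size), (x0, y0 + i), (x0 + size, y0 + i)}
    return pts

def overlap(reference, pattern):
    """Fraction of the reference pattern's units that the new pattern also activates."""
    return len(reference & pattern) / len(reference)

square = square_outline(0, 0, 20)
shifted_square = square_outline(30, 30, 20)  # still a square, displaced on the "retina"

# Not a square: the same outline with its top-right corner cut off by a short diagonal.
chamfered = {(x, y) for (x, y) in square if x + y < 38} | {(18, 19), (19, 18)}

print("shifted square:", overlap(square, shifted_square))
print("chamfered figure (not a square):", overlap(square, chamfered))
```

The displaced square shares none of the original units (overlap 0.0). The chamfered figure, a pentagon, shares 75 of 80 (overlap 0.9375). Any rule based on element overlap therefore ranks the non-square as far more square-like than the square.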

Kellman, Burke & Hummel (1999) made a proposal about how such abstract invariants may be learned. A crucial part of their approach is that the model be generic: it contains only properties we might expect, on other grounds, to be available in visual processing. One could easily build a special-purpose device to learn about squares, but the goal is to understand how we can be general-purpose devices that learn the invariants of squares, rhombuses, dogs, cats, chess moves, and so on. The key, according to these investigators, is a stage of early relational recoding of inputs. In vision, there are basic features and dimensions to which we are sensitive. Besides these, there must be operators that compute relations among features or among values on dimensions. In the simulations of Kellman et al. (1999), the key operator was an “equal / different” operator. This operator compared two values and produced an output that was large when they were approximately equal and small when they differed by more than some threshold. The model learned to classify various quadrilaterals (e.g., squares, rectangles, parallelograms, trapezoids, and rhombuses) from only one or two examples. It was given the ability to extract contour junctions and the distances between them. A layer of equal / different operators scanned all pairs of inter-junction distances, and a response layer that used the outputs of all the equal / different operators readily learned the quadrilateral classifications from simple category feedback. Learning, even with a minimum of examples of each form class, generalized to patterns of different size, orientation, and position; with certain types of preprocessing, it would also generalize to figures made of any elements.
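A simplified sketch of the relational recoding idea follows. It is not Kellman, Burke & Hummel's model. It assumes contour junctions are already extracted and listed in order around the contour. It uses a binary equal/different operator with an assumed 5% relative tolerance. It also replaces the learned response layer with a one-shot nearest-prototype rule that stores a single example per class. Because the operator compares distances rather than positions, its outputs do not change when a figure's size, orientation, or position changes.

```python
import math
from itertools import combinations

TOL = 0.05  # assumed relative tolerance of the equal/different operator

def junction_distances(v):
    """Distances between contour junctions of a quadrilateral, in a fixed order:
    the four sides (going around the contour), then the two diagonals."""
    sides = [math.dist(v[i], v[(i + 1) % 4]) for i in range(4)]
    diagonals = [math.dist(v[0], v[2]), math.dist(v[1], v[3])]
    return sides + diagonals

def equal_different(a, b, tol=TOL):
    """Large (1) when two values are approximately equal, small (0) otherwise."""
    return 1.0 if abs(a - b) <= tol * max(a, b) else 0.0

def relational_code(vertices):
    """Apply the equal/different operator to every pair of inter-junction distances."""
    d = junction_distances(vertices)
    return [equal_different(a, b) for a, b in combinations(d, 2)]

def transform(vertices, angle, scale, dx, dy):
    """Rotate (radians), scale, and translate a figure."""
    c, s = math.cos(angle), math.sin(angle)
    return [(scale * (c * x - s * y) + dx, scale * (s * x + c * y) + dy) for x, y in vertices]

class OneShotClassifier:
    """Stand-in response layer: stores one relational code per class and picks
    the class whose code differs in the fewest operator outputs."""

    def __init__(self):
        self.prototypes = {}

    def learn(self, vertices, label):
        self.prototypes[label] = relational_code(vertices)

    def classify(self, vertices):
        code = relational_code(vertices)
        return min(self.prototypes,
                   key=lambda lab: sum(a != b for a, b in zip(code, self.prototypes[lab])))

clf = OneShotClassifier()
clf.learn([(0, 0), (1, 0), (1, 1), (0, 1)], "square")
clf.learn([(0, 0), (2, 0), (2, 1), (0, 1)], "rectangle")
clf.learn([(0, 0), (2, 1), (4, 0), (2, -1)], "rhombus")
clf.learn([(0, 0), (3, 0), (4, 1), (1, 1)], "parallelogram")

# Novel instances: different proportions, sizes, orientations, and positions.
novel = {
    "square": transform([(0, 0), (1, 0), (1, 1), (0, 1)], 0.5, 2.5, 7, -3),
    "rectangle": transform([(0, 0), (3, 0), (3, 2), (0, 2)], 1.1, 0.8, -4, 2),
    "rhombus": transform([(0, 0), (3, 2), (6, 0), (3, -2)], 2.0, 1.3, 1, 1),
    "parallelogram": transform([(0, 0), (4, 0), (5, 2), (1, 2)], -0.7, 0.5, 0, 9),
}
for true_label, shape in novel.items():
    print(true_label, "->", clf.classify(shape))
```

Each novel figure is assigned to the correct class, even though it differs from its class's single training example in proportions as well as in size, orientation, and position. The sketch requires Python 3.8 or later for `math.dist`.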

The key idea here is not to provide a complete account of learning in this particular domain but to begin to address the perceptual learning ability to discover higher-order invariants. We believe that along with the basic encodings of features and dimensions there is a set of operators that computes new relations. There is evidence for equal / different operators and one or two others. Some of these relational computations are automatic or nearly so; some may be generated in a search for structure over longer learning periods in complex tasks. The outputs of automatically generated relational recodings or newly synthesized ones that were not initially obvious may account for the salience of some relations in perception and for the longer term learning of other, less salient ones.

This view implies that there is, in effect, a “grammar” of perceptual learning and learnability. Some relations in stimuli are obvious, some may be discovered with experience and effort, and some are unlearnable. These outcomes depend on the operators that compute relations on the concrete inputs, as well as on search processes that explore the space of potentially computable operations for classifications that are not initially obvious. If this overall approach has merit, then identifying the operators in this grammar, although difficult, is a key question in understanding abstract perceptual learning. Answering this “Kantian” question of which relational operators we possess that allow us to discover new invariants will help us understand how we learn to classify dogs and cats and how we become experts at chess and radiology; it will also empower artificial learning devices that may someday share in the remarkable levels of structural intuition displayed by human experts.

Abstract Relations and Language