Abstract

Purpose

Deaf children are frequently reported to be at risk for difficulties in executive function (EF); however, the literature is divided over whether these difficulties are the result of deafness itself or of delays/deficits in language that often co-occur with deafness. The purpose of this study is to discriminate between these hypotheses by assessing EF in populations where the 2 accounts make contrasting predictions.

Method

We use a between-groups design involving 116 children, ages 5–12 years, across 3 groups: (a) participants with normal hearing (n = 45), (b) deaf native signers who had access to American Sign Language from birth (n = 45), and (c) oral cochlear implant users who did not have full access to language prior to cochlear implantation (n = 26). Measures include both parent report and performance-based assessments of EF.

Results

Parent report results suggest that early access to language has a stronger impact on EF than early access to sound. Performance-based results trended in a similar direction, but no between-group differences were significant.

Conclusions

These results indicate that healthy EF skills do not require audition and therefore that difficulties in this domain do not result primarily from a lack of auditory experience. Instead, results are consistent with the hypothesis that language proficiency, whether in sign or speech, is crucial for the development of healthy EF. Further research is needed to test whether sign language proficiency also confers benefits to deaf children from hearing families.

Hearing, speech, and language are often tightly interconnected, but they are also dissociable constructs. It can therefore be challenging to tease apart the impact of hearing (i.e., access to and processing of auditory input) from the impact of language (i.e., access to and processing of linguistically structured input). Several authors have recently proposed that hearing—or lack thereof—has consequences far beyond the auditory system, extending to high-level cognitive processes, including but not limited to executive function (EF; Arlinger, Lunner, Lyxell, & Pichora-Fuller, 2009; Conway, Pisoni, Anaya, Karpicke, & Henning, 2011; Conway, Pisoni, & Kronenberger, 2009; Kral, Kronenberger, Pisoni, & O'Donoghue, 2016; Kronenberger, Beer, Castellanos, Pisoni, & Miyamoto, 2014; Kronenberger, Colson, Henning, & Pisoni, 2014; Kronenberger, Pisoni, Henning, & Colson, 2013; Pisoni, Conway, Kronenberger, Henning, & Anaya, 2010; Ulanet et al., 2014). A major limitation of these proposals is that they have not excluded the hypothesis that the observed difficulties might be due to problems with language that are only secondary to hearing loss.

Distinguishing the impact of auditory access from that of language access is crucial for both theory and practice. Theoretical accounts that emphasize the role of auditory access (e.g., the auditory connectome model, Kral et al., 2016; the auditory scaffolding hypothesis, Conway et al., 2009; cognitive hearing science, Arlinger et al., 2009) posit relatively novel and as-yet-unspecified connections between low-level sensation/perception and higher-order cognition. Meanwhile, accounts that emphasize the role of language access (e.g., Barker et al., 2009; Botting et al., 2016; Castellanos, Pisoni, Kronenberger, & Beer, 2016; Dammeyer, 2010; Figueras, Edwards, & Langdon, 2008; Marshall et al., 2015; Remine, Care, & Brown, 2008) posit a different cognitive architecture, with the primary links occurring among higher-order cognitive processes.

At a more practical level, this distinction has implications for clinical care: If auditory access is required for healthy cognitive development, then all deaf¹ children need exposure to sound as early as possible. But if linguistic access is required, the space of possible interventions becomes larger: In particular, learning a natural² sign language is predicted to be beneficial under the latter account, but not the former.

This study is designed to distinguish between auditory access and language access accounts of EF difficulties in deaf children during their elementary school years (age 5–12 years). EF refers to a constellation of cognitive skills that regulate both cognition and behavior. Major domains include behavior regulation and metacognition, with subskills including attention, planning/problem solving, and inhibitory control (for a similar model, see Diamond, 2013). Early development of EF skills has strong and long-lasting consequences for many domains of subsequent development, in both typical and atypical development (Eigsti et al., 2006; McDonough, Stahmer, Schreibman, & Thompson, 1997; Pellicano, 2007; Schuh, Eigsti, & Mirman, 2016). Individual differences in EF account for unique variance in school readiness and academic outcomes even after taking IQ and prior knowledge into account (Alloway et al., 2005; Blair & Razza, 2007; Bull, Espy, & Wiebe, 2008). EF in childhood also predicts long-term outcomes, including SAT scores (Mischel, Shoda, & Peake, 1989), probability of college graduation (McClelland et al., 2007), and a range of other attributes including health, addiction, socioeconomic status, and likelihood of being convicted of criminal charges (Moffitt et al., 2011). Promoting healthy development of EF skills is therefore a high priority for early intervention.

Unfortunately, there have been longstanding concerns about deaf children's EF skills, dating back at least a century (Pintner & Paterson, 1917; Vygotsky, 1925), continuing through the cognitive revolution (e.g., Altshuler, Deming, Vollenweider, Rainer, & Tendler, 1976; Chess & Fernandez, 1980; Harris, 1978; Lesser & Easser, 1972; Mitchell & Quittner, 1996; Myklebust, 1960; O'Brien, 1987; Quittner, Smith, Osberger, Mitchell, & Katz, 1994; Reivich & Rothrock, 1972; Smith, Quittner, Osberger, & Miyamoto, 1998), and persisting despite the advent of cochlear implants (CIs; Beer et al., 2014; Beer, Kronenberger, & Pisoni, 2011; Figueras et al., 2008; Khan, Edwards, & Langdon, 2005; Kronenberger, Beer, et al., 2014; Kronenberger, Colson, et al., 2014; Kronenberger et al., 2013; Remine et al., 2008; Schlumberger, Narbona, & Manrique, 2004; Surowiecki et al., 2002). The literature is remarkably consistent in finding that these skills are at risk in deaf children as a whole and that EF skills are associated with language proficiency in deaf children, although these relationships may be different from those in hearing children (Kronenberger, Colson, et al., 2014). However, the literature is divided as to the causal nature of these connections. Although most authors acknowledge the possibility of complex interrelationships among hearing, language, EF, and additional domains (e.g., the social environment), some have proposed that deafness has direct effects on EF and other high-level cognitive processes (Arlinger et al., 2009; Conway et al., 2009, 2011; Kral et al., 2016; Kronenberger, Beer, et al., 2014; Kronenberger, Colson, et al., 2014; Kronenberger et al., 2013; Pisoni et al., 2010), whereas others emphasize that there is no evidence for a role of auditory access that cannot also be explained by language access (Barker et al., 2009; Botting et al., 2016; Castellanos et al., 2016; Dammeyer, 2010; Figueras et al., 2008; Marshall et al., 2015; Remine et al., 2008).
A third possibility, suggested by Conway et al. (2009), inter alia, is that differences in social environments could also account for (or at least contribute to) these apparent deaf–hearing differences. This study is not designed to address this third possibility and focuses instead on the potential impact of the presence/absence of auditory access and of language access.

The existence of a relationship between language and EF is well documented, but its nature is complex and likely bidirectional. Evidence that EF can impact language comes from studies of psycholinguistic processing, which have identified specific aspects of language structure that require controlled processing, for example, resolving ambiguity by integrating context and inhibiting alternative meanings, overriding a regular past tense rule to correctly produce irregular verb forms, or switching from an incorrect parse of a garden path sentence to a correct parse. In children, the evidence is correlational (Ibbotson & Kearvell-White, 2015; Khanna & Boland, 2010; Mazuka, Jincho, & Oishi, 2009; Woodard et al., 2016), and thus, causality cannot be determined. However, Novick, Hussey, Teubner-Rhodes, Harbison, and Bunting (2014) showed that adults who responded to training on a nonlinguistic working memory task also improved in their ability to recover from syntactic ambiguity in sentence processing. These results provide evidence that EF skills can causally impact language processing, at least in some contexts.

However, these fine-grained processing measures are quite removed from the much more coarse ways in which the language abilities of deaf children are measured, which are typically based on the child's overall performance on standardized assessments of language proficiency. Kronenberger, Colson, et al. (2014) found that the relationships between (spoken) language proficiency and various subdomains of EF were different in deaf and hearing children. These observations highlight the need for a better understanding of the causal connections between language and EF, specifically whether deficits in EF cause problems in language acquisition and processing or whether deficits in language acquisition cause problems in EF.

Arguments for the latter view have often come from studies involving deaf children, for whom the source of their language difficulties is clearer than for hearing children. For example, Botting et al. (2016) showed that vocabulary level (in sign or speech) mediated the difference between deaf and hearing children's performance on EF tasks, but the reverse was not true. In other words, language ability explained unique variance in EF after controlling for hearing status, but hearing status did not explain unique variance after controlling for language ability. However, the study is limited in that vocabulary level is only one dimension of language ability.

A major reason that debate over this question persists is that most previous studies have not used a research design that is well suited to discriminating between these accounts. Most previous studies have included participants who have lacked both auditory access and language access for a significant period of time. The current study adopts a between-group design involving populations who lack either auditory or language access during infancy (and toddlerhood, in some cases); thus, the auditory access and language access hypotheses make contrasting predictions for these groups. All accounts predict that deaf children who have experienced a period of time without full access to both auditory input and linguistic input—that is, oral CI users—should be at higher risk for problems in EF than children who have had full access to both audition and language from birth—that is, hearing controls. Thus, deaf children with CIs who did not have access to spoken English or to a fluent ASL model prior to implantation and children with typical hearing are important reference populations. The crucial group that allows us to distinguish between the auditory access and language access accounts consists of children who are born deaf but raised in families where the parents were already proficient in a natural sign language such as ASL. We will refer to these children as Deaf native signers. Because these children do not have meaningful auditory access (indeed, most of them do not regularly use any hearing technology), auditory access accounts predict EF skills in Deaf native signers to be worse than those of hearing controls, test norms, and perhaps even those of deaf children who gain auditory access via cochlear implantation. 
Conversely, because the Deaf native signers have never experienced a period without access to linguistically structured input, the language access account predicts that their EF skills should not differ from those of hearing controls or scale norms and may be better than those of deaf children who first gained effective access to linguistically structured input after cochlear implantation.

A previous study from our group has directly addressed this question. Hall, Eigsti, Bortfeld, and Lillo-Martin (2017a) reported parent ratings of EF in Deaf native signers and hearing controls, using the Behavior Rating Inventory of Executive Function (BRIEF; Gioia, Isquith, Guy, & Kenworthy, 2000). The mean T scores of the Deaf native signers did not differ from those of the hearing controls or test norms on any scale or summary index. However, the Deaf native signers were at significantly greater risk of falling in the elevated (but not clinically significant) range on the Inhibit and Working Memory subscales of the BRIEF relative to hearing participants, though not relative to test norms. Hall et al. (2017a) attribute this to unexpectedly low (i.e., good) scores among hearing participants, but this could also reflect outdated norms.

A number of other limitations in the previous study restrict the extent to which it provided a thorough test of the auditory access and language access accounts. First, it did not include a comparison group of deaf children who experienced a period without access to linguistically structured input. Although at least four previous studies have documented EF difficulties in this population using the BRIEF (Beer et al., 2011; Hintermair, 2013; Kronenberger, Beer, et al., 2014; Oberg & Lukomski, 2011), it is possible that recent improvements in hearing technology and early hearing detection/intervention have lessened the previously reported difficulties in deaf CI users. Second, the previous study hinges largely on interpreting a null effect; it may simply have been underpowered to detect significant effects. Third, it relied exclusively on a parent report measure, which is more vulnerable to bias than performance-based assessment. Fourth, several of the Deaf native signers in the previous study had received CIs; thus, experience with sound could have contributed to the development of EF in at least some of the participants.

In this study, we address the first two limitations of our previous study by including a group of deaf children who have CIs but who do not have access to a fluent ASL model. This allows us to test whether EF difficulties persist among deaf children who have current technology and intervention and serves as a check on the power of the previous study to detect group differences. We address the third limitation by also including performance-based assessment of EF in all three participant groups, using a sustained attention task, a tower task, and a go/no-go task. Although each of these is often viewed as assessing one particular domain of EF, successful performance on all tasks requires both behavior regulation and metacognition. We address the fourth limitation by excluding native signers with CIs from analysis. The findings reported here thus provide an even stronger test of the auditory access and language access hypotheses.

Method

The methods below were approved by the institutional review board at the University of Connecticut as well as those of participating schools, where applicable. To ensure that informed consent was obtained from all participants or their guardians, we prepared written documents in English and also video recordings of the same information in ASL. Whenever information was distributed to potential participants, it included links to these ASL materials. Participants were free to choose whether to read the document in English or watch a video of its ASL translation. All participants were also tested by a researcher proficient in the participant's preferred method of communication and who explained study procedures to the participants directly and answered any questions they had about the research. Child assent was requested from all children in their preferred language (English or ASL); documentation of child assent was obtained in written English for children over the age of 10. Testing was performed in a variety of settings, including the University of Connecticut, elementary schools, after-school programs, family homes, public libraries, and a summer camp for deaf and hard-of-hearing children. In all cases, the testing environment was designed to minimize visual and auditory distraction; the only people present were the participant, the experimenter, and, in some cases, a caregiver or school chaperone. When present, these other adults were positioned out of the child's line of sight and did not interact during the testing session. Upon completion of the 40-min testing session, the participant's caregiver received $10.

Participants

A total of 120 participants took part in the study: 45 children with typical hearing, 49 Deaf native signers, and 26 oral CI users. All participants across groups were between the ages of 5;0 and 12;11 (years;months). Parent report data from the hearing children and a superset of the Deaf native signers were previously reported in Hall et al. (2017a); to provide a cleaner test of the auditory access hypothesis, the analyses reported here exclude four Deaf native signers who had received CIs, reducing the number of participants in this group to 45. Children with additional medical diagnoses (e.g., autism, Down syndrome, cerebral palsy) were excluded from all groups. Children with diagnoses of attention-deficit/hyperactivity disorder or learning disability were considered eligible, because difficulties in EF could plausibly be a cause rather than a consequence of these diagnoses. Demographics of the samples are summarized in Table 1. Race and ethnicity, when reported, did not differ significantly between groups (Fisher's exact tests, all ps > .34). The oral CI users reported race and ethnicity more often than participants in the other groups.

Table 1.

Participant demographics.

| Demographic variable | Deaf native signers, n = 45 | Oral CI users, n = 26 | Hearing controls, n = 45 | F/χ² | p |
|---|---|---|---|---|---|
| Age | | | | 0.66 | .52 |
| Mean, in years;months | 8;02 | 8;09 | 8;04 | | |
| (SD) | (2;03) | (2;04) | (1;10) | | |
| Range | 5;01–12;10 | 5;06–12;10 | 5;06–12;11 | | |
| Sex (female:male:other) | 28:17:0 | 12:14:0 | 24:21:0 | 1.8 | .40 |
| Race | | | | | .34 |
| White | 23 | 23 | 26 | | |
| Black | 2 | 0 | 0 | | |
| Native American | 0 | 0 | 2 | | |
| Asian | 0 | 0 | 1 | | |
| Multiple | 4 | 2 | 3 | | |
| Other | 0 | 0 | 2 | | |
| No response | 15 | 1 | 11 | | |
| Ethnicity | | | | | .99 |
| Hispanic | 3 | 2 | 3 | | |
| Non-Hispanic | 23 | 21 | 30 | | |
| Other | 1 | 0 | 1 | | |
| No response | 18 | 3 | 11 | | |
| Hearing status | Severe or profound congenital deafness | Severe or profound congenital deafness | No known hearing impairment | n/a | n/a |
| Language experience | Exposure to sign language at home from birth and at school; variable speech emphasis at home and school | Little accessible language input prior to cochlear implant; listening and spoken language emphasis at home and school | Exposure to spoken language from birth | n/a | n/a |
| Age of CI | n/a | | n/a | n/a | n/a |
| Mean, in years;months | | 1;08 | | | |
| (SD) | | (0;08) | | | |
| Median | | 13 months | | | |
| Range | | 0;08–2;11 | | | |
| Primary caregiver education levelᵃ | I: 1 | I: 0 | I: 0 | 6.2 | .80 |
| | II: 2 | II: 1 | II: 1 | | |
| | III: 7 | III: 3 | III: 8 | | |
| | IV: 9 | IV: 6 | IV: 9 | | |
| | V: 24 | V: 12 | V: 25 | | |
| | VI: 2 | VI: 4 | VI: 2 | | |
| Caregiver completing BRIEF | Mother: 35 | Mother: 20 | Mother: 30 | 4.1 | .40 |
| | Father: 4 | Father: 5 | Father: 5 | | |
| | Other: 1 | Other: 0 | Other: 2 | | |

Note. CI = cochlear implant; BRIEF = Behavior Rating Inventory of Executive Function.

ᵃ Education: I = less than high school, II = high school or General Education Development (GED), III = some college or associate's degree, IV = bachelor's degree, V = graduate training or advanced degree, VI = not reported.
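As a worked check on Table 1, the sex comparison can be recomputed from the reported counts. The sketch below assumes that the statistic reported for sex is a Pearson chi-square on the 3 × 2 table of female and male counts (the "other" column is all zeros and is dropped). The function and variable names are illustrative and are not taken from the study's analysis code. With 2 degrees of freedom, the upper-tail probability of the chi-square distribution has the closed form exp(−χ²/2), so no statistics library is required.

```python
import math

# Female and male counts per group, copied from Table 1.
SEX_COUNTS = {
    "Deaf native signers": (28, 17),
    "Oral CI users": (12, 14),
    "Hearing controls": (24, 21),
}

def pearson_chi_square(rows):
    """Pearson chi-square statistic for a list of equal-length count rows."""
    col_totals = [sum(col) for col in zip(*rows)]
    grand_total = sum(col_totals)
    chi2 = 0.0
    for row in rows:
        row_total = sum(row)
        for observed, col_total in zip(row, col_totals):
            expected = row_total * col_total / grand_total
            chi2 += (observed - expected) ** 2 / expected
    return chi2

chi2 = pearson_chi_square(list(SEX_COUNTS.values()))
df = (len(SEX_COUNTS) - 1) * (2 - 1)  # (rows - 1) * (columns - 1) = 2
# Valid for df = 2 only: the chi-square upper-tail probability is exp(-chi2 / 2).
p_value = math.exp(-chi2 / 2)
print(f"chi2({df}) = {chi2:.1f}, p = {p_value:.2f}")
```

Under these assumptions, the computed values round to the 1.8 and .40 shown for sex in Table 1.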

The hearing participants in our sample were recruited from local schools and after-school programs, through advertisements in and around the University of Connecticut, and through local contacts in Connecticut and California. Multilingual children were included and constituted 16% of the sample (7/45).³

The Deaf native signers in our sample had severe or profound congenital sensorineural deafness. The majority (39/45) did not regularly use any hearing technology; six used hearing aids at least “sometimes”; as stated previously, four participants who had received CIs were excluded from analysis.⁴ Deaf native signers were recruited from schools and organizations for the deaf in Connecticut, Texas, Maryland, Massachusetts, and Washington, DC. We consider all Deaf native signers to be at least bilingual (in ASL and written English); two also knew more than one sign language.

The oral CI users in our sample had also been identified as having severe or profound bilateral deafness before 12 months and received at least one CI before turning 3. The median age of implantation was 13 months; the mean was 20 months (SD = 8 months). All were raised in families who had chosen an oral/aural communication emphasis, focusing on listening and spoken language, and therefore did not typically use ASL or other forms of manual communication (e.g., sign-supported speech, cued speech) with their children, as assessed by a parent report questionnaire that included questions about communication approaches, including qualitative and quantitative questions about what types of manual communication were used (if any), how frequently, and how proficient the parents and child were. The primary criterion for inclusion in the oral CI group was the lack of access to a natural sign language such as ASL; thus, families who occasionally used sign-supported speech were not automatically excluded. In practice, however, the majority of participants in this group had chosen a nearly exclusive focus on listening and spoken language. All of the oral CI users were currently attending mainstream schools. Participants were recruited by contacting schools and other organizations serving deaf children and their families, many of which emphasized listening and spoken language. Most participants in this group came from families living in southern New England and New York City, as well as from a summer camp in Colorado, where families hailed from across the country.

Measures

The study included one parent report questionnaire, three performance-based assessments of EF, and one performance-based control task. Most participants took part in all aspects of the study; however, for several participants data are only available from either the parent report or the performance-based assessments (typically because a behavioral testing session could not be scheduled or because parents did not return the questionnaire, respectively).

The parent report measure was the BRIEF (Gioia et al., 2000). The performance-based EF measures were the Tower subtest of the NEPSY battery (Korkman, Kirk, & Kemp, 1998), the Attention-Sustained subtest of the Leiter International Performance Scale–Revised (LIPS-R; Roid & Miller, 1997), and a Go/No-Go task adapted from Eigsti et al. (2006). These tasks were selected because they were suitable for children age 5–12, did not require any auditory stimuli or language-based responses, had previously been used with deaf populations, and were short enough to fit into a 40-min testing session. We describe each measure in more detail below.

BRIEF

For this assessment, parents are asked to indicate how often various behaviors have been a problem for the child over the past 6 months: never, sometimes, or often (Gioia et al., 2000). The 86 items are arranged into eight subscales. Three of the subscales (Inhibit, Shift, Emotional Control) index behavior regulation; the remaining five subscales (Initiate, Working Memory, Planning/Organization, Organization of Materials, Monitor) index metacognition. The Behavior Regulation Index and the Metacognition Index combine to form a Global Executive Composite. Raw scores are converted to T scores (normed separately by age and sex), which have mean = 50, SD = 10. Higher values indicate greater impairments. The BRIEF has been widely used with deaf children; for further details, see Hall et al. (2017a).
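To make the T-score metric concrete, the following is a minimal sketch of a linear T-score transformation. It is an illustration only: the BRIEF itself converts raw scores using published norm tables for each age and sex band, and the normative mean and SD used here are hypothetical values, not figures from the instrument.

```python
def t_score(raw, norm_mean, norm_sd):
    """Linear T score: 50 at the normative mean, 10 points per normative SD."""
    if norm_sd <= 0:
        raise ValueError("norm_sd must be positive")
    z = (raw - norm_mean) / norm_sd
    return 50 + 10 * z

# Hypothetical normative values for one age-by-sex band (illustration only).
example = t_score(raw=22, norm_mean=16, norm_sd=4)
print(example)  # a raw score 1.5 SD above the normative mean
```

Because higher BRIEF scores indicate greater impairment, a T score above 50 on this metric corresponds to more reported problems than the normative average.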

Performance-Based Tasks

The following paragraphs describe each performance-based task in turn. These tasks are sometimes framed as though they assess individual subdomains of EF. Under such a view, tower tasks are typically used to measure planning and problem solving, selective attention tasks are used to measure focus and concentration, and go/no-go tasks are used to measure inhibitory control. However, we do not assume that any given task is “process-pure”; successful performance on each task requires both behavior regulation and metacognition.

Tower

In the Tower subtest of the NEPSY battery (Korkman et al., 1998), children are presented with a physical apparatus consisting of three vertical pegs (short, medium, long) emanating from a wooden base. On each peg sits a colored ball (red, yellow, blue). From this starting state, the child's task is to rearrange the three balls into a goal state (displayed by the experimenter) while following simple rules. At the start of each trial, the experimenter resets the three balls to the starting state (same for all trials). The experimenter then displays a picture that shows the goal state for that trial. Each trial has a time limit and a move limit; testing stops after the child fails to complete four items in a row within the allotted time and/or number of moves. Raw scores are then converted to scaled scores with mean = 10, SD = 3. Lower values indicate worse performance. The instructions were presented in the child's preferred language. The first author (a fluent ASL signer) worked with a Deaf native signer to translate the English instructions into ASL. For participants who preferred English, we used a reverse translation of the ASL instructions to maximize comparability across the three participant groups. Because the ASL translation of the instructions included spatial information, the same spatial information was included in the co-speech gestures produced by the experimenter when explaining the task in English. (The scores of the hearing controls provide reassurance that these procedures did not substantively impact task performance.) Successful performance on this task requires both behavior regulation (complying with rules, inhibiting impulsive moves, staying on-task) and metacognition (planning ahead, allocating visual working memory to imagine upcoming states, engaging problem-solving strategies).
Previous studies using tower tasks have reported deficits in deaf children relative to hearing children and/or test norms (Botting et al., 2016; Figueras et al., 2008; Luckner & McNeill, 1994); however, the vast majority of deaf participants in these previous studies were not native signers.

Attention-Sustained

In the Attention-Sustained subtest of the LIPS-R (Roid & Miller, 1997), the child is presented with a pencil and a paper form on which is printed an array of shapes or drawings. A target shape is indicated at the top of each page; the child's task is to cross off all of the target shapes in the array and only the target shapes, ignoring salient visual distractors. The experimenter nonverbally conveys the task to participants, who then have an opportunity to practice the task (thereby demonstrating understanding of the instructions). There are four levels of increasing difficulty, each of which is timed. The child's overall performance is scored and converted to a scaled score with mean = 10, SD = 3, where lower values indicate worse performance. Successful performance on this task requires both behavior regulation (inhibiting responses to distractor shapes, staying on-task) and metacognition (allocating visual attention, using efficient scanning strategies). Khan et al. (2005) found that deaf children with CIs (n = 17) and hearing aids (n = 13) both performed worse than hearing children (n = 18) on this task.

Go/No-Go

This paradigm is designed to habituate children to making a prepotent response (“go” trials), which they must occasionally refrain from making (“no-go” trials). The crucial result is the extent to which children are able to successfully override that prepotent response, thus demonstrating inhibitory control. In our version of the task, adapted from Eigsti et al. (2006), we used E-Prime 2.0 software (Psychology Software Tools, 2012) on a PC laptop to display a cartoon of a mouse hole with a door that opened and closed quickly (500 ms). When the door opened, the child saw either a piece of cheese (75% of trials) or a cat (25% of trials). The experimenter explained (in English or ASL) that the child's goal was to help the mouse collect all the cheese but to avoid getting caught by the cat. The child's task was to press the space bar whenever cheese appeared but to not press the space bar when the cat appeared. The task involved 192 trials presented over the span of 5 min, with an interstimulus interval of 1 s. The dependent measure is the rate of false alarms (i.e., pressing the space bar when a picture of the cat appeared). No-go trials were preceded by one to five go trials; those preceded by a larger number of go trials could have evoked more false alarms because of the relatively increased salience of the go response. Preliminary analyses yielded no evidence that the number of preceding go trials influenced the probability of false alarms; therefore, this factor was not included in the analyses reported below. High false alarm rates indicate poor inhibitory control. Two previous studies have used variations on the Go/No-Go paradigm with signing deaf participants (Kushalnagar, Hannay, & Hernandez, 2010; Meristo & Hjelmquist, 2009); however, neither study included comparison groups of oral deaf or hearing participants.
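The dependent measure and the preliminary check described above can be sketched as follows. This is a minimal illustration, not the study's analysis code: the record format (a list of trial type and key press pairs) and the function names are assumptions, and the toy session contains hypothetical data rather than the 192 trials of the actual task.

```python
from collections import defaultdict

def false_alarm_rate(trials):
    """Proportion of no-go trials on which the space bar was pressed.

    `trials` is a sequence of (kind, pressed) pairs, kind in {"go", "no-go"}.
    """
    no_go = [pressed for kind, pressed in trials if kind == "no-go"]
    if not no_go:
        raise ValueError("no no-go trials")
    return sum(no_go) / len(no_go)

def false_alarms_by_run_length(trials):
    """False alarm rate split by how many go trials preceded each no-go trial."""
    counts = defaultdict(lambda: [0, 0])  # run length -> [false alarms, no-go trials]
    run = 0
    for kind, pressed in trials:
        if kind == "go":
            run += 1
        else:
            counts[run][0] += int(pressed)
            counts[run][1] += 1
            run = 0  # the next run of go trials starts after this no-go trial
    return {length: fa / n for length, (fa, n) in sorted(counts.items())}

# Toy session (hypothetical data): six go trials and two no-go trials.
session = [
    ("go", True), ("go", True), ("no-go", False),
    ("go", True), ("go", False), ("go", True), ("go", True), ("no-go", True),
]
print(false_alarm_rate(session))
print(false_alarms_by_run_length(session))
```

Splitting the false alarm rate by run length is one simple way to examine whether longer runs of go trials evoke more false alarms; the analysis actually used for that check is not specified in the text.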

Corsi Blocks, Forward

Finally, we included a task on which no group is predicted to be at a disadvantage: the Corsi block task, forward condition. In this task, the experimenter presents a physical apparatus consisting of nine blocks arranged haphazardly on a wooden board. The experimenter taps a series of blocks at a rate of 1 per second; the child's task is to tap on the same blocks in the same order. Two sequences are presented at a given list length; if at least one sequence is recalled correctly, the length of the sequence increases by one (up to a maximum of nine). The score is the length of the longest sequence recalled correctly times the number of successful trials; thus, higher scores indicate better performance. This task does not require significant behavior regulation or metacognition; instead, it assesses visuospatial short-term memory, which neither the auditory access nor the language access account predicts to be at risk in these children. Therefore, finding group differences on this task could indicate differences in general compliance or cognitive level that might cast doubt on the interpretation of group differences on the other EF tasks. Conversely, finding that the groups do not differ on this control task would strengthen the interpretation of any group differences that might be observed on the other EF tasks.
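The scoring rule described above can be sketched as follows. This is a minimal illustration under stated assumptions: the text does not specify the starting list length, so the example simply scores whichever lengths were administered, and the input format (a mapping from list length to the two trial outcomes) and the function name are hypothetical.

```python
def corsi_score(results, max_length=9):
    """Score = longest correctly recalled length x number of correct trials.

    `results` maps each administered list length to a pair of booleans,
    one per sequence presented at that length.
    """
    span = 0
    correct_trials = 0
    for length in sorted(results):
        if length > max_length:
            break
        n_correct = sum(results[length])
        correct_trials += n_correct
        if n_correct == 0:
            break  # both sequences at this length failed, so testing stops
        span = length
    return span * correct_trials

# Hypothetical child: passes lengths 2 to 4, fails both sequences at length 5.
child = {2: (True, True), 3: (True, False), 4: (True, True), 5: (False, False)}
print(corsi_score(child))  # span of 4 times 5 correct trials
```

Multiplying the span by the number of correct trials separates children who reach the same span with different consistency, which a span-only score would not do.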

We did not collect measures of nonverbal intelligence for these children. There is a long history of reports that native signers tend to outperform other deaf children on intelligence tests, even those that are intended to be nonverbal tasks (e.g., Amraei, Amirsalari, & Ajalloueyan, 2017; Braden, 1987; Kusché, Greenberg, & Garfield, 1983; Meadow, 1968; Sisco & Anderson, 1980). Given this prior evidence, matching the two groups on nonverbal IQ scores would require samples that are not necessarily representative of the populations to which we aim to generalize. We suspect that these population-level differences reflect the role that mastery of a language plays even in supposedly nonverbal cognition (at least at the level of performance; whether there are true population-level differences in competence is much more difficult to determine).

We also did not conduct formal language assessment because the two participant groups use different primary languages, and it would not be at all clear how to compare performance on nonequivalent assessments of different languages. In addition, there remains a lack of standardized assessments of ASL proficiency that are appropriate for the full age range of our sample.

Procedure

Parents who responded to initial recruitment messages were screened by e-mail; those who qualified then received copies of the informed consent forms and a demographic questionnaire, either in electronic format or in hard copy. Those families who received hard copies were also given a hard copy of the BRIEF, which they were free to fill out before, during, or after their child's testing session. Children ages 7–12 qualified for an additional testing session for a separate study (Hall, Eigsti, Bortfeld, & Lillo-Martin, 2017b), which was scheduled either concurrently or on a separate occasion, according to parental preference. If parents elected to schedule both sessions on the same day, the child had a break between them, and session order was determined randomly. Children aged 5–6 were eligible only for this study. In all cases, task order was randomized by asking the child to pull a plastic egg out of a bag, with the name of a different task inside each egg. In addition to randomizing task order, this helped the children keep track of their progress and motivated them to complete all four tasks. Each task lasted 7–8 min on average, with the total session lasting about 40 min, including short breaks between tasks. If parents were not present during the testing session or had not completed the demographic questionnaire and/or the BRIEF by the end of the testing session, they were given a postage-paid envelope to return the completed forms by mail and received biweekly e-mail reminders until the forms were returned.

Results

Parent Report Measure

Data from the BRIEF are available for 110 of 116 participants (95%). The six missing data points are from parents who never completed the form despite persistent reminders. Because comparisons of the BRIEF between the hearing children and the Deaf native signers in this study have been previously reported in Hall et al. (2017a), we focus the bulk of what follows on comparisons involving the oral CI users. We do, however, include brief comparisons between the hearing controls and Deaf native signers to test whether the previously reported results might change when Deaf native signers with CIs are excluded.

Because measuring central tendency is more informative for theoretical purposes and measuring relative risk is more informative for clinical purposes, we analyze both mean T scores and the rates of both elevated (+1 SD) and clinically significant (+1.5 SDs) scores. Note that on the BRIEF, higher T scores reflect increased incidence of problematic behavior.

Mean Scores

Means and standard deviations for all groups are given in Table 2. Separate Group × Scale analyses of variance (ANOVAs) were conducted for the three behavior regulation subscales (3 × 3) and the five metacognition subscales (3 × 5), with subscale as a within-subject factor and group as a between-subjects factor. The three summary indices were each analyzed with separate one-way ANOVAs (with group as a three-level factor), because the Global Executive Composite is not independent of the Behavior Regulation Index and Metacognitive Index. Unless otherwise noted, main effects were further explored with Dunnett's test (using the oral CI users as the reference group), whereas interactions were further explored with pairwise comparisons of the oral CI users and one of the other two groups on each subscale. Finally, a separate set of pairwise comparisons tested for differences between the hearing controls and Deaf native signers who did not also have a CI (to further clarify the basis for the Hall et al., 2017a, findings).

Table 2.

Means and standard deviations of BRIEF T scores by group.

| Scale type | Subscale/index | Hearing controls (n = 38), M (SD) | Deaf native signers (n = 40), M (SD) | Oral CI users (n = 25), M (SD) |
|---|---|---|---|---|
| BRI | Inhibit | 47.32 (8.36) | 50.3 (10.81) | 56.32 (13.13) |
| BRI | Shift | 49.45 (12.05) | 48.08 (8.813) | 59.36 (13.95) |
| BRI | Emotion Control | 48.29 (10.78) | 47.18 (9.532) | 55.56 (13.68) |
| MI | Initiate | 47.89 (7.537) | 49.75 (11.53) | 52.96 (11.1) |
| MI | Working Memory | 46.24 (7.205) | 49.98 (10.06) | 53.96 (11.45) |
| MI | Plan/Organize | 45.71 (7.725) | 49.53 (9.106) | 53 (11.4) |
| MI | Organize Materials | 48.92 (8.604) | 48.68 (10.36) | 50.88 (12.71) |
| MI | Monitor | 46.21 (8.178) | 47.7 (10.81) | 53.96 (12.97) |
| Summary | BRI | 47.95 (10.3) | 48.33 (9.996) | 57.88 (12.95) |
| Summary | MI | 46.89 (8.433) | 48.68 (10.23) | 53.48 (12.01) |
| Summary | GEC | 46.84 (8.059) | 48.68 (10.22) | 55.72 (12.65) |

Note. CI = cochlear implant; BRIEF = Behavior Rating Inventory of Executive Function; BRI = Behavior Regulation Index; MI = Metacognitive Index; GEC = Global Executive Composite.

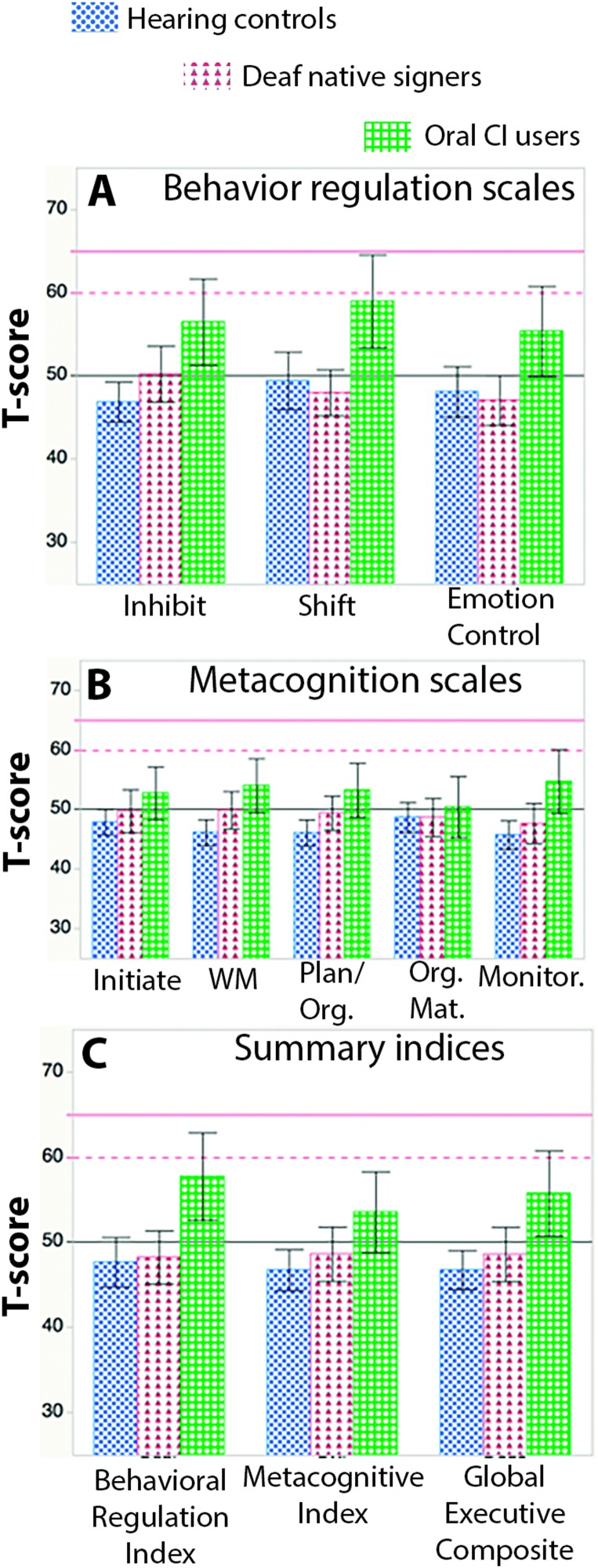

Figure 1A displays the mean scores for the behavior regulation subscales. There was a significant main effect of group, F(2, 109) = 8.87, p < .001, ηp² = .14, with Dunnett's test indicating that the oral CI users had significantly higher means than both other groups. A marginal Group × Scale interaction, F(4, 218) = 2.03, p < .1, ηp² = .04, suggested that the magnitude of this group difference might differ by subscale. Post hoc contrasts confirmed that the oral CI users had significantly higher mean scores relative to both hearing controls and Deaf native signers on all three behavior regulation subscales (all Fs > 13, all ps < .001, with ηp² ranging from .06 to .16). Consistent with Hall et al. (2017a), the hearing controls and Deaf native signers differed only on the Inhibit subscale, F(1, 218) = 5.20, p < .03, ηp² = .02.

Figure 1.

Mean T scores on the BRIEF by subscale (or index) and group. Error bars indicate 95% confidence intervals. CI = cochlear implant; WM = working memory; Org. = Organization; Org. Mat. = Organization of Materials; Monitor. = Monitoring; BRIEF = Behavior Rating Inventory of Executive Function.

Figure 1B displays the mean scores for the metacognition subscales. There was a significant main effect of group, F(2, 109) = 4.89, p < .01, ηp² = .08, with Dunnett's test indicating that the oral CI users had significantly higher means than the hearing controls, though not the Deaf native signers. A significant Group × Scale interaction, F(8, 109) = 2.00, p < .05, ηp² = .03, motivated further analysis of group differences on each subscale. Because of the large number of potential comparisons involved in exploring the interaction of a three-level and five-level factor, we conducted five separate one-way ANOVAs, each exploring the effect of group for one metacognition subscale, with participant as a random factor nested within group. These analyses revealed significant main effects of group for the Working Memory, Plan/Organize, and Monitoring subscales. Applying Dunnett's test to each of these revealed that the oral CI users had higher mean scores than the hearing controls in all three cases and that the oral CI users also had higher mean scores than the Deaf native signers on the Monitoring subscale. In contrast to the Hall et al. (2017a) findings, which reported a significant difference between hearing controls and Deaf native signers on the Working Memory subscale, the present analysis revealed only a marginally significant difference, F(1, 109) = 3.47, p = .07, ηp² = .03.

Figure 1C displays the mean scores for the three summary indices. Analysis of the Behavior Regulation Index revealed a significant main effect of group, F(2, 109) = 8.67, p < .001, ηp² = .14, with Dunnett's test indicating that the oral CI users had significantly higher means than both the hearing controls and the Deaf native signers. Analysis of the Metacognition Index also revealed a significant main effect of group, F(2, 109) = 4.06, p < .02, ηp² = .07; the oral CI users had significantly higher means than the hearing controls, but not the Deaf native signers. Analysis of the Global Executive Composite revealed a significant main effect of group, F(2, 109) = 7.20, p < .01, ηp² = .12; the oral CI users had significantly higher means than both the hearing controls and the Deaf native signers. Consistent with the Hall et al. (2017a) findings, the hearing controls and Deaf native signers did not differ on any summary index (all Fs < 0.8, all ps > .37, all ηp² values < .01).
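For a single effect, partial eta squared can be recovered from the reported F ratio and its degrees of freedom, because it equals SS_effect / (SS_effect + SS_error). The sketch below is offered only as a consistency check on the one-way ANOVAs for the summary indices; the function name is hypothetical and is not part of the original analysis.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F ratio and its degrees of freedom.

    Follows from F = (SS_effect / df_effect) / (SS_error / df_error).
    """
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F ratios reported above for the three summary indices, each with df = (2, 109)
for label, f_value in [("BRI", 8.67), ("MI", 4.06), ("GEC", 7.20)]:
    print(label, round(partial_eta_squared(f_value, 2, 109), 2))
```

Under this identity the three reported F ratios give values of about .14, .07, and .12, in line with the effect sizes stated in the text.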

Relative Risk

On the BRIEF, scores of +1 SD are considered elevated, whereas scores of +1.5 SDs are considered clinically significant. Tables 3 and 4 report the relative risk ratios for elevated and clinically significant scores, respectively, for the oral CI users relative to the hearing controls, the Deaf native signers, and the rates expected under a normal distribution. To compute this latter measure, we first multiplied the sample size of each group by 15.87% (to determine the number of individuals expected to show elevated scores) and by 6.7% (to determine the number of individuals expected to show clinically significant scores). The values were rounded to the nearest integer and used to compute relative risk between the observed data and a hypothetical sample of the same size drawn from a normal distribution.
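The comparison against a normal distribution can be illustrated as follows. This is a sketch under stated assumptions: the interval uses the common log-based (Katz) approximation with z = 1.96, which the text does not specify, so the bounds may differ slightly from those in Tables 3 and 4. The function names and the observed count of 10 are hypothetical; the group size of 25 is the oral CI sample size given in Table 2.

```python
import math


def expected_count(n, tail_probability):
    """Number of cases expected above a cutoff, rounded to the nearest integer."""
    return round(n * tail_probability)


def relative_risk(events_a, n_a, events_b, n_b, z=1.96):
    """Risk ratio of group A to group B with a log-based confidence interval.

    Assumption: Katz log method; both event counts must be nonzero.
    """
    rr = (events_a / n_a) / (events_b / n_b)
    se_log_rr = math.sqrt(1 / events_a - 1 / n_a + 1 / events_b - 1 / n_b)
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper


n_oral_ci = 25
expected_elevated = expected_count(n_oral_ci, 0.1587)  # scores at or above +1 SD
expected_clinical = expected_count(n_oral_ci, 0.067)   # scores at or above +1.5 SD

# Hypothetical observed count of elevated scores, for illustration only
rr, lower, upper = relative_risk(10, n_oral_ci, expected_elevated, n_oral_ci)
```

With a group of 25, the expected counts are 4 elevated and 2 clinically significant scores, so a hypothetical 10 observed elevated scores would give a risk ratio of 2.5.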

Table 3.

Relative risk of having elevated scores for each scale of the BRIEF (T score ≥ 60).

| Scale/index | Oral CI users/hearing controls | Oral CI users/deaf native signers | Oral CI users/normal distribution |
|---|---|---|---|
| Inhibit | 8.65 [2.05, 36.5] | 1.43 [0.71, 2.89] | 2.5 [0.9, 6.96] |
| Shift | 2.97 [1.34, 6.59] | 3.78 [1.51, 9.5] | 3 [1.11, 8.09] |
| Emotion Control | 2.31 [0.9, 5.92] | 4.21 [1.23, 14.42] | 2 [0.69, 5.83] |
| Initiate | 6.06 [1.36, 27.03] | 1.58 [0.63, 3.98] | 1.75 [0.58, 5.27] |
| Working Memory | 19.04 [2.6, 139.19] | 1.58 [0.8, 3.1] | 2.75 [1, 7.53] |
| Plan/Organize | 6.06 [1.36, 27.03] | 2.21 [0.78, 6.23] | 1.75 [0.58, 5.27] |
| Organization of Materials | 4.04 [1.14, 14.29] | 2.76 [0.9, 8.51] | 1.75 [0.58, 5.27] |
| Monitoring | 4.33 [1.51, 12.42] | 3.94 [1.38, 11.27] | 2.5 [0.9, 6.96] |
| Behavior Regulation Index | 4.15 [1.65, 10.47] | 3.78 [1.51, 9.5] | 3 [1.11, 8.09] |
| Metacognitive Index | 7.79 [1.82, 33.34] | 2.84 [1.07, 7.54] | 2.25 [0.79, 6.4] |
| Global Executive Composite | 4.76 [1.69, 13.43] | 2.89 [1.22, 6.87] | 2.75 [1, 7.53] |

Note. Risk ratios in boldface are statistically significant (95% confidence interval does not include 1). In cases where the lower bound of the confidence interval was 1.0 after rounding, risk ratios were considered significant if the value was rounded down to 1.0, but not if it was rounded up to 1.0. CI = cochlear implant; BRIEF = Behavior Rating Inventory of Executive Function.

Table 4.

Relative risk of having clinically significant scores for each scale of the BRIEF (T score ≥ 65).

| Scale/index | Oral CI users/hearing controls | Oral CI users/deaf native signers | Oral CI users/normal distribution |
|---|---|---|---|
| Inhibit | 13.85 [1.83, 104.59] | 2.1 [0.82, 5.37] | 4 [0.94, 17.07] |
| Shift | 3.46 [1.33, 9.03] | 15.77 [2.14, 116.06] | 5 [1.21, 20.64] |
| Emotion Control | 4.62 [1.34, 15.88] | 4.21 [1.23, 14.42] | 4 [0.94, 17.07] |
| Initiate | 8.65 [1.07, 70.11] | 1.58 [0.51, 4.92] | 2.5 [0.53, 11.74] |
| Working Memory | 8.65 [1.07, 70.11] | 3.94 [0.82, 18.85] | 2.5 [0.53, 11.74] |
| Plan/Organize | 4.33 [0.9, 20.74] | 7.88 [0.98, 63.75] | 2.5 [0.53, 11.74] |
| Organization of Materials | 8.65 [1.07, 70.11] | 3.94 [0.82, 18.85] | 2.5 [0.53, 11.74] |
| Monitoring | 29.42 [1.74, 497.46] ᵃ | 3.15 [1.06, 9.43] | 4 [0.94, 17.07] |
| Behavior Regulation Index | 4.62 [1.34, 15.88] | 4.21 [1.23, 14.42] | 4 [0.94, 17.07] |
| Metacognitive Index | 5.19 [1.13, 23.88] | 9.46 [1.21, 74.18] | 3 [0.67, 13.51] |
| Global Executive Composite | 15.58 [2.09, 116.12] | 4.73 [1.41, 15.88] | 4.5 [1.07, 18.85] |

Note. Risk ratios in boldface are statistically significant (95% confidence interval does not include 1). CI = cochlear implant; BRIEF = Behavior Rating Inventory of Executive Function.

ᵃ Zero hearing participants had clinically significant scores on the Monitoring scale. Relative risk is computed by adding 0.5 to each cell to avoid dividing by 0.
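A zero-cell adjustment of this kind can be sketched as below. The version shown adds 0.5 to all four cells of the 2 × 2 table, which is one common reading of "each cell"; other variants exist, and the sketch is not claimed to reproduce the tabled value. The function name and the counts are hypothetical.

```python
def corrected_relative_risk(events_a, n_a, events_b, n_b, correction=0.5):
    """Risk ratio after adding a constant to every cell of the 2 x 2 table.

    Assumption: the correction is applied to events and non-events in both
    groups, so each group total grows by twice the correction.
    """
    a = events_a + correction          # group A, events
    b = (n_a - events_a) + correction  # group A, non-events
    c = events_b + correction          # group B, events
    d = (n_b - events_b) + correction  # group B, non-events
    return (a / (a + b)) / (c / (c + d))


# Hypothetical counts: 6 of 20 in one group, 0 of 30 in the comparison group.
# Without the correction, the ratio would require dividing by zero.
rr = corrected_relative_risk(6, 20, 0, 30)
```

Because the estimate depends heavily on the size of the added constant when one cell is empty, ratios obtained this way are best read as approximations.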

Regarding the rates of elevated scores, the oral CI users were at significantly greater risk relative to the hearing controls on 10 of 11 scales, relative to the native signers on 6 of 11 scales, and relative to the rates expected under a normal distribution on 4 of 11 scales.

Regarding the rates of clinically significant scores, the oral CI users remained at significantly greater risk relative to the hearing controls on 10 of 11 scales, relative to the native signers on 6 of 11 scales, and relative to the rates expected under a normal distribution on 2 of 11 scales.

There were no scales on which the oral CI users were at significantly or even numerically less risk than any comparison group. For elevated rates, risk ratios ranged from a low of 1.43 to a high of 19.04 (see Table 3). For clinically significant rates, risk ratios ranged from 1.58 to 15.77, not including the Monitoring subscale, where zero hearing controls had clinically significant scores and risk ratios are therefore approximated (see Table 4).

Consistent with findings reported in Hall et al. (2017a), Deaf native signers were at significantly greater risk of having elevated scores on the Inhibit and Working Memory subscales compared to hearing controls, but not relative to the rates expected in a normal distribution. There were no differences between Deaf native signers and hearing controls in the rates of clinically significant scores (not including the Monitoring subscale, where zero hearing controls had clinically significant scores).

Performance-Based Measures

Data from performance-based measures are available from 106 of 116 participants; logistics prevented testing sessions from being scheduled for the remaining 10 participants. The following sections report any additional exclusions from within this subset of participants. Data submitted to parametric analyses were checked to ensure that they did not seriously violate statistical assumptions of normality and homogeneity of variance/homoscedasticity. Means for all performance-based measures are given in Table 5.

Table 5.

Means and standard deviations of scores on performance-based tasks by group.

| Task | Measure | Hearing controls | Deaf native signers | Oral CI users |
|---|---|---|---|---|
| Tower | Scaled score | 10.13 (2.12) | 9.04 (2.47) | 9.17 (2.74) |
| LIPS-R AS | Scaled score | 9.90 (3.00) | 9.56 (2.81) | 8.91 (2.92) |
| Go/No-Go | False alarm rate ᵃ | 0.34 (0.17) | 0.38 (0.17) | 0.42 (0.17) |
| Corsi (forward) | Total score ᵃ | 34.56 (13.86) | 37.07 (13.8) | 32.89 (13.87) |

Note. CI = cochlear implant; LIPS-R AS = Leiter International Performance Scale–Revised Attention-Sustained.

ᵃ Age-adjusted means from analysis of covariance.

Tower

Data from the Tower task are available from 91 of 106 participants. The 15 missing data points are due to one of two reasons: either the child or the experimenter terminated the task before the child reached the stopping rule (n = 5) or the experimenter made a mistake in administration or scoring (n = 10). The majority of these administration mistakes occurred because trials in which the child fidgeted with the balls were initially not counted as “moves”; however, because there were no reliable criteria for discriminating “fidgets” from “moves,” any instance of the child touching a ball was ultimately counted as a move. All trials were then rescored under this consistent standard. Importantly, test administration instructions specify that if a child makes an error on Items 3 or 4, then Items 1 and 2 should be administered. Unfortunately, Items 1 and 2 were never administered if the experimenter had initially counted Items 3 and 4 as correct; in such cases, the child's score is uncertain and was therefore excluded from analysis. These mistakes affected hearing controls and Deaf native signers about equally (four and five instances, respectively), but no oral CI users were affected, as the protocol had been corrected by the time we began collecting data from CI users. All five instances where testing stopped prematurely affected the Deaf native signers, which suggests that the results reported below may underestimate their true competence.

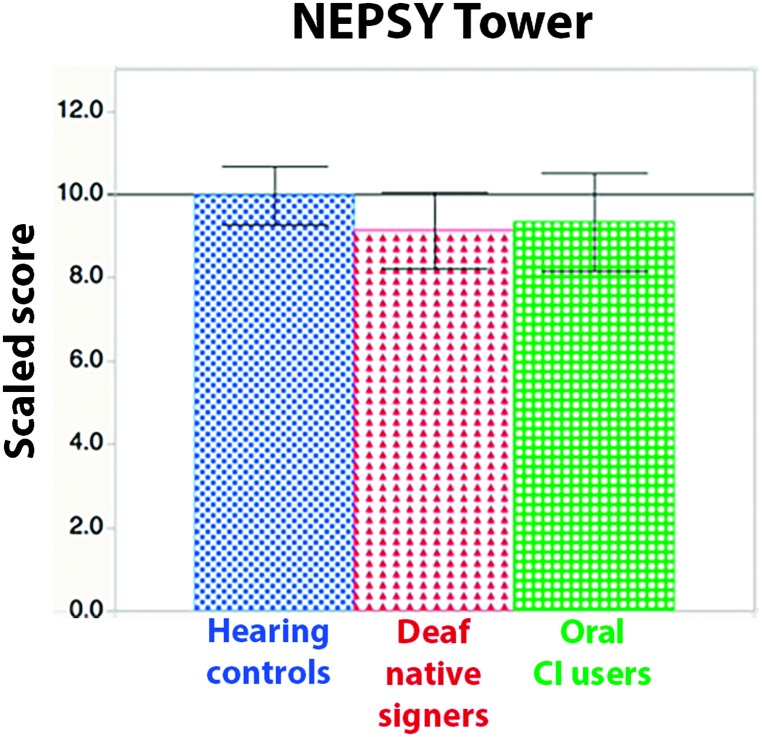

Means. A one-way ANOVA revealed no significant differences in the mean scores of any group, F(2, 88) = 1.11, p = .34, ηp² = .02 (see Figure 2).

Figure 2.

Scaled scores by group on the NEPSY Tower subtest. Error bars indicate 95% confidence intervals. CI = cochlear implant.

Relative risk. We computed the relative likelihood of scores falling into the below-average range, defined as 1 SD or more below the mean. Deaf native signers were not at significantly greater risk than hearing controls (risk ratio = 1.31, 95% confidence interval [0.42, 4.1]). Relative risk was higher for oral CI users relative to hearing controls but was not statistically significant (risk ratio = 2.53, 95% confidence interval [0.94, 6.8]).

Attention-Sustained

Data from the LIPS-R Attention-Sustained subtest are available from 97 of 106 participants. The nine missing data points are due to experimenter error (n = 9). Seven of these nine cases were tested using a timer that was later discovered to have been set incorrectly; all nine were in the hearing control group.

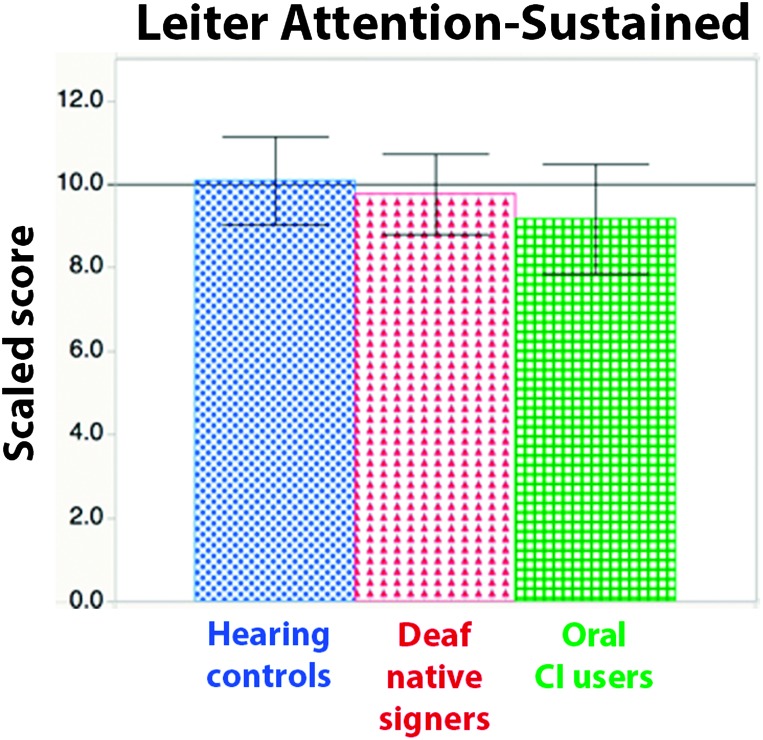

Means. A one-way ANOVA revealed no significant differences in the mean scores of any group, F(2, 99) = 0.68, p = .51, ηp² = .01 (see Figure 3).

Figure 3.

Scaled scores by group on the Leiter International Performance Scale–Revised Attention-Sustained subtest. Error bars indicate 95% confidence intervals. CI = cochlear implant.

Relative risk. Again, Deaf native signers were not at significantly greater risk than hearing controls (risk ratio = 1.38, 95% confidence interval [0.55, 3.5]). Risk was slightly higher in oral CI users but also not significant (risk ratio = 1.7, 95% confidence interval [0.65, 4.4]).

Go/No-Go

Data from the Go/No-Go task were available for 104 of 106 participants. The remaining two missing data points reflect technical problems: one participant's data file was corrupted, and one was overwritten.

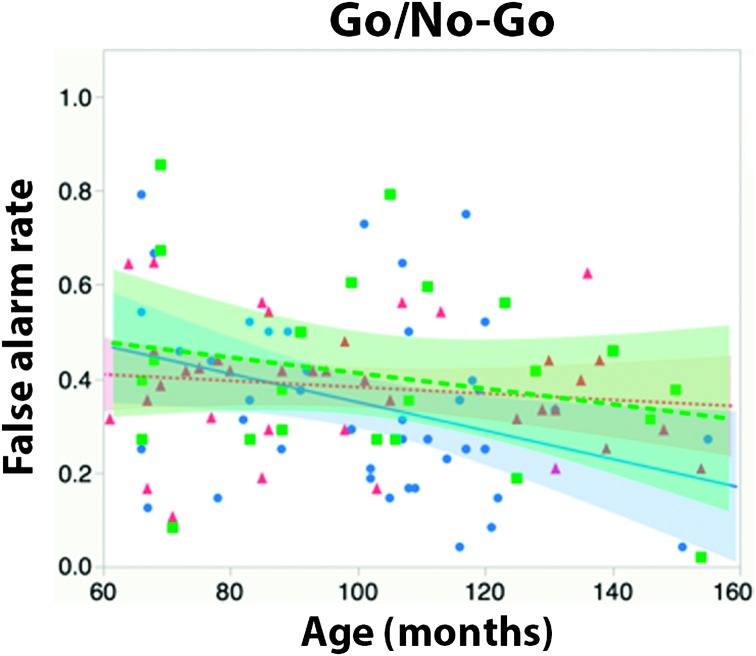

Means. Because the Go/No-Go task does not have published age-based norms, we conducted an analysis of covariance (ANCOVA) with age (in months) as a continuous covariate, after verifying that the effect of age did not differ between the groups (see Figure 4). There was no main effect of group, F(2, 100) = 1.08, p = .34, ηp² = .02. There was a significant main effect of age, F(1, 100) = 6.05, p < .02, ηp² = .05; false alarms decreased with age, reflecting better inhibitory control.

Figure 4.

False alarm rates for individual participants by age and group. Shaded regions represent 95% confidence of fit. CI = cochlear implant.

Relative risk. Again, because of the absence of age-based norms for this task, we first estimated the relationship between false alarm rates and age by calculating the best-fit line for the hearing control group. Next, we calculated each participant's signed residual from that best-fit line as an indication of how far their performance was from that of an average hearing participant of the same age. We then z-transformed all of these residuals with respect to the mean and standard deviation of the distribution of residuals in the hearing controls only. Thus, the average z score in the hearing controls was fixed at zero, but the z scores of participants in the other two groups were free to vary. Finally, we calculated the relative risk of scoring above the average range by tallying the proportion of participants in each group whose z scores were 1 or higher (higher false alarm rates reflect poorer inhibitory control).
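The residual-based procedure described above can be sketched as follows. The sketch assumes an ordinary least-squares line and the sample standard deviation (n − 1 denominator), neither of which is specified in the text; all names and data values are hypothetical.

```python
from statistics import mean, stdev


def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def residual_z_scores(reference, others):
    """z scores of age-adjusted residuals, scaled to the reference group.

    reference and others are lists of (age_in_months, score) pairs.
    """
    slope, intercept = fit_line([a for a, _ in reference], [s for _, s in reference])

    def residual(age, score):
        return score - (intercept + slope * age)

    ref_residuals = [residual(a, s) for a, s in reference]
    mu, sd = mean(ref_residuals), stdev(ref_residuals)

    def to_z(pairs):
        return [(residual(a, s) - mu) / sd for a, s in pairs]

    return to_z(reference), to_z(others)


def proportion_at_risk(z_scores, cutoff=1.0):
    """Share of participants whose z score is at or above the cutoff."""
    return sum(z >= cutoff for z in z_scores) / len(z_scores)


# Hypothetical (age in months, false alarm rate) pairs
hearing = [(60, 0.50), (72, 0.45), (84, 0.35), (96, 0.40), (108, 0.25), (120, 0.30)]
comparison = [(66, 0.70), (90, 0.38), (114, 0.60)]
hearing_z, comparison_z = residual_z_scores(hearing, comparison)
```

The ratio of `proportion_at_risk` values for two groups then gives the relative risk. For measures where lower scores indicate poorer performance, the cutoff would instead be applied to z scores of −1 or lower.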

Results revealed a by-now familiar pattern: Deaf native signers were not at significantly increased risk relative to hearing participants (risk ratio = 1.32, 95% confidence interval [0.49, 3.6]). Oral CI users were at greater risk, but not significantly so (risk ratio = 2.18, 95% confidence interval [0.83, 5.7]).

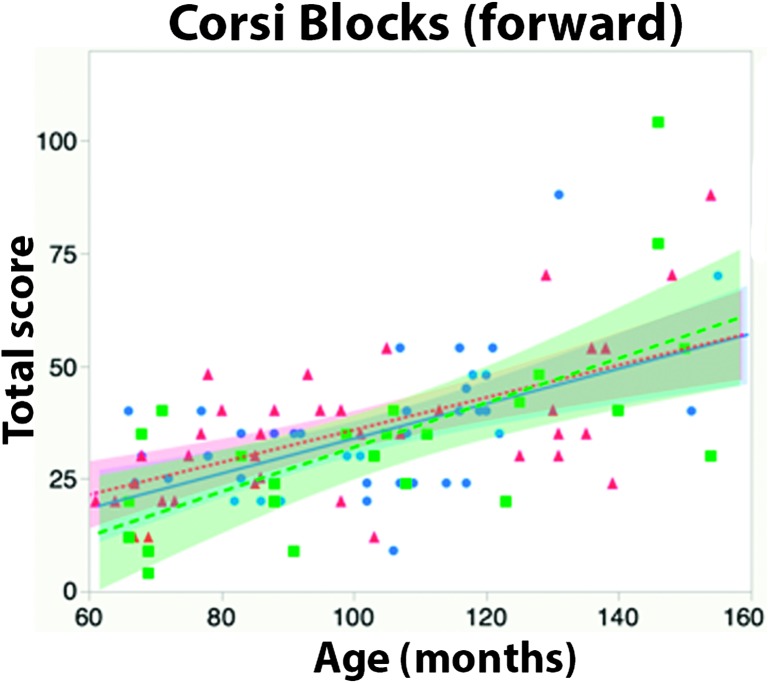

Corsi Blocks

Data from the Corsi block task were available for all 106 participants.

Means. Like the Go/No-Go task, the Corsi block task also lacks published age-based norms. We therefore conducted an ANCOVA with age (in months) as a continuous covariate, after verifying that the effect of age did not differ between the groups (see Figure 5). As expected, the ANCOVA revealed no main effect of group, F(2, 102) = 0.51, p = .60, ηp² = .01. A significant main effect of age, F(1, 102) = 61.12, p < .001, ηp² = .35, indicated that memory performance improved with age.

Figure 5.

Individual total score on the Corsi block task (forward), by age and group. Shaded regions represent 95% confidence of fit. CI = cochlear implant.

Relative risk. Again, because of the absence of age-based norms, we transformed participants' raw scores to z scores as described above. As expected, neither group of deaf participants was at significantly greater risk of scoring in the below average range on this task relative to hearing controls (i.e., z score of less than −1). Deaf native signers were at numerically less risk than hearing children (risk ratio = 0.83, 95% confidence interval [0.31, 2.2]); oral CI users remained at numerically greater risk (risk ratio = 1.34, 95% confidence interval [0.53, 3.4]).

Performance-Based EF Composite

Finally, to obtain an overall estimate of the participants' performance across the three behavioral tasks that require EF, we transformed the scaled scores from the NEPSY Tower and the LIPS-R Attention-Sustained tasks into z scores, so that they were on the same scale as the false alarm scores for the Go/No-Go task. We also multiplied the false alarm z scores by −1 so that lower values always indicated worse performance. We then computed a composite score in two ways, which differ only in their treatment of missing values.

The first approach was to simply average the z scores across tasks. Here, missing values were simply ignored in both the numerator and denominator. This has the advantage of not requiring any imputation of missing values but accordingly places disproportionately larger weight on the tasks from which a given participant yielded data; thus, unusually good or poor performance on a single task can bias the composite score under this approach.
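A minimal sketch of this first approach follows. It assumes that scaled scores have a normative mean of 10 and standard deviation of 3, which is conventional for such subtests but is not stated in the text; the function names and example values are hypothetical.

```python
def scaled_to_z(scaled_score, norm_mean=10.0, norm_sd=3.0):
    """Convert a scaled score to a z score (assumed norms: mean 10, SD 3)."""
    return (scaled_score - norm_mean) / norm_sd


def composite_available(z_scores):
    """Average the z scores that exist; None marks a missing task."""
    present = [z for z in z_scores if z is not None]
    if not present:
        return None
    return sum(present) / len(present)


# Hypothetical child: Tower scaled score of 13, Attention-Sustained missing,
# Go/No-Go false alarm z score of 0.5 (sign flipped so lower means worse).
tower_z = scaled_to_z(13)
attention_z = None
go_no_go_z = -1 * 0.5
composite = composite_available([tower_z, attention_z, go_no_go_z])
```

Here the composite is the mean of the two available values, 1.0 and −0.5, which is 0.25; the missing task contributes to neither the numerator nor the denominator.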

The second approach was to impute missing or excluded data for each task by calculating the best-fit line that related the child's age (in months) to raw scores for the group to which the participant belonged. This has the advantage of allowing all tasks to contribute equally to the composite score but introduces uncertainty through the imputation of values for missing or excluded data. We mitigated the extent of this uncertainty by checking the imputed values against the participants' observed scores, where available. For example, consider a 9-year-old hearing child whose data from the Tower test were excluded because testing stopped before the child reached the official ceiling criterion. The predicted raw score for a 9-year-old hearing child would be 12; however, the available data might show that this child achieved a raw score of at least 15 before stopping. This participant's true score could have been higher than 15 had testing continued, but it certainly could not be lower. We can therefore improve on the imputed estimate of 12 by using this child's observed score of 15. These imputed scores are first converted into scaled scores (if available) and then z-transformed as described above and averaged to yield the second composite score.
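The adjustment in the worked example amounts to treating any partial observed score as a lower bound on the imputed value. The sketch below illustrates that logic only; the function names and the slope and intercept in the usage note are hypothetical, and the group-specific regression itself is assumed to have been fit separately.

```python
def predict_from_age(slope, intercept, age_months):
    """Predicted raw score from a group-specific best-fit line."""
    return intercept + slope * age_months


def impute_raw_score(predicted, observed_floor=None):
    """Use the prediction unless a partial observed score exceeds it."""
    if observed_floor is None:
        return predicted
    return max(predicted, observed_floor)


# Example from the text: predicted raw score of 12, but the child had
# already reached a raw score of 15 before testing stopped.
imputed = impute_raw_score(12, observed_floor=15)
```

In the example from the text this yields 15 rather than 12. A prediction would come from a call such as `predict_from_age(0.05, 6.6, 108)`, where the coefficients are placeholders rather than fitted values.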

Means. We conducted separate one-way ANOVAs for the two methods of computing the composite score: Neither one yielded a significant main effect of group (missing values retained, F(2, 103) = 1.64, p = .2, ηp² = .03; missing values imputed, F(2, 103) = 1.87, p = .16, ηp² = .04).

Relative risk. We also calculated the relative risk of scoring below the average range (i.e., z score ≤ −1), shown in Table 6. As with several previous analyses, relative risk did not differ significantly between groups, although risk ratios were numerically largest among the oral CI users.

Table 6.

Relative risk ratios of scoring 1 or more standard deviations from the mean in the direction of undesired outcomes.

| Task | Deaf native signers/hearing controls | Oral CI users/hearing controls | Oral CI users/deaf native signers |
|---|---|---|---|
| NEPSY Tower | 1.31 [0.42, 4.1] | 2.53 [0.94, 6.84] | 1.93 [0.73, 5.14] |
| LIPS-R AS | 1.38 [0.55, 3.49] | 1.7 [0.65, 4.44] | 1.23 [0.53, 2.87] |
| Go/No-Go | 1.32 [0.49, 3.59] | 2.18 [0.83, 5.73] | 1.65 [0.66, 4.11] |
| Corsi blocks | 0.83 [0.31, 2.17] | 1.34 [0.53, 3.42] | 1.63 [0.59, 4.47] |
| EF Composite 1* | 1.38 [0.4, 4.77] | 2.24 [0.66, 7.56] | 1.63 [0.52, 5.03] |
| EF Composite 2* | 0.74 [0.13, 4.17] | 2.99 [0.78, 11.42] | 4.06 [0.85, 19.31] |

Note. No differences reached significance (all 95% confidence intervals include 1); however, risk was numerically highest in oral CI users in all cases. *EF Composite 1 treats missing values as absent. EF Composite 2 imputes missing values as described in the main text. CI = cochlear implant; LIPS-R AS = Leiter International Performance Scale–Revised Attention-Sustained; EF = executive function.

With missing values retained, the risk ratio for native signers relative to hearing controls was 1.38 (95% confidence interval [0.40, 4.8]), whereas the risk ratio for oral CI users relative to hearing controls was 2.24 (95% confidence interval [0.66, 7.6]). Comparing oral CI users to Deaf native signers resulted in a risk ratio of 1.63 (95% confidence interval [0.52, 5.0]).

With missing values imputed, the risk ratio for Deaf native signers relative to hearing controls was 0.74 (95% confidence interval [0.13, 4.2]), reflecting less risk among the native signers than the hearing controls (though not significantly so). The risk ratio for oral CI users relative to hearing controls was 2.99 (95% confidence interval [0.78, 11.4]). Finally, comparing oral CI users to Deaf native signers resulted in a risk ratio of 4.06 (95% confidence interval [0.85, 19.3]).

Discussion

The hypothesis that early auditory deprivation disrupts higher-order neurocognitive functioning makes two clear predictions: The Deaf native signers (who were born and have remained profoundly deaf) should perform worse than the hearing controls on assessments of EF. In addition, the Deaf native signers (who have been deaf for an average of 8 years) should also perform worse than the oral CI users, whose deafness lasted an average of 20 months, after which they gained access to sound through cochlear implantation. The present findings do not provide strong support for either prediction: Deaf native signers showed no evidence of problems in EF compared to norms from typically developing children, although there were statistically significant differences relative to the hearing controls on two BRIEF subscales (Inhibit and Working Memory). Deaf native signers also performed no worse than oral CI users; indeed, many of their parent-reported EF skills were significantly better. Means from the performance-based tasks trended in the same direction. Taken together, these results strongly suggest that deafness itself does not meaningfully disrupt EF and that therefore some other factor—perhaps early access to language—has a stronger impact. We expand on each set of findings below.

Scant Evidence of EF Problems Among Deaf Native Signers

In a previous study, Hall et al. (2017a) found little evidence of EF difficulties as measured by the BRIEF questionnaire (Gioia et al., 2000). The Deaf native signers did not differ from test norms either in their mean score or in the rates of elevated or clinically significant scores. In the two instances where they differed from hearing controls (the Inhibit and Working Memory subscales), the differences were driven by unexpectedly good scores among hearing participants who were sampled from the community surrounding a major research university and may therefore not represent the population of hearing children in general. Although there were no between-group differences in socioeconomic status as measured by the primary caregiver's highest level of education (all participant groups were raised by highly educated parents), it remains possible that more subtle differences in socioeconomic status or related factors led to the unusually good scores among hearing participants on these scales. It is also possible that the population of people who choose to participate in research studies does in fact have lower than normal scores on the BRIEF, such that Deaf native signers ought to have scored just as low as hearing participants. We cannot exclude this possibility but note that lack of auditory access is not a viable explanation for the difference, because the oral CI users scored higher.

Differences on the Inhibit and Working Memory subscales were still observed in this study, although—as in the previous study—they are only significant when analyzing elevated scores; there are no differences between Deaf native signers and hearing children in the rates of clinically significant scores. Still, both inhibitory control (e.g., Harris, 1978; Parasnis, Samar, & Berent, 2003; Quittner et al., 1994) and working memory (Burkholder & Pisoni, 2006; Pisoni & Cleary, 2003) have previously been identified as potential areas of risk for deaf and hard-of-hearing children who are not native signers. On the other hand, other studies have found that neither inhibitory control (Meristo & Hjelmquist, 2009) nor working memory (Bavelier, Newport, Hall, Supalla, & Boutla, 2008; Boutla, Supalla, Newport, & Bavelier, 2004; Hall & Bavelier, 2010; Marshall et al., 2015) is at risk in Deaf native signers. The present results attest to the complexity and potential task specificity of these findings and underscore the need for further research. In particular, it may be fruitful to document the developmental trajectory of these skills and to evaluate whether task selection plays an outsized role in determining when significant differences are or are not found.

The results of Hall et al. (2017a) are limited in that several of the Deaf native signers had access to sound via CIs, the central finding hinged on interpreting a null effect, and the evidence was entirely based on a parent report measure. This study is not vulnerable to the same criticisms, as explained below.

Access to sound via CIs cannot explain the good performance of Deaf native signers, because the handful who used CIs were excluded from the analyses reported here. Although six Deaf native signers reported using hearing aids at least “sometimes,” they also reported hearing levels that indicated minimal auditory access. In addition, self-reported use of hearing technology (on an ordinal scale of 1–7) did not significantly predict outcomes in either parent report measures (BRIEF Global Executive Composite: ρ = .04, p = .82) or performance-based measures (EF Composite: ρ = .02, p = .91).

In the parent report data, though not the performance-based measures, the oral CI users were at significantly increased risk of having disturbances in EF, relative to test norms, hearing controls, and Deaf native signers. The fact that the oral CI users differed from the hearing children on 10 of 11 BRIEF subscales provides helpful context for interpreting the lack of differences between the larger sample of Deaf native signers and hearing children on nine of the 11 scales. Given that differences were detected in the smallest sample of participants, the nondifferences are unlikely to be Type II error.

Neither can the present findings be attributed to parental bias; the Deaf native signers demonstrated age-appropriate performance on all of the performance-based measures and did not differ significantly from hearing children, either in mean score or in relative risk of having below-average scores.

Demonstrating nondifferences in cognitive development between Deaf native signers and hearing children is not novel; however, previous studies of cognitive development in deaf children have not included both Deaf native signers and oral CI users in sufficient numbers to allow meaningful comparison between the groups. Doing so is difficult, because the two populations can be challenging for a single research team to enroll. Researchers with clinical backgrounds may have relatively easy access to CI populations but may lack the ASL skills necessary to recruit and enroll Deaf native signers. Researchers with fluency in ASL, meanwhile, often lack access to children whose families have chosen to pursue listening and spoken language. In addition, qualified participants are geographically diffuse and may require sampling from multiple regions. These barriers have historically hindered progress; to move forward, we call for more collaborations among researchers who have complementary skills and networks.

Despite these practical challenges, including Deaf native signers and oral CI users within the same study is crucial because older findings might be discounted as not relevant to current generations of deaf children, especially given recent advances in early hearing detection and intervention. We recognize, of course, that Deaf native signers are an exceptional population and are not representative of congenitally deaf children in general. But it is precisely because of their exceptional status that the inclusion of Deaf native signers makes it possible to distinguish between theoretical accounts that would otherwise be confounded (i.e., auditory access and language access). The present results contribute to a substantial body of evidence documenting that auditory access is not necessary for healthy cognitive development, provided that children have access to a natural language that they can perceive. This view is further corroborated by an independent body of evidence that documents difficulties in EF among children whose language is impaired, but whose hearing is intact, as in specific language impairment (e.g., Henry, Messer, & Nash, 2012; Hughes, Turkstra, & Wulfeck, 2009; Marton, 2008) and autism (Landa & Goldberg, 2005; McEvoy, Rogers, & Pennington, 1993; Ozonoff, Pennington, & Rogers, 1991).

To our knowledge, the only evidence that deafness itself impacts higher-order cognitive processes comes from studies of the distribution of visual attention, which find that Deaf native signers respond differently than hearing native signers to targets presented in the visual periphery (Bosworth & Dobkins, 2002; Proksch & Bavelier, 2006). Even here, there is debate about whether these effects are due to top-down influences (indicating a role for EF) or whether they can be explained by purely bottom-up processes (thus not involving EF). In summary, there is little if any evidence demonstrating that deafness itself disrupts EF that could not also be attributed to delayed access to or incomplete mastery of language.

Mixed Evidence of EF Problems Among Oral CI Users

The present findings revealed a different pattern for oral CI users: The parent report findings indicated significantly increased incidence of behavioral problems related to EF, whereas the performance-based tasks did not. Our findings of higher mean scores and greater relative risk in CI users compared to hearing participants and test norms are consistent with at least four previous studies that all used the BRIEF to document EF in deaf children who also experienced a period without full access to language (Beer et al., 2011; Hintermair, 2013; Kronenberger, Beer, et al., 2014; Oberg & Lukomski, 2011).
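For readers less familiar with relative risk, the following is a minimal sketch of how a risk ratio and an approximate 95% confidence interval (log method) can be computed from counts of elevated scores in two groups. The group sizes match the oral CI and hearing samples, but the event counts are hypothetical placeholders, and the interval method is an assumption for illustration rather than the procedure used in this study.

```python
import math


def risk_ratio(events_a, n_a, events_b, n_b, z=1.96):
    """Risk ratio of group A relative to group B with a log-method interval.

    Assumes at least one event in each group; otherwise the ratio or its
    standard error is undefined.
    """
    risk_a = events_a / n_a
    risk_b = events_b / n_b
    rr = risk_a / risk_b
    # Standard error of log(RR) for two independent proportions.
    se_log = math.sqrt(1 / events_a - 1 / n_a + 1 / events_b - 1 / n_b)
    lower = math.exp(math.log(rr) - z * se_log)
    upper = math.exp(math.log(rr) + z * se_log)
    return rr, lower, upper


# Hypothetical counts of elevated scores (not the study's data):
# 8 of 26 children in one group versus 4 of 45 in the other.
rr, lower, upper = risk_ratio(8, 26, 4, 45)
```

An interval whose lower bound exceeds 1 would indicate a significantly increased risk in the first group at the corresponding alpha level.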

Previous studies have also reported that such deaf children have performed worse than hearing controls or test norms on performance-based assessments of EF (e.g., Figueras et al., 2008; Khan et al., 2005; Kronenberger et al., 2013; Luckner & McNeill, 1994). In the context of these previous findings and the parent report results obtained here, it is somewhat surprising that we did not find any significant differences between the oral CI users and either hearing controls or Deaf native signers on any of these performance-based tasks. Several interpretations of this pattern are possible.

One possibility is that the lack of significant differences on the performance-based measures in this study is an instance of Type II error, especially given the smaller sample of oral CI users and the consistent numerical trends in both the means and risk ratios. Another interpretation is that the children in the present sample have benefited from recent advances in early hearing detection and intervention, which are now offering this generation of deaf children greater protection against disturbances to EF relative to the children who participated in previous studies. A third (related) possibility is that exposure to a particular type of language is a weaker predictor of EF than proficiency in that language. The design of the present experiment categorizes children according to their language exposure, which may be a reliable proxy for proficiency in Deaf native signers, but less so for oral CI users, for whom spoken language outcomes are notoriously variable (e.g., Bouchard, Ouellet, & Cohen, 2009; Ganek, Robbins, & Niparko, 2012; Geers, Nicholas, Tobey, & Davidson, 2016; Kral et al., 2016; Niparko et al., 2010; Peterson, Pisoni, & Miyamoto, 2010; Szagun & Schramm, 2016). It is possible that the participants in our sample of oral CI users were experiencing greater success in spoken language acquisition than the CI users reported in previous studies; however, because we did not directly assess the participants' language proficiency, we cannot be certain that this was the case. Future research documenting the relationship between deaf children's language proficiency (spoken or signed) and EF is warranted.

Another interpretation of the discrepancy between the parent report and the performance-based results is that the measures are tapping different underlying constructs. Although the BRIEF has been shown to have predictive validity for diagnosing attention-deficit/hyperactivity disorder, its relationship to traditional psychological tasks involving EF has remained inconsistent. The consensus in the literature seems to be that there is no strong correlation between results on the BRIEF and results of performance-based assessment (e.g., Mahone et al., 2002; Payne, Hyman, Shores, & North, 2011; Toplak et al., 2008; Toplak, West, & Stanovich, 2013; Vriezen & Pigott, 2002). A likely possibility is that when children participate in research tasks, often in a novel environment and in the presence of an unfamiliar adult, their behavior during a 40-min testing session may not be representative of their behavior in ordinary life.

This study is not designed to distinguish among these various interpretations of why participants in the oral CI group were found to have difficulties in EF by parent report but not by performance-based assessment; this remains a question for future research. The main contribution of this study is that the pattern of data we observed appears to be inconsistent with the hypothesis that deficits in oral CI users are caused by auditory deprivation. If that hypothesis were correct, the Deaf native signers should have scored worse than the oral CI users and worse than the norms; neither of those occurred. The only evidence that auditory deprivation could impact EF comes from the finding that Deaf native signers scored worse than hearing controls on the Inhibit and Working Memory subscales. As discussed above, this finding is difficult to interpret, given that the Deaf native signers had thoroughly average scores (Inhibit: 50.3; Working Memory: 49.98), whereas the hearing controls had markedly better than average scores (Inhibit: 47.32; Working Memory: 46.24). We argue that such evidence is too weak to justify the conclusion that auditory deprivation has an adverse impact on the development of EF. Instead, the data are fully consistent with the theory that early access to language, signed or spoken, is crucial for the development of healthy EF.

Possible Impact of Bilingualism?

It has been reported that children who acquire more than one grammar show advantages in EF tasks, particularly those related to cognitive control (Bialystok & Viswanathan, 2009). Although these findings remain controversial (Duñabeitia et al., 2014; Paap & Greenberg, 2013), they raise an intriguing possibility: Perhaps deafness and bilingualism both impact EF but in opposite directions, such that their effects are canceled out in Deaf native signers (who typically master the grammars of ASL and of English by school age). One reason to be skeptical of this interpretation is that there is not yet clear evidence that bimodal bilingualism (i.e., knowing the grammar of a sign language and of a spoken language) confers the same advantages to EF as unimodal bilingualism (i.e., knowing the grammars of two sign languages or two spoken languages). For instance, hearing adults who are bimodal bilinguals do not show advantages in executive control tasks over monolinguals, whereas unimodal bilinguals do (Emmorey, Luk, Pyers, & Bialystok, 2008). Deaf native signers are also unlike most other child bilinguals in that their mastery of L2 (English) comes primarily through its written form and continues to develop throughout the elementary years. The extent of their bilingualism in early childhood thus remains debatable. Clearer evidence would come from examining deaf children who are acquiring more than one sign language; this study included two such participants, but this is too small a sample to provide meaningful insight. We therefore leave these issues to future research.

Conclusions

Distinguishing the impact of auditory access from that of language access is challenging but important, both theoretically and clinically. For example, the mechanisms by which such effects would be produced are entirely different under a theory based on auditory access than under a theory based on language access. The difference in clinical implications is equally stark. If auditory access is necessary to develop healthy EF, then, given that EF skills in childhood predict not only school readiness but a host of other outcomes across the life span (Blair & Razza, 2007; McClelland et al., 2007; Mischel et al., 1989; Moffitt et al., 2011), there would be grounds to argue that all deaf children need access to sound in early childhood. Under this view, exposure to sign language is not predicted to be helpful. On the other hand, if access to language is necessary to develop healthy EF, then all deaf children need access to language as early as possible. Crucially, under this latter view, early access to a natural sign language (e.g., ASL) is predicted to be beneficial for the child's overall development. (The specific parameters of amount and quality of ASL input that would be necessary to yield benefits remain unknown; this is a critical gap that must be addressed in future research.)

This study joins a substantial body of previous evidence arguing that language access is more critical than auditory access for the cognitive development of deaf children. Although auditory access can serve as one means of obtaining language access, it is not the only means, nor is it necessarily the most effective. Unfortunately, the relative efficacy of different types of language exposure remains poorly characterized (Fitzpatrick et al., 2016): Most extant studies have assessed proficiency in only one specific language, rather than assessing whether or not the child has developed age-appropriate mastery of at least one natural language.

A recent study illustrates the importance of adopting a global language framework (i.e., a framework in which mastery of at least one natural language is the primary goal, rather than mastery of one specific language). Geers et al. (2017) found that English language proficiency was highest among deaf children who used English only and declined with increasing use of manual communication. However, 49% of participants in the English-only group failed to achieve age-appropriate proficiency by early elementary school, a rate 3.06 times the 16% expected under a normal distribution. Although these rates were even higher among children who used manual communication, proficiency in ASL was never assessed; it is possible that these children were in fact proficient in ASL, and therefore, we would predict them to have healthy EF. It is also possible that these children were not proficient in any language (spoken or signed), in which case we would predict them to be at risk for difficulties in EF. Answering these questions more definitively will require shifting from an approach that emphasizes proficiency in one specific language to assessing whether a child demonstrates age-appropriate skills in at least one natural language.
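The arithmetic behind the comparison with the normal distribution can be sketched as follows. The sketch assumes that the 16% figure corresponds to scoring more than 1 SD below the normative mean; that cutoff is an assumption made here for illustration, and the variable names are hypothetical.

```python
from statistics import NormalDist

# Proportion of a standard normal distribution falling more than 1 SD below
# the mean (assumed cutoff for "below age-appropriate" proficiency).
expected_below_cutoff = NormalDist().cdf(-1.0)  # about 0.159, commonly rounded to 16%

# Figures quoted in the text.
observed_rate = 0.49          # English-only group not reaching age-appropriate proficiency
expected_rate_rounded = 0.16  # rounded normative expectation

# Ratio of the observed rate to the rounded expected rate.
rate_ratio = observed_rate / expected_rate_rounded  # 3.0625, reported as 3.06
```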