Abstract

Hearing is often viewed as a passive process: Sound enters the ear, triggers a cascade of activity through the auditory system, and culminates in an auditory percept. Contrary to this passive view, motor-related signals strongly modulate the auditory system from the eardrum to the cortex. The motor modulation of auditory activity is best documented during speech and other vocalizations but can also be detected during a wide variety of other sound-generating behaviors. An influential idea is that these motor-related signals suppress neural responses to predictable movement-generated sounds, thereby enhancing sensitivity to environmental sounds during movement while helping to detect errors in learned acoustic behaviors, including speech and musicianship. Findings in humans, monkeys, songbirds, and mice provide new insights into the circuits that convey motor-related signals to the auditory system, while lending support to the idea that these signals function predictively to facilitate hearing and vocal learning.

Keywords: corollary discharge, hearing, forward models, vocal learning, prediction, cancellation, reafference

INTRODUCTION

A major challenge for our brain is to distinguish sensory stimuli that result from our own movements (i.e., reafference or sensory feedback) from sensory stimuli generated by external sources (i.e., exafference) (Marr 1982, Sperry 1950, von Holst & Mittelstaedt 1950). Although our movements can generate auditory, visual, and somatosensory feedback, our ability to vocalize and make a wide variety of other sounds places special demands on our auditory system. How do we maintain sensitivity to fainter exafferent sounds in the face of reafferent sounds that can strongly stimulate the ear? And how do we monitor reafferent sounds to control the motor systems that determine their acoustic patterns, as we must when we learn to speak, sing, or play a musical instrument? This review concerns the neural circuits and mechanisms engaged to solve these interrelated problems.

Motor Modulation of the Auditory System Is Widespread

A striking feature of the auditory system is how much its processing can be modulated by movement. For example, vocalization as well as a variety of other head and neck movements activates the middle ear muscles, dampening auditory responses to self-generated sounds (Carmel & Starr 1964, Mukerji et al. 2010, Salomon & Starr 1963). Other movement-related signals act more centrally, in the auditory brainstem and cortex (Curio et al. 2000, Eliades & Wang 2003, Flinker et al. 2010, Heinks-Maldonado et al. 2005, Houde et al. 2002, Müller-Preuss & Ploog 1981, Schneider et al. 2014, Singla et al. 2017). Although most often studied in the context of vocalization, an emerging picture is that nonvocal movements, ranging from finger movements to walking, modulate auditory processing through central corollary discharge pathways that convey motor-related signals directly to the auditory cortex (Reznik et al. 2014, 2015; Rummell et al. 2016; Schneider et al. 2014; Stenner et al. 2015; van Elk et al. 2014; Weiss et al. 2011; Zatorre 2007).

Roles for Movement-Related Auditory Modulation in Hearing and Auditory-Guided Motor Learning

Given the various behavioral contexts and sites in the brain where motor-related signals modulate auditory processing, it is useful to first ponder what roles such modulation may serve. One clue is that movement most often suppresses auditory responses in the brain. Perhaps auditory cues are simply less reliable during movement, especially in animals with a small interaural distance, such as mice. Rapid head movements, including those induced by locomotion, thus may make computing the location of a sound source particularly challenging, favoring reallocation of neural resources to other sensory modalities (Sheppard et al. 2013). Lending support to this reallocation hypothesis is the finding that, in direct contrast to the suppression observed along the auditory pathway, activity in the visual cortex is enhanced during locomotion (Niell & Stryker 2010). Additionally, movements increase during states of arousal, and arousal engages neuromodulatory pathways that can strongly affect sensory processing (Kuchibhotla et al. 2017, Lee & Dan 2012, McGinley et al. 2015a, Nelson & Mooney 2016). Thus, movement and motor-related modulation of auditory processing could be manifestations of a common underlying change in brain state.

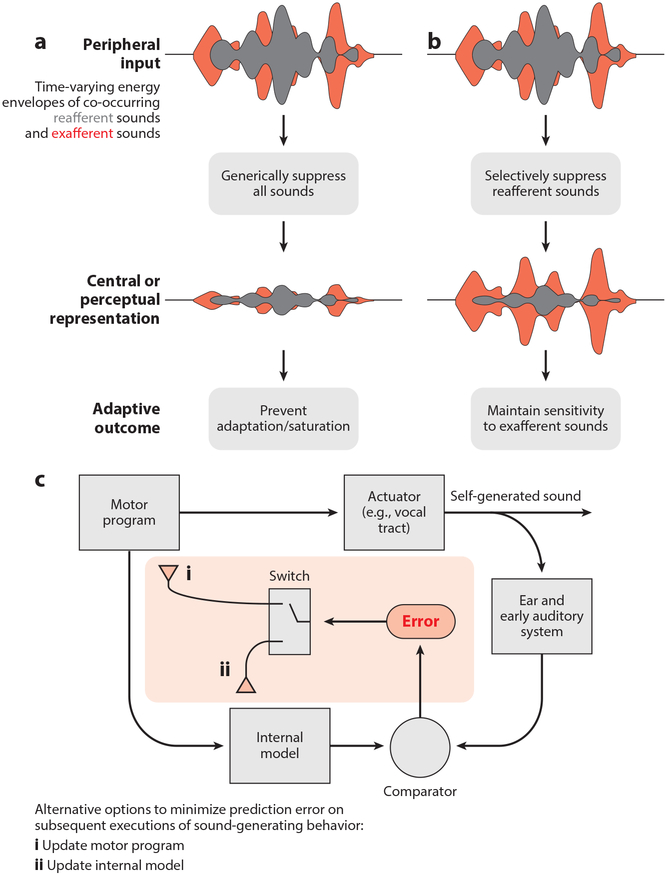

Although changes in brain state are certain to account for important changes in auditory processing, motor-related signals in the auditory system are likely to act in a more directed manner to facilitate hearing. Indeed, the capacity to suppress auditory responses to self-generated sounds can prevent sensory adaptation, allowing the auditory system to maintain sensitivity to exafferent sounds immediately after periods of intense sound generation (Figure 1a). In stridulating crickets and echolocating bats, corollary discharge circuits operate at early stages of the auditory system to cancel out responses to high-intensity self-generated sounds that would otherwise render the animal temporarily unable to detect fainter exafferent sounds following periods of sound generation (Poulet & Hedwig 2002, Suga & Shimozawa 1974).

Figure 1.

Adaptive roles for the modulation of auditory signals during behavior. (a) The auditory system processes environmental (exafferent; red) and self-generated (reafferent; gray) sounds, often simultaneously. Generic suppression of all sounds during the execution of sound-generating behaviors can prevent saturation of the auditory system or adaptation, which can leave the auditory system temporarily insensitive following periods of sound generation. (b) In a more directed manner, the auditory system can selectively suppress the acoustic features of self-generated sounds while leaving neural processing of other sounds intact, allowing the brain (and the animal) to detect and react to important environmental cues, even during the production of self-generated sounds. (c) Suppression of self-generated sounds in the auditory system could reflect an internal model that is important for detecting performance errors. Errors, in turn, can be used in one of two ways to minimize subsequent errors. (c, i) First, errors can be used to update the motor program, thereby updating the sound-generating behavior, such as when learning to play a musical instrument. (c, ii) Second, errors can be used to update the internal model rather than the motor program, such as when learning the new sounds of one’s footsteps after walking from concrete to a leafy surface.

A somewhat more sophisticated function hypothesized for motor modulation of the auditory system is to selectively suppress responses to predictable self-generated sounds (Figure 1b). Such a selective self-cancellation process could help the auditory system respond to unpredicted exafferent sounds, such as the vocalizations of conspecifics or the approaching footfalls of a predator, even in the face of ongoing sound generation. Notably, even small movements that we make, such as tapping our fingers, drawing a breath, speaking, or chewing, reliably generate audible sounds. Nonetheless, the sounds resulting from any single type of movement may be quite varied, such as when we walk over thin ice and then through knee-deep water or switch from plucking the bass to the guitar. Therefore, whereas some aspects of auditory cancellation may be hardwired, cancellation mechanisms that can be shaped by experience are likely to be especially adaptive.

Beyond serving a role in detecting exafferent sounds, the brain’s ability to encode predictable aspects of the movement–sound relationship is essential for monitoring the proper execution of sound-generating behaviors, such as speech. Indeed, predictive forward (i.e., motor to sensory) interactions figure prominently in models of auditory-dependent motor learning, including human speech learning (Guenther & Hickok 2015, Hickok & Poeppel 2007, Tian & Poeppel 2010, Wolpert et al. 1995) (Figure 1c). A central circuit that compares this forward prediction with actual movement-related sensory feedback is a likely source of error signals that ultimately enable us to learn how to speak, sing, or play a musical instrument. Presumably, self-cancellation mechanisms that evolved to facilitate hearing during movement were subsequently exploited to enable the learning of more complex auditory-guided motor skills.
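The logic of a forward model and its two uses for error signals (Figure 1c) can be made concrete with a toy sketch. This is not a model taken from any of the cited studies: the linear relation between motor command and sound feature, the function names, and the learning rate are all assumptions chosen for illustration only.

```python
# Toy forward model. Assumption: a sound feature (e.g., pitch) is a linear
# function of a scalar motor command, feature = gain * command.

def residual_response(feedback, prediction):
    """Signal left over after the predicted reafference is cancelled."""
    return feedback - prediction


def learn_internal_model(command, true_gain, model_gain, rate=0.5, steps=20):
    """Figure 1c, ii: keep the movement fixed and update the prediction.

    Example: footsteps sound different on a new surface, so the
    predicted sound is revised while the gait stays the same.
    """
    for _ in range(steps):
        feedback = true_gain * command        # actual reafferent sound
        prediction = model_gain * command     # forward-model prediction
        error = residual_response(feedback, prediction)
        model_gain += rate * error / command  # move prediction toward feedback
    return model_gain


def learn_motor_program(target, gain, command, rate=0.5, steps=20):
    """Figure 1c, i: keep the target sound fixed and update the movement.

    Example: adjusting finger placement until the note matches the
    intended pitch.
    """
    for _ in range(steps):
        feedback = gain * command
        error = residual_response(feedback, target)
        command -= rate * error / gain        # correct the motor command
    return command
```

In both routines the same mismatch signal drives learning; what differs is whether the prediction or the motor command absorbs the correction.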

A Focus on Motor-Related Signals in the Auditory Cortex of Primates, Mice, and Songbirds

Although motor signals can be detected across the auditory neuraxis, this review focuses largely on the phenomenology, function, and circuit basis of these interactions in the vertebrate auditory cortex. We emphasize the auditory cortex because it receives a wide array of nonauditory afferents, including top-down inputs from frontal cortical regions important to motor planning and bottom-up inputs from neuromodulatory cell groups that facilitate experience-dependent auditory cortical plasticity (Bao et al. 2001, Ford et al. 2001, Froemke et al. 2007, Hackett 2015, Hackett et al. 1999, Kilgard & Merzenich 1998, Morel et al. 1993, Petrides & Pandya 1988). These features, along with the clear role that the auditory cortex plays in speech and musical perception in humans (Gutschalk et al. 2015, Hickok & Poeppel 2007, Peretz et al. 1994, Rauschecker & Scott 2009, Scott & Johnsrude 2003, Stewart et al. 2006, Zatorre 1988, Zatorre et al. 2002), make the auditory cortex an especially attractive site to search for movement-related signals that affect auditory processing and plasticity. Here, we emphasize recent research in three different groups—primates, mice, and songbirds—each of which offers specific insights into the circuit basis and function of motor-related signals that modulate auditory processing.

PRIMATES

Vocalization Modulates the Primate Auditory Cortex

Vocalization is an ancient and near-universal form of social signaling in vertebrates, facilitating social affiliation, conspecific and individual recognition, and courtship. Most mammals and all primates produce a rich repertoire of innate vocalizations, and humans have an added capacity for speech (i.e., spoken language), a culturally transmitted, learned vocal behavior. Indeed, humans rely on speech as their default means of social communication, with an adult uttering on average approximately 15,000 words daily, or well more than one-quarter of a billion words in a lifetime (Mehl et al. 2007). Consequently, the auditory system must be highly efficient at processing vocal sounds and distinguishing self-generated vocalizations from those of others. Although the entire auditory system is essential to these tasks, the major role of the auditory cortex in speech comprehension indicates that this is a primary site where such distinctions are made (Geschwind & Levitsky 1968, Gutschalk et al. 2015, Hickok & Poeppel 2007, Peretz et al. 1994, Scott & Johnsrude 2003, Stewart et al. 2006, Zatorre 1988, Zatorre et al. 2002).

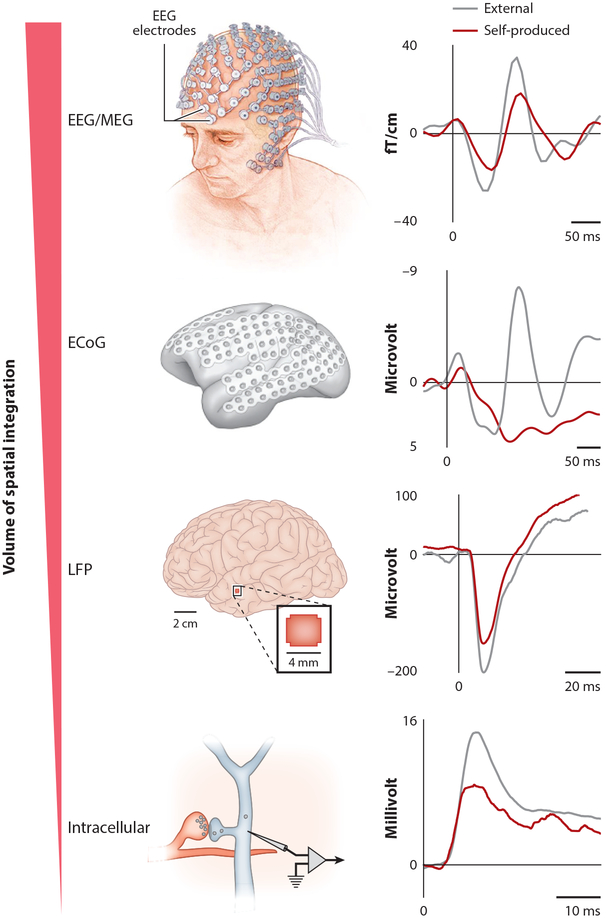

In humans, auditory cortical activity differs markedly depending on whether the subject is speaking or quietly listening to speech playback. Noninvasive neural recordings reveal that vocalization consistently suppresses auditory cortical activity relative to the predominantly excitatory responses elicited by speech playback (Curio et al. 2000, Ford et al. 2001, Houde et al. 2002, Numminen & Curio 1999) (Figure 2). Because these noninvasive techniques measure the mean activity of many thousands or millions of neurons, they cannot distinguish whether vocalization uniformly suppresses auditory cortical neurons or instead exerts more heterogeneous effects. Notably, uniform suppression could indicate a nonspecific filter operating at the auditory periphery, whereas a heterogeneous pattern of cortical suppression could indicate a cortical locus for the modulatory mechanism. In fact, higher-resolution electrical recordings of human brain activity underscore that the patient’s own speech suppresses many auditory cortical neurons relative to speech playback, while revealing a smaller number of sites (or neurons) either equally excited during both conditions or more excited during vocalization (Creutzfeldt et al. 1989, Flinker et al. 2010, Pasley et al. 2012). Similar heterogeneity has been seen in single-neuron recordings from the auditory cortex of freely vocalizing marmosets and of squirrel monkeys vocalizing in response to electrical stimulation in the periaqueductal gray (PAG) (Eliades & Wang 2005, 2008; Müller-Preuss & Ploog 1981). In the marmoset, in which the most systematic recordings have been made, approximately 70% of auditory cortical neurons are suppressed during vocalization relative to their vocal playback-evoked responses, whereas most of the remaining neurons show similar levels of excitation in the two conditions. Moreover, a systematic neuronal receptive field analysis of vocalizing marmosets indicates that the smaller subset of auditory cortical neurons that show vocalization-related excitation is responding largely to acoustic features in the vocalization and thus may be especially well suited for maintaining veridical responses to external sounds during vocal production (Eliades & Wang 2017).
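One way to express such a comparison between vocalization and playback is a per-neuron modulation index. The sketch below is a hypothetical analysis, not the method of the cited studies: the index definition, the classification threshold, and the firing rates in `EXAMPLE_RATES` are invented for illustration, with the rates chosen only so that the proportions echo the approximate figures quoted above.

```python
# Hypothetical analysis sketch: classify neurons by comparing firing rates
# during vocalization with rates evoked by playback of the same sound.

def modulation_index(rate_vocal, rate_playback):
    """Normalized difference in [-1, 1]; negative means suppression."""
    total = rate_vocal + rate_playback
    if total == 0:
        return 0.0
    return (rate_vocal - rate_playback) / total


def classify(index, threshold=0.2):
    """Label a neuron; the threshold is an arbitrary illustrative choice."""
    if index < -threshold:
        return "suppressed"
    if index > threshold:
        return "excited"
    return "similar"


def summarize(rate_pairs, threshold=0.2):
    """Return the fraction of neurons falling in each class."""
    counts = {"suppressed": 0, "similar": 0, "excited": 0}
    for rate_vocal, rate_playback in rate_pairs:
        label = classify(modulation_index(rate_vocal, rate_playback), threshold)
        counts[label] += 1
    n = len(rate_pairs)
    return {label: count / n for label, count in counts.items()} if n else counts


# Invented (vocal, playback) rates in spikes/s for ten neurons; not real data.
EXAMPLE_RATES = [(2, 10), (3, 12), (5, 20), (1, 8), (4, 9),
                 (6, 15), (2, 7), (10, 11), (9, 9), (14, 8)]
```

Calling `summarize(EXAMPLE_RATES)` on these invented rates labels seven of the ten neurons as suppressed, two as similar, and one as excited.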

Figure 2.

Scales of experimentation for monitoring neural activity during sound-generating behaviors. The techniques used to measure neural activity during sound-generating behavior range from noninvasive scalp electrodes, such as those used to measure EEGs, to intracellular recordings from single neurons in the auditory system of freely moving mice and songbirds. Regardless of the scale of measurement, neuronal responses measured within the auditory cortex across many organisms are weaker during the processing of self-generated sounds than during the processing of externally produced sounds. Rightmost panels are modified with permission from authors from the following references: EEG/MEG data, Curio et al. (2000); ECoG data, Flinker et al. (2010); LFP data, Rummell et al. (2016); intracellular data, Schneider et al. (2014). Top left panel adapted from Mayo Clinic. Abbreviations: ECoG, electrocorticogram; LFP, local field potential; MEG, magnetoencephalography.

A related issue is whether all major subdivisions of the human auditory cortex display a similar tendency toward speaker-induced suppression. The auditory cortex of humans as well as other primates can be divided into a core region (primary auditory cortex, or A1), located in the posterior-medial part of Heschl’s gyrus (HG) within the Sylvian fissure, and noncore (belt and parabelt) regions that extend along the lateral face of the superior temporal gyrus (Hackett 2015, Romanski & Averbeck 2009). Notably, surface electrocorticogram arrays sample activity largely in the noncore regions of the cortex (Figure 2). In contrast to recordings made from these noncore regions, depth electrode recordings made from HG show that most sites respond similarly during speech and speech playback (Behroozmand et al. 2016). Thus, the core region of the auditory cortex may function largely to track vocalization-related auditory feedback, whereas noncore regions may integrate these feedback signals with top-down signals related to vocal motor control.

Evidence That Motor Signals Are Predictive

Online manipulations of auditory feedback in freely vocalizing marmosets and humans support the idea that vocal suppression in the auditory cortex functions predictively to cancel out auditory feedback anticipated to result from vocal motor commands. In marmosets, slightly shifting the frequency of vocalization-related auditory feedback strongly excites rather than suppresses auditory cortical single-unit activity and this effect is not attributable to changes in signal intensity (Eliades & Wang 2008). A similar result in humans has been described, in which altering speech-related auditory feedback results in weaker speaker-induced suppression in the auditory cortex. Indeed, with a large enough shift in pitch, speaker-induced suppression of the auditory cortex is nearly completely abolished (Behroozmand & Larson 2011).

Although often imperceptible to a listener, human speech is variable from one utterance to the next. Interestingly, the speaker-induced suppression measured in the human auditory cortex varies across multiple utterances of the same sound such that the more similar a given utterance is to the median utterance, the stronger the suppression observed in the auditory cortex (Niziolek et al. 2013). These data suggest that motor-related signals relating to speech predict the average acoustic features of a word rather than its trial-to-trial variations. Tracking deviations from an average target may make speech resilient to changes in reafferent distortion, such as when we speak in cavernous rooms versus narrow corridors.

Other Movements Also Modulate Auditory Cortical Activity

The link between vocalization and audition is so direct and the contingency so certain that it may seem inescapable that vocal motor-related signals modulate auditory processing. However, a wide variety of other movements also affect auditory processing and perception, indicative of a broader and more generalized motor-to-auditory interaction. For example, in humans, event-related potentials in the auditory cortex (the N100 component in EEG or the M100 component in MEG) are attenuated when tones are generated by the subjects tapping on a key, as opposed to passively listening to the same tone, and the timing of this attenuation precedes tone onset, pointing to a motor source (Reznik et al. 2015, Stenner et al. 2015, Timm et al. 2016, Weiss et al. 2011). Remarkably, a similar suppression has been noted for tone playbacks linked to the subject’s heartbeat, suggesting that visceral signals can also modulate auditory cortical activity (van Elk et al. 2014). In most cases, this attenuation in auditory cortical activity is paralleled by a reduced sensitivity to self-generated sounds, although an enhancement of blood-oxygen-level-dependent functional MRI signals in the auditory cortex, and of auditory sensitivity, has also been reported (Reznik et al. 2014).

A Cortical Circuit for Motor-to-Auditory Interactions in the Primate

Anatomical tracing studies of nonhuman primates have identified projections between frontal cortical regions important for movement and temporal regions involved in audition, including projections between the orbitofrontal and prefrontal cortex and the primary, belt, and parabelt regions of the auditory cortex (Deacon 1992; Petrides & Pandya 1988, 2002; Romanski et al. 1999). Although it is likely that information is transmitted bidirectionally between motor and auditory cortical regions, prior emphasis has been placed on projections from rather than to the auditory cortex. In humans, two massive fiber tracts, the arcuate and the uncinate fasciculi, are thought to enable the bidirectional transmission of information between the auditory and motor cortices during speech and other sound-generating behaviors. In concordance with the idea that these pathways serve partly to convey motor signals to the auditory cortex, coherence in the high gamma range, a correlate of synchronous firing, increases between the frontal and temporal lobes during speech, and speaker-induced suppression in the auditory cortex begins several hundred milliseconds prior to speech onset, close to when speech-related signals peak in Broca’s area (Flinker et al. 2015). In the marmoset auditory cortex, vocal suppression can precede vocal onset by hundreds of milliseconds, consistent with a motor cortical origin (Eliades & Wang 2008).

Various other lines of evidence also support a direct pathway from frontal to auditory cortical regions. For example, lesions of frontal cortex lead to a degeneration of fibers coursing through the arcuate sulcus toward the auditory cortex (Leichnetz & Astruc 1975). Furthermore, micro-stimulation in the frontal cortex of squirrel monkeys suppresses auditory cortical activity, and the onset latency of this suppression is commensurate with a monosynaptic pathway (Alexander et al. 1976). Finally, in humans, transcranial magnetic stimulation applied to the lip motor region of the lateral primary motor cortex can selectively impair the auditory discrimination of voiced consonants (Möttönen & Watkins 2009), which may point to a motor cortical influence on auditory cortical processing of speech sounds. Despite many useful insights into motor-to-auditory interactions from studies of humans and other primates, a full exploration of how the motor cortex influences auditory cortical processing requires studies of organisms more amenable to high-resolution circuit mapping and manipulation.

BRIDGING BEHAVIORAL, CELLULAR, AND SYNAPTIC LEVELS OF ANALYSIS IN MICE

The Mouse Auditory Cortex Is Suppressed During Vocalizations and Other Movements

Like humans and nonhuman primates, mice vocalize during a variety of social encounters, including pup-dam interactions and male-to-female courtship during adulthood (Portfors 2007). Although mouse vocalizations are innate rather than learned (Mahrt et al. 2013), they can be fairly complex, comprising strings of variable syllables uttered in close succession. Moreover, as has been observed in humans, the mouse auditory cortex is modulated by numerous movements, including vocalization. The mouse therefore serves as an important bridge for comparing vocalization-related modulation of the auditory cortex across species and, given its suitability for genetic manipulations, offers an opportunity to dissect the neural circuitry important to the motor-related modulation of auditory cortical activity.

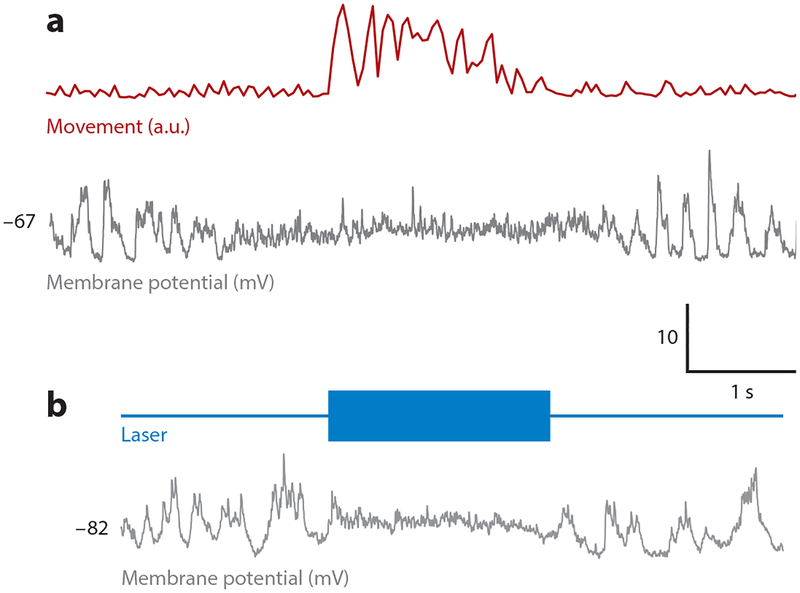

As in humans and other primates, vocalization in the mouse is paralleled by marked suppression of auditory cortical activity. Intracellular recordings of freely courting male mice reveal that auditory cortical pyramidal cells are suppressed during vocalizations, a phenomenon accompanied by a slight decrease in membrane potential and a marked decrease in membrane potential variance (Schneider et al. 2014). Recordings from both unrestrained and head-fixed mice detect similar membrane potential changes and diminished tone-evoked responses accompanying almost any movement, including locomotion, whisking, and grooming (Schneider et al. 2014, Zhou et al. 2014) (Figure 3a). During locomotion, when full tuning curves can be obtained, the magnitude of suppression is similar across all frequencies (Schneider et al. 2014, Zhou et al. 2014). One caveat is that males vocalize as they pursue females; thus, more extensive studies of stationary mice are needed to determine how much suppression can be driven solely by vocalization.

Figure 3.

Motor cortical inputs to the auditory cortex suppress membrane potential responses and behavioral detection thresholds. (a) Intracellular recordings of the membrane potential of auditory cortical excitatory neurons in behaving mice reveal a decreased membrane potential variability during movement (from Schneider et al. 2014). (b) Activating channelrhodopsin (ChR2)-expressing M2 axon terminals in the auditory cortex of resting mice by illumination with blue light recapitulates movement-like membrane potential dynamics in auditory cortical excitatory neurons (from Schneider et al. 2014).

Changes in pupil diameter, a class of visceral movements, closely parallel changes in membrane potential and tone-evoked responsiveness in the auditory cortex (McGinley et al. 2015a,b). Notably, the pupil is highly dilated during locomotion but is at an intermediate diameter when the mouse is resting (McGinley et al. 2015a). Because changes in pupil diameter are thought to reflect changes in the balance between sympathetic and parasympathetic tone, one idea is that ascending neuromodulatory inputs alter the global brain state to affect movement and auditory cortical responsiveness in parallel (Reimer et al. 2014).

Behavioral Correlates of Movement-Related Modulation of Auditory Cortical Activity

The strong suppression of tone-evoked responses in the auditory cortex during movement predicts that the mouse’s ability to detect tones should also vary in a movement-dependent manner. Indeed, mice perform more poorly in tone-conditioned lick assays during running, when the auditory cortex is suppressed (and when the pupil is highly dilated) (McGinley et al. 2015a). These observations lend support to the idea that movement-related suppression of auditory cortical activity results in diminished auditory sensitivity in the mouse. Although in naive mice diminished cortical responsiveness during locomotion is similar across all frequencies, experience with predictable movement-related sounds can shape auditory cortical suppression in a frequency-specific manner. Recent studies yoked the playback of fixed frequency tone bursts to either lever press movements (Rummell et al. 2016) or treadmill running (Schneider & Mooney 2017), creating an experimentally manipulable form of auditory reafference. Intriguingly, after several days of experience in this environment, movement-related suppression in the auditory cortex was enhanced at the reafferent frequency and these cortical changes were accompanied by improved performance in tone detection ability during movement (Sundararajan et al. 2017). Apparently, auditory cortical suppression in the mouse can be adaptively shaped by experience to selectively suppress predictable auditory reafference, as observed in humans and nonhuman primates (Weiss et al. 2011).

Top-Down and Bottom-Up Circuits for Movement-Related Auditory Cortical Modulation

A major goal of studying movement-related auditory cortical modulation in the mouse is to better describe the underlying synaptic and circuit mechanisms. In fact, intracellular recordings combined with optogenetic and viral circuit mapping methods have helped clarify that a substantial fraction (~50%) of movement-related auditory cortical suppression arises locally within the mouse’s auditory cortex (Schneider et al. 2014). A primary means of this suppression is a shunting inhibition driven by local inhibitory interneurons, which are excited during movement and synapse onto pyramidal neurons in the auditory cortex (Nelson et al. 2013, Schneider et al. 2014). Furthermore, optogenetic activation of inhibitory interneurons in immobile, awake mice impairs performance in a tone detection task, similar to the effects of movement (Sundararajan et al. 2017). Finally, following experience with predictable locomotion-driven auditory reafference, movement-related suppression in the auditory thalamus is not altered at the reafferent frequency, suggesting that the predictive component of movement-related suppression arises within the auditory cortex (Schneider & Mooney 2017). These observations emphasize the importance of local inhibitory neurons in driving movement-related auditory cortical suppression and advance these cells as a site where experience can act to modify this cortical filter.

A major question is what excites inhibitory neurons in the auditory cortex during movement. One clue is that the auditory cortices in rodents, similar to those in primates, receive direct input from several frontal cortical regions important to motor planning and execution, including the primary motor cortex (M1), the secondary motor cortex (M2, or medial agranular cortex), and the cingulate cortex (Nelson & Mooney 2016, Nelson et al. 2013). The most detailed anatomical and functional descriptions are of the synaptic pathway from M2 to the auditory cortex in the mouse, which we focus on here. A subset of M2 neurons (i.e., M2ACtx neurons) make axonal projections to all layers of the auditory cortex, where they synapse on both excitatory and inhibitory neurons (Nelson et al. 2013). Notably, M2ACtx neurons are located in both deep and superficial layers of M2, and many of these deep-layer M2ACtx neurons also innervate brainstem motor regions, providing a route by which signals important to movement can also reach the auditory cortex (Nelson et al. 2013).

The extensive projections from M2, including from M2ACtx neurons that also innervate brainstem regions important to movement, to the auditory cortex suggest that the M2-to-ACtx pathway is important for suppressing auditory cortical activity during movement (Nelson et al. 2013). Indeed, the major effect of optogenetically activating M2ACtx axon terminals in the auditory cortex is to drive feedforward inhibition onto excitatory neurons that, in resting mice, is sufficient to suppress auditory cortical responses to tones and elevate tone detection thresholds, closely mimicking the effects of movement (Schneider et al. 2014) (Figure 3b). Interestingly, M2ACtx synapses onto auditory cortical interneurons are strengthened by experience with predictable movement-related auditory feedback, providing a synaptic locus and mechanism whereby the brain can learn to suppress the expected acoustic consequences of sound-generating movements (Schneider & Mooney 2017).
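The idea that experience strengthens a motor-driven inhibitory pathway at the reafferent frequency can be illustrated with a toy rate model. This is a sketch under stated assumptions, not the circuit model of the cited studies: each frequency channel has one abstract inhibitory weight standing in for the M2-to-interneuron-to-pyramidal pathway, the baseline weight of 0.3 is arbitrary, and the learning rule (weights grow in proportion to the response that survives suppression during movement) is hypothetical.

```python
# Toy rate model of experience-dependent, frequency-specific suppression.
# Assumption: one inhibitory weight per frequency channel, active only
# while the animal moves.

def response(drive, weights, moving):
    """Rectified response per channel: sound drive minus motor-gated inhibition."""
    motor = 1.0 if moving else 0.0
    return [max(0.0, d - w * motor) for d, w in zip(drive, weights)]


def tone(n_channels, channel, level=1.0):
    """Sound drive for a pure tone that excites a single frequency channel."""
    drive = [0.0] * n_channels
    drive[channel] = level
    return drive


def train(weights, reafferent_channel, rate=0.2, trials=50):
    """Pair movement with a tone at one frequency and adapt the weights.

    Hypothetical rule: a weight increases while its channel still
    responds during movement, so only the reafferent channel changes.
    """
    weights = list(weights)
    for _ in range(trials):
        drive = tone(len(weights), reafferent_channel)
        residual = response(drive, weights, moving=True)
        for i, r in enumerate(residual):
            weights[i] += rate * r
    return weights


naive = [0.3] * 5                    # uniform suppression across frequencies
trained = train(naive, reafferent_channel=2)
```

After training, a tone in the reafferent channel evokes almost no response during movement, tones in other channels are suppressed only by the baseline amount, and responses at rest are unchanged, which mirrors the qualitative pattern described above.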

Notably, the discrepancy between how locomotion modulates the auditory cortex (suppression) and the visual cortex (enhancement) is far less pronounced when mice experience auditory or visual cues that are predictable consequences of their running movements. In fact, a substantial fraction of both auditory cortical and visual cortical neurons are unresponsive to such predictable self-generated sensory feedback, whereas these same neurons respond more strongly when sensory feedback deviates from expectation (Zmarz & Keller 2016; D.M. Schneider, J. Sundararajan & R. Mooney, manuscript submitted). Moreover, projections from M2 to the visual cortex in the mouse are important for predicting the expected visual consequences of movement, suggesting that M2 may play an important role in generating forward models across different sensory modalities (Leinweber et al. 2017).

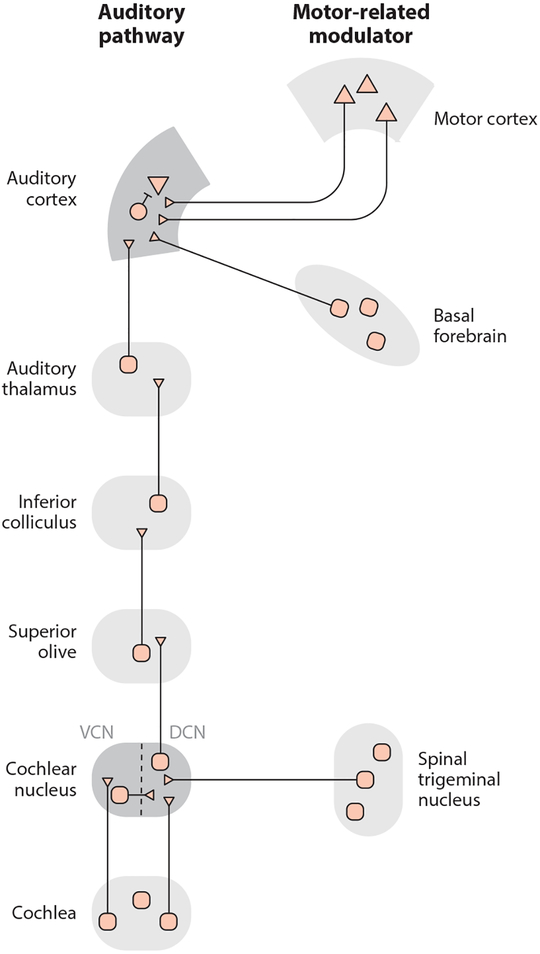

In addition to frontal cortical inputs such as those from M2, the auditory cortex also receives a variety of neuromodulatory inputs, most notably from cholinergic neurons in the basal forebrain (BF) (Lehmann et al. 1980, Nelson & Mooney 2016) (Figure 4). Two-photon calcium imaging of GCaMP+ BF axon terminals in the auditory cortex shows a dramatic increase in cholinergic activity in the auditory cortex during small movements, locomotion, and auditory task engagement, providing a potential mechanism for state-dependent changes in auditory processing (Kuchibhotla et al. 2017, Nelson & Mooney 2016). Interestingly, both BFACtx and M2ACtx neurons are active during movement and form synapses onto many of the same auditory cortical excitatory and inhibitory neurons (Nelson & Mooney 2016). However, optogenetically activating BFACtx terminals in resting or anesthetized mice does not recapitulate the effects of movement (nor the effects of stimulating M2ACtx terminals) on auditory cortical membrane potential dynamics or tone responsiveness (Nelson & Mooney 2016). Moreover, projection-pattern-specific retrograde tracing experiments indicate that BFACtx and M2ACtx neurons are components of distinct networks: Whereas M2ACtx neurons receive inputs largely from frontal cortical regions related to motor planning and action initiation, BFACtx neurons receive inputs predominantly from subcortical regions, including the hypothalamus and the locus coeruleus, that may convey state-related information (Nelson & Mooney 2016). The high spatial overlap of M2 and BF axon terminals in the auditory cortex thus allows for a diverse set of movement- and state-related signals to converge on the same auditory cortical microcircuits during behavior. Moreover, because pairing BF input to the auditory cortex with tones is sufficient to drive long-lasting cortical plasticity that is specific for the paired tone (Froemke et al. 2007), the coincident movement-dependent activation of BF and M2 inputs to the auditory cortex could underlie the brain’s ability to learn how to suppress self-generated sounds.

Figure 4.

Schematic of the ascending auditory system and key motor-related modulators. The mammalian auditory cortex receives movement-related signals from motor cortical regions and from cholinergic neurons in the basal forebrain. Motor-related signals also impinge upon the auditory system within the brainstem. In particular, inputs from the spinal trigeminal nucleus innervate the DCN. Schematic based on data from Nelson & Mooney (2016), Schneider et al. (2014), and Singla et al. (2017). Abbreviations: DCN, dorsal cochlear nucleus; VCN, ventral cochlear nucleus.

Suppression of Self-Generated Sounds in the Auditory Brainstem

Although the major focus of this review is on motor-related inputs to the auditory cortex, studies of the mouse brain have also provided important new insights into subcortical pathways through which movement-related signals modulate auditory activity. Of particular interest is a recently described circuit in the cochlear nucleus (CN) of the auditory brainstem in which inputs from the auditory nerve converge with somatosensory-related inputs from the spinal trigeminal nucleus (Singla et al. 2017) (Figure 4). Singla et al. (2017) show that neurons in the ventral CN (VCN) respond to sounds generated by a mouse’s licking behavior as well as to externally generated, broadband sounds. In contrast, output neurons from the dorsal CN (DCN) respond only to environmental sounds, whereas their responses to licking sounds are strongly suppressed. The DCN is a particularly compelling region for studying how self-generated sounds are cancelled, because its architecture is highly similar to cerebellar circuitry important for cancelling sensory reafference, including vestibular signals generated by active head movements. Future studies should continue to explore the functional and anatomical parallels between DCN and cerebellar circuitry and determine how motor-related signals in the DCN and cortex operate in concert to influence hearing and behavior.
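The logic of predictive cancellation described above can be illustrated with a toy simulation. The sketch below is not the model used by Singla et al. (2017); it is a minimal least-mean-squares example, under the assumption that a single movement-related signal scales linearly with the self-generated sound. All names and parameter values (`learn_negative_image`, `output_response`, `true_gain`, the five-bin movement pattern) are hypothetical and chosen only for illustration.

```python
import random


def learn_negative_image(n_trials=200, learning_rate=0.1, seed=0):
    """Toy LMS model: learn to predict a self-generated sound from a movement signal."""
    rng = random.Random(seed)
    # Hypothetical movement-related input across five time bins of one lick.
    movement_signal = [1.0, 0.8, 0.5, 0.2, 0.0]
    true_gain = 2.0   # hypothetical: sound level produced per unit of movement signal
    weight = 0.0      # learned strength of the predicted ("negative image") sound
    residuals = []    # mean absolute output per trial
    for _ in range(n_trials):
        total = 0.0
        for m in movement_signal:
            reafferent = true_gain * m + rng.gauss(0.0, 0.05)  # self-generated sound
            output = reafferent - weight * m                   # sound minus prediction
            weight += learning_rate * output * m               # error-driven update
            total += abs(output)
        residuals.append(total / len(movement_signal))
    return weight, residuals


def output_response(sound, movement, weight):
    """Output after subtracting the movement-based prediction from the sound input."""
    return sound - weight * movement


weight, residuals = learn_negative_image()
print(f"learned weight: {weight:.2f}")
print(f"mean residual, first trial: {residuals[0]:.2f}; last trial: {residuals[-1]:.2f}")
# An external sound (level 1.5) arriving on top of a lick sound is largely preserved.
print(f"response to external sound during movement: {output_response(2.0 + 1.5, 1.0, weight):.2f}")
```

In this sketch, the output to the self-generated sound shrinks as the prediction improves, whereas an external sound that is not predicted by the movement signal passes through largely unchanged, which is the qualitative pattern reported for DCN output neurons.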

MOTOR-TO-AUDITORY INTERACTIONS IN SONGBIRDS: A ROLE IN VOCAL LEARNING

Although vocalization in vertebrates is common, the capacity for vocal learning is rare. Songbirds are one of the few nonhuman animals that learn to vocalize: Juvenile songbirds memorize a tutor song and then learn to produce a precise copy of this model by comparing singing-related auditory feedback to this song memory. Furthermore, adult songbirds of many species continue to use auditory feedback to maintain their stable songs, and vocal plasticity can be triggered in adults by deafening them or by distorting auditory feedback during singing (Andalman & Fee 2009, Roberts et al. 2017, Sober & Brainard 2009, Tumer & Brainard 2007, Woolley & Rubel 1997). These requirements for auditory experience resemble those for human speech, and the circuits in the songbird brain that underlie singing and song learning and, to a somewhat lesser extent, audition have been the subject of intensive study (Doupe & Kuhl 1999). These features make the songbird especially useful for understanding whether and how motor and auditory systems interact to enable vocal learning. Indeed, recent studies have highlighted that motor-to-auditory pathways in the songbird play a role in juvenile song copying and adult forms of vocal plasticity.

Feedback and Putative Motor Signals in the Songbird Auditory Cortex

Single-unit recordings of the auditory telencephalon of freely singing finches, from regions analogous to the mammalian secondary auditory cortex, detect neurons that are similarly activated during singing and in response to song playback, consistent with their role in processing singing-related auditory feedback (Keller & Hahnloser 2009, Prather et al. 2008). In contrast to the largely suppressive effects of vocalization on the primate auditory cortex, singing exerts a predominantly excitatory effect on auditory cortical activity in the songbird, although whether this represents a bias in cell types that are sampled in songbirds versus primates remains uncertain. Notably, in approximately 20% of auditory cortical neurons in the songbird, vocal modulation precedes song onset by several tens of milliseconds, consistent with a motor-related signal (Keller & Hahnloser 2009). Therefore, during singing, the songbird auditory cortex appears to integrate auditory feedback and vocal motor-related signals.

Vocal Motor Signals in the Songbird Auditory Cortex May Facilitate Error Detection

Systematic perturbations of auditory feedback in singing birds suggest that the auditory cortex is where an internal motor-based estimate of auditory feedback is integrated with actual feedback to generate error signals (Canopoli et al. 2014). Indeed, a subset of songbird auditory cortical neurons may function as error detectors: Although these neurons may not be active during singing or in response to song playback, they are highly excited when singing-related auditory feedback is disrupted by noise, a manipulation that can trigger error-correcting processes that culminate in song plasticity (Keller & Hahnloser 2009, Tumer & Brainard 2007). In contrast, other auditory cortical neurons show similar patterns of activity during singing and song playback, but their singing-related activity is unaffected by feedback perturbations. Such behavior is reminiscent of sensorimotor mirror neurons, which are speculated to provide a motor-based estimate of anticipated sensory feedback (Prather et al. 2008). One possibility is that local circuits in the songbird auditory cortex compare this feedback estimate with the actual feedback to generate an error signal. Although still a matter of speculation, an important goal of future studies will be to elucidate the circuitry that gives rise to these putative error signals in the songbird auditory cortex.

A Circuit for Transmitting Vocal Motor Signals to the Auditory Cortex

Although song-error-detecting circuits await a fuller description, recent studies of songbirds have begun to clarify a pathway that conveys motor-related signals to the auditory cortex and illuminate a role for this pathway, as well as downstream error-detecting cells, in song learning. Notably, recent research has elucidated a motor-to-auditory circuit comprising neurons in HVC, a telencephalic nucleus important to song production, that extend their axons into regions analogous to the mammalian secondary auditory cortex (Akutagawa & Konishi 2010, Nottebohm et al. 1982, Roberts et al. 2017). Lesions in HVC prevent songbirds from singing their learned songs but not from producing their innate vocalizations, and focal cooling of HVC slows song timing (Aronov et al. 2008, Hamaguchi et al. 2016, Long & Fee 2008, Nottebohm et al. 1976). Remarkably, the role of HVC in songbirds parallels that of Broca’s area in humans, where lesions cause dysfluency and focal cooling slows speech (Broca 1861, Flinker et al. 2015, Hickok 2012, Long et al. 2016, Ojemann 1991).

Unlike Broca’s area, the cellular and synaptic organization of HVC is known in relatively great detail. Prior studies show that HVC contains two distinct populations of projection neurons (i.e., HVCX and HVCRA cells) that make nonoverlapping axonal projections to song-specific regions of the basal ganglia (Area X) or to downstream motor regions (RA). Recently, Roberts et al. (2017) discovered a third, much rarer population of HVC neurons (i.e., HVCAv cells) that project to a small region of the auditory cortex named Avalanche (Av), strongly reminiscent of the motor-to-auditory cortical projection in mammals. Moreover, because Av is reciprocally connected to other regions of the songbird auditory telencephalon, HVCAv neurons are a likely source of the motor-related signals that have been detected in the auditory cortex of singing birds (Keller & Hahnloser 2009).

Consistent with a role for HVCAv neurons in transmitting motor-related signals, calcium imaging of HVCAv cells in freely behaving birds shows that these neurons become highly active immediately before and during singing, and that this singing-related activity persists following deafening. Detailed circuit mapping in brain slices reveals that HVCAv neurons receive synaptic input from HVCRA neurons but not from HVCX neurons or from afferents to HVC that convey premotor and auditory signals (Roberts et al. 2017). Because HVCRA neurons play a critical role in song timing, HVCAv neurons may provide a specialized channel for relaying information about song timing to the auditory cortex (Hahnloser et al. 2002, Lynch et al. 2016, Picardo et al. 2016). Whether this channel also conveys a motor estimate of auditory feedback is unknown, but some HVCX neurons display sensorimotor mirroring (Prather et al. 2008), suggesting that information about such an estimate is available within HVC. Another possibility is that HVCAv neurons provide a motor-related signal to the auditory cortex that is subsequently combined with other information to generate an internal model of the predicted feedback.

A Role for Motor-to-Auditory Interactions in Vocal Learning

Consistent with the idea that motor-to-auditory pathways play an important role in vocal learning, selectively ablating HVCAv neurons with intersectional viral genetic methods disrupts juvenile song copying and adult forms of feedback-dependent song plasticity (Roberts et al. 2017). In juvenile birds previously exposed to a tutor song, selectively killing these cells prevents accurate song copying. Killing HVCAv neurons in adults exerts little to no effect on the bird’s ability to sing previously learned songs but reduces deterioration of a song’s temporal features normally elicited by deafening. Notably, experiments using singing-contingent noise playback, which can be used to alter either the frequency or the duration of specific target syllables, show that adults with HVCAv lesions are selectively impaired in their ability to modify the duration but not the frequency of the target syllable. Taken together, these findings support the idea that the HVC-to-Av pathway is a source of motor signals important to song copying in the juvenile and to feedback-dependent modification of song timing in the adult. Whether these signals are part of a predictive mechanism in a forward model is currently unknown, but testing this idea remains an important goal of future studies. Another possibility is that HVCAv neurons serve as part of a motor-dependent gate for auditory flow into HVC, a site where auditory responses are rapidly gated off during singing (Prather et al. 2008). Yet another scenario is that HVCAv cells transmit a motor-based representation of the song model, which is at least partly encoded in HVC, to auditory cortical regions downstream of HVC, where it could be compared with singing-related auditory feedback to facilitate song copying and maintenance (Prather et al. 2010; Roberts et al. 2010, 2012; Vallentin et al. 2016).

Another remaining issue is the functional link between error-detecting cells and error-correcting processes that drive vocal learning. An emerging consensus is that projections from dopamine-releasing neurons in the ventral tegmental area (VTA) to Area X are critical to juvenile song copying and feedback-dependent forms of adult song plasticity (Gadagkar et al. 2016, Hisey et al. 2018). In this light, auditory regions that supply input to the VTA warrant special attention. One source of auditory-related input to the VTA is a cluster of neurons located in a region of the caudal telencephalon called the ventral portion of the intermediate arcopallium (Aiv), which in turn receives input from Av and other secondary auditory cortical regions (Mandelblat-Cerf et al. 2014). Similar to putative error-detecting neurons in the songbird auditory cortex, these VTA-projecting Aiv neurons are relatively inactive during singing but are excited when singing-related feedback is disrupted by noise playback. In adult songbirds, lesions in Aiv slow deafening-induced song deterioration, lending support to a model in which these neurons are a source of error signals to the VTA that help guide song learning. This organization suggests that the circuitry interposed between HVC and Aiv plays an important role in comparing singing-related auditory feedback with internal song targets, including the tutor song memory.

CONCLUSIONS, IMPLICATIONS, AND FUTURE DIRECTIONS

Major Findings

Far from being a simple feedforward system, the vertebrate auditory system integrates sound information and a host of internally generated signals important for imminent and ongoing behaviors. Recent research in primates, mice, and songbirds emphasizes several consistent principles regarding how motor and auditory systems interact.

Motor-to-auditory interactions are ubiquitous across many vertebrate species. The widespread nature of this phenomenon suggests that motor modulation of hearing is an evolutionarily ancient strategy that has been exploited for a variety of sensory and motor functions.

Motor-to-auditory interactions occur not only during vocalization but also during a variety of other movements. The numerous behaviors accompanied by motor-to-auditory interactions likely reflect the strong coupling between movement and sound and provide fertile ground for discovering the neural circuit basis for auditory-guided behaviors, including speech and musicianship.

The major functional consequence of movement is to suppress auditory cortical activity. Suppression may serve a role in sensory adaptation and in predictive cancellation, helping facilitate hearing while providing the basis for error signals important for vocal learning.

High-resolution circuit mapping using genetic tools in the mouse underscores both the convergent and the parallel nature of motor modulation of auditory processing. In addition to top-down corticocortical circuits, bottom-up circuits convey movement- and state-related signals from neuromodulatory neurons to the auditory cortex. Moreover, motor-related signals modulate auditory processing at the level of the DCN, suggesting that motor modulation of hearing is a computationally intensive task implemented by various players, including regions traditionally associated with motor control as well as other pathways associated with neural plasticity, learning, and error correction.

The recent functional dissection of a motor-to-auditory pathway in the songbird provides the most direct evidence to date that these pathways are important to vocal learning. The discovery of such a pathway in the songbird suggests that circuits that may have evolved originally to facilitate hearing have been co-opted to enable error detection processes that underlie vocal learning. An important goal in songbird research will be to determine whether and how circuits that transmit motor signals to the auditory system contribute to error detection, including comparison of vocalization-related auditory feedback with internal vocal targets.

ACKNOWLEDGMENTS

The authors would like to thank current and former members of the Mooney lab for ongoing discussions regarding the topics covered in this article. In particular, we thank Masashi Tanaka, Katherine Tschida, Mor Ben-Tov, Audrey Mercer, Erin Hisey, Matthew Kearney, Janani Sundararajan, Jonah Singh Alvarado, Samuel Brudner, Valerie Michael, and Michael Booze.

DISCLOSURE STATEMENT

The authors are not aware of any affiliations, memberships, funding, or financial holdings that might be perceived as affecting the objectivity of this review.

LITERATURE CITED

- Akutagawa E, Konishi M. 2010. New brain pathways found in the vocal control system of a songbird. J. Comp. Neurol 518:3086–100

- Alexander GE, Newman JD, Symmes D. 1976. Convergence of prefrontal and acoustic inputs upon neurons in the superior temporal gyrus of the awake squirrel monkey. Brain Res 116:334–38

- Andalman AS, Fee MS. 2009. A basal ganglia-forebrain circuit in the songbird biases motor output to avoid vocal errors. PNAS 106:12518–23

- Aronov D, Andalman AS, Fee MS. 2008. A specialized forebrain circuit for vocal babbling in the juvenile songbird. Science 320:630–34

- Bao S, Chan VT, Merzenich MM. 2001. Cortical remodelling induced by activity of ventral tegmental dopamine neurons. Nature 412:79–83

- Behroozmand R, Larson CR. 2011. Error-dependent modulation of speech-induced auditory suppression for pitch-shifted voice feedback. BMC Neurosci 12:54

- Behroozmand R, Oya H, Nourski KV, Kawasaki H, Larson CR, et al. 2016. Neural correlates of vocal production and motor control in human Heschl’s gyrus. J. Neurosci 36:2302–15

- Broca P. 1861. Remarks on the seat of the faculty of articulate language, following an observation of aphemia (loss of speech). Bull. Soc. Anat 6:330–57

- Canopoli A, Herbst JA, Hahnloser RH. 2014. A higher sensory brain region is involved in reversing reinforcement-induced vocal changes in a songbird. J. Neurosci 34:7018–26

- Carmel PW, Starr A. 1964. Non-acoustic factors influencing activity of middle ear muscles in waking cats. Nature 202:195–96

- Creutzfeldt O, Ojemann G, Lettich E. 1989. Neuronal activity in the human lateral temporal lobe. II. Responses to the subjects own voice. Exp. Brain Res 77:476–89

- Curio G, Neuloh G, Numminen J, Jousmaki V, Hari R. 2000. Speaking modifies voice-evoked activity in the human auditory cortex. Hum. Brain Mapp 9:183–91

- Deacon TW. 1992. Cortical connections of the inferior arcuate sulcus cortex in the macaque brain. Brain Res 573:8–26

- Doupe AJ, Kuhl PK. 1999. Birdsong and human speech: common themes and mechanisms. Annu. Rev. Neurosci 22:567–631

- Eliades SJ, Wang X. 2003. Sensory-motor interaction in the primate auditory cortex during self-initiated vocalizations. J. Neurophysiol 89:2194–207

- Eliades SJ, Wang X. 2005. Dynamics of auditory-vocal interaction in monkey auditory cortex. Cereb. Cortex 15:1510–23

- Eliades SJ, Wang X. 2008. Neural substrates of vocalization feedback monitoring in primate auditory cortex. Nature 453:1102–6

- Eliades SJ, Wang X. 2017. Contributions of sensory tuning to auditory-vocal interactions in marmoset auditory cortex. Hear Res 348:98–111

- Flinker A, Chang EF, Kirsch HE, Barbaro NM, Crone NE, Knight RT. 2010. Single-trial speech suppression of auditory cortex activity in humans. J. Neurosci 30:16643–50

- Flinker A, Korzeniewska A, Shestyuk AY, Franaszczuk PJ, Dronkers NF, et al. 2015. Redefining the role of Broca’s area in speech. PNAS 112:2871–75

- Ford JM, Mathalon DH, Heinks T, Kalba S, Faustman WO, Roth WT. 2001. Neurophysiological evidence of corollary discharge dysfunction in schizophrenia. Am. J. Psychiatry 158:2069–71

- Froemke RC, Merzenich MM, Schreiner CE. 2007. A synaptic memory trace for cortical receptive field plasticity. Nature 450:425–29

- Gadagkar V, Puzerey PA, Chen R, Baird-Daniel E, Farhang AR, Goldberg JH. 2016. Dopamine neurons encode performance error in singing birds. Science 354:1278–82

- Geschwind N, Levitsky W. 1968. Human brain: left-right asymmetries in temporal speech region. Science 161:186–87

- Guenther FH, Hickok G. 2015. Role of the auditory system in speech production. Handb. Clin. Neurol 129:161–75

- Gutschalk A, Rupp A, Dykstra AR. 2015. Interaction of streaming and attention in human auditory cortex. PLOS ONE 10:e0118962

- Hackett TA. 2015. Anatomic organization of the auditory cortex. Handb. Clin. Neurol 129:27–53

- Hackett TA, Stepniewska I, Kaas JH. 1999. Prefrontal connections of the parabelt auditory cortex in macaque monkeys. Brain Res 817:45–58

- Hahnloser RH, Kozhevnikov AA, Fee MS. 2002. An ultra-sparse code underlies the generation of neural sequences in a songbird. Nature 419:65–70

- Hamaguchi K, Tanaka M, Mooney R. 2016. A distributed recurrent network contributes to temporally precise vocalizations. Neuron 91:680–93

- Heinks-Maldonado TH, Mathalon DH, Gray M, Ford JM. 2005. Fine-tuning of auditory cortex during speech production. Psychophysiology 42:180–90

- Hickok G. 2012. Computational neuroanatomy of speech production. Nat. Rev. Neurosci 13:135–45

- Hickok G, Poeppel D. 2007. The cortical organization of speech processing. Nat. Rev. Neurosci 8:393–402

- Hisey E, Kearney MG, Mooney R. 2018. A common neural circuit for internally guided and externally reinforced forms of motor learning. Nat. Neurosci 21:589–97

- Houde JF, Nagarajan SS, Sekihara K, Merzenich MM. 2002. Modulation of the auditory cortex during speech: an MEG study. J. Cogn. Neurosci 14:1125–38

- Keller GB, Hahnloser RH. 2009. Neural processing of auditory feedback during vocal practice in a songbird. Nature 457:187–90

- Kilgard MP, Merzenich MM. 1998. Plasticity of temporal information processing in the primary auditory cortex. Nat. Neurosci 1:727–31

- Kuchibhotla KV, Gill JV, Lindsay GW, Papadoyannis ES, Field RE, et al. 2017. Parallel processing by cortical inhibition enables context-dependent behavior. Nat. Neurosci 20:62–71

- Lee SH, Dan Y. 2012. Neuromodulation of brain states. Neuron 76:209–22

- Lehmann J, Nagy JI, Atmadia S, Fibiger HC. 1980. The nucleus basalis magnocellularis: the origin of a cholinergic projection to the neocortex of the rat. Neuroscience 5:1161–74

- Leichnetz GR, Astruc J. 1975. Efferent connections of the orbitofrontal cortex in the marmoset (Saguinus oedipus). Brain Res 84:169–80

- Leinweber M, Ward D, Sobczak J, Attinger A, Keller GB. 2017. A sensorimotor circuit in mouse cortex for visual flow predictions. Neuron 95:1420–32

- Long MA, Fee MS. 2008. Using temperature to analyse temporal dynamics in the songbird motor pathway. Nature 456:189–94

- Long MA, Katlowitz KA, Svirsky MA, Clary RC, Byun TM, et al. 2016. Functional segregation of cortical regions underlying speech timing and articulation. Neuron 89:1187–93

- Lynch GF, Okubo TS, Hanuschkin A, Hahnloser RH, Fee MS. 2016. Rhythmic continuous-time coding in the songbird analog of vocal motor cortex. Neuron 90:877–92

- Mahrt EJ, Perkel DJ, Tong L, Rubel EW, Portfors CV. 2013. Engineered deafness reveals that mouse courtship vocalizations do not require auditory experience. J. Neurosci 33:5573–83

- Mandelblat-Cerf Y, Las L, Denisenko N, Fee MS. 2014. A role for descending auditory cortical projections in songbird vocal learning. eLife 3:e04371

- Marr D. 1982. Vision. Cambridge, MA: MIT Press

- McGinley MJ, David SV, McCormick DA. 2015a. Cortical membrane potential signature of optimal states for sensory signal detection. Neuron 87:179–92

- McGinley MJ, Vinck M, Reimer J, Batista-Brito R, Zagha E, et al. 2015b. Waking state: Rapid variations modulate neural and behavioral responses. Neuron 87:1143–61

- Mehl MR, Vazire S, Ramirez-Esparza N, Slatcher RB, Pennebaker JW. 2007. Are women really more talkative than men? Science 317:82

- Morel A, Garraghty PE, Kaas JH. 1993. Tonotopic organization, architectonic fields, and connections of auditory cortex in macaque monkeys. J. Comp. Neurol 335:437–59

- Möttönen R, Watkins K. 2009. Motor representations of articulators contribute to categorical perception of speech sounds. J. Neurosci 29:9819–25

- Mukerji S, Windsor AM, Lee DJ. 2010. Auditory brainstem circuits that mediate the middle ear muscle reflex. Trends Amplif 14:170–91

- Müller-Preuss P, Ploog D. 1981. Inhibition of auditory cortical neurons during phonation. Brain Res 215:61–76

- Nelson A, Mooney R. 2016. The basal forebrain and motor cortex provide convergent yet distinct movement-related inputs to the auditory cortex. Neuron 90:635–48

- Nelson A, Schneider DM, Takatoh J, Sakurai K, Wang F, Mooney R. 2013. A circuit for motor cortical modulation of auditory cortical activity. J. Neurosci 33:14342–53

- Niell CM, Stryker MP. 2010. Modulation of visual responses by behavioral state in mouse visual cortex. Neuron 65:472–79

- Niziolek CA, Nagarajan SS, Houde JF. 2013. What does motor efference copy represent? Evidence from speech production. J. Neurosci 33:16110–16

- Nottebohm F, Kelley DB, Paton JA. 1982. Connections of vocal control nuclei in the canary telencephalon. J. Comp. Neurol 207:344–57

- Nottebohm F, Stokes TM, Leonard CM. 1976. Central control of song in the canary, Serinus canarius. J. Comp. Neurol 165:457–86

- Numminen J, Curio G. 1999. Differential effects of overt, covert and replayed speech on vowel-evoked responses of the human auditory cortex. Neurosci. Lett 272:29–32

- Ojemann GA. 1991. Cortical organization of language. J. Neurosci 11:2281–87

- Pasley BN, David SV, Mesgarani N, Flinker A, Shamma SA, et al. 2012. Reconstructing speech from human auditory cortex. PLOS Biol 10:e1001251

- Peretz I, Kolinsky R, Tramo M, Labrecque R, Hublet C, et al. 1994. Functional dissociations following bilateral lesions of auditory cortex. Brain 117(Pt. 6):1283–301

- Petrides M, Pandya DN. 1988. Association fiber pathways to the frontal cortex from the superior temporal region in the rhesus monkey. J. Comp. Neurol 273:52–66

- Petrides M, Pandya DN. 2002. Comparative cytoarchitectonic analysis of the human and the macaque ventro-lateral prefrontal cortex and corticocortical connection patterns in the monkey. Eur. J. Neurosci 16:291–310

- Picardo MA, Merel J, Katlowitz KA, Vallentin D, Okobi DE, et al. 2016. Population-level representation of a temporal sequence underlying song production in the zebra finch. Neuron 90:866–76

- Portfors CV. 2007. Types and functions of ultrasonic vocalizations in laboratory rats and mice. J. Am. Assoc. Lab. Anim. Sci 46:28–34

- Poulet JF, Hedwig B. 2002. A corollary discharge maintains auditory sensitivity during sound production. Nature 418:872–76

- Prather JF, Peters S, Nowicki S, Mooney R. 2008. Precise auditory-vocal mirroring in neurons for learned vocal communication. Nature 451:305–10

- Prather JF, Peters S, Nowicki S, Mooney R. 2010. Persistent representation of juvenile experience in the adult songbird brain. J. Neurosci 30:10586–98

- Rauschecker JP, Scott SK. 2009. Maps and streams in the auditory cortex: Nonhuman primates illuminate human speech processing. Nat. Neurosci 12:718–24

- Reimer J, Froudarakis E, Cadwell CR, Yatsenko D, Denfield GH, Tolias AS. 2014. Pupil fluctuations track fast switching of cortical states during quiet wakefulness. Neuron 84:355–62

- Reznik D, Henkin Y, Schadel N, Mukamel R. 2014. Lateralized enhancement of auditory cortex activity and increased sensitivity to self-generated sounds. Nat. Commun 5:4059

- Reznik D, Ossmy O, Mukamel R. 2015. Enhanced auditory evoked activity to self-generated sounds is mediated by primary and supplementary motor cortices. J. Neurosci 35:2173–80

- Roberts TF, Gobes SM, Murugan M, Olevczky BP, Mooney R. 2012. Rapid spine stabilization and synaptic enhancement at the onset of behavioral learning. Nat. Neurosci 15:1454–5922983208 [Google Scholar]

- Roberts TF, Hisey E, Tanaka M, Kearney MG, Chattree G, et al. 2017. Identification of a motor-to-auditory pathway important for vocal learning. Nat. Neurosci 20:978–86 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Roberts TF, Tschida KA, Klein ME, Mooney R. 2010. Rapid spine stabilization and synaptic enhancement at the onset of behavioral learning. Nature 463:948–52 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Romanski LM, Averbeck BB. 2009. The primate cortical auditory system and neural representation of conspecific vocalizations. Annu. Rev. Neurosci 32:315–46 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Romanski LM, Bates JF, Goldman-Rakic PS. 1999. Auditory belt and parabelt projections to the prefrontal cortex in the rhesus monkey. J. Comp. Neurol 403:141–57 [DOI] [PubMed] [Google Scholar]

- Rummell BP, Klee JL, Sigurdsson T. 2016. Attenuation of responses to self-generated sounds in auditory cortical neurons. J. Neurosci 36:12010–26 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Salomon G, Starr A. 1963. Sound sensations arising from direct current stimulation of the cochlea in man. Dan. Med. Bull 10:215–16 [PubMed] [Google Scholar]

- Schneider DM, Mooney R. 2017. Motor cortex suppresses auditory cortical responses to self-generated sounds Presented at Soc. Neurosci. Nov. 11–15, Washington, DC [Google Scholar]

- Schneider DM, Nelson A, Mooney R. 2014. A synaptic and circuit basis for corollary discharge in the auditory cortex. Nature 513:189–94 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Scott SK, Johnsrude IS. 2003. The neuroanatomical and functional organization of speech perception. Trends Neurosci 26:100–7 [DOI] [PubMed] [Google Scholar]

- Sheppard JP, Raposo D, Churchland AK. 2013. Dynamic reweighting of multisensory stimuli shapes decision-making in rats and humans. J. Vis 13:1–19 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Singla S, Dempsey C, Warren R, Enikolopov AG, Sawtell NB. 2017. A cerebellum-like circuit in the auditory system cancels responses to self-generated sounds. Nat. Neurosci 20:943–50 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sober SJ, Brainard MS. 2009. Adult birdsong is actively maintained by error correction. Nat. Neurosci 12:927–31

- Sperry RW. 1950. Neural basis of the spontaneous optokinetic response produced by visual inversion. J. Comp. Physiol. Psychol 43:482–89

- Stenner MP, Bauer M, Heinze HJ, Haggard P, Dolan RJ. 2015. Parallel processing streams for motor output and sensory prediction during action preparation. J. Neurophysiol 113:1752–62

- Stewart L, von Kriegstein K, Warren JD, Griffiths TD. 2006. Music and the brain: disorders of musical listening. Brain 129(Pt. 10):2533–53

- Suga N, Shimozawa T. 1974. Site of neural attenuation of responses to self-vocalized sounds in echolocating bats. Science 183:1211–13

- Sundararajan J, Schneider DM, Mooney R. 2017. Mechanisms of movement-related changes to auditory detection thresholds. Presented at Soc. Neurosci., Nov. 11–15, Washington, DC

- Tian X, Poeppel D. 2010. Mental imagery of speech and movement implicates the dynamics of internal forward models. Front. Psychol 1:166

- Timm J, Schönwiesner M, Schröger E, SanMiguel I. 2016. Sensory suppression of brain responses to self-generated sounds is observed with and without the perception of agency. Cortex 80:5–20

- Tumer E, Brainard M. 2007. Performance variability enables adaptive plasticity of ‘crystallized’ adult birdsong. Nature 450:1240–44

- Vallentin D, Kosche G, Lipkind D, Long MA. 2016. Inhibition protects acquired song segments during vocal learning in zebra finches. Science 351:267–71

- van Elk M, Lenggenhager B, Heydrich L, Blanke O. 2014. Suppression of the auditory N1-component for heartbeat-related sounds reflects interoceptive predictive coding. Biol. Psychol 99:172–82

- von Holst E, Mittelstaedt H. 1950. The reafference principle. Interaction between the central nervous system and the periphery. In Selected Papers of Erich von Holst: The Behavioural Physiology of Animals and Man, 1:39–73. London: Methuen (from German)

- Weiss C, Herwig A, Schütz-Bosbach S. 2011. The self in action effects: selective attenuation of self-generated sounds. Cognition 121:207–18

- Wolpert DM, Ghahramani Z, Jordan MI. 1995. An internal model for sensorimotor integration. Science 269:1880–82

- Woolley SM, Rubel EW. 1997. Bengalese finches Lonchura striata domestica depend upon auditory feedback for the maintenance of adult song. J. Neurosci 17:6380–90

- Zatorre RJ. 1988. Pitch perception of complex tones and human temporal-lobe function. J. Acoust. Soc. Am 84:566–72

- Zatorre RJ. 2007. There’s more to auditory cortex than meets the ear. Hear. Res 229:24–30

- Zatorre RJ, Belin P, Penhune VB. 2002. Structure and function of auditory cortex: music and speech. Trends Cogn. Sci 6:37–46

- Zhou M, Liang F, Xiong XR, Li L, Li H, et al. 2014. Scaling down of balanced excitation and inhibition by active behavioral states in auditory cortex. Nat. Neurosci 17:841–50

- Zmarz P, Keller GB. 2016. Mismatch receptive fields in mouse visual cortex. Neuron 92:766–72