Abstract

Purpose

To assess the concurrent validity of a wireless patch sensor to monitor time lying, sitting/standing, and walking in an experimental and a hospital setup.

Methods

Healthy adults participated in two testing sessions: an experimental setup and a real-world hospital setup. Data on time lying, sitting/standing, and walking were collected with the HealthPatch and concurrent video recordings. Validity was assessed in three ways: (1) a test for mean differences between HealthPatch data and reference values; (2) Intraclass Correlation Coefficient analysis (ICC 3.1 agreement); and (3) a test for mean differences between posture detection accuracies.

Results

Thirty-one males were included. Significant mean differences were found between HealthPatch data and reference values for sitting/standing (mean 14.4 minutes, reference: 12.0 minutes, p<0.01) and walking (mean 6.4 minutes, reference: 9.0 minutes, p<0.01) in the experimental setup. Good correlations were found between the HealthPatch data and video data for lying (ICC: 0.824) and sitting/standing (ICC: 0.715) in the hospital setup. Posture detection accuracies of the HealthPatch were significantly higher for lying and sitting/standing in the experimental setup.

Conclusions

Overall, the results show a good validity of the HealthPatch to monitor lying and poor validity to monitor sitting/standing or walking. In addition, the validity outcomes were less favourable in the hospital setup.

Introduction

Low mobility during hospital stay is common in patients [1] and independently related to poor functional outcomes such as reduced pulmonary function, decreased strength, functional decline, and increased risk of disability in activities of daily living [2–6]. Interventions aiming to decrease the risk of disability and functional decline show good results by increasing the amount of in-hospital mobility [7–10]. Mobility of patients during hospital stay is assessed with a wide variety of methods such as accelerometry [2, 11], structured observations using behavioural mapping protocols [6, 12, 13], and interviews [3]. Accelerometry has great potential to determine the amount of mobility in patients during hospital stay because it allows easy and continuous data collection in both brief and lengthy periods of mobility. However, the validity of such accelerometry in the hospital setup is largely unknown [1, 14].

The HealthPatch [15] (Fig 1) is a wireless patch sensor with an accelerometer and is equipped for use in hospitals. The HealthPatch was developed to allow thorough evaluation of inpatient mobility (i.e. lying, sitting/standing, and walking) while providing additional data on vital parameters such as heart rate, heart rate variability and respiratory rate. The study of Chan et al. [15] shows promising results with high posture detection accuracies of the HealthPatch in an experimental setup. However, the validity of a measurement device depends on the context in which it is established [16]. The use of accelerometry in an experimental setup, characterized by scripted activities in a specific order, might misrepresent validity outcomes when compared to real-world conditions [17]. The application of accelerometry in a hospital setup might be considered a new situation, as the context evokes other movement patterns (e.g. lying on the side or sitting on the edge of a hospital bed) [14] and short periods of movement [11, 18]. Therefore, the validity of the HealthPatch should specifically be assessed in a setup as close to a real-world hospital setup as possible [19–21]. As the research group considered video recordings of patients during hospitalization too intrusive in this phase of validation, the aim of the current study is to assess the concurrent validity of the HealthPatch to monitor the time lying, sitting/standing, and walking in an experimental and a real-world hospital setup in healthy adults.

Fig 1. The reusable wireless HealthPatch sensor (left) and disposable HealthPatch (right) [15].

Materials and methods

Study design

A cross-sectional, experimental study design was used to assess the concurrent validity of the HealthPatch (Vital Connect, CA, United States of America) (Fig 1). All participants participated in two conditions: firstly a real-world hospital setup, secondly an experimental setup. Validity is defined by the Consensus-based Standards for the selection of health Measurement Instruments (COSMIN) group as ‘the degree to which an instrument truly measures the construct it purports to measure’ [16]. The domain of validity is operationalized in three sub-domains, in which concurrent validity is defined as ‘the degree to which the scores of a measurement instrument are an adequate reflection of a golden standard’ [16]. The current study used video data as the golden standard for assessment of mobility in both an experimental and a hospital setup.

Participants’ characteristics and recruitment

A convenience sample of students was recruited between February 2017 and July 2017 at the HAN university of applied sciences, Nijmegen, the Netherlands. Students were informed and asked to participate via social media, e-mail, and their digital learning environment. Healthy male students between 18 and 30 years were eligible for inclusion. Healthy was operationalized as the absence of cognitive or physical disability to lie on a bed, sit, stand, and walk for a total of sixty minutes. The current study aimed to include at least 30 participants, which is considered fair in the COSMIN checklist [19]. Principles of the Declaration of Helsinki (64th version, 19-10-2013) were followed and ethical approval was granted by the advisory board Practice oriented research, Faculty of Health, Behaviour and Society, HAN university of applied sciences, Nijmegen, the Netherlands (EACO 57.02/17). Written informed consent for study inclusion and anonymous collection of data was obtained from each respondent.

Measurement procedure

The measurement procedure started with a standard measurement of body weight (Seca 761), height, and chest girth. Horizontal placement of the patch on the skin at the left midclavicular line under the pectoralis minor muscle (position 3) showed the highest accuracy for posture detection in previous research [15] and was for that reason used in all participants. Thereafter, each participant spent thirty minutes in both the experimental and the hospital setup, resulting in sixty minutes of HealthPatch and video data per participant. Mobility was videotaped with two cameras (GoPro HERO 4) from two different angles to ensure full data capture.

In the experimental setup, participants were instructed by the observers (TG, TvdH, IS, AT) to change level of mobility after standardized blocks of three minutes without breaks according to a predefined format: lying on the hospital bed (blocks 1, 7, 8), sitting on the hospital bed (blocks 2, 6, 9), walking in the hospital room (blocks 3, 5, 10), and sitting on a comfortable chair (block 4). In the real-world hospital setup, the observers provided no specific instructions to change the mobility of participants. In addition, participants were not informed about the purpose of the measurements in order to encourage natural behaviour. The hospital room had a surface area of 30 m², with a hospital bed, nightstand, table, two seats, and a closet (S1 Fig). The participants were able to listen to music and watch television from the bed, as in a real-world hospital setup. Drinks, journals and books were provided at the table.
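The block format above determines the reference values used later in the analysis. The following minimal sketch tallies the scripted minutes per level of mobility; the names `SCHEDULE` and `reference_minutes` are illustrative only, and pooling both sitting blocks (bed and chair) into sitting/standing is an assumption that matches the 12.0-minute reference value reported in the Results.

```python
# Scripted experimental setup: ten consecutive blocks of three minutes each.
BLOCK_MINUTES = 3

# Block number -> level of mobility, following the predefined format in the text.
# Sitting on the bed (blocks 2, 6, 9) and on the chair (block 4) are pooled
# into "sitting/standing" (assumption, see lead-in).
SCHEDULE = {
    1: "lying", 2: "sitting/standing", 3: "walking", 4: "sitting/standing",
    5: "walking", 6: "sitting/standing", 7: "lying", 8: "lying",
    9: "sitting/standing", 10: "walking",
}


def reference_minutes(schedule, block_minutes):
    """Sum the scripted minutes per level of mobility."""
    totals = {}
    for block in sorted(schedule):
        level = schedule[block]
        totals[level] = totals.get(level, 0) + block_minutes
    return totals


REFERENCE = reference_minutes(SCHEDULE, BLOCK_MINUTES)
print(REFERENCE)  # {'lying': 9, 'sitting/standing': 12, 'walking': 9}
```

The totals add up to the thirty minutes of the experimental setup.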

Outcomes

HealthPatch data, device under investigation–The primary outcome in the current study was time lying, sitting/standing, or walking. The HealthPatch continuously measured level of mobility with a 3-axis micro electro-mechanical system accelerometer resulting in counts with a frequency of 1 Hertz [15]. The time lying, sitting/standing, or walking was saved as one count per second. The data were collected and transmitted instantly by the wireless HealthPatch sensor to an iPad via Bluetooth and securely stored in private cloud data files.

Video data, golden standard–Analysis of video recordings of the mobility of participants was considered the golden standard to determine the actual time lying, sitting/standing, or walking of the participants. Second-by-second analysis of the video data was performed by the observers (TG, TvdH, IS, AT) and reported in a standardized case report form. In this form, the observers reported the time lying, sitting/standing, and walking of the participants. In addition, details were provided on the start and end of measurements, problems with data collection, and names of observers. Definitions of the different levels of mobility were described by Pedersen et al. [11] and used in the current study to improve interrater assessment of video data. Finally, the four observers assessed 270 minutes of new videotaped pilot test data, from which the inter-observer reliability was calculated.

Data analysis

HealthPatch data was stored (AT, TvdH) and double checked (AT, TvdH, NK) for incorrect data and missing values. In case of incorrect or missing data, data files (HealthPatch data) and case report forms (video data) were checked to correct the data entry. Prior to data analysis, a syntax was written (AT, TH, NK) to provide independent data analysis in IBM SPSS statistics, version 23 [22]. Descriptive statistics (mean, range) were used to describe the participant characteristics. All time in different levels of mobility was expressed in minutes.

Experimental setup

HealthPatch data on individual level were presented and analyzed with a scatter plot to observe differences in HealthPatch data between participants. Mean differences were calculated between the HealthPatch data and reference values (observed time lying, sitting/standing, walking) with a one sample t-test. The video data showed exactly three minutes lying, sitting/standing or walking per block in all participants as predetermined, securing solid use of the reference values. Outcomes with a two-tailed p-value < 0.05 were considered significant.
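The one sample t-test described above can be illustrated from summary statistics alone. The sketch below is not the SPSS syntax used in the study; it recomputes the test for sitting/standing from the rounded values in Table 1 (mean 14.4, SD 2.7, reference 12.0) with n = 27 as reported in the Results. The helper name `one_sample_t` is hypothetical, and the critical value is taken from a standard t-table rather than computed.

```python
import math


def one_sample_t(mean, sd, n, reference, t_crit):
    """Return (mean difference, t statistic, CI lower bound, CI upper bound)."""
    standard_error = sd / math.sqrt(n)
    difference = mean - reference
    t_statistic = difference / standard_error
    margin = t_crit * standard_error
    return difference, t_statistic, difference - margin, difference + margin


# Two-tailed 5% critical value of Student's t for 26 degrees of freedom
# (assumption: hard-coded from a standard t-table, n - 1 = 26).
T_CRIT_DF26 = 2.056

diff, t_stat, lo, hi = one_sample_t(
    mean=14.4, sd=2.7, n=27, reference=12.0, t_crit=T_CRIT_DF26
)
print(f"difference {diff:.1f} min, t = {t_stat:.2f}, 95% CI {lo:.1f} to {hi:.1f}")
```

Because the inputs are rounded, the recomputed interval may differ from the published one in the last digit.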

Hospital setup

The HealthPatch data were analyzed for correlation with the golden standard video data in the hospital setup. Firstly, data on individual level were presented for lying, sitting/standing and walking with a scatter plot of both HealthPatch and video data. Secondly, Intraclass Correlation Coefficients (ICCs) were calculated between the HealthPatch and video data using a two-way mixed effect model (ICC 3.1 agreement) with 95% confidence intervals (CI). An ICC < 0.70 was considered poor or moderate, ICC ≥ 0.70 good, and ICC ≥ 0.90 excellent [23, 24]. Thirdly, measurement error was investigated using Bland-Altman 95% limits of agreement (LOA) and visual inspection of measurement error patterns [24]. The limits of agreement illustrate the range and magnitude of the differences between HealthPatch outcomes and video observations [25].
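The two agreement statistics can be sketched as follows. This is an illustration under assumptions, not the SPSS procedure used in the study: the ICC is implemented as the single-measurement, absolute-agreement form of the two-way model (often written ICC(A,1)), which is assumed to correspond to "ICC 3.1 agreement", and the limits of agreement are taken as the mean difference ± 1.96 standard deviations of the differences [25]. Function names and the example minutes are hypothetical.

```python
from statistics import mean, stdev


def bland_altman(device, video):
    """Return (mean difference, lower LOA, upper LOA) for device minus video."""
    differences = [d - v for d, v in zip(device, video)]
    bias = mean(differences)
    spread = 1.96 * stdev(differences)
    return bias, bias - spread, bias + spread


def icc_agreement_single(device, video):
    """Two-way model, absolute agreement, single measurement (assumed ICC form)."""
    rows = list(zip(device, video))          # one row per participant
    n, k = len(rows), 2                      # k = 2 methods (device, video)
    grand = mean(value for row in rows for value in row)
    row_means = [mean(row) for row in rows]
    col_means = [mean(col) for col in zip(*rows)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((value - grand) ** 2 for row in rows for value in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical minutes lying for five participants (not study data).
device_minutes = [3.0, 0.0, 10.5, 6.0, 1.0]
video_minutes = [4.0, 0.5, 12.0, 6.5, 1.0]

bias, lower, upper = bland_altman(device_minutes, video_minutes)
icc = icc_agreement_single(device_minutes, video_minutes)
print(f"bias {bias:.2f} min (LOA {lower:.2f} to {upper:.2f}), ICC {icc:.3f}")
```

Unlike a Pearson correlation, the agreement form of the ICC is lowered by a systematic offset between the two methods, which is why it is paired here with the Bland-Altman bias.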

Comparison experimental and hospital setup

A comparison of ICC outcomes between the experimental and hospital setup would provide information on differences in outcomes related to the specific setup. However, correlation coefficients cannot be calculated for data in the experimental setup as there was no variance in time lying, sitting/standing, and walking in all participants. With no variance in the independent variable, the standardized time lying, sitting/standing, or walking, a correlation coefficient cannot be calculated [24]. An independent samples t-test was found to adequately provide information on the (significant) differences between outcomes of the experimental and hospital setup. Therefore, posture detection accuracies were computed on data collected in both the experimental and the hospital setup. The accuracies were calculated as the percent agreement between the HealthPatch and video data.
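The percent agreement can be illustrated on second-by-second labels. The text does not specify how agreement was derived per posture, so the sketch below makes an explicit assumption: for a given posture, a second counts as agreement when the HealthPatch and the video either both show that posture or both show another one. The function name and the example labels are hypothetical.

```python
def percent_agreement(device_labels, video_labels, posture):
    """Percent of seconds on which device and video agree about `posture`.

    Assumption: one-versus-rest agreement per posture; the study's exact
    definition is not given in the text.
    """
    if len(device_labels) != len(video_labels):
        raise ValueError("label sequences must have the same length")
    if not video_labels:
        raise ValueError("at least one second of data is required")
    matches = sum(
        (d == posture) == (v == posture)
        for d, v in zip(device_labels, video_labels)
    )
    return 100.0 * matches / len(video_labels)


# Hypothetical 18 seconds of data: the device labels the first two
# seconds of walking as sitting/standing.
video = ["lying"] * 6 + ["sitting/standing"] * 6 + ["walking"] * 6
device = ["lying"] * 6 + ["sitting/standing"] * 8 + ["walking"] * 4

for posture in ("lying", "sitting/standing", "walking"):
    print(posture, round(percent_agreement(device, video, posture), 1))
```

In this example the two mislabelled seconds lower the agreement for both sitting/standing and walking, mirroring the exchange of measurement error between those two levels of mobility.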

Results

Thirty-one males were included in the current study with a mean age of 22 years, ranging between 18 and 29 years. The demographics were: mean weight 80 kilograms (range: 60–104 kg), mean height 183 centimetres (range: 171–195 cm), and mean chest girth 90 centimetres (range: 81–100 cm). HealthPatch data were missing for four participants in the experimental setup (participants 9, 17, 19, 24) and four in the hospital setup (participants 6, 7, 24, 25) as a result of connectivity problems. One HealthPatch (case 24) disconnected because the chest hair of the participant impaired the connection between the HealthPatch and his skin. Three participants sat with their arms folded in front of their chest, causing the HealthPatch to stop registering and transferring data. No explanation was found for the missing data in the remaining four participants. As a result, the current study included data on 27 participants (810 minutes) per setup. Analysis of the inter-observer reliability on pilot test video data showed ICCs (agreement) between 0.971 and 0.999.

Concurrent validity–experimental setup

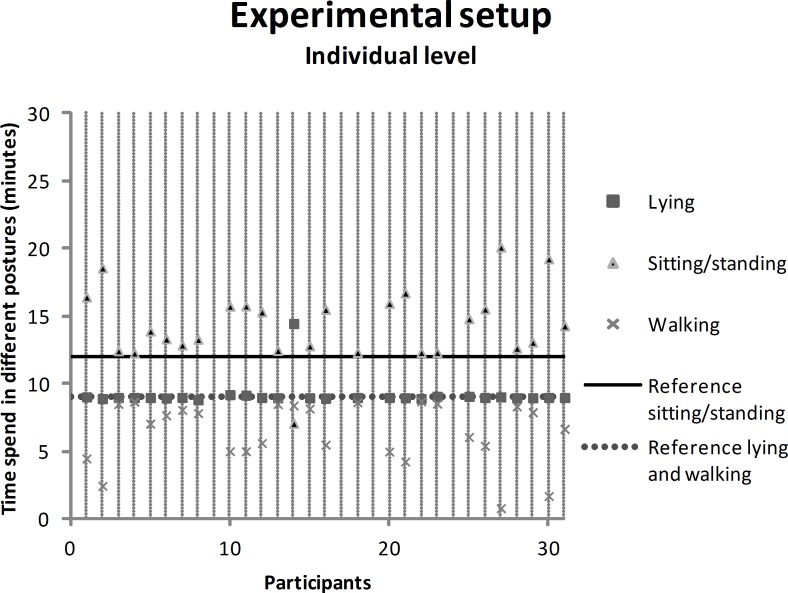

Fig 2 shows the HealthPatch data of the participants in the experimental setup on individual level. The minimum and maximum recorded time lying at individual level were respectively 8.8 and 14.5 minutes, sitting/standing 7.1 and 20.1 minutes, and walking 1.7 and 8.7 minutes. Visual inspection showed an exchange of measurement error between sitting/standing and walking, as the measurement error increased simultaneously. Throughout the experimental setup, participants were instructed: lying 9 minutes (dashed reference line), sitting/standing 12.0 minutes (solid reference line), and walking 9.0 minutes (dashed reference line). The HealthPatch recorded lying for a mean of 9.2 minutes, sitting/standing 14.4 minutes, and walking 6.4 minutes (Table 1). The recorded time sitting/standing was significantly overestimated by the HealthPatch compared to the reference value, while the recorded time walking was significantly underestimated.

Fig 2. Scatter plot showing the individual HealthPatch data in the experimental setup, with a reference line for the actual time that participants were lying (9 minutes, dashed line), sitting/standing (12 minutes, solid line), or walking (9 minutes, dashed line).

Table 1. Difference between the mean HealthPatch outcomes (device under investigation) and video data (golden standard) in the experimental setup, analyzed with the one sample t-test.

| Level of mobility | HealthPatch (mean, SD) | Video data (golden standard) | p-value | 95% confidence interval (lower–upper bound) |
|---|---|---|---|---|
| Experimental setup | Minutes | Minutes | | |
| Lying | 9.2 (1.1) | 9.0 | 0.303 | -0.2–0.6 |
| Sitting/standing | 14.4 (2.7) | 12.0 | <0.001 | 1.3–3.4 |
| Walking | 6.4 (2.3) | 9.0 | <0.001 | -3.5–-1.7 |

Concurrent validity–hospital setup

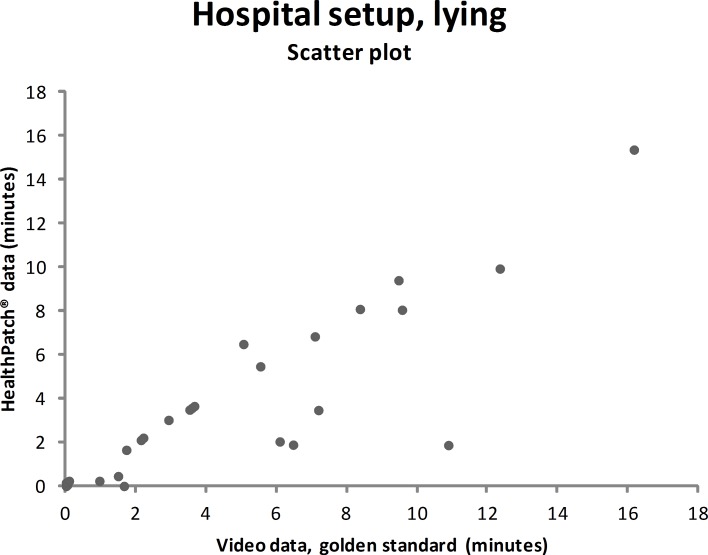

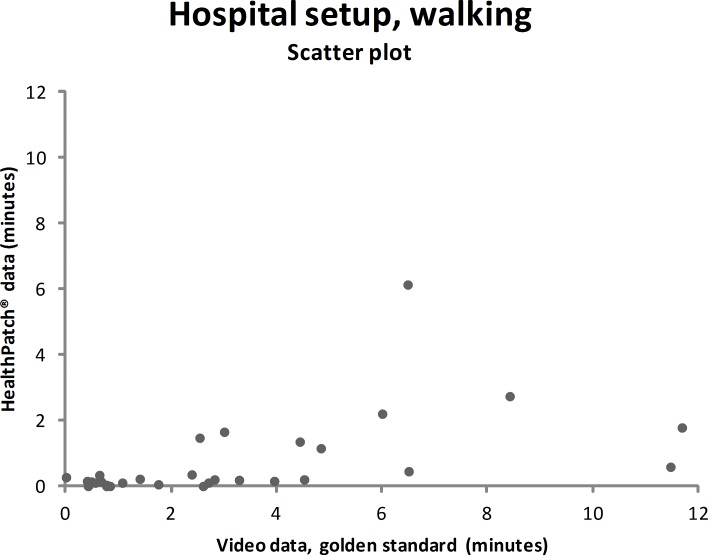

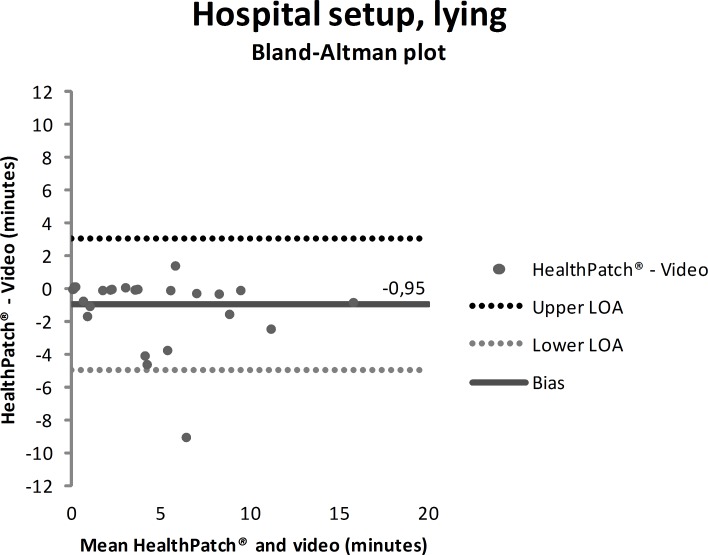

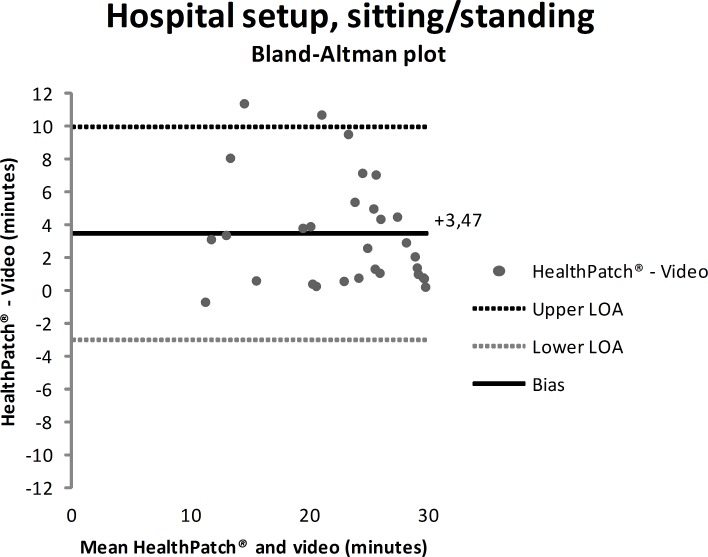

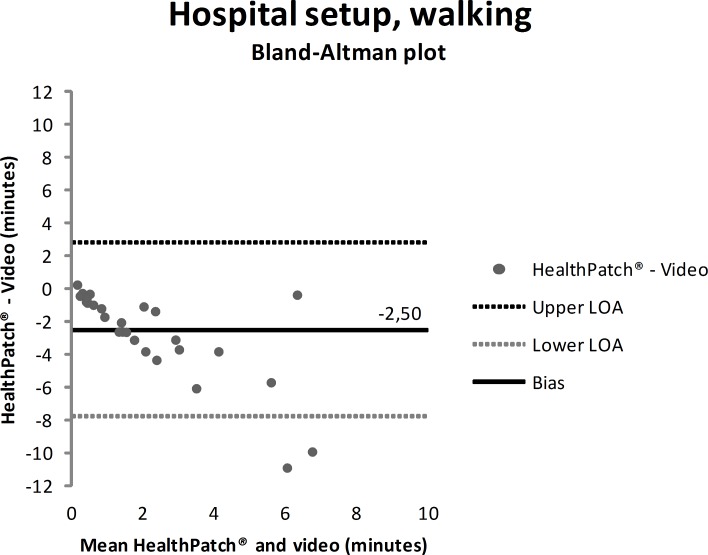

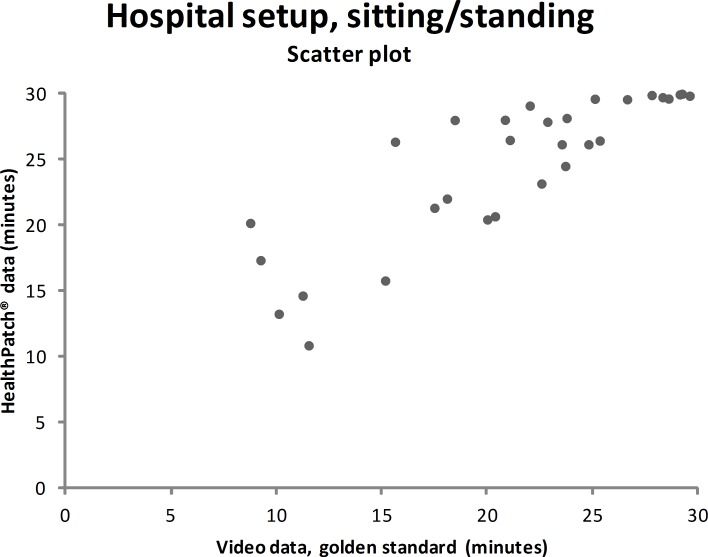

Video data from the hospital setup measurements showed that the participants were lying for a mean of 4.7 minutes (SD: 9.0), sitting/standing for a mean of 22.0 minutes (SD: 9.9), and walking for a mean of 3.3 minutes (SD: 5.6). Figs 3–5 show outcomes of participants in the hospital setup at individual level. ICCs (agreement) between the HealthPatch outcomes and video data were 0.824 (good), 0.715 (good), and 0.406 (poor) for lying, sitting/standing, and walking, respectively (Table 2). Bland-Altman analysis of the HealthPatch data compared to the video data showed a mean difference of -0.95 minutes (LOA: -4.95–3.05) for lying, which indicated a mean underestimation of 20% of total time lying (Fig 6). For sitting/standing, the analysis showed a mean difference of 3.47 minutes (LOA: -3.01–9.96), an overestimation of 16% of total time sitting/standing (Fig 7). For walking, a mean difference of -2.50 minutes (LOA: -7.82–2.81) was observed, which indicated a mean underestimation of 76% of total time walking (Fig 8). Finally, Bland-Altman analysis seemed to show an increase in measurement error of the HealthPatch walking data concurrent with an increase of actual time walking.
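The reported percentages follow from dividing each Bland-Altman mean difference by the mean video time for that level of mobility. The short check below uses only the values given in the paragraph above; the function name `relative_bias_percent` is illustrative.

```python
def relative_bias_percent(mean_difference, video_mean):
    """Mean difference (device minus video) as a percentage of mean video time."""
    return 100.0 * mean_difference / video_mean


# (level of mobility, mean difference in minutes, mean video time in minutes)
HOSPITAL_SETUP = [
    ("lying", -0.95, 4.7),
    ("sitting/standing", 3.47, 22.0),
    ("walking", -2.50, 3.3),
]

for level, difference, video_mean in HOSPITAL_SETUP:
    print(f"{level}: {relative_bias_percent(difference, video_mean):+.0f}%")
# lying: -20%
# sitting/standing: +16%
# walking: -76%
```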

Fig 3. Scatter plot showing the correlation between the video data (golden standard) and HealthPatch data (device under investigation) for lying in the hospital setup (ICC agreement: 0.824).

Fig 5. Scatter plot showing the correlation between the video data (golden standard) and HealthPatch data (device under investigation) for walking in the hospital setup (ICC agreement: 0.406).

Table 2. Correlation between the HealthPatch data (device under investigation) and video data (golden standard) in the hospital setup, analyzed with the intraclass correlation coefficient (model 3.1 agreement).

| Level of mobility | HealthPatch (mean, SD) | Video data (mean, SD) | ICC agreement | 95% confidence interval (lower–upper bound) |
|---|---|---|---|---|
| Hospital setup | Minutes | Minutes | | |
| Lying | 3.7 (7.7) | 4.7 (9.0) | 0.824 | 0.779–0.860 |
| Sitting/standing | 25.5 (8.2) | 22.0 (9.9) | 0.715 | 0.486–0.827 |
| Walking | 0.8 (2.6) | 3.3 (5.6) | 0.406 | 0.182–0.567 |

Fig 6. Bland-Altman plot showing mean difference (solid line) and 95% limits of agreement (dashed lines) between HealthPatch data and video data of the observed time lying in the hospital setup.

Fig 7. Bland-Altman plot showing mean difference (solid line) and 95% limits of agreement (dashed lines) between HealthPatch data and video data of the observed time sitting/standing in the hospital setup.

Fig 8. Bland-Altman plot showing mean difference (solid line) and 95% limits of agreement (dashed lines) between HealthPatch data and video data of the observed time walking in the hospital setup.

Fig 4. Scatter plot showing the correlation between the video data (golden standard) and HealthPatch data (device under investigation) for sitting/standing in the hospital setup (ICC agreement: 0.715).

Comparison experimental and hospital setup

The posture detection accuracies of the HealthPatch ranged between 72.3% and 99.3% (Table 3). The highest accuracy was found for lying in the experimental setup and the lowest for walking in the experimental setup. Of each 30.0 minutes of measurement, posture was detected accurately for a mean of 26.2 minutes in the hospital setup and 24.4 minutes in the experimental setup. Overall, the posture detection accuracies differed statistically significantly between the experimental and the hospital setup at all levels of mobility. Specifically, posture detection accuracies were higher in the experimental setup except for walking.

Table 3. Mean differences in posture detection accuracies (in percentage) of HealthPatch data between the experimental and the hospital setup.

| Posture | Experimental setup (mean, SD) | Hospital setup (mean, SD) | p-value | 95% confidence interval (lower–upper bound) |
|---|---|---|---|---|
| Lying | 99.3% (0.6) | 94.0% (10.0) | 0.011 | -9.3%–-1.3% |
| Sitting/standing | 98.6% (4.8) | 85.4% (13.6) | <0.001 | -18.8%–-7.6% |
| Walking | 72.3% (24.9) | 89.9% (9.7) | <0.001 | 7.7%–27.6% |

Group differences were tested with the independent samples t-test.

Discussion

This is the first study to assess the concurrent validity of the HealthPatch to determine time lying, sitting/standing, and walking in both an experimental and a hospital setup. Experimental setup outcomes show poor validity of the HealthPatch to determine time sitting/standing and walking, indicated by statistically significant differences with reference values. The HealthPatch is able to validly monitor lying and sitting/standing in a real-world hospital setup, as reflected by good ICCs. These findings imply that the HealthPatch might be a promising tool to assess the time lying and sitting/standing of patients during hospital stay. However, the ICC between HealthPatch and video data for walking in the hospital setup is poor and Bland-Altman analysis shows a high and increasing measurement error. The posture detection accuracies show low walking detection accuracies and statistically significant differences on all posture detection accuracies between the experimental and the hospital setup. With this knowledge, HealthPatch walking data cannot be used interchangeably with the golden standard (video data) and should be considered biased [24]. The statistically significant and clinically relevant underestimation of time walking by the HealthPatch could result in incorrect recommendations to walk more often, which could potentially harm patients during their hospital stay. For example, when a patient walked 33 minutes per day of hospital stay, HealthPatch monitoring might suggest that the patient walked 8 minutes. In addition, the partial loss of mobility data in 7 out of 31 participants suggests that the technology readiness level of the HealthPatch is insufficient for implementation in daily practice.

Earlier research on posture detection accuracies of the HealthPatch by Chan et al. [15] shows results between 88.1% and 95.4% dependent on patch location. However, that study did not follow current methodological recommendations as described by the COSMIN group [19] and performed measurements in only one experimental setup. Pedersen et al. [11] report posture detection accuracies of two wireless monitors (thigh and ankle) in a hospital setup of 90.8%–100% when lying, 73.1%–98.6% when sitting/standing, and 96.5% when walking. Validation of those two wireless monitors is promising, though validation was not the primary aim of the study and was therefore performed in a very small sample (n = 6) on limited data (lying 42 minutes, sitting/standing 30 minutes, walking 18 minutes). In addition, Baldwin et al. [26] assessed validity outcomes of the activPAL in patients after intensive care unit discharge. They present similar results to the current study, as Bland-Altman analysis reflects an overestimation of the time standing and underestimation of walking. Finally, the validation study of Brown et al. [21] included patients admitted to medical wards and shows high correlations of wireless monitor data with behavioural observations in lying (0.98, Pearson correlation coefficient), in sitting (0.97) and standing/walking (0.91). Despite the limitations of Pearson correlation coefficient analysis to detect systematic bias [24], these results show good potential especially for time standing/walking of participants.

Strengths and limitations

Strengths of the current study include the analysis of video recorded data as a golden standard for mobility observation [21]. In addition, the use of a real-world hospital setup showed important differences in HealthPatch outcomes between the experimental and the hospital setup. A limitation was the inclusion of healthy participants instead of hospitalized patients, which decreases the real-world generalizability. Observations in patients during hospitalization were considered too intrusive and benefits of participation were considered too low for patients as vulnerable research participants according to the Declaration of Helsinki [27]. A second limitation is the use of different observers for the video data. The influence of different observers on outcomes was addressed with rigorous training, discussion of posture definitions, and standardized reporting. Furthermore, the inter-observer reliability analysis shows excellent agreement (ICC: 0.971–0.999). Finally, the loss of data in one participant might have been prevented by shaving his chest hair as recommended by the manufacturer.

Recommendations for future research

Firstly, the differences between outcomes in the experimental and real-world hospital setup show the need for a real-world setup to determine the validity of accelerometry. The validity for examination of time lying, sitting/standing, and walking differs between setups, as indicated by analysis on both individual (Figs 3–5 versus Figs 6–8) and group level (Table 3). In addition, a secondary analysis of the video data showed, for example, that the low posture detection accuracy for walking in the experimental setup (72.3%) compared to the hospital setup (89.9%) could be the result of overly cautious and controlled transfer from sitting to walking by participants 2, 27, and 30 in the experimental setup. Therefore, future research on validity of accelerometry for hospital use should be performed in a real-world hospital setup [14]. Secondly, knowledge of reliability outcomes is needed to identify patients at (risk for) low mobility early and to provide mobility-stimulating interventions if possible.

Conclusions

Assessment of mobility in patients during hospital stay is of great clinical importance. Valid and accurate assessment of in-hospital mobility contributes to early detection of patients at risk for development of new disability during their hospital stay as a result of physical inactivity. Furthermore, mobility assessment is crucial for monitoring purposes in interventions aiming to increase in-hospital mobility of patients. Wireless devices with accelerometry, such as the HealthPatch, provide an opportunity to continuously monitor mobility in patients without mobility limitations.

This is the first study to assess the concurrent validity of an accelerometer to monitor levels of mobility in both an experimental and a hospital setup. Overall, the results show a good validity of the HealthPatch to monitor lying and poor validity for sitting/standing and walking. In addition, the validity outcomes were less favourable in the hospital setup. The use of an experimental and a hospital setup within this study provides a blueprint for future studies to analyze validity of accelerometry.

Supporting information

The measurements were performed in the research zone with a hospital bed, nightstand, table, two seats, and a closet. Two cameras (GoPro HERO 4) were used to videotape the mobility of participants.

Acknowledgments

We would like to thank Shanna Bloemen and Yvonne Geurts for their support and kindness in providing HealthPatch equipment. The authors would also like to acknowledge Mariska Weenk and Mats Koeneman for their help in data handling and storage. Ruud Leijendekkers is thanked for his help in preparation of the article and figures.

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

The authors received no specific funding for this work.

References

- 1. Anderson JL, Green AJ, Yoward LS, Hall HK. Validity and reliability of accelerometry in identification of lying, sitting, standing or purposeful activity in adult hospital inpatients recovering from acute or critical illness: a systematic review. Clinical rehabilitation. 2017:269215517724850. Epub 2017/08/15. doi:10.1177/0269215517724850.

- 2. Brown CJ, Redden DT, Flood KL, Allman RM. The underrecognized epidemic of low mobility during hospitalization of older adults. J Am Geriatr Soc. 2009;57(9):1660–5. Epub 2009/08/18. doi:10.1111/j.1532-5415.2009.02393.x.

- 3. Zisberg A, Shadmi E, Sinoff G, Gur-Yaish N, Srulovici E, Admi H. Low mobility during hospitalization and functional decline in older adults. J Am Geriatr Soc. 2011;59(2):266–73. Epub 2011/02/15. doi:10.1111/j.1532-5415.2010.03276.x.

- 4. Boyd CM, Landefeld CS, Counsell SR, Palmer RM, Fortinsky RH, Kresevic D, et al. Recovery of activities of daily living in older adults after hospitalization for acute medical illness. Journal of the American Geriatrics Society. 2008;56(12):2171–9. doi:10.1111/j.1532-5415.2008.02023.x.

- 5. Nielsen KG, Holte K, Kehlet H. Effects of posture on postoperative pulmonary function. Acta anaesthesiologica Scandinavica. 2003;47(10):1270–5. Epub 2003/11/18.

- 6. Mudge AM, McRae P, McHugh K, Griffin L, Hitchen A, Walker J, et al. Poor mobility in hospitalized adults of all ages. Journal of hospital medicine. 2016. Epub 2016/01/23. doi:10.1002/jhm.2536.

- 7. Baztan JJ, Suarez-Garcia FM, Lopez-Arrieta J, Rodriguez-Manas L, Rodriguez-Artalejo F. Effectiveness of acute geriatric units on functional decline, living at home, and case fatality among older patients admitted to hospital for acute medical disorders: meta-analysis. BMJ (Clinical research ed). 2009;338:b50. Epub 2009/01/24. doi:10.1136/bmj.b50; PubMed Central PMCID: PMC2769066.

- 8. Bachmann S, Finger C, Huss A, Egger M, Stuck AE, Clough-Gorr KM. Inpatient rehabilitation specifically designed for geriatric patients: systematic review and meta-analysis of randomised controlled trials. BMJ (Clinical research ed). 2010;340:c1718. Epub 2010/04/22. doi:10.1136/bmj.c1718; PubMed Central PMCID: PMC2857746.

- 9. Inouye SK, Bogardus ST Jr., Charpentier PA, Leo-Summers L, Acampora D, Holford TR, et al. A multicomponent intervention to prevent delirium in hospitalized older patients. The New England journal of medicine. 1999;340(9):669–76. Epub 1999/03/04. doi:10.1056/NEJM199903043400901.

- 10. Fisher SR, Graham JE, Ottenbacher KJ, Deer R, Ostir GV. Inpatient walking activity to predict readmission in older adults. Archives of physical medicine and rehabilitation. 2016;97(9):S226–S31.

- 11. Pedersen MM, Bodilsen AC, Petersen J, Beyer N, Andersen O, Lawson-Smith L, et al. Twenty-four-hour mobility during acute hospitalization in older medical patients. The journals of gerontology Series A, Biological sciences and medical sciences. 2013;68(3):331–7. Epub 2012/09/14. doi:10.1093/gerona/gls165.

- 12. Kuys SS, Dolecka UE, Guard A. Activity level of hospital medical inpatients: an observational study. Archives of gerontology and geriatrics. 2012;55(2):417–21. Epub 2012/03/16. doi:10.1016/j.archger.2012.02.008.

- 13. Callen BL, Mahoney JE, Grieves CB, Wells TJ, Enloe M. Frequency of hallway ambulation by hospitalized older adults on medical units of an academic hospital. Geriatric Nursing. 2004;25(4):212–7. doi:10.1016/j.gerinurse.2004.06.016.

- 14. Lim SE, Ibrahim K, Sayer A, Roberts H. Assessment of physical activity of hospitalised older adults: a systematic review. The journal of nutrition, health & aging. 2018;22(3):377–86.

- 15. Chan AM, Selvaraj N, Ferdosi N, Narasimhan R, editors. Wireless patch sensor for remote monitoring of heart rate, respiration, activity, and falls. Engineering in Medicine and Biology Society (EMBC), 2013 35th Annual International Conference of the IEEE; 2013: IEEE.

- 16. Mokkink LB, Terwee CB, Patrick DL, Alonso J, Stratford PW, Knol DL, et al. The COSMIN study reached international consensus on taxonomy, terminology, and definitions of measurement properties for health-related patient-reported outcomes. Journal of clinical epidemiology. 2010;63(7):737–45. doi:10.1016/j.jclinepi.2010.02.006.

- 17. Chigateri NG, Kerse N, Wheeler L, MacDonald B, Klenk J. Validation of an Accelerometer for Measurement of Activity in Frail Older People. Gait & posture. 2018.

- 18. Brown CJ, Williams BR, Woodby LL, Davis LL, Allman RM. Barriers to mobility during hospitalization from the perspectives of older patients and their nurses and physicians. Journal of hospital medicine. 2007;2(5):305–13. doi:10.1002/jhm.209.

- 19. Mokkink LB, Terwee CB, Knol DL, Stratford PW, Alonso J, Patrick DL, et al. The COSMIN checklist for evaluating the methodological quality of studies on measurement properties: a clarification of its content. BMC medical research methodology. 2010;10:22. Epub 2010/03/20. doi:10.1186/1471-2288-10-22; PubMed Central PMCID: PMC2848183.

- 20. Brod M, Tesler LE, Christensen TL. Qualitative research and content validity: developing best practices based on science and experience. Quality of Life Research. 2009;18(9):1263. doi:10.1007/s11136-009-9540-9.

- 21. Brown CJ, Allman RM. Validation of use of wireless monitors to measure levels of mobility during hospitalization. Journal of rehabilitation research and development. 2008;45(4):551.

- 22. George D, Mallery P. IBM SPSS Statistics 23 step by step: A simple guide and reference: Routledge; 2016.

- 23. Terwee CB, Bot SD, de Boer MR, van der Windt DA, Knol DL, Dekker J, et al. Quality criteria were proposed for measurement properties of health status questionnaires. Journal of clinical epidemiology. 2007;60(1):34–42. Epub 2006/12/13. doi:10.1016/j.jclinepi.2006.03.012.

- 24. Portney LG, Watkins M. Foundations of clinical research: applications to practice: FA Davis Company/Publishers; 2015.

- 25. Bland JM, Altman DG. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet (London, England). 1986;1(8476):307–10. Epub 1986/02/08.

- 26. Baldwin CE, Johnston KN, Rowlands AV, Williams MT. Physical Activity of ICU Survivors during Acute Admission: Agreement of the activPAL with Observation. Physiotherapy Canada. 2018;70(1):57–63. doi:10.3138/ptc.2016-61.

- 27. Association WM. World Medical Association Declaration of Helsinki. Ethical principles for medical research involving human subjects. Bulletin of the World Health Organization. 2001;79(4):373.
