Summary

Recruitment is an integral part of most research projects in medical sciences involving human participants. In health promotion research, there is increasing work on the impact of environments. Settings represent environments such as schools where social, physical and psychological development unfolds. In this study, we investigated weight gain in students within a university setting. Barriers to access and recruitment of university students within a specific setting, in the context of health research, are discussed. An online survey on health behaviours of first year students across 101 universities in England was developed. The ethics committee of each institution was contacted to obtain permission to recruit and access their students. Recruitment adverts were standardized and distributed within restrictions imposed by universities. Three time points and incentives were used. Several challenges in recruiting from a university setting were found. These included (i) ethics approval, (ii) recruitment approval, (iii) navigating restrictions on advertisement and (iv) logistics of varying university academic calendars. We also faced challenges of online surveys including low recruitment, low retention and low eligibility of respondents. Of the 101 universities, 28 allowed dissemination of adverts. We obtained 1126 responses at T1, 599 at T2 and 497 at T3. The complete-case sample represented 13% of those originally recruited at T1. Conducting research on students within the university setting is a time-consuming and challenging task. To improve research-based health promotion, universities could work together to increase the consistency of their policies on student recruitment.

Keywords: settings approach, recruitment, retention, online research, university

INTRODUCTION

Recruitment is an essential part of medical research, and is seen as one of the most difficult aspects of any project (Visanji and Oldham, 2013). It has important impacts on biases, validity and power for statistical analyses. Within research involving humans, observational participant data can notably be collected through interviews, questionnaires and observations (Axinn and Pearce, 2006). In the United Kingdom (UK), all government funded research projects and most university-led research projects involving human participant interaction (e.g. contacting participants for a survey) require ethics approval (ESRC, 2015). The inconveniences and inconsistencies of obtaining ethics approval have been extensively discussed (Edwards et al., 2004; Abbott and Grady, 2011), but the challenges and difficulties in recruitment and retention of participants in behavioural research, and in settings such as universities, have yet to be well documented (Foster et al., 2011).

For health promotion efforts, there is a desire and need to better understand settings, such as schools and universities, where social, physical and psychological development unfolds and where individuals spend the majority of their time outside of their home (Alibali and Nathan, 2010). Schools, universities and workplaces have been shown to be key settings for health promotion interventions (Whitelaw et al., 2001; Dooris, 2009). Notably, universities have been highlighted in the past two decades for their significant health promotion potential for the young adult population (World Health Organization, 1998). Within research, settings offer environments that allow for the continued development of evidence-based health promotion, to study specific research questions and to understand how variations in the context (e.g. across different universities) affect behaviours (Alibali and Nathan, 2010). Unfortunately, accessing these settings for participant recruitment can be very challenging, and limited research has been published on the barriers for researchers. Several authors who have argued for more reporting on recruitment efforts (Blinn-Pike et al., 2000; Guo et al., 2002) wrote that this lack of publication on recruitment issues in school settings hinders research (Guo et al., 2002) and limits effective and improved future studies (Blinn-Pike et al., 2000).

The university student population can be an interesting and convenient sample on which to conduct research, especially as millions of individuals now attend university. According to an OECD report (OECD, 2012), the number of people attending tertiary education increased by 25% between 1995 and 2010 in OECD countries. It is now estimated that across these countries, 49% of those below the age of 25 enter tertiary education programmes, making university students a large population of young adults. In the UK, the student population is over 2.3 million (Universities UK, 2015). Students thus make up an important part of society, given the size of this population and its future role in the workforce and in the development of our nations. Moreover, university students are in the pivotal stage of young adulthood, between teenage years and working life, where lifelong health behaviours are heavily influenced or fostered. Notably, adolescent weight gain is highly predictive of overweight and obesity in adulthood (Guo et al., 2002). Better understanding of this population, their health behaviours and needs is crucial to adequately encourage students to develop and sustain healthy behaviours.

One of the easiest ways to research students in a specific university is through online platforms. In the UK, almost 90% of those aged 16–24 use the internet daily (Office for National Statistics, 2013). Online surveys can be useful in research, especially in multi-site projects, as they have little distribution burden, are low cost and allow for rapid data collection (Sue and Ritter, 2011; Hewson et al., 2015). Studies have found that mail and online surveys were comparable in quality, with online surveys sometimes yielding higher responses (Griffis et al., 2003; Hoonakker and Carayon, 2009). Response rates to cross-sectional online surveys have been found to be approximately 40% (Cook et al., 2000; Baruch and Holtom, 2008; Shih and Fan, 2008) and the number of reminders is seen as a determining factor in the response rates (Cook et al., 2000). Two meta-analyses on the effects of incentives, such as cash or inclusion in a prize draw, found that they significantly motivated individuals to start a survey and that participants were more likely to finish a survey if provided with incentives (Bosnjak and Tuten, 2003; Deutskens et al., 2004; Göritz, 2006; Göritz and Wolff, 2007).

In obesity and behavioural research, recruitment and retention of participants are an important part of the study design. Longitudinal multi-site studies are growing in number, as researchers start assessing the relative impact of environments and settings. University students are believed to be at risk of weight gain during their first year of university as they face a transition from secondary school to university (Vadeboncoeur et al., 2015, 2016a). Research since the 1980s suggests that stress, alcohol drinking, unhealthy eating and poor physical activity play important roles, at the individual level, in their weight gain (Crombie et al., 2009; Vella-Zarb and Elgar, 2009).

To further research the impact of settings such as universities on weight gain, a national study in England on first year undergraduate students, taking a socio-ecological approach, was designed. The objective was to collect, via a web survey, longitudinal data on weight and several other factors relating to health behaviours and university environments. The aim of this paper is to discuss our experience of conducting research within a university setting, using a health-oriented online survey across 101 universities in England. We discuss four barriers: firstly being ‘permitted’ to recruit, secondly recruiting students across several channels, thirdly retaining students in a three-wave survey and fourthly obtaining a final sample for analyses. Attrition data, the relative impact of each reminder wave and the size of the usable final sample are presented. Building on our previously reported findings on obtaining research ethics approval for multi-site projects (Vadeboncoeur et al., 2016b), we argue that recruitment is an equally integral challenge of any research study, especially in multi-site projects targeting settings.

METHODS

Survey design

The Online Bristol Survey platform was used to conduct an online longitudinal study with three time points [start of academic year (T1), December (T2), end of academic year (T3)]. The survey was pilot tested three times in an iterative manner. It asked students to self-report their height, weight and several variables ranging from perceived university environment to health behaviours. None of the questions were deemed of a sensitive nature by the ethics committees. All questions were closed ended and none were mandatory. The survey could be accessed by students who had the link, with no login process. Students were asked to enter their university email address in each survey so that they could be invited to the follow-ups and their responses matched across time points. Participants provided their email at the beginning and at the end of the survey, for cross-checking. The initial page of each survey was an information sheet detailing the eligibility criteria, informed consent and data protection. To be eligible, participants had to be first year undergraduate students who had not taken a gap year, were not pregnant, did not have children and were not taking medication which affected their weight.

Timeline

Universities in England do not share the same academic calendar for term start and end dates. We created a database, based on university websites, of start and end dates of the academic year and the number of terms. Only teaching terms were included. Students were recruited and sent follow-up surveys depending on the start/end date of their universities. Data collection for T1 was in September/October 2014, within two weeks of the start of the academic year; T2 was in December 2014 for everyone and T3 was in April/June 2015, within two weeks of the end of the academic year.
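The staggered timeline described above can be sketched in a few lines of Python. This is a minimal illustration only: the university names and term dates are hypothetical, and the reading of "within two weeks" (the two weeks following the start of the year for T1, the two weeks leading up to its end for T3) is an assumption rather than the study's documented procedure.

```python
from datetime import date, timedelta

TWO_WEEKS = timedelta(weeks=2)

# Hypothetical calendar entries; the study's database was compiled from university websites.
calendar = {
    "University A": {"start": date(2014, 9, 22), "end": date(2015, 6, 12)},
    "University B": {"start": date(2014, 10, 6), "end": date(2015, 5, 1)},
}


def contact_windows(start, end):
    """Return the T1 and T3 contact windows as (first_day, last_day) tuples."""
    return {
        "T1": (start, start + TWO_WEEKS),  # assumed: two weeks from the start of the year
        "T3": (end - TWO_WEEKS, end),      # assumed: two weeks leading up to the end of the year
    }


schedule = {name: contact_windows(d["start"], d["end"]) for name, d in calendar.items()}

# Order universities by the date their T1 window opens, to stagger recruitment.
t1_order = sorted(schedule, key=lambda name: schedule[name]["T1"][0])
```

Keeping the windows in one structure per university makes it straightforward to send each invitation and reminder on a university-specific date instead of a single national date.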

Recruitment

After obtaining ethics approval from our university, 101 universities across England were approached to obtain approval to recruit their students. The subset of universities which provided ethics approval often imposed restrictions on possible recruitment methods. Accounting for these restrictions, several different strategies were used for recruitment. The survey was advertised via email, social media posts, university recruitment websites, university intranet and/or through departments. A standardized text was used for advertisement. It included the main eligibility criteria, mention of the incentives, contact information of the lead researcher and the activated survey link. By clicking the link, students could directly access the survey without login information. A total of £279 was spent on advertisement as six universities charged fees for contacting students.

Longitudinal retention

Using the email address students entered in T1, all T1 participants were invited to complete the T2 follow-up survey in December. A short standardized email was sent to everyone which included the activated T2 survey link, mention of the incentives and the researchers’ contact information. The incentive was mentioned as ‘you could win one of the many £50 retail vouchers’. Students had two weeks to answer the survey. Up to three reminders were sent to students who had not yet completed the T2 survey. These were also short and mentioned the incentive.

For the T3 follow-up survey at the end of the academic year, students received an initial invitation within two weeks of the end of their academic year. Students who had completed the T1 and T2 surveys received a slightly different email from students who had only completed T1. The former group had an extra sentence stating how important their participation was as they had completed T1 and T2 and that they were eligible for an additional chance to win a £100 retail voucher. All students had two weeks to complete the survey and up to three reminders were sent to those who had not yet completed it. The reminders were also stratified between those who answered T1 and those who answered both T1 and T2.

RESULTS

Recruitment process

The first step was to obtain ethics approval and permission for recruitment. One hundred and one universities in England were contacted and ethics approval from 60 universities was obtained (Vadeboncoeur et al., 2016b). As detailed elsewhere, the process of getting ethics approval came with procedural and content inconsistencies. Of the 60 universities granting ethics approval, only 28 agreed to have the survey advertised to the student population. The majority of these 28 research ethics committees imposed varying levels of restrictions on where we could advertise, to whom or how. In light of this, within the 28 universities, permission was granted to use seven different recruitment methods: (i) email sent by the student union to all first year undergraduates, (ii) social media posts from the student union, (iii) advertisements posted on the student union website, (iv) email sent by the registrar to all first year undergraduates, (v) post on a study recruitment website of the university, (vi) advertisements on the student news pin board and (vii) advertisements on the student intranet platform. In 21 universities, one of these methods was used with recruited numbers ranging from 0 to 274 participants per university (Table 1). Two of these methods yielded no responses. In seven universities two combined methods were used. These combined methods led to recruitment numbers ranging from 1 to 95 participants. The highest number of responses, 95, came from the combination of an email from the student union and an advert on the university website. The lowest response, 1, was obtained from a combined recruitment effort of an advert on the intranet and a social media post. An important issue with recruiting this way was that the response rate from each university could not be determined, as the number of students reached through the advertisement methods was unknown.

Table 1:

Number of universities and average number of recruited participants per method of advertisement

| N of methods | Method of recruitment | N universities using method | Mean N of participants |
|---|---|---|---|
| 1 | Email by registrar | 1 | 274 |
| | Email by a university department | 1 | 35 |
| | Ad on university intranet | 11 | 43 |
| | Ad in newsletter of CR in colleges | 1 | 47 |
| | Post on social media by SU | 3 | 15 |
| | Post on research volunteer intranet | 1 | 12 |
| | Ad in university fresher booklet | 1 | 0 |
| | Email via research volunteer opt-in list | 1 | 0 |
| | Ad on university job posting website | 1 | 0 |
| 2 | Email by SU & university website ad | 1 | 95 |
| | Ad on intranet & ad in SU newsletter | 1 | 40 |
| | Ad in newsletter of colleges & social media ad | 1 | 38 |
| | Ad in newsletter of colleges & ad on university website | 1 | 36 |
| | Post on social media & newsletter by SU | 1 | 11 |
| | Ad on SU website & SU social media post | 1 | 10 |
| | Ad on intranet & SU social media post | 1 | 1 |

Recruitment

After receiving ethics and recruitment approval, achieving high recruitment numbers was the next step. In total, 1126 participants across 28 universities were recruited. One participant from a university which did not grant ethics approval responded and was later removed from analyses. The mean number of participants per university was 40 students, ranging from 1 to 274 participants per university. In 21 universities, at least 10 students were recruited.

Longitudinal retention

Time point two (T2)

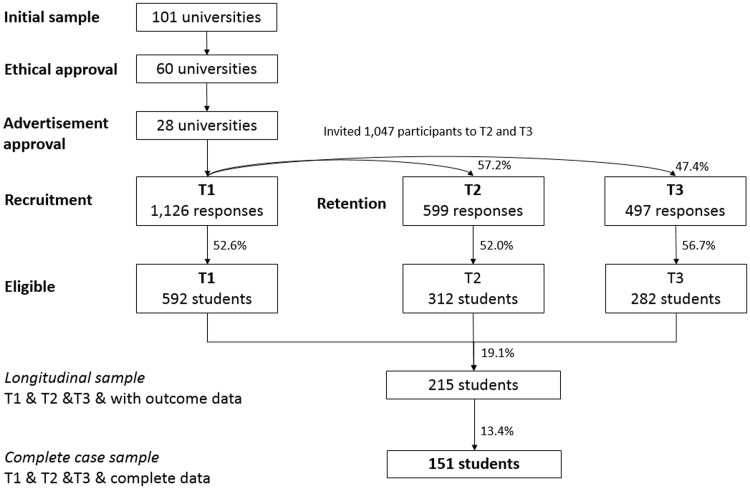

Of the 1126 T1 respondents, 1048 were sent a follow-up survey. Seventy-eight students could not be reached because they did not enter their email address or entered it incorrectly in the two email questions. Over the T2 survey answer period, a total of 599 respondents completed the survey, representing a retention of 57.2% from the eligible 1047 participants (Table 2, Figure 1). The first follow-up invitation email led to 362 responses, representing 60.4% of the total T2 responses. The retention rate increased by 11.2 percentage points after the first reminder. It then increased by 5.2 and 6.3 percentage points after reminder emails two and three, respectively.

Table 2:

Success of retention of participation during the T2 and T3 follow-up survey, according to the different waves of invitations

| Survey | Contact | N | Cumul. N | % N of survey responses | T1 % retention | T1 cumul. % retention |
|---|---|---|---|---|---|---|
| T2 | Initial email | 362 | 362 | 60.4 | 34.6 | 34.6 |
| | Reminder 1 | 117 | 479 | 19.5 | 11.2 | 45.8 |
| | Reminder 2 | 54 | 533 | 9.0 | 5.2 | 50.9 |
| | Reminder 3 | 66 | 599 | 11.0 | 6.3 | 57.2 |
| T3 | Initial email | 272 | 272 | 54.7 | 26.0 | 26.0 |
| | Reminder 1 | 84 | 356 | 16.9 | 8.0 | 34.0 |
| | Reminder 2 | 69 | 425 | 13.9 | 6.6 | 40.6 |
| | Reminder 3 | 72 | 497 | 14.5 | 6.7 | 47.4 |

Fig. 1:

Overview of the recruitment and retention of students in 101 universities for an online survey.

Time point three (T3)

For the T3 follow-up survey at the end of the academic year, 497 individuals answered the survey (Table 2, Figure 1). This represented a 47.4% retention from the 1048 who were sent the survey. The initial contact was the most successful with 272 responses, representing 54.7% of the total T3 respondents. The first email reminder yielded 16.9% of the responses, while reminders two and three each yielded roughly 14% of the responses.
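The retention figures reported for T2 and T3 follow from simple ratios of the response counts in Table 2. The sketch below recomputes them, assuming the denominators stated in the text (1047 invited participants for T2 and 1048 for T3); the function name `retention_table` is illustrative and not part of the study's tooling.

```python
from itertools import accumulate


def retention_table(responses_per_contact, n_invited):
    """One row per contact: (n, cumulative n, % of wave, % of invited, cumulative % of invited)."""
    total = sum(responses_per_contact)
    rows = []
    for n, cum in zip(responses_per_contact, accumulate(responses_per_contact)):
        rows.append((
            n,
            cum,
            round(100 * n / total, 1),        # share of all responses in this wave
            round(100 * n / n_invited, 1),    # retention gained from this contact
            round(100 * cum / n_invited, 1),  # cumulative retention after this contact
        ))
    return rows


# Responses to the initial email and reminders 1-3, taken from Table 2.
t2 = retention_table([362, 117, 54, 66], n_invited=1047)
t3 = retention_table([272, 84, 69, 72], n_invited=1048)

for label, rows in (("T2", t2), ("T3", t3)):
    for row in rows:
        print(label, *row)
```

Reporting both the per-contact and the cumulative percentages shows how much each reminder adds beyond the initial invitation.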

Eligibility

The final step relating to recruitment is ensuring a large sample for analyses after data cleaning and exclusions. For T1 data collection, 592 students from 26 universities were eligible, 52.6% of the initial recruitment (Table 3, Figure 1). The majority of the participants removed were not first year students. At T2, 312 students were eligible, 52.0% of the 599 T2 responses. At T3, 282 of the 497 respondents were eligible, 56.7%. These students came from 23 different universities with an average of 12 participants per university.

Table 3:

Average and range of eligible respondents per university by survey time point

| Survey | Universities (total N) | Participants (total N) | Participants per university (mean N) | SD | Min | Max | % Eligible |
|---|---|---|---|---|---|---|---|
| T1 | 26 | 592 | 22.8 | 40.8 | 40.8 | 208 | 52.6 |
| T2 | 26 | 312 | 12.8 | 21.2 | 21.2 | 110 | 52.0 |
| T3 | 23 | 282 | 12.2 | 20 | 20 | 95 | 56.7 |

For the analyses, the datasets were cleaned to obtain a ‘longitudinal sample’ of those who answered T1, T2 and T3 and who had outcome data (weight and height at each time point). The sample was 215 students, 19.1% of the original 1126 responses recruited. The ‘complete case sample’, students who had answered all three time points and had data for all variables investigated, was composed of 151 students, 13.4% of the original recruitment sample.
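The shrinkage from recruited responses to analysable samples can be checked with a short calculation. The sketch below uses only the counts reported above; the helper `required_recruitment` and the target of 300 complete cases are hypothetical, added to illustrate how the observed yield could inform recruitment planning under the assumption that a future study sees similar attrition and eligibility.

```python
def percent_of(part, whole):
    """Percentage of `whole` represented by `part`, rounded to one decimal place."""
    return round(100 * part / whole, 1)


recruited_t1 = 1126
funnel = {
    "eligible at T1": 592,
    "longitudinal sample": 215,
    "complete-case sample": 151,
}
shares = {label: percent_of(n, recruited_t1) for label, n in funnel.items()}


def required_recruitment(target_final, recruited, final):
    """Recruits needed to reach `target_final`, assuming the same final/recruited yield."""
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-target_final * recruited // final)


# Hypothetical planning target: 300 complete cases at the yield observed here.
needed = required_recruitment(300, recruited_t1, funnel["complete-case sample"])
print(shares, needed)
```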

DISCUSSION

Recruitment and retention for the online survey, across 101 universities, had to be broken down into several steps. Of the 101 universities in England, 60 granted ethics approval, of which 28 authorized advertising of the survey to their student population. Universities imposed inconsistent restrictions on advertising strategies. Due to varying academic calendars, recruitment across universities had to be staggered. The most successful recruitment strategies were emails sent to students directly through the registrar, department or student union. The least successful were advertisements in the opt-in volunteer mailing list and job posting section of the university website. From the recruitment, 1126 responses from 26 universities were obtained, followed by 599 responses at T2 and 497 at T3. The follow-up retention rate ranged from 47% to 57% and the most successful contact was the first survey invitation email. Each of the subsequent three reminders increased retention by 5–11 percentage points. From the respondents at T1, T2 and T3, on average 53.7% were eligible, though only 151 students were included in a complete case sample. The final sample was 13.4% of the initially recruited sample and represented 0.2% of the first year undergraduate student population across the 21 universities (HESA, 2015).

These results highlight six challenges of (i) conducting research within settings such as universities and (ii) conducting online surveys within universities and the student population. The challenges were that (1) ethical approval does not mean approval to recruit, (2) mixed and inconsistent restrictions on recruitment advertisement are imposed, leading to diverse approaches across multiple sites, (3) academic calendars differ by university, rendering recruitment in 28 universities complicated and with increased bias, (4) online recruitment makes it difficult to estimate the overall recruitment success, as the advert reach is unknown, (5) high retention of participants in follow-up surveys is difficult to achieve in a student population and (6) a recruited participant is not necessarily an eligible student. These barriers and challenges can be large deterrents to multi-site or inter-settings research within universities.

Stemming from the second listed challenge, restrictions on advertisement for recruitment, social media posts through the student unions were used. Although adverts stated the eligibility criteria, respondents often did not follow them. Almost 50% of the sample was not eligible for analysis since many second and third year students answered the survey even though it specified first year students. This means that online surveys in universities need to either better target their adverts to the right audience (when possible) or cast a wide net during recruitment, as almost 50% of the sample may be ineligible during analyses. Researchers should aim to recruit as much as possible via emails as this proved to be the most effective method. Studies should also be designed with few ineligibility criteria, as these are likely to reduce the eligible sample considerably. The T2 and T3 retention rates of 47–57% in this study were within the same range as those of other online survey studies of similar student populations using several email reminders. In a meta-analysis on first year university weight gain, the authors found a weighted mean retention rate between the first and last time points of 57% (Vadeboncoeur et al., 2015). The studies included were online surveys, face-to-face interviews or weighing sessions. Similarly to this study, Göritz and Wolff (2007) found retention rates of 59 and 55% in waves three and four of a longitudinal study, where only those who had responded to wave one were invited to the subsequent waves. As listed in the challenges above, ensuring high retention needs to be prioritized by researchers, and special forms of incentives for those completing all time points should be considered.

The initial follow-up email was the most efficient, but each of the three additional contacts with students added a substantial number of responses. Between 20 and 28% of the T2 and T3 samples needed two or three reminders. This is similar to findings by Hiskey and Troop (2002), who found that 65% of those who responded to wave two did so after the first email reminder, that 17.5% of the sample required two reminders and that a further 17.5% required three reminders. This is in line with Schaefer and Dillman (1998), who stated that four contacts yield the highest response rate. If no follow-up reminders are sent, researchers can expect a response rate of less than 30% (Cook et al., 2000). It appears that contacting students for follow-up surveys through emails was easy and effective. Having students enter their email address at the beginning and at the end of the survey allowed us to correct 60 mistakes. We would recommend that all online survey researchers ensure double entry for emails; if the student had only been asked once to enter their email, 5.3% (60 individuals) of the recruited sample would have been lost. Seventy-eight respondents could not be reached for the T2 and T3 follow-ups and this may be due to students not knowing their university email address at the beginning of the year. Researchers employing similar study designs and methods should ensure double entry of emails and should send at least three email reminders.
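A double-entry check of this kind can be sketched as follows. The article does not describe how the two entries were reconciled, so the rules here (trimming whitespace, ignoring case, accepting a single non-empty entry and flagging disagreements for manual review) are assumptions chosen for illustration, and `reconcile_emails` is a hypothetical helper.

```python
def reconcile_emails(first_entry, second_entry):
    """Return (email, status) for the two address entries of one response."""
    a = first_entry.strip().lower()   # assumed normalization: trim and lowercase
    b = second_entry.strip().lower()
    if not a and not b:
        return None, "missing"        # participant cannot be followed up
    if a == b:
        return a, "match"
    if not a or not b:
        return a or b, "single entry"  # assumed rule: keep the one address given
    return None, "mismatch"           # entries disagree: flag for manual review


pairs = [
    (" Jane.Doe@uni.example ", "jane.doe@uni.example"),
    ("j.smith@uni.example", "j.smiht@uni.example"),
    ("", ""),
]
results = [reconcile_emails(first, second) for first, second in pairs]
```

Flagging mismatches instead of silently picking one entry keeps a person in the loop for the cases where a typo has to be judged by eye.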

It should be noted that the aim of the study was different from the aim of this article. Thus, there was no experimental design to test different specifications for conducting online surveys. The baseline recruitment rate is not known, since it was not known how many individuals were reached through online advertisements. Online surveys are also subject to possible coverage, sampling, measurement and non-response error (Umbach, 2004), but these limitations arise when interpreting the survey findings and not when analysing retention rates.

The above has described challenges researchers can face when conducting studies on university students. Recommendations aimed at researchers were formulated for many of the challenges. Nevertheless, to have a drastic impact on reducing these challenges, changes need to be made from the top down. At the broader country level, steps can be taken by universities to improve fairness, transparency and sustainability for researchers and students in the context of research recruitment of university students. This can be achieved within the scope of University Research Ethics Committees (URECs), which have the mandate, within their university, to provide ethics approval for student and faculty led research involving human participants. Their role could extend to formulating explicit guidelines, protocols and procedures for recruitment of students, which would be applicable to all researchers once they have been granted ethics approval. A follow-up step would be to establish a universal framework of recruitment guidelines across all universities in England, which individual URECs could modify slightly for university-specific requirements. The government’s research council should lead the establishment of this framework to ensure greater transparency and an open-access process for recruitment when research is deemed ethical. As a first step, the authors believe that it does not require much of universities to have recruitment guidelines, which would be greatly beneficial to the research community and to students. The guidelines should help in stipulating how, when and how often students can be reached for recruitment, which in turn could reduce recruitment fatigue. For example, universities could set up opt-out emailing lists with a few profiling specifications (year of study, programme of study) to be used on a monthly basis to advertise research recruitment.

It is important to increase accessibility of specific settings, such as universities, as research within these populations is valuable and useful. However, it is critical to have a strategy to deal with the increase in requests for student participants. Survey fatigue among students could lead to poor future recruitment and poor quality data. The authors have previously (Vadeboncoeur et al., 2016b) argued for redefining the England-wide university ethics governance framework and here we suggest that a framework for recruitment in the university setting should be added to the agenda.

In conclusion, research on students within the university setting is a time-consuming and challenging task. Researchers aiming to obtain a representative sample across many sites must overcome many steps and barriers. In this study, we found that after obtaining ethics approval, research advertisement in universities was a major barrier as it was usually only possible through the student union. Once the students were recruited, the retention rates were adequate and each of the four contacts with students regarding the follow-up was useful. The final sample for data analysis was 13.4% of the recruited sample, indicating that for longitudinal studies with 10-minute surveys, researchers need to invest considerable effort in recruitment since retention and eligibility have drastic impacts on the final numbers. Researchers should aim for targeted recruitment email adverts while universities should be open to improving research on an important population such as students. When considering the challenges of obtaining ethics approval (Vadeboncoeur et al., 2016b) and of recruitment, research in several universities becomes daunting. If England wants more and larger studies on young adults in universities, more appropriate, fair, transparent and consistent guidelines for recruitment ought to be developed. This could be achieved through URECs at the university level or through a governmental effort in developing a recruitment framework across universities. Unless these mechanisms within universities become standardized, it is unrealistic for researchers to expect large samples when conducting settings research.

FUNDING

N.T. (006/P&C/CORE/2013/OXFSTATS) and C.F. (006/PSS/CORE/2016/OXFORD) receive funding from the British Heart Foundation (BHF). This work was also supported by Nuffield Department of Population Health and the Clarendon Fund Scholarship.

CONFLICT OF INTEREST STATEMENT

The authors declare that they have no conflict of interest.

REFERENCES

- Abbott L., Grady C. (2011) A systematic review of the empirical literature evaluating IRBs: what we know and what we still need to learn. Journal of Empirical Research on Human Research Ethics, 6, 3–19.

- Alibali M. W., Nathan M. J. (2010) Conducting research in schools: a practical guide. Journal of Cognition and Development, 11, 397–407.

- Axinn W. G., Pearce L. D. (2006) Mixed Method Data Collection Strategies. Cambridge: Cambridge University Press.

- Baruch Y., Holtom B. C. (2008) Survey response rate levels and trends in organizational research. Human Relations, 61, 1139–1160.

- Blinn-Pike L., Berger T., Rea-Holloway M. (2000) Conducting adolescent sexuality research in schools: lessons learned. Family Planning Perspectives, 32, 246–251.

- Bosnjak M., Tuten T. L. (2003) Prepaid and promised incentives in web surveys: an experiment. Social Science Computer Review, 21, 208–217.

- Cook C., Heath F., Thompson R. L. (2000) A meta-analysis of response rates in web- or internet-based surveys. Educational and Psychological Measurement, 60, 821–836.

- Crombie A., Ilich J., Dutton G., Panton L., Abood D. (2009) The freshman weight gain phenomenon revisited. Nutrition Reviews, 67, 83–94.

- Deutskens E., De Ruyter K., Wetzels M., Oosterveld P. (2004) Response rate and response quality of internet-based surveys: an experimental study. Marketing Letters, 15, 21–36.

- Dooris M. (2009) Holistic and sustainable health improvement: the contribution of the settings-based approach to health promotion. Perspectives in Public Health, 129, 29–36.

- Edwards S. J., Ashcroft R., Kirchin S. (2004) Research ethics committees: differences and moral judgement. Bioethics, 18, 408–427.

- ESRC. (2015) ESRC Framework for research ethics. Available from http://www.esrc.ac.uk/files/funding/guidance-for-applicants/esrc-framework-for-research-ethics-2015/ (last accessed January 2016).

- Foster C. E., Brennan G., Matthews A., McAdam C., Fitzsimons C., Mutrie N. (2011) Recruiting participants to walking intervention studies: a systematic review. International Journal of Behavioral Nutrition and Physical Activity, 8, 137.

- Göritz A. S. (2006) Incentives in web studies: methodological issues and a review. International Journal of Internet Science, 1, 58–70.

- Göritz A. S., Wolff H. G. (2007) Lotteries as incentives in longitudinal web studies. Social Science Computer Review, 25, 99–110.

- Griffis S. E., Goldsby T. J., Cooper M. (2003) Web-based and mail surveys: a comparison of response, data, and cost. Journal of Business Logistics, 24, 237–258.

- Guo S. S., Wu W., Chumlea W. C., Roche A. F. (2002) Predicting overweight and obesity in adulthood from body mass index values in childhood and adolescence. American Journal of Clinical Nutrition, 76, 653–658.

- HESA. (2015) Higher Education Student Enrolments and Qualifications Obtained at Higher Education Providers in the United Kingdom 2013/15. https://www.hesa.ac.uk/data-and-analysis (last accessed January 2016).

- Hewson C., Vogel C., Laurent D. (2015) Internet Research Methods. London: Sage.

- Hiskey S., Troop N. A. (2002) Online longitudinal survey research: viability and participation. Social Science Computer Review, 20, 250–259.

- Hoonakker P., Carayon P. (2009) Questionnaire survey nonresponse: a comparison of postal mail and internet surveys. International Journal of Human–Computer Interaction, 25, 348–373.

- OECD. (2012) Education at a Glance 2012: OECD Indicators. Available from: 10.1787/eag-2012-en (last accessed January 2016).

- Office for National Statistics. (2013) Internet Access - Households and Individuals: 2013. Statistical bulletin. Available from: http://www.ons.gov.uk/peoplepopulationandcommunity/ (last accessed January 2016).

- Schaefer D. R., Dillman D. A. (1998) Development of a standard e-mail methodology: results of an experiment. Public Opinion Quarterly, 62, 378–397.

- Shih T.-H., Fan X. (2008) Comparing response rates from web and mail surveys: a meta-analysis. Field Methods, 20, 249–271.

- Sue V. M., Ritter L. A. (2011) Conducting Online Surveys. Thousand Oaks, CA: SAGE Publications.

- Umbach P. D. (2004) Web surveys: best practices. New Directions for Institutional Research, 2004, 23–38.

- Universities UK. (2015) Patterns and trends in UK higher education 2015. Available from: http://www.universitiesuk.ac.uk/ (last accessed July 2016).

- Vadeboncoeur C., Townsend N., Foster C. (2015) A meta-analysis of weight gain in first year university students: is freshman 15 a myth? BMC Obesity, 2, 22.

- Vadeboncoeur C., Foster C., Townsend N. (2016a) Freshman 15 in England: a longitudinal evaluation of first year university student's weight change. BMC Obesity, 3, 45–53.

- Vadeboncoeur C., Townsend N., Foster C., Sheehan M. (2016b) Variation in university research ethics review: Reflections following an inter-university study in England. Research Ethics, 12, 217–233.

- Vella-Zarb R., Elgar F. (2009) The ‘freshman 5’: a meta-analysis of weight gain in the freshman year of college. Journal of American College Health, 58, 161–166.

- Visanji E., Oldham J. (2013) Patient Recruitment in Clinical Trials: A Review of Literature. Physical Therapy Reviews, 6, 141–150.

- Whitelaw S., Baxendale A., Bryce C., MacHardy L., Young I., Witney E. (2001) ‘Settings’ based health promotion: a review. Health Promotion International, 16, 339–353.

- World Health Organization (1998) Health Promoting Universities: Concept, Experience and Framework for Action. Geneva: World Health Organization.