Abstract

Kernel entropy component analysis (KECA) is a newly proposed dimensionality reduction (DR) method, which has showed superiority in many pattern analysis issues previously solved by principal component analysis (PCA). The optimized KECA (OKECA) is a state-of-the-art variant of KECA and can return projections retaining more expressive power than KECA. However, OKECA is sensitive to outliers and accused of its high computational complexities due to its inherent properties of L2-norm. To handle these two problems, we develop a new extension to KECA, namely, KECA-L1, for DR or feature extraction. KECA-L1 aims to find a more robust kernel decomposition matrix such that the extracted features retain information potential as much as possible, which is measured by L1-norm. Accordingly, we design a nongreedy iterative algorithm which has much faster convergence than OKECA's. Moreover, a general semisupervised classifier is developed for KECA-based methods and employed into the data classification. Extensive experiments on data classification and software defect prediction demonstrate that our new method is superior to most existing KECA- and PCA-based approaches. Code has been also made publicly available.

1. Introduction

Curse of dimensionality is one of the major issues in machine learning and pattern recognition [1]. It has motivated many scholars from different areas to properly implement dimensionality reduction (DR) to simplify the input space without degrading performances of learning algorithms. Various efficient methods associated with DR have been developed, such as independent component analysis (ICA) [2], linear discriminant analysis [3], principal component analysis (PCA) [4], projection pursuit [5], to name a few. Among these robust algorithms, PCA has been one of the most used techniques to perform feature extraction (or DR). PCA implements linear data transformation according to the projection matrix, which aims to maximize the second-order statistics of input datasets [6]. To extend PCA to nonlinear space, Schölkopf et al. [7] proposed the kernel PCA, the so-called KPCA method. The key of KPCA is to find the nonlinear relation between the input data and the kernel feature space (KFS) using the kernel matrix, which is derived from a positive semidefinite kernel function of computing inner products. Both PCA and KPCA perform data transformation by selecting the eigenvectors corresponding to the top eigenvalues of the projection matrix and the kernel matrix, respectively. All of them (including their variants) have experienced great success in different areas [8–12], such as image reconstruction [13], face recognition [14–17], image processing [18, 19], to name a few. However, as suggested by Zhang and Hancock [20], the DR should be performed according to the perspective of information theory for obtaining more acceptable results.

To improve performances of the aforementioned approaches to DR, Jessen [6] developed a new and completely different data transformation algorithm, namely, kernel entropy component analysis (KECA). The main difference between KECA and PCA or KPCA is that the optimal eigenvectors (or called entropic components) derived from KECA can compress the most Renyi entropy of the input data instead of being associated with top eigenvalues. The procedure of selecting the eigenvectors related to the Renyi entropy of the input space is started with a Parzen window kernel-based estimator [21]. Then, only the eigenvectors corresponding to the most entropy of the input datasets are selected to perform DR. This distinguished characteristic helps KECA achieve better performances than the classical PCA and KPCA in face recognition and clustering [6]. In recent years, Izquierdo-Verdiguier et al. [21] employed the rotation matrix from ICA [2] to optimize KECA and proposed the optimized KECA (OKECA). OKECA not only shows superiority in classification of both synthetic and real datasets but can obtain acceptable kernel density estimation (KDE) just using very fewer entropic components (just one or two) compared with KECA [21]. However, OKECA is sensitive to outliers for its inherent properties of L2-norm. In other words, if the input space follows normal distribution and is contaminated by nonnormal distributed outliers, this may lead to the downgrade of its performance on DR in terms of OKECA. Additionally, OKECA is very time-consuming when handling large-scale input datasets (Section 4).

Therefore, the main purpose of this paper is to propose a new variant of KECA and improve the proneness to outliers and efficiency of OKECA. L1-norm is well known for its robustness to outliers [22]. Additionally, Nie et al. [23] established a fast iteration process to handle the general L1-norm maximization issue with nongreedy algorithm. Hence, we take advantages of OKECA and propose a new L1-norm version of KECA (denoted as KECA-L1). KECA-L1 uses an efficient convergence procedure, motivated by Nie et al.'s method [23], to search for the entropic components contributing to the most Renyi entropy of input data. To evaluate the efficiency and effectiveness of KECA-L1, we design and conduct a series of experiments, in which the data vary from single class to multiattribute and from small to large size. The classical KECA and OKECA are also included for comparison.

The remainder of this paper is organized as follows: Section 2 reviews the general L1-norm maximization issue, KECA, and OKECA. Section 3 presents KECA with nongreedy L1-norm maximization and semisupervised-learning-based classifier. Section 4 validates the performance of the new method on different data sets. Section 5 ends this paper with some conclusions.

2. Preliminaries

2.1. An Efficient Algorithm to Solving the General L1-Norm Maximization Issue

The general L1-norm maximization problem is first raised by Nie et al. [23]. This issue, based on a hypothesis that there exists an upper bound for the objective function, can be generally formulated as [23]

| (1) |

where both f(ν) and g i(ν) for each i denote arbitrary functions, and ν ∈ 𝒞 represents an arbitrary constraint.

Then a sign function sign(·) is defined as

| (2) |

and employed to transform the maximization problem (1) as follows:

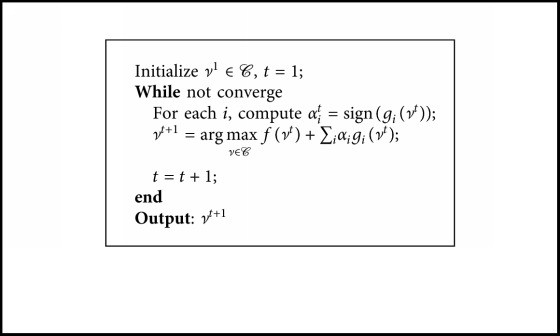

| (3) |

where α i=sign(g i(ν)). Nie et al. [23] proposed a fast iteration process to solve problem (3), which is shown in Algorithm 1. It can be seen from Algorithm 1 that α i is determined by current solution ν t, and the next solution ν t+1 is updated according to the current α i. The iterative process is repeated until the procedure converges [23, 24]. The convergence of the Algorithm 1 has been demonstrated, and the associated details can also be read in [23].

Algorithm 1.

Fast iteration approach to solving the general L1-Norm maximization problem (3).

2.2. Kernel Entropy Component Analysis

KECA is characterized by its entropic components instead of the principal or variance-based components in PCA or KPCA, respectively. Hence, we firstly describe the concept of the Renyi quadratic entropy. Given the input dataset X=[x 1,…, x N](x i ∈ ℝ D), the Renyi entropy of X is defined as [6]

| (4) |

where p(x) is a probability density function. Based on the monotonic property of logarithmic function, Equation (4) can be rewritten as

| (5) |

We can estimate Equation (5) using the kernel k σ(x, x t) of Parzen window density estimator determined by the bandwidth coefficient σ [6] such that

| (6) |

where K ij=k σ(x i, x j) constitutes the kernel matrix K and 1 represents an N-dimensional vector containing all ones. With the help of the kernel decomposition [6],

| (7) |

Equation (6) is transformed as follows:

| (8) |

where the diagonal matrix D and the matrix E consist of eigenvalues λ 1,…, λ N and the corresponding eigenvectors e 1,…, e N, respectively. It can be observed from Equation (7) that the entropy estimator consists of projections onto all the KFS axes because

| (9) |

where the function of ϕ(·) is to map the two samples x i and x j into the KFS. Additionally, only an entropic component e i meeting the criteria of λ i ≠ 0 and 1 T e i ≠ 0 can contribute to the entropy estimate [21]. In a word, KECA implements DR by projecting ϕ(X) into a subspace E l spanned not by the eigenvectors associated with the top eigenvalues but by entropic components contributing most to the Renyi entropy estimator [25].

2.3. Optimized Kernel Entropy Component Analysis

Due to the fact that KECA is sensitive to different bandwidth coefficients σ [21], OKECA is proposed to fill this gap and improve performances of KECA on DR. Motivated by the fast ICA method [2], an extra rotation matrix (applying W) is employed to the kernel decomposition (Equation (7)) in KECA for maximizing the information potential (the entropy values in Equation (8)) [21]:

| (10) |

where ‖·‖2 is the L2-norm and w denotes a column vector (N × 1) in W. Izquierdo-Verdiguier et al. [21] utilized a gradient-ascent approach to handle the maximization problem (10):

| (11) |

where τ is the step size. ∂J/∂w(t) can be obtained by Lagrangian multiplier:

| (12) |

The entropic components multiplied by the rotation matrix can obtain more (or equal) information potential than that of the KECA even using fewer components [21]. Moreover, OKECA shows the capability of being robust to the bandwidth coefficient. However, there exist two main limitations for OKECA. First, the new entropic components derived from OKECA are sensible to outliers since its inherent properties of L2-norm (Equation (10)). Second, although a very simple stopping criterion is designed to avoid additional iterations, OKECA is still of high computational complexities for its computational cost is O(N 3+4tN 2) [21], where t is the number of iterations for finding the optimal rotation matrix, compared with that the one of KECA is O(N 3) [21].

3. KECA with Nongreedy L1-Norm Maximization

3.1. Algorithm

In order to alleviate the problems existing in OKECA, this section presents how to extend KECA to its nongreedy L1-norm version. For readers' easy understanding, the definition of L1-norm is firstly introduced as follows:

Definition 1. Given an arbitrary vector x ∈ ℝ N×1, the L1-norm of the vector x is

| (13) |

where ‖·‖1 is the L1-norm and x j denotes the jth element of x.

Then, motivated by OKECA, we attempt to develop a new objective function to maximize the information potential (Equations (8) and (10)) based on the L1-norm:

| (14) |

where (a 1,…, a N)=A=E D 1/2, N is the size of samples. The rotation matrix is denoted as W ∈ ℝ DIM×m, where DIM and m are the dimension of input data and dimension of the selected entropic components (or number of projection), respectively. It is difficult to directly solve problem (14), but we may regard it as a special case of problem (1) when f(ν) ≡ 0. Therefore, the Algorithm 1 can be employed to solve (14). Next, we show the details about how to find the optimal solution of problem (14) based on the proposal from References [23, 24]. Let

| (15) |

Thus, problem (14) can be simplified as

| (16) |

By singular value decomposition (SVD), then

| (17) |

where U ∈ ℝ DIM×DIM, Λ ∈ ℝ DIM×m, and V ∈ ℝ m×m. Then we obtain

| (18) |

where Z ∈ ℝ m×m, λ ii and z ii denote the (i, i) − th element of matrix Λ and Z, respectively. Due to the property of SVD, we have λ ii ≥ 0. Additionally, Z is an orthonormal matrix [23] such that z ii ≤ 1. Therefore, Tr(W T M) can reach the maximum only if Z=[I m, 0 m×(DIM − m)], where I m denotes the m × m identity matrix, and 0 m×(DIM − m) is a m × (DIM − m) matrix of zeros. Considering that Z=V T W T U, thus the solution to problem (16) is

| (19) |

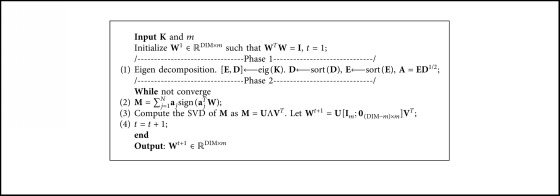

Algorithm 2 (A MATLAB implementation of the algorithm is available at the Supporting Document for the interested readers) shows how to utilize the nongreedy L1-norm maximization described in Algorithm 1 to compute Equation (19). Since problem (16) is a special case of problem (1), we can obviously obtain that the optimal solution W ∗ to Equation (19) is a local maximum point for ‖W T E D 1/2‖1 based on Theorem 2 in Reference [23]. Moreover, the Phase 1 of the Algorithm 2 spends O(N 3) on the eigen decomposition. Thus, the total of computational cost of KECA-L1 is O(N 3+Nt), where t is the number of iterations for convergence. Considering that the computational complexity of OKECA is O(N 3+4tN 2), we can safely conclude that KECA-L1 has much faster convergence than OKECA's.

Algorithm 2.

KECA-L1.

3.2. The Convergence Analysis

This subsection attempts to demonstrate the convergence of the Algorithm 2 in the following: theorem:

Theorem 1 . —

The above KECA-L1 procedure can converge.

Proof —

Motivated by References [23, 24], first we show the objective function (9) of KECA-L1 will monotonically increase in each iteration t. Let g i(u t)=W T a j and α i t=sign(a j T W), then (9) can be simplified to

(20) Obviously, α i t+1 is parallel to g i(u t+1), but neither is α i t. Therefore,

(21) Considering that |g i(u t)|=α i t g i(u t), thus

(22) Substituting (22) in (21), it can be obtained

(23) According to the Step 3 in Algorithm 2 and the theory of SVD, for each iteration t, we have

(24) Combining (23) and (24) for every i, we have

(25) which means that Algorithm 2 is monotonically increasing. Additionally, considering that objective function (14) of KECA-L1 has an upper bound within the limited iterations, the KECA-L1 procedure will converge.

3.3. The Semisupervised Classifier

Jenssen [26] established a semisupervised learning (SSL) algorithm for classification using KECA. This SSL-based classifier was trained by both labeled and unlabeled data to build the kernel matrix such that it can map the data to KFS appropriately [26]. Additionally, it is based on a general modelling scheme and applicable for other variants of KECA, such as OKECA and KECA-L1.

More specifically, we are given N pairs of training data {x i, y i}i=1 N with samples x i ∈ ℝ D and the associated labels y i. In addition, there are M unlabeled data points for testing. Let X u=[x u 1,…, x u M] and X l=[x l 1,…, x l N] denote the testing data and training data without labels, respectively; thus, we can obtain an overall matrix X=[X u X l]. Then we construct the kernel matrix K derived from X using (6), K ∈ ℝ (N+M)×(N+M), which plays as the input of Algorithm 2. After the iteration procedure of nongreedy L1-norm maximization, we obtain a projection of onto m orthogonal axes, where and . In other words, and are the low-dimensional representations of each testing data point x u i and the training one x l j, respectively. Assume that x u ∗ is an arbitrary data point to be tested. If it satisfies

| (26) |

then x u ∗ is assigned to the same class with the jth data point of X l.

4. Experiments

This section shows the performance of the proposed KECA-L1 compared with the classical KECA [6] and OKECA [21] for real-world data classification using the SSL-based classifier illustrated in Section 3.3. Several recent techniques such as PCA-L1 [27] and KPCA-L1 [28] are also included for comparison. The rationale to select these methods is that previous studies related to DR found that they can produce impressive results [27–29]. We implement the experiments on a wide range of real-world datasets: (1) six different datasets from the University California Irvine (UCI) Machine Learning Repository (available at http://archive.ics.uci.edu/ml/datasets.html) and (2) 9 different software projects with 34 releases from the PROMISE data repository (available at http://openscience.us/repo). The MATLAB source code for running KECA and OKECA, uploaded by Izquierdo-Verdiguier et al. [21], is available at http://isp.uv.es/soft_feature.html. The coefficients set for PCA-L1 and KPCA-L1 is the same with [27, 28]. All of the experiments are all performed by MATLAB R2012a on a PC with Inter Core i5 CPU, 4 GB memory, and Windows 7 operating system.

4.1. Experiments on UCI Datasets

The experiments are conducted on six datasets from the UCI: the Inonosphere dataset is a binary classification problem of whether the radar signal can describe the structure of free electrons in the ionosphere or not; the Letter dataset is to assign each black-and-white rectangular pixel display to one of the 26 capital letters in the English alphabet; the Pendigits handles the recognition of pen-based handwritten digits; the Pima-Indians data set constitutes a clinical problem of diabetes diagnosis in patients from clinical variables; the WDBC dataset is another clinical problem for the diagnosis of breast cancer in malignant or benign classes; and the Wine dataset is the result of a chemical analysis of wines grown in the same region in Italy but derived from three different cultivars. Table 1 shows the details of them. In the subsequent experiments, we just utilized the simplest linear classifier [30]. The theory of maximizing maximum likelihood (ML) [31] is selected as the rule for selecting bandwidth coefficient as suggested in [21].

Table 1.

UCI datasets description.

| Database | N | DIM | N c | N train | N test |

|---|---|---|---|---|---|

| Ionosphere | 351 | 33 | 2 | 30 × 2 | 175 |

| Letter | 20000 | 16 | 26 | 35 × 26 | 3870 |

| Pendigits | 10992 | 16 | 9 | 60 × 9 | 3500 |

| Pima-Indians | 768 | 8 | 2 | 100 × 2 | 325 |

| WDBC | 569 | 30 | 2 | 35 × 2 | 345 |

| Wine | 178 | 12 | 3 | 30 × 3 | 80 |

N: number of samples, DIM: number of dimensions, N c: number of classes, N train: number of training data, and N test: number of testing data.

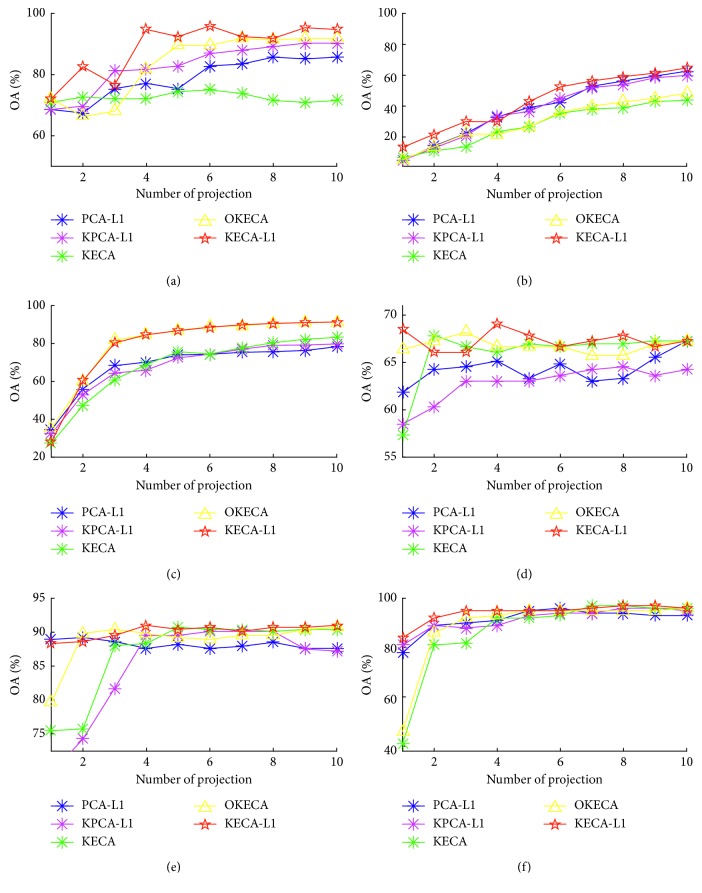

The implementation of KECA-L1 and other methods is repeated using all the selected datasets with respect to different numbers of components for 10 times. We have utilized the overall classification accuracy (OA) to evaluate the performance of different algorithms on the classification. OA is defined as the total number of samples correctly assigned in percentage terms, which is within [0,1] and indicates better quality with larger values. Figure 1 presents the average OA curves obtained by the aforementioned algorithms for these six real datasets. It can be observed from Figure 1 that OKECA is superior to KECA, PCA-L1, and KPCA-L1 except for solving Letter issue. This is probably because DR performed by OKECA not only can reveal the structure related to the most Renyi entropy of the original data but also consider the rotational invariance property [21]. In addition, KECA-L1 outperforms the other methods besides of OKECA. This may be attributed to the robustness of L1-norm to outliers compared with that of the L2-norm. In Figure 1, OKECA seems to obtain nearly the same results with KECA-L1's. However, the average running time (in hours) of OKECA in the Pendigits is 37.384 times more than that of KECA-L1 1.339.

Figure 1.

Overall accuracy obtained by the PCA-L1, KPCA-L1, KECA, OKECA, and KECA-L1 using different UCI databases with different numbers of extracted features. (a) Ionosphere, (b) Letter, (c) Pendigits, (d) Pima-Indians, (e) WDBC, and (f) Wine.

4.2. Experiments on Software Projects

In software engineering, it is usually difficult to test a software project completely and thoroughly with the limited resources [32]. Software defect prediction (SDP) may provide a relatively acceptable solution to this problem. It can allocate the limited test resources effectively by categorizing the software modules into two classes: nonfault-prone (NFP) or fault-prone (FP) according to 21 software metrics (Table 2).

Table 2.

Descriptions of data attributes.

| Attribute | Description |

|---|---|

| WMC | Weighted methods per class |

| AMC | Average method Complexity |

| AVG_CC | Mean values of methods in the same class |

| CA | Afferent couplings |

| CAM | Cohesion among methods of class |

| CBM | Coupling between Methods |

| CBO | Coupling between object classes |

| CE | Efferent couplings |

| DAM | Data access Metric |

| DIT | Depth of inheritance tree |

| IC | Inheritance Coupling |

| LCOM | Lack of cohesion in Methods |

| LCOM3 | Normalized version of LCOM |

| LOC | Lines of code |

| MAX_CC | Maximum values of methods in the same class |

| MFA | Measure of function Abstraction |

| MOA | Measure of Aggregation |

| NOC | Number of Children |

| NPM | Number of public Methods |

| RFC | Response for a class |

| Bug | Number of bugs detected in the class |

This section aims to employ KECA-based methods to reduce the selected software data (Table 3) dimensions and then utilize the SSL-based classifier combined with the support vector machine [33] to classify each software module as NFP or FP. The bandwidth coefficient set is still restricted to the rule of ML. PCA-L1 and KPCA-L1 are involved as a benchmarking yardstick. There are 34 groups of tests for each release in Table 3. The most suitable releases [34] from different software projects are selected as training data. We evaluate the performance of different selected methods on SDP in terms of recall (R), precision (P), and F-measure (F) [35, 36]. The F-measure is defined as

| (27) |

where

| (28) |

Table 3.

Descriptions of software data.

| Releases | #Classes | #FP | % FP |

|---|---|---|---|

| Ant-1.3 | 125 | 20 | 0.160 |

| Ant-1.4 | 178 | 40 | 0.225 |

| Ant-1.5 | 293 | 32 | 0.109 |

| Ant-1.6 | 351 | 92 | 0.262 |

| Ant-1.7 | 745 | 166 | 0.223 |

| Camel-1.0 | 339 | 13 | 0.038 |

| Camel-1.2 | 608 | 216 | 0.355 |

| Camel-1.4 | 872 | 145 | 0.166 |

| Camel-1.6 | 965 | 188 | 0.195 |

| Ivy-1.1 | 111 | 63 | 0.568 |

| Ivy-1.4 | 241 | 16 | 0.066 |

| Ivy-2.0 | 352 | 40 | 0.114 |

| Jedit-3.2 | 272 | 90 | 0.331 |

| Jedit-4.0 | 306 | 75 | 0.245 |

| Lucene-2.0 | 195 | 91 | 0.467 |

| Lucene-2.2 | 247 | 144 | 0.583 |

| Lucene-2.4 | 340 | 203 | 0.597 |

| Poi-1.5 | 237 | 141 | 0.595 |

| Poi-2.0 | 314 | 37 | 0.118 |

| Poi-2.5 | 385 | 248 | 0.644 |

| Poi-3.0 | 442 | 281 | 0.636 |

| Synapse-1.0 | 157 | 16 | 0.102 |

| Synapse-1.1 | 222 | 60 | 0.270 |

| Synapse-1.2 | 256 | 86 | 0.336 |

| Synapse-1.4 | 196 | 147 | 0.750 |

| Synapse-1.5 | 214 | 142 | 0.664 |

| Synapse-1.6 | 229 | 78 | 0.341 |

| Xalan-2.4 | 723 | 110 | 0.152 |

| Xalan-2.5 | 803 | 387 | 0.482 |

| Xalan-2.6 | 885 | 411 | 0.464 |

| Xerces-init | 162 | 77 | 0.475 |

| Xerces-1.2 | 440 | 71 | 0.161 |

| Xerces-1.3 | 453 | 69 | 0.152 |

| Xerces-1.4 | 588 | 437 | 0.743 |

In (28), FN (i.e., false negative) means that buggy classes are wrongly classified to be nonfaulty, while FP (i.e., false positive) means nonbuggy classes are wrongly classified to be faulty. TP (i.e., true positive) refer to correctly classified buggy classes [34]. Values of Recall, Precision, and F-measure range from 0 to 1 and higher values indicate better classification results.

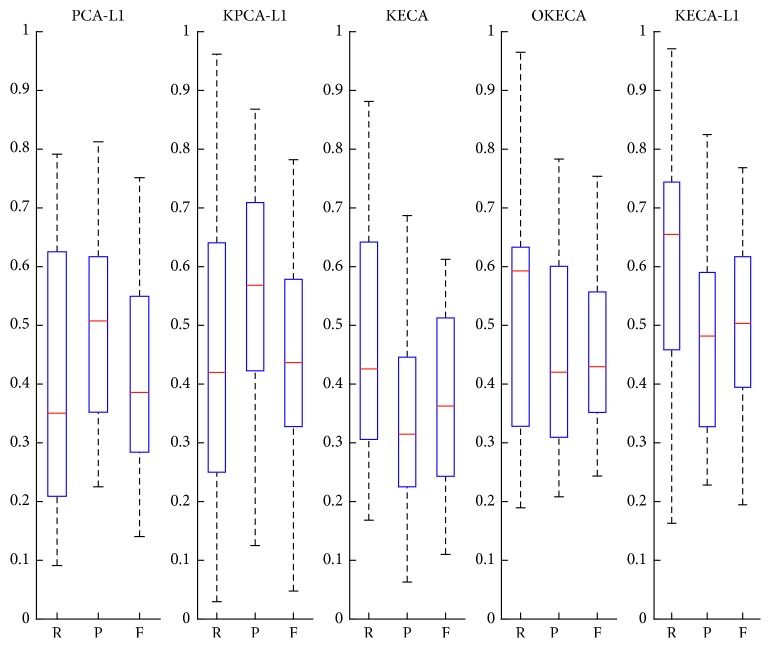

Figure 2 shows the results using box-plot analysis. From Figure 2, considering the minimum, maximum, median, first quartile, and third quartile of the boxes, we find that KECA-L1 performs better than the other methods in general. Specifically, KECA-L1 can obtain acceptable results in experiments for SDP compared with the benchmarks proposed in Reference [34], since the median values of the boxes with respect to R and F are close to 0.7 and more than 0.5, respectively. On the contrary, not only KECA and OKECA but PCA-L1 and KPCA-L1 cannot meet these criteria. Therefore, all of the results validate the robustness of KECA-L1.

Figure 2.

The standardized boxplots of the performance achieved by PCA-L1, KPCA-L1, KECA, OKECA, and KECA-L1, respectively. From the bottom to the top of a standardized box plot: minimum, first quartile, median, third quartile, and maximum.

5. Conclusions

This paper proposes a new extension to the OKECA approach for dimensional reduction. The new method (i.e., KECA-L1) employs L1-norm and a rotation matrix to maximize information potential of the input data. In order to find the optimal entropic kernel components, motivated by Nie et al.'s algorithm [23], we design a nongreedy iterative process which has much faster convergence than OKECA's. Moreover, a general semisupervised learning algorithm has been established for classification using KECA-L1. Compared with several recently proposed KECA- and PCA-based approaches, this SSL-based classifier can remarkably promote the performance on real-world datasets classification and software defect prediction.

Although KECA-L1 has achieved impressive success on real examples, several problems still should be considered and solved in the future research. The efficiency of KECA-L1 has to be optimized for it is relatively time-consuming compared with most existing PCA-based methods. Additionally, the utilization of KECA-L1 is expected to appear in each pattern analysis algorithm previously based on PCA approaches.

Acknowledgments

This work was supported by the National Natural Science Foundation of China (Grant no. 61702544) and Natural Science Foundation of Jiangsu Province of China (Grant no. BK20160769).

Data Availability

The data used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Supplementary Materials

The MATLAB toolbox of KECA-L1 is available.

References

- 1.Gao Q., Xu S., Chen F., Ding C., Gao X., Li Y. R₁-2-DPCA and face recognition. IEEE Transactions on Cybernetics. 2018;99:1–12. doi: 10.1109/tcyb.2018.2796642. [DOI] [PubMed] [Google Scholar]

- 2.Hyvärinen A., Oja E. Independent component analysis: algorithms and applications. Neural Networks. 2000;13(4-5):411–430. doi: 10.1016/s0893-6080(00)00026-5. [DOI] [PubMed] [Google Scholar]

- 3.Belhumeur P. N., Hespanha J. P., Kriegman D. J. Eigenfaces vs. Fisherfaces: recognition using class specific linear projection. European Conference on Computer Vision. 1996;1:43–58. doi: 10.1007/bfb0015522. [DOI] [Google Scholar]

- 4.Turk M., Pentland A. Eigenfaces for recognition. Journal of Cognitive Neuroscience. 1991;3(1):71–86. doi: 10.1162/jocn.1991.3.1.71. [DOI] [PubMed] [Google Scholar]

- 5.Friedman J. H., Tukey J. W. A projection pursuit algorithm for exploratory data analysis. IEEE Transactions on Computers. 1974;23(9):881–890. doi: 10.1109/t-c.1974.224051. [DOI] [Google Scholar]

- 6.Jenssen R. Kernel entropy component analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2010;32(5):847–860. doi: 10.1109/tpami.2009.100. [DOI] [PubMed] [Google Scholar]

- 7.Schölkopf B., Smola A., Müller K. R. Nonlinear component analysis as a kernel eigenvalue problem. Neural Computation. 1998;10(5):1299–1319. doi: 10.1162/089976698300017467. [DOI] [Google Scholar]

- 8.Mika S., Smola A., Scholz M. Kernel PCA and de-noising in feature spaces. Conference on Advances in Neural Information Processing Systems II. 1999;11:536–542. [Google Scholar]

- 9.Yang J., Zhang D., Frangi A. F., Yang J. Y. Two-dimensional PCA: a new approach to appearance-based face representation and recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2004;26(1):131–137. doi: 10.1109/tpami.2004.1261097. [DOI] [PubMed] [Google Scholar]

- 10.Nishino K., Nayar S. K., Jebara T. Clustered blockwise PCA for representing visual data. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2005;27(10):1675–1679. doi: 10.1109/tpami.2005.193. [DOI] [PubMed] [Google Scholar]

- 11.Ke Y., Sukthankar R. PCA-SIFT: a more distinctive representation for local image descriptors. Proceedings of IEEE Computer Society Conference on Computer Vision and Pattern Recognition; June-July 2004; Washington, DC, USA. pp. 506–513. [Google Scholar]

- 12.D’Aspremont A., El Ghaoui L., Jordan M. I., Lanckriet G. R. G. A direct formulation for sparse PCA using semidefinite programming. SIAM Review. 2007;49(3):434–448. doi: 10.1137/050645506. [DOI] [Google Scholar]

- 13.Luo M., Nie F., Chang X., Yang Y., Hauptmann A., Zheng Q. Avoiding optimal mean robust PCA/2DPCA with non-greedy L1-norm maximization. Proceedings of International Joint Conference on Artificial Intelligence; July 2016; New York, NY, USA. pp. 1802–1808. [Google Scholar]

- 14.Yu Q., Wang R., Yang X., Li B. N., Yao M. Diagonal principal component analysis with non-greedy L1-norm maximization for face recognition. Neurocomputing. 2016;171:57–62. doi: 10.1016/j.neucom.2015.06.011. [DOI] [Google Scholar]

- 15.Li B. N., Yu Q., Wang R., Xiang K., Wang M., Li X. Block principal component analysis with nongreedy L1-norm maximization. IEEE Transactions on Cybernetics. 2016;46(11):2543–2547. doi: 10.1109/tcyb.2015.2479645. [DOI] [PubMed] [Google Scholar]

- 16.Nie F., Huang H. Non-greedy L21-norm maximization for principal component analysis. 2016. http://arxiv.org/abs/1603.08293v1. [DOI] [PubMed]

- 17.Nie F., Yuan J., Huang H. Optimal mean robust principal component analysis. Proceedings of International Conference on Machine Learning; June 2014; Beijing, China. pp. 1062–1070. [Google Scholar]

- 18.Wang R., Nie F., Hong R., Chang X., Yang X., Yu W. Fast and orthogonal locality preserving projections for dimensionality reduction. IEEE Transactions on Image Processing. 2017;26(10):5019–5030. doi: 10.1109/TIP.2017.2726188. [DOI] [PubMed] [Google Scholar]

- 19.Zhang C., Nie F., Xiang S. A general kernelization framework for learning algorithms based on kernel PCA. Neurocomputing. 2010;73(4–6):959–967. doi: 10.1016/j.neucom.2009.08.014. [DOI] [Google Scholar]

- 20.Zhang Z., Hancock E. R. Kernel entropy-based unsupervised spectral feature selection. International Journal of Pattern Recognition and Artificial Intelligence. 2012;26(5) doi: 10.1142/s0218001412600026.1260002 [DOI] [Google Scholar]

- 21.Izquierdo-Verdiguier E., Laparra V., Jenssen R., Gomez-Chova L., Camps-Valls G. Optimized kernel entropy components. IEEE Transactions on Neural Networks and Learning Systems. 2017;28(6):1466–1472. doi: 10.1109/tnnls.2016.2530403. [DOI] [PubMed] [Google Scholar]

- 22.Li X., Pang Y., Yuan Y. L1-norm-based 2DPCA. IEEE Transactions on Systems Man and Cybernetics Part B. 2010;40(4):1170–1175. doi: 10.1109/tsmcb.2009.2035629. [DOI] [PubMed] [Google Scholar]

- 23.Nie F., Huang H., Ding C., Luo D., Wang H. Robust principal component analysis with non-greedy L1-norm maximization. Proceedings of International Joint Conference on Artificial Intelligence; July 2011; Barcelona, Catalonia, Spain. pp. 1433–1438. [Google Scholar]

- 24.Wang R., Nie F., Yang X., Gao F., Yao M. Robust 2DPCA with non-greedy L1-norm maximization for image analysis. IEEE Transactions on Cybernetics. 2015;45(5):1108–1112. doi: 10.1109/tcyb.2014.2341575. [DOI] [PubMed] [Google Scholar]

- 25.Shekar B. H., Sharmila Kumari M., Mestetskiy L. M., Dyshkant N. F. Face recognition using kernel entropy component analysis. Neurocomputing. 2011;74(6):1053–1057. doi: 10.1016/j.neucom.2010.10.012. [DOI] [Google Scholar]

- 26.Jenssen R. Kernel entropy component analysis: new theory and semi-supervised learning. Proceedings of IEEE International Workshop on Machine Learning for Signal Processing; September 2011; Beijing, China. pp. 1–6. [Google Scholar]

- 27.Kwak N. Principal component analysis based on L1-norm maximization. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2008;30(9):1672–1680. doi: 10.1109/tpami.2008.114. [DOI] [PubMed] [Google Scholar]

- 28.Xiao Y., Wang H., Xu W., Zhou J. L1 norm based KPCA for novelty detection. Pattern Recognition. 2013;46(1):389–396. doi: 10.1016/j.patcog.2012.06.017. [DOI] [Google Scholar]

- 29.Xiao Y., Wang H., Xu W. Parameter selection of gaussian kernel for one-class SVM. IEEE Transactions on Cybernetics. 2015;45(5):941–953. doi: 10.1109/tcyb.2014.2340433. [DOI] [PubMed] [Google Scholar]

- 30.Krzanowski W. Principles of Multivariate Analysis. Vol. 23. Oxford, UK: Oxford University Press (OUP); 2000. [Google Scholar]

- 31.Duin. On the choice of smoothing parameters for Parzen estimators of probability density functions. IEEE Transactions on Computers. 1976;25(11):1175–1179. doi: 10.1109/tc.1976.1674577. [DOI] [Google Scholar]

- 32.Liu W., Liu S., Gu Q., Chen J., Chen X., Chen D. Empirical studies of a two-stage data preprocessing approach for software fault prediction. IEEE Transactions on Reliability. 2016;65(1):38–53. doi: 10.1109/tr.2015.2461676. [DOI] [Google Scholar]

- 33.Lessmann S., Baesens B., Mues C., Pietsch S. Benchmarking classification models for software defect prediction: a proposed framework and novel findings. IEEE Transactions on Software Engineering. 2008;34(4):485–496. doi: 10.1109/tse.2008.35. [DOI] [Google Scholar]

- 34.He Z., Shu F., Yang Y., Li M., Wang Q. An investigation on the feasibility of cross-project defect prediction. Automated Software Engineering. 2012;19(2):167–199. doi: 10.1007/s10515-011-0090-3. [DOI] [Google Scholar]

- 35.He P., Li B., Liu X., Chen J., Ma Y. An empirical study on software defect prediction with a simplified metric set. Information and Software Technology. 2015;59:170–190. doi: 10.1016/j.infsof.2014.11.006. [DOI] [Google Scholar]

- 36.Wu Y., Huang S., Ji H., Zheng C., Bai C. A novel Bayes defect predictor based on information diffusion function. Knowledge-Based Systems. 2018;144:1–8. doi: 10.1016/j.knosys.2017.12.015. [DOI] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

The MATLAB toolbox of KECA-L1 is available.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.