Abstract

It is widely accepted that holistic processing is critical for early face recognition, but recent work has suggested a larger role for feature-based processing. The earliest step in familiar face recognition is thought to be matching a perceptual representation of a familiar face to a stored representation of that face, which is thought to be indexed by the N250r event-related potential (ERP). In the current face-priming studies, we investigated whether this perceptual representation can be effectively activated by feature-based processing. In the first experiment, prime images were familiar whole faces, isolated eyes or isolated mouths. Whole faces and isolated eyes, but not isolated mouths, effectively modulated the N250r. In the second experiment, prime images were familiar whole faces presented either upright or inverted. Inverted face primes were no less effective than upright face primes in modulating the N250r. Together, the results of these studies indicate that activation of the earliest face recognition processes is not dependent on holistic processing of a typically configured face. Rather, feature-based processing can effectively activate the perceptual memory of a familiar face. However, not all features are effective primes as we found eyes, but not mouths, were effective in activating early face recognition.

Keywords: face perception, holistic processing, face recognition, N250r, ERP, face identity

Introduction

Face recognition is a key facilitator of successful social interactions, as it allows for retrieval of biographical and semantic information necessary to guide appropriate social behavior (Bruce and Young, 1986; Haxby et al., 2000). The earliest step in familiar face recognition is thought to be the matching of a perceptual representation of a familiar face to a stored representation of that face (Bruce and Young, 1986). This process is likely indexed by the N250r event-related potential (ERP), which is evoked by the sequentially paired presentation of a prime face (S1) followed by a target face (S2) of the same identity (Begleiter et al., 1995; Schweinberger et al., 1995; Schweinberger and Burton, 2003; Bindemann et al., 2008). The N250r appears as a negative deflection over the right occipitotemporal scalp at ∼200–300 ms after the onset of S2.

Although the N250r is evoked by the repetition of any pair of images of the same face, there are important differences between the response to familiar and unfamiliar individuals. The amplitude of the N250r is larger (i.e. more negative, indicating a larger repetition effect) to the repeated presentation of a familiar face than to the repeated presentation of an unfamiliar face (Begleiter et al., 1995; Schweinberger et al., 1995; Schweinberger et al., 2002a; Schweinberger et al., 2002b; Herzmann et al., 2004; Schweinberger et al., 2004; Dörr et al., 2011; Gosling and Eimer, 2011). Also, unlike the N250r evoked by unfamiliar faces, the N250r evoked by familiar faces is preserved even when a small number of face stimuli intervene between the prime and the target (Pfütze et al., 2002; Schweinberger et al., 2002a; Schweinberger et al., 2004; Dörr et al., 2011). This modulation by familiarity distinguishes the N250r from other early face-sensitive components such as the N170 (cf. Schweinberger and Neumann, 2016).

Perhaps most compelling, the N250r occurs for familiar faces even when S1 and S2 images differ considerably in camera angle, lighting, affective state, age of the individual, haircut, etc. (Bindemann et al., 2008; Kaufmann et al., 2009; Zimmermann and Eimer, 2013; Fisher et al., 2016). This persistence of the N250r (albeit with a diminished amplitude), despite changes in perceptual, pictorial and structural codes, suggests that the N250r is sensitive to identity, per se, and not simple image properties (Schweinberger et al., 2004; Bindemann et al., 2008; Gosling and Eimer, 2011; Faerber et al., 2015; Wirth et al., 2015).

A cardinal feature of face perception is that it is thought to be primarily holistic, relying on the bound representation of all face parts rather than a collection of individual parts (for reviews, see Rossion, 2013; Richler and Gauthier, 2014). Note that holistic processing and second-order configural processing (perception dependent on the relationship between features within a face) are not strictly synonymous (McKone and Yovel, 2009; Piepers and Robbins, 2012), but, unless otherwise noted, we do not distinguish between the two in the current report. One demonstration of holistic face processing comes from the ‘part/whole’ paradigm in which participants are asked to identify face parts of previously studied faces (Tanaka and Farah, 1993). Critically, the parts are shown either in isolation or in the context of the whole face. Participants are better able to determine the identity of the individual parts when presented in the whole face. Another classic example of the dominance of holistic processing is the face-inversion effect, which refers to a more profound performance decrement for naming faces than for naming objects when images are rotated 180° (e.g. Yin, 1969; Tanaka and Farah, 1993; Farah et al., 1995; McKone, 2004; Van Belle et al., 2010, but see Rezlescu et al., 2016). The inversion is thought to disrupt holistic perception (Yin, 1969; Farah et al., 1995; Rossion, 2008, 2009; Van Belle et al., 2010), though perhaps not abolish it entirely (Richler et al., 2011). These behavioral effects are reflected in early ERP components associated with face perception. Face inversion causes an increased amplitude and/or increased peak latency in the P100 (Itier and Taylor, 2002, 2004a, 2004b; Feng et al., 2012; Colombatto and McCarthy, 2016) and N170 (Bentin et al., 1996; Rossion et al., 1999, 2000; Eimer, 2000; Itier and Taylor, 2004c; Wiese, 2013).

If perceptual matching of facial identity drives the repetition effect, what features of the face are critical for successful matching? Similar to the P100 and N170, the N250r is affected by inversion. When S1 and S2 are both inverted, the ERP magnitude is attenuated and the onset is delayed for unfamiliar faces (Itier and Taylor, 2004a; Schweinberger et al., 2004; Jacques et al., 2007; Towler and Eimer, 2016). Towler and Eimer (2016) recently suggested that there is a qualitative difference in how the identity of upright and inverted unfamiliar faces is processed, such that upright faces are supported by both holistic and feature-based processes, whereas inverted faces are supported solely by the latter. This is consistent with the traditional interpretation of the inversion effect as reflecting the disruption of holistic processing. However, as noted earlier, the N250r is significantly modulated by the familiarity of a face. Indeed, there are several experimental manipulations (e.g. introducing backward masks or intervening face stimuli) that reduce or abolish the N250r evoked by unfamiliar faces but have little effect on the N250r evoked by familiar faces (Pfütze et al., 2002; Schweinberger et al., 2002a; Schweinberger et al., 2004; Dörr et al., 2011). Moreover, there is evidence that familiarity with a face facilitates feature-based processing (di Oleggio Castello et al., 2017). Therefore, the dependence on holistic processing might not generalize to familiar faces.

In the current studies, we investigated whether holistic processing is necessary for early visual recognition of familiar faces, as indexed by the N250r. In the first study, S1 depicted either full faces, isolated eyes or isolated mouths. A significant N250r to a target face preceded by a face-part prime would suggest successful activation of the visual representation of a face without benefit of a full-face context (i.e. holistic). In the second study, S1 was presented either upright or inverted. In all cases, S2 was a full upright face. This is a second critical difference (the first being the use of familiar faces) between the current work and prior studies that inverted both S1 and S2 (Itier and Taylor, 2004a; Schweinberger et al., 2004; Jacques et al., 2007; Towler and Eimer, 2016). In the current work, a repetition effect requires that an upright target face be matched to the perceptual memory evoked by the inverted prime face. Such a repetition effect would suggest that the perceptual memory of the identity was successfully extracted from the inverted prime and therefore that the earliest stages of face recognition are not dependent on holistic processing.

Methods

Participants

Participants were recruited from the Kenyon College campus and surrounding community and compensated for their participation. All participants had normal or corrected-to-normal vision. After completion of the electroencephalography (EEG) study, participants were asked to indicate their familiarity with each of the famous faces used in the experiment by pressing one of three buttons: ‘Not familiar’, ‘Moderately familiar’ or ‘Very familiar’. Participants who were insufficiently familiar with the faces (indicated that 25% or more were ‘Not familiar’) were excluded from analysis. All participants gave written and informed consent. The Kenyon College Institution Research Board approved this protocol.
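The familiarity-based exclusion rule can be illustrated with a minimal sketch. It assumes each participant's post-test ratings are stored as a list of the three response labels; the function name and the example ratings are hypothetical and are not taken from the study's materials.

```python
from collections import Counter

EXCLUSION_THRESHOLD = 0.25  # from the text: 25% or more rated 'Not familiar'


def is_insufficiently_familiar(ratings):
    """Return True if the share of 'Not familiar' ratings is at least 25%."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    counts = Counter(ratings)
    return counts["Not familiar"] / len(ratings) >= EXCLUSION_THRESHOLD


# Hypothetical participant rating 72 faces (ratings invented for illustration).
ratings = (["Very familiar"] * 50
           + ["Moderately familiar"] * 4
           + ["Not familiar"] * 18)
print(is_insufficiently_familiar(ratings))  # 18 of 72 is exactly 25%
```

Under this reading of the rule, a participant who rates exactly one quarter of the faces as 'Not familiar' is excluded, because the criterion is stated as "25% or more".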

Study 1: face parts

A total of 35 individuals (20 female, 15 male) aged between 18 and 59 (M = 21.0) participated in Study 1. Two participants were excluded due to excessive noise in the EEG data. Nine participants were excluded from analysis due to insufficient familiarity with the famous faces. Thus, there were 24 participants (15 female, 9 male) aged between 18 and 22 (M = 20.2) included in the analyses.

Study 2: inversion

A total of 32 individuals (24 female, 8 male) aged between 18 and 23 (M = 20.1) participated in Study 2. Two participants were excluded due to excessive noise in the EEG data. Three participants were excluded from analysis due to insufficient familiarity with the faces. Two participants were unable to complete the post-test familiarity evaluations due to technical issues. These participants were not excluded from the analysis, which potentially increases the probability of Type II, not Type I error. Inclusion of two participants with insufficient familiarity with the faces (if that were the case) would diminish the likelihood of finding a result predicated on familiarity. Thus, there were 27 participants (22 female, 5 male) aged between 18 and 23 (M = 20.2) included in the analyses.

Stimuli

Of the 1580 identities within the MSRA-CFW: Data Set of Celebrities Faces on the Web (Zhang et al., 2012), 220 were selected as being most likely to be known to college students. In an independent experiment, Kenyon College students ranked the familiarity of these 220 individuals, and the 72 most familiar celebrities were selected for use in the current experiments. Two pictures of each celebrity were downloaded from Google Images. The image pairs were selected so that they differed in a meaningful way such as facial expression, hairstyle, age or shooting angle. Pictures were cropped to isolate the face and resized to be 500 pixels tall with a resolution of 72 pixels per inch. Image width varied as a function of each face’s natural shape. For each image, a version was created in which the eyes or the mouth were isolated. To do so, the scene and aperture filters in Photoshop CS6 were used to blur the surrounding face (Figures 1 and 2; FIRST ROW).

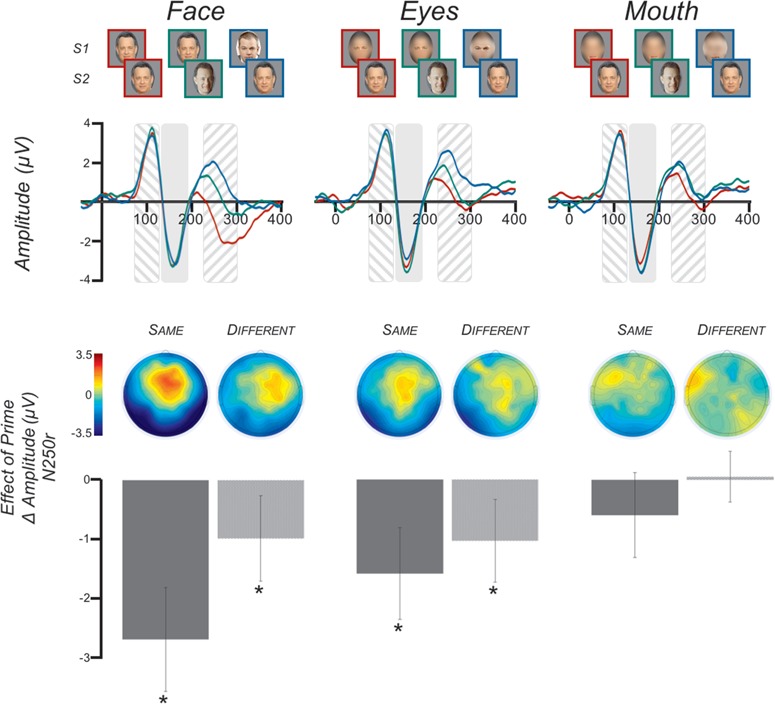

Fig. 1.

Study 1 Stimuli and ERP results. (FIRST ROW) The nine trial types used in Study 1 were three levels of prime (same, different, none) × three levels of feature (faces, eyes, mouth). (SECOND ROW) Grand-average ERPs (N = 24) across electrodes P8, P10, TP8, P7, P9, TP7. The time period of the P100 is indicated by the area with downward slashes. NOTE: this figure displays the waveform across the electrodes listed above, whereas the P100 statistics were done on the average waveform of electrodes C1, Cz, C2. The time period of the N170 is indicated by the solid shaded area. The time period of the N250r is indicated by the area with upward slashes. The waveforms are color coded such that RED is the primesame condition, GREEN is the primedifferent condition and BLUE is the primenone condition. (THIRD ROW) Topographical maps of the N250r priming effect at each cell of the feature factor. The ‘Same’ maps display the primesame vs primenone simple contrast, whereas the ‘Different’ maps display the primedifferent vs primenone contrast. (FOURTH ROW) N250r mean amplitude differences across electrodes P8, P10, TP8, P7, P9, TP7. The contrasts displayed are the same as for the topographical maps. Error bars indicate the 95% confidence interval. Asterisks indicate significant priming effects, P < 0.05.

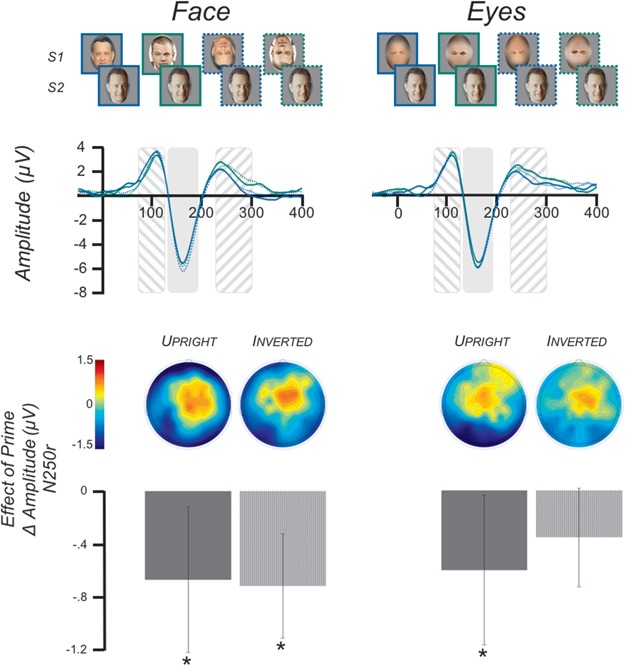

Fig. 2.

Study 2 Stimuli and ERP results. (FIRST ROW) The eight trial types used in Study 2 were two levels of prime (none, different) × two levels of feature (face, eyes) × two levels of orientation (upright, inverted). (SECOND ROW) Grand-average ERPs (N = 27) across electrodes P8, P10, TP8, P7, P9, TP7. The time period of the P100 is indicated by the area with downward slashes. NOTE: this figure displays the waveform across the electrodes listed above, whereas the P100 statistics were done on the average waveform of electrodes C1, Cz, C2. The time period of the N170 is indicated by the solid shaded area. The time period of the N250r is indicated by the area with upward slashes. The waveforms are color coded such that SOLID BLUE is the primediff/orientationup condition, SOLID GREEN is the primenone/orientationup condition, DASHED BLUE is the primediff/orientationinv condition and DASHED GREEN is the primenone/orientationinv condition. (THIRD ROW) Topographical maps of the N250r priming effect. The maps display the primediff vs primenone simple contrast for each cell of the feature and orientation factors. (FOURTH ROW) N250r mean amplitude differences across electrodes P8, P10, TP8, P7, P9, TP7. The contrasts displayed are the same as for the topographical maps. Error bars indicate the 95% confidence interval. Asterisks indicate significant priming effects, P < 0.05.

Experimental procedure

Stimulus presentation was controlled by PsychoPy (Peirce, 2007) and displayed on a 27” LCD display with a resolution of 1920 × 1080 and a refresh rate of 60 Hz. Participants were seated ∼70 cm from the display; the exact distance varied slightly to accommodate participant comfort.

The stimulus presentation was modeled after the paradigm described in Schweinberger et al. (2002a). All trials began with the presentation of a black fixation cross on a grey background displayed for 500 ms, followed by presentation of the prime face (S1) for 500 ms. S1 was replaced by a small green circle displayed in the center of the screen for 1300 ms, followed by presentation of the target face (S2) for 1250 ms. The location of S2 was offset from the location of S1 to decrease any effects of retinal and/or low-level visual adaptation. The randomly chosen offset was either horizontal (48 pixels), vertical (27 pixels) or diagonal (55 pixels). Participants were asked to indicate the sex of the person depicted in S2 by pressing one of two buttons as quickly as possible after face onset. The following trial began after a 2450-ms interstimulus interval.
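The trial structure described above can be laid out as a simple timeline. The sketch below is illustrative only and is not the authors' PsychoPy script. It assumes the events follow one another without gaps and that the 2450-ms interval starts at S2 offset; the event names are hypothetical.

```python
import math

REFRESH_RATE_HZ = 60  # display refresh rate, from the text

# Durations in ms, taken from the procedure described above.
TRIAL_EVENTS = [
    ("fixation", 500),
    ("S1_prime", 500),
    ("green_circle", 1300),
    ("S2_target", 1250),
    ("inter_trial_interval", 2450),
]


def event_onsets(events):
    """Return each event's onset (ms from trial start) and the trial length."""
    onsets, t = {}, 0
    for name, duration in events:
        onsets[name] = t
        t += duration
    return onsets, t


onsets, trial_length = event_onsets(TRIAL_EVENTS)
soa = onsets["S2_target"] - onsets["S1_prime"]  # prime-to-target onset asynchrony

# Each duration should map onto a whole number of 60-Hz frames.
frames = {name: duration * REFRESH_RATE_HZ // 1000 for name, duration in TRIAL_EVENTS}
whole_frames = all((d * REFRESH_RATE_HZ) % 1000 == 0 for _, d in TRIAL_EVENTS)

# The diagonal offset follows from combining the horizontal and vertical shifts.
diagonal = math.hypot(48, 27)

print(onsets, trial_length, soa, frames, whole_frames, round(diagonal, 2))
```

Under these assumptions each trial lasts 6000 ms, S2 onset follows S1 onset by 1800 ms, and the 55-pixel diagonal offset corresponds to the 48-pixel horizontal and 27-pixel vertical shifts applied together.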

Study 1: face parts

There were nine variations of the S1 and S2 pairings in this 3 × 3 design (Figure 1; FIRST ROW). The ‘prime’ factor included levels of prime-same (primesame), prime-different (primedifferent) and unprimed control (primenone). In the primesame condition, S1 and S2 were the same picture of the same familiar identity (e.g. Paul Newman followed by the same picture of Paul Newman). In the primedifferent condition, S1 and S2 were different pictures of the same familiar identity (e.g. Paul Newman followed by a different picture of Paul Newman). Lastly, in the primenone condition, S1 and S2 were different familiar identities (e.g. Paul Newman followed by Robert Redford). These conditions were fully crossed with the three levels of the ‘feature’ factor: full face (featureface), eyes (featureeyes) and mouth (featuremouth). There were 18 trials of each of the nine conditions. These 162 trials were presented randomly across 18 counterbalanced blocks.

Study 2: inversion

There were eight variations of the S1 and S2 pairings in this 2 × 2 × 2 design (Figure 2; FIRST ROW). The ‘prime’ factor included levels of prime-different (primedifferent) and unprimed control (primenone). Thus, unlike Study 1, all primed trials in Study 2 used a different image of the same identity. The ‘feature’ factor included levels of full face (featureface) and eyes (featureeyes). An ‘orientation’ factor added in this study included levels of upright (orientationup) and inverted (orientationinv), the latter being a face rotated 180°. There were 24 trials for all of the face conditions. Due to a coding error, there was a slight imbalance in the number of eye trials, such that upright eyes (primed or unprimed) were presented 22 times and inverted eyes (primed or unprimed) were presented 26 times. The 192 trials were presented randomly across 24 counterbalanced blocks.
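The trial counts for Study 2 can be checked by enumerating the design cells. This is a sketch that assumes the 22 and 26 eye-trial counts apply to each of the primed and unprimed cells separately, a reading that reproduces the reported total of 192 trials. The level labels are shorthand introduced here.

```python
from itertools import product

PRIMES = ["different", "none"]
FEATURES = ["face", "eyes"]
ORIENTATIONS = ["up", "inv"]


def trials_per_condition(feature, orientation):
    """Trials per cell: 24 for faces; 22 (upright) or 26 (inverted) for eyes."""
    if feature == "face":
        return 24
    return 22 if orientation == "up" else 26


# One entry per cell of the 2 x 2 x 2 design.
counts = {
    (prime, feature, orientation): trials_per_condition(feature, orientation)
    for prime, feature, orientation in product(PRIMES, FEATURES, ORIENTATIONS)
}
total_trials = sum(counts.values())
print(len(counts), total_trials)
```

The imbalance shifts four trials from the upright-eye cells to the inverted-eye cells while leaving the overall number of eye trials equal to the number of face trials.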

EEG acquisition and analysis

Continuous biopotential signals were recorded using the ActiveTwo BioSemi amplifier system (BioSemi, Amsterdam, The Netherlands). EEG was acquired from 64 scalp electrodes arranged in the 10/20 system. Two external electrodes were placed on the mastoids to be used as an offline reference. Two external electrodes were placed approximately 1-cm lateral and 1-cm inferior to the outer canthus of the left eye to record the horizontal and vertical electrooculogram, respectively.

All signals were digitized and recorded on an Apple Mac Mini running ActiView software (BioSemi) at a sampling rate of 2048 Hz. Off-line analysis was conducted with the EEGLAB (Swartz Center for Computational Neuroscience, La Jolla, CA, USA) MATLAB toolbox and the ERPLAB plugin (Steve Luck, UC-Davis Center for Mind and Brain, Davis, CA, USA).

EEG data were imported with an initial reference of the averaged mastoids, downsampled to 256 Hz, and bandpass filtered with half-amplitude cutoffs of 0.5–100 Hz. Epochs event-locked to the onset of S2 were extracted from the continuous EEG (−1000 to 2000 ms). ICA decomposition was run to identify eye movement, blink and other artefactual components, which were removed from the data. The data were then re-referenced to the mean of all scalp electrodes.

ERPs were generated by averaging the EEG epochs from each electrode for each experimental condition. ERPs were baseline normalized by subtracting the average of a 150-ms pre-stimulus epoch from each time point and were lowpass filtered with a second order Butterworth filter with a half-amplitude cutoff of 40 Hz. The grand average ERP waveform was produced by averaging across all participants’ ERPs.
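The baseline normalization step can be sketched as follows. The authors performed this step in EEGLAB/ERPLAB, so this is only an illustration in plain Python. It assumes a single-channel ERP stored as a list, sampled at 256 Hz, with the epoch starting 1000 ms before S2 onset; the helper names and the toy waveform are hypothetical.

```python
SAMPLING_RATE_HZ = 256   # rate after downsampling, from the text
EPOCH_START_MS = -1000   # epoch start relative to S2 onset, from the text
BASELINE_MS = 150        # pre-stimulus baseline length, from the text


def ms_to_index(t_ms):
    """Convert a latency relative to S2 onset into a sample index."""
    return round((t_ms - EPOCH_START_MS) * SAMPLING_RATE_HZ / 1000)


def baseline_correct(erp):
    """Subtract the mean of the 150-ms pre-stimulus window from every sample."""
    start, stop = ms_to_index(-BASELINE_MS), ms_to_index(0)
    baseline = sum(erp[start:stop]) / (stop - start)
    return [value - baseline for value in erp]


# Hypothetical ERP: a constant 2.0 microvolt offset plus a -3.0 microvolt
# deflection from 225 to 300 ms (values invented for illustration).
n_samples = ms_to_index(2000)
erp = [2.0] * n_samples
for i in range(ms_to_index(225), ms_to_index(300)):
    erp[i] -= 3.0

corrected = baseline_correct(erp)
print(corrected[ms_to_index(-100)], corrected[ms_to_index(250)])
```

After correction the pre-stimulus interval averages zero, so amplitudes in later windows are expressed relative to the baseline rather than to any constant offset in the recording.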

Electrodes and the time windows for analysis were selected to investigate three components of interest: P100, N170 and N250r. These electrodes and time windows were selected based on inspection of the experiment-wide grand-average ERP and prior literature that used the same, or very similar, parameters (Schweinberger et al., 2004; see Schweinberger and Neumann, 2016 for a review; Herzmann, 2016). Mean amplitudes were calculated for the P100 across electrodes C1, Cz and C2 from 75–125 ms. Our analysis of the N170 (130–190 ms) and N250r (225–300 ms) focused on electrodes P8, P10 and TP8 in the right hemisphere and P7, P9 and TP7 in the left hemisphere.
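A mean-amplitude measure of this kind averages the waveform over a time window and an electrode cluster. The sketch below is one way to express that, not the ERPLAB measurement routine itself. It assumes ERPs are stored as a dictionary of equal-length lists keyed by electrode label, sampled at 256 Hz from −1000 ms, and that window boundaries are inclusive; the toy data are invented.

```python
SAMPLING_RATE_HZ = 256
EPOCH_START_MS = -1000

# Time windows (ms) and electrode clusters, taken from the text.
WINDOWS_MS = {"P100": (75, 125), "N170": (130, 190), "N250r": (225, 300)}
CLUSTERS = {
    "central": ["C1", "Cz", "C2"],
    "right": ["P8", "P10", "TP8"],
    "left": ["P7", "P9", "TP7"],
}


def mean_amplitude(erp_by_electrode, electrodes, window_ms):
    """Average amplitude across the given electrodes and latency window."""
    low, high = window_ms
    n_samples = len(erp_by_electrode[electrodes[0]])
    times = [EPOCH_START_MS + i * 1000 / SAMPLING_RATE_HZ for i in range(n_samples)]
    in_window = [i for i, t in enumerate(times) if low <= t <= high]
    values = [erp_by_electrode[e][i] for e in electrodes for i in in_window]
    return sum(values) / len(values)


# Hypothetical flat waveforms (microvolts) for the right-hemisphere cluster.
toy_erps = {"P8": [-1.0] * 768, "P10": [-2.0] * 768, "TP8": [-3.0] * 768}
n250r_right = mean_amplitude(toy_erps, CLUSTERS["right"], WINDOWS_MS["N250r"])
print(n250r_right)
```

Averaging over a window rather than picking a peak makes the measure less sensitive to latency jitter and high-frequency noise, which is a common motivation for mean-amplitude measures in ERP work.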

Mean amplitudes for Study 1 were analyzed using a repeated-measures analysis of variance (ANOVA) with within-subjects factors of hemisphere (hemright, hemleft), prime (primesame, primedifferent, primenone) and feature (featureface, featureeyes, featuremouth). The Greenhouse–Geisser correction was used to correct for any violations of sphericity. Mean amplitudes for Study 2 were analyzed using a repeated-measures ANOVA with the within-subject factors of hemisphere (hemright, hemleft), prime (primedifferent, primenone), feature (featureface, featureeyes) and orientation (orientationup, orientationinv). For both studies, the hemisphere factor was only included in the analyses of the N170 and N250r. Main and interaction effects were explicated with paired-samples t-tests.
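The follow-up comparisons amount to paired-samples t-tests on per-participant mean amplitudes. A minimal sketch of the test statistic using only the standard library is given below. The amplitudes are invented for illustration, and the P value (which requires the t distribution) is deliberately left out.

```python
import math
import statistics


def paired_t(primed, unprimed):
    """Return (mean difference, standard error, t, df) for paired samples."""
    diffs = [p - u for p, u in zip(primed, unprimed)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sem = statistics.stdev(diffs) / math.sqrt(n)
    return mean_diff, sem, mean_diff / sem, n - 1


# Hypothetical N250r mean amplitudes (microvolts) for four participants.
primed = [-1.0, -2.0, -0.5, -1.5]
unprimed = [0.0, -0.5, 0.5, -1.0]

mean_diff, sem, t_value, df = paired_t(primed, unprimed)
print(mean_diff, round(sem, 3), round(t_value, 3), df)
```

A negative mean difference here corresponds to a more negative response on primed than on unprimed trials, which is the direction of the N250r priming effect described in the Introduction.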

Results

Study 1: face parts

P100

There were no main or interaction effects of prime or feature (Ps > 0.05).

N170

There were no main or interaction effects of hemisphere, prime or feature (Ps > 0.05).

N250r

There was no effect of hemisphere (P > 0.05). There were significant main effects of prime [F(1.55, 35.58) = 22.80, P < 0.001, ηp² = 0.498] and feature [F(1.44, 33.13) = 17.42, P < 0.001, ηp² = 0.431]. These main effects were qualified by a significant prime × feature interaction [F(3.31, 76.21) = 7.25, P < 0.001, ηp² = 0.240] (Figure 1) and a significant hemisphere × prime interaction [F(1.69, 38.85) = 3.61, P = 0.044, ηp² = 0.136]. There was no three-way interaction of hemisphere × prime × feature (P > 0.05).
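The effect sizes reported alongside each F test are consistent with partial eta squared, which can be recovered from F and its degrees of freedom as F × df_effect / (F × df_effect + df_error). The sketch below applies this identity to three of the values reported above. It assumes the reported statistic is indeed partial eta squared, and the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    scaled_f = f_value * df_effect
    return scaled_f / (scaled_f + df_error)


# (F, df_effect, df_error) as reported for the Study 1 N250r analysis.
reported = {
    "prime": (22.80, 1.55, 35.58),
    "feature": (17.42, 1.44, 33.13),
    "hemisphere x prime": (3.61, 1.69, 38.85),
}

effect_sizes = {
    name: round(partial_eta_squared(f, df1, df2), 3)
    for name, (f, df1, df2) in reported.items()
}
print(effect_sizes)
```

Because the Greenhouse–Geisser correction scales both degrees of freedom by the same factor, the ratio is unaffected by the correction. Recomputed values can still differ from reported ones in the third decimal place, because the published F and df values are themselves rounded.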

To better understand the hemisphere × prime interaction, we used paired-samples t-tests to evaluate the priming effect (i.e. primesame vs primenone and primedifferent vs primenone) within each hemisphere. Within the left hemisphere, a significant priming effect was evoked by both primesame (mean difference: −1.75 ± 0.33 μV, P < 0.001) and primedifferent (mean difference: −1.03 ± 0.26 μV, P = 0.001), whereas only primesame (mean difference: −1.51 ± 0.32 μV, P < 0.001) evoked an effect in the right hemisphere.

To better understand the prime × feature interaction, we used paired-samples t-tests to evaluate the priming effect (i.e. primesame vs primenone and primedifferent vs primenone) at each of the three feature levels (featureface, featureeyes, featuremouth) (Figure 1, FOURTH ROW). At the featureface level, a significant N250r was evoked by both primesame (mean difference: −2.69 ± 0.43 μV, P < 0.001) and primedifferent (mean difference: −0.98 ± 0.35 μV, P = 0.010). At the featureeyes level, a significant N250r was evoked by both primesame (mean difference: −1.59 ± 0.37 μV, P < 0.001) and primedifferent (mean difference: −1.03 ± 0.34 μV, P = 0.006). At the featuremouth level, there were no significant priming effects (Ps > 0.05).

Finally, we directly contrasted the priming effect of faces with the priming effect of eyes using paired-samples t-tests on the primesame − primenone and primedifferent − primenone differences for each feature. There was a significantly greater effect for same faces than same eyes (mean difference: −1.10 ± 1.93 μV, P = 0.011) but no difference in effect for different faces and different eyes (mean difference: 0.05 ± 1.64 μV, P > 0.05).

Study 2: inversion

P100

There were no main or interaction effects of prime, feature or orientation (Ps > 0.05).

N170

Inverted faces evoked a larger N170 than upright faces [F(1, 26) = 6.57, P = 0.017, ηp² = 0.202]. There were no other main or interaction effects (Ps > 0.05).

N250r

There was a significant main effect of priming [F(1, 26) = 22.60, P < 0.001, ηp² = 0.465], such that primed trials evoked a smaller response than unprimed trials. There was also a significant main effect of hemisphere [F(1, 26) = 9.27, P = 0.005, ηp² = 0.263] with the right hemisphere showing a more positive response than the left. Critically, there were no other main or interaction effects (Ps > 0.05), indicating that the strength of the priming effect did not vary as a function of feature or orientation (Figure 2, FOURTH ROW).

Discussion

Here, we report two studies that support the notion that early face recognition is not solely dependent on holistic processing, but rather can be successfully activated by feature-based processing. However, we found that only eyes effectively engaged early recognition systems, suggesting that not all features are equipotent. These findings serve to deemphasize the role of holistic processing in familiar face perception and, in the context of the extant literature, suggest a prominent role of experience in the processes recruited to support face perception.

Is holistic processing necessary for facial recognition?

The results of both studies suggest that activation of a stored identity representation can occur without the benefit of holistic processing.

In Study 1, we disrupted holistic processing by presenting a single isolated facial feature (eyes or mouth) that was largely devoid of the full configural information used in holistic processing (see below for a discussion of the potential effect of residual configural information). We found that isolated eyes effectively activated the perceptual memory of the prime face, as indexed by the N250r component evoked by the target face. This suggests that feature-based processing is sufficient for engaging the perceptual memory thought to be the first step in familiar face recognition. However, trials with mouth primes did not modulate the N250r regardless of whether the mouth was isolated from the same or a different picture as the target. The implication of the privileged nature of eyes is discussed in greater detail below.

To further test the feature-based processing effect found in Study 1, we ran a second study that used a different technique to disrupt holistic processing. In Study 2, inverted faces, which are known to disrupt holistic processing (Yin, 1969; Farah et al., 1995; Rossion, 2008, 2009; Van Belle et al., 2010), were used as prime stimuli. If holistic processing is necessary for recognition, one would expect an interaction between prime (primed or unprimed) and orientation (upright, inverted), such that the N250r for primed vs unprimed faces would only be observed in the upright condition. To the contrary, we found no interaction between these factors (Figure 2, FOURTH ROW). Moreover, pairwise comparisons found that the N250r was significant for prime trials of upright faces, upright eyes and inverted faces, though inverted eyes did not reach significance. Put plainly, the orientation of a face had no effect on the activation of the perceptual memory of the face’s identity as indexed by the N250r.

Together, these studies suggest that holistic processing is not necessary to activate perceptual memories of the facial identity of familiar faces, which is consistent with behavioral work suggesting that holistic processing is not strongly correlated with face recognition abilities (Konar et al., 2009; Wang et al., 2012; DeGutis et al., 2013; Richler et al., 2015, but see Rezlescu et al., 2017; Sunday et al., 2017).

It is important to note that the current results do not preclude a role for holistic processing in face recognition. Though we propose that it is unnecessary for producing the N250r, it is likely functioning in parallel. Towler and Eimer (2016) reported that while internal or external face features evoked the N250r, there was a super-additive effect for full faces. That is, the magnitude of the N250r to full face primes was larger than the sum of the effect to internal and external feature primes, which implies a unique contribution from holistic processing. Consistent with this, we found that while eyes evoked a significant repetition effect, the effect was greater for full faces. But this was only true when the prime and target were of the same image, as was the case in Towler and Eimer (2016). When the prime and target were of different images, there was no difference in the strength of the face and eye priming effects. This raises the possibility that the contribution of holistic processing observed in that prior report was owed to pictorial similarity and low-level features.

It is important to point out that the manipulation in Study 1 did not entirely obscure second-order configural information, because the blurred portions of the face retained some configural information (Figure 1, FIRST ROW). There is evidence that identification relies on the ordinal luminance relationship between parts, particularly the eyes and surrounding region (Gilad et al., 2009). This relationship would have been largely preserved by our blurring technique and could account for the significant priming effect of eyes. However, single-cell recordings in the inferior temporal cortex of the macaque brain found that the eyes and surrounding area were not the only region sensitive to contrast (Ohayon et al., 2012). Of all recorded cells that were sensitive to at least one feature, 70% were sensitive to the eye region (also see Engell and McCarthy, 2014). But importantly, a substantial proportion of the cells, ∼45%, were sensitive to the nose–mouth region. In this context, we would argue that it is unlikely that preserved configural information (luminance contrast or otherwise) meaningfully contributed to the N250r effects, as any preserved configural information would be present for both eyes and mouths, but the N250r was evoked solely by the former.

The role of familiarity in shaping face processing

We found that the N250r evoked by inverted primes did not differ from upright primes. This result is in contrast to prior work that found upright faces served as more potent primes than inverted faces (e.g. Itier and Taylor, 2004a; Jacques et al., 2007; Towler and Eimer, 2016). However, there are two important features that differentiate this study from prior work.

First, and most important, we used familiar faces as opposed to unfamiliar faces. This is a critical distinction because the effects evoked by unfamiliar faces might not generalize to familiar faces. Repetition of familiar faces results in a larger (Begleiter et al., 1995; Schweinberger et al., 1995; Schweinberger et al., 2002a; Schweinberger et al., 2002b; Herzmann et al., 2004; Schweinberger et al., 2004; Dörr et al., 2011; Gosling and Eimer, 2011) and more robust (Pfütze et al., 2002; Schweinberger et al., 2002a; Schweinberger et al., 2004; Dörr et al., 2011) N250r than repetition of unfamiliar faces. Moreover, Burton et al. (2015) have recently made a strong case, based primarily on behavioral studies, against a primary role of configural processing in familiar face recognition. Though the terms configural and holistic processing are often used interchangeably, they are not synonymous (Maurer et al., 2002; Piepers and Robbins, 2012; Richler and Gauthier, 2014). Nonetheless, both require integration of more than one face-part and can thus be similarly contrasted to feature-based processing.

Second, the current study is the first to investigate the N250r response to an upright face that has been primed by an inverted face. Prior studies presented both the prime and the target face as upright or inverted. Therefore, any modulation of the ERP evoked by the target faces in the current study can be attributed solely to the influence, if any, of the previously presented prime. That is, our design removes potential confounds introduced by presenting an inverted target face.

Considering our finding in the context of the prior literature, we support the notion that familiarity with faces is associated with a diminished importance of holistic processing during recognition (see also di Oleggio Castello et al., 2017).

Significance of eyes in face recognition

Eyes seem to have a privileged role in feature-based processing, as we found that eyes, but not mouths, were effective primes. This privileged role is not entirely unexpected given that eyes are perhaps the most important feature in face perception. They convey critical information regarding affective state (Schyns et al., 2007), attentional focus (Langton et al., 2000) and identity (Schyns et al., 2002). It is therefore not surprising that conditions marked by atypical social behavior, such as autism, are often associated with impairments in eye processing (see Itier and Batty, 2009 for a review). ERP studies have found that the amplitude and latency of the face-selective N170 is modulated by eyes presented in isolation rather than in the context of a full face (Bentin et al., 1996; Itier et al., 2006; Nemrodov and Itier, 2011; Zhang et al., 2017). Moreover, results from an intracranial EEG study of face and eye perception show that eye-sensitive regions are more abundant than face-sensitive regions throughout the ventral occipitotemporal face processing system (Engell and McCarthy, 2014).

Laterality

In Study 1, the N250r was observed in both hemispheres when the prime and target images were identical, whereas the effect was only observed in the left hemisphere when the prime and target were different images of the same individual. Several EEG studies of general visual processing have noted hemispheric asymmetries between local and global processing (Heinze and Münte, 1993; Heinze et al., 1998; Yamaguchi et al., 2000; Malinowski et al., 2002). Consistent with this hemispheric bias, the hemodynamic response of the fusiform face area is larger in the right hemisphere during natural (presumably holistic) viewing of faces, but larger in the left hemisphere when participants are instructed to attend to specific face features (Rossion et al., 2000a). This suggests a right hemisphere advantage for holistic processing and a left hemisphere advantage for feature-based processing. In this context, the current results could be interpreted as demonstrating that, without the benefit of seeing the identical image, there is a greater dependence on facial features during recognition of familiar faces and thus a relatively larger priming effect in the left hemisphere when viewing different images of the same individual. Though this is an interesting possibility, it is inconsistent with other findings in the current report. Critically, we observed neither a hemisphere × feature × priming interaction in Study 1 nor a main effect of hemisphere in Study 2, which disrupted holistic processing by inverting the prime faces.

Wholes, parts and … voices?

Given that the current results imply holistic visual perception is not necessary for familiar face detection, such detection might also be primed by other modalities (e.g. printed name, voice). The interactive activation model of person recognition (Burton et al., 1990) suggests that the ‘person identification nodes’ (PINs) in the classic cognitive model of Bruce and Young (1986) can be accessed by different modalities, including hearing an individual’s voice. It has been argued that a supra-threshold activation of these PINs, and not activation of the model’s ‘face recognition units’, is what determines familiarity. As such, determining familiarity should be facilitated by primes in the same modality as the target or in a different one (e.g. Paul Newman’s voice, followed by an image of the actor). This is supported by the observation that familiarity decisions are facilitated by the prior presentation of a semantic cue (e.g. the text ‘Paul Newman’) (Young et al., 1994). Similar results have been reported for cross-modal priming of voices and faces (Ellis et al., 1997). However, EEG results do not find modulation of the N250r by cross-modal priming and therefore suggest that any behavioral facilitation is likely due to a later process (Pickering and Schweinberger, 2003; but see also Holcomb et al., 2005). Future studies will need to more thoroughly address these possibilities.

Conclusions

In summary, the current studies provide evidence that familiar face recognition is not solely dependent on holistic processing. Feature-based processing can effectively activate the perceptual memory of a familiar face. Compared to prior work that focused on unfamiliar faces, these results suggest an increased emphasis on facial features as a function of familiarity. However, not all features are created equal. We found that eyes, but not mouths, were effective in activating early face recognition. This is likely due, in large part, to the essential social information conveyed by the eyes.

Highlights

Holistic processing is not necessary for early identity recognition of familiar faces.

Inverted faces and isolated features can effectively activate the perceptual memory of a familiar face, as indexed by the identity-sensitive N250r ERP.

This effectiveness was observed for eyes but not mouths.

Acknowledgments

We thank Henry Quillian, Claire Robertson, Josue Parr, Samantha Montoya and Anoohya Muppirala for help in data collection and Grit Herzmann for her comments on an earlier draft of this manuscript. This research was funded, in part, by the Kenyon Summer Science Program.

References

- Begleiter H., Porjesz B., Wang W. (1995). Event-related brain potentials differentiate priming and recognition to familiar and unfamiliar faces. Electroencephalography and Clinical Neurophysiology, 94(1), 41–9.

- Bentin S., Allison T., Puce A., Perez E., McCarthy G. (1996). Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience, 8(6), 551–65.

- Bindemann M., Burton A.M., Leuthold H., Schweinberger S.R. (2008). Brain potential correlates of face recognition: geometric distortions and the N250r brain response to stimulus repetitions. Psychophysiology, 45(4), 535–44. doi: 10.1111/j.1469-8986.2008.00663.x

- Bruce V., Young A. (1986). Understanding face recognition. British Journal of Psychology, 77(3), 305–27.

- Burton A.M., Bruce V., Johnston R.A. (1990). Understanding face recognition with an interactive activation model. British Journal of Psychology, 81 (Pt 3), 361–80.

- Burton A.M., Schweinberger S.R., Jenkins R., Kaufmann J.M. (2015). Arguments against a configural processing account of familiar face recognition. Perspectives on Psychological Science, 10(4), 482–96. doi: 10.1177/1745691615583129

- Colombatto C., McCarthy G. (2016). The effects of face inversion and face race on the P100 ERP. Journal of Cognitive Neuroscience, 1–13. doi: 10.1162/jocn_a_01079

- DeGutis J., Wilmer J., Mercado R.J., Cohan S. (2013). Using regression to measure holistic face processing reveals a strong link with face recognition ability. Cognition, 126(1), 87–100. doi: 10.1016/j.cognition.2012.09.004

- Dörr P., Herzmann G., Sommer W. (2011). Multiple contributions to priming effects for familiar faces: analyses with backward masking and event-related potentials. British Journal of Psychology, 102(4), 765–82. doi: 10.1111/j.2044-8295.2011.02028.x

- Eimer M. (2000). Effects of face inversion on the structural encoding and recognition of faces: evidence from event-related brain potentials. Cognitive Brain Research, 10(1–2), 145–58.

- Ellis H.D., Jones D.M., Mosdell N. (1997). Intra- and inter-modal repetition priming of familiar faces and voices. British Journal of Psychology, 88 (Pt 1), 143–56.

- Engell A.D., McCarthy G. (2014). Face, eye, and body selective responses in fusiform gyrus and adjacent cortex: an intracranial EEG study. Frontiers in Human Neuroscience, 8, 642.

- Faerber S.J., Kaufmann J.M., Schweinberger S.R. (2015). Early temporal negativity is sensitive to perceived (rather than physical) facial identity. Neuropsychologia, 75, 132–42. doi: 10.1016/j.neuropsychologia.2015.05.023

- Farah M.J., Tanaka J.W., Drain H.M. (1995). What causes the face inversion effect? Journal of Experimental Psychology: Human Perception and Performance, 21(3), 628.

- Feng W., Martinez A., Pitts M., Luo Y.-J., Hillyard S.A. (2012). Spatial attention modulates early face processing. Neuropsychologia, 50(14), 3461–8.

- Fisher K., Towler J., Eimer M. (2016). Facial identity and facial expression are initially integrated at visual perceptual stages of face processing. Neuropsychologia, 80, 115–25. doi: 10.1016/j.neuropsychologia.2015.11.011

- Gilad S., Meng M., Sinha P. (2009). Role of ordinal contrast relationships in face encoding. Proceedings of the National Academy of Sciences of the United States of America, 106(13), 5353–8. doi: 10.1073/pnas.081239610

- Gosling A., Eimer M. (2011). An event-related brain potential study of explicit face recognition. Neuropsychologia, 49(9), 2736–45. doi: 10.1016/j.neuropsychologia.2011.05.025

- Haxby J.V., Hoffman E.A., Gobbini M.I. (2000). The distributed human neural system for face perception. Trends in Cognitive Sciences, 4(6), 223–33.

- Heinze H.J., Hinrichs H., Scholz M., Burchert W., Mangun G.R. (1998). Neural mechanisms of global and local processing. A combined PET and ERP study. Journal of Cognitive Neuroscience, 10(4), 485–98.

- Heinze H.J., Münte T.F. (1993). Electrophysiological correlates of hierarchical stimulus processing: dissociation between onset and later stages of global and local target processing. Neuropsychologia, 31(8), 841–52.

- Herzmann G. (2016). Increased N250 amplitudes for other-race faces reflect more effortful processing at the individual level. International Journal of Psychophysiology, 105, 57–65. doi: 10.1016/j.ijpsycho.2016.05.001

- Herzmann G., Schweinberger S.R., Sommer W., Jentzsch I. (2004). What's special about personally familiar faces? A multimodal approach. Psychophysiology, 41(5), 688–701. doi: 10.1111/j.1469-8986.2004.00196.x

- Holcomb P.J., Anderson J., Grainger J. (2005). An electrophysiological study of cross-modal repetition priming. Psychophysiology, 42(5), 493–507. doi: 10.1111/j.1469-8986.2005.00348.

- Itier R.J., Batty M. (2009). Neural bases of eye and gaze processing: the core of social cognition. Neuroscience and Biobehavioral Reviews, 33(6), 843–63. doi: 10.1016/j.neubiorev.2009.02.004

- Itier R.J., Latinus M., Taylor M.J. (2006). Face, eye and object early processing: what is the face specificity? NeuroImage, 29(2), 667–76. doi: 10.1016/j.neuroimage.2005.07.041

- Itier R.J., Taylor M.J. (2002). Inversion and contrast polarity reversal affect both encoding and recognition processes of unfamiliar faces: a repetition study using ERPs. NeuroImage, 15(2), 353–72.

- Itier R.J., Taylor M.J. (2004a). Effects of repetition learning on upright, inverted and contrast-reversed face processing using ERPs. NeuroImage, 21(4), 1518–32. doi: 10.1016/j.neuroimage.2003.12.016

- Itier R.J., Taylor M.J. (2004b). N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cerebral Cortex, 14(2), 132–42.

- Itier R.J., Taylor M.J. (2004c). Source analysis of the N170 to faces and objects. NeuroReport, 15(8), 1261–5. doi: 10.1097/01.wnr.0000127827.73576.d8

- Jacques C., d'Arripe O., Rossion B. (2007). The time course of the inversion effect during individual face discrimination. Journal of Vision, 7(8), 3. doi: 10.1167/7.8.3

- Kaufmann J.M., Schweinberger S.R., Burton A.M. (2009). N250 ERP correlates of the acquisition of face representations across different images. Journal of Cognitive Neuroscience, 21(4), 625–41.

- Konar Y., Bennett P.J., Sekuler A.B. (2009). Holistic processing is not correlated with face-identification accuracy. Psychological Science, 21(1), 38–43. doi: 10.1177/0956797609356508

- Langton S.R.H., Watt R.J., Bruce V. (2000). Do the eyes have it? Cues to the direction of social attention. Trends in Cognitive Sciences, 4(2), 50–9.

- Malinowski P., Hübner R., Keil A., Gruber T. (2002). The influence of response competition on cerebral asymmetries for processing hierarchical stimuli revealed by ERP recordings. Experimental Brain Research, 144(1), 136–9.

- Maurer D., Le Grand R., Mondloch C.J. (2002). The many faces of configural processing. Trends in Cognitive Sciences, 6(6), 255–60.

- McKone E. (2004). Isolating the special component of face recognition: peripheral identification and a Mooney face. Journal of Experimental Psychology: Learning, Memory, and Cognition, 30(1), 181.

- McKone E., Yovel G. (2009). Why does picture-plane inversion sometimes dissociate perception of features and spacing in faces, and sometimes not? Toward a new theory of holistic processing. Psychonomic Bulletin & Review, 16(5), 778–97. doi: 10.3758/PBR.16.5.778

- Nemrodov D., Itier R.J. (2011). The role of eyes in early face processing: a rapid adaptation study of the inversion effect. British Journal of Psychology, 102(4), 783–98. doi: 10.1111/j.2044-8295.2011.02033.x

- Ohayon S., Freiwald W.A., Tsao D.Y. (2012). What makes a cell face selective? The importance of contrast. Neuron, 74(3), 567–81. doi: 10.1016/j.neuron.2012.03.024

- Oleggio Castello M.V., Wheeler K.G., Cipolli C., Gobbini M.I. (2017). Familiarity facilitates feature-based face processing. PLoS One, 12(6), e0178895.

- Peirce J.W. (2007). PsychoPy—Psychophysics software in Python. Journal of Neuroscience Methods, 162(1–2), 8–13. doi: 10.1016/j.jneumeth.2006.11.017

- Pfütze E., Sommer W., Schweinberger S.R. (2002). Age-related slowing in face and name recognition: evidence from event-related brain potentials. Psychology and Aging, 17(1), 140–60. doi: 10.1037//0882-7974.17.1.140

- Pickering E.C., Schweinberger S.R. (2003). N200, N250r, and N400 event-related brain potentials reveal three loci of repetition priming for familiar names. Journal of Experimental Psychology: Learning, Memory, and Cognition, 29(6), 1298–311.

- Piepers D.W., Robbins R.A. (2012). A review and clarification of the terms "holistic," "configural," and "relational" in the face perception literature. Frontiers in Psychology, 3, 559. doi: 10.3389/fpsyg.2012.00559

- Rezlescu C., Chapman A., Susilo T., Caramazza A. (2016). Large inversion effects are not specific to faces and do not vary with object expertise. Journal of Vision, 17(10), 250.

- Rezlescu C., Susilo T., Wilmer J.B., Caramazza A. (2017). The inversion, part-whole, and composite effects reflect distinct perceptual mechanisms with varied relationships to face recognition. Journal of Experimental Psychology: Human Perception and Performance, 43(12), 1961–73. doi: 10.1037/xhp0000400

- Richler J.J., Floyd R.J., Gauthier I. (2015). About-face on face recognition ability and holistic processing. Journal of Vision, 15(9), 15. doi: 10.1167/15.9.15

- Richler J.J., Gauthier I. (2014). A meta-analysis and review of holistic face processing. Psychological Bulletin, 140(5), 1281–302. doi: 10.1037/a0037004

- Richler J.J., Mack M.L., Palmeri T.J., Gauthier I. (2011). Inverted faces are (eventually) processed holistically. Vision Research, 51(3), 333–42. doi: 10.1016/j.visres.2010.11.014

- Rossion B. (2008). Picture-plane inversion leads to qualitative changes of face perception. Acta Psychologica, 128(2), 274–89.

- Rossion B. (2009). Distinguishing the cause and consequence of face inversion: the perceptual field hypothesis. Acta Psychologica, 132(3), 300–12. doi: 10.1016/j.actpsy.2009.08.002

- Rossion B. (2013). The composite face illusion: a whole window into our understanding of holistic face perception. Visual Cognition, 21, 139–253.

- Rossion B., Delvenne J.-F., Debatisse D., et al. (1999). Spatio-temporal localization of the face inversion effect: an event-related potentials study. Biological Psychology, 50(3), 173–89.

- Rossion B., Dricot L., Devolder A., et al. (2000a). Hemispheric asymmetries for whole-based and part-based face processing in the human fusiform gyrus. Journal of Cognitive Neuroscience, 12(5), 793–802.

- Rossion B., Gauthier I., Tarr M.J., et al. (2000). The N170 occipito-temporal component is delayed and enhanced to inverted faces but not to inverted objects: an electrophysiological account of face-specific processes in the human brain. NeuroReport, 11(1), 69–72.

- Schweinberger S.R., Burton A.M. (2003). Covert recognition and the neural system for face processing. Cortex, 39(1), 9–30.

- Schweinberger S.R., Huddy V., Burton A.M. (2004). N250r: a face-selective brain response to stimulus repetitions. NeuroReport, 15(9), 1501–5. doi: 10.1097/01.wnr.0000131675.00319.42

- Schweinberger S.R., Neumann M.F. (2016). Repetition effects in human ERPs to faces. Cortex, 80, 141–53. doi: 10.1016/j.cortex.2015.11.001

- Schweinberger S.R., Pfütze E.-M., Sommer W. (1995). Repetition priming and associative priming of face recognition: evidence from event-related potentials. Journal of Experimental Psychology: Learning, Memory, and Cognition, 21(3), 722.

- Schweinberger S.R., Pickering E.C., Burton A.M., Kaufmann J.M. (2002a). Human brain potential correlates of repetition priming in face and name recognition. Neuropsychologia, 40(12), 2057–73.

- Schweinberger S.R., Pickering E.C., Jentzsch I., Burton A.M., Kaufmann J.M. (2002b). Event-related brain potential evidence for a response of inferior temporal cortex to familiar face repetitions. Cognitive Brain Research, 14(3), 398–409.

- Schyns P.G., Bonnar L., Gosselin F. (2002). Show me the features! Understanding recognition from the use of visual information. Psychological Science, 13(5), 402–9.

- Schyns P.G., Petro L.S., Smith M.L. (2007). Dynamics of visual information integration in the brain for categorizing facial expressions. Current Biology, 17(18), 1580–5. doi: 10.1016/j.cub.2007.08.048

- Sunday M.A., Richler J.J., Gauthier I. (2017). Limited evidence of individual differences in holistic processing in different versions of the part-whole paradigm. Attention, Perception, and Psychophysics, 79(5), 1453–65. doi: 10.3758/s13414-017-1311-z

- Tanaka J.W., Farah M.J. (1993). Parts and wholes in face recognition. The Quarterly Journal of Experimental Psychology, 46(2), 225–45.

- Towler J., Eimer M. (2016). Electrophysiological evidence for parts and wholes in visual face memory. Cortex, 83, 246–58. doi: 10.1016/j.cortex.2016.07.022

- Van Belle G., De Graef P., Verfaillie K., Rossion B., Lefèvre P. (2010). Face inversion impairs holistic perception: evidence from gaze-contingent stimulation. Journal of Vision, 10(5), 10.

- Wang R., Li J., Fang H., Tian M., Liu J. (2012). Individual differences in holistic processing predict face recognition ability. Psychological Science, 23(2), 169–77. doi: 10.1177/0956797611420575

- Wiese H. (2013). Do neural correlates of face expertise vary with task demands? Event-related potential correlates of own-and other-race face inversion. Frontiers in Human Neuroscience, 7, 1–14.

- Wirth B.E., Fisher K., Towler J., Eimer M. (2015). Facial misidentifications arise from the erroneous activation of visual face memory. Neuropsychologia, 77, 387–99. doi: 10.1016/j.neuropsychologia.2015.09.021

- Yamaguchi S., Yamagata S., Kobayashi S. (2000). Cerebral asymmetry of the "top-down" allocation of attention to global and local features. The Journal of Neuroscience, 20(9), RC72.

- Yin R.K. (1969). Looking at upside-down faces. Journal of Experimental Psychology, 81(1), 141.

- Young A.W., Flude B.M., Hellawell D.J., Ellis A.W. (1994). The nature of semantic priming effects in the recognition of familiar people. British Journal of Psychology, 85 (Pt 3), 393–411.

- Zhang H., Sun Y., Zhao L. (2017). Face context influences local part processing: an ERP study. Perception, 46(9), 1090–104. doi: 10.1177/0301006617691293

- Zhang X., Zhang L., Wang X.-J., Shum H.-Y. (2012). Finding celebrities in billions of web images. IEEE Transactions on Multimedia, 14(4), 995–1007.

- Zimmermann F.G., Eimer M. (2013). Face learning and the emergence of view-independent face recognition: an event-related brain potential study. Neuropsychologia, 51(7), 1320–9. doi: 10.1016/j.neuropsychologia.2013.03.028