Abstract

Hypothesis:

1) Environmental sound awareness (ESA) and speech recognition skills in experienced, adult cochlear implant (CI) users will be highly correlated, and, 2) ESA skills of CI users will be significantly lower than those of age-matched adults with normal hearing.

Background:

Enhancement of ESA is often discussed with patients with sensorineural hearing loss as a potential benefit of implantation and, in some cases, ESA may be a major motivating factor. Despite its ecological validity and patients’ expectations, ESA remains largely a presumed skill. The relationship between ESA and speech recognition is not well-understood.

Methods:

ESA was assessed in 35 postlingually deaf, experienced CI users and a control group of 41 age-matched, normal hearing listeners using the validated, computerized familiar environmental sounds test—identification (FEST-I) and a diverse speech recognition battery. Demographic and audiological factors as well as nonverbal intelligence quotient (IQ)/nonverbal reasoning and spectral resolution were assessed.

Results:

Six of the 35 experienced CI users (17%) demonstrated FEST-I accuracy within the range of the NH controls. Among CI users all correlations between FEST-I accuracy and speech recognition scores were strong. Chronological age at the time of testing, duration of deafness, spectral resolution, and nonverbal IQ/nonverbal reasoning were strongly correlated with FEST-I accuracy. Partial correlation analysis showed that correlations between FEST-I and speech recognition measures remained significant when controlling for the demographic and audiological factors.

Conclusion:

Our findings reinforce the hypothesis that ESA and speech perception share common underlying processes rather than reflecting truly separate auditory domains.

Keywords: Cochlear implants, Environmental sound awareness, Familiar environmental sounds test, Speech recognition

Patients with sensorineural hearing loss (SNHL) considering cochlear implantation (CI) are commonly motivated by a desire for improvement in two ecologically important domains: speech recognition skills and environmental sound awareness (ESA) (1). For prelingually deaf adults and multiply involved children with hearing loss, for whom speech and language development are not considered a likely outcome, ESA may be the primary anticipated benefit of implantation (2,3).

While a large body of work has been amassed over the last 30 years supporting the benefit of CIs in the domain of speech recognition, ESA among CI users remains largely a presumed benefit: comparatively little is known regarding how CI users perceive and interpret nonspeech, non-musical sounds in their listening environments. While some of the knowledge accrued from studying speech recognition skills following CI may be generalizable to ESA, there are fundamental differences between speech and environmental sounds that make it unreasonable to assume that the perceptual processes for the two are equivalent (4). Speech sounds represent components of linguistic structure such as phonemes, syllables, and words, whose accurate perception is essential for accessing their semantic properties. The acoustic characteristics of speech sounds are bound by the physical properties of a single sound-producing source, the vocal tract. In contrast, environmental sounds are causally bound to a tremendous multitude of objects and actions, with distinct physical properties, that occur in a listener’s environment (5,6). Alternatively, as in the case of signaling or warning sounds, environmental sounds have arbitrarily established associations between their acoustic signals and the information they convey.

There are several practical reasons for expanding our knowledge of ESA in CI users. First, deficiencies in ESA have important practical consequences in the daily lives of those with hearing impairment. Chief among these is safety, such as the ability of a CI user to detect and identify an alarm in a listening environment without assistive technologies, or to hear the cry of one’s baby. Second, CI candidates themselves report a desire to improve their ESA, and they explicitly report expectations of improvement in this domain following implantation (2,7). Furthermore, there is preliminary support for the hypothesis that ESA can be trained, although the duration of benefit and the transfer of benefit to untrained environmental and speech sounds are incompletely characterized (8–10).

Most important to this study, a strong association between ESA and speech recognition skills may suggest the possibility of novel candidacy and outcome assessment tools for non-native language speakers in a clinic setting, or for patients with cognitive impairment who cannot complete conventional speech recognition testing (8,11). Alternatively, if ESA and speech recognition skills are not correlated, which has been reported in at least one study (12), ESA may serve as a meaningful contrast to speech skills. If ESA represents a contrasting outcome domain, it would have implications for clinical testing of CI candidates and as a novel outcome in CI users, as well as for developing a better theoretical understanding of the perceptual processes that underlie speech recognition and ESA skills.

Some, but not all, previous studies that examined the association between environmental sounds and speech in CI users have found significant correlations (11,13). However, these studies, and studies reporting ESA among CI users in general (2,3,7,14,15), had relatively small numbers of participants and used large environmental sound inventories. Such large environmental sound tests may not be practical for use in clinical settings. On the other hand, a shorter environmental sound test may not be able to capture the perceptual structures and processes that underlie the relationship between speech and environmental sounds. Further, earlier studies often did not include an appropriate age-matched control group, which may have biased the interpretation of test results.

The purpose of this study was to examine how ESA skills, specifically, environmental sound identification abilities assessed with a brief 25-item test (16), relate to speech recognition skills in experienced CI users. Our hypothesis was twofold: first, ESA and speech recognition skills of CI users would be highly correlated, and, second, ESA skills of CI users would be significantly lower than those of age-matched adults with age-appropriate hearing. The implications of this hypothesis are that speech recognition and ESA skills rely upon the same basic underlying sensory and cognitive processes, and for this reason, the two domains may serve as predictors or surrogates for each other. The null hypothesis, that ESA and speech perception skills are not correlated, would imply that ESA and speech recognition reflect two distinct underlying perceptual processes, and for this reason, assessments of ESA and speech recognition should potentially be considered independently, both for clinical evaluation of CI candidates or CI users, and for researchers investigating perceptual processing in patients with hearing loss.

MATERIALS AND METHODS

Participants

This study was approved by the Institutional Review Board (IRB) of The Ohio State University. Thirty-five experienced adult CI users with a mean of 7.32 years (minimum = 1 yr; maximum = 34 yr) of CI use participated. The mean age at time of testing was 67 years (minimum = 50 yr; maximum = 83 yr). Varying etiologies of hearing loss and ages at implantation were included. All participants were postlingually deaf, received their implants at or after the age of 35 years, and were found to be candidates for implantation using the clinical sentence recognition criteria in place at that time. Participants demonstrated CI-aided thresholds better than 35 dB HL at 0.25, 0.5, 1, and 2 kHz, measured by a clinical audiologist within the 12 months before enrollment. All spoke American English as their first language.

A control group of 41 older (mean age 67.35 yr; minimum = 50 yr; maximum = 81 yr), normal hearing (NH) participants was tested as well. NH was defined as four-tone (0.5, 1, 2, and 4 kHz) pure-tone average (PTA) thresholds less than 25 dB HL in the better-hearing ear for those less than or equal to 60 years old, and less than 30 dB HL for those more than 60 years old. Control participants were identified from patients with non-otologic complaints in our department, as well as through a national research recruitment database. Table 1 summarizes demographic and audiological characteristics of the CI users and the NH controls.

TABLE 1.

Demographic and audiological characteristics of the CI users and NH control group

| Characteristic | CI Users | NH Controls | Group comparison |
|---|---|---|---|
| Number | 35 | 41 | |
| Mean age at test (yr) | 67.60 | 67.35 | t (76) = 0.158, p = 0.875 |
| Minimum (yr) | 50 | 50 | |
| Maximum (yr) | 83 | 81 | |
| SD (yr) | 9.19 | 6.86 | |
| Male | 22 (57.9%) | 15 (34.9%) | |
| Female | 16 (42.1%) | 43 (65.1%) | |
| Preoperative pure-tone average | | | |
| Mean (dB) | 95.46 | | |
| Minimum (dB) | 22.5 | | |
| Maximum (dB) | 120 | | |
| SD (dB) | 21.93 | | |
| Duration of hearing loss | | | |
| Mean (yr) | 37.91 | | |
| Minimum (yr) | 4 | | |
| Maximum (yr) | 76 | | |
| SD (yr) | 21.06 | | |
| Duration of CI use | | | |
| Mean (yr) | 7.32 | | |
| Minimum (yr) | 1 | | |
| Maximum (yr) | 34 | | |
| SD (yr) | 6.91 | | |
| Device laterality | | | |
| Right | 17 (48.6%) | | |
| Left | 7 (20%) | | |
| Bilateral | 11 (31.4%) | | |

CI indicates cochlear implant; dB, decibel; NH, normal hearing; SD, standard deviation.

To rule out cognitive impairment, participants completed the Mini-Mental State Examination (MMSE), a validated screening assessment tool for memory, attention, and the ability to follow instructions (17). Raw scores were converted to T scores based on age and education. A T score of less than 29 is concerning for cognitive impairment, which was considered an exclusionary criterion, but all participants had MMSE T scores of more than or equal to 29.

Because our measure of ESA required reading environmental sound names, the Word Reading subtest of the Wide Range Achievement Test, 4th edition (WRAT) (18), was used to measure basic language proficiency. All participants were required to have a standard score of more than or equal to 83. Because our ESA assessment required looking at a computer monitor, a screening test of near-vision was performed. All participants had corrected near-vision of more than or equal to 20/40.

General Approach

All auditory signals were presented via a computer in an acoustically treated room, over a high-quality loudspeaker 1 m from the participant at zero degrees azimuth. Visual stimuli were displayed on a computer touchscreen. For speech recognition assessments, all responses were video- and audio-recorded for later scoring. During testing, participants wore their CIs in their “everyday” settings. Participants with bilateral CIs wore both during testing, and participants with contralateral hearing aids wore all devices in their usual settings.

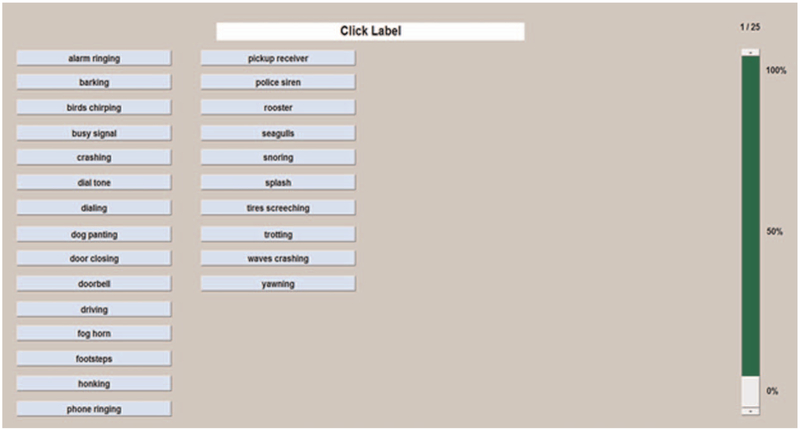

Familiar Environmental Sound Test–Identification (FEST-I)

The familiar environmental sound test–identification (FEST-I) (8,16,19,20) was our measure of environmental sound identification. The FEST-I uses a closed-set, forced-choice paradigm that requires selection of one out of a total of 25 response alternatives. Figure 1 shows what the participant sees on the computer monitor while taking the FEST-I. The sounds included on the FEST-I represent a broad sampling of familiar environmental sounds that can be encountered in everyday soundscapes. Each sound belonged to one of five categories of sounds used in earlier work by Tye-Murray et al. (15) on ESA in NH listeners: 1) human/animal vocalizations and bodily sounds, 2) mechanical sounds, 3) water sounds, 4) aerodynamic sounds, and 5) signaling sounds. In the development of the FEST-I, all test signals were found to be easily identifiable (i.e., average accuracy 98%, standard deviation [SD] = 2) and highly familiar (i.e., 6.4 on a 7-point rating scale) to young NH listeners (8). Test signals varied in duration (0.1–0.5 s to 7–9 s); the average was 2.7 seconds (SD = 1.3). Scores used in analyses consisted of percent correct responses.

FIG. 1.

Screenshot of FEST-I response screen. FEST-I indicates familiar environmental sounds test—identification.

Speech Recognition Measures

Our speech recognition assessment included isolated words and words in meaningful sentences. All speech recognition tasks were performed in quiet. Speech materials of varying difficulty were included to reduce floor and ceiling effects across all measures. All measures were prerecorded to maintain consistent presentation levels and presented at 68 dB SPL. Spoken word recognition was measured using a Central Institute for the Deaf Word List (CID-W22) of 50 words, selected because previous work has demonstrated a wide range of variability in CI users when tested in quiet (21,22). Recognition of spoken words in meaningful sentences was tested using the Institute of Electrical and Electronics Engineers (IEEE) corpus (23), chosen because these sentences are more complex than those in most clinical batteries (e.g., AzBio or HINT), and a wide range of performance has been demonstrated among adult CI users. The IEEE assessment features 30 sentences in total: 28 that are tested and scored and two practice sentences that are not scored (24). Finally, 32 Perceptually Robust English Sentence Test Open-set (PRESTO) sentences were presented. PRESTO sentences are challenging and incorporate high-variability conditions: each sentence on a given test list is produced by a different talker, and the talkers on each list represent a number of regional dialects from the United States. No talker is ever repeated in the same test list, and each PRESTO sentence is novel (23). Two of the 32 sentences are used for practice purposes and the remaining 30 are tested and scored. Scores used in analyses were percent total words correct for each task.

Spectral Resolution

The Modified Spectral Ripple Task (MSRT; available at http://smrt.tigerspeech.com) consisted of 202 pure-tone frequencies with amplitudes spectrally modulated by a sine wave (25). Ripple density and phase of ripple onset were determined by frequency and phase of the modulating sinusoid. The ripple-resolution threshold was determined using a three-interval, two-alternative forced-choice, one-up/one-down adaptive procedure. The initial target stimulus had 0.5 ripples per octave (RPO), with a step size of 0.2 RPO. The reference stimulus consisted of 20 RPO. Listeners performed the task by discriminating a reference from target stimuli. Six runs of 10 reversals each comprised the task. Scores used in the final analysis were based on the last six reversals of each run (the first three runs were discarded as practice). A higher score represented better spectral resolution.
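The adaptive rule described above can be illustrated with a minimal Python sketch. It is not the code of the actual test software: the function name `run_staircase`, the `min_rpo` floor, the `max_trials` guard, the convention of recording a reversal at the density where the response changed, and the deterministic simulated listener are all assumptions made for illustration. Only the starting density (0.5 RPO), the step size (0.2 RPO), the 10 reversals per run, and the scoring of the last six reversals come from the text; the handling of practice runs is not modeled.

```python
from statistics import mean


def run_staircase(respond, start_rpo=0.5, step=0.2, min_rpo=0.1,
                  n_reversals=10, n_scored=6, max_trials=500):
    """One run of a one-up/one-down track over ripple density (RPO).

    `respond(rpo)` returns True when the listener picks the target correctly.
    A correct response makes the next trial harder (higher RPO); an incorrect
    response makes it easier. `min_rpo` and `max_trials` are hypothetical guards.
    """
    rpo = start_rpo
    last_direction = 0          # +1 = density went up, -1 = density went down
    reversals = []
    for _ in range(max_trials):
        direction = 1 if respond(rpo) else -1
        if last_direction and direction != last_direction:
            reversals.append(rpo)            # track changed direction here
            if len(reversals) == n_reversals:
                # Threshold estimate: mean density over the last reversals.
                return mean(reversals[-n_scored:])
        last_direction = direction
        rpo = max(round(rpo + direction * step, 6), min_rpo)
    raise RuntimeError("staircase did not reach the required number of reversals")


# Idealized listener who is correct whenever the density is at or below 2.0 RPO.
threshold = run_staircase(lambda rpo: rpo <= 2.0)
```

With this idealized listener the track climbs to 2.1 RPO and then alternates between 1.9 and 2.1, so the estimate settles midway between them. A real listener responds probabilistically, which is why several runs are collected.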

Nonverbal Intelligence Quotient (IQ)/Nonverbal Reasoning

Nonverbal IQ/nonverbal reasoning was assessed using Raven’s Progressive Matrices (26). This nonverbal assessment requires the participant to correctly identify a missing element that completes a visual pattern on a computer screen, the majority in the form of geometric design matrices. A 10-minute time limit was imposed for completion of this measure.

Statistical Analyses

Statistical analyses were performed using SPSS Statistics (Version 22; IBM Corp., Armonk, NY). Demographic analyses and measures of central tendency were conducted to characterize the sample. An independent-samples t test was performed to compare means of FEST-I scores between CI users and NH controls. Bivariate Pearson correlation analyses were performed among the variables FEST-I scores and speech recognition measures and between FEST-I percent correct and demographic/audiological factors. Partial correlation analyses were performed to test the relationship between FEST-I accuracy and speech recognition skills controlling for the potentially confounding effects of demographic/audiological factors correlated with FEST-I performance.
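For readers less familiar with partial correlation, the following Python sketch shows one standard way to compute it, using the recursive formula that removes one covariate at a time. It is an illustration only: the analyses in this study were run in SPSS, the function names are hypothetical, and the toy vectors are made-up numbers, not study data.

```python
from math import sqrt


def pearson(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def partial_corr(x, y, covariates):
    """Correlation between x and y after partialling out every covariate.

    Applies r_xy.z = (r_xy - r_xz * r_yz) / sqrt((1 - r_xz^2)(1 - r_yz^2))
    recursively, removing the last covariate in the list at each level.
    """
    if not covariates:
        return pearson(x, y)
    *rest, z = covariates
    r_xy = partial_corr(x, y, rest)
    r_xz = partial_corr(x, z, rest)
    r_yz = partial_corr(y, z, rest)
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Toy example (made-up numbers): both scores depend on a shared covariate.
covariate = [0, 1, 2, 3, 4, 5, 6, 7]
noise_a = [1, -1, -1, 1, 1, -1, -1, 1]
noise_b = [1, -1, -1, 1, -1, 1, 1, -1]
score_a = [c + e for c, e in zip(covariate, noise_a)]
score_b = [c + e for c, e in zip(covariate, noise_b)]

raw_r = pearson(score_a, score_b)
adjusted_r = partial_corr(score_a, score_b, [covariate])
```

In the toy data the raw correlation is high only because both scores track the shared covariate, and it vanishes once that covariate is partialled out. A correlation that survives this adjustment is therefore not explained by the covariates alone.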

RESULTS

CI Users Versus NH Listeners: FEST-I Accuracy

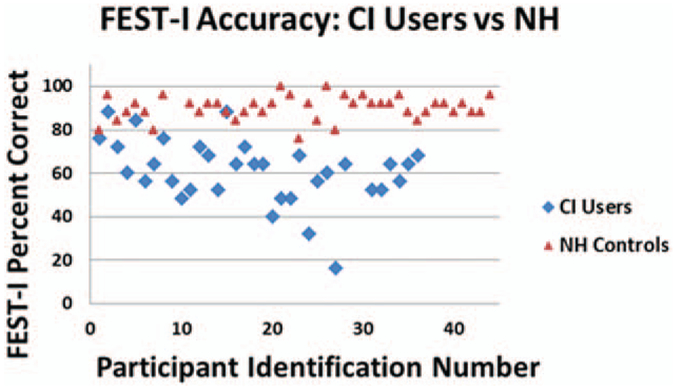

Our sample of 35 experienced CI users achieved a mean accuracy score of 61% on the FEST-I (minimum = 16%; maximum = 88%; SD = 14.96), whereas the NH controls achieved a mean accuracy score of 90% (minimum = 76%; maximum = 100%; SD = 5.22). An independent-samples t test established this difference to be highly significant (t[74] = −11.61, p < 0.001). Six of the 35 experienced CI users (17%) demonstrated accuracy within the range of the NH controls. Figure 2 displays the distribution of FEST-I accuracy scores among CI users compared with NH controls.
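As a rough check, the group comparison can be approximated from the summary statistics alone. The Python sketch below assumes an equal-variance (pooled) t test, which is consistent with the reported 74 degrees of freedom but is not stated explicitly in the Methods. The function name `pooled_t` is hypothetical, and the inputs are the rounded means and SDs reported in the text.

```python
from math import sqrt


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t statistic and degrees of freedom from summary statistics."""
    df = n1 + n2 - 2
    # Pooled variance weights each group's variance by its degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, df


# CI users: 61% (SD 14.96, n = 35); NH controls: 90% (SD 5.22, n = 41).
t_stat, df = pooled_t(61, 14.96, 35, 90, 5.22, 41)
```

This yields a t statistic of about −11.6 on 74 degrees of freedom, in line with the reported value; the small discrepancy is expected because the means entered here are rounded.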

FIG. 2.

Scatterplot of FEST-I accuracy among experienced CI users compared to NH listeners. CI indicates cochlear implant; FEST-I, familiar environmental sounds test—identification; NH, normal hearing.

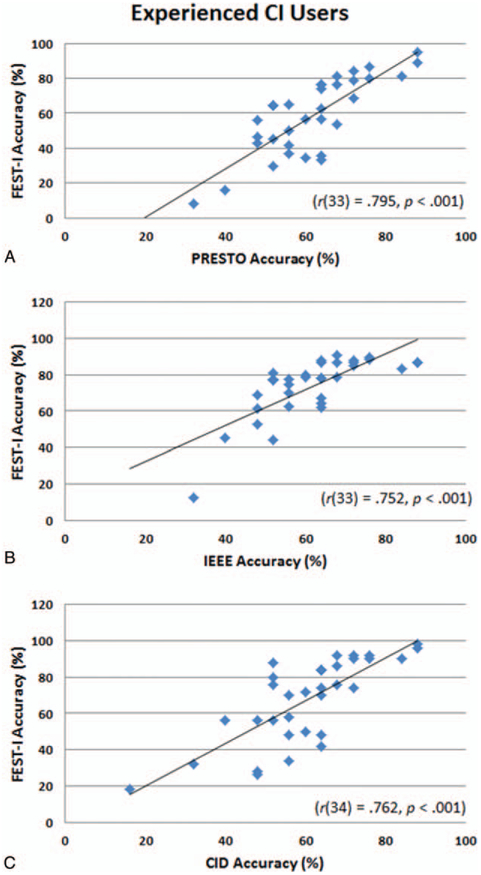

Correlation between FEST-I and Speech Recognition Measures in CI Users

For experienced CI users, all Pearson bivariate correlations between FEST-I accuracy and speech recognition scores were strong. This pattern was consistently observed across 1) PRESTO (r[33] = 0.795, p < 0.001); 2) IEEE standard sentences (r[33] = 0.752, p < 0.001); and 3) CID-W22 (r[34] = 0.762, p < 0.001). Figure 3A–C shows scatterplots of FEST-I accuracy against each of these speech recognition measures in experienced CI users.

FIG. 3.

A–C, Scatterplots of FEST-I accuracy and speech recognition measures. FEST-I indicates familiar environmental sounds test–identification.

Correlations Between Demographic/Audiological Factors and FEST-I Accuracy in CI Users

Pearson bivariate correlation analyses were performed between FEST-I accuracy and a number of demographic and audiological factors of potential relevance. The factors considered included the following: 1) chronological age at the time of testing, 2) duration of deafness (computed as current age minus patient-reported age at onset of hearing loss), 3) spectral resolution, and 4) nonverbal IQ/nonverbal reasoning using the Raven’s task. Table 2 summarizes these correlations; each of these demographic/audiological factors was significantly correlated with FEST-I accuracy.

TABLE 2.

Correlations between demographic/audiological factors and FEST-I accuracy among CI users

| Factor | Correlation with FEST-I Accuracy |
|---|---|
| Chronological age at test (yr) | r (35) = −0.511, p = 0.002 |
| Duration of deafness (yr) | r (35) = −0.352, p = 0.038 |
| Spectral resolution | r (33) = 0.538, p = 0.001 |
| Nonverbal IQ/nonverbal reasoning | r (35) = 0.528, p = 0.001 |

CI indicates cochlear implant; FEST-I, familiar environmental sounds test—identification; IQ, intelligence quotient.

Partial Correlation Analyses

To examine whether the strong, consistent relationship between speech recognition measures and FEST-I scores persisted after controlling for potential confounding variables, we performed three partial correlation analyses between FEST-I accuracy and IEEE, PRESTO, and CID-W22 scores, each controlling for chronological age at the time of testing, duration of deafness, spectral resolution, and nonverbal IQ/nonverbal reasoning. For PRESTO (r[25] = 0.655, p < 0.001), IEEE (r[25] = 0.573, p = 0.002), and CID-W22 (r[26] = 0.592, p = 0.001), the correlations with ESA remained significant and the change in magnitude was only slight (Table 3).

TABLE 3.

Partial correlation analysis between speech recognition measures and FEST-I accuracy controlling for demographic and audiological factors

| Speech Recognition Measure | Partial Correlation with FEST-I Accuracy<sup>a</sup> |
|---|---|
| IEEE | r (25) = 0.573, p = 0.002 |
| PRESTO | r (25) = 0.655, p < 0.001 |
| CID-W22 | r (26) = 0.592, p = 0.001 |

<sup>a</sup>Partialled out: chronological age at test, duration of deafness, spectral resolution, nonverbal IQ/nonverbal reasoning.

CID-W22 indicates Central Institute for the Deaf Word List; FEST-I, familiar environmental sounds test–identification; IEEE, Institute of Electrical and Electronics Engineers; PRESTO, Perceptually Robust English Sentence Test Open-set.
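The significance of a correlation coefficient follows from its size and degrees of freedom through the standard conversion t = r√(df / (1 − r²)). The Python sketch below applies this conversion to the coefficients reported in Table 3. The helper name `t_from_r` is hypothetical, and the p values themselves are not recomputed here because that requires a t-distribution function outside the Python standard library.

```python
from math import sqrt


def t_from_r(r, df):
    """Convert a (partial) correlation and its degrees of freedom to a t statistic."""
    return r * sqrt(df / (1 - r ** 2))


# Partial correlations and degrees of freedom as reported in Table 3.
reported = {"IEEE": (0.573, 25), "PRESTO": (0.655, 25), "CID-W22": (0.592, 26)}
t_values = {name: t_from_r(r, df) for name, (r, df) in reported.items()}
```

The resulting t statistics are roughly 3.5 (IEEE), 4.3 (PRESTO), and 3.7 (CID-W22), all well above the two-tailed critical value of about 2.06 for 25 degrees of freedom at α = 0.05.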

DISCUSSION

Speech recognition is the major motivating factor for most prospective CI users, and current CI software and pre-processing strategies are specifically designed to accommodate speech signals. In many patients considering a CI, ESA is also presented as a benefit of implantation. For some patients, ESA may be a major motivating factor. In spite of its ecological validity and potential as an additional non-speech outcome predictor or performance measure, ESA is largely a presumed skill following CI.

Interpreting the limited objective evidence on ESA among CI users is challenging for a number of reasons. First, the majority of studies on the topic of ESA are from the early days of CIs and reflect performance with early, fewer-channel devices (2,3,7,14,15). Samples were typically small and included heterogeneous hearing loss etiologies and a wide range of durations of auditory deprivation and durations of implantation. Furthermore, test design was heterogeneous to the point that comparison was very difficult: open-set and closed-set tests were used, sometimes simultaneously in the same study. Without normative data from age-matched cohorts, comparison with the performance of NH listeners was difficult as well. A related weakness of this literature is the use of control groups consisting of younger NH listeners for comparison with older CI users (i.e., an age-effect confound). Finally, conflicting findings exist on core questions of interest.

One such question of interest is how speech recognition and ESA relate to one another. This relationship is valuable to consider because if there is a strong correlation between the two domains, ESA assessments could potentially serve as a proxy for speech recognition. This could be helpful in cases where speech assessments are not available (e.g., non-English speakers for whom an alternative test is not available) or for patients who are too young to participate in traditional speech audiometry or who are cognitively delayed or impaired. Looi and Arnephy (12) reported that ESA skills did not correlate with speech recognition skills (using the Hearing in Noise Test in quiet and in noise along with Consonant-Nucleus-Consonant words and phonemes). Their findings suggest that ESA is a separate skill, not just an extension of speech recognition.

Other investigators have demonstrated strong and consistent correlations between ESA and speech recognition skills using the same measures. Using a closed-set format, Reed and Delhorne (11) found that postlingually deaf adult CI users with Northwestern University-6 (NU-6) scores more than 34% correct were able to recognize environmental sounds with a higher degree of accuracy (90% correct for five subjects and >80% for six); subjects with scores below 34% demonstrated greater difficulty in ESA (45–75% accurate). In another study, Inverso and Limb (13) examined ESA using the NonLinguistic Sounds Test (NLST) together with a speech recognition battery. They found that CI users with higher speech recognition scores also tended to have higher NLST scores. Shafiro et al. (20) also found correlations in the moderate-to-strong range between ESA and speech recognition measures, with the exception of vowels.

The first observation of importance from the current study is that only 17% (six of 35 participants) of our sample of experienced CI users performed within the range of performance seen for NH listeners. This finding is consistent with the performance of experienced CI users in other studies (11–13,20,23). In fact, the performance level of some CI users with poor ESA (11) is similar to that of profoundly deaf patients using tactual aids (27).

The second finding of importance is that our CI users demonstrated a large amount of variability in ESA skills, which was not observed in the control group of NH listeners. This was an expected finding, seen in almost all studies on ESA in CI users, across a variety of assessments (11–13,20,23). This finding is also consistent with the large, unexplained variability routinely observed in speech recognition skills that is a major theme of CI literature.

Using correlation analyses that looked at demographic and audiological factors, we found that chronological age at the time of testing, duration of deafness before implantation, nonverbal IQ/nonverbal reasoning, and spectral resolution were all strongly correlated with ESA. Chronological age at test and nonverbal IQ may serve as proxies for potential cognitive decline that may attend aging and hearing loss, a relationship that is increasingly recognized on the basis of epidemiological research (28,29). Our finding of a significant correlation between duration of deafness and FEST-I accuracy is consistent with the earlier reports by some investigators (11) and at odds with others (12). It is important to keep in mind that duration of auditory deprivation is a challenge to accurately characterize given the inherent recall bias associated with self-report of onset of hearing loss and the generally gradual nature of hearing decline. As in studies assessing the impact of duration of deafness on speech perception, a significant challenge is how to optimally define and quantify duration of deafness. For want of a better method, we relied on participant recollection of onset of hearing loss to define duration of deafness.

Spectral resolution, a listener’s sensitivity to the detailed frequency information encoded in an auditory signal, has drawn attention among CI researchers and clinicians in recent years because of its correlation with open-set speech recognition, especially in noisy conditions (25,30). It has been demonstrated that identification accuracy for environmental sounds in a closed-set response task improves with increased spectral resolution of the sounds presented (19). In our sample, spectral resolution was correlated with ESA skills.

Confirming our initial hypothesis, we found that our sample of CI users as a whole demonstrated ESA skills that correlated strongly and consistently with performance across our battery of challenging speech recognition tasks. This finding is in agreement with most existing work examining this question (11,13,20), and at odds with one report (12). A major contribution that our study makes toward addressing this important question is the use of a battery of diverse speech recognition tasks selected to ensure a wide range of performance and avoid ceiling and floor effects. Further, since chronological age, duration of deafness, spectral resolution, and nonverbal IQ/nonverbal reasoning all correlated with FEST-I accuracy, we performed three partial correlation analyses to control for confounding effects. The novel aspect of this approach is the demonstration that even taking these factors into account, we still find strong associations of ESA with speech recognition and only a small decrease in magnitude. This novel finding provides support for the hypothesis that there is some common perceptual processing between the two skills, possibly related to processing acoustically complex, ecologically meaningful signals.

CONCLUSIONS

ESA in CI users is a domain of auditory perception that is presumed to improve following implantation, but has been the focus of little research. Our findings that ESA and speech recognition are strongly and consistently correlated, and that these correlations remain significant even after controlling for potentially confounding influences, reinforce the hypothesis that ESA and speech perception share common underlying processes rather than reflecting truly separate auditory domains. Further work on ESA assessments as predictors or surrogates for speech recognition is called for, as is further investigation into the efficacy of ESA training and the potential for transfer of these skills to untrained ESA and speech recognition tasks.

Acknowledgments:

Data collection for this manuscript was supported by the American Otological Society Clinician-Scientist Award to Aaron Moberly. The authors would like to thank Kara Vasil, AuD, Taylor Wucinich, Natalie Safdar, and Alexandra Davies for their assistance in data collection, scoring, and data entry.

ResearchMatch, which was used to recruit some NH participants, is supported by National Center for Advancing Translational Sciences Grant UL1TR001070.

Footnotes

Disclosures: Harris (none); Boyce (none); Pisoni (none); Shafiro (none); Moberly: Data collection for this manuscript was supported by the American Otological Society Clinician-Scientist Award to Aaron Moberly.

The authors disclose no conflicts of interest.

REFERENCES

1. Food and Drug Administration (FDA). What are the Benefits of Cochlear Implants? Available at: https://www.fda.gov/MedicalDevices/ProductsandMedicalProcedures/ImplantsandProsthetics/Cochlear-Implants/ucm062843.htm. Accessed March 24th, 2017.

2. Kelsay DM, Tyler RS. Advantages and disadvantages expected and realized by pediatric cochlear implant recipients as reported by their parents. Am J Otol 1996;17:866–73.

3. Zwolan TA, Kileny PR, Telian SA. Self-report of cochlear implant use and satisfaction by prelingually deafened adults. Ear Hear 1996;17:198–210.

4. Pisoni DB. Comment: modes of processing speech and nonspeech signals. In: Mattingly IG, Studdert-Kennedy M, editors. Modularity and Motor Theory of Speech Perception. Hillsdale, NJ: Erlbaum; 1991. pp. 225–38.

5. Gaver WW. How do we hear in the world? Explorations in ecological acoustics. Ecol Psychol 1993;5:285–313.

6. Gaver WW. What in the world do we hear? An ecological approach to auditory event perception. Ecol Psychol 1993;5:1–29.

7. Zhao F, Stephens SD, Sim SW, Meredith R. The use of qualitative questionnaires in patients having and being considered for cochlear implants. Clin Otolaryngol Allied Sci 1997;22:254–9.

8. Shafiro V. Development of a large-item environmental sound test and the effects of short-term training with spectrally-degraded stimuli. Ear Hear 2008;29:775–90.

9. Loebach JL, Pisoni DB. Perceptual learning of spectrally degraded speech and environmental sounds. J Acoust Soc Am 2008;123:1126–39.

10. Altieri N. Commentary: environmental sound training in cochlear implant users. Front Neurosci 2017;1:36.

11. Reed CM, Delhorne LA. Reception of environmental sounds through cochlear implants. Ear Hear 2005;26:48–61.

12. Looi V, Arnephy J. Environmental sound perception of cochlear implant users. Cochlear Implants Int 2010;11:203–27.

13. Inverso Y, Limb CJ. Cochlear implant-mediated perception of nonlinguistic sounds. Ear Hear 2010;31:505–14.

14. Proops DW, Donaldson I, Cooper HR, et al. Outcomes from adult implantation, the first 100 patients. J Laryngol Otol Suppl 1999;24:5–13.

15. Tye-Murray N, Tyler RS, Woodworth GG, Gantz BJ. Performance over time with a nucleus or Ineraid cochlear implant. Ear Hear 1992;13:200–9.

16. Shafiro V, Sheft S, Norris M, et al. Toward a nonspeech test of auditory cognition: semantic context effects in environmental sound identification in adults of varying age and hearing abilities. PLoS One 2016;11:28.

17. Folstein MF, Folstein SE, McHugh PR. “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res 1975;12:189–98.

18. Wilkinson GS, Robertson GJ. Wide Range Achievement Test, Fourth Edition: Professional Manual. Lutz, FL: Psychological Assessment Resources; 2006.

19. Shafiro V. Identification of environmental sounds with varying spectral resolution. Ear Hear 2008;29:401–20.

20. Shafiro V, Gygi B, Cheng M, Vachhani J, Mulvey M. Perception of environmental sounds by experienced cochlear implant patients. Ear Hear 2011;32:511–23.

21. Hirsh I, Davis H, Silverman S, Reynolds E, Eldert E, Benson R. Development of materials for speech audiometry. J Speech Hear Disord 1952;17:321–37.

22. Moberly AC, Lowenstein JH, Tarr E, et al. Do adults with cochlear implants rely on different acoustic cues for phoneme perception than adults with normal hearing? J Speech Lang Hear R 2014;57:566–82.

23. Gilbert JL, Tamati TN, Pisoni DB. Development, reliability, and validity of PRESTO: a new high-variability sentence recognition test. J Am Acad Audiol 2013;24:26–36.

24. Rothauser E, Chapman W, Guttman N, et al. IEEE recommended practice for speech quality measurements. IEEE Trans Audio Electroacoust 1969;17:225–46.

25. Aronoff JM, Landsberger DM. The development of a modified spectral ripple test. J Acoust Soc Am 2013;134:EL217–22.

26. Raven J. Manual for Raven’s Progressive Matrices and Vocabulary Scales. Research Supplement No. 1: The 1979 British Standardisation of the Standard Progressive Matrices and Mill Hill Vocabulary Scales, Together With Comparative Data From Earlier Studies in the UK, US, Canada, Germany and Ireland. San Antonio, TX: Harcourt Assessment; 1981.

27. Reed CM, Delhorne LA. The reception of environmental sounds through wearable tactual aids. Ear Hear 2003;24:528–38.

28. Deal JA, Betz J, Yaffe K, et al. Hearing impairment and incident dementia and cognitive decline in older adults: The Health ABC Study. J Gerontol A Biol Sci Med Sci 2017;72:703–9.

29. Lin FR, Yaffe K, Xia J, et al. Hearing loss and cognitive decline in older adults. JAMA Intern Med 2013;173:293–9.

30. Horn DL, Won JH, Rubinstein JT, Werner LA. Spectral ripple discrimination in normal-hearing infants. Ear Hear 2017;38:212–22.