Significance

We must routinely make decisions based on uncertain sensory information. Sometimes that uncertainty is related to our own cognitive state, such as when we are not paying attention. Do our decisions about what we perceive take into account our attentional state? Or are we blind to such internal sources of uncertainty, leading to poor decisions and overconfidence? We found that human observers take attention-dependent uncertainty into account when categorizing visual stimuli and reporting their confidence in a task in which uncertainty is relevant for performance. Moreover, they do so in an approximately Bayesian fashion. Human perceptual decision-making can therefore, at least in some cases, adjust in a statistically appropriate way to external and internal sources of uncertainty.

Keywords: attention, perceptual decision, confidence, Bayesian, criterion

Abstract

Perceptual decisions are better when they take uncertainty into account. Uncertainty arises not only from the properties of sensory input but also from cognitive sources, such as different levels of attention. However, it is unknown whether humans appropriately adjust for such cognitive sources of uncertainty during perceptual decision-making. Here we show that, in a task in which uncertainty is relevant for performance, human categorization and confidence decisions take into account uncertainty related to attention. We manipulated uncertainty in an orientation categorization task from trial to trial using only an attentional cue. The categorization task was designed to disambiguate decision rules that did or did not depend on attention. Using formal model comparison to evaluate decision behavior, we found that category and confidence decision boundaries shifted as a function of attention in an approximately Bayesian fashion. This means that the observer’s attentional state on each trial contributed probabilistically to the decision computation. This responsiveness of an observer’s decisions to attention-dependent uncertainty should improve perceptual decisions in natural vision, in which attention is unevenly distributed across a scene.

Sensory representations are inherently noisy. In vision, stimulus factors such as low contrast, blur, and visual noise can increase an observer’s uncertainty about a visual stimulus. Optimal perceptual decision-making requires taking into account both the sensory measurements and their associated uncertainty (1). When driving on a foggy day, for example, you may be more uncertain about the distance between your car and the car in front of you than you would be on a clear day, and you may try to keep farther back. Humans often respond to sensory uncertainty in this way (2, 3), adjusting their choice behavior (4) as well as their confidence (5). Confidence is a metacognitive measure that reflects the observer’s degree of certainty about a perceptual decision (6, 7).

Uncertainty arises not only from the external world but also from one’s internal state. Attention is a key internal state variable that governs the uncertainty of visual representations (8, 9); it modulates basic perceptual properties like contrast sensitivity (10, 11) and spatial resolution (12). Surprisingly, it has been suggested that, unlike for external sources of uncertainty, people fail to take attention into account during perceptual decision-making (13–15), leading to inaccurate decisions and overconfidence—a risk in attentionally demanding situations like driving a car.

However, this proposal has never been tested using a perceptual task designed to distinguish fixed from flexible decision rules, nor has it been subjected to formal model comparison. Critically, as we show in SI Appendix, section S1, the standard signal detection tasks used previously cannot, in principle, test the fixed decision rule proposal. In standard tasks, the absolute internal decision rule cannot be uniquely recovered, making it impossible to distinguish between fixed and flexible decision rules (SI Appendix, Fig. S1A).

Testing whether observers take attention-dependent uncertainty into account for both choice and confidence also requires a task in which such decision flexibility stands to improve categorization performance. This condition is not met by traditional left versus right categorization tasks, in which the optimal choice boundary is the same (halfway between the means of the left and right category distributions) regardless of the level of uncertainty (SI Appendix, Fig. S1B). Optimal performance can be achieved simply by taking the difference between the evidence for left and the evidence for right, with no need to take uncertainty into account. The same principle applies to present versus absent detection tasks.

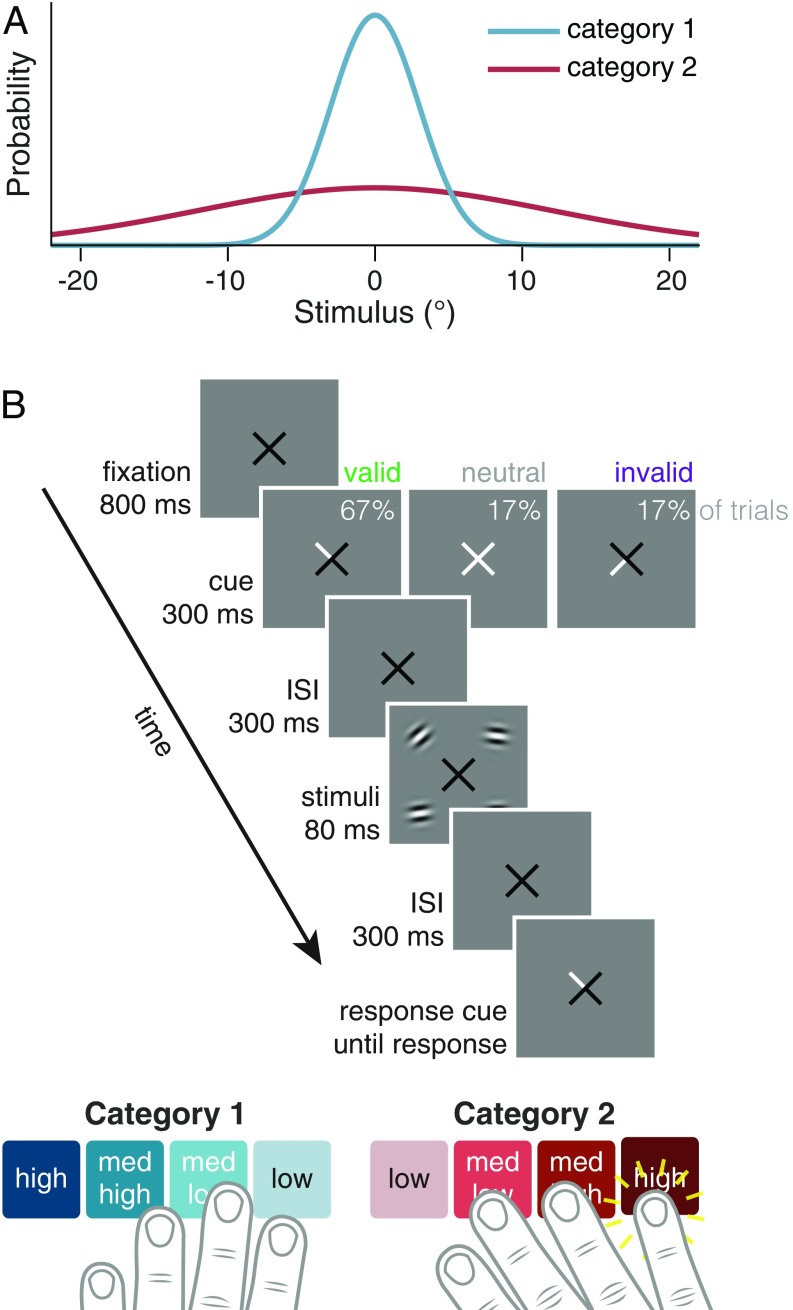

To overcome these limitations, we used a categorization task—which we call the embedded category task—specifically designed to test whether decision rules depend on uncertainty. Observers categorized stimuli as belonging to one of two distributions, which had the same mean but different variances (Fig. 1A). The task requires distinguishing a more specific from a more general perceptual category (4, 5), which is typical of object recognition (16, 17) (e.g., distinguishing a beagle from other dogs) and perceptual grouping (e.g., distinguishing collinear line segments from other line segment configurations) (18). In the embedded category task, the optimal choice boundaries shift as uncertainty increases, which allowed us to determine whether observers’ behavior tracked these shifts, along with analogous shifts in confidence boundaries.

Fig. 1.

Stimuli and task. (A) Stimulus orientation distributions for each category. (B) Trial sequence. Cue validity, the likelihood that a nonneutral cue would match the response cue, was 80%. Each button corresponds to a category choice and confidence rating.

Results

Observers performed the embedded category task in which they categorized drifting grating stimuli as drawn from either a narrow distribution around horizontal (SD = 3°, category 1) or a wide distribution around horizontal (SD = 12°, category 2) (Fig. 1A) (4). Because the category distributions overlap, maximum accuracy on the task is ∼80%. We trained observers on the category distributions in category training trials, in which a single stimulus was presented at the fovea, before the main experiment and in short, top-up blocks interleaved with the test blocks (see Materials and Methods). Accuracy on category training trials in test sessions was 71.9% ± 4.0%, indicating that observers knew the category distributions and could perform the task well.
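The ∼80% ceiling follows directly from the overlap of the two category distributions. The sketch below assumes category SDs of 3° and 12°, equal category priors, and no measurement noise; it finds the orientation at which the two category densities are equal (the optimal boundary) and computes the accuracy of an observer who reports category 1 inside that boundary. It is an illustrative calculation, not the authors' analysis code.

```python
import math
from statistics import NormalDist

SIGMA_1, SIGMA_2 = 3.0, 12.0  # assumed category SDs (deg), both centered on horizontal

# Noiseless optimal rule: report category 1 when |s| < b, where b is the
# orientation at which N(b; 0, SIGMA_1^2) equals N(b; 0, SIGMA_2^2).
b = math.sqrt(2 * math.log(SIGMA_2 / SIGMA_1) * SIGMA_1**2 * SIGMA_2**2
              / (SIGMA_2**2 - SIGMA_1**2))

# Equal category priors: average the two category-conditioned proportions correct.
p_correct_cat1 = 2 * NormalDist(0, SIGMA_1).cdf(b) - 1     # P(|s| < b | C = 1)
p_correct_cat2 = 2 * (1 - NormalDist(0, SIGMA_2).cdf(b))   # P(|s| > b | C = 2)
max_accuracy = 0.5 * (p_correct_cat1 + p_correct_cat2)

print(f"optimal boundary = ±{b:.2f} deg, maximum accuracy = {max_accuracy:.3f}")
```

The same boundary of roughly ±5° is the one referred to below when describing the "W"-shaped accuracy curve.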

Four stimuli were briefly presented on each trial, and a response cue indicated which stimulus to report. Observers reported both their category choice (category 1 vs. 2) and their degree of confidence on a 4-point scale using one of eight buttons, ranging from high-confidence category 1 to high-confidence category 2 (Fig. 1B). Using a single button press for choice and confidence prevented postchoice influences on the confidence judgment (19) and emphasized that confidence should reflect the observer’s perception rather than a preceding motor response. We manipulated voluntary (i.e., endogenous) attention on a trial-to-trial basis using a spatial cue that pointed to either one stimulus location (valid condition: the response cue matched the cue, 66.7% of trials; and invalid condition: it did not match, 16.7% of trials) or all four locations (neutral condition: 16.7% of trials) (Fig. 1B). Twelve observers participated, with about 2,000 trials per observer.
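These proportions imply the 80% cue validity given in the Fig. 1 caption: a single location is cued on 5/6 of trials, of which 4/6 are valid, and (4/6)/(5/6) = 0.8. The sketch below builds one hypothetical 144-trial block with exactly these proportions. Whether counts were fixed per block or sampled randomly is an assumption here, and `make_block` and its trial fields are illustrative names.

```python
import random
from typing import Optional

def make_block(n_trials: int = 144, seed: Optional[int] = None) -> list:
    """Hypothetical block: exactly 2/3 valid, 1/6 invalid, 1/6 neutral trials."""
    rng = random.Random(seed)
    n_invalid = n_neutral = n_trials // 6
    n_valid = n_trials - n_invalid - n_neutral
    conditions = ["valid"] * n_valid + ["invalid"] * n_invalid + ["neutral"] * n_neutral
    rng.shuffle(conditions)
    trials = []
    for condition in conditions:
        response_loc = rng.randrange(4)          # location the response cue points to
        if condition == "valid":
            precue = response_loc                # precue matches the response cue
        elif condition == "invalid":
            precue = rng.choice([loc for loc in range(4) if loc != response_loc])
        else:
            precue = None                        # neutral: cue points to all four locations
        trials.append({"condition": condition, "precue": precue,
                       "response_cue": response_loc})
    return trials

block = make_block(seed=0)
cued = [t for t in block if t["precue"] is not None]
validity = sum(t["precue"] == t["response_cue"] for t in cued) / len(cued)
print(f"cue validity among nonneutral trials: {validity:.2f}")
```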

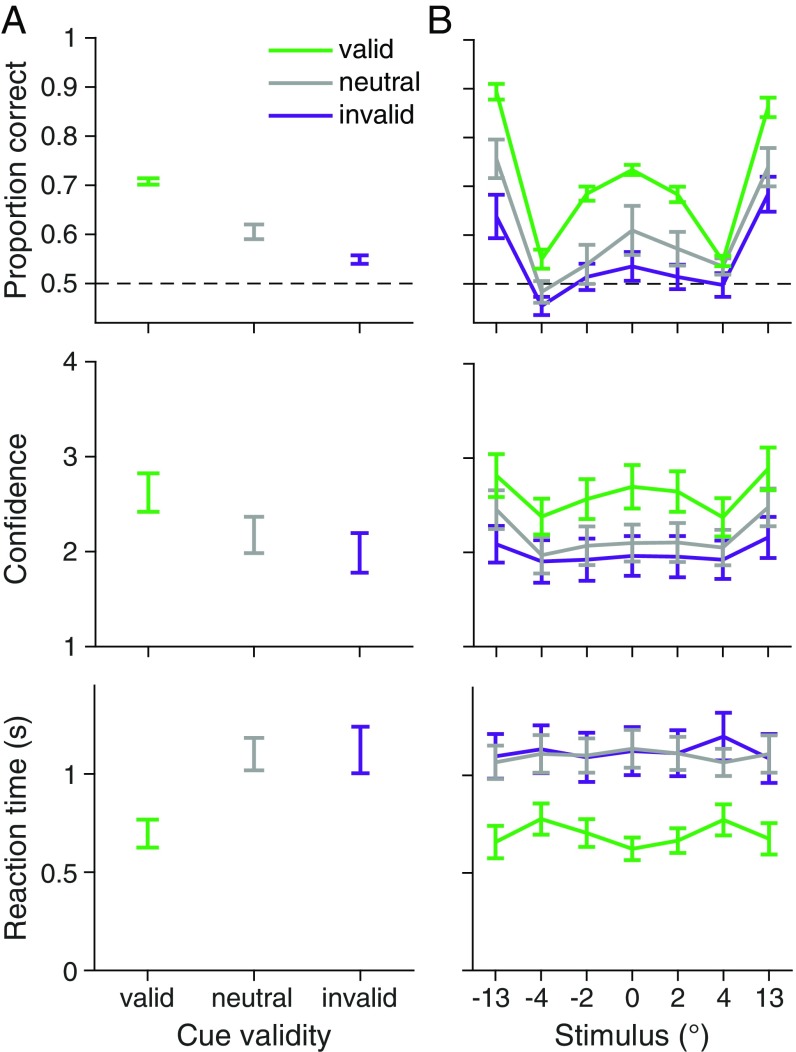

Cue validity increased categorization accuracy (one-way repeated-measures ANOVA), with higher accuracy following valid cues (two-tailed paired t test) and lower accuracy following invalid cues relative to neutral cues (Fig. 2A, Top). This pattern confirms that attention increased orientation sensitivity (e.g., refs. 11 and 20). Attention also increased confidence ratings and decreased reaction time, ruling out speed–accuracy tradeoffs as underlying the effect of attention on accuracy (Fig. 2A, Center and Bottom).

Fig. 2.

Behavioral data. n = 12 observers. Error bars show trial-weighted mean and SEM across observers. (A) Accuracy, confidence ratings, and reaction time as a function of cue validity. (B) As in A but as a function of cue validity and stimulus orientation. Stimulus orientation is binned to approximately equate the number of trials per bin. SI Appendix, Fig. S5 shows proportion category 1 choice data, and SI Appendix, Fig. S6 shows confidence and reaction time data in more detail.

Decision rules in this task are defined by how they map stimulus orientation and attention condition onto a response. We therefore plotted behavior as a function of these two variables. Overall performance was a “W”-shaped function of stimulus orientation (Fig. 2B, Top), reflecting the greater difficulty in categorizing a stimulus when its orientation was near the optimal category boundaries (at ∼±5° with no noise). Attention increased the sensitivity of responses to the stimulus orientation (Fig. 2B).

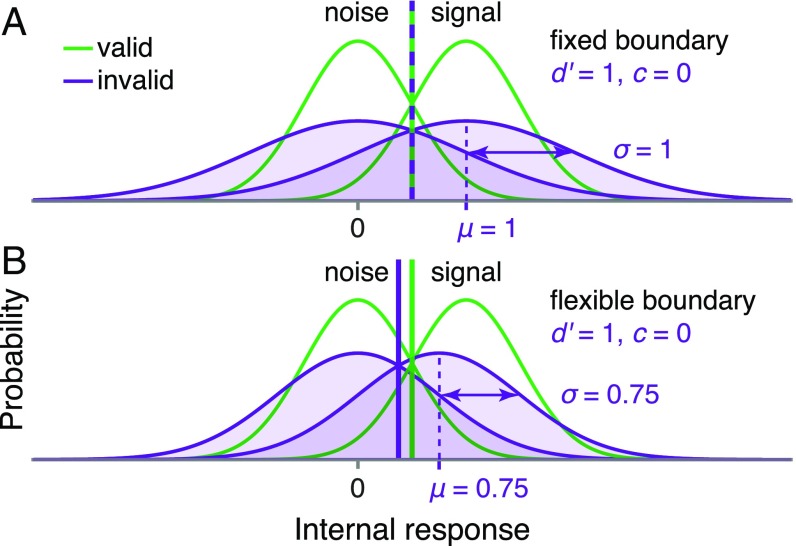

To assess whether observers changed their category and confidence decision boundaries to account for attention-dependent orientation uncertainty, we fit two main models. In one, the Bayesian model, decisions take uncertainty into account, whereas in the other, the Fixed model, decisions are insensitive to uncertainty. Both models assume that, for the stimulus of interest, the observer draws a noisy orientation measurement from a normal distribution centered on the true stimulus value with SD (i.e., uncertainty) dependent on attention. In the Bayesian model, decisions depend on the relative posterior probabilities of the two categories, leading the observer to shift his or her decision boundaries in measurement space, based on the attention condition (4, 5) (Fig. 3 A and B; SI Appendix, Fig. S2). The Bayesian model maximizes accuracy and produces confidence reports that are a function of the posterior probability of being correct. Note that observers could take uncertainty into account in other ways, but here we began with a normative approach by using a Bayesian model. In the Fixed model, observers use the same decision criteria, regardless of the attention condition (13, 15, 21–27) (i.e., they are fixed in measurement space; Fig. 3 A and B). We used Markov chain Monte Carlo (MCMC) sampling to fit the models to raw, trial-to-trial category and confidence responses from each observer separately (Materials and Methods and SI Appendix, Table S1).
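The contrast between the two models can be made concrete by computing the category boundary in measurement space for a Bayesian observer, the value of |x| at which both categories are equally probable, at several noise levels. The sketch assumes category SDs of 3° and 12°, equal category priors, and hypothetical noise SDs for the three cue conditions (not fitted values). A Fixed observer is modeled as keeping the boundary that is optimal for valid cues.

```python
import math
from statistics import NormalDist

SIGMA_1, SIGMA_2 = 3.0, 12.0  # assumed category SDs (deg)

def bayes_boundary(sigma: float) -> float:
    """|x| at which p(C=1 | x) = p(C=2 | x), given measurement noise SD sigma."""
    v1, v2 = SIGMA_1**2 + sigma**2, SIGMA_2**2 + sigma**2  # x | C ~ N(0, v_C)
    return math.sqrt(math.log(v2 / v1) * v1 * v2 / (v2 - v1))

def accuracy(boundary: float, sigma: float) -> float:
    """Accuracy of 'report category 1 iff |x| < boundary' at noise SD sigma."""
    p1 = 2 * NormalDist(0, math.hypot(SIGMA_1, sigma)).cdf(boundary) - 1
    p2 = 2 * (1 - NormalDist(0, math.hypot(SIGMA_2, sigma)).cdf(boundary))
    return 0.5 * (p1 + p2)

# Hypothetical measurement noise SDs (deg) for the three cue conditions.
noise = {"valid": 2.0, "neutral": 4.0, "invalid": 8.0}
fixed_b = bayes_boundary(noise["valid"])  # Fixed observer: one boundary for all conditions

for cond, sigma in noise.items():
    b = bayes_boundary(sigma)
    print(f"{cond:8s} Bayesian boundary = {b:5.2f}  accuracy = {accuracy(b, sigma):.3f}  "
          f"Fixed accuracy = {accuracy(fixed_b, sigma):.3f}")
```

The Bayesian boundary moves outward as noise grows, which is the signature the model comparison and the Free model analysis look for; a Fixed observer forgoes this adjustment and loses accuracy when attention is withdrawn.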

Fig. 3.

Model schematics, fits, and fit comparison. (A) Schematic of Bayesian (Left) and Fixed (Right) models, which were fit separately for each observer. As attention decreases, uncertainty (measurement noise SD) increases, and orientation measurement likelihoods (blue and red curves) widen (28). In the Bayesian model, choice and confidence boundaries change as a function of uncertainty. In the Fixed model, boundaries do not depend on uncertainty. Colors indicate category and confidence response (color code in Fig. 1B). (B) Decision rules for Bayesian and Fixed models show the mappings from orientation measurement and uncertainty to category and confidence responses. Horizontal lines indicate the uncertainty levels used in A; the regions intersecting with a horizontal line match the regions in the corresponding plot in A. (C) Model fits to response as a function of orientation and cue validity. Response is an 8-point scale ranging from high confidence category 1 to high confidence category 2, with colors corresponding to those in Fig. 1B; only the middle six responses are shown. Error bars show mean and SEM across observers. Shaded regions are mean and SEM of model fits (see SI Appendix, section S3.8). Although mean response is shown here, models were fit to raw, trial-to-trial data. Stimulus orientation is binned to approximately equate the number of trials per bin. (D) Model comparison. Black bars represent individual observer LOO differences of Bayesian from Fixed. Negative values indicate that Bayesian had a higher (better) LOO score than Fixed. Blue line and shaded region show median and 95% confidence interval (CI) of bootstrapped mean differences across observers.

Observers’ decisions took attention-dependent uncertainty into account. The Bayesian model captured the data well (Fig. 3C) and substantially outperformed the Fixed model (Fig. 3 C and D), which had systematic deviations from the data. Although the fit depended on the full dataset, note deviations of the Fixed fit from the data near zero tilt and at large tilts in Fig. 3C, including failure to reproduce the cross-over pattern of the three attention condition curves that is present in the data and the Bayesian fit. To compare models, we used an approximation of leave-one-out cross-validated log likelihood that uses Pareto-smoothed importance sampling (PSIS-LOO; henceforth LOO) (29). Bayesian outperformed Fixed by an LOO difference (median and 95% CI of bootstrapped mean differences across observers) of 102 [45, 167]. This implies that the attentional state is available to the decision process and is incorporated into probabilistic representations used to make the decision.
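LOO scores are computed from the log likelihood of each trial under many posterior samples. As a simplified sketch, the function below computes plain importance-sampling LOO; PSIS-LOO (29) additionally stabilizes the largest importance weights by fitting a generalized Pareto distribution to them, a step omitted here. The array layout and the synthetic log likelihoods in the usage lines are assumptions for illustration; a full PSIS-LOO implementation is available in libraries such as ArviZ (`arviz.loo`).

```python
import numpy as np

def is_loo(log_lik: np.ndarray) -> float:
    """Importance-sampling LOO estimate of the expected log predictive density.

    log_lik has shape (n_samples, n_trials): log p(r_i | theta_s) for each
    posterior sample s and trial i. Per trial, p(r_i | r_-i) is approximated by
    the harmonic mean of p(r_i | theta_s) over samples.
    """
    n_samples = log_lik.shape[0]
    neg = -log_lik
    m = neg.max(axis=0)  # stabilize the log-sum-exp per trial
    log_mean_inv = m + np.log(np.exp(neg - m).sum(axis=0)) - np.log(n_samples)
    return float(-log_mean_inv.sum())

# Synthetic per-trial log likelihoods for two hypothetical models (not study data).
rng = np.random.default_rng(0)
ll_bayes = rng.normal(-1.0, 0.05, size=(2000, 500))
ll_fixed = rng.normal(-1.1, 0.05, size=(2000, 500))
print(f"LOO difference (Bayes - Fixed): {is_loo(ll_bayes) - is_loo(ll_fixed):.1f}")
```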

Although our main question was whether observers’ decisions took uncertainty into account, our methods also allowed us to determine whether Bayesian computations were necessary to produce the behavioral data or whether heuristic strategies of accounting for uncertainty would suffice. We tested two models with heuristic decision rules in which the decision boundaries vary as linear or quadratic functions of uncertainty, approximating the Bayesian boundaries (SI Appendix, Fig. S3A). The Linear and Quadratic models both outperformed the Fixed model (LOO differences of 124 [77, 177] and 129 [65, 198], respectively; SI Appendix, Fig. S3 B and C). The best model, quantitatively, was Quadratic, similar to previous findings with contrast-dependent uncertainty (4, 5). SI Appendix, Table S2 shows all pairwise comparisons of the models. Model recovery showed that our models were meaningfully distinguishable (SI Appendix, Fig. S4). Decision rules therefore changed with attention, but Bayesian computations were not required to explain these changes.

We next asked whether category decision boundaries—regardless of confidence—shift to account for attention-dependent uncertainty. Perhaps, for example, performance of the Bayesian model was superior not because observers changed their categorization behavior but because they rated their confidence based on the attention condition, which they knew explicitly. Given the mixed findings on the relation between attention and confidence (30–33) and the proposal that perceptual decisions do not account for attention (13), such a finding would not be trivial (see Discussion), but it would warrant a different interpretation than if category decision boundaries also depended on attention. We fit the four models to the category choice data only and again rejected the Fixed model (SI Appendix, Fig. S5 A and B and Tables S3 and S4). Therefore, category criteria, independent of confidence criteria, varied as a function of attention-dependent uncertainty.

Finally, we directly tested for decision boundary shifts—the key difference between the Bayesian and Fixed models—by estimating each observer’s category decision boundaries nonparametrically. To do so, we fit the category choice data with a Free model in which the category decision boundaries varied freely and independently for each attention condition. The estimated boundaries differed between valid and invalid trials (Fig. 4 and SI Appendix, Fig. S5C), with a mean difference of 7. (SD = ), two-tailed paired test, , . Most observers showed a systematic outward shift of category decision boundaries from valid to neutral to invalid conditions, confirming that their choices accounted for uncertainty.

Fig. 4.

Free model analysis. Group mean MCMC parameter estimates (crosses) show systematic changes in the category decision boundary across attention conditions. The same pattern can be seen for individual observers: Each gray line corresponds to a different observer, with connected points representing the estimates for valid, neutral, and invalid attention conditions. Each point represents a pair of parameter estimates: uncertainty and category decision boundary for a specific attention condition.

Discussion

Using an embedded category task designed to distinguish fixed from flexible decision rules, we found that human perceptual decision-making takes into account uncertainty due to spatial attention, when uncertainty is relevant for performance. These findings indicate flexible decision behavior that is responsive to attention—an internal factor that affects the uncertainty of stimulus representations.

Our findings of flexible decision boundaries run counter to a previous proposal that observers use a fixed decision rule under varying attentional conditions (13–15, 21). This idea originated from a more general “unified criterion” proposal (25, 26), which asserts that in a display with multiple stimuli, observers adopt a single, fixed decision boundary (the unified criterion) for all items (22–27). The unified criterion proposal implies a rigid, suboptimal mechanism for perceptual decision-making in real-world complex scenes, in which uncertainty can vary due to a variety of factors.

Although the unified criterion proposal has served to explain experimental findings (13–15, 21–27, 34), it is impossible to infer decision boundaries from behavior in the signal detection theory (SDT) tasks used previously (35). In theory, it is always possible to explain behavioral data from such tasks with a fixed decision rule, as long as the means and variances of the internal measurement distributions are free to vary (SI Appendix, section S1).

This issue is particularly thorny for attention studies: SDT works with arbitrary, internal units of “evidence” for one choice or another, and attention could change the means, the variances, or both properties of the internal evidence distributions (10, 11, 36). As a result, the decision boundaries are underconstrained: A fixed decision boundary could be mistaken for a flexible one, and vice versa (Fig. 5). Relatedly, in a perceptual averaging task, confidence data apparently generated by a fixed decision rule can also be explained by a Bayesian decision rule with small underestimations of the internal measurement noise (37). These considerations underscore the importance of doing model comparison even for relatively simple decision models. It may be, then, that decision boundaries did change with attention in previous studies, but these changes were not inferred for methodological reasons.
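The ambiguity can be illustrated with an equal-variance Gaussian detection model (hypothetical numbers, not the configuration drawn in Fig. 5). Suppose that with valid cues the observer says "present" whenever internal evidence exceeds 1.0 on a unit-variance scale. For invalid cues, scenario A keeps that criterion while attention lowers the signal mean; scenario B doubles the scale of all internal quantities, so the criterion moves to 2.0. Both produce identical hit and false-alarm rates, hence identical d′ and c.

```python
from statistics import NormalDist

def rates(noise_mean, signal_mean, sd, criterion):
    """Hit and false-alarm rates for 'present' whenever evidence exceeds criterion."""
    hit = 1 - NormalDist(signal_mean, sd).cdf(criterion)
    fa = 1 - NormalDist(noise_mean, sd).cdf(criterion)
    return hit, fa

def dprime_c(hit, fa):
    """Equal-variance SDT sensitivity d' and criterion c from observed rates."""
    z = NormalDist().inv_cdf
    return z(hit) - z(fa), -0.5 * (z(hit) + z(fa))

valid_criterion = 1.0  # hypothetical criterion with valid cues (unit-variance evidence)

# Invalid cues, scenario A (fixed rule): signal mean drops, criterion unchanged.
fixed_rule = rates(noise_mean=0.0, signal_mean=1.2, sd=1.0, criterion=valid_criterion)
# Invalid cues, scenario B (flexible rule): evidence scale doubles, criterion moves.
flexible_rule = rates(noise_mean=0.0, signal_mean=2.4, sd=2.0, criterion=2 * valid_criterion)

print("fixed rule:    hit = %.4f, fa = %.4f" % fixed_rule)
print("flexible rule: hit = %.4f, fa = %.4f" % flexible_rule)
print("d', c = %.3f, %.3f" % dprime_c(*fixed_rule))
```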

Fig. 5.

Limitations of standard SDT tasks. SDT tasks such as the detection task illustrated here cannot distinguish fixed from flexible decision rules when the means and variances of internal measurement distributions can also vary across conditions. (A) Fixed and (B) flexible decision rules give the same behavioral data (perceptual sensitivity, d′, and criterion, c) in the two depicted scenarios, in which attention affects the measurement distributions differently (compare the invalid distributions in A and B). An experimenter could not infer from the behavioral data which scenario occurred.

Alternatively, it may be that decision boundaries truly did not change in previous studies, and task differences underlie our differing results. Studies supporting the unified criterion proposal used either detection or orthogonal discrimination (13, 15, 21–27, 34), which is often used as a proxy for detection (10, 38). In these tasks, the stimuli are low contrast relative to either a blank screen or a noisy background, and performance is limited by low signal-to-noise ratio. In our categorization task, by comparison, although maximum performance is capped due to overlap of the category-conditioned stimulus distributions, variations in performance depend on the precision of orientation representations, just as in a left vs. right fine discrimination task. Therefore, it may be that observers adjust decision boundaries defined with respect to precise features (e.g., What is the exact orientation?) but not boundaries defined with respect to signal strength (e.g., Is anything present at all?).

Other task differences could play a role as well. Some experiments matched perceptual sensitivity for different attention conditions by changing stimulus contrast, so attention and physical stimulus properties covaried (13, 15). For the metacognitive report, we asked for confidence rather than visibility (13); these subjective measures are known to differ (39). Finally, one study (15) using a signal detection approach suggested that observers rely insufficiently on an instructed prior, especially for unattended stimuli. The question of how attention affects the use of a prior is different from the current question, as incorporating a prior requires a cognitive step beyond accounting for uncertainty in the perceptual representation. In the future, it will be interesting to examine how decision boundaries relate to priors, attention, and uncertainty more generally in this task and other tasks in which absolute decision boundaries can be uniquely inferred.

Despite attention’s large influence on visual perception (8), only a handful of studies have examined its influence on visual confidence, with mixed results. Two studies found that voluntary attention increased confidence (30, 31), one found that voluntary but not involuntary attention increased confidence (33), and another found no effect of voluntary attention on confidence (32). This last result has been attributed to response speed pressures (30, 33). Three other studies suggested an inverse relation between attention and confidence, though these used rather different attention manipulations and measures. One study reported higher confidence for uncued compared with cued error trials (40), one found higher confidence for stimuli with incongruent compared with congruent flankers (41), and a third found that lower fMRI BOLD activation in the dorsal attention network correlated with higher confidence (21). Here, experimentally manipulating spatial attention without response speed pressure revealed a positive, approximately Bayesian, relation between attention and confidence.

The mechanisms for decision adjustment under attention-dependent uncertainty could be mediated by effective contrast (10, 42, 43). Alternatively, attention-dependent decision-making may rely on higher order monitoring of attentional state. For example, the observer could consciously adjust a decision depending on whether he or she was paying attention. Future studies will be required to distinguish between these more bottom-up or top-down mechanisms.

Our finding that human observers incorporate attention-dependent uncertainty into perceptual categorization and confidence reports in a statistically appropriate fashion points to the question of what other kinds of internal states can be incorporated into perceptual decision-making. There is no indication, for example, that direct stimulation of sensory cortical areas leads to adjustments of confidence and visibility reports (21, 44, 45), suggesting that the system is not responsive to every change to internal noise. It may be that the system is more responsive to states that are internally generated or that have consistent behavioral relevance. Attention is typically spread unevenly across multiple objects in a visual scene, so the ability to account for attention likely improves perceptual decisions in natural vision. It remains to be seen whether the perceptual decision-making system is responsive to other cognitive or motivational states.

Materials and Methods

Extended materials and methods are available in SI Appendix.

Observers.

Twelve observers (seven female) participated in the study. Observers received $10 per 40- to 60-min session, plus a completion bonus of $25. The experiments were approved by the University Committee on Activities Involving Human Subjects of New York University. Informed consent was given by each observer before the experiment. All observers were naïve to the purpose of the experiment, and none were experienced psychophysical observers.

Apparatus, Stimuli, and Task.

Observers were seated in a dark room, at a viewing distance of 57 cm from the screen, with their head stabilized by a chin-and-head rest. Stimuli were presented on a gamma-corrected 100 Hz, 21-inch display (Model Sony GDM-5402) with a gray background (60 cd/m²). Stimuli were drifting Gabors with spatial frequency of 0.8 cycles per degree of visual angle (dva), speed of 6 cycles per second, Gaussian envelope with SD 0.8 dva, and randomized starting phase. In category training, the stimuli were positioned at fixation. In all other blocks, one stimulus was positioned in each of the four quadrants of the screen (45°, 135°, 225°, and 315°), 5 dva from fixation. On each trial, each of the four stimuli was drawn independently and with equal probability from one of the two category distributions. The main task is shown in Fig. 1 and described in Results. Online eye tracking (Eyelink 1000) was used to ensure fixation.
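The stimulus parameters above are enough to sketch a single frame of a drifting Gabor and the four stimulus positions. This is a minimal NumPy sketch, not the authors' presentation code: the pixels-per-degree value (`PPD`) and the patch size (`half_size_dva`, three envelope SDs) are hypothetical, and orientation is measured counterclockwise from horizontal.

```python
import numpy as np

PPD = 40.0          # hypothetical pixels per degree of visual angle
REFRESH_HZ = 100.0  # display refresh rate from the text

def gabor_frame(t_s, orientation_deg, contrast, phase0=0.0, ppd=PPD,
                sf_cpd=0.8, speed_hz=6.0, sigma_dva=0.8, half_size_dva=2.4):
    """Contrast image (values in [-contrast, contrast]) of a drifting Gabor at time t_s."""
    n = int(round(2 * half_size_dva * ppd))
    coords = (np.arange(n) - (n - 1) / 2) / ppd        # dva, centered on the patch
    x, y = np.meshgrid(coords, -coords)                 # y increases upward
    theta = np.deg2rad(orientation_deg)
    u = -x * np.sin(theta) + y * np.cos(theta)          # axis perpendicular to the stripes
    carrier = np.cos(2 * np.pi * (sf_cpd * u - speed_hz * t_s) + phase0)
    envelope = np.exp(-(x**2 + y**2) / (2 * sigma_dva**2))
    return contrast * carrier * envelope

def stimulus_centers(ecc_dva=5.0, angles_deg=(45, 135, 225, 315)):
    """(x, y) centers in dva of the four stimuli, one per screen quadrant."""
    return [(ecc_dva * np.cos(np.deg2rad(a)), ecc_dva * np.sin(np.deg2rad(a)))
            for a in angles_deg]

# A 300-ms presentation at 100 Hz is 30 frames; each frame drifts 0.06 cycles.
frames = [gabor_frame(k / REFRESH_HZ, orientation_deg=10.0, contrast=0.35)
          for k in range(30)]
```

Displayed luminance would then be the background luminance multiplied by (1 + frame).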

Experimental Procedure.

Each observer completed seven sessions: two staircase sessions (training, contrast staircase, prescreening) followed by five test sessions (main experiment). Observers received instructions and training for each task (see SI Appendix).

Staircase Sessions.

Each staircase session consisted of three category training blocks (72 trials each) and three category/attention testing-with-staircase blocks (144 trials each), in alternation.

In category training blocks, observers learned the stimulus distributions. On each trial, category 1 or 2 was selected with equal probability, the stimulus orientation was drawn from the corresponding stimulus distribution, and the stimulus appeared at fixation for 300 ms at 35% contrast. Observers reported category 1 or 2 and received accuracy feedback after each trial.
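A category training trial as described above can be simulated in a few lines, assuming category SDs of 3° and 12° centered on horizontal. The noiseless ideal observer used for feedback here is a hypothetical reference, not a model from this paper; over many trials it should land near the ∼80% ceiling, which puts the observers' training accuracy in context.

```python
import random

CATEGORY_SD = {1: 3.0, 2: 12.0}  # assumed category SDs (deg)

def training_trial(rng: random.Random):
    """Pick a category with equal probability, then draw its orientation (deg)."""
    category = rng.choice((1, 2))
    orientation = rng.gauss(0.0, CATEGORY_SD[category])
    return category, orientation

def ideal_response(orientation: float, boundary: float = 5.16) -> int:
    """Hypothetical noiseless optimal rule: category 1 when |orientation| < boundary."""
    return 1 if abs(orientation) < boundary else 2

rng = random.Random(0)
trials = [training_trial(rng) for _ in range(20_000)]
feedback = ["correct" if ideal_response(s) == c else "incorrect" for c, s in trials]
accuracy = feedback.count("correct") / len(feedback)
print(f"ideal-observer training accuracy: {accuracy:.3f}")
```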

In category/attention testing-with-staircase blocks, the trial sequence was identical to the main task (Fig. 1B), except observers reported only category choice. There was no trial-to-trial feedback on this or any other type of attention block.

We used an adaptive staircase procedure (46, 47) to estimate psychometric functions for performance accuracy as a function of contrast, separately for valid, neutral, and invalid trials. Simulations we conducted before starting the study showed that without a sufficiently large accuracy difference between valid and invalid trials, our models would be indistinguishable. Therefore, we used the psychometric function posteriors to determine whether the observer was eligible for the test sessions and, if so, to determine the stimulus contrast for that observer (see SI Appendix). Twenty-eight observers were prescreened, 13 were invited to participate in the main study, and 1 dropped out, leaving 12 observers, our target.

Test Sessions.

Each test session consisted of three category training blocks (identical to staircase sessions but with observer-specific stimulus contrast) and three confidence/attention testing blocks (144 trials each), in alternation. These testing blocks were the main experimental blocks; the trial sequence is shown in Fig. 1B.

Modeling Procedures.

The modeling procedures were similar to those used by Adler and Ma (5).

We used free parameters to characterize $\sigma$, the SD of orientation measurement noise, for all three attention conditions: $\sigma_\text{valid}$, $\sigma_\text{neutral}$, and $\sigma_\text{invalid}$. We added orientation-dependent noise (48).

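We coded all responses as $r \in \{1, 2, \ldots, 8\}$, with each value indicating category and confidence, as in Fig. 1B. The probability of a single trial $i$ is equal to the probability mass of the internal measurement distribution $p(x \mid s_i, \sigma_i) = \mathcal{N}(x; s_i, \sigma_i^2)$ in a range corresponding to the observer’s response $r_i$. We find the boundaries $(b_{r_i-1,\sigma_i}, b_{r_i,\sigma_i})$ in measurement space, as defined by the fitting model and parameters $\boldsymbol{\theta}$, and then compute the probability mass of the measurement distribution between the boundaries:

$$p(r_i \mid s_i, \sigma_i, \boldsymbol{\theta}) = \int_{b_{r_i-1,\sigma_i} \le |x| < b_{r_i,\sigma_i}} \mathcal{N}(x; s_i, \sigma_i^2)\, dx, \tag{1}$$

where $b_{0,\sigma} = 0$ and $b_{8,\sigma} = \infty$. Because both category distributions are centered on horizontal, the boundaries apply to the magnitude $|x|$ of the measurement, and response 1 (high-confidence category 1) corresponds to the smallest magnitudes.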

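To obtain the log likelihood of the dataset, given a model with parameters $\boldsymbol{\theta}$, we compute the sum of the log probability for every trial $i$, where $N_\text{trials}$ is the total number of trials:

$$\log p(\text{data} \mid \boldsymbol{\theta}) = \sum_{i=1}^{N_\text{trials}} \log p(r_i \mid s_i, \sigma_i, \boldsymbol{\theta}). \tag{2}$$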

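To fit the model, we sampled from the posterior distribution over parameters, $p(\boldsymbol{\theta} \mid \text{data})$. To sample from the posterior, we use an expression for the log posterior

$$\log p(\boldsymbol{\theta} \mid \text{data}) = \log p(\text{data} \mid \boldsymbol{\theta}) + \log p(\boldsymbol{\theta}) + \text{constant}, \tag{3}$$

where $\log p(\text{data} \mid \boldsymbol{\theta})$ is given in Eq. 2. We took uniform (or, for parameters that were standard deviations, log-uniform) priors over reasonable, sufficiently large ranges (49), which we chose before fitting any models. We sampled from the probability distribution using an MCMC method, slice sampling (50) (see SI Appendix).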

To compare model fits while accounting for the complexity of each model, we computed the LOO (29). A bootstrapping procedure was used to compute the group mean with CIs for LOO score differences between models.
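The bootstrap over observers can be sketched as resampling the per-observer LOO differences with replacement, averaging each resample, and reading off the median and percentile interval of those means. The 12 difference values below are made up for illustration and are not the study's data; the number of bootstrap samples is an assumption.

```python
import numpy as np

def bootstrap_mean_ci(values, n_boot=10_000, ci=95.0, seed=0):
    """Median and percentile CI of bootstrapped means, resampling observers."""
    values = np.asarray(values, dtype=float)
    rng = np.random.default_rng(seed)
    idx = rng.integers(0, len(values), size=(n_boot, len(values)))
    boot_means = values[idx].mean(axis=1)
    half = (100.0 - ci) / 2
    lo, med, hi = np.percentile(boot_means, [half, 50.0, 100.0 - half])
    return med, (lo, hi)

# Hypothetical per-observer LOO differences (12 observers), for illustration only.
diffs = [210.0, 35.0, 150.0, 80.0, 5.0, 120.0, 60.0, 95.0, 190.0, 40.0, 130.0, 110.0]
med, (lo, hi) = bootstrap_mean_ci(diffs)
print(f"mean LOO difference: {med:.0f} [{lo:.0f}, {hi:.0f}]")
```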

Bayesian Model.

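The Bayesian model generates category and confidence responses based on the log posterior ratio, $d$, of the two categories:

$$d = \log \frac{p(C = 1 \mid x)}{p(C = 2 \mid x)}. \tag{4}$$

Given the orientation measurement likelihoods, $p(x \mid s) = \mathcal{N}(x; s, \sigma^2)$, and marginalizing over the stimulus $s$, this is equivalent to

$$d = \frac{1}{2}\log\frac{\sigma^2 + \sigma_2^2}{\sigma^2 + \sigma_1^2} - \frac{\sigma_2^2 - \sigma_1^2}{2\left(\sigma^2 + \sigma_1^2\right)\left(\sigma^2 + \sigma_2^2\right)}\, x^2, \tag{5}$$

where $\sigma_1$ and $\sigma_2$ are the SDs of the category 1 and category 2 stimulus distributions, and the two categories are equally likely a priori.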

The observer compares $d$ to a set of decision boundaries $\mathbf{k} = (k_0, k_1, \ldots, k_8)$, which define the eight possible category and confidence responses. $k_4$ is the category boundary and captures possible category bias, and it is the only boundary parameter in models of category choice only. $k_0$ is fixed at $-\infty$ and $k_8$ is fixed at $\infty$, leaving seven free boundary parameters: $(k_1, k_2, \ldots, k_7)$.

In the Bayesian models with noise, we assume that, for each trial, there is an added Gaussian noise term on $d$, $\eta_d$, where $\eta_d \sim \mathcal{N}(0, \sigma_d^2)$ and $\sigma_d$ is a free parameter.
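The Bayesian decision rule can be sketched as: compute $d$ from the measurement and the current noise level, optionally add Gaussian noise with SD $\sigma_d$, and count how many of the boundaries $k_1, \ldots, k_7$ it exceeds. The sketch assumes category SDs of 3° and 12° and a hypothetical symmetric boundary set; the convention that larger $d$ maps to lower-numbered (category 1) responses is an assumption consistent with Fig. 1B.

```python
import math
import random

SIGMA_1, SIGMA_2 = 3.0, 12.0  # assumed category SDs (deg)

def log_posterior_ratio(x: float, sigma: float) -> float:
    """d = log p(C=1 | x) - log p(C=2 | x), equal category priors."""
    v1, v2 = SIGMA_1**2 + sigma**2, SIGMA_2**2 + sigma**2
    return 0.5 * math.log(v2 / v1) - 0.5 * x**2 * (1 / v1 - 1 / v2)

def bayes_response(x, sigma, k, sigma_d=0.0, rng=None):
    """Map d (plus optional N(0, sigma_d^2) noise) to a response 1..8.

    k = (k_1, ..., k_7) in increasing order; k_4 is the category boundary.
    Responses 1-4 are category 1 (1 = highest confidence), 5-8 are category 2.
    """
    d = log_posterior_ratio(x, sigma)
    if sigma_d > 0:
        d += (rng or random).gauss(0.0, sigma_d)
    n_exceeded = sum(d > kk for kk in k)  # 0..7
    return 8 - n_exceeded

K = (-3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0)  # hypothetical, unbiased (k_4 = 0)
# The same 6-deg measurement is called category 2 at low noise, category 1 at high noise.
print(bayes_response(6.0, 1.0, K), bayes_response(6.0, 8.0, K))
```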

Fixed Model.

The observer compares the measurement $x$ to a set of boundaries that are not dependent on $\sigma$. We fit free parameters $\mathbf{b} = (b_1, \ldots, b_7)$ and use measurement boundaries $b_{r,\sigma} = b_r$.

Linear and Quadratic Models.

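The observer compares the measurement $x$ to a set of boundaries that are linear or quadratic functions of $\sigma$. We fit free parameters $\mathbf{k}$ and $\mathbf{m}$ and use measurement boundaries $b_{r,\sigma} = k_r + m_r \sigma$ (Linear) or $b_{r,\sigma} = k_r + m_r \sigma^2$ (Quadratic).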
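The heuristic models differ only in how the boundaries on $|x|$ depend on $\sigma$, so each can be written as a small function returning $(b_0, \ldots, b_8)$ with $b_0 = 0$ and $b_8 = \infty$. The parameter values in the usage lines are hypothetical. Because a fitted slope could in principle make boundaries cross at some $\sigma$, the sketch includes a hypothetical ordering check that a fitting routine might use to reject such parameter sets.

```python
import math
from typing import Sequence, Tuple

Bounds = Tuple[float, ...]

def fixed_boundaries(b: Sequence[float], sigma: float) -> Bounds:
    """Fixed: b_{r,sigma} = b_r (sigma is accepted but deliberately ignored)."""
    return (0.0, *b, math.inf)

def linear_boundaries(k: Sequence[float], m: Sequence[float], sigma: float) -> Bounds:
    """Linear: b_{r,sigma} = k_r + m_r * sigma."""
    return (0.0, *(kr + mr * sigma for kr, mr in zip(k, m)), math.inf)

def quadratic_boundaries(k: Sequence[float], m: Sequence[float], sigma: float) -> Bounds:
    """Quadratic: b_{r,sigma} = k_r + m_r * sigma**2."""
    return (0.0, *(kr + mr * sigma**2 for kr, mr in zip(k, m)), math.inf)

def is_ordered(bounds: Bounds) -> bool:
    """Hypothetical validity check: boundaries must increase with response index."""
    return all(lo < hi for lo, hi in zip(bounds, bounds[1:]))

K_EX = (1.0, 2.0, 3.0, 5.0, 7.0, 10.0, 14.0)  # hypothetical intercepts (deg)
M_EX = (0.1, 0.2, 0.3, 0.5, 0.7, 1.0, 1.4)    # hypothetical slopes
for sigma in (2.0, 4.0, 8.0):
    print(sigma, linear_boundaries(K_EX, M_EX, sigma)[4], quadratic_boundaries(K_EX, M_EX, sigma)[4])
```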

Free Model.

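To estimate the category boundaries with minimal assumptions, we fit free parameters $k_\text{valid}$, $k_\text{neutral}$, and $k_\text{invalid}$ and used measurement boundaries $b_{4,\sigma_a} = k_a$ for each attention condition $a$.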

Data and Code Availability.

All data and code used for running experiments, model fitting, and plotting are available at https://doi.org/10.5281/zenodo.1422804.


Acknowledgments

The authors thank Roshni Lulla and Gordon C. Bill for assistance with data collection and helpful discussions; Luigi Acerbi for helpful ideas and tools related to model fitting and model comparison; and Stephanie Badde, Michael Landy, and Christopher Summerfield for comments on the manuscript. This material is based upon work supported by the National Science Foundation Graduate Research Fellowship under Award DGE-1342536 (to W.T.A.) and by the National Eye Institute of the National Institutes of Health under Awards T32EY007136 (to New York University) and F32EY025533 (to R.N.D.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

Data deposition: All data and code used for running experiments, model fitting, and plotting are available at https://doi.org/10.5281/zenodo.1422804.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1717720115/-/DCSupplemental.

References

1. Knill DC, Richards W, editors. Perception as Bayesian Inference. Cambridge Univ Press; Cambridge, UK: 1996.

2. Trommershäuser J, Kording K, Landy MS, editors. Sensory Cue Integration. Oxford Univ Press; Oxford: 2011.

3. Ma WJ, Jazayeri M. Neural coding of uncertainty and probability. Annu Rev Neurosci. 2014;37:205–220. doi: 10.1146/annurev-neuro-071013-014017.

4. Qamar AT, et al. Trial-to-trial, uncertainty-based adjustment of decision boundaries in visual categorization. Proc Natl Acad Sci USA. 2013;110:20332–20337. doi: 10.1073/pnas.1219756110.

5. Adler WT, Ma WJ. 2018. Comparing Bayesian and non-Bayesian accounts of human confidence reports. bioRxiv:093203. Preprint, posted December 11, 2016.

6. Mamassian P. Visual confidence. Annu Rev Vis Sci. 2016;2:459–481. doi: 10.1146/annurev-vision-111815-114630.

7. Fleming SM, Daw ND. Self-evaluation of decision-making: A general Bayesian framework for metacognitive computation. Psych Rev. 2017;124:91–114. doi: 10.1037/rev0000045.

8. Carrasco M. Visual attention: The past 25 years. Vis Res. 2011;51:1484–1525. doi: 10.1016/j.visres.2011.04.012.

9. Reynolds JH, Chelazzi L. Attentional modulation of visual processing. Annu Rev Neurosci. 2004;27:611–647. doi: 10.1146/annurev.neuro.26.041002.131039.

10. Carrasco M, Penpeci-Talgar C, Eckstein M. Spatial covert attention increases contrast sensitivity across the CSF: Support for signal enhancement. Vis Res. 2000;40:1203–1215. doi: 10.1016/s0042-6989(00)00024-9.

11. Lu ZL, Dosher BA. External noise distinguishes attention mechanisms. Vis Res. 1998;38:1183–1198. doi: 10.1016/s0042-6989(97)00273-3.

12. Anton-Erxleben K, Carrasco M. Attentional enhancement of spatial resolution: Linking behavioural and neurophysiological evidence. Nat Rev Neurosci. 2013;14:188–200. doi: 10.1038/nrn3443.

13. Rahnev D, Maniscalco B, Graves T, Huang E, de Lange FP, Lau H. Attention induces conservative subjective biases in visual perception. Nat Neurosci. 2011;14:1513–1515. doi: 10.1038/nn.2948.

14. Rahnev DA, Bahdo L, de Lange FP, Lau H. Prestimulus hemodynamic activity in dorsal attention network is negatively associated with decision confidence in visual perception. J Neurophysiol. 2012;108:1529–1536. doi: 10.1152/jn.00184.2012.

15. Morales J, et al. Low attention impairs optimal incorporation of prior knowledge in perceptual decisions. Atten Percept Psychophys. 2015;77:2021–2036. doi: 10.3758/s13414-015-0897-2.

16. Liu Z, Knill DC, Kersten D. Object classification for human and ideal observers. Vis Res. 1995;35:549–568. doi: 10.1016/0042-6989(94)00150-k.

17. Sanborn AN, Griffiths TL, Shiffrin RM. Uncovering mental representations with Markov Chain Monte Carlo. Cogn Psychol. 2010;60:63–106. doi: 10.1016/j.cogpsych.2009.07.001.

18. Geisler WS, Perry JS. Contour statistics in natural images: Grouping across occlusions. Vis Neurosci. 2009;26:109–121. doi: 10.1017/S0952523808080875.

19. Navajas J, Bahrami B, Latham PE. Post-decisional accounts of biases in confidence. Curr Opin Behav Sci. 2016;11:55–60.

20. Cameron EL, Tai JC, Carrasco M. Covert attention affects the psychometric function of contrast sensitivity. Vis Res. 2002;42:949–967. doi: 10.1016/s0042-6989(02)00039-1.

21. Rahnev DA, Maniscalco B, Luber B, Lau H, Lisanby SH. Direct injection of noise to the visual cortex decreases accuracy but increases decision confidence. J Neurophysiol. 2012;107:1556–1563. doi: 10.1152/jn.00985.2011.

22. Caetta F, Gorea A. Upshifted decision criteria in attentional blink and repetition blindness. Vis Cognit. 2010;18:413–433.

23. Gorea A, Caetta F, Sagi D. Criteria interactions across visual attributes. Vis Res. 2005;45:2523–2532. doi: 10.1016/j.visres.2005.03.018.

24. Zak I, Katkov M, Gorea A, Sagi D. Decision criteria in dual discrimination tasks estimated using external-noise methods. Atten Percept Psychophys. 2012;74:1042–1055. doi: 10.3758/s13414-012-0269-0.

25. Gorea A, Sagi D. Failure to handle more than one internal representation in visual detection tasks. Proc Natl Acad Sci USA. 2000;97:12380–12384. doi: 10.1073/pnas.97.22.12380.

26. Gorea A, Sagi D. Disentangling signal from noise in visual contrast discrimination. Nat Neurosci. 2001;4:1146–1150. doi: 10.1038/nn741.

27. Gorea A, Sagi D. Natural extinction: A criterion shift phenomenon. Vis Cognit. 2002;9:913–936.

28. Giordano AM, McElree B, Carrasco M. On the automaticity and flexibility of covert attention: A speed-accuracy trade-off analysis. J Vis. 2009;9(3):30. doi: 10.1167/9.3.30.

29. Vehtari A, Gelman A, Gabry J. Practical Bayesian model evaluation using leave-one-out cross-validation and WAIC. Stat Comput. 2017;27:1413.

30. Zizlsperger L, Sauvigny T, Haarmeier T. Selective attention increases choice certainty in human decision making. PLoS One. 2012;7:e41136. doi: 10.1371/journal.pone.0041136.

31. Zizlsperger L, Sauvigny T, Händel B, Haarmeier T. Cortical representations of confidence in a visual perceptual decision. Nat Commun. 2014;5:3940. doi: 10.1038/ncomms4940.

32. Wilimzig C, Tsuchiya N, Fahle M, Einhäuser W, Koch C. Spatial attention increases performance but not subjective confidence in a discrimination task. J Vis. 2008;8(5):7. doi: 10.1167/8.5.7.

33. Kurtz P, Shapcott KA, Kaiser J, Schmiedt JT, Schmid MC. The influence of endogenous and exogenous spatial attention on decision confidence. Sci Rep. 2017;7:6431. doi: 10.1038/s41598-017-06715-w.

34. Solovey G, Graney GG, Lau H. A decisional account of subjective inflation of visual perception at the periphery. Atten Percept Psychophys. 2015;77:258–271. doi: 10.3758/s13414-014-0769-1.

35. Kontsevich LL, Chen C-C, Verghese P, Tyler CW. The unique criterion constraint: A false alarm? Nat Neurosci. 2002;5:707. doi: 10.1038/nn0802-707a.

36. Dosher BA, Lu ZL. Noise exclusion in spatial attention. Psych Sci. 2000;11:139–146. doi: 10.1111/1467-9280.00229.

37. Zylberberg A, Roelfsema PR, Sigman M. Variance misperception explains illusions of confidence in simple perceptual decisions. Consc Cognit. 2014;27:246–253. doi: 10.1016/j.concog.2014.05.012.

38. Thomas JP, Gille J. Bandwidths of orientation channels in human vision. J Opt Soc Am. 1979;69:652–660. doi: 10.1364/josa.69.000652.

39. Rausch M, Zehetleitner M. Visibility is not equivalent to confidence in a low contrast orientation discrimination task. Front Psychol. 2016;7:591. doi: 10.3389/fpsyg.2016.00591.

40. Baldassi S, Megna N, Burr DC. Visual clutter causes high-magnitude errors. PLoS Biol. 2006;4:e56. doi: 10.1371/journal.pbio.0040056.

41. Schoenherr JR, Leth-Steensen C, Petrusic WM. Selective attention and subjective confidence calibration. Atten Percept Psychophys. 2010;72:353–368. doi: 10.3758/APP.72.2.353.

42. Ling S, Carrasco M. Sustained and transient covert attention enhance the signal via different contrast response functions. Vis Res. 2006;46:1210–1220. doi: 10.1016/j.visres.2005.05.008.

43. Carrasco M, Ling S, Read S. Attention alters appearance. Nat Neurosci. 2004;7:308–313. doi: 10.1038/nn1194.

44. Fetsch CR, Kiani R, Newsome WT, Shadlen MN. Effects of cortical microstimulation on confidence in a perceptual decision. Neuron. 2014;83:797–804. doi: 10.1016/j.neuron.2014.07.011.

45. Peters MAK, et al. Transcranial magnetic stimulation to visual cortex induces suboptimal introspection. Cortex. 2017;93:119–132. doi: 10.1016/j.cortex.2017.05.017.

46. Kontsevich LL, Tyler CW. Bayesian adaptive estimation of psychometric slope and threshold. Vis Res. 1999;39:2729–2737. doi: 10.1016/s0042-6989(98)00285-5.

47. Prins N. The psychometric function: The lapse rate revisited. J Vis. 2012;12(6):25. doi: 10.1167/12.6.25.

48. Girshick AR, Landy MS, Simoncelli EP. Cardinal rules: Visual orientation perception reflects knowledge of environmental statistics. Nat Neurosci. 2011;14:926–932. doi: 10.1038/nn.2831.

49. Acerbi L, Vijayakumar S, Wolpert DM. On the origins of suboptimality in human probabilistic inference. PLoS Comput Biol. 2014;10:e1003661. doi: 10.1371/journal.pcbi.1003661.

50. Neal RM. Slice sampling. Ann Stat. 2003;31:705–741.
