Abstract

This paper explores the workflow and use of an interactive medical checklist for trauma resuscitation—an emerging technology developed for trauma team leaders to support decision making and task coordination among team members. We used a technology probe approach and ethnographic methods, including video review, interviews, and content analysis of checklist logs, to examine how team leaders use the checklist probe during live resuscitations. We found that team leaders of various experience levels use the technology differently. Some leaders frequently glance at the checklist and take notes during task performance, while others place the checklist on a stand and only interact with the checklist when checking items. We compared checklist timestamps to task activities and found that most items are checked off after tasks are performed. We conclude by discussing design implications and new design opportunities for a future dynamic, adaptive checklist.

Keywords: Digital checklist, trauma resuscitation, technology probe, interactive systems for healthcare

ACM Classification Keywords: H.5.m. Information interfaces and presentation (e.g., HCI): Miscellaneous

INTRODUCTION

Trauma resuscitation is a dynamic, fast-paced medical environment where communication is a critical factor for efficient and error-free patient care. This team-based process requires careful coordination of tasks among seven to 15 medical providers during treatment of severely injured patients. To ensure safety, trauma teams follow Advanced Trauma Life Support (ATLS), an evaluation and management protocol standardized for this setting.

Trauma centers are also increasingly adopting resuscitation checklists, showing positive impacts on team performance [17]. These initial experiences have confirmed that checklists may play a critical role in emergency situations when the pressure to perform is high [3]. Despite the introduction of protocols and low-tech interventions like checklists, critical evaluation tasks are often delayed [11]. These delays may have minimal impact for less severely injured patients, but can contribute to adverse outcomes and even death in critically injured patients requiring life-saving interventions [11]. Many factors complicate medical emergencies, including the rapidly changing patient status, unreliable team communications and complex coordination of tasks in a short period of time. These factors also pose challenges to designing technologies for improving team performance during time-critical, medical work.

Checklists were created to assist with the performance of sequential steps, thereby reducing human errors and improving communication and collaboration [3]. Although paper-based checklists have improved healthcare safety, their digitization may lead to further advances by allowing faster access to data through integration with other hospital systems and sharing of information through common displays [21]. Digital checklists could also adapt to varying patient scenarios, a requirement for non-routine events where checklists could provide assistance for poorly recalled or necessary information. Attempts have been made to digitize checklists (e.g., Wu et al. [28]), but even a simple translation of tasks from analog to digital may compromise efficiency and user satisfaction, as evidenced in the domain of electronic health records. Selecting an appropriate approach to designing medical checklists, as well as “solving an interaction design problem” [10] for their continued success is therefore critical.

We conducted a mixed-methods study in a regional trauma center to understand how trauma team leaders use a digital checklist during trauma resuscitations. We used the technology probe approach [6] to collect information about the digital checklist use through logs and to identify new design opportunities. We compared 11 digital checklist resuscitations (or cases) to 10 cases with paper checklists to understand how the use of the checklist varied between the two formats. We also reviewed video records from paper and digital cases to gain insight about frequency of glancing at the checklist, checklist handling, and communication. Our results show that team leaders use the digital checklist differently than paper checklists. When using the digital checklist, leaders take fewer notes, but leave fewer items unchecked. They frequently glance at the digital checklist during task performance and before verbalizing directions. We use these results and participant feedback to discuss design implications for a future adaptive checklist, including improved note-taking abilities, customization of checklist layout for different team leaders, and adaptability of checklist information to varying patient contexts.

The context of medical emergencies offers new insights into the design and functionality of cognitive aids because these scenarios are more dynamic than routine situations, requiring new approaches to system design [10,28]. The resulting technology must support coordination of work in a time-pressured environment, while adapting to rapid changes in patient status. Context-adaptive checklists need to be simple to minimize cognitive load and ensure compliance, yet robust enough to reduce omissions, provide relevant information in changing contexts, and enhance individual performance [10]. Our contributions to the area of interactive systems design include:

Application of technology probe in a dynamic, safety-critical medical context

In-the-wild evaluation of an emerging technology

Design implications for a future adaptive checklist

BACKGROUND AND RELATED WORK

Paper vs. Electronic Checklists in Medicine

Electronic checklists (e-checklists) are increasingly used in medical domains. These first-generation e-checklists are often either identical translations of paper checklists or their projections on large wall displays [4,16,19,20]. Although digital cognitive aids reduce omissions and improve team performance, these aids are facing the same design limitations as their paper counterparts [24,28]. Below we review studies of both paper and electronic checklists, their design, and their effects on team performance.

Paper checklists have been evaluated in several medical settings, including trauma resuscitation and operating rooms. Kelleher et al. [15] analyzed the completion and timeliness of tasks during pediatric resuscitations with and without a checklist, finding increased task completion and faster task performance when using a checklist. Hart and Owen [12] evaluated a verbal checklist for anesthesia administration. Although most participants found the verbal checklist useful, one third of the items were omitted. Paper is the simplest and most convenient medium for checklists [25], but it also has disadvantages. For example, paper checklists are static, limiting their adaptability to different patient scenarios. Also, they cannot exchange information with other medical systems or update automatically [19].

More recently, attempts have been made at making the digital aids more responsive to patient scenarios and user needs. Gonzales et al. [8], for example, evaluated the effectiveness of a projected shared display with two interaction modes, the Microsoft Kinect and a touchscreen, during acute care resuscitations. Despite an initial concern that a touchscreen display might create a distraction, it was perceived as more user-friendly than the gesture-based interaction. Christov et al. [5] introduced a smart checklist for blood transfusion, cardiac surgery, and infusion therapy that automatically generates a list of items based on predefined activities. Their design also supports adaptation of the user interface to different users, as well as dynamic updating of information based on the current activity. Wu et al. [28] analyzed the effectiveness of a dynamic procedure aid for operating rooms. The dynamic aid was found useful because it provided a step-at-a-glance layout, making the protocol steps easy to view and reducing provider cognitive load. We contribute to this body of work by designing and evaluating an adaptive checklist for medical emergencies, and by leveraging the technology probe approach in situ, during real trauma resuscitations with real users.

Several studies have compared the effects of paper and e-checklists on team performance [8,24,28], finding that e-checklists usually outperformed their paper counterparts. Thongprayoon et al. [24], for example, compared the use of a paper checklist to an identical e-checklist for intensive care unit providers. The e-checklist reduced provider errors and cognitive workload without increasing checklist completion time, showing the feasibility of checklists in emergency medical settings. In contrast, Watkins et al. [26] found no difference between the two formats. These studies are critical because they establish the importance of a proper design and appropriate use of cognitive aids, in addition to the format. In this work, our goal was not to compare how paper and electronic checklists perform in relation to protocol adherence or clinical measures (e.g., [16]). We were instead interested in comparing use practices and provider behaviors around interactions with the technology to identify issues with the electronic version and inform the design of a future adaptive checklist.

Methodological Approach: Technology Probes

A technology probe is an artifact designed to collect information around use, explore usability issues, and provide inspiration for a new design space. In several studies, technology probes were introduced into environments to elicit reflections from participants and to reveal technology needs and desires, supplementing other ethnographic methods [2,9,14]. Amin et al. [1], for example, introduced the probe as part of a participatory design exercise to derive design requirements for nonverbal messaging. Gaye and Holmquist [7] deployed probes to form an understanding of user environments and paths around a city for designing a system that uses the urban environment as an interface for real-time electronic music making. We have similarly deployed a digital checklist for trauma resuscitation as a probe to gather contextual information about the environment and technology use, and to obtain new insights into the design and functionality of cognitive aids for medical emergencies.

A common approach to system design in HCI is to observe the environment to identify issues, create a prototype, and then test it in the lab. In previous studies, we worked with medical experts to design the checklist probe and evaluate its usability to stabilize the system [21]. In this study, we took the design out of the controlled usability setting and deployed it as a technology probe in the wild.

TRAUMA RESUSCITATION CHECKLIST PROBE

Our technology probe was an e-checklist that was designed and developed to (1) collect information about the checklist use during actual trauma resuscitations, (2) understand use practices around the technology, and (3) stimulate discussion about features that are needed for an adaptive checklist in this setting. The checklist probe is a mobile application based on its paper counterpart that has been in use at our research site since 2012 (Figure 1(a)). The probe allows its users—trauma team leaders—to prepare for patient arrival and ensure compliance with the patient evaluation protocol throughout resuscitation.

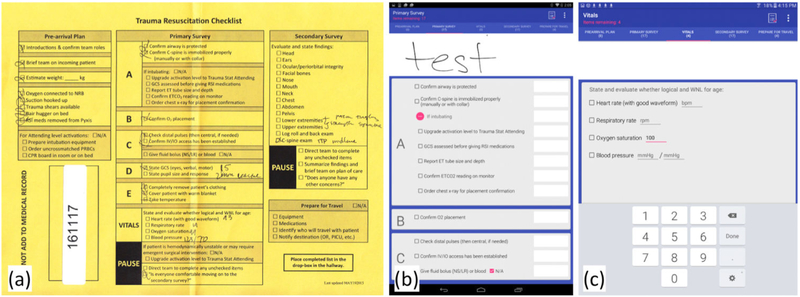

Figure 1: (a) Paper-based checklist. (b) and (c) Example pages of the digital checklist probe.

Hardware and Software

The checklist probe was written in Java for the Android mobile operating system using the Android Studio IDE, with Android 4.2 (API level 17) as the minimum supported platform version. The user interface was implemented using Android XML layouts. The system uses the SQLite library as its SQL database engine and follows the Model-View-Controller design pattern. The hardware included a Google Nexus 10 tablet and an Adonit Jot Pro stylus for touchscreen devices.

Design

The initial design of the checklist probe and its features were informed by content analysis of 163 paper checklists collected during actual trauma resuscitations over four months [22]. To design the user interface, we used Google Material Design standards. Each section of the paper checklist (pre-arrival plan, primary survey, secondary survey, and prepare for travel) was placed on its own tab in the digital checklist (Figure 1(b)). The pre-arrival plan includes preparatory steps for patient arrival. The primary survey focuses on major physiological systems like Airway, Breathing, blood Circulation and Disability or neurological exam (ABCDs), while the secondary survey includes a head-to-toe evaluation to identify other injuries. The prepare for travel section includes the plan for care. We added a fifth tab for recording patient vital signs (Figure 1(c)). Each item from the paper checklist also appears as an item on the probe with a checkbox next to it. We observed in our analysis of paper checklists that trauma leaders take handwritten notes next to items and in margins. To provide them with similar functionality on the probe, we implemented a white box next to each item, as well as a static margin at the top for taking handwritten notes. When items are checked off, the color of the text fades from black to grey. Optional, “If applicable” items from the paper checklist, like intubation, were implemented as collapsible sections that hide the items by default and reveal them when the user taps the “+” symbol (Figure 1(b)). The user can tap the tabs or swipe between tab screens. A tab heading with the number of remaining items can be found at the top of each tab, providing a cognitive reminder for unchecked items. Several items, including patient weight, Glasgow Coma Score (or GCS, indicating the neurological status of the patient), and vital signs (Figure 1(c)) have input areas for typing numerical values on a keypad, which automatically checks off the corresponding item.
Upon finishing the checklist, the user can review any unchecked items before pressing the “complete checklist” button and submitting the log. This action saves the log onto the tablet for internal review and analysis. The probe was first tested for usability [21] and then piloted by medical experts on our research team who shadowed trauma team leaders during 16 actual resuscitations. No design changes were made during the probe deployment, except to fix system glitches.
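The item and tab behaviors described above can be illustrated with a minimal sketch. This is not the probe's Java implementation; the class names, the example items, and the choice to leave optional "If applicable" items out of the remaining-items count are assumptions made for illustration only.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Item:
    label: str
    optional: bool = False         # "If applicable" item, collapsed by default
    checked: bool = False
    value: Optional[float] = None  # numeric entry such as weight or GCS

    def enter_value(self, value: float) -> None:
        # Typing a numeric value automatically checks off the item.
        self.value = value
        self.checked = True


@dataclass
class Tab:
    name: str
    items: List[Item] = field(default_factory=list)

    def remaining(self) -> int:
        # Number shown in the tab heading.
        # Assumption: optional items are not counted as remaining.
        return sum(1 for i in self.items if not i.checked and not i.optional)

    def unchecked(self) -> List[str]:
        # Items a leader would review before completing the checklist.
        return [i.label for i in self.items if not i.checked and not i.optional]


# Illustrative tab content (hypothetical subset of items).
primary = Tab("Primary survey", [
    Item("State pupil size and response"),
    Item("GCS"),
    Item("Intubation", optional=True),
])
primary.items[1].enter_value(14)
print(primary.remaining())
print(primary.unchecked())
```

Keeping the remaining-items count as a derived value, rather than a stored counter, means the tab heading cannot drift out of sync with the item states.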

Logging

The checklist log contains a text file with timestamps for checked items, numerical notes entered for patient weight, GCS or vital signs, frequency and sequence of tab switching with timestamps, and the list of any unchecked items. The log also contains an image file with handwritten notes, timestamps and corresponding items.

METHODS

Trauma team leaders at our site used the digital checklist probe during daytime events and the paper checklist during nighttime and weekend events for three consecutive months (Oct. 1, 2016 – Jan. 5, 2017). During the deployment period, the checklist probe logs were collected from 11 events, and the paper checklist was used in 70 events. To understand the digital checklist use patterns and how they differed from the paper checklist use, we matched probe cases to cases with paper checklists based on case and patient characteristics. For each case, we then triangulated data from log analysis or paper checklist content analysis, live video review, and interviews with team leaders. The hospital’s research ethics committee approved this study.

Study Setting

The study took place at a pediatric teaching hospital with a regional level 1 trauma center. Two adjacent resuscitation rooms, each with three beds, are used for assessing patients. Both rooms are equipped with video cameras and microphones, providing video records with multiple views of the patient area. The center treats an average of 600 patients per year, with injury mechanisms ranging from falls, to burns, to firearm- and motor vehicle traffic-related injuries. Patients are assigned one of three activation levels: low acuity (stat), high acuity (attending stat), and transfer. Activations are announced about 10 minutes before arrival, unless the patient arrives without notification (e.g., brought in by private vehicle or insufficient time to notify the hospital). Although resuscitation rooms lack information technology for data synthesis, there are tools and technologies that assist teams during resuscitations. These include paper-based flowsheets and checklists, sign-in boards, wall-mounted timers (to keep track of time), vital sign monitors, various wall charts with patient parameters, and the electronic health record (EHR) system.

Participants

Trauma teams consist of surgeons, emergency medicine physicians, nurses, critical care specialists, respiratory therapists, and anesthesiologists. Each team member has a specific role and a set of defined tasks. For example, anesthesiologists and respiratory therapists manage the patient’s airway, while surgical residents or nurse practitioners examine the patient. Scribe nurses document the event, and bedside nurses establish intravenous access and administer medications and fluids. Attending surgeons, surgery fellows and residents take the leadership roles, assigning tasks and making decisions.

Our participants were six trauma team leaders: three senior surgery residents and three surgery fellows. Fellows, on average, had 7.2 years (SD: 2) of experience working in trauma resuscitation and residents had an average of 3.8 months (SD: 0.3). All participants reported confidence in using tablets and smartphones during work. Five were somewhat familiar with the Android operating system, and one was very familiar. Five participants had used the paper-based resuscitation checklist at least once before beginning this study, and one participant first used the digital checklist before ever using a paper checklist. Participants were compensated for the interview portion of the study.

Digital Checklist Training Session

Before deploying the checklist probe in live events, we ran an hour-long training session at the hospital with two residents and two fellows who at the time served as trauma team leaders. We also trained two research coordinators at the hospital to use the digital checklist and conduct training sessions with new residents at the start of their rotations. The session started with an introduction to the checklist features and functionalities, after which users tested these features and familiarized themselves with the checklist design. We also used this training session to address any issues or questions before moving forward with the study.

Probe Use Procedure

When a daytime trauma patient case came into the hospital, one of the research coordinators handed the checklist probe (tablet) to the team leader at the start of the resuscitation. The leaders were instructed to use the digital checklist as they would normally use the paper version. Upon concluding the resuscitation, team leaders were able to review any unchecked items and complete the checklist by pressing the “complete checklist” button. This action saved the log file locally on the tablet. The research coordinator then reviewed log files and removed any patient health information. The anonymized log files were transferred to a password-protected account on a cloud server to which only the research team has access.

Data Collection and Analysis

Our data included digital checklist logs collected via the probe, paper checklists, video records of live resuscitations, and interviews with trauma team leaders.

Digital Checklist Logs

Data from each anonymized checklist log file were first transcribed into a spreadsheet containing the following sections: checklist start and end times, time elapsed between checklist start and completion, notes taken and their corresponding timestamps and checklist items (e.g., a note “2 mm bilateral” was written for the “State pupil size and response” item at 0:11:19), any unchecked items, items checked out of order and notes about the ordering (e.g., “1st pause completed at end of checklist”), tab switching sequence, and overall notes about the case (e.g., “weight was checked, but no value typed in”). We then analyzed log transcripts using an open coding technique to identify patterns within notes, unchecked items, items checked out of order, or tab switching sequence. In addition, activity logs (e.g., start and end times for the 37 most critical activities performed during resuscitations: three in the pre-arrival plan section, and all activities in the primary and secondary survey and vitals sections) were obtained through video review for six cases. We compared these activity logs to timestamps of checked items from corresponding checklist logs to determine the time difference between actual task performance and item checking. We also examined checklist use patterns among team leaders of different experience levels.
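The comparison between activity logs and check-off timestamps amounts to a per-item time difference. The sketch below shows one way to compute it, assuming elapsed-time stamps in `h:mm:ss` form like the one quoted above. The function names and all timestamp values are hypothetical and do not come from the study data.

```python
from typing import Dict


def to_seconds(stamp: str) -> int:
    """Convert an elapsed-time stamp such as '0:11:19' to seconds."""
    hours, minutes, seconds = (int(part) for part in stamp.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def check_delays(activity_end: Dict[str, str],
                 checked_at: Dict[str, str]) -> Dict[str, int]:
    """Seconds between the end of a task (from video review) and its check-off.

    Positive: the item was checked after the task was performed.
    Negative: the item was checked before the task finished.
    Items missing from the activity log are skipped.
    """
    return {
        item: to_seconds(stamp) - to_seconds(activity_end[item])
        for item, stamp in checked_at.items()
        if item in activity_end
    }


# Made-up example values for illustration only.
activity_end = {
    "State pupil size and response": "0:11:02",
    "C-spine exam": "0:14:40",
}
checked_at = {
    "State pupil size and response": "0:11:19",
    "C-spine exam": "0:14:30",
    "Log roll and back exam": "0:13:05",  # no video-coded activity in this example
}
delays = check_delays(activity_end, checked_at)
print(delays)
```

The sign of each delay separates items checked after task completion from items checked ahead of it.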

Paper Checklist Review

Paper checklists were used during nighttime and weekend resuscitations because the research coordinators were not present to hand off the probe and monitor its use for any troubleshooting. Seventy paper checklists were used during the study period, but only 49 were submitted for review. From this sample, we selected 10 checklists to match 10 of the 11 probe cases using systematic filtering based on several criteria (one probe case was unique and did not have an appropriate paper checklist case match). First, we filtered the checklists based on the experience of the leader, leaving two categories: surgery fellow and senior surgery resident. Next, we filtered the checklists based on activation level (stat, attending or transfer). We then matched the checklists based on the following criteria as closely as possible: patient age, type of injury (blunt, penetrating, no injury), and neurological score (GCS). Medical experts reviewed the match choices to ensure that any discrepancies in age or GCS were within reason (Table 1).

Table 1: Probe case characteristics (grey rows) and their matching paper cases (white rows). For age, y = years, m = months, and w = weeks.

| Experience Level | Activation Level | GCS | Age | Injury Type | Now Activation |
|---|---|---|---|---|---|
| Fellow | Stat | 14 | 2y | Blunt | 1 |
| Fellow | Stat | 14 | 1y | Blunt | 0 |
| Fellow | Stat | 12 | 3y | Blunt | 0 |
| Fellow | Stat | 13 | 1y | Blunt | 1 |
| Fellow | Stat | 15 | 8y | Blunt | 1 |
| Fellow | Stat | 14 | 8y | Blunt | 1 |
| Fellow | Stat | 13 | 14y | Blunt | 0 |
| Fellow | Stat | 14 | 13y | Blunt | 0 |
| Fellow | Stat | 15 | 16y | Blunt | 1 |
| Fellow | Stat | 15 | 14y | Blunt | 0 |
| Senior resident | Transfer | 12 | 7w | No injury | 0 |
| Senior resident | Transfer | 15 | 9m | Blunt | 0 |
| Senior resident | Stat | 14 | 6m | Blunt | 0 |
| Senior resident | Stat | 14 | 2y | Blunt | 0 |
| Senior resident | Stat | 14 | 9m | Blunt | 0 |
| Senior resident | Stat | 14 | 2y | Blunt | 0 |
| Senior resident | Stat | 15 | 9y | Blunt | 0 |
| Senior resident | Stat | 15 | 10y | Blunt | 1 |
| *Fellow | Attending | 15 | 14y | Penetrating | 0 |

*Case with no paper checklist match.
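The filter-then-match procedure can be sketched as follows. This is only an approximation: the actual matching was done by systematic filtering with review by medical experts, and the ordering used here to rank "closeness" (injury type first, then age difference, then GCS difference) is an assumption, as are the `Case` type and the example values, which only loosely echo Table 1.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Case:
    experience: str   # "Fellow" or "Senior resident"
    activation: str   # "Stat", "Attending", or "Transfer"
    gcs: int
    age_years: float
    injury: str       # "Blunt", "Penetrating", or "No injury"


def closest_match(probe: Case, papers: List[Case]) -> Optional[Case]:
    # Step 1: hard filters on leader experience and activation level.
    pool = [c for c in papers
            if c.experience == probe.experience
            and c.activation == probe.activation]
    if not pool:
        return None  # no appropriate paper case, as for one probe case

    # Step 2: rank the remaining candidates by closeness.
    # Assumption: injury type mismatch outweighs age, which outweighs GCS.
    def distance(c: Case):
        return (c.injury != probe.injury,
                abs(c.age_years - probe.age_years),
                abs(c.gcs - probe.gcs))

    return min(pool, key=distance)


# Hypothetical example data.
papers = [
    Case("Fellow", "Stat", 14, 1, "Blunt"),
    Case("Fellow", "Stat", 13, 1, "Blunt"),
    Case("Senior resident", "Stat", 14, 2, "Blunt"),
]
probe = Case("Fellow", "Stat", 14, 2, "Blunt")
unmatched = Case("Fellow", "Attending", 15, 14, "Penetrating")

match = closest_match(probe, papers)
print(match)
print(closest_match(unmatched, papers))
```

The sketch does not prevent the same paper case from being matched to two probe cases. A one-to-one pairing would need to remove each selected case from the candidate list, and any automated ranking would still call for expert review of the resulting pairs.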

To analyze the paper checklists, we used a similar approach to that of the digital checklist logs. We first transcribed the handwritten notes and their location, unchecked items, team leader experience level, and case- and patient information (activation level, notification, injury type, patient age, and GCS). We then performed content analysis of notes, identifying common information types that were recorded. Note-taking patterns were also analyzed based on team leader experience levels and patient acuity.

Video Review

To better understand how team leaders used and interacted with checklists, as well as how checklist use affected teamwork, we reviewed 21 videos, 10 with the paper checklist and 11 with the digital probe. We transcribed any dialogue between leaders and other team members related to checklist items, and recorded observations pertaining to checklist use behaviors (e.g., “stepping closer to look over patient, glancing back to checklist”). Transcripts were then analyzed using open coding to identify patterns in use behaviors across both checklist formats. Due to ethics restrictions, we were not allowed to associate the resuscitation cases with individual team leaders, even though we could see them in the videos. We therefore report the results using the leaders’ experience levels.

Interviews with Team Leaders

We conducted one-hour, semi-structured interviews with three team leaders who had used the probe at least twice. The interviews focused on leaders’ perceptions of the digital checklist, their needs, and potential areas for checklist design improvement. Interview questions were grouped into four categories: communication, decision-making, memory load, and checklist use behaviors. Example questions include, “Do you check items right after they are completed or information is verbalized, or wait for several items to be completed? Why do you use this method?” and “At the end of a trauma event when summarizing, do you refer back to notes or items on the checklist?” Interviews were audio-recorded and transcribed for analysis.

FINDINGS

We present findings in four parts. First, we describe our general observations of the digital checklist probe use and how it differed from the use of paper checklists. We then describe the differences in probe use based on leaders’ domain experience levels. Next, we present findings about note taking, checklist completion rates and item check off times. We conclude with the challenges our participants faced during the probe deployment.

General Observations: Paper vs. Digital Checklist Use

Through video review, we observed several trends in how team leaders used the checklist probe and paper checklist: (1) carrying the checklist around and checking off items on-the-go vs. leaving it on the stand and checking off items at the end; (2) frequently glancing at the checklists; and (3) switching between checklist formats. We next describe these trends in greater detail.

Similar to paper checklists, the digital checklist in the form of a tablet afforded a great deal of mobility during team leaders’ work. Although team leaders are usually hands-off—most of the time standing at the foot of the bed and monitoring a resident or nurse practitioner as they assess the patient—this mobility is important because team leaders sometimes need to approach the patient to confirm findings from physical assessment. In both paper and digital checklist cases, we observed team leaders moving around the patient to get a closer look at the injury, all while holding the checklist. Specifically, we saw leaders moving around with the checklist in their hands in three probe cases and two paper checklist cases. When walking around, they held the tablet in one hand with their palm facing up so they could glance at the checklist screen. For example, during the secondary survey in one digital probe case, the team was performing the log roll and back exam, during which the patient is rolled on one side and their back is examined. The junior resident is asking the patient if they are in pain while pressing various parts of the back. As the team turns the patient back to the supine position, the leader walks over to the side of the bed while holding the tablet, screen facing up. He leans over the bed and watches the respiratory therapist and junior resident remove the neck collar to perform the c-spine exam. The leader glances at one of the displays on the wall, then looks back at the checklist and checks off the corresponding “C-spine exam” checkbox. 
Our comparison of the activity completion times and timestamps from the log also showed that the leader first checked off the “Log roll and back exam” step after this task was performed, and then walked to the bedside to check on the patient, stepped back, and checked off “C-spine exam.” This leader explained during the interview that he uses the checklist in a check-as-you-go manner, checking items after each task is performed. We observed similar behaviors with paper checklists; in one case, a team leader held the checklist with one hand while leaning in to look at the patient, and in another case, the leader tucked the checklist under his arm. The mobility that the checklists afford is a critical factor in allowing leaders to perform their job without being inhibited by the technology.

Not all leaders, however, kept holding the checklist probe during their work. In fact, we found that team leaders held checklists in different ways throughout resuscitations. In one digital checklist case and three paper checklist cases, various team leaders did not hold the checklist at all during the patient assessment and simply checked off items at the very end. In four paper-checklist cases, team leaders leaned on the scribe’s stand at the foot of the bed while taking notes and checking off items. Team leaders in four other cases put the paper form on a clipboard or used their hand behind the paper as a support before switching to leaning on the stand. In one digital checklist case, the leader became upset when the checklist application stopped working, looked around for the research coordinator, but then put the checklist on the stand and pulled out a pen and blank piece of paper to take notes. Later in the event, this leader went back to the digital checklist, transcribed notes from paper into the probe and checked off the completed tasks. In three digital checklist cases, the leaders filled out the pre-arrival plan and then placed the checklist on the stand while waiting for the patient to arrive, but immediately picked the tablet up when the patient entered the room.

Team leaders tended to glance at the checklist often during resuscitations. In six paper checklist cases and seven probe cases, team leaders visually referenced the checklist at various points in the process: before they directed the team or clarified if tasks had been performed, as team members performed tasks, and after task completions. In one probe case, the junior resident asked if they could move on to the secondary survey. The leader first glanced at the checklist and then asked if a specific task had been completed:

JR: “Ready for secondary survey?”

TL: [glances at the checklist] “Did you check pupils?”

JR: “Pupils?”

TL: “Yeah.”

JR: [checks pupils] “2 equal reactive.”

TL: [checks off “State pupil size and response” item]

Team leaders also glanced at the checklist when providing the summary of the process and discussing the plan of care. One participant commented how retrieving information from the digital checklist was easier at a glance because it focused their attention on one section at a time, compared to the paper version that shows everything in one plane. The digital probe was also used as a reference point to check for incomplete tasks. One leader explained how they used the checklist if they noticed an item had been skipped:

“If there’s something I missed then I’ll tell the second year [junior resident] to just go ahead [...] it’s usually part of the secondary exam where we didn’t look in the ears, so then we’ll go back and do that.”

Two paper checklist cases were unique in that they involved the use of both digital and paper checklists. In both cases, the leaders started by using the digital checklist, but they soon experienced issues so they put the digital checklist down and continued using the paper form. In both cases, the leaders were taking a note when the app malfunctioned; one was in the pre-arrival plan tab and the other in the primary survey tab. Both leaders became frustrated with the probe, and one was even heard saying, “Why is this not working?” This team leader switched from probe to paper during step D, indicating this switch by leaving all items until step D unchecked and writing a note at the top that read “started on i-pad” (Figure 2(a)).

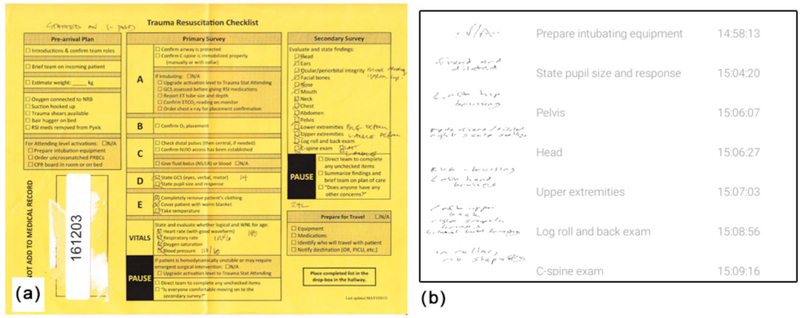

Figure 2: (a) Team leader’s notes on the paper checklist after switching back and forth between the digital and paper version. (b) Example notes from the digital probe log, their corresponding items and timestamps.

Checklist Use by Leaders of Different Experience Levels

We observed that team leaders of different experience levels used the checklists differently. Regardless of the checklist format, team leaders with less experience (senior residents) on average took more notes than surgery fellows (2.3 notes versus 1.5 for digital; 5.75 notes versus 0 for paper). We also observed surgery fellows, or more experienced leaders, placing the checklist on the stand and not referring to it in two probe cases and three paper checklist cases. Rather, they picked up the checklist and checked off items at the very end of the resuscitation. The fellows confirmed our observations during the interviews, reporting that they only take notes if there is something significant or abnormal about the patient. One participant elaborated: “If there’s something specific about the findings or the vitals, or if there’s something relevant or abnormal about the exam, I just put it in the little comment section.” In contrast to the fellows’ behaviors, senior surgery residents tended to hold the checklist in their hands throughout resuscitations, checking off items as tasks were performed, both on paper and digital checklists. For example, one senior surgery resident diligently glanced at the digital checklist, read the items out loud and then checked them off immediately after they were performed:

“Do we have our oxygen hooked up? Trauma shears available? Bair hugger... RSI meds pulled off there... [trailing off]” [...] “Do we have her covered with a warm blanket? Can we get a temperature?”

For more experienced leaders, the checklist served as a protocol reminder and as the information organizer, rather than a memory tool for taking notes: “[The checklist] organizes the information gathering and then, in the sense that it expedites that and organizes it, it helps you come to a decision point more efficiently.” To this end, both fellows explained that the primary survey steps are second nature to them and they have the checklist items memorized. Where they saw the checklist helpful, however, was in reminding them of the secondary survey, which has more steps in no particular order: “For the secondary survey, you make sure that you’re dotting your i’s and crossing your t’s.” One fellow explained the usefulness of the checklist as a training tool for learning all steps of the ATLS protocol:

“If I were to have a checklist in residency, I probably would have gotten a lot better a lot faster at running traumas, just because hey there’s a checklist, if I just put it to memory then most of the things I will not miss.”

This reflection, prompted by using the checklist probe, showed the importance of having access to the checklist for team leaders of all experience levels, especially those who are in training or learning the ATLS protocol.

Note Taking on Paper vs. Digital Checklist

Content analysis of both paper and digital checklists showed several differences in the way these two formats were used for note taking. On average, the number of notes taken on paper and digital checklists was the same, but the standard deviations differed: 2 notes per paper checklist (SD 3.8) compared to 2 notes per digital checklist (SD 1.5). Vital signs were written down in two paper checklist cases and typed in all digital checklist cases. In four digital checklist cases, notes were handwritten for pupil size. Notes were also handwritten for temperature in three digital cases. These findings suggest that, as with vitals, it may be more efficient to offer a typed note entry field for all numerical items (e.g., temperature or pupil size). Notes for the secondary survey items were taken in six digital cases, while only two paper cases had notes in this section of the checklist. The results of lab studies were written down in two paper checklist cases, and in one case the leader drew checkboxes next to the added lab items. The number of notes on paper checklists during this study, however, was lower than what we found in our previous analysis of paper checklists [22], suggesting that the number of notes may depend on individual preferences.

On paper checklists, most of the notes were written in the top margin section, vitals box, or secondary survey section, while the notes on digital checklists were often associated with specific checklist items, e.g., “fixed and dilated” for the item “State pupil size and response” (Figure 2(b)). Two team leaders reported that they use the digital checklist just like they use the paper checklist, taking notes only if a significant change in the patient’s status or an abnormal finding occurs. One participant explained that handwriting on the tablet was more usable than taking notes on paper:

“There’s more room to actually write something on the tablet, because on this sheet it’s just clunky, where I would write something off to the side you have to write it in small print and it would run into other things.”

To take notes on the digital checklist, the user must first click on a white box next to the checklist item, which opens up a large writing space for note taking. When the note is complete and the user presses the checkmark, the note minimizes back into the white box and shows up next to the related item (Figure 1(b)). The participant commented that this feature could be improved by enlarging the minimized notes so they are more readable for later referencing.

Digital Checklist Completion Rates and Check Off Times

Digital checklist logs showed that fewer items were omitted: 3 items on average (SD 2.8) versus 17 items on paper checklists (SD 11.2). Most of the items omitted on paper checklists appear within sections with “N/A” checkboxes, relating to tasks that occur infrequently, like intubation. On the digital checklist, these “N/A” items are hidden by default (with the exception of the “Prepare for travel” section) and do not contribute to the number of unchecked items. Our analysis of timestamps for item check offs showed that a long delay, averaging 10 minutes, occurred between when the last pre-arrival plan item and the first primary survey item were checked. We explain this delay by the fact that the pre-arrival plan is often completed before the patient’s arrival, especially in cases with early notifications when the team assembles in the resuscitation room ahead of time. After comparing the digital checklist log timestamps with the task completion times from the activity log, we found that for about 20 tasks throughout the checklist, items were checked 3 minutes, on average, after the task was completed. In contrast, we calculated that about 5 to 6 times per checklist, items were checked an average of 1 minute and 39 seconds before the task was completed. Most of these “pre-checks” occurred in the primary survey where tasks may take longer to complete, so the item is checked while the task is being performed but before task completion is noted on the activity log. There were about 5 instances per checklist where the task was not performed to completion but the corresponding checklist item was checked. These “false checks” mostly occurred during multi-step tasks, like calculating the GCS score, which includes determining the individual score for eye movement, motor movement, and verbal behavior, but is not considered complete until the total score is reported. In the cases we observed, the leaders checked off these tasks because they received completion reports for individual task components, while the medical experts producing the ground truth activity log marked them as incomplete.
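The comparison between check-off timestamps and activity-log completion times can be illustrated with a minimal sketch. It is not the analysis code used in the study; the `TaskRecord` structure, the function names, and all sample values are hypothetical, and times are assumed to be seconds from the start of the resuscitation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TaskRecord:
    item: str
    checked_at: Optional[float]    # when the leader checked the item; None if never checked
    completed_at: Optional[float]  # completion time in the activity log; None if never completed


def classify(record):
    """Label one checklist item by comparing its check-off and completion times."""
    if record.checked_at is None:
        return "unchecked"
    if record.completed_at is None:
        return "false check"   # checked, but the task was never performed to completion
    if record.checked_at < record.completed_at:
        return "pre-check"     # checked while the task was still in progress
    return "post-check"        # checked at or after task completion


def mean_offset(records, label):
    """Average absolute gap (seconds) between check-off and completion for one label."""
    offsets = [
        abs(r.checked_at - r.completed_at)
        for r in records
        if classify(r) == label
        and r.checked_at is not None
        and r.completed_at is not None
    ]
    return sum(offsets) / len(offsets) if offsets else None


# Hypothetical log entries, for illustration only.
sample_log = [
    TaskRecord("Confirm oxygen is hooked up", 300.0, 120.0),
    TaskRecord("Assess airway", 200.0, 260.0),
    TaskRecord("State GCS score", 400.0, None),
    TaskRecord("Look in ears", None, 500.0),
]
```

Applied to each case, a classification like this would yield the per-checklist counts of post-checks, pre-checks, and false checks, along with the average offsets for the first two groups.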

We also found that items on the digital checklist were checked out of order. Most of these out-of-order items, however, were within the same tab (e.g., secondary survey items checked out of order but not mixed with primary survey items). The interviews revealed that following the order within the primary survey is important, but less strict during the secondary survey, as long as all tasks are completed. When asked what they do if one of the items is skipped, one of the participants responded:

“If it’s in the primary survey, I stop them and I say you have to do this before you move on to anything else. If it’s in the secondary survey, I’ll let them finish what they’re doing, then I’ll say ‘hey did you do this?’ and go back. Once you’ve gone to the secondary survey I’m less concerned about it, but primary survey order matters so I stop immediately there.”

The other items that were often checked out of order were the pause sections, which were checked after all other items, at the very end in four digital checklist cases.
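One way to flag out-of-order check-offs within a tab is sketched below. This is an illustrative assumption rather than the method used in our analysis: the tab names, item names, and the `STRICT_TABS` policy (order matters only on the primary survey, as the participant described) are hypothetical placeholders.

```python
# Tabs where check-off order is treated as strict (assumed from the interview quote).
STRICT_TABS = {"primary survey"}


def out_of_order_items(protocol, check_log):
    """Return (tab, item) pairs checked after a later item in the same tab.

    protocol:  dict mapping tab name -> list of items in protocol order
    check_log: list of (tab, item) pairs in the order they were checked
    """
    position = {
        (tab, item): index
        for tab, items in protocol.items()
        for index, item in enumerate(items)
    }
    highest_seen = {}  # per tab, the furthest protocol position checked so far
    flagged = []
    for tab, item in check_log:
        index = position[(tab, item)]
        if index < highest_seen.get(tab, -1):
            flagged.append((tab, item))
        highest_seen[tab] = max(index, highest_seen.get(tab, -1))
    return flagged


def strict_violations(flagged):
    """Keep only the out-of-order items that fall in a strict-order tab."""
    return [(tab, item) for tab, item in flagged if tab in STRICT_TABS]


# Hypothetical protocol and log, for illustration only.
protocol = {
    "primary survey": ["Airway", "Breathing", "Circulation", "Disability"],
    "secondary survey": ["Head", "Ears", "Chest", "Abdomen"],
}
check_log = [
    ("primary survey", "Airway"),
    ("primary survey", "Circulation"),
    ("primary survey", "Breathing"),
    ("secondary survey", "Chest"),
    ("secondary survey", "Ears"),
]
```

Here, `out_of_order_items(protocol, check_log)` flags one item per tab, while `strict_violations` keeps only the primary survey item, mirroring the distinction the participant drew between the two surveys.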

Challenges in Using the Digital Checklist Probe

Team leaders described several challenges in using the digital checklist compared to the paper version. The first challenge was the need to set up the tablet upon arrival to the resuscitation room. This challenge emerged during the last few days of our study, after we installed a locking mechanism to secure the tablet. The team leaders needed to unplug the tablet from the charger, unlock the security lock, and then unlock the tablet screen. One participant commented that they would skip the tablet and go straight to the paper checklist if the patient was already in the room. We changed the probe access mechanism (from a research coordinator handing it to leaders at the beginning of resuscitations to storing it in the room) to allow for probe use and data collection during weekend and night shifts, as we continue with the probe deployment. The leaders’ comments, however, suggest that we need a different strategy to ensure probe use in cases when the patient is already in the room and there is no time to prepare.

Another challenge was the lack of visibility for items that were left unchecked: “I think it would be nice at the end to have the app tell you these were the fields that were missing.” Unlike the paper version, which provides an at-a-glance overview of the entire checklist, the digital version separates items into tabs, making them visible only if the user goes to a particular tab. To address this design issue, we had already implemented a feature that provided a list of unchecked items to the user before they submitted the checklist log. It turned out this feature was not obvious to our users. Also, three team leaders had difficulties with using the stylus and the interface. In two of these cases, the application froze and the participants switched to using the paper checklist. Although most cases with the digital probe involved routine daytime events, there were several cases where environmental factors, like patient crying or delays in task performance, could have impacted the checklist use. In situations where the patient was crying, the team leader often had to request information from the junior resident more than once (e.g., JR: “We’ll roll once we get an IV.” TL: “Yep.” [moments later, while patient is crying] TL: “Did you get the IV?” JR: “Not yet.” TL: “Do we have IV access?” JR: “Yep”).
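The pre-submission review of unchecked items mentioned above can be pictured with a short sketch. It does not reproduce the probe's implementation; the data layout and the function name are assumptions, and apart from “State pupil size and response” the item names are hypothetical.

```python
def unchecked_by_tab(checklist):
    """Group unchecked items by tab so they can be shown together before submission.

    checklist: dict mapping tab name -> list of (item, checked) pairs
    """
    summary = {}
    for tab, items in checklist.items():
        missing = [name for name, checked in items if not checked]
        if missing:  # omit tabs that are fully checked
            summary[tab] = missing
    return summary


# Hypothetical checklist state, for illustration only.
checklist_state = {
    "pre-arrival plan": [("Oxygen hooked up", True), ("Warm blanket ready", True)],
    "primary survey": [("State pupil size and response", False), ("Assess airway", True)],
    "secondary survey": [("Look in ears", False), ("Examine abdomen", False)],
}
```

Surfacing a summary like this across all tabs, rather than within a single tab, is one possible way to restore the whole-checklist overview that the paper form provides.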

We next discuss the implications of our observations for designing a future, context-adaptive checklist to better support decision making and protocol compliance in team-driven, time-critical and high-stress medical work.

DISCUSSION

Deploying the digital checklist probe allowed us to collect data about its use in actual trauma resuscitations by real users who will be using the digital checklist long term, and to determine if the new technology impacted the checklist use in any significant way. What surprised us was that behaviors around the probe use (e.g., carrying the probe around, leaving it on the stand, checking off on-the-go, or checking off all items at the very end) did not differ from those around the paper checklist use. This finding showed that the digital checklist could function as an appropriate replacement for the paper form within this dynamic and messy workflow. The challenges faced by users during the study period provided valuable information about future design directions, not only for short-term improvements, but also for “solving an interaction design problem” [10] to ensure the continued success of checklists in healthcare. Our goals are to make this technology dynamic and adaptable to different patient and user contexts, and with this study, we lay the groundwork for achieving these goals.

Adaptive Checklist Design for Medical Emergencies

A recurring theme in our findings was that of mobility—a technology affordance that is critical in collaborative work because it allows individuals to reconfigure themselves with regard to ongoing demands of the activity in which they are engaged [18]. As our results have shown, team leaders often moved around the patient bed to get a closer look at physical findings or to assist other team members. When team leaders moved to either side of the bed during patient assessment, they kept holding the probe in one hand while using the other hand to examine the patient. The probe implemented on a tablet was mobile enough to allow the leaders to freely move about the trauma room and quickly glance at the checklist when needed. Our participants commented that retrieving information at a glance from the digital checklist—quickly looking to see what items have previously been done and what items are next in the protocol, or quickly checking notes that have been taken—was easier than on paper because it only presented one section of the checklist at a time. This finding is somewhat contrary to the common belief that providing information on paper in one plane allows for an easy, at-a-glance access to all items [13,23]. When moving quickly about the room to access the patient, this ease of glancing makes an important difference and could make visual information retrieval more efficient. It is therefore important to take both of these issues—mobility and ease of glancing—into account when designing for the checklist adaptation. For example, it will still be important to keep the checklist sections (whether in tabs or through a different layout) small so that all items are visible at all times, and there is no need for scrolling, especially if the leaders are on the move. Showing all items for the current page or tab would also allow users to learn the relative positions of items and quickly localize them as they move about.

Our findings have also shown that items on the checklist are checked either on the go (often with a delay), or at the end of the patient assessment, which is often attributed to team leader preference. This discrepancy in checking behaviors could make the design of a context-adaptive checklist challenging because the system will not be able to detect when items are checked relative to task completion, especially if checking happens all at once or at the end. Similarly, our analysis of the probe logs has shown that many items were checked out of order, or before and after task completions, further complicating the adaptive design. Three of the team leaders explained that the order of task performance matters on the primary survey, and if any items are left unchecked, they will immediately direct the team to complete those tasks. The secondary survey, however, is less strict about the order of tasks and our findings confirmed that out-of-order check offs most frequently occurred on the secondary survey tab. These findings have implications for making the checklist adaptable. On the one hand, linear presentation of the checklist items facilitates memorization and visual search for individual items, suggesting a layout with linear lists for all checklist pages. On the other hand, although following the order of steps for both surveys is recommended to avoid selection errors [27], the actual resuscitation workflow structure is often not linear. To balance these requirements, we could leverage adaptive design and visualizations to make the user aware when the sequential order of checking individual items is important but not critical, or to enforce a particular order when it truly matters. Similarly, to encourage timely check offs for completed tasks, the adaptive design could show incomplete tasks by moving checked items to the bottom of the page and populating the top area with unchecked items. Future studies are also needed to examine what tasks are associated with different patient scenarios, and which corresponding items are checked and when, to determine what kinds of checklist adaptations could be made.
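Two of the adaptations discussed above, moving checked items to the bottom of a tab and enforcing order only where it matters, can be sketched as follows. This is a design illustration under assumed names (`Item`, `adaptive_view`, `can_check`), not a description of an implemented system.

```python
from dataclasses import dataclass


@dataclass
class Item:
    label: str
    checked: bool = False


def adaptive_view(items):
    """Show unchecked items first and checked items last.

    Python's sort is stable, so protocol order is preserved within each group.
    """
    return sorted(items, key=lambda item: item.checked)


def can_check(items, label, enforce_order):
    """Decide whether an item may be checked now.

    With enforce_order=True (e.g., a strict-order tab), only the first
    unchecked item in protocol order may be checked; otherwise any
    unchecked item may be.
    """
    unchecked = [item.label for item in items if not item.checked]
    if label not in unchecked:
        return False
    return unchecked[0] == label if enforce_order else True


# Hypothetical tab contents, for illustration only.
tab_items = [
    Item("A", checked=True),
    Item("B"),
    Item("C", checked=True),
    Item("D"),
]
```

In this sketch, `adaptive_view(tab_items)` lists B and D ahead of A and C, and `can_check(tab_items, "D", enforce_order=True)` is refused until B has been checked. Whether a given tab should enforce order or only signal it remains an open design question.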

Sometimes, items on the checklists were also left unchecked for various reasons, including user experience, skipped tasks, inability to perform tasks due to limited time or delays, or user negligence. This partial completion issue impacts the checklist adaptability in the same way as checking items out of order or at the very end. To address this issue, Christov et al. [5], for example, implemented an “X” button and an optional free-form notes field next to items on their smart checklist for nurses to indicate any problems with completing the process steps. A similar feature could also be designed for the resuscitation checklist to facilitate adaptation. A challenge in designing this feature, however, is that Christov et al.’s approach supports a single user or performer, whereas our goal is to support teamwork. With a single performer, there is a single thread of activity, making it easier to know the process status and reasons for incomplete tasks. With teamwork, there may be multiple activity threads in progress, making it difficult to immediately know what was done or not, and why.

Related to this issue is team leaders’ domain experience. As we have observed in our study, more experienced leaders have memorized the ATLS protocol steps and work without referring to the checklist, or they take notes only when findings are abnormal. These observations suggest that checklist adaptation should be tailored to user experience levels as well. This type of adaptation, however, should follow a different approach to allow team leaders to select features based on their individual preferences about note taking or checking items. For example, the system should allow users to take both handwritten and typed notes, depending on what works best for them. Users who “check-as-you-go” might prefer to only view tasks remaining within each tab, so checked items could automatically move to the bottom of the tab. If the patient’s vital signs drastically change during the resuscitation, team leaders might want to record these trends for later reference, as expressed by our participants.

Increasing the system complexity, adding features, and making the checklist adaptive also increase the likelihood of system malfunctioning. As we have seen through the technology probe deployment, our users have already experienced issues with a relatively simple note-taking feature, when the app froze. These and other potential issues suggest that the design of an adaptive checklist must consider what this increasing complexity means for user interaction. We are taking several approaches as we continue with this work. First, our further extensive testing of the system will reveal different issues that future design iterations will resolve. Second, our goal is to keep the design simple and intuitive, without adding many obtrusive clicks or new screens. Third, it will be important to keep the user in the loop, especially as the system becomes “smarter” and less reliant on user input. Finally, the paper checklist will eventually be replaced by its digital counterpart, so the application will need a fallback mechanism, which could simply show the static checklist without interactive features.

CONCLUSION AND STUDY LIMITATIONS

In this study, we deployed a digital checklist in the trauma resuscitation environment using a technology probe approach. We collected information about the probe use through digital logs, video review and interviews. From these data, we examined how the use of the probe differed from the use of a paper checklist. We identified advantages and challenges to using the digital checklist in this setting, and discussed implications for designing an adaptive checklist.

Our study has several limitations. First, only six participants used the digital checklist probe due to resident rotations that are common at teaching hospitals. Residents at our study site rotate every four months, with current residents leaving and new residents joining the division of trauma and burn services. These rotations pose challenges for both the system and study design. For example, two residents left the hospital before the study period concluded, making it difficult to interview them. Additionally, the discontinuous nature of residents’ schedules means that we cannot have users using the checklist probe over longer periods of time. Second, our initial deployment of the digital checklist probe focused on daytime resuscitations. We have recently implemented a locking mechanism to allow team leaders to access the tablet during off hours, nights and weekends. This ability will allow us to collect a more comprehensive sample of use cases. Third, the matching between paper and digital checklist cases was also challenging. For example, there were several cases with large discrepancies in patient age (e.g., 9 months versus 2 years), which could have an impact on some of the observed differences between cases.

Finally, this was a single site study, which could limit the generalizability of our results. However, because most US trauma centers use the same staffing and procedures, and follow the same resuscitation protocol, we believe that our results are at least generalizable to sites within the US. Additionally, it may be difficult to generalize from trauma resuscitation to generic medical care, and from medical care to other disciplines. Even so, trauma resuscitation alone is an important domain. Despite these limitations, we believe that researchers and designers will benefit from our results.

ACKNOWLEDGMENTS

This research is supported by the National Science Foundation under Award Number 1253285, and partially supported by the National Library of Medicine of the National Institutes of Health under Award Number R01LM011834. We thank the medical staff at the research site for participating in this study.

REFERENCES

- 1. Alia K. Amin, Bram T. A. Kersten, Olga A. Kulyk, P. H. Pelgrim, C. M. Wang, and Panos Markopoulos. 2005. SenseMS: A user-centered approach to enrich the messaging experience for teens by non-verbal means. In Proceedings of the 7th International Conference on Human Computer Interaction with Mobile Devices & Services (MobileHCI ’05), 161–166. https://doi.org/10.1145/1085777.1085804

- 2. Kirsten Boehner, Janet Vertesi, Phoebe Sengers, and Paul Dourish. 2007. How HCI interprets the probes. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’07), 1077–1086. https://doi.org/10.1145/1240624.1240789

- 3. Thomas Boillat and Christine Legner. 2015. From paper-based to mobile checklists - a reference model. In Wirtschaftsinformatik, 106–120. http://aisel.aisnet.org/wi2015/8

- 4. Amanda R. Burden, Zyad J. Carr, Gregory W. Staman, Jeffrey J. Littman, and Marc C. Torjman. 2012. Does every code need a “reader?” improvement of rare event management with a cognitive aid “reader” during a simulated emergency: a pilot study. Simulation in Healthcare: Journal of the Society for Simulation in Healthcare 7, 1: 1–9. https://doi.org/10.1097/SIH.0b013e31822c0f20

- 5. Stefan C. Christov, Heather M. Conboy, Nancy Famigletti, George S. Avrunin, Lori A. Clarke, and Leon J. Osterweil. 2016. Smart checklists to improve healthcare outcomes. In Proceedings of the 2016 International Workshop on Software Engineering in Healthcare Systems (SEHS ’16), 54–57. https://doi.org/10.1145/2897683.2897691

- 6. Bill Gaver, Tony Dunne, and Elena Pacenti. 1999. Design: Cultural Probes. interactions 6, 1: 21–29. https://doi.org/10.1145/291224.291235

- 7. Lalya Gaye and Lars Erik Holmquist. 2004. In duet with everyday urban settings: A user study of sonic city. In Proceedings of the 2004 Conference on New Interfaces for Musical Expression (NIME ’04), 161–164.

- 8. Michael J. Gonzales, Joshua M. Henry, Aaron W. Calhoun, and Laurel D. Riek. 2016. Visual TASK: A collaborative cognitive aid for acute care resuscitation. In Proceedings of the 10th EAI International Conference on Pervasive Computing Technologies for Healthcare (Pervasive Health 2016), 1–8.

- 9. Connor Graham, Mark Rouncefield, Martin Gibbs, Frank Vetere, and Keith Cheverst. 2007. How probes work. In Proceedings of the 19th Australasian Conference on Computer-Human Interaction: Entertaining User Interfaces (OZCHI ’07), 29–37. https://doi.org/10.1145/1324892.1324899

- 10. Eliot Grigg. 2015. Smarter clinical checklists: How to minimize checklist fatigue and maximize clinician performance. Anesthesia and Analgesia 121, 2: 570–573. https://doi.org/10.1213/ANE.0000000000000352

- 11. Russell L. Gruen, Gregory J. Jurkovich, Lisa K. McIntyre, Hugh M. Foy, and Ronald V. Maier. 2006. Patterns of errors contributing to trauma mortality. Annals of Surgery 244, 3: 371–380. https://doi.org/10.1097/01.sla.0000234655.83517.56

- 12. Elaine M. Hart and Harry Owen. 2005. Errors and omissions in anesthesia: A pilot study using a pilot’s checklist. Anesthesia and Analgesia 101, 1: 246–250. https://doi.org/10.1213/01.ANE.0000156567.24800.0B

- 13. Christian Heath and Paul Luff. 1996. Documents and professional practice: “bad” organisational reasons for “good” clinical records. In Proceedings of the 1996 ACM Conference on Computer Supported Cooperative Work (CSCW ’96), 354–363. https://doi.org/10.1145/240080.240342

- 14. Hilary Hutchinson, Wendy Mackay, Bo Westerlund, Benjamin B. Bederson, Allison Druin, Catherine Plaisant, Michel Beaudouin-Lafon, Stephane Conversy, Helen Evans, Heiko Hansen, Nicolas Roussel, and Bjorn Eiderback. 2003. Technology probes: Inspiring design for and with families. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’03), 17–24. https://doi.org/10.1145/642611.642616

- 15. Deirdre C. Kelleher, Elizabeth A. Carter, Lauren J. Waterhouse, Samantha E. Parsons, Jennifer L. Fritzeen, and Randall S. Burd. 2014. Effect of a checklist on advanced trauma life support task performance during pediatric trauma resuscitation. Academic Emergency Medicine: Official Journal of the Society for Academic Emergency Medicine 21, 10: 1129–1134. https://doi.org/10.1111/acem.12487

- 16. Heidi S. Kramer and Frank A. Drews. Checking the lists: A systematic review of electronic checklist use in health care. Journal of Biomedical Informatics. https://doi.org/10.1016/j.jbi.2016.09.006

- 17. Angela Lashoher, Eric B. Schneider, Catherine Juillard, Kent Stevens, Elizabeth Colantuoni, and William R. Berry. 2016. Implementation of the World Health Organization Trauma Care Checklist Program in 11 centers across multiple economic strata: Effect on care process measures. World Journal of Surgery: 1–9. https://doi.org/10.1007/s00268-016-3759-8

- 18. Paul Luff and Christian Heath. 1998. Mobility in collaboration. In Proceedings of the 1998 ACM Conference on Computer Supported Cooperative Work (CSCW ’98), 305–314. https://doi.org/10.1145/289444.289505

- 19. Stuart D. Marshall. 2016. Helping experts and expert teams perform under duress: an agenda for cognitive aid research. Anaesthesia. https://doi.org/10.1111/anae.13707

- 20. Stuart D. Marshall, Penelope Sanderson, Cate A. McIntosh, and Helen Kolawole. 2016. The effect of two cognitive aid designs on team functioning during intraoperative anaphylaxis emergencies: a multi-centre simulation study. Anaesthesia 71, 4: 389–404. https://doi.org/10.1111/anae.13332

- 21. Aleksandra Sarcevic, Brett Rosen, Leah Kulp, Ivan Marsic, and Randall Burd. 2016. Design challenges in converting a paper checklist to digital format for dynamic medical settings. In Proceedings of the 10th EAI International Conference on Pervasive Computing Technologies for Healthcare (Pervasive Health 2016), 1–8.

- 22. Aleksandra Sarcevic, Zhan Zhang, Ivan Marsic, and Randall S. Burd. 2016. Checklist as a memory externalization tool during a critical care process. In Proceedings of the 2016 AMIA Annual Symposium (AMIA ’16), 1080–1089.

- 23. Abigail J. Sellen and Richard H.R. Harper. 2003. The Myth of the Paperless Office. MIT Press, Cambridge, MA, USA.

- 24. Charat Thongprayoon, Andrew M. Harrison, John C. O’Horo, Ronaldo A. Sevilla Berrios, Brian W. Pickering, and Vitaly Herasevich. 2014. The effect of an electronic checklist on critical care provider workload, errors, and performance. Journal of Intensive Care Medicine. https://doi.org/10.1177/0885066614558015

- 25. Emiel G. Verdaasdonk, Laurents P. Stassen, Prama P. Widhiasmara, and Jenny Dankelman. 2009. Requirements for the design and implementation of checklists for surgical processes. Surgical Endoscopy 23, 4: 715–26. https://doi.org/10.1007/s00464-008-0044-4

- 26. Scott C. Watkins, Shilo Anders, Anna Clebone, Elisabeth Hughes, Vikram Patel, Laura Zeigler, Yaping Shi, Matthew S. Shotwell, Matthew D. McEvoy, and Matthew B. Weinger. 2016. Mode of information delivery does not effect anesthesia trainee performance during simulated perioperative pediatric critical events: A trial of paper versus electronic cognitive aids. Simulation in Healthcare: Journal of the Society for Simulation in Healthcare 11, 6: 385–393. https://doi.org/10.1097/SIH.0000000000000191

- 27. Rachel Webman, Jennifer Fritzeen, JaeWon Yang, Grace F. Ye, Paul C. Mullan, Faisal G. Qureshi, Sarah H. Parker, Aleksandra Sarcevic, Ivan Marsic, and Randall S. Burd. 2016. Classification and team response to nonroutine events occurring during pediatric trauma resuscitation. Journal of Trauma Acute Care Surgery 81, 4: 666–673. https://doi.org/10.1097/TA.0000000000001196

- 28. Leslie Wu, Jesse Cirimele, Kristen Leach, Stuart Card, Larry Chu, T. Kyle Harrison, and Scott R. Klemmer. 2014. Supporting crisis response with dynamic procedure aids. In Proceedings of the 2014 Conference on Designing Interactive Systems (DIS ’14), 315–324. https://doi.org/10.1145/2598510.2598565