Abstract

Exposing humans to unusual sensory spaces is an effective way to observe how they adapt to a new environment. Although adaptation to such spaces has typically been tested in vision, adaptation to left–right reversed audition has received little attention, partly because of the limitations of the available apparatus. Thus, it remains unclear whether adaptation effects extend to early auditory processing. Here, we constructed a left–right reversed stereophonic system using only wearable devices and asked two participants to wear it for 4 weeks. Every week, magnetoencephalographic responses were measured during a selective reaction time task, in which participants immediately discriminated sounds delivered to the left or the right ear and responded with the index finger on the compatible or incompatible side. The constructed system performed well in sound localization tests, and the participants' feeling of strangeness gradually decreased. The N1m intensities for response-compatible sounds tended to be larger than those for response-incompatible sounds until the third week but fell below them in the fourth week; this pattern correlated with the reaction times, which were initially shorter for the compatible condition and longer for the incompatible condition. In the second week, auditory-motor connectivity was disrupted, coinciding with the largest N1m intensities and the longest reaction times irrespective of compatibility. In conclusion, our system produced a high-quality left–right reversed auditory space. The results suggest that 4-week exposure to reversed audition optimizes auditory-motor coordination according to the new mapping, which eventually modulates early auditory processing.

Keywords: Auditory adaptation, Early auditory processing, Unusual environment, Neural plasticity, Stimulus-response compatibility, Magnetoencephalography (MEG)

Introduction

Long-term exposure of humans to unusual sensory spaces is an effective way to study adaptive strategies for a new environment. Adaptation to unusual spaces has typically been tested in the visual modality, in which left–right reversed vision [1–4] or up–down reversed vision [5, 6] was produced with prisms. These studies have mainly focused on the optimization of visual-motor coordination during adaptation to reversed vision. Participants generally wore simple apparatus for several weeks to 1.5 months, and visual adaptation and its after-effects were found to occur both perceptually and behaviorally, with modulation of early visual processing [1, 3].

Despite the numerous reports on unusual vision, however, fewer attempts have been made to examine adaptation to unusual audition [7–9]. In particular, long-term adaptation to left–right reversed audition has been tested in only a limited number of psychological studies. Young [7] constructed a left–right reversed version of the 'pseudophone' [10], composed of two curved trumpets inserted into the ear canals in a crossed manner, and was the first to test adaptation, wearing it himself continuously for up to 3 days and for 85 h in total. Willey et al. [8] repeated Young's experiment with three participants who wore the device continuously for 3, 7, and 8 days. Although these two studies eventually reported that sounds were localized toward their visual counterparts and that movements toward reversed sounds could be deliberately corrected, sounds without visual cues were consistently localized in the reversed direction throughout the exposure period, and no after-effect on sound localization was detected. These results argue against adaptation effects reaching the auditory sensory level with this apparatus and exposure period [11]. Since then, some researchers have examined reversed audition with electronic versions of the pseudophone [9, 12]. Hofman et al. [9] developed a more precise apparatus and tested adaptation to reversed audition in two participants who wore it continuously for 3 days and 3 weeks, respectively, but again failed to detect auditory sensory adaptation. In contrast, relatively short exposure to sounds displaced by a small angle has been reported to cause not only visual capture of sound-source locations but also shifts in the localization of unseen sounds during and even after the exposure period [13, 14], indicating auditory adaptation at a sensory level.
Given these apparently conflicting findings, we hypothesized that longer exposure to left–right reversed audition with a higher-quality apparatus would modulate early auditory processing along with optimizing auditory-motor coordination, as in the case of reversed vision. In this study, we therefore constructed a left–right reversed stereophonic system using only recently available wearable devices and tested adaptation with a selective reaction time task using magnetoencephalography (MEG) in two participants who wore the system continuously for 4 weeks.

Methods

Left–right reversed audition system

Construction of the reversed audition system

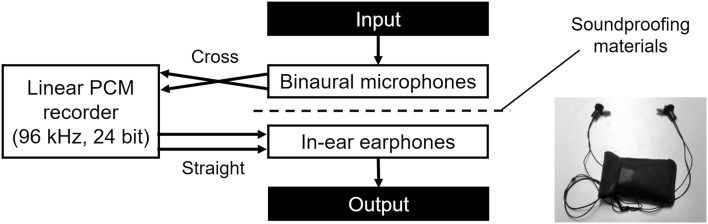

The left–right reversed stereophonic system was constructed using only wearable devices. As shown in Fig. 1, the microphone parts of binaural earphones/microphones (CS-10EM, Roland Corp., Hamamatsu, Shizuoka, Japan), fitted with dedicated windscreens to suppress wind noise, were cross-connected to a linear PCM recorder (PCM-M10, Sony Corp., Tokyo, Japan), so that the left-ear microphone fed the right channel and vice versa. The recorder digitized the analogue sound signals at 96 kHz with a 24-bit depth, and the digitized signals were immediately played through in-ear earphones (ER-4B, Etymotic Research Inc., Elk Grove Village, Illinois, USA) connected directly to the recorder. The binaural microphones and the in-ear earphones support three-dimensional sound capture and reproduction and were slightly isolated by soundproofing materials. The main unit of the system was carried in a pocket-sized bag.
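In this system the reversal is produced entirely by the crossed wiring; the recorder only digitizes and plays the signal back. For readers who want to reproduce the idea in software, a minimal sketch is shown below. It assumes a stereo buffer with the left ear in column 0, and the function names are hypothetical (they are not part of any device firmware). It also converts the 2-ms delay reported later into samples at the stated 96-kHz rate.

```python
import numpy as np

SAMPLE_RATE_HZ = 96_000  # sampling rate of the recorder, from the text


def reverse_left_right(block: np.ndarray) -> np.ndarray:
    """Return a copy of a (n_samples, 2) stereo block with its channels swapped.

    Assumes column 0 = left ear and column 1 = right ear. Swapping the columns
    has the same effect as cross-wiring the microphones: the left-ear signal is
    played to the right ear and vice versa.
    """
    if block.ndim != 2 or block.shape[1] != 2:
        raise ValueError("expected an array of shape (n_samples, 2)")
    return block[:, ::-1].copy()


def delay_in_samples(delay_s: float, fs: int = SAMPLE_RATE_HZ) -> int:
    """Convert a pass-through delay in seconds to a whole number of samples."""
    return round(delay_s * fs)


# Example: 1 ms of a 1000-Hz tone recorded only at the left ear.
t = np.arange(96) / SAMPLE_RATE_HZ
left = np.sin(2 * np.pi * 1000 * t)
right = np.zeros_like(left)
swapped = reverse_left_right(np.column_stack([left, right]))
# After reversal the tone is present only in the right-ear channel.
print(delay_in_samples(0.002))  # 192 samples for a 2-ms delay
```

A real-time implementation would apply the swap block by block; the fixed buffer length then sets the pass-through delay.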

Fig. 1.

Left–right reversed audition system. Binaural microphones are cross-connected to a linear PCM recorder, which digitizes the analog sound signals. The digital signals are immediately played through in-ear earphones connected directly to the recorder. The microphones and the earphones are slightly isolated by soundproofing materials

Verification of the reversed audition system

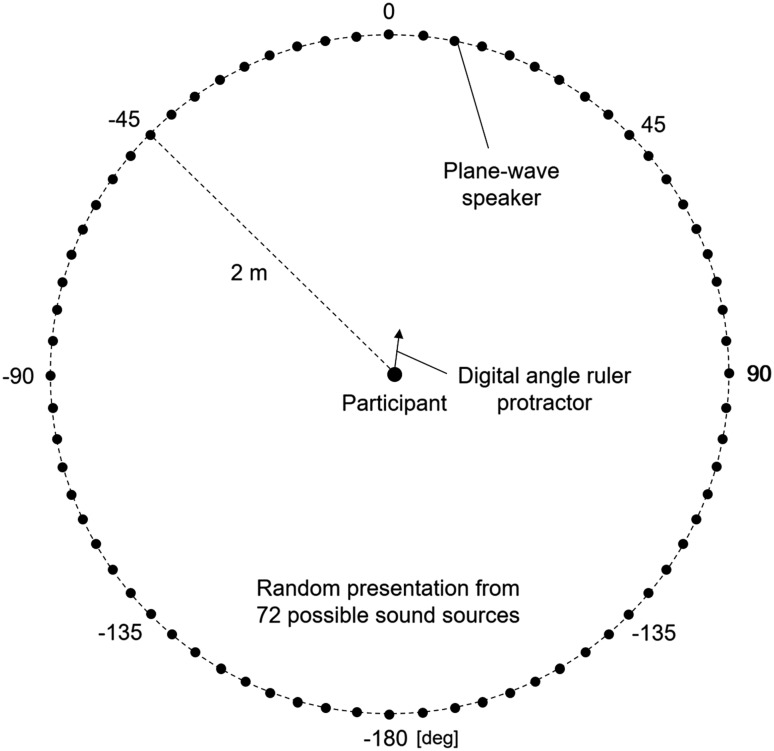

The potential delay of the reversed audition system was evaluated by comparing the onset timing of an actual sound with that of the same sound reversed through the system. Next, to verify the left–right reversed stereophonic space, sound source localization was tested with and without the system, as shown in Fig. 2. In an anechoic room, 1000-Hz tones at 65 dB sound pressure level were presented from one of 72 sound sources through plane-wave speakers (SS-2101, Alphagreen Co., Ltd., Yokohama, Kanagawa, Japan). Each sound lasted 10 s and was immediately followed by a sound from another location. Each sound source was used once, in random order. The sound sources were placed every 5 degrees from −180 to 175 degrees, clockwise, along a circle with a radius of 2 m, and faced the center of the circle, where a digital angle ruler protractor (5422-200, Wenzhou Sanhe Measuring Instrument Co., Ltd., Wenzhou, Zhejiang, China) was placed. Six blindfolded participants, positioned at the center of the circle and facing the 0-degree sound source, were instructed to indicate the perceived direction of each sound with the protractor while it was playing, in both the normal and reversed conditions.
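Under an ideal left–right reversal, a source at angle θ (measured clockwise from the front) should be perceived at −θ, so sources on the median plane (0 and −180 degrees) are unchanged. A minimal sketch of the stimulus schedule and of this reference mapping follows, assuming whole-degree angles; the function names and the `seed` argument are hypothetical and not taken from the authors' setup.

```python
import random
from typing import List, Optional

# 72 source angles every 5 degrees, clockwise, 0 = straight ahead (from the text).
ANGLES: List[int] = list(range(-180, 180, 5))


def presentation_order(seed: Optional[int] = None) -> List[int]:
    """Each source exactly once, in random order (the seed is hypothetical)."""
    order = ANGLES.copy()
    random.Random(seed).shuffle(order)
    return order


def mirror_about_median_plane(angle_deg: int) -> int:
    """Ideal left-right reversed percept: theta -> -theta, wrapped to [-180, 180).

    Front (0) and back (-180) lie on the median plane and are unchanged;
    a source at +90 (right) should be heard at -90 (left).
    """
    return (-angle_deg + 180) % 360 - 180


order = presentation_order(seed=1)
expected = [mirror_about_median_plane(a) for a in order]
```

Comparing each reported angle in the reversed condition with `expected` gives the deviation from perfect mirroring.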

Fig. 2.

Experimental setup for sound source localization. In an anechoic room, 1000-Hz tones are presented from one of 72 sound sources ranging from −180 to 175 degrees through plane-wave speakers directed toward the center of the circle, where a digital angle ruler protractor is placed. The sound at each location lasts 10 s and is immediately followed by a sound at another location. Each sound source is used once, in random order

Adaptation to left–right reversed audition

Procedure of adaptation

Two right-handed healthy men in their twenties (Participants A and B) took part in the experiments on adaptation to left–right reversed audition. They wore the left–right reversed audition system continuously for 4 weeks, except during sleep, bathing, and battery/memory swaps, and were accompanied by an observer as much as possible. During these periods, earplugs were inserted into their ears so that unreversed sounds would not interfere with adaptation. The system was also taken off during MEG recordings. Each time they put on the system, the sound pressure level was carefully adjusted to the point of subjective equality with the normal condition. Informed consent was obtained after each participant received a detailed explanation of the experimental procedure. The experimental protocols were approved by the Ethics Commission of Tokyo Denki University.

MEG experiment during adaptation

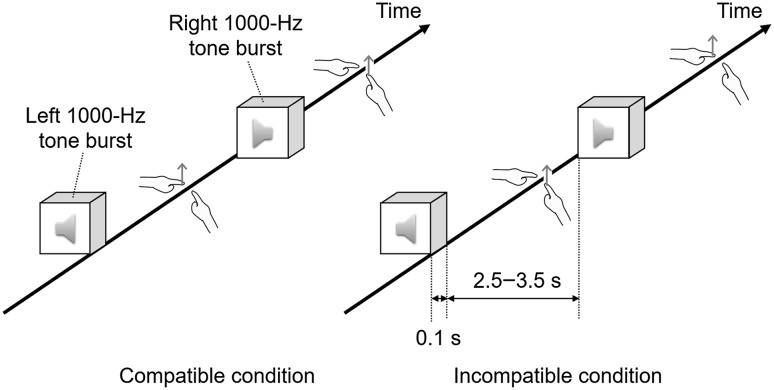

As shown in Fig. 3, MEG responses were recorded during a selective reaction time task once a week throughout the adaptation period, including the pre- and post-exposure periods. In a dimly lit magnetically shielded room, monaural 1000-Hz tone bursts of 0.1-s duration were delivered to either the left or the right ear at 65 dB sound pressure level through sponge insert earphones with plastic ear tubes (ER-2, Etymotic Research Inc., Elk Grove Village, Illinois, USA). Within each block, the stimuli were presented 80 times in pseudorandom order with an inter-stimulus interval of 2.5–3.5 s; there were four blocks in total, separated by intervals of at least 30 s. In two blocks, participants were instructed to respond immediately to right-ear stimuli with the right index finger and to left-ear stimuli with the left index finger. In the other two blocks, they were instructed to respond immediately to right-ear stimuli with the left index finger and to left-ear stimuli with the right index finger. Compatible and incompatible blocks were alternated. Participants were also instructed to keep their gaze on a cross-shaped fixation point placed directly in front of them.
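The block structure can be made concrete as a trial list. The sketch below is an illustration only and makes several assumptions that the text does not state: "pseudorandom" is taken to mean equal numbers of left and right stimuli per block (40 each), shuffled; the inter-stimulus interval is drawn uniformly from 2.5–3.5 s; and the session starts with a compatible block. The names `Trial`, `required_hand`, and `make_session` are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

N_TRIALS_PER_BLOCK = 80          # from the text
ISI_RANGE_S = (2.5, 3.5)         # from the text
# Alternating rules; starting with a compatible block is an assumption.
BLOCK_RULES = ("compatible", "incompatible", "compatible", "incompatible")


@dataclass
class Trial:
    block: int           # 1-based block index
    rule: str            # "compatible" or "incompatible"
    ear: str             # ear receiving the tone burst: "left" or "right"
    response_hand: str   # index finger that should respond
    isi_s: float         # interval to the next stimulus, in seconds


def required_hand(ear: str, rule: str) -> str:
    """Same-side finger in compatible blocks, opposite-side finger otherwise."""
    if rule == "compatible":
        return ear
    return "right" if ear == "left" else "left"


def make_session(seed: Optional[int] = None) -> List[Trial]:
    rng = random.Random(seed)
    trials: List[Trial] = []
    for block, rule in enumerate(BLOCK_RULES, start=1):
        # Assumption: "pseudorandom" = 40 left + 40 right tones, shuffled.
        ears = ["left", "right"] * (N_TRIALS_PER_BLOCK // 2)
        rng.shuffle(ears)
        for ear in ears:
            trials.append(Trial(block, rule, ear, required_hand(ear, rule),
                                rng.uniform(*ISI_RANGE_S)))
    return trials


session = make_session(seed=1)
print(len(session))  # 320 trials = 4 blocks x 80
```

Balancing left and right stimuli within each block keeps the compatibility comparison from being confounded by an unequal number of stimuli per ear.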

Fig. 3.

Selective reaction time task. Tone bursts of 1000 Hz are delivered pseudorandomly to either the left or the right ear. In compatible blocks, participants are instructed to respond immediately with the index finger on the same side as the stimulated ear. In incompatible blocks, they are instructed to respond immediately with the index finger on the opposite side. Each block has 80 presentations, and compatible and incompatible blocks are alternated

Outside the shielded room, we used a three-dimensional digitizer (FASTRAK, Polhemus, Colchester, Vermont, USA) to record the locations of three fiducial landmarks (the nasion and the two preauricular points), the centers of the four head-position indicator coils attached to the scalp, and more than 30 additional points on the scalp. The landmarks were used to define the MEG head coordinate system, and all points were used to align the MEG data with an inflated cortical surface model. The weak magnetic fields produced by the coils were recorded to determine the locations of the MEG sensors relative to the head coordinate system. The MEG data were recorded with a 122-channel Neuromag MEG system (Neuromag-122™, Neuromag Ltd., Helsinki, Finland) with 61 pairs of orthogonally oriented planar gradiometers. The sampling rate was 1 kHz, and the analog recording passband was 0.03–200 Hz.

Analysis of the MEG data during adaptation

Epochs contaminated with eye-related artifacts were rejected. The remaining MEG data were averaged from 100 ms before to 500 ms after stimulus onset for each stimulus type. The offset was removed using the pre-stimulus interval as a baseline, and the averaged data were digitally low-pass filtered at 40 Hz. Source modeling of the averaged MEG responses was conducted with the MNE software package [15, 16]. After co-registration of the MEG and magnetic resonance imaging (MRI) coordinate systems, the magnetic fields produced by current dipoles placed at each source-space location were computed at each sensor location, using a boundary-element model derived from each participant's structural MRIs with the FreeSurfer software package [17, 18]. The inverse operator for source estimation was then computed from the forward solution, the noise covariance matrix, and the source covariance matrix. By applying the operator to each time point of the averaged MEG data, minimum-norm estimates (MNEs) and noise-normalized MNEs, called dynamic statistical parametric maps (dSPMs), were calculated. These estimates were overlaid onto the individual inflated cortical surfaces reconstructed from the MRIs with FreeSurfer and morphed onto a FreeSurfer average brain to obtain grand-average estimates. Because dSPMs represent statistical test variables with little location bias, they were used for source localization to define the regions of interest (ROIs); MNEs were used to compare conditions within the ROIs (for a similar analysis, see e.g., [19]).

To examine changes in auditory-motor functional connectivity over time, we then performed Granger-causal analysis [20] using the MVGC Multivariate Granger Causality Matlab Toolbox [21]. Based on the dSPMs, four pairs of MEG channels were selected, one pair most sensitive to each of the left and right auditory and motor activities. For each channel pair, the averaged MEG data were subtracted from each single-trial MEG data, and the zero-mean residuals from 90 ms (the peak latency of the N1m components) to 500 ms, averaged within each channel pair, were used to estimate a vector autoregressive model. Based on this model, the Granger causality test among the four areas was conducted pairwise in the time domain.
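The connectivity steps can be sketched as follows: subtract the evoked average from every trial, crop the residuals to 90–500 ms, and run a pairwise time-domain Granger F-test between two regional signals. This is a plain NumPy/SciPy re-implementation written for illustration, not the MVGC toolbox the authors used. The fixed model order `p` and all function names are assumptions (MVGC typically selects the order with an information criterion), and each input array is assumed to already hold one channel-pair-averaged signal per trial.

```python
import numpy as np
from scipy import stats

FS_HZ = 1000            # MEG sampling rate (from the text)
EPOCH_START_MS = -100   # epochs start 100 ms before stimulus onset


def residuals(trials: np.ndarray) -> np.ndarray:
    """Subtract the evoked (trial-averaged) response from every trial.

    trials: array of shape (n_trials, n_times) for one regional signal.
    """
    return trials - trials.mean(axis=0, keepdims=True)


def crop(data: np.ndarray, t0_ms: int = 90, t1_ms: int = 500) -> np.ndarray:
    """Keep samples from t0_ms to t1_ms (inclusive) along the last axis."""
    i0 = (t0_ms - EPOCH_START_MS) * FS_HZ // 1000
    i1 = (t1_ms - EPOCH_START_MS) * FS_HZ // 1000 + 1
    return data[..., i0:i1]


def _lags(series: np.ndarray, p: int) -> np.ndarray:
    """Lags 1..p of each trial, one row per predicted sample (trial-major order)."""
    n_times = series.shape[1]
    cols = [series[:, p - k : n_times - k] for k in range(1, p + 1)]
    return np.stack(cols, axis=-1).reshape(-1, p)


def _rss(design: np.ndarray, target: np.ndarray) -> float:
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    return float(np.sum((target - design @ coef) ** 2))


def granger_f_test(x: np.ndarray, y: np.ndarray, p: int = 5):
    """Pairwise time-domain Granger test of 'x -> y' with model order p.

    Compares an autoregressive model of y on its own past (restricted) with one
    that also includes the past of x (full), pooling rows over trials without
    crossing trial boundaries. Returns (F statistic, p-value).
    """
    target = y[:, p:].reshape(-1)
    intercept = np.ones((target.size, 1))
    restricted = np.hstack([intercept, _lags(y, p)])
    full = np.hstack([restricted, _lags(x, p)])
    rss_r, rss_f = _rss(restricted, target), _rss(full, target)
    df_den = target.size - full.shape[1]
    f_stat = ((rss_r - rss_f) / p) / (rss_f / df_den)
    return f_stat, float(stats.f.sf(f_stat, p, df_den))


# Synthetic check: y follows x with a one-sample lag, so x -> y should be significant.
rng = np.random.default_rng(0)
x = rng.standard_normal((50, 411))   # 411 samples = 90-500 ms at 1 kHz
y = np.zeros_like(x)
y[:, 1:] = 0.8 * x[:, :-1] + 0.5 * rng.standard_normal((50, 410))
f_xy, p_xy = granger_f_test(residuals(x), residuals(y), p=2)
```

Testing each ordered pair of the four regional signals with `granger_f_test` and thresholding at p < 0.05 yields a 4 × 4 significance pattern of the kind summarized in Fig. 7.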

Results

Left–right reversed audition system

The left–right reversed stereophonic system, built from wearable devices, was small enough to fit easily into a pocket. Wind noise was suppressed by the dedicated windscreens attached to the system. The system introduced a constant delay of 2 ms. With the system, all sounds were confirmed to be reversed with respect to the median plane without any perceptible delay, and the original sounds were virtually inaudible. Figure 4 shows the results of sound source localization over a 360-degree field, averaged across six participants, in the normal and reversed conditions. The perceptual angles in the normal condition were strongly positively correlated with the physical angles (adjusted R² = 0.99), and those in the reversed condition were strongly negatively correlated with the physical angles (adjusted R² = 0.96), although small fluctuations were observed (Fig. 4a). Moreover, the reversed perceptual angles were more strongly (negatively) correlated with the normal perceptual angles (adjusted R² = 0.98) than with the physical angles (Fig. 4b).
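For a straight-line fit with a single predictor, adjusted R² = 1 − (1 − R²)(n − 1)/(n − 2). If the fits use the 72 averaged points, the adjustment changes R² by less than 0.001 for values in the reported range. A minimal sketch of the computation follows; the function name is hypothetical. Note that a linear fit treats −180 and 180 degrees as far apart; the text does not state how the wrap-around behind the listener was handled, so this sketch simply ignores it.

```python
import numpy as np


def adjusted_r2(x, y) -> float:
    """Adjusted R^2 of an ordinary least-squares line y = intercept + slope * x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    slope, intercept = np.polyfit(x, y, 1)
    residual = y - (intercept + slope * x)
    r2 = 1.0 - np.sum(residual ** 2) / np.sum((y - y.mean()) ** 2)
    n, k = y.size, 1  # k = number of predictors
    return float(1.0 - (1.0 - r2) * (n - 1) / (n - k - 1))


physical = np.arange(-180, 180, 5)   # the 72 source angles
ideal_reversed = -physical           # perfect mirror percepts (wrap ignored)
print(adjusted_r2(physical, ideal_reversed))  # approximately 1.0
```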

Fig. 4.

Results of localization of 72 sound sources ranging from −180 to 175 degrees, measured clockwise from the front, averaged across six participants in the normal and reversed conditions. a Correlation between physical and perceptual angles in the normal (white dots; adjusted R² = 0.99) and reversed (dark gray dots; adjusted R² = 0.96) conditions. b Correlation between normal and reversed perceptual angles (light gray dots; adjusted R² = 0.98). The reversed perceptual angles are more strongly (negatively) correlated with the normal perceptual angles than with the physical angles

Adaptation to left–right reversed audition

General results during adaptation

Every time the system was used, it was carefully confirmed that all sounds were properly reversed and that the sounds themselves were heard naturally in daily life. Because participants wearing the system looked as if they were listening to music on a mobile music player, they reported no stress about being seen by anyone, anywhere, at any time. At first, they felt a sense of strangeness when sounds were accompanied by visual information or associated with body movements. For example, when someone knocked at the door, someone spoke out of view, or something approached from behind, the participant turned toward the opposite side and only then suddenly noticed the situation. Talking smoothly on the phone was difficult because the voice was heard on the side opposite to the receiver. This feeling, however, began to decrease from the first week, and mirror-image sounds were gradually accepted as 'normal' when accompanied by visual information and movements, although sounds without any cue were still perceived as 'reversed' even at the end of the exposure period. Moreover, body movements toward mirror-image sounds became possible in a deliberate way from the first week and became more routine by the end of the experimental period. After the system was taken off, no obvious after-effect was perceived for sounds alone, and everything eventually returned to baseline.

Results of the MEG experiment during adaptation

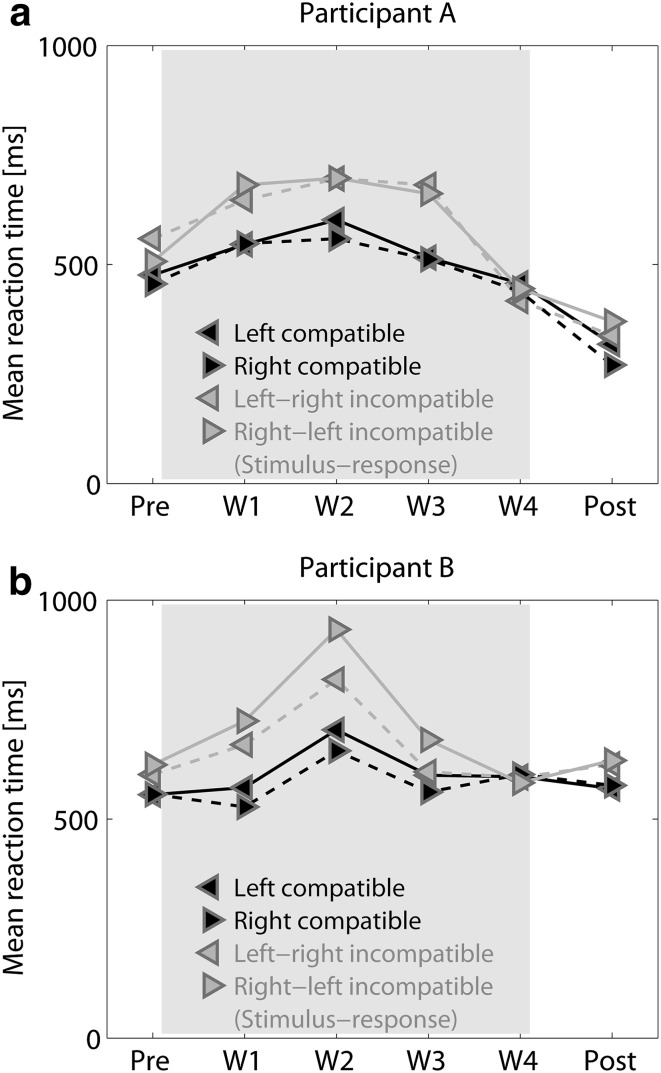

It was confirmed that the participants followed the instructions for each MEG experiment as closely as possible. Figure 5 shows the mean reaction times in the selective reaction time task before, during, and after the exposure period. In both Participants A and B, the reaction times for response-incompatible sounds were 50–200 ms longer than those for response-compatible sounds until the third week, but became 55–60 ms shorter in the fourth week. Moreover, the mean reaction times were longest (550–940 ms) in the second week irrespective of compatibility and returned to the initial level after the exposure period, although in Participant A they were 175 ms shorter in the post-period than in the pre-period.

Fig. 5.

Mean reaction times during the selective reaction time task in a Participant A and b Participant B. The reaction times for response-incompatible sounds are longer than those for response-compatible sounds until the third week (W3) but become slightly shorter in the fourth week (W4). Moreover, the mean reaction times are longest in the second week (W2) irrespective of compatibility and return to the initial level after the exposure period (shaded in gray)

Figure 6 shows the characteristics of the N1m components evoked during the selective reaction time task. As shown in Fig. 6a, in the dSPM waveforms within the ROIs of the left and right cortices, N1m components were observed bilaterally at 90 ms (left cortex) and 92 ms (right cortex) after sound onset in every condition for both participants, and their sources were located in the bilateral superior temporal planes (STPs). Figures 6b and 6c show how the N1m intensities within the STP ROIs for response-compatible and response-incompatible sounds changed over the exposure period in Participants A and B, respectively. The N1m intensities in the compatible conditions were 1.7–8.3 pAm larger than those in the incompatible conditions from the pre-period to the third week, but were 0.6 pAm smaller in the fourth week in both hemispheres. Moreover, the intensities were largest (16–67 pAm) in the second week irrespective of compatibility and hemisphere. After the system was taken off, they returned to the initial level. A similar trend was also seen for the P2m in both hemispheres.

Figure 7 shows the temporal changes in functional connectivity among the bilateral auditory and motor areas at a threshold of p < 0.05 during the selective reaction time task. Before the participants wore the system, all four areas were well interconnected in all conditions, but the auditory-motor connectivity became unstable from the first week and, in the second week, was transiently disrupted overall irrespective of compatibility, especially the right motor-to-auditory feedback and the left-to-right motor communication. The disruption recovered thereafter, although the connectivity remained unstable.
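The N1m latencies and intensities reported in these results are read off ROI source waveforms. A minimal sketch of that peak-picking step is shown below, assuming a non-negative (magnitude) ROI waveform sampled at 1 kHz and a hypothetical 70–130 ms search window; the study does not state the window it used, and the function name is illustrative. The compatibility effect would then be the compatible minus the incompatible peak amplitude.

```python
import numpy as np


def peak_in_window(times_ms, waveform, t_min_ms=70.0, t_max_ms=130.0):
    """Latency and amplitude of the largest value of `waveform` within a window.

    times_ms, waveform: 1-D arrays of equal length (e.g. an ROI source waveform).
    The 70-130 ms default window is an assumption, not taken from the study.
    """
    times_ms = np.asarray(times_ms, dtype=float)
    waveform = np.asarray(waveform, dtype=float)
    idx = np.flatnonzero((times_ms >= t_min_ms) & (times_ms <= t_max_ms))
    if idx.size == 0:
        raise ValueError("window contains no samples")
    best = idx[np.argmax(waveform[idx])]
    return times_ms[best], waveform[best]


# Synthetic ROI waveform at 1 kHz: an "N1m" at 90 ms and a later, larger deflection.
times = np.arange(-100, 501, dtype=float)
wave = 20 * np.exp(-((times - 90) / 15) ** 2) + 30 * np.exp(-((times - 200) / 20) ** 2)
latency, amplitude = peak_in_window(times, wave)
```

Restricting the search to a window keeps a later, larger deflection (here at 200 ms) from being mistaken for the N1m.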

Fig. 6.

Characteristics of N1m components during the selective reaction time task. a Dynamic statistical parametric mapping waveforms averaged across all conditions for the left and right cortices, and source localization of the N1m components. The N1m components are detected at about 90 ms after sound onset in the bilateral superior temporal planes (STPs). b, c Changes in N1m intensities within the STP ROIs for response-compatible and response-incompatible sounds over the exposure period in Participants A and B, respectively. The N1m intensities in the compatible conditions tend to be larger than those in the incompatible conditions from Pre to the third week (W3) but fall below them in the fourth week (W4) in both hemispheres. The intensities are largest in the second week (W2) irrespective of compatibility and hemisphere

Fig. 7.

Temporal changes in functional connectivity among the left and right auditory (LA and RA, respectively) and motor (LM and RM, respectively) areas at a threshold of p < 0.05 during the selective reaction time task. White, gray, and black squares indicate the number of participants showing significance in the Granger causality test (N = 0, 1, and 2, respectively). The initially interconnected auditory-motor network becomes unstable from the first week and is transiently disrupted overall in the second week. The disruption recovers thereafter, although instability persists

Discussion

Validity of the left–right reversed audition system

As planned, a wearable system producing a left–right reversed stereophonic space was successfully constructed, with the original sounds and wind noise sufficiently suppressed. Although sounds reversed through the system were constantly delayed by 2 ms relative to the original sounds, this delay is small enough to ignore given human temporal auditory acuity [22]; indeed, the participants did not perceive any delay. Moreover, the reversed perceptual angles were more linearly related to the normal perceptual angles than to the physical angles. This suggests that the system not only produces a 360-degree stereophonic space but also preserves the characteristics of human sound localization. In these respects, the quality of the current system is superior to that of the previously proposed electronic apparatuses for left–right reversed audition [9, 12]. We therefore conclude that the constructed system is valid for testing adaptation to left–right reversed audition.

General adaptation to left–right reversed audition

According to the participants' reports, they complied with the instructions throughout the experimental period and paid little attention to their appearance with the system, because wearing it visually resembled listening to music on a mobile music player. In this respect, the system was less obtrusive than the prisms used for left–right reversed vision [1–4] and the curved trumpets used for reversed audition [7, 8], and it allowed adaptation to proceed free from environmental constraints. Indeed, the feeling of strangeness gradually decreased in the participants' daily lives and had almost disappeared by the end of the exposure period, similar to the cases of reversed vision [1–4]. Unlike in the visual cases, however, auditory perception alone did not appear to adapt, even though the exposure period was extended to 4 weeks and a higher-quality apparatus was used than in previous studies [7–9]. Notably, unlike in those studies, movements toward mirror-image sounds became routine by the end of the experimental period. This suggests that a low-level optimization of auditory-motor coordination was achieved with the current approach.

Behavioral and neural adaptation to left–right reversed audition

The present study hypothesized that our approach would modulate early auditory processing along with optimizing auditory-motor coordination. Indeed, the intensities of the N1m components, generated in the bilateral STPs including the auditory cortices, varied over time, and this temporal trend was correlated with the reaction times in two respects: (1) absolute values irrespective of response compatibility (i.e., both tended to peak in the second week, together with an overall disruption of auditory-motor connectivity), and (2) relative values depending on stimulus-response compatibility (i.e., the compatible-incompatible relationship tended to invert in the fourth week). For the first point, it should be noted that N1m intensities are sensitive to arousal states [23], which depend on the participant's mental condition at the time. However, the intensities and reaction times showed clear peaks, which are difficult to explain by arousal effects alone. Moreover, an N1m increase accompanied by prolonged reaction times is at odds with the view that additional recruitment of auditory neurons facilitates perceptual decisions [24]. Given the instability and the transient second-week disruption of auditory-motor connectivity, these phenomena are considered to reflect auditory-motor optimization processes for the new auditory environment, with efficient processing becoming possible again as the optimization proceeds. The second point relates more to the outcome of optimization. Initially, the differences between the N1m intensities for response-compatible and response-incompatible sounds were slight, consistent with event-related potential studies [25, 26], but the response-compatible intensities never fell below the incompatible intensities during the first 3 weeks.
In line with this trend, reaction times were shorter for compatible stimuli and longer for incompatible stimuli, consistent with the general pattern of auditory stimulus-response compatibility [27, 28]. Notably, the differences between them widened, presumably reflecting preferential optimization processes accompanied by longer compatibility-independent reaction times and disrupted auditory-motor connectivity. In the fourth week, however, the compatible-incompatible relationship inverted for both the N1m intensities and the reaction times. Because stimulus-locked motor activity has been reported to appear at about 110 ms post-stimulus [29] and no motor activity was actually observed at the N1m latencies in the present study, motor activity is unlikely to have spilled over into the auditory cortex, supporting the validity of the N1m evaluation. Cochlear implant studies support the notion that the N1 (the electroencephalographic counterpart of the N1m) can be plastically modulated [30, 31], although in the present study neural activity returned to baseline after the system was taken off. Accordingly, we conclude that 4-week exposure to left–right reversed audition modulates early auditory processing along with auditory-motor optimization. Although further studies are needed to elucidate the overall adaptation effects, to our knowledge this is the first study to construct a precise left–right reversed stereophonic system and to test long-term adaptation with it. Like the combination of transcranial brain stimulation with delayed MEG recording [32], combining a wearable system with MEG could be an effective approach to uncovering brain functions.

Acknowledgements

This work was partially supported by JSPS KAKENHI Grant Number JP26730078. The authors thank Kazuhiro Shigeta and Takayuki Hoshino for technical assistance.

Conflict of interest

The authors declare that they have no conflicts of interest in relation to this article.

References

1. Sugita Y. Visual evoked potentials of adaptation to left–right reversed vision. Percept Mot Skills. 1994;79(2):1047–1054. doi: 10.2466/pms.1994.79.2.1047.

2. Sekiyama K, Miyauchi S, Imaruoka T, Egusa H, Tashiro T. Body image as a visuomotor transformation device revealed in adaptation to reversed vision. Nature. 2000;407(6802):374–377. doi: 10.1038/35030096.

3. Miyauchi S, Egusa H, Amagase M, Sekiyama K, Imaruoka T, Tashiro T. Adaptation to left–right reversed vision rapidly activates ipsilateral visual cortex in humans. J Physiol Paris. 2004;98(1–3):207–219. doi: 10.1016/j.jphysparis.2004.03.014.

4. Sekiyama K, Hashimoto K, Sugita Y. Visuo-somatosensory reorganization in perceptual adaptation to reversed vision. Acta Psychol (Amst). 2012;141(2):231–242. doi: 10.1016/j.actpsy.2012.05.011.

5. Stratton GM. Some preliminary experiments on vision without inversion of the retinal image. Psychol Rev. 1896;3(6):611–617. doi: 10.1037/h0072918.

6. Linden DE, Kallenbach U, Heinecke A, Singer W, Goebel R. The myth of upright vision. A psychophysical and functional imaging study of adaptation to inverting spectacles. Perception. 1999;28(4):469–481. doi: 10.1068/p2820.

7. Young TP. Auditory localization with acoustical transposition of the ears. J Exp Psychol. 1928;11(6):399–429. doi: 10.1037/h0073089.

8. Willey CF, Inglis E, Pearce CH. Reversal of auditory localization. J Exp Psychol. 1937;20(2):114–130. doi: 10.1037/h0056793.

9. Hofman PM, Vlaming MS, Termeer PJ, Van Opstal AJ. A method to induce swapped binaural hearing. J Neurosci Methods. 2002;113(2):167–179. doi: 10.1016/S0165-0270(01)00490-3.

10. Thompson SP. The pseudophone. Philos Mag Ser 5. 1879;8(50):385–390. doi: 10.1080/14786447908639700.

11. Welch RB. Perceptual modification: adapting to altered sensory environments. New York: Academic Press; 1978.

12. Ohtsubo H, Teshima T, Najamizo S. Effects of head movements on sound localization with an electronic pseudophone. Jpn Psychol Res. 1980;22(3):110–118. doi: 10.4992/psycholres1954.22.110.

13. Held R. Shifts in binaural localization after prolonged exposures to atypical combinations of stimuli. Am J Psychol. 1955;68(4):526–548. doi: 10.2307/1418782.

14. Freedman SJ, Stampfer K. The effects of displaced ears on auditory localization. Technical Report AFOSR. 1964; 64-0938, p. 1–24.

15. Hämäläinen MS, Ilmoniemi RJ. Interpreting magnetic fields of the brain: minimum norm estimates. Med Biol Eng Comput. 1994;32(1):35–42. doi: 10.1007/BF02512476.

16. Dale AM, Liu AK, Fischl BR, Buckner RL, Belliveau JW, Lewine JD, Halgren E. Dynamic statistical parametric mapping: combining fMRI and MEG for high-resolution imaging of cortical activity. Neuron. 2000;26(1):55–67. doi: 10.1016/S0896-6273(00)81138-1.

17. Dale AM, Fischl B, Sereno MI. Cortical surface-based analysis—I. Segmentation and surface reconstruction. Neuroimage. 1999;9(2):179–194. doi: 10.1006/nimg.1998.0395.

18. Fischl B, Sereno MI, Dale AM. Cortical surface-based analysis—II: inflation, flattening, and a surface-based coordinate system. Neuroimage. 1999;9(2):195–207. doi: 10.1006/nimg.1998.0396.

19. Aoyama A, Haruyama T, Kuriki S. Early auditory change detection implicitly facilitated by ignored concurrent visual change during a Braille reading task. J Integr Neurosci. 2013;12(3):385–399. doi: 10.1142/S0219635213500234.

20. Granger CWJ. Investigating causal relations by econometric models and cross-spectral methods. Econometrica. 1969;37(3):424–438. doi: 10.2307/1912791.

21. Barnett L, Seth AK. The MVGC multivariate Granger causality toolbox: a new approach to Granger-causal inference. J Neurosci Methods. 2014;223:50–68. doi: 10.1016/j.jneumeth.2013.10.018.

22. Green DM. Temporal auditory acuity. Psychol Rev. 1971;78(6):540–551. doi: 10.1037/h0031798.

23. Herrmann CS, Knight RT. Mechanisms of human attention: event-related potentials and oscillations. Neurosci Biobehav Rev. 2001;25(6):465–476. doi: 10.1016/S0149-7634(01)00027-6.

24. Tsunada J, Liu AS, Gold JI, Cohen YE. Causal contribution of primate auditory cortex to auditory perceptual decision-making. Nat Neurosci. 2016;19(1):135–142. doi: 10.1038/nn.4195.

25. Ragot R, Fiori N. Mental processing during reactions toward and away from a stimulus: an ERP analysis of auditory congruence and S-R compatibility. Psychophysiology. 1994;31(5):439–446. doi: 10.1111/j.1469-8986.1994.tb01047.x.

26. Nandrino JL, el Massioui F. Temporal localization of the response selection processing stage. Int J Psychophysiol. 1995;19(3):257–261. doi: 10.1016/0167-8760(95)00017-M.

27. Roswarski TE, Proctor RW. Auditory stimulus-response compatibility: is there a contribution of stimulus-hand correspondence? Psychol Res. 2000;63(2):148–158. doi: 10.1007/PL00008173.

28. Maslovat D, Carlsen AN, Franks IM. Investigation of stimulus-response compatibility using a startling acoustic stimulus. Brain Cogn. 2012;78(1):1–6. doi: 10.1016/j.bandc.2011.10.010.

29. Endo H, Kizuka T, Masuda T, Takeda T. Automatic activation in the human primary motor cortex synchronized with movement preparation. Brain Res Cogn Brain Res. 1999;8(3):229–239. doi: 10.1016/S0926-6410(99)00024-5.

30. Sharma A, Campbell J, Cardon G. Developmental and cross-modal plasticity in deafness: evidence from the P1 and N1 event related potentials in cochlear implanted children. Int J Psychophysiol. 2015;95(2):135–144. doi: 10.1016/j.ijpsycho.2014.04.007.

31. Sandmann P, Plotz K, Hauthal N, de Vos M, Schönfeld R, Debener S. Rapid bilateral improvement in auditory cortex activity in postlingually deafened adults following cochlear implantation. Clin Neurophysiol. 2015;126(3):594–607. doi: 10.1016/j.clinph.2014.06.029.

32. Veniero D, Vossen A, Gross J, Thut G. Lasting EEG/MEG aftereffects of rhythmic transcranial brain stimulation: level of control over oscillatory network activity. Front Cell Neurosci. 2015. doi: 10.3389/fncel.2015.00477.