Abstract

Contingency management (CM) is a well-established treatment for opioid use, yet its adoption remains low in community clinics. This manuscript presents a secondary analysis of a study comparing a comprehensive implementation strategy (Science to Service Laboratory; SSL) to didactic training-as-usual (TAU) as a means of implementing CM across a multi-site opioid use disorder program. Hypotheses predicted that providers who received the SSL implementation strategy would 1) adopt CM faster and 2) deliver CM more frequently than TAU providers. In addition, we examined whether the effect of implementation strategy varied as a function of a set of theory-driven moderators, guided by the Consolidated Framework for Implementation Research: perceived intervention characteristics, perceived organizational climate, and provider characteristics (i.e., race/ethnicity, gender). Sixty providers (39 SSL, 21 TAU) across 15 clinics (7 SSL, 8 TAU) completed a comprehensive set of measures at baseline and reported biweekly on CM use for 52 weeks. All participants received didactic CM training; SSL clinics received 9 months of enhanced training, including access to an external coach, an in-house innovation champion, and a collaborative learning community. Discrete-time survival analysis found that SSL providers more quickly adopted CM; provider characteristics (i.e., race/ethnicity) emerged as the sole moderator of time to adoption. Negative binomial regression revealed that SSL providers also delivered CM more frequently than TAU providers. Frequency of CM adoption was moderated by provider (i.e., gender and race/ethnicity) and intervention characteristics (i.e., compatibility). Implications for implementation strategies for community-based training are discussed.

Keywords: contingency management, training, opioid use disorder, implementation, Consolidated Framework for Implementation Research

1. Introduction

The current opioid crisis has increased the need for effective, community-based services for individuals with opioid use disorders (OUDs; Nosyk et al., 2013; Rudd, Seth, David, & Scholl, 2016). Contingency management (CM) is a psychosocial intervention that uses motivational incentives (e.g., gift cards, vouchers) to reinforce specific patient treatment-related behaviors, such as appointment attendance or negative drug screens (Prendergast, Podus, Finney, Greenwell, & Roll, 2006). CM has a robust evidence base for the treatment of OUDs, demonstrating effectiveness as both a standalone (Prendergast et al., 2006) and adjunct (Griffith, Rowan-Szal, Roark, & Simpson, 2000) intervention. Despite strong evidence that CM is effective when delivered by clinicians in community settings (Petry, Alessi, & Ledgerwood, 2012; Petry, Alessi, Marx, Austin, & Tardif, 2005), adoption rates remain low in community-based OUD clinics (Hartzler, Lash, & Roll, 2012). Research has identified multiple barriers to CM adoption at both the provider level (e.g., competing priorities, insufficient training, philosophical objections; Benishek, Kirby, Dugosh, & Padovano, 2010; Kirby, Benishek, Dugosh, & Kerwin, 2006) and the organization level (e.g., staff turnover, insufficient funding; Ducharme, Knudsen, Roman, & Johnson, 2007; Hartzler, Donovan, et al., 2012; Hartzler, Jackson, Jones, Beadnell, & Calsyn, 2014). Such barriers underscore the need for multi-level implementation strategies to promote the adoption of CM.

Our research team recently evaluated the Science to Service Laboratory (SSL), a comprehensive implementation strategy developed by the New England Addiction Technology Transfer Center (ATTC; see Squires, Gumbley, & Storti, 2008 for detailed description) relative to didactic training as usual (TAU) across a multi-site OUD program. The SSL implementation strategy was designed to target provider-level barriers to change via didactic training and individualized feedback, and organization-level barriers via a specially trained internal change champion and an external implementation coach. Results indicated that the SSL was effective in increasing odds of CM adoption: on average, SSL providers had 3.6 times greater odds of CM adoption than TAU providers, over the course of the 12-month study [blinded for review].

While an important first step, our initial findings on the overall odds of CM adoption left several important questions unanswered. Specifically, our findings did not consider the time to or frequency of adoption. Such outcomes are critical to examine, considering that the overarching goal of an implementation strategy is not merely to get providers to use an evidence-based intervention, but also for providers to quickly and consistently deliver it. Rogers (1995) theorized that a natural rate of adoption would resemble an S-shaped curve, wherein adoption would start out slow and then reach a tipping point and accelerate. Some addiction treatment research has found evidence supporting this natural rate of adoption (e.g., Oser & Roman, 2008), but to date no substance use research has examined whether a specific implementation model accelerates or amplifies adoption. Thus, the current secondary analysis examines two important constructs: time to adoption (defined as how quickly each implementation strategy helped providers to adopt CM) and CM frequency (defined as the number of weeks providers implemented CM over the study period).

Additionally, our primary report only revealed the effect of the SSL implementation strategy at the group level. A critically important question is which implementation strategy was optimal for which providers under which contextual factors. Addressing this question requires the identification of moderator variables, defined as variables that are present before implementation, independent of assignment to condition, and have an interactive effect with implementation strategy (see Kraemer, Wilson, Fairburn, & Agras, 2002). Identification of which OUD treatment providers respond best to which implementation strategy could provide valuable, actionable information in the context of the current opioid crisis, when there is an urgent need to disseminate effective treatments as quickly and as efficiently as possible (Nosyk et al., 2013; Rudd et al., 2016). Despite their clinical utility, moderators have rarely been examined in the implementation science literature and warrant further consideration.

1.1. Selection of Moderators

The goal of the current secondary analysis was to examine moderators of implementation strategy on two novel outcomes (time to CM adoption and CM adoption frequency) among the 60 OUD counselors in our original trial. Our selection of moderators was guided by the Consolidated Framework for Implementation Research (CFIR; Damschroder & Hagedorn, 2011), which specifies five dimensions that should be considered in implementation research: Intervention Characteristics, Provider Characteristics, Inner Setting, Outer Setting, and Implementation Process. In his seminal work, Rogers argued that intervention characteristics, or specific attributes of CM, could account for 49% to 87% of the variance in time to adoption (p.206, Rogers, 1995). Our measure of intervention characteristics was the Perceived Attributes Scale (PAS), which measures the intervention characteristics that Rogers theorized as most important, namely Relative Advantage, Observability, Trialability, Compatibility, and Complexity. For Inner Setting, we selected providers’ perceptions of organizational climate, a key construct in the CFIR model, using the Organizational Climate subscale from Lehman and colleagues’ (2002) Organizational Readiness for Change Scale (ORC). For Provider Characteristics, we examined provider gender, race/ethnicity, and providers’ years of experience with addiction counseling as potential moderators, due to prior research demonstrating that these factors were associated with CM adoption (Aletraris, Shelton, & Roman, 2015). Two additional provider variables, caseload and provider’s highest degree earned, were found to differ between the SSL and TAU groups at baseline in the primary report and were thus controlled for as covariates instead of moderators. We did not examine potential moderators from the Outer Setting or Implementation Process domains; the former was not directly targeted by the SSL and the latter was captured by our independent variable (SSL vs. TAU), which was itself an indicator of implementation strategy.

1.2. Study Hypotheses

We hypothesized that SSL-trained providers would both adopt CM faster and deliver CM more frequently than TAU-trainees. Due to a lack of prior research and our small sample size, examination of moderators was exploratory with the goal of identifying factors to examine in future work.

2. Methods

2.1. Community Sites

Providers were recruited from a large OUD treatment program that had 18 OUD clinics across New England and Midwestern states. Roughly 4,100 adults annually received outpatient services, including individual, family or group therapy, and methadone maintenance.

2.2. Research Design

Assignment to Conditions.

Reflecting the ATTC’s regional approach to training initiatives, the original trial used regional, nonrandom assignment to implementation strategies. Seven sites in the New England region received the SSL, while 11 sites in the Midwest received TAU. Geographic location was used instead of randomization for three reasons: a) to avoid contamination associated with resource-sharing among local staff, b) to resemble standard procedures at the OUD treatment organization, which frequently held regional training events, and c) to ensure compatibility with training procedures in the ATTC Network, in which each Regional ATTC had the autonomy to roll out specific initiatives.

Recruitment.

This study was approved by the [blinded for review] institutional review board. First, researchers approached the 18 clinic directors during a quarterly leadership meeting to provide information on the study. Clinic directors then provided the research team with the number of treatment providers at their clinic who met two study inclusion criteria: a) employed at the clinic for ≥3 months and b) maintaining an active clinical caseload. In total, 145 providers (51 SSL clinics, 94 TAU clinics) were sent resource packets and invited to return response cards expressing their interest to participate in the study. Notably, both providers and clinic directors were informed that study participation was voluntary and would not influence their employment.

Of the 145 providers invited to participate, 75 (46 SSL clinics, 29 TAU clinics) returned reply cards, reflecting an overall response rate of 52%. Response rates heavily favored the SSL sites (i.e., 90% at SSL clinics versus 31% at TAU clinics; z(144) = 22.2, p < .001). Differential response was likely related to the non-blinded nature of the study, as providers at SSL clinics knew that they would receive implementation support. Although clearly indicative of a response bias, it is possible that differential enrollment created a more stringent test of the SSL: providers in the SSL condition were compared to only those TAU providers most eager for CM training.

Sixty providers (80% of responders, 41% of invited) enrolled in the study, completing informed consent procedures and the baseline assessment. Enrollment rates did not differ between conditions, with 85% of SSL (39 of 46) and 72% of TAU (21 of 29) providers participating in the study, z(74) = 1.30, p > .05. All 18 clinic directors expressed an interest in participating in the study, but 3 TAU sites did not return their interest cards. As such, the final sample included 60 providers (39 SSL, 21 TAU) across 15 sites (7 SSL, 8 TAU; see Table 1). Providers in both conditions completed assessments at baseline, 3-, and 12-months, and reported on their adoption of CM biweekly. Providers could earn up to $200 for completing assessments: $20 at baseline, $30 at 3 months, $50 at 12 months, and a $50 bonus for completing all 26 biweekly reports of CM adoption. Only the baseline assessment and biweekly CM reports are examined in the current study. Up to 15% (n = 9) of the sample had missing data on biweekly CM reports. Missing data analysis found that none of the predictors used in this study significantly predicted having missing data at any of the time points (i.e., ps > .05). Notably, assignment to condition did not predict having missing data.
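The enrollment comparison above can be checked with a short calculation. The sketch below assumes the reported z statistic is a pooled two-proportion z-test (the text does not name the exact test); the function name `two_proportion_z` is illustrative only.

```python
import math


def two_proportion_z(x1: int, n1: int, x2: int, n2: int) -> float:
    """Pooled two-proportion z statistic for x1/n1 versus x2/n2."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (p1 - p2) / se


# Enrollment among responders: 39 of 46 SSL versus 21 of 29 TAU.
z_enroll = two_proportion_z(39, 46, 21, 29)
# Two-sided p-value from the standard normal distribution.
p_enroll = math.erfc(abs(z_enroll) / math.sqrt(2))
print(f"z = {z_enroll:.2f}, p = {p_enroll:.3f}")
```

Under this assumption the statistic comes out at about 1.30, in line with the reported value and the non-significant difference in enrollment rates.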

Table 1.

Demographics and average scores on baseline rating scales, as a function of implementation strategy

| | SSL n=39 | TAU n=21 | Total N=60 | χ2 or t | p | Φ |
|---|---|---|---|---|---|---|
| Gender (males) | 13 (33%) | 6 (29%) | 19 (32%) | 0.14 | .705 | 0.05 |
| Racial/ethnic minority | 9 (23%) | 5 (24%) | 14 (23%) | 0.00 | .949 | 0.01 |
| Bachelor’s degree, or less* | 33 (85%) | 13 (62%) | 46 (77%) | 3.94 | .047 | −0.26 |
| Heavy caseload (40+ cases)* | 26 (67%) | 7 (33%) | 33 (55%) | 6.13 | .013 | 0.32 |
| 3+ years of experience in drug abuse counseling | 24 (62%) | 12 (57%) | 36 (60%) | 0.11 | .740 | 0.04 |
| Organizational Readiness for Change Scale (ORC) | | | | | | |
| Organizational Climate | 3.5 (0.41) | 3.4 (0.37) | 3.5 (0.40) | −0.77 | .444 | 0.26 |
| Perceived Attributes Scale (PAS) | | | | | | |
| Compatibility | 5.1 (0.99) | 4.9 (0.99) | 5.0 (0.99) | −0.59 | .558 | 0.20 |
| Complexity | 4.7 (0.97) | 4.3 (0.72) | 4.6 (0.90) | −1.51 | .138 | 0.47 |
| Observability | 4.7 (0.79) | 4.5 (0.61) | 4.6 (0.74) | −1.31 | .196 | 0.28 |
| Relative Advantage | 5.1 (0.87) | 5.0 (0.99) | 5.0 (0.91) | −0.48 | .632 | 0.11 |
| Trialability | 5.2 (0.99) | 5.0 (0.78) | 5.1 (0.92) | −0.91 | .369 | 0.22 |

Note. Demographic variables are frequency counts with percentages in parentheses, while baseline scores on the ORC and PAS are means with standard deviations in parentheses. Between-group differences were tested using the χ2-statistic.

*p<.05

2.3. Implementation Conditions

Training as Usual (TAU).

All participants received standard didactic trainings in CM for patients with OUDs (Petry, 2012). Trainings were done in-person using a group-based format, and were led by a national expert in CM delivery. Didactic training began with a review of the evidence supporting CM, followed by training in how to apply behavioral principles in treatment. Providers then practiced designing and implementing reinforcement schedules (Petry & Stitzer, 2002). Providers were excused from their work duties and received CEU credit for attending the day-long didactic CM training.

SSL-Enhanced Training.

Following the conclusion of TAU didactics, providers at SSL clinics received both internal and external implementation support for 9 months. External supports included access to a technology transfer specialist, a master’s-level social worker with over 20 years of experience managing and supervising clinical programs. The specialist was primarily available for technical assistance with implementation, but also supported SSL providers by holding monthly conference calls, moderating an online message board, and hosting forums for providers to problem-solve barriers to CM implementation. Two internal support strategies were used to facilitate intra-organizational change. Specifically, a clinical director at each SSL site volunteered to be an innovation champion and helped to coordinate SSL activities with the external specialist. Additionally, SSL providers received supplemental, role-specific training in the change process. Innovation champions began by receiving a 4-day training on theories of organizational change and how they could support clinic-wide adoption and implementation of evidence-based practices like CM. Then, the innovation champion and the technology transfer specialist led a 4-hour training open to all SSL clinic employees centered on evidence-based practices, organizational change theory, and CM adoption and implementation.

2.4. Measures

Provider Characteristics.

Providers self-reported demographics at baseline, including gender (male vs. female), race/ethnicity (Non-Hispanic White vs. minority), years of experience in drug use counseling, treatment provider’s highest degree earned, and caseload.

Organizational Readiness for Change Scale (ORC; Lehman et al., 2002).

The ORC is a 115-item scale that measures provider perceptions of organizational readiness to change via four scales: motivation to change, adequacy of resources, staff attributes, and organizational climate. We used the 23-item organizational climate scale as our measure of the CFIR Inner Setting dimension because several of the ORC scales (e.g., staff attributes and motivation to change) conceptually overlapped with the CFIR Provider Characteristics dimension, and it closely aligns with the CFIR Inner Setting construct of implementation climate. In the current sample, reliability of the ORC organizational climate scale was 0.75.

Perceived Attributes Scale (PAS; Moore & Benbasat, 1991).

The PAS is a 27-item scale describing five perceived intervention characteristics informed by Rogers’ seminal work: Relative Advantage, the perceived benefit of a new practice over current practices; Observability, the extent to which results of a practice can be seen at the organization; Trialability, the perceived need to try out the new practice before adopting; Compatibility, the provider’s perception that the new practice aligns with their own values, needs, and experiences; and Complexity, whether the new practice is perceived to be difficult to use. At baseline, participants rated items on a 7-point Likert scale, with higher scores indicating stronger agreement. The PAS has demonstrated good validity, internal consistency, and test-retest reliability (Karahanna, Straub, & Chervany, 1999; Plouffe, Hulland, & Vandenbosch, 2001; Venkatesh, Morris, & Davis, 2003). In the current sample, reliability coefficients ranged from 0.75 to 0.90.

CM Adoption.

Adoption of CM was measured in 2-week intervals via provider responses to an online survey item. Participants were asked to report whether they had used CM reinforcers with one or more patients in the past 2 weeks; those answering affirmatively were coded as adopters for that 2-week period. To calculate frequency of CM adoption, CM use was summed across the 26 biweekly assessments for each provider, for a total score of 0 to 26.

3. Analytic Plan

We investigated the effect of implementation strategy (SSL vs. TAU) on CM adoption in two ways. First, we conducted Discrete-Time Survival Analysis (Singer & Willett, 2003) to determine whether time to first adoption of CM was dependent on implementation strategy, and whether this effect was moderated by CFIR constructs. Analyses were conducted using procedures described in the user’s guide for Mplus 8, example 6.19 (Muthén & Muthén, 2012). This approach examined the likelihood of initiation of CM adoption at each time point, given that adoption had not previously occurred. At each time point, CM adoption was coded as occurring at that specific time point (1), having not yet occurred (0), or having occurred at a previous time point (missing). To understand the effect of implementation strategy and moderators on this survival outcome, we estimated the effect of predictors and moderators on a latent variable representing the hazard of initiation at any given time point. We standardized the continuous moderator variables in order to obtain accurate interaction effects. Implementation strategy was coded 0 (TAU condition) or 1 (SSL condition). As such, observed moderation effects could be interpreted as changes in hazard given SSL vs. TAU membership (main effect), as modified by 1 standard deviation changes in each moderator (interaction effect for continuous predictors), or as modified by the presence of a specific characteristic (interaction effect for the categorical predictors gender, race/ethnicity, and years of experience).
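The event coding described above, together with the frequency score defined under Measures, can be illustrated with a minimal sketch. It assumes a provider with complete biweekly reports (1 = used CM, 0 = did not); the function names and the example report sequence are hypothetical and are not taken from the study data.

```python
from typing import List, Optional


def survival_coding(reports: List[int]) -> List[Optional[int]]:
    """Recode biweekly CM-use reports for discrete-time survival analysis.

    0 = adoption has not yet occurred, 1 = first adoption occurs in this
    period, None = missing because adoption occurred in an earlier period.
    """
    coded: List[Optional[int]] = []
    adopted = False
    for used_cm in reports:
        if adopted:
            coded.append(None)
        else:
            coded.append(1 if used_cm else 0)
            adopted = bool(used_cm)
    return coded


def adoption_frequency(reports: List[int]) -> int:
    """Number of two-week periods (0 to 26) in which CM was used."""
    return sum(reports)


# Hypothetical provider: first uses CM in period 3, then again in periods 5 and 6.
reports = [0, 0, 1, 0, 1, 1] + [0] * 20
coded = survival_coding(reports)
print(coded[:5])
print(adoption_frequency(reports))
```

For this hypothetical provider the survival outcome records only the first adoption (period 3), while the frequency outcome counts all three periods of CM use, which is why the two outcomes can diverge.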

Second, we used negative binomial regression to examine the effect of implementation strategy and the putative moderators on CM adoption frequency. Because CM adoption was not normally distributed, we first examined fit of CM adoption rates to a number of count-based distributions (Poisson, zero-inflated Poisson, negative binomial, zero-inflated negative binomial) using the CountFit package in Stata (Williams, 2006; see Atkins, Baldwin, Zheng, Gallop, & Neighbors, 2013, for an informative overview of count distributions). Results indicated that CM adoption rates best fit a negative binomial distribution (determined by the least difference between expected and observed values, and by the Akaike and Bayesian Information Criteria for parsimony). When predicting values using this distribution, predictors are assumed to be related to CM adoption frequency via an effect on a logarithmic scale. Exponentiated coefficients from this function can be interpreted as rate ratios; that is, for each one-unit increase in the predictor (e.g., being in the SSL condition rather than the TAU condition), the expected adoption rate is multiplied by the exponentiated coefficient. Because data were missing at certain time points for some providers, we performed multiple imputation using the MICE package in R (van Buuren, 2007), where missing data were imputed conditional on auxiliary variables (treatment assignment and covariates, as well as the CFIR moderators) and data from other sessions. We generated 10 imputations, and analyzed imputed data by having Mplus automatically average results over the 10 imputations (Asparouhov & Muthén, 2010).
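The relationship between a log-scale coefficient and its ratio interpretation can be sketched as follows. The example uses the rounded main-effect estimates and standard errors reported in Tables 3 and 4, and assumes a Wald-type interval (estimate ± 1.96 × SE, then exponentiated); the helper name `ratio_with_ci` is illustrative, and the software's exact interval method is not stated in the text.

```python
import math
from typing import Tuple


def ratio_with_ci(estimate: float, se: float, z_crit: float = 1.96) -> Tuple[float, float, float]:
    """Exponentiate a log-scale coefficient and its Wald confidence limits."""
    ratio = math.exp(estimate)
    low = math.exp(estimate - z_crit * se)
    high = math.exp(estimate + z_crit * se)
    return ratio, low, high


# Main effects of SSL vs. TAU (rounded values from Tables 3 and 4).
hazard_ratio = ratio_with_ci(0.80, 0.40)  # time to adoption
rate_ratio = ratio_with_ci(0.53, 0.27)    # adoption frequency
print("HR: %.2f (%.2f, %.2f)" % hazard_ratio)
print("RR: %.2f (%.2f, %.2f)" % rate_ratio)
```

Because the inputs are rounded to two decimals, the recomputed confidence limits can differ from the tabled limits in the second decimal place; the point estimates (about 2.23 and 1.70) are recovered.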

For both sets of analyses, we ran two iterations of the model. The first step included the effect of implementation strategy and the second step included one of the putative moderators. Due to the exploratory nature of the analyses and the low-to-moderate correlations among ORC and PAS moderators (see Table 2), we tested the effect of each moderator separately. Consistent with the primary outcome report, all analyses included treatment provider’s highest degree earned and caseload (i.e., number of clients) as covariates.

Table 2.

Pearson correlations between moderators (1-2, 5-11) and covariates (3-4) included in models.

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1. Gender | | | | | | | | | | |
| 2. Racial/ethnic Minority | −0.05 | | | | | | | | | |
| 3. Highest Degree Earned | −0.02 | 0.04 | | | | | | | | |
| 4. Caseload | 0.05 | 0.14 | −0.01 | | | | | | | |
| 5. 3+ Years of Experience | 0.12 | −0.21 | 0.16 | −0.25 | | | | | | |
| 6. ORC Organizational Climate | −0.03 | 0.06 | −0.28* | −0.00 | −0.20 | | | | | |
| 7. PAS Compatibility | −0.22 | −0.05 | 0.03 | −0.10 | −0.13 | 0.34** | | | | |
| 8. PAS Complexity | −0.18 | −0.24 | −0.05 | −0.25 | −0.03 | 0.10 | 0.50** | | | |
| 9. PAS Observability | −0.17 | −0.03 | −0.10 | −0.31* | 0.08 | 0.18 | 0.53** | 0.14 | | |
| 10. PAS Relative Advantage | −0.23 | −0.13 | 0.00 | −0.08 | −0.10 | 0.35** | 0.81** | 0.53** | 0.41** | |
| 11. PAS Trialability | −0.23 | −0.19 | −0.03 | −0.26* | −0.10 | 0.36** | 0.72** | 0.57** | 0.53** | 0.69** |

Note. ORC = Organizational Readiness for Change Scale, PAS = Perceived Attributes Scale.

*p<.05, **p<.01

4. Results

4.1. Time to Adoption

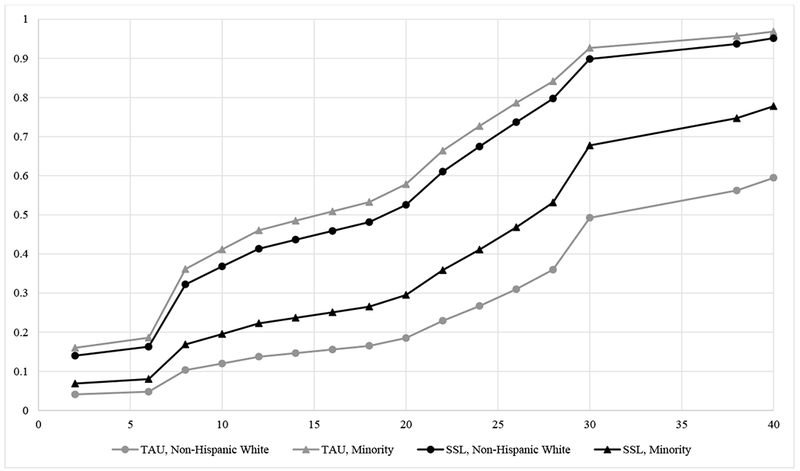

Results of the discrete-time survival analysis (see Table 3 for a breakdown of moderator results, by CFIR construct) indicated a significant main effect of implementation strategy on time to CM adoption, such that those in the SSL condition had a Hazard Ratio of 2.23 (i.e., a multiplicative increase in hazard; 95% CI: 1.02-4.86) for CM adoption relative to TAU. To help interpret time to adoption findings, we examined the number of estimated weeks it took for each implementation strategy to reach specific CM adoption benchmarks. For example, in the SSL condition, 20% of providers would adopt CM by 12 weeks, whereas in the TAU condition this benchmark would be achieved at 16 weeks (i.e., 4 weeks faster to reach the 20% benchmark in SSL versus TAU). Similarly, in the SSL condition, 60% of providers would adopt CM by week 30, whereas in the TAU condition, this benchmark would not be reached until 40 weeks (i.e., 10 weeks faster to reach the 60% benchmark in SSL versus TAU). At any given benchmark, SSL providers were estimated to adopt CM between 2 and 10 weeks faster than TAU providers.
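The benchmark comparison above rests on converting per-period hazards into a cumulative proportion of adopters. A minimal sketch of that conversion follows; the constant hazards of 0.04 and 0.03 per two-week period are hypothetical values chosen only for illustration and are not the hazards estimated in this study.

```python
from typing import List, Optional


def cumulative_adoption(hazards: List[float]) -> List[float]:
    """Cumulative proportion of adopters after each period: 1 - prod(1 - h_t)."""
    not_yet_adopted = 1.0
    proportions = []
    for hazard in hazards:
        not_yet_adopted *= 1.0 - hazard
        proportions.append(1.0 - not_yet_adopted)
    return proportions


def weeks_to_benchmark(hazards: List[float], benchmark: float,
                       weeks_per_period: int = 2) -> Optional[int]:
    """First week at which cumulative adoption reaches the benchmark, else None."""
    for period, proportion in enumerate(cumulative_adoption(hazards), start=1):
        if proportion >= benchmark:
            return period * weeks_per_period
    return None


# Hypothetical constant hazards over 26 biweekly periods (illustration only).
faster_group = [0.04] * 26
slower_group = [0.03] * 26
print(weeks_to_benchmark(faster_group, 0.20))
print(weeks_to_benchmark(slower_group, 0.20))
```

The sketch shows how a modest difference in per-period hazard translates into a gap of several weeks in reaching the same adoption benchmark, and how a benchmark may never be reached within the observation window (the function then returns `None`).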

Table 3.

Effects of implementation strategy, moderators, and interaction terms on time to CM adoption

| CFIR Domain | Predictor | Est. | SE | Hazard Ratio | 95% CI Low | 95% CI High | p |
|---|---|---|---|---|---|---|---|
| Main Effect | SSL v. TAU | 0.80 | 0.40 | 2.23 | 1.02 | 4.86 | .044 |
| Provider Characteristics | SSL v. TAU | −0.11 | 0.64 | 0.90 | 0.25 | 3.17 | .867 |
| | Gender | −1.08 | 0.72 | 0.34 | 0.08 | 1.38 | .131 |
| | Interaction | 1.38 | 0.85 | 3.96 | 0.75 | 20.80 | .103 |
| | SSL v. TAU | 1.34 | 0.48 | 3.81 | 1.49 | 9.73 | .232 |
| | Racial/Ethnic Minority | 1.50 | 0.80 | 4.46 | 0.93 | 21.31 | .061 |
| | Interaction | −2.29 | 0.91 | 0.10 | 0.02 | 0.61 | .012 |
| | SSL v. TAU | 1.06 | 0.59 | 2.90 | 0.92 | 9.16 | .070 |
| | 3+ Years of Experience | −0.33 | 0.73 | 0.72 | 0.17 | 2.98 | .651 |
| | Interaction | −0.26 | 0.79 | 0.77 | 0.16 | 3.66 | .746 |
| Inner Setting | SSL v. TAU | 0.82 | 0.40 | 2.27 | 1.03 | 5.01 | .043 |
| | Organizational Climate | −0.19 | 0.31 | 0.83 | 0.45 | 1.51 | .537 |
| | Interaction | 0.33 | 0.35 | 1.39 | 0.70 | 2.78 | .346 |
| Intervention Characteristics | SSL v. TAU | 0.83 | 0.40 | 2.28 | 1.04 | 5.03 | .041 |
| | Compatibility | −0.32 | 0.31 | 0.73 | 0.39 | 1.34 | .303 |
| | Interaction | 0.37 | 0.36 | 1.45 | 0.72 | 2.93 | .296 |
| | SSL v. TAU | 0.71 | 0.41 | 2.02 | 0.90 | 4.55 | .087 |
| | Complexity | 0.43 | 0.45 | 1.53 | 0.64 | 3.68 | .338 |
| | Interaction | −0.51 | 0.49 | 0.60 | 0.23 | 1.58 | .303 |
| | SSL v. TAU | 0.87 | 0.42 | 2.39 | 1.05 | 5.42 | .037 |
| | Observability | −0.38 | 0.37 | 0.69 | 0.33 | 1.42 | .308 |
| | Interaction | 0.49 | 0.40 | 1.63 | 0.75 | 3.58 | .220 |
| | SSL v. TAU | 0.84 | 0.41 | 2.31 | 1.04 | 5.12 | .040 |
| | Relative Advantage | −0.43 | 0.34 | 0.65 | 0.34 | 1.26 | .205 |
| | Interaction | 0.48 | 0.38 | 1.62 | 0.77 | 3.42 | .207 |
| | SSL v. TAU | 0.88 | 0.42 | 2.42 | 1.06 | 5.51 | .036 |
| | Trialability | −0.57 | 0.37 | 0.56 | 0.27 | 1.16 | .121 |
| | Interaction | 0.55 | 0.41 | 1.74 | 0.78 | 3.85 | .174 |

Note. Each moderator and interaction effect was tested separately from the others. Moderators were chosen from 3 Consolidated Framework for Implementation Research (CFIR; Damschroder et al., 2009) domains: provider characteristics, inner setting (i.e., organizational climate on the Organizational Readiness for Change Scale), and intervention characteristics (i.e., subscales on the Perceived Attributes Scale).

The moderator analyses indicated that neither Inner Setting (ORC organizational climate scale) nor Intervention Characteristics (any of the PAS scales) significantly moderated the effect of implementation strategy on time to CM adoption. Of the Provider Characteristics, only racial/ethnic minority status had a significant interactive effect with implementation strategy. Probing of the model revealed a non-significant trend for minority providers to adopt CM faster than Non-Hispanic White providers in the overall sample (main effect: HR: 4.46, p = .061), and a significant interaction with condition, such that minority providers adopted CM more slowly in the SSL condition (HR: 0.10, p = .012; see Figure 1).

Figure 1.

Estimated time to first CM adoption, by provider racial/ethnic minority status and implementation strategy

Note. Y-axis: Estimated proportion of providers who adopted CM, within each implementation strategy. X-axis: Number of weeks in study. Data were collected for 52 weeks, but the estimated proportion of providers who adopted CM stagnated after week 40. Provider racial/ethnic minority status significantly interacted with implementation strategy to predict time to first CM adoption (p = .012).
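To make the race/ethnicity interaction concrete, the conditional effect of implementation strategy can be computed within each provider group from the rounded coefficients in Table 3. The sketch assumes dummy coding of SSL = 1 (TAU = 0) and racial/ethnic minority = 1 (Non-Hispanic White = 0); the minority coding is inferred from the table labels rather than stated in the text, and the function names are illustrative.

```python
import math

# Rounded log-hazard coefficients from the race/ethnicity model in Table 3.
B_SSL = 1.34
B_MINORITY = 1.50
B_INTERACTION = -2.29


def log_hazard_offset(ssl: int, minority: int) -> float:
    """Linear predictor contribution of strategy, minority status, and their product."""
    return B_SSL * ssl + B_MINORITY * minority + B_INTERACTION * ssl * minority


def ssl_hazard_ratio(minority: int) -> float:
    """Hazard ratio for SSL vs. TAU within one provider group."""
    return math.exp(log_hazard_offset(1, minority) - log_hazard_offset(0, minority))


print(f"Non-Hispanic White providers: HR = {ssl_hazard_ratio(0):.2f}")
print(f"Racial/ethnic minority providers: HR = {ssl_hazard_ratio(1):.2f}")
```

Under these coding assumptions, the SSL is associated with a higher hazard of adoption among Non-Hispanic White providers (HR near 3.8) and a lower hazard among minority providers (HR near 0.4), which is the crossover pattern the interaction term describes.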

4.2. Total CM Adoption Frequency

Table 4 presents the prediction of total CM adoption frequency, following the same analytical procedures as Table 3. Results may be interpreted as rate ratios (RRs), such that the expected frequency of CM adoption was 1.70 times (95% CI: 1.01-2.85) higher in the SSL condition (predicted frequency: 10.40 times), compared to TAU (predicted frequency: 6.12 times), after adjusting for covariates. In other words, SSL providers were predicted to use CM 70% more frequently than TAU providers.

Table 4.

Effects of implementation strategy, moderators, and interaction terms on frequency of CM adoption.

| CFIR Domain | Predictor | Est. | SE | Rate Ratio | 95% CI Low | 95% CI High | p |
|---|---|---|---|---|---|---|---|
| Main Effect | SSL v. TAU | 0.53 | 0.27 | 1.70 | 1.01 | 2.85 | .046 |
| Provider Characteristics | SSL v. TAU | 0.95 | 0.40 | 2.59 | 1.18 | 5.69 | .018 |
| | Gender | 0.87 | 0.45 | 2.38 | 0.99 | 5.72 | .053 |
| | Interaction | −1.02 | 0.49 | 0.36 | 0.14 | 0.93 | .035 |
| | SSL v. TAU | 0.76 | 0.32 | 2.14 | 1.15 | 4.00 | .017 |
| | Racial/Ethnic Minority | 0.48 | 0.49 | 1.62 | 0.62 | 4.18 | .323 |
| | Interaction | −1.23 | 0.59 | 0.29 | 0.09 | 0.93 | .038 |
| | SSL v. TAU | 0.50 | 0.37 | 1.65 | 0.79 | 3.41 | .180 |
| | 3+ Years of Experience | −0.08 | 0.51 | 0.92 | 0.34 | 2.52 | .872 |
| | Interaction | 0.08 | 0.54 | 1.08 | 0.38 | 3.12 | .882 |
| Inner Setting | SSL v. TAU | 0.52 | 0.27 | 1.68 | 0.99 | 2.83 | .053 |
| | Organizational Climate | −0.09 | 0.24 | 0.92 | 0.58 | 1.46 | .719 |
| | Interaction | 0.26 | 0.25 | 1.30 | 0.79 | 2.14 | .296 |
| Intervention Characteristics | SSL v. TAU | 0.71 | 0.30 | 2.03 | 1.13 | 3.66 | .018 |
| | Compatibility | −0.53 | 0.29 | 0.59 | 0.33 | 1.03 | .062 |
| | Interaction | 0.63 | 0.31 | 1.88 | 1.03 | 3.44 | .040 |
| | SSL v. TAU | 0.48 | 0.26 | 1.61 | 0.97 | 2.69 | .067 |
| | Complexity | 0.12 | 0.20 | 1.13 | 0.76 | 1.68 | .544 |
| | Interaction | −0.03 | 0.23 | 0.97 | 0.62 | 1.53 | .904 |
| | SSL v. TAU | 0.75 | 0.32 | 2.12 | 1.12 | 3.99 | .020 |
| | Observability | −0.68 | 0.37 | 0.51 | 0.24 | 1.05 | .067 |
| | Interaction | 0.73 | 0.37 | 2.07 | 1.00 | 4.31 | .051 |
| | SSL v. TAU | 0.62 | 0.29 | 1.86 | 1.05 | 3.29 | .034 |
| | Relative Advantage | −0.25 | 0.26 | 0.78 | 0.47 | 1.30 | .341 |
| | Interaction | 0.36 | 0.28 | 1.43 | 0.83 | 2.46 | .200 |
| | SSL v. TAU | 0.66 | 0.29 | 1.94 | 1.09 | 3.44 | .023 |
| | Trialability | −0.45 | 0.34 | 0.64 | 0.33 | 1.23 | .180 |
| | Interaction | 0.46 | 0.34 | 1.59 | 0.81 | 3.12 | .178 |

Note. Each moderator and interaction effect was tested separately from the others. Moderators were chosen from 3 Consolidated Framework for Implementation Research (CFIR; Damschroder et al., 2009) domains: provider characteristics, inner setting (i.e., organizational climate on the Organizational Readiness for Change Scale), and intervention characteristics (i.e., subscales on the Perceived Attributes Scale). Data were imputed, and results were averaged over 10 imputations.

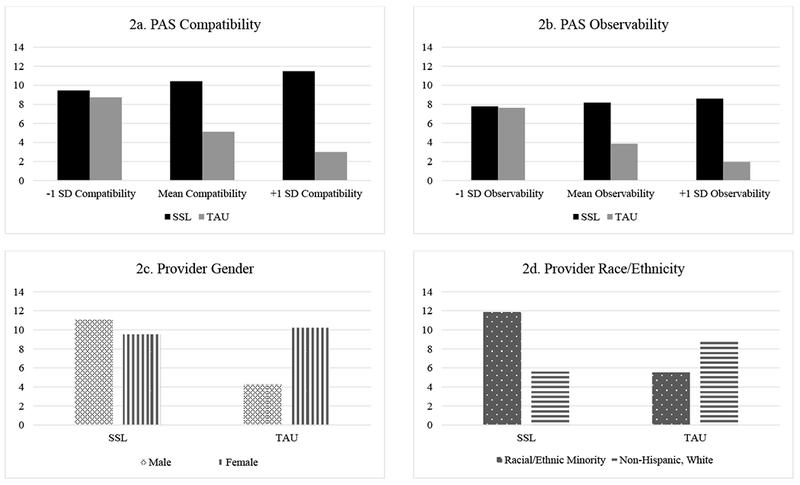

The effect of implementation strategy was not moderated by Inner Setting; however, it was significantly moderated by one Intervention Characteristic. Specifically, there was a non-significant main effect for the PAS Compatibility scale (RR: 0.59, p = .062), such that perceiving CM as more compatible with one’s values was associated with less frequent CM adoption. This non-significant trend was qualified by a significant implementation strategy × Compatibility interaction, such that the negative effect of higher Compatibility on CM adoption was attenuated among SSL providers (p = .040; see Figure 2a). Two Provider Characteristics, gender and racial/ethnic minority status, also moderated the effect of implementation strategy on CM adoption frequency. Female providers delivered CM more frequently at a trend level (RR: 2.38, p = .053), but this effect was significantly attenuated in the SSL condition (p = .035; see Figure 2c). Furthermore, minority providers adopted CM more frequently in the TAU condition than in the SSL condition (interaction p = .038; see Table 4 and Figure 2d), the reverse of the pattern among Non-Hispanic White providers, who adopted CM more frequently in the SSL condition.

Figure 2.

Frequency of CM adoption by significant and trending moderators, with implementation strategy

Note. Y-axes: Estimated number of two-week periods in which CM was implemented (i.e., frequency of CM adoption; out of 26). PAS = Perceived Attributes Scale, SSL = Science to Service Laboratory, TAU = Training as Usual, SD = Standard Deviation.

5. Discussion

The current study examined the speed and magnitude of CM adoption among providers receiving SSL versus TAU implementation strategies. Results aligned with study hypotheses, such that SSL providers began to deliver CM faster and more frequently than TAU providers, further supporting SSL’s capacity to maximize adoption [see blinded for review]. Interestingly, providers who perceived CM to be compatible with their approach to treatment at baseline were somewhat less likely to adopt CM when they received only TAU. However, these negative effects were attenuated by SSL: PAS Compatibility scores were less negatively associated with frequency of CM adoption among SSL providers. Finally, female and racial/ethnic minority providers responded differently to the two implementation strategies, thereby providing important targets for tailoring CM implementation.

5.1. Time to Adoption and Adoption Frequency

Findings suggested that SSL providers adopted CM faster than TAU providers, with the difference in estimated adoption rates varying substantially over time (e.g., 4-week difference at 20% adoption, 10-week difference at 60% adoption). Some evidence suggests that longer delays between training and implementation of a novel practice may reduce competency in and intentions to use the practice, especially without ongoing implementation support (Comer & Barlow, 2014; Palinkas et al., 2008). In the weeks after training, TAU providers may lose enthusiasm for CM, knowledge about it, or the newly acquired skills they need to deliver it; SSL’s ongoing support and supervision could protect against such losses. Still, other research indicates adoption can take up to 10 years to occur (Oser & Roman, 2008), suggesting the observed two-week difference may not represent the totality of SSL’s effect. More research is needed to assess the extent to which a short delay in adoption may affect competent delivery of CM over the long term.

Although the significant difference in time to CM adoption between the SSL and TAU conditions was modest, SSL providers also delivered CM significantly more frequently than TAU providers, indicating SSL was associated with faster initiation and increased persistence in CM implementation. Together, these results highlight the importance of examining multiple measures of adoption. In the SSL condition, communicating monthly with the technology transfer specialist as well as the presence of on-site innovation champions may have enabled providers to overcome well-documented barriers to frequent CM use (see Nelson, Steele, & Mize, 2006). An important next step will be to assess whether the SSL model addresses other critical implementation benchmarks, such as the fidelity with which a practice is delivered over time (Shelton, Cooper, & Stirman, 2018).

5.2. Moderator Analyses

Perceived compatibility of CM with usual practice was the only organizational factor to moderate CM adoption. The significant interaction effect occurred in the context of a main effect that was only significant at the trend level, and therefore should be interpreted with caution. The main effect trend suggested that providers were less likely to adopt CM when they perceived it as compatible with their approach to therapy; these findings did not align with previous research suggesting that compatibility of a practice promotes its adoption (Rogers, 1995). It is possible that providers who perceived CM to be compatible with their approach to therapy might have seen less need to use CM frequently because it overlapped with their preferred clinical practice. While this explanation is purely speculative, it is worth noting that the differential effects of compatibility were attenuated in the SSL condition. These results are consistent with evidence suggesting that workshop training alone is not sufficient to promote the use of a novel practice, even if providers have positive attitudes about the compatibility of the intervention (Beidas & Kendall, 2010). Although providers in TAU may have perceived CM as compatible with their needs and values, they likely required additional support beyond workshop training to adopt CM in lieu of current practices. Ongoing support from the SSL might have helped providers build confidence in using CM instead of their typical approach to treatment, thereby increasing frequency of adoption.

Study findings also highlighted unique effects of gender on CM adoption. Male providers receiving TAU were significantly less likely to adopt CM than females, though SSL appeared to be roughly equally effective among males and females. Tannenbaum and colleagues (2016) and Aarons (2006) noted substantial gender differences in provider decision making, leadership traits within an organization, and collaboration and consensus building, with females being more inquisitive and collaborative when identifying decisions and solutions. By contrast, other studies have found that males are more likely to apply specific evidence-based practices, including the monitoring components of CM (Henggeler et al., 2008), prolonged exposure (Ruzek et al., 2017) and use of clinical decision support tools (Bauer, Carroll, Saha, & Downs, 2016). Our findings that males were less likely to adopt CM overall run counter to this prior work. Of note, it remains unclear to what degree prior findings may be artifacts of small study sample sizes, and several other studies have failed to find significant gender differences in adoption of evidence-based interventions, suggesting that gender effects may vary as a function of contextual factors, like the intervention or implementation strategy (Brookman-Frazee, Haine, Baker-Ericzén, Zoffness, & Garland, 2010; Schoenwald, Letourneau, & Halliday-Boykins, 2005; Shapiro, Prinz, & Sanders, 2012).

There is also equivocal evidence supporting the role of race/ethnicity in influencing providers’ decisions to adopt evidence-based practices like CM. Many studies have failed to explore or did not find a significant impact of race/ethnicity on adoption or adherence to novel practices, like Multi-Systemic Therapy (Schoenwald et al., 2005) and evidence-based elements in youth psychotherapy (Brookman-Frazee et al., 2010). However, race/ethnicity does appear to play a role in therapist attitudes and openness to evidence-based practices (Aarons et al., 2012; Aarons, Hurlburt, & Horwitz, 2011), suggesting the need for further research on the role of race/ethnicity in the adoption of novel practices in community-based OUD clinics. Although numerous articles have highlighted the importance of tailoring implementation strategies to the context of individual organizations or clinics (Powell et al., 2015, 2017), few have discussed strategies for tailoring implementation efforts to individual provider characteristics (i.e., gender, race). The fact that the SSL, a promising theory-driven implementation strategy, was associated with slower time to and lower frequency of CM adoption among racial/ethnic minority providers highlights the critical need for further research on effective tailoring of implementation efforts.

5.3. Limitations

The study has several limitations that are important to note. First, the somewhat small sample used in the present study limits the broader implications of our findings; they would be strengthened by replication in a larger sample. Although there were significant interactions of gender and race with implementation strategy, just 30% of providers were male and fewer than 25% identified as racial/ethnic minorities. Our ability to detect significant results with such small subgroups attests to the strength of the associations, but the small sample also increases the likelihood that other important associations went undetected (i.e., false negatives).

The use of a non-randomized design is another limitation and may have influenced enrollment rates. The present study enrolled 80% of responders and 41% of all invited providers across 18 clinics, which is consistent with enrollment rates in similar state-wide or national treatment implementation studies (Beidas & Kendall, 2010). However, as noted in the primary outcome paper for the ATTC study [blinded for review], the SSL and TAU conditions had differential success rates in recruitment, with eligible SSL clinics and providers more likely to enroll than eligible TAU clinics and providers. As a result, providers who were aware that they would receive additional support may have already had more positive attitudes about CM and increased intention to apply novel CM practices prior to receiving training (Turner & Sanders, 2006). Future research using a randomized approach to assigning providers to training conditions is needed to fully isolate the unique impact of SSL on CM adoption.

Finally, CM adoption was measured via provider self-report, without independent corroboration or any measure of fidelity to CM. Although provider self-report is a time- and cost-efficient way to collect adoption data in OUD community settings, future studies would benefit from incorporating more objective measures of CM adoption (e.g., chart review, session coding).

6. Conclusions

In summary, SSL-trained providers appeared to adopt CM faster and more frequently than TAU providers. These findings further underscore the importance of ongoing supervision and consultation to maximize uptake and sustained implementation of evidence-based practices (Beidas & Kendall, 2010). SSL appeared to reduce the impact of barriers to adoption, including providers’ gender and their perceptions of the compatibility of CM. However, SSL did not appear to be as effective for racial/ethnic minority providers, suggesting a need to tailor implementation efforts to both the organizational and individual cultural context. Future efforts to enhance CM adoption may need to incorporate strategies to address the unique gender and cultural factors that may influence a provider’s decision to use a novel practice.

Highlights.

- Contingency management (CM) works but is underutilized to treat opioid use disorders

- Comprehensive implementation strategies speed adoption & maximize CM delivery

- Organization, treatment and provider characteristics moderated adoption & delivery

Acknowledgments

Data collection was supported by a National Institute on Drug Abuse R21DA021150 grant and a Substance Abuse and Mental Health Services Administration grant (TI013418) awarded to Dr. Squires. Manuscript preparation was supported in part by a Substance Abuse and Mental Health Services Administration H79TI080209 grant awarded to Dr. Becker and a National Institute on Alcohol Abuse and Alcoholism T32 Fellowship (T32 AA007459; PI: Monti) that covered the time of both Dr. Helseth and Dr. Janssen. The authors report no competing interests relevant to this work.

References

- Aarons GA (2006). Transformational and transactional leadership: association with attitudes toward evidence-based practice. Psychiatric Services, 57(8), 1162–1169. doi: 10.1176/ps.2006.57.8.1162

- Aarons GA, Glisson C, Green PD, Hoagwood KE, Kelleher KJ, & Landsverk JA (2012). The organizational social context of mental health services and clinician attitudes toward evidence-based practice: a United States national study. Implementation Science, 7(1), 56. doi: 10.1186/1748-5908-7-56

- Aarons GA, Hurlburt M, & Horwitz SM (2011). Advancing a Conceptual Model of Evidence-Based Practice Implementation in Public Service Sectors. Administration and Policy in Mental Health and Mental Health Services Research, 38(1), 4–23. doi: 10.1007/s10488-010-0327-7

- Aletraris L, Shelton JS, & Roman PM (2015). Counselor Attitudes Toward Contingency Management for Substance Use Disorder: Effectiveness, Acceptability, and Endorsement of Incentives for Treatment Attendance and Abstinence. Journal of Substance Abuse Treatment, 57(4), 41–48. doi: 10.1016/j.jsat.2015.04.012

- Asparouhov T, & Muthén BO (2010). Multiple Imputation with Mplus Technical Report Version 2. Retrieved from https://www.statmodel.com/download/Imputations7.pdf

- Atkins DC, Baldwin SA, Zheng C, Gallop RJ, & Neighbors C (2013). A tutorial on count regression and zero-altered count models for longitudinal substance use data. Psychology of Addictive Behaviors: Journal of the Society of Psychologists in Addictive Behaviors, 27(1), 166–177. doi: 10.1037/a0029508

- Bauer NS, Carroll AE, Saha C, & Downs SM (2016). Experience with decision support system and comfort with topic predict clinicians’ responses to alerts and reminders. Journal of the American Medical Informatics Association, 23(e1), e125–e130. doi: 10.1093/jamia/ocv148

- Beidas RS, & Kendall PC (2010). Training Therapists in Evidence-Based Practice: A Critical Review of Studies From a Systems-Contextual Perspective. Clinical Psychology: Science and Practice, 17(1), 1–30. doi: 10.1111/j.1468-2850.2009.01187.x

- Benishek LA, Kirby KC, Dugosh KL, & Padovano A (2010). Beliefs about the empirical support of drug abuse treatment interventions: A survey of outpatient treatment providers. Drug and Alcohol Dependence, 107(2–3), 202–208. doi: 10.1016/j.drugalcdep.2009.10.013

- Brookman-Frazee L, Haine RA, Baker-Ericzén M, Zoffness R, & Garland AF (2010). Factors associated with use of evidence-based practice strategies in usual care youth psychotherapy. Administration and Policy in Mental Health and Mental Health Services Research, 37(3), 254–269. doi: 10.1007/s10488-009-0244-9

- Comer JS, & Barlow DH (2014). The occasional case against broad dissemination and implementation: Retaining a role for specialty care in the delivery of psychological treatments. American Psychologist, 69(1), 1–18. doi: 10.1037/a0033582

- Damschroder LJ, & Hagedorn HJ (2011). A guiding framework and approach for implementation research in substance use disorders treatment. Psychology of Addictive Behaviors, 25(2), 194–205. doi: 10.1037/a0022284

- Ducharme LJ, Knudsen HK, Roman PM, & Johnson JA (2007). Innovation adoption in substance abuse treatment: exposure, trialability, and the Clinical Trials Network. Journal of Substance Abuse Treatment, 32(4), 321–329. doi: 10.1016/j.jsat.2006.05.021

- Griffith JD, Rowan-Szal GA, Roark RR, & Simpson DD (2000). Contingency management in outpatient methadone treatment: a meta-analysis. Drug and Alcohol Dependence, 58(1–2), 55–66. doi: 10.1016/S0376-8716(99)00068-X

- Hartzler B, Donovan DM, Tillotson CJ, Mongoue-Tchokote S, Doyle SR, & McCarty D (2012). A multilevel approach to predicting community addiction treatment attitudes about contingency management. Journal of Substance Abuse Treatment, 42(2), 213–221. doi: 10.1016/j.jsat.2011.10.012

- Hartzler B, Jackson TR, Jones BE, Beadnell B, & Calsyn DA (2014). Disseminating contingency management: impacts of staff training and implementation at an opiate treatment program. Journal of Substance Abuse Treatment, 46(4), 429–438. doi: 10.1016/j.jsat.2013.12.007

- Hartzler B, Lash SJ, & Roll JM (2012). Contingency management in substance abuse treatment: A structured review of the evidence for its transportability. Drug and Alcohol Dependence, 122(1–2), 1–10. doi: 10.1016/j.drugalcdep.2011.11.011

- Henggeler SW, Chapman JE, Rowland MD, Halliday-Boykins CA, Randall J, Shackelford J, & Schoenwald SK (2008). Statewide adoption and initial implementation of contingency management for substance-abusing adolescents. Journal of Consulting and Clinical Psychology, 76(4), 556–567. doi: 10.1037/0022-006X.76.4.556

- Karahanna E, Straub DW, & Chervany NL (1999). Information technology adoption across time: a cross-sectional comparison of pre-adoption and post-adoption beliefs. MIS Quarterly, 23(2), 183–213.

- Kirby KC, Benishek LA, Dugosh KL, & Kerwin ME (2006). Substance abuse treatment providers’ beliefs and objections regarding contingency management: Implications for dissemination. Drug and Alcohol Dependence, 85(1), 19–27. doi: 10.1016/j.drugalcdep.2006.03.010

- Kraemer HC, Wilson GT, Fairburn CG, & Agras WS (2002). Mediators and moderators of treatment effects in randomized clinical trials. Archives of General Psychiatry, 59(10), 877–883. doi: 10.1001/archpsyc.59.10.877

- Lehman WEK, Greener JM, & Simpson DD (2002). Assessing organizational readiness for change. Journal of Substance Abuse Treatment, 22(4), 197–209. doi: 10.1016/S0740-5472(02)00233-7

- Moore GC, & Benbasat I (1991). Development of an Instrument to Measure the Perceptions of Adopting an Information Technology Innovation. Information Systems Research, 2(3), 192–222. doi: 10.1287/isre.2.3.192

- Muthén LK, & Muthén BO (2012). Mplus User’s Guide (Eighth). Los Angeles, CA: Muthén & Muthén.

- Nelson TD, Steele RG, & Mize JA (2006). Practitioner attitudes toward evidence-based practice: themes and challenges. Administration and Policy in Mental Health, 33(3), 398–409. doi: 10.1007/s10488-006-0044-4

- Nosyk B, Anglin MD, Brissette S, Kerr T, Marsh DC, Schackman BR, … Montaner JSG (2013). A call for evidence-based medical treatment of opioid dependence in the United States and Canada. Health Affairs (Project Hope), 32(8), 1462–1469. doi: 10.1377/hlthaff.2012.0846

- Oser CB, & Roman PM (2008). A categorical typology of naltrexone-adopting private substance abuse treatment centers. Journal of Substance Abuse Treatment, 34(4), 433–442. doi: 10.1016/j.jsat.2007.08.003

- Palinkas LA, Schoenwald SK, Hoagwood K, Landsverk J, Chorpita BF, Weisz JR, & Research Network on Youth Mental Health (2008). An ethnographic study of implementation of evidence-based treatment in child mental health: First steps. Psychiatric Services, 59(7), 738–746. doi: 10.1176/appi.ps.59.7.738

- Petry NM (2012). Contingency management for substance abuse treatment: A guide to implementing this evidence-based practice. New York: Routledge.

- Petry NM, Alessi SM, & Ledgerwood DM (2012). Contingency management delivered by community therapists in outpatient settings. Drug and Alcohol Dependence, 122(1–2), 86–92. doi: 10.1016/j.drugalcdep.2011.09.015

- Petry NM, Alessi SM, Marx J, Austin M, & Tardif M (2005). Vouchers versus prizes: Contingency management treatment of substance abusers in community settings. Journal of Consulting and Clinical Psychology, 73(6), 1005–1014. doi: 10.1037/0022-006X.73.6.1005

- Petry NM, & Stitzer ML (2002). Contingency management: Using motivational incentives to improve drug abuse treatment. West Haven, CT.

- Plouffe CR, Hulland JS, & Vandenbosch M (2001). Research Report: Richness Versus Parsimony in Modeling Technology Adoption Decisions—Understanding Merchant Adoption of a Smart Card-Based Payment System. Information Systems Research, 12(2), 208–222.

- Powell BJ, Beidas RS, Lewis CC, Aarons GA, McMillen JC, Proctor EK, & Mandell DS (2017). Methods to Improve the Selection and Tailoring of Implementation Strategies. The Journal of Behavioral Health Services & Research, 44(2), 177–194. doi: 10.1007/s11414-015-9475-6

- Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, … Kirchner JE (2015). A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implementation Science, 10(1), 21. doi: 10.1186/s13012-015-0209-1

- Prendergast M, Podus D, Finney J, Greenwell L, & Roll J (2006). Contingency management for treatment of substance use disorders: A meta-analysis. Addiction, 101(11), 1546–1560. doi: 10.1111/j.1360-0443.2006.01581.x

- Rogers EM (1995). Elements of Diffusion. Diffusion of Innovations (4th ed.). New York, NY: Simon & Schuster Inc.

- Rudd RA, Seth P, David F, & Scholl L (2016). Increases in Drug and Opioid-Involved Overdose Deaths — United States, 2010–2015. MMWR Morbidity and Mortality Weekly Report, 65, 1445–1452. doi: 10.15585/mmwr.mm655051e1

- Ruzek JI, Eftekhari A, Crowley J, Kuhn E, Karlin BE, & Rosen CS (2017). Post-training Beliefs, Intentions, and Use of Prolonged Exposure Therapy by Clinicians in the Veterans Health Administration. Administration and Policy in Mental Health, 44(1), 123–132. doi: 10.1007/s10488-015-0689-y

- Schoenwald SK, Letourneau EJ, & Halliday-Boykins C (2005). Predicting Therapist Adherence to a Transported Family-Based Treatment for Youth. Journal of Clinical Child & Adolescent Psychology, 34(4), 658–670. doi: 10.1207/s15374424jccp3404_8

- Shapiro CJ, Prinz RJ, & Sanders MR (2012). Facilitators and Barriers to Implementation of an Evidence-Based Parenting Intervention to Prevent Child Maltreatment: The Triple P-Positive Parenting Program. Child Maltreatment, 17(1), 86–95. doi: 10.1177/1077559511424774

- Shelton RC, Cooper BR, & Stirman SW (2018). The Sustainability of Evidence-Based Interventions and Practices in Public Health and Health Care. Annual Review of Public Health, 39(1), 55–76. doi: 10.1146/annurev-publhealth-040617-014731

- Singer JD, & Willett JB (2003). Applied longitudinal data analysis. Oxford, UK: Oxford University Press.

- Squires DD, Gumbley SJ, & Storti SA (2008). Training substance abuse treatment organizations to adopt evidence-based practices: The Addiction Technology Transfer Center of New England Science to Service Laboratory. Journal of Substance Abuse Treatment, 34(3), 293–301. doi: 10.1016/j.jsat.2007.04.010

- Tannenbaum C, Greaves L, & Graham ID (2016). Why sex and gender matter in implementation research. BMC Medical Research Methodology, 16(1), 145. doi: 10.1186/s12874-016-0247-7

- Turner KMT, & Sanders MR (2006). Dissemination of evidence-based parenting and family support strategies: Learning from the Triple P—Positive Parenting Program system approach. Aggression and Violent Behavior, 11(2), 176–193. doi: 10.1016/j.avb.2005.07.005

- van Buuren S (2007). Multiple imputation of discrete and continuous data by fully conditional specification. Statistical Methods in Medical Research, 16(3), 219–242. doi: 10.1177/0962280206074463

- Venkatesh V, Morris MG, & Davis FD (2003). User Acceptance of Information Technology: Toward a Unified View. MIS Quarterly, 27(3), 425–478.

- Williams R (2006). Review of regression models for categorical dependent variables using Stata by Long and Freese. The Stata Journal, 6(2), 273–278.