Abstract

Automatic and efficient plant monitoring enables accurate plant management. Construction of three-dimensional (3D) models of plants and acquisition of their spatial information is an effective method for obtaining plant structural parameters. Here, 3D images of leaves constructed from multiple images of a scene taken from different positions were segmented automatically for the automatic retrieval of leaf areas and inclination angles. First, for the initial segmentation, leaf images were viewed from the top, and leaves in the top-view images were segmented using distance transform and the watershed algorithm. Next, the images of leaves after the initial segmentation were reduced by 90%, and the seed regions for each leaf were produced. The seed regions were re-projected onto the 3D images, and each leaf was segmented by expanding its seed region with the 3D information. After leaf segmentation, the leaf area of each leaf and its inclination angle were estimated via a voxel-based calculation. As a result, leaf area and leaf inclination angle were estimated accurately after automatic leaf segmentation. This method for automatic plant structure analysis allows accurate and efficient plant breeding and growth management.

Keywords: leaf segmentation, three-dimensional imaging, leaf inclination angle estimation, leaf area estimation

1. Introduction

In the growth and developmental processes, plant structures change during the seasons because of environmental processes [1]. For accurate plant breeding and growth monitoring, it is necessary to obtain information about the plant structures affecting the plant status [2,3,4]. For example, leaf area index (LAI) is an important plant structural parameter because it determines the primary photosynthetic production, plant evaporation, and plant growth characterization [5]. Leaf inclination angle can also be an informative plant structural parameter because it can be an indicator of a growth-state, such as a water condition [6]. It relates greatly to increasing photosynthetic productivity [7]. Thus, LAI and leaf inclination angles are important plant structural parameters. However, the conventional methods of measuring LAI and leaf inclination angles are destructive and tedious [5,8].

Two-dimensional (2D) imaging techniques have been utilized for obtaining structural parameters, such as height, stem diameter, and leaf area, which have led to plant functional analysis. However, techniques based on 2D imaging are insufficient for investigating the spatial plant structures because plants have complicated three-dimensional (3D) structures [4,9,10,11]. Moreover, because a 2D image is essentially a projected image in one direction, some leaves in 2D images are hidden by others (occlusion), and the structural parameters are difficult to estimate accurately. In other words, 2D projections remove important parts of the information and fail to exploit the full potential of shape analysis [11]. Construction of 3D models of plants and acquisition of their spatial information is therefore an effective method of overcoming these problems. It provides the information on plant functions and conditions that is necessary for appropriate breeding and growth management.

For 3D model construction, lidar (light detection and ranging) currently attracts attention. It is a 3D scanner that measures the distance to a target using the elapsed time between the emission and return of laser pulses. It can record many 3D point-cloud data of a target, from which information about plant structural parameters, such as shape, LAI, leaf area density, leaf inclination angle, location, height, and volume, can be extracted [12,13,14,15,16,17,18,19,20]. Another method is the stereo vision system, which constructs 3D point clouds from a set of two images [21,22]. Takizawa et al. [23] used stereo vision to construct plant 3D models, and Mizuno et al. [24] used stereo vision for wilt detection. With the exponential increase in computational power and the widespread availability of digital cameras, the use of a photogrammetric approach (i.e., structure from motion (SfM)) has generated 3D point-cloud models from 2D imagery and has become widespread [1,25,26,27,28,29]. The SfM approach requires multiple images of a scene taken from different positions. From those images, camera parameters and camera positions are calculated, and 3D point-cloud images are constructed [30]. More detailed 3D models can be constructed by this method, compared to stereo vision, owing to the use of multiple scene images. In previous studies, plant shape (e.g., height, stem length, leaf area) could be estimated accurately from 3D models constructed by SfM [4,28].

After obtaining detailed 3D plant structural models, automatic detection of individual leaves becomes a fundamental and challenging task in agricultural practices [31]. For example, in phenotyping, to reduce labor cost, there has been a growing interest in developing solutions for the automated analysis of visually observable plant traits [32]. To extract plant traits, automatic leaf segmentation in 3D images is essential. If leaves in 3D images are segmented automatically, it becomes possible to automatically extract structural plant traits. Furthermore, automatic segmentation can be applied to many related works in agricultural automation (e.g., de-leafing, plant inspection, pest management [31]).

In previous studies on 3D segmentation methods, Alenya et al. [33] and Xia et al. [31] proposed segmentation methods using depth sensors. However, these methods suffered from low segmentation accuracy and had difficulty when neighboring leaves were near one another. Paproki et al. [10] presented a 3D mesh-based technique developed for leaf segmentation. In that study, 3D point-cloud models were converted into mesh-based 3D models, and tubular fittings were applied to organs such as stems or petioles. Afterwards, each leaf of a 3D image was segmented [34]. However, when mesh-based 3D models were built, not all regions in the 3D model could be filled with meshes, so some holes occurred on the surfaces. Because this segmentation method utilized the normal vector of each polygon, whole-leaf surfaces had to be filled with meshes, and the holes on the 3D model had to be filled manually. The entire segmentation process was therefore difficult to fully automate. The segmentation of 3D leaves using top-view 2D images was also proposed by Kaminuma et al. [35] and Teng et al. [36]. In these studies, k-curvature and graph-cut algorithms were used for leaf segmentation. Those methods had difficulty segmenting leaves that overlapped in the top view. Thus, their application was limited to simple plants having only a few leaves without overlapping in the top-view images.

Moreover, automatic estimation of plant structural parameters, such as leaf area and leaf inclination angle, was also difficult. In the case of mesh-based 3D modeling, leaf area and leaf inclination angle could be calculated based on the area and normal vector of each polygon mesh. However, the construction of mesh-based 3D models entailed manual operations to fill holes on the surface of leaves. Thus, the automatic extraction of the plant structural parameters was difficult.

In previous segmentation methods, accuracy was not satisfactory and the samples were limited to those having few leaves; the fully automatic process remained difficult. Furthermore, automatic estimation of structural parameters of plants was also difficult.

In this study, we propose methods for accurate and automatic leaf segmentation and retrieval of plant structural parameters using 3D point-cloud images, overcoming disadvantages of previous studies. Leaves in 3D models were segmented automatically with a 3D point-cloud processing method combined with a 2D image processing method. After the process, leaf area and leaf inclination angle in each segmented leaf were estimated accurately and automatically.

2. Materials and Methods

2.1. Plant Material

For the experiments, we selected small plants (i.e., Pothos (Epipremnum aureum), Hydrangea (Hydrangea macrophylla), Dwarf schefflera (Schefflera arboricola), Council tree (Ficus altissima), Kangaroo vine (Cissus antarctica), Umbellata (Ficus umbellate), and Japanese sarcandra (Sarcandra glabra)). The height and number of leaves ranged from 20 to 60 cm and from 3 to 11, respectively.

2.2. 3D Reconstruction of Plants

The camera used in image acquisition for 3D reconstruction was a Canon EOS M2 (Canon Inc., Tokyo, Japan). The camera was hand-held, and about 80 images were recorded for each sample. The number of images was set to obtain sufficient 3D resolution for each leaf to be clearly observed. For 3D reconstruction image acquisition, we referred to Rose et al. [28], where images were taken by moving around the sample and closing the circle. The distance from the camera to the plant was about 30–100 cm. The images were taken from an oblique angle or horizontally. The resolution of the image was 3456 × 5184 pixels. For 3D point-cloud image construction using SfM [37], camera calibration was done using Agisoft Lens (Agisoft LCC, Saint Petersburg, Russia). For the calibration, about 100 images of a checkerboard were taken from various angles and positions; the software extracted the image corners automatically and calculated the calibration parameters. Then, using the software Agisoft Photoscan Professional (Agisoft LCC, Russia), plant 3D point-cloud images were reconstructed [38]. The reconstructed models did not have any spatial scale and their coordinates did not correspond to world coordinates. Thus, a cube-shaped reference, such as a box, was placed around the plant, and the scale and coordinates of the 3D image were determined [38].

2.3. Automatic Leaf Segmentation and Its Evaluation

2.3.1. Conversion into Voxel Coordinate

In the reconstructed 3D model, non-green points (i.e., non-leaf parts such as branches and other noise), for which the normalized green value (G/(R + G + B)) was less than 0.4, were removed. All points constituting the 3D model were converted into voxel coordinates (voxel-based 3D model), in which the X, Y, and Z values of each point were rounded to the nearest integer, allowing structural parameters to be calculated efficiently [12,13,15,18,19]. The voxel size was set between about 0.03 and 0.2 cm according to the plant scale; smaller voxel sizes were given to smaller plants. Voxels corresponding to coordinates converted from points within the data were assigned an attribute value of 1, and the space without points was given an attribute value of 0 [39].
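The filtering and voxel conversion described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function name `voxelize_green_points`, the tuple layout of the input points, and the division by the voxel size before rounding are assumptions made for the example.

```python
def voxelize_green_points(points, voxel_size, green_threshold=0.4):
    """Return the set of occupied voxel indices for sufficiently green points.

    points: iterable of (x, y, z, r, g, b); coordinates in cm (assumed layout).
    voxel_size: edge length of a voxel in cm (0.03 to 0.2 cm in the text).
    A voxel index present in the returned set has attribute value 1;
    every other voxel is implicitly 0.
    """
    occupied = set()
    for x, y, z, r, g, b in points:
        total = r + g + b
        if total == 0:
            continue  # normalized green is undefined for black points; drop them
        if g / total < green_threshold:
            continue  # non-green point (branch or noise)
        # Assumption: coordinates are scaled by the voxel size, then rounded
        # to the nearest integer (Python rounds exact halves to even).
        occupied.add((round(x / voxel_size),
                      round(y / voxel_size),
                      round(z / voxel_size)))
    return occupied
```

Storing only the occupied indices in a set keeps the model sparse, which is convenient for the neighbor lookups used later in the segmentation.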

2.3.2. Generation of Top-View Binary 2D Image from Voxel-Based 3D Model

Each point in the voxel-based 3D model was projected onto the plane above the model, and the binary 2D images of the top view could be obtained. Pixel values in the binary image were the same as the corresponding voxels. In the projected images, regions of leaves included small holes caused by lack of points. The holes were filled using an algorithm based on morphological reconstruction, in which a hole was filled if the pixel values around the hole were not zero [40].
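A small sketch of the projection and hole filling is given below. It assumes a non-empty set of integer voxel indices and fills holes by flood-filling the background from the image border with 4-connectivity, which is one common way to realize reconstruction-based hole filling; the cited algorithm [40] may differ in detail. The function names are hypothetical.

```python
from collections import deque


def top_view_binary(voxels):
    """Project occupied voxels (x, y, z) onto the XY plane as a binary grid.

    Returns the image (list of rows) and the (x, y) index of its origin.
    """
    xs = [v[0] for v in voxels]
    ys = [v[1] for v in voxels]
    x0, y0 = min(xs), min(ys)
    width, height = max(xs) - x0 + 1, max(ys) - y0 + 1
    image = [[0] * width for _ in range(height)]
    for x, y, _z in voxels:
        image[y - y0][x - x0] = 1
    return image, (x0, y0)


def fill_holes(image):
    """Set to 1 every background pixel that is not connected to the border."""
    height, width = len(image), len(image[0])
    reached = [[False] * width for _ in range(height)]
    queue = deque()
    # Start from every background pixel lying on the image border.
    for r in range(height):
        for c in range(width):
            on_border = r in (0, height - 1) or c in (0, width - 1)
            if on_border and image[r][c] == 0:
                reached[r][c] = True
                queue.append((r, c))
    # Flood-fill the outer background (4-connected).
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < height and 0 <= nc < width
                    and image[nr][nc] == 0 and not reached[nr][nc]):
                reached[nr][nc] = True
                queue.append((nr, nc))
    # Unreached background pixels are enclosed holes.
    return [[1 if image[r][c] == 1 or not reached[r][c] else 0
             for c in range(width)] for r in range(height)]
```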

2.3.3. Generation of Seed Regions for 3D Leaf Segmentation

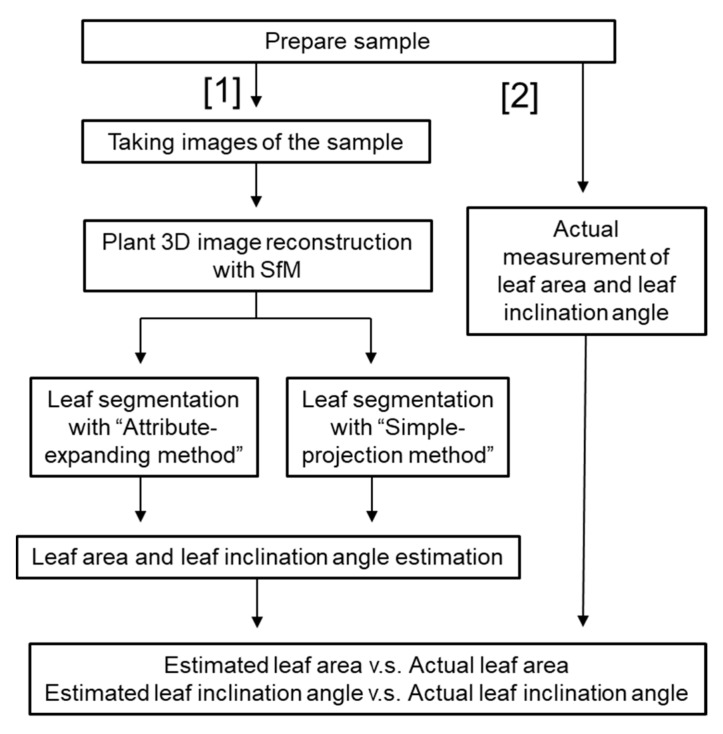

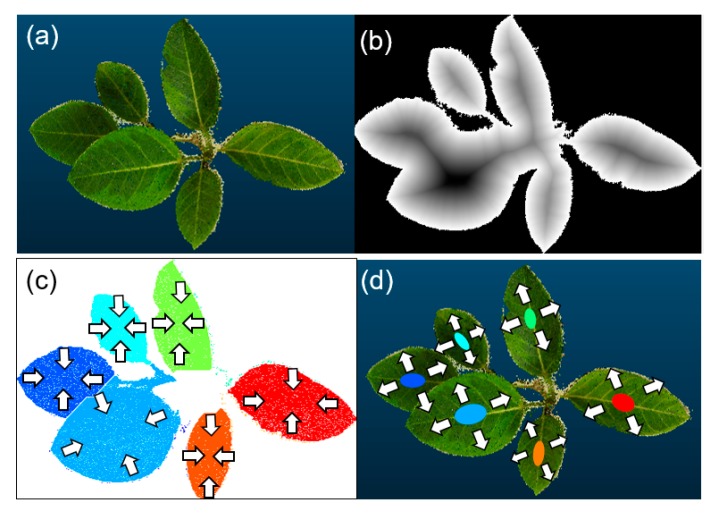

The flow chart of the experimental process is shown in Figure 1. The method for leaf segmentation is explained next. In the top-view 2D binary images, the distance transform [41] was implemented, for which the distance between each background pixel (pixel value: 0) and the nonzero pixel (leaf or plant organs) nearest the background was calculated [42]. The border between the background and the nonzero pixels corresponded to the leaf edges. Figure 2a,b represent the top-view image of a 3D point cloud and the grayscale image via distance transform, respectively. In the grayscale image (Figure 2b), the contrast represents the distance from the nearest leaf edges. Areas closer to the leaf edges are illustrated by brighter colors. Then, the watershed algorithm [43] was applied to the distance-transformed image as the initial leaf segmentation process. Afterwards, the segment numbers (i.e., leaf numbers) were assigned to each pixel of the segmented leaves in the 2D image. The initial leaf segmentation result is shown in Figure 2c. Regions of zero pixels had no leaf numbers. The initial 2D leaf segmentation was insufficient because the occluded regions and boundaries between leaves were not correctly segmented. Afterwards, each segmented region in the image was scaled down to 1/10 around its centroid (arrows in Figure 2c show the directions of reduction). The resulting 1/10 regions (i.e., seed regions) were projected onto voxels in the 3D model (Figure 2d), and the voxels corresponding to the seed regions were assigned the corresponding leaf number.
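The shrinking step that turns each initially segmented region into a seed region can be sketched as below. This is an illustrative assumption of how the 1/10 scaling around the centroid might be carried out on a label image (0 for background, positive integers for leaf numbers); the function name `shrink_to_seeds` is hypothetical, and the sketch does not handle strongly non-convex regions whose centroid falls outside the leaf.

```python
def shrink_to_seeds(labels, scale=0.1):
    """Scale every labeled region toward its own centroid.

    labels: 2D list of ints, 0 = background, k > 0 = leaf number.
    Returns a label image of the same size containing only the seed regions.
    """
    height, width = len(labels), len(labels[0])
    # Collect the pixel coordinates of each leaf.
    pixels = {}
    for r in range(height):
        for c in range(width):
            leaf = labels[r][c]
            if leaf:
                pixels.setdefault(leaf, []).append((r, c))
    seeds = [[0] * width for _ in range(height)]
    for leaf, coords in pixels.items():
        cr = sum(r for r, _ in coords) / len(coords)
        cc = sum(c for _, c in coords) / len(coords)
        for r, c in coords:
            # Move the pixel toward the centroid; offsets shrink to 1/10.
            sr = round(cr + (r - cr) * scale)
            sc = round(cc + (c - cc) * scale)
            seeds[sr][sc] = leaf
    return seeds
```

Because the linear scale is 1/10, a seed region keeps roughly 1/100 of the original pixel count, which keeps it well inside the leaf and away from the uncertain boundaries of the initial segmentation.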

Figure 1.

Flow chart of the process from 3D reconstruction, leaf segmentation to estimation of structural parameters.

Figure 2.

Top-view 2D images of a plant generating initial seed regions for 3D leaf segmentation: (a) top-view image of a 3D plant point-cloud image; (b) grayscale image via distance transform, in which the contrast represents the distance from the nearest edges; (c) an image after initial segmentation by the watershed algorithm, in which colors represent each leaf and arrows indicate the directions of shrinking to create seed regions; and (d) an image representing seed regions for the 3D leaf segmentation, in which arrows show the directions for expanding each region in the 3D images.

2.3.4. Automatic Leaf Segmentation by Expanding the Seed Region

Next, leaf numbers were assigned to the nonzero voxels neighboring the seed regions. Then, the leaf number was also assigned to the nonzero voxels neighboring those newly labeled voxels. By repeating this process, each seed region was expanded three-dimensionally, as shown in Figure 2d. The process was repeated until leaf numbers were assigned to all nonzero voxels, at which point the leaf segmentation was finished. This method has not been introduced before, and we call it the “attribute-expanding method” in this paper.
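The expansion described above behaves like a multi-source breadth-first region growing over occupied voxels. The following sketch illustrates the idea under stated assumptions: a 26-voxel neighborhood, a set of occupied voxel indices, and a dictionary of seed voxels; the text does not specify the neighborhood, and the function name `expand_seeds` is hypothetical.

```python
from collections import deque
from itertools import product

# Assumption: 26-connectivity (all voxels sharing a face, edge, or corner).
NEIGHBOR_OFFSETS = [d for d in product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]


def expand_seeds(occupied, seed_labels):
    """Grow leaf numbers from seed voxels over neighboring occupied voxels.

    occupied: set of (x, y, z) voxel indices with attribute value 1.
    seed_labels: dict mapping seed voxels to their leaf number.
    Returns a dict mapping every reachable occupied voxel to a leaf number.
    """
    labels = {v: leaf for v, leaf in seed_labels.items() if v in occupied}
    queue = deque(labels)
    while queue:
        x, y, z = queue.popleft()
        leaf = labels[(x, y, z)]
        for dx, dy, dz in NEIGHBOR_OFFSETS:
            neighbor = (x + dx, y + dy, z + dz)
            # Only unlabeled occupied voxels take over the leaf number, so
            # growth stops where a leaf ends or another label already exists.
            if neighbor in occupied and neighbor not in labels:
                labels[neighbor] = leaf
                queue.append(neighbor)
    return labels
```

In this sketch, occupied voxels that are not connected to any seed stay unlabeled, and two leaves that touch in 3D would be split at the front where the two expansions meet.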

2.4. Automatic Leaf Area and Leaf Inclination Angle Estimation from Segmented Leaves

Leaf area and leaf inclination angle were estimated from segmented leaves. In the leaf area calculation, the voxel sizes of the X and Y axes were not changed, but the voxel size of the Z axis was changed to 3.0 to reduce the influence of noise around leaf surfaces in the vertical direction, which would otherwise cause overestimation of leaf area. The leaf area was calculated by multiplying the total number of voxels within a leaf by the area of the horizontal face of a voxel.
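As a rough sketch of this calculation, the snippet below merges voxel layers along Z before counting. The text does not state the unit of the Z-axis value 3.0, so the parameter `z_merge` (number of original Z layers merged into one coarse layer) is an assumption made purely for illustration, as is the function name.

```python
def leaf_area(leaf_voxels, voxel_size_cm, z_merge=3):
    """Estimate leaf area in cm^2 from the voxels of one segmented leaf.

    leaf_voxels: iterable of integer (x, y, z) voxel indices.
    voxel_size_cm: edge length of a voxel along X and Y, in cm.
    z_merge: assumed number of Z layers merged into one coarse voxel.
    """
    # Coarsen only the Z axis so that vertical noise around the leaf
    # surface collapses into a single voxel per (x, y) column segment.
    coarse = {(x, y, z // z_merge) for x, y, z in leaf_voxels}
    # Area = voxel count times the area of a voxel's horizontal face.
    return len(coarse) * voxel_size_cm ** 2
```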

For leaf inclination angle estimation, Hosoi et al. [44] fitted a plane onto one leaf, and the inclination angle was calculated from the normal vector. Based on this study, the inclination angle was calculated for each leaf by fitting points around the centroid point of each segmented leaf.
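A plane fit of this kind can be sketched as follows. The sketch assumes an ordinary least-squares fit of z = ax + by + c and takes the inclination angle as the angle between the plane normal and the vertical axis; the exact fitting procedure of [44] and the way points "around the centroid" are selected are not given in the text, so the optional `radius` parameter is hypothetical. The fit is not suitable for near-vertical leaves.

```python
import math


def _centroid(points):
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


def inclination_angle_deg(points, radius=None):
    """Inclination angle (degrees) of the least-squares plane through points.

    points: list of (x, y, z) coordinates belonging to one leaf.
    radius: if given, only points within this distance of the centroid
            are used (assumed selection rule).
    """
    center = _centroid(points)
    if radius is not None:
        points = [p for p in points if math.dist(p, center) <= radius]
        center = _centroid(points)
    cx, cy, cz = center
    sxx = sum((x - cx) ** 2 for x, _, _ in points)
    syy = sum((y - cy) ** 2 for _, y, _ in points)
    sxy = sum((x - cx) * (y - cy) for x, y, _ in points)
    sxz = sum((x - cx) * (z - cz) for x, _, z in points)
    syz = sum((y - cy) * (z - cz) for _, y, z in points)
    det = sxx * syy - sxy ** 2
    if abs(det) < 1e-12:
        raise ValueError("points are collinear or too few to define a plane")
    # Solve the 2x2 normal equations for the slopes a and b.
    a = (sxz * syy - syz * sxy) / det
    b = (syz * sxx - sxz * sxy) / det
    # The normal is (-a, -b, 1); its angle from vertical is atan(|(a, b)|).
    return math.degrees(math.atan(math.hypot(a, b)))
```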

2.5. Evaluation of the Accuracy of Automatic Leaf Segmentation and Leaf Area and Leaf Inclination Angle Estimation

To evaluate the accuracy of automatic leaf segmentation, leaf area estimates after manual segmentation from the 3D images were compared with those after the present automatic leaf segmentation (n = 61). A success rate was also defined for evaluating the accuracy of the present segmentation: the ratio of the number of correctly segmented leaves to the total number of leaves within a plant. A leaf was regarded as correctly segmented if the percentage error of its automatic leaf area estimation was less than 10%, an accuracy high enough to be practical [28].
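The success-rate criterion can be written compactly as in the sketch below. The function names are hypothetical, and the manually segmented leaf area is assumed to serve as the reference value for the percentage error.

```python
def percent_error(estimated, reference):
    """Absolute percentage error of an estimate relative to a reference."""
    return abs(estimated - reference) / reference * 100.0


def success_rate(auto_areas, manual_areas, tolerance_pct=10.0):
    """Percentage of leaves whose automatic area is within the tolerance.

    auto_areas, manual_areas: leaf areas in matching order (same units).
    """
    correct = sum(
        1 for auto, manual in zip(auto_areas, manual_areas)
        if percent_error(auto, manual) < tolerance_pct
    )
    return 100.0 * correct / len(manual_areas)
```

For example, with automatic areas of 10.0, 19.0, 25.0, and 40.0 cm² against manual areas of 10.5, 20.0, 30.0, and 41.0 cm², three of the four leaves fall within 10%, giving a success rate of 75%.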

Next, the performance on leaves overlapping in the top view was compared between the present segmentation method and a 2D image-based method. The latter simply projects the initial segmentation result (e.g., Figure 2c) onto the 3D plant model (i.e., the simple-projection method). We prepared 10 sets of two leaves that overlapped in the top view, and the accuracy of leaf area estimation after segmentation was compared between the two methods. Then, to evaluate the accuracy of the voxel-based leaf area estimation, the estimated leaf area and actual leaf area were compared (n = 30). To obtain the actual leaf area, JPEG images of leaves were taken, and the areas were determined by multiplying the number of pixels by the area per pixel. When taking the JPEG images, leaves were pressed between a transparent board and a white board to flatten them.

The leaf inclination angle estimated from the 3D model was compared with the actual value measured by an inclinometer (n = 30) to evaluate the error of leaf inclination angle estimation.

3. Results

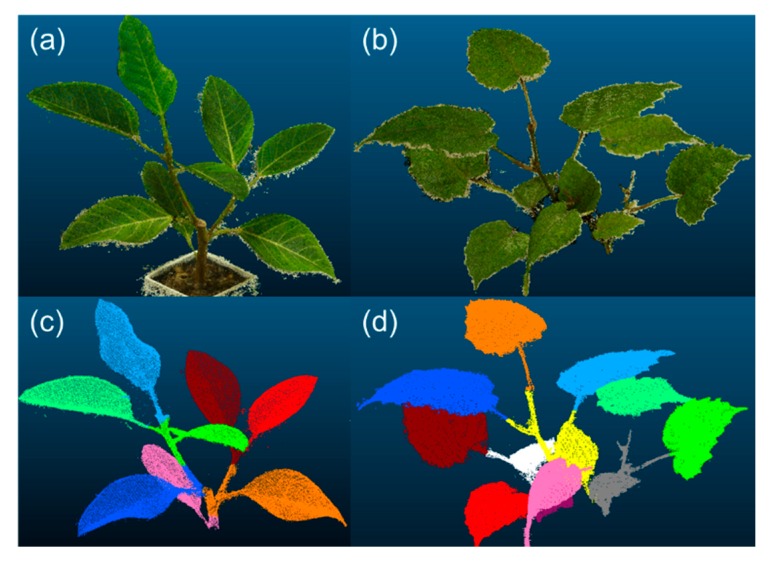

3.1. Leaf Segmentation

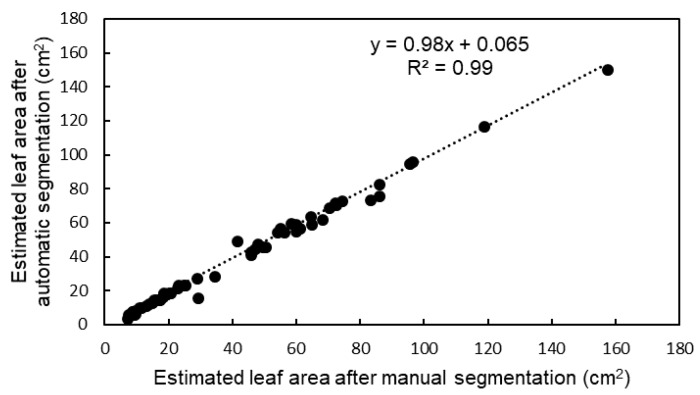

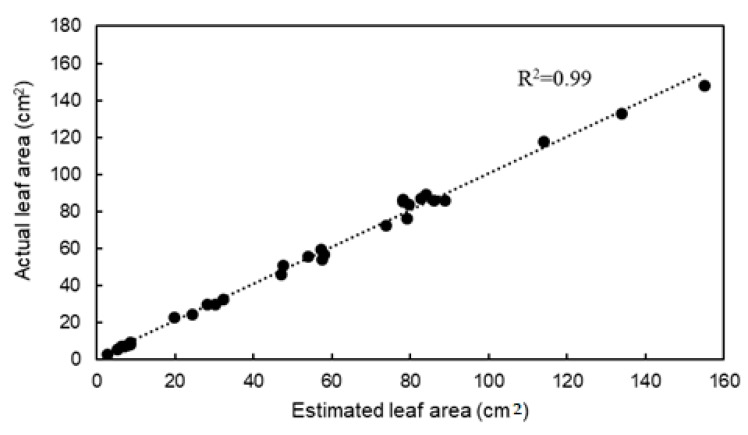

Figure 3 illustrates examples of segmentation results. Plants in images (a) and (b) had 8 and 11 leaves, respectively. The attribute-expanding method enabled the accurate segmentation of plants with such structures. Table 1 presents the accuracy of leaf area estimation and leaf segmentation. As shown in the table, for all segmented leaves, the success rate was 86.9%. Absolute leaf area estimation error tended to increase with increasing leaf area. Figure 4 shows the relationship between leaf area estimates after manual segmentation and those after automatic leaf segmentation. The leaf area obtained with the present automatic method was highly correlated with that obtained after manual segmentation (R² = 0.99), with a root-mean-square error (RMSE) of 3.23 cm². Leaf area estimates by the simple-projection method were also compared to those after manual segmentation, giving an RMSE of 8.26 cm², much higher than that of the present automatic segmentation.

Figure 3.

Segmentation results of plants: plants in image (a) (Council tree) and (b) (Kangaroo vine) have 8 and 11 leaves, respectively; images (a,b) represent 3D point-cloud images of the target plants; images (c,d) show the results of segmentation of images (a,b), respectively.

Table 1.

Accuracy of leaf area estimation and leaf segmentation for each plant; success rate represents the percentage of leaves correctly segmented with more than 90% leaf area estimation accuracy.

| Plant | Average Leaf Number | Leaf Area (cm²) | Absolute Leaf Area Estimation Error (cm²) | Success Rate (%) |
|---|---|---|---|---|
| Dwarf schefflera | 5 | 8.06 | 0.06 | 100 |
| Kangaroo vine | 11 | 13.74 | 0.67 | 82 |
| Pothos | 4 | 20.67 | 0.29 | 100 |
| Hydrangea | 4 | 41.48 | 1.66 | 100 |
| Council tree | 5.3 | 59.68 | 3.44 | 75 |
| Dwarf schefflera | 5 | 73.48 | 2.35 | 90 |
| All samples | 5.7 | 36.2 | 1.73 | 86.9 |

Figure 4.

Relationship between leaf area estimates after manual segmentation and those after automatic leaf segmentation.

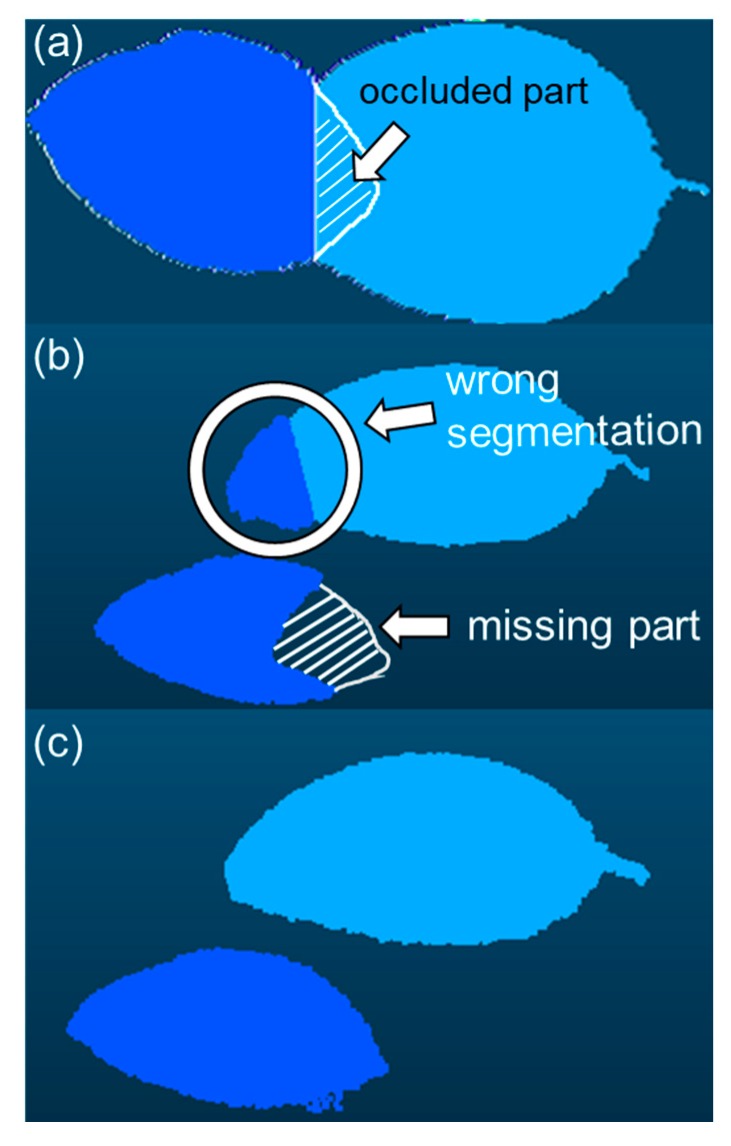

3.2. Leaf Area and Leaf Inclination Angle Estimation from Segmented Leaves

Figure 5 depicts an example of segmentation for overlapping leaves. Figure 5a represents a 2D image after initial segmentation (explained in Section 2.3.3). After obtaining the image, leaf segmentation was conducted via the simple-projection method (Figure 5b) and the attribute-expanding method (Figure 5c). In Figure 5b, an attribute value was not assigned to the part of the lower leaf occluded by the upper leaf in the top-view image (Figure 5a). Consequently, the occluded part was missed in the simple-projection method. Moreover, in Figure 5b, the tip of the upper leaf and the region around the leaf boundary were wrongly segmented. These segmentation errors did not occur with the attribute-expanding method, as shown in Figure 5c.

Figure 5.

Example of segmentation for overlapped leaves: image (a) is a 2D image after initial segmentation, and images (b,c) show the images after segmentation via the simple-projection and attribute-expanding methods, respectively.

During the segmentation of 10 sets of overlapped leaves, the leaf area estimation error (mean absolute percent error: MAPE) through the simple-projection method (Figure 5b) was 17.4% ± 7.1%, whereas the error with the attribute-expanding method (Figure 5c) was 1.4% ± 2.0%, significantly smaller (p < 0.01).

Figure 6 shows the relationship between estimated leaf area based on the number of voxels in the 3D models and the measured leaf area based on JPEG images. The coefficient of determination was 0.99, and the RMSE and the MAPE were 3.21 cm² and 4.14%, respectively.
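For reference, the two error measures reported here follow their standard definitions. The symbols below are introduced only for this illustration: $\hat{A}_i$ denotes the estimated value for leaf $i$, $A_i$ the corresponding reference value, and $n$ the number of leaves.

```latex
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(\hat{A}_i - A_i\right)^{2}},
\qquad
\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{\hat{A}_i - A_i}{A_i}\right|
```

RMSE carries the unit of the measured quantity (cm² for leaf area), whereas MAPE is relative and therefore comparable across leaves of different sizes.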

Figure 6.

Relationship between estimated leaf area based on number of voxels and actual leaf area.

In the leaf inclination angle estimation, the RMSE and absolute error over the segmented leaves were 2.68° and 1.92°, respectively. The estimation accuracy was independent of the inclination angle of the target leaf.

4. Discussion

4.1. Leaf Segmentation

In the top-view 2D images of plants, some regions of leaves were occluded because of leaf overlapping, and boundaries between leaves were not clear. Thus, in the simple-projection method, unsegmented missing parts and wrong segmentation at the leaf borders were unavoidable, as shown in Figure 5b. Similar problems could exist in other 2D image-based segmentations, such as graph-cut or active contour models [35,36]. On the other hand, the missing parts and the wrong segmentation were not observed in the leaves segmented with the present method, which resulted in more accurate leaf segmentation and leaf area estimation. Moreover, although methods for automatic mesh-based 3D reconstruction have recently started to be considered [45,46], it is still very difficult to conduct the reconstruction fully automatically. Unlike mesh-based 3D models, voxel-based 3D models can be constructed automatically; in this respect as well, our voxel-based segmentation method is advantageous.

Unlike the simple-projection method, the attribute-expanding method used distance transform and the watershed algorithm not for segmentation of all leaf regions but for making seed regions around each leaf centroid. The seed regions were then projected onto the 3D models, and each leaf region was three-dimensionally expanded from the projected seed regions, assigning the leaf number to the voxels of leaves. The region expansion stopped at the leaf edges and did not progress to other leaves because the edges of each leaf were separated from the edges of other leaves in the 3D coordinates. Hence, whereas the leaf edges could not be segmented correctly in the simple-projection method because of leaf overlapping in the top-view 2D image, leaf edges could be segmented accurately in the present segmentation method, as shown in Figure 5c. Moreover, such 3D region expansion also allowed assignment of the leaf number to the region occluded in the top-view 2D image, resulting in accurate leaf segmentation without missing parts (Figure 5c). When leaves and branches cannot be distinguished, the segmentation accuracy decreases. Branches were removed automatically using the normalized green value (G/(R + G + B)); however, a few branches remained, which resulted in segmentation errors. Optimizing the threshold of the normalized green value for each sample, depending on the color of the target leaves and branches and/or the light conditions, should lower this error and widen the applicability of the method.

4.2. Voxel-Based Leaf Area Calculation and Leaf Inclination Angle Estimation

The MAPE of the leaf area estimation with the mesh-based methods ranged from 2.41% to 10.3% [16,35,47,48] in previous studies, whereas MAPE in the present method was 4.14%, meaning the leaf area estimation accuracy in this method was high enough. In the mesh-based 3D model, a mesh is composed of a target point and the adjacent two points. The meshes had to be constructed with whole-leaf surfaces to retrieve leaf areas and inclination angles of all leaves. However, mesh formation through the whole-leaf surface was difficult in the complicated 3D point configuration often observed in plant 3D models, resulting in holes (i.e., regions where mesh construction failed) on the surfaces. To fill the holes, manual interpolation by additional mesh surfaces was needed. However, the present voxel-based calculation estimated leaf area by simply counting the number of voxels and converting them to a surface area without any manual processes needed by the mesh-based calculation. This allowed fully automatic leaf area calculation. Moreover, because points in the present 3D models were constructed from SfM and distributed thoroughly on each leaf with high density, no holes were on leaves of the voxel models. This led to accurate leaf area calculation.

The accuracy of leaf inclination angle estimation in this study was as high as that in the previous study (RMSE in the previous study: 4.3° [44]). Because the leaves were segmented automatically, plane fitting for the angle estimation could be conducted automatically on each leaf, so that the inclination angle of each leaf could be estimated automatically. The mesh-based method, by contrast, estimates the inclination angle from the normal vector of each mesh, so manual operation was necessary to fill the holes on leaf surfaces.

As described above, the present voxel-based leaf area and leaf inclination angle calculations were equivalent or superior to the mesh-based methods in terms of accuracy and full automation.

5. Conclusions

In this study, plant 3D point-cloud images were constructed using SfM, and leaves in the 3D models were segmented automatically; then, leaf area and leaf inclination angle were estimated automatically. First, initial segmentation was conducted based on the top-view images of plants using distance transform and the watershed algorithm. Next, the images of leaves after the initial segmentation were reduced by 90%, and the seed regions for each leaf were produced. Then, the seed regions were projected onto the voxelized 3D models. Afterwards, each region with a different leaf number was expanded on the 3D model. Finally, each leaf in the 3D models was segmented. From the segmented leaves, the area and inclination angle of each leaf were estimated using a voxel-based 3D image processing method. As a result, more accurate and automatic leaf segmentation was realized compared to previous studies. For all segmented leaves, the success rate was 86.9%. Leaf area estimates after automatic leaf segmentation were highly correlated with those after manual segmentation (R² = 0.99), with an RMSE of 3.23 cm². In the leaf inclination angle estimation, the absolute error of each segmented leaf was 1.92°. Thus, a series of processes from automatic leaf segmentation to structural parameter estimation was conducted automatically and accurately. The present method efficiently offers useful information for appropriate plant breeding and growth management. As a next step, this method should be applied to various kinds of plants to confirm its potential for wide use.

Author Contributions

K.I. conducted the experiment and analysis and wrote the paper. F.H. supervised this research.

Funding

This work was supported by ACT-I, Japan Science and Technology Agency.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1.Muller-Linow M., Pinto-Espinosa F., Scharr H., Rascher U. The leaf angle distribution of natural plant populations: Assessing the canopy with a novel software tool. Plant Methods. 2015;11:11. doi: 10.1186/s13007-015-0052-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Shono H. A new method of image measurement of leaf tip angle based on textural feature and a study of its availability. Environ. Control Biol. 1995;33:197–207. doi: 10.2525/ecb1963.33.197. [DOI] [Google Scholar]

- 3.Dornbusch T., Wernecke P., Diepenbrock W. A method to extract morphological traits of plant organs from 3D point clouds as a database for an architectural plant model. Ecol. Model. 2007;200:119–129. doi: 10.1016/j.ecolmodel.2006.07.028. [DOI] [Google Scholar]

- 4.Zhang Y., Teng P., Shimizu Y., Hosoi F., Omasa K. Estimating 3D Leaf and Stem Shape of Nursery Paprika Plants by a Novel Multi-Camera Photography System. Sensors. 2016;16:874. doi: 10.3390/s16060874. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Leemans V., Dumont B., Destain M.F. Assessment of plant leaf area measurement by using stereo-vision; Proceedings of the 2013 International Conference on 3D Imaging; Liège, Belgium. 3–5 December 2013; pp. 1–5. [Google Scholar]

- 6.Honjo T., Shono H. Measurement of leaf tip angle by using image analysis and 3-D digitizer. J. Agric. Meteol. 2001;57:101–106. doi: 10.2480/agrmet.57.101. [DOI] [Google Scholar]

- 7.Muraoka H., Takenaka A., Tang H., Koizumi H., Washitani I. Flexible leaf orientations of Arisaema heterophyllum maximize light capture in a forest understorey and avoid excess irradiance at a deforested site. Ann. Bot. 1998;82:297–307. doi: 10.1006/anbo.1998.0682. [DOI] [Google Scholar]

- 8.Bailey B.N., Mahaffee W.F. Rapid measurement of the three-dimensional distribution of leaf orientation and the leaf angle probability density function using terrestrial LiDAR scanning. Remote Sens. Environ. 2017;194:63–76. doi: 10.1016/j.rse.2017.03.011. [DOI] [Google Scholar]

- 9.Konishi A., Eguchi A., Hosoi F., Omasa K. 3D monitoring spatio–temporal effects of herbicide on a whole plant using combined range and chlorophyll a fluorescence imaging. Funct. Plant Biol. 2009;36:874–879. doi: 10.1071/FP09108. [DOI] [PubMed] [Google Scholar]

- 10.Paproki A., Sirault X., Berry S., Furbank R., Fripp J. A novel mesh processing based technique for 3D plant analysis. BMC Plant Boil. 2012;12:63. doi: 10.1186/1471-2229-12-63. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Paulus S., Behmann J., Mahlein A.K., Plümer L., Kuhlmann H. Low-cost 3D systems: Suitable tools for plant phenotyping. Sensors. 2014;14:3001–3018. doi: 10.3390/s140203001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Hosoi F., Omasa K. Voxel-based 3-D modeling of individual trees for estimating leaf area density using high-resolution portable scanning lidar. IEEE Trans. Geosci. Remote Sens. 2006;44:3610–3618. doi: 10.1109/TGRS.2006.881743. [DOI] [Google Scholar]

- 13.Hosoi F., Omasa K. Estimating vertical plant area density profile and growth parameters of a wheat canopy at different growth stages using three-dimensional portable lidar imaging. ISPRS J. Photogramm. Remote Sens. 2009;64:151–158. doi: 10.1016/j.isprsjprs.2008.09.003. [DOI] [Google Scholar]

- 14.Leeuwen M.V., Nieuwenhuis M. Retrieval of forest structural parameters using LiDAR remote sensing. Eur. J. For. Res. 2010;129:749–770. doi: 10.1007/s10342-010-0381-4. [DOI] [Google Scholar]

- 15.Hosoi F., Nakai Y., Omasa K. Estimation and error analysis of woody canopy leaf area density profiles using 3-D airborne and ground-based scanning lidar remote-sensing techniques. IEEE Trans. Geosci. Remote Sens. 2010;48:2215–2223. doi: 10.1109/TGRS.2009.2038372. [DOI] [Google Scholar]

- 16.Hosoi F., Nakabayashi K., Omasa K. 3-D modeling of tomato canopies using a high-resolution portable scanning lidar for extracting structural information. Sensors. 2011;11:2166–2174. doi: 10.3390/s110202166. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Dassot M., Constant T., Fournier M. The use of terrestrial LiDAR technology in forest science: Application fields, benefits and challenges. Ann. For. Sci. 2011;68:959–974. doi: 10.1007/s13595-011-0102-2. [DOI] [Google Scholar]

- 18.Hosoi F., Omasa K. Estimation of vertical plant area density profiles in a rice canopy at different growth stages by high-resolution portable scanning lidar with a lightweight mirror. ISPRS J. Photogramm. Remote Sens. 2012;74:11–19. doi: 10.1016/j.isprsjprs.2012.08.001. [DOI] [Google Scholar]

- 19. Hosoi F., Nakai Y., Omasa K. 3-D voxel-based solid modeling of a broad-leaved tree for accurate volume estimation using portable scanning lidar. ISPRS J. Photogramm. Remote Sens. 2013;82:41–48. doi: 10.1016/j.isprsjprs.2013.04.011.

- 20. Itakura K., Hosoi F. Automatic individual tree detection and canopy segmentation from three-dimensional point cloud images obtained from ground-based lidar. J. Agric. Meteol. 2018;74:109–113. doi: 10.2480/agrmet.D-18-00012.

- 21. Ivanov N., Boissard P., Chapron M., Andrieu B. Computer stereo plotting for 3-D reconstruction of a maize canopy. Agric. For. Meteorol. 1995;75:85–102. doi: 10.1016/0168-1923(94)02204-W.

- 22. Li L., Zhang Q., Huang D.F. A Review of Imaging Techniques for Plant Phenotyping. Sensors. 2014;14:20078–20111. doi: 10.3390/s141120078.

- 23. Takizawa H., Ezaki N., Mizuno S., Yamamoto S. Plant recognition by integrating color and range data obtained through stereo vision. JACIII. 2005;9:630–636. doi: 10.20965/jaciii.2005.p0630.

- 24. Mizuno S., Noda K., Ezaki N., Takizawa H., Yamamoto S. Detection of wilt by analyzing color and stereo vision data of plant; Proceedings of the Computer Vision/Computer Graphics Collaboration Techniques; Rocquencourt, France. 4–6 May 2007; pp. 400–411.

- 25. Dandois J.P., Ellis E.C. High spatial resolution three-dimensional mapping of vegetation spectral dynamics using computer vision. Remote Sens. Environ. 2013;136:259–276. doi: 10.1016/j.rse.2013.04.005.

- 26. Morgenroth J., Gomez C. Assessment of tree structure using a 3D image analysis technique—A proof of concept. Urban For. Urban Green. 2014;13:198–203. doi: 10.1016/j.ufug.2013.10.005.

- 27. Obanawa H., Hayakawa Y., Saito H., Gomez C. Comparison of DSMs derived from UAV-SfM method and terrestrial laser scanning. Jpn. Soc. Photogram. Remote Sens. 2014;53:67–74. doi: 10.4287/jsprs.53.67.

- 28. Rose J.C., Paulus S., Kuhlmann H. Accuracy analysis of a multi-view stereo approach for phenotyping of tomato plants at the organ level. Sensors. 2015;15:9651–9665. doi: 10.3390/s150509651.

- 29. Itakura K., Hosoi F. Estimation of tree structural parameters from video frames with removal of blurred images using machine learning. J. Agric. Meteol. 2018;74:154–161. doi: 10.2480/agrmet.D-18-00003.

- 30. James M.R., Robson S. Straightforward reconstruction of 3D surfaces and topography with a camera: Accuracy and geoscience application. J. Geophys. Res. Earth Surf. 2012;117 doi: 10.1029/2011JF002289.

- 31. Xia C., Wang L., Chung B.K., Lee J.M. In situ 3D segmentation of individual plant leaves using a RGB-D camera for agricultural automation. Sensors. 2015;15:20463–20479. doi: 10.3390/s150820463.

- 32. Minervini M., Abdelsamea M., Tsaftaris S.A. Image-based plant phenotyping with incremental learning and active contours. Ecol. Inf. 2014;23:35–48. doi: 10.1016/j.ecoinf.2013.07.004.

- 33. Alenya G., Dellen B., Foix S., Torras C. Robotized plant probing: Leaf segmentation utilizing time-of-flight data. IEEE Robot. Autom. Mag. 2013;20:50–59. doi: 10.1109/MRA.2012.2230118.

- 34. Paulus S., Dupuis J., Mahlein A.K., Kuhlmann H. Surface feature based classification of plant organs from 3D laserscanned point clouds for plant phenotyping. BMC Bioinform. 2013;14:238. doi: 10.1186/1471-2105-14-238.

- 35. Kaminuma E., Heida N., Tsumoto Y., Yamamoto N., Goto N., Okamoto N., Konagaya A., Matsui M., Toyoda T. Automatic quantification of morphological traits via three-dimensional measurement of Arabidopsis. Plant J. 2004;38:358–365. doi: 10.1111/j.1365-313X.2004.02042.x.

- 36. Teng C.H., Kuo Y.T., Chen Y.S. Leaf segmentation, classification, and three-dimensional recovery from a few images with close viewpoints. Opt. Eng. 2011;50:037003.

- 37. Dandois J.P., Olano M., Ellis E.C. Optimal altitude, overlap, and weather conditions for computer vision UAV estimates of forest structure. Remote Sens. 2015;7:13895–13920. doi: 10.3390/rs71013895.

- 38. Miller J., Morgenroth J., Gomez C. 3D modelling of individual trees using a handheld camera: Accuracy of height, diameter and volume estimates. Urban For. Urban Green. 2015;14:932–940. doi: 10.1016/j.ufug.2015.09.001.

- 39. Hosoi F., Omasa K. Factors contributing to accuracy in the estimation of the woody canopy leaf area density profile using 3D portable lidar imaging. J. Exp. Bot. 2007;58:3463–3473. doi: 10.1093/jxb/erm203.

- 40. Soille P. Morphological Image Analysis: Principles and Applications. Springer Science & Business Media; Berlin, Germany: 2013.

- 41. Maurer C.R., Qi R., Raghavan V. A linear time algorithm for computing exact Euclidean distance transforms of binary images in arbitrary dimensions. IEEE Trans. Pattern Anal. Mach. Intell. 2003;25:265–270. doi: 10.1109/TPAMI.2003.1177156.

- 42. Gonzalez R.C., Woods R.E. Digital Image Processing. Pearson; England, UK: 2013. Chapter 10.

- 43. Meyer F. Topographic distance and watershed lines. Signal Proc. 1994;38:113–125. doi: 10.1016/0165-1684(94)90060-4.

- 44. Hosoi F., Nakai Y., Omasa K. Estimating the leaf inclination angle distribution of the wheat canopy using a portable scanning lidar. J. Agric. Meteol. 2009;65:297–302. doi: 10.2480/agrmet.65.3.6.

- 45. Pound M.P., French A.P., Murchie E.H., Pridmore T.P. Surface reconstruction of plant shoots from multiple views; Proceedings of the ECCV 2014: Computer Vision—ECCV 2014 Workshops; Zurich, Switzerland. 6–12 September 2014; pp. 158–173.

- 46. Frolov K., Fripp J., Nguyen C.V., Furbank R., Bull G., Kuffner P., Daily H., Sirault X. Automated plant and leaf separation: Application in 3D meshes of wheat plants; Proceedings of the International Conference on Digital Image Computing: Techniques and Applications (DICTA); Gold Coast, Australia. 30 November–2 December 2016; pp. 1–7.

- 47. Kanuma T., Ganno K., Hayashi S., Sakaue O. Leaf area measurement using stereo vision. IFAC Proc. 1998;31:157–162. doi: 10.1016/S1474-6670(17)42115-X.

- 48. Paulus S., Schumann H., Kuhlmann H., Léon J. High-precision laser scanning system for capturing 3D plant architecture and analysing growth of cereal plants. Biosyst. Eng. 2014;121:1–11. doi: 10.1016/j.biosystemseng.2014.01.010.