Abstract

Movement analysis of infants’ body parts is important for the early detection of movement disorders such as cerebral palsy. Most existing techniques are either marker-based or rely on wearable sensors. Such techniques work well for adults; however, they are not effective for infants, because wearing sensors or markers may cause discomfort and affect their natural movements. This paper presents a method to help clinicians with the early detection of movement disorders in infants. The proposed method is marker-less and does not use any wearable sensors, which makes it well suited to the analysis of body part movements in infants. The algorithm is based on the deformable part-based model: it detects the body parts and tracks them in the subsequent frames of the video to encode the motion information. It learns a model using a set of part-filters and the spatial relations between the body parts. In particular, it forms a mixture of part-filters for each body part to determine its orientation, which is used to detect the parts and to analyze their movements by tracking them in the temporal direction. The model is represented using a tree-structured graph, and the learning process is carried out using the structured support vector machine. The proposed framework will assist clinicians and general practitioners in the early detection of infantile movement disorders. The performance of the proposed method is evaluated on a large dataset, and a comparison with existing techniques demonstrates its effectiveness.

Keywords: movement analysis, infantile movement disorders, part-based model, k-means clustering

1. Introduction

Normal human movements, such as moving an arm, look simple but require complex coordination between the brain and the musculoskeletal system. Any disruption in this coordination may result in unwanted movements, trouble in making the intended movements, or both [1]. Such disorders may arise from abnormal development of the brain, an injury to the child’s brain during pregnancy or at birth, or genetic disorders. Studies, e.g., [2,3,4,5,6], have shown that the early detection of movement disorders plays an important role in early intervention and in establishing a therapy program for recovery. To diagnose movement disorders, doctors or physiotherapists observe the spontaneous movements of an infant and take the family medical history into account. This examination is known as the general movement assessment (GMA) [2,7]. However, it is a subjective procedure that depends on the observer’s expertise and has no standardized criteria to measure the outcomes. Moreover, manually analyzing every infant is time consuming; therefore, an automatic system is needed to accurately analyze the movements of the various body parts of an infant.

In recent years, numerous computer-based techniques have been proposed to capture infants’ motion information and perform quantitative analysis using approaches such as movement tracking [8,9,10,11,12,13,14]. Some of them use wearable motion sensors [15,16,17], while others place markers [4,18] on the infant’s body parts and track them using visual sensors to encode the motion information. However, wearing a large number of sensors or markers may cause discomfort to young patients [19], which may affect their natural body part movements. Several other techniques, e.g., [20,21,22], have exploited the Microsoft Kinect to analyze the movement patterns of the human body. They use either the Kinect’s integrated body tracking information [22] or a body part model fitting technique [23] on depth images to encode the motion information of human body parts by detecting and tracking them in the subsequent frames of a video. Although the Kinect sensor has triggered a lot of research on human motion analysis, rehabilitation, and clinical assessment, its body tracking requires the subject to be in a standing position and taller than one meter, which makes it unsuitable for infants [21]. A review of methods that analyze movement disorders in infants using their spontaneous movements is presented in [24], and literature related to postural control and efficiency of movement can be found in [25,26,27,28,29].

This paper presents a novel computer vision-based method to detect and track an infant’s body parts in video. The proposed method is marker-less and does not use any wearable sensors, which makes it well suited for use with infants. It is based on the pictorial structure framework [30,31,32], in which an object’s appearance is represented using a collection of part-templates (also called part-filters) together with the spatial relations between the parts. In particular, the proposed method begins by detecting the different body parts, which are located at the joints, and computes the angles at the predicted joints such as the elbow and shoulder. The movement at the different joints is then encoded by tracking the angle orientations in the temporal direction. To automatically detect the body parts, the proposed technique learns a model from a set of training images by preparing a set of part-filters and the spatial relations between the parts. Moreover, to handle the appearance changes caused by the large articulation of human body parts, these changes are encoded using a mixture of part-filters for the respective part and the spatial relations between the involved parts. The model is represented using a tree-structured graph, and the learning process is carried out using the structured support vector machine (structSVM). We recorded a dataset of 10 patients suffering from movement disorders in a local children’s hospital. The patients were being treated by therapists at the time of recording. The performance of the proposed algorithm is evaluated in two ways: joint estimation accuracy and motion encoding accuracy. The results are compared with existing state-of-the-art techniques and show that the proposed algorithm is effective in analyzing infantile movement disorders. The proposed approach is influenced by [31,32] and presents the following novel contributions:

The proposed algorithm does not require wearing markers and other wearable sensors which makes it ideal for movement analysis of infants;

The proposed technique performs movement analysis in videos by computing the angle orientations at different predicted joints’ locations and tracking them in the temporal direction;

The proposal of a simple yet novel modeling of part-templates to deal with the self-occlusion of body parts and the rotation problems;

A novel scoring scheme is introduced to eliminate the false positives in the detection of body parts;

To deal with the vast variability in the different body parts, an optimal mixture size is chosen for each part to improve the detection process.

A detailed review of the state-of-the-art techniques to encode the human body parts movement. The techniques are also classified into various categories based on their underlying body parts detection and motion encoding methods.

2. Related Work

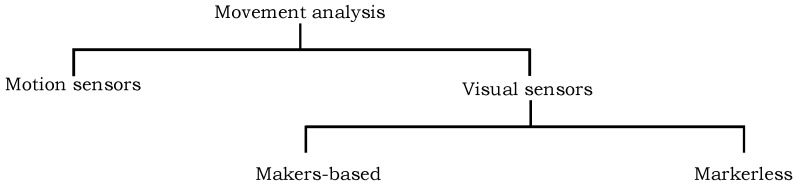

Over the last few years, numerous computer-based techniques have been proposed to analyze the movement of human body parts for clinical and behavioral assessment and other applications [33,34,35,36]. These techniques can be categorized into two groups: visual sensor-based approaches and motion sensor-based approaches. The first group either places markers on the human body or uses markerless solutions that exploit image features such as color and edges to detect and track the different body parts in video data. The second group uses motion sensors, e.g., an inertial measurement unit (IMU), to encode the motion information. Figure 1 presents the categorization of the existing techniques into groups, which are reviewed in the following subsections.

Figure 1.

The distribution of existing techniques for movement analysis of human body parts into various categories.

2.1. Visual Sensor-Based Approaches

These techniques use color images, depth information, or both for movement analysis. Some methods, e.g., [37,38,39], attach markers to the human body to represent the joints’ locations and use them to detect and track the human skeleton in a video to compute the motion information, whereas others exploit image features such as color, shape, and edges to estimate the joints’ locations for movement analysis.

2.1.1. Marker-Based Techniques

The marker-based approaches place a set of markers, e.g., infrared (IR) markers, reflective spheres, or light-emitting diodes, on the human body, particularly at the joints’ locations, to track the motion information. Tao et al. [40] proposed a color marker-based tracking algorithm to estimate the motion information at different joints. They attached markers of different colors at the joints’ locations and tracked them in the video. The algorithm proposed in [4] encodes the motion of markers in the three-dimensional (3D) domain using seven cameras. The markers are attached to the human body, and their motion information is used to predict the risk of developing movement disorders. Burke et al. [37] proposed two games for upper limb stroke rehabilitation that are controlled by color object segmentation and tracking for motion detection. They attach color markers to the upper limbs, and their method detects and tracks them using a calibration process. The system proposed in [38] uses color markers on the foot of adult subjects to analyze foot positioning and orientation for gait training. The authors in [41] encode the human motion information using a tiny high-resolution video camera and IR-based markers.

Rado et al. [42] proposed IR optical tracker-based motion tracking of the knees for patient rehabilitation. Based on the computed motion information, they detect errors in the movement, and the system demonstrates to the user how to perform the movement correctly. Chen et al. [39] developed a therapy system using an IR camera with a hand skateboard training device for upper limb stroke rehabilitation. Patients participating in the therapy use a binding band attached to a hand skateboard on the table, which guides them in moving the skateboard along the designated path. A recent survey on the evaluation of marker-based systems is presented in [43]. Since these markers have to be attached to the human body parts, they require a cumbersome installation and calibration process [44]. Moreover, increasing the number of markers (i.e., attaching one to each body part of the infant) raises the complexity of tracking when markers are close to each other or become occluded due to the size of the infant’s body. Additionally, increasing the number of IR-based markers makes the system more expensive.

2.1.2. Markerless Techniques

Lately, markerless techniques have gained attention in the research community due to their many computer vision-based applications [45,46,47,48]. Instead of placing markers on the human body parts, they use image features such as shape, edges, and pixel locations to detect and track the different body parts. The method proposed in [49] predicts the 3D positions of human joints in a depth image. It employs per-pixel classification of human body parts using a random forest classifier. Hesse et al. [20] proposed an improved version of [49] that exploits random ferns to estimate the infant’s body parts using pixel-wise body part classification. They tracked the angle orientation at the predicted joints in successive frames to encode the motion information. However, it is very difficult to classify the different body parts in depth images, particularly when they overlap with other parts [20]. In [5,50], the authors proposed computing the optical flow to estimate the movement patterns of the infant’s limbs. However, such techniques are unable to localize the movement at a particular joint. Evet et al. [51] developed a game for stroke rehabilitation. They captured the movement and gestures of the hand using an optical camera and a thermal camera. The technique, however, can recognize only two gestures: hand open and hand closed. A few techniques, e.g., [21,52,53,54], fit a body part model composed of basic shapes to depth images to detect the infant’s body parts and encode their movements. The accuracy of such techniques degrades significantly when the desired body parts are occluded [23].

In recent years, the Microsoft Kinect sensor has been considered an effective and low-cost device for providing markerless motion capture in clinical assessment and rehabilitation settings [21]. It consists of a visual sensor and a depth sensor, which together make it possible to create a 3D view of the environment. Additionally, the depth sensor of the Kinect provides human skeleton tracking, which several researchers have exploited in ambient assisted living and therapeutic settings to analyze human movements. For example, the authors in [22] proposed a system to encode the motion information of a patient using the integrated skeleton information from the Kinect. They compare the patient’s movements with the desired exercise and display feedback on the screen. Guerrero et al. [55] used the Kinect skeleton information to estimate the patient’s posture and compared it with a model posture. These postures are required in some physical exercises to strengthen the body muscles. The technique proposed in [56] calculates the 3D coordinate distances between 15 joints using the skeleton information obtained from the Kinect and uses them to monitor the rehabilitation progress. Chang et al. [57] used the human skeleton tracking information from the Kinect to build a rehabilitation system that assists therapists in their work. The system is designed for children suffering from motor disabilities and presents the rehabilitation progress to the therapists according to defined standards. The studies in [58,59] exploit the Kinect’s skeleton tracking to analyze the rehabilitation of the upper limbs. Chang et al. [59] also validate the tracking results of the Kinect sensor against the output of a motion capture system known as OptiTrack. A recent survey of applications that encode the movement information of human body parts using the Kinect can be found in [60]. Although the Kinect sensor provides real-time human skeleton tracking with quite good accuracy, its body tracking requires the subject to be in a standing position and taller than one meter, which prevents the automatic detection and movement analysis of infants.

2.2. Motion Sensor-Based Algorithms

Motion sensors, e.g., accelerometers, gyroscopes, and magnetometers, are another means of encoding human motion information. Heinze et al. [16] proposed a system to capture the movements of infants’ limbs using four accelerometers attached to the limbs. They use a decision tree algorithm to classify these movements as healthy or abnormal. The technique proposed in [61] uses tri-axial accelerometers on the chest, thigh, and shank of the working leg to assess the rehabilitation progress of a patient suffering from knee osteoarthritis by encoding the motion information at the respective body parts. The authors in [62] proposed a system that uses a set of accelerometers and a compass to capture human motion for home rehabilitation. The sensors are attached to specified movable body parts, and the system assigns a score based on the quality of movement defined by the therapists. Chen et al. [63] developed a motion monitoring system that uses a set of wireless accelerometers attached to the patient’s body parts to remotely monitor his/her movements. The movement information is also shared with the clinic through a web-based system. The system proposed in [64] assesses the motor ability of stroke patients using four accelerometers attached to the upper limbs and the chest. The motion information at these parts is extracted within each time segment and used in a linear regression to predict the clinical scores of motor abilities.

Zhang et al. [65] proposed a wireless human motion monitoring system for gait analysis in the rehabilitation process, using a set of inertial measurement units (IMUs) and a pair of smart shoes with pressure sensors to measure the force distribution between the two feet during walking. An IMU combines gyroscopes, accelerometers, and magnetometers, which provide the angular velocity and acceleration of the sensor/body and the magnetic field around it, respectively. The authors in [66,67] compute the motion information from a set of IMUs to monitor gait. Instead of attaching a set of individual sensors, the authors in [68] proposed a sensing jacket consisting of 10 IMUs for a home-based exercise trainer system. The encoded information is then compared with the desired exercise. Similarly, a smart garment that uses a set of IMUs to support posture correction is proposed in [69]. The system alerts the user through vibration of the garment and through visual instructions on a smartphone over a Bluetooth connection. The authors in [70] proposed integrating Kalman filtering with inertial sensors to improve the overall estimation of human motion.

In [71], an electrogoniometer (an electric device that measures the angles at joints) is used to capture the motion information at different joints of children suffering from cerebral palsy (CP) during exercise. The authors employed this motion information in a virtual reality (VR)-based game and claim that the patients showed great interest, performed more repetitions of the exercise, and generated more ankle dorsiflexion than with the standalone exercise. Although VR-based gaming applications offer an interactive, engaging, and effective environment for physical therapy, they require an expensive hardware and software setup. Moreover, they are designed to suit a specific class of patients and are not useful for very young patients, who cannot interact with such systems. In short, the limitation of wearable sensor-based motion detection techniques is that they require several sensors to be worn on the body, which may cause discomfort (particularly for young patients) and may affect natural movements [21].

3. Proposed Infant’s Movement Analysis Algorithm

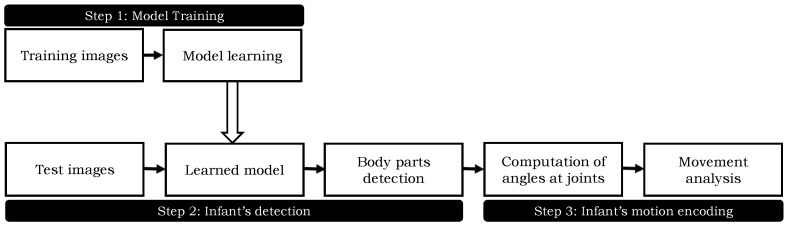

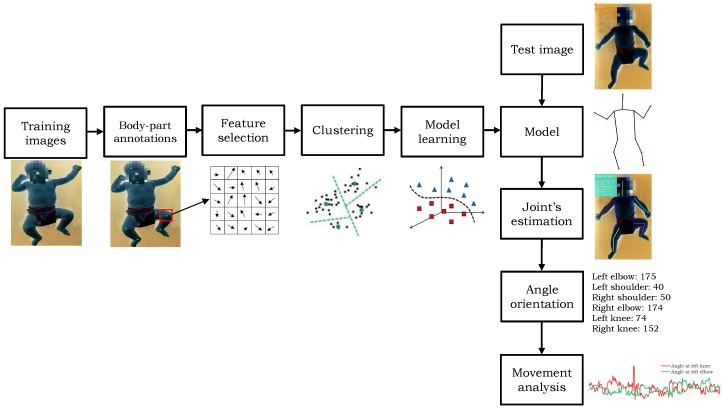

The proposed algorithm works in three steps. First, a human body model is prepared to detect the skeleton of the infant. It generates a mixture of part-filters for each body part and encodes the spatial relations between them. Second, the body parts are detected in a given image using the trained model. Third, the angles are computed at the predicted joints’ locations and the motion information is recorded over time. A block diagram of the proposed method is shown in Figure 2. The details of each step are described in the following sections. To enhance readability, the detection step is explained before the training of the model.

Figure 2.

An overview of the proposed method.

3.1. Proposed Template-Based Model for Infant’s Detection

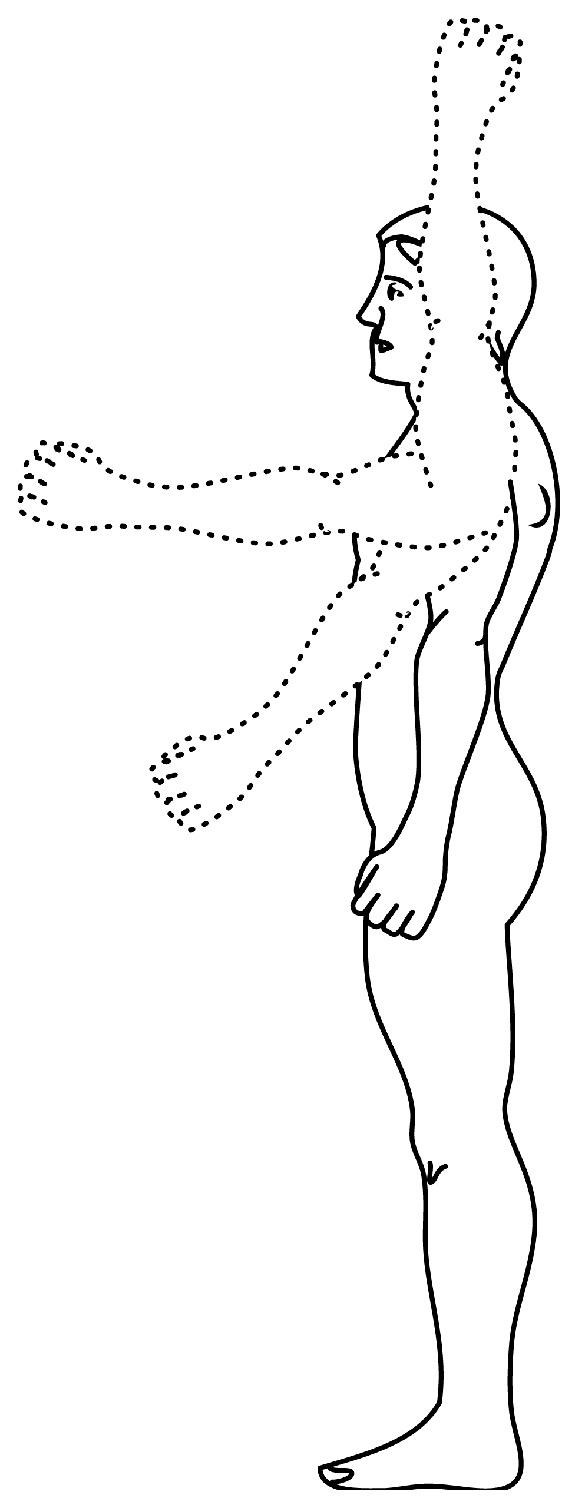

Automatic detection of human body parts in an image is a challenging task due to variations in their appearance caused by color, shape, size, occlusion, etc. Moreover, the human body has many degrees of freedom in the articulation of its parts, which may result in extensive variations in their appearance. For example, Figure 3 presents such appearance changes in the human arm. To cope with these problems, the proposed model prepares a mixture of part-filters for each body part and defines the spatial relations between the body parts. The part-filters in the same mixture may correspond to different orientations, called the ‘states’ of the body part, for example, horizontal versus vertical alignment of the hand. For a given image, the detection of body parts is performed at all locations and scales using the part-filters, and a score is computed for each part-filter. This score represents the likelihood of occurrence of a particular state. The score is computed by convolving the part-filters over the histogram of oriented gradients (HOG) representation of the test image. This exhaustive search is carried out only once, for the first frame of the video. Since the camera is fixed during the recording and the infants do not make rapid movements, the predicted location of the infant in the previous frame, relaxed by a threshold number of pixels in all directions, is used to set the search space in the succeeding frame. This search-space optimization not only helps to improve the detection accuracy but also decreases the computational cost.
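The restriction of the search space to a neighborhood of the previous detection can be illustrated with a small sketch. The function name `search_window`, the box layout `(x_min, y_min, x_max, y_max)`, and the margin value used below are assumptions made for illustration; the paper does not specify them.

```python
def search_window(prev_box, margin, frame_size):
    """Expand the previous detection box by `margin` pixels, clipped to the frame.

    prev_box and the result are (x_min, y_min, x_max, y_max) in pixels;
    frame_size is (width, height). All names here are illustrative.
    """
    x_min, y_min, x_max, y_max = prev_box
    width, height = frame_size
    return (
        max(0, x_min - margin),
        max(0, y_min - margin),
        min(width - 1, x_max + margin),
        min(height - 1, y_max + margin),
    )


# Hypothetical values: a 640x480 frame and a 40-pixel relaxation.
window = search_window((100, 80, 300, 400), margin=40, frame_size=(640, 480))
```

Only the part-filter responses inside `window` would then need to be evaluated for the next frame, which is where the reduction in computation comes from.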

Figure 3.

An example image illustrating the variations in the appearance of human arm due to large articulation [72].

Let $T_p^s$ be a template of size $w \times h$ defined for part $p$ in state $s$, where $s \in \{1, \ldots, S\}$ ranges over the set of states and $p$ denotes the part. Let $R_p^s(l)$ be the part-filter response, or score, in the HOG image $H$ at location $l = (x, y)$. The response is computed by matching $T_p^s$ with $H$:

$$R_p^s(l) = \sum_{x'=0}^{w-1} \sum_{y'=0}^{h-1} T_p^s(x', y') \cdot H(x + x', y + y') \qquad (1)$$

The part-filter scores are computed in a multi-scale fashion; however, to keep the discussion simple, we describe the algorithm here using $H$ at full scale. Equation (1) is a cross-correlation measure; the highest positive value represents the best matching location of the respective part-filter.
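The cross-correlation of Equation (1) can be sketched in a few lines. This is a minimal, single-scale illustration in plain Python, assuming that the HOG map and the template are stored as nested lists of per-cell feature vectors; the function names `filter_response` and `best_location` are hypothetical, and a practical implementation would use an optimized array library.

```python
def filter_response(hog, template):
    """Cross-correlate a part template with a HOG feature map.

    hog[y][x] and template[y][x] are feature vectors (lists of numbers) of
    equal length. Returns response[y][x] for every placement where the
    template fits entirely inside the map.
    """
    rows, cols = len(hog), len(hog[0])
    t_rows, t_cols = len(template), len(template[0])
    response = []
    for y in range(rows - t_rows + 1):
        row = []
        for x in range(cols - t_cols + 1):
            score = 0.0
            for dy in range(t_rows):
                for dx in range(t_cols):
                    cell = hog[y + dy][x + dx]
                    weights = template[dy][dx]
                    # Dot product between template weights and HOG cell.
                    score += sum(w * f for w, f in zip(weights, cell))
            row.append(score)
        response.append(row)
    return response


def best_location(response):
    """Return ((x, y), score) of the highest response."""
    candidates = ((x, y) for y in range(len(response)) for x in range(len(response[0])))
    x, y = max(candidates, key=lambda xy: response[xy[1]][xy[0]])
    return (x, y), response[y][x]


# Toy 3x3 map with 2-bin cells and a 1x2 template (illustrative values).
hog = [
    [[0, 0], [0, 0], [0, 0]],
    [[0, 0], [1, 0], [0, 1]],
    [[0, 0], [0, 0], [0, 0]],
]
template = [[[1, 0], [0, 1]]]
location, score = best_location(filter_response(hog, template))
```

In this toy example the template matches the pattern stored in the middle row, so the highest response is found at the placement whose top-left cell is `(x, y) = (1, 1)`.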

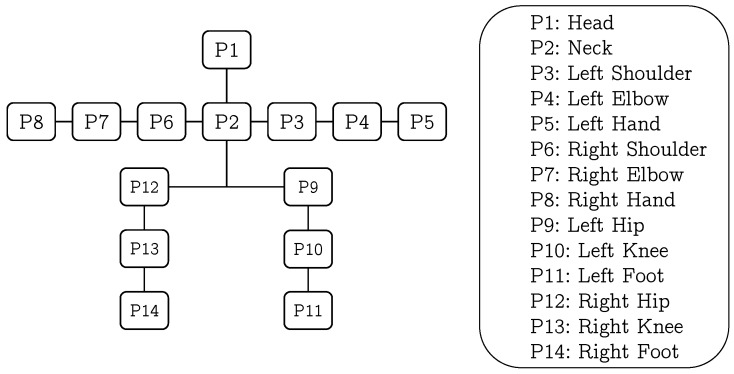

The proposed model is represented using a tree-structured graph $G = (V, E)$, where the set of vertices $V$ represents the body parts (located at joints) and the set of edges $E$ models the relations between them. A kinematic tree of the human body representing the relations between the body parts is shown in Figure 4. To achieve the articulation between different parts, we used a set of ‘springs’ [31] to define a spatial relation between a part and its parent, e.g., hand and elbow. If a part has 5 different states and its parent has 5 different states, then there are 25 springs, which define the relative placement of the child and its parent part and give 25 different orientations. Let $p_i$ and $p_j$ be a body part and its parent part, with states $s_i$, $s_j$ and locations $l_i$, $l_j$, respectively. The score for the detection of the part $p_i$ and its state $s_i$ is defined as:

$$\mathrm{score}(p_i, s_i) = R_{p_i}^{s_i}(l_i) + D(p_i, p_j) + B(s_i, s_j) \qquad (2)$$

Figure 4.

A kinematic tree of human body representing the relation between each body part.

Equation (2) consists of three terms. The first term is the part-filter response in $H$ at location $l_i$. The second term $D$ defines a spring model between a part $p_i$ and its parent $p_j$ using the distance information between them and can be described as:

$$D(p_i, p_j) = d_{ij}^{s_i s_j} \cdot \psi(l_i - l_j) \qquad (3)$$

where $d_{ij}^{s_i s_j}$ is a deformation parameter that encodes the placement of a part relative to its rest location, i.e., the relative location of $p_i$ to its parent $p_j$ based on their states $s_i$ and $s_j$, respectively. The term $\psi(l_i - l_j)$,

$$\psi(l_i - l_j) = [\,dx \;\; dx^2 \;\; dy \;\; dy^2\,]^T \qquad (4)$$

is the relative predictive displacement of $p_i$ with respect to $p_j$, where $dx = x_i - x_j$ and $dy = y_i - y_j$. Equation (3) computes the deformation cost, which describes the difference between the detected and the presumed relative position of a part to its parent in $(x, y)$-coordinates. In particular, it penalizes the score in Equation (2) based on the deviation of the predicted location from the rest location. The third term in Equation (2) describes the co-occurrences of parts’ states as in [32]:

$$B(s_i, s_j) = b_i^{s_i} + b_{ij}^{s_i, s_j} \qquad (5)$$

The first term in Equation (5) defines the assignment of one particular state for part $p_i$, while the pairwise feature $b_{ij}^{s_i, s_j}$ represents a trained co-occurrence between the parts $p_i$ and $p_j$ using their states $s_i$ and $s_j$. As described earlier, the proposed algorithm uses the tree-structured graph $G$ to define which parts of the model have logical relations. It assigns a positive score to the parts having a logical relation and a negative score to the illogical relations.

The final score of a part is computed as the sum of local scores for all possible states $s$, achieved by maximizing Equation (2) over locations $l$ and states $s$. Let $z_i$ represent the pixel location and state of part $p_i$, that is, $z_i = (l_i, s_i)$. Let $\mathrm{kids}(i)$ be the children of part $p_i$. The score of part $p_i$ is computed as:

$$\mathrm{score}_i(z_i) = b_i^{s_i} + R_{p_i}^{s_i}(l_i) + \sum_{k \in \mathrm{kids}(i)} m_k(z_i) \qquad (6)$$

It can be noted from Equation (6) that the proposed model computes the local score of part $p_i$ at all pixel locations $l_i$ for the state $s_i$ by gathering the scores from its children using Equation (2). The overall score is computed as,

$$m_k(z_i) = \max_{z_k} \left[ \mathrm{score}_k(z_k) + d_{ki}^{s_k s_i} \cdot \psi(l_k - l_i) + b_{ki}^{s_k, s_i} \right] \qquad (7)$$

where the second and third terms inside the maximum are the deformation and co-occurrence terms of Equation (2) for the child $p_k$ and its parent $p_i$. The score in Equation (7) is computed for part $p_i$ using the best scoring location and state of its child $p_k$. To search the entire human body structure efficiently, we exploited an “independence” assumption. For example, for a given torso, instead of using many nested loops for the detection of all other parts, the proposed method searches independently for the best candidates of the arm, the leg, and so forth. Since we use a tree-structured graph to encode the spatial relations between the parts, this can be achieved efficiently using dynamic programming [31]. In particular, the proposed method iterates over all the parts, computes the score starting from a leaf node (i.e., a foot), and passes this score to its parent part, and so forth. Eventually, this computation extends to the root part (i.e., the head) by following Equations (6) and (7), and the highest scoring root location determines the body model. The proposed algorithm may produce many overlapping detections in one image. Since the recorded data contain a single infant in each frame, we apply non-maximum suppression, and the highest scoring root location is greedily picked as the estimate of the infant’s body. Moreover, the proposed model also maintains the arg-max indices in Equation (7) (i.e., the location of a selected part); therefore, we can easily recreate the highest scoring model based solely on the root location. To deal with the self-occlusion of body parts, which is common in infants’ movements, the proposed method saves the respective detection scores along with the predicted part locations in Equation (7). At retrieval, instead of just picking the highest scoring root configuration of parts, we iterate over each individual part and compare its score with a pre-defined threshold. The maximum scoring location that satisfies this threshold is chosen as the correct part.
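The leaf-to-root score passing and the recovery of the best configuration from the root can be sketched as a max-sum dynamic program on a tree. The following is a simplified illustration, not the authors’ implementation: candidate placements are given as dictionaries keyed by `(state, location)`, locations are one-dimensional, and the pairwise function `spring` is a toy stand-in for the deformation and co-occurrence terms. All names (`best_configuration`, `spring`, `unary`) are assumptions.

```python
def best_configuration(parent, unary, pair_score):
    """Max-sum dynamic programming over a tree of parts.

    parent[i]  : index of the parent of part i (-1 for the root, part 0);
                 every parent index must be smaller than its child's index.
    unary[i]   : dict mapping (state, location) -> local score of part i.
    pair_score : function (child, child_key, parent_key) -> pairwise score.
    Returns (total_score, assignment), assignment[i] = (state, location).
    """
    n = len(parent)
    score = [dict(u) for u in unary]            # accumulated scores per part
    backpointer = [dict() for _ in range(n)]    # best child key per parent key

    # Children have larger indices than parents, so go from leaves to root.
    for child in range(n - 1, 0, -1):
        par = parent[child]
        for par_key in score[par]:
            def total(child_key):
                return score[child][child_key] + pair_score(child, child_key, par_key)
            best_key = max(score[child], key=total)
            score[par][par_key] += total(best_key)   # message to the parent
            backpointer[child][par_key] = best_key

    root_key = max(score[0], key=score[0].get)
    assignment = [root_key] + [None] * (n - 1)
    for child in range(1, n):                   # parents are resolved first
        assignment[child] = backpointer[child][assignment[parent[child]]]
    return score[0][root_key], assignment


# Toy chain root(0) -> part 1 -> part 2 with 1-D locations (illustrative).
parent = [-1, 0, 1]
unary = [
    {(0, 0): 1.0, (0, 5): 2.0},
    {(0, 1): 1.0, (0, 6): 0.5},
    {(0, 2): 0.0, (1, 7): 3.0},
]


def spring(child, child_key, parent_key):
    # Toy spring: the rest offset of a child from its parent is +1.
    return -abs((child_key[1] - parent_key[1]) - 1)


total_score, parts = best_configuration(parent, unary, spring)
```

Because every part stores a backpointer for each candidate placement of its parent, the full configuration is rebuilt from the root choice alone, which mirrors the index bookkeeping described above. Per-part scores could be stored in the same pass to support the threshold test used for self-occluded parts.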

3.2. Movement Analysis

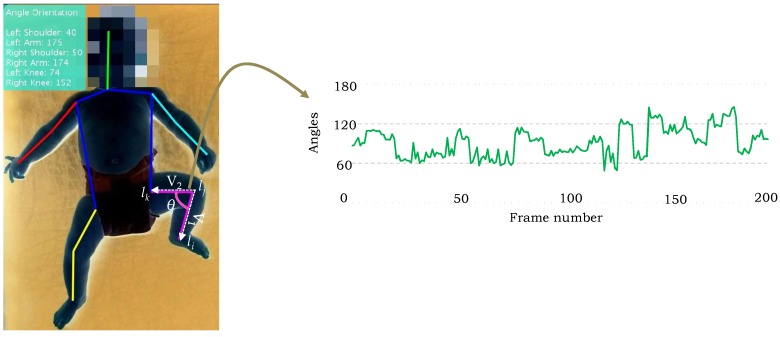

The proposed method computes the angle orientation at the detected locations of the body parts and tracks it in the temporal direction to describe the motion information. Since the parts are located at joints, the skeleton information is extracted from the predicted joints’ locations. The angles are computed at the joints, and tracking them in the subsequent frames of the video reveals the movement of the various parts, such as the elbow, shoulder, and knee. For example, consider the case of the knee, which is connected to the ankle and the hip. Let $J_a$, $J_k$, and $J_h$ denote the ankle, knee, and hip joints, respectively (Figure 5). The following two vectors are computed:

$$\vec{v}_1 = J_a - J_k, \qquad \vec{v}_2 = J_h - J_k \qquad (8)$$

Figure 5.

An example of angle computation at the predicted left knee joint and its tracking in the subsequent 200 frames. The angle orientations at other joints are annotated at the upper-left corner of the test image. The patient body is shown in negative to preserve the privacy of the subject.

The angle $\theta$ between the vectors $\vec{v}_1$ and $\vec{v}_2$, representing the angle orientation at the knee joint, is computed as,

$$\theta = \arccos\left( \frac{\vec{v}_1 \cdot \vec{v}_2}{\lVert \vec{v}_1 \rVert \, \lVert \vec{v}_2 \rVert} \right) \qquad (9)$$

Analogously, the angles for other joints can be computed and tracked temporally in a video sequence to describe their respective movements. Figure 5 shows the angle orientations computed using the proposed algorithm on a sample image from the test dataset, and the tracking of angle at knee joint in the subsequent frames.
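The angle computation at a joint and its tracking over frames can be sketched as follows. This is a minimal example under the assumption that joints are given as 2-D pixel coordinates; the function names `joint_angle` and `angle_series` and the sample coordinates are illustrative and not taken from the paper.

```python
import math


def joint_angle(ankle, knee, hip):
    """Angle in degrees at the knee between the knee->ankle and knee->hip vectors."""
    v1 = (ankle[0] - knee[0], ankle[1] - knee[1])
    v2 = (hip[0] - knee[0], hip[1] - knee[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0.0:
        raise ValueError("joint locations must not coincide")
    cosine = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to [-1, 1] to guard against rounding errors before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, cosine))))


def angle_series(frames):
    """Angle per frame; `frames` is a list of (ankle, knee, hip) tuples."""
    return [joint_angle(a, k, h) for a, k, h in frames]


# Hypothetical joint locations in two consecutive frames.
series = angle_series([
    ((0, 10), (0, 0), (10, 0)),   # right angle at the knee
    ((0, -10), (0, 0), (0, 10)),  # fully extended leg
])
```

The resulting list is the per-frame angle signal for one joint; the same function applies to any other joint given its two neighbors in the kinematic tree.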

3.3. Model Training

We used a set of positive images annotated with the body parts’ locations and a set of negative images without any human to train the model. Each positive image in the training dataset requires 14 annotated part locations, as shown in Figure 6. The edge relations E in the tree-structured graph are defined manually by connecting the joints. To make the model robust and scale-invariant, the images are scaled, flipped, and rotated by a few degrees. For each annotated body part location, the features are computed within a bounding box around the annotated part (Figure 6). To define the size of the bounding box, the ratio between the length of each part in a given image and the median length of the respective part over the whole training set is computed, and then the 75 percent quantile of the data (i.e., length) is selected to set the size of the bounding box for all parts in that image. We computed HOG features in each bounding box to encode the appearance of the respective part, and their orientation information is saved in cells. It can be observed that the appearance changes in several parts (i.e., their states) depend on the relative location of a part with respect to its parent. Therefore, the relative locations of a part with respect to its parent in all the training images are grouped into S clusters using the k-means clustering algorithm to define all possible states of the part. We assumed that each cluster describes a unique state of the part. Moreover, we did not fix the number of clusters per part; rather, it varies with the degree of articulation of the part. For example, the arm and leg parts exhibit more articulation than the torso.
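The grouping of relative part locations into states can be illustrated with a small k-means sketch. This is plain Lloyd’s algorithm with a deterministic farthest-point initialization, chosen here only to keep the example reproducible; the paper does not state which initialization was used, and the names `relative_offsets` and `kmeans` as well as the sample coordinates are assumptions.

```python
def relative_offsets(child_xy, parent_xy):
    """Offset (dx, dy) of each child annotation from its parent annotation."""
    return [(c[0] - p[0], c[1] - p[1]) for c, p in zip(child_xy, parent_xy)]


def kmeans(points, k, max_iter=100):
    """Lloyd's k-means on 2-D points; returns (centres, labels)."""
    def dist2(p, q):
        return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2

    # Farthest-point initialisation (an assumption, for determinism).
    centres = [points[0]]
    while len(centres) < k:
        centres.append(max(points, key=lambda p: min(dist2(p, c) for c in centres)))

    labels = None
    for _ in range(max_iter):
        new_labels = [min(range(k), key=lambda j: dist2(p, centres[j])) for p in points]
        if new_labels == labels:      # assignments stable: converged
            break
        labels = new_labels
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:               # keep the old centre if a cluster empties
                centres[j] = (
                    sum(p[0] for p in members) / len(members),
                    sum(p[1] for p in members) / len(members),
                )
    return centres, labels


# Hypothetical hand and elbow annotations from four training images.
hands = [(17, 5), (16, 6), (5, 17), (6, 16)]
elbows = [(5, 5), (5, 5), (5, 5), (5, 5)]
centres, states = kmeans(relative_offsets(hands, elbows), k=2)
```

Each cluster index in `states` plays the role of one state of the part: here the first two annotations describe a roughly horizontal forearm and the last two a roughly vertical one.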

Figure 6.

An illustration of model training, and the detection and the tracking of body parts. The patient body is shown in negative to preserve the privacy of the subject.

In this setting, our goal is to obtain a set of templates for each body part and the spatial relations between them such that the model assigns a high positive score to the predicted parts in a positive image and a low score to the parts in a negative image. More precisely, in a given training set of positive images {} and negative images {}, the learning of model parameters consists of finding a set of part-filters and deformation parameters which are computed using the structured prediction objective function proposed in [31]. Let’s assume that and is the set of part-filters and the relations between them, then using Equation (2) we can write . In particular, these parameters should satisfy the following two constraints:

| (10) |

To learn the model parameters, the following optimization problem is solved:

where and are the slack variables representing the loss functions for positive and negative images, respectively, and C is a user-defined regularization parameter that controls the trade-off between maximizing the margin and minimizing the loss function. StructSVM [73] is a natural choice for learning a set of part-filters and their spatial relations; however, we used an extension of structSVM proposed in [74]. Similar to the liblinear SVM [75], it uses a dual coordinate descent technique to find an optimal solution in a single pass. The required change in the above derivation is that the linear constraints share the same slack variable for all negative examples belonging to the same image, and the dual problem is solved coordinate-wise by considering one variable at a time. The above formulation can be described as,

| (11) |

4. Experiments and Results

4.1. Evaluation Dataset

To the best of our knowledge, there is no publicly available dataset for analyzing movement disorders in infants. Therefore, we captured a dataset in a local children’s hospital using a Microsoft Kinect. It is worth mentioning that the proposed algorithm uses only the RGB data from the Kinect; therefore, any other simple camera can be used as well. We selected 10 patients of both genders, aged 2 weeks to 6 months, who had movement disorders and were being treated by therapists at the time. Informed consent was obtained from all participating individuals: the therapists and the infants’ parents. The camera was mounted on a tripod at a height of 1.5 m, at an angle of 90° to the table surface. Figure 7 shows the camera setup used during the recording. The subjects were lying on a table in the supine position (i.e., lying on the back), wearing only diapers, which helped us to clearly capture their movements. For each patient, the recording session usually lasted 15 minutes. A total of 20 video sequences comprising more than 25,000 frames were captured and used in the experiments. The ground truth of the test dataset was obtained through manual delineation: a team of three members carefully analyzed each case and manually marked the positions of the joints in each test image.

Figure 7.

Camera setup in dataset acquisition.

4.2. Experimental Setup

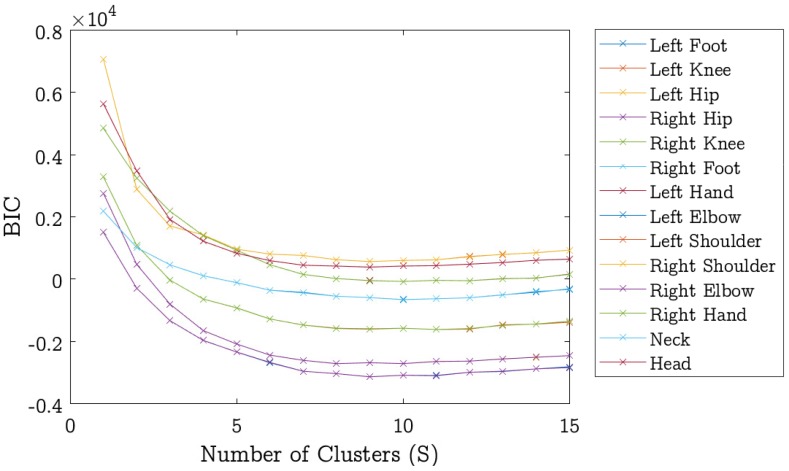

To train the model, we selected 650 positive images. All positive images were flipped and rotated from −15° to +15° in steps of 5° to obtain a robust model. This helps the model to learn the various states of the body parts. As described earlier, each positive image has 14 annotated body-part locations; we computed HOG features in a bounding box around each marked location to encode the appearance of the respective body part. To describe the states of a particular part, the locations of that part relative to its parent part in all images are clustered. The cluster size S depends on the degree of variation in the body part: parts with a large variety of appearances require more part-filters for accurate detection. To represent the different appearances of a part, we use k-means clustering with S clusters, and we used the Bayesian information criterion (BIC) [76] to estimate S for each body part. The BIC, also known as the Schwarz criterion, is designed to select an optimal model among a finite set of models. The BIC value is computed as in [77]:

$$\mathrm{BIC} = n \ln\left(\frac{R}{n}\right) + k \ln(n) \tag{12}$$

where n is the size of the data, R is the residual sum of squares, and k represents the number of model parameters. The parameter k depends on S, the number of clusters, and d, the dimension of the data. The BIC value is computed for each body-part over a range of cluster counts S, and the value of S that gives the minimum BIC value is chosen as the optimal number of clusters for that body-part. A plot of the BIC values against the number of clusters for all body-parts is shown in Figure 8. The optimal numbers of clusters found through the BIC approach are listed in Table 1. We also experimentally tested different cluster sizes for each body part and listed the optimal sizes in Table 1. For the right and left elbows, the empirical and BIC-predicted cluster sizes are the same. For the other body parts, the empirically estimated cluster size is 2–3 clusters smaller than the BIC-predicted size, except for the head, where the difference is 5. We observed that the detection performance of the proposed algorithm with the BIC-predicted clusters is almost the same as with the empirically estimated cluster sizes. Increasing the number of clusters does not improve the accuracy; instead, it adversely affects the computational time of the algorithm. In our experiments, using the BIC-predicted cluster sizes doubled the computational time of the proposed method without a significant improvement in the detection accuracy. Nevertheless, BIC automates the cluster size estimation, which saves the time otherwise spent on experimentally estimating the cluster size for each body part.
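The selection of S by minimum BIC can be sketched as follows. This is an illustrative sketch rather than the authors' implementation: it assumes the common residual-sum-of-squares form BIC = n ln(R/n) + k ln(n), assumes k = S × d (one d-dimensional centroid per cluster), and uses a small NumPy k-means with a deterministic farthest-point initialization so that the example is reproducible. All function names are hypothetical.

```python
import numpy as np

def farthest_point_init(points, n_clusters):
    """Deterministic seeding: start at the first point, then keep adding the farthest point."""
    centers = [points[0]]
    for _ in range(1, n_clusters):
        d2 = np.min([((points - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(points[int(np.argmax(d2))])
    return np.array(centers, dtype=float)

def kmeans(points, n_clusters, n_iters=50):
    """Plain Lloyd iterations; returns (centers, labels)."""
    centers = farthest_point_init(points, n_clusters)
    for _ in range(n_iters):
        d2 = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        for c in range(n_clusters):
            members = points[labels == c]
            if len(members) > 0:           # keep the old center if a cluster empties
                centers[c] = members.mean(axis=0)
    d2 = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return centers, d2.argmin(axis=1)

def bic_score(points, centers, labels):
    """BIC = n ln(R/n) + k ln(n), with R the residual sum of squares."""
    n, d = points.shape
    rss = float(((points - centers[labels]) ** 2).sum())
    rss = max(rss, np.finfo(float).tiny)   # avoid log(0) for a perfect fit
    k = centers.shape[0] * d               # ASSUMPTION: k = S * d
    return n * np.log(rss / n) + k * np.log(n)

def select_cluster_count(points, candidates):
    """Return the candidate S with the minimum BIC, plus all scores."""
    scores = {}
    for s in candidates:
        centers, labels = kmeans(points, s)
        scores[s] = float(bic_score(points, centers, labels))
    best = min(scores, key=scores.get)
    return best, scores
```

In the setting described above, `points` would hold the relative locations of one body part with respect to its parent part, and `select_cluster_count` would be called once per body part. The penalty term in this form is mild, so the selected S can exceed the number of visually distinct groups.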

Figure 8.

The number of clusters predicted by the Bayesian information criterion (BIC) for each body-part. A few plots are very close to each other and therefore they largely overlap and are not visible at this scale.

Table 1.

The predicted number of clusters by the Bayesian information criterion (BIC) and BIC value for each body-part. ’Emp.’ represents the number of clusters obtained empirically for each body-part.

| Body-Part | BIC Value (×) | BIC Clusters | Emp. Clusters |
|---|---|---|---|
| Head | −0.67 | 9 | 4 |
| Left Elbow | −1.63 | 9 | 9 |
| Left Foot | −2.73 | 10 | 6 |
| Left Hip | −2.73 | 8 | 6 |
| Left Knee | −1.63 | 11 | 8 |
| Left Shoulder | −0.67 | 9 | 6 |
| Neck | −0.09 | 9 | 4 |
| Right Elbow | −3.15 | 9 | 9 |
| Right Foot | 0.55 | 10 | 6 |
| Right Hand | 0.55 | 10 | 8 |
| Right Hand | −3.15 | 10 | 8 |
| Right Hip | −0.09 | 8 | 6 |
| Right Knee | 0.37 | 11 | 8 |
| Right Shoulder | 0.37 | 9 | 6 |

We also input negative images, i.e., images with no human subjects, to the model. Each possible root location in a negative image represents a unique negative example in the training set. We initialized the deformation parameters so that each part location is close to its rest location. The structSVM library [74] is used in the implementation of the proposed method. Before the actual model is trained on the full training database, 10-fold cross-validation is performed to select the optimal value of its parameter C. We used a multi-resolution search to find the optimal value of this hyper-parameter: first, parameter values are tested over a large range and the best configuration is selected; then, a narrower search space around this value is explored to select the optimal value.
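The two-stage search for C can be sketched as below. This is a minimal illustration under stated assumptions: `score_fn` is a hypothetical callable that would return the mean 10-fold cross-validation score for a given C, the grids are log-spaced, and the width of the second stage (`span`, in decades) and its resolution (`n_fine`) are illustrative choices not taken from the paper.

```python
import numpy as np

def coarse_to_fine_search(score_fn, coarse_grid, n_fine=9, span=0.5):
    """Two-stage search for a regularization parameter.

    score_fn(C) -> validation score (higher is better).
    Stage 1 evaluates a wide grid; stage 2 evaluates a log-spaced grid
    of n_fine values within +/- span decades of the stage-1 winner.
    """
    coarse_scores = [score_fn(c) for c in coarse_grid]
    best_coarse = coarse_grid[int(np.argmax(coarse_scores))]

    log_c = np.log10(best_coarse)
    fine_grid = np.logspace(log_c - span, log_c + span, n_fine)
    fine_scores = [score_fn(c) for c in fine_grid]
    best = float(fine_grid[int(np.argmax(fine_scores))])
    return best, best_coarse
```

A typical call would pass a coarse grid such as `[1e-3, 1e-2, 1e-1, 1.0, 10.0, 100.0, 1e3]`; the second stage then costs only `n_fine` additional cross-validation runs.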

4.3. Results

The trained model is evaluated on a probe (i.e., testing) dataset comprising the rest of the infant recordings. Since the body parts are located on joints, and the accuracy of the encoded motion information depends on the precision of the predicted joint locations, the performance of the proposed algorithm is evaluated on both the estimation of the joints and the encoding of motion at particular joints. The results in each category are summarized in the following subsections.

4.3.1. Joints Estimation Accuracy

The performance of the proposed algorithm in estimating the joints’ locations is assessed using two challenging metrics: Average Joint Position Error and Worst Case Accuracy. The results are compared with recent similar techniques [20,78]. Furthermore, the encoded movements at different predicted joints are compared with the manually annotated ground truth. The Average Joint Position Error (AJPE) metric measures the average difference between the predicted joint locations and their ground truth. The average differences are measured in millimeters and the results are documented in Table 2. The results show that the proposed algorithm outperforms [20] in the detection of each body part. The mean AJPE of the method in [20] is 41 mm, whereas that of our method is 12.7 mm.
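As an illustration of how AJPE can be computed, the sketch below assumes that the "difference" between a predicted and a ground-truth joint is the Euclidean distance, and that both are given as arrays of shape (frames, joints, 2) already expressed in millimeters. The function name and array layout are assumptions made for this example.

```python
import numpy as np

def average_joint_position_error(pred, gt):
    """Per-joint and overall mean distance between predicted and true joints.

    pred, gt: float arrays of shape (n_frames, n_joints, 2), in millimeters.
    Returns (per_joint_error, mean_error).
    """
    dists = np.linalg.norm(pred - gt, axis=2)   # (n_frames, n_joints)
    per_joint = dists.mean(axis=0)              # average over frames
    return per_joint, float(per_joint.mean())   # mean over all joints
```

The per-joint values correspond to the rows of Table 2 and the second return value to its Mean Error row.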

Table 2.

Average joint position error measures the average difference (in millimeters) between the predicted and the ground truth locations. The error is reported per body part, and Mean Error represents the average error across all the body parts in the test dataset. The proposed method has the lowest error for every body part.

| Body-Part | Detection Error (mm), Hesse et al. [20] | Detection Error (mm), Proposed Method |
|---|---|---|
| Head | 37 | 20.3 |
| Neck | 20 | 11.4 |
| Right Shoulder | 27 | 11 |
| Left Shoulder | 73 | 11.4 |
| Right Elbow | 24 | 11.2 |
| Left Elbow | 20 | 12.4 |
| Right Hand | 44 | 11.9 |
| Left Hand | 149 | 14.4 |
| Right Hip | 33 | 11.9 |
| Left Hip | 12 | 11.2 |
| Right Knee | 45 | 11.9 |
| Left Knee | 49 | 11.7 |
| Right Foot | 28 | 14 |
| Left Foot | 30 | 12.8 |
| Mean Error | 41 | 12.7 |

The Worst Case Accuracy (WCA) metric is defined as the percentage of frames in which all joints are detected within a certain threshold distance from the ground truth. Note that any frame in which the error on even one joint location exceeds the threshold is considered a false positive. Similar to [78], we conducted two evaluations using thresholds of 5 cm and 3 cm, and the results are summarized in Table 3. The results reveal that our method performs better in both tests, achieving accuracies of 95.8% and 86.3%, respectively.
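The WCA definition translates directly into a few lines of code. The sketch below assumes Euclidean distances, arrays of shape (frames, joints, 2), and a threshold given in the same unit as the coordinates; the function name is illustrative.

```python
import numpy as np

def worst_case_accuracy(pred, gt, threshold):
    """Percentage of frames in which every joint lies within `threshold` of the ground truth.

    pred, gt: float arrays of shape (n_frames, n_joints, 2);
    threshold uses the same length unit as the coordinates.
    """
    dists = np.linalg.norm(pred - gt, axis=2)         # (n_frames, n_joints)
    frame_ok = (dists <= threshold).all(axis=1)       # one bad joint fails the frame
    return float(100.0 * frame_ok.mean())
```

Because a single misplaced joint rejects the whole frame, WCA is a much stricter measure than a per-joint average.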

Table 3.

Performance evaluation using the WCA metric, which describes the percentage of frames in the test dataset in which all the body-parts are detected within a certain threshold distance from the ground truth location. Two experiments are carried out using thresholds of 5 cm and 3 cm. The best results are marked in bold.

| Method | Threshold = 5 cm | Threshold = 3 cm |
|---|---|---|
| Hesse et al. [78] | 90.0% | 85.0% |
| Proposed method | **95.8%** | **86.3%** |

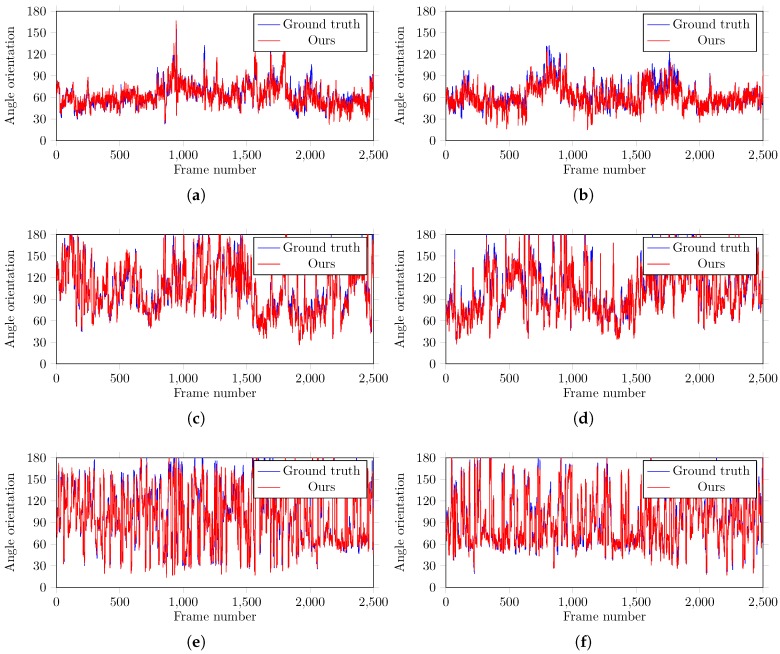

4.3.2. Motion Encoding Accuracy

We also evaluated the performance of the proposed algorithm in encoding the motion information at the predicted joints, comparing the computed information with the ground truth. In particular, we computed the angle orientation at predicted joint locations such as the shoulders, elbows, and knees. The orientation information is encoded in the temporal direction at particular joints. Figure 9 presents the computed movement information and the ground-truth angles. The overlapping areas represent similar movement patterns. Although there is a small difference between the computed and the ground-truth movement patterns, the estimated movement information (using angles) reflects the ground truth accurately. The reason for this small variation is that the body parts are detected a few pixels away from their actual locations (i.e., the ground-truth information). In particular, the proposed algorithm estimates a joint’s location as the center of the predicted body-part patch in the image, and then draws edges between the estimated locations to calculate the angle orientation. Therefore, a deviation of the part-filter window (i.e., the detection result) by a few pixels from the ground-truth location of the body part produces these small variations in the computed angles. Such plots (Figure 9) can help doctors and therapists to identify movement disorders based on the absence of specific motion information at a particular joint.
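One plausible way to turn joint locations into an angle over time is sketched below. The paper does not spell out the exact angle definition, so this example assumes the angle at a joint is the one enclosed by the two edges that connect it to its neighbours in the skeleton (for the elbow, the edges to the shoulder and to the hand). Function names and the index-based interface are hypothetical.

```python
import numpy as np

def joint_angle(parent, joint, child):
    """Angle in degrees at `joint` between the edges joint->parent and joint->child."""
    u = np.asarray(parent, dtype=float) - np.asarray(joint, dtype=float)
    v = np.asarray(child, dtype=float) - np.asarray(joint, dtype=float)
    norm = np.linalg.norm(u) * np.linalg.norm(v)
    if norm == 0.0:
        raise ValueError("coincident joint locations give an undefined angle")
    cos = np.clip(np.dot(u, v) / norm, -1.0, 1.0)   # guard against rounding error
    return float(np.degrees(np.arccos(cos)))

def angle_series(joints, parent_idx, joint_idx, child_idx):
    """Angle at one joint for every frame; joints has shape (n_frames, n_joints, 2)."""
    return np.array([
        joint_angle(frame[parent_idx], frame[joint_idx], frame[child_idx])
        for frame in joints
    ])
```

Plotting the output of `angle_series` for the predicted and the annotated joints on the same axes gives a comparison of the kind described for Figure 9.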

Figure 9.

Predicted and the ground-truth angle orientations of (a) right shoulder, (b) left shoulder, (c) right elbow, (d) left elbow, (e) right knee, and (f) left knee in a test video sequence with 2500 frames.

To further investigate the performance of the proposed method, the Mean Absolute Error (MAE) is also computed between the estimated movement information, in terms of orientation angles, and the ground truth. The results are presented in Table 4. They show that the proposed algorithm computes the movement information accurately, with a mean absolute error of approximately 3° with respect to the ground truth.

Table 4.

Mean Absolute Error (MAE) between the estimated and the ground-truth angle orientations of different body parts.

| Left Elbow | Left Knee | Left Shoulder | Right Elbow | Right Knee | Right Shoulder | Average |
|---|---|---|---|---|---|---|
| 3.632 | 2.959 | 2.830 | 3.231 | 3.160 | 2.438 | 3.042 |
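For reference, the MAE between two angle sequences is a short computation. The sketch below assumes both sequences are sampled at the same frames and expressed in the same unit. The last lines check that the Average column of Table 4 is the mean of the six per-joint values.

```python
import numpy as np

def mean_absolute_error(pred_angles, gt_angles):
    """Mean absolute difference between two equally long angle sequences."""
    pred = np.asarray(pred_angles, dtype=float)
    gt = np.asarray(gt_angles, dtype=float)
    if pred.shape != gt.shape:
        raise ValueError("sequences must have the same shape")
    return float(np.mean(np.abs(pred - gt)))

# Per-joint MAE values as reported in Table 4.
table4 = [3.632, 2.959, 2.830, 3.231, 3.160, 2.438]
table4_average = round(float(np.mean(table4)), 3)   # 3.042
```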

The evaluation with the different metrics (AJPE, WCA, and MAE) shows that the proposed method performs consistently well in estimating the joints’ locations and in encoding the motion information, which reflects the efficacy of the proposed algorithm.

5. Conclusions and Future Work

In this paper, a novel method to identify infantile movement disorders is presented. Unlike existing techniques, it does not use markers or sensors on the subject’s body to analyze the movements. A part-based model to detect and track the body parts of infants in video is proposed. The trained model encodes the possible orientations of each part and the spatial relations between the parts. In the probe sequence, the predicted joint locations are used to construct a skeleton and compute the angle orientations, and tracking them in the subsequent frames facilitates the movement analysis of a particular joint. In the future, we plan to exploit the depth information to compute accurate angle orientations in the 3D domain.

An interesting application of the proposed algorithm would be to evaluate the patient’s poses and movements during a therapeutic procedure. For example, motor disability is a condition that arises from damage to the central nervous system (i.e., brain and spinal cord) and affects the body movements. It causes several problems such as cerebral palsy, spinal scoliosis, peripheral paralysis of arms/legs, hip joint dysplasia and various myopathies [21]. To deal with such problems, neurodevelopmental treatment and the Vojta technique are the most common approaches [79]. Neurological physiotherapy aims to restore the message path between the brain and the musculoskeletal system by assisting patients in performing blocked movement patterns. During the treatment, a particular stimulation is applied to a region of the patient’s body to trigger the blocked movement patterns, which the patient is unable to perform in the normal way. The proposed method can be extended to detect the poses and movements of the patient during the treatment, which would indicate how accurately the treatment is being given. Since therapists recommend continuing the therapy at home to achieve the best outcomes, such a system may serve as an in-home alternative to in-hospital therapy. This would not only support the quick recovery of patients but also help patients who do not have access to the desired treatment in their towns.

Occlusions in some positions are unavoidable. If a body part is partially occluded, the proposed method is still able to detect its movement accurately. However, in the case of significant occlusions, increasing the number of part filters (clusters) might not achieve the desired results, which limits the performance of our method. To deal with large occlusions, we plan to extend the proposed algorithm to use multiple cameras rather than a single-camera setup, which would help to handle large occlusions and improve the accuracy of the algorithm.

Acknowledgments

We thank the therapists for helping us in capturing the test dataset.

Author Contributions

Conceptualization, M.H.K. and M.G.; Methodology, M.H.K. and M.S.; Investigation, M.H.K. and M.S.; Writing—Original Draft Preparation, M.H.K. and M.S.F.; Writing—Review & Editing, M.H.K., M.S.F. and M.G.

Funding

This research was supported by the German Federal Ministry of Education and Research within the project “SenseVojta: Sensor-based Diagnosis, Therapy and Aftercare According to the Vojta Principle” (Grant Number: 13GW0166E).

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Mink J.W. The basal ganglia: Focused selection and inhibition of competing motor programs. Prog. Neurobiol. 1996;50:381–425. doi: 10.1016/S0301-0082(96)00042-1.

- 2. Groen S.E., de Blecourt A.C., Postema K., Hadders-Algra M. General movements in early infancy predict neuromotor development at 9 to 12 years of age. Dev. Med. Child Neurol. 2005;47:731–738. doi: 10.1017/S0012162205001544.

- 3. Piek J.P. The role of variability in early motor development. Infant Behav. Dev. 2002;25:452–465. doi: 10.1016/S0163-6383(02)00145-5.

- 4. Meinecke L., Breitbach-Faller N., Bartz C., Damen R., Rau G., Disselhorst-Klug C. Movement analysis in the early detection of newborns at risk for developing spasticity due to infantile cerebral palsy. Hum. Mov. Sci. 2006;25:125–144. doi: 10.1016/j.humov.2005.09.012.

- 5. Stahl A., Schellewald C., Stavdahl Ø., Aamo O.M., Adde L., Kirkerod H. An optical flow-based method to predict infantile cerebral palsy. IEEE Trans. Neural Syst. Rehabil. Eng. 2012;20:605–614. doi: 10.1109/TNSRE.2012.2195030.

- 6. B-Hospers C.H., H-Algra M. A systematic review of the effects of early intervention on motor development. Dev. Med. Child Neurol. 2005;47:421–432. doi: 10.1017/S0012162205000824.

- 7. Prechtl H. General movement assessment as a method of developmental neurology: New paradigms and their consequences. Dev. Med. Child Neurol. 2001;43:836–842. doi: 10.1017/S0012162201001529.

- 8. Pinho R.R., Correia M.V. A Movement Tracking Management Model with Kalman Filtering, Global Optimization Techniques and Mahalanobis Distance. Adv. Comput. Methods Sci. Eng. 2005;4A:463–466.

- 9. Pinho R.R., Tavares J.M.R. Tracking features in image sequences with kalman filtering, global optimization, mahalanobis distance and a management model. Comput. Model. Eng. Sci. 2009;46:51–75.

- 10. Pinho R.R., Tavares J.M.R.S., Correia M.V. An Improved Management Model for Tracking Missing Features in Computer Vision Long Image Sequences. WSEAS Trans. Inf. Sci. Appl. 2007;1:196–203.

- 11. Cui J., Liu Y., Xu Y., Zhao H., Zha H. Tracking Generic Human Motion via Fusion of Low- and High-Dimensional Approaches. IEEE Trans. Syst. Man Cybern. Syst. 2013;43:996–1002. doi: 10.1109/TSMCA.2012.2223670.

- 12. Tavares J., Padilha A. Matching lines in image sequences with geometric constraints; Proceedings of the 7th Portuguese Conference on Pattern Recognition; Aveiro, Portugal. 23–25 March 1995.

- 13. Vasconcelos M.J.M., Tavares J.M.R.S. Human Motion Segmentation Using Active Shape Models. In: Tavares J.M.R.S., Natal Jorge R., editors. Computational and Experimental Biomedical Sciences: Methods and Applications. Springer International Publishing; Cham, Switzerland: 2015. pp. 237–246.

- 14. Gong W., Gonzàlez J., Tavares J.M.R.S., Roca F.X. A New Image Dataset on Human Interactions. In: Perales F.J., Fisher R.B., Moeslund T.B., editors. Articulated Motion and Deformable Objects. Springer; Berlin/Heidelberg, Germany: 2012. pp. 204–209.

- 15. Park C., Liu J., Chou P.H. Eco: An Ultra-compact Low-power Wireless Sensor Node for Real-time Motion Monitoring; Proceedings of the 4th International Symposium on Information Process in Sensor Networks; Los Angeles, CA, USA. 24–27 April 2005.

- 16. Heinze F., Hesels K., Breitbach-Faller N., Schmitz-Rode T., Disselhorst-Klug C. Movement analysis by accelerometry of newborns and infants for the early detection of movement disorders due to infantile cerebral palsy. Med. Biol. Eng. Comput. 2010;48:765–772. doi: 10.1007/s11517-010-0624-z.

- 17. Trujillo-Priego I.A., Lane C.J., Vanderbilt D.L., Deng W., Loeb G.E., Shida J., Smith B.A. Development of a Wearable Sensor Algorithm to Detect the Quantity and Kinematic Characteristics of Infant Arm Movement Bouts Produced across a Full Day in the Natural Environment. Technologies. 2017;5:39. doi: 10.3390/technologies5030039.

- 18. Hondori H.M., Khademi M., Dodakian L., Cramer S.C., Lopes C.V. Medicine Meets Virtual Reality 20. Volume 184. IOS Press; Amsterdam, The Netherlands: 2013. A spatial augmented reality rehab system for post-stroke hand rehabilitation; pp. 279–285.

- 19. Khan M.H., Helsper J., Boukhers Z., Grzegorzek M. Automatic recognition of movement patterns in the vojta-therapy using RGB-D data; Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP); Phoenix, AZ, USA. 25–28 September 2016; pp. 1235–1239.

- 20. Hesse N., Stachowiak G., Breuer T., Arens M. Estimating Body Pose of Infants in Depth Images Using Random Ferns; Proceedings of the IEEE International Conference on Computer Vision (ICCV) Workshops; Santiago, Chile. 7–13 December 2015.

- 21. Khan M.H., Helsper J., Farid M.S., Grzegorzek M. A computer vision-based system for monitoring Vojta therapy. Int. J. Med. Inform. 2018;113:85–95. doi: 10.1016/j.ijmedinf.2018.02.010.

- 22. Yao L., Xu H., Li A. Kinect-based rehabilitation exercises system: therapist involved approach. Biomed. Mater. Eng. 2014;24:2611–2618. doi: 10.3233/BME-141077.

- 23. Khan M.H., Helsper J., Yang C., Grzegorzek M. An automatic vision-based monitoring system for accurate Vojta-therapy; Proceedings of the 2016 IEEE/ACIS 15th International Conference on Computer and Information Science (ICIS); Okayama, Japan. 26–29 June 2016; pp. 1–6.

- 24. Marcroft C., Khan A., Embleton N.D., Trenell M., Plötz T. Movement recognition technology as a method of assessing spontaneous general movements in high risk infants. Front. Neurol. 2015;5:284. doi: 10.3389/fneur.2014.00284.

- 25. Sousa A.S., Silva A., Tavares J.M.R. Biomechanical and neurophysiological mechanisms related to postural control and efficiency of movement: A review. Somatosens. Motor Res. 2012;29:131–143. doi: 10.3109/08990220.2012.725680.

- 26. Nunes J.F., Moreira P.M., Tavares J.M.R. Handbook of Research on Computational Simulation and Modeling in Engineering. IGI Global; Hershey, PA, USA: 2016. Human motion analysis and simulation tools: a survey; pp. 359–388.

- 27. Oliveira R.B., Pereira A.S., Tavares J.M.R.S. Computational diagnosis of skin lesions from dermoscopic images using combined features. Neural Comput. Appl. 2018 doi: 10.1007/s00521-018-3439-8.

- 28. Oliveira R.B., Papa J.P., Pereira A.S., Tavares J.M.R.S. Computational methods for pigmented skin lesion classification in images: review and future trends. Neural Comput. Appl. 2018;29:613–636. doi: 10.1007/s00521-016-2482-6.

- 29. Ma Z., Tavares J.M.R. Effective features to classify skin lesions in dermoscopic images. Expert Syst. Appl. 2017;84:92–101. doi: 10.1016/j.eswa.2017.05.003.

- 30. Fischler M.A., Elschlager R.A. The representation and matching of pictorial structures. IEEE Trans. Comput. 1973;100:67–92. doi: 10.1109/T-C.1973.223602.

- 31. Felzenszwalb P.F., Girshick R.B., McAllester D., Ramanan D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2010;32:1627–1645. doi: 10.1109/TPAMI.2009.167.

- 32. Yang Y., Ramanan D. Articulated human detection with flexible mixtures of parts. IEEE Trans. Pattern Anal. Mach. Intell. 2013;35:2878–2890. doi: 10.1109/TPAMI.2012.261.

- 33. Liu Y., Nie L., Han L., Zhang L., Rosenblum D.S. Action2Activity: Recognizing Complex Activities from Sensor Data; Proceedings of the Twenty-Fourth International Joint Conference on Artificial Intelligence (IJCAI 2015); Buenos Aires, Argentina. 25–31 July 2015; pp. 1617–1623.

- 34. Liu Y., Nie L., Liu L., Rosenblum D.S. From action to activity: Sensor-based activity recognition. Neurocomputing. 2016;181:108–115. doi: 10.1016/j.neucom.2015.08.096.

- 35. Liu Y., Zhang L., Nie L., Yan Y., Rosenblum D.S. Fortune Teller: Predicting Your Career Path; Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence (AAAI-16); Phoenix, AZ, USA. 12–17 February 2016; pp. 201–207.

- 36. Liu Y., Zheng Y., Liang Y., Liu S., Rosenblum D.S. Urban Water Quality Prediction Based on Multi-task Multi-view Learning; Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence (IJCAI’16); New York, NY, USA. 9–15 July 2016; pp. 2576–2582.

- 37. Burke J., Morrow P., McNeill M., McDonough S., Charles D. Vision based games for upper-limb stroke rehabilitation; Proceedings of the 2008 International Machine Vision and Image Processing Conference (IMVIP); Portrush, Ireland. 3–5 September 2008; pp. 159–164.

- 38. Paolini G., Peruzzi A., Mirelman A., Cereatti A., Gaukrodger S., Hausdorff J.M., Della Croce U. Validation of a method for real time foot position and orientation tracking with Microsoft Kinect technology for use in virtual reality and treadmill based gait training programs. IEEE Trans. Neural Syst. Rehabil. Eng. 2014;22:997–1002. doi: 10.1109/TNSRE.2013.2282868.

- 39. Chen C.C., Liu C.Y., Ciou S.H., Chen S.C., Chen Y.L. Digitized Hand Skateboard Based on IR-Camera for Upper Limb Rehabilitation. J. Med. Syst. 2017;41 doi: 10.1007/s10916-016-0682-3.

- 40. Tao Y., Hu H. Colour based human motion tracking for home-based rehabilitation. IEEE Int. Conf. Syst. Man Cybern. 2004;1:773–778.

- 41. Leder R.S., Azcarate G., Savage R., Savage S., Sucar L.E., Reinkensmeyer D., Toxtli C., Roth E., Molina A. Nintendo Wii remote for computer simulated arm and wrist therapy in stroke survivors with upper extremity hemipariesis; Proceedings of the 2008 Virtual Rehabilitation; Vancouver, BC, Canada. 25–27 August 2008; p. 74.

- 42. Rado D., Sankaran A., Plasek J., Nuckley D., Keefe D.F. A Real-Time Physical Therapy Visualization Strategy to Improve Unsupervised Patient Rehabilitation; Proceedings of the 2009 IEEE Visualization Conference; Atlantic City, NJ, USA. 11–16 October 2009.

- 43. Colyer S.L., Evans M., Cosker D.P., Salo A.I. A Review of the Evolution of Vision-Based Motion Analysis and the Integration of Advanced Computer Vision Methods Towards Developing a Markerless System. Sports Med. Open. 2018;4:24. doi: 10.1186/s40798-018-0139-y.

- 44. Da Gama A., Chaves T., Figueiredo L., Teichrieb V. Guidance and movement correction based on therapeutics movements for motor rehabilitation support systems; Proceedings of the 2012 14th Symposium on Virtual Augmented Reality; Rio de Janeiro, Brazil. 28–31 May 2012; pp. 191–200.

- 45. Mehrizi R., Peng X., Tang Z., Xu X., Metaxas D., Li K. Toward Marker-Free 3D Pose Estimation in Lifting: A Deep Multi-View Solution; Proceedings of the 13th IEEE International Conference on Automatic Face Gesture Recognition (FG 2018); Xi’an, China. 15–19 May 2018; pp. 485–491.

- 46. Elhayek A., de Aguiar E., Jain A., Thompson J., Pishchulin L., Andriluka M., Bregler C., Schiele B., Theobalt C. MARCOnI—ConvNet-Based MARker-Less Motion Capture in Outdoor and Indoor Scenes. IEEE Trans. Pattern Anal. Mach. Intell. 2017;39:501–514. doi: 10.1109/TPAMI.2016.2557779.

- 47. Tang Z., Peng X., Geng S., Wu L., Zhang S., Metaxas D. Quantized Densely Connected U-Nets for Efficient Landmark Localization; Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Salt Lake City, UT, USA. 18–22 June 2018.

- 48. Mehrizi R., Peng X., Xu X., Zhang S., Metaxas D., Li K. A computer vision based method for 3D posture estimation of symmetrical lifting. J. Biomech. 2018;69:40–46. doi: 10.1016/j.jbiomech.2018.01.012.

- 49. Shotton J., Fitzgibbon A., Cook M., Sharp T., Finocchio M., Moore R., Kipman A., Blake A. Real-Time Human Pose Recognition in Parts from Single Depth Images. In: Cipolla R., Battiato S., Farinella G.M., editors. Machine Learning for Computer Vision. Springer; Berlin/Heidelberg, Germany: 2013. pp. 119–135.

- 50. Rahmati H., Aamo O.M., Stavdahl O., Dragon R., Adde L. Video-based early cerebral palsy prediction using motion segmentation; Proceedings of the 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Chicago, IL, USA. 26–30 August 2014; pp. 3779–3783.

- 51. Evett L., Burton A., Battersby S., Brown D., Sherkat N., Ford G., Liu H., Standen P. Dual Camera Motion Capture for Serious Games in Stroke Rehabilitation; Proceedings of the 2011 IEEE International Conference on Serious Games and Applications for Health (SEGAH ’11); Washington, DC, USA. 16–18 November 2011; pp. 1–4.

- 52. Olsen M.D., Herskind A., Nielsen J.B., Paulsen R.R. Model-Based Motion Tracking of Infants; Proceedings of the 13th European Conference on Computer Vision—ECCV 2014 Workshops; Zurich, Switzerland. 6–7 September 2014; pp. 673–685.

- 53. Penelle B., Debeir O. Human motion tracking for rehabilitation using depth images and particle filter optimization; Proceedings of the 2013 2nd International Conference on Advances in Biomedical Engineering (ICABME); Tripoli, Lebanon. 11–13 September 2013; pp. 211–214.

- 54. Khan M.H., Grzegorzek M. Vojta-Therapy: A Vision-Based Framework to Recognize the Movement Patterns. Int. J. Softw. Innov. 2018;5.3:18–32. doi: 10.4018/IJSI.2017070102.

- 55. Guerrero C., Uribe-Quevedo A. Kinect-based posture tracking for correcting positions during exercise. Stud. Health Technol. Inform. 2013;184:158–160.

- 56. Wu K. Master’s Thesis. Department of Computer Science and Information Engineering, National Taipei University of Technology; Taipei, Taiwan: 2011. Using Human Skeleton to Recognizing Human Exercise by Kinect’s Camera.

- 57. Chang Y., Chen S., Huang J. A Kinect-based system for physical rehabilitation: A pilot study for young adults with motor disabilities. Res. Dev. Disabil. 2011;32:2566–2570. doi: 10.1016/j.ridd.2011.07.002.

- 58. Exell T., Freeman C., Meadmore K., Kutlu M., Rogers E., Hughes A.-M., Hallewell E., Burridge J. Goal orientated stroke rehabilitation utilising electrical stimulation, iterative learning and microsoft kinect; Proceedings of the 13th International Conference on Rehabilitation Robotics (ICORR); Seattle, WA, USA. 24–26 June 2013; pp. 1–6.

- 59. Chang C.Y., Lange B., Zhang M., Koenig S., Requejo P., Somboon N., Sawchuk A.A., Rizzo A.A. Towards pervasive physical rehabilitation using Microsoft Kinect; Proceedings of the 6th International Conference on Pervasive Computing Technologies for Healthcare; San Diego, CA, USA. 21–24 May 2012; pp. 159–162.

- 60. Mousavi Hondori H., Khademi M. A Review on Technical and Clinical Impact of Microsoft Kinect on Physical Therapy and Rehabilitation. J. Med. Eng. 2014;2014:846514. doi: 10.1155/2014/846514.

- 61.Chen K.H., Chen P.C., Liu K.C., Chan C.T. Wearable sensor-based rehabilitation exercise assessment for knee osteoarthritis. Sensors. 2015;15:4193–4211. doi: 10.3390/s150204193. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Tseng Y.C., Wu C.H., Wu F.J., Huang C.F., King C.T., Lin C.Y., Sheu J.P., Chen C.Y., Lo C.Y., Yang C.W., et al. A wireless human motion capturing system for home rehabilitation; Proceedings of the 10th International Conference on Mobile Data Management (MDM’09): Systems, Services and Middleware; Taipei, Taiwan. 18–20 May 2009; pp. 359–360. [Google Scholar]

- 63.Chen B.R., Patel S., Buckley T., Rednic R., McClure D.J., Shih L., Tarsy D., Welsh M., Bonato P. A Web-Based System for Home Monitoring of Patients With Parkinsonś Disease Using Wearable Sensors. IEEE Trans. Biomed. Eng. 2011;58:831–836. doi: 10.1109/TBME.2010.2090044. [DOI] [PubMed] [Google Scholar]

- 64.Hester T., Hughes R., Sherrill D.M., Knorr B., Akay M., Stein J., Bonato P. Using wearable sensors to measure motor abilities following stroke; Proceedings of the International Workshop on Wearable and Implantable Body Sensor Networks (BSN’06); Cambridge, MA, USA. 3–5 April 2006. [Google Scholar]

- 65.Zhang W., Tomizuka M., Byl N. A wireless human motion monitoring system for smart rehabilitation. J. Dyn. Syst. Meas. Control. 2016;138:111004. doi: 10.1115/1.4033949. [DOI] [Google Scholar]

- 66.Dehzangi O., Taherisadr M., ChangalVala R. IMU-Based Gait Recognition Using Convolutional Neural Networks and Multi-Sensor Fusion. Sensors. 2017;17:2735. doi: 10.3390/s17122735. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Anwary A.R., Yu H., Vassallo M. An Automatic Gait Feature Extraction Method for Identifying Gait Asymmetry Using Wearable Sensors. Sensors. 2018;18:676. doi: 10.3390/s18020676. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Bleser G., Steffen D., Weber M., Hendeby G., Stricker D., Fradet L., Marin F., Ville N., Carré F. A personalized exercise trainer for the elderly. J. Ambient Intell. Smart Environ. 2013;5:547–562. [Google Scholar]

- 69.Wang Q., Chen W., Timmermans A.A., Karachristos C., Martens J.B., Markopoulos P. Smart Rehabilitation Garment for posture monitoring; Proceedings of the 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBS); Milano, Italy. 25–29 August 2015; pp. 5736–5739. [DOI] [PubMed] [Google Scholar]

- 70. Bo A.P.L., Hayashibe M., Poignet P. Joint angle estimation in rehabilitation with inertial sensors and its integration with Kinect; Proceedings of the 33rd Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Boston, MA, USA. 30 August–3 September 2011; pp. 3479–3483.

- 71. Bryanton C., Bosse J., Brien M., Mclean J., McCormick A., Sveistrup H. Feasibility, motivation, and selective motor control: virtual reality compared to conventional home exercise in children with cerebral palsy. Cyberpsychol. Behav. 2006;9:123–128. doi: 10.1089/cpb.2006.9.123.

- 72. Crommert M.E., Halvorsen K., Ekblom M.M. Trunk muscle activation at the initiation and braking of bilateral shoulder flexion movements of different amplitudes. PLoS ONE. 2015;10:e0141777. doi: 10.1371/journal.pone.0141777.

- 73. Tsochantaridis I., Hofmann T., Joachims T., Altun Y. Support Vector Machine Learning for Interdependent and Structured Output Spaces; Proceedings of the 21st International Conference on Machine Learning (ICML’04); Banff, AB, Canada. 4–8 July 2004; New York, NY, USA: ACM; 2004.

- 74. Ramanan D. Dual coordinate solvers for large-scale structural SVMs. arXiv. 2013. arXiv:1312.1743.

- 75. Fan R.E., Chang K.W., Hsieh C.J., Wang X.R., Lin C.J. LIBLINEAR: A Library for Large Linear Classification. J. Mach. Learn. Res. 2008;9:1871–1874.

- 76. Schwarz G. Estimating the dimension of a model. Ann. Stat. 1978;6:461–464. doi: 10.1214/aos/1176344136.

- 77. Pelleg D., Moore A.W. X-means: Extending K-means with Efficient Estimation of the Number of Clusters; Proceedings of the Seventeenth International Conference on Machine Learning; San Francisco, CA, USA. 29 June–2 July 2000; San Francisco, CA, USA: Morgan Kaufmann Publishers Inc.; 2000. pp. 727–734.

- 78. Hesse N., Schröder A.S., Müller-Felber W., Bodensteiner C., Arens M., Hofmann U.G. Body pose estimation in depth images for infant motion analysis; Proceedings of the 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Seogwipo, Korea. 11–15 July 2017; pp. 1909–1912.

- 79. Barry M.J. Physical therapy interventions for patients with movement disorders due to cerebral palsy. J. Child Neurol. 1996;11:S51–S60. doi: 10.1177/0883073896011001S08.