Abstract

Virtual reality trainers are educational tools with great potential for laparoscopic surgery. They can provide basic skills training in a controlled environment without risk to patients. They can also offer objective performance assessment without the need for proctors. However, designing effective user interfaces that allow trainees to acquire the appropriate technical skills on these systems remains a challenge. This paper examines a process for achieving interface and environment fidelity during the development of the Virtual Basic Laparoscopic Surgical Trainer (VBLaST). Two iterations of the design process were conducted and evaluated. For that purpose, a total of 42 subjects participated in two experimental studies in which two versions of the VBLaST were compared to the FLS box-trainer, the accepted standard in the North American surgical community for training and assessing basic laparoscopic skills. Participants performed 10 trials of the peg transfer task on each trainer. Task performance was assessed using the validated FLS scoring method. In addition, a subjective evaluation questionnaire was used to assess the fidelity aspects of the VBLaST relative to the FLS trainer. Finally, after the first design iteration, a focus group session with expert surgeons was conducted as a comparative situated evaluation to assess the fidelity aspects of the early VBLaST prototype relative to the FLS trainer.

The results indicate that user performance on the earlier version of the VBLaST, resulting from the first design iteration, was significantly lower than performance on the standard FLS box-trainer. The comparative situated evaluation with domain experts allowed us to identify issues related to the visual, haptic, and interface fidelity of this early prototype. Results of the second experiment indicate that performance on the second-generation VBLaST was significantly improved compared with the first generation and was not significantly different from that on the standard FLS box-trainer. Furthermore, the subjects rated the fidelity features of the modified VBLaST higher than those of the early version. These findings demonstrate the value of comparative situated evaluation sessions entailing hands-on reflection by domain experts for achieving environment and interface fidelity, as well as training objectives, when designing a virtual reality laparoscopic trainer. This suggests that the method could be used in the future to enhance the value of VR systems as an alternative to physical trainers for laparoscopic surgery skills. We also identify recommendations on how to use this method to achieve environment and interface fidelity in a VR laparoscopic surgical trainer.

Keywords: Iterative design, Interaction design, Surgical training, Virtual reality (VR), Simulator fidelity

1. INTRODUCTION

Laparoscopic surgery is a minimally invasive surgical technique in which surgeons view the operating field on a monitor while manipulating surgical instruments and tissues. It differs from open surgery in that surgeons operate through small incisions, use specialized instruments, and lack direct vision of their actions. Despite its many advantages for patients (faster recovery, less tissue damage, smaller scars, less pain, and less need for postoperative drugs), laparoscopic surgery requires the surgeon to learn new and challenging skills such as hand-eye coordination, translating two-dimensional video images into a three-dimensional working area, and dealing with the fulcrum effect (Cao, MacKenzie, & Payandeh, 1996; Cao & MacKenzie, 1997; Crothers, Gallagher, McClure, James, & McGuigan, 1999; Figert, Park, Witzke, & Schwartz, 2001; Nguyen, Tonetti, & Thomann, 2013). Because of these technical challenges, the traditional apprenticeship model, “See one, do one, teach one”, is insufficient for laparoscopic skills training. Moreover, this model raises legal, ethical, and patient safety issues because it uses patients as practice platforms (Stassen, Bonjer, Grimbergen, & Dankelman, 2005). Finally, work hour restrictions, the cost of operating room time, and surgical complications further challenge this longstanding dogma (Roberts, Bell, & Duffy, 2006; Buckley, Nugent, Ryan, & Neary, 2012). Consequently, simulators and virtual reality trainers have become among the most common means of training laparoscopic surgeons. These technologies have been identified as potential methods to reduce risks to both medical students and patients by allowing learning, practice, and testing of skills in a protected environment prior to real-world exposure (Aucar, Groch, Troxel, & Eubanks, 2005; Roberts, Bell, & Duffy, 2006; Buckley, Nugent, Ryan, & Neary, 2012).

The effectiveness of a virtual reality (VR) training system, though, is determined by the ability of the trainee to transfer the knowledge learned during training to a real-world task (Liu, Macchiarella, Blickensderfer, & Vincenzi, 2008). Building efficient VR training systems depends on designing effective interaction for these systems by ensuring their fidelity, i.e., the similarity between the knowledge taught in a simulator and that used in the real-world environment (Stoffregen, Bardy, Smart, & Pagulayan, 2003). What is currently lacking are guidelines or processes for achieving fidelity in surgical trainers. There is a strong belief that the development of effective VR trainers is solely an engineering challenge. In fact, much of the engineering approach is technology-driven and has focused on high (visual) environment fidelity and realism (Drews & Bakdash, 2013). However, the engineering approach has many limitations, including that complete realism remains far in the future (Gibson, 1971; Stappers, Gaver, & Overbeeke, 2003; Stoffregen, Bardy, Smart, & Pagulayan, 2003; Kim, Rattner, & Srinivasan, 2003) and that high-fidelity simulators are costly (Hopkins, 1974).

One approach to building higher-fidelity simulations that meet training objectives is a process well known within HCI: multidisciplinary iterative design. There is a growing interest in leveraging the principles of iterative design for medical systems and VR surgical trainers (Mégard, Gosselin, Bouchigny, Ferlay, & Taha, 2009; Forsslund, Sallnäs, & Palmerius, A User-Centered Designed FOSS Implementation of Bone Surgery Simulations, 2009; Johnson, Guediri, Kilkenny, & Clough, 2011; Chellali, Dumas, & Milleville-Pennel, Haptic communication to support biopsy procedures learning in virtual environments, 2012; Yang, Lee, W., Choi, Y., & You, 2012; Barkana Erol & Erol, 2013; von Zadow, Buron, Harms, Behringer, Sostmann, & Dachselt, 2013). The guidelines and best practices around iterative design, though, have primarily addressed systems other than VR, haptic, and 3D interaction environments. In particular, how to make the best use of various user groups throughout the different stages of design and development, and how those evaluation points affect various aspects of fidelity, have not been articulated.

In this paper, we describe our own experience developing the Virtual Basic Laparoscopic Surgical Trainer (VBLaST). VBLaST is a VR computer-based basic laparoscopic skills trainer meant to mimic an existing physical basic laparoscopic skills simulator without its resource limitations. It enables self-administration, savings on consumables, and automatic processing of performance through objective interpretation of results (Arikatla, et al., 2013). Aspects such as speed and efficiency of movements can be measured and displayed in real time to the trainees and the instructor as an objective score and used to assess learning of the targeted skills. The design objective of the VBLaST system is to obtain training performance not significantly different from that of a validated physical part-task laparoscopic skills trainer while overcoming that trainer's limitations. To achieve this goal, it was important to improve the fidelity of the system and to determine which characteristics of the virtual training environment matter most for VR simulations to be effective in training laparoscopic skills. Drawing on our experience with an iterative design and development process that capitalized on the felt experience of expert surgeons through a situated comparative method, we show how we determined the appropriate levels of fidelity and thus achieved a training outcome comparable to that of a physical training environment.

2. RELATED WORK

2.1. Types of laparoscopic surgery trainers and related simulators

There are currently two types of laparoscopic surgery simulators: full procedural trainers and part-task trainers.

2.1.1. Full procedural trainers

Full procedural trainers provide a realistic practice environment for conducting an entire surgical procedure. These include, for instance, live animal models and VR procedural simulators. While animal models offer the most realistic non-patient environment for laparoscopic training, cost and ethical issues are the main reasons they are not fully integrated into most surgical curricula (Roberts, Bell, & Duffy, 2006). VR trainers offer an alternative training environment and are currently considered the most promising tools for surgical simulation. These systems digitally recreate the procedures, the specific anatomy, and the environment of laparoscopic surgery, allowing trainees to practice all the skills necessary to perform a particular laparoscopic operation. Some examples of commercially available full procedural VR simulators include LAP-Mentor (Simbionix USA, Cleveland, OH) (Simbionix, 2016), LapVR (CAE Healthcare Inc., Sarasota, FL) (CAE Healthcare, 2016), and LapSim (Surgical Science, Göteborg, Sweden) (Surgical Science, 2016). These systems offer training modules for full laparoscopic procedures, such as appendectomy (vermiform appendix removal), cholecystectomy (gallbladder removal), or laparoscopic ventral hernia repair.

The potential advantage of these simulators is that a well-designed full procedural simulation should teach skills, anatomy, and the nuances of a complete surgical procedure in an environment where errors can be made without consequences. In addition, VR trainers offer the possibility of evaluating surgical performance based on objective measurements. Indeed, all movements and actions performed by the trainee can easily be recorded by the system, allowing immediate and objective feedback.

Despite these advantages, none of these VR systems is currently adopted as a standard for laparoscopic surgical training (Zevin, Aggarwal, & Grantcharov, 2014; Yiannakopoulou, Nikiteas, Perrea, & Tsigris, 2015). Several factors can explain this. First, these systems are extremely expensive compared to physical trainers, with the average cost of a VR trainer ranging from approximately $80,000 USD to $120,000 USD (Palter, 2011; Steigerwald S., 2013). This does not include maintenance fees, technical support, or the purchase of optional modules. Such costs inevitably limit the availability of these training systems to most hospitals and learning centers (McMains & Weitzel, 2008). In addition, a recent study has shown that even when these systems are available at teaching hospitals, they are underused because they are physically locked away, perhaps owing to their cost (Brennan, Loan, Hughes, Hennessey, & Partridge, 2014). The second issue is the lack of validity of the measurements used to assess surgical performance on these systems (Stefanidis & Heniford, 2009; Thijssen & Schijven, 2010; Våpenstad & Buzink, 2013; Stunt, Wulms, Kerkhoffs, Dankelman, van Dijk, & Tuijthof, 2014). This is perhaps due to the novelty of these systems and suggests that additional research is needed to validate these measurements. Finally, the visual and, in particular, the haptic feedback of these systems currently lack fidelity (Iwata, et al., 2011; Steigerwald S., 2013; Hennessey & Hewett, 2013; Sánchez-Margallo, Sánchez-Margallo, Oropesa, & Gómez, 2014; Zevin, Aggarwal, & Grantcharov, 2014). For instance, a study evaluating the haptic feedback of the Lap Mentor trainer showed that the presence of haptic feedback had no significant effect on the performance of novice trainees, suggesting that better haptic feedback is still needed on this system (Salkini, Doarn, Kiehl, Broderick, J.F., & Gaitonde, 2010).

All these issues contribute to a decrease in the acceptance of these systems as efficient training tools and slow down their incorporation into surgical training curricula (Reznick & MacRae, 2006; Steigerwald S., 2013; Shaharan & Neary, 2014).

2.1.2. Part-task trainers

Most currently existing trainers for basic laparoscopic technical skills are part-task trainers. In contrast to full procedural trainers, these systems are designed to simulate a specific surgical task (such as cutting tissue or suturing) and to improve the trainees’ psychomotor skills in this task (Forsslund, Sallnäs, & Lundin Palmerius, 2011). This is because a surgical procedure (e.g. gallbladder removal) is considered to be a combination of basic tasks (e.g. cutting, grasping, suturing) that can be learned separately and out of context, and then put to use in the operating room during a complete surgical procedure (Johnson E., 2007). Although these trainers cover only a small part of the surgical curriculum, they are of great value for training basic laparoscopic skills (Giles, 2010; Buckley, Nugent, Ryan, & Neary, 2012).

There are currently two types of part-task trainers for laparoscopic surgery: physical box-trainers and VR task trainers (Munz, Kumar, Moorthy, Bann, & Darzi, 2004).

Physical (also called video) box-trainers incorporate real laparoscopic instruments that provide realistic haptic feedback, enable training on inanimate synthetic models, and can be coupled with cadaveric material to provide realistic anatomy. They have already shown great value for training laparoscopic skills (Fried G. M., 2008). For instance, the Fundamentals of Laparoscopic Surgery (FLS) box-trainer is the only trainer that has been validated and adopted in North America as the standard for training and assessing the basic psychomotor skills needed in laparoscopic surgery (Palter, 2011; Skinner, Auner, Meadors, & Sebrechts, 2013; Valdivieso & Zorn, 2014; Pitzul, Grantcharov, & Okrainec, 2012). This box trainer is the technical component of the FLS curriculum and is based on the McGill Inanimate System for Training and Evaluation of Laparoscopic Skills (MISTELS) (Derossis, Fried, Abrahamowicz, Sigman, Barkun, & Meakins, 1998). The five standard tasks of the FLS curriculum are peg transfer, pattern cutting, ligating loop, and intracorporeal and extracorporeal suturing (Fried, Feldman, Vassiliou, Fraser, & Stanbridge, 2004). The peg transfer task is described in detail in section 3.2. The pattern cutting task requires cutting a precise circular pattern from suspended gauze along a premarked template. Its learning objective is to develop skill in accurate cutting and in applying appropriate traction and countertraction while working within the constraints of fixed trocar positions. The ligating loop task teaches trainees to place an endoloop correctly and accurately around a tubular structure (a foam appendage). The endoloop is a convenient tool in laparoscopic surgery for securely controlling a hollow tubular structure, such as a blood vessel. Finally, the last two tasks teach the trainee to perform suturing using either an intracorporeal tie (Task 4) or an extracorporeal tying technique with the help of a knot pusher (Task 5). Indeed, placing a stitch with precision and tying a knot securely are essential skills for a laparoscopic surgeon.

The FLS curriculum, including the five tasks above, underwent a rigorous validation process demonstrating its reliability (Vassiliou, Ghitulescu, Leffondré, Sigman, & Fried, 2006), construct validity (McCluney, et al., 2007), improved technical performance in the operating room as measured using a validated assessment tool (Sroka, Feldman, Vassiliou, Kaneva, Fayez, & G.M., 2010), and retention of learned skills (Edelman, Mattos, & Bouwman, 2010). Proficiency criteria were established (Fraser, Klassen, Feldman, Ghitulescu, Stanbridge, & Fried, 2003), and the FLS curriculum was endorsed by the Society of American Gastrointestinal and Endoscopic Surgeons (SAGES) and the American College of Surgeons (ACS). Currently, completion of the FLS program is an eligibility requirement to sit for the American Board of Surgery (ABS) qualifying examination in the United States (Zevin, Aggarwal, & Grantcharov, 2014), and SAGES recommends that all senior residents demonstrate laparoscopic skill competency by taking this exam. In addition to their demonstrated validity for training basic laparoscopic skills, the other main advantage of physical part-task trainers is their relatively low acquisition cost (approximately $2,000 USD for the FLS box trainer) (Steigerwald S., 2013). This makes them the most widely available tools in residency training programs (Steigerwald S., 2013). However, these trainers are resource-intensive in terms of consumables, which increases the annual training cost. A recent study has shown that the annual cost of training 10 residents using the FLS box trainer can reach $12,000 USD (Orzech, Palter, Reznick, Aggarwal, & Grantcharov, 2012). More importantly, physical box trainers are time-consuming to administer and process, and the interpretation of their results is subjective (Botden & Jakimowicz, 2009; Chellali, et al., 2014). In fact, a teacher/evaluator needs to be present to ensure the tasks are performed properly, to give feedback to the trainees, and to evaluate them.

VR part-task trainers, for their part, also replicate simple laparoscopic tasks such as cutting, grasping, and suturing, enabling the operator to learn how to perform basic skills. They are based on three-dimensional computer-generated images with which the user interacts through a physical interface. Previous research has shown that virtual task trainers can improve intraoperative skills in minimally invasive surgery to an extent similar to physical task trainers (Seymour, Gallagher, Roman, O’Brien, Andersen, & Satava, 2002; Chellali, Dumas, & Milleville-Pennel, 2012; Ullrich & Kuhlen, 2012; Yiannakopoulou, Nikiteas, Perrea, & Tsigris, 2015).

The following commercially available VR trainers incorporate basic laparoscopic tasks comparable to the FLS tasks: LAP-Mentor, LapVR, LapSim, and Lap-X (Medical-X, Rotterdam, the Netherlands) (Medical-X, 2016).

LAP Mentor consists of a custom hardware interface with haptic feedback. It includes a library of modules designed for training basic laparoscopic skills such as camera manipulation, hand-eye coordination, clipping, grasping, cutting, and electrocautery. The system also provides tasks similar to those of the FLS box, such as the peg transfer, pattern cutting, and ligating loop tasks. Preliminary validations of these three tasks have been conducted (Pitzul, Grantcharov, & Okrainec, 2012), and the results showed moderate concurrent validity for the three tasks, with significant differences in completion time between the FLS box-trainer and the LAP Mentor. In addition, the three tasks do not use the same scoring metrics as the FLS. However, another study comparing the two systems found no significant difference in training performance between them (Beyer, Troyer, Mancini, Bladou, Berdah, & Karsenty, 2011). A more recent study comparing the LAP Mentor to the FLS trainer showed that performance on the two systems is correlated but not interchangeable (Brinkman, Tjiam, & Buzink, 2013). The trainees preferred the FLS box trainer over the VR system, and the authors concluded that LAP Mentor training alone might not suffice to pass an assessment on the validated FLS box trainer (Brinkman, Tjiam, & Buzink, 2013).

LapVR consists of laparoscopic instruments connected to a desktop computer. The system also provides a set of basic skills tasks comparable to the FLS tasks (peg transfer, cutting, and clip application). A recent comparative study has shown that the scoring system of the LapVR tasks lacks validity compared with the FLS trainer (Steigerwald, Park, Hardy, Gillman, & Vergis, 2015). Because of this lack of validity and the high cost associated with LapVR, the study concluded that the FLS trainer is currently a more cost-effective and better tool for training and assessing basic laparoscopic skills.

LapSim is another training system that features training for basic laparoscopic skills. The system has modules mimicking different tasks such as camera navigation, instrument navigation, coordination, grasping, cutting, clip applying, and more advanced modules such as suturing and “running the small bowel”. Time, instrument path length, and procedure-specific errors are measured. In a recent study, performance on this simulator was not found to correlate with FLS scores (Hennessey & Hewett, 2014). Some comparative studies and reviews have also shown the benefit of the FLS trainer compared to LapSim for training basic laparoscopic skills (Fairhurst, Strickland, & Maddern, 2011; Tan, et al., 2012; Hennessey & Hewett, 2014), while another study concluded that both systems are efficient for training laparoscopic skills, with an advantage for the FLS box trainer in terms of cost-effectiveness (Orzech, Palter, Reznick, Aggarwal, & Grantcharov, 2012). Indeed, the annual cost of VR training for 5 residents was estimated at $80,000 USD, compared with $12,000 USD for FLS box training (Orzech, Palter, Reznick, Aggarwal, & Grantcharov, 2012).

Lap-X also offers FLS-like tasks such as peg transfer, pattern cutting, and suturing. Its design is centered on portability, and it therefore features a light user interface with haptic feedback. As a result, the system is less expensive than the previous VR trainers. However, to the best of our knowledge, Lap-X has not been clinically validated, and only a preliminary evaluation study has been conducted recently (Kawaguchi, Egi, Hattori, Sawada, Suzuki, & Ohdan, 2014). No comparative study with other existing systems, such as the FLS trainer, has been conducted to show its concurrent validity. Therefore, more research is needed to validate this system.

In summary, the brief review above shows that both physical box trainers and VR simulators can be efficient for training and assessing basic laparoscopic skills. However, the body of evidence supporting this is currently substantially larger for physical box trainers than for VR simulators. This is confirmed by a recent systematic review showing an advantage for box trainers over VR trainers for training and assessing basic laparoscopic skills with regard to learners’ satisfaction and task time (Zendejas, Brydges, Hamstra, & Cook, 2013). In addition, box trainers are less expensive than VR systems. As a consequence, they are the most widely available tools in laparoscopic surgery training programs. For instance, the FLS system, the only ACS- and ABS-approved trainer for training and assessing basic laparoscopic skills in North America, is a box trainer. However, skills evaluation on these systems is subjective and requires the presence of a trained proctor. VR technologies can overcome this limitation. Yet current VR laparoscopic trainers (both part-task and full procedural trainers) suffer from issues such as high acquisition cost, the lack of validity of their performance measurements, and the lack of visual and haptic fidelity. This paper focuses on this last issue.

2.2. Impact of simulator fidelity

The effectiveness of a training system is usually determined by the ability of the trainee to transfer the knowledge learned during training to a real-world task (Liu, Macchiarella, Blickensderfer, & Vincenzi, 2008). This is particularly true of virtual training environments that replicate real-world environments. Building efficient VR training systems therefore depends on designing effective interaction for these systems. Complex and inappropriate user interfaces make an interactive system likely to be underused or misused, with frustrated trainees sticking to their current training methods or failing to acquire the targeted skills (Maguire, 2001). To design a system that overcomes some of the issues associated with VR surgical trainers discussed above, it is important to ensure its fidelity. Simulator fidelity can be defined as the similarity between the knowledge taught in a simulator and that used in the real-world environment (Stoffregen, Bardy, Smart, & Pagulayan, 2003). From the fidelity perspective, part-task trainers can be considered low-fidelity simulators and full procedural trainers high-fidelity simulators. While it may seem intuitive that higher fidelity is necessary to increase training effectiveness (Hays & Singer, 1989; Hamblin, 2005), other researchers have shown that low-fidelity trainers can be sufficient for an efficient and effective training experience (Kim, Rattner, & Srinivasan, 2003; McMains & Weitzel, 2008; Moroney & Moroney, 1999). Our review of existing laparoscopic simulators above supports this view: low-fidelity box trainers are sufficient, and sometimes more suitable than high-fidelity full procedural trainers, for training basic laparoscopic skills.

However, it is important to distinguish between two aspects of simulator fidelity: interface fidelity and environment fidelity (Waller & Hunt, 1998). Interface fidelity is defined as the degree to which the input and output interaction devices used in the virtual training environment function similarly to the way the trainee would interact with the real world (Waller & Hunt, 1998). This concept is similar to the concept of interaction fidelity defined by Ragan et al. as the objective degree of exactness with which real-world interactions can be reproduced (Ragan, Bowman, Kopper, Stinson, Scerbo, & McMahan, 2015). Interface fidelity plays a central role in the transfer of knowledge from a VR trainer to the real world (Drews & Bakdash, 2013). It is affected by the ease of interaction and the level of user control of the system (Hamblin, 2005). For instance, efficient interaction requires that any action by the operator in the virtual world generate an instantaneous multimodal response from the virtual environment.

Environment fidelity, on the other hand, is related to the realism of the virtual environment (Hamblin, 2005). It is defined as the degree of mapping between the real-world environment and the training environment. It can be linked to the concept of display fidelity defined by Ragan et al. as the objective degree of exactness with which real-world sensory stimuli are reproduced (Ragan, Bowman, Kopper, Stinson, Scerbo, & McMahan, 2015). Environment fidelity depends on a subjective judgment of similarity between the real and simulated worlds rather than an objectively quantifiable correspondence between values of variables (Waller & Hunt, 1998). It is affected by the quality of the system’s visual, auditory, and haptic feedback. However, according to Dieckmann (2009), the realism of a virtual simulator must be a means of serving the specific learning objectives of the trainer rather than the goal per se of designing a simulation system. In other words, environment fidelity should be used to enhance interface fidelity. For instance, learning how to handle tissue in laparoscopic surgery requires the knowledge and skills to apply the appropriate amount of force for pulling or cutting. Previous studies have shown that virtual simulators can train these skills efficiently (Kim, Rattner, & Srinivasan, 2003; Al-Kadi, Donnon, Oddone Paolucci, Mitchell, Debru, & Church, 2012). Moreover, adding haptic feedback and animation to the surgical surface further improves the acquisition of these skills because it allows trainees to correctly perceive the elasticity and other properties of the tissue (Basdogan, De, Kim, Muniyandi, Kim, & Srinivasan, 2004).

However, guidelines or processes for achieving interface and environment fidelity in surgical trainers have not been established. There is a strong belief that the development of effective VR trainers is solely an engineering challenge. In fact, much of the engineering approach is technology-driven and has focused on high (visual) environment fidelity and realism (Drews & Bakdash, 2013). For instance, a system with more realistic visual graphics is usually preferred over a system with less realistic graphics, even when the latter leads to better interaction and training (Smallman & St. John, 2005). However, the engineering approach has many limitations. First, despite advances in computer graphics technology and processing power, complete physical realism in simulation will not be accomplished in the near future (Gibson, 1971; Stappers, Gaver, & Overbeeke, 2003; Stoffregen, Bardy, Smart, & Pagulayan, 2003; Kim, Rattner, & Srinivasan, 2003). Second, high-environment-fidelity simulators are usually much more expensive than low-environment-fidelity simulators (Hopkins, 1974). As discussed above, this is the case for most available VR laparoscopic trainers. Finally, the relationship between high environment fidelity and transfer of knowledge to the real world has not been fully established (Drews & Bakdash, 2013). Again, this is confirmed by recent reviews of VR laparoscopic simulators (Våpenstad & Buzink, 2013; Yiannakopoulou, Nikiteas, Perrea, & Tsigris, 2015). Moreover, if environment fidelity in a simulation does not match the interaction aspects of performing the actual task, high environment fidelity may even be detrimental to training (Kozolowski & DeShon, 2004).

Cost-effective low-fidelity surgical trainers can be efficient for surgical training (Kim, Rattner, & Srinivasan, 2003; McMains & Weitzel, 2008). However, the levels of interface and environment fidelity required, and the characteristics of the virtual training environment that matter most for VR simulations to be effective in training laparoscopic skills, have not been defined. Hence, designing training systems requires defining the learning objectives of the system, the most important parts of the surgical task to simulate, and the extent to which they can be simulated successfully (Forsslund, Sallnäs, & Palmerius, 2009). One way to meet these requirements is to use multidisciplinary iterative design approaches. There is a growing interest in leveraging the principles of iterative design for medical systems and VR surgical trainers (Mégard, Gosselin, Bouchigny, Ferlay, & Taha, 2009; Forsslund, Sallnäs, & Palmerius, A User-Centered Designed FOSS Implementation of Bone Surgery Simulations, 2009; Johnson, Guediri, Kilkenny, & Clough, 2011; Chellali, Dumas, & Milleville-Pennel, Haptic communication to support biopsy procedures learning in virtual environments, 2012; Yang, Lee, W., Choi, Y., & You, 2012; Barkana Erol & Erol, 2013; von Zadow, Buron, Harms, Behringer, Sostmann, & Dachselt, 2013). However, none of these studies has addressed how to leverage the design process to achieve interface and environment fidelity.

2.3. Objectives

In our development effort, human factors engineers, task designers, software developers, and physicians worked together to design and build the VBLaST system. Despite a concerted effort at the outset to gather a definitive set of requirements, user performance on our first prototype was lower than on the physical box trainer. Our previous studies (Arikatla, et al., 2013; Chellali, et al., 2014) showed that the system had several limitations and that improvements were necessary. However, the exact changes required to improve the system were not identified.

In the current paper, our objective was to identify the issues that led to the decrease in trainees’ performance and learning on our system. Through a focus group in which expert laparoscopic surgeons evaluated the VBLaST system side by side with the physical skills trainer, we began to uncover the intangible qualities that contribute to interface and environment fidelity in a virtual skills trainer. The findings of this comparative situated evaluation were then incorporated into a second design iteration of our system, and the evaluation of the resulting system demonstrated its validity against the physical skills trainer. We discuss these findings in light of the need for an iterative design process that elicits not only the cognitive and functional requirements from the trainees’ standpoint, but also the reflective ‘felt experience’ of experienced surgeons, in order to capture the intangible properties that enhance the simulator’s functional validity.

In the following, we describe two experiments conducted to evaluate two successive iterations of the VBLaST design. In each experiment, the VBLaST prototype was compared to the FLS box-trainer, the accepted standard in the surgical community for training and assessing basic laparoscopic skills. The peg transfer task, the first of the five standard psychomotor tasks of the FLS program, was used as the test case. All studies conducted within the scope of this work were approved by the local Institutional Review Boards (IRBs).

3. FIRST DESIGN ITERATION

The first iteration of our design process consisted of observations of the use of the physical task trainer, a task analysis based on those observations, the design and implementation of a prototype, and an evaluation session. During the observations, residents were observed while training on the peg transfer task with the physical skills trainer. The goal was to conduct a task analysis identifying the learning objectives, the subtasks and actions necessary to complete the peg transfer task, the instruments used to perform it, and the constraints on its performance.

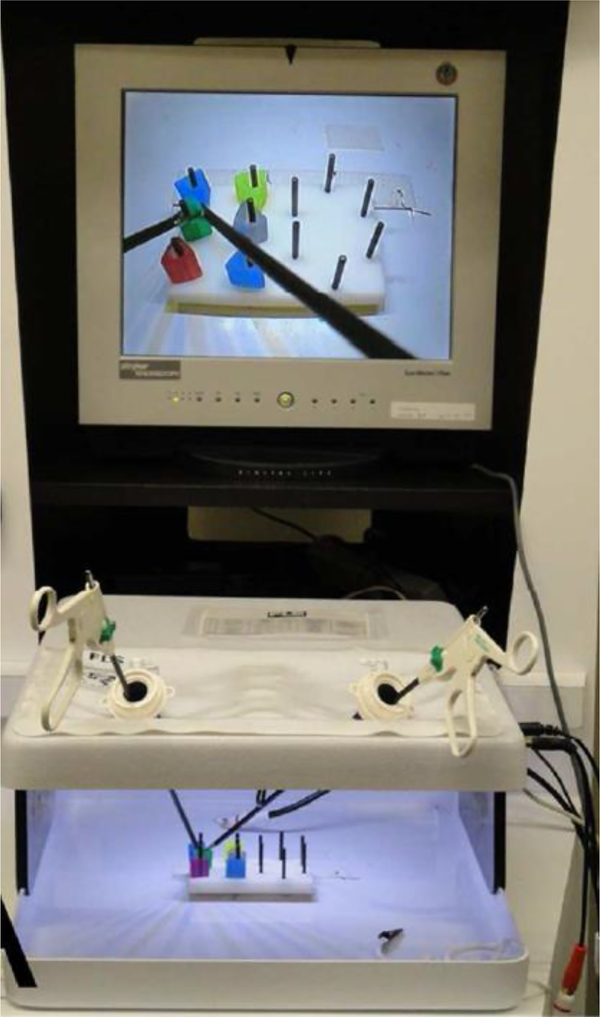

3.1. Physical task trainer

The FLS box-trainer (Figure 1) includes a set of accessories used to simulate specific basic laparoscopic tasks, a light source, and a built-in camera that captures the movements of the objects and tools while a trainee performs the surgical tasks. The video is displayed on a monitor for the trainee to watch. For the peg transfer task, a peg board with 12 pegs and six rings is placed in the center of the box-trainer. At the start of each trial, the six rings are on the left side of the peg board. Two laparoscopic graspers are used to manipulate the rings.

Figure 1: The FLS box-trainer

3.2. Task to be simulated

The task analysis was validated by two certified SAGES FLS proctors. The learning objectives of this task are to develop bimanual dexterity, hand-eye coordination, speed, and precision. Learners are trained to perform the task as quickly as possible with as few errors as possible. A hierarchical task analysis (HTA) at the task level, based on video analysis, was conducted following the method used in previous studies (Cao C. G., MacKenzie, Ibbotson, Turner, Blair, & Nagy, 1999; Chellali, et al., 2014). The HTA allowed us to decompose the FLS peg transfer task into the following subtasks: using two laparoscopic graspers (one in each hand), operators pick up the rings one at a time with the left hand, transfer them to the right hand, and place them on the opposite side of the peg board. Once all the rings have been transferred, the process is repeated to bring the rings back to the original side of the peg board, which completes one trial. The instrument tool tip can be rotated using a rotation wheel to facilitate manipulation of the rings. A total of six rings of different colors must be transferred back and forth in each trial; the color and order of transfer do not matter. If a ring is dropped on the board, the operator must pick it up with the same hand and continue the transfer. However, if a ring is dropped outside the board, the operator must leave it and continue the task. Timing starts when the first ring is grasped and ends when the last ring is transferred.

The task analysis suggests that achieving environment fidelity requires replicating the physical workspace by simulating the surgical tools, the rings, the pegs, and their interactions in the virtual world. Moreover, the analysis shows that haptic interactions and physical responses of the virtual objects are essential for the user to manipulate the virtual objects and interact efficiently with the system. These findings were the foundation for the design of the early VBLaST prototype (Arikatla, et al., 2013), described in the following section. The first iteration concluded with an experimental evaluation session in which the early VBLaST prototype was compared to the FLS box-trainer.

3.3. VBLaST System Design

The early VBLaST prototype (Figure 2) consisted of software simulating the FLS pegs and rings, and a physical user interface connecting two laparoscopic grasper handles (the same as those used with the FLS box-trainer) to two PHANTOM Omni haptic devices (Geomagic Inc., Boston, MA, USA). These haptic devices provide 6-degree-of-freedom (DOF) positional sensing, 3-DOF force feedback, and a removable stylus for end-user customization. Their workspace measures 160 mm (width) × 120 mm (height) × 70 mm (depth). These commercially available devices have a relatively low cost (around USD 1,800 each).

Figure 2: The early VBLaST prototype (VBLaST 1)

Using this interface, users hold the instrument handles and move them in real space while watching their actions in the virtual space on the computer screen. This replicates the setup of the FLS box-trainer, in which users move the instruments while watching, on a monitor, the movements of the tools and objects captured by a built-in camera. It is also similar to what surgeons experience during laparoscopic surgery and thus ensures the ecological validity of the study (Breedveld & Wentink, 2001). The haptic devices simulate and render contact forces with the virtual objects. Finally, two linear slider potentiometers (one mounted on each handle) allow the user to rotate the virtual tool tip using the rotation wheels of the handles (Figure 2).

3.4. First experimental evaluation session

The goal of the first experiment was to verify the design decisions for the early VBLaST version and to obtain input for modifications in future iterations. Twenty general surgery residents and attendings (N = 20) took part in the study (25–55 years old; 13 male, 7 female; 2 left-handed). Five postgraduate year 1 (PGY1) general surgery residents, five postgraduate year 3 (PGY3) general surgery residents, five postgraduate year 4 (PGY4) general surgery residents, and five fellows and attendings from different hospitals in the Greater Boston Area were recruited and categorized into four groups of five according to their expertise level, as shown in Table 1.

Table 1: Subjects’ groups in the first experiment

| Expertise level | PGY1 | PGY3 | PGY4 | Attendings |
|---|---|---|---|---|
| Group name | Group1 | Group2 | Group3 | Group4 |
| Number of subjects | 5 | 5 | 5 | 5 |

3.4.1. Apparatus

The FLS box-trainer and the early VBLaST prototype were used to perform the peg transfer task in this experiment. A digital video capture device (AVerMedia, Milpitas, CA, USA) was used to record subjects’ performance inside the task space of the FLS box-trainer. The videos were used to determine the task completion time and errors (the number of rings dropped outside the board) for data analysis. Performance measures for the VBLaST were automatically recorded by the system in a log file.

3.4.2. Evaluation procedure

The standard FLS peg transfer task was used in this experiment. Before the experimental session, subjects were asked to complete the consent form and a questionnaire on their demographics and their previous experience with laparoscopic surgery and laparoscopic simulators. Subjects were then shown an instructional video describing the peg transfer task, accompanied by a verbal explanation. They were given one practice trial on each system to become familiar with the procedure and the two systems. Each participant then performed ten trials of the standard FLS peg transfer task on both the FLS box-trainer and the VR trainer. The order was counterbalanced: half of the participants performed the task on the FLS box-trainer first, while the other half performed it on the virtual trainer first. Subjects were randomly assigned to one of the two orders. At the end of the session, they were asked to fill in an evaluation questionnaire and to comment on their experience with the system and on which design aspects of the VBLaST system could be changed.

3.4.3. Evaluation dependent measures

For the FLS box-trainer, the dependent measure (obtained from the captured videos) was a total raw score (ranging from 0 to 300) calculated using the standard metric of the FLS training program (Fraser, Klassen, Feldman, Ghitulescu, Stanbridge, & Fried, 2003), obtained from the SAGES FLS committee. This is the current method for assessing proficiency in laparoscopic technical skills, adopted by the Society of American Gastrointestinal and Endoscopic Surgeons (SAGES), the American College of Surgeons (ACS), and the American Board of Surgery (ABS) for general surgeons (Fried G. M., 2008).

The raw score is based on both accuracy and speed (Derossis, et al., 1998). The timing score is calculated by subtracting the task completion time from a preset cutoff time of 300 s: timing score = 300 s − completion time (s). This rewards faster performance with higher scores. If the completion time exceeds the cutoff time, a timing score of zero is given to avoid negative scores. Accuracy is scored objectively through a penalty score: the percentage of rings dropped outside the field of view. Finally, a total score is calculated for each trial by subtracting the penalty from the timing score: raw score = timing score − penalty score. Thus, the faster and more accurately a task is completed, the higher the score.
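The scoring rule above can be sketched in Python. This is a minimal illustration, not the official FLS scoring software: the function name is hypothetical, the penalty is assumed to be the percentage of the six rings dropped outside the field of view, and the final floor at zero is our assumption to keep the total within the stated 0 to 300 range.

```python
CUTOFF_SEC = 300.0  # preset cutoff time of the FLS peg transfer scoring
N_RINGS = 6         # rings transferred in each trial


def fls_peg_transfer_raw_score(completion_time_sec: float, rings_dropped_outside: int) -> float:
    """Raw score = timing score - penalty score (illustrative sketch)."""
    # Timing score: cutoff minus completion time, floored at zero to avoid negative scores.
    timing_score = max(0.0, CUTOFF_SEC - completion_time_sec)
    # Penalty score (assumption): percentage of the six rings dropped outside the field of view.
    penalty_score = 100.0 * rings_dropped_outside / N_RINGS
    # Assumption: floor the total at zero so it stays within the 0-300 range.
    return max(0.0, timing_score - penalty_score)


print(fls_peg_transfer_raw_score(60.0, 0))            # 240.0
print(round(fls_peg_transfer_raw_score(60.0, 1), 2))  # 223.33
```

A 60 s trial with no drops therefore scores 240, and each dropped ring costs about 16.7 points under the assumed percentage definition.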

As in previous studies (Arikatla, et al., 2013; Chellali, et al., 2014), these raw scores were divided by a normalization factor calculated from the best expert FLS score obtained in the FLS condition across the two experiments. The normalized FLS scores ranged from 0 to 100. The same FLS normalization factor was used for experiments 1 and 2 so that the scores are comparable.

For the VBLaST trainer, the raw scores were automatically calculated by the system using the same formula. These raw scores were then divided by a normalization factor calculated using the best expert score obtained from the VBLaST condition in the two experiments. The VBLaST normalized scores also ranged from 0 to 100. The same normalization factor was used for both the early and modified VBLaST prototypes.
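A minimal sketch of the normalization, assuming the normalization factor is the best expert raw score divided by 100, so that the best expert maps to 100. The function name and the example best-expert scores are hypothetical (not values from the study); a separate factor is kept for each trainer, as described above.

```python
def normalize_score(raw_score: float, best_expert_raw: float) -> float:
    """Map a raw score onto a 0-100 scale anchored on the best expert score (sketch)."""
    if best_expert_raw <= 0:
        raise ValueError("best expert raw score must be positive")
    factor = best_expert_raw / 100.0  # assumed definition of the normalization factor
    return raw_score / factor


# Hypothetical best expert raw scores, one factor per trainer.
BEST_EXPERT_RAW = {"FLS": 250.0, "VBLaST": 200.0}

print(normalize_score(200.0, BEST_EXPERT_RAW["FLS"]))     # 80.0
print(normalize_score(200.0, BEST_EXPERT_RAW["VBLaST"]))  # 100.0
```

Keeping one factor per trainer, rather than a shared factor, means each system's scores are expressed relative to expert performance on that same system.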

Finally, subjects rated different features of the VBLaST system relative to the FLS trainer in a post-experiment questionnaire using a 5-point Likert scale, ranging from 1 (not realistic/not useful) to 5 (very realistic/useful).

3.4.4. Experimental design and data analysis

Participants’ performance on each simulator was compared using a mixed-design (split-plot) ANOVA with a 2 × 4 within-between subjects design. The within-subjects factor was Trainer, with two levels (FLS vs. VBLaST1). The between-subjects factor was Experience group, with four levels (1, 2, 3, and 4). Pearson’s correlation test was used to assess the correlation between the FLS and VBLaST scores. Finally, descriptive statistics were used to present the results of the post-experiment questionnaire. All analyses were performed using SPSS v.21.0 (IBM Corp., Armonk, NY, USA).

3.5. Results of the first system’s evaluation

3.5.1. Effects of trainer and expertise level on performance

All assumptions of the split-plot ANOVA (normality of the data, equality of covariance matrices, and homogeneity of variances) were checked using the appropriate statistical tests (the Shapiro-Wilk test, Box’s M test, and Levene’s test of equality of variances, respectively). All assumptions were met.

The ANOVA shows a significant main effect of Trainer (F(1, 16) = 51.24, p < 0.001, ηp² = 0.76) and a significant main effect of Experience group (F(3, 16) = 5.00, p = 0.012, ηp² = 0.48) on the performance scores (Figure 3). There is no significant interaction between the two factors (F(3, 16) = 0.48, p = 0.70). These results indicate that subjects’ performance on the FLS box-trainer was significantly higher than on the VBLaST1 simulator.
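As a quick consistency check, partial eta squared can be recovered from each reported F ratio and its degrees of freedom, since ηp² = SS_effect / (SS_effect + SS_error) = F·df_effect / (F·df_effect + df_error). The sketch below (function name ours) reproduces the two effect sizes reported above.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F ratio: F*df_effect / (F*df_effect + df_error)."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


print(round(partial_eta_squared(51.24, 1, 16), 2))  # Trainer: 0.76
print(round(partial_eta_squared(5.00, 3, 16), 2))   # Experience group: 0.48
```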

Figure 3: Comparing the early VBLaST prototype and the FLS box-trainer (error bars represent the standard error)

Moreover, post-hoc tests with Bonferroni correction show that group 1 (mean = 72.04 ± 15.9 for FLS and 36.05 ± 12.57 for VBLaST1) performed significantly lower than group 3 (mean = 93.27 ± 8.06 for FLS and 70.56 ± 18.31 for VBLaST1) and group 4 (mean = 94.76 ± 7.83 for FLS and 63.05 ± 13.97 for VBLaST1) at the .05 level of significance. No other significant differences were observed between groups (Figure 3).

Finally, Pearson’s correlation test shows that the mean FLS and VBLaST1 scores were moderately correlated (r(18) = 0.60, p = 0.005).
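The significance of a Pearson correlation is assessed with a t statistic on n − 2 degrees of freedom. Plugging in the reported values (r = 0.60, n = 20) gives a worked check:

$$
t = \frac{r\sqrt{n-2}}{\sqrt{1-r^{2}}} = \frac{0.60\sqrt{18}}{\sqrt{1-0.36}} \approx \frac{2.546}{0.8} \approx 3.18, \qquad df = n - 2 = 18
$$

With 18 degrees of freedom, t ≈ 3.18 corresponds to a two-tailed p of roughly 0.005, in line with the reported value.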

3.5.2. Subjective evaluation

The results of the subjective evaluation are presented in Table 2. Ratings were relatively low, particularly for the realism of instrument handling, the quality of the haptic feedback, and the trustworthiness of the system in quantifying laparoscopic performance compared to the FLS trainer. The overall realism of the simulation and the usefulness of the system for learning the different laparoscopic skills (hand-eye coordination, bimanual dexterity, and speed and accuracy) received average ratings. Finally, the realism of the virtual objects and the usefulness of the haptic feedback were rated high.

Table 2: Subjective rating of the early VBLaST prototype relative to the FLS box-trainer

| Question (1 = not realistic/not useful, 5 = very realistic/useful) | Mean rating | SD |
|---|---|---|
| 1. Realism of the virtual objects (rings, pegs, etc.) | 3.65 | 1.18 |
| 2. Realism of instrument handling compared to FLS | 2.80 | 0.95 |
| 3. Overall realism compared to FLS | 3.05 | 0.82 |
| 4. Quality of haptic feedback | 2.80 | 1.15 |
| 5. Usefulness of haptic feedback | 3.85 | 1.38 |
| 6. Usefulness for learning hand-eye coordination skills compared to FLS | 3.25 | 1.06 |
| 7. Usefulness for learning bimanual dexterity skills compared to FLS | 3.20 | 1.05 |
| 8. Overall usefulness for learning laparoscopic skills compared to FLS | 2.95 | 0.94 |
| 9. Trustworthiness in quantifying laparoscopic performance | 2.75 | 1.20 |

SD = standard deviation.

4. EXPERT FOCUS GROUP REFLECTION AND FEEDBACK

The objective of the first study was to evaluate the early prototype of our system, assess its validity, and identify its problems. The results showed that subjects’ performance on the early VBLaST prototype was significantly lower than on the FLS box-trainer. Moreover, although the VBLaST and FLS scores are significantly correlated, the correlation is only moderate (r = 0.60). This suggested that the system required improvements to meet users’ needs in terms of interface and environment fidelity. The analysis of the subjective evaluation gave us some indications of what should be improved.

After this first evaluation session, we held a focus group session with expert surgeons (n = 4), software developers, and designers. The aim was to analyze the various dimensions of the early VBLaST prototype, identify the issues that may have lowered users’ performance on this early version, and attempt to correct them. As noted earlier from the literature, fidelity can significantly affect users’ performance in a simulation environment. Because the same tasks could be performed on both systems, the performance gap was not due to unmet manual or cognitive requirements. We therefore turned our attention to the felt experience of using the system.

To that end, the expert surgeons were asked to test the two systems (the physical and the virtual trainer) in succession and to describe the differences between them. Feedback during this comparative situated evaluation was provided verbally, and the session was videotaped. Video analysis, combined with the findings of our earlier preliminary studies (Arikatla, et al., 2013; Chellali, et al., 2014), the questionnaire results, and the comments provided by subjects during the first experiment, led us to identify several items requiring improvement. In the following, we describe the main environment and interface fidelity issues that were identified and the design decisions made to overcome them during the second design iteration.

4.1. Incorrect mapping of the workspace

The first identified issue relates to the size and mapping of the workspace. Haptic devices are usually used as position-control devices, in which the displacement of the end-effector (the device stylus) is directly correlated with the displacement of the virtual tool displayed on the screen (Conti & Khatib, 2005). This correlation need not be one-to-one, since the tool position may be scaled by a constant factor relative to the device position. For example, with a scale factor of ten, moving the end-effector one centimeter in the device workspace moves the virtual tool ten centimeters in the virtual space. This can be useful in applications where the device workspace is smaller than the virtual space, since it allows the user to reach objects in all areas of the virtual space. However, it may not be appropriate for learning surgical motor skills, where fine and accurate tool positioning is essential. For such tasks, a large scaling of device movement to virtual tool movement makes the task difficult to perform and may lead to learning the wrong motor skills. Rather, the system needs to simulate the real world faithfully to ensure the correct transfer of skills such as accuracy and speed of movement. In McMahan’s Framework for Interaction Fidelity Analysis (FIFA) (McMahan, 2011), this is referred to as transfer function symmetry, under the concept of control symmetry. McMahan argues that a one-to-one position-to-position mapping between the virtual and real worlds yields a higher level of transfer function symmetry, which in turn increases interaction fidelity (McMahan, 2011).
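The difference between a scaled and a one-to-one mapping can be illustrated with a minimal sketch of a constant-gain, position-to-position transfer function. Units are assumed to be centimetres, and the function name and origins are hypothetical, not taken from the VBLaST code base.

```python
import math


def device_to_virtual(device_pos, device_origin, virtual_origin, scale=1.0):
    """Position-to-position mapping: virtual = virtual_origin + scale * (device - device_origin)."""
    return tuple(v0 + scale * (p - d0) for p, d0, v0 in zip(device_pos, device_origin, virtual_origin))


origin = (0.0, 0.0, 0.0)
start, end = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)  # the stylus moves 1 cm along x

for scale in (10.0, 1.0):
    moved = math.dist(device_to_virtual(start, origin, origin, scale),
                      device_to_virtual(end, origin, origin, scale))
    print(f"scale {scale}: virtual tool moves {moved} cm")
```

With `scale=10.0` a 1 cm hand movement becomes a 10 cm tool movement; with `scale=1.0` the mapping is one-to-one, which is the higher transfer-function-symmetry case described above.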

After testing our system, the expert surgeons commented that although the laparoscopic tool handles connected to the haptic devices were similar in size to the physical tools in the FLS box-trainer, the combination of the virtual tool tips and the physical handles resulted in tools longer than those used in the FLS box-trainer. Users therefore had to move the tools deeper into the virtual ‘box’ to reach and manipulate the virtual rings. Moreover, the experts felt that the virtual space was larger than the FLS trainer workspace, requiring much larger tool movements to manipulate the rings.

The software developers subsequently confirmed that the virtual tools and space in this prototype differed in size from the physical tools and workspace. These interface fidelity issues increased the distance traveled by the tools, which would be expected to increase task completion time and reduce movement accuracy. They also affected bimanual dexterity, speed, and precision, which were identified as important learning objectives of our system.

This led to the design decision, for the second iteration, to reduce the size of the virtual space so that it exactly matches the size of the physical workspace. Moreover, it was decided to use shorter tool handles on the haptic devices so that, combined with the virtual tool tips, they match the exact length of the actual tools. To that end, the FLS box and the laparoscopic tools were measured so that the virtual environment could be matched to the real-world environment.
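The two corrections can be expressed as simple calibration checks. The sketch below is illustrative only: the function names and all dimensions are hypothetical placeholders, not the measurements taken on the FLS box or the actual VBLaST tools, and the virtual scene is assumed to be modelled in millimetres. A ratio above 1 on an axis means the virtual space is larger than the physical box along that axis, which is the mismatch the experts reported.

```python
def required_handle_length_mm(real_tool_length_mm: float, virtual_tip_length_mm: float) -> float:
    """Physical handle length so that handle + rendered virtual tip equals the real instrument."""
    length = real_tool_length_mm - virtual_tip_length_mm
    if length <= 0:
        raise ValueError("virtual tip must be shorter than the real instrument")
    return length


def workspace_ratios(virtual_box_mm, physical_box_mm):
    """Per-axis ratio of virtual to physical workspace size; 1.0 means an exact match."""
    return tuple(v / p for v, p in zip(virtual_box_mm, physical_box_mm))


# Hypothetical dimensions (width, height, depth) in millimetres.
physical_box = (200.0, 150.0, 100.0)
virtual_box_v1 = (300.0, 225.0, 150.0)  # oversized virtual space
virtual_box_v2 = physical_box           # resized to match the measured box

print(workspace_ratios(virtual_box_v1, physical_box))  # (1.5, 1.5, 1.5)
print(workspace_ratios(virtual_box_v2, physical_box))  # (1.0, 1.0, 1.0)
print(required_handle_length_mm(330.0, 180.0))          # 150.0
```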

4.2. Lack of depth cues

The second identified issue relates to the availability of spatial cues. Grasping, moving, and placing objects involve fine motor skills that depend on precise perceptual judgments (Hu, Gooch, Thompson, Smits, Rieser, & Shirley, 2000). In laparoscopic surgery, the 2D image displayed on the monitor lacks binocular depth cues. Consequently, surgeons compensate for this lack of spatial cues by relying on other information, such as the pictorial depth cues available in the video display (Shimotsu & Cao, 2007). These include the monocular cues that are preserved in the 3D-to-2D transition from the real scene to the monitor and are not substantially degraded in the video image (Wickens & Hollands, 2000). One example of a monocular cue that surgeons use to perceive depth is the shadow cast by the laparoscopic instruments (Shimotsu & Cao, 2007). Shadows are created when an object blocks the light source’s illumination of a surface. The shape of a shadow provides clues about the shape of the object and its spatial position and orientation. Moreover, the motion of a shadow generated by the movement of the object casting it can give the observer important cues about the object’s trajectory and, together with the object’s own movement, helps the observer perceive depth (Imura, et al., 2006). Several studies have shown that shadows are useful depth cues and that humans begin to use them in infancy (Puerta, 1989; Castiello, 2001; Imura, et al., 2006).

When testing the early VBLaST prototype, the expert surgeons noticed that no shadows were displayed. During the discussions, they confirmed that the shadows cast by the surgical instruments are an important depth cue and source of distance information that helps them pick up, transfer, and place the rings in the peg transfer task. When moving an instrument, cast shadows let them know when the tool is close to the surface of an object before contact occurs. They can then decide at the right moment to open the tool tip to grasp, transfer, or release the ring they are manipulating.

They commented that the absence of shadows in our system altered their perception of depth and of imminent contact with objects while manipulating the rings. This suggests that the issue also contributed to the lower task performance, consistent with previous research (Hu, Gooch, Thompson, Smits, Rieser, & Shirley, 2000). This is a visual fidelity problem whose importance for skills training we could not have ascertained from observations alone. It led to the design decision to include simulated cast shadows in the virtual environment, with particular attention to rendering them in the correct position so that they provide appropriate depth cues to users.
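One common way to render such cues is planar projected shadows, in which each point of the instrument is projected from a point light onto the surface below. The sketch assumes a single point light, a horizontal receiving plane at height `plane_y`, and hypothetical function names; the paper does not specify how VBLaST renders its shadows. It shows why shadows act as a proximity cue: the gap between the tool tip and its shadow shrinks to zero as the tip reaches the surface.

```python
import math


def planar_shadow(point, light, plane_y=0.0):
    """Project `point` from a point `light` onto the horizontal plane y = plane_y."""
    px, py, pz = point
    lx, ly, lz = light
    if not (ly > py >= plane_y):
        raise ValueError("expects the light above the point and the point on or above the plane")
    # Parameter along the ray light -> point at which the ray reaches the plane.
    t = (ly - plane_y) / (ly - py)
    return (lx + t * (px - lx), plane_y, lz + t * (pz - lz))


def tip_shadow_gap(tip, light, plane_y=0.0):
    """Distance between the tool tip and its cast shadow (a proxy for the depth cue)."""
    return math.dist(tip, planar_shadow(tip, light, plane_y))


light = (0.0, 10.0, 0.0)  # hypothetical light position, arbitrary units
for height in (2.0, 1.0, 0.1, 0.0):
    print(height, round(tip_shadow_gap((1.0, height, 0.0), light), 3))
```

Placing the shadow correctly matters because the tip-to-shadow gap is what signals imminent contact; a misplaced shadow would give a misleading distance cue.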

4.3. Lack of haptic feedback fidelity

The last identified issue relates to the quality of the haptic feedback. In real laparoscopic surgery, haptic perception is highly impaired because interaction with organs is performed through rigid instruments (Klatzky, Lederman, Hamilton, Grindley, & Swendsen, 2003; Brydges, Carnahan, & Dubrowski, 2005). Several studies have investigated the impact of the haptic interaction modality in surgical training. Their results show that haptics is an essential component of VR trainers for minimally invasive surgery (Dang, Annaswamy, & Srinivasan, 2001; Seymour, Gallagher, Roman, O’Bri, Andersen, & Satava, 2002; Reich, et al., 2006; Ström, Hedman, Särnå, Kjellin, Wredmark, & L., 2006; Panait, Akkary, Bell, Roberts, Dudrick, & Duffy, 2009; Chellali, Dumas, & Milleville-Pennel, 2012), particularly for less experienced surgeons (Cao, Zhou, Jones, & Schwaitzberg, 2007).

In the past, experts agreed that training on the box-trainer was preferable to VR simulators for some laparoscopic surgical tasks because of the limited fidelity of haptic feedback in VR trainers (Botden, Torab, Buzink, & Jakimowicz, 2007). With the advent of modern haptic rendering technology, however, it is now possible to simulate haptic interactions with complex virtual objects faithfully in real time and thus overcome this limitation of VR trainers.

After testing our system during the focus group session, the experts pointed out several issues related to the quality of the system’s haptic feedback. They noticed a mismatch between the haptic feedback felt when interacting with the virtual rings and the corresponding visual feedback. For instance, they commented that the virtual tool tip sometimes appeared to be in visual contact with a ring while no haptic feedback was felt. Moreover, the forces felt when touching the virtual rings were much stronger than those felt when interacting with the actual rings, so they needed to apply stronger forces to move the virtual rings. Finally, they noticed that grasping the rings sometimes caused strong vibrations in the devices, which disturbed them while performing the task. The software engineers found that these vibrations were due to computational errors in the collision detection algorithm, which made the haptic devices unstable. It was therefore decided to improve the collision detection algorithm and the fidelity of the haptic feedback to overcome these limitations. Improvements included, for instance, measuring the forces generated when manipulating the actual rings so that similar force amplitudes could be displayed by the haptic devices when interacting with the virtual rings.
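Many haptic simulators compute contact forces with a penalty-based (spring-damper) model, in which the force grows with penetration depth. The sketch below illustrates that common technique under stated assumptions; it is not the VBLaST collision or force-rendering code, and the stiffness, damping, and saturation values are hypothetical placeholders rather than PHANTOM Omni specifications. In such a scheme, calibrating the stiffness against forces measured on the physical rings is one way to bring rendered force amplitudes closer to reality, and saturating the output keeps commands within a bounded range.

```python
def contact_force_n(
    penetration_mm: float,
    penetration_rate_mm_s: float = 0.0,
    stiffness_n_per_mm: float = 0.5,    # hypothetical; would be tuned from measured ring forces
    damping_n_s_per_mm: float = 0.001,  # hypothetical damping coefficient
    max_force_n: float = 3.0,           # hypothetical saturation limit
) -> float:
    """Penalty-based contact force magnitude along the contact normal (sketch)."""
    if penetration_mm <= 0.0:
        return 0.0  # no contact, no force
    force = stiffness_n_per_mm * penetration_mm + damping_n_s_per_mm * penetration_rate_mm_s
    # Never pull the tool into the object, and clamp to the saturation limit.
    return min(max(force, 0.0), max_force_n)


print(contact_force_n(2.0))    # 1.0
print(contact_force_n(100.0))  # 3.0 (saturated)
```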

5. SECOND DESIGN ITERATION

A modified VBLaST prototype was designed and implemented. This modified prototype leveraged the lessons learned from the early prototype evaluation as described above. The new prototype is described in the apparatus section below.

5.1. Second experimental evaluation session

To assess the design improvements between the early and modified versions of the VBLaST system, a second experiment was conducted using the modified VBLaST prototype. This time, twenty-two Obstetrics and Gynecology (OB/GYN) residents and attendings (N = 22) were recruited (26–45 years old; 2 male, 20 female; 2 left-handed). They consisted of 9 first-year residents, 4 third-year residents, 5 fourth-year residents, and 4 attendings, all from a teaching hospital in the Greater Boston Area. The subjects were categorized into four groups according to their expertise level, comparable (in terms of residency years) to the four groups defined for general surgery residents in the first experiment, as shown in Table 3.

Table 3: Subjects’ groups in the second experiment

| Expertise level | First year | Third year | Fourth year | Attendings |
|---|---|---|---|---|
| Group name | Group1 | Group2 | Group3 | Group4 |
| Number of subjects | 9 | 4 | 5 | 4 |

5.1.1. Apparatus

In this second experiment, the same FLS box-trainer and set of laparoscopic tools were used and compared to the modified VBLaST prototype (Figure 4).

Figure 4: The modified VBLaST prototype (VBLaST 2)

5.1.2. Task and procedure

The same task and procedure as experiment 1 were used in this experiment.

5.1.3. Dependent measures

We used the same dependent measures as those used in the previous experiment. Moreover, the same normalization factors were used for this experiment. Finally, the same questionnaires and scales were used for the subjective evaluation.

5.1.4. Experimental design and data analysis

Participants’ performance on each simulator was compared using a mixed-design (split-plot) ANOVA with a 2 × 4 within-between subjects design. The within-subjects factor was “trainer”, with two levels (FLS vs. VBLaST2). The between-subjects factor was “experience group”, with four levels (1, 2, 3, and 4). In addition, performance on each simulator was compared across the two experiments using two 2-way ANOVAs with a 2 × 4 between-subjects design. The first factor was “trainer”, with two levels (FLS1 vs. FLS2 for the first comparison, and VBLaST1 vs. VBLaST2 for the second). The second factor was “experience group”, with four levels (1, 2, 3, and 4), in both comparisons. The FLS box-trainers used in the two experiments (FLS1 and FLS2) were exactly the same, whereas the modified VBLaST prototype (VBLaST2) was the improved version of the early prototype (VBLaST1) described above. A correlation analysis (Pearson’s test) was also performed to investigate the relationship between the FLS and VBLaST2 scores. Finally, the non-parametric Mann-Whitney U test was used to compare the subjective ratings of the two VBLaST prototypes. All analyses were performed using SPSS v.21.0 (IBM Corp., Armonk, NY, USA).
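The Mann-Whitney U statistic is computed from the ranks of the pooled observations, with tied values (frequent with Likert ratings) receiving their average rank. The sketch below, with a function name of our own choosing and hypothetical ratings, computes U only; p-values, which require a tie-corrected approximation or exact tables, are outside its scope.

```python
def mann_whitney_u(x, y):
    """Return (U1, U2) for samples x and y, using average ranks for ties."""
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        # Extend j over the run of values tied with pooled[i].
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank of positions i..j
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    n1, n2 = len(x), len(y)
    r1 = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)  # rank sum of sample x
    u1 = r1 - n1 * (n1 + 1) / 2
    return u1, n1 * n2 - u1


# Hypothetical 5-point ratings for one questionnaire item on each prototype.
print(mann_whitney_u([3, 2, 3, 4], [4, 4, 5, 3]))
```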

5.2. Results of the second system’s evaluation: comparative analyses

5.2.1. The effect of the expertise of participants

Since the subjects in experiments 1 and 2 came from two different medical specialties (general surgery and OB/GYN), the first step of our analysis was to determine whether the performance of these two populations on the FLS box-trainer differed significantly between the two experiments. Because the FLS box-trainers used in the two experiments were identical and the expertise groups were comparable, we hypothesized that the performance of the two populations on this trainer would not differ significantly. To test this hypothesis, a 2-way between-subjects ANOVA was conducted.

All assumptions of this 2-way ANOVA (normality of the data and homogeneity of variances) were checked using the appropriate statistical tests (the Shapiro-Wilk test and Levene’s test of equality of variances, respectively). The normality assumption was met. However, Levene’s test revealed that the assumption of equality of variances was not met. According to Tabachnick and Fidell (1989, p. 45), unequal variances increase the risk of Type I error. To address this issue, Keppel (1991) suggests correcting for a violation of the equal-variance assumption in a two-way ANOVA by replacing α with α/2. In our case, this yields an alpha level of 0.025.
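The original, mean-centred form of Levene’s test and the alpha adjustment described above can be sketched as follows. Levene’s W is a one-way ANOVA F statistic computed on the absolute deviations of each observation from its group mean; the function name and example data are hypothetical, and the p-value lookup (F distribution with k − 1 and N − k degrees of freedom) is omitted.

```python
from statistics import mean


def levene_w(*groups):
    """Levene's W (mean-centred): one-way ANOVA F on |x - group mean|."""
    k = len(groups)
    if k < 2:
        raise ValueError("need at least two groups")
    group_means = [mean(g) for g in groups]
    z = [[abs(x - m) for x in g] for g, m in zip(groups, group_means)]
    n_total = sum(len(zg) for zg in z)
    z_means = [mean(zg) for zg in z]
    z_grand = sum(sum(zg) for zg in z) / n_total
    ss_between = sum(len(zg) * (zm - z_grand) ** 2 for zg, zm in zip(z, z_means))
    ss_within = sum((x - zm) ** 2 for zg, zm in zip(z, z_means) for x in zg)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


ALPHA = 0.05
alpha_adjusted = ALPHA / 2  # Keppel's correction for unequal variances: 0.025

print(levene_w([1, 2, 3], [2, 4, 6]))  # about 0.8
```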

The results of the ANOVA (Figure 5) show no significant main effect of the FLS trainer version on the performance scores (F(1, 34) = 2.51, p = 0.12, ηp² = 0.06), but a significant main effect of experience group (F(3, 34) = 22.91, p < 0.001, ηp² = 0.66). No significant interaction between the two factors was found (Figure 5). Post-hoc tests with Bonferroni correction show that group 1 (mean = 72.04 ± 15.9 and 63.56 ± 6.72 for FLS1 and FLS2, respectively) scored significantly lower than group 2 (85.14 ± 8.12 and 86.62 ± 3.71), group 3 (93.27 ± 8.06 and 90.70 ± 2.69) and group 4 (94.76 ± 7.83 and 88.08 ± 0.77) at the .025 level of significance. No significant differences were observed among groups 2, 3 and 4 (Figure 5).

Figure 5:

Comparing the performance of the two populations of subjects on the FLS trainer (error bars represent the standard error)
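Partial eta squared can be recovered from the reported F ratios, which gives a quick consistency check on the effect sizes above. Because F = (SS_effect / df_effect) / (SS_error / df_error), it follows that ηp² = SS_effect / (SS_effect + SS_error) = F·df_effect / (F·df_effect + df_error). A minimal Python sketch (the function name is our own):

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared from an F ratio: F*df_effect / (F*df_effect + df_error)."""
    return f * df_effect / (f * df_effect + df_error)


# F ratios and degrees of freedom reported for the FLS1 vs. FLS2 ANOVA.
reported = {
    "FLS version": (2.51, 1, 34),
    "Experience group": (22.91, 3, 34),
}
for effect, (f, df1, df2) in reported.items():
    print(f"{effect}: partial eta squared = {partial_eta_squared(f, df1, df2):.3f}")
```

This prints about 0.069 and 0.669, within 0.01 of the reported 0.06 and 0.66; the same formula applies to the other F ratios in this section.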

Because the objective was to determine whether the two populations differed significantly, we also performed direct comparisons. First, an independent-samples t-test was conducted to determine whether the performance of all participants on FLS1 and FLS2 differed significantly. The assumption of equality of variances was met, and there was no significant effect of the system on performance (mean = 86.3 ± 13.39 and 78.4 ± 13.48 for FLS1 and FLS2, respectively; t(41) = 1.90, p = 0.06).
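For reference, the independent-samples t statistic with pooled variance (the form used when equal variances can be assumed) can be computed from group summaries. The sketch below assumes the ± values are standard deviations and needs the group sizes, which this section does not report, so the function name and inputs are hypothetical.

```python
from math import sqrt


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's independent-samples t with pooled variance; returns (t, df)."""
    df = n1 + n2 - 2
    # Pooled variance weights each group's variance by its degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# Hypothetical summaries, not data from the study.
t, df = pooled_t(10.0, 2.0, 5, 8.0, 2.0, 5)
```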

We also conducted one-way ANOVAs comparing the scores on the two systems within each experience group. Levene’s test indicated that the assumption of equality of variances was met only for group 2; therefore, the Welch correction was used to address the violation of this assumption. The Welch-corrected one-way ANOVAs indicate no significant effect of the system in any of the four groups (F(1, 13) = 2.01, p = 0.18; F(1, 8) = 0.11, p = 0.74; F(1, 9) = 0.45, p = 0.51; F(1, 8) = 2.80, p = 0.14 for groups 1, 2, 3 and 4, respectively).
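With two levels of the system factor, a Welch-corrected one-way ANOVA reduces to Welch’s unequal-variance t-test, with F = t² and the Welch-Satterthwaite degrees of freedom. The Python sketch below implements the general k-group Welch F statistic; the function name and example scores are hypothetical, and the p-value (from F(df1, df2)) is left to a statistics package. Note that df2 is generally not an integer.

```python
from statistics import fmean, variance


def welch_anova(*groups):
    """Welch's heteroscedastic one-way ANOVA; returns (F, df1, df2)."""
    k = len(groups)
    sizes = [len(g) for g in groups]
    means = [fmean(g) for g in groups]
    # Precision weights w_i = n_i / s_i^2 (s_i^2 is the sample variance).
    weights = [n / variance(g) for n, g in zip(sizes, groups)]
    w_total = sum(weights)
    weighted_mean = sum(w * m for w, m in zip(weights, means)) / w_total
    # Weighted between-group mean square.
    a = sum(w * (m - weighted_mean) ** 2 for w, m in zip(weights, means)) / (k - 1)
    lam = sum((1 - w / w_total) ** 2 / (n - 1) for w, n in zip(weights, sizes))
    b = 1 + 2 * (k - 2) / (k ** 2 - 1) * lam
    return a / b, k - 1, (k ** 2 - 1) / (3 * lam)


# Hypothetical scores on two systems for one experience group (not study data).
system_a = [61.0, 64.5, 70.2, 55.8, 66.1]
system_b = [72.3, 90.4, 58.9, 81.0, 95.6, 63.2]
f_stat, df1, df2 = welch_anova(system_a, system_b)
```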

These results indicate that the two populations did not differ significantly, either in overall performance scores or within experience groups. This supports our first hypothesis and allows the further comparisons described in the next sections.

5.2.2. The effect of the design iteration on the VBLaST trainer

To investigate the effect of the design process and determine whether performance improved on the modified VBLaST prototype compared with the early prototype, a two-way between-subjects ANOVA was conducted on the VBLaST scores.

All assumptions of this two-way ANOVA (normality of the data and homogeneity of variances) were checked using the appropriate statistical tests (the Shapiro-Wilk test and Levene’s test of equality of variances, respectively). All assumptions were met.

The results of the ANOVA (Figure 6) show a significant main effect of the VBLaST version (F(1, 34) = 21.29, p < 0.001, ηp² = 0.38) and a significant main effect of experience group (F(3, 34) = 8.81, p < 0.001, ηp² = 0.43) on the VBLaST performance scores. No significant interaction was found between the two factors. The subjects performed significantly better on the modified VBLaST version (Figure 6); overall, the mean performance score increased by 38% with the new version. Moreover, post-hoc tests with Bonferroni correction show that experience group 2 (mean = 57.79 ± 28.12 and 80.74 ± 6.82 for VBLaST1 and VBLaST2, respectively), group 3 (70.56 ± 18.31 and 84.06 ± 3.70) and group 4 (63.05 ± 13.97 and 86.76 ± 5.43) performed significantly better than group 1 (36.05 ± 12.57 and 62.20 ± 12.12) at the .05 level of significance. All other comparisons were not statistically significant (Figure 6).

Figure 6:

Comparing the performance of the two populations of subjects on the two VBLaST prototypes (error bars represent the standard error)
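With four experience groups there are 4 × 3 / 2 = 6 pairwise post-hoc comparisons. One common way to report the Bonferroni correction, used in the sketch below, is to multiply each unadjusted p-value by the number of comparisons (capped at 1) and compare the result with the chosen α. The function name and the p-values are invented for illustration and are not the study’s values.

```python
from itertools import combinations


def bonferroni_adjust(p_values):
    """Bonferroni-adjusted p-values: min(1, m * p) for m comparisons."""
    m = len(p_values)
    return {pair: min(1.0, m * p) for pair, p in p_values.items()}


groups = [1, 2, 3, 4]
pairs = list(combinations(groups, 2))  # (1, 2), (1, 3), ..., (3, 4): 6 comparisons
# Hypothetical unadjusted p-values, for illustration only.
raw = dict(zip(pairs, [0.001, 0.004, 0.002, 0.30, 0.45, 0.60]))
adjusted = bonferroni_adjust(raw)
significant = [pair for pair, p in adjusted.items() if p < 0.05]
```

Here only the comparisons involving group 1 remain significant after adjustment, which is the same pattern as the one reported above.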

5.2.3. The effect of the trainer (FLS vs VBLaST2)

Our design objective was to obtain similar performance on the virtual and the physical trainer. To compare the subjects’ performance on the modified VBLaST and the FLS box-trainer, a mixed-design (Split-Plot) ANOVA was conducted.

All assumptions of this split-plot ANOVA (normality of the data, equality of covariance matrices and homogeneity of variances) were checked using the appropriate statistical tests (the Shapiro-Wilk test, Box’s M test and Levene’s test of equality of variances, respectively). All assumptions were met.

We expected the subjects’ performance on the modified VBLaST prototype not to differ significantly from their performance on the FLS box-trainer.

The results (Figure 7) show no significant main effect of the trainer (F(1, 18) = 3.62, p = 0.073, ηp² = 0.16) and no significant interaction between trainer and experience group (F(3, 18) = 0.56, p = 0.65, ηp² = 0.08) on the performance scores. Thus, performance on the two trainers (VBLaST2 and FLS) did not differ significantly (Figure 7). On the other hand, there is a significant main effect of experience group (F(3, 18) = 29.83, p < 0.001, ηp² = 0.83) on the performance scores (Figure 7). Post-hoc tests with Bonferroni correction show that group 1 (mean = 63.56 ± 6.72 and 62.20 ± 12.12 for FLS and VBLaST2, respectively) scored significantly lower than group 2 (86.62 ± 3.71 and 80.74 ± 6.82), group 3 (90.70 ± 2.69 and 84.06 ± 3.70) and group 4 (88.08 ± 0.77 and 86.76 ± 5.43) at the .05 level of significance. No significant differences were observed among groups 2, 3 and 4 (Figure 7).

Figure 7:

Comparing the modified VBLaST prototype and the FLS box-trainer (error bars represent the standard error)

To further check whether performance on the two systems differed significantly, we also performed direct comparisons. First, a paired-samples t-test was conducted to determine whether the performance of all participants on FLS and VBLaST2 differed significantly. The assumption of equality of variances was met, and there was no significant effect of the system on performance (mean = 78.4 ± 13.48 and 75.0 ± 13.85 for FLS and VBLaST2, respectively; t(21) = 1.84, p = 0.08).
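Because every participant in the second experiment used both trainers, the paired test works on each participant’s FLS minus VBLaST2 difference rather than on the two sets of scores separately. A minimal Python sketch, with a hypothetical function name and made-up scores:

```python
from math import sqrt
from statistics import fmean, stdev


def paired_t(x, y):
    """Paired-samples t statistic and df computed from within-subject differences."""
    if len(x) != len(y):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    # Mean difference divided by its standard error (sample SD / sqrt(n)).
    t = fmean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


# Hypothetical scores for four participants (not study data).
fls_scores = [80.0, 85.0, 70.0, 90.0]
vblast_scores = [78.0, 80.0, 71.0, 86.0]
t, df = paired_t(fls_scores, vblast_scores)
```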

Finally, Pearson’s correlation test shows that the FLS and VBLaST2 mean scores were strongly correlated (r(20) = 0.80, p = 0.005).
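Pearson’s r is the covariance of the paired FLS and VBLaST2 scores divided by the product of their standard deviations. The sketch below computes it from first principles (Python 3.10+ also provides `statistics.correlation`, which returns the same coefficient); the function name and scores are hypothetical.

```python
from math import sqrt
from statistics import fmean


def pearson_r(x, y):
    """Pearson product-moment correlation of paired observations."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least two items")
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical per-participant mean scores (not study data).
fls = [1.0, 2.0, 3.0, 4.0, 5.0]
vblast2 = [2.0, 4.0, 5.0, 4.0, 5.0]
r = pearson_r(fls, vblast2)
```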

5.2.4. Subjective evaluation

Overall, the ratings of all evaluated aspects of the system increased in the second experiment (Figure 8).

Figure 8:

Comparison of subjective evaluations of the two VBLaST prototypes (error bars represent the standard error)

The Mann-Whitney tests (Table 4) show a significant effect of the VBLaST prototype version on the ratings of the realism of instrument handling, the quality and the usefulness of the haptic feedback, the usefulness for learning bimanual dexterity skills, the overall usefulness for learning laparoscopic skills and the trustworthiness in quantifying laparoscopic performance. No other significant differences were found.

Table 4:

Results of the Mann-Whitney test comparing the ratings of the two VBLaST prototypes

| Evaluated aspect | Mann-Whitney U | Z | p-value |
|---|---|---|---|
| 1. Realism of the virtual objects | 180.00 | −1.06 | 0.28 |
| 2. Realism of instrument handling | 142.50 | −2.04 | 0.04* |
| 3. Overall realism | 154.50 | −1.79 | 0.07 |
| 4. Quality of haptic feedback | 123.00 | −2.55 | 0.01* |
| 5. Usefulness of haptic feedback | 123.00 | −2.55 | 0.01* |
| 6. Usefulness for learning hand-eye coordination skills | 197.50 | −0.60 | 0.54 |
| 7. Usefulness for learning bimanual dexterity skills | 136.00 | −2.24 | 0.02* |
| 8. Overall usefulness for learning laparoscopic skills | 135.50 | −2.21 | 0.02* |
| 9. Trustworthiness in quantifying performance | 96.50 | −3.26 | 0.001* |

Significant effects (p < 0.05) are marked with *.
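The U and Z values in Table 4 come from ranking the pooled ratings of both groups. Since ratings are ordinal and contain many ties, the sketch below assigns average ranks to ties and uses the normal approximation with a tie correction and no continuity correction; whether these options match the exact SPSS settings used here is an assumption, and the function names and data are hypothetical.

```python
from math import sqrt


def average_ranks(values):
    """1-based ranks, with tied values sharing the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for idx in order[i:j + 1]:
            ranks[idx] = avg
        i = j + 1
    return ranks


def mann_whitney(x, y):
    """Return (U, z): U = min(U_x, U_y); z from the tie-corrected normal approximation."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    combined = list(x) + list(y)
    ranks = average_ranks(combined)
    u_x = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u_x, n1 * n2 - u_x)
    # Tie correction: sum over groups of tied values of (t^3 - t).
    counts = {}
    for v in combined:
        counts[v] = counts.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())
    var_u = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    z = (u - n1 * n2 / 2) / sqrt(var_u)
    return u, z


# Hypothetical ratings from two small groups (not study data).
u, z = mann_whitney([1, 2, 2], [2, 3, 4])
```

Taking the smaller of the two U values makes z non-positive, consistent with the negative Z values in Table 4.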

6. DISCUSSION AND DESIGN IMPLICATIONS

Virtual reality is a promising technology for developing laparoscopic skills task trainers. The long-term goal of this research is to design and develop the VBLaST system, which aims to meet the same basic technical laparoscopic training objectives as the currently accepted training standard in the surgical community (the FLS box-trainer) while overcoming some of its limitations. Replicating an existing physical trainer has two advantages. First, the FLS trainer has been previously validated and has widely proven its efficacy in training basic laparoscopic skills (Fried G. M., 2008). Second, performance assessment on this trainer is based on validated metrics (Fraser, Klassen, Feldman, Ghitulescu, Stanbridge, & Fried, 2003; Fried G. M., 2008).

6.1. Summary of findings

In this work, we have presented two evaluation studies comparing two prototypes of the VBLaST system built in two successive iterations of the design process. The results of these studies demonstrate that the subjects’ performance, measured using the current standard scoring method (Fried G. M., 2008), was significantly higher on the modified VBLaST prototype than on the early prototype. The results of our second experiment show that the subjects’ performance on the modified VBLaST prototype, assessed using the current standard method (Fried G. M., 2008), was not significantly different from their performance on the FLS box-trainer. Moreover, the scores on both systems were strongly correlated (r = 0.80). This suggests that the second design iteration allowed us to attain our main design objective: performance not significantly different from that on the FLS box-trainer in assessing laparoscopic skills. It further indicates that input from the experts was decisive in improving the system’s fidelity and training performance, and thus in attaining the design objectives.

Moreover, although there is no significant difference between the VBLaST and FLS scores, the performance scores on the two systems are much closer for group 1 (first-year residents) and group 4 (fellows and attendings). This may be explained by the fact that these groups reported less exposure to the peg transfer task on the FLS trainer than the two other groups. It suggests that the current version of the VBLaST could be more comparable to the FLS trainer for training novices and users with no previous experience with laparoscopic trainers. The strong correlation between the scores on the two systems further suggests that the current prototype is an effective tool for assessing laparoscopic performance on the peg transfer task.
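The observation that the two trainers’ scores are closest for groups 1 and 4 can be checked against the group means reported in Section 5.2.3. The short Python snippet below simply tabulates the descriptive FLS minus VBLaST2 differences; it involves no significance test, and the variable names are our own.

```python
# Group means reported in Section 5.2.3: (FLS, VBLaST2).
means = {
    1: (63.56, 62.20),
    2: (86.62, 80.74),
    3: (90.70, 84.06),
    4: (88.08, 86.76),
}
# Descriptive gap between the trainers, rounded to two decimals.
gaps = {group: round(fls - vb, 2) for group, (fls, vb) in means.items()}
for group, gap in gaps.items():
    print(f"group {group}: FLS - VBLaST2 = {gap}")
```

This gives differences of 1.36 and 1.32 points for groups 1 and 4, against 5.88 and 6.64 points for groups 2 and 3.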

Finally, the subjective evaluation of the two prototypes indicates that the perceived realism of the virtual environment was not affected by the new design. This is not surprising, since these aspects of environment fidelity were not modified and were already rated highly after the first design iteration. In contrast, other aspects of environment and interface fidelity, such as the perceived realism of instrument handling and the quality of the haptic feedback, were rated significantly higher in the second experiment. The perceived usefulness of the system for learning basic laparoscopic skills also increased across the two experiments, as did the subjects’ assessment of how trustworthy the VBLaST is in quantifying laparoscopic performance. These results indicate that the design improvements had a direct positive effect on the subjects’ assessment of environment and interface fidelity and, therefore, on the VBLaST as a training system for basic laparoscopic skills. Nevertheless, the new version of the VBLaST should also be evaluated with general surgery residents and fellows to further validate the system.

6.2. Comparative situated evaluation for expert surgeons and the present-at-hand tool

Our findings have significant implications for the design methods used to create successful felt experiences in VR trainers. We show the importance of involving expert surgeons (i.e. domain experts) in comparative situated evaluation sessions in order to analyze the fidelity component of the system. Although the design aspects we uncovered were important in our system, the initial field studies did not allow us to identify them (Arikatla, et al., 2013; Chellali, et al., 2014). These design elements all relate to movement in three-dimensional space and therefore belong to the procedural knowledge of experts, as defined by Rasmussen (Skills, rules, knowledge: signals, signs and symbols and other distinctions in human performance models, 1983). Discussions with residents and observations of them during their training on the peg transfer task did not reveal these elements. From the novices’ perspective, the first prototype faithfully replicated the physical trainer they were familiar with, because it simulated the real-world objects (environment fidelity) and mimicked the interaction techniques (interface fidelity). However, as we showed, this alone did not lead to a successful VR trainer. When testing the system, the expert surgeons provided a different point of view: during the comparative situated evaluation session, they noted the absence of elements that are inherent in the real world. The improved performance on the modified prototype, which reached a level comparable to the physical box-trainer, shows that these elements have a direct impact on learning laparoscopic skills; ignoring them may therefore result in an ineffective training system.