Abstract

Humans and other animals often violate economic principles when choosing between multiple alternatives, but the underlying neurocognitive mechanisms remain elusive. A robust finding is that adding a third option can alter the relative preference for the original alternatives, but studies disagree on whether the third option’s value decreases or increases accuracy. To shed light on this controversy, we used and extended the paradigm of one study reporting a positive effect. However, our four experiments with 147 human participants and a reanalysis of the original data revealed that the positive effect is neither replicable nor reproducible. In contrast, our behavioral and eye-tracking results are best explained by assuming that the third option’s value captures attention and thereby impedes accuracy. We propose a computational model that accounts for the complex interplay of value, attention, and choice. Our theory explains how choice sets and environments influence the neurocognitive processes of multi-alternative decision making.

Research organism: Human

eLife digest

A man in a restaurant is offered a choice between apple and blueberry pie, and chooses apple. The waiter then returns a few moments later and tells him they also have cherry pie available. “In that case”, replies the man, “I’ll have blueberry”.

This well-known anecdote illustrates a principle in economics and psychology called the independence principle. It states that preferences between two options should not change when a third option becomes available. A person who prefers apple over blueberry pie should continue to do so regardless of whether cherry pie is also on the menu. But, as in the anecdote, people often violate the independence principle when making decisions. One example is voting. People may vote for a candidate who would not usually be their first choice only because there is also a similar but clearly less preferable candidate available.

Such behavior provides clues to the mechanisms behind making decisions. Studies show, for example, that when people have to choose between two options, introducing a desirable third option that cannot be selected – a distractor – alters what decision they make. But the studies disagree on whether the distractor improves or impairs performance.

Gluth et al. now resolve this controversy using tasks in which people had to choose between rectangles on a computer screen for the chance to win different amounts of money. Contrary to a previous study, their four experiments showed that a high-value distractor did not change how likely the volunteers were to select one of the two available options over the other. Instead, the distractor slowed down the entire decision-making process. Moreover, volunteers often selected the high-value distractor despite knowing that they could not have it. One explanation for such behavior is that high-value items capture our attention automatically even when they are irrelevant to our goals. If a person likes chocolate cake, their attention will immediately be drawn to a cake in a shop window, even if they had no plans to buy a cake. Eye-tracking data confirmed that volunteers in the above experiments spent more time looking at high-value items than low-value ones. Those volunteers whose gaze was distracted the most by high-value items also made the worst decisions.

Based on the new data, Gluth et al. developed and tested a mathematical model. The model describes how we make decisions, and how attention influences this process. It provides insights into the interplay between attention, valuation and choice – particularly when we make decisions under time pressure. Such insights may enable us to improve decision-making environments where people must choose quickly between many options. These include emergency medicine, road traffic situations, and the stock market. To achieve this goal, findings from the current study need to be tested under more naturalistic conditions.

Introduction

In recent years, studying choices between multiple (i.e., more than two) alternatives has attracted growing interest in many research areas including biology, neuroscience, psychology, and economics (Berkowitsch et al., 2014; Chau et al., 2014; Chung et al., 2017; Cohen and Santos, 2017; Gluth et al., 2017; Hunt et al., 2014; Landry and Webb, 2017; Lea and Ryan, 2015; Louie et al., 2013; Mohr et al., 2017; Spektor et al., 2018a; Spektor et al., 2018b). In such choice settings humans and other animals often violate the independence from irrelevant alternatives (IIA) principle of classical economic decision theory (Rieskamp et al., 2006). This principle states that the relative preference for two options must not depend on a third (or any other) option in the choice set (Luce, 1959; Marschak and Roy, 1954). Violations of IIA have profound implications for our understanding of the neural and cognitive principles of decision making. For example, if choice options are not independent from each other, we cannot assume that the brain first calculates each option’s value separately before selecting the option with the highest (neural) value (Vlaev et al., 2011). Instead, dynamic models in which option comparison and decision formation processes are intertwined appear more promising as they account for several violations of IIA (Gluth et al., 2017; Hunt and Hayden, 2017; Hunt et al., 2014; Mohr et al., 2017; Roe et al., 2001; Trueblood et al., 2014; Tsetsos et al., 2012; Usher and McClelland, 2004).

The underlying mechanisms of multi-alternative choice and the conditions under which decision makers exhibit different forms of violations of IIA are a matter of current debate (Chau et al., 2014; Frederick et al., 2014; Louie et al., 2013; Spektor et al., 2018a; Spektor et al., 2018b). Two recent studies by Louie and colleagues (Louie et al., 2013; henceforth Louie2013) and by Chau and colleagues (Chau et al., 2014; henceforth Chau2014) investigated whether and how the value of a third option influences the relative choice accuracy between two other options (i.e., the probability of selecting the option with the higher value). Both studies reported violations of IIA which, however, contradicted each other: Louie2013 found a negative relationship, so that better third options decreased the relative choice accuracy (i.e., the probability of choosing the better of the two original options). They attributed this to divisive normalization of (integrated) value representations in the brain: To keep value coding by neural firing rates in a feasible range, each value code could be divided by the sum of all values. Thus, a third option of higher value implies a larger divisor for all firing rates and reduces the neural discriminability between the other two options. In contrast, Chau2014 reported a positive relationship, that is, third options of higher values increased relative choice accuracy. They predicted this effect by a biophysical cortical attractor model (Wang, 2002). Briefly, this model assumes pooled inhibition between competing attractors that represent accumulated evidence for the different options. A third option of higher value would increase the level of inhibition in the model and thus lead to more accurate decisions (because inhibition reduces the influence of random noise).
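To make the divisive-normalization argument concrete, here is a minimal Python sketch. It assumes the simple form r_i = v_i / (σ + Σ_j v_j), with a hypothetical semisaturation constant σ = 1 and illustrative values (HV = 6, LV = 4); it is not a fitted version of the Louie2013 model.

```python
def normalize(values, sigma=1.0):
    """Divisively normalize integrated values: each value is divided by a
    (hypothetical) semisaturation constant plus the summed value of all options."""
    denom = sigma + sum(values)
    return [v / denom for v in values]


def hv_lv_gap(hv, lv, d=None, sigma=1.0):
    """Difference between the normalized HV and LV codes, optionally with a
    third option D in the choice set."""
    values = [hv, lv] if d is None else [hv, lv, d]
    r = normalize(values, sigma)
    return r[0] - r[1]


# HV = 6, LV = 4: the normalized gap shrinks as D's value increases.
for d in (None, 1.0, 5.0, 9.0):
    print(d, round(hv_lv_gap(6.0, 4.0, d), 3))
# None 0.182
# 1.0 0.167
# 5.0 0.125
# 9.0 0.1
```

If the noise on these codes stays fixed, a smaller gap means lower discriminability, which is why this account predicts that better third options lower relative choice accuracy.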

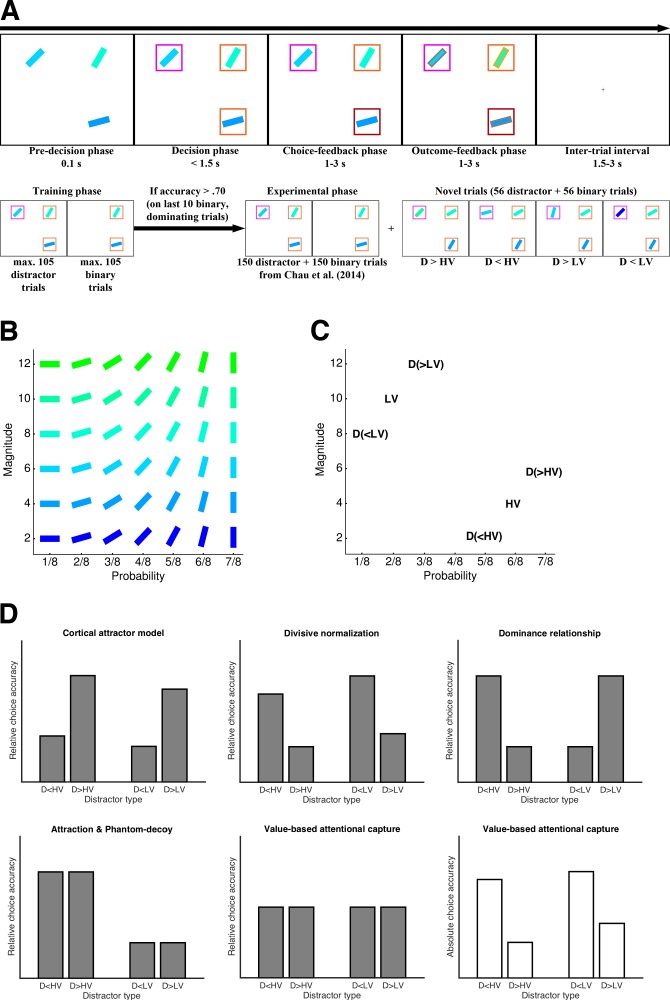

The goal of the present study was to resolve these opposing results by exploiting the multi-attribute nature of Chau2014’s task and extending it with respect to the paradigm and the analysis of the behavioral data. Notably, the paradigms of Louie2013 and Chau2014 differ in several aspects, the most critical being the type of choice options: In Louie2013, participants decided between different food snacks, whereas in Chau2014, participants chose between rectangles that represented gambles with reward magnitude and probability conveyed by the rectangles’ color and orientation, respectively (Figure 1A and B). Our initial hypothesis for the discrepancy between the studies’ results was that the explicit presentation of the magnitude and probability attributes in the latter induced IIA-violating ‘context effects’ that emerge through multi-attribute comparison processes (Gluth et al., 2017; Pettibone and Wedell, 2007; Roe et al., 2001; Trueblood et al., 2014; Usher and McClelland, 2004; we elaborate on this and on further hypotheses in the Materials and methods). To test this hypothesis, we added a set of novel trials in which the high-value option (HV), the low-value option (LV), and the unavailable distractor (D) were positioned in the two-dimensional attribute space so as to allow a rigorous discrimination between the various multi-attribute context effects, divisive normalization, and the biophysical cortical attractor model (Figure 1C and D). Note that henceforth, by ‘divisive normalization’ we refer to the model proposed by Louie2013, which assumes that normalization occurs at the level of integrated values (i.e., after combining attribute values such as magnitude and probability into a single value). Other models that instantiate hierarchical or attribute-wise normalization can make qualitatively different predictions (Hunt et al., 2014; Landry and Webb, 2017; Soltani et al., 2012). Also, we do not address the role of divisive normalization as a canonical neural computation (Carandini and Heeger, 2011) beyond its conceptualization by Louie2013.

Figure 1. Study design and predictions for novel trials.

(A) Example trial (upper panel) and general workflow (lower panel) of the Chau2014 paradigm as used in our four experiments (variations in specific experiments are mentioned in the main text). In the critical trials, participants had 1.6 s to choose between two rectangles, with a third rectangle displayed but declared unavailable after 0.1 s. (B) Stimulus matrix showing one (of four possible) associations of color and orientation of rectangles with reward probability and magnitude. (C) Example of a set of four novel trials (HV and LV were kept constant across these four trials, but D varied). (D) Qualitative predictions of choice accuracy in the novel trials for the biophysical cortical attractor model proposed by Chau2014, the divisive normalization model proposed by Louie2013, various ‘context effects’ (see Materials and methods), and value-based attentional capture. The predictions vary with respect to the factors Similarity (i.e., whether D is more similar to HV or to LV) and Dominance (i.e., whether D is better or worse than HV/LV). In contrast to the other models, value-based attentional capture does not predict any influence of D’s value on relative choice accuracy (grey bars) but a detrimental effect on absolute choice accuracy (white bars). Predictions of absolute choice accuracy for the remaining models are provided in Figure 1—figure supplement 1.

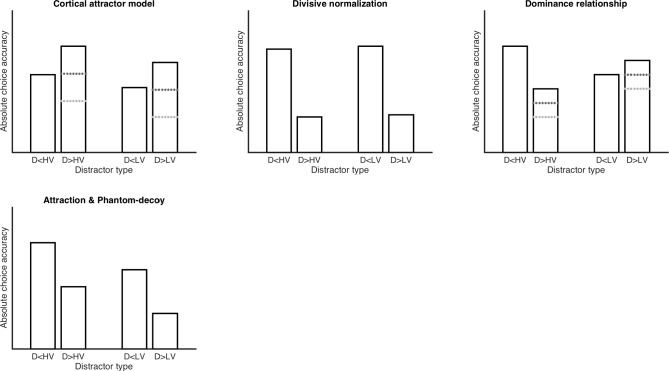

Figure 1—figure supplement 1. Qualitative predictions of absolute choice accuracy for alternative models.

Foreshadowing the results, our data from three behavioral experiments and one eye-tracking experiment with a total of 147 participants are starkly at odds with the findings of both Louie2013 and Chau2014. We did not observe a positive impact of D’s value on relative choice accuracy as reported by Chau2014 in the same task, nor a negative impact of D’s value as predicted by Louie2013. In other words, violations of IIA did not occur in the Chau2014 paradigm when participants made decisions under time pressure. Furthermore, a reanalysis of the original Chau2014 data suggested that the reported effect is a statistical artifact (see Materials and methods). In contrast to the absence of any effects on relative choice accuracy, however, we consistently found a negative impact of D’s value on absolute choice accuracy (i.e., the overall probability of choosing the best option), which is not a violation of IIA. We argue that our behavioral and eye-tracking results, as well as the results of the original study, are best accounted for by value-based attentional capture, that is, by assuming that options capture attention in proportion to their value. Value-based attentional capture is a comparatively novel concept in attention research that has repeatedly been demonstrated in humans and non-human primates (Anderson, 2016; Anderson et al., 2011; Grueschow et al., 2015; Le Pelley et al., 2016; Peck et al., 2009; Yasuda et al., 2012). With respect to the Chau2014 task, it implies that a higher-value D draws more attention and thereby interferes with the choice process. To explain the results from our behavioral and eye-tracking experiments, we integrate the concept of value-based attentional capture into the well-established framework of evidence accumulation in decision making (Bogacz et al., 2006; Gluth et al., 2012; Gluth et al., 2015; Gold and Shadlen, 2007; Heekeren et al., 2008; Smith and Ratcliff, 2004). Our model predicts that only absolute, but not relative, choice accuracy will be affected by the value of the third option (i.e., no violation of IIA; Figure 1D). It provides a novel and cognitively plausible mechanism for the complex interplay of value and attention in multi-alternative decision making.

Results

Experiment 1: High-value distractors impair choice accuracy

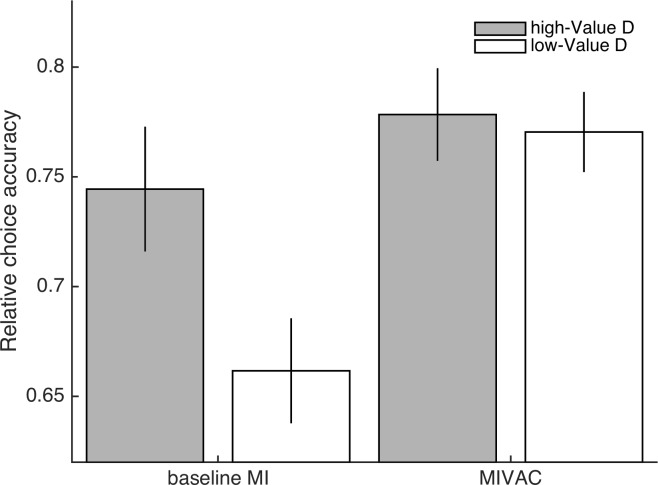

Experiment 1 (with n1 = 31 participants) aimed at comparing different model predictions with respect to the novel set of trials we introduced (Figure 1C and D) and at replicating the core finding of Chau2014. In the decision-making task as introduced by Chau2014 (Figure 1A), two available options of different expected value, HV and LV, are presented in two randomly selected quadrants of the screen, and participants are asked to choose the option with the higher expected value. In half of the trials, the remaining two quadrants are left empty; in the other half, a third distractor option, D, is shown in one quadrant but indicated as unavailable after 0.1 s. Participants then have another 1.5 s to make their choice. The central behavioral analysis by Chau2014 was a logistic regression of relative choice accuracy (i.e., whether HV or LV was chosen, excluding all trials with other responses such as choosing D or the empty quadrant, or being too slow) on the value difference between the two available options, HV-LV, the sum of their values, HV+LV, the value difference of HV and D, HV-D, the interaction of value differences, (HV-LV)×(HV-D), and whether a distractor was present or not, D present. Most importantly, the authors reported a significantly negative regression coefficient of HV-D, indicating that higher values of D increased choice accuracy.
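One way to set up a regression of this kind for a single participant is sketched below in Python, assuming numpy is available. The predictor construction is an assumption (main terms z-scored, interaction formed from the z-scored terms and z-scored again) and is not necessarily the convention of Chau2014 or of our own analyses. For brevity the sketch fits only distractor-present trials, so the D present regressor is omitted, and the data come from a hypothetical participant with a planted negative HV-D effect.

```python
import numpy as np


def zscore(x):
    """Standardize a 1-D array to mean 0 and SD 1."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std()


def design_matrix(hv, lv, d):
    """Intercept plus HV-LV, HV+LV, HV-D and (HV-LV)x(HV-D) for D-present trials.
    Assumed convention: z-score main terms, build the product, z-score it."""
    hv, lv, d = (np.asarray(a, dtype=float) for a in (hv, lv, d))
    hv_minus_lv = zscore(hv - lv)
    hv_plus_lv = zscore(hv + lv)
    hv_minus_d = zscore(hv - d)
    interaction = zscore(hv_minus_lv * hv_minus_d)
    return np.column_stack([np.ones(len(hv)), hv_minus_lv, hv_plus_lv,
                            hv_minus_d, interaction])


def fit_logistic(X, y, n_iter=25):
    """Maximum-likelihood logistic regression by Newton-Raphson (IRLS)."""
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        w = p * (1.0 - p)
        hessian = X.T @ (X * w[:, None])
        beta = beta + np.linalg.solve(hessian, X.T @ (y - p))
    return beta


# Hypothetical participant: y = 1 for an HV choice, 0 for an LV choice.
rng = np.random.default_rng(0)
n = 4000
hv = rng.uniform(1.0, 10.0, n)
lv = hv * rng.uniform(0.25, 1.0, n)  # LV always below HV
d = rng.uniform(0.25, 9.0, n)
X = design_matrix(hv, lv, d)
true_beta = np.array([0.5, 1.5, 0.0, -0.8, 0.0])
y = (rng.random(n) < 1.0 / (1.0 + np.exp(-X @ true_beta))).astype(float)

names = ["intercept", "HV-LV", "HV+LV", "HV-D", "(HV-LV)x(HV-D)"]
for name, b in zip(names, fit_logistic(X, y)):
    print(f"{name:>15}: {b:+.2f}")
```

In this parameterization, a negative HV-D coefficient means that trials with a higher-valued D (smaller HV-D) go along with more HV choices, which is the sign Chau2014 reported.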

To test whether our results replicate the findings of Chau2014, we analyzed decisions made in the (non-novel) trials that were identical to those used by Chau2014. Note that the choice sets in Chau2014 were generated by sampling magnitude and probabilities for HV, LV, and D until HV-LV and HV-D shared less than 25% variance. The options’ average expected values in the resulting trials were 5.13 for HV (SD = 3.08; min = 0.5; max = 10.5), 3.51 for LV (SD = 3.02; min = 0.25; max = 9), and 4.32 for D (SD = 2.11; min = 0.25; max = 9). Although the overall choice performance in our data was strikingly similar to the original data (Table S1 in Supplementary file 1), the negative effect of HV-D on relative choice accuracy could not be replicated. Instead, the average coefficient was positive but not significant (t(30) = 1.40, p = .171, Cohen’s d = 0.25; left panel of Figure 2A; complete results of all regression analyses for all experiments are reported in Tables S2-S5). Interestingly, analyzing absolute choice accuracy (i.e., including all trials) resulted in a significant positive regression coefficient of HV-D (t(30) = 5.14, p < .001, d = 0.92; right panel of Figure 2A). This suggests that a higher value of D lowered choice accuracy when all trials were taken into account.
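The two accuracy measures differ only in which trials enter the denominator. A minimal Python sketch with hypothetical trial counts (not data from any experiment) shows how more D choices and more too-slow errors can lower absolute accuracy while leaving relative accuracy unchanged.

```python
from collections import Counter


def choice_accuracies(outcomes):
    """Return (relative, absolute) choice accuracy for a list of trial outcomes.

    Outcomes are 'HV', 'LV', 'D', 'empty' or 'too_slow'. Relative accuracy only
    counts HV and LV choices; absolute accuracy counts every trial."""
    counts = Counter(outcomes)
    n_hv_or_lv = counts["HV"] + counts["LV"]
    relative = counts["HV"] / n_hv_or_lv if n_hv_or_lv else float("nan")
    absolute = counts["HV"] / len(outcomes)
    return relative, absolute


# Two hypothetical sets of 100 trials: the second has more D choices and timeouts.
low_value_d = ["HV"] * 70 + ["LV"] * 20 + ["D"] * 5 + ["too_slow"] * 5
high_value_d = ["HV"] * 63 + ["LV"] * 18 + ["D"] * 12 + ["too_slow"] * 7

for label, trials in [("low-value D", low_value_d), ("high-value D", high_value_d)]:
    rel, ab = choice_accuracies(trials)
    print(f"{label}: relative = {rel:.3f}, absolute = {ab:.3f}")
# low-value D: relative = 0.778, absolute = 0.700
# high-value D: relative = 0.778, absolute = 0.630
```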

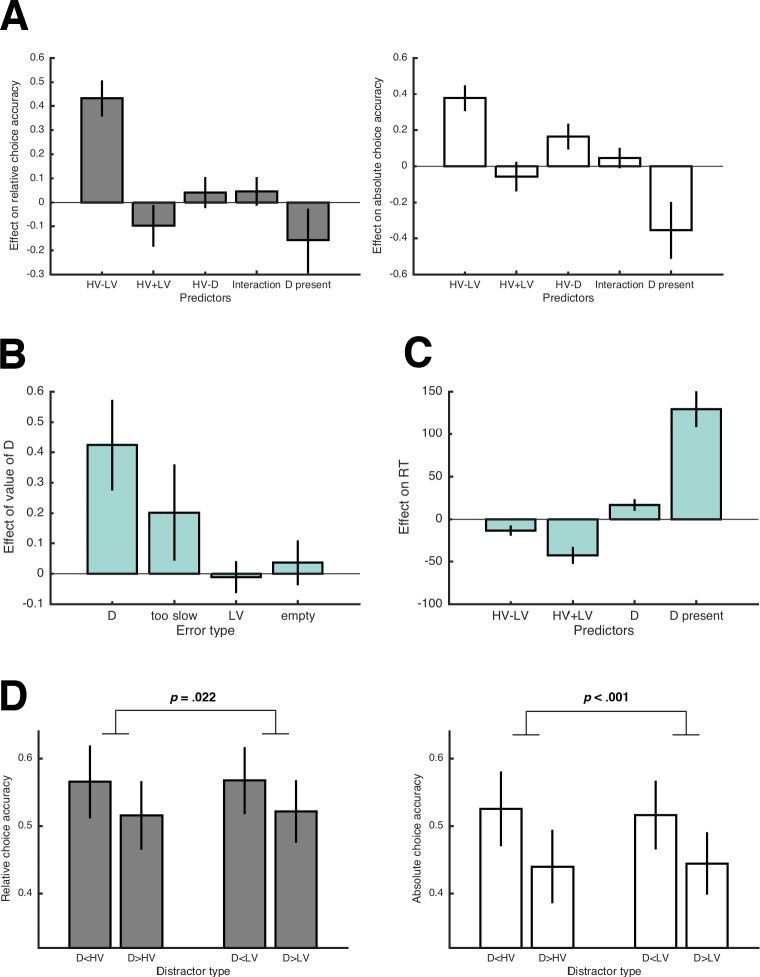

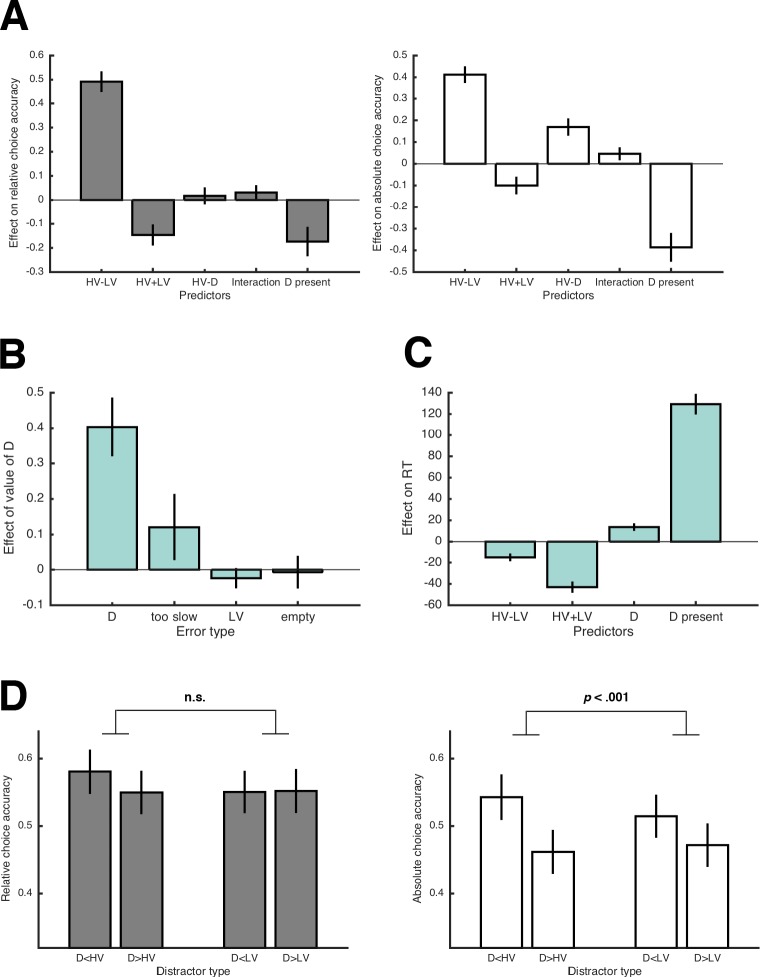

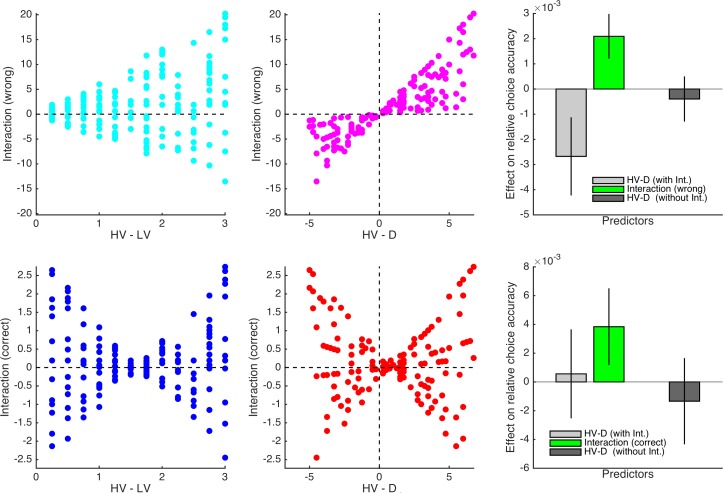

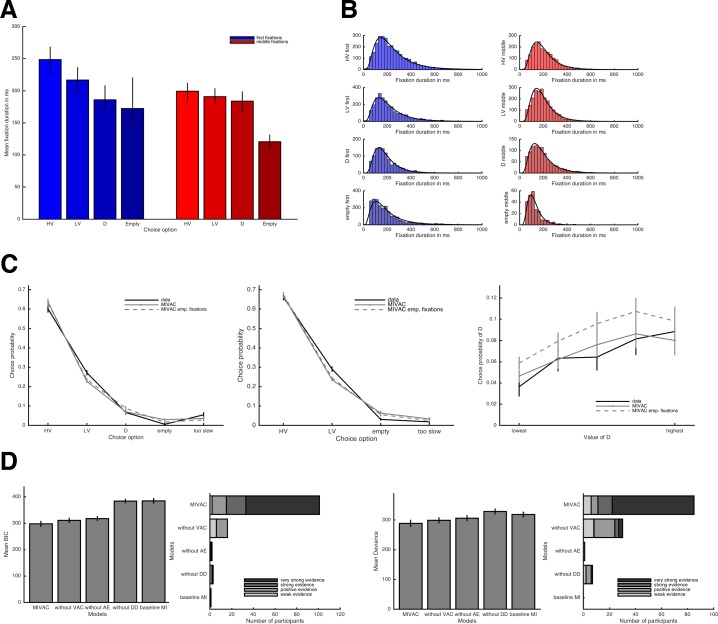

Figure 2. Results of Experiment 1.

(A) Average regression coefficients of the predictor variables proposed by Chau2014 (including the HV-D predictor, which had a negative coefficient in their data) for relative and absolute choice accuracy (left and right panel, respectively). The term ‘interaction’ refers to the predictor variable (HV-LV)×(HV-D). Note that error bars in all figures represent 95% CIs, so that error bars not crossing the 0-line indicate significant effects. (B) Average coefficients of (separate) regression analyses testing how the value of D influenced different types of errors. (C) Average regression coefficients of predictor variables that influenced RT. (D) Relative and absolute choice accuracy in the novel trials (compare with predictions in Figure 1D). The comparatively low performance in the novel trials is due to HV and LV having very similar expected values (see Figure 1C).

To better understand why D’s value has this negative impact on the probability of choosing HV, we analyzed the different possible errors (i.e., choosing LV, choosing D, choosing the empty quadrant, and being too slow) by testing whether they were predicted by D’s value. The value of D had a significant effect on the probability of choosing D (t(28) = 5.92, p < .001, d = 1.11) and also on being too slow (t(29) = 2.66, p = .013, d = 0.49), whereas the probabilities of choosing LV (t(30) = −0.47, p = .645, d = −0.08) or the empty quadrant (t(23) = 1.07, p = .294, d = 0.22) were unaffected (Figure 2B). Notably, higher values of D also slowed down response times (RT) for HV and LV choices (t(30) = 6.17, p < .001, d = 1.11; Figure 2C).
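The effect sizes reported alongside these one-sample t tests agree with the conversion d = t/√n, with n = df + 1 participants. The short Python check below recomputes four of the Experiment 1 values from their t statistics; it is a consistency check on the reported numbers, not a statement of the exact computation used.

```python
import math


def cohens_d_one_sample(t, df):
    """Cohen's d for a one-sample t test: d = t / sqrt(n), where n = df + 1."""
    return t / math.sqrt(df + 1)


# (t, df) pairs from the Experiment 1 results above
reported = [(2.66, 29), (-0.47, 30), (1.07, 23), (6.17, 30)]
for t, df in reported:
    print(f"t({df}) = {t:+.2f} -> d = {cohens_d_one_sample(t, df):+.2f}")
# prints d = +0.49, -0.08, +0.22 and +1.11
```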

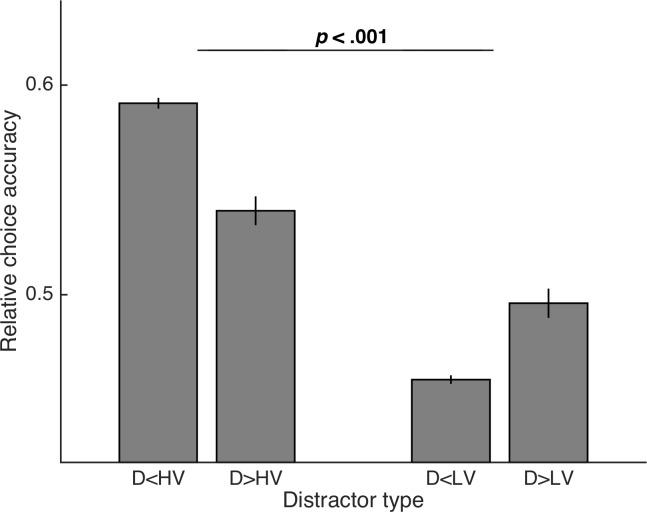

We then looked at choice accuracy in the novel trials that we added to differentiate between specific context effects and the models proposed by Louie2013 and Chau2014. Performance was higher in trials with Ds that were dominated by either HV or LV (Figure 2D), which is the opposite of what is predicted by the Chau2014 model (Figure 1D). This effect of Dominance was significant with respect to both relative choice accuracy (F(1,30) = 5.85; p = .022, ηp² = .16) and absolute choice accuracy (F(1,30) = 18.89; p < .001, ηp² = .39), the former being consistent with divisive normalization, the latter being consistent with value-based attentional capture (see Figure 1D; but note that, inconsistent with divisive normalization, we could not replicate the effect on relative choice accuracy in Experiments 2 and 3; see Figure 5 and Tables S6-S7 for complete results). Overall, behavior in the novel trials supported the notion that higher-valued Ds impair multi-alternative decision making.
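Partial eta squared can be recovered from an F statistic with ηp² = F·df_effect / (F·df_effect + df_error). The Python check below reproduces the two Dominance effect sizes from their F values and degrees of freedom.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return f * df_effect / (f * df_effect + df_error)


print(round(partial_eta_squared(5.85, 1, 30), 2))   # relative accuracy: 0.16
print(round(partial_eta_squared(18.89, 1, 30), 2))  # absolute accuracy: 0.39
```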

In summary, we could not replicate the results on relative choice accuracy and the IIA violations reported by Chau2014 but obtained opposite effects. At first glance, these results seem to support the divisive normalization account by Louie2013 as an appropriate mechanistic explanation. Note, however, that this account specifically predicts changes in relative choice accuracy, for which we found only weak evidence (i.e., no effect of HV-D in the regression, no effect of D’s value on LV-errors). By contrast, the effects on absolute choice accuracy were stronger. Accordingly, we reasoned that the results are better explained by value-based attentional capture: This account states that attention is modulated by value such that value-laden stimuli attract attention and impair goal-directed actions, even if those stimuli are irrelevant to the task (Anderson, 2016; Anderson et al., 2011; Grueschow et al., 2015; Le Pelley et al., 2016; Peck et al., 2009; Yasuda et al., 2012). With respect to Chau2014’s paradigm, value-based attentional capture predicts that high-value Ds draw attention to a greater extent than low-value Ds, which leaves fewer cognitive resources for the decision between HV and LV. Consequently, decisions slow down, leading to longer RT and more too-slow errors, and choices of D increase, but the relative probability of choosing HV over LV is unaffected, implying no violation of IIA. In Experiments 2 and 3, we sought to establish value-based attentional capture as the underlying mechanism by testing different predictions that this explanation (but not divisive normalization) makes.
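These predictions can be illustrated with a toy simulation in Python. The sketch is hypothetical throughout: it is not the MIVAC model, every parameter value is invented for illustration, and the empty quadrant is omitted. On each time step one option is fixated with a probability given by a softmax of its value; only the fixated option's accumulator moves, and evidence for D is down-weighted because D is known to be unavailable. Because the softmax ratio of HV to LV fixations does not depend on D's value, D cannot bias the HV-versus-LV comparison directly; it can only delay that race, win it, or push the trial past the deadline.

```python
import math
import random


def simulate_trial(v_hv, v_lv, v_d, rng, theta=0.3, drift=0.02, d_gain=0.1,
                   noise=0.1, bound=1.0, max_steps=160):
    """One trial of a toy attention-gated race (all parameters hypothetical).

    Returns (choice, n_steps), where choice is 'HV', 'LV', 'D' or 'too_slow'."""
    values = {"HV": v_hv, "LV": v_lv, "D": v_d}
    options = list(values)
    # Value-based attentional capture: fixation probabilities are a softmax of value.
    weights = [math.exp(theta * values[k]) for k in options]
    evidence = dict.fromkeys(options, 0.0)
    for step in range(1, max_steps + 1):
        fixated = rng.choices(options, weights=weights)[0]
        gain = d_gain if fixated == "D" else 1.0  # D is known to be unavailable
        evidence[fixated] += gain * drift * values[fixated] + rng.gauss(0.0, noise)
        if evidence[fixated] >= bound:
            return fixated, step
    return "too_slow", max_steps


def summarize(v_d, v_hv=6.0, v_lv=4.0, n_trials=2000, seed=1):
    """Choice and RT statistics over many simulated trials for one D value."""
    rng = random.Random(seed)
    results = [simulate_trial(v_hv, v_lv, v_d, rng) for _ in range(n_trials)]
    n = {k: sum(choice == k for choice, _ in results)
         for k in ("HV", "LV", "D", "too_slow")}
    rts = [steps for choice, steps in results if choice in ("HV", "LV")]
    return {
        "relative_acc": n["HV"] / (n["HV"] + n["LV"]),
        "absolute_acc": n["HV"] / n_trials,
        "p_choose_D": n["D"] / n_trials,
        "mean_steps_HV_LV": sum(rts) / len(rts),
    }


for v_d in (1.0, 9.0):
    stats = summarize(v_d)
    print(f"D value {v_d}: " + ", ".join(f"{k} = {v:.3f}" for k, v in stats.items()))
```

Comparing the two printed rows shows the qualitative signature described above: with a high-value D, HV and LV choices take more steps, D is chosen more often, and absolute accuracy falls.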

Experiment 2: Distractor effects depend on decision time

If attentional capture is the driving force behind the performance decrease in the Chau2014 task, then increasing available attentional capacity should improve choice accuracy and reduce the detrimental effects of D. In contrast, divisive normalization effects do not seem to require the imposition of time pressure (see Louie2013). Based on this rationale, we conducted a second behavioral experiment in which we compared two groups: A high time pressure group (Group HP; n2,HP = 25) did exactly the same task as in Experiment 1, whereas a low time pressure group (Group LP; n2,LP = 24) was given more time to decide (6 s instead of 1.5 s). According to the attentional-capture account, we should replicate the results of Experiment 1 under high time pressure, but under low time pressure the negative influences of D on (absolute) choice accuracy should disappear. For the novel trial sets, we expected a choice accuracy pattern in line with multi-attribute context effects (see Figure 1D), given that these effects are known to become more prominent with longer deliberation time (Dhar et al., 2000; Pettibone, 2012; Trueblood et al., 2014).

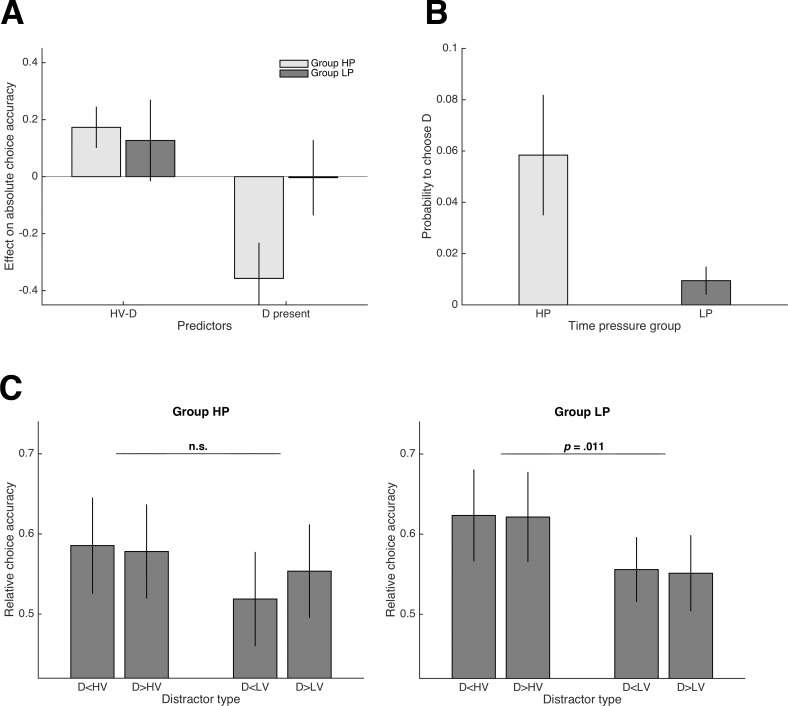

Consistent with Experiment 1, we could not replicate the HV-D effect on relative choice accuracy reported in Chau2014. Again, our results showed a tendency in the opposite direction (t(24) = 0.50, p = .622, d = 0.10). With respect to absolute choice accuracy, there was a strong and significantly positive effect of HV-D in Group HP of Experiment 2 (t(24) = 5.05, p < .001, d = 1.01). In contrast and as predicted, this effect was much weaker and did not reach significance in Group LP (t(23) = 1.85, p = .079, d = 0.38; but note that the difference between groups was not significant: t(47) = 0.61, p = .544, d = 0.17; Figure 3A). Similarly, only participants of Group HP were less accurate in trials with D present compared to trials without D (Group HP: t(24) = -5.97, p < .001, d = -1.19; Group LP: t(23) = -0.06, p = .956, d = -0.01; group difference: t(47) = -4.07, p < .001, d = -1.16; Figure 3A). Choices of D were significantly more frequent in Group HP (5.8% vs. 0.9% of trials; t(47) = 4.15, p < .001, d = 1.20; Figure 3B). With respect to the novel trial set, we could not replicate the main effect of Dominance on relative choice accuracy in Group HP that we had found in Experiment 1 (F(1,24) = 0.25; p = .622, ηp² = .01; left panel of Figure 3C). In Group LP, there was a significant main effect of Similarity (F(1,23) = 9.20; p = .011, ηp² = .25; right panel of Figure 3C) that was absent in all of our experiments conducted under high time pressure. This (IIA-violating) effect was due to a higher relative choice accuracy when D was more similar to HV than to LV in the two-dimensional attribute space and is consistent with a combination of an attraction effect (Huber et al., 1982) and a phantom-decoy effect (Pettibone and Wedell, 2007; Pratkanis and Farquhar, 1992; see Figure 1D and Materials and methods).

Figure 3. Results of Experiment 2.

(A) Group comparison of regression coefficients reflecting the influence of HV-D and the presence of D on absolute choice accuracy (HP = high time pressure, LP = low time pressure). (B) Group comparison of the frequency of choosing D. In general, the negative influence of D on performance diminished substantially in Group LP, who made decisions under low time pressure. (C) Relative choice accuracy in the novel trials for Group HP (left panel) and LP (right panel). A violation of IIA was only observed in Group LP and is consistent with a combined attraction and phantom-decoy effect (compare with predictions in Figure 1D; see also Materials and methods).

Taken together, while Group HP replicated the results of Experiment 1 in most aspects, Group LP showed that the negative impact of D disappeared when time pressure was alleviated, lending further support to a value-based attentional capture account. In addition, only Group LP exhibited an IIA-violating choice pattern in the novel trials consistent with specific context effects, supporting the notion that such effects require longer deliberation times to emerge (Dhar et al., 2000; Pettibone, 2012; Trueblood et al., 2014).

Experiment 3: Value affects attention and attention affects decisions

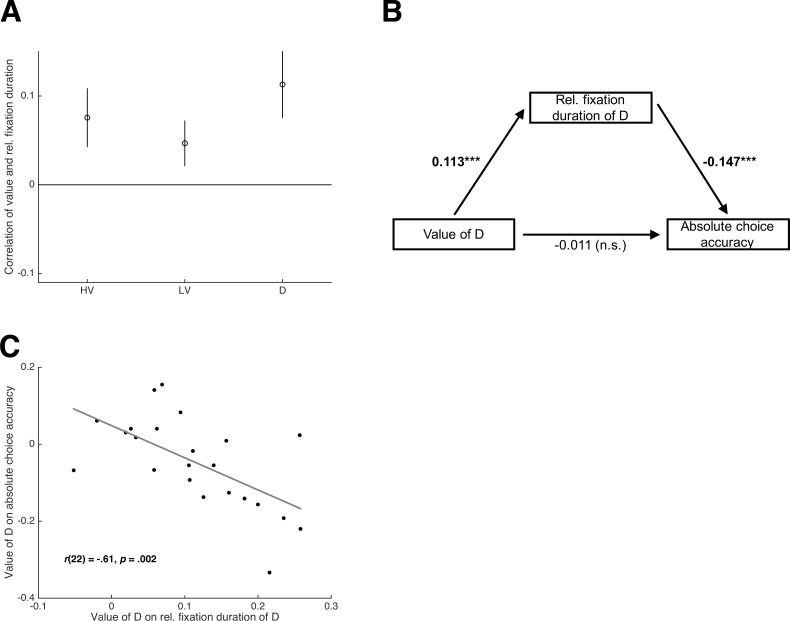

Value-based attentional capture predicts an effect of the value of D on choice accuracy that is mediated by attention. In other words, a higher value of D leads to more attention to D, which in turn impairs absolute choice accuracy. The influence of value on eye movements has been referred to as value-based oculomotor capture and is thought to underlie the (behavioral) effect of value-based attentional capture (Failing et al., 2015; Le Pelley et al., 2015; Pearson et al., 2016). To directly test whether value-based oculomotor capture drives the negative influence of D on decision making in the Chau2014 paradigm, we recorded eye movements as a measure of attention in Experiment 3 (n3 = 23 participants), using the same paradigm as in Experiments 1 and 2 (Group HP).

The behavioral results of this experiment were in line with our previous experiments (see Tables S2-S7 for statistical results): The negative HV-D effect on relative choice accuracy reported in Chau2014 could not be replicated, but its effect on absolute choice accuracy was significantly positive; the value of D led to more choices of D and slowed down choices of HV and LV; there was a main effect of Dominance on absolute (but not relative) choice accuracy in the novel trial set. With respect to the eye-movement results, we first tested whether options of higher value received more attention, defined as relative fixation duration (see Materials and methods). To this end, we calculated within each participant the correlation between expected value and relative fixation duration separately for HV, LV, and D. These correlations were consistently higher than zero (HV: t(22) = 4.79, p < .001, d = 1.00; LV: t(22) = 3.84, p < .001, d = 0.80; D: t(22) = 6.25, p < .001, d = 1.30; Figure 4A). Second, we asked whether the dependency of attention to D on D’s value mediated the negative influence of the latter on choice accuracy. A path analysis confirmed this prediction (Figure 4B): In the path model, the value of D had a positive effect on attention to D (t(22) = 6.30, p < .001, d = 1.31), which in turn had a negative effect on choice accuracy (t(22) = −6.79, p < .001, d = −1.42). In fact, the influence of D’s value on choice accuracy was fully mediated by attention, as the direct path was not significant (t(22) = −0.76, p = .455, d = −0.16). Finally, we tested whether participants whose attention was affected by D’s value to a greater extent also showed a stronger negative influence of D’s value on absolute choice accuracy. 
This was confirmed by a significantly negative correlation across participants between the coefficient quantifying the correlation between D-value and D-fixations and the coefficient quantifying the negative impact of D-value on absolute choice accuracy (r(21) = −0.61; p = .002; Figure 4C). Altogether, the eye-movement results strongly support value-based attentional and oculomotor capture as being the underlying mechanism of sub-optimality in the Chau2014 paradigm (see Materials and methods, Figure 4—figure supplement 1 and Figure 7—figure supplement 3 for further eye-tracking results).
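The logic of the within-participant path analysis can be sketched in Python, assuming numpy is available. This is a simplified stand-in rather than the estimator used above: every path is estimated with standardized ordinary least squares (a linear-probability simplification for the binary accuracy outcome), and the data are simulated for one hypothetical participant in which D's value affects accuracy only through attention to D.

```python
import numpy as np


def zscore(x):
    """Standardize a 1-D array to mean 0 and SD 1."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std()


def standardized_ols(y, *predictors):
    """Standardized least-squares slopes of y on the predictors (intercept dropped)."""
    X = np.column_stack([np.ones(len(y))] + [zscore(p) for p in predictors])
    beta, *_ = np.linalg.lstsq(X, zscore(y), rcond=None)
    return beta[1:]


def path_coefficients(d_value, d_attention, correct):
    """Paths of a simple mediation model for one participant:
    a       = D value -> attention to D
    b       = attention to D -> accuracy, controlling for D value
    c_prime = direct path D value -> accuracy, controlling for attention"""
    (a,) = standardized_ols(d_attention, d_value)
    b, c_prime = standardized_ols(correct, d_attention, d_value)
    return {"a": a, "b": b, "c_prime": c_prime, "indirect": a * b}


# Hypothetical participant: D value -> relative fixation duration on D -> accuracy.
rng = np.random.default_rng(2)
n_trials = 1000
d_value = rng.uniform(0.25, 9.0, n_trials)
d_attention = np.clip(0.1 + 0.03 * d_value + rng.normal(0.0, 0.08, n_trials), 0.0, 1.0)
p_correct = 1.0 / (1.0 + np.exp(-(2.5 - 8.0 * d_attention)))
correct = (rng.random(n_trials) < p_correct).astype(float)

for name, value in path_coefficients(d_value, d_attention, correct).items():
    print(f"{name:>8}: {value:+.2f}")
```

In the analysis reported above, coefficients of this kind are obtained within each participant and then tested against zero across participants.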

Figure 4. Eye-tracking results of Experiment 3.

(A) Average coefficients for the correlation of the value of HV, LV, and D with the relative fixation duration on HV, LV, and D. (B) Path analysis of the relationship between the value of D, the attention on D (i.e., relative fixation duration of D), and absolute choice accuracy. The path analysis is conducted within each participant; the numbers represent the average path coefficients (tested against 0; ***p < .001). (C) Across-participant correlation of the coefficients representing the (positive) influence of the value of D on attention on D and the (negative) influence of the value of D on absolute choice accuracy.

Figure 4—figure supplement 1. Testing the influence of attention on choice.

Experiment 4 and reanalysis of the Chau2014 dataset

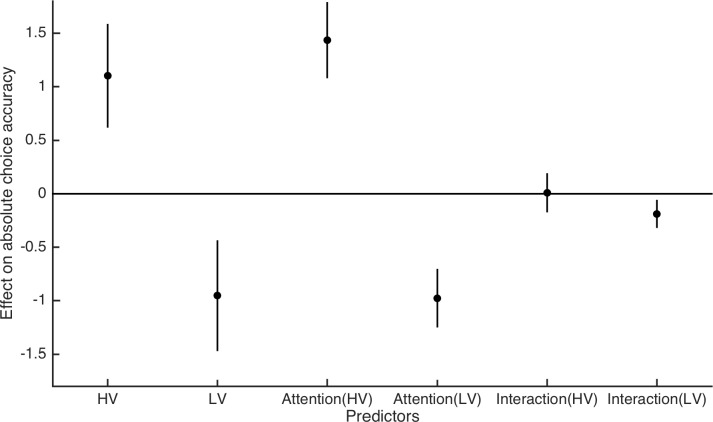

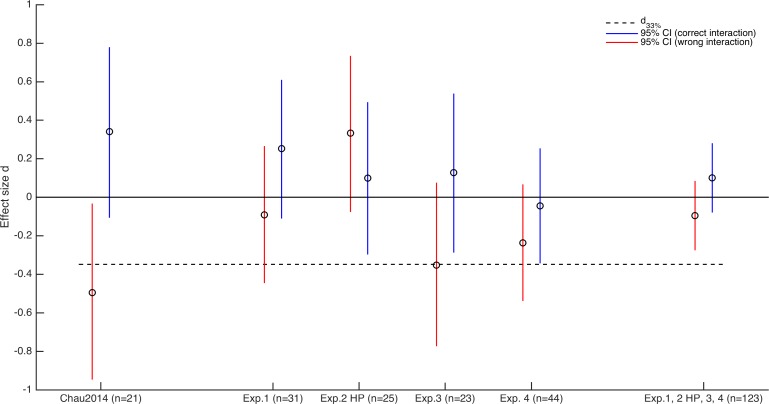

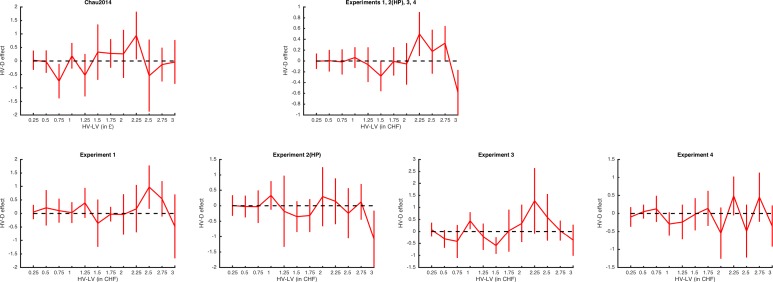

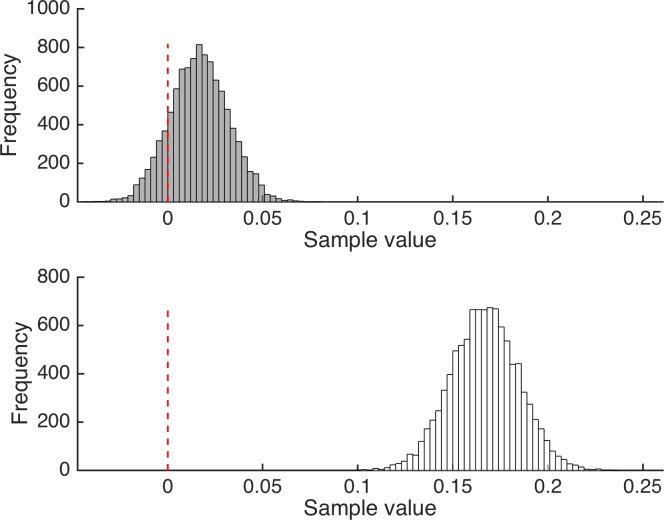

We conducted a fourth experiment, for which we used the exact trial sequences of Chau2014 (provided to us by the authors) and omitted the novel trials. Besides replicating the effects of value-based attentional capture, another goal of Experiment 4 was to test whether we could find the HV-D effect on relative choice accuracy when making the experiment almost identical to Chau2014. The statistical results of this experiment with n4 = 44 participants are provided in Tables S2-S5 and are in line with Experiments 1 to 3: The negative HV-D effect on relative choice accuracy could not be replicated, there was a significantly positive HV-D effect on absolute choice accuracy, and higher values of D led to significantly more choices of D and to longer RT when choosing HV or LV. Figure 5 summarizes the behavioral results collapsed over all experiments conducted under high time pressure. This summary provides clear evidence that there were no robust effects on relative choice accuracy (and thus no violations of IIA) but strong effects on absolute choice accuracy consistent with the value-based attentional capture account.

Figure 5. Summary of behavioral results of Experiments 1 to 4.

(A) to (D) are analogous to Figure 2 but collapsed over all experiments conducted under high time pressure. Results in (D) only contain data from Experiments 1 to 3, because novel trials were omitted in Experiment 4.

Figure 5—figure supplement 1. Testing the assumption that participants decided on the basis of expected values (EVs).

Figure 5—figure supplement 2. The influence of the interaction term (HV-LV)×(HV-D) on the estimation of the HV-D effect.

Figure 5—figure supplement 3. Effect sizes and test of detectability.

Figure 5—figure supplement 4. Analysis of the HV-D effect on relative choice accuracy for different levels of HV-LV.

Figure 5—figure supplement 5. Bayesian analyses of the HV-D Effect on relative and absolute choice accuracy.

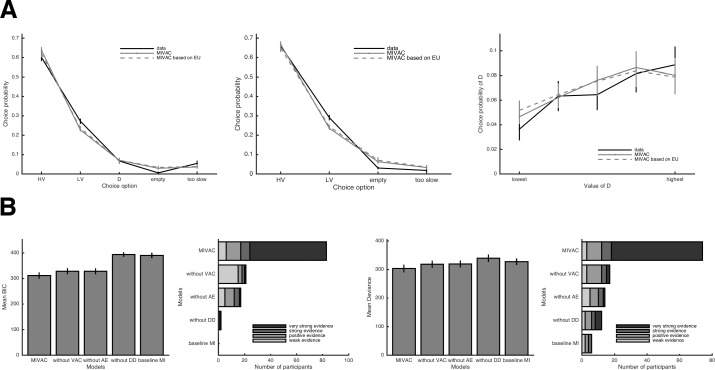

Notably, most of the behavioral data analyses in Chau2014 and our study relied on the assumption that participants can integrate magnitude and probability to compute the expected value (EV = probability × magnitude) of each option and choose the option with the larger EV. We tested this assumption by comparing a simple EV-based choice model with two models that assumed that participants focused on only one attribute (i.e., either magnitude or probability), as well as with an expected utility (EU) model (e.g., Von Neumann and Morgenstern, 1947) and prospect theory (Kahneman and Tversky, 1979; Tversky and Kahneman, 1992; see Materials and methods). In brief, we found that the EV model explained the choice data better than the single-attribute models, but that the EU model provided the best account of the data (Figure 5—figure supplement 1A). The additional complexity of prospect theory compared to EU was not justified by an increased model fit. For reasons of simplicity and comparability to Chau2014, we used the EV-based value estimates for most statistical and modeling analyses. As a robustness check, however, we reanalyzed our main behavioral tests after replacing EVs with EU-based subjective values. The results were largely unaffected by this change (Figure 5—figure supplement 1B).
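The structure of such a model comparison can be sketched in Python. The sketch rests on several assumptions that are not taken from our Materials and methods: a power utility u(m) = m^α for the EU model, a logistic (softmax) choice rule between two options with inverse temperature β, grid-search maximum likelihood, and BIC as the comparison criterion. Prospect theory is omitted, and the gambles and choices are simulated from a hypothetical EU agent rather than taken from the experiments.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


# Subjective value functions v(p, m, alpha); alpha is only used by EU.
MODELS = {
    "EV": (lambda p, m, a: p * m, [None]),
    "magnitude-only": (lambda p, m, a: m, [None]),
    "probability-only": (lambda p, m, a: p, [None]),
    "EU": (lambda p, m, a: p * m ** a, [0.1 * k for k in range(1, 16)]),
}
BETAS = [0.1 * 1.3 ** k for k in range(25)]  # inverse temperatures, 0.1 to ~54


def log_likelihood(va, vb, chose_a, beta):
    """Log-likelihood of binary choices under a logistic rule on value differences."""
    ll = 0.0
    for a, b, c in zip(va, vb, chose_a):
        p_a = min(max(sigmoid(beta * (a - b)), 1e-12), 1.0 - 1e-12)
        ll += math.log(p_a if c else 1.0 - p_a)
    return ll


def fit_model(value_fn, alphas, trials, chose_a):
    """Grid-search maximum likelihood over alpha (if any) and beta."""
    best_ll, best_params = -math.inf, None
    for alpha in alphas:
        va = [value_fn(p, m, alpha) for (p, m), _ in trials]
        vb = [value_fn(p, m, alpha) for _, (p, m) in trials]
        for beta in BETAS:
            ll = log_likelihood(va, vb, chose_a, beta)
            if ll > best_ll:
                best_ll, best_params = ll, (alpha, beta)
    return best_ll, best_params


def bic(ll, n_params, n_obs):
    return n_params * math.log(n_obs) - 2.0 * ll


# Simulated gambles (probability, magnitude) and choices of a hypothetical EU agent.
rng = random.Random(0)
trials = [((rng.uniform(0.1, 0.9), rng.uniform(2.0, 10.0)),
           (rng.uniform(0.1, 0.9), rng.uniform(2.0, 10.0))) for _ in range(500)]
chose_a = [rng.random() < sigmoid(3.0 * (pa * ma ** 0.5 - pb * mb ** 0.5))
           for (pa, ma), (pb, mb) in trials]

results = {}
for name, (value_fn, alphas) in MODELS.items():
    ll, params = fit_model(value_fn, alphas, trials, chose_a)
    n_params = 2 if name == "EU" else 1
    results[name] = (bic(ll, n_params, len(trials)), params)
    alpha, beta = params
    alpha_txt = "n/a" if alpha is None else f"{alpha:.1f}"
    print(f"{name:>16}: BIC = {results[name][0]:7.1f}, alpha = {alpha_txt}, beta = {beta:.2f}")
```

Lower BIC indicates a better trade-off between fit and complexity; the exact values depend on the simulated sample.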

We also reanalyzed the data of Chau2014 to look for further evidence of value-based attentional capture. As in our own experiments, we found that HV-D was positively linked to absolute choice accuracy (t(20) = 4.53, p < .001, d = 0.99), and that higher values of D led both to more erroneous choices of D (t(19) = 4.67, p < .001, d = 1.05) and to longer RTs when choosing HV or LV (t(20) = 2.94, p = .008, d = 0.64). Thus, the data of Chau2014 also support value-based attentional capture. In addition, multiple analyses detailed in Materials and methods and in Figure 5—figure supplement 2 to Figure 5—figure supplement 5 show that the originally reported negative effect of HV-D on relative choice accuracy is a statistical artifact caused by an incorrect implementation of the interaction term (HV-LV)×(HV-D) in the regression analysis. After this error is corrected, the effect of HV-D disappears.
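Why the construction of an interaction term matters can be illustrated with simulated predictors. The sketch below does not reconstruct the specific error in the Chau2014 analysis (see Materials and methods); it only shows, for hypothetical uniformly distributed values of HV-LV (always positive) and HV-D (either sign), that a product of uncentered predictors is strongly correlated with HV-D, whereas the product of mean-centered predictors is nearly uncorrelated with it. With the uncentered product in the model, the coefficient on HV-D describes its effect at HV-LV = 0, a value outside the range of the data, so its estimate depends heavily on how the interaction is built.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5

rng = random.Random(1)
n = 5000
# Hypothetical predictor ranges: HV-LV is always positive, HV-D can take either sign.
hv_lv = [rng.uniform(0.5, 5.0) for _ in range(n)]
hv_d = [rng.uniform(-3.0, 5.0) for _ in range(n)]

mean_lv, mean_d = sum(hv_lv) / n, sum(hv_d) / n
raw_product = [a * b for a, b in zip(hv_lv, hv_d)]
centered_product = [(a - mean_lv) * (b - mean_d) for a, b in zip(hv_lv, hv_d)]

r_raw = pearson(raw_product, hv_d)            # collinear with the HV-D main effect
r_centered = pearson(centered_product, hv_d)  # close to zero
print(round(r_raw, 3), round(r_centered, 3))
```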

A computational model integrating value, attention, and decision making

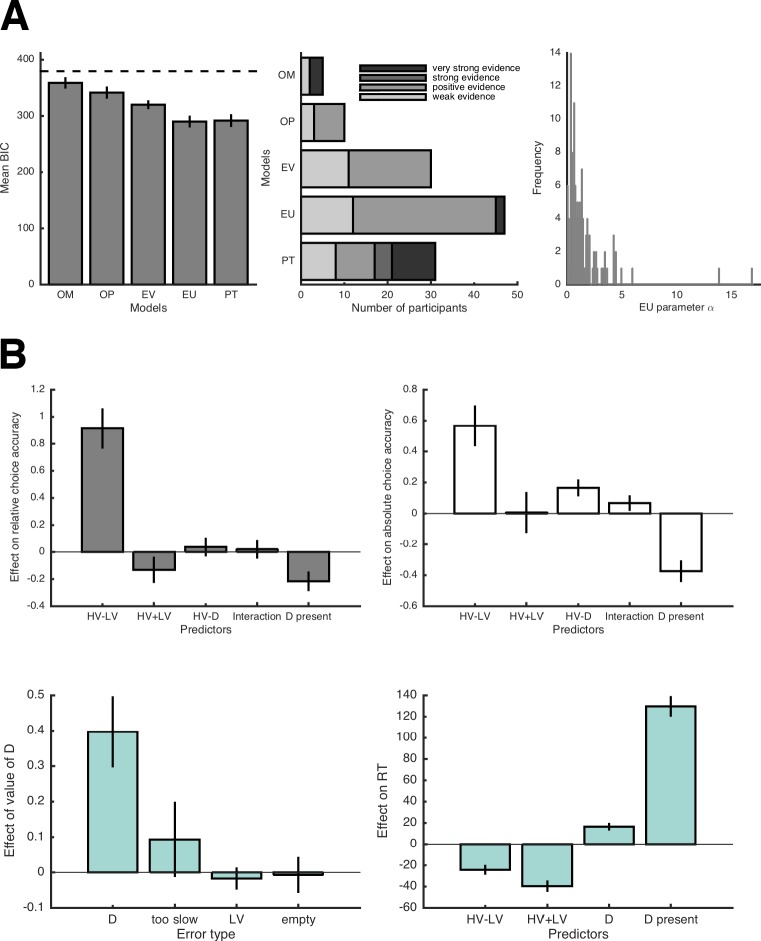

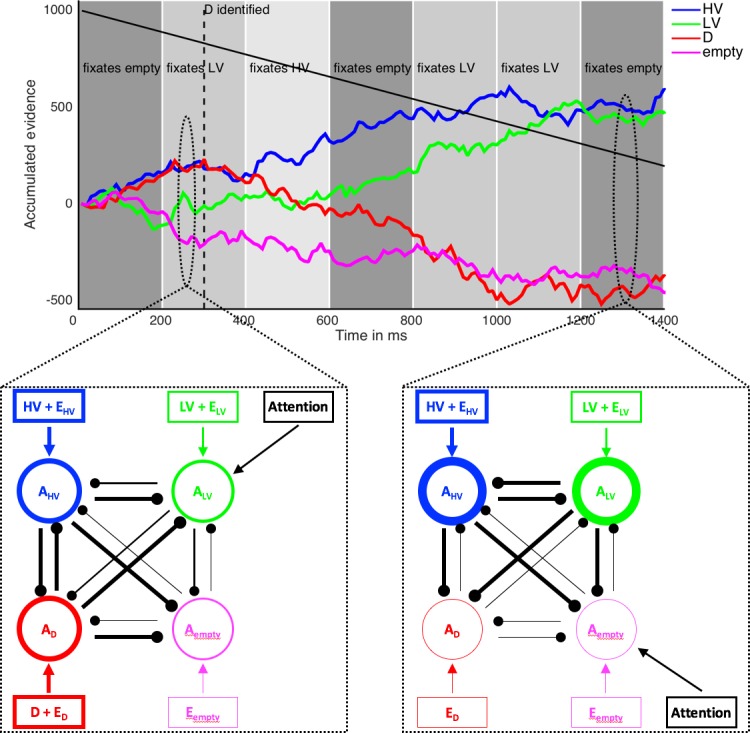

Although value-based attentional capture is a well-established empirical finding (Anderson, 2016; Le Pelley et al., 2016), to the best of our knowledge it has not yet been implemented in a decision-making model. In the following, we propose and test the Mutual Inhibition with Value-based Attentional Capture (MIVAC) model, which accounts for the complex interplay of value and attention in choice (Figure 6; details are provided in Materials and methods). MIVAC is an extended mutual inhibition (MI) model, a sequential sampling model that assumes a noisy race-to-bound mechanism of separate, leaky, and mutually inhibiting accumulators for each choice option (Bogacz et al., 2006; Usher and McClelland, 2001). Notably, the MI model (without the extensions we propose for MIVAC) is equivalent to a mean-field reduction of the cortical attractor model applied by Chau2014 (Bogacz et al., 2006; Wang, 2002; Wong and Wang, 2006), and it is indeed capable of predicting a positive effect of the value of D on relative choice accuracy (see Figure 6—figure supplement 1). MIVAC assumes one accumulator per choice option, that is, four accumulators in the case of the Chau2014 paradigm (for HV, LV, D, and the empty quadrant). A choice is made as soon as an accumulator reaches an upper boundary that collapses over time (to account for the time limit of the task; see, for example, Gluth et al., 2012; Gluth et al., 2013a; Gluth et al., 2013b; Hutcherson et al., 2015; Murphy et al., 2016). Based on our behavioral and eye-movement results, we propose three additional mechanisms. First and foremost, value-based attentional capture is implemented by assuming that the probability of fixating a particular option depends on its expected value; in other words, more valuable options receive more attention. Second, the input to the accumulator of the currently fixated option is enhanced, consistent with the influence of attention on choice reported in previous work (Cavanagh et al., 2014; Krajbich and Rangel, 2011; Krajbich et al., 2010; Shimojo et al., 2003) and observed in our own data (see Figure 4—figure supplement 1 and Materials and methods). Finally, D can be identified as unavailable, in which case the expected value of D is assumed to be 0, and its accumulation rate and fixation probability are adjusted accordingly. This feature is specific to the Chau2014 paradigm, in which participants are instructed not to choose D, and can be omitted for other experimental paradigms.
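The following Python sketch simulates single trials of a MIVAC-like race between four leaky, mutually inhibiting accumulators, following the verbal description above and the example in Figure 6 (inputs 7.5, 5, 10.5, and 0; a new fixation every 200 ms; D identified at 300 ms). It is not the authors' implementation: the discrete 1-ms update, the truncation of accumulators at zero, the softmax rule linking values to fixation probabilities (`theta`), the linearly collapsing bound, and all parameter values are assumptions chosen for illustration.

```python
import math
import random

OPTIONS = ("HV", "LV", "D", "empty")
D_INDEX = 2

def fixation_probs(values, theta):
    """Value-based attentional capture (assumed softmax form): richer options are fixated more often."""
    m = max(values)
    weights = [math.exp(theta * (v - m)) for v in values]
    total = sum(weights)
    return [w / total for w in weights]

def simulate_trial(values, rng, input_gain=0.01, leak=0.005, inhibition=0.002,
                   noise_sd=0.1, attention_boost=0.03, theta=0.3,
                   fixation_ms=200, d_detect_ms=300, bound0=25.0, bound_end=5.0,
                   time_limit_ms=1500):
    """Return (choice, RT in ms); all parameter values are hypothetical."""
    values = list(values)
    acc = [0.0] * len(values)
    fixated = 0
    for t in range(time_limit_ms):
        if t == d_detect_ms:
            values[D_INDEX] = 0.0  # D identified as unavailable: treated as worth 0
        if t % fixation_ms == 0:  # a new fixation every fixation_ms
            probs = fixation_probs(values, theta)
            fixated = rng.choices(range(len(values)), weights=probs)[0]
        total = sum(acc)
        new_acc = []
        for i, a in enumerate(acc):
            drive = input_gain * values[i]
            if i == fixated:
                drive += attention_boost  # additive attentional enhancement
            lateral = inhibition * (total - a)  # inhibition from all other accumulators
            a_next = (1.0 - leak) * a + drive - lateral + rng.gauss(0.0, noise_sd)
            new_acc.append(max(0.0, a_next))
        acc = new_acc
        bound = bound0 - (bound0 - bound_end) * t / time_limit_ms  # collapsing boundary
        winner = max(range(len(acc)), key=acc.__getitem__)
        if acc[winner] >= bound:
            return OPTIONS[winner], t + 1
    return "too slow", time_limit_ms

rng = random.Random(0)
results = [simulate_trial((7.5, 5.0, 10.5, 0.0), rng) for _ in range(100)]
counts = {name: sum(choice == name for choice, _ in results) for name in OPTIONS + ("too slow",)}
print(counts)
```

Varying the third input (the value of D) in such a sketch is one way to explore how value-based fixation probabilities translate into choices of D and into slower decisions.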

Figure 6. Illustration of the computational model MIVAC.

Depicted is the development of accumulators of the MIVAC model with estimated parameters from a representative example participant in an example trial (upper panel) together with schematic outlines of the model at two different time points (lower panels). MIVAC consists of four accumulators representing HV, LV, D, and the empty quadrant. At every time step, each accumulator A_x (round nodes in lower panels) receives an input (rectangular nodes) equal to the expected value of x (set to 7.5, 5, 10.5, and 0 in this example) plus Gaussian noise E_x. Accumulators inhibit each other (connecting lines between round nodes). Thicker round nodes, rectangular nodes/arrows, and lines indicate higher accumulation states, input, and inhibition, respectively. A choice is made as soon as an accumulator reaches an upper boundary that decreases with time (decreasing black line in upper panel). In this example, HV is chosen after ~900 ms. After D is identified (at 300 ms in this example; dashed vertical line in upper panel), the value of D no longer serves as an input to its accumulator. Every 200 ms, a new fixation is made (background greyscale), and the accumulator of the currently fixated option receives an additional input (black ‘Attention’ rectangular nodes). According to the value-based attentional capture element of MIVAC, the probability of fixating an option depends on its (relative) value.

Figure 6—figure supplement 1. The influence of D on relative choice accuracy in the MI and MIVAC models.

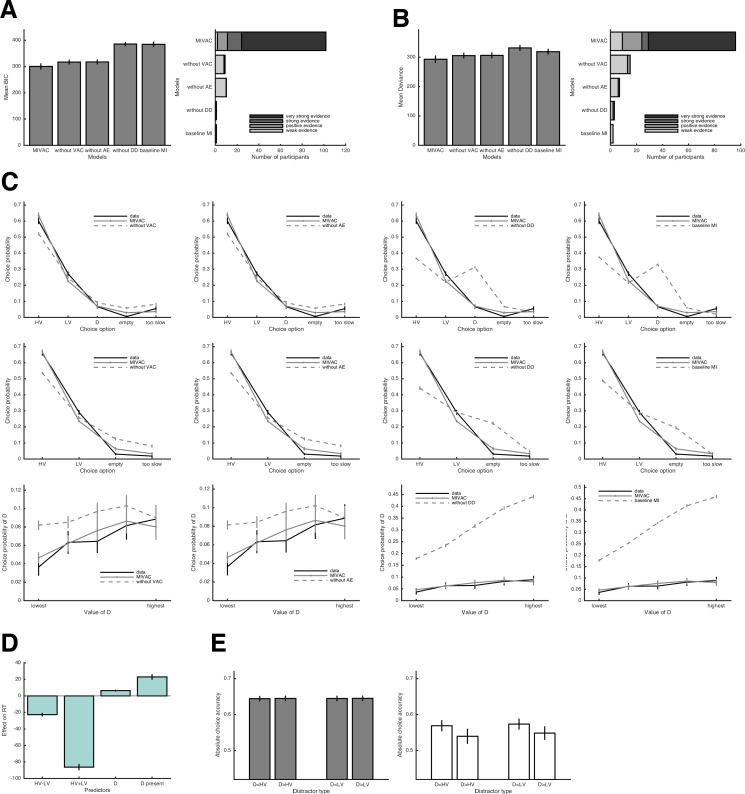

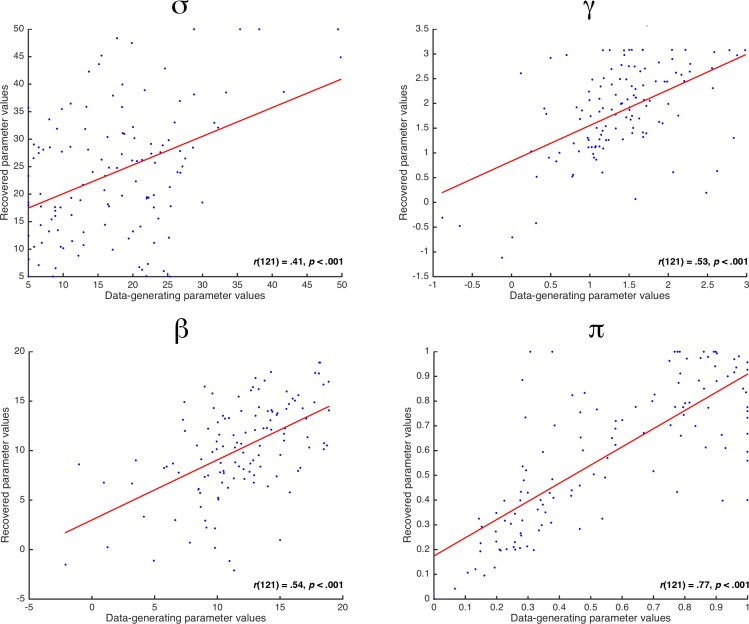

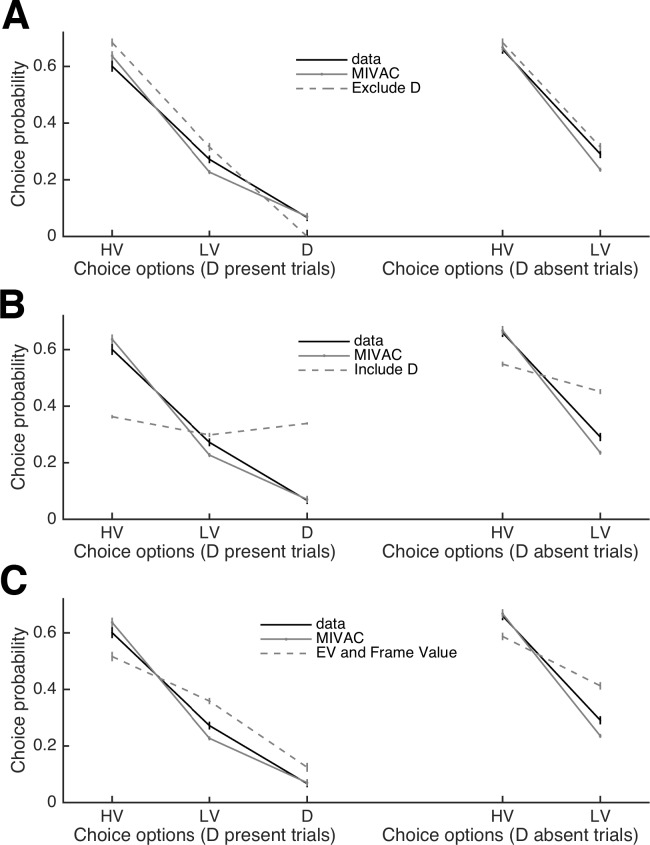

We propose MIVAC to explain the central behavioral findings across all experiments, and we show that simpler models lacking its added mechanisms (in particular, value-based attentional capture) cannot explain these findings. To this end, we conducted rigorous quantitative model comparisons in which MIVAC was compared with four alternatives: three models that each lacked one of the three novel components (i.e., without VAC = without value-based attentional capture; without AE = without attentional enhancement; without DD = without distractor detection) and a baseline MI model without any of these components. When fitting the models to trials in which a distractor D was present, we found a clear advantage of MIVAC over the simplified versions in terms of both average model fit and best model fit per participant (Figure 7A). We then performed a generalization test (Busemeyer and Wang, 2000) by using the models' parameters estimated from the trials with D to predict behavior in the trials without D. Again, MIVAC outperformed the simpler alternatives (Figure 7B). Because the parameters were not re-estimated on the held-out trials, this generalization test provides strong support for a genuinely better description of the data by MIVAC and rules out overfitting as an explanation of its advantage. Qualitatively, MIVAC predicts the choice proportions of all potential actions very accurately, and it does so better than the simplified versions without value-based attentional capture, both for trials with D present and for trials with D absent (upper and middle panels of Figure 7C). The most important test for MIVAC was whether the model accurately predicts the observed increase in choices of D as a function of the value of D. As can be seen in the lower panel of Figure 7C, MIVAC predicts this pattern, whereas the alternative models either fail to do so or overpredict the frequency of choices of D. MIVAC also reproduces all RT effects reported in Figure 5, that is, negative effects of the difference and the sum of the values of HV and LV, and positive effects of the value of D and the presence of D (Figure 7D; compare with Figure 5C). This is particularly remarkable because MIVAC was fitted only to the choice data, not to the RT data. Finally, generalizing MIVAC to the novel trials shows that the model exhibits the observed qualitative patterns for both relative and absolute choice accuracy (Figure 7E; compare with Figure 5D). All these results hold when the EV-based inputs to the accumulators are replaced by EU-based subjective values (Figure 7—figure supplement 2) or when the model is simulated with fixations drawn from the empirical fixation-duration distributions (Figure 7—figure supplement 3).
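The quantitative comparison rests on two standard quantities: the BIC, k ln(n) − 2 ln(L), for the fitted trials, and the deviance, −2 ln(L), for the held-out trials, where no complexity penalty is needed because no parameters are re-estimated. The sketch below computes both and counts the best-fitting model per participant. The log-likelihoods, parameter counts, and trial numbers are made-up placeholders, not results from the study.

```python
import math
from collections import Counter

def bic(log_lik, n_params, n_obs):
    """Bayesian Information Criterion; lower values indicate a better fit."""
    return n_params * math.log(n_obs) - 2.0 * log_lik

def deviance(log_lik):
    """Generalization criterion: parameters are fixed, so no complexity penalty."""
    return -2.0 * log_lik

def summarize(fits):
    """fits maps participant -> model -> (log_lik, n_params, n_obs)."""
    per_participant = {
        pid: {model: bic(*fit) for model, fit in models.items()}
        for pid, models in fits.items()
    }
    model_names = list(next(iter(per_participant.values())))
    mean_bic = {
        m: sum(scores[m] for scores in per_participant.values()) / len(per_participant)
        for m in model_names
    }
    # Model with the lowest BIC for each participant.
    best_counts = Counter(min(scores, key=scores.get) for scores in per_participant.values())
    return mean_bic, best_counts

# Placeholder numbers for two hypothetical participants.
fits = {
    "p01": {"MIVAC": (-300.0, 6, 300), "MI": (-330.0, 4, 300)},
    "p02": {"MIVAC": (-280.0, 6, 300), "MI": (-290.0, 4, 300)},
}
mean_bic, best_counts = summarize(fits)
print(mean_bic, best_counts)
# Held-out trials: compare deviances computed with the parameters fitted above.
print(deviance(-150.0), deviance(-162.0))
```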

Figure 7. Quantitative and qualitative tests of MIVAC.

(A) Left panel: average model fits for MIVAC and its simplifications for trials with D present (BIC = Bayesian Information Criterion; lower BICs indicate better fits). MIVAC had the lowest BIC (p < .001 for all pairwise comparisons). Right panel: model evidence per participant. (B) The same as in (A) but generalized to trials with D absent. MIVAC had the lowest deviance (p < .001 for all pairwise comparisons). (C) Upper panel: choice proportions (black line) for the five potential actions together with predictions of MIVAC (continuous grey line) and its variants (dashed grey lines). Middle panel: the same but for trials with D absent. Lower panel: observed and predicted probabilities of choosing D as a function of D’s value. It can be seen that the variants of MIVAC without value-based attentional capture or attention-based enhancement of value accumulation fail to predict that choices of D increase as D’s value increases. In contrast, the other two variants (i.e., without detection of D, baseline MI) predict too many choices of D. (D) RT effects predicted by MIVAC; the model correctly predicts all RT effects observed in the data (see Figure 5C). (E) Generalization of MIVAC to the novel trials; the model reproduces the observed qualitative patterns of both relative and absolute choice accuracy (see Figure 5D).

Figure 7—figure supplement 1. Model recovery results.

Figure 7—figure supplement 2. MIVAC with EU-based subjective values as inputs.

Figure 7—figure supplement 3. Fixation durations and MIVAC with empirical fixation durations.

Figure 7—figure supplement 4. Predictions of IIA violations with extension of MIVAC.

Figure 7—figure supplement 5. Comparison of MIVAC with multinomial logit (standard economic) choice models.

Discussion

When choosing between multiple alternatives, humans and other animals often violate IIA, which has far-reaching consequences for our understanding of the neural and cognitive principles of decision making (Hunt and Hayden, 2017; Rieskamp et al., 2006; Vlaev et al., 2011). The purpose of our study was to shed light on the unresolved debate about whether the value of a third option leads to violations of IIA by decreasing or by increasing relative choice accuracy between the other two options. Strikingly, we obtained strong evidence that neither violation of IIA is likely to occur when making decisions under time pressure. Instead, value-based attentional capture leads to a general performance decline (i.e., a decline in absolute but not in relative choice accuracy, which does not constitute a violation of IIA) and slows down the decision process. MIVAC, a computational model that we propose to explain these findings, is based on the assumptions that value drives attention (Anderson et al., 2011) and that attention in turn affects the accumulation of evidence (Krajbich et al., 2010). MIVAC reproduced the central behavioral findings for choice accuracy and RT with remarkable precision.

Specific characteristics of the Chau2014 task design are likely to have facilitated an influence of value-based attentional capture. Having to choose between four potential actions within about 1.5 s puts participants under severe time pressure. This forces them to make use of implicit stimulus-reward associations for identifying attractive options as quickly as possible (Krebs et al., 2011; Serences, 2008). Using such implicit associations requires options that are distinguishable via low-level perceptual features, such as color or orientation (Anderson, 2016), which is exactly what was used in Chau2014. In fact, the Chau2014 paradigm closely resembles the visual search tasks used to study value-based attentional capture (Anderson et al., 2011), in which participants also have 1.5 s to identify a target among several alternatives, while a distractor is characterized by a specific color (that was previously associated with low or high rewards). Yet, there are crucial differences between the two paradigms. Whereas the typical visual search tasks are framed as perceptual tasks, the Chau2014 paradigm is a value-based task. For example, choosing the LV option in the Chau2014 task can still lead to a reward, whereas choosing a non-target in the visual search task is treated as an error. Perceptual and preferential tasks have been shown to elicit different behavior (Dutilh and Rieskamp, 2016) and to rely on partially distinct neural mechanisms (Polanía et al., 2014). Such differences likely explain why we found an influence of the value of D not only on RT but also on choice accuracy, an influence that has been reported in only a minority of studies on value-based attentional capture (Itthipuripat et al., 2015; Moher et al., 2015).

An important role of attention in value-based decision making has been established in recent years (Cavanagh et al., 2014; Cohen et al., 2017; Krajbich and Rangel, 2011; Krajbich et al., 2010; McGinty et al., 2016; Shimojo et al., 2003). With some exceptions (Itthipuripat et al., 2015; Towal et al., 2013), research on the interaction of attention and choice has focused on the bias that the former exerts on the latter: Individuals are more likely to choose options that receive comparatively more attention. We replicated this effect in our eye-tracking experiment (Figure 4—figure supplement 1). However, several other eye-movement patterns in our data differed from previous findings. First, participants were more likely to look at more valuable options (Figure 4A), and this effect was significant even for the first fixation in a trial (Materials and methods). Second, first fixations were not shorter but longer than middle fixations (Figure 7—figure supplement 3). Third, the effect of attention on choice was not modulated by value (in the sense that the effect would be more pronounced for options of high compared to low value; Figure 4—figure supplement 1), which is in line with some (Cavanagh et al., 2014) but not with other previous findings (Krajbich and Rangel, 2011; Krajbich et al., 2010). Correspondingly, the MIVAC model differs from previous attention-based instantiations of sequential sampling models, in particular the attentional Drift Diffusion Model (aDDM; Krajbich and Rangel, 2011; Krajbich et al., 2010), in at least two respects. First, attention is not distributed randomly across choice options but depends on the options’ values (i.e., value-based attentional capture). Second, the accumulation of evidence for attended options is enhanced additively (i.e., independently of value). The latter feature is more in line with the results and model presented in Cavanagh et al. (2014). Interestingly, that study also used abstract choice stimuli rather than the food snacks used in the studies by Krajbich and colleagues, and participants were put under at least mild time pressure (i.e., choices had to be made within 4 s). We conclude that attention and its influence on decision making can depend to some extent on the experimental design (Spektor et al., 2018b).
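The difference between the two forms of attentional enhancement can be made concrete. In the multiplicative scheme of the aDDM (Krajbich et al., 2010; Krajbich and Rangel, 2011), the values of unattended options are discounted by a factor θ, so the benefit of being attended grows with an option's value; in the additive scheme described for MIVAC, the attended option receives a constant extra input. The sketch below compares the attentional gain, that is, the input an option receives when attended minus its input when another option is attended; the values of `theta` and `boost` and the function names are arbitrary illustrative choices.

```python
def multiplicative_inputs(values, fixated, theta=0.3):
    """aDDM-style inputs: unattended values are discounted by theta."""
    return [v if i == fixated else theta * v for i, v in enumerate(values)]

def additive_inputs(values, fixated, boost=2.0):
    """MIVAC-style inputs: the attended option gets a constant, value-independent boost."""
    return [v + (boost if i == fixated else 0.0) for i, v in enumerate(values)]

def attentional_gain(input_rule, values, option):
    """Input to `option` when it is fixated minus its input when another option is fixated."""
    other = (option + 1) % len(values)
    return input_rule(values, option)[option] - input_rule(values, other)[option]

values = [10.0, 2.0]  # a high-value and a low-value option
for rule in (multiplicative_inputs, additive_inputs):
    gains = [attentional_gain(rule, values, i) for i in range(len(values))]
    print(rule.__name__, gains)
```

Under the multiplicative rule the high-value option gains far more from being attended than the low-value option; under the additive rule both gain the same amount, which corresponds to an attention effect that is not modulated by value.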

An alternative explanation for the interplay of attention, value, and choice could be that attention does not influence choice, but that the intention to choose influences attention. In other words, an option that one intends to choose would receive more attention and hence longer fixations, so that high-value options would be looked at longer only because they are more likely to be chosen. This reversed interpretation of the role of eye movements is particularly plausible for later stages of an ongoing decision, because the last fixation is often directed at the eventually chosen option (Shimojo et al., 2003; but see Krajbich et al. (2010) for an account of this ‘gaze-cascade effect’ within the aDDM framework). However, our analyses of first fixations and first-fixation durations are incompatible with such an interpretation (see Materials and methods). As stated above, the probability of fixating an option first depended on that option’s value, consistent with value-based attentional capture. Importantly, this effect remained significant after controlling for the eventual choice, suggesting that it does not simply reflect the intention to choose the first-fixated option. Furthermore, not only the first fixation and its duration but also the value of the first-fixated option contributed to predicting the eventual choice. In our view, this combined contribution of gaze time and value is best accounted for by assuming that people accumulate evidence for choosing an option based on both the option’s value and the amount of attention spent on it. We therefore implemented both mechanisms in MIVAC.

At first glance, an effect opposite to that observed by Chau2014 appears to support the divisive normalization account of Louie2013. However, our results refute such an interpretation because the relative choice proportions between HV and LV were unaffected by the value of D. As with the eye-movement differences discussed above, the absence of a ‘divisive normalization’ effect in our data might be related to dissimilarities between the task paradigms. First, participants in Chau2014 (but not in Louie2013) made decisions under time pressure, which promoted value-based attentional capture effects but suppressed violations of IIA. Second, the decoy was highlighted as unavailable in Chau2014, whereas in Louie2013 the decoy was simply defined as the option with the lowest value. Finally, Chau2014 used abstract two-dimensional stimuli, whereas Louie2013 used concrete food snacks, for which it is currently debated whether and how specific attributes are taken into account (Rangel, 2013). Louie2013 did not address the distinction between single- and multi-attribute decisions, but their model should predict a negative influence of D’s value on relative choice accuracy in both cases (because it assumes normalization to occur at the level of the integrated value signal). Although it goes beyond the scope of the current study, it will be critical for future research to test to what extent effects of value-based attentional capture generalize to different implementations of multi-alternative decisions.

Notably, the principle of normalization can be implemented in sequential sampling models, albeit in a different way than the mutual inhibition mechanism of MIVAC. According to Teodorescu and colleagues (2016), normalization acts on the input level of the accumulation process such that the input to one accumulator is reduced by the input to all other accumulators (a mechanism also referred to as ‘feed-forward inhibition’; see Bogacz et al., 2006), whereas mutual inhibition acts on the accumulator level such that the accumulation of evidence for one option is reduced by how much evidence has been accumulated for the competing options. These different instantiations of inhibition are best distinguished by taking their RT predictions into account (Teodorescu et al., 2016).
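The distinction can be seen in a few update steps of two accumulators. The sketch below uses simple linear Euler updates with an arbitrary inhibition weight `w` and no noise; these are assumptions, not the specifications of Teodorescu et al. (2016) or of MIVAC. With feed-forward inhibition, each input is reduced by the other inputs from the very first step, whereas with mutual inhibition the competition is absent at the start and grows only as evidence accumulates.

```python
def feedforward_step(acc, inputs, w, dt=1.0):
    """Input-level inhibition: each input is reduced by w times the other inputs."""
    total_input = sum(inputs)
    return [a + dt * (x - w * (total_input - x)) for a, x in zip(acc, inputs)]

def mutual_inhibition_step(acc, inputs, w, leak=0.0, dt=1.0):
    """Accumulator-level inhibition: each accumulator is reduced by w times the others' states."""
    total_acc = sum(acc)
    return [a + dt * (x - leak * a - w * (total_acc - a)) for a, x in zip(acc, inputs)]

inputs = [2.0, 1.0]
ffi, mi = [0.0, 0.0], [0.0, 0.0]
for step in range(3):
    ffi = feedforward_step(ffi, inputs, w=0.5)
    mi = mutual_inhibition_step(mi, inputs, w=0.5)
    print(step + 1, ffi, mi)
```

Because the inhibition in the mutual-inhibition variant depends on the current accumulator states, its effect on the race changes over the course of a decision, which is why the two mechanisms make different RT predictions.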

Overall, we did not obtain any reliable value-dependent violation of IIA in the standard version of the Chau2014 paradigm; such a violation emerged only when we gave participants more time to decide. In line with previous research (Dhar et al., 2000; Pettibone, 2012; Trueblood et al., 2014), these findings demonstrate the time dependency of context effects: Whether or not people are under time pressure determines whether effects related to value-based attentional capture or multi-attribute context effects can be expected to occur. One reason for this dependency could be that people adaptively select decision strategies based on the current decision context (Gluth et al., 2014; Payne et al., 1988; Rieskamp and Otto, 2006), and not all strategies are prone to the same contextual or attentional biases. Furthermore, multi-attribute context effects could be the consequence of dynamic choice mechanisms that require comparatively long deliberation times to exert a measurable influence on decisions (Roe et al., 2001; Trueblood et al., 2014).

Importantly, even though the negative influence of D on absolute choice accuracy is not a violation of IIA, our results cannot be well accounted for by standard economic choice models, such as the multinomial logit model (e.g., McFadden, 2001). We compared three variants of multinomial logit models with MIVAC (see Materials and methods and Figure 7—figure supplement 5). The first two variants exclude and include D as a regular choice option, respectively. Excluding D leads to the prediction that D does not decrease absolute choice accuracy at all. Including D leads to the prediction that D is chosen as a function of its value relative to HV and LV, and thus that D is chosen in too many trials. A third alternative is to assume that choices are based on a combination of the options’ EVs (as signaled by the rectangles’ colors and orientations) with a subjective value assigned to the colors of the frames that signal whether an option is a target or a distractor. Although this version of the multinomial logit model is able to predict that D is chosen in only a minority of trials, its predictions are clearly worse than those of MIVAC, as it predicts too few choices of HV and too many choices of LV (Figure 7—figure supplement 5). Furthermore, standard economic choice models do not take RT into account and thus cannot predict that high-value D options slow down the choice process (Figure 5C) such that the probability of making too-slow errors is increased (Figure 5B). A dynamic component, such as the accumulation of evidence implemented in MIVAC, is required to explain these RT-related effects. Also note that even though the current version of our model does not predict violations of IIA (consistent with our data under high time pressure), it is straightforward to extend MIVAC to allow such violations. In Materials and methods, we describe one possible extension of MIVAC and demonstrate how it allows the model to predict the IIA violation in our data under low time pressure.
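The three multinomial logit variants can be sketched as follows. The option values are the example inputs from Figure 6 (HV = 7.5, LV = 5, D = 10.5), while the inverse temperature (fixed at 1) and the subjective value of the magenta distractor frame (−8) are hypothetical. The sketch also shows why none of the variants can change relative choice accuracy: the odds of choosing HV over LV are the same whether or not D is in the choice set (IIA), and the model has no time dimension, so it cannot produce RT effects.

```python
import math

def mnl(utilities, beta=1.0):
    """Multinomial logit choice probabilities for a dict of option utilities."""
    m = max(utilities.values())
    weights = {k: math.exp(beta * (u - m)) for k, u in utilities.items()}
    total = sum(weights.values())
    return {k: w / total for k, w in weights.items()}

evs = {"HV": 7.5, "LV": 5.0, "D": 10.5}
frame_value = {"HV": 0.0, "LV": 0.0, "D": -8.0}  # hypothetical value of the magenta frame

p_exclude = mnl({k: v for k, v in evs.items() if k != "D"})  # D never chosen
p_include = mnl(evs)                                         # D as a regular option
p_frame = mnl({k: evs[k] + frame_value[k] for k in evs})     # EV plus frame value

for name, probs in [("exclude D", p_exclude), ("include D", p_include), ("frame value", p_frame)]:
    odds = probs["HV"] / probs["LV"]
    print(name, {k: round(v, 3) for k, v in probs.items()}, "HV:LV odds =", round(odds, 3))
```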

Methodologically, the current study is a clear example of the importance of replication attempts for the advancement of empirical science (Munafò et al., 2017), which is particularly timely given the current debate on the replicability of research in psychology, neuroscience, and other fields (Camerer et al., 2016; Open Science Collaboration, 2015; Poldrack et al., 2017). Our initial hypothesis for explaining the IIA violation reported in Chau2014 was based on the assumption of a robust and reliable effect. However, we could not replicate this effect (see Materials and methods and Figure 5—figure supplement 2 to Figure 5—figure supplement 5 for additional analyses that challenge both the replicability and the reproducibility of the effect proposed by Chau2014). When developing novel ideas and experiments on the basis of past findings, it is important that these findings are reliable and have been replicated, to avoid leading research fields into scientific cul-de-sacs in which time and resources are wasted (Munafò et al., 2017). In our case, only the (unsuccessful) attempt to replicate an original study allowed us to identify truly robust behavioral effects, which favor an entirely different mechanistic explanation than originally proposed: Attention can be captured by irrelevant but value-laden stimuli, which slows down the goal-directed choice process and impairs decision making under time pressure. Crucially, neither our study nor replication studies in general are destructive. We gained novel insights about the role of attention in multi-alternative decision making, and similarly important insights can be expected from attempts to replicate other studies.

Materials and methods

Participants

Thirty-one participants (21 female, age: 20–47, M = 27.71, SD = 6.59, 29 right-handed) completed Experiment 1. A total of 51 participants signed up for Experiment 2. Due to computer crashes, the data of two participants (one from Group HP and one from Group LP) were incomplete and excluded from the analyses, resulting in a final sample of 49 participants, of whom 25 were in Group HP (13 female, age: 20–46, M = 26.88, SD = 6.62, 24 right-handed) and 24 were in Group LP (11 female, age: 19–35, M = 23.96, SD = 3.43, 20 right-handed). Participants were assigned randomly to the two groups. Thirty participants signed up for the eye-tracking Experiment 3. One participant was excluded for not passing the training-phase criterion, and six additional participants were excluded due to incompatibility with the eye-tracking device (for further details, see section Eye-tracking procedures and pre-processing), resulting in a final sample of 23 participants (14 female, age: 18–54, M = 25.70, SD = 8.66, 19 right-handed). Forty-seven participants signed up for Experiment 4. Three participants were excluded for failing the training-phase criterion, resulting in a final sample of 44 participants (36 female, age: 18–46, M = 23.70, SD = 5.74, 40 right-handed). The sample size for Experiment 1 was based on the assumption that testing 1.5 times as many participants as in the original study (Chau2014) would suffice to replicate its main results. In Experiment 4, we tested more participants to ensure a statistical power of >0.90 for replicating the effect of HV-D on relative choice accuracy (note that the effect size in Chau2014 was d = −0.495). The sample sizes for Experiments 2 and 3 were based on the strong effects (i.e., d ≥ 0.8) of value-based attentional capture observed in Experiment 1 (but note that no formal power analysis was conducted). All participants gave written informed consent, and the study was approved by the Institutional Review Board of the Department of Psychology at the University of Basel. All experiments were performed in accordance with the relevant guidelines and regulations. Data of all participants included in the final samples are made publicly available on the Open Science Framework at https://osf.io/8r4fh/.
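The logic of the power target for Experiment 4 can be checked with the normal approximation for a two-sided one-sample (or paired) test at α = .05, n ≈ ((z_(1−α/2) + z_power) / d)^2. This is only an approximation, and the test type and sidedness are assumptions rather than a description of how the sample size was actually computed; exact noncentral-t calculations give slightly larger sample sizes or slightly lower power. The function names are hypothetical.

```python
import math
from statistics import NormalDist

def required_n(d, alpha=0.05, power=0.90):
    """Normal-approximation sample size for a two-sided one-sample test."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(((z_alpha + z_power) / abs(d)) ** 2)

def approx_power(d, n, alpha=0.05):
    """Normal-approximation power, ignoring the negligible opposite tail."""
    z = NormalDist()
    return z.cdf(abs(d) * math.sqrt(n) - z.inv_cdf(1 - alpha / 2))

d_chau = -0.495  # effect size of HV-D on relative choice accuracy reported by Chau2014
print(required_n(d_chau))               # sample size for 90% power under this approximation
print(round(approx_power(d_chau, 44), 3))
```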

Task paradigm

The paradigm was very similar for all four experiments. Participants repeatedly chose between either two (binary trials) or three (distractor trials) two-outcome lotteries (gambles), each yielding an outcome of magnitude X in Swiss Francs (CHF) with probability p, or 0 otherwise. The gambles were represented by rectangles, each shown in a random quadrant of the screen; the rectangles’ colors represented the outcomes X, and their orientation angles represented the probabilities p. Outcomes ranged from CHF 2 to CHF 12 in steps of CHF 2 and were represented by colors ranging from either green to blue or blue to green. Probabilities ranged from 1/8 to 7/8 in steps of 1/8 and were represented by orientation angles ranging from 0° to 90° or from 90° to 0° in steps of 15° (see Figure 1B for all colors and orientations). The mappings of colors to outcomes and of orientations to probabilities were counterbalanced across participants. In binary trials, participants saw the two options for 100 ms before orange frames appeared around each option (pre-decision phase). After the frames appeared, participants had up to 1.5 s to make a choice by pressing 7, 9, 1, or 3 on the numeric keypad for the upper left, upper right, lower left, or lower right quadrant, respectively (participants in Group LP of Experiment 2 had 6 s after the appearance of the frames to decide). Distractor trials were similar to binary trials: All options were presented for 100 ms before frames appeared around them. Unlike in binary trials, however, one of the options had a magenta frame (the distractor), signaling that it could not be chosen. Choosing the distractor resulted in a screen stating that the option was not available, after which a new trial began. Similarly, choosing an empty quadrant resulted in a screen stating that the quadrant was empty, after which a new trial began.
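The attribute levels and their visual encoding can be written down as a small lookup. In the sketch below, colors are represented only by their index along the green-to-blue scale, because the actual color values are shown in Figure 1B and not given here; the function and parameter names, and the way the two counterbalancing directions are coded, are illustrative assumptions.

```python
from fractions import Fraction

MAGNITUDES = [2, 4, 6, 8, 10, 12]                        # outcomes X in CHF
PROBABILITIES = [Fraction(k, 8) for k in range(1, 8)]    # p = 1/8, ..., 7/8
ANGLES = [15 * k for k in range(7)]                      # 0, 15, ..., 90 degrees
RESPONSE_KEYS = {"upper left": "7", "upper right": "9",
                 "lower left": "1", "lower right": "3"}  # numeric keypad

def encode(magnitude, probability, reverse_colors=False, reverse_angles=False):
    """Map an option (X, p) to (color index, orientation angle in degrees).

    The reverse_* flags stand for the counterbalanced mapping directions
    (green-to-blue vs. blue-to-green; 0-to-90 vs. 90-to-0 degrees).
    """
    color_index = MAGNITUDES.index(magnitude)
    if reverse_colors:
        color_index = len(MAGNITUDES) - 1 - color_index
    angle = ANGLES[PROBABILITIES.index(probability)]
    if reverse_angles:
        angle = 90 - angle
    return color_index, angle

def expected_value(magnitude, probability):
    return magnitude * probability

option = (8, Fraction(5, 8))
print(encode(*option), encode(*option, reverse_colors=True, reverse_angles=True),
      expected_value(*option))
```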

If a valid choice was registered, the trial continued with a choice-feedback phase of 1 to 3 s, in which the chosen option was highlighted in dark red. To ensure that participants paid attention to all available options, they had to complete a ‘match’ trial before the choice-feedback phase in 15% of all trials. On match trials, one of the options from the decision phase (including the distractor on distractor trials) was presented in the middle of the screen. Participants had up to 2 s (6 s in Experiment 2, Group LP) to press the key corresponding to the option’s quadrant. If correct, participants saw a screen saying ‘correct’ and an extra CHF 0.10 was added to their account; otherwise, they saw a screen saying ‘wrong’. After every match trial, the trial continued with the choice-feedback phase.

After the choice-feedback phase, participants received feedback about the outcomes of the gambles for 1 to 3 s. The frames’ colors changed to grey if the option did not yield a reward (i.e., the outcome was CHF 0) or to golden yellow if the option yielded a reward. Participants also received feedback about the distractor’s outcome on distractor trials. After this outcome-feedback phase, a new trial began with an inter-trial interval of 1.5 to 3 s in which a fixation cross was shown.
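The sequence of phases in a trial with a valid choice can be summarized as a schedule generator. This is a sketch under assumptions: jittered durations are drawn uniformly from the stated ranges (the distribution is not specified above), match trials are drawn at random with probability 0.15 (they may instead have been pre-assigned), the decision and match phases are listed with their maximum durations because their actual length depends on the response, and the function name is hypothetical. Trials in which D or an empty quadrant was chosen end early with a message screen and are not modeled.

```python
import random

def trial_schedule(rng, low_time_pressure=False, match_rate=0.15):
    """Return (phase, duration in s) pairs for one trial with a valid choice."""
    decision_limit = 6.0 if low_time_pressure else 1.5
    match_limit = 6.0 if low_time_pressure else 2.0
    phases = [("options without frames", 0.1), ("decision (max)", decision_limit)]
    if rng.random() < match_rate:
        phases.append(("match trial (max)", match_limit))
    phases += [
        ("choice feedback", rng.uniform(1.0, 3.0)),
        ("outcome feedback", rng.uniform(1.0, 3.0)),
        ("inter-trial interval", rng.uniform(1.5, 3.0)),
    ]
    return phases

rng = random.Random(7)
schedules = [trial_schedule(rng) for _ in range(10_000)]
match_share = sum(
    any(name.startswith("match") for name, _ in schedule) for schedule in schedules
) / len(schedules)
print(schedules[0])
print(round(match_share, 3))
```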

Experimental procedures

After giving informed consent and filling out a demographic questionnaire, participants received detailed instructions about the task and were familiarized with the outcome and probability associations by making six paired-comparison judgments for each dimension. In all experiments, participants completed a training phase and an experimental phase. In the training phase, participants encountered up to 210 trials, half of which were distractor trials. These trials were randomly generated with the constraint that 2/3 of the trials were not dominant (i.e., HV did not have both a higher probability and a higher outcome than LV) and the rest were dominant. The training phase continued until participants had encountered at least 10 dominant binary trials and had chosen HV in at least 70% of the last 10 dominant binary trials; as soon as this criterion was met, the experimental phase began. If participants completed all 210 training trials without meeting the criterion, the experiment ended. As reported above, participants who did not pass the criterion were excluded from the analysis.
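The stopping rule of the training phase can be expressed compactly. The sketch assumes that each training trial is summarized by three booleans (binary trial, dominant trial, HV chosen); the function name and trial representation are hypothetical, and 70% of 10 trials is implemented as at least 7 HV choices.

```python
from collections import deque

def training_outcome(trials, max_trials=210, min_dominant=10, window=10, min_hv=7):
    """Return the trial number at which the criterion is met, or None if it never is.

    trials: iterable of (is_binary, is_dominant, chose_hv) tuples in presentation order.
    """
    recent = deque(maxlen=window)  # HV choices on the last `window` dominant binary trials
    n_dominant = 0
    for trial_number, (is_binary, is_dominant, chose_hv) in enumerate(trials, start=1):
        if trial_number > max_trials:
            break
        if is_binary and is_dominant:
            n_dominant += 1
            recent.append(chose_hv)
            if n_dominant >= min_dominant and sum(recent) >= min_hv:
                return trial_number
    return None

# A participant who errs on the first 3 dominant binary trials passes at the 10th one.
passes = [(True, True, k >= 3) for k in range(10)]
# A participant who chooses HV on only every second dominant binary trial never passes.
fails = [(True, True, k % 2 == 0) for k in range(210)]
print(training_outcome(passes), training_outcome(fails))
```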

The experimental phase consisted of either 412 (Experiments 1 to 3) or 300 (Experiment 4) trials. The 300 trials used in Experiment 4 were shared across all experiments and are those used by Chau2014. In Experiment 4, all trials were presented in exactly the same order as in Chau2014’s experiment, whereas in the other experiments, the distractor trials were presented in the order provided to us by the authors of Chau2014, with the randomized binary trials interleaved. In addition to these trials, Experiments 1 to 3 included 56 novel distractor trials and the 56 binary trials corresponding to them (300 + 56 + 56 = 412; details are provided in the next two sections). Throughout the experiment, participants had the opportunity to take four breaks. After completing the experiment, participants received their show-up fee (CHF 5 for 15 min), the average reward of the chosen option (distractor and empty-quadrant choices counted as no reward), and the accumulated match bonuses. For participants who reached the experimental phase, the experiments took approximately 75 min.

Initial hypothesis for the effects reported in Chau2014

Before conducting our experiments, we assumed that the positive relationship between the value of D and relative choice accuracy reported by Chau2014 was a robust effect. To explain the apparent contradiction with the findings of Louie2013, we reasoned that the explicit presentation of two attributes (i.e., magnitude X and probability p of reward) in the Chau2014 task led people to compare the options on those attributes directly (i.e., a multi-attribute decision based on attributes X and p instead of a decision between expected values, EV). Importantly, certain attribute-wise comparison processes are known to produce IIA violations, so-called ‘context effects of preferential choice’ (Berkowitsch et al., 2014; Gluth et al., 2017; Mohr et al., 2017; Pettibone and Wedell, 2007; Roe et al., 2001; Trueblood et al., 2014; Usher and McClelland, 2004). More specifically, our initial hypothesis was that individuals can recognize whether D is better or worse than LV and/or HV with respect to each attribute (e.g., D might dominate LV with respect to probability). Critically, since LV is by definition worse than HV, it is more likely that D dominates LV than that it dominates HV on some attribute. However, this is only true as long as D does not have very low attribute values (which would lead to a low overall EV). Thus, the dominance relationship between D and LV/HV may help participants to identify the option with the highest EV. The positive relationship between D and relative choice accuracy would then be an epiphenomenon of this dominance relationship mechanism.

To give an example, let us assume that HV, LV, and D are specified as follows:

HV: p = 5/8; X = CHF 8 → EV = CHF 5

LV: p = 3/8; X = CHF 4 → EV = CHF 1.5

D: p = 6/8; X = CHF 6 → EV = CHF 4.5

In this case, D is superior to both HV and LV with respect to probability. With respect to magnitude, however, D is superior to LV but inferior to HV. Hence, by counting the number of attributes on which D is superior to LV and to HV (i.e., two for LV vs. one for HV), a decision maker could correctly identify the option with the highest EV: it is the option that D beats on fewer attributes. Critically, this information is no longer helpful when we replace D with a distractor D* of lower EV:

D*: p = 6/8; X = CHF 2 → EV = CHF 1.5

In this new case, the distractor is inferior to both HV and LV with respect to magnitude and (still) superior to both with respect to probability (i.e., the count is one for LV vs. one for HV). Thus, the option with the highest EV cannot be identified anymore based on the dominance relationship alone. This demonstrates that the high-value D might better support choosing between HV and LV than the low-value D*. Notably, the idea that the relative ranking of options influences decision making has also been suggested by others (Howes et al., 2016; Stewart et al., 2006; Tsetsos et al., 2016).
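The counting logic of the worked example can be reproduced with a short Python sketch. It assumes that each option is a (probability, magnitude) pair. A hypothetical helper, `dominance_guess`, picks the option that D beats on fewer attributes. This only illustrates our initial hypothesis and is not a model we fitted.

```python
from fractions import Fraction

# Options as (probability of reward, magnitude in CHF), taken from the worked example.
HV = (Fraction(5, 8), 8)
LV = (Fraction(3, 8), 4)
D = (Fraction(6, 8), 6)
D_STAR = (Fraction(6, 8), 2)


def expected_value(option):
    """EV = p * X, kept exact with Fraction."""
    p, x = option
    return p * x


def attributes_won(distractor, option):
    """Count the attributes (p, X) on which the distractor is strictly superior to the option."""
    return sum(d > o for d, o in zip(distractor, option))


def dominance_guess(distractor, hv, lv):
    """Hypothetical heuristic: pick the option that D beats on fewer attributes.

    Returns "HV" or "LV" as labels of the two inputs, or None when the counts tie
    and the heuristic is uninformative.
    """
    wins_vs_lv = attributes_won(distractor, lv)
    wins_vs_hv = attributes_won(distractor, hv)
    if wins_vs_lv > wins_vs_hv:
        return "HV"
    if wins_vs_hv > wins_vs_lv:
        return "LV"
    return None


for name, option in (("D", D), ("D*", D_STAR)):
    print(name, float(expected_value(option)),
          attributes_won(option, LV), attributes_won(option, HV),
          dominance_guess(option, HV, LV))
# D 4.5 2 1 HV
# D* 1.5 1 1 None
```

The tie returned for D* mirrors the text. The heuristic only points to HV when D is a high-value distractor.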

Besides this dominance relationship hypothesis, the paradigm of Chau2014 might also involve other context effects that influence behavior. We considered the attraction effect (Huber et al., 1982) and the phantom-decoy effect (Pettibone and Wedell, 2007; Pratkanis and Farquhar, 1992). According to the attraction effect, the preference between two options (in our case, between HV and LV) can be changed by adding a third option (in our case, D) that is similar to but clearly inferior to only one of the two options (in our case, if D is similar to and worse than HV, HV should be preferred; if D is similar to and worse than LV, LV should be preferred). The phantom-decoy effect predicts that an option that is similar to but worse than D is more likely to be chosen if D is unavailable (as in the Chau2014 task). According to a combination of attraction and phantom-decoy effects, the option that is more similar to D should be more likely to be chosen, independent of whether D is worse or better than that option. In contrast to the context effects, the Chau2014 model, and the divisive normalization account, value-based attentional capture predicts no influence of D on relative choice accuracy but a negative effect on absolute choice accuracy (see Figure 1D). As described in the following section, we sought to distinguish between all these different context effects and the model proposed by Chau2014 by implementing a novel set of trials.

The novel trial set and predictions of different models and context effects

In the novel set of trials used to dissociate the predictions of the various models and context effects, the HV and LV options were arranged such that i.) HV was superior to LV with respect to one attribute (magnitude or probability) but ii.) inferior with respect to the other attribute, and iii.) D could be placed such that it either fully dominated HV/LV or was fully dominated by HV/LV (for an example, see Figure 1C in the main text). In the Chau2014 task, there are 14 possible combinations of HV and LV that fulfil these criteria. For each of these 14 combinations, D was placed directly ‘above’ or ‘below’ HV or LV, resulting in four trials per combination and 56 novel trials in total (we also added 56 binary trials without D, so that Experiments 1 to 3 had 112 trials more than the original study).
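Criteria i.) to iii.) can be expressed as simple checks on (probability, magnitude) pairs. The sketch below is illustrative only. The option values are hypothetical. It does not reproduce the attribute grid of the Chau2014 task or the exact rule for placing D ‘above’ or ‘below’ an option, so it makes no claim about the count of 14 combinations.

```python
from fractions import Fraction


def ev(option):
    """EV of a (probability, magnitude) option."""
    p, x = option
    return p * x


def dominates(a, b):
    """Full dominance: a is at least as good as b on both attributes and better on at least one."""
    return all(u >= v for u, v in zip(a, b)) and any(u > v for u, v in zip(a, b))


def is_tradeoff_pair(hv, lv):
    """Criteria i.) and ii.): HV (higher EV by definition) wins on one attribute and loses on the other."""
    better = any(h > l for h, l in zip(hv, lv))
    worse = any(h < l for h, l in zip(hv, lv))
    return ev(hv) > ev(lv) and better and worse


def distractor_condition(d, hv, lv):
    """Label D by the options it fully dominates ("D > X") or is fully dominated by ("D < X")."""
    labels = []
    for name, option in (("HV", hv), ("LV", lv)):
        if dominates(d, option):
            labels.append(f"D > {name}")
        elif dominates(option, d):
            labels.append(f"D < {name}")
    return labels


# Hypothetical pair: HV wins on magnitude, LV wins on probability.
hv = (Fraction(3, 8), 8)   # EV = 3
lv = (Fraction(6, 8), 2)   # EV = 1.5
print(is_tradeoff_pair(hv, lv))                            # True
print(distractor_condition((Fraction(4, 8), 8), hv, lv))   # ['D > HV']
```

Because HV and LV trade off, a D placed close to one of them fully dominates, or is fully dominated by, only that option. This is what yields the four conditions D > HV, D < HV, D > LV, and D < LV.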

The (qualitative) predictions of the models and context effects with respect to these novel trials are outlined in Figure 1D. Chau2014’s biophysical cortical attractor model predicts a positive effect of the value of D on relative choice accuracy. Thus, performance should be higher in trials with dominant distractors (i.e., D > HV and D > LV). The divisive normalization model by Louie2013 predicts higher accuracy for low-value Ds; in other words, it predicts the opposite of Chau2014’s model. Our initial dominance relationship hypothesis predicts higher choice accuracy if HV dominates D (i.e., D < HV) or if LV is dominated by D (i.e., D > LV), because in these cases HV is better than D on more attributes than LV is. The combination of attraction and phantom-decoy effects predicts more accurate choices when D is dominated by HV (i.e., D < HV) or dominates HV (i.e., D > HV); in other words, when D is more similar to HV than to LV. Importantly, all these predictions refer to relative choice accuracy (for which we do not find any robust effects in the Chau2014 task with short deliberation time; see Figure 5D). By contrast, value-based attentional capture predicts no effect on relative choice accuracy, but a reduction of absolute choice accuracy when D has a high value (i.e., D > HV and D > LV).

Note that the different predictions can also be formulated in terms of main effects and interactions of an ANOVA with the factors Dominance (D dominates or is dominated by HV/LV) and Similarity (D is more similar to HV or to LV). Within this ANOVA, the cortical attractor model predicts a main effect of Dominance. The divisive normalization model also predicts this main effect but in the opposite direction. The dominance relationship hypothesis predicts an interaction effect of Dominance and Similarity. The combined attraction/phantom-decoy effect predicts a main effect of Similarity. Value-based attentional capture predicts a main effect of Dominance in the same direction as the divisive normalization model but on absolute (not relative) choice accuracy.
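To make the mapping from qualitative predictions to ANOVA effects explicit, the sketch below codes each prediction per condition as +1 (higher accuracy) or −1 (lower accuracy). It then computes the two main-effect contrasts and the interaction contrast. The ±1 coding is an illustrative assumption, not a quantitative model prediction.

```python
# Map each novel-trial condition to its cell in the 2 (Dominance) x 2 (Similarity) design.
CELLS = {
    "D > HV": ("dominates", "HV"),
    "D < HV": ("dominated", "HV"),
    "D > LV": ("dominates", "LV"),
    "D < LV": ("dominated", "LV"),
}

# Illustrative +1/-1 coding of the qualitative predictions (+1 = higher accuracy).
PREDICTIONS = {
    "attractor": {"D > HV": 1, "D > LV": 1, "D < HV": -1, "D < LV": -1},
    "normalization": {"D > HV": -1, "D > LV": -1, "D < HV": 1, "D < LV": 1},
    "dominance": {"D < HV": 1, "D > LV": 1, "D > HV": -1, "D < LV": -1},
    "attraction_phantom": {"D > HV": 1, "D < HV": 1, "D > LV": -1, "D < LV": -1},
    # Refers to absolute (not relative) choice accuracy.
    "attentional_capture": {"D > HV": -1, "D > LV": -1, "D < HV": 1, "D < LV": 1},
}


def effects(prediction):
    """Return (Dominance, Similarity, interaction) contrasts.

    Dominance = mean(D dominates) - mean(D dominated);
    Similarity = mean(D near HV) - mean(D near LV).
    """
    cell = {CELLS[label]: value for label, value in prediction.items()}
    dom_hv, sub_hv = cell[("dominates", "HV")], cell[("dominated", "HV")]
    dom_lv, sub_lv = cell[("dominates", "LV")], cell[("dominated", "LV")]
    dominance = (dom_hv + dom_lv) / 2 - (sub_hv + sub_lv) / 2
    similarity = (dom_hv + sub_hv) / 2 - (dom_lv + sub_lv) / 2
    interaction = (dom_hv - sub_hv) - (dom_lv - sub_lv)
    return dominance, similarity, interaction


for name, prediction in PREDICTIONS.items():
    print(name, effects(prediction))
```

The codings for the attractor model, divisive normalization, and attentional capture yield only a Dominance contrast, with the attractor model's sign opposite to the other two. The dominance-relationship coding yields only an interaction. The attraction/phantom-decoy coding yields only a Similarity contrast.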

Behavioral data analysis

In each trial, there was always a higher-valued option HV and a lower-valued option LV. We used two different dependent measures: relative and absolute choice accuracy. Relative choice accuracy refers to the proportion of HV choices among choices of HV and LV only, whereas absolute choice accuracy refers to the proportion of HV choices among all trials (including choices of D, choices of the empty quadrant, and missed responses due to the time limit). Importantly, an influence of the value of D on relative choice accuracy implies a violation of IIA, but an influence on absolute choice accuracy does not necessarily imply this. For each of the two dependent variables, we estimated intra-individual logistic regressions and tested the regression coefficients against 0 at the group level using a two-sided one-sample t-test with an α level of .05. We used the set of predictor variables reported in Chau2014: the EV difference between the two available options (HV-LV), the sum of their EVs (HV+LV), the EV difference between HV and D (HV-D), the interaction between HV-LV and HV-D ((HV-LV)×(HV-D)), and whether it was a binary or a distractor trial (D present). In the binary trials, the predictors HV-D and (HV-LV)×(HV-D) were kept constant (i.e., replaced by their mean values in the distractor trials). The predictor variables HV-LV, HV+LV, and HV-D were standardized. Importantly, standardization was conducted before generating the interaction term (HV-LV)×(HV-D) in order to avoid nonessential multicollinearity (Aiken and West, 1991; Mahwah et al., 2003; Dunlap and Kemery, 1987; Marquardt, 1980). The interaction term itself and the binary predictor D present were not standardized (note that standardizing them would not have changed any statistical inferences, only the absolute values of the coefficients).
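Below is a minimal sketch of the two accuracy measures and the predictor construction described above. It assumes one record per trial holding the EVs of HV, LV, and D, with None for D in binary trials. Two details are our assumptions: the use of the sample standard deviation, and filling the binary-trial HV-D values before z-scoring. The logistic regression itself, fitted with any statistics package, is omitted.

```python
from statistics import mean, stdev


def choice_accuracies(choices):
    """Return (relative, absolute) choice accuracy for one participant.

    choices: one entry per trial, "HV", "LV", "D", "empty", or None for a missed response.
    """
    n_hv = sum(c == "HV" for c in choices)
    n_lv = sum(c == "LV" for c in choices)
    relative = n_hv / (n_hv + n_lv) if n_hv + n_lv else float("nan")
    absolute = n_hv / len(choices)  # denominator includes D, empty, and missed trials
    return relative, absolute


def zscore(values):
    """Standardize using the sample standard deviation (an assumption)."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def build_predictors(trials):
    """Sketch of the design matrix; trials are dicts with EVs "hv", "lv", "d" (None if binary)."""
    present = [t["d"] is not None for t in trials]
    # Binary trials receive the mean HV-D of the distractor trials (filled before z-scoring).
    hv_d_fill = mean(t["hv"] - t["d"] for t in trials if t["d"] is not None)
    hv_d = [t["hv"] - t["d"] if p else hv_d_fill for t, p in zip(trials, present)]
    z_diff = zscore([t["hv"] - t["lv"] for t in trials])
    z_sum = zscore([t["hv"] + t["lv"] for t in trials])
    z_hv_d = zscore(hv_d)
    # Interaction built from already standardized terms; it is not standardized itself.
    inter = [a * b for a, b in zip(z_diff, z_hv_d)]
    inter_fill = mean(i for i, p in zip(inter, present) if p)
    inter = [i if p else inter_fill for i, p in zip(inter, present)]
    return [
        {"hv_lv": a, "hv_plus_lv": b, "hv_d": c, "interaction": i, "d_present": int(p)}
        for a, b, c, i, p in zip(z_diff, z_sum, z_hv_d, inter, present)
    ]
```

For example, `choice_accuracies(["HV", "HV", "LV", "D", None])` gives a relative accuracy of 2/3 but an absolute accuracy of 0.4. A D choice or a missed response lowers only the absolute measure.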
In addition, we analyzed the influence of the (standardized) value of D on the tendency to choose D (logistic regression) and on the RT of HV and LV choices (linear regression). The RT analysis included additional predictor variables with a significant influence on RT (HV-LV, HV+LV, and D present). Note that only the 300 trials that were also used in the Chau2014 paradigm (but not our 112 novel trials) were included in these regression analyses. The novel trial sets used to dissociate different model predictions were analyzed by a 2 (Dominance) × 2 (Similarity) ANOVA (for details, see above).

Because participants received feedback after each decision, we tested whether improvements over time affected the results for relative or absolute choice accuracy by re-running the regressions with an additional predictor variable that coded the (standardized) trial number. Although we found a small learning effect on absolute choice accuracy (t(122) = 2.12, p = .036, d = 0.19), this did not qualitatively change any of the other effects. When adding the trial number predictor to the analysis of the influence of D’s value on the tendency to choose D, we found a strong learning effect (t(122) = −10.18, p < .001, d = −0.92), suggesting that participants became better at avoiding D over the course of the experiment (but the effect of D’s value remained significant). Hence, future instantiations of our model could incorporate a dynamic component to accommodate this learning effect.

Eye-tracking procedures and pre-processing

Experiment 3 was conducted while participants’ gaze positions were recorded using an SMI RED500 eye-tracking device. The experimental procedure was adapted to make it suitable for an eye-tracking experiment. Participants completed the experiment on a 47.38 × 29.61 cm screen (22" screen diagonal) with a resolution of 1680 × 1050 pixels. During the inter-trial interval, participants were instructed to look at the fixation cross, and the random duration of the inter-trial interval was removed. Instead, a real-time circular area of interest (AOI) with a diameter of 200 pixels was defined around the fixation cross, and participants’ gaze had to stay continuously within this AOI for 1 s for the trial to begin. This ensured that participants were indeed looking at the fixation cross and allowed us to check the calibration on every trial. If this criterion was not met within 12 s, the eye tracker was re-calibrated. The experimenter explained this procedure to the participants. If these re-calibrations happened too frequently (e.g., three times in a row within the same trial, or at least three times in ten trials), the sampling frequency was reduced from the initial 500 Hz to 250 Hz. If the issues persisted until the lowest possible frequency of 60 Hz was reached, the experiment was aborted and participants received their show-up fee and the decision-based bonuses accumulated until then. The first calibration took place just before the training phase, and the eye tracker was re-calibrated after each of the four breaks. This experiment took approximately 90 min to complete.
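The gaze-contingent start of each trial can be sketched as a loop over gaze samples. This is a hypothetical reconstruction, not the experiment code. It assumes time-stamped samples in screen pixels and a fixation cross at the screen center. It also assumes that lost samples (e.g., during blinks) count as leaving the AOI. The stepwise reduction of the sampling frequency is not modeled.

```python
import math

AOI_DIAMETER_PX = 200  # circular AOI around the fixation cross
HOLD_S = 1.0           # gaze must stay inside the AOI continuously for 1 s
TIMEOUT_S = 12.0       # otherwise the eye tracker is re-calibrated


def in_aoi(x, y, center, diameter=AOI_DIAMETER_PX):
    """True if the gaze sample lies inside the circular AOI."""
    return math.hypot(x - center[0], y - center[1]) <= diameter / 2


def trial_start_check(samples, center, hold_s=HOLD_S, timeout_s=TIMEOUT_S):
    """Return "start" or "recalibrate" for one inter-trial interval.

    samples: time-ordered (t_seconds, x_px, y_px) tuples with t = 0 at ITI onset;
    x or y is None for lost samples (e.g., blinks), which count as outside the AOI.
    """
    entered_at = None
    for t, x, y in samples:
        if t > timeout_s:
            return "recalibrate"
        if x is not None and y is not None and in_aoi(x, y, center):
            if entered_at is None:
                entered_at = t
            if t - entered_at >= hold_s:
                return "start"
        else:
            entered_at = None  # leaving the AOI resets the 1-s window
    return "recalibrate"  # samples ran out before the criterion was met


# Hypothetical example: 500 Hz samples, cross at the center of a 1680 x 1050 screen.
CENTER = (840, 525)
steady = [(i / 500, 845, 520) for i in range(600)]
print(trial_start_check(steady, CENTER))  # start
```

Resetting the window whenever gaze leaves the AOI implements the requirement that gaze stays inside *continuously*. Without the reset, brief glances away would be tolerated.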

The raw gaze positions were re-coded into events (fixations, saccades, and blinks) in SMI’s BeGaze2 software package using the high-speed detection algorithm with default settings. AOIs were defined around the frames of the options, and all fixations inside a frame were counted towards the option within that quadrant. Fixations on empty quadrants as well as all fixations outside of the pre-defined AOIs were counted as empty gazes. Fixations within a trial were collapsed and summed to form the dependent variables relative fixation duration and number of fixations. We report results based on the relative fixation duration (i.e., the summed duration of all fixations on a specific quadrant divided by the summed duration of all fixations on any quadrant). Note that this measure is highly correlated with the number of fixations, which yielded similar results.
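The assignment of fixations to options and the computation of relative fixation duration can be sketched as follows. The rectangular frame coordinates are hypothetical. We assume that fixations coded as empty gazes are included in the denominator.

```python
from collections import defaultdict


def assign_aoi(x, y, frames):
    """Map a fixation to the option whose rectangular frame contains it, else "empty".

    frames: {label: (left, top, right, bottom)} in pixels (hypothetical layout);
    empty quadrants have no frame, so their fixations fall through to "empty".
    """
    for label, (left, top, right, bottom) in frames.items():
        if left <= x <= right and top <= y <= bottom:
            return label
    return "empty"


def relative_fixation_durations(fixations, frames):
    """fixations: (x, y, duration_ms) events of one trial -> {label: share of total duration}."""
    totals = defaultdict(float)
    for x, y, duration in fixations:
        totals[assign_aoi(x, y, frames)] += duration
    grand_total = sum(totals.values())
    return {label: d / grand_total for label, d in totals.items()}
```

For instance, with 400 ms on HV, 100 ms on LV, 200 ms on D, and 100 ms elsewhere, the relative fixation durations are 0.5, 0.125, 0.25, and 0.125.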

Eye-tracking analysis I: Tests of value-based attentional and oculomotor capture

To test the hypothesis that the negative influence of D on absolute choice accuracy is driven by value-based attentional/oculomotor capture, we conducted the following three analyses: i.) We tested whether the relative fixation durations of HV, LV, and D depended on their respective EVs (Figure 4A). To this end, the correlations between the options’ relative fixation durations and EVs were calculated for each participant, and the individual Fisher z-transformed correlation coefficients were subjected to a one-sample t-test against 0 at the group level (after checking the normality assumption with a Kolmogorov-Smirnov test at p < .1). ii.) We conducted a path analysis (within each participant) in which the influence of the value of D on absolute choice accuracy was hypothesized to be mediated by the relative fixation duration on D (Figure 4B). iii.) We ran an (across-participant) correlation between the behavioral regression coefficients representing the influence of the value of D on absolute choice accuracy and the regression coefficients representing the influence of the value of D on relative fixation duration on D (Figure 4C).
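Analysis i.) can be sketched with the Python standard library in three steps: a Pearson correlation per participant, a Fisher z-transform, and a one-sample t statistic across participants. The function names are hypothetical. The sketch returns only t and the degrees of freedom; a p-value would come from the t distribution (e.g., via `scipy.stats.ttest_1samp`). It omits the Kolmogorov-Smirnov check and the path analysis.

```python
import math
from statistics import mean, stdev


def pearson_r(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def fisher_z(r):
    """Fisher z-transform; math.atanh raises ValueError for |r| = 1."""
    return math.atanh(r)


def one_sample_t(values, mu=0.0):
    """Return (t statistic, degrees of freedom) for a one-sample t-test against mu."""
    n = len(values)
    return (mean(values) - mu) / (stdev(values) / math.sqrt(n)), n - 1


def group_level_test(participants):
    """participants: one (evs, relative_fixation_durations) pair of sequences per participant."""
    zs = [fisher_z(pearson_r(evs, fix)) for evs, fix in participants]
    return one_sample_t(zs)
```

The z-transform is applied before averaging because correlation coefficients are bounded and skewed near ±1, whereas the transformed values are approximately normal.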

Eye-tracking analysis II: Direct vs. value-dependent influences of attention on choice