Abstract

Learning about the world is critical to survival and success. In social animals, learning about others is a necessary component of navigating the social world, ultimately contributing to increasing evolutionary fitness. How humans and nonhuman animals represent the internal states and experiences of others has long been a subject of intense interest in the developmental psychology tradition, and, more recently, in studies of learning and decision making involving self and other. In this review, we explore how psychology conceptualizes the process of representing others, and how neuroscience has uncovered correlates of reinforcement learning signals to explore the neural mechanisms underlying social learning from the perspective of representing reward-related information about self and other. In particular, we discuss self-referenced and other-referenced types of reward prediction errors across multiple brain structures that effectively allow reinforcement learning algorithms to mediate social learning. Prediction-based computational principles in the brain may be strikingly conserved between self-referenced and other-referenced information.

Historical perspectives on representing other

Learning about the world and making adaptive decisions is a critical feature of cognition. This important link allows human and nonhuman animals to manipulate their environment and survive. Decision-making takes on more complex dynamics when an animal is not solitary, but lives in a community with other members of its own species. We know much about how human and nonhuman animals learn from their own actions and outcomes, and where such self-referenced information is represented in the brain. However, much less is known about the computations underlying how we learn about others. In this review, we examine the presence of other-referenced prediction errors in the brain that represent other’s actions and reward outcomes.

One of the first academic disciplines to attempt to understand how we develop a concept of others is developmental psychology, in which researchers often explore how babies come to understand the world.1, 2 One viewpoint, that of theory–theory, is that, like small scientists testing causal relations,3 children are constantly gathering data from the world and testing the predictions they make using the collected data. Other people could be regarded as stimuli to be learned about based on observation and direct and vicarious experience.

In contrast, simulation theory assumes that we develop our understanding of others through self-referencing, in which we use the machinery that we use for our own mental processes, and project that knowledge onto other’s behaviors.4 Later, this notion of simulating others became associated with the “mirroring” neuronal activity observed in individual cortical motor neurons when macaque monkeys observe an action and perform the same action.5

Notably, these theories have different predictions about how other-referenced information is represented in the brain.6 In a simulationist account, the notion of “other” is derived from one’s sense of the self, that is, egocentrically. Ideas about others would originate from and rely on the self-referenced, egocentric mechanisms. However, in theory–theory, information about others would be processed and evaluated like any other information from the environment, perhaps engaging allocentric systems. Historically, these two ideas capture a central question about how others are represented in the brain.

Observational and social learning

Both human and nonhuman animals rely on observation to navigate the world. Rats,7 birds,8 and chimpanzees9 observe others to learn about their behaviors in a given environmental or social context. One of the earliest forms of observational learning occurs in imitation. Imitative learning typically involves a young organism copying the motor behaviors of a social exemplar. Infant humans10 and infant monkeys11 imitate gross facial expressions made by a caregiver early on in development, sticking out their tongue reflexively when an adult human demonstrates, likely an example of a simple motor response prepared by the brain for social development. Imitation in human children is most famously exemplified in observational learning or, more broadly, social learning studies.12

Social learning occurs when the learner watches another agent act. Notably, without any practice or direct primary reinforcement, the learner can perform the previously observed behavior. This suggests that the learner is able to acquire new knowledge or skills from watching other’s experienced outcomes, possibly through vicarious reinforcement.13 The efficacy of social learning depends on several social variables. For example, similarity between the observer and the observed increases the efficacy of learning.14 Furthermore, there is a close link between empathy and social learning. Empathy is sensitive to learned information about the traits of the other person, such as how fair they are15 or whether or not the other person is considered in-group or out-group relative to the observer.16 In addition, social status directly affects learning based on others in primates, in which high-status individuals are more likely to be imitated.17

In humans, observational learning may be at the core of establishing social and cultural norms.12 In Bandura’s classic study13 on behavioral modeling, children that saw an adult model aggressive behavior toward a large doll later performed the same aggressive behaviors when given the opportunity to interact with the same doll. Observational learning has an important role in development as well as in later social interactions and social cognition. A critical question in social learning is how self and other are represented across the brain structures involved in learning and whether the learning-related signals referenced to self and other are engaging similar or distinct neural computations.

Social learning as we define it in this review focuses on this observation-based learning, in which a subject learns about another through observing their actions as well as their reward outcomes. However, the aspects of social learning are as multitudinous as the facets of a social interaction itself. One could learn about different aspects of the other, such as their personality or their mental state. Social learning could also reflect learning from others about one’s own reward outcomes (e.g., a teacher providing feedback on a student’s essay and grade).

Higher-level social cognition

Learning about others allows us to model the internal states of other entities. The ability to model another’s beliefs is called Theory of Mind (ToM). ToM is arguably the most complex form of understanding other individuals, heavily engaging other-referenced processing. The fact that human babies can model the beliefs of others speaks to how complex and rich their representation of the world is from the beginning. Not surprisingly, understanding the mechanisms behind ToM has long been of great interest, with competing ideas about whether or not ToM represents a separate social process or the convergence of many generalized processes.18 ToM is often measured using a false belief task,19 which tests if a participant can understand whether a social model has an incorrect belief about an object’s location. Notably, very young children, even as young as 11-month-old infants, are capable of modeling the internal beliefs of others and “pass” a false belief test,20 indicating that other-referenced processing in the brain emerges very early in the human ontogeny.

Studying ToM in nonhuman animals, however, has led to more mixed results. For example, monkeys fail the same false belief tasks infants can pass.21 Nevertheless, nonhuman primates have been shown to engage in other forms of understanding or at least representing others. Monkeys display joint attention via gaze following.22–24 Monkeys will typically follow the gaze of another entity toward an object or direction, indicating either that they can understand something about the perspective of the other entity or that other’s gaze is reflexively allocating one’s attention through hard-wired neural mechanisms evolved to deal with the association between other’s gaze angle and something of interest and value. Similarly, monkeys25 and chimpanzees26 have been shown to comprehend what visual information is available to a separate agent—for example, when given an opportunity to steal food, they prefer to do so from someone who does not have visual access to them at the moment of theft. This indicates that primates understand other entities have a different perspective, even if they do not necessarily model the beliefs of the other entity.

Taken together, both humans and nonhuman animals are capable of complex social cognition, but the level of sophistication is what might differentiate them over evolutionary history. Understanding the computations of other-referenced information and representations of self and other will further inform how the brain evolved to enrich what is often referred to as higher-level social cognition.

Reinforcement learning framework

Reinforcement learning (RL) is perhaps the most influential framework developed to describe how an agent learns by interacting with its environment. RL is derived from the behaviorist view of animal behavior, in which an organism’s knowledge of the world is exclusively modeled based on its behavior. Crucially, RL theories focus on mechanistic accounts for behaviors based on several learning-related parameters established from empirical sources.

Both humans and nonhuman animals are excellent models for a variety of learning and decision-making tasks that are grounded in RL theories. Describing learning and learned outcomes through mathematical models is a powerful way to make explicit and testable predictions about how an organism will behave in a particular context and how it will make decisions that take into account internal states, such as motivation and subjective value.27 The RL framework can capture seemingly complex behaviors with relatively simple yet elegant rules, as in the famous Rescorla–Wagner model.28 Although various RL models differ in how they describe different cognitive phenomena, they share several core elements, such as the rate of learning or the salience of stimuli, to fit the specifics of learning and decision-making processes.
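As a concrete illustration of how few parameters such a rule needs, the following is a minimal sketch of the Rescorla–Wagner update in its standard textbook form, ΔV = αβ(λ − ΣV). The function name, the parameter values, and the blocking design used to exercise it are illustrative assumptions and are not taken from the studies reviewed here.

```python
def rescorla_wagner_trial(values, present, lam, alpha, beta=1.0):
    """Apply one Rescorla-Wagner trial in place and return the prediction error.

    values  : dict mapping stimulus name -> associative strength V
    present : stimuli present on this trial
    lam     : maximum associative strength the outcome supports (0 if omitted)
    alpha   : dict mapping stimulus name -> salience (0..1)
    beta    : learning-rate parameter tied to the outcome (0..1)
    """
    # The error is computed from the summed prediction of all present stimuli.
    prediction_error = lam - sum(values[s] for s in present)
    for s in present:
        values[s] += alpha[s] * beta * prediction_error
    return prediction_error


# Hypothetical blocking design: train A alone, then the compound A+B.
values = {"A": 0.0, "B": 0.0}
alpha = {"A": 0.3, "B": 0.3}  # assumed saliences, chosen only for illustration
for _ in range(30):
    rescorla_wagner_trial(values, ["A"], lam=1.0, alpha=alpha)
for _ in range(30):
    rescorla_wagner_trial(values, ["A", "B"], lam=1.0, alpha=alpha)
print(values)
```

Because stimulus A already predicts the outcome by the time B is introduced, the shared prediction error is close to zero and B acquires almost no associative strength, which is how this rule accounts for blocking.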

RL has its roots and applications in both engineering and psychology. RL has its core foundations in the work of Richard Bellman, most famous for developing the Bellman optimality equation and dynamic programming. The more widely appreciated root of RL is conceptualizing how organisms gather information from their environment to learn and make decisions. RL requires an agent that moves through different states, or contexts, in a given environment. Other necessary components include a reward signal, a value function, and a policy. Reward outcome is central to all forms of RL and consists of a quantity the agent gets as a result of its actions within the environment. The agent then computes a value function using that reward outcome that calculates the expected value of certain states/contexts as well as the conjunction of specific states and actions. The agent uses these value functions to develop a set of preferred actions, known as a policy. A model of the environment is an optional component of RL that can provide the organism with guidance on how to move from state to state.

In dynamic programming, developed by Bellman for engineering applications, a complete model of the environment is required. This idea requires the action of an agent to be guided by the expected payoff of the action in addition to the total expected payoff of potential actions in hypothetical future states.29 The same principle applies to temporal difference (TD) models, the predominant form of RL model applied in psychological studies of humans and other animals.30 TD learning notably differs from dynamic programming, as it does not require any model of the environment. Instead, learning is accomplished by comparing expected reward to actual reward after a certain transition in time. This difference is the reward prediction error. This prediction error is used to update the value function and, ultimately, the policy of an agent interacting with its environment. Prediction error signaling is indeed the fundamental attribute of the original models of learning.28 In simple terms, a prediction error calculates the difference between what the animal expects to happen and what actually happens to the animal on a given event or trial.31 This can also be described as an error signal.32
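The model-free update described above can be sketched with the simplest TD rule, TD(0), in which the prediction error is δ = r + γV(s′) − V(s). This is a minimal sketch under assumed values: the two-state "cue then delay" episode, the learning rate, and the discount factor are hypothetical and chosen only to make the behavior easy to follow.

```python
def td_error(reward, value_next, value_current, gamma=0.9):
    """Reward prediction error: received plus discounted future value, minus expected."""
    return reward + gamma * value_next - value_current


def td0_episode(values, transitions, alpha=0.1, gamma=0.9):
    """Run one episode of TD(0) in place and return the prediction errors.

    values      : dict mapping state -> estimated value V(state)
    transitions : list of (state, reward, next_state); next_state is None at the end
    """
    errors = []
    for state, reward, next_state in transitions:
        value_next = 0.0 if next_state is None else values[next_state]
        delta = td_error(reward, value_next, values[state], gamma)
        values[state] += alpha * delta  # no model of the environment is consulted
        errors.append(delta)
    return errors


# Hypothetical task: a cue is followed by a delay, and reward arrives after the delay.
episode = [("cue", 0.0, "delay"), ("delay", 1.0, None)]
values = {"cue": 0.0, "delay": 0.0}
first_errors = td0_episode(values, episode)
for _ in range(499):
    last_errors = td0_episode(values, episode)
print(first_errors, last_errors, values)
```

On the first episode the error occurs only at reward delivery. With repeated experience the value estimates absorb the reward, so the error at delivery shrinks toward zero and the cue comes to carry the discounted value of the upcoming reward.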

Predictive coding and reinforcement learning in the brain

Prediction errors are effectively used as the signal that drives self-referenced learning. Organisms update their behavior on a trial-by-trial basis to account for new information provided by this discrepancy between expectation and outcome. In particular, the reward prediction error, which calculates the difference between expected payoff and received payoff, has been established as a striking correlate of a mathematical learning rule in neurobiology.33

A classic type of reward prediction error encoded in the brain is consistent with the type required for TD learning.34 Owing to the essential nature of reward to adaptive behavior, areas that encode reward are some of the best-studied regions of the brain besides the regions involved in sensorimotor transformations. Classically, the dopaminergic substantia nigra35 and the ventral tegmentum36 as well as the dorsal and ventral striatum37 have been shown to be primary areas that process reward receipt and valuation, with dopamine’s relationship to reward now known as one of the most iconic behavior-to-neurotransmitter associations.38 As one might expect, these areas provide strong examples of encoding reward prediction errors.39, 40

Reward prediction error signals have also been found elsewhere in the brain. Primate lateral habenula neurons encode reciprocal information about reward outcomes to the previously described dopamine neurons in the midbrain.41 Notably, the activity of the lateral habenula neurons precedes the activity of the dopamine neurons, suggesting that the lateral habenula neurons serve as an input for the prediction error signal detected in the midbrain.41 Furthermore, functional magnetic resonance imaging (fMRI) in humans has revealed the presence of multiple kinds of prediction errors and other learning-related signals across many reward-related structures in the cerebral cortex,42–44 indicating that prediction error signaling is a widely generalized mechanism linking learning and decision-making. Applying these models to conceptualizing behavior and neural activity has proved fruitful in the study of learning and decision-making, perhaps most famously in the finding that midbrain dopamine neurons represent the TD reward prediction error.33

At least two important branches of research into RL in the neurosciences continue today. The first involves the potential balance between neural substrates of model-free (basic TD learning) vs. model-based (akin to dynamic programming) learning.44 These studies have collectively identified neural substrates of the model-based state transition error,45 representation of model-based in addition to model-free prediction error in the striatum and ventromedial prefrontal cortex,46 as well as brain areas that act as arbiters between model-free and model-based approaches.47 The second branch is vicarious reinforcement, which can also be modeled in an RL framework to account for how other’s behaviors could be used to update our own learning and decision-making processes using vicarious classes of prediction errors.48 RL can potentially be implemented in social learning about the actions and rewards of others.48–50

Such vicarious reinforcement in an RL framework would intuitively have to be performed in a model-based manner, as it is unclear how a model-free RL system could possibly learn about another agent without creating and updating a model of the other agent’s potential thoughts and future actions. Accordingly, research into how humans may use RL mechanisms to learn and make inferences about others has used a modified Q-learning framework that involves a simulated other.50 Still, although RL constitutes a strong opportunity to explain and conceptualize social learning, there exist other computational frameworks that may be applied to social cognition. For example, some have argued that the putative TD reward prediction error forming the basis of RL theories may instead be interpreted in terms of expectation violation or even salience, especially in relation to activity in cortical regions.51, 52 Other models specifically designed to elucidate mentalizing via game-theoretic approaches have been highly successful in exploring social behaviors in the relative absence of an explicit RL framework. These mainly consist of algorithms that produce iterative representations of other agents recursively ad infinitum.53, 54 Such approaches have not only explained typical human behavior in a stag-hunt game, but have also identified specific deficits in recursive social cognition in patients with autism spectrum disorders.55

Prediction error signals can occur for a variety of different events to be learned about, like action values, reward value, and reward timing.40, 56, 57 Furthermore, prediction errors are not limited to the reward domain. Evidence of prediction error calculations is even present in sensorimotor areas of the brain that fine-tune actions, such as the cerebellum and the frontal eye fields31 (see Table 1 for types of prediction errors and associated brain regions). Therefore, a critic signal is responsible for correcting behavioral outputs and cognitive representations across a variety of functional domains of the brain, endorsing the notion that predictive coding is a key feature of the brain.

Table 1.

Representative list of brain areas in which signals that can be described as prediction errors have been found from either primate electrophysiology or human neuroimaging studies

| Prediction error computed | Correlated brain area |
|---|---|
| Egocentric | |
| Self action: (action executed) − (action intended) | SC31; Cerebellum31; FEF31; LIP31; dlPFC31; OFC31; ACC31; MCC85, 86 |
| Self reward outcome: (actual reward outcome) − (expected reward outcome) | VTA33, 34, 36, 57; VS/other striatum31, 56, 84; SN35, 56; LHb41; ACC86; dlPFC31 |
| Self reward value: (actual value of reward) − (expected value of reward) | vmPFC40; VTA40; SN40 |
| Self reward timing: (actual timing of reward) − (expected timing of reward) | VTA35, 57; SN35, 57 |
| Allocentric | |
| Other action: (other’s actual action) − (other’s expected action) | dlPFC84; dmPFC91; VS/other striatum63–68 |
| Other reward outcome: (other’s actual reward) − (other’s expected reward) | ACCg48; vmPFC84; dmPFC42; MTG42; STS42; TPJ42 |
| Other motivation: (other’s actual motivation) − (other’s expected motivation) | ACCg48 |

This is not a comprehensive list but rather a list to highlight the presence of predictive coding in the brain. Note that the list for the action-related error signals is mostly adapted from the review by Schultz and Dickinson.31

ACC anterior cingulate cortex, ACCg anterior cingulate gyrus, dlPFC dorsolateral prefrontal cortex, dmPFC dorsomedial prefrontal cortex, FEF frontal eye field, LHb lateral habenula, LIP lateral intraparietal area, MCC middle cingulate cortex, MTG middle temporal gyrus, OFC orbitofrontal cortex, SC superior colliculus, SN substantia nigra, STS superior temporal sulcus, TPJ temporoparietal junction, vmPFC ventromedial prefrontal cortex, VS ventral striatum, VTA ventral tegmental area

As strides have been made in describing increasingly complex human behaviors, attempts to extend the study of learning and decision-making for the self to learning and decision-making that takes into account the behavior of others have become a subject of intense interest. Reacting appropriately to conspecifics and correctly anticipating their behavior is a necessity for social organisms, requiring them to rely on understanding each other just as much as they rely on understanding where to forage for food to survive. As expected, learning about others and representation of self and other are mediated by several reward-related brain structures.

Neural basis of self-referenced and other-referenced reinforcement signals

In this section, we discuss selected research findings that have provided novel insights into how the brain signals self-referenced and other-referenced information in the domain of reinforcement learning and decision-making. When applicable, we focus on other-referenced prediction error signals regarding actions and reward outcomes relevant to reward-guided social learning.

Striatum

Recent advances in neuroscience have provided support for the use of RL mechanisms in learning about others. Although the striatum has long been a focus of research on self-referenced reward information and prediction error in the brain, the role of the striatum in learning is not restricted to self-referential processing. In a study examining observational learning and vicarious reinforcement with respect to dopamine release, observer rats vocalized more and showed significantly more dopamine release in the ventral striatum when seeing another rat receive reward compared to when reward was delivered to an empty box.58 These results extend the role of dopamine release in association with prediction error signaling to the social domain, implicating similar RL mechanisms in signaling other’s reward outcome. Notably, the degree of dopamine release for other’s reward outcome was still substantially weaker compared to one’s own reward, suggesting that similar mechanisms are utilized but in ways that could be differentiated for self and other.58 In monkeys engaged in a task environment involving actions from and reward outcomes for self and other, neurons in the striatum signal one’s received reward but not the reward received by others while signaling the actions performed by others,59 indicating that there may be specializations for signaling self-referenced and other-referenced information in the striatum, and that this differentiation may further depend on the encoding of action and reward outcome of another individual.

There is also evidence that the striatum represents other-referenced reward and prediction errors from human fMRI studies. When socially evaluated by peers, previous positive social interaction with an individual led to that individual being associated with positive outcomes, which correlated with activity in the striatum as well as the orbitofrontal cortex. This suggests that social interaction can similarly activate brain regions that typically signal reinforcing values of primary reinforcers.60 The striatum also appears to be involved in the relative valuation of reward where other’s performance is compared to one’s own performance.61, 62 In an ultimatum game where subjects give money to a partner and receive a proportion of it back, activation of the striatum was also correlated with prediction errors that reflect the difference between the offer the subject received from the partner and what they expected the partner to give, but not between how the subject expected to feel and how they actually felt, which appears to be reflected in ventromedial prefrontal cortex (vmPFC) and the posterior cingulate cortex.63

Furthermore, RL-like prediction errors regarding expectations formed about how others viewed the subjects were correlated with activity in the striatum, OFC, rACC, and anterior insula.60 A variety of economic-game style tasks that require learning about other’s actions and outcomes and/or modeling the internal states of others have reported that the striatum is implicated in these processes. For example, the observed actions of others influence one’s own economic decisions and this is reflected in striatal BOLD response.64 Furthermore, if added payoff for social learning is removed, so that only pure observation of others is necessary for the task, an interpersonal prediction error still occurs in the striatum.65 Similarly, there is evidence from a reciprocity game that learning to trust or not trust others based on their behavior is mediated by a prediction error signal in the caudate nucleus.66

Interestingly, these other-referenced prediction errors in the striatum may even be associated with social norms, given their activation in economic games that rely on feedback from others. A prediction error type signal associated with going against group opinion also has been shown to correlate with how subjects changed their behavior to conform with the group on subsequent judgements.67

In a trust game where an investor gives money to a trustee who can return a proportion of the money, the difference between the trustee’s repayment ratio expected by the actor and what the trustee actually repaid resulted in a prediction error in the striatum in the subjects who relied on the behavior of the partner for learning.68 In addition, in the same study, the difference between the investment ratio and the investor’s model of the other’s model of what the investor will do formed a second order prediction error. Notably, the study found that a subject who failed to deeply model the mind of the partner experienced more striatal correlates of the first type of prediction error (i.e., relying more on the action of the other), whereas the more a subject modeled the mind of the partner, the more likely they were to activate the striatum for the second order prediction error (i.e., relying more on the mental representation of the other).

Anterior cingulate cortex

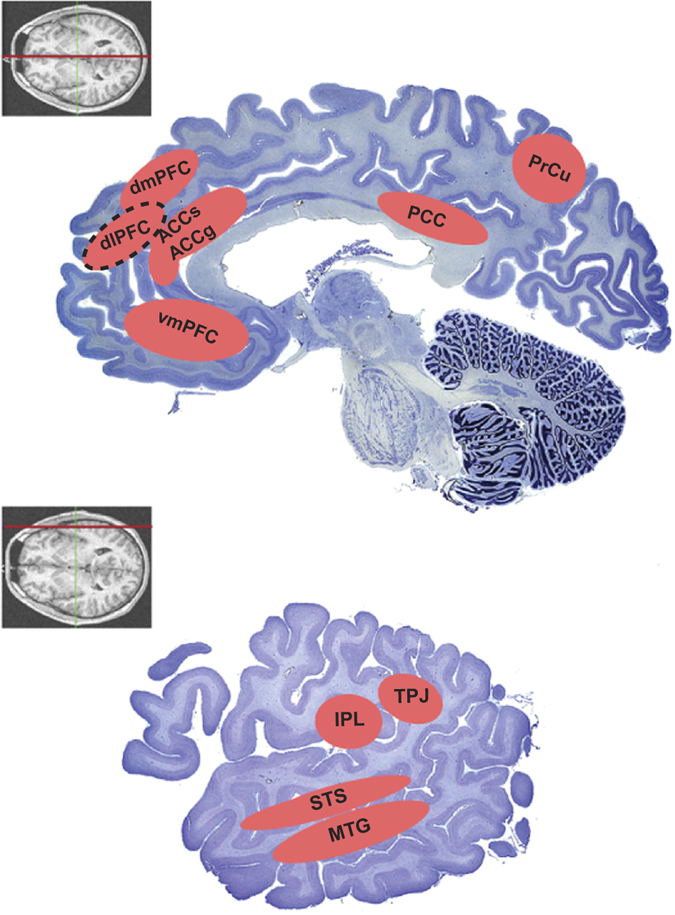

The anterior cingulate cortex (ACC) is implicated in a variety of behaviors and cognitive states,48, 69–71 and could be summarized as an integrative area that relates to motivation and initiating reward-guided or goal-directed behaviors. Seen in this light, ACC may be a core locus of integrating different streams of self-referenced and other-referenced information for generating an adaptive action plan (see Fig. 1 for visualization of other-referenced reward and action areas of the brain). This is bolstered by the considerable evidence that ACC is engaged during social decision-making, with its neuronal signals reflecting information processing about self, other, or both.48, 72–77 In the domain of observational learning, the ability of mice to learn by observing the shock of conspecifics can be effectively abolished by ACC-specific deletion of calcium currents.78 Relatedly, observational aspects of pain have been a major focus for investigating empathy in the human brain. Observing a demonstration of another person being injured and experiencing pain elicits empathic concerns and actively engages a specific portion of the ACC that is also activated when experiencing pain.79 Such shared mechanisms support the idea that observation-driven vicarious pain processing was co-opted or repurposed from processing one’s own pain.

Fig. 1.

Key brain regions involved in representing information with respect to another individual. These areas are often implicated in mentalizing, detecting the beliefs of others, or signaling decision variables concerning another individual. See text for how these areas are implicated in representing information with respect to another individual. The insets with coronal magnetic resonance images indicate the sections (red line) that correspond to the Nissl-stained sagittal slices. The dotted outline around an area indicates that this area is projected medially from the lateral surface for the purpose of including the area on a more medial aspect of the brain. Adapted with permission from http://www.brains.rad.msu.edu, http://brainmuseum.org, supported by the US National Science Foundation and the National Institutes of Health. ACCg anterior cingulate gyrus, ACCs anterior cingulate sulcus, dlPFC dorsolateral prefrontal cortex, dmPFC dorsomedial prefrontal cortex, IPL inferior parietal lobule, MTG middle temporal gyrus, PCC posterior cingulate cortex, PrCu precuneus, STS superior temporal sulcus, TPJ temporoparietal junction, vmPFC ventromedial prefrontal cortex

ACC may represent a critical junction in the cortical pathway of representing and differentiating self and other through processing motivation from the perspectives of self and others.48 Monitoring the spiking activity of individual ACC neurons while monkeys played a social reward allocation task, in which an actor animal has an option to deliver or withhold juice rewards to and from the recipient,80 showed that there are specializations with respect to signaling the reward outcome of self and other. More specifically, in the gyrus of ACC (ACCg), some neurons exclusively encode self reward, whereas others exclusively encode other’s reward, and still others encode the reward outcomes of both self and other.81 Notably, lesioning ACCg, but not the ACC sulcus (ACCs), abolishes social valuation in monkeys,82 indicating a causal contribution of ACCg in social cognition. Similarly, in the human brain, the rostral ACC neurons, overlapping with the ACCg neurons mentioned above, signal reward outcomes from others during a card game requiring observational learning.77

Furthermore, neurons in ACC have been shown to mediate collective reward-guided actions when monkeys play a prisoner’s dilemma game,74 providing strong evidence that self and other processes are integrated in ACC. The evidence of self and other integration in ACC is also supported by the presence of an anatomical gradient along the human cingulum mapping self and other in a trust game that is absent without a responding partner.83 Moreover, it has been postulated that the ACCs and the ACCg represent distinct streams of information.18, 48, 72, 82

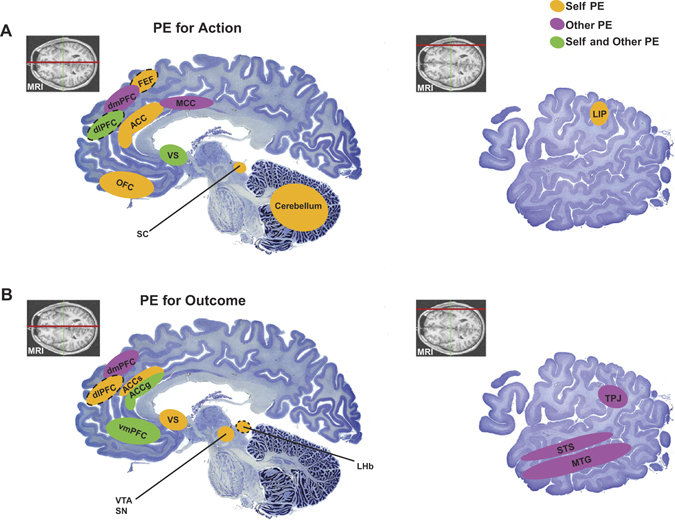

Accurate social learning requires multiple types of prediction error signals with respect to others (see Fig. 2 for representation of self-referencing and other-referencing prediction errors in the brain). For example, observational action prediction errors signal the difference between the actual action of the other and the expected action, whereas vicarious outcome prediction errors signal the difference between the actual and the predicted outcome of the other.48, 84 Furthermore, to estimate the motivation of others, vicarious dynamic prediction errors signal the difference between the actual and estimated movement kinematics of others during their actions.48 Prediction errors for self-referenced action values have been reported in ACC,85, 86 and both the sulcus and gyrus portions of the ACC are implicated in self-reward valuation and decision-making.87, 88 The ACCs is best studied for its involvement in a multitude of functions, from error detection and motivation to cognitive control and response selection.85 Recently, there has been extensive debate over whether the ACCs is involved in computing value-guided behavioral adaptation or cognitive control.69–71
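The first two other-referenced signals named in this section, the observational action prediction error and the vicarious outcome prediction error, can be sketched as simple difference terms. This is an illustrative sketch only: representing the other's expected action as a probability over two hypothetical options, the function names, and the learning rate are assumptions and do not reproduce the model of any particular study cited here. The kinematics-based vicarious dynamic prediction error is not covered.

```python
def observational_action_pe(action_probs, observed_action):
    """Action prediction error: 1 minus the probability assigned to the observed action.

    It is large when the other does something the observer did not expect.
    """
    return 1.0 - action_probs[observed_action]


def update_action_probs(action_probs, observed_action, lr=0.2):
    """Shift expectations toward the observed action; probabilities still sum to 1."""
    return {
        action: p + lr * ((1.0 if action == observed_action else 0.0) - p)
        for action, p in action_probs.items()
    }


def vicarious_outcome_pe(expected_reward, observed_reward):
    """Outcome prediction error: the other's actual reward minus their predicted reward."""
    return observed_reward - expected_reward


# Hypothetical trial: the observer has no prior expectation about the other's choice.
probs = {"left": 0.5, "right": 0.5}
action_pe = observational_action_pe(probs, "left")
probs = update_action_probs(probs, "left")

# The observer predicted a small reward for the other, who then received a full reward.
expected_other_reward = 0.3
outcome_pe = vicarious_outcome_pe(expected_other_reward, observed_reward=1.0)
expected_other_reward += 0.2 * outcome_pe  # same assumed learning rate as above
print(action_pe, probs, outcome_pe, expected_other_reward)
```

Keeping the two errors as separate terms mirrors the distinction drawn in the text: one tracks what the other does, the other tracks what the other receives, and each can update its own expectation.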

Fig. 2.

Key brain regions that have been shown to exhibit correlates of self-referenced prediction errors (in yellow), other-referenced prediction errors (in purple), or both kinds of prediction errors (in green) in the domain of actions (a) and reward/value outcomes (b). For motor learning-related errors, we only provide representative areas as they are beyond the scope of this review. It is worthwhile to note that the distributions of these areas for their involvements in self-referenced or other-referenced prediction error signaling are naturally constrained by the amount of research examining different types of prediction errors. The insets with coronal magnetic resonance images indicate the sections (red line) that correspond to the Nissl-stained sagittal slices. The dotted outline around an area indicates that this area is projected medially from the lateral surface for the purpose of including the area on a more medial aspect of the brain. Adapted with permission from http://www.brains.rad.msu.edu, http://brainmuseum.org, supported by the US National Science Foundation and the National Institutes of Health. ACC anterior cingulate cortex, ACCg anterior cingulate gyrus, ACCs anterior cingulate sulcus, dlPFC dorsolateral prefrontal cortex, dmPFC dorsomedial prefrontal cortex, LHb lateral habenula, LIP lateral intraparietal area, MTG middle temporal gyrus, OFC orbitofrontal cortex, SC superior colliculus, SN substantia nigra, STS superior temporal sulcus, TPJ temporoparietal junction, vmPFC ventromedial prefrontal cortex, VS ventral striatum, VTA ventral tegmental area

Notably, there seem to be functional dissociations between the gyrus and sulcus in signaling self-referenced and other-referenced information. For example, prediction errors related to the choices made by another person are found in the ACCg but not in the ACCs.48, 89 Furthermore, ACCs neurons encode reward outcomes in a self-referenced manner in a social decision-making task, whereas a sub-group of ACCg neurons do so in an other-referenced manner.72 Similarly, in a competitive game, self-referenced reward outcome prediction errors correlate with activity in the ventral striatum, but, critically, belief-based prediction errors about the competitive partner’s action are encoded in rostral ACC (rACC).90 Furthermore, in a social decision-making task that involves using advice from another person, learning rates for self and other are differentially computed by the ACCs and the ACCg, respectively.49 Overall, although social signals have been detected throughout ACC, the ACCg is most clearly linked to other-referenced information processing, based on accumulating evidence spanning whole-brain neuroimaging, electrophysiological recording, and anatomical specializations.48
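The idea of separate learning rates for self-referenced and other-referenced information can be illustrated with a minimal Python sketch. It is a deliberate simplification that assumes two independent delta-rule learners, one tracking one's own reward history and one tracking how reliable an adviser is; it does not reproduce the model used in the cited study. The class name `DeltaLearner`, the learning rate values, and the trial sequence are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class DeltaLearner:
    """Tracks one probability estimate with a fixed learning rate."""
    learning_rate: float
    estimate: float = 0.5

    def update(self, observed: float) -> float:
        """Apply one delta-rule step and return the prediction error."""
        prediction_error = observed - self.estimate
        self.estimate += self.learning_rate * prediction_error
        return prediction_error


# Hypothetical learning rates: the two streams need not share a value.
self_learner = DeltaLearner(learning_rate=0.3)   # own reward history
other_learner = DeltaLearner(learning_rate=0.1)  # adviser reliability

# Each trial: (was the chosen option rewarded?, was the advice correct?)
trials = [(1, 1), (0, 1), (1, 0), (1, 1)]
for rewarded, advice_correct in trials:
    self_pe = self_learner.update(rewarded)
    other_pe = other_learner.update(advice_correct)
    print(f"self PE={self_pe:+.3f}  other PE={other_pe:+.3f}")
```

In this sketch the same update rule serves both streams, and only the learning rate and the quantity being tracked differ, which mirrors the suggestion that a shared computation can be applied to self and other in distinct subregions.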

Prefrontal cortex

The prefrontal cortex has many subsections, and is often thought of as the locus of higher-level cognitive processes related to decision making. It is intuitive, then, that many parts of the prefrontal cortex process other-referenced information. In a turn-taking decision-making task in pairs of monkeys, in which observing erroneous choices by the partner informs the association between a specific target and a possible reward, neurons in the dorsomedial frontal cortex encode the errors made by the partner monkey, serving a social error monitoring function,91 which relies on other-referenced information. Similarly, in humans, the vmPFC encodes the value of observing another person’s behavior in a reward-seeking task, and this signal correlates with that individual’s move towards conforming to social norms.92 Other types of prediction errors are also found in the prefrontal cortex. When participants learn the contingencies between stimulus and reward outcome through direct experience or by observing the action and outcome of another person, different reward-related structures signal learning-related events for self and other. In such scenarios, the ventral striatum signals self prediction errors, the dorsolateral prefrontal cortex (dlPFC) signals the other’s action prediction errors, and the vmPFC signals the other’s outcome prediction errors.84

Furthermore, Suzuki et al.50 examined the neural correlates of learning stimulus-reward outcome contingencies when participants learned the association directly and when they predicted which stimulus another person would likely choose, which encouraged the participants to model or mentally simulate the other individual. This manipulation necessitated the use of an other-referenced prediction error, one that calculates the discrepancy between what the other person does and what the participant thought the other person would do. Again, different parts of the prefrontal cortex are engaged as a function of self-referenced and other-referenced computation. The vmPFC tracked the simulated other’s prediction error in a similar manner to self, whereas the simulated other’s action prediction error was signaled by the dorsomedial prefrontal cortex (dmPFC) and dlPFC.50 Notably, neuronal activity in the monkey dmPFC has been shown to closely reflect the strategy of an opponent in a competitive reward-based task, further strengthening the specialized role of dmPFC in simulating others.93 This is consistent with the findings of Behrens et al.,49 in which separable reward signals were computed in ACCg and ACCs for other-referenced and self-referenced reward information, respectively, and these signals were integrated in vmPFC.
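The two other-referenced signals described above, a prediction error on the other person's outcome and a prediction error on the other person's action, can be made concrete with a short Python sketch. It assumes a softmax choice rule over the observer's estimate of the other's option values and a simple additive update that uses both errors. The function names, the parameter values, and the way the two errors are combined are illustrative assumptions, not the model fitted in the cited work.

```python
import math


def softmax_choice_prob(values, inverse_temperature=3.0):
    """Probability of each option under a softmax over estimated values."""
    exps = [math.exp(inverse_temperature * v) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def observe_other(values, chosen, reward, lr_outcome=0.2, lr_action=0.2):
    """Update the observer's estimate of the other's values after one trial.

    Returns (action_pe, outcome_pe, new_values).
    """
    probs = softmax_choice_prob(values)
    # Action prediction error: the other's actual choice (coded as 1)
    # minus the probability the observer had assigned to that choice.
    action_pe = 1.0 - probs[chosen]
    # Outcome prediction error: the other's reward minus the value the
    # observer had estimated for the chosen option.
    outcome_pe = reward - values[chosen]
    new_values = list(values)
    # Assumed combination rule: both errors nudge the chosen option's value.
    new_values[chosen] += lr_outcome * outcome_pe + lr_action * action_pe
    return action_pe, outcome_pe, new_values


# One observed trial: the other picks option 0 and is rewarded.
action_pe, outcome_pe, updated = observe_other([0.5, 0.5], chosen=0, reward=1.0)
print(action_pe, outcome_pe, updated)
```

Note that the action prediction error can be computed as soon as the other's choice is seen, before any outcome is revealed, whereas the outcome prediction error requires the reward. This difference in timing is one reason the two signals can in principle be separated experimentally.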

Finally, the orbitofrontal cortex (OFC) is a key cortical region for signaling reward value94 and is also associated with value prediction error signaling.95 Although OFC neurons are sensitive to social reward context involving self and other,96 the reward outcome encoding of these neurons seems to be self-referenced,72 suggesting that OFC may be more restricted to mediating behavioral adaptations, including adjusting to social context, in a self-referenced framework.

Encoding of various prediction errors regarding others is a signature of many reward-related regions of the brain, suggesting a tight biological link between learning for oneself and learning about or from others. In particular, these findings endorse the notion that comprehending and learning from the experience of another person is processed under shared predictive coding principles, with particular regional specializations for the self and other domains.

Temporal parietal junction and mentalizing networks

When ToM is engaged, demanding the modeling of another individual, the precuneus (PrCu), posterior cingulate cortex (PCC), superior temporal sulcus (STS), temporal parietal junction (TPJ), and medial prefrontal cortex (mPFC) are preferentially activated relative to other regions.97 STS and TPJ have long been considered the neural hotspots for higher-level cognition such as ToM and modeling the minds of others. TPJ, in particular, has been regarded as a uniquely social cognition-focused area,98 with evidence that TPJ is necessary for representing the beliefs of others.99 A meta-analysis of ToM-related areas determined that the most reliably implicated areas are TPJ and mPFC, with activations in PrCu and STS being sensitive to the type of ToM engaged across various ToM measures.100 Notably, researchers have found a close link between self-referential thoughts and mentalizing about others in mPFC,101 indicating how self-referenced and other-referenced information are associated with one another in one of the key regions of the mentalizing network.

Notably, recruitment of TPJ and STS is not specific to tasks designed to measure ToM. TPJ and STS are also activated when another’s information is considered to guide one’s own actions, suggesting their involvement in broadly defined other-referenced computation. When participants take into account the advice of another person to make a decision about obtaining potential rewards, dmPFC, middle temporal gyrus (MTG), STS, and TPJ activations signal social prediction error.49 Furthermore, when playing a simplified poker game against a human opponent and a computer algorithm, TPJ emerges as a unique region for predicting social decisions that are behaviorally relevant.102 In addition, STS is well known for its role in social perception from visual cues.103 Therefore, tracking and interpreting socially relevant information may be the fundamental building blocks of these areas, which constitute a so-called mentalizing network. Recently, an elegant proposal was put forward suggesting that TPJ is a computational hub in which distinct cognitive processes, like attention, memory, sensory perception, and language, all converge to generate a representation of behaviorally relevant social context.104

Corresponding to this idea, many of the nodes in this proposed mentalizing network have been observed to perform additional functions potentially relevant to other aspects of social behavior. For example, PCC has been proposed to compute subjective value105 as well as other-related social processes including person perception, person updating, and first impressions.106–108

Concluding remarks

Koster-Hale and Saxe109 have proposed that mentalizing may be a fundamentally predictive process. Although our current understanding of how the brain implements processes described in theory–theory or simulation theory is not complete, the fact that other-referenced prediction errors appear to be represented neurally suggests that there are shared prediction-based learning mechanisms for social learning and reinforcement learning. The neural mechanisms underlying other-referenced learning may be co-opted from the predictive mechanisms used to learn for the self, one of which is prediction error signaling. Connecting the terminology of reinforcement learning and decision making to the social domain can enhance the development of ideas and methods in studying how we think about others.110

There are many additional dimensions of other-referenced learning that remain to be explored. As experimenters continue to push the limits of studying social learning, interaction, and valuation, we may find ourselves brushing up against the limits of how the brain operationalizes what is “social” and “nonsocial”. Beyond other-referenced representations in the brain, social processing can also refer to the comparison of social agents and nonsocial, yet interactive, agents. Although divergent brain areas may apply similar computations to account for self and other, the neural processes underlying social information processing may not be categorically distinct from other types of information, but rather lie on a continuum. For example, when human participants play a game with other individuals or slot machine partners programmed to display varying levels of generosity, activations in TPJ, PCC, PrCu, vmPFC, and several other regions reflect the prediction errors for generosity similarly for both human and slot machine partners.111

This finding, along with many others reporting modulatory differences in brain activations between social and nonsocial information, suggests that the brain may not in fact differentiate the two categorically but instead processes information as a function of the algorithms demanded by specific behavioral constraints. Perhaps social functions could be regarded as repurposed ancestral functions of the brain that evolved to deal with an organism’s social environment.112 If so, the notion of the “social brain” should be concerned with how specific sets of commonly used computational algorithms are utilized to guide adaptive behaviors.

Acknowledgements

We would like to thank A.N., L.S., and D.L. for thoughtful advice on the manuscript. This work was supported by the National Institute of Mental Health (S.W.C.C., R00-MH099093, R01-MH110750, R21-MH107853), the Alfred P. Sloan Foundation (S.W.C.C., FG-2015-66028), and the Natural Sciences and Engineering Research Council of Canada PGSD Fellowship (M.P., 471313).

Author contributions

J.A.J., M.P., C.T., and S.W.C.C. wrote the paper.

Competing interests

The authors declare no competing financial interests.

References

- 1. Bloom, P. How Children Learn the Meanings of Words (MIT Press, 2000).

- 2. Wynn K. Infants possess a system of numerical knowledge. Curr. Dir. Psychol. Sci. 1995;4:172–177. doi: 10.1111/1467-8721.ep10772615.

- 3. Keil FC. Science starts early. Science. 2011;331:1022–1023. doi: 10.1126/science.1195221.

- 4. Goldman AI. In defense of the simulation theory. Mind Lang. 1992;7:104–119. doi: 10.1111/j.1468-0017.1992.tb00200.x.

- 5. Rizzolatti G, Craighero L. The mirror-neuron system. Annu. Rev. Neurosci. 2004;27:169–192. doi: 10.1146/annurev.neuro.27.070203.144230.

- 6. Apperly IA. Beyond simulation–theory and theory–theory: Why social cognitive neuroscience should use its own concepts to study “theory of mind”. Cognition. 2008;107:266–283. doi: 10.1016/j.cognition.2007.07.019.

- 7. Heyes C, Jaldow E, Nokes T, Dawson G. Imitation in rats (Rattus norvegicus): the role of demonstrator action. Behav. Process. 1994;32:173–182. doi: 10.1016/0376-6357(94)90074-4.

- 8. Akins CK, Klein ED, Zentall TR. Imitative learning in Japanese quail (Coturnix japonica) using the bidirectional control procedure. Anim. Learn. Behav. 2002;30:275–281. doi: 10.3758/BF03192836.

- 9. Tomasello M, Davis-Dasilva M, Camak L, Bard K. Observational learning of tool-use by young chimpanzees. Hum. Evol. 1987;2:175–183. doi: 10.1007/BF02436405.

- 10. Field T, Woodson R, Greenberg R, Cohen D. Discrimination and imitation of facial expression by neonates. Science. 1982;218:179–181. doi: 10.1126/science.7123230.

- 11. Ferrari PF, et al. Neonatal imitation in Rhesus macaques. PLoS Biol. 2006;4:e302. doi: 10.1371/journal.pbio.0040302.

- 12. Bandura, A. & Walters, R. H. Social Learning Theory (Prentice-Hall, 1977).

- 13. Bandura A, Ross D, Ross SA. Vicarious reinforcement and imitative learning. J. Abnorm. Soc. Psychol. 1963;67:601–607. doi: 10.1037/h0045550.

- 14. Manz CC, Sims HP. Vicarious learning: the influence of modeling on organizational behavior. Acad. Manag. Rev. 1981;6:105–113.

- 15. Singer T, et al. Empathic neural responses are modulated by the perceived fairness of others. Nature. 2006;439:466–469. doi: 10.1038/nature04271.

- 16. Xu X, Zuo X, Wang X, Han S. Do you feel my pain? Racial group membership modulates empathic neural responses. J. Neurosci. 2009;29:8525–8529. doi: 10.1523/JNEUROSCI.2418-09.2009.

- 17. Horner V, Proctor D, Bonnie KE, Whiten A, de Waal FBM. Prestige affects cultural learning in chimpanzees. PLoS ONE. 2010;5:e10625. doi: 10.1371/journal.pone.0010625.

- 18. Behrens TEJ, Hunt LT, Rushworth MFS. The computation of social behavior. Science. 2009;324:1160–1164. doi: 10.1126/science.1169694.

- 19. Wimmer H, Perner J. Beliefs about beliefs: representation and constraining function of wrong beliefs in young children’s understanding of deception. Cognition. 1983;13:103–128. doi: 10.1016/0010-0277(83)90004-5.

- 20. Onishi KH, Baillargeon R. Do 15-month-old infants understand false beliefs? Science. 2005;308:255–258. doi: 10.1126/science.1107621.

- 21. Drayton, L. A. & Santos, L. R. A decade of theory of mind research on cayo santiago: insights into rhesus macaque social cognition. Am. J. Primatol. 78, 106–116 (2014).

- 22. Rosati, A. G., Arre, A. M., Platt, M. L. & Santos, L. R. Rhesus monkeys show human-like changes in gaze following across the lifespan. Proc. R. Soc. B Biol. Sci. 283, 20160376 (2016).

- 23. Ferrari PF, Kohler E, Fogassi L, Gallese V. The ability to follow eye gaze and its emergence during development in macaque monkeys. Proc. Natl Acad. Sci. 2000;97:13997–14002. doi: 10.1073/pnas.250241197.

- 24. Shepherd SV. Following gaze: gaze-following behavior as a window into social cognition. Front. Integr. Neurosci. 2010;4:5. doi: 10.3389/fnint.2010.00005.

- 25. Flombaum JI, Santos LR. Rhesus monkeys attribute perceptions to others. Curr. Biol. 2005;15:447–452. doi: 10.1016/j.cub.2004.12.076.

- 26. Hare B, Call J, Agnetta B, Tomasello M. Chimpanzees know what conspecifics do and do not see. Anim. Behav. 2000;59:771–785. doi: 10.1006/anbe.1999.1377.

- 27. Montague PR, Hyman SE, Cohen JD. Computational roles for dopamine in behavioural control. Nature. 2004;431:760–767. doi: 10.1038/nature03015.

- 28. Rescorla RA, Wagner AR. A theory of Pavlovian conditioning: Variations in the effectiveness of reinforcement and nonreinforcement. Classic. Cond. II Curr. Res. Theory. 1972;2:64–99.

- 29. Bellman R. Dynamic programming and Lagrange multipliers. Proc. Natl Acad. Sci. 1956;42:767–769. doi: 10.1073/pnas.42.10.767.

- 30. Sutton, R. S. & Barto, A. G. Reinforcement Learning: An Introduction Vol. 1 (MIT Press, 1998).

- 31. Schultz W, Dickinson A. Neuronal coding of prediction errors. Annu. Rev. Neurosci. 2000;23:473–500. doi: 10.1146/annurev.neuro.23.1.473.

- 32. den Ouden HEM, Friston KJ, Daw ND, McIntosh AR, Stephan KE. A dual role for prediction error in associative learning. Cereb. Cortex. 2009;19:1175–1185. doi: 10.1093/cercor/bhn161.

- 33. Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- 34. Bayer HM, Glimcher PW. Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron. 2005;47:129–141. doi: 10.1016/j.neuron.2005.05.020.

- 35. Schultz W, Apicella P, Ljungberg T, Romo R, Scarnati E. Reward-related activity in the monkey striatum and substantia nigra. Prog. Brain Res. 1993;99:227–235. doi: 10.1016/S0079-6123(08)61349-7.

- 36. Schultz W. Behavioral theories and the neurophysiology of reward. Annu. Rev. Psychol. 2006;57:87–115. doi: 10.1146/annurev.psych.56.091103.070229.

- 37. Apicella P, Ljungberg T, Scarnati E, Schultz W. Responses to reward in monkey dorsal and ventral striatum. Exp. Brain Res. 1991;85:491–500. doi: 10.1007/BF00231732.

- 38. Montague P, Dayan P, Sejnowski T. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J. Neurosci. 1996;16:1936–1947. doi: 10.1523/JNEUROSCI.16-05-01936.1996.

- 39. Schultz W, Apicella P, Ljungberg T. Responses of monkey dopamine neurons to reward and conditioned stimuli during successive steps of learning a delayed response task. J. Neurosci. 1993;13:900–913. doi: 10.1523/JNEUROSCI.13-03-00900.1993.

- 40. Tobler PN, Fiorillo CD, Schultz W. Adaptive coding of reward value by dopamine neurons. Science. 2005;307:1642–1645. doi: 10.1126/science.1105370.

- 41. Matsumoto M, Hikosaka O. Lateral habenula as a source of negative reward signals in dopamine neurons. Nature. 2007;447:1111–1115. doi: 10.1038/nature05860.

- 42. Behrens TEJ, Woolrich MW, Walton ME, Rushworth MFS. Learning the value of information in an uncertain world. Nat. Neurosci. 2007;10:1214–1221. doi: 10.1038/nn1954.

- 43. Niv Y. Reinforcement learning in the brain. J. Math. Psychol. 2009;53:139–154. doi: 10.1016/j.jmp.2008.12.005.

- 44. Daw, N. D. & Tobler, P. N. Value learning through reinforcement: the basics of dopamine and reinforcement learning. In Neuroeconomics: Decision-Making and the Brain 2nd Edn (eds Glimcher, P. W. & Fehr, E.) 283–298 (London, Elsevier, 2013).

- 45. Gläscher J, Daw N, Dayan P, O’Doherty JP. States versus rewards: dissociable neural prediction error signals underlying model-based and model-free reinforcement learning. Neuron. 2010;66:585–595. doi: 10.1016/j.neuron.2010.04.016.

- 46. Daw ND, Gershman SJ, Seymour B, Dayan P, Dolan RJ. Model-based influences on humans’ choices and striatal prediction errors. Neuron. 2011;69:1204–1215. doi: 10.1016/j.neuron.2011.02.027.

- 47. Lee SW, Shimojo S, O’Doherty JP. Neural computations underlying arbitration between model-based and model-free learning. Neuron. 2014;81:687–699. doi: 10.1016/j.neuron.2013.11.028.

- 48. Apps MA, Rushworth MF, Chang SW. The anterior cingulate gyrus and social cognition: tracking the motivation of others. Neuron. 2016;90:692–707. doi: 10.1016/j.neuron.2016.04.018.

- 49. Behrens TEJ, Hunt LT, Woolrich MW, Rushworth MFS. Associative learning of social value. Nature. 2008;456:245–249. doi: 10.1038/nature07538.

- 50. Suzuki S, et al. Learning to simulate others’ decisions. Neuron. 2012;74:1125–1137. doi: 10.1016/j.neuron.2012.04.030.

- 51. Schoenbaum G, Roesch M. Orbitofrontal cortex, associative learning, and expectancies. Neuron. 2005;47:633–636. doi: 10.1016/j.neuron.2005.07.018.

- 52. Schoenbaum G, Roesch MR, Stalnaker TA, Takahashi YK. A new perspective on the role of the orbitofrontal cortex in adaptive behaviour. Nat. Rev. Neurosci. 2009;10:885–892. doi: 10.1038/nrn2753.

- 53. Yoshida W, Dolan RJ, Friston KJ. Game theory of mind. PLoS Comput. Biol. 2008;4:e1000254. doi: 10.1371/journal.pcbi.1000254.

- 54. Hula A, Montague PR, Dayan P. Monte carlo planning method estimates planning horizons during interactive social exchange. PLoS Comput. Biol. 2015;11:e1004254. doi: 10.1371/journal.pcbi.1004254.

- 55. Yoshida W, et al. Cooperation and heterogeneity of the autistic mind. J. Neurosci. 2010;30:8815–8818. doi: 10.1523/JNEUROSCI.0400-10.2010.

- 56. Pessiglione M, Seymour B, Flandin G, Dolan RJ, Frith CD. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature. 2006;442:1042–1045. doi: 10.1038/nature05051.

- 57. Hollerman JR, Schultz W. Dopamine neurons report an error in the temporal prediction of reward during learning. Nat. Neurosci. 1998;1:304–309. doi: 10.1038/1124.

- 58. Kashtelyan V, Lichtenberg NT, Chen ML, Cheer JF, Roesch MR. Observation of reward delivery to a conspecific modulates dopamine release in ventral striatum. Curr. Biol. 2014;24:2564–2568. doi: 10.1016/j.cub.2014.09.016.

- 59. Báez-Mendoza R, Harris CJ, Schultz W. Activity of striatal neurons reflects social action and own reward. Proc. Natl Acad. Sci. 2013;110:16634–16639. doi: 10.1073/pnas.1211342110.

- 60. Jones RM, et al. Behavioral and neural properties of social reinforcement learning. J. Neurosci. 2011;31:13039–13045. doi: 10.1523/JNEUROSCI.2972-11.2011.

- 61. Fliessbach K, et al. Social comparison affects reward-related brain activity in the human ventral striatum. Science. 2007;318:1305–1308. doi: 10.1126/science.1145876.

- 62. Bault N, Joffily M, Rustichini A, Coricelli G. Medial prefrontal cortex and striatum mediate the influence of social comparison on the decision process. Proc. Natl Acad. Sci. 2011;108:16044–16049. doi: 10.1073/pnas.1100892108.

- 63. Xiang T, Lohrenz T, Montague PR. Computational substrates of norms and their violations during social exchange. J. Neurosci. 2013;33:1099–1108. doi: 10.1523/JNEUROSCI.1642-12.2013.

- 64. Burke CJ, Tobler PN, Schultz W, Baddeley M. Striatal BOLD response reflects the impact of herd information on financial decisions. Front. Hum. Neurosci. 2010;4:48. doi: 10.3389/fnhum.2010.00048.

- 65. Lohrenz T, Bhatt M, Apple N, Montague PR. Keeping up with the Joneses: interpersonal prediction errors and the correlation of behavior in a tandem sequential choice task. PLoS Comput. Biol. 2013;9:e1003275. doi: 10.1371/journal.pcbi.1003275.

- 66. King-Casas B, et al. Getting to know you: reputation and trust in a two-person economic exchange. Science. 2005;308:78–83. doi: 10.1126/science.1108062.

- 67. Klucharev V, Hytönen K, Rijpkema M, Smidts A, Fernández G. Reinforcement learning signal predicts social conformity. Neuron. 2009;61:140–151. doi: 10.1016/j.neuron.2008.11.027.

- 68. Xiang T, Ray D, Lohrenz T, Dayan P, Montague PR. Computational phenotyping of two-person interactions reveals differential neural response to depth-of-thought. PLoS Comput. Biol. 2012;8:e1002841. doi: 10.1371/journal.pcbi.1002841.

- 69. Kolling N, et al. Value, search, persistence and model updating in anterior cingulate cortex. Nat. Neurosci. 2016;19:1280–1285. doi: 10.1038/nn.4382.

- 70. Shenhav A, Cohen JD, Botvinick MM. Dorsal anterior cingulate cortex and the value of control. Nat. Neurosci. 2016;19:1286–1291. doi: 10.1038/nn.4384.

- 71. Ebitz RB, Hayden BY. Dorsal anterior cingulate: a Rorschach test for cognitive neuroscience. Nat. Neurosci. 2016;19:1278–1279. doi: 10.1038/nn.4387.

- 72. Chang SWC, Gariepy J-F, Platt ML. Neuronal reference frames for social decisions in primate frontal cortex. Nat. Neurosci. 2013;16:243–250. doi: 10.1038/nn.3287.

- 73. Apps MA, Ramnani N. The anterior cingulate gyrus signals the net value of others’ rewards. J. Neurosci. 2014;34:6190–6200. doi: 10.1523/JNEUROSCI.2701-13.2014.

- 74. Haroush K, Williams ZM. Neuronal prediction of opponent’s behavior during cooperative social interchange in primates. Cell. 2015;160:1233–1245. doi: 10.1016/j.cell.2015.01.045.

- 75. Lockwood PL, Apps MA, Roiser JP, Viding E. Encoding of vicarious reward prediction in anterior cingulate cortex and relationship with trait empathy. J. Neurosci. 2015;35:13720–13727. doi: 10.1523/JNEUROSCI.1703-15.2015.

- 76. Apps MAJ, Lesage E, Ramnani N. Vicarious reinforcement learning signals when instructing others. J. Neurosci. 2015;35:2904–2913. doi: 10.1523/JNEUROSCI.3669-14.2015.

- 77. Hill MR, Boorman ED, Fried I. Observational learning computations in neurons of the human anterior cingulate cortex. Nat. Commun. 2016;7:12722. doi: 10.1038/ncomms12722.

- 78. Jeon D, et al. Observational fear learning involves affective pain system and Cav1.2 Ca2+ channels in ACC. Nat. Neurosci. 2010;13:482–488. doi: 10.1038/nn.2504.

- 79. Singer T, et al. Empathy for pain involves the affective but not sensory components of pain. Science. 2004;303:1157–1162. doi: 10.1126/science.1093535.

- 80. Chang SWC, Winecoff AA, Platt ML. Vicarious reinforcement in rhesus macaques (Macaca mulatta). Front. Neurosci. 2011;5:27. doi: 10.3389/fnins.2011.00027.

- 81. Chang S. Coordinate transformation approach to social interactions. Front. Neurosci. 2013;7:147. doi: 10.3389/fnins.2013.00147.

- 82. Rudebeck PH, Buckley MJ, Walton ME, Rushworth MFS. A role for the macaque anterior cingulate gyrus in social valuation. Science. 2006;313:1310–1312. doi: 10.1126/science.1128197.

- 83. Tomlin D, et al. Agent-specific responses in the cingulate cortex during economic exchanges. Science. 2006;312:1047–1050. doi: 10.1126/science.1125596.

- 84. Burke CJ, Tobler PN, Baddeley M, Schultz W. Neural mechanisms of observational learning. Proc. Natl Acad. Sci. 2010;107:14431–14436. doi: 10.1073/pnas.1003111107.

- 85. Holroyd CB, Coles MG. Dorsal anterior cingulate cortex integrates reinforcement history to guide voluntary behavior. Cortex. 2008;44:548–559. doi: 10.1016/j.cortex.2007.08.013.

- 86. Matsumoto M, Matsumoto K, Abe H, Tanaka K. Medial prefrontal cell activity signaling prediction errors of action values. Nat. Neurosci. 2007;10:647–656. doi: 10.1038/nn1890.

- 87. Kennerley SW, Walton ME, Behrens TE, Buckley MJ, Rushworth MF. Optimal decision making and the anterior cingulate cortex. Nat. Neurosci. 2006;9:940–947. doi: 10.1038/nn1724.

- 88. Amemori K-i, Graybiel AM. Localized microstimulation of primate pregenual cingulate cortex induces negative decision-making. Nat. Neurosci. 2012;15:776–785. doi: 10.1038/nn.3088.

- 89. Apps MAJ, Lockwood PL, Balsters JH. The role of the midcingulate cortex in monitoring others’ decisions. Front Neurosci. 2013;7:251. doi: 10.3389/fnins.2013.00251.

- 90. Zhu L, Mathewson KE, Hsu M. Dissociable neural representations of reinforcement and belief prediction errors underlie strategic learning. Proc. Natl Acad. Sci. 2012;109:1419–1424. doi: 10.1073/pnas.1116783109.

- 91. Yoshida K, Saito N, Iriki A, Isoda M. Social error monitoring in macaque frontal cortex. Nat. Neurosci. 2012;15:1307–1312. doi: 10.1038/nn.3180.

- 92. Chung D, Christopoulos GI, King-Casas B, Ball SB, Chiu PH. Social signals of safety and risk confer utility and have asymmetric effects on observers’ choices. Nat. Neurosci. 2015;18:912–916. doi: 10.1038/nn.4022.

- 93. Seo H, Cai X, Donahue CH, Lee D. Neural correlates of strategic reasoning during competitive games. Science. 2014;346:340–343. doi: 10.1126/science.1256254.

- 94. Kringelbach ML. The human orbitofrontal cortex: linking reward to hedonic experience. Nat. Rev. Neurosci. 2005;6:691–702. doi: 10.1038/nrn1747.

- 95. O’Doherty J, et al. Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science. 2004;304:452–454. doi: 10.1126/science.1094285.

- 96. Azzi JCB, Sirigu A, Duhamel J-R. Modulation of value representation by social context in the primate orbitofrontal cortex. Proc. Natl Acad. Sci. USA. 2012;109:2126–2131. doi: 10.1073/pnas.1111715109.

- 97. Frith CD, Frith U. The neural basis of mentalizing. Neuron. 2006;50:531–534. doi: 10.1016/j.neuron.2006.05.001.

- 98. Saxe R, Kanwisher N. People thinking about thinking people: The role of the temporo-parietal junction in “theory of mind”. Neuroimage. 2003;19:1835–1842. doi: 10.1016/S1053-8119(03)00230-1.

- 99. Samson D, Apperly IA, Chiavarino C, Humphreys GW. Left temporoparietal junction is necessary for representing someone else’s belief. Nat. Neurosci. 2004;7:499–500. doi: 10.1038/nn1223.

- 100. Schurz M, Radua J, Aichhorn M, Richlan F, Perner J. Fractionating theory of mind: A meta-analysis of functional brain imaging studies. Neurosci Biobehav. Rev. 2014;42:9–34. doi: 10.1016/j.neubiorev.2014.01.009.

- 101.Mitchell JP. Inferences about mental states. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2009;364:1309–1316. doi: 10.1098/rstb.2008.0318. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 102.Carter RM, Bowling DL, Reeck C, Huettel SA. A distinct role of the temporal-parietal junction in predicting socially guided decisions. Science. 2012;337:109–111. doi: 10.1126/science.1219681. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 103. Allison T, Puce A, McCarthy G. Social perception from visual cues: role of the STS region. Trends Cogn. Sci. 2000;4:267–278. doi: 10.1016/S1364-6613(00)01501-1.

- 104. Carter RM, Huettel SA. A nexus model of the temporal–parietal junction. Trends Cogn. Sci. 2013;17:328–336. doi: 10.1016/j.tics.2013.05.007.

- 105. Kable JW, Glimcher PW. The neural correlates of subjective value during intertemporal choice. Nat. Neurosci. 2007;10:1625–1633. doi: 10.1038/nn2007.

- 106. Mende-Siedlecki P, Baron SG, Todorov A. Diagnostic value underlies asymmetric updating of impressions in the morality and ability domains. J. Neurosci. 2013;33:19406–19415. doi: 10.1523/JNEUROSCI.2334-13.2013.

- 107. Mende-Siedlecki P, Todorov A. Neural dissociations between meaningful and mere inconsistency in impression updating. Soc. Cogn. Affect. Neurosci. 2016;11:1489–1500. doi: 10.1093/scan/nsw058.

- 108. Schiller D, Freeman JB, Mitchell JP, Uleman JS, Phelps EA. A neural mechanism of first impressions. Nat. Neurosci. 2009;12:508–514. doi: 10.1038/nn.2278.

- 109. Koster-Hale J, Saxe R. Theory of mind: a neural prediction problem. Neuron. 2013;79:836–848. doi: 10.1016/j.neuron.2013.08.020.

- 110. Beam E, Appelbaum LG, Jack J, Moody J, Huettel SA. Mapping the semantic structure of cognitive neuroscience. J. Cogn. Neurosci. 2014;26:1949–1965. doi: 10.1162/jocn_a_00604.

- 111. Hackel LM, Doll BB, Amodio DM. Instrumental learning of traits versus rewards: dissociable neural correlates and effects on choice. Nat. Neurosci. 2015;18:1233–1235. doi: 10.1038/nn.4080.

- 112. Chang SW, et al. Neuroethology of primate social behavior. Proc. Natl Acad. Sci. USA. 2013;110:10387–10394. doi: 10.1073/pnas.1301213110.