Summary

Background : Scribes are assisting Emergency Physicians by writing their electronic clinical notes at the bedside during consultations. They increase physician productivity and improve their working conditions. The quality of Emergency scribe notes is unevaluated and important to determine.

Objective : The primary objective of the study was to determine if the quality of Emergency Department scribe notes was equivalent to physician only notes, using the Physician Documentation Quality Instrument, Nine-item tool (PDQI-9).

Methods : This was a retrospective, observational study comparing 110 scribed to 110 non-scribed Emergency Physician notes written at Cabrini Emergency Department, Australia. Consultations during a randomised controlled trial of scribe/doctor productivity in 2016 were used. Emergency physicians and nurses rated randomly selected, blinded and de-identified notes, 2 raters per note. Comparisons were made between paired scribed and unscribed notes and between raters of each note. Characteristics of individual raters were examined. The ability of the tool to discriminate between good and poor notes was tested.

Results : The PDQI-9 tool has significant issues. Individual items had good internal consistency (Cronbach’s alpha=0.93), but there was very poor agreement between raters (Pearson’s r=0.07, p=0.270). There were substantial differences in PDQI-9 scores allocated by each rater, with some giving typically lower scores than others, F(25,206)=1.93, p=0.007. The tool was unable to distinguish good from poor notes, F(3,34)=1.15, p=0.342. There was no difference in PDQI-9 score between scribed and non-scribed notes.

Conclusions : The PDQI-9 documentation quality tool did not demonstrate reliability or validity in evaluating Emergency Medicine consultation notes. We found no evidence that scribed notes were of poorer quality than non-scribed notes; however, Emergency scribe note quality has not yet been determined.

Citation: Walker KJ, Wang A, Dunlop W, Rodda H, Ben-Meir M, Staples M. The 9-Item Physician Documentation Quality Instrument (PDQI-9) score is not useful in evaluating EMR (scribe) note quality in Emergency Medicine. Appl Clin Inform 2017; 8: 981–993 https://doi.org/10.4338/ACI2017052017050080

Keywords: Clinical Documentation and Communications, Scribe, Emergency Medicine, Quality, Safety Culture

1. Background and Significance

Medical scribes are playing an increasingly important part in improving the efficiency of physicians, particularly in the USA but also elsewhere [ 1 , 2 ]. Their use is being evaluated in many settings: Emergency Departments (EDs) [ 3 – 11 ], consultant offices [ 12 – 15 ], primary care [ 16 , 17 ] and hospital wards [ 1 ]. Medical scribes have been found to improve physician productivity [ 3 , 4 , 8 – 10 , 12 ] in most Emergency studies, but not all [ 5 , 15 ]. They have also been useful in most office settings [ 12 – 14 ] and wards, but again, not all [ 15 ]. Their aim has been to reduce the time a physician spends documenting consultations into electronic medical records (EMRs). We know that scribes are well tolerated by patients [ 11 , 14 , 17 , 18 ] and physicians [ 14 , 17 , 19 ] and how to train scribes [ 2 , 20 , 21 ]. We don’t have a good understanding of the quality of the scribed documentation.

There is limited literature guiding how to best evaluate the quality of the medical note despite the creation of documentation for every medical consultation. In the era of the Electronic Medical Record (EMR), it is hard to understand why there is no ideal note quality standard to guide improvement of EMRs. A tool containing 22 quality items [ 22 ] was produced in 2008 by Stetson et al. The group condensed this tool to the most useful 9 items [ 23 ] in 2012 (PDQI-9). We are not aware of any external validation sets to date on this tool.

There is one paper evaluating the quality of scribe documentation [ 16 ]. This paper used the PDQI-9 tool to assess the quality of scribe documentation for diabetic consultations in primary care [ 16 ]. The paper found scribed notes to be slightly higher in quality compared to non-scribed notes (possible scoring range from 9 to 45, scribed notes: mean of 30.3, non-scribed notes: 28.9). Scribed notes included slightly more redundant information. This environment was in a different area of medicine where the vocabulary required would be relatively limited. The evaluations would be relatively similar in all consultations and consultations are undertaken sequentially with contemporaneous notes.

In contrast to the diabetic primary care environment, Emergency Medicine has a wide breadth of presentations and ED patients are managed simultaneously. Sometimes notes are written or edited hours after consultations are undertaken. It is important that the scribe concept is fully evaluated (including quality) before the widespread uptake of the role in Emergency Medicine.

2. Objectives

The primary objective of the study was to determine if the quality of Emergency Department scribed notes was equivalent to non-scribed notes, using the PDQI-9 tool [ 23 ]. A retrospective secondary objective was added during the analysis phase of the study, to test for effectiveness of the tool. This was added when there seemed to be limited agreement between raters regarding note quality.

3. Methods

3.1. Study design

This was a retrospective, observational study comparing scribed to non-scribed medical notes written at Cabrini ED using the PDQI-9 [ 23 ] notes comparison tool with two novel extra questions added at the end (notes blinded and de-identified).

3.2. Setting

The study was undertaken in the ED of Cabrini, Melbourne, Australia. Cabrini is a not-for-profit (private), Catholic hospital network with all specialties represented. The ED sees 24,000 patients per annum, with an admission rate of 50%; the majority of patients are older adults with complex medical conditions, although 25% of attendances are children.

A prospective, randomised controlled trial evaluating the productivity of emergency physicians with and without trained scribes was undertaken at Cabrini ED from October 2015 to September 2016 (ACTRN12615000607572). This formed the dataset of available shifts for analysis. Scribed notes were randomly selected (by Excel random number generation) from scribed shifts, and each was paired with a matched note written by the same physician without a scribe in the nearest similar shift.

3.3. Participants

Electronic Medical Records (EMRs): The ED uses a largely free-text EMR (Patient Administrative Systems, PAS, Global Health Systems, Melbourne, Australia) to document patient consultations, with mandatory sections for diagnosis, billing and patient disposition. Physicians without scribes use this EMR. They type notes after consulting the patient, whilst seated at a desktop computer. Notes are written either directly after the patient encounter or up to several hours later.

The scribes document consultations in real time, standing with the emergency physician during a consultation with a computer-on-wheels (COW) and entering information into an electronic template (developed by Dr Rodda). The template prompts for sections of the patient history, physical exam, management and disposition. There are pick-lists for physician identification and normal physical exam findings; tick-boxes for review of symptoms, nursing orders and management options; and macros for disposition categories. The pick-lists also allow manual entry, and positive findings require qualification. There are mandatory prompts for patient demographics, diagnosis and a verification and attestation statement from the physician regarding scribe use. Once edited and verified by the physician, the note reformats into a final document and is copied into the PAS record. In addition, the scribe completes the PAS mandatory sections that are outside the free-text section of the record. Scribes were mainly rostered to the busiest parts of the week, Friday to Monday, on day and evening shifts but not nights.

Doctors: Thirteen Emergency Physicians, 70% male, consented to receive scribes in the economic study. Participation was voluntary and physicians were eligible if they were board-certified, permanent staff, working at least one clinical shift per week. Prior to receiving scribes, each was offered 90 minutes of online education sessions and dial-in training in scribe utilisation best practice.

Scribes: There were five trained scribes, 60% male (both pre-medical and medical students). Their training has been described previously in detail [ 2 , 21 ]. The scribes received an hour each of PAS training and template training. By study commencement, they had used the template and PAS for between 70 and 160 training consultations. Scribes were randomly allocated to physician shifts.

3.4. Variables

Quality Tool: The primary outcome was the PDQI-9 [ 23 ] score for each note (► Table 1 ). It is a 9-item score with each response given on a 5-point Likert scale, so totals range from 9 to 45. The items are: Up-to-date, Accurate, Thorough, Useful, Organized, Comprehensible, Succinct, Synthesized and Internally Consistent. In addition, we added 2 extra questions, with mandatory answers provided as yes/no, plus voluntary free-text comments. The questions were “Did you find any major or minor errors or omissions in these patient notes?” and “Could you use these notes to manage this patient in the ED if the primary doctor was absent?”

Table 1.

Physician Documentation Quality Instrument-9 Version 1: 11/21/2011 (adapted from [ 23 ])

| Date: | Author: | Reviewer: |
|---|---|---|
| Note type (circle): | Admit / Progress / Discharge | |
| Instructions: | Please review the chart before assessing the note. Then rate the note | |
| Attribute | Score | Description of Ideal Note |
| 1. Up-to-date | Not at all 1 2 3 4 5 Extremely | The note contains the most recent test result recommendations. |
| 2. Accurate | Not at all 1 2 3 4 5 Extremely | The note is true. It is free of incorrect information. |
| 3. Thorough | Not at all 1 2 3 4 5 Extremely | The note is complete and documents all of the issues of importance to the patient. |
| 4. Useful | Not at all 1 2 3 4 5 Extremely | The note is extremely relevant, providing valuable information and/or analysis. |
| 5. Organized | Not at all 1 2 3 4 5 Extremely | The note is well-formed and structured in a way that helps the reader understand the patient’s clinical course. |
| 6. Comprehensible | Not at all 1 2 3 4 5 Extremely | The note is clear, without ambiguity or sections that are difficult to understand. |
| 7. Succinct | Not at all 1 2 3 4 5 Extremely | The note is brief, to the point, and without redundancy. |
| 8. Synthesized | Not at all 1 2 3 4 5 Extremely | The note reflects the author’s understanding of the patient’s status and ability to develop a plan of care. |
| 9. Internally consistent | Not at all 1 2 3 4 5 Extremely | No part of the note ignores or contradicts any other part. |
| Total Score: | | |
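As a minimal sketch of how a total could be computed from one rater's responses, the snippet below sums the nine 1 to 5 item ratings into a score between 9 and 45. The item keys and the function name `pdqi9_total` are illustrative assumptions, not part of the published instrument or of the survey form used in this study.

```python
# Hypothetical item keys mirroring the nine attributes in Table 1.
PDQI9_ITEMS = (
    "up_to_date", "accurate", "thorough", "useful", "organized",
    "comprehensible", "succinct", "synthesized", "internally_consistent",
)


def pdqi9_total(ratings: dict) -> int:
    """Sum nine 1-5 Likert ratings into a total between 9 and 45."""
    missing = [item for item in PDQI9_ITEMS if item not in ratings]
    if missing:
        raise ValueError(f"missing items: {missing}")
    for item in PDQI9_ITEMS:
        if not 1 <= ratings[item] <= 5:
            raise ValueError(f"{item} must be between 1 and 5")
    return sum(ratings[item] for item in PDQI9_ITEMS)
```

A note rated 4 on every item would score 36 under this scheme.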

Raters: Raters were chosen to reflect some of the breadth of end-users of notes. They were all ED employees and were familiar with both scribed and non-scribed notes. Each note was appraised by an Emergency Physician and another healthcare worker (senior resident or senior nurse, all with greater than 7 years of ED experience), providing 2 independent opinions per note. Raters were not trained in the use of the tool and there was no pre-study agreement between raters about standards. They were asked to read the note and use a purpose-built SurveyMonkey (SurveyMonkey® Jan 2017) version of the PDQI-9 (exact copy of original tool + extra items) to record responses online.

3.5. Data sources/measurement

A complete dataset of patients and shifts was created from the Cabrini data warehouse, combining PAS records and a roster database. Notes evaluated in this study had all been verified and/or edited by a physician and were taken from the final EMR, not the initial scribe template.

A patient consultation was randomly selected during each scribed physician shift (Excel random number generator). A note from the nearest shift undertaken by the same physician working without a scribe, matched by day and consultation order, was extracted as a control (i.e., if the 5th consecutive consult in time order on a Monday shift was chosen for a scribed consultation, the 5th consult was chosen for the non-scribed consultation the subsequent Monday). Each note was de-identified and printed for raters. It contained only the consultation by the selected doctor, not later information (including post intra-ED handover details).
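The selection and matching step described above can be sketched as follows. This is an illustration only: the study used Excel random number generation, and the `Shift` record, the `pick_pair` function and the rule for "nearest" (smallest absolute difference in days among same-weekday, non-scribed shifts by the same physician) are assumptions made for the example.

```python
import random
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple


@dataclass
class Shift:
    # Hypothetical record; field names are not taken from the study database.
    physician: str
    day: date
    scribed: bool
    note_ids: List[str]  # consultations in time order


def pick_pair(scribed_shift: Shift, all_shifts: List[Shift],
              rng: random.Random) -> Optional[Tuple[str, str]]:
    """Pick a random consult from a scribed shift and its matched control."""
    # Random consultation position within the scribed shift.
    position = rng.randrange(len(scribed_shift.note_ids))
    # Controls: same physician, no scribe, same weekday, enough consults.
    candidates = [
        s for s in all_shifts
        if not s.scribed
        and s.physician == scribed_shift.physician
        and s.day.weekday() == scribed_shift.day.weekday()
        and len(s.note_ids) > position
    ]
    if not candidates:
        return None
    # Assumed meaning of "nearest": fewest days away from the scribed shift.
    control = min(candidates,
                  key=lambda s: abs((s.day - scribed_shift.day).days))
    return scribed_shift.note_ids[position], control.note_ids[position]
```

Matching on consultation order as well as weekday keeps the two notes at a comparable point in the shift, which is the intent of the design described in the text.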

3.6. Statistical Issues

Study size: We were interested in comparing overall score, rather than specific domains within the PDQI-9. Assuming a mean control score of 29 out of 45 [ 16 ] and SD=6, a statistically significant difference with 95% confidence could be detected with 64 pairs of notes (each pair containing a scribed note and a non-scribed note). This would be sufficient to detect a small effect size, consistent with Misra-Hebert [ 16 ], which reports Cohen’s d of 0.15. Clinically significant differences have not been rigorously investigated in the literature. Analyses were performed using IBM SPSS 21 (IBM, Armonk, NY, USA).

Qualitative variables: Reported omissions were coupled with a brief description of what was missing from the note. Thematic analysis was used to group omissions and count the frequency of such omissions in each group. Self-reported inability to use the notes without additional information was also coupled with what additional information would be required. These data were also compared using thematic analysis.

4. Results

4.1. Participants

There were 163 scribed shifts and 914 non-scribed shifts eligible for analysis in the time period. From those shifts, all notes (1241 scribed, 5871 non-scribed) were available. From this pool, 220 (110 scribed, 110 non-scribed) consultations were randomly selected and 217 were analysed by two raters each. This was larger than required for statistical significance and was undertaken to allow comparison with the Misra-Hebert [ 16 ] work. Three notes were missed by raters and so received only one score. These single scores were excluded from analysis because they lacked a comparator.

Consultations: The mean age of the scribed patients was 58 years (95%CI 53, 63), 51% were male and their admission rate was 56%. The mean age of the non-scribed patient consultations was 57 years (95%CI 51, 63), 50% were male and the admission rate was 46%. The typical caseload is 45% male, mean age 56 and admission rate 50%. Paired t-test showed no difference between groups in age (p=.0750), and chi square showed no difference between groups in gender (p=0.893) or admission rate (p=0.138).

Raters: The raters had these skillsets: Emergency physicians (all >20 years experience of Emergency Medicine): generalist, Australasian College for Emergency Medicine (ACEM) examiner, ACEM resident educator, medical student educator. Others: ED shift charge nurses, ED telephone follow-up nurse, final-year ED trainee residents (>6 years ED experience).

Outcome data: Paired t-test showed that scribed notes were longer (357 words, 95%CI 327, 386) compared to non-scribed notes (237 words, 95%CI 215, 259), t(109)=7.34, p<.0001. There was a slight negative skew in PDQI-9 scores for both groups, but skew did not exceed ±1 so the distribution was considered normal. Paired t-test showed no difference in PDQI-9 scores between scribed (mean=38.16, 95%CI 37.5, 38.9) and non-scribed documents (mean=37.35, 95%CI 36.6, 38.1). Chi square revealed no difference in the rate of omissions between scribed (42%) and non-scribed notes (43%), χ²=.02, p=0.895. There was also no difference in sufficiency of information to manage the patient between scribed (92%) and non-scribed notes (93%), χ²=.03, p=0.874.

Although omissions were numerically equivalent between scribed and non-scribed notes, there was a qualitative difference between the omissions from scribed and non-scribed notes. Some omitted information was detail that might not have been available at the time of the initial consultation (e.g. “missing CT results” or “no pathology results included”). A summary of omissions is given in ► Table 2 .

Table 2.

Omissions from notes identified by raters

| Omission | Scribed (%) | Non-Scribed (%) |
|---|---|---|
| History of presenting complaint | 0 | 12 |
| Medical history, medications or allergies | 4 | 25 |
| Examination findings | 6 | 13 |
| Differential diagnosis | 5 | 2 |
| Results or outcomes of procedure | 60 | 31 |
| Final disposition on discharge from ED | 24 | 17 |
| Spelling errors | 1 | 0 |

In every case (scribed and non-scribed) where raters stated they could not manage the patient if the primary doctor was absent, the reason given was missing disposition plans or results.

PDQI-9 test performance results: We observed multiple cases in which the two raters’ scores for a single note were substantially different. This prompted us to interrogate the reliability of the PDQI-9. Scribed and non-scribed data were pooled given the non-difference between groups. Internal consistency of the 9 items was very strong, Cronbach’s α=0.93.
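For readers unfamiliar with the statistic, the following is a minimal sketch of the standard Cronbach's alpha formula, α = k/(k−1) × (1 − Σ item variances / variance of totals), where k is the number of items. The study itself used SPSS; the function name `cronbach_alpha` and the input layout (one row of item ratings per rated note) are assumptions for illustration.

```python
from statistics import variance
from typing import List, Sequence


def cronbach_alpha(rows: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for rows of item ratings (one row per rated note)."""
    k = len(rows[0])  # number of items, 9 for the PDQI-9
    # Sample variance of each item across all rated notes.
    item_vars: List[float] = [
        variance([row[i] for row in rows]) for i in range(k)
    ]
    # Sample variance of the per-note total scores.
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

A high alpha indicates that the nine items move together within a single rating; it says nothing about whether two different raters agree on the same note, which is why strong internal consistency and poor inter-rater agreement can coexist.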

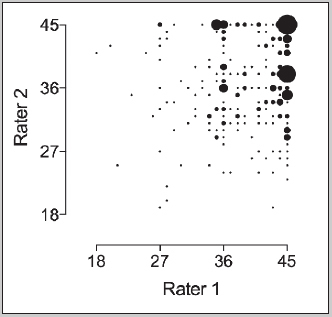

Scores ranged from 18 to 45 with a mean of 37.76 (95%CI 37.2, 38.3). Total score was compared between rater 1 and rater 2 on each note. Pearson’s correlation of total score between raters showed very poor agreement on assessment of quality, r=0.07, p=0.270. ► Figure 1 shows a bubble plot of scores with a highly heteroscedastic distribution F(25,206)=1.93, p=0.007, indicating that agreement between raters occurred only at high values. Larger bubbles indicate higher frequency of occurrences (e.g. 9 of 220 notes were rated 45 out of 45 by both raters, and one note was rated 18 out of 45 by Rater 1 and 41 out of 45 by Rater 2).

fig.1.

Bubble plot of PDQI-9 scores allocated by Rater 1 and Rater 2 for each note
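The inter-rater comparison reported above rests on Pearson's correlation between the two raters' total scores. A minimal sketch of that calculation is shown here; `pearson_r` is an illustrative name and the input lists stand in for the paired Rater 1 and Rater 2 totals, which are not reproduced in this paper.

```python
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Sum of cross-products and sums of squares around the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)
```

Pearson's r measures linear association rather than absolute agreement, so a value near zero, as found here, means that one rater's score for a note carries almost no information about the other rater's score.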

We considered whether some raters might be rating more harshly than others. Each rater’s scores are presented in ► Figure 2 . A one-way ANOVA was used to compare mean allocated scores between each rater. There was a significant difference in rater score F(8,472)=51.25, p<0.0001.

fig.2.

Box and whisker plot indicating median (mid-line), interquartile range (box), 95% confidence intervals (error bars) and outlying scores (dots) for each rater.

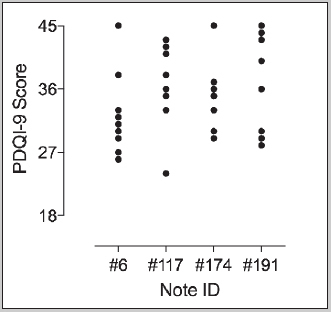

Four notes (two scribed and two non-scribed) were selected as representatives of good- and poor-quality, and these notes were rated by all nine raters. Scores deviated broadly as shown in ► Figure 3 . There was no difference in PDQI-9 scores between notes, F(3,34)=1.15, p=0.342.

fig.3.

Individual PDQI-9 scores allocated by each rater on the four control notes.

The individual PDQI-9 scores allocated by each rater on the four control notes show almost no agreement between raters. On note #6, no two raters gave the same score. On note #117, two raters agreed on a score of 42 and all other raters gave different scores. On note #174, two raters agreed on a score of 33 and two agreed on a score of 35; all others gave different scores. On note #191, two raters agreed on a score of 29 and all other raters gave different scores.

When asked if the notes omitted any information, raters agreed on 121 of the 220 notes assessed (55%). Omissions are described in ► Table 2 . When asked if they could manage the patient with only the information in the notes, raters agreed on 193 of the 220 notes (88%).
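The agreement figures above are simple proportions of notes on which both raters gave the same yes/no answer. As a minimal sketch (the function names are illustrative assumptions), the calculation and a check of the reported percentages look like this:

```python
from typing import Sequence


def percent_agreement(rater1: Sequence[bool], rater2: Sequence[bool]) -> float:
    """Percentage of notes on which two raters gave the same yes/no answer."""
    if len(rater1) != len(rater2) or not rater1:
        raise ValueError("need two equal-length, non-empty answer lists")
    matches = sum(a == b for a, b in zip(rater1, rater2))
    return 100 * matches / len(rater1)


def percent_from_counts(agreed: int, total: int) -> int:
    """Whole-number percentage from counts, as reported in the text."""
    return round(100 * agreed / total)


# Counts taken from the text: 121 and 193 agreements out of 220 notes.
omission_agreement = percent_from_counts(121, 220)
manage_agreement = percent_from_counts(193, 220)
```

Raw percent agreement does not correct for agreement expected by chance, so these figures are best read alongside the correlation and ANOVA results rather than as a standalone reliability measure.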

One rater reported that it was impossible to assess for accuracy (Item 2), as they were not present for consultations and they marked all accuracy questions as a 4. Raters spent between 2 and 4 minutes to review each chart and rate the note.

5. Discussion

5.1. Key Findings of this Study

Our primary goal was to test for equivalency of scribed notes compared to non-scribed notes. We were unable to determine this based on the test performance, although there were no obvious differences between the two groups using the PDQI-9 scores. Qualitative analysis of feedback (► Table 2 ) indicated that scribed notes tended to omit fewer details like previous medical history, allergies or medications compared to non-scribed notes. This is consistent with the larger word count in the scribed notes (357 v 237 words). The clinical relevance of these physician-alone omissions was not explored. For example, an attendance for removal of sutures might not require a complete list of active medical conditions. This finding would be consistent with the finding in Misra-Hebert et al. (2016) [ 16 ] that scribed notes contained slightly more redundant information than non-scribed notes. However, it may also reflect that physicians chart less when unassisted due to time constraints (or physicians may remember less detail when charting hours after seeing a complex patient).

60% of the scribed notes omitted results or patient disposition details. This may be a true finding, clearly needing improvement by system change or training or both; however, some of the finding may be explained by the increased pick-up of patients towards the end of shift by doctors with scribes, without time for tests to return or disposition decisions to be made (handover and subsequent doctor notes were excluded from analysis).

This study demonstrated that the PDQI-9 score had poor inter-rater reliability. We identified that some raters were strict, others generous. It also showed limited ability to discriminate between a good and poor note in the Emergency Medicine setting. Raters were not trained in how to use the PDQI-9 to rate note quality and it is possible that standardized training might improve inter-rater reliability. However, the current evidence suggests that the PDQI-9 should not be used to evaluate Emergency Medicine consultations. Further validation and/or modifications are required to the scale before there can be confidence that it is a useful tool. We also note that in development of the tool [ 23 ], rating took 7 to 10 minutes per chart and internists had access to entire medical histories, whilst ED raters took about 3 minutes total and could only see the single consultation. It might be that, with rater training and score standardization, the PDQI-9 may still be useful. It may also be useful for junior physician training purposes, to stimulate trainees to think about the quality of their charting.

5.2. Fit with Current Knowledge

Authors are questioning how to measure quality in medical documentation. Some suggest domains that could be measured [ 22 , 24 ]. Others value the breadth of information capture and recommend mandated section completion in a document [ 25 ]. The only published tools for quality measurement we were able to identify during study development were the PDQI-22 [ 22 ] and PDQI-9 [ 23 ] scores. None of these scoring suggestions seem to be able to capture what most clinicians would immediately recognize – a good or bad clinical note. Perhaps the gestalt of an experienced provider would be a good way of measuring quality, although this may lead to bias. Subsequent to this study, a QNOTE tool (developed for outpatient notes in 2014 [ 26 ]) was validated for in-patient notes [ 27 ]. This should be evaluated for utility in Emergency Medicine in the future, as it appears to have good internal and external validity. We also note publication of a new internal medicine scoring tool [ 28 ] in May 2017 but suggest further validation work given its Cohen’s kappa of 0.67.

The question about the quality of scribed notes in Emergency Medicine remains unanswered. Qualitative work regarding the physicians’ experience of working with scribes mentions that most scribed notes are altered by a physician prior to verification of the documentation, however changes are usually small (Mr Timothy Cowan, Cabrini, abstract ACEM ASM 2016, Queenstown, NZ). Perhaps physicians can largely accept the scribed note (which differs from their usual note style) if they are no longer responsible for data entry. This study raises questions about previous work about scribe documentation in a Primary Care setting [ 16 ]. It is possible that the PDQI-9 tool works in some settings but not others given the diversity of medical documentation.

Whilst the ED note is primarily for the immediate use of the physician, it is also used within the hospital by: ED doctors during future patient visits, ED nurses, ward nurses, bed-allocation staff, consultant physicians and surgeons, telephone follow-up nurses, quality and governance staff, medico-legal advisers, coders/billing office workers and heads-of-units. Users external to the hospital are patients, primary care physicians and specialists in external offices. It is presented in unaltered form to all of these users without further editing and needs to meet all these requirements. This creates a document that might be assessed differently by different users, according to their role requirements for information.

5.3. Future Work

Future work should continue to evaluate the quality of EMR documentation. It would be helpful to have a robust, objective, reliable assessment tool. Also, the quality of scribe documentation output should continue to be evaluated, particularly when it might inform whether a scribe program should be implemented. When documentation is studied or EMRs are developed, consideration should also be given to which settings and end-users will be the recipients of the documentation.

5.4. Limitations

The PDQI-9 tool proved unreliable as a tool and scribe quality results are uninterpretable. Training raters on how to use the tool might have ameliorated the poor reliability. It was also the first study of this type to use nurses to contribute to rating note quality. Nurses may require different information from notes compared to physicians and this may make their scores differ to physician scores.

This was a single centre study in private medicine in Australia with a high admission rate (50% compared to typical USA admission rates of 20%). It compared structured, template documentation of scribes (edited by physicians) against free-text, unstructured documentation written by unassisted board-certified physicians. The large numbers of documents rated in each group may have mitigated this limitation. True blinding was not possible; raters would have been able to quickly identify whether the author of the note was a scribe or not, based on the presence or absence of the template structure.

Employees of the study institution rated notes, which raises the possibility of social desirability and sponsor bias. Raters weren’t enrolled from primary care, consultant offices or hospital wards, so results reflect the perspective of the institution’s Emergency workforce. Opinions may be different in alternate settings.

6. Conclusions

The PDQI-9 documentation quality tool that we used did not demonstrate reliability or validity in evaluating Emergency Medicine consultation notes. Emergency scribe note quality has not yet been determined.

7. Clinical relevance statement

The PDQI-9 tool should not be used to assess ED note quality. Scribe note quality (after physician editing) hasn’t yet been definitively evaluated. More work is needed on defining and testing the quality of medical notes in EMRs.

Multiple Choice Question

The quality of scribed notes (compared to notes written without a scribe) in Emergency Medicine is:

Better than physician notes

Not yet determined

Equivalent to physician notes

Worse than physician notes

Better in some aspects, worse in others

This study evaluated the quality of notes using a PDQI-9 note quality tool, designed for use with EMR notes. It found that the tool was not reliable and so the results of this study (whilst finding no difference in notes quality between scribed and non-scribed notes) are not interpretable. The only previous scribe work used the same tool in Primary Care and so unfortunately, may also not report reliable results.

Funding Statement

Funding: The Cabrini Medical Staff Association funded AW. The Phyllis Connor Memorial Fund, Equity Trustees, the Cabrini Institute, Foundation and hospital also funded this work. No input was provided into study design or write-up by funders.

Conflict of Interest: KW is Director of a Scribe Program, WD is a scribe, HR developed the scribe template, and MBM is Director of an ED with a Scribe Program.

Human Subject Protections

The study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects, and was approved by Cabrini Human Research Ethics Committee (06–27–07–15).

Contributions

KW, MS, WD, HR and AW conceived the study and designed the trial. KW, AW: ethics. KW, MBM: funding. AW recruitment, randomization, database design. KW: data collection. MS, WD: statistics, data analysis. AW, WD, KW drafted manuscript. All authors contributed to its revision. KW takes responsibility for the manuscript.

References

- 1.Tischendorf JJ, Crede S, Herrmann P, Bach N, Bomeke C, Manns MP, Schaefer O, Trautwein C. Encoding of diagnosis by medical documentation assistant or ward physician. Dtsch med Wochenschr. 2004;129(33):1731–5. doi: 10.1055/s-2004-829024. [DOI] [PubMed] [Google Scholar]

- 2.Walker K, Johnson M, Dunlop W, Staples M, Rodda H, Turner I, Ben-Meir M.Feasibility evaluation of a pilot scribe-training program in an Australian emergency department Aust Health Rev 2017. doi: 10.1071/AH16188. PubMed PMID: 28355527. [DOI] [PubMed]

- 3.Arya R, Salovich DM, Ohman-Strickland P, Merlin MA. Impact of scribes on performance indicators in the emergency department. Academic emergency medicine. 2010;17(05):490–4. doi: 10.1111/j.1553-2712.2010.00718.x. [DOI] [PubMed] [Google Scholar]

- 4.Bastani A, Shaqiri B, Palomba K, Bananno D, Anderson W. An ED scribe program is able to improve throughput time and patient satisfaction. The American journal of emergency medicine. 2014;32(05):399–402. doi: 10.1016/j.ajem.2013.03.040. [DOI] [PubMed] [Google Scholar]

- 5.Heaton HA, Nestler DM, Jones DD, Lohse CM, Goyal DG, Kallis JS, Sadosty AT. Impact of scribes on patient throughput in adult and pediatric academic EDs. The American journal of emergency medicine. 2016;34(10):1982–5. doi: 10.1016/j.ajem.2016.07.011. [DOI] [PubMed] [Google Scholar]

- 6.Heaton HA, Nestler DM, Jones DD, Varghese RS, Lohse CM, Williamson ES, Sadosty AT.Impact of Scribes on Billed Relative Value Units in an Academic Emergency Department J Emerg Med 2016. doi: 10.1016/j.jemermed.2016.11.017. PubMed PMID: 27988262. [DOI] [PubMed]

- 7.Heaton HA, Nestler DM, Lohse CM, Sadosty AT.Impact of scribes on emergency department patient throughput one year after implementation The American journal of emergency medicine 2016. doi: 10.1016/j.ajem.2016.11.017. PubMed PMID: 27856140. [DOI] [PubMed]

- 8.Hess JJ, Wallenstein J, Ackerman JD, Akhter M, Ander D, Keadey MT, Capes JP. Scribe Impacts on Provider Experience, Operations, and Teaching in an Academic Emergency Medicine Practice. West J Emerg Med. 2015;16(05):602–10. doi: 10.5811/westjem.2015.6.25432. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Walker K, Ben-Meir M, O’Mullane P, Phillips D, Staples M. Scribes in an Australian private emergency department: A description of physician productivity. Emergency medicine Australasia. 2014;26(06):543–8. doi: 10.1111/1742-6723.12314. [DOI] [PubMed] [Google Scholar]

- 10.Walker KJ, Ben-Meir M, Phillips D, Staples M. Medical scribes in emergency medicine produce financially significant productivity gains for some, but not all emergency physicians. Emergency medicine Australasia. 2016;28(03):262–7. doi: 10.1111/1742-6723.12562. [DOI] [PubMed] [Google Scholar]

- 11.Shuaib W, Hilmi J, Caballero J, Rashid I, Stanazai H, Ajanovic A, Moshtaghi A, Amari A, Tawfeek K, Khurana A, Hasabo H, Baqais A, Mattar AA, Gaeta TJ. Impact of a scribe program on patient throughput, physician productivity, and patient satisfaction in a community-based emergency department. Health Informatics Journal. 2017. Epub March 1, 2017. doi: 10.1177/1460458217692930.

- 12.Bank AJ, Obetz C, Konrardy A, Khan A, Pillai KM, McKinley BJ, Gage RM, Turnbull MA, Kenney WO. Impact of scribes on patient interaction, productivity, and revenue in a cardiology clinic: a prospective study. ClinicoEconomics and outcomes research. 2013;5(01):399–406. doi: 10.2147/CEOR.S49010.

- 13.Bank AJ, Gage RM. Annual impact of scribes on physician productivity and revenue in a cardiology clinic. ClinicoEconomics and outcomes research. 2015;7:489–95. doi: 10.2147/CEOR.S89329.

- 14.Koshy S, Feustel PJ, Hong M, Kogan BA. Scribes in an ambulatory urology practice: patient and physician satisfaction. The Journal of urology. 2010;184(01):258–62. doi: 10.1016/j.juro.2010.03.040.

- 15.Carnes KM, de Riese CS, Werner TW. A Cost-Benefit Analysis of Medical Scribes and Electronic Medical Record System in an Academic Urology Clinic. Urology Practice. 2015;2(3).

- 16.Misra-Hebert AD, Amah L, Rabovsky A, Morrison S, Cantave M, Hu B, Sinsky CA, Rothberg MB. Medical scribes: How do their notes stack up? J Fam Pract. 2016;65(03):155–9.

- 17.Yan C, Rose S, Rothberg MB, Mercer MB, Goodman K, Misra-Hebert AD. Physician, Scribe, and Patient Perspectives on Clinical Scribes in Primary Care. J Gen Intern Med. 2016;31(09):990–5. doi: 10.1007/s11606-016-3719-x.

- 18.Dunlop W, Hegarty L, Staples M, Levinson M, Ben-Meir M, Walker K. Medical scribes have no impact on the patient experience of an emergency department. Emergency Medicine Australasia. 2017. doi: 10.1111/1742-6723.12818.

- 19.Hess JJ, Akhter M, Ackerman J, Wallenstein J, Ander D, Keadey M, Capes JP. Prospective evaluation of a scribe program’s impacts on provider experience, patient flow, productivity, and teaching in an academic emergency medicine practice. Annals of emergency medicine. 2013;1:S1. doi: 10.5811/westjem.2015.6.25432.

- 20.Heaton HA, Samuel R, Farrell KJ, Colletti JE. Emergency department scribes: A two-step training program. Annals of emergency medicine. 2015;1:S159.

- 21.Walker KJ, Dunlop W, Liew D, Staples MP, Johnson M, Ben-Meir M, Rodda HG, Turner I, Phillips D. An economic evaluation of the costs of training a medical scribe to work in Emergency Medicine. Emerg Med J. 2016;33(12):865–9. doi: 10.1136/emermed-2016-205934.

- 22.Stetson PD, Morrison FP, Bakken S, Johnson SB, eNote Research Team. Preliminary development of the physician documentation quality instrument. J Am Med Inform Assoc. 2008;15(04):534–41. doi: 10.1197/jamia.M2404.

- 23.Stetson PDB. Assessing Electronic Note Quality using the Physician Documentation Quality Instrument (PDQI-9). Applied Clinical Informatics. 2012;3:164–74. doi: 10.4338/ACI-2011-11-RA-0070.

- 24.Combs T. Recognizing the Characteristics of Quality Documentation. J AHIMA. 2016;87(05):32–3.

- 25.Kargul GJ, Wright SM, Knight AM, McNichol MT, Riggio JM. The hybrid progress note: semiautomating daily progress notes to achieve high-quality documentation and improve provider efficiency. Am J Med Qual. 2013;28(01):25–32. doi: 10.1177/1062860612445307.

- 26.Burke HB, Hoang A, Becher D, Fontelo P, Liu F, Stephens M, Pangaro LN, Sessums LL, O’Malley P, Baxi NS, Bunt CW, Capaldi VF, Chen JM, Cooper BA, Djuric DA, Hodge JA, Kane S, Magee C, Makary ZR, Mallory RM, Miller T, Saperstein A, Servey J, Gimbel RW. QNOTE: an instrument for measuring the quality of EHR clinical notes. J Am Med Inform Assoc. 2014;21(05):910–6. doi: 10.1136/amiajnl-2013-002321.

- 27.Jamieson T, Ailon J, Chien V, Mourad O. An electronic documentation system improves the quality of admission notes: a randomized trial. J Am Med Inform Assoc. 2017;24(01):123–9. doi: 10.1093/jamia/ocw064.

- 28.Bierman JA, Hufmeyer KK, Liss DT, Weaver AC, Heiman HL. Promoting Responsible Electronic Documentation: Validity Evidence for a Checklist to Assess Progress Notes in the Electronic Health Record. Teach Learn Med. 2017;12:1–13. doi: 10.1080/10401334.2017.1303385.