Summary

Objectives: Determine whether clinical decision support (CDS) malfunctions occur in a commercial electronic health record (EHR) system, characterize their pathways, and describe methods of detection.

Methods: Over a five-year period, we retrospectively examined the firing rates of 226 alert-type CDS rules to detect anomalies, using both expert visualization and statistical process control (SPC) methods. Candidate anomalies were investigated and validated.

Results: Twenty-one candidate CDS anomalies were identified from 8,300 alert-months. Of these candidate anomalies, four were confirmed as CDS malfunctions, eight were false positives, and nine could not be classified. The four CDS malfunctions resulted from errors in knowledge management: 1) inadvertent addition and removal of medication codes in the electronic formulary list; 2) a seasonal alert that was not activated; 3) a change in the base data structures; and 4) direct editing of an alert related to its medications. Of the 226 rules, 154 (68%) were amenable to SPC methods, which had a sensitivity of 95%, a positive predictive value of 29%, and an F-measure of 0.44.

Discussion: CDS malfunctions were found to occur in our EHR. All of the pathways for these malfunctions can be described as knowledge management errors. Expert visualization is a robust but resource-intensive method of detection. SPC-based methods, when applicable, performed reasonably well retrospectively.

Conclusion: CDS anomalies were found to occur in a commercial EHR, and visual detection combined with SPC analysis represents a promising method of malfunction detection.

Citation: Kassakian SZ, Yackel TR, Gorman PN, Dorr DA. Clinical decisions support malfunctions in a commercial electronic health record. Appl Clin Inform 2017; 8: 910–923 https://doi.org/10.4338/ACI-2017-01-RA-0006

Keywords: Clinical decision support, errors, malfunction, alerts, electronic health record, electronic medical record

1. Background and Significance

1.1 Clinical Decision Support

Clinical decision support (CDS) tools are focused on improving medical decision making. A large body of evidence supports the effectiveness of these tools in improving process outcomes and reducing errors [ 1 – 5 ]. Overall, CDS is considered an essential part of realizing the potential benefits of health information technology.

There is widespread concern about the phenomenon of alert fatigue, whereby provider responsiveness to CDS alerts declines rapidly with increasing exposure to them [ 6 – 8 ]. Alert fatigue is believed to arise both from the sheer volume of alerts presented to users and from the high rate of false-positive alerts [ 9 ]. It is unlikely to decrease as more care processes, quality initiatives, and compliance-related issues are "hard-wired" into the EHR via CDS [ 10 ]. Given these concerns, it is imperative that significant effort be made to optimize and curate CDS tools so that poorly functioning CDS does not contribute further to alert fatigue.

In addition to alert fatigue, several other safety concerns surrounding CDS have been uncovered and remain unresolved [ 6 , 11 ]. More recently, concern has emerged about malfunctioning CDS systems. A CDS system is considered to be malfunctioning when it "…does not function as it was designed or expected to" [ 12 ]. Wright et al. described a small case series of four CDS malfunctions in a home-grown EHR system, caused by a change in a laboratory test code, a drug dictionary change, inadvertent alteration of the underlying alert logic, and a software coding error in the underlying system [ 12 ]. These authors also surveyed Chief Medical Information Officers (CMIOs) about whether similar CDS malfunctions had occurred in their systems, and 27 of 29 CMIOs responded affirmatively. To date, there has been no comprehensive analysis of CDS malfunctions in any other EHR installation, and none in a commercial system, even though commercial systems are the predominant type in use in the U.S.

When a CDS tool malfunctions, there is rarely a mechanism in place to detect the malfunction. Instead, ad hoc user reports may uncover an issue, or an administrator may retrospectively review and manually analyze firing and response rates [ 13 ]. Because many organizations have hundreds or thousands of distinct pieces of CDS, a comprehensive manual review is all but impossible. Moreover, the tools in most commercial EHRs offer limited functionality even for manual review.

2. Objectives

Given the ongoing concerns about effective use of CDS and the emerging concerns regarding CDS malfunctions [ 10 , 12 , 14 ], we set out to evaluate and characterize CDS malfunctions in a commercial EHR. First, we wanted to determine whether CDS malfunctions occur in our instance of a commonly used commercial EHR. Second, if CDS malfunctions were found, we sought to characterize the pathways through which they occur. Third, we sought to describe methods for both detection and prevention of CDS malfunctions.

3. Material and Methods

3.1. Study Setting and Electronic Health Record System

This study used data from Oregon Health and Science University (OHSU), a 576-bed tertiary care facility in Portland, OR. The EHR in use at OHSU is EPIC Version 2015 (EPIC Systems, Verona, WI).

3.2. Clinical Decision Support Tools

Our study focuses on what the literature has referred to as point-of-care alerts/reminders [ 15 ]. These CDS tools generally fall within the category of rules, in which pre-specified logical criteria are defined and a specific action or set of actions is expected to be fulfilled [ 16 ].

We chose to focus on these alerts because their development and knowledge management are done locally, whereas many other CDS tools are either inherent to the system (e.g., duplicate order checking) or obtained from third-party vendors (e.g., drug-drug interaction checking). We therefore expect the knowledge gained from this study to have greater external validity.

3.3. Data Abstraction

Using Oracle SQL Developer (Oracle Corporation, Redwood Shores, CA), we created a SQL script to retrieve the alert activity history from the EPIC-supplied relational database.
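The abstraction step essentially reduces raw firing events to a count per alert per time period, which is the input both to visualization and to the c-charts. A minimal sketch of that aggregation follows, using an in-memory SQLite database as a stand-in for the Oracle-hosted vendor tables. The table `alert_firings`, its columns, and the sample rows are hypothetical; the actual EPIC reporting schema is not described here.

```python
import sqlite3

# Hypothetical stand-in schema: one row per alert firing. The actual EPIC
# reporting tables and column names are not given in the text.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE alert_firings (alert_id INTEGER, fired_at TEXT)")
conn.executemany(
    "INSERT INTO alert_firings (alert_id, fired_at) VALUES (?, ?)",
    [
        (101, "2015-04-03 09:15:00"),
        (101, "2015-04-20 14:02:00"),
        (101, "2015-05-07 08:30:00"),
        (202, "2015-04-11 16:45:00"),
    ],
)

# One firing count per alert per calendar month (SQLite date syntax; in
# Oracle the month bucket would be TO_CHAR(fired_at, 'YYYY-MM') instead).
MONTHLY_COUNTS_SQL = """
    SELECT alert_id,
           strftime('%Y-%m', fired_at) AS month,
           COUNT(*) AS firings
    FROM alert_firings
    GROUP BY alert_id, month
    ORDER BY alert_id, month
"""

monthly_counts = conn.execute(MONTHLY_COUNTS_SQL).fetchall()
for alert_id, month, firings in monthly_counts:
    print(alert_id, month, firings)
```

Note that a `GROUP BY` only returns months in which an alert fired at least once; before charting, months with no firings would need to be filled in as zeros, since a drop to zero (as in the height documentation alert) is itself a signal.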

3.4. Visual Anomaly Detection

Visual anomaly detection was performed using Tableau 9.2 (Tableau Software, Seattle, WA). An expert (SZK) visually inspected the firing history of each unique alert. When an alert's firing appeared to deviate from its historical pattern, or behaved in a way inconsistent with knowledge of the targeted activity, the firing event was deemed a candidate visual anomaly. For example, alerts related to influenza were expected to show seasonal activity, so a seasonal pattern encountered during visual inspection was not considered an anomaly.

A second expert reviewer (DAD) was used to validate the visualization method. Ten representative visualizations of unique alert activity, five considered anomalies and five considered normal by the initial reviewer, were shared with DAD for classification. Inter-rater reliability was calculated.
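The text does not state which reliability statistic was used. As an illustration only, the sketch below computes simple percent agreement and Cohen's kappa, a common chance-corrected alternative, for two reviewers labeling the same visualizations. The labels are illustrative and mirror the five-anomaly/five-normal design with perfect agreement.

```python
from collections import Counter


def percent_agreement(a, b):
    """Fraction of items to which both raters assign the same label."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(a)
    p_observed = percent_agreement(a, b)
    count_a, count_b = Counter(a), Counter(b)
    labels = set(a) | set(b)
    # Chance agreement: probability both raters pick the same label independently.
    p_expected = sum(count_a[k] * count_b[k] for k in labels) / (n * n)
    if p_expected == 1:  # both raters used one identical label for every item
        return 1.0
    return (p_observed - p_expected) / (1 - p_expected)


# Illustrative labels for ten visualizations (5 anomalies, 5 normal).
rater_1 = ["anomaly"] * 5 + ["normal"] * 5
rater_2 = ["anomaly"] * 5 + ["normal"] * 5
print(percent_agreement(rater_1, rater_2))  # 1.0
print(cohens_kappa(rater_1, rater_2))       # 1.0
```

With a balanced 5/5 split, chance agreement is 0.5, so a single disagreement would lower kappa to 0.8 while percent agreement would only drop to 0.9.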

3.5. Statistical Process Control Anomaly Detection

Because our dataset consisted of count data and the underlying denominator, or area of opportunity, was likely to vary little, we created statistical process control (SPC) c-charts [ 17 , 18 ] in Tableau. The following tests were performed to detect the presence of special-cause variation [ 17 ]: Test #1, a single point outside the control limits set at 3 standard deviations from the average line; Test #2, two of three consecutive points more than 2 standard deviations from the average line, both on the same side; Test #3, eight or more consecutive points on the same side of the average line; and Test #4, six or more values steadily increasing or decreasing. SPC anomaly detection was attempted on both weekly and monthly time scales. To characterize the performance of SPC detection methods, we determined sensitivity, specificity, precision, and the F-measure. Because there is no established gold standard and resources precluded validation of the entire CDS cohort, we first reduced the dataset to those CDS rules to which SPC could be applied, i.e., rules with a prior history of control for a sufficient period of time. Within this reduced dataset, we treated all visual anomalies identified by reviewer SZK as true positives and all non-candidate CDS rules as true negatives.
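The four special-cause tests can be sketched in code. This is a minimal illustration, not the Tableau implementation the authors used: it assumes Poisson-based c-chart limits (sigma = √c̄) and, as described in the limitations, a single center line computed from the whole series. Function names and example counts are hypothetical.

```python
import math


def c_chart_center_and_sigma(counts):
    """Center line (mean count) and sigma for a c-chart.

    Counts are modeled as Poisson, so sigma = sqrt(c_bar). A single center
    line is computed from the whole series.
    """
    c_bar = sum(counts) / len(counts)
    return c_bar, math.sqrt(c_bar)


def special_cause_tests(counts):
    """Return {test number: indices at which that test is triggered}."""
    c_bar, sigma = c_chart_center_and_sigma(counts)
    flags = {1: [], 2: [], 3: [], 4: []}

    # Test 1: a single point beyond 3 sigma. A negative lower limit simply
    # cannot be crossed, matching the usual truncation of the LCL at 0.
    for i, c in enumerate(counts):
        if abs(c - c_bar) > 3 * sigma:
            flags[1].append(i)

    # Test 2: two of three consecutive points beyond 2 sigma on the same side
    # (flagged at the last point of the three-point window).
    for i in range(2, len(counts)):
        window = counts[i - 2 : i + 1]
        above = sum(c > c_bar + 2 * sigma for c in window)
        below = sum(c < c_bar - 2 * sigma for c in window)
        if above >= 2 or below >= 2:
            flags[2].append(i)

    # Test 3: eight or more consecutive points on the same side of the center
    # line; a point exactly on the line breaks the run.
    run, side = 0, 0
    for i, c in enumerate(counts):
        s = (c > c_bar) - (c < c_bar)  # +1 above, -1 below, 0 on the line
        run = run + 1 if s != 0 and s == side else (1 if s != 0 else 0)
        side = s
        if run >= 8:
            flags[3].append(i)

    # Test 4: six or more values steadily increasing or decreasing, i.e. at
    # least five consecutive steps in the same direction; ties break the trend.
    steps, direction = 0, 0
    for i in range(1, len(counts)):
        d = (counts[i] > counts[i - 1]) - (counts[i] < counts[i - 1])
        steps = steps + 1 if d != 0 and d == direction else (1 if d != 0 else 0)
        direction = d
        if steps >= 5:
            flags[4].append(i)

    return flags


# Illustrative monthly counts (not study data): a stable level, then a drop.
monthly = [80, 75, 90, 85, 70, 95, 80, 88, 76, 84, 92, 78,
           6, 4, 8, 5, 3, 7, 6, 5]
violations = special_cause_tests(monthly)
print({test: bool(idx) for test, idx in violations.items()})
```

Because the center line here averages the periods before and after the drop, both segments sit far from it, which is why a sustained level shift trips Tests #1 and #3 even without a separate pre-change baseline.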

3.6. Candidate Anomaly Validation

A CDS malfunction (i.e., a true-positive anomaly) occurs when the CDS rule "…does not function as it was designed or expected to" [ 12 ]. For example, if an anomaly detection method finds that an alert's firing rate decreased, and it is then determined that this occurred because the target population is seen less frequently in the relevant setting, the alert would be considered a false positive. In contrast, if a candidate anomaly is identified because the firing rate decreased significantly, and this is found to be secondary to a change in a laboratory test code that is part of the CDS logic, it would be considered a true positive (i.e., a CDS malfunction). In essence, if the CDS should have kept firing because the same clinical situation was still occurring and the alert should still fire in that situation, the anomaly was a true positive, or malfunction.

Candidate anomalies were validated as follows. First, the alert build records were searched to determine the original creation date and whether the records had any editing history, as shown in the time-stamp data. Linked records were examined to ensure they remained released in the system. For each candidate anomaly, the findings were discussed informally with the local CDS analyst, followed by further discussion with other EHR analysts responsible for various parts of the EHR build. Additionally, the CDS analyst work logs, when available, were searched for notes regarding the build and subsequent alterations to the tool. Our institution-wide EHR change notice system was searched for related entries coinciding with changes in alert activity. As needed, we discussed alert activity with relevant clinical users and departments to identify competing changes that could have affected alert firing rates. For any alert involving medication records, we held extensive discussions with pharmacy informatics colleagues.

The primary outcome, the number of malfunctions, was expressed per alert-month. Alert-months were calculated by summing, across all alerts, the number of months each alert was active. For example, 10 alerts active for 6 months and an additional 5 alerts active for 3 months would yield a total of 75 alert-months (10 × 6 + 5 × 3).
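The alert-month denominator can be computed directly from each alert's active period. The sketch below assumes each alert has a single contiguous active span given as inclusive (year, month) tuples; alerts with gaps, such as seasonally deactivated rules, would need one span per active period. The dates are hypothetical and chosen only to reproduce the worked example above.

```python
def months_active(start, end):
    """Inclusive number of calendar months between two (year, month) tuples."""
    (y0, m0), (y1, m1) = start, end
    return (y1 - y0) * 12 + (m1 - m0) + 1


def total_alert_months(active_spans):
    """Sum, over all alerts, of the months each alert was active."""
    return sum(months_active(start, end) for start, end in active_spans)


# Worked example from the text: 10 alerts active 6 months, 5 alerts active 3 months.
spans = [((2015, 1), (2015, 6))] * 10 + [((2015, 1), (2015, 3))] * 5
print(total_alert_months(spans))  # 75

# Applied to the study totals (4 malfunctions in 8,300 alert-months),
# expressed per 1,000 alert-months.
print(round(4 / 8300 * 1000, 2))  # 0.48
```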

3.7. Institutional Review Board

Institutional review board (IRB) approval for this study with a waiver of informed consent was obtained.

4. Results

We analyzed a total of 8,300 alert-months comprising 226 alert-type CDS rules that were shown to users and were still active in the system. These CDS rules formed the cohort used in this analysis. Of these 226 rules, 21 were considered visual anomalies by the first CDS reviewer. Of the 21 visual anomalies, 4 were considered CDS malfunctions (i.e., true positives), 8 were false positives (i.e., expected changes in alerting), and 9 could not be classified (► Table 1 ). Of the 226 alert-type CDS rules, 154 were amenable to the SPC detection method; the remaining rules did not meet the assumption of control required for SPC detection. The pathways of all four CDS malfunctions were attributed to knowledge management processes (► Table 2 ).

Table 1.

CDS rules identified as candidate visual anomalies. The SPC Detection column lists the SPC test(s) violated; see Section 3.5 for details.

| CDS Rule | SPC Detection | CDS Malfunction |
|---|---|---|
| Propofol Shortage | 1,2,3 | No |
| ER EKG Ordering | 3 | No |
| Chemotherapy Ordering | 2 | No |
| IV Rx Stop Time | 3 | No |
| Osteoporosis Screening | 3 | No |
| Foley Catheter | 0 | No |
| Osteoporosis Screening #2 | 3 | No |
| Treatment Protocol | 3 | No |
| MRI and Observation status | 3 | Unknown |
| Sigmoidoscopy | 1 | Unknown |
| 2nd Generation anti-psychotics | 3 | Unknown |
| Pneumococcal Vaccination | 3 | Unknown |
| Post Stroke anti-platelet Rxs | 1 | Unknown |
| Daypatient status | 3 | Unknown |
| Observation Status | 3 | Unknown |
| HMO Insurance | NA | Unknown |
| Colorectal Cancer Screening | 3 | Unknown |
| Coronary artery disease Rx | 1,2,3 | Yes |
| Enoxaparin orderset use | 2,3 | Yes |
| Influenza at Discharge | NA | Yes |
| Patient Height documentation | 1 | Yes |

NA = Not applicable (rule not amenable to SPC methods); 0 = no SPC test violated

Table 2.

CDS malfunctions and corresponding pathways.

| CDS Rule | Malfunction Pathway |
|---|---|
| Height documentation | Target clinical department was discontinued |
| Enoxaparin order set use | Medication record accidentally added to preference list |
| Influenza vaccination | Rule not activated for influenza season |
| Coronary artery disease management | Direct editing of rule logic |

4.1. Anomaly Visualization Reviewer Agreement

A random sample of five candidate visual anomaly visualizations and five non-candidate visualizations, as classified by reviewer SZK, was shared with a second reviewer (DAD), who was blinded to the first reviewer's determinations. The two reviewers agreed 100% in classifying the visualizations as anomalies or non-anomalies.

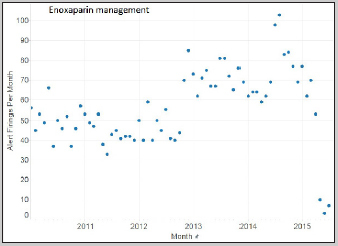

4.2. CDS Malfunction #1: Use of enoxaparin order set

Based on both visualization and SPC c-chart methods (Tests 2 and 3), an anomaly was detected (► Figure 1 ). The visualization method flagged a likely anomaly because the monthly alerting rate had consistently ranged in the 60–100s for several years and then decreased to fewer than 10 firings per month starting in May 2015.

Fig. 1.

Alert activity from CDS rule related to use of the enoxaparin order set, determined to be a malfunction

Ensuring the proper use of anticoagulation is a major patient safety concern. As part of an institutional quality improvement process, all orders for enoxaparin were required to use an order set to ensure compliance with a regulatory requirement specifying which provider was managing the anticoagulation. To ensure that users used the order set, an interruptive alert was created that was triggered when an order for enoxaparin was entered and the patient did not have an accompanying order for anticoagulation management.

When the alert was initially developed, a dummy medication record for enoxaparin was created and placed in the EHR formulary list available to users. This dummy record redirected users to the order set and was the record specified in the alert criteria that caused the alert to fire. However, the actual medication record still needed to exist to ensure proper functioning of a multitude of pharmacy processes. In July 2015, during routine pharmacy medication list maintenance, a new formulary medication list was created that included the actual enoxaparin medication record instead of the dummy record. Thus, when providers searched for enoxaparin, they found the actual record rather than the dummy record, and because the actual record was not included in the alert logic, the alert was never triggered. Rare firings of the alert continued after this event because some users had added the dummy enoxaparin record to their personal medication preference lists; selecting it still triggered the alert and redirected them to the order set.
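The failure mode is easier to see when the rule's trigger criteria are written out. The sketch below is a hypothetical model, not the EPIC rule engine: the record identifiers are invented, and the alert is reduced to "a trigger record is ordered without an anticoagulation-management order." Swapping which record the shared formulary list returns silently stops the alert, and a simple set intersection shows that no trigger record remains reachable from the list.

```python
# Hypothetical record IDs; the real EPIC medication record identifiers are not given.
DUMMY_ENOXAPARIN = "MED-ENOX-DUMMY"
ACTUAL_ENOXAPARIN = "MED-ENOX"

# The alert criteria name only the dummy record.
ALERT_TRIGGER_RECORDS = frozenset({DUMMY_ENOXAPARIN})


def alert_fires(ordered_record: str, has_anticoag_management_order: bool) -> bool:
    """Fire when a trigger record is ordered without an anticoagulation-management order."""
    return ordered_record in ALERT_TRIGGER_RECORDS and not has_anticoag_management_order


# What a search for "enoxaparin" returns from the shared formulary list.
formulary_before = {"enoxaparin": DUMMY_ENOXAPARIN}
formulary_after = {"enoxaparin": ACTUAL_ENOXAPARIN}  # after list maintenance

fires_before = alert_fires(formulary_before["enoxaparin"], has_anticoag_management_order=False)
fires_after = alert_fires(formulary_after["enoxaparin"], has_anticoag_management_order=False)
print(fires_before, fires_after)  # True False

# Consistency check: is any trigger record still reachable from the shared list?
reachable = ALERT_TRIGGER_RECORDS & set(formulary_after.values())
print(bool(reachable))  # False: the alert can no longer fire via the shared list
```

The residual firings described above correspond to users whose personal preference lists still returned the dummy record, which this simplified model does not include.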

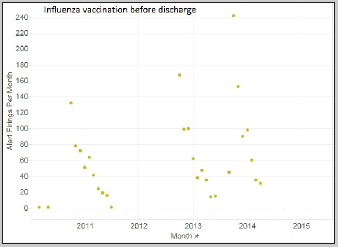

4.3. CDS Malfunction #2: Administration of Flu vaccine at discharge

Based on visualization, a candidate anomaly was detected (► Figure 2 ). The visualization method flagged an anomaly because a clear seasonal pattern was observed in the 2010–2011, 2012–2013, and 2013–2014 influenza seasons but was absent in both 2011–2012 and 2014–2015.

Fig. 2.

Alert activity from CDS rule related to influenza vaccine administration prior to discharge, determined to be a malfunction

This alert is triggered when a patient has an active order for influenza vaccination that has not yet been administered and then receives a discharge order. The alert is thus intended to prevent discharge without administration of the vaccine.

Discussion with the CDS analyst revealed that, after each influenza season, this rule is manually deactivated, i.e., removed from production, along with all the other influenza CDS rules. However, this particular rule was not reactivated for the missing influenza seasons. The CDS analysts characterized this as an oversight.

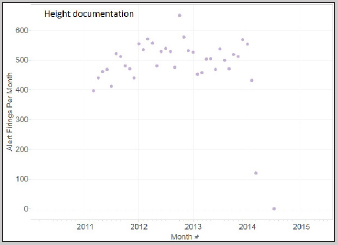

4.4. CDS Malfunction #3: No documented height in oncology patients

Based on visualization and SPC c-chart methods (Test #1), a candidate anomaly was detected (► Figure 3 ). On visualization, the alert activity was noted to drop to zero after March 2014.

Fig. 3.

Alert activity from CDS rule related to height documentation in oncology clinic, determined to be a malfunction

This alert was created to ensure that patients in the hematologic malignancy clinic had a recently documented height in the EHR. This is important because many chemotherapeutic medications are dosed by body surface area, which requires a height to calculate.

After this candidate anomaly was discovered, the alert criteria were critically examined, and the department targeted by the alert was found to be no longer active. Within the EHR, departments function less as virtual representations of physical spaces and more as scheduling and billing entities, and they change relatively frequently.

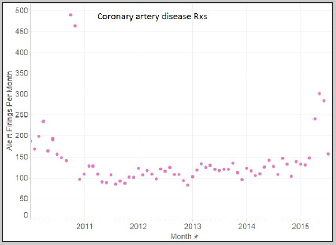

4.5. CDS Malfunction #4: Coronary artery disease and use of anti-platelet medications

Based on visualization of the alert firing history and SPC chart detection (Tests 1, 2, and 3), an anomaly was discovered. Visually, the alert had historically fired approximately 200 times per month. Around October 2010, the firing rate increased at least two-fold (► Figure 4 ). An additional increase in alert firing was seen in 2015, corresponding to an intended expansion of the alert to additional clinical departments.

Fig. 4.

Alert activity from CDS rule related to coronary artery disease and appropriate medications (RXs), determined to be a malfunction.

This alert was created to ensure that patients with a diagnosis of coronary artery disease (CAD) were also prescribed an anti-platelet medication, as supported by strong evidence [ 19 ]. When the alert was originally created, the medications that satisfied, i.e., suppressed, the alert were limited to antiplatelet medications, consistent with the guidelines.

During the candidate anomaly validation work, we determined that a change to the rule was made on 10 October 2010, the exact day on which the increase in the alert firing rate becomes apparent. This change expanded the set of target medications that would satisfy, i.e., suppress, the alert. Previously, the alert was suppressed when a patient with a diagnosis of CAD had a prescription for an antiplatelet medication. For unclear reasons, the alert criteria were expanded to include medications in the anticoagulant class in addition to antiplatelet medications. Because a larger group of medications now suppressed the alert, the firing rate would have been expected to fall; instead, it immediately increased. In discussion with the pharmacy informatics colleagues involved in these changes, no clear explanation for this increase emerged. The alert firing rate subsequently decreased to just below its historical level, again with no known explanation.

4.6. False Positive CDS anomalies

There were a total of eight false positive CDS anomalies identified (► Table 1 ).

4.7. Performance of SPC c-chart anomaly detection methods

SPC c-chart detection methods were applicable to 19 of the 21 anomalies detected by visualization and detected 18 of these 19 (► Table 1 ). The single alert that SPC methods failed to detect related to potentially inappropriate Foley catheter use. This alert fired at a fairly low rate (<10/day), and the single point judged visually to represent a candidate anomaly did not exceed the 3-standard-deviation control limit used in Test #1. The two remaining candidate anomalies were not amenable to SPC methods. Following this screening process, we treated all anomalies detected by visual analysis as true positives and the remaining CDS rules not considered anomalies on visual analysis as true negatives; i.e., visual anomaly detection served as the gold standard. We performed this analysis using an aggregate SPC c-chart result, in which a positive test was one that violated any of Tests #1, 2, 3, or 4. Under these assumptions, the sensitivity of SPC c-chart detection in our study was 0.95 (18/19), the precision, or positive predictive value, was 0.29 (18/(18 + 44)), and the F-measure was 0.44.
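The three reported figures follow from the counts in the paragraph above: 18 true positives, 1 false negative (the Foley catheter alert), and 44 SPC-positive rules not flagged visually. The sketch below reproduces them, taking the F-measure as the harmonic mean of precision and sensitivity (the usual F1 definition, assumed here since the text does not give the formula).

```python
def detection_metrics(tp, fp, fn):
    """Sensitivity (recall), precision (PPV), and F1 from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)
    precision = tp / (tp + fp)
    # F1 is the harmonic mean of precision and sensitivity.
    f_measure = 2 * precision * sensitivity / (precision + sensitivity)
    return sensitivity, precision, f_measure


sens, ppv, f1 = detection_metrics(tp=18, fp=44, fn=1)
print(round(sens, 2), round(ppv, 2), round(f1, 2))  # 0.95 0.29 0.44
```

Equivalently, F1 = 2TP / (2TP + FP + FN) = 36/81 ≈ 0.44, which makes clear that the low score is driven mainly by the 44 false positives rather than by missed anomalies.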

5. Discussion

In 8,300 alert-months, we found and validated four CDS malfunctions among 226 CDS rules. Additionally, we identified nine CDS rules as anomalies that remain unclassified. It is entirely possible, if not likely, that several of these rules represent additional CDS malfunctions. Prior to this study, none of the identified CDS malfunctions had been detected by either our analysts or our users. To our knowledge, this work represents the first published examination of CDS malfunctions in a commercial EHR. Recent work by Wright et al. found four instances of CDS malfunctions in a home-grown EHR system out of some 201 examined (A. Wright, May 2016) [ 12 ]. Furthermore, their survey of CMIOs suggested that these types of errors may be much more widespread. Based on our finding of 4 malfunctions in 8,300 alert-months, any site with more than 200 alerts is likely to find 1–2 malfunctions per year in its decision support arising from normal operation of the system.
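The 1–2 per year estimate can be reproduced as a rough extrapolation, assuming the rate observed here (4 malfunctions per 8,300 alert-months) carries over to another site with 200 alerts active throughout the year:

$$
\text{expected malfunctions per year} \approx \frac{4}{8{,}300\ \text{alert-months}} \times \left(200\ \text{alerts} \times 12\ \tfrac{\text{months}}{\text{year}}\right) = \frac{9{,}600}{8{,}300} \approx 1.2
$$

Under the same assumption, the expected count scales linearly with the number of active alerts.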

We collectively refer to the patterns of malfunctions found in our CDS library as knowledge management errors. All but one of these errors involved an active alteration to some aspect of the EHR system; in the remaining case, a lack of action caused the malfunction (i.e., the influenza rule was not activated).

These findings raise two main follow-up questions, concerning detection and prevention. With regard to prevention, it would be overly simplistic to suggest that CDS rules be tested every time a change is made to the system. While this is clearly prudent when an analyst directly edits a CDS rule, as occurred before the fourth CDS malfunction, it is likely not feasible for changes to other parts of the system, given how frequently such changes occur. Particularly germane to this pathway is the CDS malfunction that resulted from the discontinuation of a department. We believe this type of malfunction could result from a change in any of a number of attributes used to target CDS to specific provider or patient populations. Within the EHR, departments are virtual entities created mostly to enable billing and scheduling. They are created and discontinued frequently, often with virtually no perceptible change in the physical world. The difficulty arises because many of these local attributes are essentially hard-coded in multiple areas of the EHR build, and changing them requires one-by-one adjustments. While our institution has a notification system that alerts analysts when changes in one area of the EHR build may affect other areas, this system relies on manual curation.
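One way to reduce reliance on manual curation is an automated cross-check between rule targeting attributes and the current list of active departments. The sketch below is a hypothetical illustration: the `CdsRule` structure, department identifiers, and the availability of an exportable list of active departments are all assumptions, not features described for this EHR.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CdsRule:
    name: str
    target_department_ids: frozenset  # empty means the rule is not department-restricted


def rules_with_inactive_targets(rules, active_department_ids):
    """Return {rule name: sorted inactive department IDs} for rules that target
    at least one department that is no longer active."""
    findings = {}
    for rule in rules:
        inactive = rule.target_department_ids - active_department_ids
        if inactive:
            findings[rule.name] = sorted(inactive)
    return findings


def unreachable_rules(rules, active_department_ids):
    """Department-restricted rules whose every target is inactive, so they can never fire.
    Rules with no department restriction are skipped."""
    return [
        r.name
        for r in rules
        if r.target_department_ids and not (r.target_department_ids & active_department_ids)
    ]


# Hypothetical rules and department IDs, for illustration only.
rules = [
    CdsRule("Height documentation", frozenset({"HEME_MALIG_CLINIC"})),
    CdsRule("CAD anti-platelet Rx", frozenset({"CARDIOLOGY", "INTERNAL_MED"})),
    CdsRule("Unrestricted rule", frozenset()),
]
active = {"CARDIOLOGY", "INTERNAL_MED", "ONCOLOGY_INFUSION"}
print(rules_with_inactive_targets(rules, active))  # {'Height documentation': ['HEME_MALIG_CLINIC']}
print(unreachable_rules(rules, active))            # ['Height documentation']
```

The same pattern would apply to any other targeting attribute (provider type, encounter type), provided the attribute's currently valid values can be listed.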

The malfunction caused by inadvertently not activating the influenza CDS rule is clearly a knowledge management issue. At our institution, analysts are currently left to remember which rules require manual activation and deactivation each season. In this case, the analyst normally responsible for deactivating and reactivating these alerts was on leave both times the rule was not activated. Ironic as it may be, an improved knowledge management system that tracks these required changes and reminds analysts of them would likely prevent this type of CDS malfunction.
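Such a reminder system could be as simple as a registry of seasonal rules with their expected active windows, checked against what is actually in production. The sketch below is a hypothetical illustration: the registry format is invented, and the October–March window is an assumed example rather than the institution's actual influenza schedule.

```python
from datetime import date

# Hypothetical registry: rule name -> expected active window ((month, day), (month, day)).
# The October-March window is an illustrative assumption, not the institution's schedule.
SEASONAL_RULES = {
    "Influenza vaccination at discharge": ((10, 1), (3, 31)),
}


def in_window(today: date, start: tuple, end: tuple) -> bool:
    """True if today's (month, day) falls in the window; handles windows that wrap past Dec 31."""
    md = (today.month, today.day)
    if start <= end:
        return start <= md <= end
    return md >= start or md <= end


def seasonal_discrepancies(today: date, active_rules: set) -> list:
    """Seasonal rules whose production state disagrees with their expected window."""
    issues = []
    for name, (start, end) in SEASONAL_RULES.items():
        expected_active = in_window(today, start, end)
        is_active = name in active_rules
        if expected_active and not is_active:
            issues.append(f"{name}: expected active, but not in production")
        elif is_active and not expected_active:
            issues.append(f"{name}: still active outside its season")
    return issues


# A mid-November check with the rule missing from production flags the oversight.
print(seasonal_discrepancies(date(2014, 11, 15), active_rules=set()))
```

Run on a schedule (for example, daily), a check like this would not depend on any one analyst remembering the seasonal calendar.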

While we identified four CDS malfunctions, the fact that nine additional anomalies remain unclassified is concerning and demonstrates the significant resources required to validate and test CDS rules. Despite employing all of the validation techniques described above, we were unable to classify these nine anomalies. Further classification would require complete mapping and analysis of every attribute that defines each rule, which is prohibitively resource intensive. One of the most complicating aspects of the validation is its retrospective nature: in many cases we were looking for a needle dropped into the haystack 3, 4, or even 5 years earlier. We surmise that these anomalies would have been much easier to validate had they been uncovered in near real time. Adding to this difficulty, in our EHR system the records related to CDS rules are edited directly, overwriting prior entries. This vendor system offers a method to create new records upon editing, which would preserve prior configurations; implementing it would likely improve our ability to trace the root causes of malfunctions.

The CDS malfunction that followed routine maintenance of the EHR formulary list highlights the need for prospective detection, because a specific prevention method for this pathway remains unclear. Further supporting prospective detection, there are undoubtedly other pathways to CDS malfunction that have yet to be elucidated. For detection, we chose both visualization and SPC c-charts because these methods are supported by the literature in similar domains and are easy to apply, which promotes generalizability. Visualization has been shown to be a very strong detection method in multiple domains [ 20 ]. While SPC c-chart detection could be applied to only 154 of the 226 CDS rules, we believe this number could easily be improved with a prospective system, because such a system would allow the alert firing activity to be validated at the outset and used as the baseline against which future firing activity is judged. A retrospective system, by contrast, necessarily requires a prolonged period of control to serve as a baseline.

In brief, creating and implementing a real-time dashboard would be one logical extension of this effort. This would likely require the following steps. First, a raw data pipeline, consisting of a SQL script to obtain the raw data from the relational database tables followed by a series of data processing and refinement steps. Next, an automated analysis engine that creates SPC charts and tests the most recent results against them. Finally, a visual display that presents these results to CDS stakeholders.
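The analysis-engine step might look roughly like the sketch below, which fixes c-chart limits on a reviewed baseline period for each alert and flags alerts whose most recent count falls outside them. This is a hypothetical illustration: it applies only the 3-sigma rule (Test #1), the series are illustrative rather than study data, and the baseline length is an arbitrary parameter.

```python
import math


def baseline_limits(baseline_counts):
    """c-chart center line and 3-sigma limits fixed on a reviewed baseline period."""
    c_bar = sum(baseline_counts) / len(baseline_counts)
    sigma = math.sqrt(c_bar)  # Poisson assumption for count data
    return c_bar, max(0.0, c_bar - 3 * sigma), c_bar + 3 * sigma


def check_latest(alert_series, baseline_len):
    """Flag alerts whose most recent count lies outside limits fixed on their baseline.

    alert_series: {alert name: list of period counts, oldest first}
    Returns {alert name: (latest count, LCL, UCL)} for flagged alerts.
    """
    flagged = {}
    for name, counts in alert_series.items():
        if len(counts) <= baseline_len:
            continue  # not enough history yet for a baseline plus a new point
        _, lcl, ucl = baseline_limits(counts[:baseline_len])
        latest = counts[-1]
        if latest < lcl or latest > ucl:
            flagged[name] = (latest, round(lcl, 1), round(ucl, 1))
    return flagged


# Illustrative monthly series (not study data).
series = {
    "Enoxaparin order set": [80, 75, 90, 85, 70, 95, 4],
    "Stable alert": [20, 22, 18, 21, 19, 20, 23],
}
print(check_latest(series, baseline_len=6))
```

Fixing the limits on a validated baseline, rather than on the whole series, is what would let a prospective system judge each new period as it arrives.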

6. Strengths and Limitations

We believe one of the greatest strengths of this work is that it was conducted in a vendor system, by far the predominant type of EHR currently in use in the U.S. Additionally, while this analysis reviews a single implementation of a single vendor system, there is no particular reason to believe that these types of malfunctions would be limited to our implementation of this system or even to this particular vendor. Finally, all of the methods and software tools we used to carry out this work are readily available to any healthcare system, increasing the external validity of our work.

To use SPC c-chart methods, we had to assume that the denominator of interest, i.e., the potential population of patients targeted by the CDS, did not vary significantly (<20%) [ 17 ]. We believe this assumption was both reasonable and necessary, because calculating denominators for the target populations would have been extremely resource intensive: each alert has a unique set of provider-, patient-, and department-level characteristics that define when it is shown.

For the SPC c-chart analysis, we used one aggregate average and therefore created two aggregate control limits, an upper and a lower. Because we had no preconceived idea of when a change in process would occur, if at all, we could not create a control line prior to the change. One limitation of our study is that we were unable to validate the entire cohort, so there are potentially a number of false negatives, i.e., CDS malfunctions that went undetected by our methods. SPC c-charts demonstrated reasonable performance for retrospective detection of CDS anomalies. While we did not have a gold standard, we believe the visualization method likely represents a strong detection method and therefore serves as a reasonable proxy. Additionally, because high sensitivity is desirable when first constructing detection methods for these types of errors, and because the alerting behavior of false-positive CDS anomalies is in many cases nearly identical to that of true CDS malfunctions, we felt it was prudent to treat the CDS anomalies identified by visualization as "true positives" when characterizing SPC c-chart performance.

In addition, the wider context of pervasive alert overriding and other types of EHR-related errors is important to consider when framing the results of our study [ 21 – 23 ].

7. Conclusion

From a systematic examination of 8,300 CDS alert-months, we uncovered 21 CDS anomalies by visual inspection. After validation, four were determined to be CDS malfunctions, eight were false positives, and nine remain unclassified. All of the validated CDS malfunctions appear to follow what could be termed knowledge management error pathways. We did not find any errors resulting from intrinsic issues with the EHR system, external system integration, or third-party content. This work is likely important because these types of anomalies are probably occurring in other installations of this vendor system and in other vendor systems as well. Furthermore, SPC c-chart analysis represents a promising method for prospective monitoring of CDS alert rules, augmented by manual review of rules that are not amenable to SPC.

Multiple Choice Question

When performing a retrospective analysis of clinical decision support alert malfunctions in a commercial electronic health record which etiology is the most common cause of error?

Third-party terminology records

Software run-time errors

Knowledge management

Data migration between environments

In our retrospective analysis of clinical decision support malfunctions, we found a total of four malfunctions during the study period. All four were determined to result from knowledge management errors. This contrasts with a case series published by Wright et al., who examined CDS malfunctions in a home-grown EHR [ 12 ].

Clinical Relevance Statement

Clinical decision support (CDS) is a major tool for improving the delivery of healthcare. However, effective CDS requires that the underlying rules themselves function properly. We identified multiple CDS malfunctions in our implementation of a vendor EHR. It is likely that similar CDS malfunctions occur in other vendor systems. All of our CDS malfunctions resulted from knowledge management errors.

Acknowledgements

We thank Diana Pennington, Denise Sandell and Neil Edillo at OHSU for their tremendous assistance in all technical aspects of the CDS tools.

Funding Statement

Research reported in this publication was supported by the National Library of Medicine of the National Institutes of Health under Award Number T15LM007088. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Conflict of Interest

The authors declare that they have no conflicts of interest in the research.

Human Subjects Protection

The study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects.

References

- 1. Bright TJ, Wong A, Dhurjati R, Bristow E, Bastian L, Coeytaux RR, Samsa G, Hasselblad V, Williams JW, Musty MD, Wing L, Kendrick AS, Sanders GD, Lobach D. Effect of clinical decision-support systems: a systematic review. Annals of internal medicine. 2012;157(01):29–43. doi: 10.7326/0003-4819-157-1-201207030-00450.

- 2. Lobach D. Enabling Health Care Decision-making Through Clinical Decision Support and Knowledge Management. 2012.

- 3. McDonald CJ. Protocol-based computer reminders, the quality of care and the non-perfectability of man. The New England journal of medicine. 1976;295(24):1351–5. doi: 10.1056/NEJM197612092952405.

- 4. Moja L, Kwag KH, Lytras T, Bertizzolo L, Brandt L, Pecoraro V, Rigon G, Vaona A, Ruggiero F, Mangia M, Iorio A, Kunnamo I, Bonovas S. Effectiveness of computerized decision support systems linked to electronic health records: a systematic review and meta-analysis. Am J Public Health. 2014;104(12):e12–22. doi: 10.2105/AJPH.2014.302164.

- 5. Roshanov PS, Fernandes N, Wilczynski JM, Hemens BJ, You JJ, Handler SM, Nieuwlaat R, Souza NM, Beyene J, Van Spall HG, Garg AX, Haynes RB. Features of effective computerised clinical decision support systems: meta-regression of 162 randomised trials. BMJ. 2013;346:f657. doi: 10.1136/bmj.f657. PubMed PMID: 23412440.

- 6. Ash JS, Sittig DF, Campbell EM, Guappone KP, Dykstra RH. Some unintended consequences of clinical decision support systems. AMIA Annu Symp Proc. 2007:26–30.

- 7. Shah NR, Seger AC, Seger DL, Fiskio JM, Kuperman GJ, Blumenfeld B, Recklet EG, Bates DW, Gandhi TK. Improving acceptance of computerized prescribing alerts in ambulatory care. Journal of the American Medical Informatics Association: JAMIA. 2006;13(01):5–11. doi: 10.1197/jamia.M1868.

- 8. Embi PJ, Leonard AC. Evaluating alert fatigue over time to EHR-based clinical trial alerts: findings from a randomized controlled study. Journal of the American Medical Informatics Association: JAMIA. 2012;19(e1):e145–8.

- 9. Cash JJ. Alert fatigue. Am J Health Syst Pharm. 2009;66(23):2098–101. doi: 10.2146/ajhp090181.

- 10. Osheroff JA, Teich JM, Levick D, Sittig D, Rogers K, Jenders RA. Improving Outcomes with Clinical Decision Support: An Implementer's Guide. 2nd ed. Healthcare Information Management Society; 2012.

- 11. Ash JS, Sittig DF, Dykstra R, Campbell E, Guappone K. The unintended consequences of computerized provider order entry: findings from a mixed methods exploration. International journal of medical informatics. 2009;78 Suppl 1:S69–76. doi: 10.1016/j.ijmedinf.2008.07.015.

- 12. Wright A, Hickman TT, McEvoy D, Aaron S, Ai A, Andersen JM, Hussain S, Ramoni R, Fiskio J, Sittig DF, Bates DW. Analysis of clinical decision support system malfunctions: a case series and survey. Journal of the American Medical Informatics Association: JAMIA. 2016. doi: 10.1093/jamia/ocw005. PubMed PMID: 27026616.

- 13. Ash JS, Sittig DF, Campbell EM, Guappone KP, Dykstra RH. Some unintended consequences of clinical decision support systems. AMIA Annu Symp Proc. 2007:26–30. PubMed PMID: 18693791.

- 14. Ash JS, Sittig DF, Dykstra R, Wright A, McMullen C, Richardson J, Middleton B. Identifying best practices for clinical decision support and knowledge management in the field. Studies in Health Technology and Informatics. 2010;160(Pt 2):806–10.

- 15. Wright A, Sittig DF, Ash JS, Feblowitz J, Meltzer S, McMullen C, Guappone K, Carpenter J, Richardson J, Simonaitis L, Evans RS, Nichol WP, Middleton B. Development and evaluation of a comprehensive clinical decision support taxonomy: comparison of front-end tools in commercial and internally developed electronic health record systems. Journal of the American Medical Informatics Association: JAMIA. 2011;18(03):232–42. doi: 10.1136/amiajnl-2011-000113.

- 16. Wright A, Sittig DF, Ash JS, Sharma S, Pang JE, Middleton B. Clinical decision support capabilities of commercially-available clinical information systems. Journal of the American Medical Informatics Association: JAMIA. 2009;16(05):637–44. doi: 10.1197/jamia.M3111.

- 17. Carey RG. Improving healthcare with control charts: basic and advanced SPC methods and case studies. Milwaukee, WI: ASQ Quality Press; 2003. xxiv, 194 p.

- 18. Ben-Gal I. Outlier Detection. Kluwer Academic Publishers; 2005.

- 19. Smith SC Jr, Benjamin EJ, Bonow RO, Braun LT, Creager MA, Franklin BA, Gibbons RJ, Grundy SM, Hiratzka LF, Jones DW, Lloyd-Jones DM, Minissian M, Mosca L, Peterson ED, Sacco RL, Spertus J, Stein JH, Taubert KA, World Heart F, the Preventive Cardiovascular Nurses A. AHA/ACCF Secondary Prevention and Risk Reduction Therapy for Patients with Coronary and other Atherosclerotic Vascular Disease: 2011 update: a guideline from the American Heart Association and American College of Cardiology Foundation. Circulation. 2011;124(22):2458–73. doi: 10.1161/CIR.0b013e318235eb4d.

- 20. Keim DA. Information Visualization and Visual Data Mining. IEEE Trans Vis Comput Graph. 2002;8(01):1–8.

- 21. Zahabi M, Kaber DB, Swangnetr M. Usability and Safety in Electronic Medical Records Interface Design: A Review of Recent Literature and Guideline Formulation. Hum Factors. 2015;57(05):805–34. doi: 10.1177/0018720815576827.

- 22. Schiff G, Wright A, Bates DW, Salazar A, Amato MG, Slight SP, Sequist TD, Loudin B, Smith D, Adelman J, Lambert B, Galanter W, Koppel R, McGreevey J, Taylor K, Chan I, Brennan C. Computerized Prescriber Order Entry Medication Safety (CPOEMS): Uncovering and Learning From Issues and Errors. Silver Spring, MD: US Food and Drug Administration; December 15, 2015.

- 23. van der Sijs H, Aarts J, Vulto A, Berg M. Overriding of drug safety alerts in computerized physician order entry. Journal of the American Medical Informatics Association: JAMIA. 2006;13(02):138–47. doi: 10.1197/jamia.M1809.