Abstract

Augmented reality (AR) can be an interesting technology for clinical scenarios as an alternative to conventional surgical navigation. However, the registration between augmented data and real-world spaces is a limiting factor. In this study, the authors propose a method based on desktop three-dimensional (3D) printing to create patient-specific tools containing a visual pattern that enables automatic registration. This specific tool fits on the patient only in the location it was designed for, avoiding placement errors. This solution has been developed as a software application running on Microsoft HoloLens. The workflow was validated on a 3D printed phantom replicating the anatomy of a patient presenting an extraosseous Ewing's sarcoma, and then tested during the actual surgical intervention. The application allowed physicians to visualise the skin, bone and tumour location overlaid on the phantom and patient. This workflow could be extended to many clinical applications in the surgical field and also to training and simulation, in cases where hard body structures are involved. Although the authors have tested their workflow on an AR head-mounted display, they believe that a similar approach can be applied to other devices such as tablets or smartphones.

Keywords: cancer, augmented reality, tumours, computerised tomography, medical image processing, surgery, bone, image registration, paediatrics

Keywords: augmented reality, computer-assisted interventions, patient-specific 3D printed reference, real-world spaces, patient-specific tools, visual pattern, automatic registration, placement errors, software application, actual surgical intervention, tumour location, surgical field, extraosseous Ewing sarcoma

1. Introduction

Augmented reality (AR) is a powerful tool in the medical field, where it can offer the physician more patient information by placing relevant clinical data in the line of sight between the physician and the patient. This medical information can be obtained from imaging studies of the patient [e.g. computed tomography (CT), magnetic resonance (MR), positron emission tomography (PET)] and displayed overlaid on the physical world, enabling user interaction and manipulation. During the past two decades, AR has facilitated medical training as well as surgical planning and guidance. A powerful application of AR in tumour resection surgery is the visualisation of a three-dimensional (3D) model of the segmented tumour over the patient [1], providing relevant information to the surgeon about location and orientation. AR has also been evaluated in training applications [2, 3], where novice physicians were able to improve their skills in terms of spatial vision and surgical ability. All these examples, while showing the possibilities of AR in different medical scenarios, have some limitations in terms of portability, calibration and tracking [4]. Recent technological developments could overcome some of these restrictions. Devices such as Microsoft HoloLens, a compelling head-mounted display, or new software development kits (SDKs) such as ARToolKit [5] for mobile devices, will facilitate AR systems that are cheaper and simpler to set up, spreading their use.

One of the main difficulties in introducing AR in surgical guidance procedures is registering augmented data to the real-world space [6]. Up to now, patient registration has been achieved with optical or electromagnetic tracking systems [7, 8], by applying manual alignment [9] or with more advanced algorithms such as speeded-up robust features (SURF) [10]. These solutions seem to work in some specific applications, but they require extra hardware, add complexity to the workflow, increase procedure time and may not be accurate enough.

Previous research in integrating desktop 3D printing with surgical guidance could solve some of the identified limitations of AR in surgical applications. Patient-specific designs, created from CT or MR studies and then 3D printed in-hospital, have already shown their advantages in orthopaedic surgery in scenarios such as open-wedge high tibial osteotomy [11] or femoral varisation osteotomy [12]. These guides are designed to fit precisely in a planned position on the patient. The combination of these surgical guides with a 3D printed AR tracking pattern would avoid the registration problems previously identified.

Recent studies have shown applications of this approach in maxillofacial surgery, attaching a tracking marker to a specifically designed tool that fits on the mandible of the patient, enabling AR registration [13]. Even though this technique showed good accuracy, the attachment of the tracking marker to the occlusal splint was manual and required a surface scan for registration, which adds a complex step to the workflow.

To overcome the previous limitations, we propose an AR approach that uses a desktop 3D printer to create patient-specific tools with a tracking marker attached, enabling automatic registration between AR and real-world spaces. This patient-specific tool fits on the patient only in the place it was designed for. The clinical data to be included in the AR scene is previously referenced to the tracking marker and can be easily visualised. This solution was developed as an AR application on Microsoft HoloLens. Accuracy and user experience were evaluated on a patient-based phantom replicating an extraosseous Ewing's sarcoma (EES) of the distal leg scheduled for tumour resection surgery. In addition, the AR approach was tested by the surgeons during the actual surgical intervention.

2. Materials and methods

The AR solution we present includes two main components: an AR application developed for Microsoft HoloLens and a 3D printed patient-specific tracking marker. The accuracy evaluation of the proposed system was performed on a 3D printed phantom replicating a real clinical case: a patient with an EES of the distal leg. Our institution has previous clinical experience in 3D printing surgical guides to facilitate the resection of this kind of sarcoma [12]. The addition of relevant clinical data, such as the visualisation of the tumour to resect, over the patient during surgery was achieved by adding the tracking marker to this surgical guide.

For this specific clinical case, the 3D printed phantom included the tumour and surrounding anatomical structures (tibia and fibula). The solution for registration fits perfectly into this case; first, because the tracking marker is added to a surgical guide that was already used for this surgery; second, the modification of the guide does not interfere with the surgical working area; and last, the surgical guide is positioned on a rigid structure, the tibial bone, which means that its position could be replicated during surgery. The accuracy of this placement was also validated on the 3D printed phantom.

2.1. AR application

The AR software application was developed on Unity version 2017.4 LTS using C#. The detection of the tracking marker was performed using Vuforia [14], a pattern recognition SDK. Vuforia includes a recognition algorithm implemented for HoloLens which provides the 3D position of a tracking marker with respect to HoloLens spatial mapping coordinate space. This algorithm enables the developed AR application to display custom 3D models with respect to the tracking marker in the HoloLens virtual environment.
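The idea of displaying a model "with respect to the tracking marker" amounts to composing two rigid transforms: the marker pose reported by the tracking SDK and the fixed model-to-marker offset known from the guide design. The following is a minimal, library-independent sketch of that composition; it is not the Unity or Vuforia API, and all names and numeric values (`world_from_marker`, `marker_from_model`, the offsets in metres) are hypothetical.

```python
import math

def matmul4(a, b):
    """Multiply two 4x4 homogeneous transforms given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def translation(tx, ty, tz):
    """Pure translation as a 4x4 homogeneous matrix."""
    return [[1.0, 0.0, 0.0, tx],
            [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]

def rotation_z(degrees):
    """Rotation about the z axis as a 4x4 homogeneous matrix."""
    c = math.cos(math.radians(degrees))
    s = math.sin(math.radians(degrees))
    return [[c, -s, 0.0, 0.0],
            [s, c, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]

def transform_point(m, p):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    v = (p[0], p[1], p[2], 1.0)
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(3))

# Hypothetical marker pose in the headset's world space (metres),
# standing in for what a tracking SDK would report.
world_from_marker = matmul4(translation(0.10, 0.0, 0.50), rotation_z(90))

# Hypothetical fixed offset of the anatomical model relative to the marker,
# known in advance because marker and guide are designed together.
marker_from_model = translation(0.02, 0.0, 0.0)

# Composing both gives the pose at which the model should be rendered.
world_from_model = matmul4(world_from_marker, marker_from_model)
model_origin_world = transform_point(world_from_model, (0.0, 0.0, 0.0))
```

Because the model-to-marker offset is fixed at design time, only the marker pose has to be estimated at run time, which is what makes the registration automatic.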

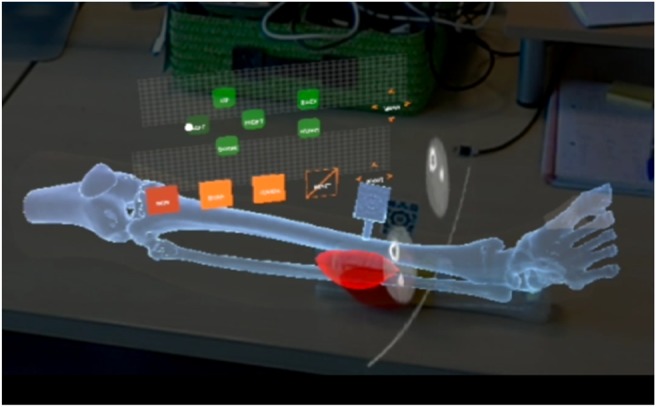

The AR application (Fig. 1) has the following functionalities to facilitate tumour resection surgery:

3D model visualisation: Visualisation of different anatomical 3D models representing anatomy of the patient. A virtual canvas allows the user to select which models to visualise. Anatomical models used in this clinical case included skin, bone and tumour.

Preoperative imaging visualisation: A virtual canvas allows the user to select the slice and the axis (axial, sagittal or coronal) to be augmented. The images are obtained from any 3D modality (CT in this case) and are displayed in their actual position with respect to the patient.

Fig. 1.

Anatomical 3D models and axial CT slice displayed on HoloLens application
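To illustrate how a selected slice can be shown "in its actual position", the sketch below maps a slice index and axis to the centre and normal of the corresponding plane in patient coordinates. It assumes an axis-aligned volume (no oblique orientation) with coordinates in millimetres; the function name `slice_plane` and the origin, spacing and dimensions used are hypothetical and are not taken from the study.

```python
# Index of the patient axis that each slice orientation is perpendicular to,
# assuming x = left-right, y = anterior-posterior, z = superior-inferior.
AXES = {"sagittal": 0, "coronal": 1, "axial": 2}

def slice_plane(axis, index, origin, spacing, dims):
    """Return (centre, normal) of the chosen slice in patient space (mm).

    origin  : position of the centre of voxel (0, 0, 0)
    spacing : voxel size along x, y, z
    dims    : number of voxels along x, y, z
    """
    k = AXES[axis]
    if not 0 <= index < dims[k]:
        raise IndexError("slice index outside the volume")
    # Start from the geometric centre of the volume ...
    centre = [origin[i] + spacing[i] * (dims[i] - 1) / 2.0 for i in range(3)]
    # ... and move along the slicing axis to the requested slice.
    centre[k] = origin[k] + spacing[k] * index
    normal = [0.0, 0.0, 0.0]
    normal[k] = 1.0
    return tuple(centre), tuple(normal)

# Hypothetical CT geometry: 512 x 512 x 200 voxels of 0.5 x 0.5 x 1.0 mm.
centre, normal = slice_plane(
    "axial", 100,
    origin=(-128.0, -128.0, 0.0),
    spacing=(0.5, 0.5, 1.0),
    dims=(512, 512, 200),
)
```

The resulting plane would then be transformed by the same marker-based registration as the 3D models, so that images and models stay consistent with each other.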

2.2. Workflow

The proposed workflow is based on the actual clinical procedure and minimises additional steps to limit interference with the clinicians' usual work.

2.2.1. Augmented reality images

The AR images are based on preoperative imaging studies (CT, MR, PET) acquired from the patient. The 3D Slicer platform was used to rigidly register the studies and to obtain segmentations and anatomical 3D models (polygonal meshes) from the skin, distal leg bone and sarcoma. These models were used for visualisation, phantom 3D printing and to design the surgical guide.

2.2.2. Surgical guide design

Based on the generated 3D models of the bone tissue and tumour, a surgical guide was designed to be attached to the tibia close to the location of the sarcoma. The following aspects were considered in the design: section of the bone exposed during surgery, bone surface features to obtain a reproducible placement and surgical working area. Meshmixer version 3.4 (Autodesk, Inc., USA) software was used for surgical guide design.

2.2.3. Tracking marker design

The tracking marker was designed using Autodesk Inventor 2017 (Autodesk, Inc., USA) following the instructions provided on the Vuforia website [14] to obtain accurate tracking results. The marker was then virtually merged with the surgical guide using the same software.

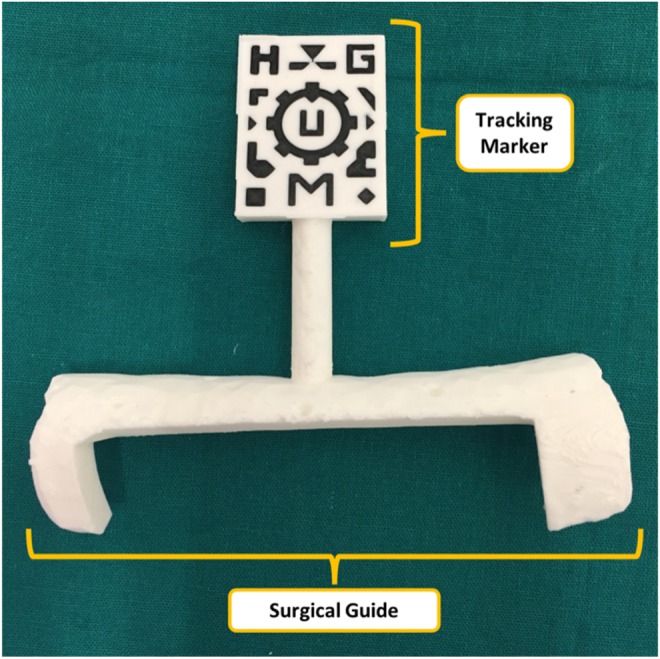

2.2.4. 3D printing

Models were 3D printed with polylactic acid (PLA) on a dual extrusion Ultimaker 3 Extended (Ultimaker B.V., Netherlands) desktop 3D printer. Two sets of 3D models were printed: one for validation purposes (including conical holes in the design) and another for surgical use. Each set of 3D models included a phantom (section of the tibia, section of the fibula and sarcoma) and a surgical guide containing the tracking marker (Fig. 2). Furthermore, the physicians used the 3D printed phantom to inform the patient about the intervention and to assist in surgical planning by presenting the location and size of the tumour.

Fig. 2.

Surgical guide containing visual marker

Prior to surgery, the PLA parts must be sterilised to maintain asepsis of the surgical field. The sterilisation technique is based on ethylene oxide (EtO) at 37°C to avoid deformation (the glass transition temperature of PLA is around 55–65°C), followed by extensive degassing to remove residual EtO [15].

2.3. Validation

The surgical guide placement error and the AR point localisation error were evaluated. A set of phantom and surgical guide 3D printed models was used. These models contain 20 and 6 conical holes, respectively. Conical holes were used as reference points for registration and error measurement. An optical tracking system [Polaris, (Northern Digital, Inc., Canada)] was used as a gold-standard for the positioning measurements. A reference pointer with optical markers was used for point recording.

In addition, a qualitative assessment of the workflow and the functionality was performed by expert clinicians during the use of the AR application in surgery.

2.3.1. Surgical guide placement error

The assessment of the surgical guide placement accuracy was performed by attaching the surgical guide to the phantom and recording the position of the six conical holes with the optical tracking system. This process was repeated three times by removing the guide and placing it back on the phantom. The required point-based registration was computed using the conical holes included in the validation phantom.
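The point-based registration step can be sketched as a least-squares rigid fit between hole positions recorded by the tracker and their designed positions. The source does not state which algorithm was used; the example below uses Horn's closed-form quaternion method, solved here with a simple power iteration to stay dependency-free. All coordinates and function names are hypothetical.

```python
import math

def centroid(points):
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))

def horn_rigid_registration(source, target, iterations=500):
    """Least-squares rigid transform (R, t) mapping source onto target."""
    ca, cb = centroid(source), centroid(target)
    a = [[p[i] - ca[i] for i in range(3)] for p in source]
    b = [[q[i] - cb[i] for i in range(3)] for q in target]
    # Cross-covariance sums S[i][j] = sum over points of a[i] * b[j].
    S = [[sum(x[i] * y[j] for x, y in zip(a, b)) for j in range(3)]
         for i in range(3)]
    (sxx, sxy, sxz), (syx, syy, syz), (szx, szy, szz) = S
    N = [[sxx + syy + szz, syz - szy, szx - sxz, sxy - syx],
         [syz - szy, sxx - syy - szz, sxy + syx, szx + sxz],
         [szx - sxz, sxy + syx, -sxx + syy - szz, syz + szy],
         [sxy - syx, szx + sxz, syz + szy, -sxx - syy + szz]]
    # Shift the spectrum so all eigenvalues are non-negative; power iteration
    # then converges to the eigenvector of the largest eigenvalue, which is
    # the unit quaternion of the optimal rotation.
    shift = max(sum(abs(v) for v in row) for row in N)
    q = [1.0, 0.5, 0.25, 0.125]  # arbitrary non-degenerate start vector
    for _ in range(iterations):
        q = [sum(N[r][c] * q[c] for c in range(4)) + shift * q[r]
             for r in range(4)]
        norm = math.sqrt(sum(v * v for v in q))
        q = [v / norm for v in q]
    w, x, y, z = q
    R = [[1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
         [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
         [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)]]
    t = tuple(cb[i] - sum(R[i][j] * ca[j] for j in range(3)) for i in range(3))
    return R, t

def apply_transform(R, t, p):
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))

def rmse(points_a, points_b):
    sq = [sum((u - v) ** 2 for u, v in zip(p, q))
          for p, q in zip(points_a, points_b)]
    return math.sqrt(sum(sq) / len(sq))

# Hypothetical designed hole positions in model space (mm).
model_holes = [(0, 0, 0), (40, 0, 0), (0, 30, 0),
               (0, 0, 20), (40, 30, 5), (10, 20, 20)]

# Simulated tracker recordings: the same holes rotated 30 degrees about z
# and translated, with no measurement noise.
c30, s30 = math.cos(math.radians(30)), math.sin(math.radians(30))
tracker_holes = [(c30 * p[0] - s30 * p[1] + 100.0,
                  s30 * p[0] + c30 * p[1] - 50.0,
                  p[2] + 25.0) for p in model_holes]

R, t = horn_rigid_registration(tracker_holes, model_holes)
registered = [apply_transform(R, t, p) for p in tracker_holes]
residual = rmse(registered, model_holes)
```

In the validation described above, the transform would be estimated from the phantom holes and then applied to the recorded guide holes, so that the remaining distance to their designed positions reflects the placement error rather than the fit itself.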

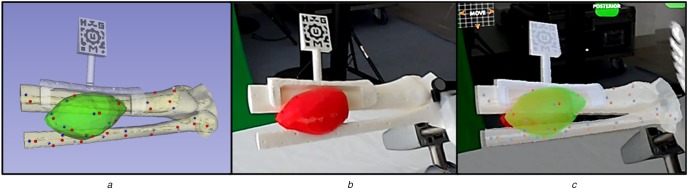

2.3.2. AR point localisation error

To validate the AR visualisation, a simulation of the surgery was performed using the HoloLens application. First, the surgical guide was positioned on the phantom and the tracking pattern was used to register the AR scene. Afterwards, 15 randomly distributed spherical models (3 mm diameter) were augmented on the surface of the 3D phantom model. The tip of the reference pointer was positioned on every projected spherical model, using just the augmented data as reference, and the position of the tip (provided by the optical tracking system) was recorded (Fig. 3). The Euclidean distance between this recorded position and the actual location was measured to quantify the AR tracking error. This experiment was performed by three different users and each user repeated the steps three times.

Fig. 3.

Point recording on phantom

a Virtual view

b Real view

c AR view
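The localisation error described in Section 2.3.2 can be sketched as a per-point Euclidean distance summarised as an RMSE per trial and then averaged over trials. How the study aggregated users and repetitions is not specified, so the averaging below is one plausible reading; the coordinates are hypothetical.

```python
import math

def euclidean(p, q):
    """Euclidean distance between two 3D points."""
    return math.sqrt(sum((u - v) ** 2 for u, v in zip(p, q)))

def trial_rmse(recorded, reference):
    """Root-mean-squared distance between recorded and reference points."""
    errors = [euclidean(p, q) for p, q in zip(recorded, reference)]
    return math.sqrt(sum(e * e for e in errors) / len(errors))

def mean_rmse(trials, reference):
    """Average of the per-trial RMSE values (one trial = one user repetition)."""
    values = [trial_rmse(trial, reference) for trial in trials]
    return sum(values) / len(values)

# Hypothetical reference sphere centres and two recorded trials (mm).
reference = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
trials = [
    [(3.0, 0.0, 0.0), (10.0, 4.0, 0.0)],   # point errors of 3 mm and 4 mm
    [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)],   # perfect pointing
]
overall = mean_rmse(trials, reference)
```

With three users and three repetitions each, `trials` would hold nine lists of 15 recorded positions.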

2.3.3. AR at the operating room

Once the AR application was tested and validated on the 3D printed phantom, the workflow was evaluated in the surgical intervention of the patient. Surgeons used a HoloLens device and a sterilised patient-specific surgical guide (Fig. 2) in the operating room. The developed AR application projected the preoperative images and 3D models of the tumour and bone on the patient for surgical guidance.

3. Results and discussion

The detection of the tracking marker (dual-colour 3D printed pattern on the surgical guide) by the Vuforia SDK was feasible and almost immediate. One limitation of the pattern detection was the proximity required for the marker to be correctly identified, around 20 cm. Nevertheless, once the marker is detected, an optimal visualisation is achieved at longer distances, since marker detection is required only once at the beginning of the navigation process. The dependence of this process on lighting conditions was not evaluated. However, the operating room (OR) scenario presented very intense and focused light sources that did not hinder this step of the workflow.

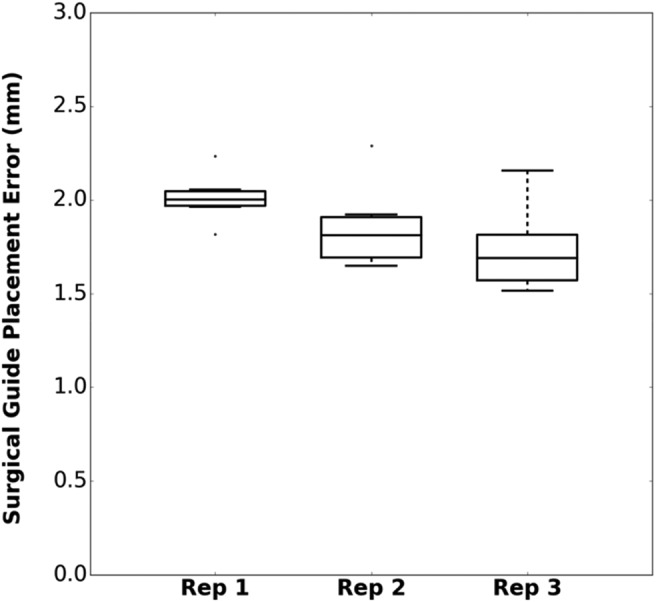

The accuracy results for the repeated placement of the surgical guide on the 3D printed phantom are displayed in Fig. 4: the average root-mean-squared error (RMSE) of the placement across all three repetitions was 1.87 mm. Several factors other than the surgical guide placement contribute to this error: 3D printing accuracy of the models (phantom and surgical guide), localisation error of the conical holes using the tracked tool and intrinsic error of the optical tracker.

Fig. 4.

Surgical guide placement error for three repetitions

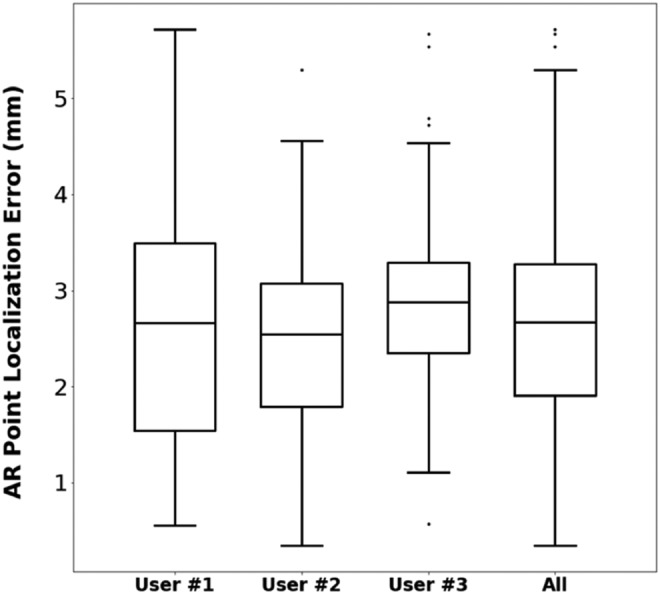

Regarding the AR visualisation error with HoloLens, the average RMSE for all users and repetitions on the 15 points distributed along the surface of the phantom was 2.90 mm (Fig. 5). This result is mainly influenced by the positioning of the pointer tip with respect to the holograms (which depends on depth perception), the size of the projected spheres in the AR environment and the positioning of the surgical guide. All error sources described for the surgical guide placement evaluation also influence this value. Nevertheless, the system is considered sufficiently accurate for many AR applications in the OR.

Fig. 5.

AR point localisation error for three users

Once the feasibility and accuracy of the proposed workflow were demonstrated, the developed software application was tested on the phantom by several physicians with extensive experience in surgical navigation and AR, receiving positive feedback. The registration method based on the positioning of the surgical guide presented an optimal accuracy and did not interfere with the usual surgical workflow. The limitation regarding the required proximity for marker detection could be overcome, so physicians believed that the application was ready to be used in a real clinical case.

Finally, an expert surgeon evaluated the complete workflow during surgery. The HoloLens device was attached and fixed prior to the sterilisation procedure. Once the area was cleared and the tumour was visible, the surgeon placed the guide on the bone (Fig. 6). The surgical guide was easily placed and fitted as planned in the desired area. A second surgeon manually held the guide until it was detected by the HoloLens application, and then it was removed from the scene since it was not necessary during the navigation step. No quantitative error measurements were obtained during surgery, although according to the surgeons' experience the alignment between the augmented data and the actual anatomy was accurate.

Fig. 6.

Surgeon placing surgical guide in patient's tibia

a HoloLens

b Surgical guide

4. Conclusion and future work

AR can be an interesting technology for clinical scenarios as an alternative to surgical navigation. However, the registration between augmented data and real-world spaces is a limiting factor. In this work, we propose a method based on desktop 3D printing to create patient-specific tools containing a visual pattern that enables automatic registration. This custom guide fits in a unique region in the bone surface, avoiding placement errors. This solution has been developed in a software application running on Microsoft HoloLens. The workflow was first validated on a 3D printed phantom replicating the anatomy of an Ewing's sarcoma patient, and then on the actual surgery of this clinical case. The application allowed physicians to visualise skin, bone and tumour location overlaid on the phantom. This workflow can be applied in cases where hard body structures such as bones are involved, so it could be extended to many clinical applications in the surgical field and also for training and simulation. Although we have tested our workflow on wearable AR devices, we believe that a similar approach can be applied to other devices such as tablets or smartphones.

The evaluation results show that the surgical guide that includes the tracking pattern can be placed precisely on the reference bone anatomy, with an accuracy of ∼2 mm. The addition of the AR component results in an overall localisation error of around 3 mm, which can be considered accurate given that the tracking information is obtained from the HoloLens RGB camera. Moreover, the clinical experience during surgery demonstrated the feasibility of the proposed workflow for AR guidance during surgical interventions.

The main advantages of our proposal are its simplicity, the easy interaction with the AR environment and the accurate registration using patient-specific 3D printed tools. No objective evaluation was performed regarding the added value provided by the AR guidance in the surgical scenario. However, we think that the results from this work will encourage the development of simple and efficient AR systems for educational, simulation and guidance purposes in the medical field.

5. Funding and declaration of interests

This work was supported by projects PI15/02121 (Ministerio de Economía y Competitividad, Instituto de Salud Carlos III and European Regional Development Fund ‘Una manera de hacer Europa’) and TOPUS-CM S2013/MIT-3024 (Comunidad de Madrid). The authors especially want to thank the company 6DLab for its expertise in developing Augmented and Virtual Reality solutions for this work.

6. Conflict of interest

None declared.

7. References

- 1. Inoue D., Cho B., Mori M., et al.: ‘Preliminary study on the clinical application of augmented reality neuronavigation’, J. Neurol. Surg. A. Cent. Eur. Neurosurg., 2013, 74, pp. 71–76 (doi: 10.1055/s-0032-1333415)

- 2. Coles T.R., John N.W., Gould D., et al.: ‘Integrating haptics with augmented reality in a femoral palpation and needle insertion training simulation’, IEEE Trans. Haptics, 2011, 4, (3), pp. 199–209 (doi: 10.1109/TOH.2011.32)

- 3. Abhari K., Baxter J., Chen E., et al.: ‘Training for planning tumour resection: augmented reality and human factors’, IEEE Trans. Biomed. Eng., 2015, 62, (6), pp. 1466–1477 (doi: 10.1109/TBME.2014.2385874)

- 4. Van Krevelen R., Poelman R.: ‘A survey of augmented reality technologies, applications and limitations’, Int. J. Virtual Real., 2010, 9, p. 1

- 5. DAQRI: ‘Artoolkit.org: Open source augmented reality sdk’. [Online]. Available at: http://artoolkit.org/ [Accessed: 25-Feb-2018]

- 6. Chen L., Day T.W., Tang W., et al.: ‘Recent developments and future challenges in medical mixed reality’. 2017 IEEE Int. Symp. on Mixed and Augmented Reality (ISMAR), Nantes, France, October 2017, pp. 123–135

- 7. Tang R., Ma L., Xiang C., et al.: ‘Augmented reality navigation in open surgery for hilar cholangiocarcinoma resection with hemihepatectomy using video-based in situ three-dimensional anatomical modeling: a case report’, Medicine (Baltimore), 2017, 96, (37), p. e8083 (doi: 10.1097/MD.0000000000008083)

- 8. Kuhlemann I., Kleemann M., Jauer P., et al.: ‘Towards X-ray free endovascular interventions – using HoloLens for on-line holographic visualisation’, Healthc. Technol. Lett., 2017, 4, (5), pp. 184–187 (doi: 10.1049/htl.2017.0061)

- 9. Pratt P., Ives M., Lawton G., et al.: ‘Through the HoloLens™ looking glass: augmented reality for extremity reconstruction surgery using 3D vascular models with perforating vessels’, Eur. Radiol. Exp., 2018, 2, (1), p. 2 (doi: 10.1186/s41747-017-0033-2)

- 10. Plantefève R., Peterlik I., Haouchine N., et al.: ‘Patient-specific biomechanical modeling for guidance during minimally-invasive hepatic surgery’, Ann. Biomed. Eng., 2016, 44, (1), pp. 139–153 (doi: 10.1007/s10439-015-1419-z)

- 11. Vaquero J., Arnal J., Perez-Mañanes R., et al.: ‘3D patient-specific surgical printing cutting blocks guides and spacers for open-wedge high tibial osteotomy (HTO) - do it yourself’, Rev. Chir. Orthopédique Traumatol., 2016, 102, (7), p. S131 (doi: 10.1016/j.rcot.2016.08.136)

- 12. Arnal-Burró J., Pérez-Mañanes R., Gallo-del-Valle E., et al.: ‘Three dimensional-printed patient-specific cutting guides for femoral varization osteotomy: do it yourself’, Knee, 2017, 24, (6), pp. 1359–1368 (doi: 10.1016/j.knee.2017.04.016)

- 13. Zhu M., Liu F., Chai G., et al.: ‘A novel augmented reality system for displaying inferior alveolar nerve bundles in maxillofacial surgery’, Sci. Rep., 2017, 7, p. 42365 (doi: 10.1038/srep42365)

- 14. PTC Inc.: ‘Vuforia’. [Online]. Available at: http://www.vuforia.com/ [Accessed: 15-Feb-2018]

- 15. Zislis T., Martin S.A., Cerbas E., et al.: ‘A scanning electron microscopic study of in vitro toxicity of ethylene-oxide-sterilized bone repair materials’, J. Oral Implantol., 1989, 15, pp. 41–46